Neural Network IDS/IPS Intrusion Detection and Prevention System with Adaptive Online Training to Improve Corporate Network Cybersecurity, Evidence Recording, and Interaction with Law Enforcement Agencies

Abstract

1. Introduction

2. Related Works

- Ensuring reliable and safe incremental adaptation of the model under concept drift without extensive manual labelling.

- Reducing false positives while maintaining sensitivity to new and hidden attacks.

- Integrating online training into real corporate SOC processes while guaranteeing low latency and an acceptable computational load.

- Ensuring the model’s robustness against targeted manipulation (poisoning) and adversarial attacks.

- Detecting attacks in encrypted and polymorphic traffic, where classic signatures are ineffective.

- Developing hybrid pipelines of the “novelty detection → active labelling → incremental supervised update” type with explicit risk management and performance metrics.
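The hybrid pipeline named in the last item can be illustrated with a minimal sketch. None of its components come from the source: it assumes a diagonal-Mahalanobis novelty score against baseline statistics estimated on clean traffic, a hypothetical `label_oracle` callable standing in for the SOC analyst, a fixed labelling budget, and an online logistic-regression model updated by clipped SGD.

```python
import math

def novelty_score(x, mean, var):
    """Squared diagonal Mahalanobis distance of x from baseline statistics."""
    return sum((xi - mi) ** 2 / (vi + 1e-9) for xi, mi, vi in zip(x, mean, var))

def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def clipped_sgd_step(w, x, y, lr=0.1, clip=1.0):
    """One logistic-regression SGD step (y in {0, 1}) with gradient-norm clipping."""
    p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)))
    grad = [(p - y) * xi for xi in x]
    norm = math.sqrt(sum(g * g for g in grad))
    scale = min(1.0, clip / norm) if norm > 0 else 1.0
    return [wi - lr * scale * g for wi, g in zip(w, grad)]

def run_pipeline(stream, label_oracle, mean, var, threshold, budget):
    """Novelty detection -> active labelling -> incremental supervised update."""
    w = [0.0] * len(mean)
    queried = 0
    for x in stream:
        is_novel = novelty_score(x, mean, var) > threshold  # 1) novelty detection
        if is_novel and queried < budget:
            y = label_oracle(x)                            # 2) active labelling
            w = clipped_sgd_step(w, x, y)                  # 3) incremental update
            queried += 1
    return w, queried

# usage: toy 2-feature stream and a hypothetical analyst rule
stream = [[0.1, 0.0], [5.0, 5.0], [0.0, -0.1], [6.0, 4.0], [-5.0, -5.0]]
w, queried = run_pipeline(stream, lambda x: 1 if x[0] > 0 else 0,
                          mean=[0.0, 0.0], var=[1.0, 1.0], threshold=9.0, budget=2)
```

The budget caps analyst workload: the fifth event is also novel but is not sent for labelling because two queries have already been spent.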

3. Materials and Methods

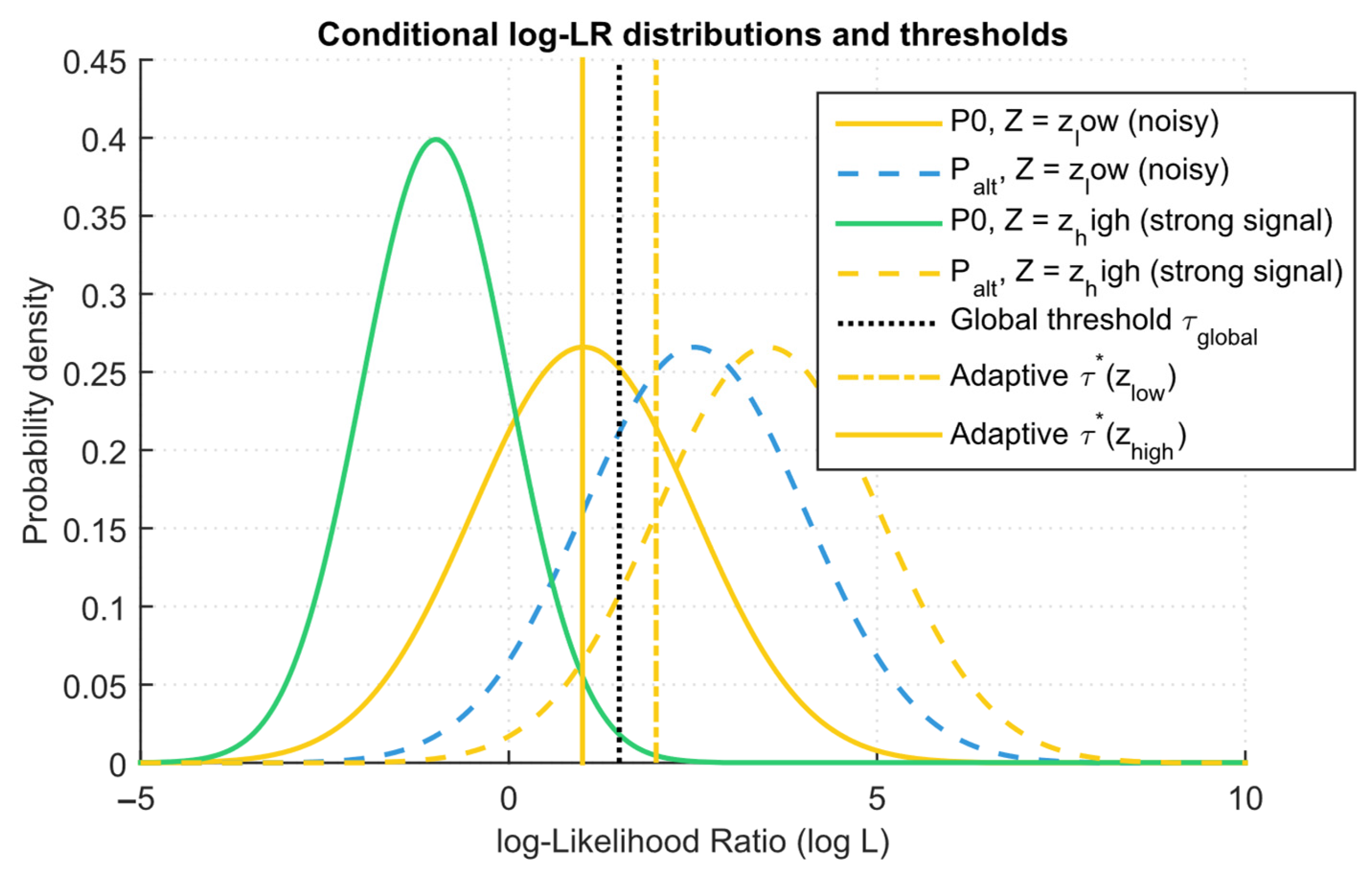

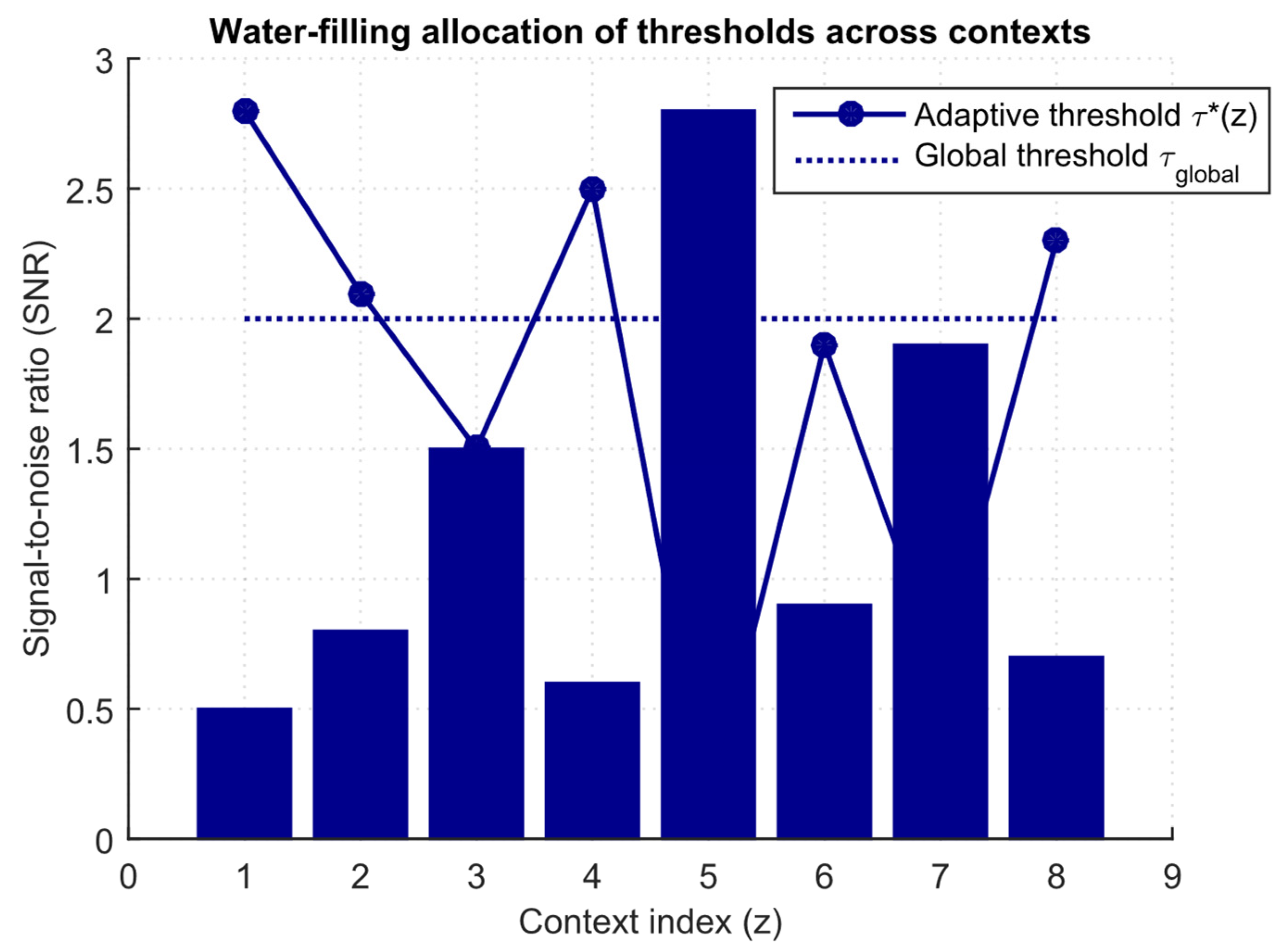

3.1. Development of Theoretical Foundations for the IDS/IPS Intrusion Detection and Prevention

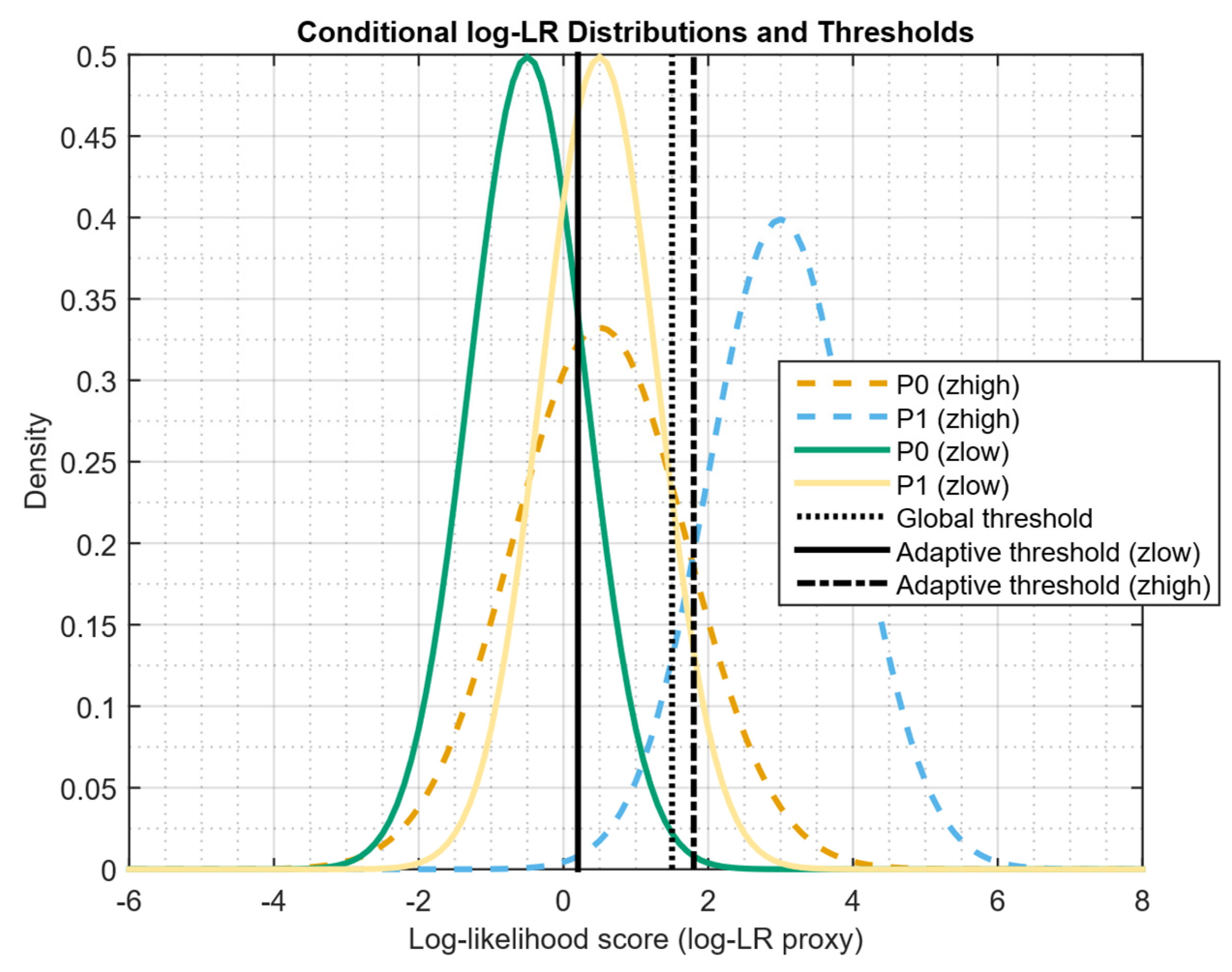

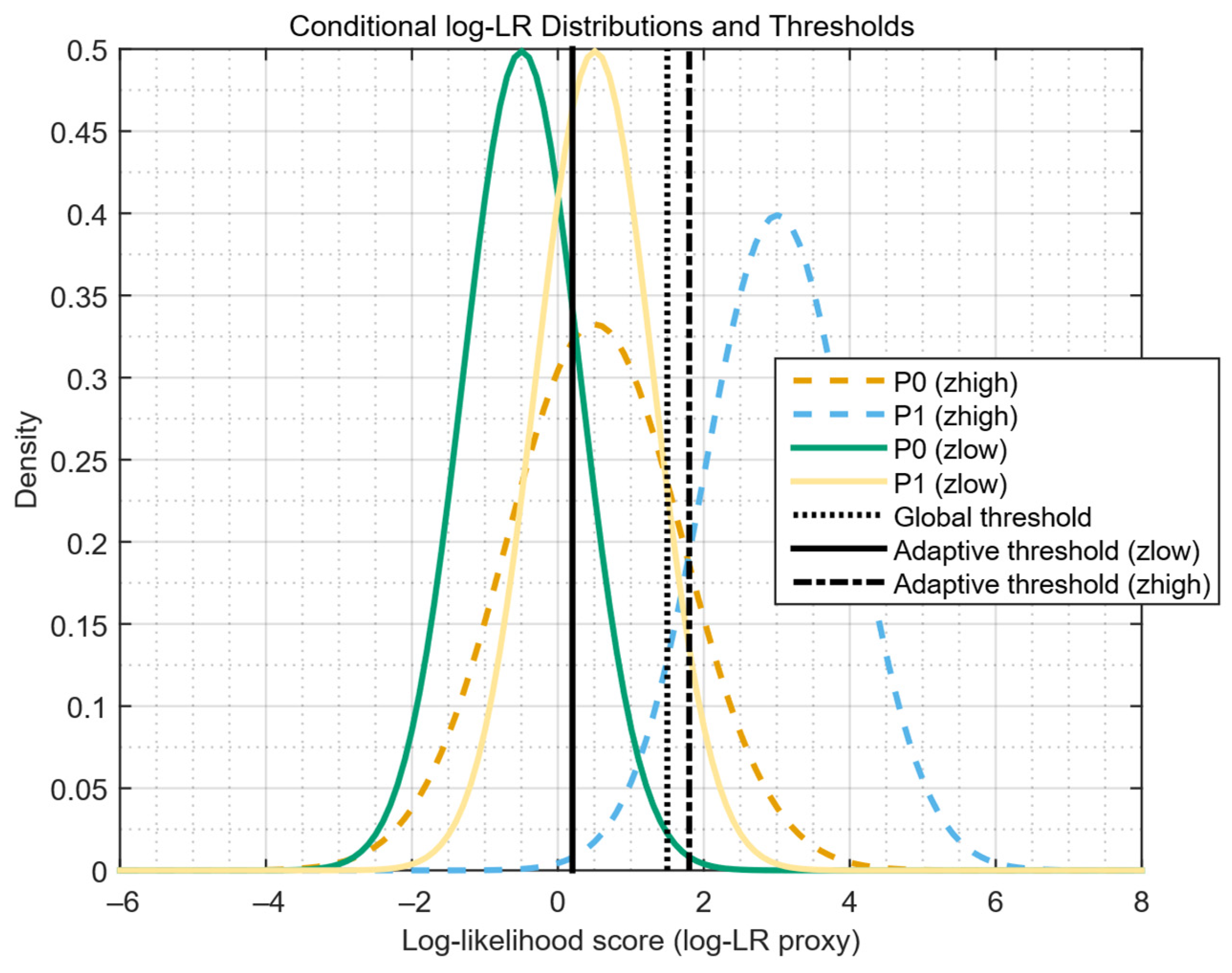

- The global likelihood-ratio test δglob, with a single threshold chosen so that the fixed sensitivity level is met and R0(δglob) = αglob;

- The optimal context-adaptive test δctx, which thresholds the conditional likelihood ratio with the threshold τ*(z) chosen so that the same sensitivity level is met and R0(δctx) = αctx. Then, if there exist indices i, j such that the distribution functions of the conditional likelihood ratio in contexts i and j do not coincide, and the relative “information content” ratio condition is satisfied (where F0,i is the analogous cumulative distribution function under P0), then αctx < αglob; that is, when the conditional LR laws differ across contexts, the gain in FPR is strictly positive.
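A small numerical sketch of this claim, under assumptions chosen only for illustration: the detector score s is N(0, 1) for benign traffic in both contexts and N(μz, 1) for attacks, with hypothetical shifts μ1 = 1 and μ2 = 4, and both contexts are equally likely under both hypotheses (so the joint and conditional likelihood ratios coincide). The context-adaptive test thresholds the conditional log-LR μz·s − μz²/2 at a common level, which induces a context-dependent score threshold τ*(z); both tests are tuned to the same TPR γ.

```python
import math

MU = {1: 1.0, 2: 4.0}   # hypothetical attack-score shift per context z
PI = {1: 0.5, 2: 0.5}   # context probabilities, assumed equal under H0 and H1

def tail(x):
    """P(N(0, 1) > x)."""
    return 0.5 * math.erfc(x / math.sqrt(2.0))

def solve_decreasing(f, lo, hi, target, iters=200):
    """Bisection for f(x) = target when f is decreasing on [lo, hi]."""
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if f(mid) > target:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

def global_test(gamma):
    """One score threshold shared by all contexts, tuned so that TPR = gamma."""
    def tpr(t):
        return sum(PI[z] * tail(t - MU[z]) for z in MU)
    t = solve_decreasing(tpr, -20.0, 20.0, gamma)
    return t, tpr(t), tail(t)   # benign score is N(0, 1) in every context

def context_test(gamma):
    """Threshold the conditional log-LR mu_z * s - mu_z**2 / 2 at a common level c."""
    def thresholds(c):
        return {z: (c + MU[z] ** 2 / 2.0) / MU[z] for z in MU}  # tau*(z) on the score
    def tpr(c):
        tau = thresholds(c)
        return sum(PI[z] * tail(tau[z] - MU[z]) for z in MU)
    c = solve_decreasing(tpr, -100.0, 100.0, gamma)
    tau = thresholds(c)
    fpr = sum(PI[z] * tail(tau[z]) for z in MU)
    return tau, tpr(c), fpr

t_glob, tpr_glob, fpr_glob = global_test(0.9)
tau_ctx, tpr_ctx, fpr_ctx = context_test(0.9)
```

At equal sensitivity the context-adaptive test yields a lower FPR, because it concentrates its false alarms in the context where attacks are hard to separate and uses a much higher threshold where they are easy.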

3.2. Development of Theoretical Foundations for Ensuring the Models’ Stability Against Targeted Adjustment (Poisoning) and Adversarial Attacks

- When training with contamination rate ε, the drop in sensitivity (TPR) and the increase in false alarms (FPR) are bounded and explicitly controlled;

- For test-time evasion attacks bounded by the perturbation budget δ, there exists a minimax-optimal rule that preserves the given sensitivity level γ and minimizes the worst-case FPR;

- When poisoning and adversarial evasion are combined, joint bounds are derived.
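The censored likelihood-ratio idea behind these results can be sketched in the spirit of Huber's robust probability-ratio test. Assumptions for illustration only: a Gaussian location model for the per-sample log-LR, hypothetical censoring bounds log a = −2 and log b = 2, and ε-contamination in which a fraction ε of benign inputs is arbitrary. The last function is the elementary bound (1 − ε)α + ε, whose increase over α is ε(1 − α) ≤ ε.

```python
def gaussian_llr(x, mu0=0.0, mu1=1.0, sigma=1.0):
    """Per-sample log-likelihood ratio log p1(x) / p0(x) for two Gaussians."""
    return ((x - mu0) ** 2 - (x - mu1) ** 2) / (2.0 * sigma ** 2)

def plain_llr_sum(samples):
    """Uncensored statistic: unbounded influence of any single sample."""
    return sum(gaussian_llr(x) for x in samples)

def censored_llr_sum(samples, log_a=-2.0, log_b=2.0):
    """Censored statistic: each per-sample log-LR is clipped to [log_a, log_b],
    so one added (possibly poisoned) sample moves the sum by at most
    max(|log_a|, |log_b|)."""
    return sum(min(max(gaussian_llr(x), log_a), log_b) for x in samples)

def worst_case_fpr(alpha, eps):
    """FPR bound when a fraction eps of benign inputs is arbitrary."""
    return (1.0 - eps) * alpha + eps

clean = [0.1, -0.3, 0.2, 0.0]
poisoned = clean + [1e6]   # one extreme injected point
```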

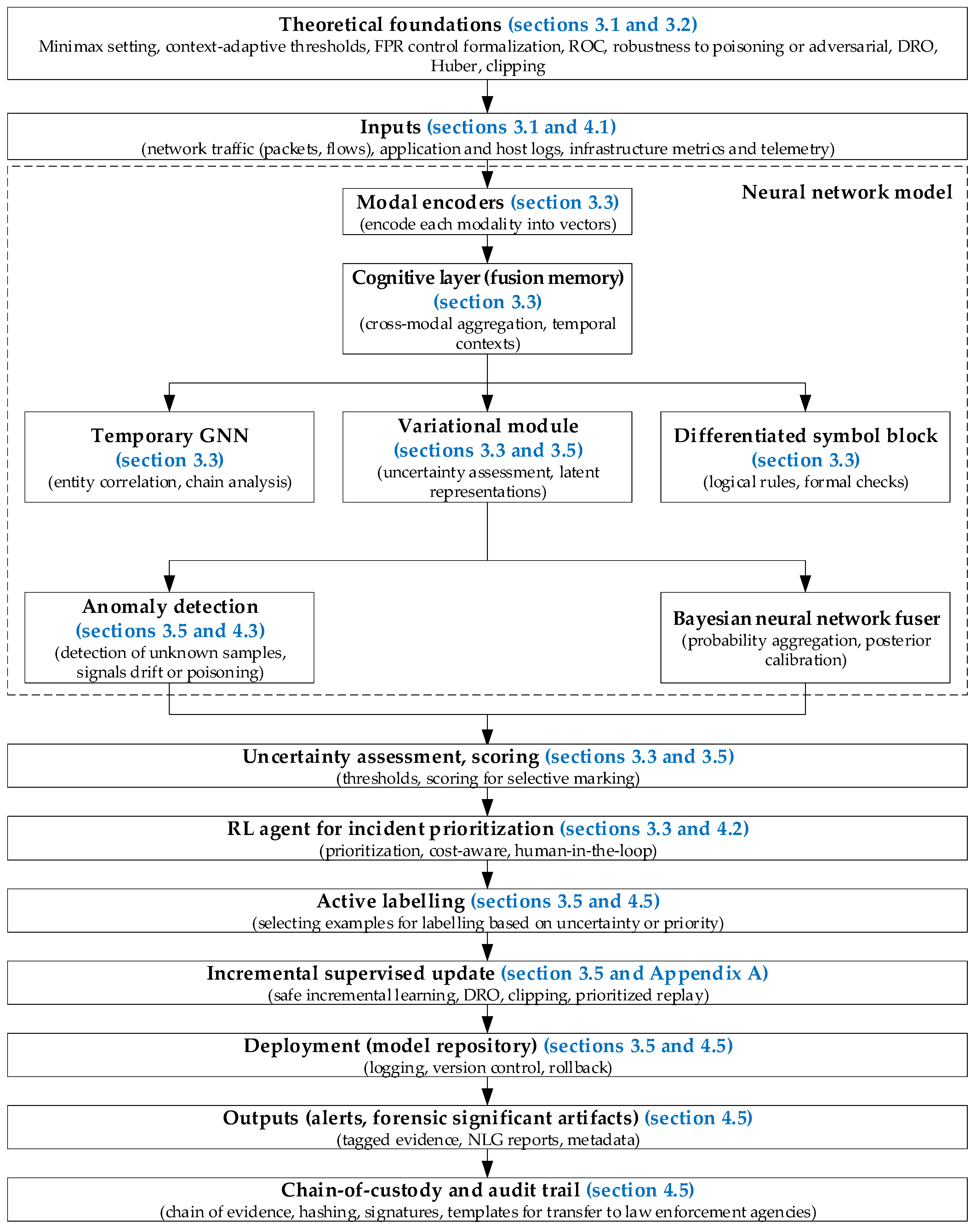

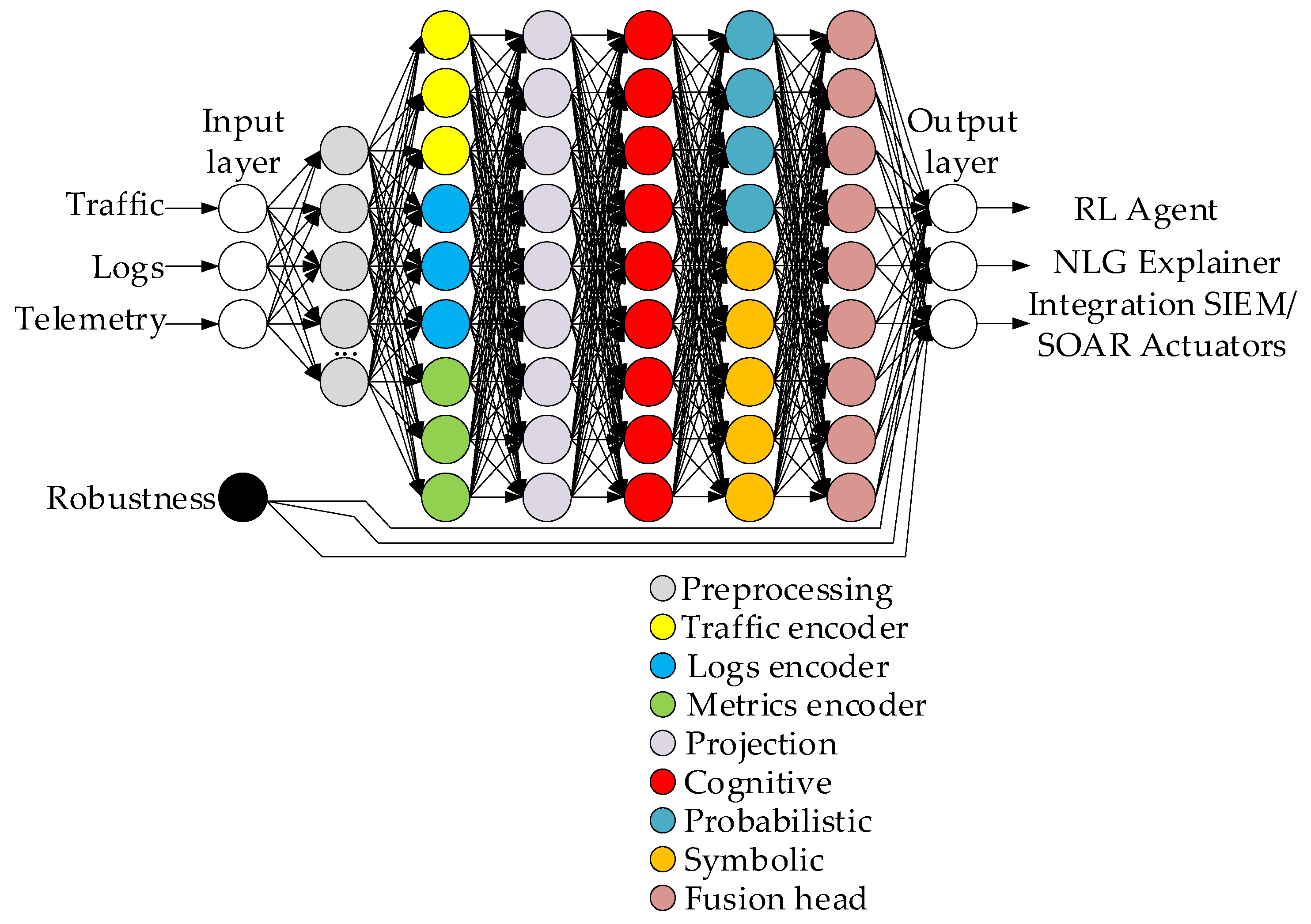

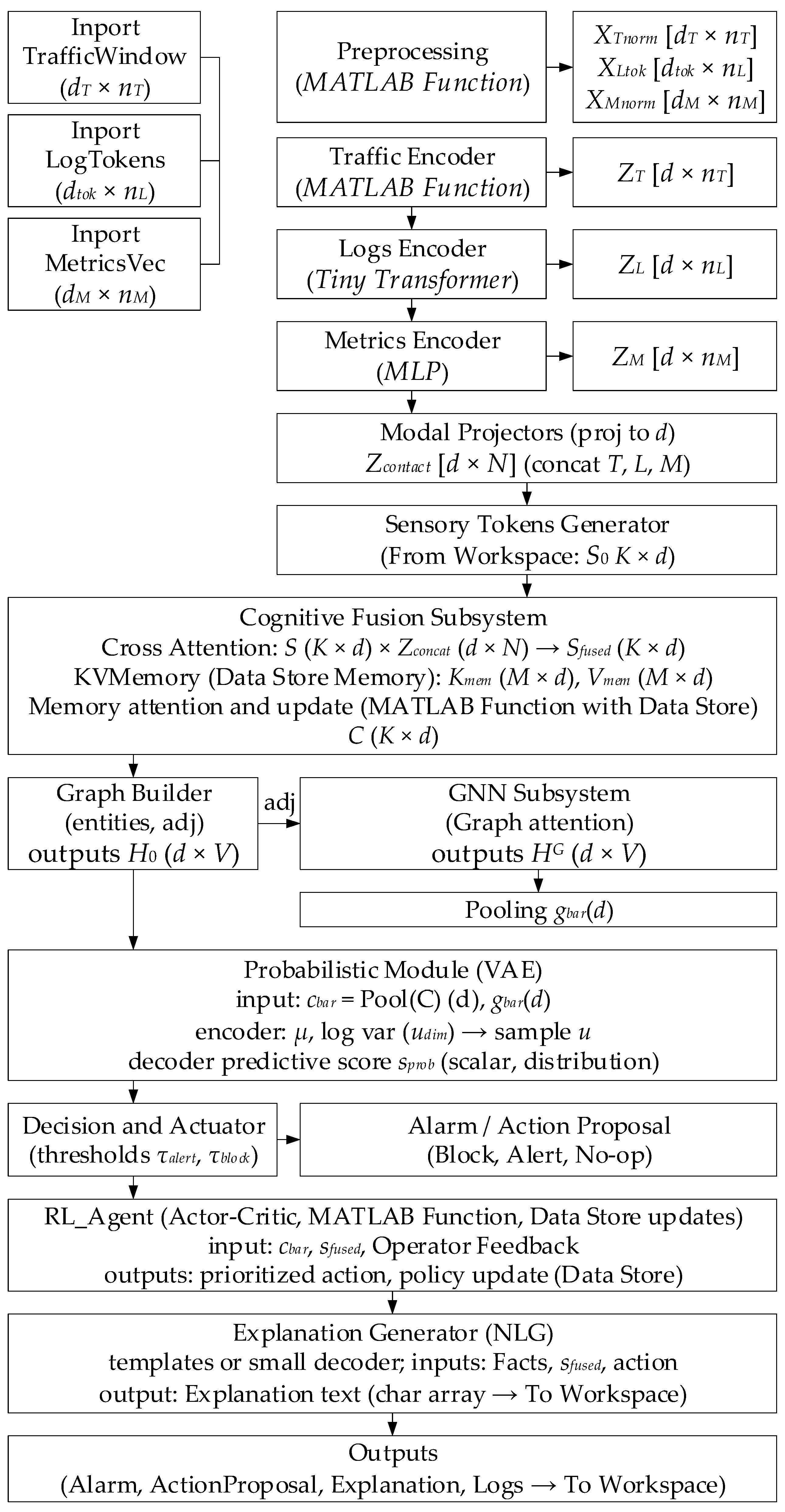

3.3. Development of a Neural Network Model for Detecting and Preventing IDS/IPS Intrusions

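- The posterior qψ(u | C, HG) is approximated by a recognition network and the generator by the variational model pθ(C, HG | u); the ELBO is
$$\mathcal{L}_{\mathrm{ELBO}}(\theta,\psi)=\mathbb{E}_{q_\psi(u\mid C,H_G)}\big[\log p_\theta(C,H_G\mid u)\big]-\mathrm{KL}\big(q_\psi(u\mid C,H_G)\,\big\|\,p(u)\big),$$
from which the formal optimization $(\theta^{*},\psi^{*})=\arg\max_{\theta,\psi}\mathcal{L}_{\mathrm{ELBO}}(\theta,\psi)$ follows;

- For classification (scoring), a Monte Carlo posterior predictive is used:
$$p(y\mid C,H_G)\approx\frac{1}{R}\sum_{r=1}^{R}p_\theta\big(y\mid u^{(r)}\big),$$
where u(r) ∼ qψ;

- Weight clipping or gradient clipping, optionally combined with differential privacy, is used for robust training;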
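The ELBO and posterior-predictive scoring can be sketched with NumPy. This is a minimal sketch, assuming a diagonal-Gaussian recognition output (mu, logvar), a standard-normal prior p(u), a unit-variance Gaussian likelihood, and hypothetical `decode` and `classify` callables in place of the actual generator and classifier heads.

```python
import numpy as np

rng = np.random.default_rng(0)

def kl_to_std_normal(mu, logvar):
    """Closed-form KL(N(mu, diag(exp(logvar))) || N(0, I))."""
    return 0.5 * np.sum(np.exp(logvar) + mu ** 2 - 1.0 - logvar)

def log_gauss(x, mean, var=1.0):
    """Log-density of N(mean, var * I) evaluated at x."""
    return -0.5 * np.sum(np.log(2.0 * np.pi * var) + (x - mean) ** 2 / var)

def elbo(x, mu, logvar, decode, n_samples=64):
    """Monte Carlo ELBO using the reparameterization u = mu + sigma * eps."""
    sigma = np.exp(0.5 * logvar)
    eps = rng.standard_normal((n_samples, mu.size))
    recon = np.mean([log_gauss(x, decode(mu + sigma * e)) for e in eps])
    return recon - kl_to_std_normal(mu, logvar)

def posterior_predictive(mu, logvar, classify, n_samples=64):
    """p(y = 1 | input) ~= (1/R) * sum_r classify(u_r), with u_r ~ q."""
    sigma = np.exp(0.5 * logvar)
    us = mu + sigma * rng.standard_normal((n_samples, mu.size))
    return float(np.mean([classify(u) for u in us]))

# usage with a hypothetical linear decoder and logistic classifier head
W = np.array([[1.0, 0.5], [0.0, 1.0], [0.3, -0.2]])
x = np.array([0.2, -0.1, 0.4])
mu, logvar = np.zeros(2), np.zeros(2)   # recognition-network output for x
bound = elbo(x, mu, logvar, decode=lambda u: W @ u)
p_attack = posterior_predictive(mu, logvar,
                                classify=lambda u: 1.0 / (1.0 + np.exp(-u.sum())))
```

Averaging the classifier over several latent draws, rather than using only the posterior mean, is what lets the spread of qψ show up as uncertainty in the attack score.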

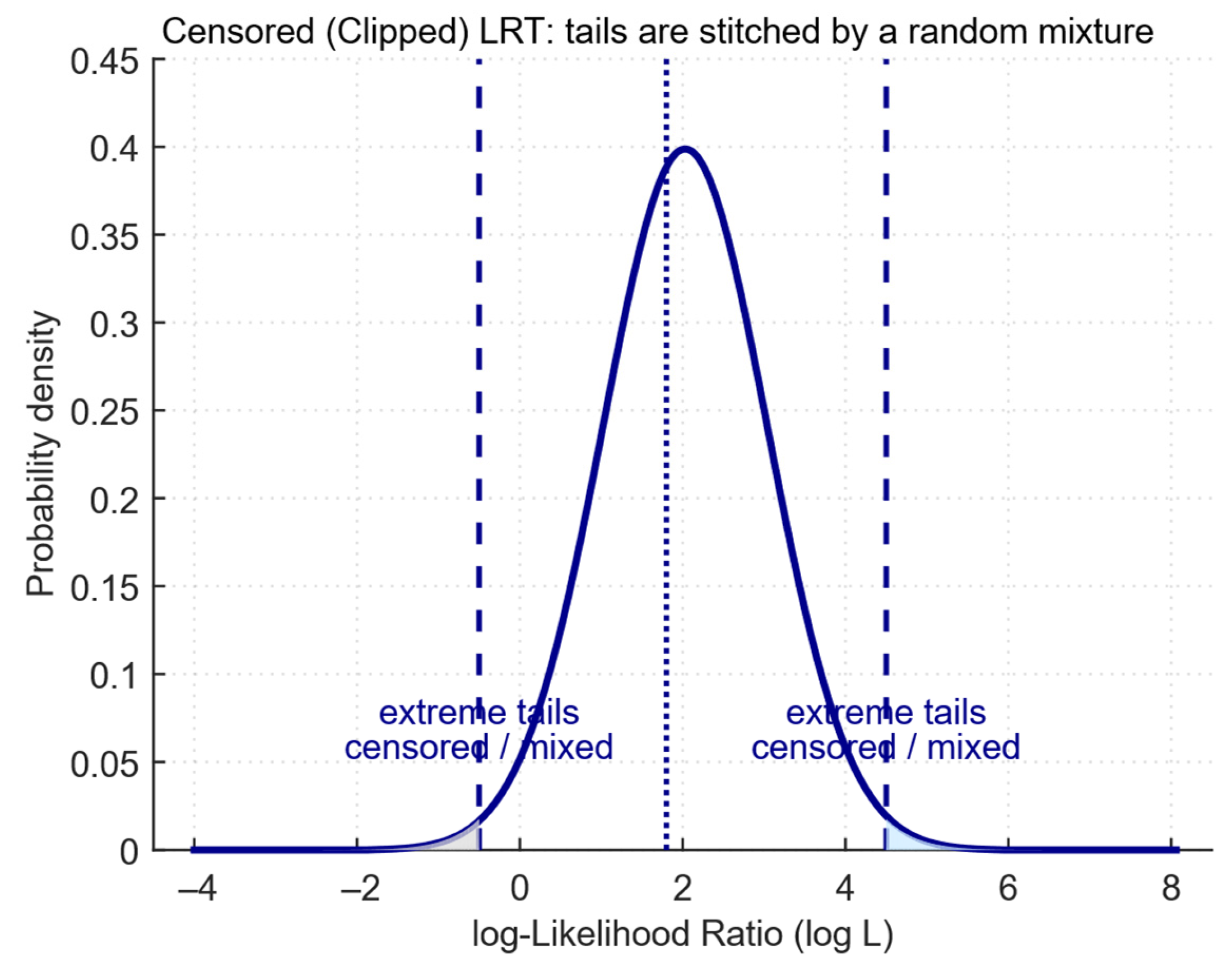

- The censored-LRT equivalent is implemented by limiting the influence of individual points in the probabilistic layer (truncating ELBO terms for outliers); formally, pθ(x) is replaced with min(pθ(x), τmax) in the training loss;

- The DRO term and the gradient-norm regularizer prevent heavy dependence on small substituted (poisoned) subsets; mathematically, the DRO bound relies on moment-generating (exponential-moment) control of the loss;

- The coefficients λi are selected on a validation set;

- To ensure resistance to poisoning, a reserve (margin) is kept;

- For a contamination share ε, the model is trained so that this margin condition holds.
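The two robustness devices above can be sketched as follows. The truncated loss implements −log min(pθ(x), τmax) directly. The exact form of the DRO bound is not given here, so, as an assumption, the sketch uses the standard KL-ball dual: for every λ > 0, sup over Q with KL(Q‖P) ≤ ρ of E_Q[ℓ] is at most λρ + λ log E_P[exp(ℓ/λ)], a moment-generating-function bound of the kind described. Because every λ gives a valid bound, minimizing over a finite λ grid still yields a valid upper bound.

```python
import numpy as np

def truncated_nll(log_px, log_tau_max):
    """Per-sample loss -log min(p(x), tau_max) = -min(log p(x), log tau_max)."""
    return -np.minimum(log_px, log_tau_max)

def kl_dro_bound(losses, rho, lambdas=None):
    """Upper bound on sup_{Q: KL(Q||P) <= rho} E_Q[loss] via the dual
    lam * rho + lam * log E_P[exp(loss / lam)], minimized over a lambda grid."""
    losses = np.asarray(losses, dtype=float)
    if lambdas is None:
        lambdas = np.logspace(-3, 3, 2000)
    best = np.inf
    for lam in lambdas:
        z = losses / lam
        m = z.max()
        log_mgf = m + np.log(np.mean(np.exp(z - m)))  # stable log E_P[exp(loss/lam)]
        best = min(best, lam * rho + lam * log_mgf)
    return float(best)

losses = np.array([0.1, 0.2, 0.3, 2.0])   # hypothetical per-sample training losses
robust_risk = kl_dro_bound(losses, rho=0.1)
```

The bound always sits between the empirical mean loss and the maximum loss; a larger ρ moves it toward the maximum, which is what discourages the model from fitting a small injected subset at the expense of the rest.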

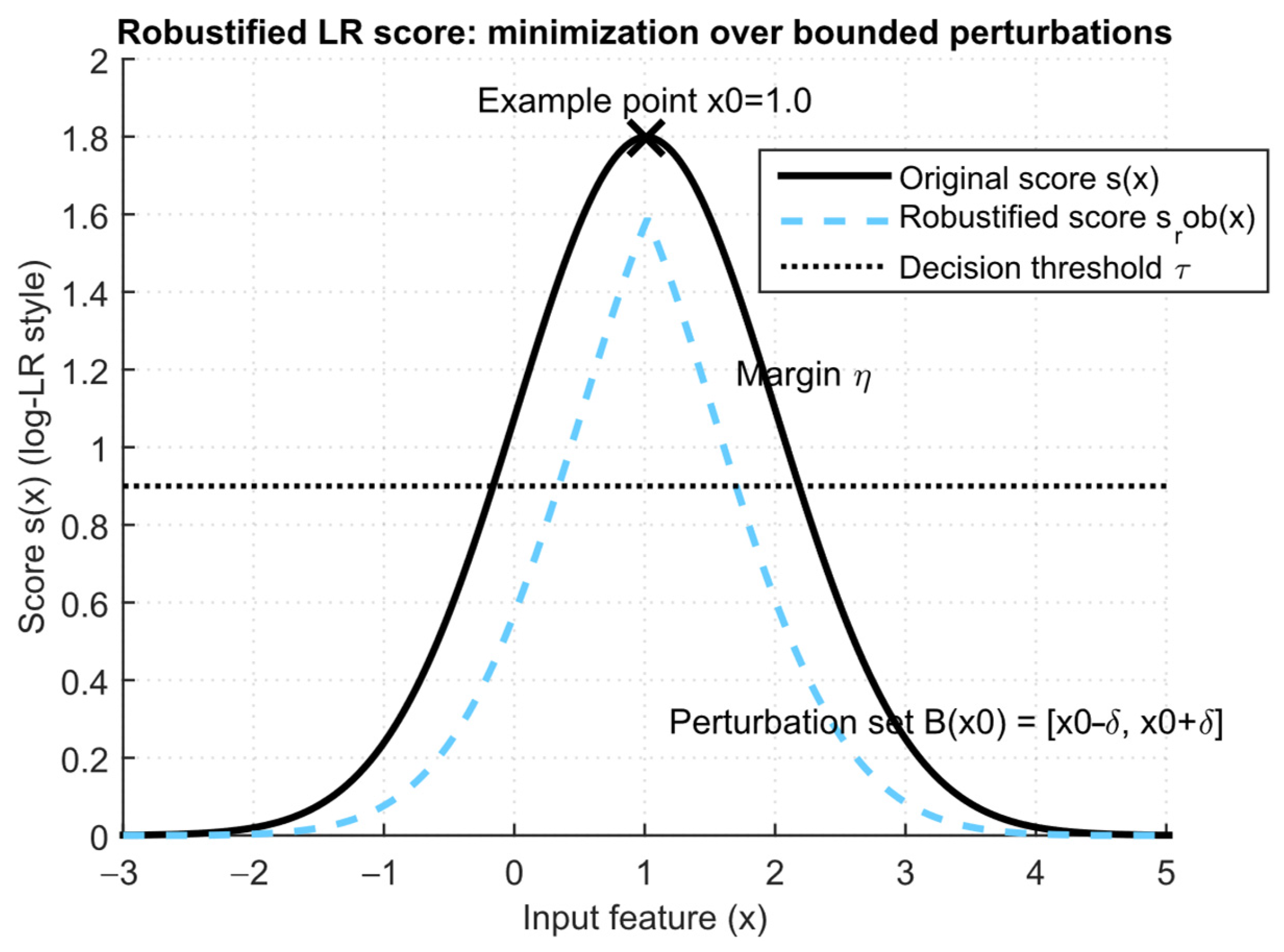

- The adversarial margin η is provided by adversarial training at step 6 of the training procedure, which maximizes the worst-case loss inside a small ball around each input.

- For scalability, sharded memory, sparse attention for long sequences, and an approximate GNN with neighbour sampling are used.
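The inner maximization of step 6 has a closed form for a linear logistic scorer, which makes a compact, checkable sketch. Assumptions: an L∞ ball of radius δ in normalized feature space, labels in {−1, +1}, and a linear model standing in for the full network. For deep models the sign step below is only the FGSM approximation and is usually iterated (PGD).

```python
import numpy as np

def logistic_loss(w, x, y):
    """log(1 + exp(-y * w.x)) for labels y in {-1, +1}."""
    return np.logaddexp(0.0, -y * np.dot(w, x))

def worst_case_input(w, x, y, delta):
    """Exact loss maximizer over ||x' - x||_inf <= delta for a linear scorer
    (the FGSM sign step); it lowers y * w.x by delta * ||w||_1."""
    return x - delta * y * np.sign(w)

def adversarial_loss(w, x, y, delta):
    """Closed-form inner maximum: log(1 + exp(-y * w.x + delta * ||w||_1))."""
    return np.logaddexp(0.0, -y * np.dot(w, x) + delta * np.sum(np.abs(w)))

def adversarial_sgd_step(w, x, y, delta, lr=0.01):
    """One descent step on the worst-case loss (min over w of max over x')."""
    a = -y * np.dot(w, x) + delta * np.sum(np.abs(w))
    s = 0.5 * (1.0 + np.tanh(0.5 * a))  # numerically stable sigmoid(a)
    grad = s * (-y * x + delta * np.sign(w))
    return w - lr * grad

w = np.array([0.5, -1.0])
x, y, delta = np.array([1.0, 2.0]), 1, 0.05
w_next = adversarial_sgd_step(w, x, y, delta)
```

The δ·‖w‖₁ term makes the margin explicit: a sample is robustly classified only if y·w·x exceeds δ·‖w‖₁.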

- The optimizer is AdamW with lr = 3 · 10⁻⁴ (1 k warm-up steps) and weight_decay = 10⁻², a common combination for transformers;

- The batch size is 256 per modality, with mini-batches formed from sequence chunks;

- The transformer dropout is 0.1, and the layer-norm ε = 10⁻⁶;

- The gradient clipping norm is 1.0 (protection against exploding gradients);

- The adversarial training radius is δ = 0.01–0.05 (in normalized feature units), which maintains a robustness margin;

- The poisoning reserve ε (tuning mode) is 0.01–0.05; during training, the corresponding reserve must be ensured;
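The hyperparameters listed above can be collected into a configuration sketch. The warm-up is assumed to be linear, and the learning rate is held constant afterwards because the post-warm-up schedule is not specified; `adv_radius` and `poisoning_reserve` are illustrative values inside the stated 0.01–0.05 ranges, and `clip_by_global_norm` shows the effect of a clip norm of 1.0 on a gradient vector.

```python
import math
from dataclasses import dataclass

@dataclass(frozen=True)
class TrainConfig:
    lr: float = 3e-4                 # AdamW peak learning rate
    warmup_steps: int = 1000
    weight_decay: float = 1e-2
    batch_size: int = 256            # per modality
    dropout: float = 0.1             # transformer dropout
    layer_norm_eps: float = 1e-6
    grad_clip_norm: float = 1.0
    adv_radius: float = 0.03         # illustrative value in the 0.01-0.05 range
    poisoning_reserve: float = 0.03  # illustrative value in the 0.01-0.05 range

def learning_rate(step: int, cfg: TrainConfig) -> float:
    """Linear warm-up to cfg.lr, then constant (the later schedule is an assumption)."""
    return cfg.lr * min(1.0, (step + 1) / cfg.warmup_steps)

def clip_by_global_norm(grads, max_norm):
    """Rescale gradient values so that their joint L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grads))
    if norm <= max_norm:
        return list(grads)
    return [g * (max_norm / norm) for g in grads]

cfg = TrainConfig()
```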

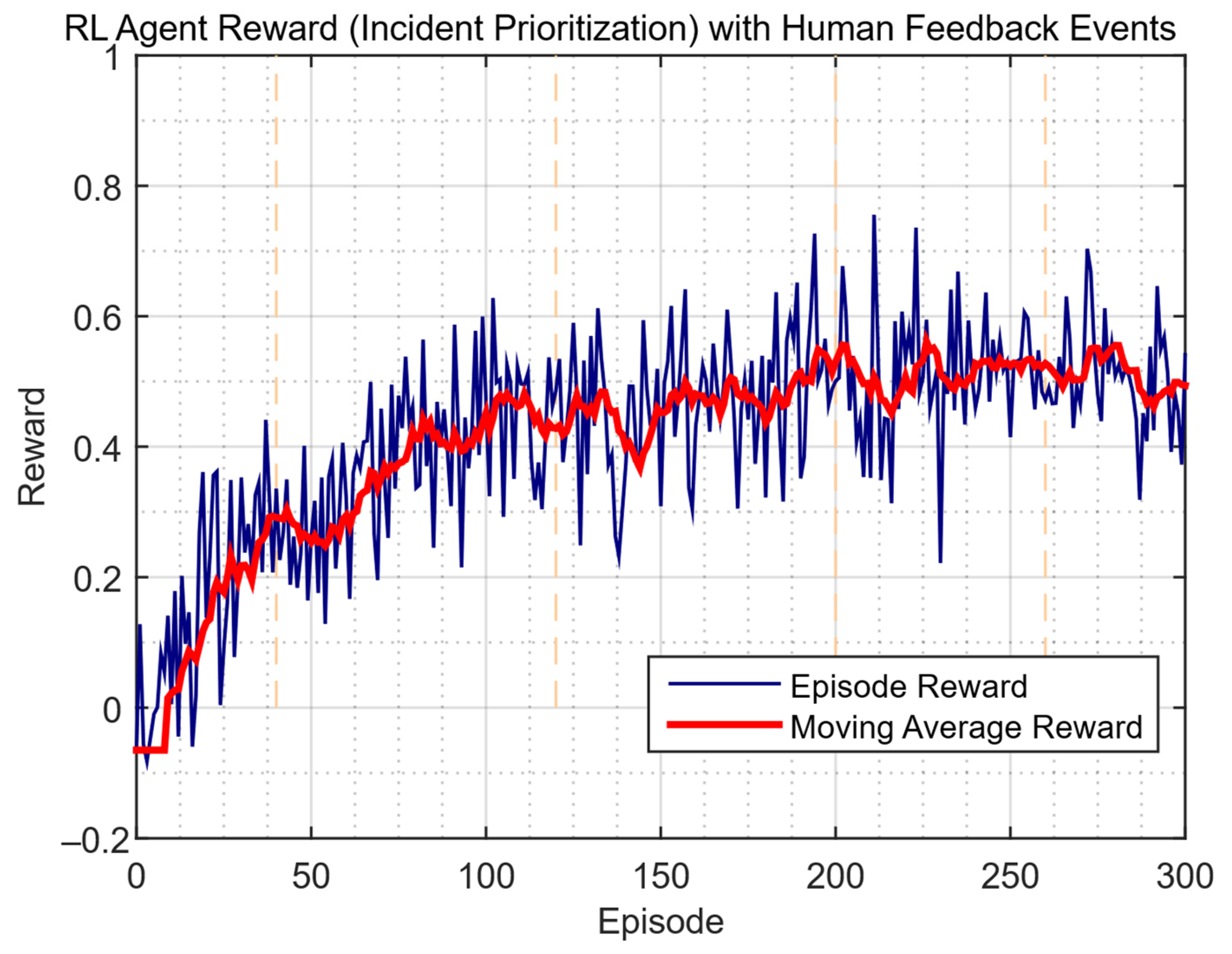

- Reward discount γreward = 0.99, PPO clip ε = 0.1–0.2, GAE λ = 0.95, and an action space of size 8;

- Sensory tokens K = 32; memory size M = 512; transformer layers: 4 for traffic, 6 for logs, and 4 for the decoder;

- dff = 4 · dmodel = 1024 (dmodel = 256), heads = 8 (hence dk = dmodel/heads = 32).
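A sketch of the two RL quantities parameterized above: generalized advantage estimation with γ = 0.99 and λ = 0.95, and the per-sample PPO clipped surrogate, together with the head-dimension arithmetic. The single-episode layout, with a bootstrap value appended to `values`, is an assumption of this sketch.

```python
def gae(rewards, values, gamma=0.99, lam=0.95):
    """Generalized advantage estimation for one episode.
    `values` has len(rewards) + 1 entries; the last one is the bootstrap value."""
    adv = [0.0] * len(rewards)
    running = 0.0
    for t in reversed(range(len(rewards))):
        delta = rewards[t] + gamma * values[t + 1] - values[t]  # TD residual
        running = delta + gamma * lam * running
        adv[t] = running
    return adv

def ppo_clip_objective(ratio, advantage, clip_eps=0.2):
    """Per-sample PPO surrogate min(r * A, clip(r, 1 - eps, 1 + eps) * A), to be maximized."""
    clipped = min(max(ratio, 1.0 - clip_eps), 1.0 + clip_eps)
    return min(ratio * advantage, clipped * advantage)

D_MODEL, HEADS = 256, 8
D_K = D_MODEL // HEADS  # per-head dimension
D_FF = 4 * D_MODEL      # feed-forward width
```

The clip keeps each policy update within a ratio band of 1 ± ε, so a single burst of operator feedback cannot swing the prioritization policy too far.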

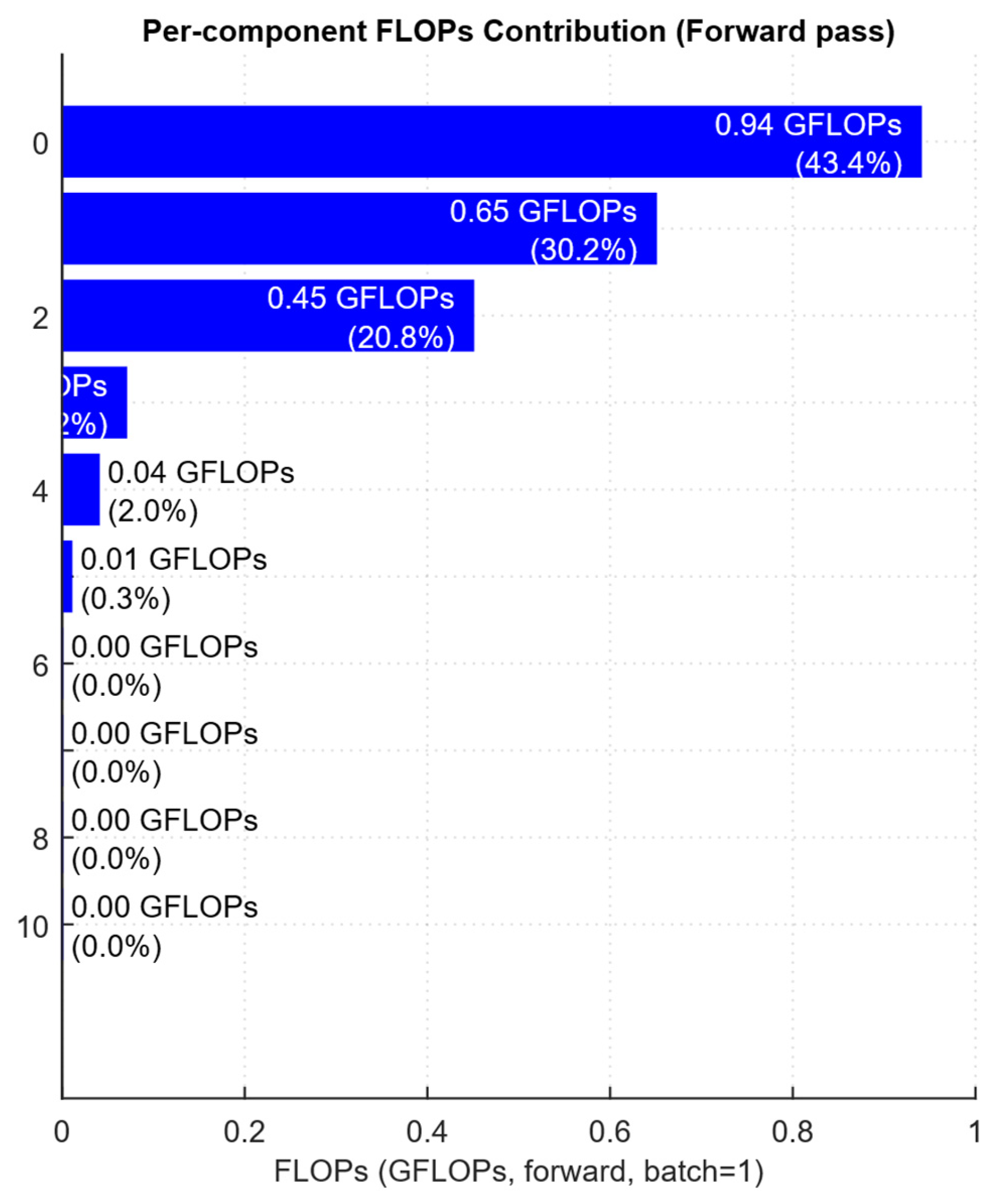

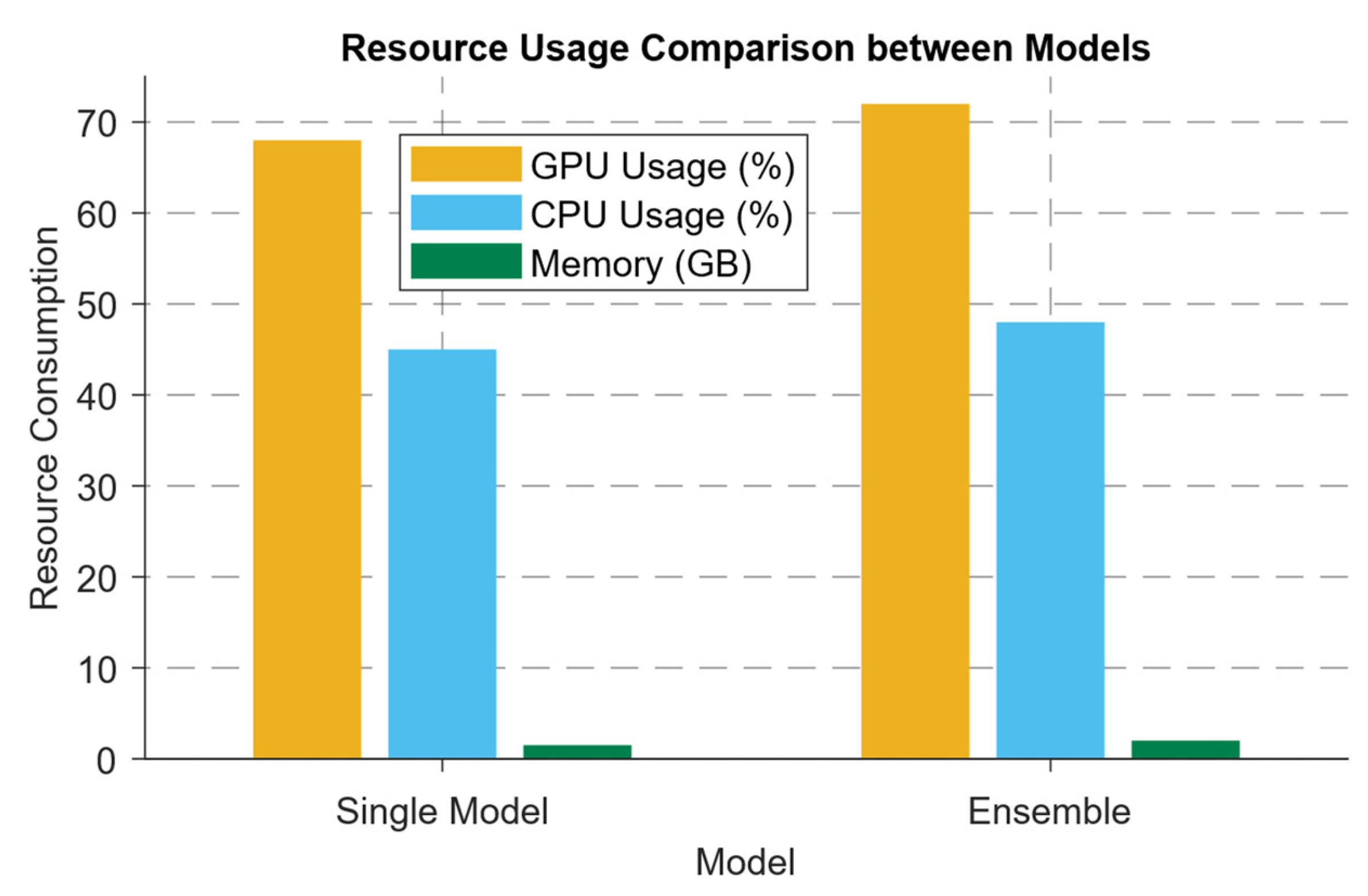

3.4. Estimating the Computational Costs of the Developed Neural Network Model for Detecting and Preventing IDS/IPS Intrusions

- NVIDIA V100 FP32 peak ≈ 15.7 × 10¹² FLOP/s;

- NVIDIA A100 FP32 peak ≈ 19.5 × 10¹² FLOP/s;

- NVIDIA RTX 3090 FP32 peak ≈ 35.6 × 10¹² FLOP/s;

- High-performance CPU FP32 ≈ 0.5 × 10¹² FLOP/s.
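The peak figures above translate into a rough latency estimate: FLOPs divided by the achieved throughput. Real kernels rarely reach peak, so the sketch multiplies the peak by an assumed utilization factor (0.3, a hypothetical value). The per-sample FLOP count is likewise a placeholder to be replaced by the model's own estimate.

```python
PEAK_FP32_FLOP_PER_S = {
    "NVIDIA V100": 15.7e12,
    "NVIDIA A100": 19.5e12,
    "NVIDIA RTX 3090": 35.6e12,
    "High-performance CPU": 0.5e12,
}

def latency_seconds(flops, device, utilization=0.3):
    """Rough compute time: FLOPs / (peak FLOP/s * achieved utilization)."""
    return flops / (PEAK_FP32_FLOP_PER_S[device] * utilization)

FLOPS_PER_SAMPLE = 2.0e9  # hypothetical per-sample cost; substitute the model's estimate
for name in PEAK_FP32_FLOP_PER_S:
    print(f"{name}: {latency_seconds(FLOPS_PER_SAMPLE, name) * 1e3:.3f} ms per sample")
```

This ignores memory bandwidth and data transfer, which often dominate at small batch sizes, so it should be read as a lower bound on latency.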

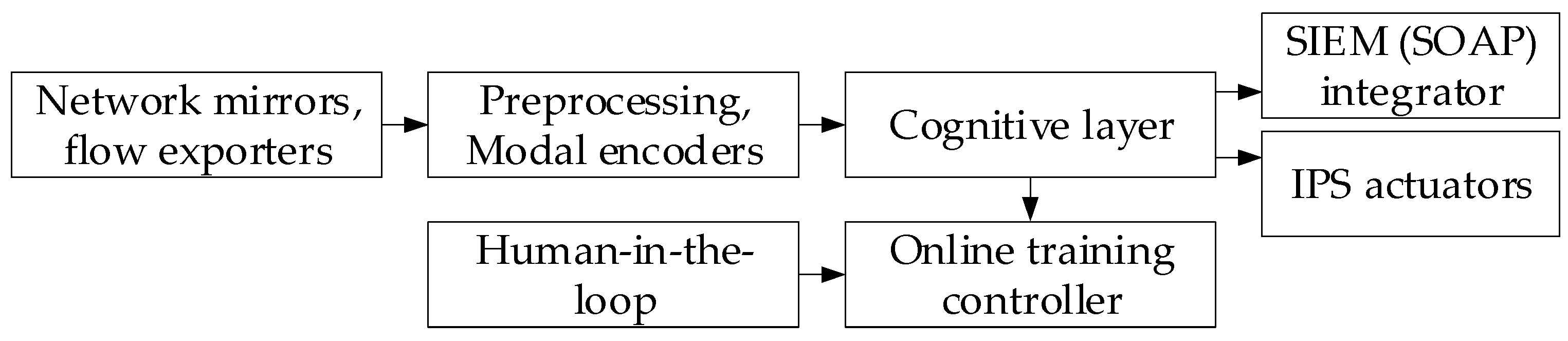

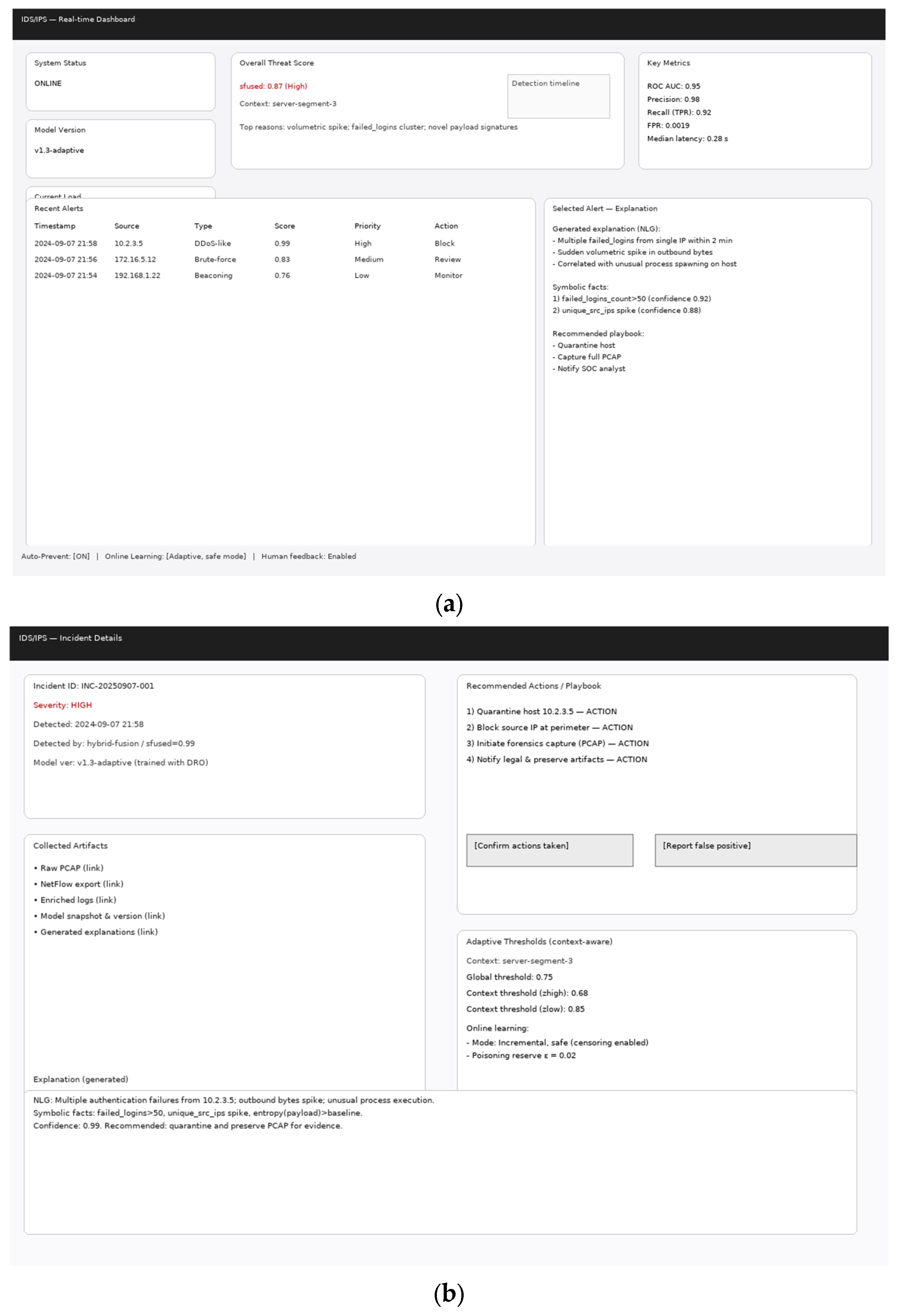

3.5. Development of the Virtual Neural Network System for Detecting and Preventing IDS/IPS Intrusions: Experimental Sample

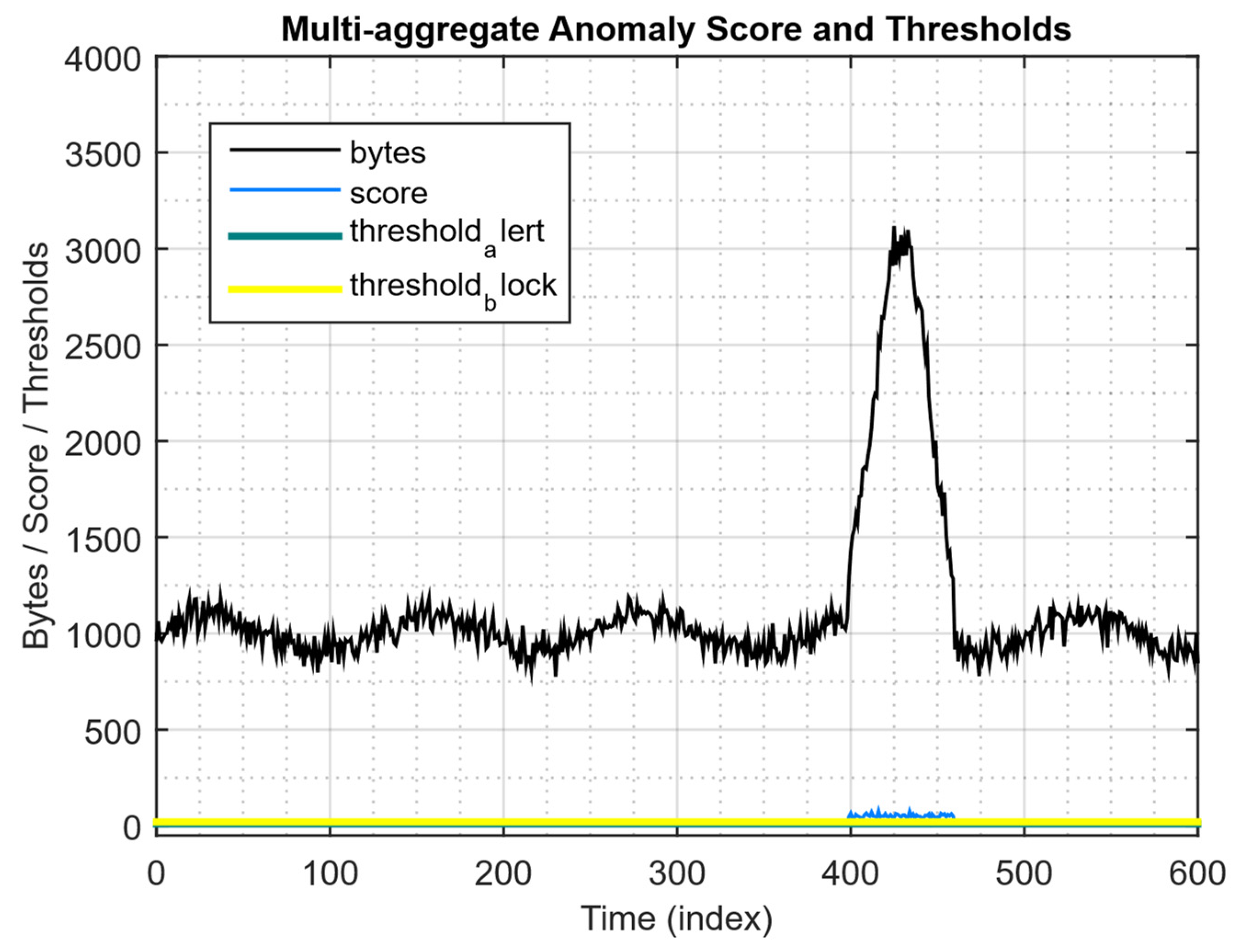

4. Case Study

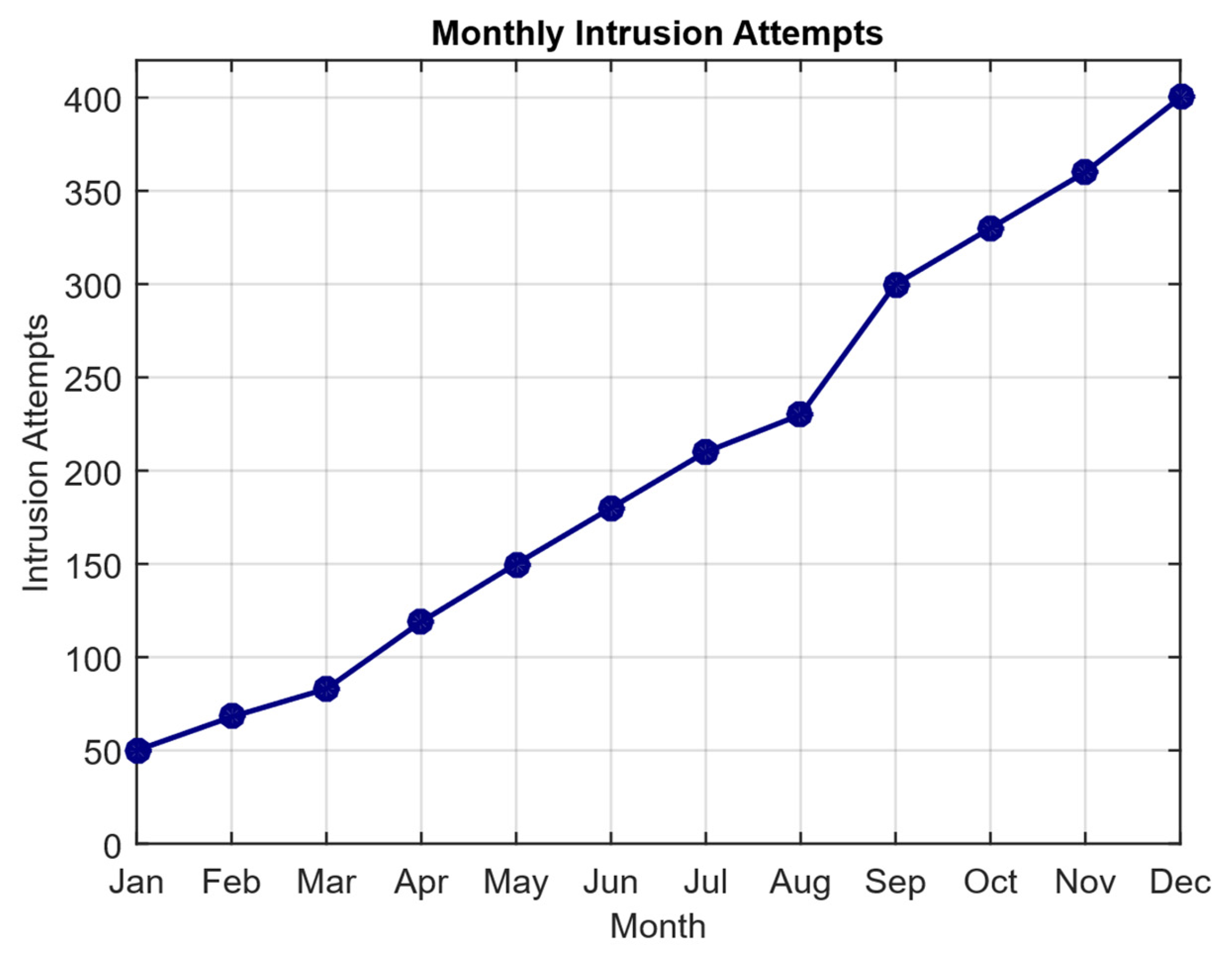

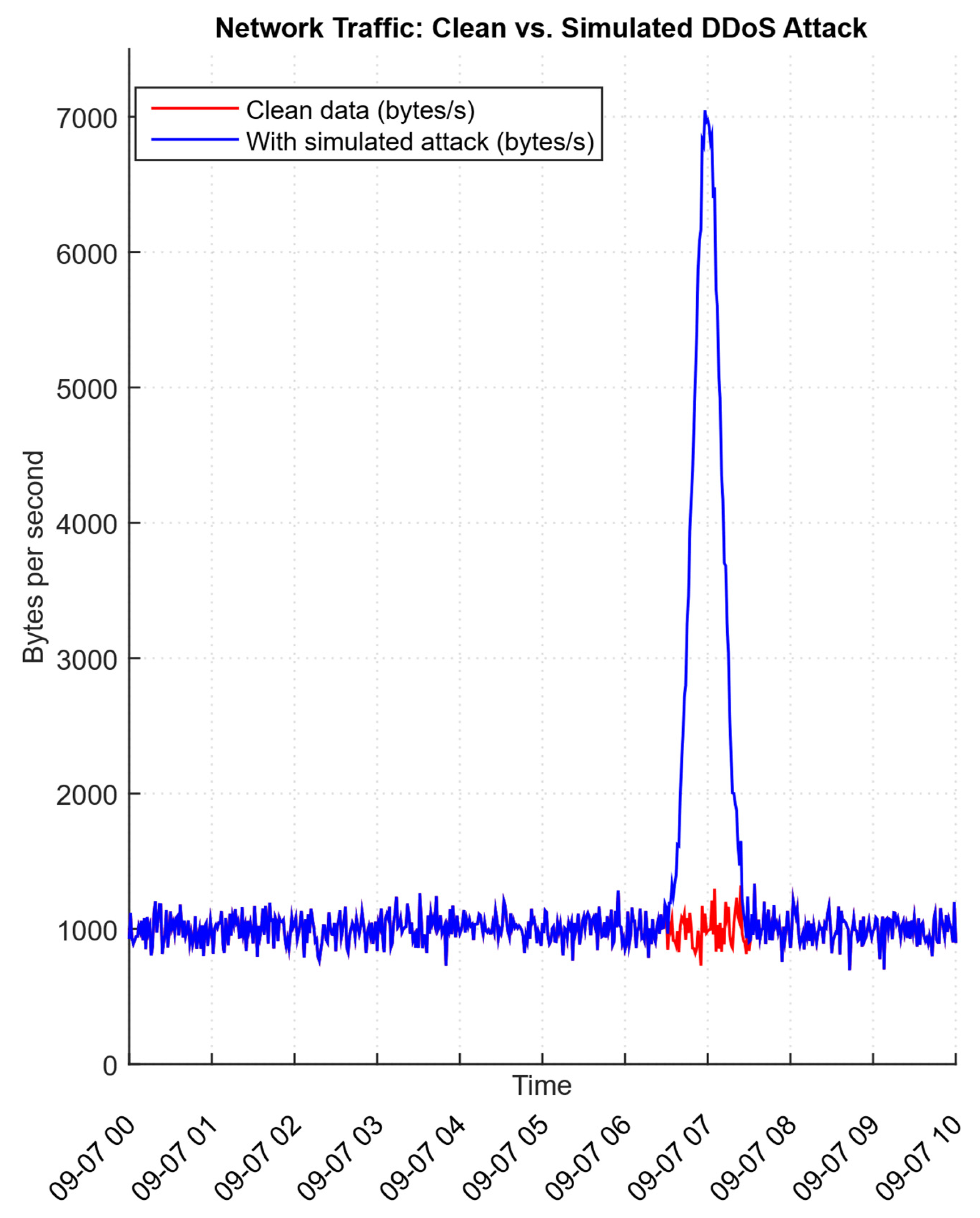

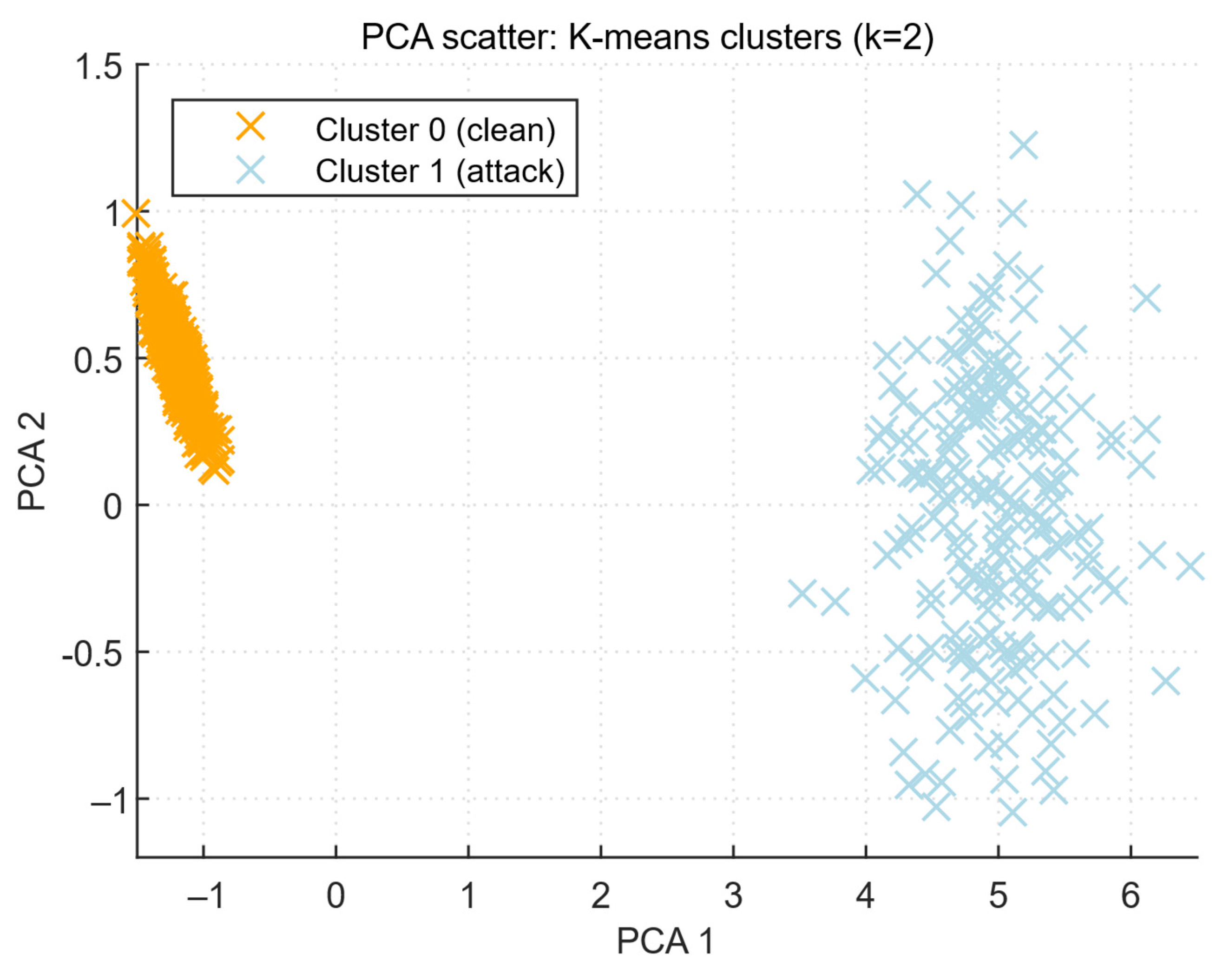

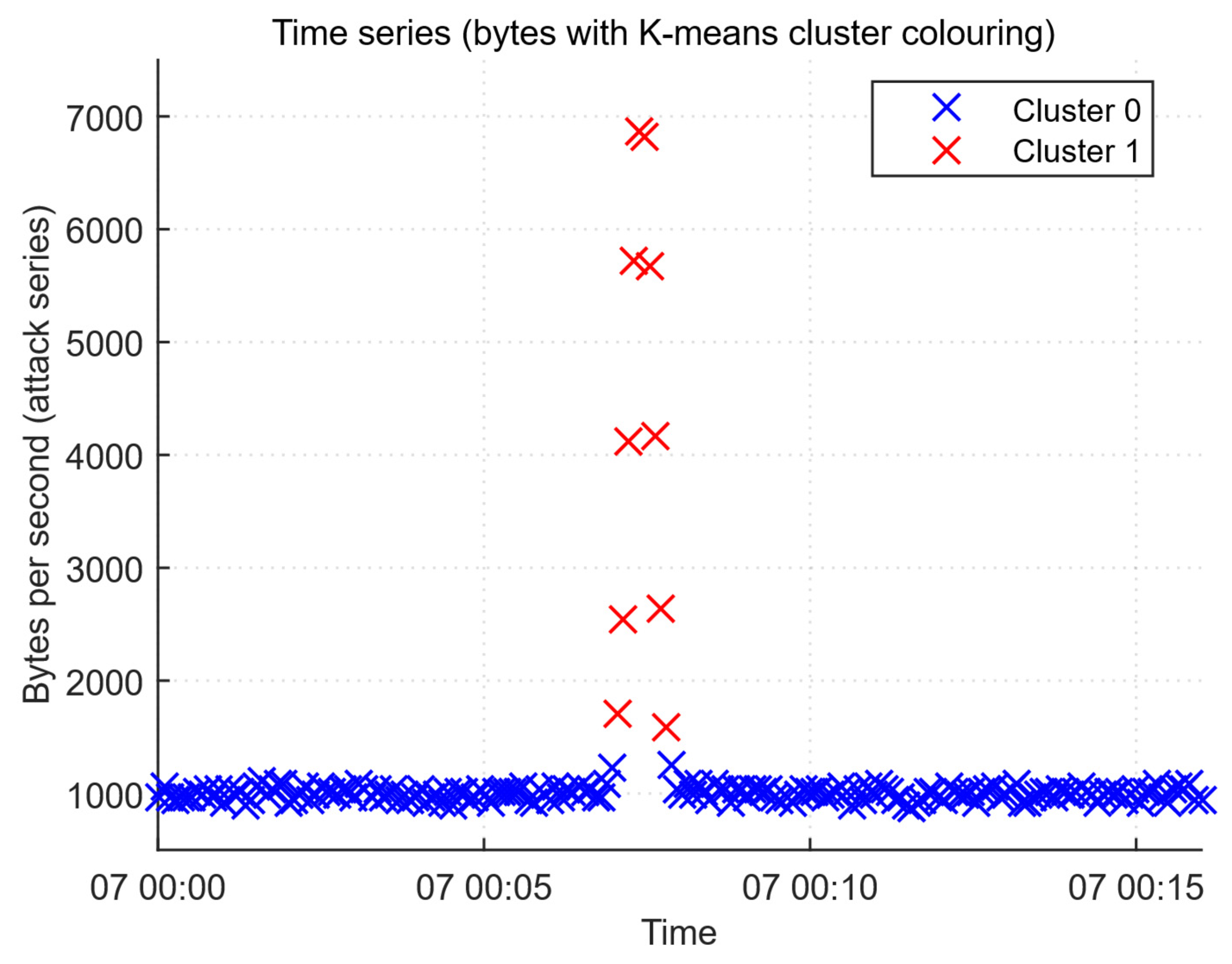

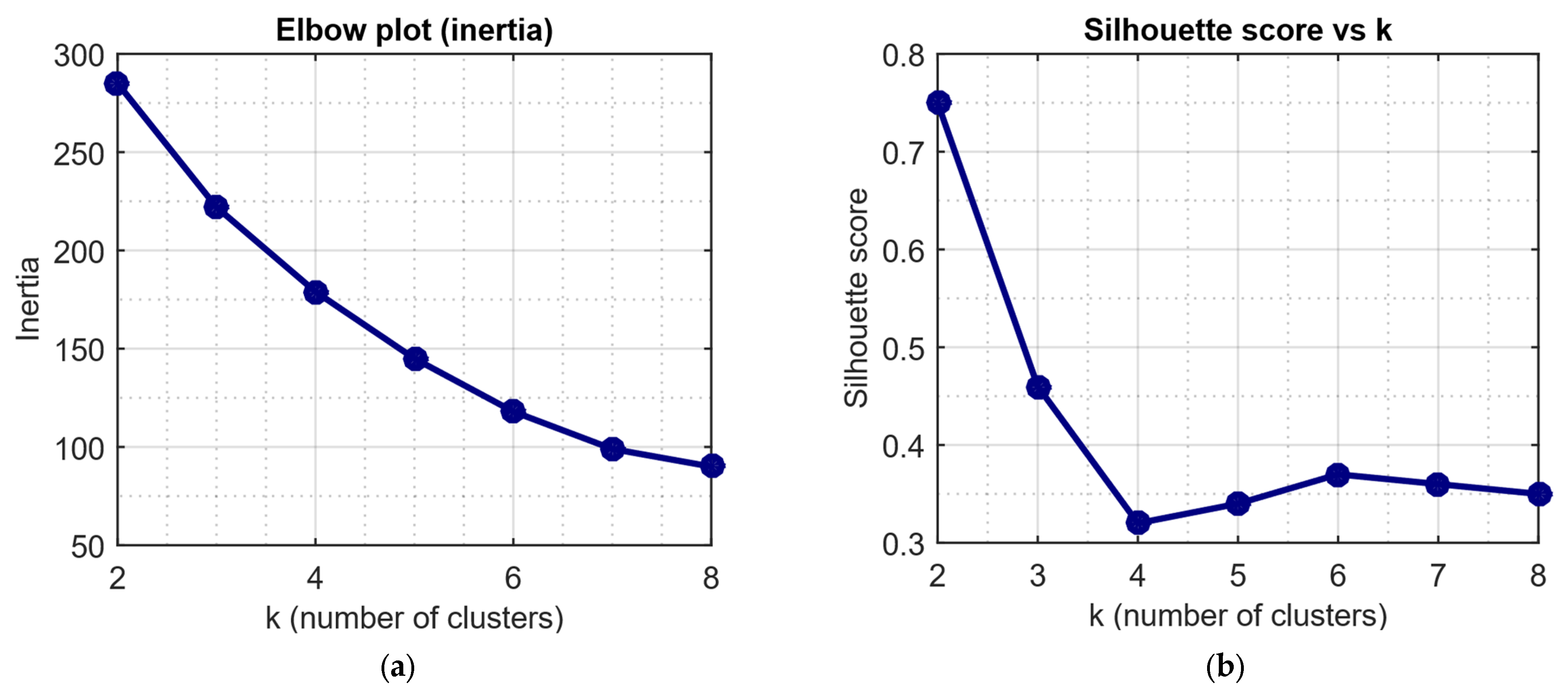

4.1. Formation, Analysis, and Pre-Processing of the Input Dataset

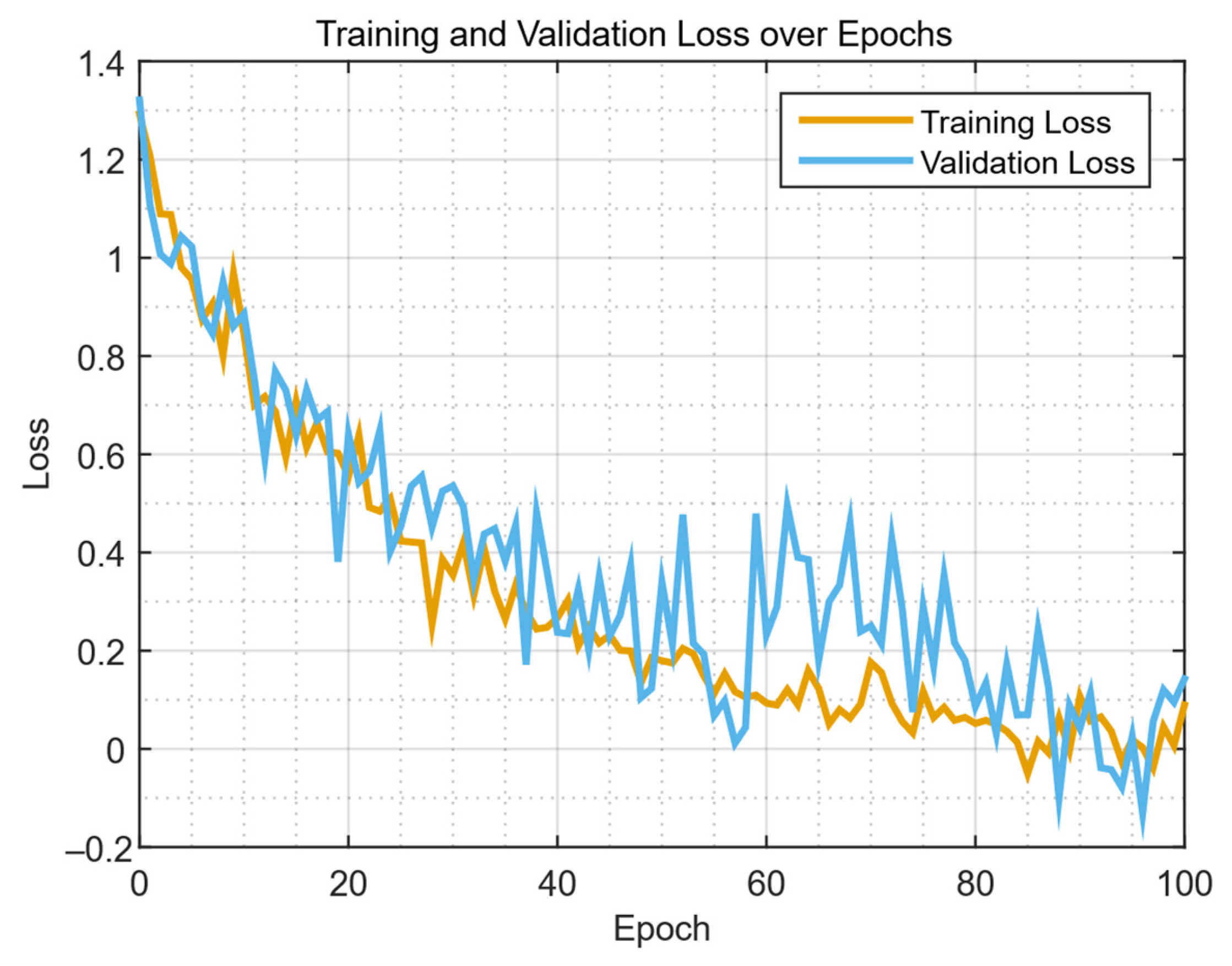

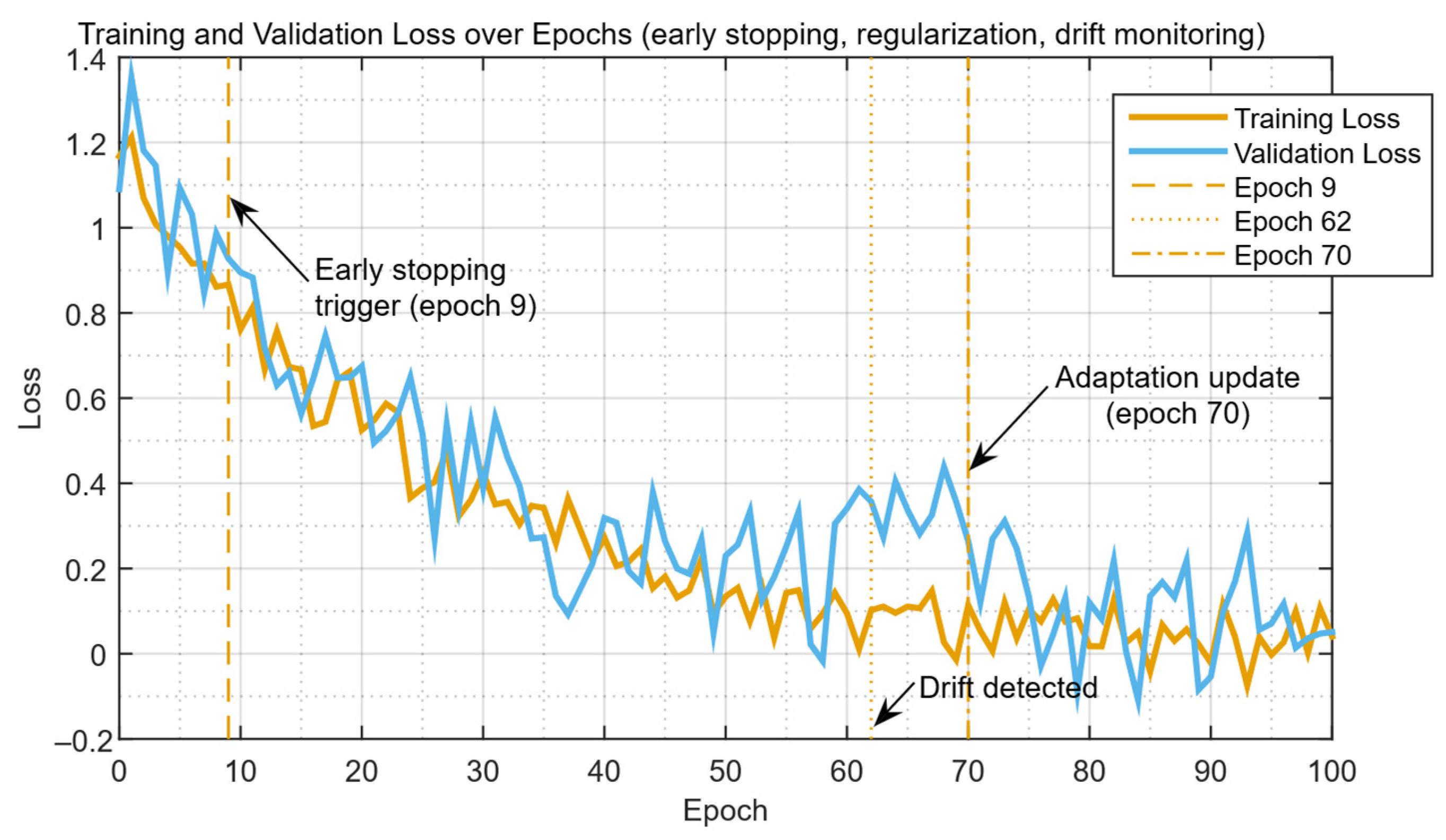

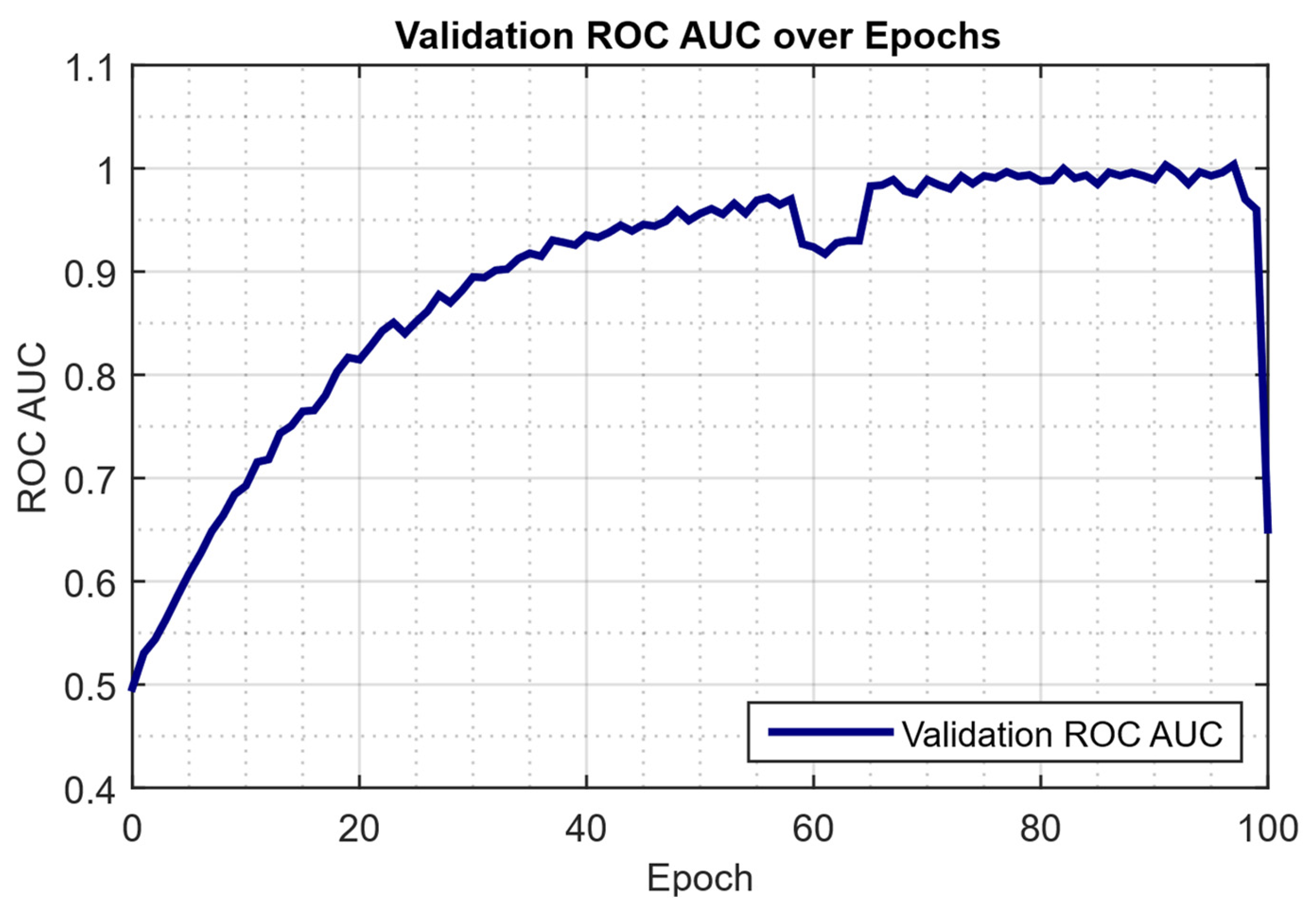

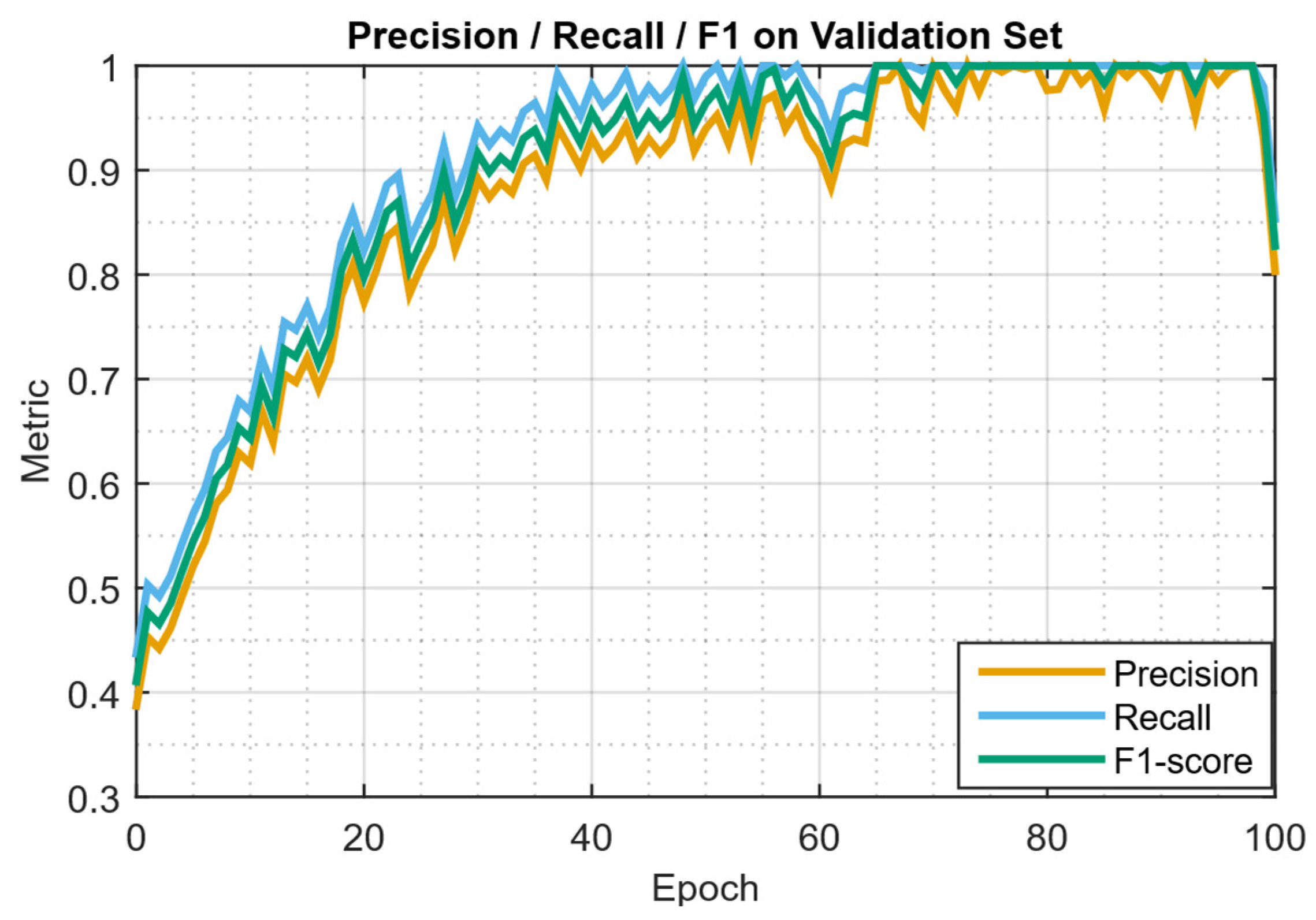

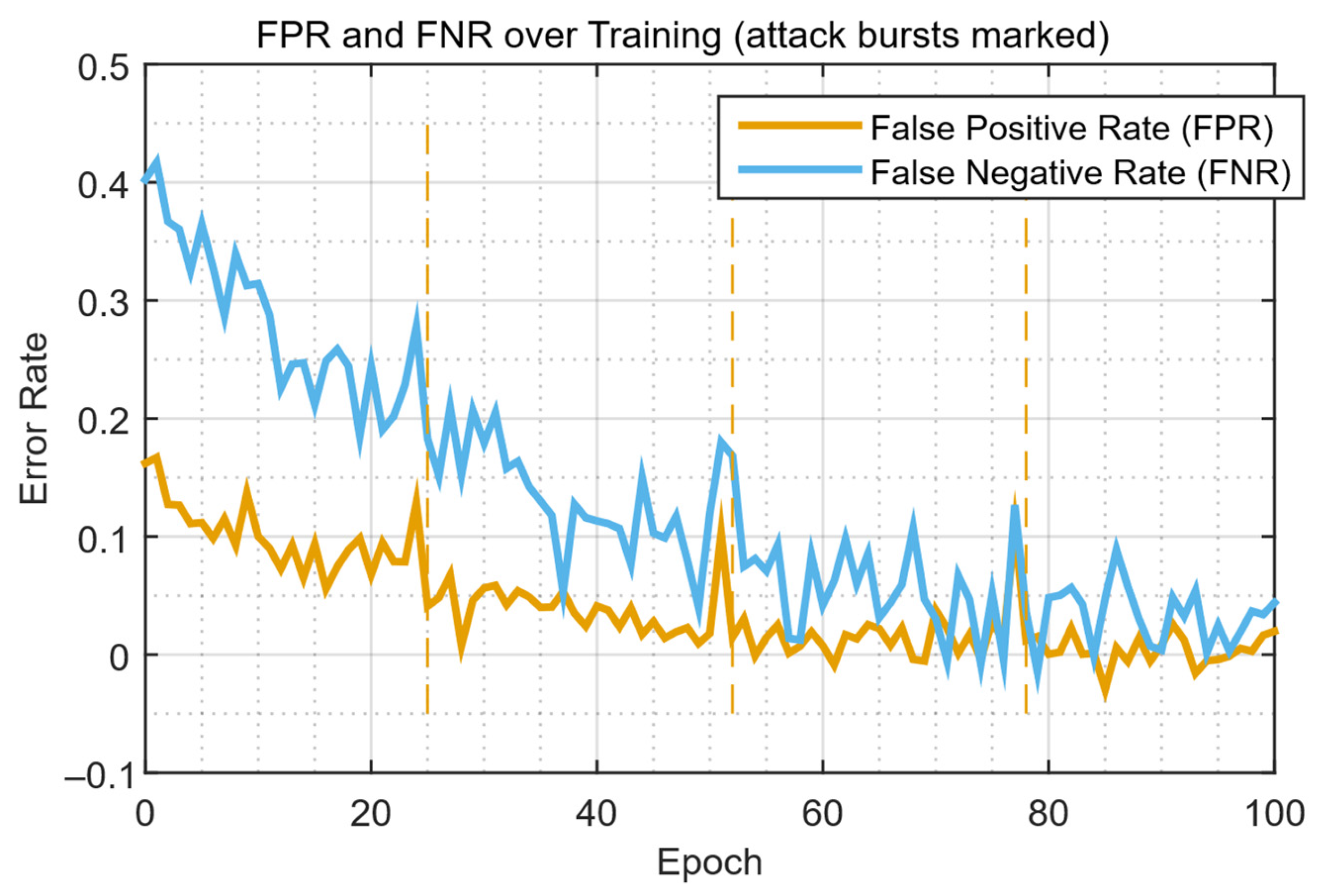

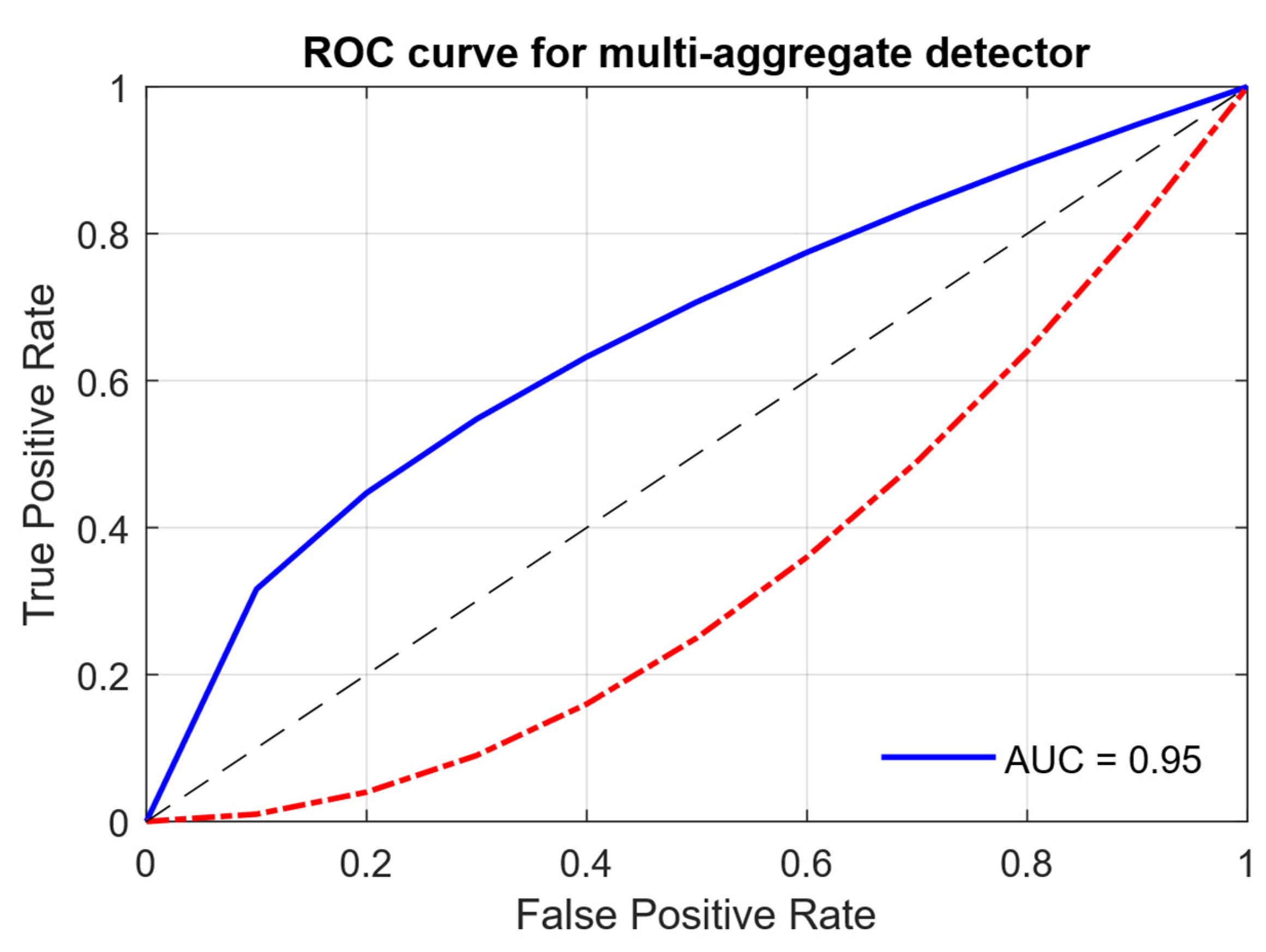

4.2. Results of the Developed Neural Network Training Effectiveness Evaluation

4.3. Results of an Example Solution to the IDS/IPS Intrusion Detection and Prevention Problem

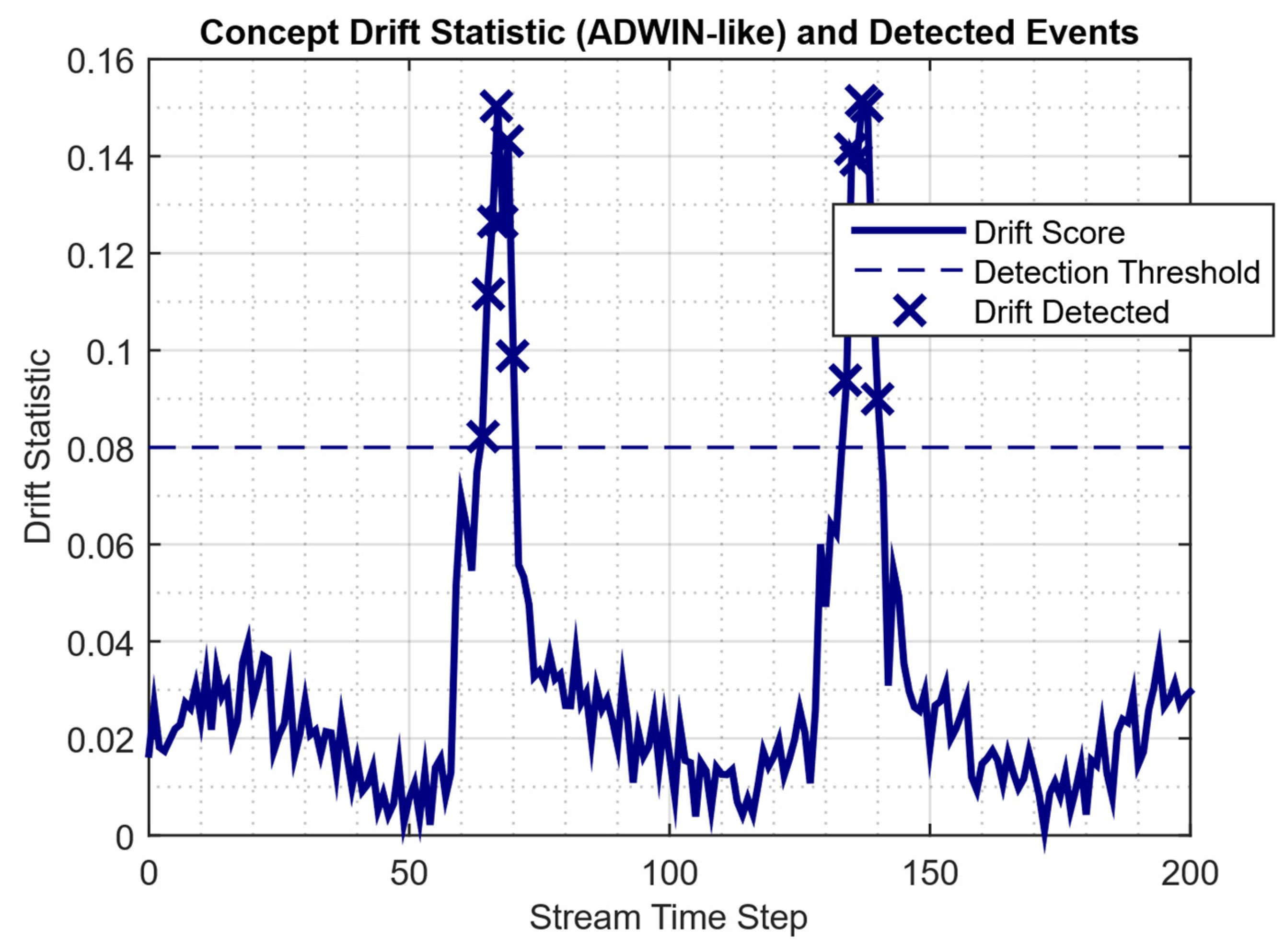

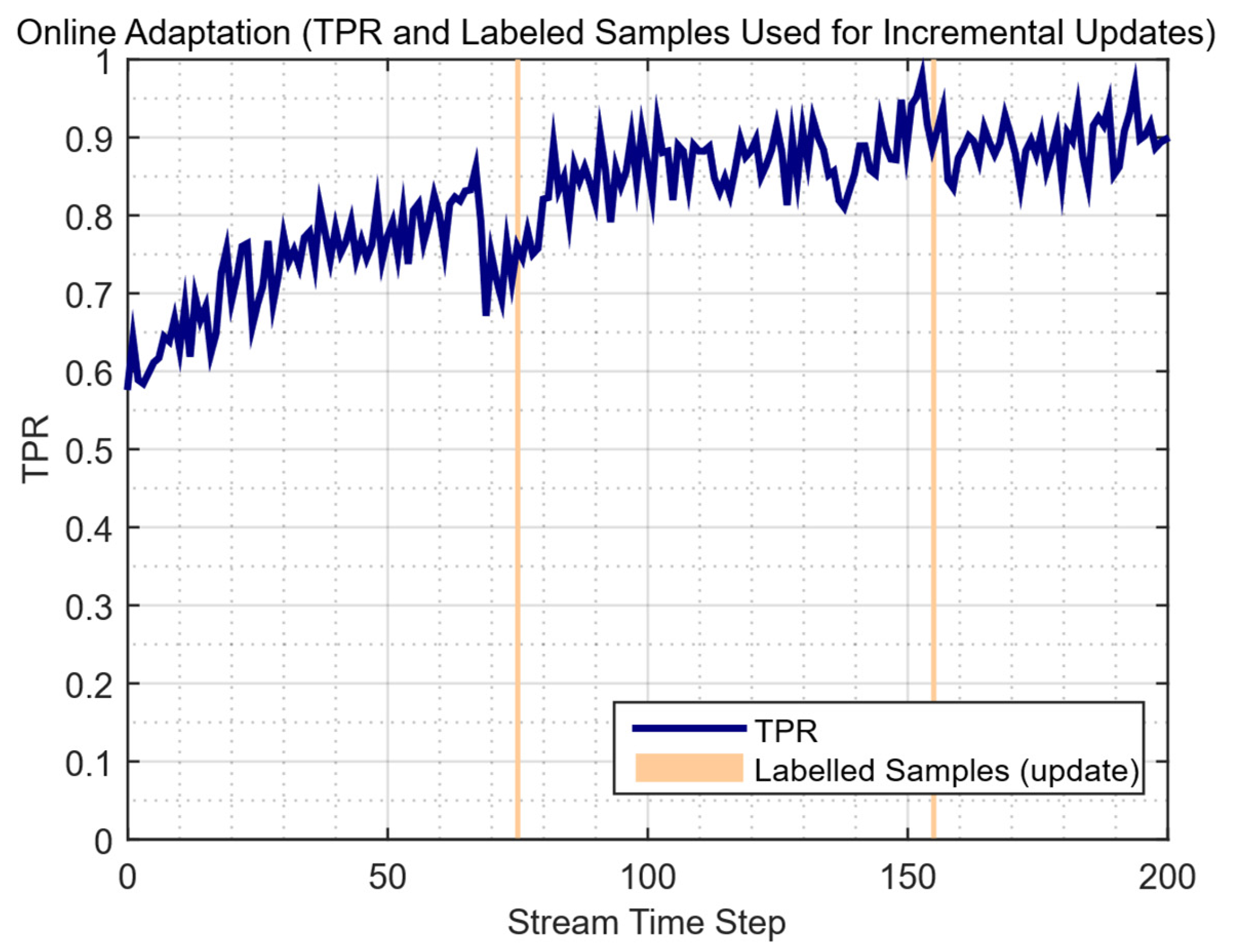

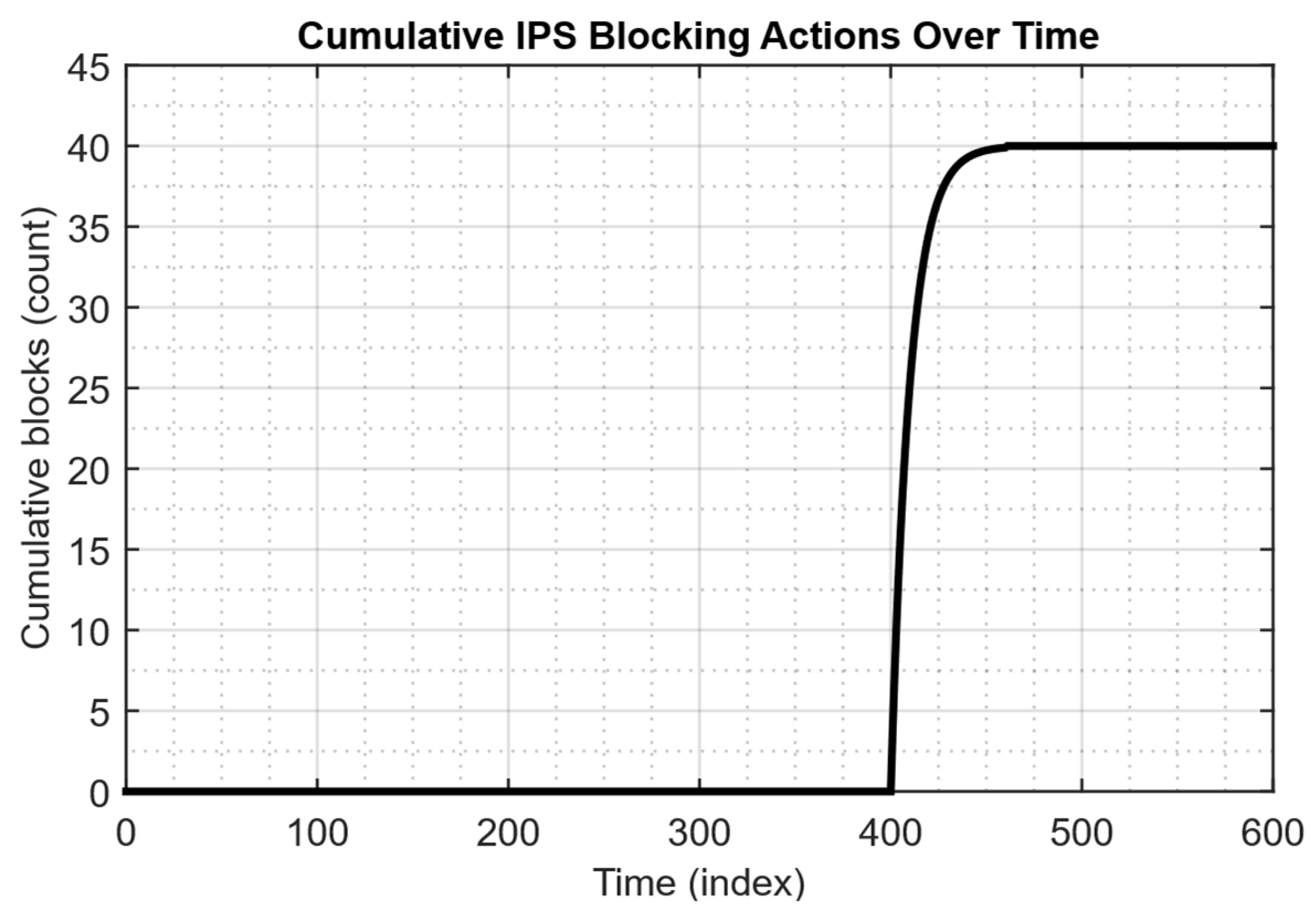

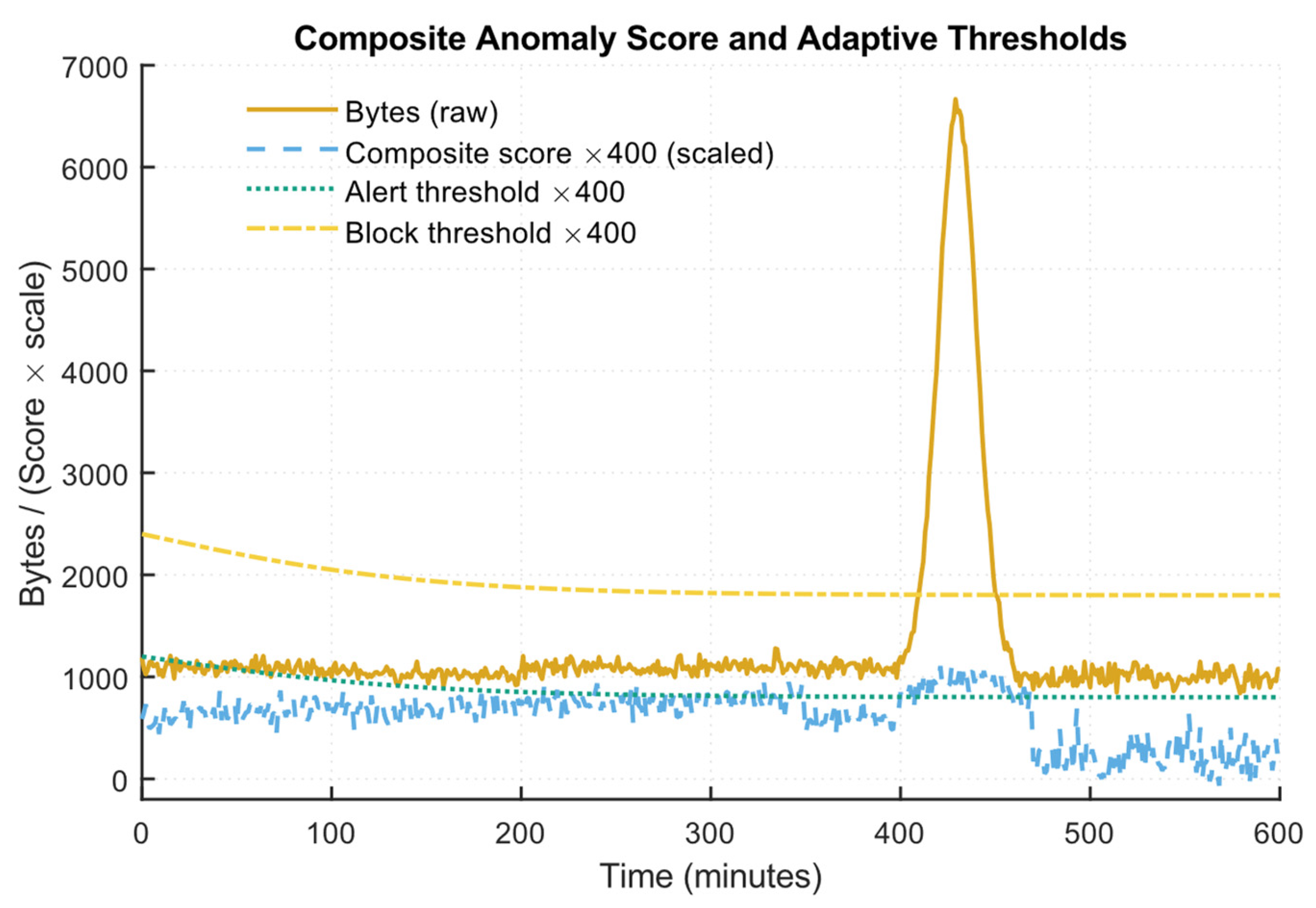

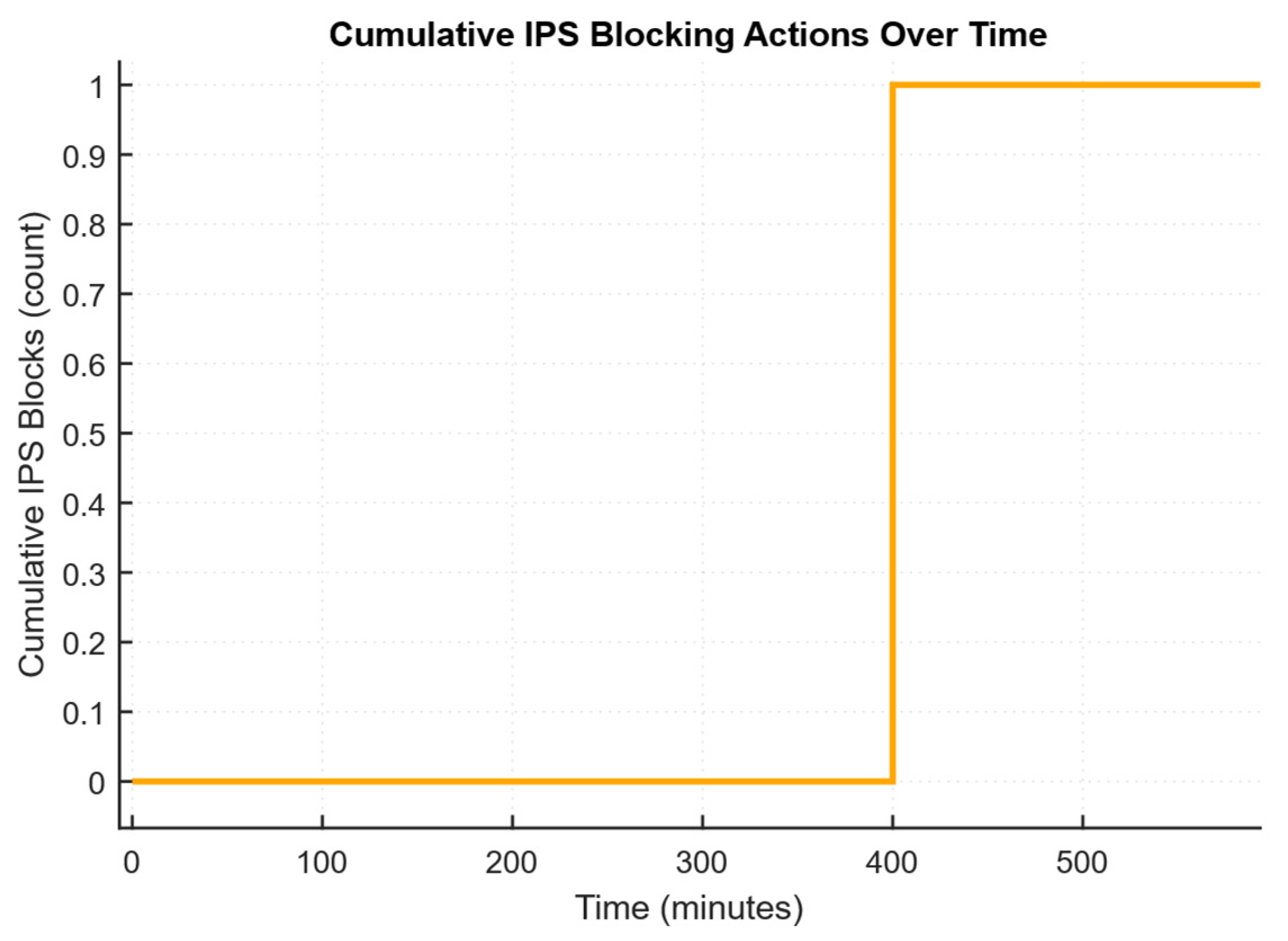

4.4. Results of Modelling Multi-Aggregate Monitoring and Rapid Response of IPS When Detecting a Significant Deviation from the Baseline

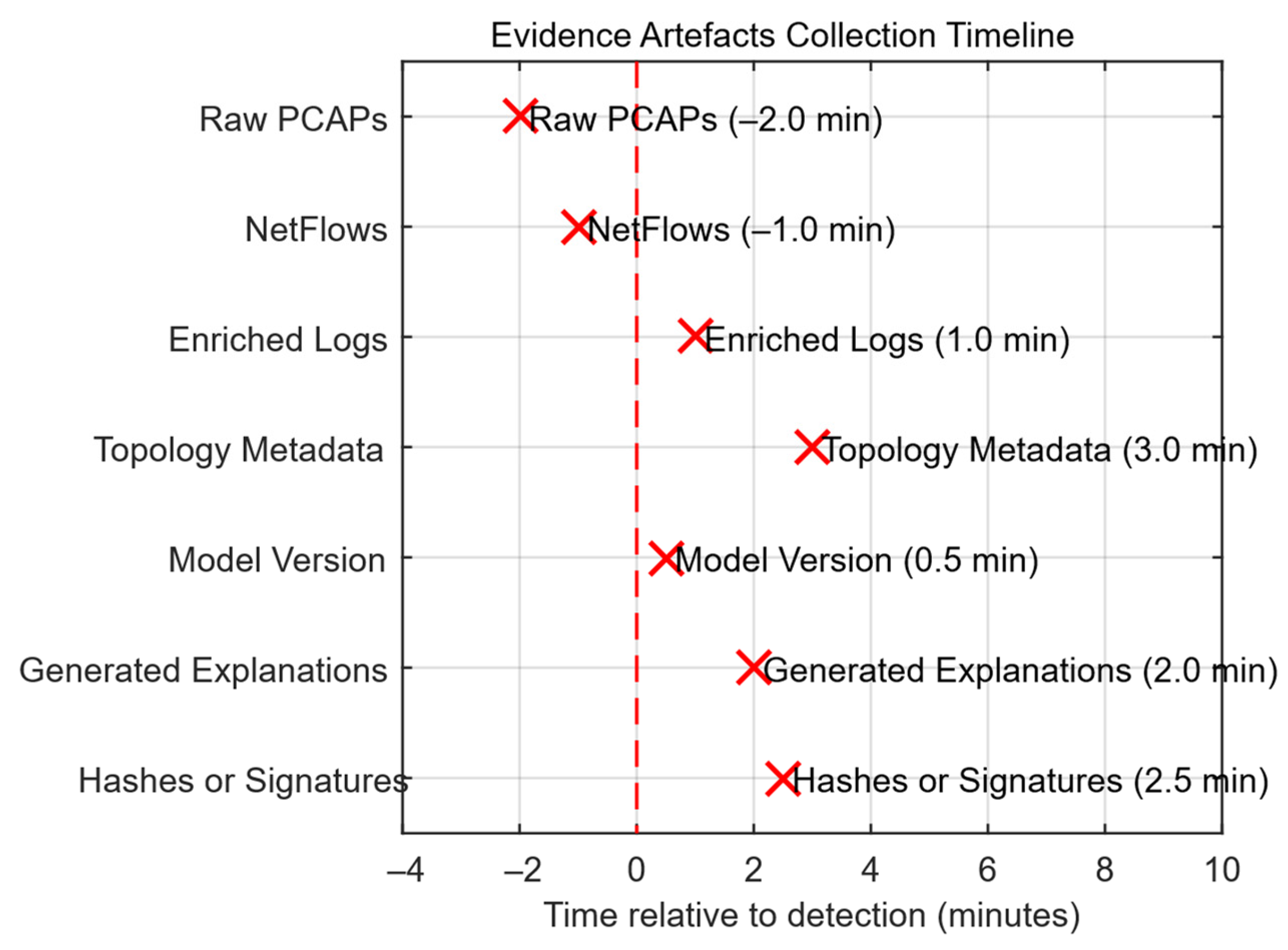

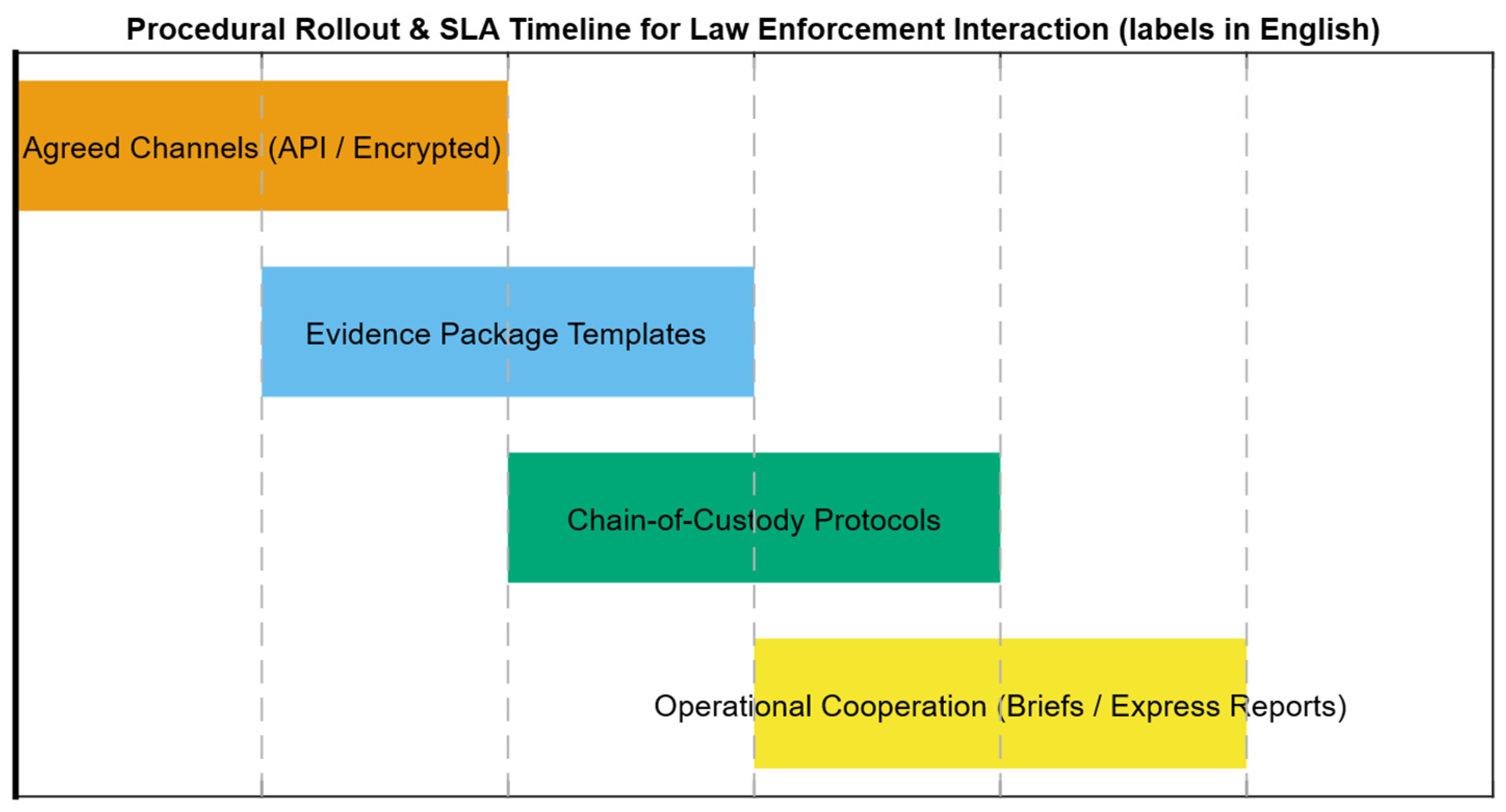

4.5. Results of Practical Implementation of the Developed System for Detection and Prevention of IDS/IPS Intrusions into the IDS/IPS Infrastructure and a Description of Its Interaction with Law Enforcement Agencies

- Pre-agreed exchange channels and SLAs for data requests (API or encrypted channels via SIEM or SOAR);

- Templates of legally verified evidence packages with metadata description and detection methodology;

- Chain-of-custody protocols (digital signatures, hashes, access logs);

- Mechanisms for operational cooperation (technical briefs, express reports for investigation).
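The chain-of-custody items above can be illustrated with a hash-chained access log. Each entry stores the SHA-256 of the artefact and the hash of the previous entry, so editing any past entry breaks verification. As a labelled simplification, an HMAC with a shared key stands in for the digital signatures that a real deployment would produce with an asymmetric key pair. The `CustodyLog` class and its fields are hypothetical.

```python
import hashlib
import hmac
import json
import time

FIELDS = ("artefact_sha256", "actor", "action", "timestamp", "prev_hash")

def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()

class CustodyLog:
    """Append-only, hash-chained access log for evidence artefacts (illustrative)."""

    def __init__(self, signing_key: bytes):
        self._key = signing_key
        self.entries = []

    def _payload(self, entry: dict) -> bytes:
        return json.dumps({k: entry[k] for k in FIELDS}, sort_keys=True).encode()

    def record(self, artefact: bytes, actor: str, action: str) -> dict:
        entry = {
            "artefact_sha256": sha256_hex(artefact),
            "actor": actor,
            "action": action,
            "timestamp": time.time(),
            "prev_hash": self.entries[-1]["entry_hash"] if self.entries else "0" * 64,
        }
        payload = self._payload(entry)
        entry["entry_hash"] = sha256_hex(payload)
        # HMAC stands in for an asymmetric digital signature in this sketch
        entry["mac"] = hmac.new(self._key, payload, hashlib.sha256).hexdigest()
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        prev = "0" * 64
        for e in self.entries:
            payload = self._payload(e)
            mac = hmac.new(self._key, payload, hashlib.sha256).hexdigest()
            if e["prev_hash"] != prev or e["entry_hash"] != sha256_hex(payload):
                return False
            if not hmac.compare_digest(e["mac"], mac):
                return False
            prev = e["entry_hash"]
        return True

log = CustodyLog(signing_key=b"demo-key-not-for-production")
log.record(b"...pcap bytes...", actor="soc-analyst-1", action="collected")
log.record(b"...pcap bytes...", actor="soc-analyst-2", action="exported to evidence package")
```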

5. Discussion

6. Conclusions

- It is proven mathematically that context-adaptive thresholding of the detectors reduces the false positive rate at a fixed worst-case sensitivity level: the optimal test is shown to be conditional likelihood-ratio thresholding with a threshold τ*(z), and, in an informative context, its FPR gain over the global threshold is strictly positive.

- Minimax-optimal rules of the “censored or robustified LR” type, together with margin conditions, were introduced to protect against targeted data poisoning and test-time adversarial perturbations. Under ε-contamination and δ-bounded perturbations, explicit guarantees were obtained: the worst-case FPR increases by no more than ε, and TPR is preserved through a reserve (margin) and the corresponding threshold settings.

- A hybrid neural network architecture for IDS/IPS with 2.8 · 10⁷ parameters is proposed and formalized. It comprises modality encoders (traffic, logs, metrics), a cognitive cross-modal layer, a temporal GNN, a variational probabilistic module, a differentiable symbolic block, a Bayesian fuser, an RL prioritization agent, and an NLG explainer. Experiments demonstrate its efficiency and high detection accuracy (about 92–94%) in DDoS outbreak cases.

- A practical adaptive online learning pipeline, “novelty detection → active labelling → incremental supervised update”, is developed and combined with DRO, a gradient-norm regularizer, clipping (censoring), and prioritized experience replay (PER) for the RL agent; it enables safe incremental updates on streaming data with a controlled risk of model degradation.

- To ensure evidentiary suitability and integration with law enforcement agencies, the system provides a chain of custody, automatic hashing (and signing) of artefacts, and legally verified evidence-package templates. This makes the proposed approach not only a detection tool but also practically applicable in operational and legal settings, subject to the stated restrictions.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

| Dataset | ROC-AUC | PR-AUC | F1 Score | FPR (at 95% TPR) | FNR | ECE | Brier Score |
|---|---|---|---|---|---|---|---|
| CIC-IDS2017 | 0.95 (±0.01) | 0.88 (±0.02) | 0.90 (±0.01) | 0.040 (±0.006) | 0.060 (±0.005) | 0.035 | 0.080 |
| UNSW-NB15 | 0.93 (±0.02) | 0.85 (±0.02) | 0.86 (±0.02) | 0.050 (±0.007) | 0.070 (±0.006) | 0.042 | 0.102 |
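The threshold-dependent and calibration metrics in this table can be computed as in the following sketch. Assumptions: binary labels, attack scores in [0, 1], FPR taken at the highest threshold whose TPR reaches 95%, and ECE computed with 10 equal-width bins that compare the mean predicted probability with the observed attack frequency in each bin. The binning actually used for the reported values is not stated.

```python
import math

def fpr_at_tpr(scores, labels, target_tpr=0.95):
    """FPR at the highest score threshold whose TPR reaches target_tpr (labels in {0, 1})."""
    pos = sorted((s for s, y in zip(scores, labels) if y == 1), reverse=True)
    neg = [s for s, y in zip(scores, labels) if y == 0]
    k = math.ceil(target_tpr * len(pos) - 1e-9)  # positives that must be flagged
    threshold = pos[max(k, 1) - 1]
    return sum(s >= threshold for s in neg) / len(neg)

def brier_score(probs, labels):
    """Mean squared error between predicted attack probability and the 0/1 label."""
    return sum((p - y) ** 2 for p, y in zip(probs, labels)) / len(probs)

def expected_calibration_error(probs, labels, n_bins=10):
    """Binary ECE: bin-weighted |observed attack rate - mean predicted probability|."""
    bins = [[] for _ in range(n_bins)]
    for p, y in zip(probs, labels):
        bins[min(int(p * n_bins), n_bins - 1)].append((p, y))
    ece = 0.0
    for b in bins:
        if b:
            conf = sum(p for p, _ in b) / len(b)
            rate = sum(y for _, y in b) / len(b)
            ece += len(b) / len(probs) * abs(rate - conf)
    return ece
```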

| Dataset | Metric | Before Drift | After Drift (No Online Update) | After Online Adaptation (Hourly Updates) |
|---|---|---|---|---|
| CIC-IDS2017 | ROC-AUC | 0.95 | 0.92 (Δ −0.03) | 0.945 (nearly back to the 0.95 baseline) |
| | PR-AUC | 0.88 | 0.83 (Δ −0.05) | 0.87 |
| | F1 score | 0.90 | 0.86 (Δ −0.04) | 0.895 |
| | FPR (at 95% TPR) | 0.040 | 0.060 (↑50%) | 0.045 |
| | FNR | 0.060 | 0.090 | 0.065 |
| | ECE | 0.035 | 0.050 | 0.038 |
| UNSW-NB15 | ROC-AUC | 0.93 | 0.90 (Δ −0.03) | 0.92 |
| | PR-AUC | 0.85 | 0.80 (Δ −0.05) | 0.84 |
| | F1 score | 0.86 | 0.82 (Δ −0.04) | 0.855 |
| | FPR (at 95% TPR) | 0.050 | 0.075 (↑50%) | 0.055 |
| | FNR | 0.070 | 0.100 | 0.075 |
| | ECE | 0.042 | 0.060 | 0.044 |


| Study | Approach | Key Contribution | Main Limitations |
|---|---|---|---|
| Systematic machine learning and IDS surveys | Exhaustive review of machine learning methods (supervised or unsupervised) | Systematization of algorithms, datasets, and metrics | Limited practical deployment; lack of validation on real installations |
| Deep learning IDS reviews | CNN, RNN, and GNN for traffic and logs | Highlight the ability of deep learning to extract complex spatio-temporal features | High computational requirements; sensitivity to data imbalance |
| Adaptive and online IDS (drift detection, ADWIN, DDM) | Streaming algorithms, incremental training | Drift detection and incremental update mechanisms | Labelling required; risk of error accumulation; latency during updates |
| Multi-agent and novelty detection | Deep novelty classifiers with clustering and active labelling | Detection of unseen (zero-day) attacks and adaptation | Orchestration complexity, infrastructure requirements, and limited validation on real networks |
| Drift-aware ensembles (AdIter or GBDT adaptations) | Ensemble weighting with drift detectors | Fast adaptation of base model weights | Setup complexity; possible increase in computational load |
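As an illustration of the drift-detection mechanisms listed for adaptive and online IDS, a compact version of DDM (Drift Detection Method) is sketched below. It monitors the running error rate p and its standard deviation s, records the minimum of p + s, and signals a warning or a drift when p + s exceeds that minimum by more than 2 or 3 recorded standard deviations. The minimum-sample count of 30 is a common default, not a value from the source.

```python
import math

class DDM:
    """Drift Detection Method on a stream of 0/1 prediction errors."""

    def __init__(self, min_samples=30, warn_k=2.0, drift_k=3.0):
        self.min_samples, self.warn_k, self.drift_k = min_samples, warn_k, drift_k
        self.reset()

    def reset(self):
        self.n, self.p = 0, 1.0
        self.p_min = self.s_min = math.inf

    def update(self, error):
        """Feed 1 for a misclassified sample, 0 otherwise."""
        self.n += 1
        self.p += (error - self.p) / self.n              # running error rate
        s = math.sqrt(self.p * (1.0 - self.p) / self.n)  # its standard deviation
        if self.n < self.min_samples:
            return "stable"
        if self.p + s <= self.p_min + self.s_min:
            self.p_min, self.s_min = self.p, s
        if self.p + s > self.p_min + self.drift_k * self.s_min:
            self.reset()                                  # trigger retraining here
            return "drift"
        if self.p + s > self.p_min + self.warn_k * self.s_min:
            return "warning"
        return "stable"

# 10% error rate for 200 samples, then the model fails on every sample
stream = [1 if i % 10 == 9 else 0 for i in range(200)] + [1] * 100
detector = DDM()
states = [detector.update(e) for e in stream]
```

DDM needs labelled outcomes, which is exactly the "labelling required" limitation noted in the table.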

| Step | Description | Aim |
|---|---|---|
| 0 | Data collection and preprocessing (balancing, augmentation) | Normalization, tokenization |
| 1 | Pre-training of modal encoders ϕT, ϕL, ϕM (supervised or self-supervised) | Minimize Lsup + λcontr · Lcontr |
| 2 | Initialization of cognitive memory and GNN (batch training) | Train GNN: min Σ ℓnode |
| 3 | Variational training of the probabilistic module (ELBO) | Maximize LELBO (reparameterization trick) |
| 4 | Training of the differentiable-symbolic module (soft rules) | Minimize Llogic (soft constraints) |
| 5 | Joint fine-tuning of the fusion module (multitask) | |
| 6 | Adversarial or DRO fine-tuning: gradient-norm regularization or adversarial examples | approximated by a gradient regularizer |
| 7 | RL-agent training with human feedback (PPO or actor-critic) | Maximize J(θ) using the PPO surrogate LPPO |
| 8 | RL-agent training with human feedback (PPO or actor-critic) | Update the policy with operator labels rhuman |
| 9 | Joint deployment fine-tuning (online continual training, constrained by ε-contamination bounds) | Use robust update rules, reservoir sampling, and clipped updates |
| 10 | Explainability polishing (NLG) and calibration | Minimize LNLG + λfaith · Lfaith |
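The robust update rules of step 9 can be sketched with reservoir sampling (Algorithm R), which keeps a uniform sample of the stream for replay, and a per-coordinate clipped parameter update. Clipping each coordinate is only one possible reading of "clipped updates"; clipping by norm would be an equally valid choice.

```python
import random

def reservoir_update(reservoir, item, seen, capacity, rng):
    """Algorithm R: afterwards `reservoir` is a uniform sample of the first
    seen + 1 stream items; `seen` counts the items observed before `item`."""
    if len(reservoir) < capacity:
        reservoir.append(item)
    else:
        j = rng.randrange(seen + 1)  # uniform index in [0, seen]
        if j < capacity:
            reservoir[j] = item
    return reservoir

def clipped_update(params, update, max_step):
    """Apply an update whose magnitude is capped per coordinate at max_step."""
    return [p + max(-max_step, min(max_step, u)) for p, u in zip(params, update)]

rng = random.Random(0)
events = [f"event-{k}" for k in range(1000)]  # stand-in for labelled stream events
replay = []
for seen, event in enumerate(events):
    reservoir_update(replay, event, seen, capacity=50, rng=rng)
```

A uniform replay buffer limits how much a short poisoned burst can dominate later updates: each event survives in the buffer with probability capacity/seen, regardless of when it arrived.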

| Module (Layer) | Dimensions or Configuration (Components) | Number of Parameters | Justification |
|---|---|---|---|
| Token embedding (logs) | vocab = 30 k × 256 (embed_dim = 256) | $7.68 \cdot 10^{6}$ | Universal text embedding. The dimension 256 is an accuracy/memory trade-off for contextual log representation and NLG. |
| Output linear (NLG) | $d_{model} = 256$ → vocab = 30 k | $7.71 \cdot 10^{6}$ | Separate output projection for the independent decoder (not weight-tied to the embedding). |
| Traffic Conv1D | Conv1D: in = 32, filters = 128, k = 5; $d_{model} = 256$ | 20,608 | Lightweight convolution for local traffic features; small number of parameters. |
| Traffic Transformer | $L_T = 4$ layers, $d_{model} = 256$, $d_{ff} = 1024$ | 3,153,920 | Four layers balance speed and long-range memory (temporal patterns). |
| Logs Transformer | $L_L = 6$ layers, $d_{model} = 256$, $d_{ff} = 1024$ | 4,730,880 | Deeper, for text semantics (logs, messages). |
| Metrics MLP | 16 → 128 → 256 | 35,200 | Simple projection of metrics into a common semantic space. |
| Projections into semantic space | 3 × (256 × 256) ($W_T$, $W_L$, $W_M$) | 197,376 | Unification of modalities in $d_s = 256$. |
| Cognitive layer (fusion) | K = 32 sensory tokens (32 × 256), $W_Q$ (256 × 256), $W_K$ × 3, $W_V$ × 3, memory M = 512 | 926,208 | Cross-modal attention with a differentiable key-value memory. |
| GNN (event correlation) | $L_G = 3$ × (256 × 256 W + attention vectors) | 198,912 | Graph relations: propagates semantics between related entities. |
| VAE (probabilistic module) | latent $u_{dim} = 64$; encoder (256 → 128 → 128 → μ/logvar) | 107,264 | Uncertainty estimation (posterior predictive). |
| Symbolic module (differentiable rules) | $n_{rules} = 10$ × (256 → 64 → 1 nets) | 165,130 | A small set of differentiable rules (fuzzy predicates). |
| Fusion head (Bayesian merge) | 256 → 1 (logit combination) | 260 | Probabilistic fusion of the symbolic and cognitive outputs. |
| RL: actor and critic | actor: 256 → 256 → 8; critic: 256 → 256 → 1 | 67,848 / 66,049 | Prioritization agent (action_space ≈ 8). |
| NLG decoder Transformer | $L_{dec} = 4$ layers, $d_{model} = 256$, $d_{ff} = 1024$ | 3,153,920 | Compact decoder for explanation generation. |
| Total | – | ≈28,213,575 | A medium-sized model suitable for a production cluster. |
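
Several rows of the parameter table follow directly from standard layer formulas. The sketch below recomputes them, assuming dense layers carry one bias per output unit, the embedding has no bias, and the vocabulary is exactly 30,000 tokens; the helper names are hypothetical. The Transformer, cognitive-layer, and GNN rows depend on details the table does not state (normalization layers, bias placement), so they are not reproduced here.

```python
def embedding_params(vocab: int, dim: int) -> int:
    return vocab * dim  # lookup table, no bias


def linear_params(d_in: int, d_out: int, bias: bool = True) -> int:
    return d_in * d_out + (d_out if bias else 0)


def conv1d_params(c_in: int, c_out: int, kernel: int, bias: bool = True) -> int:
    return c_in * c_out * kernel + (c_out if bias else 0)


def mlp_params(*dims: int) -> int:
    """Stack of dense layers with biases, e.g. mlp_params(16, 128, 256)."""
    return sum(linear_params(a, b) for a, b in zip(dims, dims[1:]))


rows = {
    "Token embedding (logs)": embedding_params(30_000, 256),
    "Output linear (NLG)": linear_params(256, 30_000),
    "Traffic Conv1D": conv1d_params(32, 128, 5),
    "Metrics MLP": mlp_params(16, 128, 256),
    "Projections into semantic space": 3 * linear_params(256, 256),
    "Symbolic module": 10 * mlp_params(256, 64, 1),
    "RL actor": mlp_params(256, 256, 8),
    "RL critic": mlp_params(256, 256, 1),
}

for name, count in rows.items():
    print(f"{name:34s} {count:>10,d}")
```

The embedding and output-projection counts (7,680,000 and 7,710,000) are what bring the listed components to the stated total of about 28.2 million parameters.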

| Component | Analytical Expression | FLOPs | Fraction of Total |
|---|---|---|---|
| Traffic Transformer (4 layers), n = 256 | $4 \cdot (4nd^2 + 2n^2d + 2nd\,d_{ff})$ | 939,524,096 | 43.36% |
| Logs Transformer (6 layers), n = 128 | $6 \cdot (4nd^2 + 2n^2d + 2nd\,d_{ff})$ | 654,311,424 | 30.20% |
| NLG decoder (4 layers), $L_{gen} = 32$ | $4 \cdot 4 \cdot (4nd^2 + 4 \cdot 2n^2d + 4 \cdot 2nd\,d_{ff})$ | 450,887,680 | 20.81% |
| Cross-modal attention (K = 32 → N = 448) | $(2N + 2K) \cdot d^2 + 2KNd$ | 70,254,592 | 3.24% |
| Memory attention (K × M) | $2KMd + (K + M) \cdot d^2$ | 44,040,192 | 2.03% |
| GNN (V = 100, E = 800) | $Vd^2 + Ed$ | 6,758,400 | 0.31% |
| Conv1D with projections | small dense ops | 217,984 | 0.01% |
| VAE with small modules | small nets | 200,000 | 0.01% |
| Symbolic module | fuzzy-rule nets | 200,000 | 0.01% |
| Actor network (policy) | small MLP | 133,120 | 0.01% |
| Critic network | small MLP | 131,328 | 0.01% |
| Total (forward pass, batch size 1) | – | $\approx 2.17 \cdot 10^{9}$ | 100% |
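
The FLOP counts can be checked with a few closed-form helpers. In the sketch below (hypothetical function names, d = 256 and d_ff = 1024 as in the parameter table), the per-layer Transformer cost 4nd² + 2n²d + 2nd·d_ff reproduces the Traffic and Logs Transformer rows, and the remaining helpers reproduce the cross-modal attention, memory attention, and GNN rows. These formulas count one multiply-accumulate as one operation; under the convention of two FLOPs per multiply-accumulate, every figure doubles.

```python
def transformer_flops(layers: int, n: int, d: int, d_ff: int) -> int:
    """Per layer: 4nd^2 (Q, K, V, O projections) + 2n^2d (QK^T and AV)
    + 2nd*d_ff (two feed-forward matrices)."""
    return layers * (4 * n * d * d + 2 * n * n * d + 2 * n * d * d_ff)


def cross_attention_flops(k: int, n: int, d: int) -> int:
    """Q and output projections on K query tokens, K/V projections on N
    context tokens, plus the K x N score matrix and the weighted sum."""
    return (2 * n + 2 * k) * d * d + 2 * k * n * d


def memory_attention_flops(k: int, m: int, d: int) -> int:
    """K query tokens attending over M memory slots."""
    return 2 * k * m * d + (k + m) * d * d


def gnn_flops(v: int, e: int, d: int) -> int:
    """One dense transform per node plus one d-vector message per edge."""
    return v * d * d + e * d


D, D_FF = 256, 1024
traffic = transformer_flops(4, 256, D, D_FF)
logs = transformer_flops(6, 128, D, D_FF)
print(f"traffic={traffic:,} logs={logs:,}")
print(f"cross={cross_attention_flops(32, 448, D):,} "
      f"memory={memory_attention_flops(32, 512, D):,} gnn={gnn_flops(100, 800, D):,}")
```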

| Device | Forward FLOPs | $t_{forward}$ at Peak (s) | $t_{train\_step} \approx 3 \cdot t_{forward}$ (s) |
|---|---|---|---|
| NVIDIA V100 (FP32 peak 15.7 TFLOPS) | $2.167 \cdot 10^{9}$ | 0.000138 (≈0.138 ms) | 0.000414 (≈0.414 ms) |
| NVIDIA A100 (FP32 peak 19.5 TFLOPS) | $2.167 \cdot 10^{9}$ | 0.000111 (≈0.111 ms) | 0.000333 (≈0.333 ms) |
| RTX 3090 (FP32 peak 35.6 TFLOPS) | $2.167 \cdot 10^{9}$ | 0.000061 (≈0.061 ms) | 0.000183 (≈0.183 ms) |
| High-end CPU (FP32 ≈ 0.5 TFLOPS) | $2.167 \cdot 10^{9}$ | 0.00433 (≈4.33 ms) | 0.01299 (≈13.0 ms) |
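
The latency columns equal the forward FLOPs divided by each device's FP32 peak throughput, so they are ideal lower bounds: real kernels rarely sustain peak. The sketch below reproduces the table and adds a hypothetical `utilization` knob (not part of the original analysis) for more realistic estimates; the training-step factor of 3 is the table's rule of thumb (a backward pass costing roughly twice the forward pass).

```python
def ideal_latency_s(flops: float, peak_tflops: float, utilization: float = 1.0) -> float:
    """Runtime if the device sustains `utilization` x its peak throughput."""
    if not 0.0 < utilization <= 1.0:
        raise ValueError("utilization must be in (0, 1]")
    return flops / (peak_tflops * 1e12 * utilization)


FORWARD_FLOPS = 2.167e9
TRAIN_FACTOR = 3.0  # table's assumption: train step ~ 3x forward

for device, peak in [("V100", 15.7), ("A100", 19.5), ("RTX 3090", 35.6), ("CPU", 0.5)]:
    fwd = ideal_latency_s(FORWARD_FLOPS, peak)
    print(f"{device:8s} forward {fwd * 1e3:.3f} ms, "
          f"train step {TRAIN_FACTOR * fwd * 1e3:.3f} ms")
```

With an assumed 30% sustained utilization, for instance, the V100 forward estimate rises from about 0.138 ms to about 0.46 ms.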

| Feature | Type | Unit | Short Description | Count | Mean | Std | Min | 25% | 50% | 75% | Max |
|---|---|---|---|---|---|---|---|---|---|---|---|
| bytes_clean | float | bytes/s | Baseline bytes per second (no attack) | 600 | 1141.39 | 97.21 | 853.88 | 1076.65 | 1141.92 | 1204.61 | 1466.36 |
| bytes_attack | float | bytes/s | Bytes per second with the injected attack scenario | 600 | 1429.44 | 1036.52 | 853.88 | 1080.64 | 1150.69 | 1224.96 | 7038.22 |
| pkts_attack | float | packets/s | Packets per second with the injected attack scenario | 600 | 138.79 | 53.09 | 68.55 | 109.27 | 134.31 | 147.29 | 401.14 |
| unique_src_ips_attack | int | count | Unique source IPs observed (attack scenario) | 600 | 15.78 | 37.98 | 3 | 4 | 4 | 4 | 202 |
| failed_logins_attack | float | count | Failed login attempts (attack scenario) | 600 | 0.465 | 0.851 | 0.102 | 0.167 | 0.201 | 0.240 | 4.21 |
| cpu_percent_attack | float | % | CPU utilization (attack scenario) | 600 | 21.62 | 5.91 | 12.62 | 17.11 | 21.01 | 24.29 | 46.16 |

| Feature | n_Clean | n_Attack | mean_Clean | mean_Attack | var_Clean | var_Attack | var_Ratio | Levene_Stat | Levene_p | Bartlett_Stat | Bartlett_p | KS_Stat | KS_p | MannW_Stat | MannW_p | Cohens_d |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| bytes_attack | 540 | 60 | 1136.876 | 4062.497 | 9549.6 | $3 \cdot 10^{6}$ | 312.7 | 1376.5 | 0 | 1719.1 | 0 | 0.95 | 0 | 473 | 0 | 5.313 |
| pkts_attack | 540 | 60 | 125.636 | 257.17 | 548.89 | 7764 | 14.15 | 410.19 | 0 | 339.03 | 0 | 0.811 | 0 | 2696 | 0 | 3.704 |
| unique_src_ips_attack | 540 | 60 | 4.013 | 121.717 | 0.306 | 1962.2 | 6413.4 | 1079.6 | 0 | 3321.8 | 0 | 1.0 | 0 | 0 | 0 | 8.453 |
| failed_logins_attack | 540 | 60 | 0.196 | 2.883 | 0.0016 | 0.722 | 462.3 | 1477.1 | 0 | 1923.1 | 0 | 1.0 | 0 | 0 | 0 | 9.971 |
| cpu_percent_attack | 540 | 60 | 20.121 | 35.092 | 13.6 | 25.3 | 1.86 | 11.59 | 0.0007 | 12.095 | 0.0005 | 0.98 | 0 | 15 | 0 | 3.897 |
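
The Cohens_d column appears consistent with the pooled-standard-deviation definition of Cohen's d, in which each group variance is weighted by n − 1. The sketch below recomputes it, and the variance ratio, from the tabulated group sizes (540 clean, 60 attack), means, and variances; the helper names are hypothetical. Because the tabulated inputs are themselves rounded, agreement is only to within about the third decimal place.

```python
import math


def pooled_sd(n1: int, var1: float, n2: int, var2: float) -> float:
    """Pooled standard deviation with (n - 1) weights."""
    return math.sqrt(((n1 - 1) * var1 + (n2 - 1) * var2) / (n1 + n2 - 2))


def cohens_d(n1: int, mean1: float, var1: float,
             n2: int, mean2: float, var2: float) -> float:
    """Standardized mean difference of group 2 (attack) versus group 1 (clean)."""
    return (mean2 - mean1) / pooled_sd(n1, var1, n2, var2)


def variance_ratio(var_clean: float, var_attack: float) -> float:
    return var_attack / var_clean


# Summary statistics copied from the pkts_attack and cpu_percent_attack rows.
print(round(cohens_d(540, 125.636, 548.89, 60, 257.17, 7764.0), 3))
print(round(cohens_d(540, 20.121, 13.6, 60, 35.092, 25.3), 3))
print(round(variance_ratio(548.89, 7764.0), 2))
```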

| Cluster | Size | Mean_Bytes (Bytes/s) | Mean_Pkts (Pkt/s) | Mean_Unique_Srcs (Count) | Mean_Failed_Logins | Mean_Cpu_Percent | Silhouette_Mean |
|---|---|---|---|---|---|---|---|
| 0 (clean) | 540 | 1136.88 | 125.64 | 4 | 0.196 | 20.12 | ≈0.62 |
| 1 (attack) | 60 | 4062.50 | 257.17 | 122 | 2.883 | 35.09 | ≈0.85 |
| Total | 600 | – | – | – | – | – | average ≈ 0.65 |

| Metric | Cluster 0 (Clean) | Cluster 1 (Attack) | Interpretation |
|---|---|---|---|
| size | 540 | 60 | The attack covers ≈10% of the epoch: a realistic, rare anomaly |
| mean bytes (bytes/s) | 1136.88 | 4062.50 | Substantial volumetric shift (≈3.6×) |
| mean pkts (pkt/s) | 125.64 | 257.17 | Packet rate roughly doubles (≈2×) |
| mean unique_srcs | 4 | 122 | The attack introduces many source IPs (DDoS) |
| mean failed_logins | 0.196 | 2.883 | Elevated login failures (brute-force signature) |
| mean cpu % | 20.12 | 35.09 | Increased server load during the attack |
| silhouette (cluster mean) | ≈0.62 | ≈0.85 | Clusters are well separated (especially the attack cluster) |
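
For reference, the silhouette of a sample is s = (b − a) / max(a, b), where a is its mean distance to the other members of its own cluster and b is its smallest mean distance to any other cluster. The overall score is the mean over all samples, which equals the size-weighted average of the per-cluster means and is therefore dominated here by the 540-sample clean cluster. The pure-Python sketch below is an O(n²) illustration with hypothetical helper names; for real data, a library routine such as scikit-learn's `silhouette_score` would be the usual choice.

```python
import math
from typing import Dict, List, Sequence


def silhouette_samples(points: Sequence[Sequence[float]],
                       labels: Sequence[int]) -> List[float]:
    """Per-sample silhouette values (Euclidean distance)."""
    clusters = set(labels)
    if len(clusters) < 2:
        raise ValueError("silhouette needs at least two clusters")
    scores: List[float] = []
    for i, p in enumerate(points):
        dists: Dict[int, List[float]] = {c: [] for c in clusters}
        for j, q in enumerate(points):
            if j != i:
                dists[labels[j]].append(math.dist(p, q))
        own = dists[labels[i]]
        if not own:  # singleton cluster: silhouette defined as 0
            scores.append(0.0)
            continue
        a = sum(own) / len(own)
        b = min(sum(d) / len(d) for c, d in dists.items() if c != labels[i])
        scores.append((b - a) / max(a, b) if max(a, b) > 0 else 0.0)
    return scores


def cluster_means(scores: Sequence[float], labels: Sequence[int]) -> Dict[int, float]:
    """Mean silhouette per cluster (the Silhouette_Mean column)."""
    out: Dict[int, float] = {}
    for c in sorted(set(labels)):
        vals = [s for s, lab in zip(scores, labels) if lab == c]
        out[c] = sum(vals) / len(vals)
    return out
```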

| Architecture | Validation ROC AUC | Precision | Recall | F1-Score | FPR | FNR |
|---|---|---|---|---|---|---|
| MLP (baseline) | 0.89 | 0.87 | 0.85 | 0.86 | 0.07 | 0.08 |
| CNN | 0.91 | 0.89 | 0.87 | 0.88 | 0.06 | 0.07 |
| LSTM | 0.92 | 0.90 | 0.89 | 0.89 | 0.05 | 0.06 |
| Autoencoder-based IDS | 0.88 | 0.84 | 0.86 | 0.85 | 0.08 | 0.09 |
| Transformer | 0.93 | 0.90 | 0.88 | 0.91 | 0.06 | 0.05 |
| Temporal Graph Network | 0.92 | 0.88 | 0.91 | 0.91 | 0.04 | 0.05 |
| EvolveGCN | 0.91 | 0.88 | 0.85 | 0.87 | 0.07 | 0.06 |
| Graph Transformer | 0.94 | 0.91 | 0.89 | 0.91 | 0.05 | 0.05 |
| Developed neural network | 0.96 | 0.95 | 0.94 | 0.95 | 0.03 | 0.04 |
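
The metrics in these tables follow the standard confusion-matrix definitions. The sketch below, with hypothetical helper names, computes them from raw counts: F1 is the harmonic mean of precision and recall, and by definition FNR = FN / (FN + TP) = 1 − recall. The `f1_from_pr` helper allows the F1 column to be checked against the tabulated precision and recall, up to rounding.

```python
from typing import Dict


def _ratio(num: float, den: float) -> float:
    return num / den if den else 0.0  # convention: empty denominator -> 0


def detection_metrics(tp: int, fp: int, tn: int, fn: int) -> Dict[str, float]:
    precision = _ratio(tp, tp + fp)
    recall = _ratio(tp, tp + fn)  # TPR
    return {
        "precision": precision,
        "recall": recall,
        "f1": _ratio(2 * precision * recall, precision + recall),
        "fpr": _ratio(fp, fp + tn),
        "fnr": _ratio(fn, fn + tp),  # equals 1 - recall
    }


def f1_from_pr(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    return _ratio(2 * precision * recall, precision + recall)


print(detection_metrics(tp=94, fp=5, tn=895, fn=6))
print(round(f1_from_pr(0.95, 0.94), 3))
```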

| Approach (Method, System) | ROC AUC | Precision | Recall (TPR) | F1-Score | FPR |
|---|---|---|---|---|---|
| Proposed neural network (hybrid, adaptive) | 0.96 | 0.95 | 0.94 | 0.95 | 0.03 |
| Adaptive Random Forest (ARF, streaming ensemble) | 0.93 | 0.91 | 0.90 | 0.90 | 0.05 |
| ADWIN-drift with LSTM (drift-aware LSTM ensemble) | 0.92 | 0.90 | 0.89 | 0.90 | 0.05 |
| LSTM (temporal DL) | 0.92 | 0.90 | 0.89 | 0.89 | 0.05 |
| OzaBoost or online boosting (streaming boosting) | 0.91 | 0.89 | 0.87 | 0.88 | 0.06 |
| Local pattern DL | 0.91 | 0.89 | 0.87 | 0.88 | 0.06 |
| Hoeffding Tree (incremental decision tree) | 0.88 | 0.86 | 0.83 | 0.84 | 0.09 |
| Autoencoder-based IDS (reconstruction anomaly) | 0.88 | 0.84 | 0.86 | 0.85 | 0.08 |
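
ROC AUC, the first metric column, has a direct probabilistic reading: it is the probability that a randomly chosen attack sample receives a higher score than a randomly chosen clean sample, with ties counted as one half. Equivalently, it is the Mann-Whitney U statistic divided by n_pos · n_neg. The pairwise sketch below (hypothetical function name) makes that definition explicit; it is O(n_pos · n_neg), so large evaluation sets would normally use a rank-based or library implementation such as scikit-learn's `roc_auc_score`.

```python
from typing import Sequence


def roc_auc(scores: Sequence[float], labels: Sequence[int]) -> float:
    """AUC as P(score_attack > score_clean) + 0.5 * P(tie); label 1 = attack."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative sample")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


print(roc_auc([0.9, 0.2, 0.3, 0.1], [1, 1, 0, 0]))
```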

| Attack Type | Precision | Recall (TPR) | F1-Score | FNR | ROC AUC | Median Latency (s) |
|---|---|---|---|---|---|---|
| DDoS (volumetric) | 0.98 | 0.995 | 0.987 | 0.005 | 0.99 | 0.15 |
| Behavioural (lateral movement) | 0.85 | 0.88 | 0.865 | 0.12 | 0.90 | – |
| Port scan | 0.93 | 0.92 | 0.925 | 0.08 | 0.94 | 0.10 |
| Password guessing | 0.86 | 0.80 | 0.829 | 0.20 | 0.88 | 0.40 |
| Malware or delivery | 0.84 | 0.78 | 0.809 | 0.22 | 0.87 | 1.00 |
| Aggregate | 0.95 | 0.94 | 0.95 | 0.04 | 0.96 | 0.15 |

| Metric | Value |
|---|---|
| ROC AUC (score) | 0.950 |
| Precision (alerts) | 0.980 |
| TPR (alerts) | 0.803 |
| F1 (alerts) | 0.883 |
| FPR (alerts) | 0.0019 |
| Median detection latency (samples) | 0 (detection at the first attack sample) |
| Inference latency | 0.142 s |
| Peak memory | 612 MB |
| Computational cost | 1.27 GFLOPs |
| Total blocks (during test run) | 40 |
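
A median detection latency of 0 samples means that, in the typical attack episode, the first alert coincides with the first attack sample. The sketch below shows one way to compute this metric. It assumes a per-sample boolean alert stream and ground-truth attack episodes given as inclusive (start, end) index pairs; the function names are hypothetical. Missed episodes are excluded from the median and show up instead in the TPR.

```python
import statistics
from typing import List, Optional, Sequence, Tuple


def detection_latencies(alerts: Sequence[bool],
                        episodes: Sequence[Tuple[int, int]]) -> List[Optional[int]]:
    """Samples from each episode's onset to its first alert, or None if missed."""
    out: List[Optional[int]] = []
    for start, end in episodes:
        hit = next((i for i in range(start, end + 1) if alerts[i]), None)
        out.append(None if hit is None else hit - start)
    return out


def median_latency(latencies: Sequence[Optional[int]]) -> Optional[float]:
    """Median over detected episodes only; None if nothing was detected."""
    detected = [x for x in latencies if x is not None]
    return statistics.median(detected) if detected else None


alerts = [False, False, True, True, False, False, False, True]
lat = detection_latencies(alerts, [(2, 3), (5, 7), (4, 4)])
print(lat, median_latency(lat))
```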

| Number | Limitation | Brief Explanation | Possible Mitigations |
|---|---|---|---|
| 1 | Theoretical assumptions and sensitivity to attack models | The methods rely on specific statistical assumptions (IID data, distribution type, divergence estimates); deviations from them weaken the detection guarantees. | Adaptive thresholds, minimax regularization, and validation on various benchmarks. |
| 2 | Risk of poisoning and concept drift in online training | Incremental updates are vulnerable to deliberate poisoning and long-term data drift. | Update clipping (censoring), confidence-interval control, drift monitoring, and rollback mechanisms. |
| 3 | Computational resources and response latency | Complex architectures (GNN, variational layers, entity correlation) require substantial CPU/GPU resources and may increase latency. | Profiling, model trade-offs (pruning, distillation), and a hybrid pipeline (fast path plus deep analysis). |
| 4 | Legal and privacy limitations of evidence recording | The collection and storage of PCAPs and logs, and their sharing with law enforcement, are restricted by privacy and evidence-integrity laws. | Built-in chain of custody, data-collection minimization, legal review, and encryption or hashing. |
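
One simple form of the rollback mitigation listed for limitation 2 is to apply each online update to a copy of the model and commit it only if its score on a trusted, held-out validation set (for example, validation AUC) does not drop by more than a tolerance. The sketch below is generic over the model type; `guarded_update`, `update_fn`, and `validate_fn` are hypothetical names. The validation set has to be kept out of the online stream, otherwise a poisoning campaign could shift the baseline as well.

```python
import copy
from typing import Callable, Tuple, TypeVar

Model = TypeVar("Model")


def guarded_update(model: Model,
                   update_fn: Callable[[Model], Model],
                   validate_fn: Callable[[Model], float],
                   max_drop: float) -> Tuple[Model, bool]:
    """Return (model_to_keep, committed).

    The update runs on a deep copy, so rejecting it leaves the current model
    untouched (an implicit rollback)."""
    baseline = validate_fn(model)
    candidate = update_fn(copy.deepcopy(model))
    if baseline - validate_fn(candidate) > max_drop:
        return model, False
    return candidate, True


# Toy usage: the "model" is a dict and its validation score is simply w.
current = {"w": 0.90}
current, ok = guarded_update(current, lambda m: {**m, "w": m["w"] - 0.20},
                             lambda m: m["w"], max_drop=0.05)
print(current, ok)
```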

| Number | Research Direction | Aim | Main Steps (Methods) | Key Performance Indicators |
|---|---|---|---|---|
| 1 | Robust online training against poisoning and drift | Ensure robustness of incremental updates to hostile and non-stationary data | Development of DRO, certified defenses, drift detection, rollback, and selective update mechanisms | AUC under attack, AUC recovery time, FPR reduction under attack |
| 2 | Simplified and deterministic architectures for real-time use | Reduce latency and computational requirements without significant loss of quality | Pruning, quantisation or distillation, a hybrid fast path with deep analysis, profiling on target hosts | Latency (ms), throughput (req/s), AUC drop within an acceptable threshold |
| 3 | Formalization of the forensic pipeline and legal validation | Ensure the admissibility of collected artefacts in investigations | Chain of custody, hashing and signatures, secure logging, coordination of templates with lawyers | Proportion of valid evidence packages, package preparation time, and integrity (hash) |
| 4 | Standardized adversarial benchmark and testing framework | Create a representative environment for comparable IDS/IPS assessment | Attack (drift) generation, red-team scenarios, a metric set and replication repository, reproducible experiments | Scenario coverage, reproducibility, and comparative ranking of models |
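
The chain-of-custody and hashing steps in direction 3 can be illustrated with a hash-chained evidence log. In the minimal sketch below, each record stores the SHA-256 digest (Python's standard `hashlib`) of its payload together with the previous record's hash, so altering any earlier record breaks verification of everything after it. The record layout and the names `append_record` and `verify_chain` are hypothetical. Hashing alone provides tamper evidence only; digital signatures and trusted timestamps would still be needed for origin and time guarantees.

```python
import hashlib
import json
from typing import Any, Dict, List

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def _digest(prev_hash: str, payload: Dict[str, Any]) -> str:
    body = json.dumps({"prev": prev_hash, "payload": payload},
                      sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


def append_record(chain: List[Dict[str, Any]], payload: Dict[str, Any]) -> Dict[str, Any]:
    """Append an evidence record linked to the previous record's hash."""
    prev = chain[-1]["hash"] if chain else GENESIS
    record = {"prev": prev, "payload": payload, "hash": _digest(prev, payload)}
    chain.append(record)
    return record


def verify_chain(chain: List[Dict[str, Any]]) -> bool:
    """Recompute every link; any edited payload or broken link fails."""
    prev = GENESIS
    for rec in chain:
        if rec["prev"] != prev or rec["hash"] != _digest(prev, rec["payload"]):
            return False
        prev = rec["hash"]
    return True
```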

| Method (Class) | Online (Stream) | Novelty and Adaptation | Poisoning Protection (Adversarial) | Human-In-Loop | Forensic Readiness | Typical Metrics (AUC, F1, FPR) | Computational Cost |
|---|---|---|---|---|---|---|---|
| Proposed hybrid method | Yes (incremental) | Built-in (novelty detection, active labelling, incremental update) | Formal (DRO, clipping, adversarial training) | Yes (RL and interface) | Full (NLG, chain of custody) | AUC = 0.96; F1 = 0.95; FPR = 0.03 | High |
| Signature-based (rule-based) | No or partially | No | N/A | No | Limited | High on known attacks, low on zero-day attacks | Low |
| Classical ML (MLP, RF, Hoeffding) | Partially (ARF, HT) | Partially (via drift detectors) | Limited | Partially | Low | AUC ≈ 0.89–0.93; F1 ≈ 0.84–0.90; FPR ≈ 0.05–0.09 | Low-medium |
| LSTM, CNN (temporal or local pattern DL) | Partially (batches) | Not built in | Limited | Rarely | Low | AUC ≈ 0.91–0.92; F1 ≈ 0.88–0.89; FPR ≈ 0.05–0.06 | Medium |
| Autoencoder-IDS (reconstruction) | Partially | Yes (via reconstruction) | Insensitive to targeted poisoning | No | Poor | AUC ≈ 0.88; F1 ≈ 0.85; FPR ≈ 0.08 | Low-medium |
| Adaptive Random Forest (ARF) | Yes (stream) | Partially | Moderate | No or partially | Low | AUC ≈ 0.93; F1 ≈ 0.90; FPR ≈ 0.045 | Low |
| Temporal GNN (EvolveGCN) | Yes (variants) | Good (entity relationships) | Depends on implementation | Partially | Average (graphs help) | AUC ≈ 0.91–0.92; F1 ≈ 0.88–0.90; FPR ≈ 0.05–0.08 | Medium-high |
| Transformer (Graph Transformer) | Partially (fine-tuning) | High expressive power | Possible with an appropriate training regimen | Rarely | Average | AUC ≈ 0.93–0.94; F1 ≈ 0.90–0.92; FPR ≈ 0.04–0.06 | Very high |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Vladov, S.; Vysotska, V.; Vashchenko, S.; Bolvinov, S.; Glubochenko, S.; Repchonok, A.; Korniienko, M.; Nazarkevych, M.; Herasymchuk, R. Neural Network IDS/IPS Intrusion Detection and Prevention System with Adaptive Online Training to Improve Corporate Network Cybersecurity, Evidence Recording, and Interaction with Law Enforcement Agencies. Big Data Cogn. Comput. 2025, 9, 267. https://doi.org/10.3390/bdcc9110267
