A Digital Twin Threat Survey

Abstract

1. Introduction

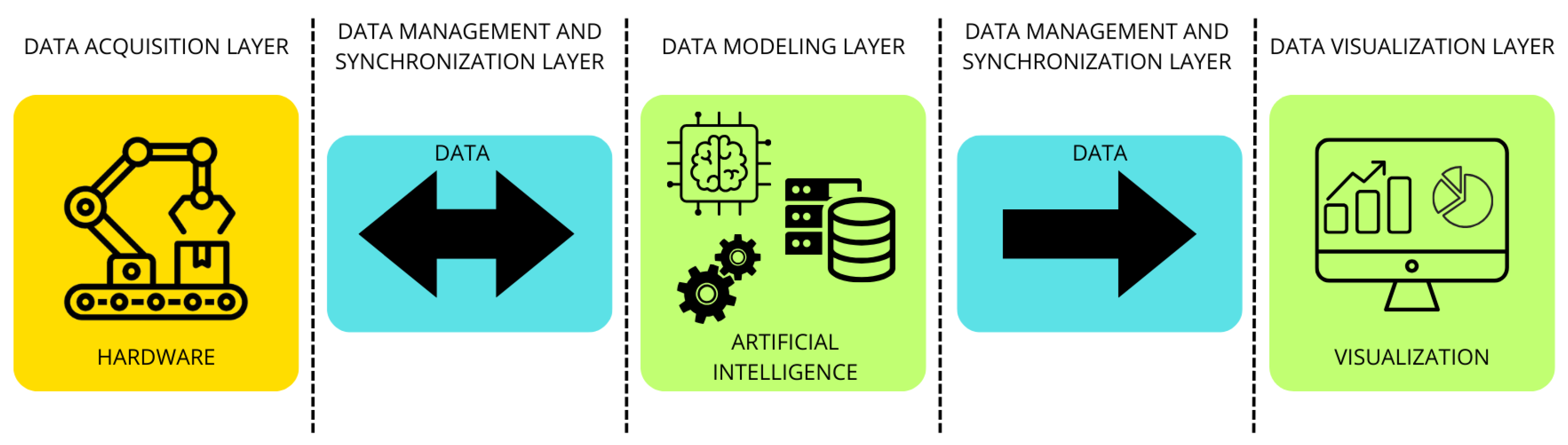

1.1. Structure of Modern Digital Twins

- Data Acquisition Layer: The data acquisition layer is the first layer in the flow of information generated by a DT. It is responsible for collecting data from sensors attached to physical objects. As underlined in [6], implementing a DT demands a vast amount of data collected through sensors, embedded systems, offline measurements, and sampling inspection methods applied to physical entities.

- Data Management and Synchronization Layer: Once the information available from the physical device has been gathered, the data must be managed and synchronized. This layer covers all storage and processing of the data collected from the physical object, as well as its transmission from the physical device to the digital part of the DT.

- Data Modelling Layer: Once the information flow has reached the DT, the information is processed to obtain the output that motivates adopting the paradigm. The data modelling layer is responsible for creating and updating the DT from the data collected from the physical object in the first layer and transmitted in the second. At this level, the Artificial Intelligence (AI) governing the model can draw its own conclusions from the data received and the current state of the model, which is a highly valuable asset for companies seeking to automate engineering and management operations [7]. In addition, the adoption of modern techniques such as AI has been identified as a key factor in the growth and use of digital twins [8,9,10]. In this section and the rest of this work, we refer to Artificial Intelligence as defined in the proposed EU AI Act: “a machine-based system that, for explicit or implicit objectives, infers, from the input it receives, how to generate outputs such as predictions, content, recommendations, or decisions that can influence physical or virtual environments” [11].

- Data Visualization Layer: Once data gathering and processing are complete, the DT presents to its operator the conclusions obtained from the information flow. Traditionally, the operator, following the organization's policies and rules, analyses these representations carefully and then takes the corresponding decisions on the physical system. In modern DT systems, this role is progressively assumed by the AI, leaving the operator in a monitoring role.

- Hardware: Attached to the real-world object, the physical twin is responsible for gathering all the necessary information to virtualize it. It is clearly connected to Internet of Things (IoT) devices, whose growth is significantly contributing to the adoption of the digital twin paradigm as a standard in manufacturing processes for Industry 4.0 [10,12].

- Data: This refers to the information exchanged at every stage of the digital twin process. It encompasses data generated by the hardware components in the data acquisition layer, as well as data resulting from transformations made in the rest of the layers.

- Artificial Intelligence and Software: This encompasses all software components within the digital twin paradigm. Of particular note is the AI system, which can vary widely, from deep learning techniques [13] or Generative Adversarial Networks (GANs) [14] to more classical Machine Learning algorithms such as Random Forest [15] or Support Vector Machine [16], among others. All of them play a key role in how faithfully the generated digital twin represents its physical counterpart, as AI techniques are used to collect, analyse, and test the data from a physical object to build its digital copy [17,18].
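The four layers described above can be read as a simple pipeline. The following is a minimal sketch under stated assumptions, not an implementation from any of the surveyed works: the `DigitalTwin` class, the function names, the temperature readings, and the moving-average "model" are all hypothetical stand-ins chosen only to show how data moves from acquisition to visualization.

```python
from dataclasses import dataclass, field
from statistics import mean
from typing import List, Optional


@dataclass
class DigitalTwin:
    """Toy twin mirroring a single temperature value (hypothetical example)."""
    history: List[float] = field(default_factory=list)
    state: Optional[float] = None


def acquire(raw_samples):
    """Data acquisition layer: collect sensor readings, dropping missing ones."""
    return [s for s in raw_samples if s is not None]


def synchronize(twin, readings):
    """Data management and synchronization layer: store the readings in the twin."""
    twin.history.extend(readings)


def update_model(twin, window=3):
    """Data modelling layer: refresh the twin state (here, a moving average)."""
    recent = twin.history[-window:]
    twin.state = mean(recent) if recent else None


def visualize(twin, threshold=80.0):
    """Data visualization layer: summarize the state for the operator."""
    if twin.state is None:
        return "no data"
    status = "ALERT" if twin.state > threshold else "ok"
    return f"temperature={twin.state:.1f} status={status}"


twin = DigitalTwin()
synchronize(twin, acquire([70.0, None, 75.0, 95.0, 100.0]))
update_model(twin)
report = visualize(twin)
print(report)
```

In a real deployment the modelling step would be an AI system of the kind listed above rather than a moving average; the sketch only fixes the order in which the layers hand data to each other.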

1.2. Methodology

- The Population in which the evidence is being collected, the group of entities that are of interest for the review.

- The Intervention applied in the study, more specifically, which technology is in the scope of this study or which area related to that technology is being reviewed.

- The Comparison to the previously identified intervention.

- The Outcomes we would like to achieve by developing this review.

- The Context of the study.

- RQ1: Which are the main cybersecurity risks to the digital twin paradigm?

- RQ2: Is it feasible to employ a risk analysis framework from different computation paradigm systems in a DT system?

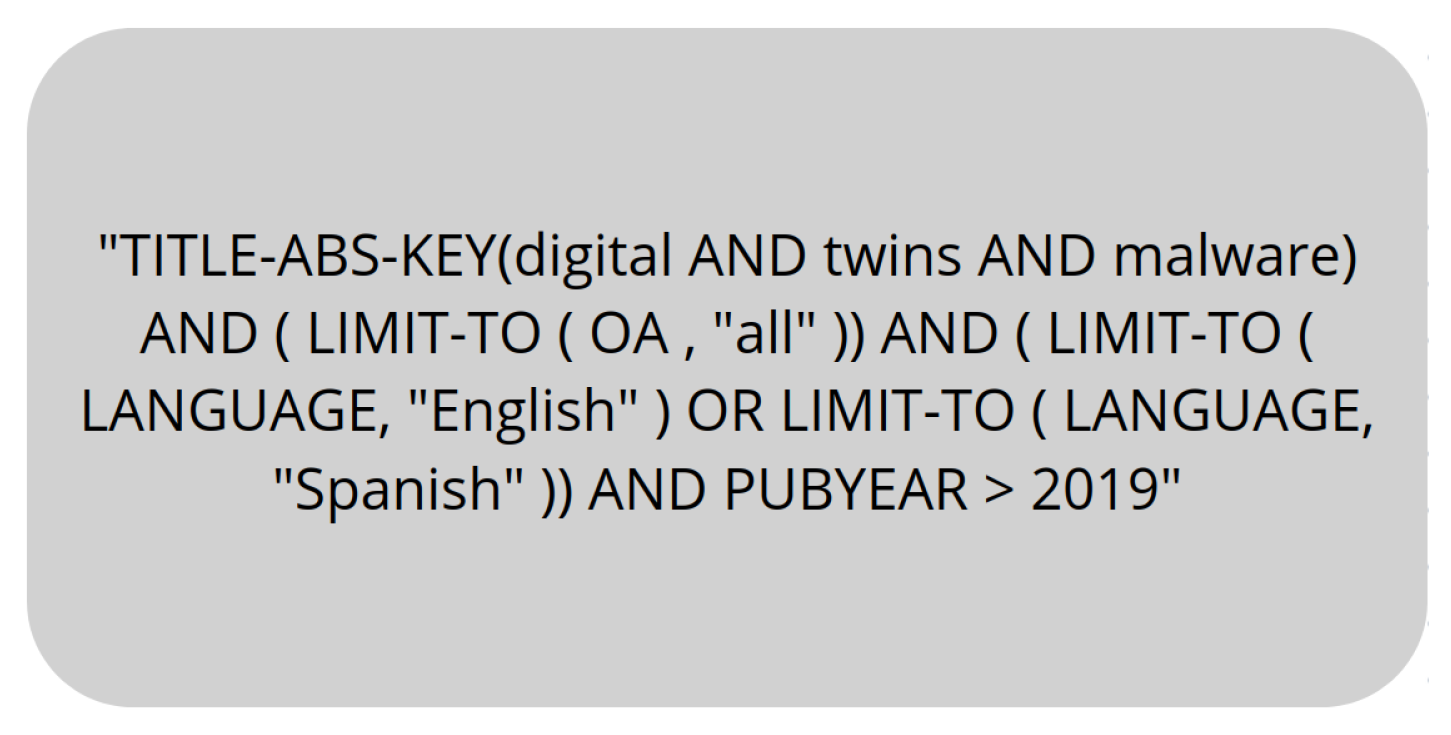

1.3. Search Process

- General strings: “digital twin”, “digital twins”, “digital twinning”.

- Additional strings: “attack”, “attestation”, “cyberattacks”, “cyberthreat”, “dependability”, “dependable”, “disaster”, “honey”, “honeypot”, “intrusion detection”, “malware”, “quantum computing”, “quantum”, “ransomware”, “reliability”, “remote safety”, “risks”, “threats”, “trustworthiness”, “verification”.
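As an illustration of how the two groups of strings might be combined into a single query, the sketch below joins the terms within each group with OR and the two groups with AND. This combination rule and the `build_query` helper are assumptions made for the example; the exact query syntax depends on each bibliographic database.

```python
GENERAL = ["digital twin", "digital twins", "digital twinning"]

ADDITIONAL = [
    "attack", "attestation", "cyberattacks", "cyberthreat", "dependability",
    "dependable", "disaster", "honey", "honeypot", "intrusion detection",
    "malware", "quantum computing", "quantum", "ransomware", "reliability",
    "remote safety", "risks", "threats", "trustworthiness", "verification",
]


def build_query(general, additional):
    """Build one boolean query: (any general term) AND (any additional term).

    The OR/AND combination is an assumption of this sketch.
    """
    general_part = " OR ".join(f'"{term}"' for term in general)
    additional_part = " OR ".join(f'"{term}"' for term in additional)
    return f"({general_part}) AND ({additional_part})"


query = build_query(GENERAL, ADDITIONAL)
print(query)
```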

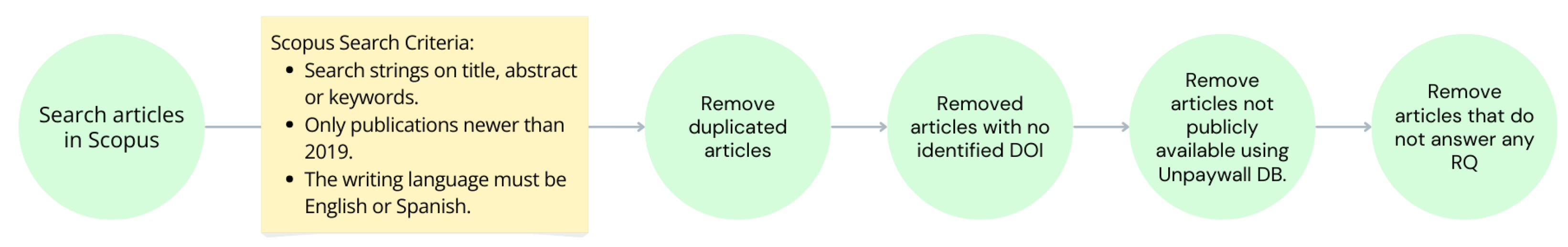

1.4. Study Selection Criteria

- We have removed duplicated articles leaving us with a total of 3244 articles.

- We have deleted those publications with no identified DOI.

- We have removed those publications that are not publicly available. To check this, we have used the Unpaywall Database [22]. This step left us with a total of 1204 open access publications.

- Only publications written in English and Spanish have been considered.

- The publication must answer at least one research question; otherwise, it has been excluded.
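The automatable part of these criteria can be sketched as a sequence of filters. The record fields (`title`, `doi`, `open_access`, `language`) are hypothetical, the open-access flag is assumed to have been looked up beforehand (for example, in the Unpaywall data), and the final check against the research questions is left out because it requires reading each publication.

```python
def select_publications(records):
    """Apply the automatable selection criteria in order (illustrative only)."""
    # 1. Remove duplicates, here keyed on a normalized title (an assumption).
    seen = set()
    unique = []
    for record in records:
        key = record["title"].strip().lower()
        if key not in seen:
            seen.add(key)
            unique.append(record)

    # 2. Drop publications with no identified DOI.
    with_doi = [r for r in unique if r.get("doi")]

    # 3. Keep only publicly available (open access) publications.
    open_access = [r for r in with_doi if r.get("open_access")]

    # 4. Keep only publications written in English or Spanish.
    return [r for r in open_access if r.get("language") in {"en", "es"}]


sample = [
    {"title": "DT security", "doi": "10.1/a", "open_access": True, "language": "en"},
    {"title": "dt security ", "doi": "10.1/a", "open_access": True, "language": "en"},
    {"title": "No DOI paper", "doi": None, "open_access": True, "language": "en"},
    {"title": "Closed paper", "doi": "10.1/b", "open_access": False, "language": "en"},
    {"title": "Gemelos digitales", "doi": "10.1/c", "open_access": True, "language": "es"},
    {"title": "Other language", "doi": "10.1/d", "open_access": True, "language": "de"},
]
selected = select_publications(sample)
print([r["title"] for r in selected])
```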

2. Digital Twins’ Risks

2.1. Hardware Threats and Countermeasures

2.2. AI Threats and Countermeasures

2.3. Threats to the Data Life-Cycle and Countermeasures

- Threats to data generation

- Low-Quality Data Threat: In the context of twin technology, this threat can occur in various scenarios, both in the communication between the physical twin and the DT, as well as in communication between DTs. Initially, it can impact the reliability of actions or decisions made by the DT regarding its physical counterpart and can affect inter-twin communications in case collaboration is required.

- Model Inconsistency Attack: A physically manipulated element can maliciously alter the parameters used in the model training phase, leading to operational issues and even privacy concerns; see Pasquini et al. [55].

- Model Poisoning Attack: In a collaborative context involving different DTs, an attacker may manipulate the parameters of the AI model with the aim of disrupting collaborative functionality; see Tian et al. [56]. In federated learning environments, local models could be tampered with in an attempt to influence the results of the global model aggregation. The guidelines in [23] for guaranteeing reliable decision making in DTs can be implemented, for example, by deploying black-box audits based on verifiable computation [46]. This approach could help to detect both model inconsistency and poisoning attacks.

- Threats to Data Backup: Another threat inherited from IT environments pertains to backup data, which serves as a recovery mechanism in the event of data loss or corruption, a necessity to ensure the life-cycle of services associated with DT technology; see Su et al. [57]. Alterations to backup data could result in system malfunction following a restoration operation. It is therefore necessary to encrypt backups and enforce strict access control over all of them, along with regular verification of backup integrity [23].

- Data/Content Poisoning Attack: This type of threat directly impacts interactions between twins (see Nour et al. [58]), as it can disrupt the system’s operation by injecting malicious data and even interfere with the model training process. Remote Attestation should be used here to detect any attempt to carry out this type of attack. In addition, and according to the guidelines in [23], all information flows should be verified, for example, by creating robust confidential and authenticated channels, which demands the deployment of a robust Public Key Infrastructure and the adoption of IPsec to protect the control plane in the DT.
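The regular verification of backup integrity mentioned above can be illustrated with a digest manifest. This is a minimal sketch, assuming that the manifest is stored separately from the backups under strict access control; the function names and the in-memory `backups` dictionary are hypothetical, and encryption of the backups themselves is out of scope here.

```python
import hashlib


def build_manifest(backups):
    """Record a SHA-256 digest for every backup (name -> hex digest)."""
    return {name: hashlib.sha256(blob).hexdigest() for name, blob in backups.items()}


def verify_backups(backups, manifest):
    """Return the names of backups that are missing or no longer match the manifest."""
    corrupted = []
    for name, expected in manifest.items():
        blob = backups.get(name)
        if blob is None or hashlib.sha256(blob).hexdigest() != expected:
            corrupted.append(name)
    return corrupted


# Hypothetical backups held in memory for the sake of the example.
backups = {"model.bin": b"weights-v1", "telemetry.db": b"sensor-rows"}
manifest = build_manifest(backups)

# Simulate an attacker altering one backup after the manifest was created.
backups["model.bin"] = b"weights-v1-tampered"
print(verify_backups(backups, manifest))
```

Running the check before any restoration would flag the altered backup instead of letting it reach the DT. A plain digest only helps if the attacker cannot also rewrite the manifest, which is why the sketch assumes it is kept elsewhere.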

- Threats to data transmission: As communication flows from hardware devices to the computing infrastructure supporting the Artificial Intelligence models discussed in the previous section, information is transmitted through wired or wireless channels. This relies on conventional communication protocols, and hence the transmitted data may be susceptible to the typical classes of attack. Moreover, as this technology aims to become predominant in the industrial, healthcare [59], and autonomous driving [60] sectors, its implementation and security must also take into account potential threats arising from the advent of quantum computers.

- Data Tampering: Without a mechanism to ensure the authenticity of exchanged information, a DT-based system is vulnerable to forgery, alteration, replacement, or deletion of data. This type of threat may manifest during the DT creation phase, by contaminating the training dataset with manipulated data, thereby disrupting the DT’s functionality from its inception.

- Desynchronization of DT: A distinctive feature of DT-based technology is its bidirectional communication, which, in order to function correctly, must remain synchronized. In response to this requirement, an attacker could compromise the system by tampering with the synchronization associated with twin interactions; see Gehrmann et al. [61]. This threat broadens the attack vector, as it is possible to disrupt either twin (digital or physical) by simply altering the synchrony of its corresponding twin.

- Eavesdropping Attack: Although not specific to DT-based technology, this type of threat applies to any communication over open or improperly secured channels, where an attacker may gain access to the transmitted data.

- Message Flooding Attack: This type of attack can impact communications, both inter/intra-twin, and may trigger a DoS attack.

- Interest Flooding Attack: Such an attack affects not only the availability of the digital resource but can also interfere with the operation of the DT by executing queries that raise CPU usage or increase memory utilization, among other problems [62].

- Man-in-the-Middle (MitM): This is a typical threat to the network infrastructures used by the DT, especially when communication takes place over wireless networks, where malicious servers can be placed in the middle of the DT information flow to launch a MitM attack. An insider can launch a routing attack on the devices under their control and modify the information sent, trace the network traffic sequence, or degrade the quality of the DT maintenance process by altering the DT database or the final representation of the data.

- Denial of Service (DoS): DoS attacks mainly affect the devices in the data acquisition layer and are intended to facilitate further attacks, although the physical resources (servers) of the virtualized twin may also be affected. However, when the organization deploys a decentralized infrastructure, the damage is minimized. This is the most difficult type of attack to carry out, as DTs are usually set up in closed environments and require the attacker to be close, physically or digitally, to the devices or servers to be compromised.
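Two of the threats listed above, data tampering and replayed or out-of-order messages between twins, can be detected with an authentication tag and a sequence counter. The sketch below uses HMAC-SHA256 from the Python standard library; the message layout, the `Receiver` class, and the pre-shared key are assumptions made for the example, and the scheme provides no confidentiality, so it does not address eavesdropping.

```python
import hashlib
import hmac
import json


def _tag(key, seq, payload):
    """Compute an HMAC-SHA256 tag over the sequence number and payload."""
    body = json.dumps({"seq": seq, "payload": payload}, sort_keys=True).encode()
    return hmac.new(key, body, hashlib.sha256).hexdigest()


def sign(key, seq, payload):
    """Physical-twin side: attach a tag to an outgoing message."""
    return {"seq": seq, "payload": payload, "tag": _tag(key, seq, payload)}


class Receiver:
    """Digital-twin side: accept only authentic messages in increasing order."""

    def __init__(self, key):
        self.key = key
        self.last_seq = -1

    def accept(self, msg):
        expected = _tag(self.key, msg["seq"], msg["payload"])
        if not hmac.compare_digest(expected, msg["tag"]):
            return False  # forged or altered in transit
        if msg["seq"] <= self.last_seq:
            return False  # replayed or stale message
        self.last_seq = msg["seq"]
        return True


key = b"pre-shared-demo-key"  # hypothetical key; real systems need key management
receiver = Receiver(key)

first = sign(key, 0, {"temperature": 71.5})
second = sign(key, 1, {"temperature": 72.0})
tampered = dict(second, payload={"temperature": 20.0})

results = [
    receiver.accept(first),     # authentic and fresh
    receiver.accept(first),     # replay of the same message
    receiver.accept(tampered),  # payload changed, tag no longer matches
    receiver.accept(second),    # authentic and fresh
]
print(results)
```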

The protection against interception attacks involves the use of encryption, integrity verification, and the deployment of adequate security controls for authentication, accountability and audit. Taking into account the heterogeneous nature of the DT ecosystem, there is a need to promote the adoption of standards and interoperable technical solutions. Again, the NIST Cybersecurity Framework provides a set of very useful guidelines for the promotion of those standards in DTs [63].

- Threats to data usage/consumption

- Private Information Extraction With Insiders: This type of threat occurs in both types of communications within a DT ecosystem. An insider may leverage their position to extract sensitive information shared with the DT from legitimate physical twins. Using this information could enable access to the DT within the system, facilitating attacks within the infrastructure itself.

- Privacy Leakage in Model Aggregation: Under the collaborative learning paradigm, there is a risk of information leakage during the model aggregation phase applied to the DT ecosystem [64].

- Privacy Leakage in Model Delivery/Deployment: When offering global AI models from cloud infrastructures, there is a potential risk of model theft during communications between different DTs. If the AI model is obtained, it may be possible to infer information contained in the model parameters. Additionally, with the model in hand, it is possible to implement techniques that allow unauthorized access, enabling the alteration of the model’s output at the attacker’s discretion [65].

- Knowledge/Model Inversion Attack: During the usage phase of a DT, there is a risk that representations of the training data are extracted from the AI model, known as a knowledge/model inversion attack. This type of extraction can be classified into two types: white-box attacks, in which the attacker gains direct access to the AI model along with all associated information, and black-box attacks, in which the attacker interacts with the AI model through APIs to obtain related information [66].
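To give a sense of how much a black-box interface can reveal, the toy sketch below recovers the parameters of a linear model using only calls to its prediction function. It is a deliberately simplified illustration of extracting information through an API, not a reproduction of the attacks in [65,66] and not a full model inversion attack; the `make_prediction_api` endpoint and the model behind it are hypothetical.

```python
def make_prediction_api(weights, bias):
    """Hypothetical prediction endpoint that hides a linear model."""
    def predict(x):
        return sum(w * xi for w, xi in zip(weights, x)) + bias
    return predict


def extract_linear_model(predict, n_features):
    """Recover weights and bias using n_features + 1 black-box queries."""
    zero = [0.0] * n_features
    bias = predict(zero)  # querying the zero vector returns the bias
    weights = []
    for i in range(n_features):
        unit = zero.copy()
        unit[i] = 1.0
        # A unit vector returns weight_i + bias, so subtract the bias.
        weights.append(predict(unit) - bias)
    return weights, bias


api = make_prediction_api([2.0, -1.0, 0.5], 3.0)
stolen_weights, stolen_bias = extract_linear_model(api, 3)
print(stolen_weights, stolen_bias)
```

Real models are rarely this simple, but the example shows why unrestricted query access to a deployed model is itself part of the attack surface.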

3. A New Framework for Identifying Digital Twin Threats

4. Conclusions

Funding

Conflicts of Interest

References

1. Chinesta, F.; Cueto, E.; Abisset-Chavanne, E.; Duval, J.L.; Khaldi, F.E. Virtual, digital and hybrid twins: A new paradigm in data-based engineering and engineered data. Arch. Comput. Methods Eng. 2018, 27, 105–134.

2. Jafari, M.; Kavousi-Fard, A.; Chen, T.; Karimi, M. A review on digital twin technology in smart grid, transportation system and smart city: Challenges and future. IEEE Access 2023, 11, 17471–17484.

3. Minerva, R.; Lee, G.M.; Crespi, N. Digital twin in the iot context: A survey on technical features, scenarios, and architectural models. Proc. IEEE 2020, 108, 1785–1824.

4. Alcaraz, C.; Lopez, J. Digital twin: A comprehensive survey of security threats. IEEE Commun. Surv. Tutor. 2022, 24, 1475–1503.

5. ISO TC 184/SC4/WG15: ISO 23247 standard. Available online: https://www.ap238.org/iso23247/ (accessed on 25 September 2025).

6. Dihan, M.; Akash, A.; Tasneem, Z.; Das, P.; Das, S.; Islam, M.; Islam, M.; Badal, F.; Ali, M.; Ahamed, M.; et al. Digital twin: Data exploration, architecture, implementation and future. Heliyon 2024, 10, e26503.

7. Pan, Y.; Zhang, L. Roles of artificial intelligence in construction engineering and management: A critical review and future trends. Autom. Constr. 2021, 122, 103517.

8. Fuller, A.; Fan, Z.; Day, C.; Barlow, C. Digital twin: Enabling technologies, challenges and open research. IEEE Access 2020, 8, 108952–108971.

9. Tao, F.; Qi, Q.; Wang, L.; Nee, A. Digital twins and cyber–physical systems toward smart manufacturing and industry 4.0: Correlation and comparison. Engineering 2019, 5, 653–661.

10. Tao, F.; Zhang, H.; Liu, A.; Nee, A.Y.C. Digital twin in industry: State-of-the-art. IEEE Trans. Ind. Inform. 2019, 15, 2405–2415.

11. Council of European Union. Council Regulation (EU). 2021. Available online: https://www.euaiact.com/ (accessed on 25 September 2025).

12. Madni, A.M.; Madni, C.C.; Lucero, S.D. Leveraging digital twin technology in model-based systems engineering. Systems 2019, 7, 7.

13. Pan, X.; Lin, Q.; Ye, S.; Li, L.; Guo, L.; Harmon, B. Deep learning based approaches from semantic point clouds to semantic BIM models for heritage digital twin. Herit. Sci. 2024, 12, 65.

14. Zhao, J.; Huang, J.; Zhi, D.; Yan, W.; Ma, X.; Yang, X.; Li, X.; Ke, Q.; Jiang, T.; Calhoun, V.D.; et al. Functional network connectivity (fnc)-based generative adversarial network (gan) and its applications in classification of mental disorders. J. Neurosci. Methods 2020, 341, 108756.

15. Bo, Y.; Wu, H.; Che, W.; Zhang, Z.; Li, X.; Myagkov, L. Methodology and application of digital twin-driven diesel engine fault diagnosis and virtual fault model acquisition. Eng. Appl. Artif. Intell. 2024, 131, 107853.

16. Thao, L.Q.; Kien, D.T.; Thien, N.D.; Bach, N.C.; Hiep, V.V.; Khanh, D.G. Utilizing AI and silver nanoparticles for the detection and treatment monitoring of canker in pomelo trees. Sens. Actuators A Phys. 2024, 368, 115127.

17. Jiang, W.; Han, B.; Habibi, M.A.; Schotten, H.D. The road towards 6g: A comprehensive survey. IEEE Open J. Commun. Soc. 2021, 2, 334–366.

18. Emmert-Streib, F. What is the role of ai for digital twins? AI 2023, 4, 721–728.

19. Petticrew, M.; Roberts, H. Systematic Reviews in the Social Sciences: A Practical Guide; Wiley: Hoboken, NJ, USA, 2006.

20. Bruzza, M.; Cabrera, A.; Tupia, M. Survey of the state of art based on PICOC about the use of artificial intelligence tools and expert systems to manage and generate tourist packages. In Proceedings of the 2017 International Conference on Infocom Technologies and Unmanned Systems (Trends and Future Directions) (ICTUS), Dubai, United Arab Emirates, 18–20 December 2017; pp. 290–296.

21. Boteju, M.; Ranbaduge, T.; Vatsalan, D.; Arachchilage, N.A.G. Sok: Demystifying privacy enhancing technologies through the lens of software developers. arXiv 2023, arXiv:2401.00879.

22. Priem, J.; Piwowar, H. The Unpaywall Dataset. 2018. Available online: https://figshare.com/articles/The_Unpaywall_Dataset/6020078 (accessed on 20 September 2025).

23. NIST. The NIST Cybersecurity Framework (CSF) 2.0; National Institute of Standards and Technology: Gaithersburg, MD, USA, 2024.

24. Sharma, A.; Kosasih, E.; Zhang, J.; Brintrup, A.; Calinescu, A. Digital twins: State of the art theory and practice, challenges, and open research questions. arXiv 2020, arXiv:2011.02833.

25. Al-Kuwaiti, M.; Kyriakopoulos, N.; Hussein, S. Network dependability, fault-tolerance, reliability, security, survivability: A framework for comparative analysis. In Proceedings of the 2006 International Conference on Computer Engineering and Systems, Cairo, Egypt, 5–7 November 2006.

26. Durão, L.F.C.S.; Haag, S.; Anderl, R.; Schützer, K.; Zancul, E. Digital Twin Requirements in the Context of Industry 4.0. In Product Lifecycle Management to Support Industry 4.0. PLM 2018; Springer International Publishing: Cham, Switzerland, 2018; pp. 204–214.

27. Moyne, J.; Qamsane, Y.; Balta, E.C.; Kovalenko, I.; Faris, J.; Barton, K.; Tilbury, D.M. A requirements driven digital twin framework: Specification and opportunities. IEEE Access 2020, 8, 107781–107801.

28. Neshenko, N.; Bou-Harb, E.; Crichigno, J.; Kaddoum, G.; Ghani, N. Demystifying iot security: An exhaustive survey on iot vulnerabilities and a first empirical look on internet-scale iot exploitations. IEEE Commun. Surv. Tutor. 2019, 21, 2702–2733.

29. Mullet, V.; Sondi, P.; Ramat, E. A review of cybersecurity guidelines for manufacturing factories in industry 4.0. IEEE Access 2021, 9, 23235–23263.

30. Liu, H.; Tu, J.; Liu, J.; Zhao, Z.; Zhou, R. Generative adversarial scheme based GNSS spoofing detection for digital twin vehicular networks. In Wireless Algorithms, Systems, and Applications; Springer International Publishing: Cham, Switzerland, 2021; pp. 367–374.

31. Garg, H.; Sharma, B.; Shekhar, S.; Agarwal, R. Spoofing detection system for e-health digital twin using EfficientNet convolution neural network. Multimed. Tools Appl. 2022, 81, 26873–26888.

32. Mastorakis, S.; Zhong, X.; Huang, P.-C.; Tourani, R. Dlwiot: Deep learning-based watermarking for authorized iot onboarding. In Proceedings of the 2021 IEEE 18th Annual Consumer Communications & Networking Conference (CCNC), Las Vegas, NV, USA, 9–12 January 2021; pp. 1–7.

33. Gupta, H.; van Oorschot, P.C. Onboarding and software update architecture for iot devices. In Proceedings of the 2019 17th International Conference on Privacy, Security and Trust (PST), Fredericton, NB, Canada, 26–28 August 2019; pp. 1–11.

34. Asokan, N.; Brasser, F.; Ibrahim, A.; Sadeghi, A.-R.; Schunter, M.; Tsudik, G.; Wachsmann, C. Seda: Scalable embedded device attestation. In Proceedings of the 22nd ACM SIGSAC Conference on Computer and Communications Security (CCS ’15), Denver, CO, USA, 12–16 October 2015; Association for Computing Machinery: New York, NY, USA, 2015; pp. 964–975.

35. Carpent, X.; Rattanavipanon, N.; Tsudik, G. Erasmus: Efficient remote attestation via self-measurement for unattended settings. arXiv 2017, arXiv:1707.09043.

36. Abera, T.; Asokan, N.; Davi, L.; Koushanfar, F.; Paverd, A.; Sadeghi, A.-R.; Tsudik, G. Invited: Things, trouble, trust: On building trust in iot systems. In Proceedings of the 2016 53rd ACM/EDAC/IEEE Design Automation Conference (DAC), Austin, TX, USA, 5–9 June 2016; pp. 1–6.

37. Ambrosin, M.; Conti, M.; Lazzeretti, R.; Rabbani, M.M.; Ranise, S. Collective remote attestation at the internet of things scale: State-of-the-art and future challenges. IEEE Commun. Surv. Tutor. 2020, 22, 2447–2461.

38. Ibrahim, A.; Sadeghi, A.-R.; Zeitouni, S. Seed: Secure non-interactive attestation for embedded devices. In Proceedings of the 10th ACM Conference on Security and Privacy in Wireless and Mobile Networks (WiSec ’17), Boston, MA, USA, 18–20 July 2017; Association for Computing Machinery: New York, NY, USA, 2017; pp. 64–74.

39. Petzi, L.; Yahya, A.E.B.; Dmitrienko, A.; Tsudik, G.; Prantl, T.; Kounev, S. SCRAPS: Scalable collective remote attestation for Pub-Sub IoT networks with untrusted proxy verifier. In Proceedings of the 31st USENIX Security Symposium (USENIX Security 22), Boston, MA, USA, 10–12 August 2022; USENIX Association: Boston, MA, USA, 2022; pp. 3485–3501.

40. Jenkins, I.R.; Smith, S.W. Distributed iot attestation via blockchain. In Proceedings of the 2020 20th IEEE/ACM International Symposium on Cluster, Cloud and Internet Computing (CCGRID), Melbourne, VIC, Australia, 1–14 May 2020; pp. 798–801.

41. Kohnhäuser, F.; Büscher, N.; Katzenbeisser, S. Salad: Secure and lightweight attestation of highly dynamic and disruptive networks. In Proceedings of the 2018 on Asia Conference on Computer and Communications Security (ASIACCS ’18), Incheon, Republic of Korea, 4 June 2018; Association for Computing Machinery: New York, NY, USA, 2018; pp. 329–342.

42. Masure, L.; Dumas, C.; Prouff, E. A comprehensive study of deep learning for side-channel analysis. IACR Trans. Cryptogr. Hardw. Embed. Syst. 2020, 1, 348–375.

43. Shwartz, O.; Mathov, Y.; Bohadana, M.; Elovici, Y.; Oren, Y. Reverse engineering iot devices: Effective techniques and methods. IEEE Internet Things J. 2018, 5, 4965–4976.

44. Lo, C.; Chen, C.; Zhong, R.Y. A review of digital twin in product design and development. Adv. Eng. Inform. 2021, 48, 101297.

45. Clements, J.; Lao, Y. Hardware trojan attacks on neural networks. arXiv 2018, arXiv:1806.05768.

46. Goldwasser, S.; Kim, M.P.; Vaikuntanathan, V.; Zamir, O. Planting undetectable backdoors in machine learning models. arXiv 2022, arXiv:2204.06974.

47. Wu, B.; Chen, H.; Zhang, M.; Zhu, Z.; Wei, S.; Yuan, D.; Shen, C. Backdoorbench: A comprehensive benchmark of backdoor learning. arXiv 2022, arXiv:2206.12654.

48. Gu, T.; Dolan-Gavitt, B.; Garg, S. Badnets: Identifying vulnerabilities in the machine learning model supply chain. arXiv 2019, arXiv:1708.06733.

49. Li, Y.; Li, Y.; Wu, B.; Li, L.; He, R.; Lyu, S. Invisible backdoor attack with sample-specific triggers. arXiv 2021, arXiv:2012.03816.

50. Lin, L.; Bao, H.; Dinh, N. Uncertainty quantification and software risk analysis for digital twins in the nearly autonomous management and control systems: A review. Ann. Nucl. Energy 2021, 160, 108362.

51. Roy, C.J.; Oberkampf, W.L. A comprehensive framework for verification, validation, and uncertainty quantification in scientific computing. Comput. Methods Appl. Mech. Eng. 2011, 200, 2131–2144.

52. Jamil, S.; Rahman, M.; Fawad. A comprehensive survey of digital twins and federated learning for industrial internet of things (iiot), internet of vehicles (iov) and internet of drones (iod). Appl. Syst. Innov. 2022, 5, 56.

53. Hard, A.; Rao, K.; Mathews, R.; Ramaswamy, S.; Beaufays, F.; Augenstein, S.; Eichner, H.; Kiddon, C.; Ramage, D. Federated learning for mobile keyboard prediction. arXiv 2019, arXiv:1811.03604.

54. Bagdasaryan, E.; Veit, A.; Hua, Y.; Estrin, D.; Shmatikov, V. How to backdoor federated learning. arXiv 2019, arXiv:1807.00459.

55. Pasquini, D.; Francati, D.; Ateniese, G. Eluding Secure Aggregation in Federated Learning via Model Inconsistency. arXiv 2022, arXiv:2111.07380.

56. Tian, Z.; Cui, L.; Liang, J.; Yu, S. A Comprehensive Survey on Poisoning Attacks and Countermeasures in Machine Learning. ACM Comput. Surv. 2022, 55, 166.

57. Su, Z.; Xu, Q.; Luo, J.; Pu, H.; Peng, Y.; Lu, R. A Secure Content Caching Scheme for Disaster Backup in Fog Computing Enabled Mobile Social Networks. IEEE Trans. Ind. Inform. 2018, 14, 4579–4589.

58. Nour, B.; Mastorakis, S.; Ullah, R.; Stergiou, N. Information-Centric Networking in Wireless Environments: Security Risks and Challenges. IEEE Wirel. Commun. 2021, 28, 121–127.

59. Ricci, A.; Croatti, A.; Mariani, S.; Montagna, S.; Picone, M. Web of digital twins. ACM Trans. Internet Technol. 2022, 22, 1–30.

60. Veledar, O.; Damjanovic-Behrendt, V.; Macher, G. Digital twins for dependability improvement of autonomous driving. In Systems, Software and Services Process Improvement. EuroSPI 2019; European Conference on Software Process Improvement; Springer: Cham, Switzerland, 2019; pp. 415–426.

61. Gehrmann, C.; Gunnarsson, M. A Digital Twin Based Industrial Automation and Control System Security Architecture. IEEE Trans. Ind. Inform. 2020, 16, 669–680.

62. Tourani, R.; Misra, S.; Mick, T.; Panwar, G. Security, Privacy, and Access Control in Information-Centric Networking: A Survey. IEEE Commun. Surv. Tutor. 2018, 20, 566–600.

63. Alcaraz, C.; Lopez, J. Digital Twin Security: A Perspective on Efforts From Standardization Bodies. IEEE Secur. Priv. 2025, 23, 83–90.

64. Wang, Y.; Peng, H.; Su, Z.; Luan, T.H.; Benslimane, A.; Wu, Y. A Platform-Free Proof of Federated Learning Consensus Mechanism for Sustainable Blockchains. IEEE J. Sel. Areas Commun. 2022, 40, 3305–3324.

65. Xue, M.; Zhang, Y.; Wang, J.; Liu, W. Intellectual Property Protection for Deep Learning Models: Taxonomy, Methods, Attacks, and Evaluations. IEEE Trans. Artif. Intell. 2022, 3, 908–923.

66. Tramèr, F.; Zhang, F.; Juels, A.; Reiter, M.K.; Ristenpart, T. Stealing Machine Learning Models via Prediction APIs. arXiv 2016, arXiv:1609.02943.

67. Karabacak, B.; Sogukpinar, I. Isram: Information security risk analysis method. Comput. Secur. 2005, 24, 147–159.

68. Microsoft. The Stride Threat Model. 2009. Available online: https://learn.microsoft.com/en-us/previous-versions/commerce-server/ee823878(v=cs.20) (accessed on 20 September 2025).

69. Sommestad, T.; Ekstedt, M.; Johnson, P. A probabilistic relational model for security risk analysis. Comput. Secur. 2010, 29, 659–679.

70. Henry, M.H.; Haimes, Y.Y. A comprehensive network security risk model for process control networks. Risk Anal. 2009, 29, 223–248.

71. Fovino, I.N.; Guidi, L.; Masera, M.; Stefanini, A. Cyber security assessment of a power plant. Electr. Power Syst. Res. 2011, 81, 518–526.

72. Ganin, A.A.; Quach, P.; Panwar, M.; Collier, Z.A.; Keisler, J.M.; Marchese, D.; Linkov, I. Multicriteria decision framework for cybersecurity risk assessment and management. Risk Anal. 2017, 40, 183–199.

73. Linkov, I.; Moberg, E. Multi-Criteria Decision Analysis; CRC Press: Boca Raton, FL, USA, 2011.

74. Schuett, J. Risk management in the artificial intelligence act. Eur. J. Risk Regul. 2024, 15, 367–385.

75. Camacho, J.M.; Couce-Vieira, A.; Arroyo, D.; Insua, D.R. A cybersecurity risk analysis framework for systems with artificial intelligence components. Int. Trans. Oper. Res. 2025, 33, 798–825.

76. Couce-Vieira, A.; Insua, D.R.; Kosgodagan, A. Assessing and forecasting cybersecurity impacts. Decis. Anal. 2020, 17, 356–374.

77. National Institute of Standards and Technology. Artificial Intelligence Risk Management Framework (AI RMF 1.0). 2023. Available online: https://nvlpubs.nist.gov/nistpubs/ai/NIST.AI.100-1.pdf (accessed on 20 September 2025).

78. Information Security Forum. Information Risk Assessment Methodology 2 (IRAM2). 2016. Available online: https://www.securityforum.org/solutions-and-insights/information-risk-assessment-methodology-2-iram2/ (accessed on 14 March 2024).

79. Karmakar, K.K.; Varadharajan, V.; Tupakula, U. Policy-Driven Security Architecture for Internet of Things (IoT) Infrastructure; CRC Press: Boca Raton, FL, USA, 2023; pp. 76–120.

80. Karmakar, K.K.; Varadharajan, V.; Speirs, P.; Hitchens, M.; Robertson, A. Sdpm: A secure smart device provisioning and monitoring service architecture for smart network infrastructure. IEEE Internet Things J. 2022, 9, 25037–25051.

81. European Commission. Recommendation on a Coordinated Implementation Roadmap for the Transition to Post-Quantum Cryptography. 2024. Available online: https://digital-strategy.ec.europa.eu/en/library/recommendation-coordinated-implementation-roadmap-transition-post-quantum-cryptography (accessed on 20 September 2025).

82. NIST. Post-Quantum Cryptography Standardization. 2024. Available online: https://csrc.nist.gov/projects/post-quantum-cryptography (accessed on 20 September 2025).

| PICOC Item | Question | Answer |
|---|---|---|
| Population | What are the new paradigms of Industry 4.0 we are interested in? | Digital Twin |
| Intervention | Which area related to digital twins would we like to study in our review? | The latest techniques to implement the digital twin paradigm and its potential risks |
| Outcomes | What will be an enriching outcome of this study for the community? | A framework for identifying and classifying threats to digital twins |
| Context | What is the current context of digital twins in the digital landscape? | Implementation of new computational paradigms such as Artificial Intelligence or quantum computing techniques. |

| Threat | Operational Costs | Cybersecurity Costs | Other Costs | Income Reduction | Reputation Impact | Operational Costs | Other Costs | Income Reduction | Reputation Impact | Fatalities | Injuries to Physical and Mental Health | Injuries to Personal Rights | Personal Economic Damage |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Hardware Spoofing | x | x | x | x | x | x | | | | | | | |
| Side-channel attack | x | | | | | | | | | | | | |
| Reverse Engineering | x | | | | | | | | | | | | |
| Hardware Trojan attack | x | x | x | x | x | x | x | x | | | | | |
| MLaaS by untrustful company | x | x | x | x | x | x | x | x | | | | | |
| Uncertainty Quantification | x | x | x | x | x | x | | | | | | | |
| Malicious participant in Federated Learning system | x | x | x | x | x | x | | | | | | | |
| Low-Quality Data | x | x | x | x | | | | | | | | | |
| Model Inconsistency | x | x | x | x | x | x | x | x | | | | | |
| Model Poisoning | x | x | x | x | x | | | | | | | | |
| Threats to Data Backup | x | x | x | | | | | | | | | | |
| Data/Content Poisoning Attack | x | x | x | x | x | | | | | | | | |
| Data Tampering | x | x | x | | | | | | | | | | |
| Desynchronization | x | x | x | | | | | | | | | | |
| Eavesdropping | x | x | x | x | x | x | x | x | x | x | | | |
| Message Flooding | x | x | | | | | | | | | | | |
| Interest Flooding | x | x | x | x | x | x | | | | | | | |
| Man-in-the-Middle | x | x | x | x | x | x | x | x | x | x | x | x | x |
| Denial of Service | x | x | x | x | | | | | | | | | |
| Private Information Extraction With Insiders | x | x | x | x | x | x | x | x | | | | | |
| Privacy Leakage in Model Aggregation | x | x | x | x | x | x | x | x | | | | | |
| Privacy Leakage in Model Delivery/Deployment | x | x | x | x | x | | | | | | | | |
| Knowledge/Model Inversion | x | x | x | x | x | x | | | | | | | |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Suárez-Román, M.; Sanz-Rodrigo, M.; Marín-López, A.; Arroyo, D. A Digital Twin Threat Survey. Big Data Cogn. Comput. 2025, 9, 252. https://doi.org/10.3390/bdcc9100252