Abstract

The advent of autonomous vehicles has heralded a transformative era in transportation, reshaping the landscape of mobility through cutting-edge technologies. Central to this evolution is the integration of artificial intelligence (AI), propelling vehicles into realms of unprecedented autonomy. Commencing with an overview of the current industry landscape with respect to Operational Design Domain (ODD), this paper delves into the fundamental role of AI in shaping the autonomous decision-making capabilities of vehicles. It elucidates the steps involved in the AI-powered development life cycle in vehicles, addressing various challenges such as safety, security, privacy, and ethical considerations in AI-driven software development for autonomous vehicles. The study presents statistical insights into the usage and types of AI algorithms over the years, showcasing the evolving research landscape within the automotive industry. Furthermore, the paper highlights the pivotal role of parameters in refining algorithms for both trucks and cars, facilitating vehicles to adapt, learn, and improve performance over time. It concludes by outlining different levels of autonomy, elucidating the nuanced usage of AI algorithms, and discussing the automation of key tasks and the software package size at each level. Overall, the paper provides a comprehensive analysis of the current industry landscape, focusing on several critical aspects.

Keywords:

artificial intelligence (AI); machine learning (ML); deep learning (DL); deep neural networks (DNNs); natural language processing (NLP); autonomous vehicles (AVs); safety; security; ethics; emerging trends; trucks vs. cars; autonomy levels; operational design domain (ODD); software-defined vehicles (SDVs); connected and automated vehicles (CAVs); in-vehicle AI assistant; internet of things (IoT); generative AI (GenAI)

1. Introduction

Artificial intelligence (AI) currently plays a crucial role in the development and operation of autonomous vehicles. AI algorithms enable autonomous vehicles to perceive, navigate, and adapt to dynamic environments, making them safer and more efficient, and continuous advancements in AI technologies are expected to further enhance these capabilities. The integration of AI is also transforming autonomous system development itself, with the promise of reshaping traditional development processes, enhancing efficiency, and accelerating innovation. AI technologies are becoming integral to numerous facets of software development for autonomous vehicles, leading to a paradigm shift towards Software-Defined Vehicles (SDVs) [1,2].

1.1. Benefits of AI Algorithms for Autonomous Vehicles

AI algorithms are currently influencing various stages from initial coding to post-deployment maintenance in autonomous vehicles [3]. Some of the benefits include the following:

- Safety: AI can significantly reduce accidents caused by human error, leading to safer roads.

- Traffic Flow: Platooning [4] and efficient routing can ease congestion and improve efficiency.

- Accessibility: People with physical impairments or different abilities, the elderly, and the young can gain independent mobility.

- Energy Savings: Optimized driving reduces fuel consumption and emissions.

- Productivity and Convenience: Passengers use travel time productively while delivery services become more efficient.

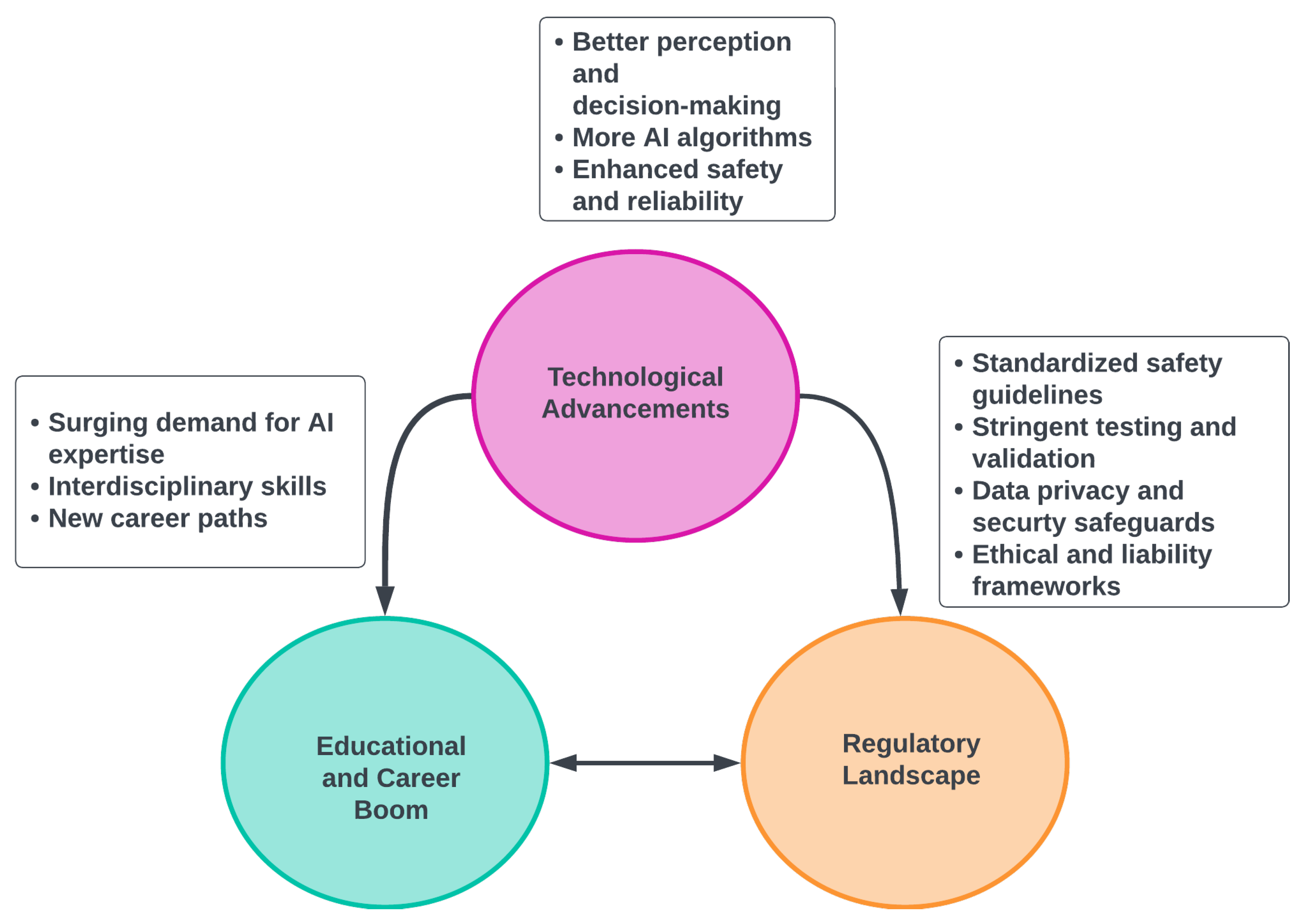

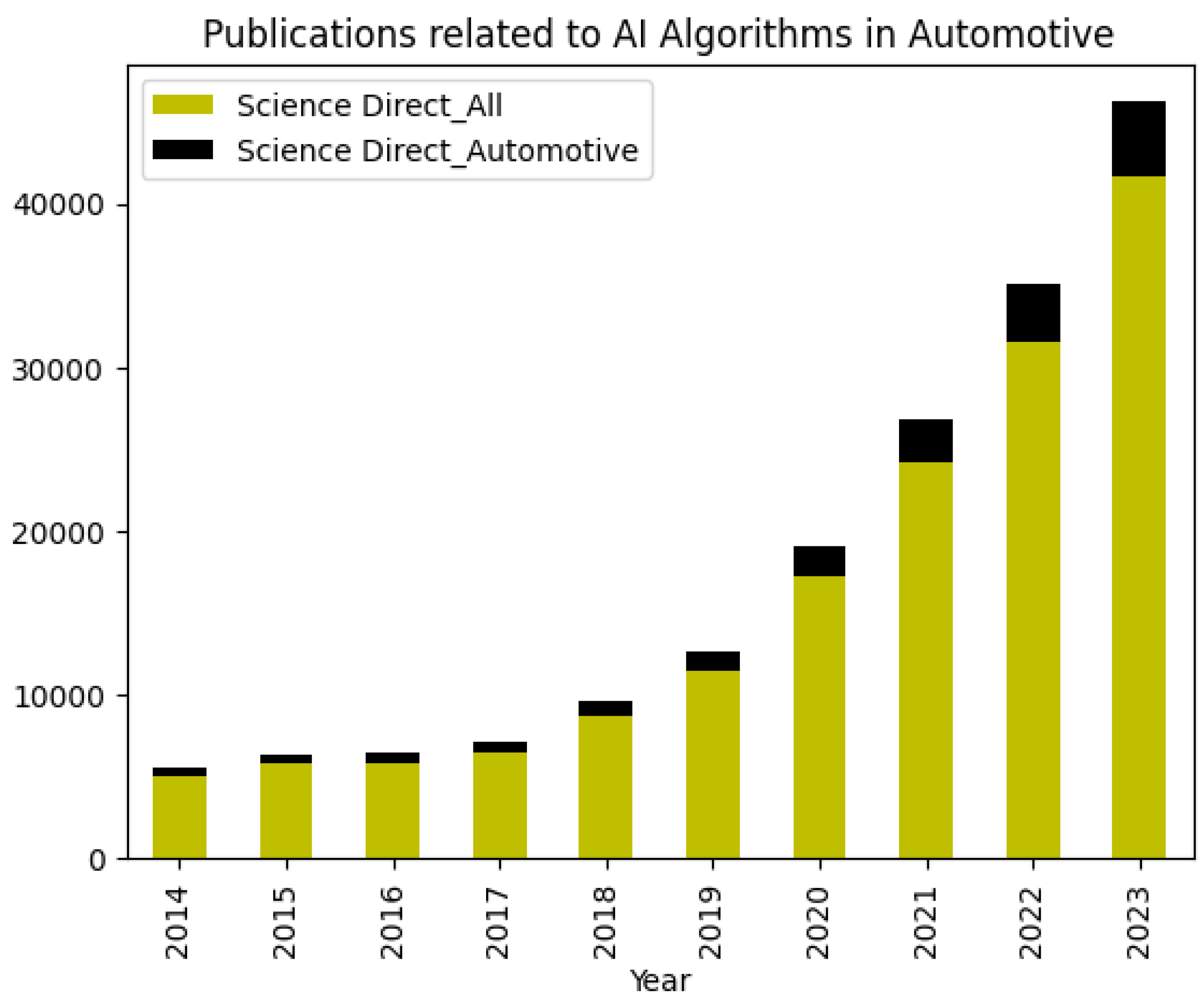

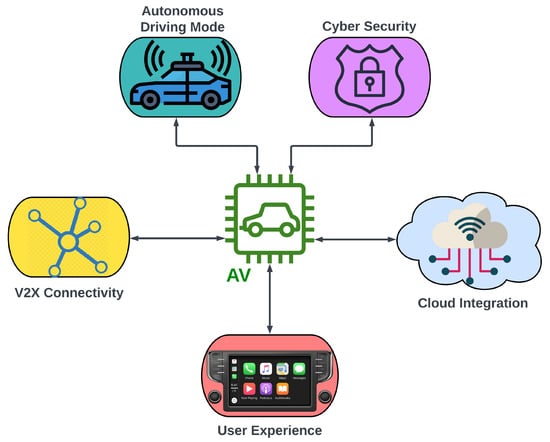

AI in autonomous vehicles is poised for a bright future, shaping everyday life and creating exciting opportunities. A glimpse of the possibilities is shown in Figure 1.

Figure 1. Benefits of AI in autonomous vehicles.

1.1.1. Technological Advancements

- Sharper perception and decision-making: AI algorithms are more adept at understanding environments with advanced sensors and robust machine learning.

- Faster, more autonomous operation: Edge computing enables on-board AI processing for quicker decisions and greater independence.

- Enhanced safety and reliability: Redundant systems and rigorous fail-safe mechanisms prioritize safety above all else.

1.1.2. Education and Career Boom

- Surging demand for AI expertise: Specialized courses and degrees in autonomous vehicle technology will cater to a growing need for AI, robotics, and self-driving car professionals.

- Interdisciplinary skills will be key: Professionals with cross-functional skills bridging AI, robotics, and transportation will be highly sought after.

- New career paths in safety and ethics: Expertise in ethical considerations, safety audits, and regulatory [5] compliance will be crucial as self-driving cars become widespread.

1.1.3. Regulatory Landscape

- Standardized safety guidelines: Governments will establish common frameworks for performance and safety, building public trust and ensuring industry coherence.

- Stringent testing and validation: Autonomous systems will undergo rigorous testing before deployment, guaranteeing reliability and safety standards.

- Data privacy and security safeguards: Laws and regulations will address data privacy and cybersecurity concerns, protecting personal information and mitigating cyberattacks.

- Ethical and liability frameworks: Clearly defined legal frameworks will address ethical decision-making and determine liability in situations involving self-driving cars.

Thus, the future holds immense potential for revolutionizing transportation, creating new jobs, and improving safety. However, navigating ethical dilemmas, ensuring robust regulations, and building public trust will be crucial to harnessing this technology responsibly and sustainably, which is discussed in Section 4.

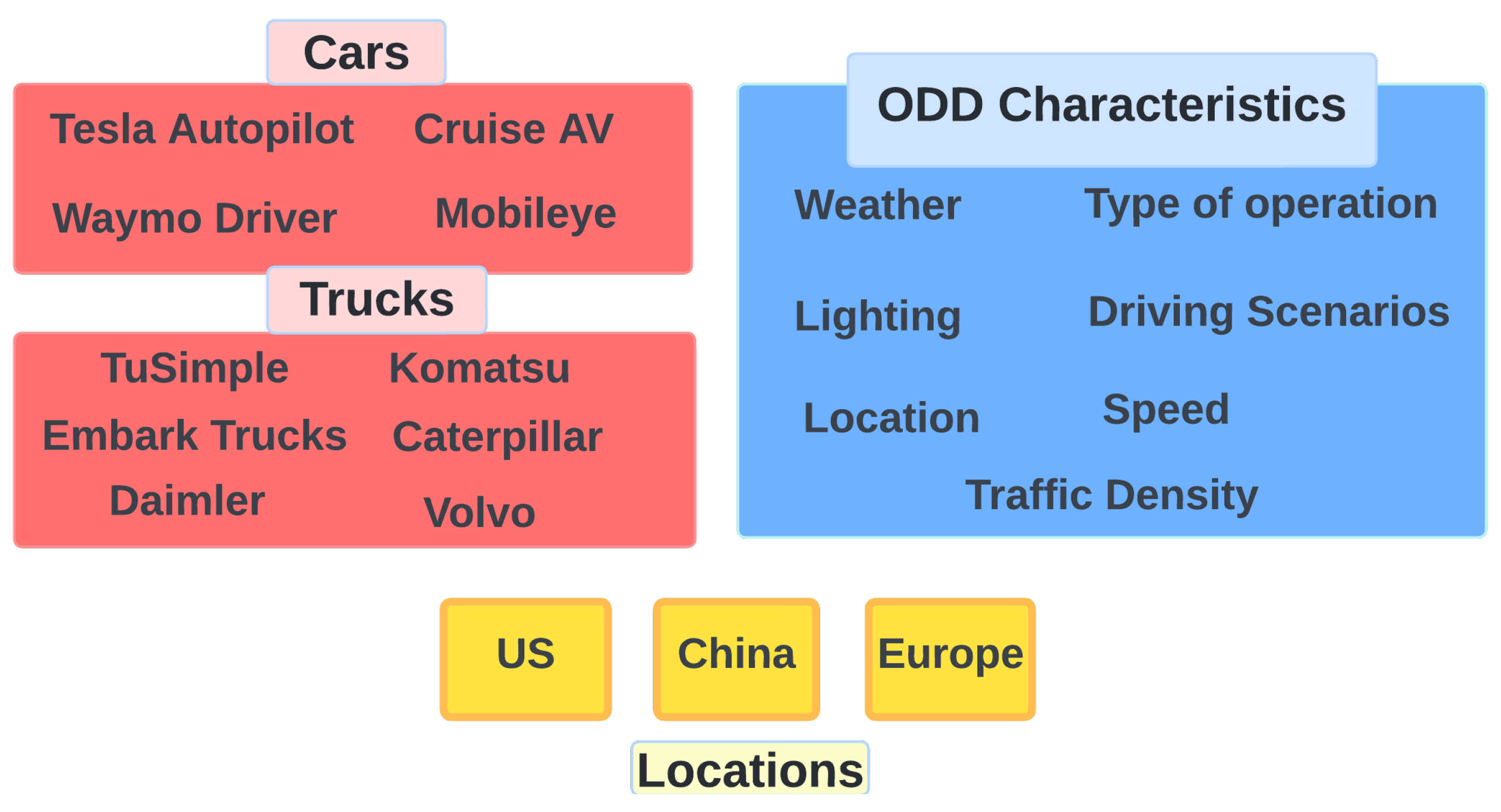

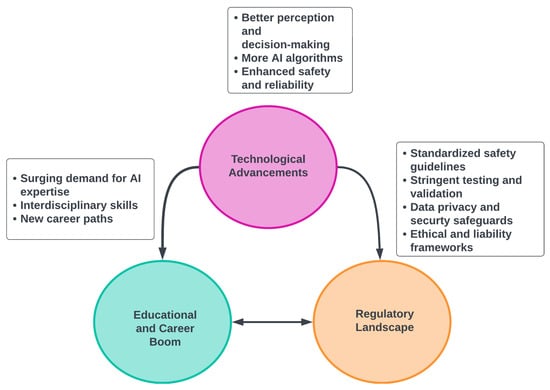

1.2. Operational Design Domains (ODDs) and Diversity—The Current Industry Landscape

An Operational Design Domain (ODD) [6] refers to the specific conditions under which an autonomous vehicle (AV) is designed to operate safely. The examples below illustrate the diverse evolution of ODDs [7] across vehicle types, including trucks and cars, and across geographical locations such as the United States, China, and Europe [8], as shown in Figure 2. The intention is not to cover every company or location but to give an overview of the diversity of ODDs in the current industry landscape. Table 1 maps vehicle companies and countries to their ODDs and to the driving scenarios each company covers.

Figure 2. Current industry landscape: Different vehicles from different geographical locations and their Operational Design Domain (ODD) characteristics.

Table 1. ODD characteristics distributed across cars, trucks, and different geographical locations.

- Waymo Driver: [9] Can handle a wider range of weather conditions, city streets, and highway driving, but speed limitations and geo-fencing restrictions apply.

- Tesla Autopilot: [10] Primarily for highway driving with lane markings, under driver supervision, and within specific speed ranges.

- Mobileye Cruise AV: [11] Operates in sunny and dry weather, on highways with clearly marked lanes, and at speeds below 45 mph.

- Aurora and Waymo Via: Wider range of weather conditions, including light rain/snow. Variable lighting (sunrise/sunset), multi-lane highways and rural roads with good pavement quality, daytime and nighttime operation, moderate traffic density, dynamic route planning, traffic light/stop sign recognition, intersection navigation, maneuvering in yards/warehouses, etc.

- TuSimple and Embark Trucks: [12] Sunny, dry weather, clear visibility. Temperature range −10 °C to 40 °C, limited-access highways with clearly marked lanes, daytime operation only, maximum speed of 70 mph, limited traffic density, pre-mapped routes, lane changes, highway merging/exiting, platooning with other AV trucks, etc.

- Pony.ai and Einride: Diverse weather conditions, including heavy rain/snow. Variable lighting and complex urban environments, narrow city streets, residential areas, and parking lots. Low speeds (20–30 mph), high traffic density, frequent stops and turns, geo-fenced delivery zones, pedestrian and cyclist detection/avoidance, obstacle avoidance in tight spaces, dynamic rerouting due to congestion, etc.

- Komatsu Autonomous Haul Trucks, Caterpillar MineStar Command for Haul Trucks: Harsh weather conditions (dust, heat, extreme temperatures). Limited or no network connectivity, unpaved roads, uneven terrain, steep inclines/declines, autonomous operation with remote monitoring, pre-programmed routes, high ground clearance, obstacle detection in unstructured environments, path planning around natural hazards, dust/fog mitigation, etc.

- Baidu Apollo: Highways and city streets in specific zones like Beijing and Shenzhen. Operates in the daytime and nighttime under clear weather conditions and limited traffic density. Designed for passenger transportation and robotaxis. Specific scenarios include lane changes, highway merging/exiting, traffic light/stop sign recognition, intersection navigation, and low-speed maneuvering in urban areas.

- WeRide: Limited-access highways and urban streets in Guangzhou and Nanjing. Operates in the daytime and nighttime under clear weather conditions. Targeted for robotaxi services and last-mile delivery. Specific scenarios include lane changes, highway merging/exiting, traffic light/stop sign recognition, intersection navigation, and automated pick-up and drop-off for passengers/packages.

- Bosch and Daimler [13]: Motorways and specific highways in Germany. Operates in the daytime and nighttime under good weather conditions. Focused on highway trucking applications. Specific scenarios include platooning with other AV trucks, automated lane changes and overtaking, emergency stopping procedures, and communication with traffic management systems.

- Volvo Trucks: Defined sections of Swedish highways. Operates in the daytime and nighttime under varying weather conditions. Tailored for autonomous mining and quarry operations. Specific scenarios include obstacle detection and avoidance in unstructured environments, path planning around natural hazards, pre-programmed routes with high precision, and remote monitoring and control.
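To make the idea of an ODD concrete, the following is a minimal sketch of how such an envelope could be encoded and checked at runtime. The class names, field names, and category strings (`OperationalDesignDomain`, `DrivingConditions`, `"clear"`, `"limited_access_highway"`) are illustrative assumptions and do not follow any standard ODD schema; the numeric limits are those listed for the TuSimple and Embark entry above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OperationalDesignDomain:
    """Illustrative ODD envelope; the field names are assumptions, not a standard schema."""
    name: str
    allowed_weather: frozenset
    allowed_road_types: frozenset
    min_temp_c: float
    max_temp_c: float
    max_speed_mph: float
    night_operation: bool


@dataclass(frozen=True)
class DrivingConditions:
    """Snapshot of the conditions the vehicle currently observes."""
    weather: str
    road_type: str
    temp_c: float
    speed_mph: float
    is_night: bool


def odd_violations(odd, cond):
    """Return the reasons why `cond` falls outside `odd` (empty list if inside)."""
    reasons = []
    if cond.weather not in odd.allowed_weather:
        reasons.append(f"weather '{cond.weather}' not supported")
    if cond.road_type not in odd.allowed_road_types:
        reasons.append(f"road type '{cond.road_type}' not supported")
    if not odd.min_temp_c <= cond.temp_c <= odd.max_temp_c:
        reasons.append("temperature outside range")
    if cond.speed_mph > odd.max_speed_mph:
        reasons.append("speed above ODD maximum")
    if cond.is_night and not odd.night_operation:
        reasons.append("night operation not supported")
    return reasons


# Limits taken from the TuSimple and Embark entry in the list above.
highway_truck_odd = OperationalDesignDomain(
    name="highway truck",
    allowed_weather=frozenset({"clear"}),
    allowed_road_types=frozenset({"limited_access_highway"}),
    min_temp_c=-10.0,
    max_temp_c=40.0,
    max_speed_mph=70.0,
    night_operation=False,
)

inside = DrivingConditions("clear", "limited_access_highway", 22.0, 62.0, False)
outside = DrivingConditions("heavy_rain", "limited_access_highway", 22.0, 62.0, True)

print(odd_violations(highway_truck_odd, inside))
print(odd_violations(highway_truck_odd, outside))
```

Returning the list of violated constraints, rather than a single boolean, is a design choice of this sketch: it lets a supervising function decide whether to hand over control, slow down, or stop, depending on which boundary was crossed.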

1.3. Role of Connected Vehicle Technology

Connected vehicle technology acts as a powerful enabler, providing valuable data and facilitating better decision-making, ultimately contributing to a smoother and more efficient path towards full autonomy. Connected vehicle technology plays a crucial role in the development and advancement of autonomous vehicles in several ways [14]:

- Enhanced situational awareness: Real-time information exchange between connected vehicles and infrastructures provides a broader picture of the surrounding environment, including road conditions, traffic patterns, and potential hazards, which is crucial for autonomous vehicles to navigate safely and efficiently.

- Improved decision-making: Connected vehicles can leverage data from other vehicles and infrastructures to make better decisions, such as optimizing routes, avoiding congestion, and coordinating maneuvers with other vehicles, contributing to smoother and safer autonomous operation.

- Faster innovation and testing: Connected vehicle technology allows for real-time data collection and analysis of vehicle performance, enabling faster development and testing of autonomous driving algorithms, accelerating the path to safer and more reliable autonomous vehicles [15].

However, it is important to note that connected vehicle technology alone cannot guarantee the complete autonomy of vehicles. Other crucial elements like robust onboard sensors, advanced artificial intelligence, and clear regulatory frameworks are still necessary for the widespread adoption of fully autonomous vehicles. Section 5 provides more insights into emerging technologies such as Internet of Things (IoT) that support the concept of connected vehicles.
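As a rough illustration of the situational-awareness point above, the sketch below shows how a vehicle might filter hazard reports received from other connected vehicles. The message fields, the 50 m de-duplication bucket, and the default horizon and age limits are all hypothetical choices made for this example; they are not taken from any V2X message standard.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HazardReport:
    """Hypothetical hazard message shared between connected vehicles."""
    sender_id: str
    kind: str           # e.g. "ice" or "stalled_vehicle"
    position_m: float   # distance along a shared road reference line
    timestamp_s: float


def relevant_hazards(reports, ego_position_m, now_s, horizon_m=500.0, max_age_s=30.0):
    """Keep fresh reports ahead of the ego vehicle, one per (kind, 50 m bucket)."""
    newest = {}
    for report in reports:
        if now_s - report.timestamp_s > max_age_s:
            continue  # stale information
        distance_ahead = report.position_m - ego_position_m
        if not 0.0 <= distance_ahead <= horizon_m:
            continue  # behind the vehicle or beyond the planning horizon
        key = (report.kind, int(report.position_m // 50))
        if key not in newest or report.timestamp_s > newest[key].timestamp_s:
            newest[key] = report  # prefer the most recent duplicate
    return sorted(newest.values(), key=lambda r: r.position_m)


reports = [
    HazardReport("veh_a", "ice", 1200.0, 100.0),
    HazardReport("veh_b", "ice", 1210.0, 105.0),              # same hazard, newer
    HazardReport("veh_c", "stalled_vehicle", 3000.0, 104.0),  # beyond horizon
    HazardReport("veh_d", "ice", 1100.0, 50.0),               # stale
]
hazards = relevant_hazards(reports, ego_position_m=1000.0, now_s=110.0)
print([(h.sender_id, h.kind) for h in hazards])
```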

Contributions of this paper: Section 1 provides an overview of different aspects of the autonomous vehicle industry landscape. Section 2 presents a literature survey of how AI algorithms are used within autonomous vehicles. Section 3 explains the AI-powered software development life cycle for autonomous vehicles and discusses how to ensure software quality and security during the development of AI algorithms. Section 4 explains the current challenges of using AI in autonomous vehicles and provides mitigation considerations for each challenge. Section 5 explains how AI algorithms have evolved to take on more decision-making without human involvement, and discusses IoT as a future direction that allows autonomous vehicles to become more connected to other actors in the driving environment. Section 6 presents the major contributions of this paper, such as the comparative analysis of how AI is applied in autonomous trucks and cars. It highlights, with references, the exponential growth in AI research and applications in general, and it identifies a gap in the research focused on autonomous trucks compared to passenger cars. The paper then details the key differences in the parameters that need to be considered when designing AI models for autonomous trucks versus cars. Finally, it explores the evolving role of AI algorithms and software package sizes at different levels of autonomy for self-driving vehicles. In summary, this paper explores various topics concerning the integration of AI in AVs and offers an overview of the current industry landscape.

2. Review of Existing Research and Use Cases

H. J. Vishnukumar et al. [16] noted that traditional development methods like Waterfall and Agile fall short when testing intricate autonomous vehicles and proposed a novel AI-powered methodology for both lab and real-world testing and validation (T&V) of ADAS and autonomous systems. Leveraging machine learning and deep neural networks, the AI core learns from existing test scenarios, generates new efficient cases, and controls diverse simulated environments for exhaustive testing. Critical tests then translate to real-world validation with automated vehicles in controlled settings. Constant learning from each test iteration refines future testing, ultimately saving precious development time and boosting the efficiency and quality of autonomous systems. The proposed methodology lays the groundwork for AI to eventually handle most T&V tasks, paving the way for safer and more reliable autonomous vehicles.

M. R. Bachute et al. [17] described the algorithms crucial for various tasks in autonomous driving, recognizing the multifaceted nature of the system. The study identifies specific algorithmic preferences for individual tasks, such as employing Reinforcement Learning (RL) models for effective velocity control in car-following scenarios and utilizing the “Locally Decorrelated Channel Features (LDCF)” algorithm for superior pedestrian detection. It emphasizes the significance of algorithmic choices in motion planning, fault diagnosis with data imbalance, vehicle platoon scenarios, and more. Notably, it advocates the continuous optimization and expansion of algorithms to address the evolving challenges in autonomous driving. The study serves as a foundation for future research to broaden the scope of tasks, explore a diverse array of algorithms, and fine-tune their application in specific areas of interest within the autonomous driving system.

Y. Ma et al. [18] explained the pivotal role of artificial intelligence (AI) in propelling the development and deployment of autonomous vehicles (AVs) within the transportation sector. Fueled by extensive data from diverse sensors and robust computing resources, AI has become integral for AVs to perceive their environment and make informed decisions while in motion. While existing research has explored various facets of AI application in AV development, their paper addresses a gap in the literature by presenting a comprehensive survey of key studies in this domain. The primary focus is on analyzing how AI is employed in supporting crucial applications in AVs: (1) perception, (2) localization and mapping, and (3) decision-making. The survey scrutinizes current practices to elucidate the utilization of AI, delineating associated challenges and issues. Furthermore, it offers insights into potential opportunities by examining the integration of AI with emerging technologies such as high-definition maps, big data, high-performance computing, augmented reality (AR) and virtual reality (VR) for enhanced simulation platforms, and 5G communication for connected AVs. In essence, it serves as a valuable reference for researchers seeking a deeper understanding of AI’s role in AV research, providing an overview of current practices and paving the way for future opportunities and advancements.

G. Bendiab et al. [19] mention that the introduction of autonomous vehicles (AVs) presents numerous advantages such as enhanced safety and reduced environmental impact, yet security and privacy vulnerabilities pose significant risks. Integrating blockchain and AI offers a promising solution to address these concerns by leveraging their respective strengths to fortify AV systems against malicious attacks. While existing research explores this intersection, further investigation is needed to fully realize the potential of this amalgamation in securing AVs, as outlined in the paper through a systematic review of security threats, the recent literature, and future research directions.

M. Chu et al. [20] explain that the emergence of autonomous vehicles (AVs) has led to the creation of a new job role called “safety drivers”, tasked with supervising and operating AVs during various driving missions. Despite being crucial for road testing tasks, safety drivers’ experiences are largely unexplored in the Human–Computer Interaction (HCI) community. Through interviews with 26 safety drivers, the authors found that they coped with defective algorithms, adapted their perceptions while working with AVs, and faced challenges such as assuming risks passed down from the upstream AV industry and limited opportunities for personal growth. These findings highlight the need for further research in human–AI interaction and the lived experiences of safety drivers.

M. H. Hwang et al. [21] introduced a Comfort Regenerative Braking System (CRBS) utilizing neural networks to enhance driving comfort in autonomous vehicles. By predicting acceleration and deceleration limits based on passenger comfort criteria, the CRBS adjusts vehicle control strategies, reducing discomfort during braking. Numerical analysis and back propagation techniques ensure efficient regenerative braking within comfort limits. The proposed CRBS, validated through simulations, offered effective regenerative braking while maintaining passenger comfort, making it a promising solution for autonomous electric vehicles.

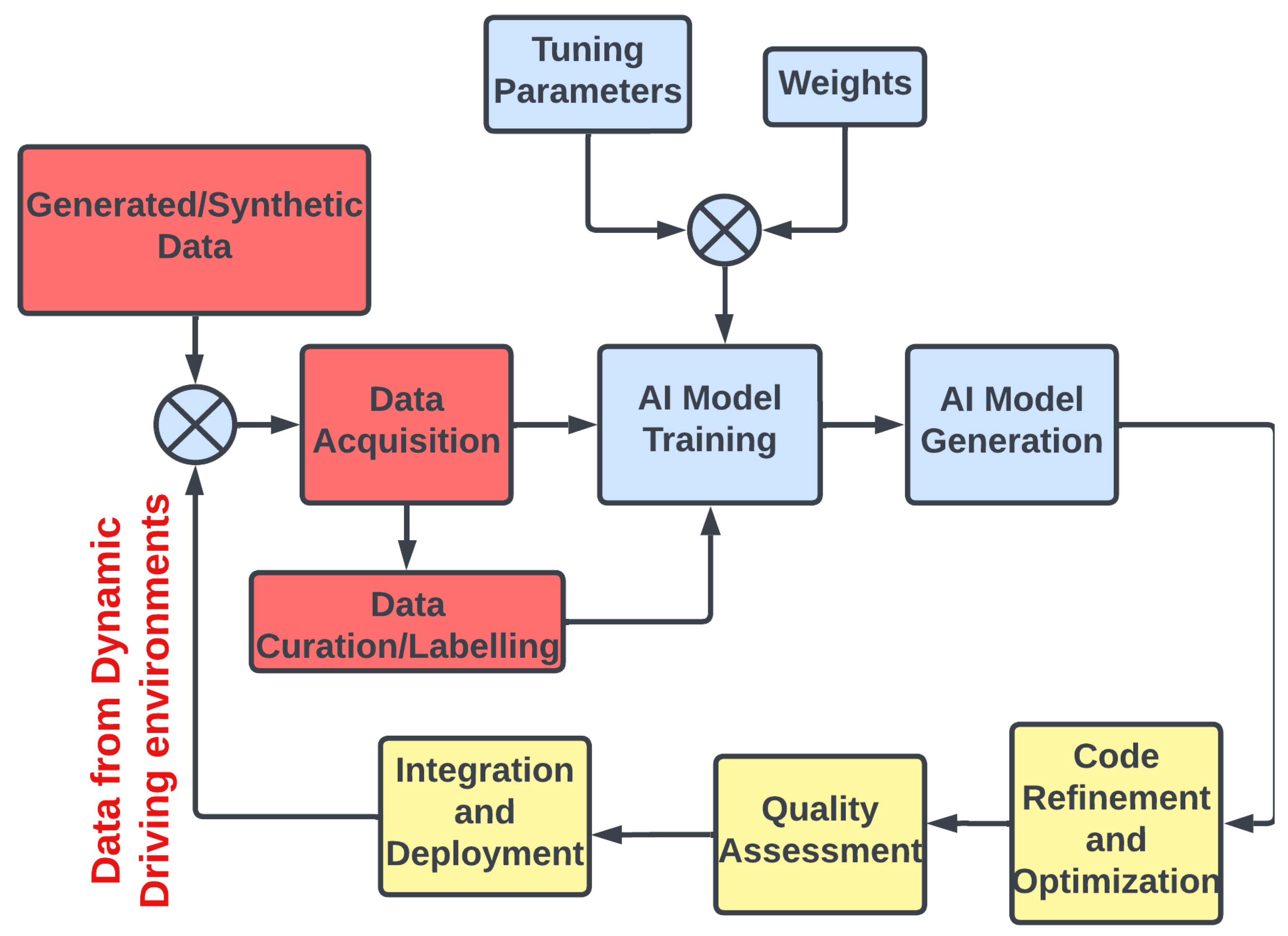

3. The AI-Powered Development Life Cycle in Autonomous Vehicles

This section describes the key aspects of the AI-powered development life cycle within autonomous vehicles; these aspects may also be applicable to other fields.

3.1. Model Training and Deployment

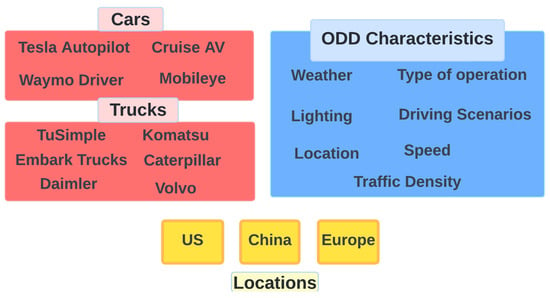

AI model training and deployment in autonomous vehicles involves a systematic process and typically includes several stages:

Data Collection and Pre-processing: Gathering a vast amount of data from real-world sensors, pre-existing datasets, and other sources such as synthetic datasets. Cleaning and pre-processing the data to make them suitable for machine learning models.

Model Training: Refers to the pattern extraction models, that is, employing learning models such as neural networks, deep learning [22], and natural language processing (NLP) to understand patterns and structures in the data. The models are trained to a desired level of accuracy, either for each specific scenario or for generic, abstract cases such as extracting patterns during the live operation of the vehicle.

Model Generation: Refers to the decision-making models. Trained models are used to perform a certain decision-making task, function, or modules based on learned patterns. These models can use various architectures, such as decision trees, random forests, regression trees, deep layers, ensemble learning, etc.

Code Refinement and Optimization [23]: Refine the generated code to improve its quality, readability, and functionality. Post-generation processing ensures the code adheres to coding standards, conventions [24], and requirements.

Quality Assessment: Evaluate the generated code for correctness, efficiency, and adherence to the intended functionalities. This involves testing, debugging, and validation procedures.

Integration and Deployment: Integrate the model into the broader system under development for autonomy implementation. Deploy and test the software application incorporating the new model using multiple methods, such as software-in-the-loop, hardware-in-the-loop, and human-in-the-loop testing, in simulation, closed-course, and limited public road environments. Some models are trained to keep learning even after deployment. These models need to be tested for the directions their future learning may take, to ensure compliance with the ethical considerations explained in Section 4 and with other requirements.

Using a systematic process like this would help build the confidence levels on each model being developed and deployed in various subsystems of autonomous vehicles like perception, planning, controls [25], and Human–Machine Interface (HMI) applications.
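The staged process above can be sketched end to end in a few lines. This is only a toy illustration under stated assumptions: a nearest-centroid classifier stands in for the neural networks and ensemble models mentioned in the text, the two-feature samples and labels are invented, and the 0.95 accuracy threshold in `ready_to_deploy` is an arbitrary placeholder for a real quality gate.

```python
from statistics import mean


def preprocess(samples):
    """Data cleaning stage: drop records that contain missing feature values."""
    return [(features, label) for features, label in samples if None not in features]


def train(samples):
    """Training stage: fit a nearest-centroid model (one mean vector per label)."""
    by_label = {}
    for features, label in samples:
        by_label.setdefault(label, []).append(features)
    return {
        label: tuple(mean(column) for column in zip(*rows))
        for label, rows in by_label.items()
    }


def predict(model, features):
    """Decision stage: return the label whose centroid is closest to `features`."""
    def squared_distance(centroid):
        return sum((a - b) ** 2 for a, b in zip(features, centroid))
    return min(model, key=lambda label: squared_distance(model[label]))


def assess(model, samples):
    """Quality assessment stage: accuracy on held-out samples."""
    correct = sum(predict(model, features) == label for features, label in samples)
    return correct / len(samples)


def ready_to_deploy(accuracy, threshold=0.95):
    """Deployment gate: release only if the held-out accuracy meets the threshold."""
    return accuracy >= threshold


# Invented toy data: (feature vector, label); one record has a missing value.
raw_training = [
    ((1.0, 0.2), "clear"),
    ((1.2, 0.1), "clear"),
    ((None, 0.3), "clear"),
    ((5.0, 2.0), "obstacle"),
    ((5.5, 2.2), "obstacle"),
]
held_out = [((0.9, 0.3), "clear"), ((5.2, 1.9), "obstacle")]

model = train(preprocess(raw_training))
accuracy = assess(model, held_out)
print(accuracy, ready_to_deploy(accuracy))
```

Keeping each stage as a separate function mirrors the life cycle described above: a stage can be re-run or replaced (for example, swapping in a different model family) without touching the assessment and deployment gate.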

3.2. Ensuring Software Quality and Security

In autonomous vehicles, integrating AI into software development and maintenance plays a crucial role in ensuring the robustness and security of the overall system. Automated testing with AI-based tools is a key component of the testing process: these tools efficiently identify bugs and vulnerabilities and verify that the software functions as intended, contributing to the reliability of autonomous vehicle software. AI also supports code analysis and review, examining the code base for quality and highlighting potential issues or vulnerabilities. AI-facilitated predictive maintenance anticipates and addresses potential software failures, reducing downtime and improving operational efficiency. Moreover, AI-driven anomaly detection and security monitoring contribute significantly to safety: by continuously monitoring the software environment, AI systems can identify abnormal patterns or behaviors and respond promptly to potential security threats in real time. Vulnerability assessment, another application of AI tools, involves in-depth evaluations that pinpoint weaknesses in software systems and provide insights for mitigating risks effectively. AI-powered behavioral analysis helps in understanding user interactions within the software, which aids in detecting and preventing suspicious or malicious activities. Finally, AI-based fraud detection within software applications adds an extra layer of security, protecting the integrity of the autonomous vehicle’s systems against potential breaches. In summary, integrating AI in these areas enhances the overall safety, security, and efficiency of autonomous vehicles.
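The anomaly detection idea described above can be illustrated with a very simple statistical stand-in. The sketch below flags values that deviate strongly from a rolling baseline using a z-score; a production system would likely use learned models, and the class name, window size, threshold, and latency figures here are assumptions chosen for the example.

```python
from collections import deque
from statistics import mean, pstdev


class RollingAnomalyDetector:
    """Flags values that deviate strongly from a rolling window of recent values."""

    def __init__(self, window=20, z_threshold=3.0, min_history=5):
        self.history = deque(maxlen=window)
        self.z_threshold = z_threshold
        self.min_history = min_history

    def observe(self, value):
        """Return True if `value` looks anomalous relative to recent history."""
        is_anomaly = False
        if len(self.history) >= self.min_history:
            mu = mean(self.history)
            sigma = pstdev(self.history)
            if sigma > 0 and abs(value - mu) / sigma > self.z_threshold:
                is_anomaly = True
        if not is_anomaly:
            # Anomalous values are kept out of the baseline so they do not inflate it.
            self.history.append(value)
        return is_anomaly


# Invented message latencies in milliseconds; the spike of 50 should be flagged.
latencies_ms = [10, 11, 9, 10, 12, 10, 11, 50, 10]
detector = RollingAnomalyDetector()
flags = [detector.observe(v) for v in latencies_ms]
print(flags)
```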

4. Challenges in AI-Driven Software Development for Autonomous Vehicles

The success of autonomous vehicles hinges on balancing their potential benefits with addressing the challenges through collaborative efforts in technological development, regulation, and public communication. Some of the challenges include the following:

- Safety and Reliability: Ensuring flawless AI performance in all scenarios is paramount.

- Cybersecurity: Protecting against hacking and unauthorized access is essential.

- Regulations and Law: Clear standards for safety, insurance, and liability are needed.

- Public Trust and Acceptance: Addressing concerns about safety, data privacy, and ethical dilemmas is crucial.

- Addressing Edge Cases: Handling unforeseen scenarios is challenging because they are rare and, in some cases, hard to anticipate.

- Ethical Dilemmas: Defining AI decision-making in ambiguous situations raises moral questions.

Understanding and mitigating these concerns is crucial for building responsible and fair AI-driven software for autonomous vehicles. The following are some of the existing challenges and their mitigations:

- Safety and Reliability:

  - Challenge: Sensor failures can lead to the misinterpretation of the environment.

  - Mitigation: Use diverse sensors (LiDAR, cameras, radar) with redundancy and robust sensor fusion algorithms.

  - Challenge: Cybersecurity vulnerabilities can be exploited for malicious control.

  - Mitigation: Implement strong cybersecurity measures, penetration testing, and secure communication protocols.

  - Challenge: Limited real-world testing data can lead to unforeseen scenarios.

  - Mitigation: Utilize simulation environments with diverse and challenging scenarios, combined with real-world testing with safety drivers.

  - Challenge: Lack of clear regulations and legal frameworks can hinder the development and deployment.

  - Mitigation: Advocate for clear and adaptable regulations that prioritize safety and innovation.

- Cybersecurity:

  - Challenge: Vulnerable software susceptible to hacking and manipulation.

  - Mitigation: Implement secure coding practices, penetration testing, and continuous monitoring.

  - Challenge: AI models can be vulnerable to adversarial attacks, posing security risks.

  - Mitigation: Robust testing against adversarial scenarios, incorporating security measures, and regular updates to address emerging threats.

- Regulations and Law:

  - Challenge: Lack of clear legal liability in case of accidents involving autonomous vehicles.

  - Mitigation: Develop frameworks assigning responsibility to manufacturers, software developers, and operators.

  - Challenge: Difficulty in adapting existing traffic laws to address the capabilities and limitations of autonomous vehicles.

  - Mitigation: Establish new regulations that prioritize safety, consider ethical dilemmas, and update with technological advancements.

- Public Trust and Acceptance:

  - Challenge: Public concerns regarding safety and a lack of trust in AI decision-making.

  - Mitigation: Increase transparency in testing procedures, demonstrate safety through rigorous testing and data, and prioritize passenger safety in design.

- Addressing Edge Cases:

  - Challenge: Rare or unexpected scenarios that confuse the AI’s perception.

  - Mitigation: Utilize diverse and comprehensive testing data, including simulations of edge cases, and develop robust algorithms that can handle the unexpected. Furthermore, include new scenarios as they are seen in the field from collected data as a continuous feedback loop, as shown in Figure 3.

Figure 3. AI-Powered Development Life Cycle.

- Ethical Dilemmas [26]:

  - Data Bias:

    - Challenge: AI models learn from historical data, and if the training data are biased [27], the model can perpetuate and amplify existing biases.

    - Mitigation: Rigorous data pre-processing, diversity in training data, and continuous monitoring for bias are essential. Ethical data collection practices must be upheld.

  - Algorithmic Bias:

    - Challenge: Algorithms may inadvertently encode biases present in the training data, leading to discriminatory outcomes.

    - Mitigation [28]: Regular audits of algorithms for bias, transparency in algorithmic decision-making, and the incorporation of fairness metrics during model evaluation.

  - Fairness and Accountability:

    - Challenge: Ensuring fair outcomes [29] and establishing accountability for AI decisions is complex, especially when models are opaque.

    - Mitigation: Implementing explainable AI (XAI) techniques, defining clear decision boundaries, and establishing accountability frameworks for AI-generated decisions.

  - Inclusivity and Accessibility:

    - Challenge: Biases in AI can result in excluding certain demographics, reinforcing digital divides.

    - Mitigation: Prioritizing diversity in development teams, actively seeking user feedback, and conducting accessibility assessments to ensure inclusivity.

  - Social Impact:

    - Challenge: The deployment of biased AI systems can have negative social implications [30], affecting marginalized communities disproportionately.

    - Mitigation: Conducting thorough impact assessments, involving diverse stakeholders in the development process, and considering societal consequences during AI development.

- Ethical Frameworks and Guidelines [31]:

  - Challenge: The absence of standardized ethical frameworks can lead to inconsistent practices in AI development.

  - Mitigation: Adhering to established ethical guidelines, such as those provided by organizations like the ISO, IEEE, SAE, and government regulatory boards, and actively participating in the development of industry-wide standards.

- Explainability and Transparency:

  - Challenge: Many AI models operate as “black boxes”, making it challenging to understand how decisions are reached. AI safety is another challenge that needs to be addressed in safety-critical applications like autonomous vehicles.

  - Mitigation: Prioritizing explainability [32] in AI models, using interpretable algorithms, and providing clear documentation on model behavior.

- User Privacy [33]:

  - Challenge: AI systems often process vast amounts of personal data, raising concerns about user privacy.

  - Mitigation: Implementing privacy-preserving techniques, obtaining informed consent, and adhering to data protection regulations (e.g., GDPR [34]) to safeguard user privacy.

- Continuous Monitoring and Adaptation:

  - Challenge: AI models may encounter new biases or ethical challenges as they operate in dynamic environments.

  - Mitigation: Establishing mechanisms for ongoing monitoring, feedback loops, and model adaptation to address evolving ethical considerations.
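The sensor-redundancy mitigation listed under Safety and Reliability can be illustrated with a small fusion sketch. It assumes each sensor reports a range to the same object together with a known measurement variance, rejects readings far from the median, and combines the rest by inverse-variance weighting. The function name, the 2 m rejection threshold, and the example variances are assumptions for illustration; real perception stacks typically use more elaborate filters.

```python
from statistics import median


def fuse_ranges(readings, max_deviation_m=2.0):
    """Fuse redundant range readings.

    `readings` maps a sensor name to (range_m, variance_m2), or to None if the
    sensor has failed. Returns (fused_range_m, fused_variance_m2, sensors_used).
    """
    healthy = {name: r for name, r in readings.items() if r is not None}
    if len(healthy) < 2:
        raise ValueError("fewer than two healthy sensors: no redundancy available")

    # Reject readings that disagree strongly with the median of all healthy sensors.
    mid = median(rng for rng, _ in healthy.values())
    trusted = {
        name: (rng, var)
        for name, (rng, var) in healthy.items()
        if abs(rng - mid) <= max_deviation_m
    }
    if not trusted:
        raise ValueError("sensors disagree too strongly to fuse")

    # Inverse-variance weighting: more precise sensors get more weight.
    weights = {name: 1.0 / var for name, (_, var) in trusted.items()}
    total_weight = sum(weights.values())
    fused = sum(weights[name] * trusted[name][0] for name in trusted) / total_weight
    return fused, 1.0 / total_weight, sorted(trusted)


# Invented readings: the camera estimate is far off and should be rejected.
readings = {
    "lidar": (25.0, 0.01),
    "radar": (25.4, 0.04),
    "camera": (40.0, 1.0),
}
fused_range, fused_variance, used = fuse_ranges(readings)
print(round(fused_range, 2), round(fused_variance, 4), used)
```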

Of all these challenges, addressing ethical considerations and bias in AI-driven software development for autonomous vehicles is the most demanding and requires a holistic and proactive approach [35]. It involves a commitment to fairness, transparency, user privacy, and social responsibility throughout the AI development life cycle. As the field evolves, continuous efforts are needed to refine ethical practices and promote responsible AI deployment.
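One of the mitigations named above is the use of fairness metrics during model evaluation. As a minimal sketch of what such a metric could look like, the code below computes the detection rate (recall) of a hypothetical pedestrian detector per group and reports the largest gap between groups. The group labels and counts are invented, and recall gap is only one of several possible fairness measures.

```python
def recall_by_group(records):
    """Detection rate per group.

    `records` is an iterable of (group, detected) pairs, one per pedestrian that
    was actually present in the evaluation data.
    """
    hits, totals = {}, {}
    for group, detected in records:
        totals[group] = totals.get(group, 0) + 1
        hits[group] = hits.get(group, 0) + (1 if detected else 0)
    return {group: hits[group] / totals[group] for group in totals}


def recall_gap(records):
    """Largest difference in detection rate between any two groups."""
    rates = recall_by_group(records)
    return max(rates.values()) - min(rates.values())


# Invented evaluation results: 9 of 10 detected in group_a, 7 of 10 in group_b.
records = (
    [("group_a", True)] * 9 + [("group_a", False)] * 1
    + [("group_b", True)] * 7 + [("group_b", False)] * 3
)
rates = recall_by_group(records)
gap = recall_gap(records)
print(rates, round(gap, 2))
```

A gap close to zero suggests the detector performs similarly across groups on this data; a large gap is a signal to revisit the training data or the model before deployment.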

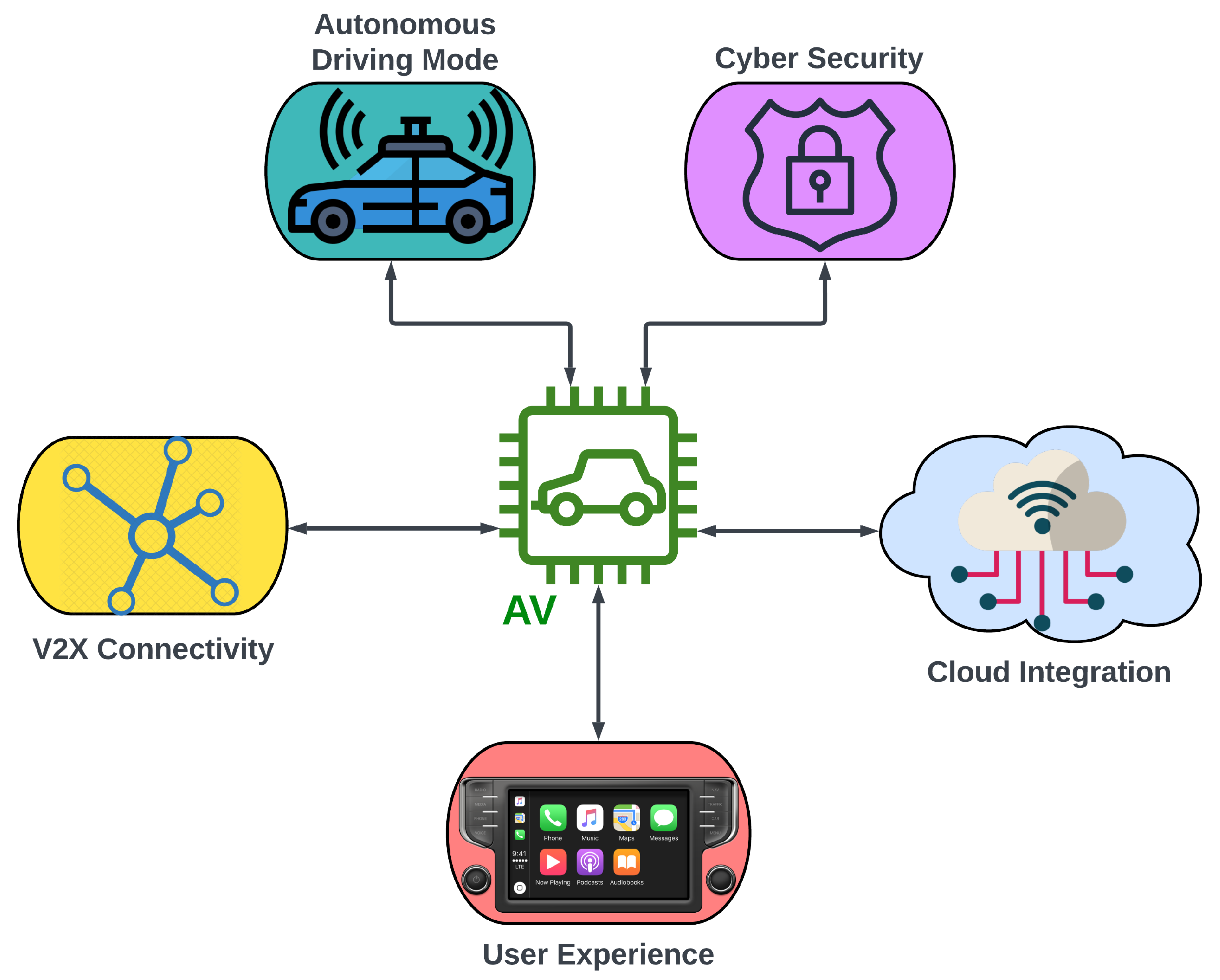

5. AI’s Role in the Emerging Trend of Internet of Things (IoT) Ecosystem for Autonomous Vehicles

Artificial Intelligence (AI) plays a crucial role in shaping and enhancing the capabilities of the Internet of Things (IoT). Here is an overview of how AI contributes to the IoT ecosystem for autonomous vehicles (Figure 4).

Figure 4. AI’s role in the Internet of Things (IoT) ecosystem for autonomous vehicles.

In the realm of Connected and Autonomous Vehicles (CAVs), AI and IoT [36] converge to create a seamless network of intelligence and connectivity, transforming the driving experience. Vehicles become intelligent agents, processing sensor data in real-time to make informed decisions, predicting traffic patterns, optimizing routes, detecting anomalies, and even adapting to changing road conditions with dynamic adjustments. This intelligent ecosystem extends beyond individual vehicles, interconnecting with infrastructure and other vehicles to optimize traffic flow, anticipate potential hazards, and personalize the driving experience.

Key AI-powered IoT capabilities in CAVs include the following:

- Real-time data processing and analysis for insights into traffic, road conditions, and vehicle health.

- Predictive analytics for proactive maintenance, efficient resource allocation, and informed decision-making.

- Enhanced automation for autonomous driving tasks, adaptive cruise control [37], and dynamic route optimization.

- Efficient resource management for optimizing energy consumption, bandwidth usage, and load balancing.

- Security and anomaly detection for identifying potential threats and preventing cyberattacks [38].

- Personalized user experience through customized settings, preferences, and tailored insights.

- Edge computing for real-time decision-making, reducing latency and improving responsiveness.

The challenges to address include ensuring data privacy, security, and interoperability, as well as overcoming resource constraints in connected vehicles. The seamless integration of AI and IoT holds the potential to revolutionize transportation, leading to safer, more efficient, and sustainable [39] mobility solutions.

Enhancing User Experience

Personalization and recommendation systems in-cabin: AI-driven personalization and recommendation systems in autonomous vehicles use machine learning models to analyze user behavior and preferences, creating personalized recommendations for tools, libraries, and vehicle maneuvers. They collect and pre-process user data, create individual profiles, generate tailored suggestions, and continuously adapt based on real-time interactions, aiming to enhance user experience and developer productivity.

Natural Language Processing (NLP) in-cabin: NLP enables software to comprehend and process human language. This includes chatbots, virtual assistants, and voice recognition systems that understand and respond to natural language queries in vehicle cabins. It allows the vehicle’s subsystems to analyze and derive insights from user requirements and to structure those requirements effectively in order to generate responses and trigger certain vehicle maneuvers.

Generative Artificial Intelligence (Gen AI): This technology uses machine learning algorithms to produce new and original outputs based on the patterns and information it has learned from training data. In the context of vehicles, generative AI can be applied to various aspects, including natural language processing for in-car voice assistants, content generation for infotainment systems, and even simulation scenarios for testing autonomous driving systems. Large Language Models (LLMs) are a specific class of generative AI models that are trained on massive amounts of text data to understand and generate human-like language. In vehicles, LLMs can be employed for natural language understanding and generation, allowing for more intuitive and context-aware interactions between the vehicle and its occupants. This can enhance features like voice-activated controls, virtual assistants, and communication systems within the vehicle.

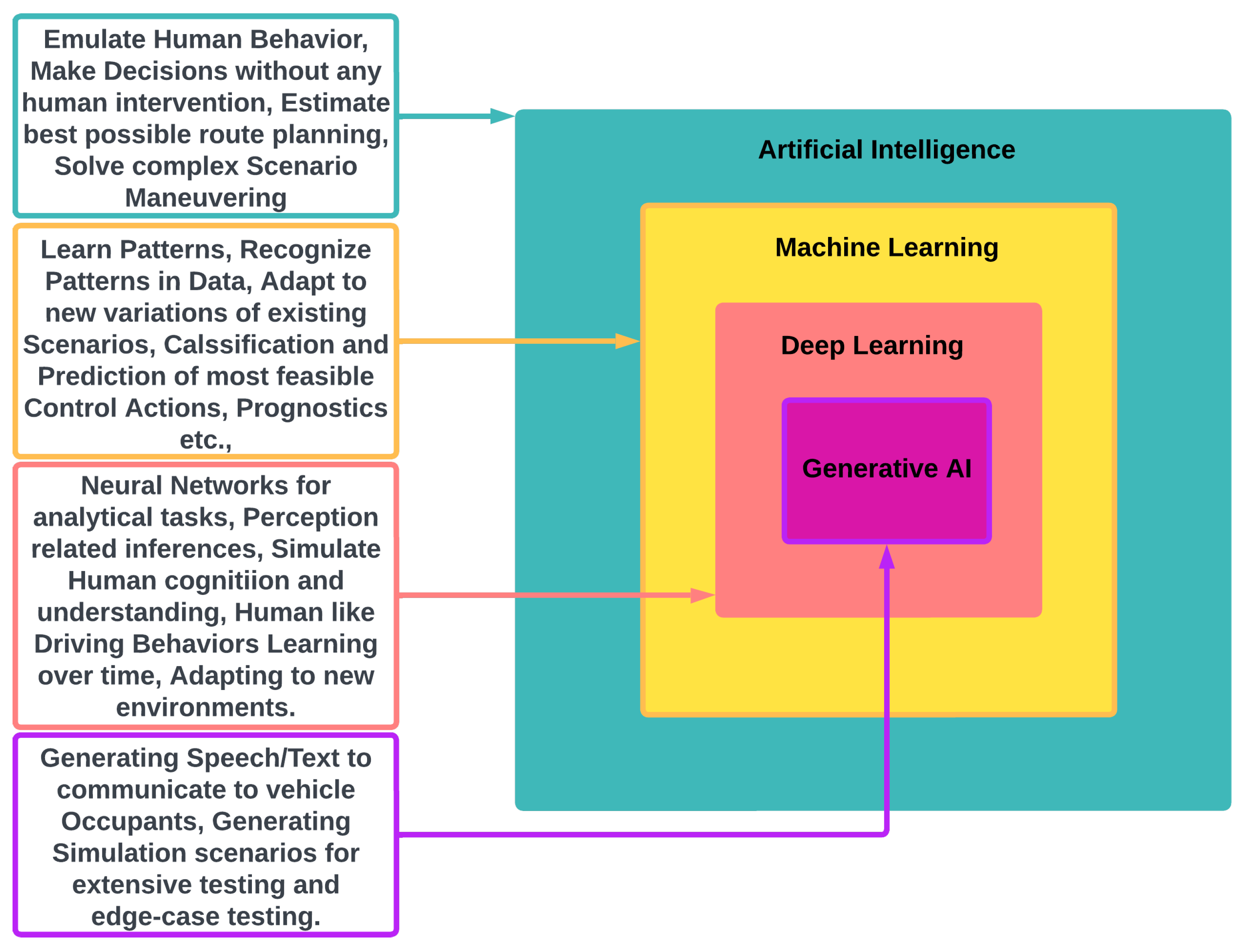

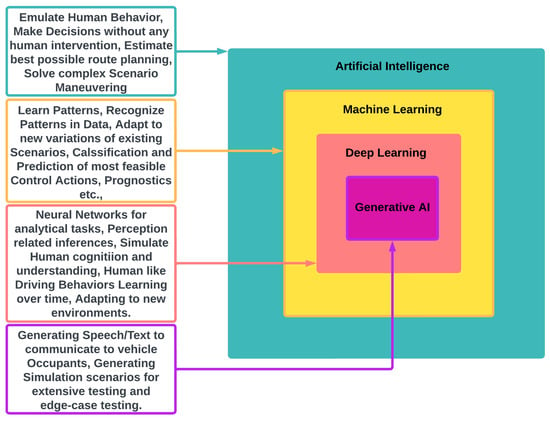

6. AI Algorithms’ Statistics for Autonomous Vehicles

AI has multiple sub-divisions, such as machine learning (ML), deep learning (DL), and generative AI (Gen AI), as shown in Figure 5. ML is a broad subset of AI that focuses on deciphering patterns within datasets and adapting to evolving circumstances. DL is a subset of ML that operates through intricate neural networks, handling larger datasets and performing more complex computations. DL algorithms primarily employ supervised learning methods, particularly deep neural networks, enabling learning from unstructured data. Gen AI is a subset of DL that helps emulate human interaction through sophisticated models like Generative Adversarial Networks (GANs) [40] and Generative Pre-trained Transformers (GPTs). GANs engage in a continual learning cycle between generator and discriminator models, improving both until the generated samples become indistinguishable from authentic examples. GPT frameworks focus on generative language modeling, facilitating tasks such as text generation, code creation, and conversational dialogue.

Figure 5.

A comparative view of AI, ML, deep learning, and Gen AI and some of their use cases in AVs [41].

This section extends the analysis of artificial intelligence (AI) algorithms in autonomous vehicles, building upon previous work as described in Section 5. The focus is on providing additional statistical insights into the following:

- Evolution of different types of AI algorithms over the years,

- Research trends in the application of AI in all fields vs. autonomous vehicles,

- Creation of a parameter set crucial for autonomous trucks versus cars,

- Evolution of AI algorithms at different autonomy levels, and

- Changes in the types of algorithms, software package size, etc., over time.

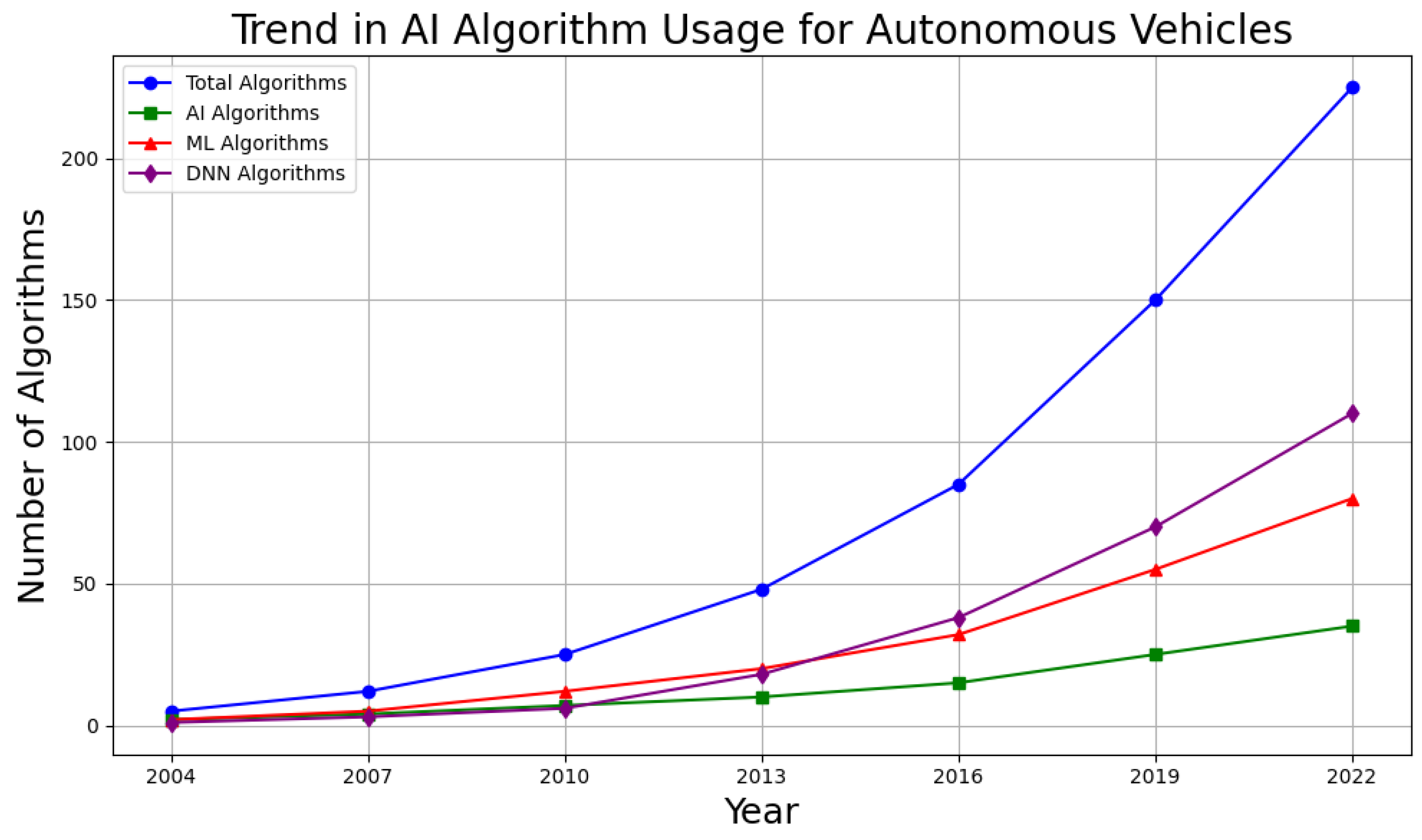

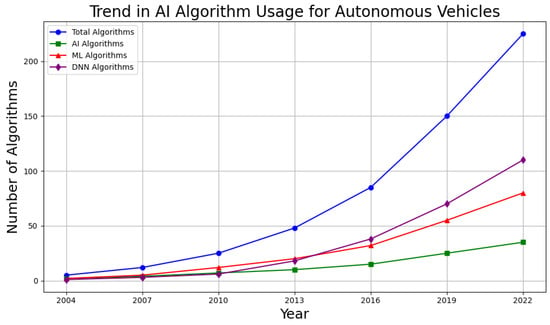

6.1. Stat1: Trends of Usage of AI Algorithms over the Years

Today, a vehicle’s main goal is not limited to transportation, but also includes comfort, safety, and convenience. This has led to extensive research on improving vehicles and incorporating technological breakthroughs and advancements.

Prior work on the development of architectures and ADAS technology shows that the research still has limitations. These limitations stem either from the authors’ incomplete elaboration of their knowledge or from a lack of proper sources. It is therefore a useful exercise to look at the trends over the years, because our capability to develop these ML models has improved: access to better computing units [42,43] has led to the evolution of better algorithms. In Table 2, we summarize different modeling algorithms for various standard components of the ADAS algorithm. The second column presents the technologies that exist today, and the third column predicts potential future developments that are more efficient than the current state.

Table 2.

Autonomous driving: Key technologies’ evolution.

Below, we present a series of plots of research publications in AI (artificial intelligence), with a focus on ML (machine learning) and DNN (Deep Neural Network) domains. Brief explanations of the topics that fall under each domain are provided first.

- AI (Artificial Intelligence)

  - Expert Systems: Rule-based systems that mimic human expertise in decision-making [44].

  - Decision Trees: Hierarchical structures for classification and prediction. One good example is their use in prognostics.

  - Search Algorithms: Methods for finding optimal paths or solutions, such as A* search and path planning algorithms.

  - Generative AI: Creates scenarios for training the system and for balancing data in high-severity accident/non-accident cases (e.g., the CRSS dataset). It can generate datasets of scenarios that do not exist in recorded data, supplement real datasets, and support simulation testing.

  - NLP: In-vehicle AI assistants built on LLMs (Yui, Concierge, Hey Mercedes, etc.).

- ML (Machine Learning)

  - Supervised Learning: Algorithms that learn from labeled data to make predictions, such as the following:

    - Linear Regression: For predicting continuous values.

    - Support Vector Machines (SVMs): For classification and outlier detection.

    - Decision Trees: For classification and rule generation.

    - Random Forests: Ensembles of decision trees for improved accuracy.

  - Unsupervised Learning: Algorithms that find patterns in unlabeled data, such as the following:

    - Clustering Algorithms (K-means, Hierarchical): For grouping similar data points.

    - Dimensionality Reduction (PCA, t-SNE): For reducing data complexity.

- DNN (Deep Neural Networks)

  - Convolutional Neural Networks (CNNs): For image and video processing, used for object detection, lane segmentation, and traffic sign recognition.

  - Recurrent Neural Networks (RNNs): For sequential data processing, used for trajectory prediction and behavior modeling.

  - Deep Reinforcement Learning (DRL): For learning through trial and error, used for control optimization and decision-making.

- Specific Examples in Autonomous Vehicles

  - Object Detection (DNN): CNNs like YOLO [45], SSD [46], and Faster R-CNN are used to detect objects around the vehicle.

  - Lane Detection (DNN): CNNs are used to identify lane markings and road boundaries.

  - Path Planning (AI): Search algorithms like A* and RRT are used to plan safe and efficient routes.

  - Motion Control (ML): Regression models [47,48,49] are used to predict vehicle dynamics and control steering, acceleration, and braking.

  - Behavior Prediction (ML): SVMs or RNNs are used to anticipate the behavior of other vehicles and pedestrians.
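To make the path-planning entry above more concrete, the following is a minimal sketch of A* search on a small occupancy grid. It is an illustration only, not the planner of any particular vehicle: the grid encoding (0 = free, 1 = obstacle), the 4-connected unit-cost moves, the Manhattan-distance heuristic, and the names `astar`, `demo_grid`, and `demo_path` are all assumptions made for this example.

```python
import heapq


def astar(grid, start, goal):
    """Return a shortest 4-connected path from start to goal, or None.

    grid: list of rows; 0 = free cell, 1 = obstacle (hypothetical encoding).
    start, goal: (row, col) tuples that refer to free cells.
    """
    def h(cell):
        # Manhattan distance: admissible for unit-cost 4-connected moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    rows, cols = len(grid), len(grid[0])
    open_heap = [(h(start), 0, start)]  # entries are (f = g + h, g, cell)
    came_from = {}
    best_g = {start: 0}

    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:
            # Walk the parent links back to the start.
            path = [cell]
            while cell in came_from:
                cell = came_from[cell]
                path.append(cell)
            return path[::-1]
        if g > best_g[cell]:
            continue  # stale heap entry; a cheaper route was found already
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                ng = g + 1
                if ng < best_g.get((nr, nc), float("inf")):
                    best_g[(nr, nc)] = ng
                    came_from[(nr, nc)] = cell
                    heapq.heappush(open_heap, (ng + h((nr, nc)), ng, (nr, nc)))
    return None  # goal unreachable


# Hypothetical map: a wall with a single gap at row 1, column 2.
demo_grid = [
    [0, 0, 0, 0],
    [1, 1, 0, 1],
    [0, 0, 0, 0],
]
demo_path = astar(demo_grid, (0, 0), (2, 0))
print(demo_path)
```

In this toy map the only route passes through the gap in the wall, so the returned path detours through it. A production planner would typically search over a richer state space (heading, speed, lane) with costs that reflect safety and comfort rather than unit steps.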

Figure 6 shows the trends we found when evaluating the papers from [50,51]. One can observe that in 2013, the number of algorithms in DNN surpassed that in generic AI and ML. This reflects the growth of research on deep neural networks and the traction they gained in the AI community. However, the main takeaway from the graph is the exponential upward trend in the number of algorithms developed over the years for AI applications.

Figure 6.

Trends of usage of AI algorithms over the years.

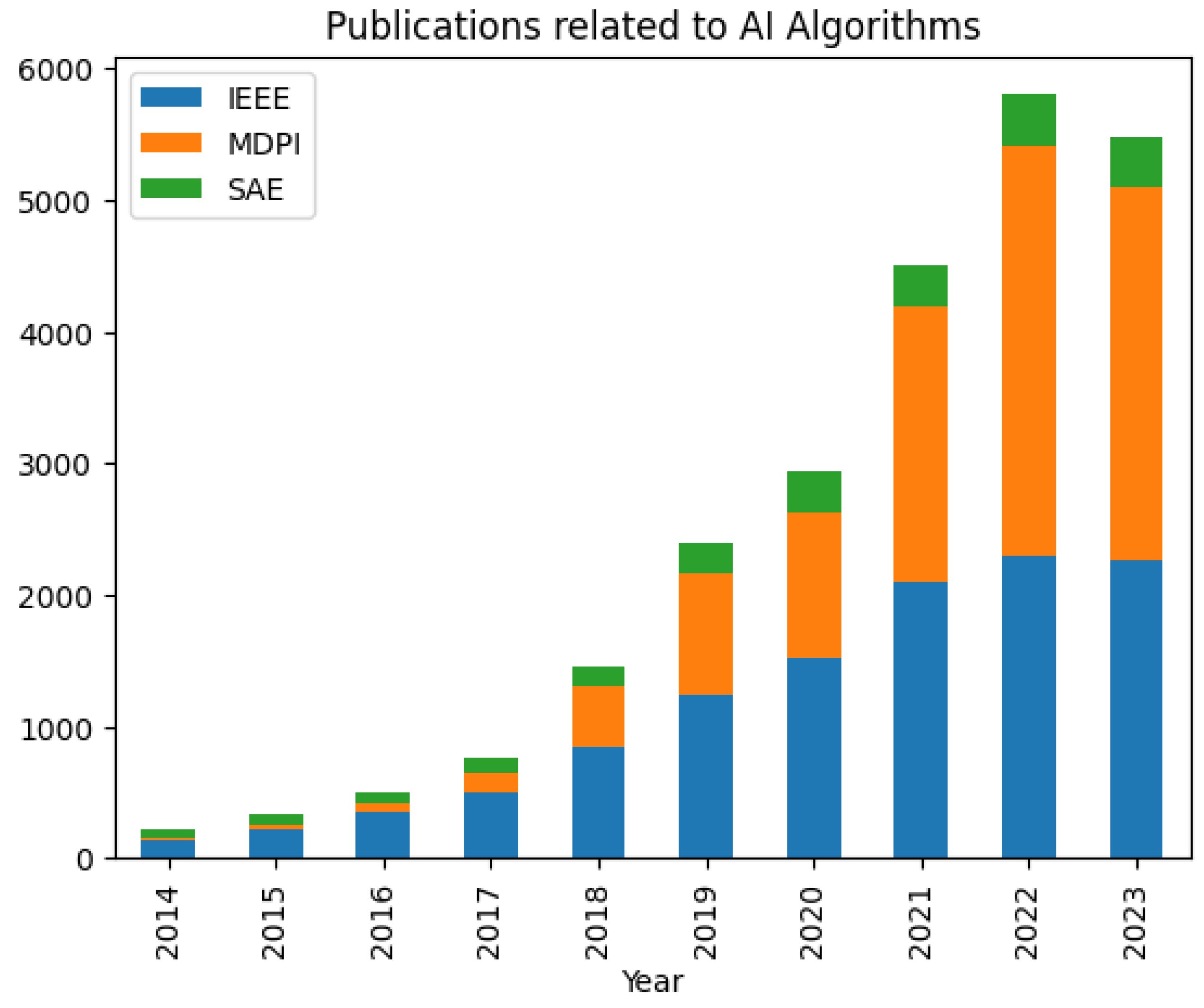

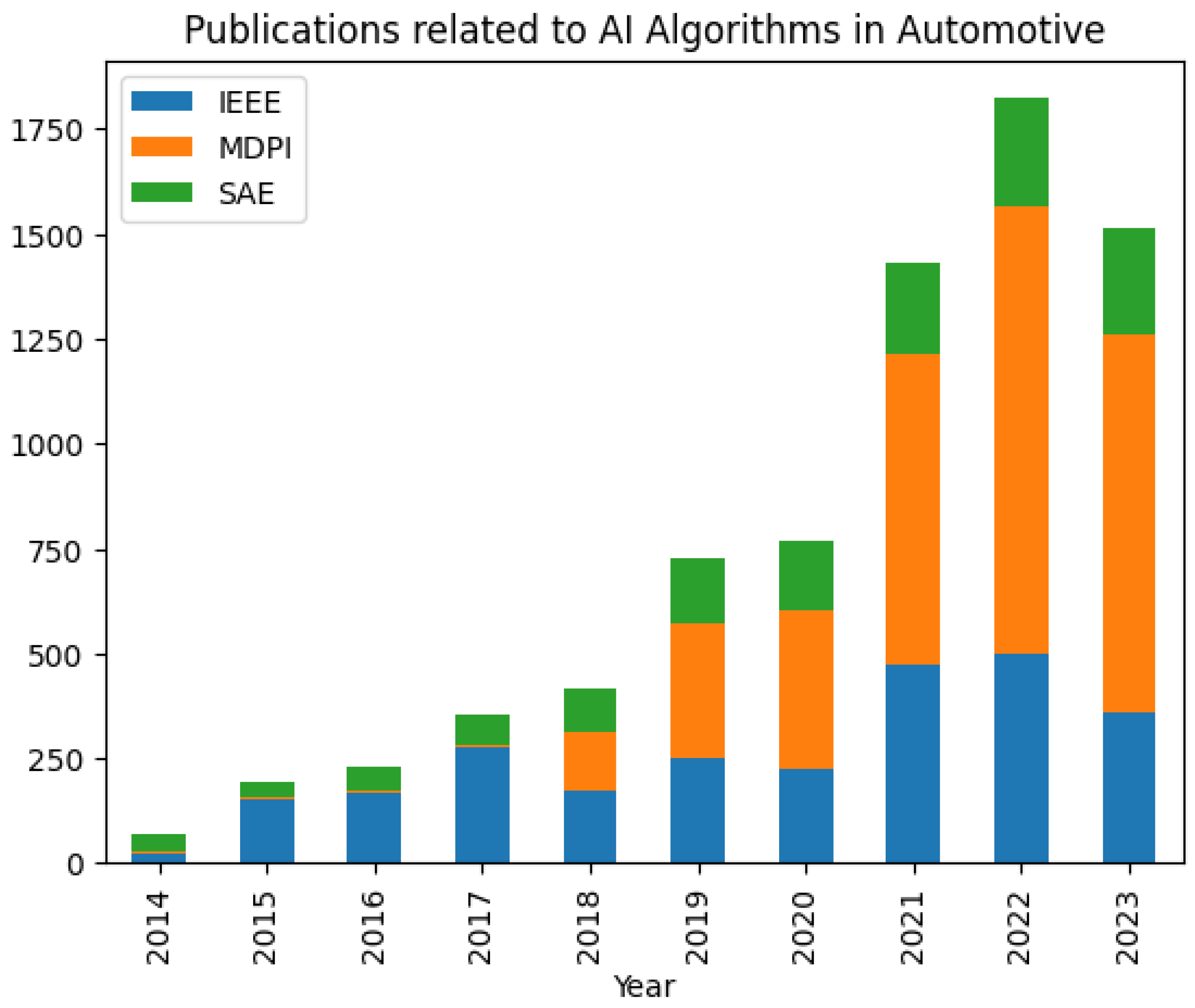

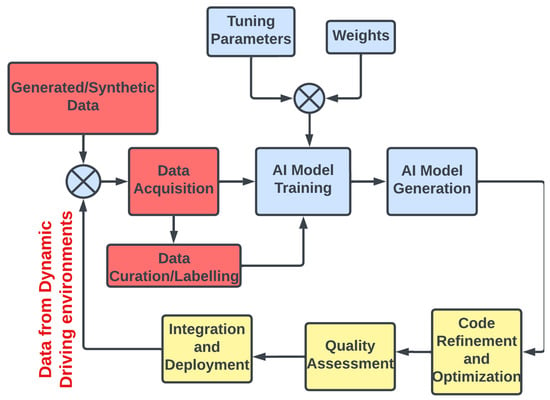

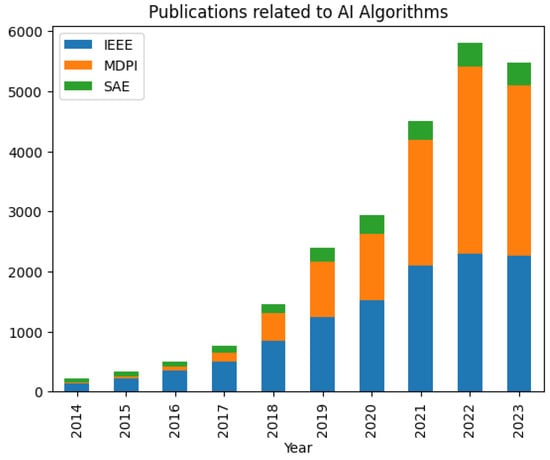

We surveyed platforms like IEEEXplore, SAE Mobilus, MDPI, and Science Direct to identify published research in AI/ML in general and in autonomous vehicles in particular.

When filtering the MDPI journals and articles, one can observe that an additional filter relating to Data appears after 2021. This indicates that pre-2020, not many papers related to data handling and analysis were published, as the collected data were not large. One also observes that in 2020 (the year of the COVID-19 pandemic), MDPI saw minimal papers on autonomous technology. While several factors may contribute to the rise in model deployments observed in 2021, a possible explanation is the limited opportunity for previous models to undergo real-world testing through vehicle deployment. Notably, the number of deployed models surged to 737 in 2021, representing nearly a twofold increase compared to earlier years.

From the IEEE publications, one can see that although research in AI/ML increased over time, comparatively little has been published on autonomous vehicle technology.

Shifting Trends in IEEE Publications: Interestingly, post 2021, the upward trend in LMM and DNN publications (identified through filters aligned with our previous analysis) appears to plateau. This suggests a potential shift in research focus within Computer Vision (CV) following the emergence of Generative AI (GenAI) and other advanced technologies. While LMM and DNN remain foundational, their prominence as primary research subjects within classic CV might be declining.

Considering CVPR Publications: Initially, we considered including CVPR publications in our analysis. However, we ultimately excluded them due to a significant overlap with the IEEE dataset. As a significant portion of CVPR papers are subsequently published in IEEE journals, including both sets would introduce redundancy and potentially skew the analysis.

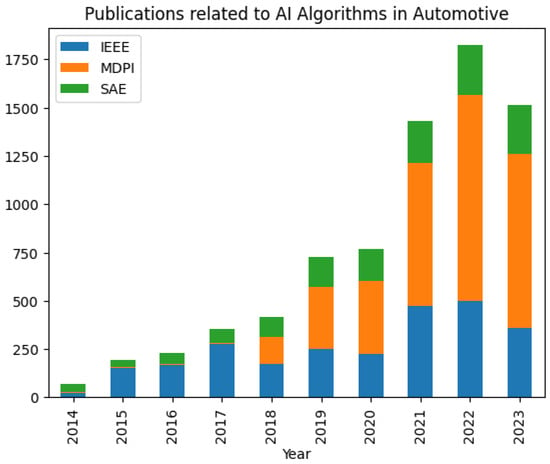

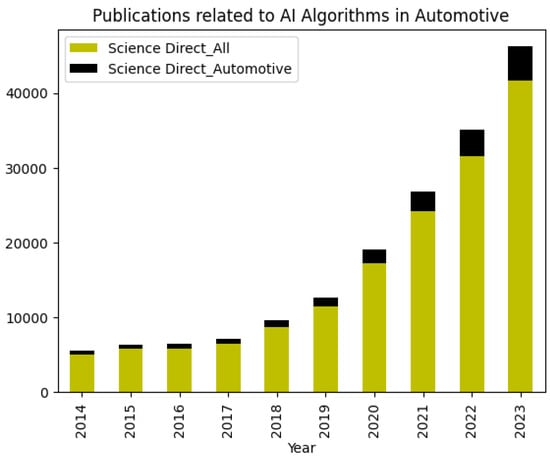

Figure 7 covers all AI/ML publications in IEEEXplore [52], MDPI (Multidisciplinary Digital Publishing Institute) [53], and SAE (Society of Automotive Engineers) [54]. Figure 8 shows the trend in publications on autonomous vehicles. Figure 9 covers Science Direct [55], where the publications number in the thousands, with autonomous vehicles having very little presence. This indicates how far AI applications have spread beyond engineering: they are used everywhere from medicine to defense.

Figure 7.

No. of publications related to AI algorithms in all fields.

Figure 8.

No. of publications related to AI algorithms for autonomous vehicles.

Figure 9.

No. of publications related to AI algorithms for autonomous vehicles vs. all fields in Science Direct.

From the graphs, we see comparatively few publications in the years 2014–2018. A large surge begins in 2018, when autonomous vehicles with advanced self-driving features gained traction. Based on this trend, we expect a similar exponential rise in the future. However, we also expect additional parameters (for example, Data, as recently introduced) to appear in the list. With AI applications emerging in every industry, including the automotive industry, the future for research in the area is promising.
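One simple way to check whether a publication series is consistent with exponential growth is to fit a straight line to the logarithm of the yearly counts. The sketch below shows that calculation. The series in it is synthetic, generated from a known exponential purely to demonstrate the method; it is not the data behind Figures 6 to 9, and the names `fit_exponential` and `synthetic_counts` are introduced here for illustration.

```python
import math


def fit_exponential(years, counts):
    """Least-squares fit of ln(count) = a + b * t, with t = year - years[0].

    Returns (initial_level, growth_rate), so that
    count is approximately initial_level * exp(growth_rate * t).
    """
    t = [y - years[0] for y in years]
    z = [math.log(c) for c in counts]
    n = len(t)
    t_mean = sum(t) / n
    z_mean = sum(z) / n
    slope = (
        sum((ti - t_mean) * (zi - z_mean) for ti, zi in zip(t, z))
        / sum((ti - t_mean) ** 2 for ti in t)
    )
    intercept = z_mean - slope * t_mean
    return math.exp(intercept), slope


# SYNTHETIC placeholder series (assumption): exactly exponential by construction.
years = list(range(2014, 2024))
synthetic_counts = [50.0 * math.exp(0.3 * (y - 2014)) for y in years]

level, rate = fit_exponential(years, synthetic_counts)
doubling_time = math.log(2) / rate  # years needed for the count to double
print(level, rate, doubling_time)
```

With real counts, a roughly straight line in log scale (and residuals without a systematic pattern) would support the exponential reading, while curvature would suggest a plateau or a change in regime.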

The analysis focuses on the quantitative aspect of AI research, specifically the number of related papers published on reputable platforms like IEEE, MDPI, SAE, and Science Direct. This approach provides a solid foundation for understanding the overall research trends in AI and its subsequent evolution. Although qualitative research and development have made valuable contributions to the field, they fall outside the scope of this particular analysis.

6.2. Stat2: Parameters for AI Models (Trucks vs. Cars)

According to the American Trucking Associations (ATA), there will be a shortage of over 100,000 truck drivers in the US by 2030, which could potentially double by 2050 if current trends continue. The Bureau of Labor Statistics (BLS), while not explicitly predicting a shortage, projects slower-than-average job growth for truck drivers through 2030, indicating potential challenges in meeting future demand. The aging workforce, demanding job conditions, and regulatory hurdles are a few of the contributing factors. These two projections make a stronger business case for driverless trucks than for driverless cars. They also contradict the belief that truck drivers may lose their jobs to self-driving technology. As mentioned in [56,57], driverless trucks can drastically reduce driver costs, increase truck utilization, and improve truck safety. In spite of this, not enough research has been conducted on the impacts of self-driving trucks compared to passenger transport [58]. Road freight transport must remain aligned with its current operations so that it retains its value chain. One cannot expect cost reductions from removing the driver from the cabin completely, as most trucking companies developing self-driving technology focus on hub-to-hub transport rather than, as with passenger cars, transport from source to destination. A driver would still be needed at the start and at the end of the journey. This returns us to the point above: truck drivers will still be needed in the future, while the other drawbacks of long-haul freight transport are eliminated.

The concept of heterogeneous traffic flow is explored in [59,60,61]. It concerns using the effects of different car–truck, truck–truck, and car–car combinations to develop pragmatic cross-class interaction rules. This model generalizes the classical Cell Transmission Model (CTM) to three traffic regimes, i.e., free flow, semi-congestion, and full congestion. The unique combinations of traffic scenarios in different geographical areas have been explored. This also helps in collecting data for non-lane-based traffic in countries like India and Indonesia, and in better tuning the training models discussed earlier in our paper.
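For readers unfamiliar with the CTM, the sketch below implements one update step of the classical single-class model with a triangular fundamental diagram. It is not the multi-class car–truck generalization discussed in the cited works; it only shows the sending/receiving logic that such generalizations build on. The function name `ctm_step` and all parameter values (free-flow speed, backward wave speed, jam density, capacity, cell length, time step) are illustrative assumptions.

```python
def ctm_step(density, upstream_demand, v=30.0, w=6.0, k_jam=0.15,
             q_max=0.75, dx=300.0, dt=10.0):
    """Advance cell densities (veh/m) by one time step of the classical CTM.

    Assumed units: v and w in m/s, k_jam in veh/m, q_max and
    upstream_demand in veh/s, dx in m, dt in s.
    """
    if v * dt > dx:
        raise ValueError("CFL condition violated: need v * dt <= dx")
    n = len(density)
    # Sending function: flow each cell is able to discharge downstream.
    sending = [min(v * k, q_max) for k in density]
    # Receiving function: flow each cell is able to accept from upstream.
    receiving = [min(q_max, w * (k_jam - k)) for k in density]
    # flows[i] enters cell i; flows[n] leaves the last cell (free outflow).
    flows = [min(upstream_demand, receiving[0])]
    flows += [min(sending[i - 1], receiving[i]) for i in range(1, n)]
    flows.append(sending[-1])
    # Conservation of vehicles in each cell.
    return [k + dt / dx * (flows[i] - flows[i + 1])
            for i, k in enumerate(density)]


# Hypothetical road: light traffic with one fully jammed cell in the middle.
road = [0.01, 0.01, 0.15, 0.01]
for _ in range(3):
    road = ctm_step(road, upstream_demand=0.3)
print(road)
```

The jammed cell accepts no inflow until it has discharged some vehicles, so the queue dissipates from its downstream end while density builds up just upstream. A multi-class extension would replace the single sending and receiving functions with class-dependent ones.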

Considering the cost aspects of autonomous feature development for trucks and cars, ref. [62] reports that, in Sweden, this technology is found to yield large savings in truck driver costs. For car drivers, the study found that the technology saves considerable travel time. Much capital is also saved in terms of subsidies. In [63], a case study was conducted in South Korea, where an autonomous truck pilot project has been running for several years. The findings of this study indicate that autonomous trucks would attain substantial operational cost savings for freight transport operators across all scenarios, ranging from the most pessimistic to the most optimistic.

As mentioned in [57], according to Daimler’s ex-CEO Zetsche, future vehicles need to have four characteristics: they need to be connected, autonomous, shared, and electric, the so-called CASE vehicles. Each of these points has the potential to turn the industry upside down. The paper is backed by a study projecting that Level 4 automation will be reached by 2030, followed by Level 5 in 2040. Based on the interview results in [56] and the Delphi-based scenario study with projections for the next 10 years, it is evident that the impact of automation on trucks needs serious consideration. Much research revolves around passenger cars, with many competitors in the market, whereas we found that not much data exist for self-driving trucks.

This parameter set, as shown in Table 3, serves as a starting point for understanding the key differences in how AI is applied to autonomous trucks and cars. Each parameter can be further explored and nuanced based on specific scenarios and applications. Currently, the autonomous trucking industry has been expanding in four major categories: Highway Trucking ODD (Operational Design Domain), Regional Delivery ODD, Urban Logistics ODD, and Mining and Off-Road ODD. Autonomous trucking is also divided into three categories based on the stage of logistics in the movement of goods: Long Haul, Middle Mile, and Last Mile. Understanding these categorizations and how the trucking industry is evolving to deliver more autonomous vehicles is very important for the future of logistics, as it helps optimize and streamline the entire supply chain and ensures the efficient and timely delivery of goods to their final destination.

Table 3.

Parameter set for differences in using AI in autonomous trucks and cars.

6.3. Stat3: Usage of AI Algorithms at Various Levels of Autonomy

Autonomous vehicles operate at various levels of autonomy, from Level 0 to Level 5, each presenting unique challenges and opportunities. This section explores the diversity and evolution of AI algorithms across these levels; the complexity and diversity of the algorithms increase as autonomy progresses. The six levels [64] of AV autonomy define the degree of driver involvement and vehicle automation. At lower levels (L0–L2), driver assistance systems primarily utilize rule-based and probabilistic methods for specific tasks like adaptive cruise control or lane departure warning. Higher levels (L3–L4) rely heavily on machine learning and deep learning algorithms [65,66], particularly for perception tasks like object detection and classification using convolutional neural networks (CNNs). Advanced sensor fusion techniques combine data from cameras, LiDAR (Light Detection and Ranging), radar, and other sensors to create a comprehensive understanding of the environment. Furthermore, reinforcement learning and probabilistic roadmap planning algorithms contribute to complex decision-making and route planning in L3–L4 AVs. L5 (full automation) requires robust sensor fusion, 3D mapping capabilities, and deep reinforcement learning approaches for adaptive behavior prediction and high-level route planning.

Some industry-relevant examples are illustrated below:

Kodiak

- Status: Kodiak currently operates a fleet of Level 4 autonomous trucks for commercial freight hauling on behalf of shippers.

- Recent Developments:

  - Kodiak is focusing on scaling [59] its autonomous trucking service as a model, providing the driving system to existing carriers.

  - The company recently secured additional funding to expand its operations and partnerships.

  - No immediate news about the deployment of driverless trucks beyond current operations.

Waymo

- Status: Waymo remains focused on Level 4 autonomous vehicle technology, primarily targeting robotaxi services in specific geographies [67].

- Recent Developments:

  - Waymo is expanding its robotaxi service in Phoenix, Arizona, with plans to eventually launch fully driverless operations.

  - The company’s Waymo Via trucking division continues testing autonomous trucks in California and Texas.

  - No publicly announced timeline for nationwide deployment of driverless trucks.

- Overall:

  - Both Kodiak and Waymo are making progress towards commercializing Level 4 autonomous vehicles but are primarily focused on different segments (trucks vs. passenger cars).

  - Driverless truck deployment timelines remain flexible and dependent on regulatory approvals and further testing as discussed previously.

- Key AI Components across Levels

  - Perception:

    - L0–L2: Basic object detection and lane segmentation using CNNs.

    - L3–L4: LiDAR-based object detection, advanced sensor fusion algorithms for robust object recognition.

    - L5: 3D object mapping, robust sensor fusion, and interpretation.

  - Decision-Making:

    - L0–L2: Rule-based algorithms for lane change assistance, adaptive cruise control.

    - L3–L4: Probabilistic roadmap planning (PRM), decision-making models for route selection.

    - L5: Deep reinforcement learning for adaptive behavior prediction, high-level route planning.

  - Control:

    - L0–L2: PID controllers [49] for basic acceleration and braking adjustments.

    - L3–L4: Model Predictive Control (MPC) [68] for complex maneuvers, trajectory tracking algorithms.

    - L5: Multi-task DNNs for real-time coordination of all driving actions.
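As an illustration of the L0–L2 control entry above, the following is a minimal sketch of a textbook discrete PID controller tracking a target speed, as in a basic cruise-control function. The plant model (commanded acceleration minus a linear drag term), the gains, the acceleration limits, and the names `PID` and `simulate_speed_tracking` are assumptions chosen for this example and are not taken from any production system.

```python
class PID:
    """Textbook discrete PID controller with output clamping."""

    def __init__(self, kp, ki, kd, out_min, out_max):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.out_min, self.out_max = out_min, out_max
        self.integral = 0.0
        self.prev_error = None

    def update(self, setpoint, measurement, dt):
        error = setpoint - measurement
        self.integral += error * dt
        # No derivative action on the first call (no previous error yet).
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        u = self.kp * error + self.ki * self.integral + self.kd * derivative
        # Clamp to the actuator limits.
        return max(self.out_min, min(self.out_max, u))


def simulate_speed_tracking(target=25.0, v0=20.0, drag=0.05, dt=0.1, steps=600):
    """Simulate speed (m/s) under PID control with a toy longitudinal model.

    Hypothetical plant: dv/dt = accel_cmd - drag * v (forward Euler).
    """
    pid = PID(kp=0.8, ki=0.2, kd=0.05, out_min=-3.0, out_max=3.0)
    v = v0
    speeds = [v0]
    for _ in range(steps):
        accel_cmd = pid.update(target, v, dt)  # commanded acceleration, m/s^2
        v += (accel_cmd - drag * v) * dt
        speeds.append(v)
    return speeds


speeds = simulate_speed_tracking()
print(round(speeds[-1], 2))
```

The integral term is what removes the steady-state error caused by drag; a proportional-only controller would settle below the target. This sketch omits anti-windup and measurement filtering, which a real controller would normally include.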

Table 4 provides examples of AI algorithms used at different autonomy levels, from L0 to L5, highlighting key techniques and applications. We consider the percentage of systems using AI algorithms, algorithm types, examples of AI algorithms at each level, and the key tasks being automated at each level of autonomy. Note that at L0, the use of AI or learning algorithms is minimal; complete algorithms are not employed, although partial techniques such as data processing or detecting an object on the road may be used.

Table 4.

Statistics on AI algorithms in autonomous vehicles based on levels of automation.

The level of autonomy in an AV directly correlates with the size of its software package. Imagine a pyramid, with Level 0 at the base (smallest size) and Level 5 at the peak (largest size). Each level adds functionalities and complexities, reflected in the increasing size of the pyramid [69].

Challenges and Implications:

- Limited Storage and Processing Power: The existing onboard storage and processing capabilities might not yet be sufficient for larger Level 4 and 5 software packages [63].

- Download and Update Challenges: Updating these larger packages may require longer download times and potentially disrupt vehicle operation.

- Security Concerns: The more complex the software, the higher the potential vulnerability to cyberattacks, necessitating robust security measures.

AV software package size is a major challenge for developers like Nvidia and Qualcomm, as larger packages entail the following:

- Increased processing power and memory: This translates to higher hardware costs and potentially bulkier systems.

- Slower download and installation times: This can be frustrating for users, especially in areas with limited internet connectivity.

- Security concerns: Larger packages offer more attack vectors for potential hackers.

Here is how Nvidia and Qualcomm are tackling these challenges:

Nvidia:

- Drive Orin platform: Designed for high-performance AV applications, Orin features a scalable architecture that can handle large software packages.

- Software optimization techniques: Nvidia uses various techniques like code compression and hardware-specific optimizations to reduce software size without sacrificing performance.

- Cloud-based solutions: Offloading some processing and data storage to the cloud can reduce the size of the onboard software package [42].

Qualcomm:

- Snapdragon Ride platform: Similar to Orin, Snapdragon Ride is a scalable platform built for the efficient processing of large AV software packages.

- Heterogeneous computing: Qualcomm utilizes different processing units like CPUs (Central Processing Units), GPUs (Graphics Processing Units), and NPUs (Neural Processing Units) to optimize performance and reduce software size by distributing tasks efficiently.

- Modular software architecture: Breaking down the software into smaller, modular components allows for easier updates and reduces the overall package size [2].

Additional approaches:

- Standardization: Industry-wide standards for AV software can help reduce duplication and fragmentation, leading to smaller package sizes.

- Compression algorithms: Advanced compression algorithms can significantly reduce the size of data and code without compromising functionality.

- Machine learning: Using machine learning to optimize software performance and resource utilization can help reduce the overall software footprint.

The battle against the size of AV software packages is ongoing, and both Nvidia and Qualcomm are at the forefront of developing innovative solutions. As technology advances and these approaches mature, we can expect to see smaller, more efficient AV software packages that pave the way for a wider adoption of self-driving vehicles.

Here is a deeper dive, as shown in Table 4, into this relationship [70].

The level of autonomy directly influences the size of an AV’s software package. While higher levels offer greater convenience and potential safety benefits, they come with the challenge of managing increasingly complex and computationally intensive software packages that require more storage space than current processors can accommodate. Hence, the transformation towards zonal-based architectures [71] is desirable, with a small number of processors, each tasked with a particular function, providing the ample storage space needed for moving towards Level 5 while also supporting connected and automated vehicle concepts.

7. Conclusions

This paper provides a thorough examination of the significance of AI algorithms in autonomous vehicles, addressing shifts from rule-based systems to deep neural networks driven by enhanced model capabilities and computing power. It delineates the distinct requirements for trucks and cars, highlighting trucks’ focus on route optimization and fuel efficiency in contrast to cars’ emphasis on passenger comfort and urban adaptability. The progression from basic object detection to 3D mapping and adaptive behavior prediction is discussed, alongside challenges like limited storage, processing power, software updates, and security vulnerabilities at higher levels of autonomy. Key conclusions emphasize AI’s pivotal role in achieving varying levels of autonomous vehicle functionality, necessitating advanced techniques such as Deep Learning and Reinforcement Learning for complex decision-making. As autonomy levels increase, the software package size grows, which poses challenges for storage, processing, and updates, underscoring the need for efficient architectures and robust security measures. Despite the promising business potential, further research is urged on self-driving trucks to optimize logistics and address driver shortages.

While emerging technologies (e.g., quantum AI, transfer learning, meta-learning) fall beyond this paper’s scope, their potential integration within vehicles for tasks like edge computing or human behavior learning through transfer learning is acknowledged. Although trends in artificial intelligence are discussed, this paper acknowledges the existence of other platforms like NeurIPS [72] with potentially more publications. The exclusion of discussions on emerging trends is intentional: narrowing the scope helped us to maintain focus and present relevant studies for drawing meaningful conclusions, offering a clear picture of AI’s evolving landscape in autonomous vehicles and proposing future research directions for stakeholders invested in modern transportation dynamics.

Author Contributions

Conceptualization, D.G. and S.S.S.; methodology, D.G. and S.S.S.; investigation, D.G. and S.S.S.; resources, D.G. and S.S.S.; data curation, D.G. and S.S.S.; writing—original draft preparation, D.G. and S.S.S.; writing—review and editing, D.G. and S.S.S.; visualization, D.G. and S.S.S.; supervision, D.G. and S.S.S.; project administration, D.G. and S.S.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

Not applicable.

Conflicts of Interest

Author Sneha Sudhir Shetiya was employed by the company Torc Robotics Inc. The other author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

- Bordoloi, U.; Chakraborty, S.; Jochim, M.; Joshi, P.; Raghuraman, A.; Ramesh, S. Autonomy-driven Emerging Directions in Software-defined Vehicles. In Proceedings of the 2023 Design, Automation & Test in Europe Conference & Exhibition (DATE), Antwerp, Belgium, 17–19 April 2023; pp. 1–6. [Google Scholar] [CrossRef]

- Liu, Z.; Zhang, W.; Zhao, F. Impact, challenges and prospect of software-defined vehicles. Automot. Innov. 2022, 5, 180–194. [Google Scholar] [CrossRef]

- Nadikattu, R.R. New ways in artificial intelligence. Int. J. Comput. Trends Technol. 2019, 67. [Google Scholar] [CrossRef]

- Deng, Z.; Yang, K.; Shen, W.; Shi, Y. Cooperative Platoon Formation of Connected and Autonomous Vehicles: Toward Efficient Merging Coordination at Unsignalized Intersections. IEEE Trans. Intell. Transp. Syst. 2023, 24, 5625–5639. [Google Scholar] [CrossRef]

- Pugliese, A.; Regondi, S.; Marini, R. Machine Learning-based approach: Global trends, research directions, and regulatory standpoints. Data Sci. Manag. 2021, 4, 19–29. [Google Scholar] [CrossRef]

- SAE Industry Technologies Consortia’s Automated Vehicle Safety Consortium AVSC Best Practice for Describing an Operational Design Domain: Conceptual Framework and Lexicon, AVSC00002202004, Revised April, 2020. Available online: https://www.sae.org/standards/content/avsc00002202004/ (accessed on 28 January 2024).

- Khastgir, S.; Khastgir, S.; Vreeswijk, J.; Shladover, S.; Kulmala, R.; Alkim, T.; Wijbenga, A.; Maerivoet, S.; Kotilainen, I.; Kawashima, K.; et al. Distributed ODD Awareness for Connected and Automated Driving. Transp. Res. Procedia 2023, 72, 3118–3125. [Google Scholar] [CrossRef]

- Jack, W.; Jon, B. Navigating Tomorrow: Advancements and Road Ahead in AI for Autonomous Vehicles; (No. 11955); EasyChair: Manchester, UK, 2024. [Google Scholar]

- Lillo, L.D.; Gode, T.; Zhou, X.; Atzei, M.; Chen, R.; Victor, T. Comparative safety performance of autonomous-and human drivers: A real-world case study of the waymo one service. arXiv 2023, arXiv:2309.01206. [Google Scholar]

- Nordhoff, S.; Lee, J.D.; Hagenzieker, M.; Happee, R. (Mis-) use of standard Autopilot and Full Self-Driving (FSD) Beta: Results from interviews with users of Tesla’s FSD Beta. Front. Psychol. 2023, 14, 1101520.

- Wansley, M.T. Regulating Driving Automation Safety. Emory Law J. 2024, 73, 505.

- Anton, K.; Oleg, K. MBSE and Safety Lifecycle of AI-enabled systems in transportation. Int. J. Open Inf. Technol. 2023, 11, 100–104.

- Kang, H.; Lee, Y.; Jeong, H.; Park, G.; Yun, I. Applying the operational design domain concept to vehicles equipped with advanced driver assistance systems for enhanced safety. J. Adv. Transp. 2023, 2023, 4640069.

- Arthurs, P.; Gillam, L.; Krause, P.; Wang, N.; Halder, K.; Mouzakitis, A. A Taxonomy and Survey of Edge Cloud Computing for Intelligent Transportation Systems and Connected Vehicles. IEEE Trans. Intell. Transp. Syst. 2022, 23, 6206–6221.

- Murphey, Y.L.; Kolmanovsky, I.; Watta, P. (Eds.) AI-Enabled Technologies for Autonomous and Connected Vehicles; Springer: Berlin/Heidelberg, Germany, 2022.

- Vishnukumar, H.; Butting, B.; Müller, C.; Sax, E. Machine learning and deep neural network—Artificial intelligence core for lab and real-world test and validation for ADAS and autonomous vehicles: AI for efficient and quality test and validation. In Proceedings of the 2017 Intelligent Systems Conference (IntelliSys), London, UK, 7–8 September 2017; pp. 714–721.

- Bachute, M.R.; Subhedar, J.M. Autonomous driving architectures: Insights of machine learning and deep learning algorithms. Mach. Learn. Appl. 2021, 6, 100164.

- Ma, Y.; Wang, Z.; Yang, H.; Yang, L. Artificial intelligence applications in the development of autonomous vehicles: A survey. IEEE/CAA J. Autom. Sin. 2020, 7, 315–329.

- Bendiab, G.; Hameurlaine, A.; Germanos, G.; Kolokotronis, N.; Shiaeles, S. Autonomous Vehicles Security: Challenges and Solutions Using Blockchain and Artificial Intelligence. IEEE Trans. Intell. Transp. Syst. 2023, 24, 3614–3637.

- Chu, M.; Zong, K.; Shu, X.; Gong, J.; Lu, Z.; Guo, K.; Dai, X.; Zhou, G. Work with AI and Work for AI: Autonomous Vehicle Safety Drivers’ Lived Experiences. In Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems, Hamburg, Germany, 23–28 April 2023; pp. 1–16.

- Hwang, M.H.; Lee, G.S.; Kim, E.; Kim, H.W.; Yoon, S.; Talluri, T.; Cha, H.R. Regenerative braking control strategy based on AI algorithm to improve driving comfort of autonomous vehicles. Appl. Sci. 2023, 13, 946.

- Grigorescu, S.; Trasnea, B.; Cocias, C.; Macesanu, G. A Survey of Deep Learning Techniques for Autonomous Driving. arXiv 2020, arXiv:1910.07738v2.

- Le, H.; Wang, Y.; Gotmare, A.D.; Savarese, S.; Hoi, S.C.-H. CodeRL: Mastering code generation through pretrained models and deep reinforcement learning. Adv. Neural Inf. Process. Syst. 2022, 35, 21314–21328.

- Allamanis, M. Learning Natural Coding Conventions. In Proceedings of the SIGSOFT/FSE’14: 22nd ACM SIGSOFT Symposium on the Foundations of Software Engineering, Hong Kong, China, 16–21 November 2014; pp. 281–293.

- Sharma, O.; Sahoo, N.C.; Puhan, N.B. Recent advances in motion and behavior planning techniques for software architecture of autonomous vehicles: A state-of-the-art survey. Eng. Appl. Artif. Intell. 2021, 101, 104211.

- Yoo, D.H. The Ethics of Artificial Intelligence From an Economics Perspective: Logical, Theoretical, and Legal Discussions in Autonomous Vehicle Dilemma. Ph.D. Thesis, Università degli Studi di Siena, Siena, Italy, 2023.

- Khan, S.M.; Salek, M.S.; Harris, V.; Comert, G.; Morris, E.; Chowdhury, M. Autonomous Vehicles for All? J. Auton. Transp. Syst. 2023, 1, 3.

- Mensah, G.B. Artificial Intelligence and Ethics: A Comprehensive Review of Bias Mitigation, Transparency, and Accountability in AI Systems. 2023. Available online: https://www.researchgate.net/profile/George-Benneh-Mensah-2/publication/375744287_Artificial_Intelligence_and_Ethics_A_Comprehensive_Reviews_of_Bias_Mitigation_Transparency_and_Accountability_in_AI_Systems/links/656c8e46b86a1d521b2e2a16/Artificial-Intelligence-and-Ethics-A-Comprehensive-Reviews-of-Bias-Mitigation-Transparency-and-Accountability-in-AI-Systems.pdf (accessed on 26 January 2024).

- Stine, A.A.K.; Kavak, H. Bias, fairness, and assurance in AI: Overview and synthesis. In AI Assurance; Academic Press: Cambridge, MA, USA, 2023; pp. 125–151.

- Wang, C.; Liu, S.; Yang, H.; Guo, J.; Wu, Y.; Liu, J. Ethical considerations of using ChatGPT in health care. J. Med. Internet Res. 2023, 25, e48009.

- Prem, E. From ethical AI frameworks to tools: A review of approaches. AI Ethics 2023, 3, 699–716.

- Hase, P.; Bansal, M. Evaluating explainable AI: Which algorithmic explanations help users predict model behavior? arXiv 2020, arXiv:2005.01831.

- Kumar, A. Exploring Ethical Considerations in AI-driven Autonomous Vehicles: Balancing Safety and Privacy. J. Artif. Intell. Gen. Sci. (JAIGS) 2024, 2, 125–138.

- Vogel, M.; Bruckmeier, T.; Cerbo, F.D. General Data Protection Regulation (GDPR) Infrastructure for Microservices and Programming Model. U.S. Patent 10839099, 17 November 2020.

- Mökander, J.; Floridi, L. Operationalising AI governance through ethics-based auditing: An industry case study. AI Ethics 2023, 3, 451–468.

- Biswas, A.; Wang, H.-C. Autonomous Vehicles Enabled by the Integration of IoT, Edge Intelligence, 5G, and Blockchain. Sensors 2023, 23, 1963.

- Wang, S.; Lyu, B.; Wen, S.; Shi, K.; Zhu, S.; Huang, T. Robust adaptive safety-critical control for unknown systems with finite-time element-wise parameter estimation. IEEE Trans. Syst. Man Cybern. Syst. 2023, 53, 1607–1617.

- Madala, R.; Vijayakumar, N.; Verma, S.; Chandvekar, S.D.; Singh, D.P. Automated AI research on cyber attack prediction and security design. In Proceedings of the 2023 6th International Conference on Contemporary Computing and Informatics (IC3I), Gautam Buddha Nagar, India, 14–16 September 2023; pp. 1391–1395.

- Nishant, R.; Kennedy, M.; Corbett, J. Artificial intelligence for sustainability: Challenges, opportunities, and a research agenda. Int. J. Inf. Manag. 2020, 53, 102104.

- Yu, J.; Zhou, X.; Liu, X.; Liu, J.; Xie, Z.; Zhao, K. Detecting multi-type self-admitted technical debt with generative adversarial network-based neural networks. Inf. Softw. Technol. 2023, 158, 107190.

- Zhuhadar, L.P.; Lytras, M.D. The Application of AutoML Techniques in Diabetes Diagnosis: Current Approaches, Performance, and Future Directions. Sustainability 2023, 15, 13484.

- Marie, L. NVIDIA Enters Production with DRIVE Orin, Announces BYD and Lucid Group as New EV Customers, Unveils Next-Gen DRIVE Hyperion AV Platform. Published by Nvidia Newsroom, Press Release, 2022. Available online: https://nvidianews.nvidia.com/news/nvidia-enters-production-with-drive-orin-announces-byd-and-lucid-group-as-new-ev-customers-unveils-next-gen-drive-hyperion-av-platform (accessed on 26 January 2024).

- Tharakram, K. Snapdragon Ride SDK: A Premium Platform for Developing Customizable ADAS Applications. Published by Qualcomm OnQ Blog. 2022. Available online: https://www.qualcomm.com/news/onq/2022/01/snapdragon-ride-sdk-premium-solution-developing-customizable-adas-and-autonomous (accessed on 26 January 2024).

- Garikapati, D.; Liu, Y. Dynamic Control Limits Application Strategy For Safety-Critical Autonomy Features. In Proceedings of the IEEE 25th International Conference on Intelligent Transportation Systems (ITSC), Macau, China, 8–12 October 2022; pp. 695–702.

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

- Kumar, A.; Srivastava, S. Object detection system based on convolution neural networks using single shot multi-box detector. Procedia Comput. Sci. 2020, 171, 2610–2617.

- Madridano, A.; Al-Kaff, A.; Martín, D.; Escalera, A.D.L. Trajectory planning for multi-robot systems: Methods and applications. Expert Syst. Appl. 2021, 173, 114660.

- Guo, F.; Wang, S.; Yue, B.; Wang, J. A deformable configuration planning framework for a parallel wheel-legged robot equipped with lidar. Sensors 2020, 20, 5614.

- Borase, R.P.; Maghade, D.K.; Sondkar, S.Y.; Pawar, S.N. A review of PID control, tuning methods and applications. Int. J. Dyn. Control. 2021, 9, 818–827.

- Tuli, S.; Mirhakimi, F.; Pallewatta, S.; Zawad, S.; Casale, G.; Javadi, B.; Yan, F.; Buyya, R.; Jennings, N.R. AI augmented Edge and Fog computing: Trends and challenges. J. Netw. Comput. Appl. 2023, 216, 103648.

- Elkhediri, S.; Benfradj, A.; Thaljaoui, A.; Moulahi, T.; Zeadally, S. Integration of Artificial Intelligence (AI) with sensor networks: Trends and future research opportunities. J. King Saud Univ.-Comput. Inf. Sci. 2023, 36, 101892.

- Institute of Electrical and Electronics Engineers (IEEE) Xplore. AI/ML Publications (2014–2023). Available online: https://ieeexplore.ieee.org/Xplore/home.jsp (accessed on 28 January 2024).

- Multidisciplinary Digital Publishing Institute (MDPI). AI/ML Publications (2014–2023). Available online: https://www.mdpi.com/ (accessed on 28 January 2024).

- Society of Automotive Engineers (SAE) International. AI/ML Publications (2014–2023). Available online: https://www.sae.org/publications (accessed on 28 January 2024).

- Science Direct. AI/ML Publications (2014–2023). Available online: https://www.sciencedirect.com/ (accessed on 28 January 2024).

- Fritschy, C.; Spinler, S. The impact of autonomous trucks on business models in the automotive and logistics industry—A Delphi-based scenario study. Technol. Forecast. Soc. Chang. 2019, 148, 119736.

- Engholm, A.; Björkman, A.; Joelsson, Y.; Kristoffersson, I.; Pernestål, A. The emerging technological innovation system of driverless trucks. Transp. Res. Procedia 2020, 49, 145–159.

- Parekh, D.; Poddar, N.; Rajpurkar, A.; Chahal, M.; Kumar, N.; Joshi, G.P.; Cho, W. A Review on Autonomous Vehicles: Progress, Methods and Challenges. Electronics 2022, 11, 2162.

- Kong, D.; Sun, L.; Li, J.L.; Xu, Y. Modeling cars and trucks in the heterogeneous traffic based on car–truck combination effect using cellular automata. Phys. A Stat. Mech. Appl. 2021, 562, 125329.

- Zeb, A.; Khattak, K.S.; Ullah, M.R.; Khan, Z.H.; Gulliver, T.A. HetroTraffSim: A macroscopic heterogeneous traffic flow simulator for road bottlenecks. Future Transp. 2023, 3, 368–383.

- Qian, Z.S.; Li, J.; Li, X.; Zhang, M.; Wang, H. Modeling heterogeneous traffic flow: A pragmatic approach. Transp. Res. Part B Methodol. 2017, 99, 183–204.

- Andersson, P.; Ivehammar, P. Benefits and costs of autonomous trucks and cars. J. Transp. Technol. 2019, 9, 121–145.

- Lee, S.; Cho, K.; Park, H.; Cho, D. Cost-Effectiveness of Introducing Autonomous Trucks: From the Perspective of the Total Cost of Operation in Logistics. Appl. Sci. 2023, 13, 10467.

- SAE International. J3016_202104: Taxonomy and Definitions for Terms Related to Driving Automation Systems for On-Road Motor Vehicles. Revised April 2021. Available online: https://www.sae.org/standards/content/j3016_202104/ (accessed on 28 January 2024).

- Chen, D.; Lin, Y.; Li, W.; Li, P.; Zhou, J.; Sun, X. Measuring and relieving the over-smoothing problem for graph neural networks from the topological view. Proc. AAAI Conf. Artif. Intell. 2020, 34, 3438–3445.

- Bojarski, M.; Testa, D.D.; Dworakowski, D.; Firner, B.; Flepp, B.; Goyal, P.; Jackel, D.L.; Monfort, M.; Muller, U.; Zhang, J.; et al. End-to-End Deep Learning for Self-Driving Cars. arXiv 2016, arXiv:1604.07316.

- Zhou, H.; Zhou, A.L.J.; Wang, Y.; Wu, W.; Qing, Z.; Peeta, S. Review of learning-based longitudinal motion planning for autonomous vehicles: Research gaps between self-driving and traffic congestion. Transp. Res. Rec. 2022, 2676, 324–341.

- Norouzi, A.; Heidarifar, H.; Borhan, H.; Shahbakhti, M.; Koch, C.R. Integrating machine learning and model predictive control for automotive applications: A review and future directions. Eng. Appl. Artif. Intell. 2023, 120, 105878.

- Kummetha, V.C.; Kondyli, A.; Schrock, S.D. Analysis of the effects of adaptive cruise control on driver behavior and awareness using a driving simulator. J. Transp. Saf. Secur. 2020, 12, 587–610.

- Koopman, P.; Wagner, M. Challenges in Autonomous Vehicle Testing and Validation. SAE Int. J. Transp. Saf. 2016, 4, 15–24.

- Bosich, D.; Chiandone, M.; Sulligoi, G.; Tavagnutti, A.A.; Vicenzutti, A. High-Performance Megawatt-Scale MVDC Zonal Electrical Distribution System Based on Power Electronics Open System Interfaces. IEEE Trans. Transp. Electrif. 2023, 9, 4541–4551.

- Neural Information Processing Systems (NeurIPS). AI/ML Publications (2014–2023). Available online: https://nips.cc/ (accessed on 28 January 2024).