1. Introduction

The significant growth of the population, economic activities, and living standards has led to an increase in electricity demand, creating the need for greater electricity production [1]. Load forecasting (LF) is a critical component of power system management due to the unpredictable and inconsistent nature of load demand [2]. LF aims to predict future load demands based on current and historical data [3]. LF is commonly classified into three distinct types. The first category, short-term load forecasting (STLF), involves predicting energy demand within a timeframe spanning from a few hours to several days [4,5]. The second category, known as mid-term load forecasting, anticipates energy demand from one week to several months and occasionally extends to a year [6]. The third category, long-term load forecasting, focuses on predicting energy consumption over a timeframe exceeding a year [7]. While short- and mid-term forecasting are instrumental for efficient system operation management, long-term electricity demand forecasting facilitates the development of power system infrastructure [8]. Accurate load forecasting enables more effective planning for constructing distribution and transmission networks, leading to substantial reductions in investment costs [9]. The power grid system is becoming more complex and unstable due to the penetration of distributed renewable energy sources (DRES). Addressing this challenge necessitates dynamic operation and control. STLF plays a pivotal role in the context of advanced power grid systems. Leveraging the vast amount of data generated by smart grid infrastructure allows for the precise estimation of energy demand, contributing to enhanced management of energy distribution, economy, and security. Furthermore, STLF also aids in the balancing of energy supply and demand, helping grid operators avert issues such as system imbalances and power outages.

First-generation LF algorithms encompass statistical and machine learning (ML) methods such as regression, wavelet transform (WT), support vector machine (SVM), Random Forest (RF), autoregressive-moving average (ARMA), autoregressive-integrated moving average (ARIMA), among others [10]. In a study [11] for the Greek Electric Network Grid, the authors proposed an STLF model utilizing SVM, ensemble XGBoost, RF, k-nearest neighbours (KNN), neural networks (NN), and decision trees (DT) based on historical meteorological parameters. This model demonstrated a 4.74% decrease in prediction error compared to industry predictions in Greece, using mean absolute percentage error (MAPE) as a performance metric. The study by Srivastava et al. [12] aims to improve STLF accuracy for the Australian electricity market. It proposes a novel hybrid feature selection (HFS) algorithm that combines an elitist genetic algorithm (EGA) with a random forest method to select the most relevant features, and then uses the M5P forecaster for prediction. The study found that HFS-selected features consistently outperformed larger feature sets and that the M5P forecaster with HFS was more accurate than other bagging approaches. Phyo et al. [13] introduced an advanced ML-based bagging ensemble model that integrates linear regression (LR) and support vector regression (SVR). Their training utilized a two-year dataset from five distinct regions. In contrast to our approach, they focused on predicting the net load demand for these regions. The ensemble model they proposed exhibited performance closely aligned with baseline DL methods. The study underscored that temperature might not consistently serve as a reliable feature for load prediction, as their findings indicated that incorporating temperature did not contribute to increased accuracy. Another study by Yao et al. [14] employed the maximal information coefficient (MIC) to screen and select feature sets, including climate and delayed load data, for load prediction using LightGBM and XGBoost models. The proposed MOEC-LGB-XGb model outperformed RF, ARIMA, and SVR models on a two-year historical demand dataset from Northwest China. ML models exhibit superior performance with linear data but face challenges with highly non-linear datasets, such as real-world power system demand data. To address non-linearities, Ribeiro et al. [15] separated trend, seasonality, and residual components using locally weighted regression and applied variational mode decomposition (VMD) to the residual data. They employed an ensemble of ML algorithms to optimize XGBoost model hyperparameters. In a comparative study by Tarmanini et al. [10], load forecasting was performed using both ARIMA and artificial neural network (ANN). The ANN method demonstrated lower error (MAPE) and a regression factor (R) closer to 1, indicating superior performance compared to ARIMA. Ibrahim et al. [16] utilized various ML and deep learning (DL) algorithms, including XGBoost, AdaBoost, SVR, and ANN, for 24 h ahead predictions, with ANN exhibiting superior performance in terms of MAPE, RMSE, and

, despite longer training times and higher computational expenses for DL algorithms.

Although the first-generation methods were successful in the past, researchers continue to utilize them for feature extraction purposes [

17,

18]. In recent years, ANN-based models have been widely adopted, primarily due to their proficiency in processing non-linear data. Recurrent neural network (RNN) and convolutional neural network (CNN) have proven particularly effective in handling time series data. Notably, RNN, unlike traditional ANN, possesses the capability to remember and manage temporal sequences. The use of attention-based RNN for electrical load prediction, as discussed in [

19], is noteworthy; however, the model’s precision diminishes with an extended prediction interval. The development of a long short-term memory (LSTM) model, which permits the network to maintain long-term dependencies, has resolved the vanishing gradient problem in RNN [

20]. The study in [

21] introduces an LSTM-based model for STLF, using both single and multi-step predictions. However, an increase in the size of the look-back window results in decreased prediction accuracy. Another alternative for time series forecasting is the Gated Recurrent Unit network (GRU), which exhibits shorter execution times than LSTM by consolidating forget and input gates into a single update gate [

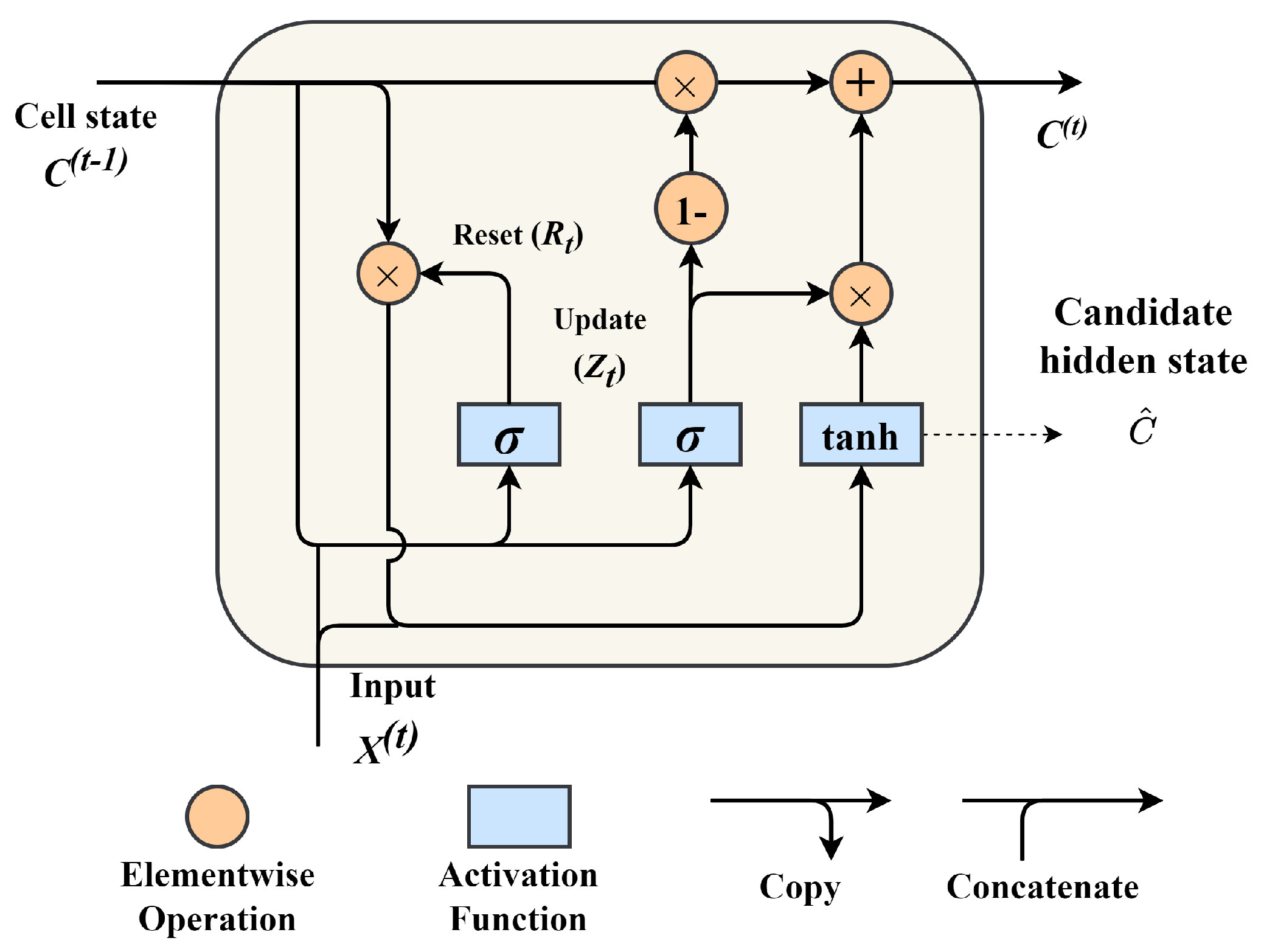

22]. In [

23], Ijaz et al. propose an ANN-LSTM model for predicting hour-ahead load demand, where the ANN functions as a temporal feature extractor. The proposed model, evaluated against CNN-LSTM, outperforms the latter. The dataset comprises two years of hourly demand data for a city region, considering various features such as temperature, humidity, and holidays. Wang et al. in [

17] suggested using variational mode decomposition (VMD), empirical mode decomposition (EMD), and empirical wavelet transform (EWT) to convert time-domain demand data into the frequency domain. The processed data are then passed to a Bi-LSTM layer before signal reconstruction at the output. However, this method comes with a more extended training period due to extensive preprocessing and challenges in optimizing hyperparameters. In their study, Abumohsen et al. [

24] employed RNN, LSTM, and GRU models to conduct STLF using a real-world power system dataset from Palestine. The electrical load dataset was collected from SCADA at one-minute intervals over the course of a year. The research highlighted the superior performance of the GRU model compared to other RNN variants. It also illustrated that datasets with fewer intervals resulted in higher accuracy.
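To make the role of the update gate concrete, the following is a minimal sketch of a single GRU time step in plain Python. It is illustrative only and is not taken from any of the cited studies: the function names (`gru_step`, `affine`, `zeros`), the parameter layout, and the convention `h_new = (1 - z) * h + z * h_candidate` are assumptions, and some formulations swap the roles of `z` and `1 - z`.

```python
import math


def sigmoid(x):
    """Logistic function used by both gates."""
    return 1.0 / (1.0 + math.exp(-x))


def affine(W, x, U, h, b):
    """Return W @ x + U @ h + b for small list-based matrices."""
    return [
        sum(w * xi for w, xi in zip(W[j], x))
        + sum(u * hi for u, hi in zip(U[j], h))
        + b[j]
        for j in range(len(b))
    ]


def gru_step(x, h, params):
    """One GRU step (hypothetical parameter layout, for illustration).

    params = (Wz, Uz, bz, Wr, Ur, br, Wh, Uh, bh)
    """
    Wz, Uz, bz, Wr, Ur, br, Wh, Uh, bh = params
    # Update gate: a single gate decides how much old state to keep
    # and how much new candidate state to write.
    z = [sigmoid(v) for v in affine(Wz, x, Uz, h, bz)]
    # Reset gate: controls how much of the old state feeds the candidate.
    r = [sigmoid(v) for v in affine(Wr, x, Ur, h, br)]
    reset_h = [ri * hi for ri, hi in zip(r, h)]
    candidate = [math.tanh(v) for v in affine(Wh, x, Uh, reset_h, bh)]
    # Assumed convention: blend old state and candidate with z.
    return [(1.0 - zi) * hi + zi * ci for zi, hi, ci in zip(z, h, candidate)]


def zeros(rows, cols):
    """Helper that builds a rows x cols matrix of zeros."""
    return [[0.0] * cols for _ in range(rows)]


if __name__ == "__main__":
    hidden, inputs = 2, 1
    params = (
        zeros(hidden, inputs), zeros(hidden, hidden), [0.0] * hidden,
        zeros(hidden, inputs), zeros(hidden, hidden), [0.0] * hidden,
        zeros(hidden, inputs), zeros(hidden, hidden), [0.0] * hidden,
    )
    print(gru_step([3.0], [1.0, -2.0], params))
```

With all weights set to zero, both gates evaluate to 0.5 and the candidate state is zero, so the step simply halves the previous hidden state. An LSTM would need separate forget and input gates (plus a cell state) to express the same blend, which is one reason a GRU has fewer parameters per unit.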

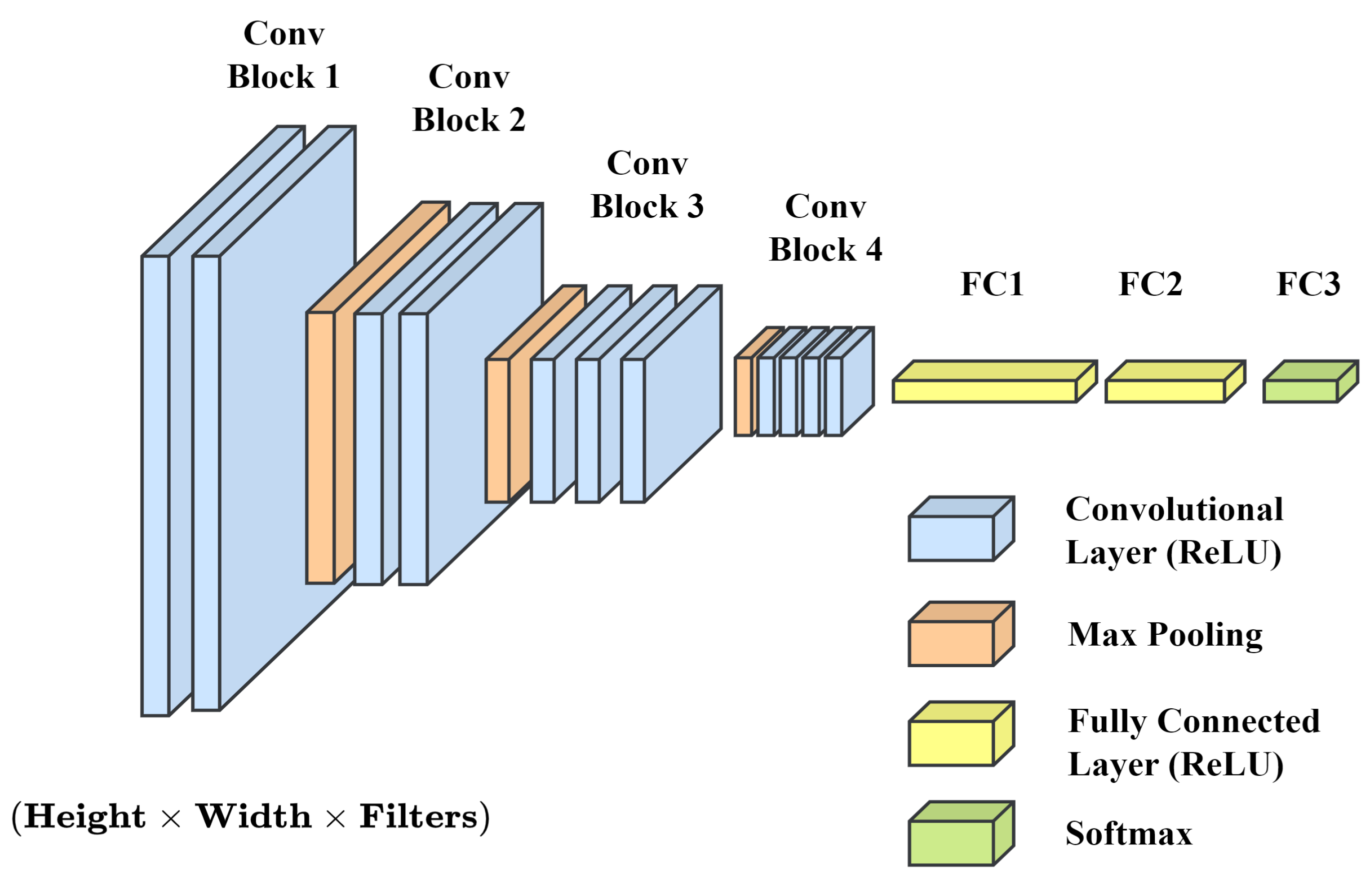

CNN, on the other hand, can learn spatial pattern hierarchies that are translation-invariant. Time series models alone cannot effectively handle various types of high-dimensional data in the power system, including spatiotemporal matrices and image information, whereas the CNN is considered the optimal choice for processing such high-dimensional data [25]. In a study by Amarasinghe et al. [26], the effectiveness of CNNs for load forecasting in individual buildings was explored, yielding outcomes comparable to LSTM. While CNNs are proficient in extracting spatial information, they are less effective at capturing temporal information. In contrast, RNNs specialize in learning temporal patterns. Recognizing the strengths of both architectures, researchers have introduced hybrid approaches combining CNNs and RNNs to enhance STLF accuracy [27,28]. To predict the performance of a smart grid system located in Saudi Arabia, different hybrid DL models were used in [29], with CNN-GRU achieving the highest forecasting accuracy. Haque et al. [27] used 1D CNN as a preprocessing step before LSTM to predict week-ahead load data, demonstrating the efficiency of CNN as a feature extractor for sequence learning. This hybrid model outperformed the LSTM and GRU models used on their own. Sekhar et al. [30] utilized a combination of bidirectional LSTM and CNN to forecast short-term building energy demand. They employed Grey Wolf Optimization (GWO) to optimize the parameters for their proposed method. The research revealed that their approach demonstrated superior performance compared to unidirectional LSTM, CNN, and the CNN-LSTM hybrid method. However, the study did not investigate the impact of additional features, such as temperature and weekday, on the predictive performance. Similarly, in [7], the combination of genetic algorithm (GA) and bidirectional gated recurrent unit (Bi-GRU) was proposed for STLF in Bangladesh, outperforming other techniques with a minimum decrease of 18.13% and 19.82% in RMSE and MAPE, respectively. Another hybrid method, proposed by Chen et al. [31], combines Residual Neural Network (ResNet) and LSTM to accurately forecast short-term load for Queensland, Australia. However, the proposed model architecture is more computationally expensive and requires longer training and inference times.
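The idea of using a 1D CNN as a feature extractor ahead of a recurrent layer can be sketched in a few lines of plain Python. This is a toy illustration under stated assumptions, not the architecture of any cited study: the kernels are hand-picked rather than learned, and the function names (`conv1d_valid`, `relu`, `extract_features`) are hypothetical.

```python
def conv1d_valid(series, kernel):
    """Slide the kernel over the series without padding (cross-correlation)."""
    k = len(kernel)
    return [
        sum(kernel[j] * series[i + j] for j in range(k))
        for i in range(len(series) - k + 1)
    ]


def relu(values):
    """Element-wise rectified linear activation."""
    return [max(0.0, v) for v in values]


def extract_features(series, kernels):
    """Return one activated feature sequence per kernel.

    In a hybrid model, these sequences (one channel per kernel) would be
    passed on to a recurrent layer such as LSTM or GRU.
    """
    return [relu(conv1d_valid(series, kernel)) for kernel in kernels]


if __name__ == "__main__":
    demand = [10.0, 12.0, 11.0, 15.0]   # made-up daily demand values
    kernels = [
        [0.5, 0.5],    # local average (smoothing)
        [-1.0, 1.0],   # day-over-day increase
    ]
    for channel in extract_features(demand, kernels):
        print(channel)
```

Each kernel responds to a different local pattern (level versus upward change here), so the recurrent layer receives a richer, multi-channel sequence than the raw demand alone. In a trained network the kernel weights are learned from data rather than fixed.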

Despite the success of mainstream algorithms like CNNs and RNNs, they face challenges in completely overcoming gradient vanishing limitations, making it difficult to capture very long-term dependencies. Transformer-based algorithms, initially developed for machine translation, are gaining prominence as state-of-the-art solutions in sequence learning tasks. Qu et al. [32] proposed a day-ahead load forecasting method using Forwardformer, a Transformer architecture variant incorporating multi-scale forward self-attention (MSFSA). Their model, adopting an encoder–dual decoder architecture instead of the conventional encoder–decoder model, outperforms other transformers, Facebook Prophet, and sequence models [32]. In a hybrid architecture introduced by Ran et al. [18], Complete Ensemble Empirical Mode Decomposition with Adaptive Noise (CEEMDAN), sample entropy (SE), and Transformer are combined. The decomposition algorithm reduces the non-stationary components of the data, while SE minimizes the complexity of each decomposed element. This hybrid transformer architecture demonstrated excellent performance in predictions ranging from 4 to 24 h. Transfer learning has also recently gained a lot of interest in LF. Yuan et al. [33] presented a pre-trained model based on CNN-LSTM with attention to predict buildings’ peak energy demand and total energy consumption. The proposed model outperformed direct learning algorithms such as ANN, RF, and LSTM. However, due to the dependence of large-scale power systems on geographic and demographic information, utilizing a source domain dataset for the target domain proves challenging.

Table 1 provides a brief overview of recent studies in STLF along with their limitations. Most previous research on STLF has focused on predicting electricity demand at minute-long, hourly, or daily intervals. While this approach is advantageous for real-time scenarios with rapid fluctuations in demand, weekly forecasting presents certain benefits, including improved maintenance planning, enhanced system operation, and more effective resource management [

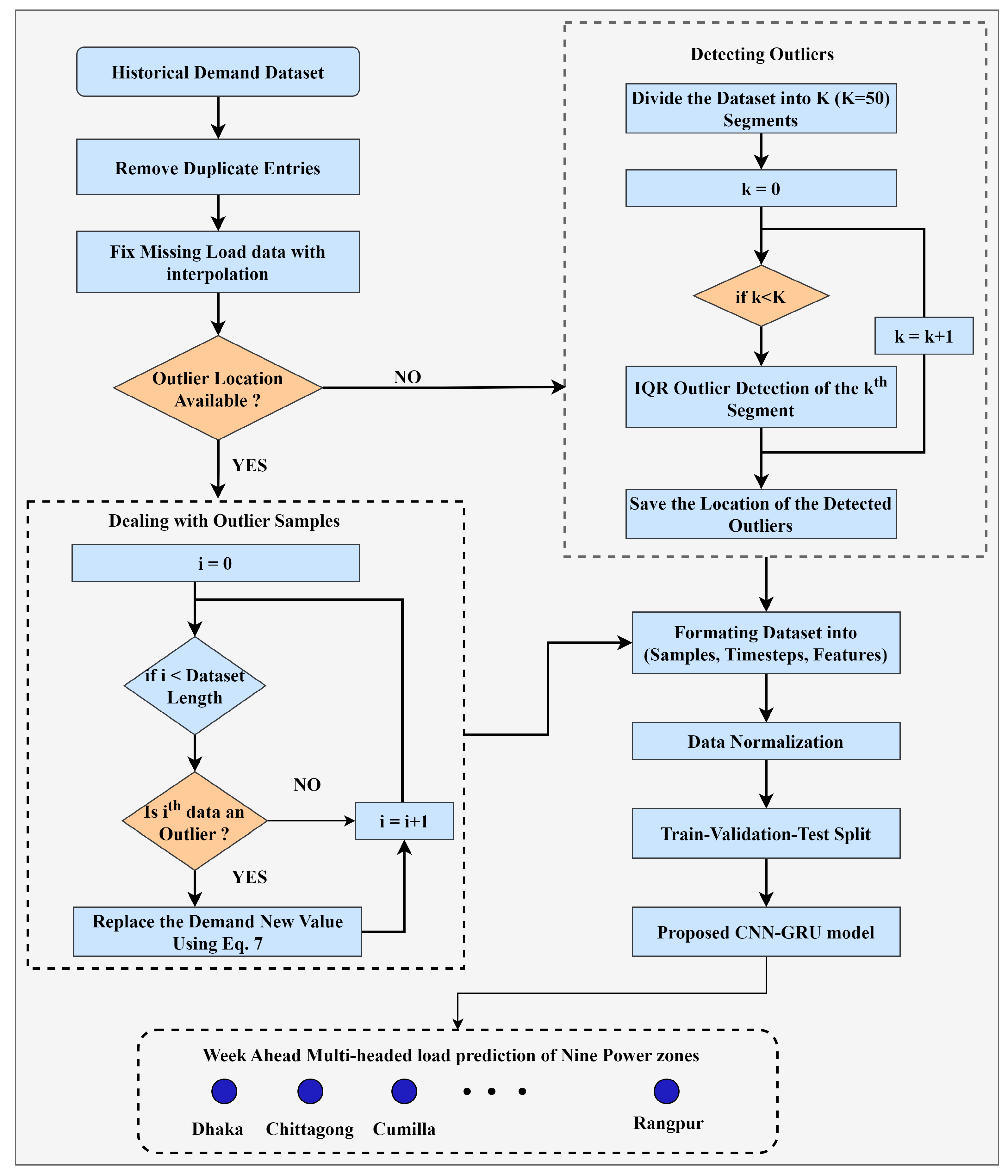

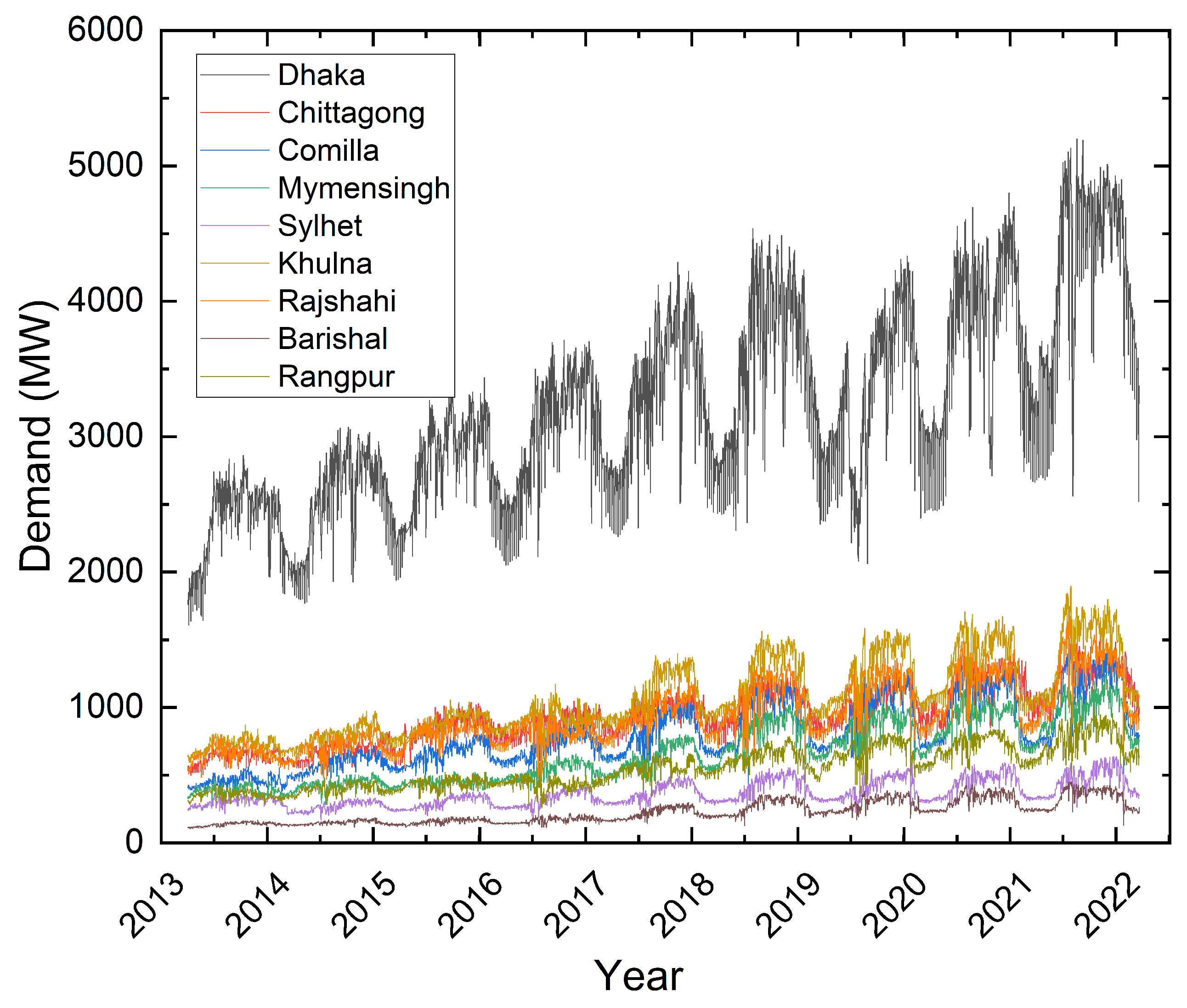

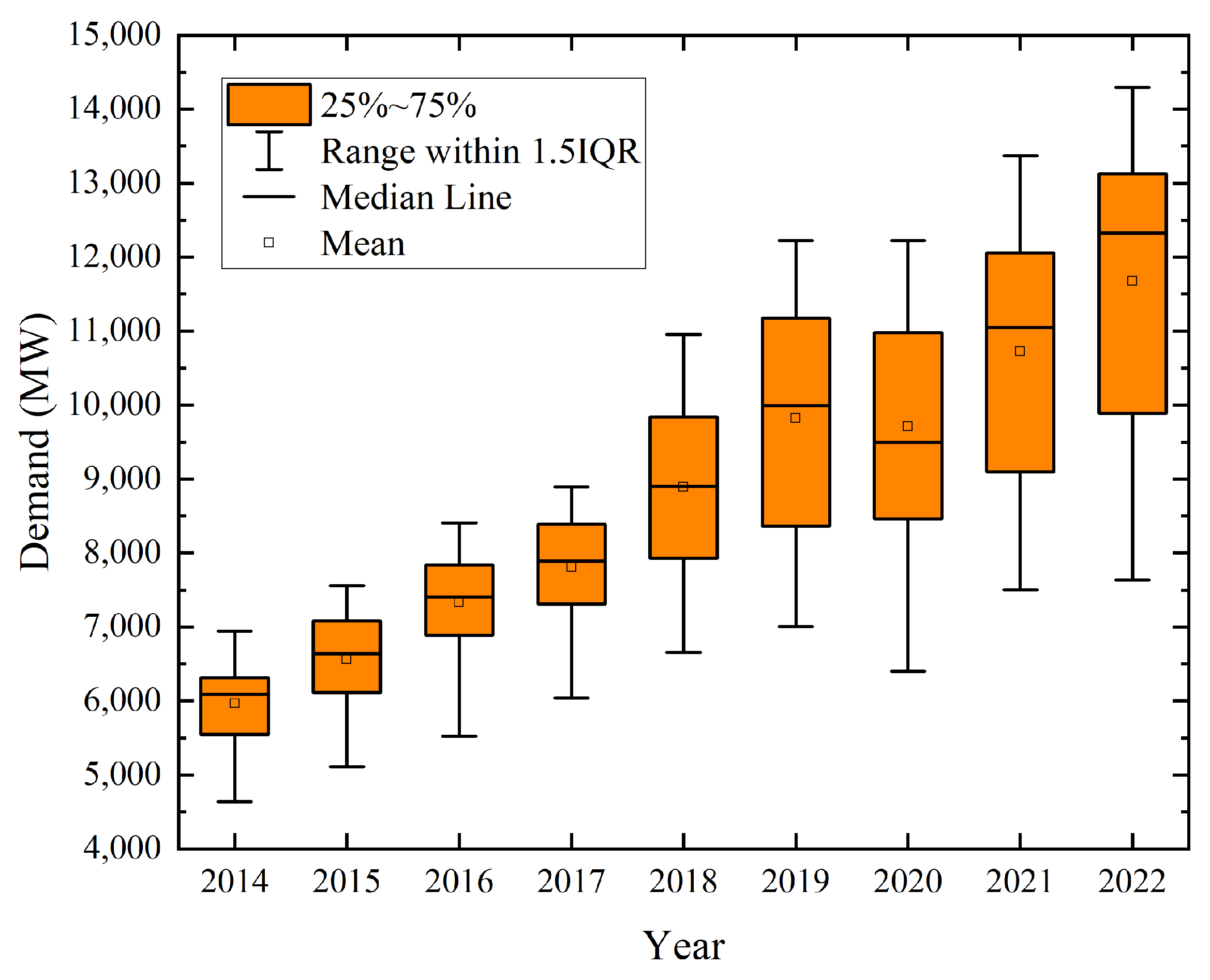

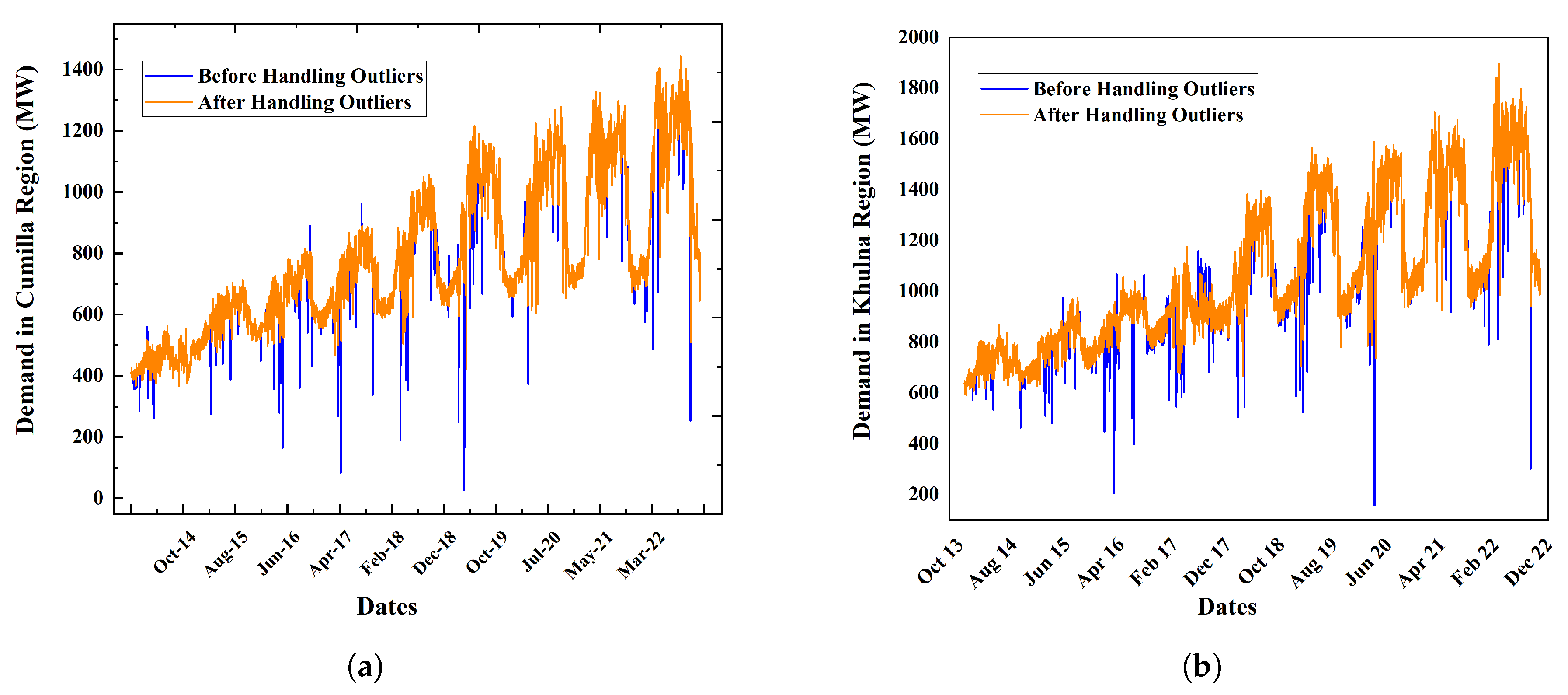

1]. Moreover, after reviewing the existing literature, we have identified that the majority of the past studies focused on predicting load demand in particular regions. A study is yet to be done to forecast loads from different locations simultaneously that can cover a country’s total load demand. Bangladesh has an installed power capacity of 25 GW with a maximum demand of 21 GW. The country is divided into nine power zones—Barishal, Chattogram, Dhaka, Khulna, Rajshahi, Rangpur, Mymensingh, Cumilla, and Sylhet—each having unique demographic and economic characteristics, resulting in varying load demands. The National Load Dispatch Centre (NLDC) manages power distribution and generation throughout the country. However, they use traditional statistical methods to estimate load demand. The economic stakes of even minor STLF errors are high in developing nations, driving the need for further research. In this study, we propose a novel STLF method based on CNN-GRU to predict the week-ahead load demand of different power zones that cover the entire country simultaneously. Our system offers improved accuracy, enhanced reliability, effective resource allocation, better planning, and cost savings. Our proposal is also compared with other state-of-the-art techniques, including LSTM, GRU, CNN-LSTM, Transformer, CNN-Transformer, and LSTM-Transformer. Our contributions are as follows:

We have developed a novel STLF model based on a CNN-GRU hybrid architecture that can simultaneously forecast the week-ahead load demand of nine different power zones of Bangladesh. The proposed model can be trained to make predictions for all of the locations at the same time, instead of building a separate model for each one, which would require considerably more time and computational power.

The performance of the proposed model is compared with six other DL approaches including three Transformer-based models.

We have prepared our historical demand dataset from the PGCB website and, based on these data, we have created our own interpolated data. The raw collected dataset along with the clean and interpolated demand data are made publicly available, which is missing in most research works (https://github.com/gcsarker/Multiple-Regions-STLF, accessed on 20 October 2023).
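The first contribution, one model that forecasts all zones at once, implies that each training sample pairs a multi-zone history with a multi-zone week-ahead target. The sketch below shows one way such samples could be cut from a daily demand series. It is an assumption-laden illustration, not the authors' preprocessing: the function name `make_windows`, the look-back length of 14 days, and the synthetic data are all hypothetical, and only the seven-day horizon and the nine zones come from the text.

```python
def make_windows(series, lookback, horizon=7):
    """Cut a multi-zone daily series into (input, target) pairs.

    series   : list of daily rows; each row holds one demand value per zone
    lookback : number of past days given to the model (assumed value)
    horizon  : number of future days to predict (7 for week-ahead)
    """
    inputs, targets = [], []
    last_start = len(series) - lookback - horizon
    for start in range(last_start + 1):
        split = start + lookback
        inputs.append(series[start:split])            # lookback x zones
        targets.append(series[split:split + horizon])  # horizon x zones
    return inputs, targets


if __name__ == "__main__":
    zones = 9
    days = 30
    # Synthetic placeholder data: demand[d][z] is NOT real PGCB data.
    demand = [[100.0 * (z + 1) + d for z in range(zones)] for d in range(days)]

    X, y = make_windows(demand, lookback=14, horizon=7)
    print(len(X), len(X[0]), len(X[0][0]))  # samples, lookback days, zones
    print(len(y), len(y[0]), len(y[0][0]))  # samples, horizon days, zones
```

Because every target row keeps all nine zone values, a single network with a nine-wide output per forecast day can be trained on these pairs, rather than fitting nine separate single-zone models.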

Table 1. Review and limitations of recent studies.

| Authors | Objectives | Dataset | Model Used | Limitations |
|---|---|---|---|---|
| Ribeiro et al. [34] | 12 and 24 h ahead LF | Electricity demand for five Australian regions in 2019 (not open) | Ensemble ML | |
| Yuan et al. [33] | STLF based on transfer learning with attention | Two years of load data from a large-scale shopping mall | Pretrained CNN-LSTM with attention | |
| Haque et al. [27] | Weekly LF with hybrid DL model | PGCB electricity demand dataset from Mymensingh, Bangladesh (open) | CNN-LSTM | |
| Inteha et al. [7] | Day-ahead LF | PGCB total demand dataset of Bangladesh (not open) | GA-BiGRU | |
| Tarmanini et al. [10] | Household STLF | Hourly demand dataset of 709 households in Ireland (not open) | ARIMA, ANN | |
| Wang et al. [17] | STLF based on wavelet transform and NN | Household-level smart meter data | VMD, EMD, EWT, LSTM | |
| Ran et al. [18] | STLF based on CEEMDAN and Transformer | New York City demand dataset (open) | CEEMDAN-SE-Transformer | |
| Srivastava et al. [12] | Day-ahead load forecast | Half-hourly dataset of New South Wales, Australia from Australian Energy Market Operator (AEMO) | M5P + HFS (EGA and RF) | |
| Chen et al. [31] | STLF with weather parameter forecasting | Four-year historical demand dataset from Queensland, Australia (open) | ResNet + LSTM | |
| Abumohsen et al. [24] | STLF | One-year minute-wise electrical load demand dataset from Palestine | RNN, LSTM, GRU | The justification for the choices of the simulated models is missing. Relying on training and evaluating with data from just a single year may not adequately capture the increasing demand trend for future years. Lacks specification of the forecast horizon. |
| Proposed | Week-ahead LF in multiple zones simultaneously | PGCB daily load demand of Bangladesh (open) | CNN-GRU | - |

4. Results

In this section, we discuss the model performances on the test observations. The proposed technique in this study simultaneously predicts week-ahead demand in all divisions in Bangladesh. To evaluate the performance of our forecasting model, we have used root mean squared error (RMSE) and mean absolute percentage error (MAPE). Equations (10) and (11) give the mathematical formulation for RMSE and MAPE, respectively, where $y_i$ and $\hat{y}_i$ are the actual and predicted values of N samples. These metrics are widely used for their effectiveness in assessing the accuracy of forecasting results.
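As a concrete reference for the two metrics, the following sketch computes RMSE and MAPE from their standard definitions. It assumes MAPE is expressed as a fraction rather than a percentage, which is consistent with values such as 0.0544 reported in this section; the function names and the sample numbers are illustrative only and are not taken from the study's data.

```python
import math


def rmse(actual, predicted):
    """Root mean squared error: sqrt of the mean squared difference."""
    n = len(actual)
    return math.sqrt(sum((a - p) ** 2 for a, p in zip(actual, predicted)) / n)


def mape(actual, predicted):
    """Mean absolute percentage error, returned as a fraction (assumed).

    Undefined when any actual value is zero.
    """
    n = len(actual)
    return sum(abs((a - p) / a) for a, p in zip(actual, predicted)) / n


if __name__ == "__main__":
    actual = [100.0, 200.0]       # made-up demand values
    predicted = [110.0, 180.0]    # made-up forecasts
    print(round(rmse(actual, predicted), 4))
    print(round(mape(actual, predicted), 4))
```

RMSE is in the same unit as the demand and therefore scales with the size of a zone, while MAPE is scale-free. This is why a large zone can show the highest RMSE without showing the highest MAPE.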

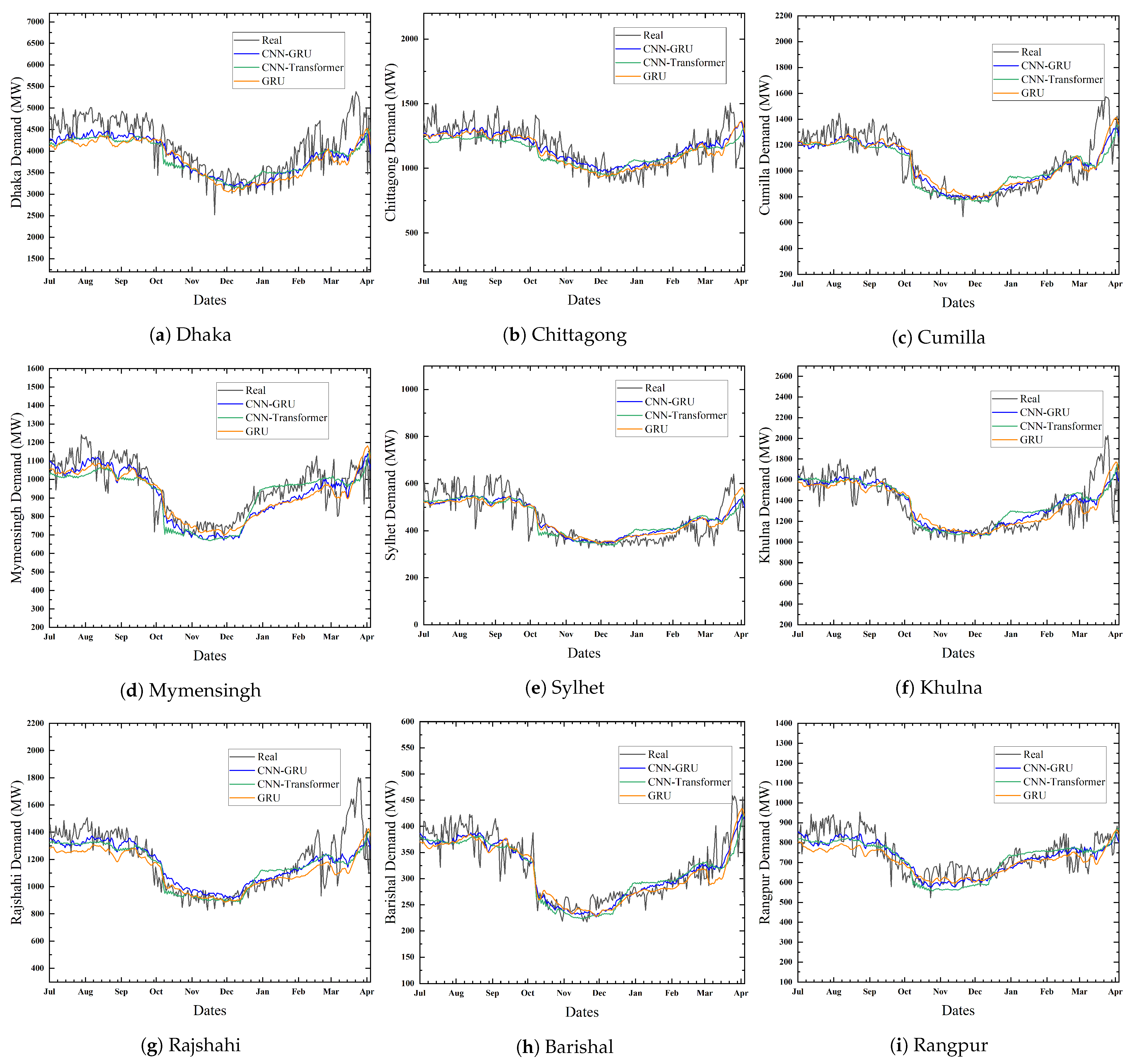

The real-world power system is massive and very complex. The power system’s highly non-linear properties and randomness introduce prediction challenges. This can be observed in the figures where the actual data consist of sudden variations. To keep the illustrations clear and concise, we showcase the prediction outcomes of only the three top-scoring models: CNN-GRU, GRU, and CNN-Transformer. The figures verify that the CNN-GRU model can follow the demand trend more closely than other models.

Table 4 compares the MAPE of several models over a seven-day forecasting period in nine distinct zones for the time period between July and December 2022. The RMSE error obtained from different models for the same time period is listed in

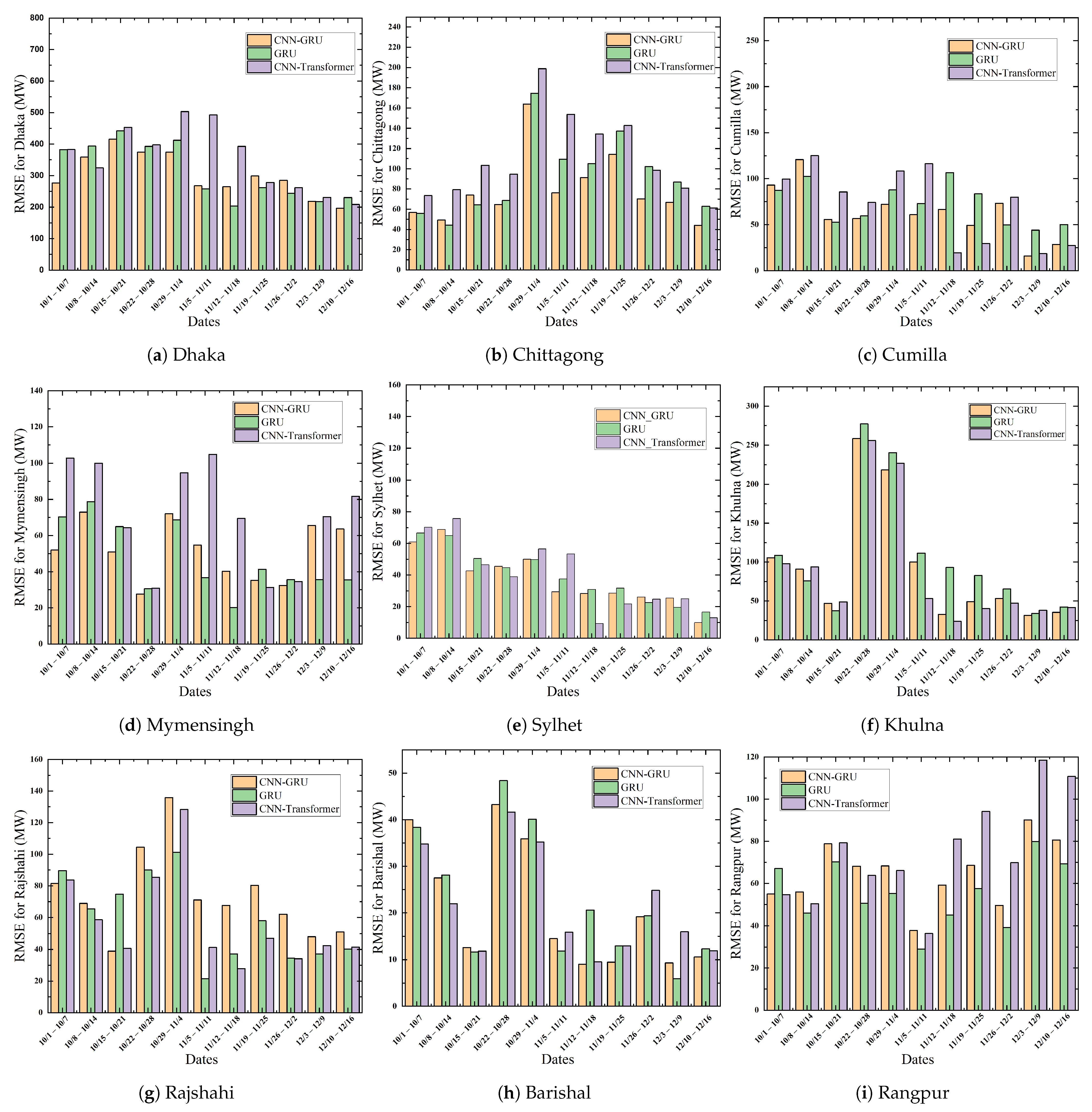

Table 5. The MAPE scores of the naive predictor for nine different zones are 0.0539, 0.0641, 0.0845, 0.0729, 0.1227, 0.0689, 0.0583, 0.0977, and 0.0774, respectively, and for the corresponding areas, the RMSE scores are 310.9725, 100.9114, 132.3682, 94.7536, 76.7995, 135.4886, 98.0518, 43.4898, and 74.0442. Evidently, the proposed CNN-GRU model is more effective than the naive baseline as well as other DL approaches, achieving the least MAPE of 0.0544 in the Chittagong Region and the highest score of 0.0905 in Sylhet. Similarly, the lowest and highest RMSE scores of the proposed model are 33.5080 and 378.2723, respectively, for Barishal and Dhaka. In comparison, the second best model, namely GRU, achieved MAPE score ranging from 0.0602 to 0.0931 across all division on test data. The proposed model significantly outperforms GRU in all of the regions. We have investigated the outcome of famous transformer architecture on our task. Among the transformer models, only the CNN-Transformer hybrid approach performed better, obtaining the least RMSE of 33.1362 in Barishal and the highest of 434.7402 in Dhaka. In comparison, the proposed technique performed 15.98% and 25.37% better in these two regions, respectively. Although CNN-Transformer performed better than the proposed method in Barishal Division, both RMSE and MAPE scores are close for both models. The graphical representation in

Figure 9 illustrates the forecast results for the nine regions spanning from July 2022 to April 2023. The figures validate the seasonal variations as shown in our prediction curve during the summer, winter, spring, and autumn months. It is worth noting that, in certain regions, as the prediction timeframe extends further from the training period, the models exhibit suboptimal performance. This can be attributed to the limitation of traditional DL models, as these algorithms require complete retraining with new observations. MAPE error rates for all the weeks from July to December 2022 are illustrated in

Figure 10 concerning the three best-performing models. Similarly, the RMSE scores for the same observation periods are depicted in

Figure 11. Overall, CNN-GRU achieved the lowest MAPE error across the observation periods for all regions.