1. Introduction

Droplet-based microfluidic systems have proven effective for synthesizing new functional materials in several application fields, such as chemistry, biology, and medicine [1,2]. In each individual case, the authors propose a new microfluidic system architecture, a Lab-on-Chip topology, or unique synthesis conditions and parameters to achieve the target properties of the synthesized materials [3]. For example, in [4], the authors propose a new technology for screening and diagnosing surfactants during droplet generation, in which the platform is controlled and optimized with the support of a genetic algorithm. Microfluidics has gained wide popularity in biomedical engineering [5,6,7]. Some research explores the general possibilities of microfluidic synthesis based on monodisperse droplets. Thus, work [8] describes a controlled and reproducible synthesis of anisotropic metal particles, using monodisperse gold particles as an example. In general, using droplets as individual carriers of the active substance in the synthesis process provides certain advantages. Using passive fusion methods, positive results were obtained in the synthesis of cadmium sulfide particles [9]. Microfluidic synthesis has made it possible to take a fresh look at existing problems in the field of nanoscale particle synthesis [10]. Droplet capillary reactors allowed not only the efficient organization of magnetic iron oxide nanoparticle synthesis, but also a rapid change in the morphology of the resulting substance by varying a few experimental parameters [11,12]. Much of the effort is focused on creating individual elements of microfluidic synthesis systems. In [13,14], a unique microfluidic reactor structure is proposed for the synthesis of silver particles. A very popular topic in this field is the development of synthesis platforms based on microfluidic systems. In [15], the authors propose a platform for producing drug microparticles, and in [16,17,18] platforms for the synthesis of polymer nanoparticles. In general, microfluidic droplet synthesis technologies provide researchers with a wide and flexible toolkit for manipulating the dimensional and structural characteristics of substances, as well as for determining the dynamics of structural transitions in materials [19,20,21,22,23].

At the same time, a current task is to automate the continuous screening of the morphological and dynamic parameters of monodisperse droplets that characterize the properties of the obtained substances. Developing methods and software for such tools will make it possible to build effective systems for screening and controlling the parameters and conditions for the synthesis and diagnosis of new materials with target properties in the fewest experimental iterations. To solve this problem, researchers have used various methods for the instrumental evaluation of experimental results, together with machine learning, deep learning, and computer vision methods. In [24], numerical solution methods are proposed for modeling the dynamic characteristics of objects and flows in microchannels with different structures and orientations under various influencing factors. Special architectures of microfluidic systems are used to determine velocity as a dynamic characteristic of flow in a microchannel. For example, in [25], the authors propose a unique optofluidic device that measures fluid flow velocity in a microchannel using a non-contact optical technique. Some solutions in this field focus not only on extracting the key characteristics of microfluidic synthesis, but also on predicting them from the initial parameters and experimental conditions. For example, in [26], the authors propose an effective web-based tool for predicting the morphological parameters of generated droplets based on machine learning models. This approach allows the automated design of microfluidic devices for the required conditions of microfluidic synthesis. The concept of integrating computer vision and intelligent droplet analysis methods to automate and intellectualize the microfluidic synthesis process and to build a platform for a digital microfluidic setup is very promising [27,28,29,30,31,32,33,34]. The general concept of intelligent control of the microfluidic chemical synthesis process can take the form of predicting vectors of parameters and experimental conditions that match the target properties of the material, or of planning and developing a winning strategy for dynamically changing the microfluidic system parameters in real time to obtain monodisperse droplets with target properties. The main solutions proposed in this subject area are based on machine learning models or deep reinforcement learning agents trained in a virtual environment that simulates the real behaviour of the experimental process [26,35,36,37,38].

2. Related Work and Problem Statement

The most important point in microfluidic droplet synthesis is the principle by which monodisperse droplets are formed in the Lab-on-Chip. This process depends on the geometry of the microchannel and the structure of the droplet microreactor [9,37,38]. To form monodisperse water-in-oil macroemulsions, there are several types of microfluidic generator topologies, which provide coaxial flows, flow focusing, or T-injection. One of the main parameters determining the conditions for emulsion formation is the capillary number of the continuous and of the dispersed phase. In a Lab-on-Chip with flow focusing, depending on the ratio between the capillary numbers, emulsion formation can occur in a dripping or a jetting mode. The advantage of the flow focusing topology is the greater control it gives over the droplet generation mode. In addition, owing to an aperture introduced into the generator design, which restricts the flow, it is possible to control the size of the formed emulsion regardless of its generation frequency. In the proposed study, a Lab-on-Chip was used, the schematic diagram of which is shown in Figure 1.

This microfluidic device was made using 3D printing technology. In this Lab-on-Chip topology, (1) and (2) are the droplet generator, a geometric structure where monodisperse water or oil droplets are generated in an immiscible phase; (3) are the channels where microdroplets move in the flow; (4) is the chamber for storing droplets and for visual monitoring of the flow; and (5) is the outlet port. The device contains inlets for the continuous and dispersed phases and one outlet for collecting the macroemulsion. It has a serpentine reaction zone, as well as a droplet collector. The channel cross section is 400 µm, and the droplet generation zone narrows to 200 µm; the depth of all channels is 200 µm. The microfluidic device was created using an Asiga UV MAX digital 3D printer (Asiga, Sydney, Australia) with a wavelength of 385 nm and a light intensity of 7.25 mW/cm². The first layer was chosen to be 25 µm thick and was exposed for 20 s to avoid delamination of the print from the platform. The layer thickness was set to 25 µm, and each layer was exposed for 1.2 s. To avoid layer separation during the process, the z compensation was set to 300 µm. For better processability of the resin during printing, the printing temperature was set to 45 °C. Immediately after printing, the microfluidic device was sonicated in the IRS for one minute at a frequency of 80 kHz and then placed in a holder for manual washing of the channels with the IRS. After the channels were flushed, the devices were sonicated again and purged with nitrogen gas. Finally, the device was post-cured for 2 min using a UV lamp (Flash type DR-301C, Asiga, Sydney, Australia).

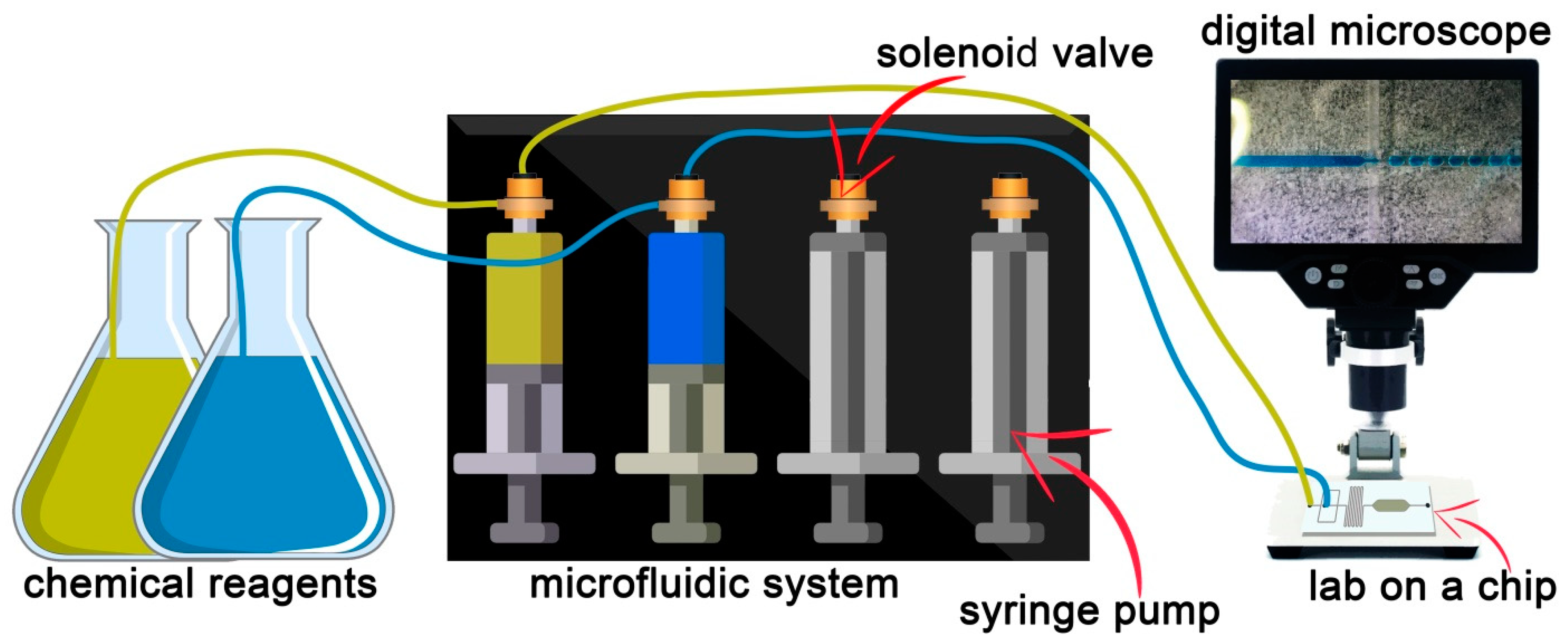

Mineral oil (catalog no. 330779, Sigma-Aldrich, St. Louis, MO, USA) with the addition of the non-ionic surfactant Span 80 (Sigma-Aldrich, St. Louis, MO, USA) was used as the continuous phase to form a monodisperse microemulsion. The surfactant stabilized the emulsion and prevented its coalescence by reducing the surface tension and creating a barrier at the interface between the two phases. Deionized water tinted with methylene blue dye at a concentration of 1:10 was used as the dispersed phase for droplet formation. The liquids were introduced into the microfluidic device using syringe pumps (BYZ-810, Hunan Beyond Medical Technology Co., Ltd, Changsha, China). The flow rate of the dispersed phase was constant at 200 μL/h, and the flow rate of the continuous phase varied from 200 μL/h to 2000 μL/h. The process of droplet formation was observed and recorded using a digital microscope. The general process of conducting the experiment is shown in Figure 2.

In the proposed study, the objects analyzed to extract the morphological and dynamic characteristics of the synthesized monodisperse droplets are video data streams captured from a digital microscope. Furthermore, one of the main conditions is online screening in real time, with results visualized at a minimal model inference delay, so that parameters can be changed dynamically during the experiment.

The Data Processing section defines the methods used in the study for generating synthetic image data. These image datasets are designed to form an enriched representation of the feature space of possible outcomes of the monodisperse droplet generation process. An automatic procedure is implemented to label the synthetic images, which eliminates the human factor and reduces the time required to prepare the datasets. The Intelligent Object Detection Models section is devoted to the choice of methods for identifying objects in the image and presents data on the training and validation of the selected object detection model. Section 5 describes the proposed methods for analyzing and processing video data streams from the microscope. A modification of the inference logic of the YOLOv7 (You Only Look Once) model is proposed to extend its functionality to the problems being solved. The Programs and Tests section presents the main results of the research and shows examples of laboratory tests of the created software. The Discussion section reveals the main limitations of this study and defines the scope and complexity of the problems to be solved and the prospects for further research in this subject area. The final section contains the conclusions and the experience gained during the study.

3. Data Processing

Initially, classical image processing and analysis methods and standard computer vision algorithms were considered for extracting the morphological and dynamic characteristics of monodisperse droplets from microscope video data streams. Intellectualization of the process was required because the technical means used, the high variability of the chemical reaction types, and the noise and distortions present greatly hampered operation in the spatial and frequency domains of the images and reduced the accuracy and reliability of detecting monodisperse droplets in microscope video recordings. As a result, it was decided to model the process discriminatively with a model trained using a one-stage detector algorithm.

To form the feature space extracted by the model and to provide reliable detection of objects, the initial data sample must be expanded. Since the goal is not only to control already known reactions, but also to remain functional when new experiments on the microfluidic synthesis of functional materials are set up, stable patterns must be formed from visual data that cover a variety of geometric shapes, sizes, and colors, including features whose occurrence is unlikely. Forming such a data set from real data would require an effectively unlimited number of staged experiments, with enormous resource and time costs, which is an inefficient approach to the problem. In addition, even if such a sample were available, it would have to be labeled to a high standard, which leads to even greater costs.

To solve this problem quickly, it is proposed to create synthetic image datasets that reproduce the high variability, distortions, and noise found in the original data. In addition, this approach allows automatic data labeling, which eliminates the human factor and, consequently, improves the final precision of the model being trained [39]. The proposed approach to implementing the methods and developing the software for generating synthetic image data of capillary microfluidic synthesis results is to create algorithms that model an unknown data distribution, taking into account the restrictions imposed on the geometric shape, size, and color of the droplets, and that combine these parameters with different arbitrary probabilities. This goes significantly beyond a representative sample of real data and makes stable droplet detection possible under any conditions, even previously unknown ones. The main software tools for implementing synthetic image modeling were the Blender 3D graphics environment with its application programming interface and the Python programming language.

The synthetic datasets of microfluidic synthesis images were generated and labeled in several stages. First, using the Blender application programming interface and the Python programming language, sphere and ellipsoid templates were created in a virtual environment scene. To do this, at the initial stage, a vertex is created that will be the lowest point of the future sphere. The classical circle formula is then used to define a series of radii of two-dimensional geometric shapes. This proceeds in two directions. In the first step, the vertices of the current circle are assigned according to the formula for its current radius. In the second step, the next circle is created over the previous one with a proportional change in radius. These steps are repeated until the vertices of all the circles that model the sphere have been assigned. The more steps are taken at each stage, the more polygons the surface of the three-dimensional figure has, resulting in a "smoother" sphere. Then all the obtained vertices are connected by edges, taking four neighboring vertices that belong to two neighboring circles, two points on each, to form faces. Thus, moving from top to bottom, fragments of the sphere surface are created until no free vertices remain.
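The stacked-circle construction described above can be illustrated with a minimal sketch in plain Python. It only computes vertex coordinates and does not call the Blender API; the function name `sphere_ring_vertices` and the parameters `n_rings` and `n_segments` are hypothetical and are not taken from the study.

```python
import math


def sphere_ring_vertices(radius, n_rings, n_segments):
    """Return sphere vertices built from circles stacked from the bottom pole upward."""
    # The first vertex is the lowest point of the future sphere.
    vertices = [(0.0, 0.0, -radius)]
    for i in range(1, n_rings):
        # The polar angle grows from 0 (bottom pole) to pi (top pole).
        polar = math.pi * i / n_rings
        z = -radius * math.cos(polar)
        # Radius of the current circle, from the circle equation r^2 + z^2 = R^2.
        ring_radius = radius * math.sin(polar)
        for j in range(n_segments):
            azimuth = 2.0 * math.pi * j / n_segments
            vertices.append((ring_radius * math.cos(azimuth),
                             ring_radius * math.sin(azimuth),
                             z))
    # The last vertex closes the sphere at the top pole.
    vertices.append((0.0, 0.0, radius))
    return vertices


template = sphere_ring_vertices(radius=1.0, n_rings=8, n_segments=16)
```

Increasing `n_rings` and `n_segments` yields more polygons and therefore a smoother surface, which corresponds to taking more steps at each stage.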

The formula for a regular ellipse was used to create the three-dimensional ellipsoid template. The general structure of the software reconstruction of the three-dimensional ellipsoid corresponds to the procedure for creating a sphere, except that it operates with two radius values rather than one. In this way, two general templates, a sphere and an ellipsoid, were created. They correspond to two formalized cases of the geometry of droplets produced by a droplet generator in microfluidic synthesis. We separated these two cases in order to obtain two droplet classes later. This makes it possible to calculate the volume of a synthesized droplet more accurately from its microscopic image.

The next stage was devoted to developing the materials and modifiers that automate the variability of droplet images in the synthetic data. Two materials were developed: the first represents the appearance of the droplet in the image, and the second acts as a mask for the automatic labeling of the generated data. Each material has its own shader. In the first case, a noise texture with a gloss effect was integrated, which made it possible to vary the blur by choosing Fresnel coefficients in the range from 0 to 1, where a coefficient of 1 gives a high level of image blur. It was also necessary to cover the contour of the geometric figure, and for this a coordinate node was created, connected to a 3D noise texture and combined with a 1D noise texture. Furthermore, connecting the resulting block to emission blurred the contour of the sphere, which in turn made it possible to adjust the internal color gradient of the synthetic droplet by changing the Fresnel coefficient and transparency. The general shader structure for the first material is shown in Figure 3.

In the second case, to create the mask shader, the generated objects had to be represented in a uniform white color. For this, Emission and the Principled BSDF were used, and the adjustment was made by changing the mixing ratio toward white.

The main feature of droplets generated in dynamics is a transient process during which their geometric shape is established. As for the geometry in general, as mentioned earlier, all synthesis results can be formalized through two ideal three-dimensional figures. However, when video data streams from a microscope are later analyzed and droplets of two classes are detected, the features required for reliable identification and recognition of control objects whose geometry differs slightly from the ideal must be extracted.

In general, face smoothing is applied to the existing templates. Then, a function for the arbitrary selection of several templates within one created scene is implemented. Their number is bounded by the minimum and maximum number of objects and in our case ranges from eight to twelve, with subsequent adjustment of the number relative to the final size of the figures. In images of microfluidic droplet synthesis, it is practically impossible for one type of geometry to replace another at high frequency from one generated droplet to the next, but the geometry type can change within a series of monodisperse droplets as the experimental parameters vary during synthesis. For the algorithm to operate stably, an arbitrary set of droplets of various geometries is generated in the synthetic images. Therefore, after the number of objects is selected, the sphere and ellipsoid templates are automatically selected and arranged, provided that the droplets in the scene include at least two of the presented figure types. Then, for each template, the size is chosen at random in the range from 0.1 to 0.3 Blender units. At the next step, the first material is applied to all objects, and the RGBA randomization procedure is implemented through the Base Color parameter of the Principled BSDF module, with A always equal to 1. Next come two Simple Deform modifiers. To ensure variability of the spatial forms of the objects, the assignment of the modifiers is dependent and arbitrary. This means that the procedure first decides whether the first modifier will be used, and then decides on the second, taking into account the parameters formed for the first. The parameters of the first modifier must be considered when designing the second so that the spatial geometry of the generated three-dimensional object is not violated. The modifiers implement a procedure for determining the deformation type, either stretch or taper (cone). These deformation types were chosen as the most representative for the problem and as not violating the smoothness of the template figures. Then the parameters of the selected deformation type are adjusted automatically, and the axis along which the distortion will be applied is chosen. Three cases are considered: only the X axis, only the Y axis, or both the X and Y axes. Then, depending on the deformation type and the axis along which the distortion will occur, the deformation factor (multiplier) is chosen from a limited range. The choice of deformation axis is one of the conditions for assigning the second modifier and forming its parameter set. This feature of the implementation is associated with the need to modify the figure along the X and Y axes so that the change in geometry occurs evenly on all sides of the figure and does not require its rotation.
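The randomized scene parameters described above can be sketched as follows. This is an illustrative sketch in plain Python, not the Blender script used in the study: the function `sample_scene`, the dictionary keys, and the string labels are hypothetical, and the rule that the second modifier depends on the first is deliberately left out because its details are not given in the text.

```python
import random


def sample_scene(rng, min_objects=8, max_objects=12):
    """Sample one synthetic scene: object kinds, sizes, colors, deformation choices."""
    count = rng.randint(min_objects, max_objects)
    # Assumption: "at least two figure types" means both templates must appear.
    kinds = ["sphere", "ellipsoid"]
    kinds += [rng.choice(["sphere", "ellipsoid"]) for _ in range(count - 2)]
    rng.shuffle(kinds)
    objects = []
    for kind in kinds:
        objects.append({
            "kind": kind,
            # Size in Blender units, range taken from the text.
            "size": rng.uniform(0.1, 0.3),
            # Random RGB with the alpha channel fixed at 1.
            "rgba": (rng.random(), rng.random(), rng.random(), 1.0),
            # Deformation type (or none) and the axis case it applies to.
            "deform_type": rng.choice([None, "stretch", "taper"]),
            "deform_axes": rng.choice(["X", "Y", "XY"]),
        })
    return objects


scene = sample_scene(random.Random(42))
```

Passing an explicit `random.Random` instance keeps each generated scene reproducible, which is convenient when a dataset has to be regenerated.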

Once the set of objects is prepared, they are placed in the scene in a single line. This arrangement is typical for the channels of microfluidic generators of monodisperse droplets. To do this, each next object in turn is placed to the left of the previous one at a distance that is a multiple of 1.5 object widths in two-dimensional coordinates. This process continues until the placement limit of −3.4 Blender units is reached. This value was chosen with the future image resolution of 1920 by 1080 pixels in mind. If the placement limit is reached before all the generated objects have been placed, the placement is terminated. This scenario is useful when the figure sizes are selected arbitrarily and the function for choosing the number of objects returns the maximum values. A plane is created under the distributed objects and stretched to the target resolution of the future image. The plane is fitted closely; the scale limit was selected experimentally. Using a graphics processing environment, a texture that mimics the Lab-on-Chip surface was prepared in advance. This texture is superimposed on the created plane to make the synthesized graphic data realistic. The lighting of the scene also had to be adjusted to correspond to the conditions of the instrumental study of the microfluidic chemical synthesis results. For this, the Sun was chosen as the light source with a corpuscular type of light propagation, and the source power was set to 50,000 points.

After the scene was prepared, the next step was to render the datasets of synthetic microscopic images of monodisperse droplets. This was implemented as a loop that creates several thousand images in JPEG format; a sample of the resulting images is shown in Figure 4.

In parallel, a black-and-white mask was generated for each such image. To create the mask, the second material was applied to the generated objects and a solid black texture was applied to the plane. The mask images were rendered in PNG format with the same resolution and spatial arrangement of elements. A sample of the resulting image masks is shown in Figure 5.

The PNG format was used to preserve the alpha channel and to avoid lossy compression. The image file names were the same in the first and second cases.

In the next stage, an algorithm for the automatic labeling of pseudodroplets in the synthetic data was developed and implemented. The algorithm uses the black-and-white masks of the synthetic images. Using the Pillow library, the color space was converted from RGBA to HSV. After the color space conversion, the image was processed pixel by pixel, from top to bottom and from left to right. In each column of the pixel array, a search was made for a pixel with a value (V) greater than 10 in HSV. After the first such pixel was found, a search was started for the last white pixel in the column. The coordinates of all the first and last pixels with value > 10 were recorded in a separate data array. After that, the scan moved one column to the right, and the procedure was repeated until a column contained only black pixels, which means that the description of the first droplet is finished. The operation was repeated until the end of the image was reached and the descriptions of all the figures were retrieved. Then, in each array corresponding to one pseudodroplet, the minimum and maximum values of the x and y coordinates were found. As a result, four points characterizing the region of interest were extracted. To record the image labels in a form suitable for training the object detection and classification algorithm, a text file in txt format was created. Data about the labeled objects were written to this file line by line. The principle of forming the data labels is presented in Algorithm 1.
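The column-by-column scan of the mask can be sketched as follows. This is a minimal illustration in plain Python under the assumption that the mask has already been reduced to a two-dimensional list of brightness values (for example, the V channel); the function name `find_droplet_boxes` is hypothetical, and the sketch assumes that droplets do not overlap horizontally, as in the single-line arrangement described earlier.

```python
def find_droplet_boxes(mask, threshold=10):
    """Return one (x_min, y_min, x_max, y_max) box per run of non-black columns."""
    height = len(mask)
    width = len(mask[0]) if height else 0
    boxes = []
    current = None  # box being accumulated: [x_min, y_min, x_max, y_max]
    for x in range(width):  # columns are scanned from left to right
        # Rows are scanned from top to bottom within the column.
        rows = [y for y in range(height) if mask[y][x] > threshold]
        if rows:
            first, last = rows[0], rows[-1]
            if current is None:
                current = [x, first, x, last]
            else:
                current[1] = min(current[1], first)
                current[2] = x
                current[3] = max(current[3], last)
        elif current is not None:
            # An all-black column ends the description of the current droplet.
            boxes.append(tuple(current))
            current = None
    if current is not None:
        boxes.append(tuple(current))
    return boxes


example_mask = [
    [0, 0, 0, 0, 0, 0, 0, 0],
    [0, 255, 255, 0, 0, 0, 255, 0],
    [0, 255, 255, 0, 0, 255, 255, 0],
    [0, 0, 0, 0, 0, 0, 255, 0],
]
example_boxes = find_droplet_boxes(example_mask)
```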

| Algorithm 1 Tailoring data labeling for the target model |

INPUT: Top left pixel position (x, y) and bottom right pixel position (x, y) of Bounding Box, image width, and height

OUTPUT: List with YOLO-format parameters (class, x-center, y-center, width, height)

start function

p_1 = [bbox [2][0], bbox [0][1]]

p_2 = [bbox [3][0], bbox [0][1]]

p_4 = [bbox [3][0], bbox [1][1]]

width_p = p_2[0] - p_1[0]

height_p = p_4[1] - p_2[1]

width = width_p/image.width

height = height_p/image.height

x = ((p_2[0] + p_1[0])/2)/image.width

y = ((p_4[1] + p_2[1])/2)/image.height

class = 0

if width_p/height_p > 1.2 then

class = 1

else

class = 0

end if

yolo_format = [class, x, y, width, height ]

return yolo_format

end function |
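For illustration, Algorithm 1 can be written as a short Python function. This is a sketch rather than the code used in the study: it takes the box corners directly as `x_min`, `y_min`, `x_max`, `y_max` instead of the `bbox` point array, and the function name `to_yolo` and the `ratio` parameter are hypothetical names for the 1.2 width-to-height threshold.

```python
def to_yolo(x_min, y_min, x_max, y_max, image_width, image_height, ratio=1.2):
    """Convert a pixel bounding box to [class, x_center, y_center, width, height]."""
    width_px = x_max - x_min
    height_px = y_max - y_min
    if height_px <= 0:
        raise ValueError("bounding box must have a positive height")
    # Class 1 (ellipsoid) if the box is clearly wider than tall, else class 0 (sphere).
    cls = 1 if width_px / height_px > ratio else 0
    # All coordinates are normalized by the image size, as the YOLO format requires.
    x_center = (x_min + x_max) / 2 / image_width
    y_center = (y_min + y_max) / 2 / image_height
    return [cls, x_center, y_center, width_px / image_width, height_px / image_height]


label = to_yolo(100, 200, 300, 400, image_width=1920, image_height=1080)
line = " ".join(str(v) for v in label)  # one line of the txt label file
```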

All text label files retain the names of the corresponding images. A sample of a labeled image is shown in Figure 6 and Table 1.

The data given in Table 1 correspond to the class and position of the objects in Figure 6. Class 0 corresponds to a droplet whose spatial shape is approximated by a sphere, and class 1 to an ellipsoid. The objects in the images were labeled using Algorithm 1 above.

The original video stream of microfluidic droplet synthesis captured from a digital microscope is quite noisy. In addition, the digital microscope models used in the instrumental study of the experimental results differ considerably. They have different video stream output resolutions, record video at different frame rates, and are subject to various distortions and noise due to their technical and optical features. Therefore, it was decided not to filter and process the input video stream from the microscope, but to expand the feature space of the data used for training the model by introducing noise and distortions into the synthetic sets. First, a post-processing scenario for the synthetic data is chosen at random. The scenarios used are: changing the image quality factor in the range from 1 to 5 (multiplying by 10 gives the quality as a percentage of the original image); blurring; and introducing noise. The main noise types used are Gaussian, speckle, Poisson, salt, pepper, and salt-and-pepper. After post-processing, we obtain a labeled dataset of synthetic droplet images that reflects the high variability and the specifics of the problem being solved.
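Two of the noise scenarios mentioned above, Gaussian and salt-and-pepper, can be sketched in plain Python on a grayscale image stored as a list of rows. The function names and the parameters `sigma` and `amount` are hypothetical, and the values used in the study are not given in the text.

```python
import random


def add_gaussian_noise(image, sigma, rng):
    """Add zero-mean Gaussian noise and clip the result to the 0..255 range."""
    return [[min(255, max(0, round(p + rng.gauss(0.0, sigma)))) for p in row]
            for row in image]


def add_salt_and_pepper(image, amount, rng):
    """Set a fraction `amount` of pixels to black (pepper) or white (salt)."""
    noisy = [row[:] for row in image]
    for row in noisy:
        for x in range(len(row)):
            r = rng.random()
            if r < amount / 2:
                row[x] = 0
            elif r < amount:
                row[x] = 255
    return noisy


def random_postprocess(image, rng):
    """Pick one post-processing scenario at random and apply it."""
    if rng.choice(["gaussian", "salt_and_pepper"]) == "gaussian":
        return add_gaussian_noise(image, sigma=10.0, rng=rng)
    return add_salt_and_pepper(image, amount=0.05, rng=rng)


clean = [[128] * 16 for _ in range(16)]
augmented = random_postprocess(clean, random.Random(1))
```

Because the noise changes only pixel values and not geometry, the labels produced for the clean image remain valid for the augmented one.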

5. Monodisperse Droplets Extracting Morphological and Dynamic Characteristics

All the characteristics of monodisperse droplets extracted from the video stream must be presented in metric units rather than in conventional units. To do this, a function is needed that finds the scale factor for converting the screen coordinates of the images into physical coordinates. In the first step, the first frame is extracted from the video sequence and filtered in the frequency domain; for this, the Discrete Fourier Transform is applied and low-pass filters are used. The image is then transformed back to the spatial domain and brightened by adding a positive constant to the color value of each pixel; where the sum exceeds 255, the maximum color value is written. The resulting image is then processed with the Canny edge detection operator. Since the channel with the dispersed-phase liquid is always located on the right in our case and the spatial resolution of the image is known in advance, the analysis proceeds from right to left, starting 20% of the image width in from the right edge. Nine columns of pixels are then sampled, and in each column the coordinates of the first and last white pixels are recorded. The difference between these coordinates is found and averaged over all nine samples. Since the channel width of the microfluidic chip is always known, the ratio between the average number of pixels and the actual channel width gives the correspondence between one pixel and the real physical size of the object. A common example of finding the scale factor is shown in Figure 10.
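The final step of the scale factor search can be sketched as follows, assuming the edge image is already available as a two-dimensional list in which nonzero values mark edge pixels. The function name `scale_factor`, its parameters, and the choice of nine adjacent columns are assumptions; the text does not state how the nine columns are spaced.

```python
def scale_factor(edges, channel_width_um, n_columns=9, offset_fraction=0.2):
    """Return micrometres per pixel from the channel walls found in an edge image."""
    height = len(edges)
    width = len(edges[0])
    # Start 20% of the image width in from the right edge and move left.
    start = int(width * (1.0 - offset_fraction)) - 1
    spans = []
    for x in range(start, start - n_columns, -1):
        rows = [y for y in range(height) if edges[y][x]]
        if len(rows) >= 2:
            # Distance in pixels between the first and last white pixel.
            spans.append(rows[-1] - rows[0])
    if not spans:
        raise ValueError("no channel walls found in the sampled columns")
    mean_span_px = sum(spans) / len(spans)
    return channel_width_um / mean_span_px


# Synthetic edge image: channel walls at rows 10 and 30 in every column.
demo_edges = [[1 if y in (10, 30) else 0 for _ in range(100)] for y in range(50)]
um_per_px = scale_factor(demo_edges, channel_width_um=400.0)
```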

After that, a tensor array is formed that stores the spatial position of each region in the xyxy (min and max) format, the determined droplet shape class, and the detection confidence. Since some false positive detections occur at a high frame rate and specific droplets cannot be identified directly on the same frame, records whose confidence is below the average confidence within one video frame are filtered out. Next, the remaining records are sorted in ascending order by the x coordinate of the Bounding Box. Thus, the droplets on the frame are numbered starting from the leftmost one.
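The filtering and numbering step can be sketched in plain Python. The tuple layout `(x_min, y_min, x_max, y_max, class, confidence)` and the function name `filter_and_number` are assumptions made for the illustration and do not reflect the actual tensor layout of the model output.

```python
def filter_and_number(detections):
    """Drop below-average-confidence detections and sort the rest left to right."""
    if not detections:
        return []
    # Average confidence over all detections in the current frame.
    mean_conf = sum(d[5] for d in detections) / len(detections)
    kept = [d for d in detections if d[5] >= mean_conf]
    # Ascending x_min: index 0 is the leftmost droplet in the frame.
    return sorted(kept, key=lambda d: d[0])


frame_detections = [
    (300, 0, 350, 50, 0, 0.9),
    (100, 0, 150, 50, 0, 0.8),
    (200, 0, 250, 50, 1, 0.3),  # likely false positive, below the frame average
]
numbered = filter_and_number(frame_detections)
```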

To find the distance between monodisperse droplets in the image, the minimum x value of the nth Bounding Box is taken, where n is the number assigned in the previous step, together with the maximum x value of the (n + 1)th Bounding Box. After that, the difference between these two values is found and multiplied by the scale factor to convert it to metric units. This procedure is repeated for each pair of adjacent droplet detections. A sample inference with the distance between adjacent droplets in conventional units is shown in Figure 11.
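A minimal sketch of the distance calculation follows. It assumes that the quantity of interest is the edge-to-edge gap between neighbors, taken as the minimum x of the right-hand box minus the maximum x of the left-hand box; this reading, the function name `gaps_um`, and the parameter `um_per_px` are assumptions, since the exact expression is not reproduced in the text.

```python
def gaps_um(boxes, um_per_px):
    """Return the gaps between horizontally adjacent boxes in micrometres."""
    # Boxes are (x_min, y_min, x_max, y_max) in pixels; sort them left to right.
    ordered = sorted(boxes, key=lambda b: b[0])
    gaps = []
    for left, right in zip(ordered, ordered[1:]):
        # Left edge of the right-hand box minus right edge of the left-hand box.
        gaps.append((right[0] - left[2]) * um_per_px)
    return gaps


demo_gaps = gaps_um([(100, 0, 150, 50), (200, 0, 260, 50), (400, 0, 450, 50)],
                    um_per_px=2.0)
```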

One of the main morphological characteristics of droplets for researchers is their volume. To find it, the spatial descriptions of the Bounding Boxes in the model inference are again used. Since the Bounding Box boundaries accurately describe the location of the detected object, the two-dimensional projections of the droplets can be considered inscribed in the Bounding Box. Thus, we are dealing with two formalized figures: a circle and an ellipse. For a circle, we find the coordinates of the Bounding Box center from its X and Y coordinates, $x_{center} = (x_{min} + x_{max})/2$ and $y_{center} = (y_{min} + y_{max})/2$. Then the difference between the maximum x coordinate of the Bounding Box and the x coordinate of the center, $r = x_{max} - x_{center}$, equals the radius of the circle and, accordingly, of the sphere, since individual deformations along the z axis are not expected owing to the laminar fluid flows in the Lab-on-Chip channels. This difference is multiplied by the scale factor, and the volume of the sphere is calculated as $V = \frac{4}{3}\pi r^{3}$. The ellipsoid in our case is regular and extended along the X axis, so the semi-axes can be found as $a = x_{max} - x_{center}$ and $b = y_{max} - y_{center}$, and the volume of the figure as $V = \frac{4}{3}\pi a b^{2}$, taking the semi-axis along z to be equal to $b$.
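The volume estimate can be sketched as follows. The sketch assumes that class 0 is a sphere and class 1 is an ellipsoid elongated along X whose third semi-axis equals the vertical one; the function name `droplet_volume_um3` and the parameter `um_per_px` are hypothetical.

```python
import math


def droplet_volume_um3(box, cls, um_per_px):
    """Estimate droplet volume in cubic micrometres from its bounding box."""
    x_min, y_min, x_max, y_max = box
    x_center = (x_min + x_max) / 2
    y_center = (y_min + y_max) / 2
    # Semi-axes in micrometres: a along X, b along Y.
    a = (x_max - x_center) * um_per_px
    b = (y_max - y_center) * um_per_px
    if cls == 0:
        # Sphere: the radius is the horizontal half-width of the box.
        return 4.0 / 3.0 * math.pi * a ** 3
    # Ellipsoid: assumed to be a spheroid with semi-axes a, b, b.
    return 4.0 / 3.0 * math.pi * a * b * b


sphere_volume = droplet_volume_um3((0, 0, 100, 100), cls=0, um_per_px=1.0)
ellipsoid_volume = droplet_volume_um3((0, 0, 200, 100), cls=1, um_per_px=1.0)
```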

To find the droplet color in the synthesis process, a 3 × 3 pixel matrix is selected, which coincides with the Bounding Box center determined at the previous step. The resulting values are then averaged over each RGB channel. These morphological characteristics are constantly updated and averaged over a frame series. A frame series is determined by the magnitude of the change in these morphological parameters. If there is a sharp change in one of them, the average values are reset, and a new recalculation begins.
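The color estimate can be sketched in plain Python, assuming the frame is a two-dimensional list of `(R, G, B)` tuples and that the Bounding Box center lies at least one pixel away from the image border. The function name `center_color` is hypothetical.

```python
def center_color(frame, box):
    """Average the RGB values of the 3 x 3 pixel block at the bounding box center."""
    x_min, y_min, x_max, y_max = box
    cx = (x_min + x_max) // 2
    cy = (y_min + y_max) // 2
    # Collect the nine pixels around (cx, cy).
    block = [frame[y][x]
             for y in range(cy - 1, cy + 2)
             for x in range(cx - 1, cx + 2)]
    # Average each channel separately.
    return tuple(sum(pixel[c] for pixel in block) / len(block) for c in range(3))


demo_frame = [[(10, 20, 30)] * 5 for _ in range(5)]
demo_color = center_color(demo_frame, (0, 0, 4, 4))
```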

One of the main dynamic characteristics of a monodisperse droplet that determines the behavior of the synthesized substance is its speed. To find the speed, the two leftmost Bounding Boxes on the frame are fixed. For each of these droplets, the minimum x coordinate is stored. This coordinate extraction is repeated for each frame, always with the two leftmost detectable droplets. The minimum x coordinate of the droplet at the left edge of the image is also evaluated constantly. This estimate makes it possible to track the moment when the droplet leaves the frame and, as a result, its detection is lost. Then the numbering is reassigned, and the array of minimum coordinates of the two leftmost neighboring Bounding Boxes is overwritten. Thus, we always determine the change in the minimum x coordinate of the second droplet from the left between adjacent frames of the video sequence. Over a selection of frames taken before the numbering is reassigned, we obtain the total difference between the x coordinate values and the number of frames over which this change occurred. Metadata must also be extracted from the digital microscope video file to determine the number of frames per second of the real-time sequence. After that, a series is formed from i = 1 to the number of frames obtained, N, and the sum of the coordinate changes is found in the form $\sum_{i=1}^{N} \Delta x_{i}$. This sum is then multiplied by the scale factor and divided by the elapsed time, that is, the number of frames in the series divided by the number of frames per second, to find the speed.
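The speed estimate can be sketched as follows, assuming that the elapsed time equals the number of frame-to-frame steps divided by the frame rate, so that the result has units of micrometres per second. The function name `droplet_speed_um_per_s` and its parameters are hypothetical.

```python
def droplet_speed_um_per_s(x_positions, fps, um_per_px):
    """Estimate speed from the minimum x coordinate of one droplet on successive frames."""
    if len(x_positions) < 2:
        raise ValueError("at least two frames are required")
    # Sum of the absolute coordinate changes between adjacent frames, in pixels.
    total_px = sum(abs(b - a) for a, b in zip(x_positions, x_positions[1:]))
    # Elapsed time covered by the series, in seconds.
    elapsed_s = (len(x_positions) - 1) / fps
    return total_px * um_per_px / elapsed_s


# A droplet moving 4 px to the left per frame at 30 frames per second.
demo_speed = droplet_speed_um_per_s([100, 96, 92, 88], fps=30.0, um_per_px=2.0)
```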