Abstract

Most current affect scales and sentiment analysis on written text focus on quantifying valence/sentiment, the primary dimension of emotion. Distinguishing broader, more complex negative emotions of similar valence is key to evaluating mental health. We propose a semi-supervised machine learning model, DASentimental, to extract depression, anxiety, and stress from written text. We trained DASentimental to identify how N = 200 sequences of recalled emotional words correlate with recallers’ depression, anxiety, and stress from the Depression Anxiety Stress Scale (DASS-21). Using cognitive network science, we modeled every recall list as a bag-of-words (BOW) vector and as a walk over a network representation of semantic memory (in this case, free associations). This weights BOW entries according to their centrality (degree) in semantic memory and informs recalls using semantic network distances, thus embedding recalls in a cognitive representation. This embedding translated into state-of-the-art, cross-validated predictions for depression (R = 0.7), anxiety (R = 0.44), and stress (R = 0.52), equivalent to previous results employing additional human data. Powered by a multilayer perceptron neural network, DASentimental opens the door to probing the semantic organizations of emotional distress. We found that semantic distances between recalls (i.e., walk coverage) were key for estimating depression levels but redundant for anxiety and stress levels. Semantic distances from “fear” boosted anxiety predictions but were redundant when the “sad–happy” dyad was considered. We applied DASentimental to a clinical dataset of 142 suicide notes and found that the predicted depression and anxiety levels (high/low) corresponded to differences in valence and arousal as expected from a circumplex model of affect. We discuss key directions for future research enabled by artificial intelligence detecting stress, anxiety, and depression in texts.

1. Introduction

Depression, anxiety, and stress are the three negative emotions most likely to be associated with psychopathological consequences [1,2,3]. According to the Depression Anxiety Stress Scale (DASS-21) [4], depression is associated with profound dissatisfaction, hopelessness, abnormal evaluation of life, self-deprecation, lack of interest, and inertia. Similarly, anxiety is associated with hyperventilation, palpitation, nausea, and physical trembling. Stress is associated with difficulty relaxing, agitation, and impatience (cf. [1,5,6]).

While these emotions are discrete and highly complex [3,6], they vary along a primary and culturally universal dimension of valence: perceived pleasantness [2,7,8]. Due to its universality, valence is frequently used in self-report affect scales [9]. Valence, which can be easily quantified along a continuous scale, explains the largest variance when compared to other proposed emotional dimensions such as arousal [7]. Unfortunately, valence is often the only output of affect scales. This is potentially problematic for measuring depression, anxiety, and stress, which are more complex [10]: These three distinct types of psychological distress are similar in valence but differ widely along other dimensions, such as arousal [11]. In fact, depression, anxiety, and stress are difficult to distinguish using valence alone [3,12,13]. This underlines the need for richer mappings between emotional dimensions and depression, anxiety, and stress (DAS) levels.

In the present study, we examine a new approach for detecting DAS levels by exploring how they are related to the emotions people recall when asked to report how they felt recently [8]. Specifically, we developed a machine learning approach (DASentimental) to extract more comprehensive information about reported emotions from a recall-based affect scale, the Emotional Recall Task (ERT) [8]. By using DASentimental to extract information from recently experienced emotions, we show how DASentimental can be used to make inferences about DAS levels that extend beyond valence and, subsequently, allow users to investigate natural language more generally.

Literature Review: Cognitive Data Science, Mental Well-Being and Issues of Affect Scales

Mental well-being is a psychological state in which individuals are able to cope with negative stimuli and emotional states [3,6,14,15]. Assessing the connection between emotions and mental well-being is a key yet relatively unexplored dimension in cognitive data science, the branch of cognitive science investigating human psychology and mental processes under the lens of quantitative data models [6,8,16,17,18,19,20,21,22,23].

Depression, anxiety, and stress can impair mental well-being, and consequences can be as extreme as suicide [5,24]. Early quantitative recognition of distress signals that might affect mental health is crucial to providing support and boosting wellness. Recognition starts with diving deep into an individual’s mindset and understanding their emotions. Psychological research [8,15,22,25,26] has found that people’s mental states relate to how they communicate; written and spoken language can thus reveal psychological states. A person’s emotional state can be anticipated through their communication [22,27]—identifying a quantitative coexistence and correlation among emotional words used by an individual can unveil crucial insights into their emotional state [28,29]. However, using only valence (also called “sentiment” in computer science [30]) to assess DAS levels is likely to be insufficient. Capturing how people reveal various forms of emotional distress in their natural language is therefore an important and open research area.

Outputs of affect scales typically include scores that quantify emotional valence. For example, the Positive Affect and Negative Affect Scale (PANAS; [13]), arguably the most popular self-report affect scale, asks people to evaluate their emotional experience against a predetermined emotion checklist that contains 10 positive words and 10 negative words (e.g., “to what extent did you feel irritated over the past month?”). By summing up the responses, the PANAS provides two scores: one for positive affect and one for negative affect. The PANAS essentially splits the emotions into two groups based on valence, and consequently ignores the within-group difference in valence. That means, for example, that emotions in the negative affect list such as “guilty” and “scared” are treated as though they had the same emotional impact.

Understanding mental well-being could be enhanced by both investigating richer sets of emotions (including everything a person might remember about their recent emotional experience) and examining the sequence of those emotional states. A precedent for this approach was recently set with the publication of the ERT [8], which asks participants to produce 10 emotions that describe their feelings. The sequences of words produced in the ERT represent a potential wealth of information for adapting machine learning to sentiment analysis. The idiosyncratic features of these individual words may contain information beyond valence. Indeed, arousal is often included as a primary predictor in addition to valence, for example, in the two-dimensional circumplex model of emotions [11]. Yet the ERT is likely to contain other dimensions as well. For example, anger and fear are both highly negative and highly arousing, but they refer to different experiences and prepare people for different sets of behaviors: anger may trigger aggression whereas fear may trigger freezing or fleeing. The order in which words are recalled in the ERT may also offer useful information by indicating the availability of different emotions and therefore potentially signalling information about emotional importance [31,32]. For example, earlier-recalled words are likely to provide more information on well-being than are later-recalled words. Finally, the ERT may contain information on emotional granularity, a psychological construct referring to an individual’s ability to discriminate between different emotions [14]. For example, a person with high emotional granularity would tend to use more distinct words (e.g., “anxious” instead of “bad”). People with higher emotional granularity reported better well-being and were less prone to mental illness, probably because a sophisticated understanding of one’s negative emotions fosters better coping strategies [14].
Crucially, people with low emotional granularity were found to be more likely to focus on valence and use “happy” and “sad” to cover the entire spectrum of positive and negative emotions [2]. All these patterns and strategies represent the building blocks of DASentimental.

2. Research Aims

This work focuses on sequences of emotional words, whose ordering and semantic meaning contain features that are assumed to be predictive of depression, anxiety, and stress. Having defined these psychological constructs along the psychometric scale represented by the DASS-21, the current work aims to reconstruct the model between emotional word sequences and DAS levels through machine learning. We adopted a semi-supervised learning approach mainly composed of two stages. First, we trained a machine learning regression model using cognitive data from the ERT [8]. Through cross-validation and feature selection, we enriched word sequences with a cognitive network representation [15,33] of semantic memory. We show that semantic prominence in the recall task as captured by network degree can boost the performance of the regression task. Second, having selected the best-performing model, we applied it to identifying emotional sequences in text, providing estimations for the DAS levels of narrative/emotional corpora—in this case, suicide notes [24,28].

3. Methods

This section outlines the datasets and methodological approaches adopted in this manuscript.

3.1. Datasets: Emotional Recall Data, Free Associations, Suicide Notes, and Valence–Arousal Norms

Four datasets were used to train and test DASentimental: the ERT dataset [8], the Small World of Words free association dataset in English [33], the corpus of genuine suicide notes curated by Schoene and Dethlefs [28], and valence–arousal norms in the Valence-Arousal-Dominance (VAD) Lexicon [10].

The ERT dataset is a collection of emotional recalls provided by 200 individuals and matched against psychometric scales such as the DASS-21 [4]. During the recall task, each participant was asked to produce a list of 10 words expressing the emotions they had felt in the last month. Participants were also asked to assess items on psychometric scales, thus providing data in the form of word lists/recalls (e.g., anger, hope, sadness, disgust, boredom, elation, relief, stress, anxiety, happiness) and psychometric scores (e.g., depression/anxiety/stress levels between 0 and 20). The completely anonymous dataset makes it possible to map the sequences of emotional words recalled by individuals against their mental well-being, achieved here through a machine learning approach.

The Small World of Words [33] project is an international research project aimed at mapping human semantic memory [19] through free associations: conceptual associations where one word elicits the recall of others [33]. Cognitive networks made of free associations between concepts have been successfully used to predict a wide variety of cognitive phenomena, including language processing tasks [33], creativity levels [18,20], early word learning [34,35], picture naming in clinical populations [36], and semantic relatedness tasks [37,38]. Furthermore, because they have no specific syntactic or semantic constraints, free associations capture a wide variety of associations encoded in the human mind [39]. We therefore used free associations to model the structure of semantic memory from which the ERT recalls were selected. This modeling approach posits that all individuals, independent of their well-being, possess a common structure of conceptual associations. Although preliminary evidence shows that semantic memory might be influenced by external factors such as distress [40] or personality traits [41], we had to adopt this point as a necessary modeling simplification in the absence of free association norms across clinical populations. Our approach also posits that the connectivity of emotional words is not uniform; rather, some concepts are better connected than others. This postulation, combined with the adoption of a network structure, operationalized the task of identifying how semantically related emotional words are in terms of network distance (the length of the shortest path connecting any two nodes [35]). The finding that network distance in free associations outmatched latent semantic analysis when modeling semantic relatedness norms [37,38] supports our approach.

The corpus of suicide notes is a collection of 142 suicide notes by people who ended their lives [28]. The dataset was curated and analyzed for the first time by Schoene and Dethlefs [28], who used it to devise a supervised learning approach to automatic detection of suicide ideation. The notes were collected from various sources, including newspaper articles and other existing corpora. All notes were anonymized by removing any links to a person or place or any other identifying information. Already investigated in previous studies under the lens of sentiment analysis [28], cognitive network science [24], and recurrent neural networks [29], this dataset was a clinical case study to which DASentimental was applied after having been trained on word sequences from the ERT data.

The valence–arousal norms used here indicate how pleasant/unpleasant (valence) and how exciting/inhibiting (arousal) words are when identified in isolation within a psychology mega-study [10]. This dataset included valence and arousal norms for over 20,000 English words. We used it to validate, through the circumplex model of affect [11], results based on DASentimental and text analysis.

3.2. Machine Learning Regression Analysis

Our DASentimental approach aims at extracting depression, anxiety, and stress levels from a given text through semi-supervised learning. DASentimental is a regression model, trained on features extracted from emotional recalls (ERT data) and obtained from a network representation of semantic memory (free association data). The model was validated against psychometric scores from the DAS scale [8]. Using cross-validation and feature importance analysis [42], we selected a best-performing model to detect depression, anxiety, and stress levels in previously unseen texts.

All in all, the pipeline implemented in this work can be divided into four main subtasks, performed sequentially:

- Data cleaning and vectorial representation of regressor (features) and response (DAS levels) variables;

- Training, cross-validation, and selection of the best-performing regression model for estimating DAS levels from ERT data;

- Estimating the DAS levels of suicide notes by parsing the sequences of emotional words in each letter;

- Validating the labelling predicted by DASentimental through independent affective norms [10].

3.3. Data Cleaning and Vectorial Representation of Regressor Variables

Our regression task builds a mapping between depression (anxiety, stress) scores and features extracted from sequences of emotional words. Each sequence contains exactly 10 words produced by a participant in the ERT (e.g., {anger, hope, sadness, disgust, boredom, elation, relief, stress, anxiety, happiness}, whose first word is “anger”). The ERT dataset features 200 recalls, each produced by an individual and reported relative to their estimated levels of depression, anxiety, and stress as measured on the DASS-21 (cf. [8]). One training instance was performed for each of the three response variables (depression, anxiety, and stress levels). All instances used 200 recalls in a fourfold cross-validation. Response variables ranged from 0 (e.g., absence of stress) to 20 (e.g., high levels of stress). The ERT dataset contained on average one in three individuals suffering from abnormal levels (for reference see: https://www.psytoolkit.org/survey-library/depression-anxiety-stress-dass.html, accessed on 8 November 2021) of depression, anxiety, or stress, indicating that the dataset contains both normal and abnormal levels of distressing emotional states and can be used for further analysis.

Like other approaches in natural language processing [20,23,42], we adopted a vectorial representation, transforming each 10-word recall into an N-dimensional vector, where the first K entries count the occurrence (1, 2, 3, …) or absence (0) of a word in the original recall list. In this way, K counts the first entries in the vectorial representation of recalls featuring the absence or presence of specific words. Consequently, K is also the number of unique words present across all 200 recalls in the ERT data. The remaining N − K entries are relative to additional features extracted from recall lists, i.e., network distances obtained from the cognitive network of free associations. Hence, N is the sum of K and the number of distance-based features extracted from recalls. The representation of word lists as binary vectors of word occurrences, known as Bag-of-Words (BOW) [43], is one of the simplest and most commonly used numerical representations of texts in natural language processing. The representation of word lists as features extracted from a network structure, known as network embedding, has been used in cognitive network science for predicting creativity levels from animal category tasks [20].

BOW representations can be noisy due to different word forms indicating the same lexical item and thus the same semantic/emotional content of a list (e.g., “depressed” and “depression”). Noise in textual data can be reduced by regularizing the text—that is, recasting different words to the same lemma or form. We cast different forms to their noun counterparts through the WordNet lemmatization function implemented in the Natural Language Toolkit and available in Python. This data cleaning reduced the overall set of unique words from 526 to K = 355 nouns, thus reducing the dimensionality and sparsity of our vector representations.
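The bag-of-words step described above can be sketched in a few lines of Python. This is a minimal illustration rather than the authors' code: the function names `build_vocabulary` and `bow_vector` and the toy recalls are assumptions, and the recalls are assumed to have been lemmatized already (for example with the WordNet lemmatizer mentioned in the text, which is not invoked here).

```python
from collections import Counter

def build_vocabulary(recalls):
    """Map each unique word across all recalls to a fixed column index."""
    words = sorted({w for recall in recalls for w in recall})
    return {w: i for i, w in enumerate(words)}

def bow_vector(recall, vocab):
    """Count how often each vocabulary word occurs in one recall list."""
    vec = [0] * len(vocab)
    for word, count in Counter(recall).items():
        if word in vocab:  # words outside the lexicon are ignored
            vec[vocab[word]] = count
    return vec

# Toy recalls (hypothetical, shortened to 3 words instead of 10)
recalls = [["anger", "hope", "sadness"], ["hope", "hope", "stress"]]
vocab = build_vocabulary(recalls)   # here K = 4 unique words
vectors = [bow_vector(r, vocab) for r in recalls]
```

In this toy case the number of columns plays the role of K; in the ERT data it would be the 355 lemmatized nouns.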

3.4. Embedding Recall Data in Cognitive Networks of Free Associations

A crucial limitation of the BOW representation is that emotional words have the same weight for the regression analysis regardless of whether they were recalled first or last. This is in contrast with a rich literature on recall from semantic memory [19,21], which indicates that in producing a list of items from a given category, the elements recalled first are generally more semantically relevant to the category itself. A natural refinement would therefore be to weight word entries in BOW according to their position in recalls. For instance, the occurrence of “sad” in the first position of a recall would receive a higher weight than if it occurred later. The different weights could be tailored so that initial words in a recall are more important in estimating DAS levels.

Rather than using arbitrary weightings, we adopted a cognitive network science approach [17]. Emotional words do not come from an unstructured system; they are the outcome of a search in human memory [21]. We modeled this memory as a network of free associations in order to embed words in a network structure and measure their relevance in memory through network metrics (cf. [19,21,44]). This approach allowed us to compute the network centrality of all words in a given position j and estimate the weight of that position as the average of such scores.

Our first step was to transform continuous free association data from [33] into a network where nodes represented words and links represented memory recall patterns (e.g., word A reminding at least two individuals of word B). Analogously to other network approaches [34,35,37,38] and due to the asymmetry in gathering cues and targets [39], we considered links as being undirected. This procedure led to a representation of semantic memory as a connected network containing 34,298 concepts and 328,936 links. On this representation we then computed semantic relevance through one local metric (degree) and one global metric (closeness) that had been adopted in previous cognitive inquiries [18,34,36]. Degree captures the number of free associations providing access to a given concept, whereas closeness centrality identifies how far on average a node is from all the other nodes it is connected to (cf. [17]). We checked that all K = 355 unique words from the ERT data were present in the network, then computed degrees and closeness centralities of all words occurring in a given recall position. We report the results in Figure 1.
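The network construction and the two centrality measures can be illustrated with a small, standard-library sketch. It is not the authors' implementation: the helper names, the toy cue-target responses, and the reading of closeness as the inverse mean shortest-path distance are assumptions made for illustration.

```python
from collections import Counter, deque

def build_network(responses, min_count=2):
    """Undirected graph keeping cue-target pairs given by >= min_count people."""
    counts = Counter(responses)          # (cue, target) -> number of individuals
    graph = {}
    for (cue, target), n in counts.items():
        if n >= min_count and cue != target:
            graph.setdefault(cue, set()).add(target)
            graph.setdefault(target, set()).add(cue)
    return graph

def distances_from(graph, source):
    """Breadth-first search: shortest path length from source to reachable nodes."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        for nb in graph[node]:
            if nb not in dist:
                dist[nb] = dist[node] + 1
                queue.append(nb)
    return dist

def degree(graph, node):
    """Number of distinct neighbours of a node."""
    return len(graph[node])

def closeness(graph, node):
    """Inverse of the mean shortest-path distance to all other reachable nodes."""
    dist = distances_from(graph, node)
    total = sum(dist.values())
    return (len(dist) - 1) / total if total > 0 else 0.0

# Toy cue-target responses (hypothetical); each tuple is one individual's answer
responses = ([("sad", "cry")] * 2 + [("sad", "happy")] * 3
             + [("happy", "joy")] * 2 + [("joy", "sun")])
g = build_network(responses)
```

Here the pair ("joy", "sun") was produced by a single individual, so "sun" never enters the network, mirroring the "at least two individuals" rule.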

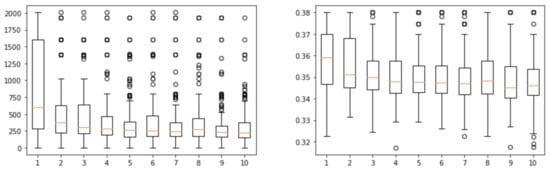

Figure 1.

Box plots of degree (left) and closeness centrality (right) of words in the ERT dataset for each position of recall (1 to 10).

Although there are several outliers, Figure 1 confirms previous findings [8] about the ERT data following memory recall patterns, with words in the first positions being more semantically prominent than subsequent ones. Since degree and closeness centrality did not seem to display qualitatively different behaviors, we used the median values for degree (see Figure 1, left) as position weights, after normalization. These weights were used to multiply/weight the respective entries of the BOW representation (the first K entries), so as to obtain a weighted BOW representation of recalls depending on both the ERT data and the network representation of semantic memory.
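A possible reading of this weighting scheme is sketched below. The exact normalization is not recoverable from the text, so scaling the median degrees to sum to one is an assumption, as are the function names, the toy degrees, and the choice to add up the weights when a word appears more than once in a recall.

```python
from statistics import median

def position_weights(recalls, degree_of):
    """Median degree of the words at each recall position, scaled to sum to 1."""
    n_positions = len(recalls[0])
    medians = [median(degree_of[r[j]] for r in recalls) for j in range(n_positions)]
    total = sum(medians)
    return [m / total for m in medians]

def weighted_bow(recall, vocab, weights):
    """BOW vector whose entries are scaled by the weight of the recall position."""
    vec = [0.0] * len(vocab)
    for j, word in enumerate(recall):
        vec[vocab[word]] += weights[j]
    return vec

# Hypothetical degrees and two toy recalls of length 3
degree_of = {"sad": 30, "happy": 40, "tired": 20, "calm": 10}
recalls = [["happy", "sad", "calm"], ["sad", "tired", "calm"]]
vocab = {"calm": 0, "happy": 1, "sad": 2, "tired": 3}
weights = position_weights(recalls, degree_of)
vec = weighted_bow(recalls[0], vocab, weights)
```

With these toy values the weights decrease with recall position, which is the pattern the box plots are reported to show for the real data.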

This procedure constitutes a first semantic embedding of ERT data in a cognitive network. We also performed a second semantic embedding of ERT recalls by considering them as walks over the structure of semantic memory. Like the approach in [20], we considered a list of recalled words as a network path [17], visiting nodes over the network structure and moving along shortest network paths [38]. This second embedding made it possible to attribute a novel set of distance-based features to each recall.

In particular, we focused on the following distance-based features:

- The coverage performed over the whole walk [36], that is, the total number of free associations traversed when navigating across a shortest path from the first recalled word to the second, then from the second to the third, and so on. This coverage equals the sum of all the network distances between adjacent words in a given recall;

- The graph distance entropy [45] of the whole walk, computed as the Shannon entropy for the occurrences of paths of any length within the walk;

- The total network distance between all nodes in a walk/recall and the target word “depression”. Similarly, we also considered the sum of distances between recalled words and “stress” (“anxiety”);

- The total network distance between all nodes in a walk/recall and the target emotional state “happy”. Similarly, we also considered the sum of distances between recalled words and the target emotion “sad” (“fear”).
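The distance-based features listed above can be sketched as follows. This is an illustration under stated assumptions, not the authors' code: the helper names and the toy chain network are hypothetical, and the entropy is interpreted here as the Shannon entropy of the distribution of shortest-path lengths between consecutive recalled words, which is only one possible reading of the description.

```python
from collections import Counter, deque
from math import log2

def shortest_distance(graph, source, target):
    """Length of the shortest path between two nodes (breadth-first search)."""
    if source == target:
        return 0
    seen, queue = {source}, deque([(source, 0)])
    while queue:
        node, d = queue.popleft()
        for nb in graph[node]:
            if nb == target:
                return d + 1
            if nb not in seen:
                seen.add(nb)
                queue.append((nb, d + 1))
    raise ValueError(f"{target!r} is not reachable from {source!r}")

def walk_features(graph, recall, targets):
    """Coverage, distance entropy, and total distance to each target word."""
    steps = [shortest_distance(graph, a, b) for a, b in zip(recall, recall[1:])]
    coverage = sum(steps)
    # Shannon entropy of how often each step length occurs along the walk
    freq = Counter(steps)
    entropy = -sum((c / len(steps)) * log2(c / len(steps)) for c in freq.values())
    to_target = {t: sum(shortest_distance(graph, w, t) for w in recall)
                 for t in targets}
    return coverage, entropy, to_target

# Toy chain network (hypothetical): sad - cry - tired - calm - happy
graph = {"sad": {"cry"}, "cry": {"sad", "tired"}, "tired": {"cry", "calm"},
         "calm": {"tired", "happy"}, "happy": {"calm"}}
coverage, entropy, to_target = walk_features(
    graph, ["sad", "tired", "calm"], ["happy", "sad"])
```

Appending these values to the K bag-of-words entries would give the N-dimensional vector described in the data-cleaning section.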

We considered these metrics following previous investigations of semantic memory, affect, and personality traits. Coverage on cognitive networks has been found to be an important metric for predicting creativity levels with recall tasks [16,20]. Higher coverage and graph distance entropy can indicate that sets of responses are more scattered across the structure of semantic memory or that they oscillate between positive and negative emotional states, with potential repercussions over reduced emotion regulation and increased DAS levels [8]. Since shortest network distance on free association networks was found to predict semantic similarity [37,38], we selected semantic distances between recalls and target clinical states to capture the relatedness of responses to DAS levels. The selection of “happy” and “sad” followed previous results from the circumplex model of affect [11], a model mapping emotional states according to the dimensions of pleasantness and arousal. In the circumplex model, “happy” and “sad” are opposite emotional states and their relatedness to recalls can provide additional information for detecting the presence or absence of states such as DAS. We also included “fear”, as it is a common symptom of DAS disorders [8].

The validation of these distances as useful features for discriminating between DAS levels is presented in the Results section.

3.5. Machine Learning Approaches

After having built weighted and unweighted BOW representations of ERT data, enriched with distance-based measures, we tested them within three commonly adopted machine learning regressors.

We tested the following algorithms [42,46]: (i) decision tree, (ii) multilayer perceptron (MLP), and (iii) recurrent neural network (Long Short-Term Memory [LSTM]). Decision trees can predict target values by learning decision rules on how to partition a dataset according to its features in order to reduce the total error between predictions and observed values (cf. [23]). The MLP is inspired by biological neural networks [47] and consists of multiple computing units organized in input, hidden, and output layers. Each unit takes a linear combination of features and produces an output according to an activation function. Combinations are fixed according to weights that are updated over time so as to minimize the error between the final and target outputs. This procedure, known as backpropagation, propagates the error backwards through the neural network [46]. LSTM networks feature feed-forward and feedback loops that affect hidden layers recurrently over training, as is typical of deep learning approaches. Additionally, LSTMs feature specific nodes that remember outputs over arbitrary time intervals; this can enhance training by reducing the occurrence of vanishing gradients and the risk of getting stuck in local minima.

3.6. Model Training

We trained decision trees with scikit-learn in Python [42]. We used a maximum tree depth of 8 to reduce overfitting and applied a squared error optimization metric for identifying tree nodes. For MLPs we selected an architecture using two hidden layers with 25 neurons each. A dropout rate of 20% between weight updates in the second hidden layer was fixed to reduce overfitting. The number of layers and neurons were fixed after fine-tuning over multiple iterations using the whole dataset of 200 data points and a fourfold cross-validation. A rectified linear activation function was selected to keep the output positive at each layer, as is the case with DAS scores. Training was performed via backpropagation [46]. For the LSTM architecture, we used two hidden layers, each featuring four cells and a dropout rate of 20% to reduce overfitting. A batch gradient descent algorithm was used to train the LSTM network [46].

Training was performed by splitting the dataset of 200 ERT recalls into training (75%) and test sets (25%), according to a fourfold cross-validation. In the regression task of estimating DAS levels from the test set after training, we measured performance in terms of mean squared error (MSE) loss and Pearson’s correlation R. Vectors of features underwent an L2 regularization to further reduce the impact of large dimensionality and sparseness during regression. Performance with different sets of features was recorded so we could apply the best-performing model to text analysis.
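The evaluation protocol (fourfold cross-validation scored with MSE and Pearson's R) can be sketched independently of the regressor. The snippet below is a simplified illustration: the interleaved fold assignment, the pooling of held-out predictions before scoring, and the one-feature least-squares placeholder model are all assumptions, not details taken from the study.

```python
from math import sqrt

def pearson_r(x, y):
    """Pearson's correlation coefficient between two equal-length sequences."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)

def mse(y_true, y_pred):
    """Mean squared error between observed and predicted scores."""
    return sum((a - b) ** 2 for a, b in zip(y_true, y_pred)) / len(y_true)

def fourfold_cv(X, y, fit, predict, k=4):
    """Hold out each fold once (25% test, 75% train when k = 4), pool predictions."""
    n = len(X)
    pooled = [None] * n
    for fold in range(k):
        test_idx = [i for i in range(n) if i % k == fold]
        train_idx = [i for i in range(n) if i % k != fold]
        model = fit([X[i] for i in train_idx], [y[i] for i in train_idx])
        for i in test_idx:
            pooled[i] = predict(model, X[i])
    return mse(y, pooled), pearson_r(y, pooled)

# Placeholder regressor (hypothetical): one-feature least squares through the origin
def fit(X, y):
    return sum(x[0] * t for x, t in zip(X, y)) / sum(x[0] ** 2 for x in X)

def predict(slope, x):
    return slope * x[0]

X = [[float(i)] for i in range(1, 21)]
y = [2.0 * x[0] for x in X]          # noiseless toy scores
loss, r = fourfold_cv(X, y, fit, predict)
```

Any regressor exposing comparable `fit` and `predict` callables, such as a decision tree or an MLP, could be swapped in for the placeholder.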

3.7. Application of DASentimental to Text

Texts are sequences of words, albeit in more articulated forms than sequential recalls from semantic memory. Nonetheless, word co-occurrences in texts are not independent from semantic memory structure itself. In fact, a growing body of literature in distributional semantics adopts co-occurrences for predicting free association norms [48]. We adopted an analogous approach and used the best-performing model from the ERT data to estimate DAS levels in texts based on their sequences of emotional words. DASentimental can thus be considered a semi-supervised learning approach, trained on psychologically validated recalls and applied to previously unseen sequences of emotional words in texts.

To enhance overlap between the emotional jargon of text and the lexicon of K = 355 unique emotional words in the ERT dataset, we implemented a text parser in spaCy, identifying tokens in texts and mapping them to semantically related items in the ERT lexicon. This semantic similarity was obtained as a cosine similarity between pre-trained word2vec embeddings; it was therefore independent from free association distances.

As seen in Algorithm 1, for every non-stopword [49] of every sentence in a text, the parser identifies nouns, verbs, and adjectives and maps them onto the most similar concept (if any) present in the ERT/DASentimental lexicon. This procedure can skip stopwords and enhance the attribution of different word forms and tenses to their corresponding base form from the ERT dataset, which possesses less linguistic variability than does text due to its recall-from-memory structure. This mapping helped ensure that DASentimental does not miss different forms of words or synonyms from texts, and therefore ultimately enhances the quality of the regression analysis. Checking for item similarity in network neighborhoods drastically reduced computation times, thereby making DASentimental more scalable for volumes of texts larger than the 142 notes used in this first study.

Algorithm 1: Semantic parser identifying emotional words from text that can be mapped onto the emotional lexicon of DASentimental.

Input: Text from suicide note

Output: Vector representation of emotional content, selected words

    for each sentence in suicide note do
        for each word in sentence do
            if word is negative:
                isNeg = True
            if word not in stopwords and word.pos in ['NOUN', 'ADJ', 'ADV', 'VERB']:
                if isNeg is True:
                    find similar words to the current word's antonym in ERT words
                    if max similarity ≥ 0.5:
                        add most similar word to selected words and update vector
                        isNeg = False
                else:
                    find similar words to the current word in ERT words
                    if max similarity ≥ 0.5:
                        add most similar word to selected words and update vector
        end for
    end for

3.8. Handling Negations in Texts

Using spaCy also meant we could track negation in a sentence and, for any emotional words in the same sentence, substitute in their antonym to be checked (instead of the non-negated word). A similar approach was adopted in studies with cognitive networks [50]. For instance, in the sentence “I am not happy”, the word “happy” was not directly checked for similarity against the ERT lexicon. Instead, the antonym of “happy” (“sad”) was found using spaCy and its similarity was checked instead. Handling negations is a key aspect of processing texts. Since more elaborate forms of meaning negations are present in language, this can be considered a first, simple approach to accounting for semantic negations.
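The matching and negation logic can be made concrete with a self-contained sketch. It deliberately avoids spaCy and word2vec: the 2-D vectors, the antonym table, the stopword and negation sets, and the function names are hypothetical stand-ins, and part-of-speech filtering is omitted for brevity. Only the 0.5 similarity threshold and the antonym substitution after a negation are taken from the text.

```python
from math import sqrt

def cosine(u, v):
    """Cosine similarity between two vectors of equal length."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (sqrt(sum(a * a for a in u)) * sqrt(sum(b * b for b in v)))

def map_to_lexicon(word, lexicon, vectors, threshold=0.5):
    """Return the most similar lexicon word if its similarity reaches the threshold."""
    if word not in vectors:
        return None
    best = max(lexicon, key=lambda w: cosine(vectors[word], vectors[w]))
    return best if cosine(vectors[word], vectors[best]) >= threshold else None

def select_words(tokens, lexicon, vectors, antonyms, negations, stopwords):
    """Scan one sentence, swapping a word for its antonym after a negation."""
    selected, is_neg = [], False
    for tok in tokens:
        if tok in negations:
            is_neg = True
            continue
        if tok in stopwords:
            continue
        candidate = antonyms.get(tok) if is_neg else tok
        match = map_to_lexicon(candidate, lexicon, vectors) if candidate else None
        if match:
            selected.append(match)
            is_neg = False   # the negation has been consumed
    return selected

# Toy embeddings and antonym table (hypothetical stand-ins for word2vec / spaCy)
vectors = {"happy": [1.0, 0.1], "glad": [0.9, 0.2],
           "sad": [-1.0, 0.1], "table": [0.0, 1.0]}
lexicon = ["happy", "sad"]
antonyms = {"happy": "sad", "glad": "sad", "sad": "happy"}
out = select_words(["i", "am", "not", "glad"], lexicon, vectors, antonyms,
                   negations={"not"}, stopwords={"i", "am"})
```

In this toy run, "not glad" is mapped to the lexicon word "sad", while an unrelated word such as "table" falls below the threshold and is dropped.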

3.9. Psycholinguistic Validation of DASentimental for Text Analysis

In this first study we used suicide letters as a clinical corpus investigated in previous works [28,50] and featuring narratives produced by individuals affected by pathological levels of distress. Unfortunately but understandably, the corpus did not feature annotations expressing the levels of depression, anxiety, and stress felt by the authors of the letters.

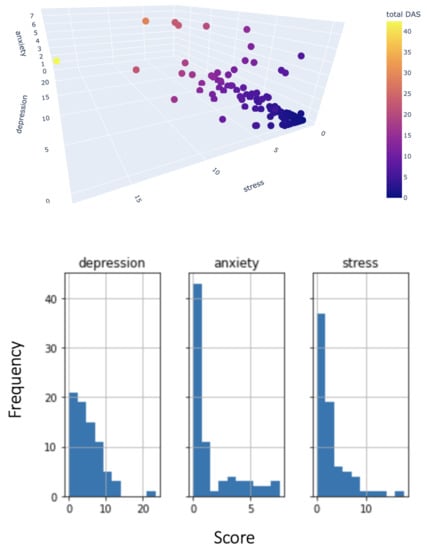

We also performed emotional profiling [51] over the same set of suicide notes, this time relying on another psycholinguistic set of affective norms, the NRC VAD Lexicon [10]. Analogously to the emotional profiling implemented in [30] to extract key states from textual data, we used the NRC VAD Lexicon to provide valence and arousal scores to lemmatized words occurring in suicide notes. We also applied DASentimental to all suicide notes and plotted the resulting distributions of depression (anxiety, stress) scores. A qualitative analysis of the distributions highlighted tipping points, which were used for partitioning the data into letters indicating high and low levels of estimated depression (anxiety, stress). Tipping points were selected instead of medians because most notes elicited no estimated DAS levels, thus producing imbalanced partitions. The tipping points were 6 for depression, 2 for anxiety, and 4 for stress. As reported in Figure 2 (bottom), above these tipping points the distributions exhibited cut-offs or abrupt changes.

Figure 2.

Top: 3D visualization of depression, anxiety, and stress in suicide notes as estimated by DASentimental. Bottom: Histograms of DAS levels per pathological construct.

We then compared the median valence and arousal of words occurring in high and low partitions of the suicide note corpus. Our exploration was guided by the circumplex model of affect [11], which maps “depression” as a state with negative valence and low arousal, “anxiety” as a state with negative valence and high arousal, and “stress” as a state in between “anxiety” and “depression”. For every partition, we expected letters tagged as “high” by DASentimental to feature more extreme language.

4. Results

This section reports on the main results of the manuscript. First, we quantify semantic distances and their relationships with DAS levels. Second, we outline a comparison of different learning methods. Third, within the overall best-performing machine learning model we compare performance of the binary and weighted BOW representations of recalls, using only the embedding coming from network centrality. We then provide key results about several models using different combinations of network distances, further enriching the ERT data with features coming from network navigation of semantic memory (see Methods). We conclude by applying the best-performing model to the analysis of suicide letters and presenting the results of the psycholinguistic validation of DASentimental estimations.

4.1. Semantic Distances Reflect Patterns of Depression, Anxiety, and Stress

Semantic distances in the network representation of semantic memory correlated with DAS levels. In other words, the emotional words produced by individuals tended to be closer to or further from targets such as “depression” and “anxiety” (see Methods) according to the DAS levels recorded via the psychometric scale. We found a Pearson’s correlation coefficient R between depression levels and total semantic distance between recalls and “depression” equal to −0.341 (N = 200, p < 0.0001). This means that people affected by higher levels of depression tended to recall and produce emotional words that were semantically closer, and thus more related [37,38], to the concept “depression” in semantic memory. We found similar patterns for “anxiety” and anxiety levels (R = −0.218, N = 200, p = 0.002) and for “stress” and stress levels (R = −0.357, N = 200, p < 0.0001). These findings constitute quantitative evidence that semantic distances from these target concepts can be useful features for predicting DAS levels. For the happy/sad emotional dimension, we found that people with higher depression/anxiety/stress levels tended to produce concepts closer to “sad” (R < −0.209, N = 200, p < 0.001). Only people affected by lower depression levels tended to recall items closer to “happy” (R = 0.162, N = 200, p = 0.02). No statistically significant correlations with distances from “happy” were found for anxiety and stress levels. These results indicate that the sad/happy dimension might be particularly relevant for estimating depression levels. At a significance level of 0.05, no other correlations were found. Because there might be additional correlations between different features that are exploitable by machine learning, we further test the relevance of distances within the trained regression models.
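The quantity being correlated here can be sketched as follows: the total shortest-path distance between a recall list and a target concept, followed by a Pearson correlation against questionnaire scores. The network edges, recall lists, and scores below are invented for illustration, and treating the free-association network as undirected and unweighted is an assumption of this sketch.

```python
from collections import deque
from math import sqrt

# Toy free-association network (hypothetical edges, treated as undirected).
TOY_EDGES = [
    ("depression", "sad"), ("sad", "tired"), ("sad", "lonely"),
    ("tired", "sleep"), ("sleep", "calm"), ("calm", "happy"), ("happy", "joy"),
]


def build_graph(edges):
    """Adjacency sets for an undirected graph."""
    graph = {}
    for a, b in edges:
        graph.setdefault(a, set()).add(b)
        graph.setdefault(b, set()).add(a)
    return graph


def distances_from(graph, source):
    """Breadth-first search: shortest path length from source to each reachable node."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        for neighbour in graph[node]:
            if neighbour not in dist:
                dist[neighbour] = dist[node] + 1
                queue.append(neighbour)
    return dist


def total_distance(graph, recalls, target):
    """Sum of shortest-path lengths between every recalled word and the target."""
    dist = distances_from(graph, target)
    return sum(dist[word] for word in recalls)


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equally long sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


graph = build_graph(TOY_EDGES)
recall_lists = [["sad", "lonely", "tired"], ["tired", "sleep", "calm"], ["calm", "happy", "joy"]]
depression_scores = [30, 14, 2]  # made-up questionnaire scores
totals = [total_distance(graph, recalls, "depression") for recalls in recall_lists]
print(totals, pearson_r(totals, depression_scores))
```

In this toy example, lists that sit closer to “depression” belong to the higher made-up scores, so the coefficient is negative, which is the direction of the correlations reported above.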

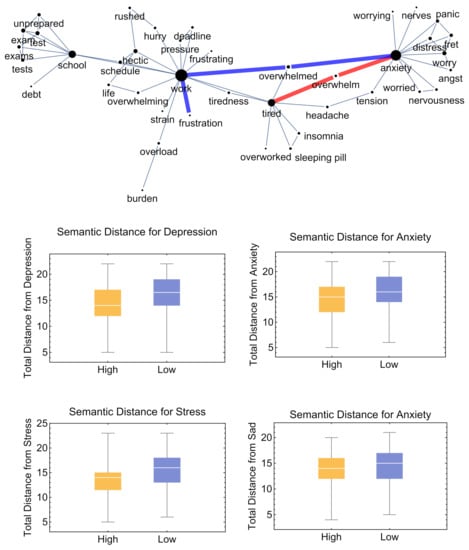

The relevance of semantic distances for predicting DAS levels can also be visualized by partitioning the ERT dataset into individuals with higher-than-median or lower-than-median depression (anxiety, stress) levels. Figure 3 (bottom) shows that the two groups produced distributions of network distances with different medians (Kruskal–Wallis test, p < 0.001). Individuals with higher levels of depression (anxiety, stress) tended to recall items semantically closer to “depression” (“anxiety”, “stress”), which further validates our correlation analysis and our adoption of network distances as features for regression.

Figure 3.

Top: Toy representation of semantic memory as a network of free associations. Semantic network distances from recalls (e.g., “tired”, “frustration”) to “anxiety” are highlighted. This visualization illustrates that cognitive networks provide structure to conceptual organization in the mental lexicon and enable measurements such as semantic relatedness in terms of shortest paths/network distance. Bottom: Total network distances between recalls and individual concepts (“anxiety”, “depression”, “stress”, and “sad”) between people with high and low levels of DAS.

4.2. Performance of Different Machine Learning Algorithms

As reported in the Methods section, we trained three machine learning algorithms on the ERT data: (i) decision trees, (ii) LSTM recurrent neural networks, and (iii) an MLP. Regardless of whether the binary or embedded BOW representation of ERT recalls was used, and regardless of hyperparameter tuning, neither decision trees nor LSTM networks learned from the dataset. This might be due to the relatively small size of the sample (200 recalls). It must also be noted that decision trees try to split the data based on a single feature value, whereas in the current case DAS levels might depend on the co-existence of emotional words. For instance, the sequence “love, broken” might portray love in a painful context, creating a codependence of features that would be difficult for decision trees to account for. The MLP did learn relationships from the data; its performance is outlined in the next section in terms of MSE loss and R² between estimations and validation values of DAS.

4.3. Embedding BOW in Semantic Memory Significantly Boosts Regression Performance

Table 1 reports the average performance of the MLP regressor over binary and weighted representations of word recalls from the ERT. In our approach, weights come from the median centralities of words in the network representation of semantic memory enabled by free associations [19,38,39] (see Methods).

Table 1.

Average losses and estimators for the binary and weighted versions of Bag-of-Words (BOW) representations of ERT recalls. Weights were fixed according to the median centralities of words in each position of the ERT data (see Methods). Error margins are computed over 10 iterations and indicate standard deviations. MSE: mean squared error.

The binary BOW representation of recalls achieved nontrivial regression results (R² higher than 0) for the estimation of depression levels, but not for anxiety or stress estimation. Enriching the same vector representation with the weights from cognitive network science drastically boosted performance, with R² ranging between 0.15 and 0.40 (i.e., R between 0.38 and 0.63). These results indicate that the first items recalled from semantic memory possess more information about the DAS levels of a given individual. In other words, there is additional structure within the ERT data that we capitalize on by using the weighted representation.
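To make the difference between the two representations concrete, the sketch below builds a binary BOW vector and a position-weighted one. It follows one possible reading of the Table 1 caption, namely that each recall position receives the median degree of the words recalled at that position across participants; the degrees, vocabulary, and recall lists are hypothetical, and the exact weighting used in DASentimental may differ (for example, in normalization).

```python
from statistics import median

# Hypothetical degrees (number of free associations) for a tiny vocabulary.
TOY_DEGREE = {"sad": 40, "tired": 25, "happy": 60, "calm": 20, "angry": 30}
VOCAB = sorted(TOY_DEGREE)  # ['angry', 'calm', 'happy', 'sad', 'tired']


def position_weights(recall_lists, degree):
    """Median degree of the words recalled at each list position across participants."""
    n_positions = max(len(recalls) for recalls in recall_lists)
    weights = []
    for pos in range(n_positions):
        degrees_at_pos = [degree[r[pos]] for r in recall_lists if len(r) > pos]
        weights.append(median(degrees_at_pos))
    return weights


def binary_bow(recalls, vocab=VOCAB):
    """1 if the word was recalled, 0 otherwise."""
    return [1 if word in recalls else 0 for word in vocab]


def weighted_bow(recalls, weights, vocab=VOCAB):
    """Replace each 1 of the binary vector with the weight of the word's recall position."""
    vector = [0.0] * len(vocab)
    for pos, word in enumerate(recalls):
        vector[vocab.index(word)] = float(weights[pos])
    return vector


recall_lists = [
    ["sad", "tired", "angry"],
    ["happy", "calm", "sad"],
    ["happy", "sad", "tired"],
]
weights = position_weights(recall_lists, TOY_DEGREE)
print(weights)                                 # [60, 25, 30]
print(binary_bow(recall_lists[0]))             # [1, 0, 0, 1, 1]
print(weighted_bow(recall_lists[0], weights))  # [30.0, 0.0, 0.0, 60.0, 25.0]
```

Under this reading, the weighted vector keeps the same non-zero entries as the binary one but also encodes the order in which words were recalled, which the binary vector discards.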

4.4. Comparison of Model Performance Based on Cognitive Network Features

Table 2 reports model performance when different network distances were plugged in together with the weighted BOW.

Table 2.

Average losses and estimators for models employing different features within the same neural network architecture. All models include weighted representations of words and include (in order of appearance in table): (i) all network distances/conceptual entries (from “anxiety”, “depression”, “stress”, “sad”, “happy”, and “fear”, together with total emotional coverage (Cover.)), (ii) only distances from “depression”, “stress”, and “anxiety” with coverage and graph distance entropy (Entr.), (iii) only distances from “happy” and “sad” with coverage and entropy, (iv) only coverage and entropy, (v) all other distances, coverage, and entropy without distances from “fear”, and (vi) only distances from “fear”. Error margins are computed over 10 iterations and indicate standard deviations. BOW: bag-of-words, MSE: mean squared error.

Considering all semantic distances was beneficial for boosting regression results, reducing MSE loss and raising R² up to 0.49 for depression levels (R = 0.7), 0.20 for anxiety levels (R = 0.44), and 0.27 for stress levels (R = 0.52). The artificial intelligence trained here correlates as strongly as the ERT metric introduced by Li and colleagues [8], which relied on additional valence data attributed to emotions by participants and was powered by traditional statistical methods not based on machine learning. Since additional valence data is not available in texts, we did not use it to train DASentimental; nevertheless, we achieved analogous prediction correlations to Li and colleagues. We therefore considered DASentimental to be a state-of-the-art tool for assessing depression, anxiety, and stress levels from emotional recall data, and proceeded to the next step: using it for text analysis.
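The correspondence between the two sets of figures quoted above can be checked with a short calculation, assuming that each R is simply the positive square root of the corresponding coefficient of determination (R²). The dictionary name is illustrative.

```python
from math import sqrt

# Best coefficients of determination quoted in the text for the full model.
r_squared = {"depression": 0.49, "anxiety": 0.20, "stress": 0.27}

# Assumption: R is the positive square root of R^2.
r = {construct: sqrt(value) for construct, value in r_squared.items()}

for construct, value in r.items():
    print(f"{construct}: R^2 = {r_squared[construct]:.2f} -> R = {value:.3f}")
```

The square roots agree with the quoted correlations up to rounding; small discrepancies in the last digit are expected because the R² values are themselves rounded.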

Table 2 also identifies the relevance of different distance-based features for predicting DAS levels. We found that the addition of coverage was beneficial for boosting prediction performance compared to the weighted BOW only; this might be due to nonlinear effects that cannot be captured by the previous regression analysis. Adding happy/sad distances to coverage worsened prediction results for “depression” and, in general, produced a lower boost than did adding distances from depression/anxiety/stress to the unweighted BOW representation. Adding all these distances introduced feature correlations that the MLP exploited to achieve higher performance.

Furthermore, Table 2 reports crucial results for exploring how “fear” relates to the estimation of DAS levels. The semantic distances from fear produced a boost in predicting anxiety, indicating that fear is an important emotion for predicting anxiety levels. No boost was recovered for other DAS constructs. However, because these distances are correlated with others, the two models using “fear” and all other concepts performed as well as the simpler model without “fear”. We therefore selected the model based on the weighted BOW representation plus coverage/entropy and all other distances except from “fear” as the final model of DASentimental.

4.5. Analysis of Suicide Notes

According to the World Health Organization (See: https://www.who.int/news-room/fact-sheets/detail/suicide; last accessed 5 October 2021), every year more than 700,000 people commit suicide. There are usually multiple reasons behind a person’s decision to take their life, and one reason can lead to others: Minor incidents may accumulate over time, increasing mental distress and the appearance of depression, anxiety, and/or stress. Eventually, these may become too overwhelming to endure, and the mental pressure may trigger the decision to end one’s own life [5].

Most people who commit suicide do not leave behind a note; those who do provide vital information. Written by individuals who have reached the limit of emotional distress, suicide notes are first-hand evidence of the vulnerable mindset of emotionally distraught individuals [28,29]. Analyzing these notes can offer important insights into the mental states of their authors.

To gather such insights, we applied the best-performing version of DASentimental (with weighted BOW and semantic distances) to the corpus of genuine suicide notes curated by Schoene and Dethlefs [28] and investigated in other recent studies [24,29]. This application constitutes the second part of our semi-supervised approach to text analysis, in which DASentimental predicts DAS levels of non-annotated text from its semantically enriched sequences of emotional words (see Methods).

Results are reported in Figure 2. We registered strong positive correlations between estimated DAS levels (Pearson’s coefficients of 0.35, 0.50, and 0.59 for the three pairs of constructs, each with p < 0.0001). These correlations indicate that suicide notes tended to feature similar levels of distress coming from depression, anxiety, and stress, although with different intensities and frequencies, as evident from the qualitative analysis of distributions in Figure 2 (bottom).

Using valence and arousal of words expressed in suicide letters, we performed an additional validation of the results of DAS through the circumplex model of affect [11] (see Methods). By partitioning the notes according to high/low levels of depression (anxiety, stress), we compared the valence (and arousal) of all words mentioned in suicide letters from each partition. At a significance level of 0.05, suicide notes marked by DASentimental as showing higher depression levels were found to contain a lower median valence than notes marked as showing lower levels of depression (Kruskal–Wallis test, KS = 6.889, p = 0.009). Analogously, suicide notes marked by DASentimental as showing higher anxiety levels contained a higher median arousal than notes marked as showing lower anxiety levels (Kruskal–Wallis test, KS = 3.2014, p = 0.007). No differences for stress were found.
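The group comparison used here can be sketched in plain Python. The function below computes the tie-corrected Kruskal–Wallis H statistic for two groups and its p-value from the chi-square distribution with one degree of freedom; the valence values are invented for illustration and are not taken from the corpus or from the NRC VAD Lexicon.

```python
from math import erfc, sqrt


def average_ranks(values):
    """Rank values from 1 to N, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def kruskal_wallis_two_groups(high, low):
    """Tie-corrected H statistic and p-value for two independent samples.

    Assumes the pooled values are not all identical.
    """
    pooled = list(high) + list(low)
    n = len(pooled)
    ranks = average_ranks(pooled)
    rank_sum_high = sum(ranks[: len(high)])
    rank_sum_low = sum(ranks[len(high):])
    h = 12 / (n * (n + 1)) * (
        rank_sum_high ** 2 / len(high) + rank_sum_low ** 2 / len(low)
    ) - 3 * (n + 1)

    # Tie correction: divide by 1 - sum(t^3 - t) / (N^3 - N) over groups of tied values.
    counts = {}
    for value in pooled:
        counts[value] = counts.get(value, 0) + 1
    correction = 1 - sum(c ** 3 - c for c in counts.values()) / (n ** 3 - n)
    h = max(h / correction, 0.0)

    # With two groups, H is compared with a chi-square law with 1 degree of freedom,
    # whose survival function is erfc(sqrt(h / 2)).
    p_value = erfc(sqrt(h / 2))
    return h, p_value


# Made-up word valences for notes tagged as high vs. low depression.
high_depression_valence = [0.20, 0.25, 0.30, 0.35, 0.40]
low_depression_valence = [0.55, 0.60, 0.65, 0.70, 0.75]
h_stat, p = kruskal_wallis_two_groups(high_depression_valence, low_depression_valence)
print(f"H = {h_stat:.3f}, p = {p:.4f}")
```

In practice a library routine such as `scipy.stats.kruskal` would normally be preferred; the hand-written version is shown only to make the computation explicit.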

Letters with lower depression levels contained more positive jargon, including mentions of loved ones and “relief” at ending the pain and starting a new chapter. Some notes even included emotionless instructions about relatives and assets to be taken care of. Letters with higher depression levels more frequently mentioned jargon relative to “pain” and “boredom”—this imbalance in frequency is captured by the difference in median valence noted above. In the circumplex model, depression lives in a space with more negative valence than neutral/emotionless language; thus the statistically significant difference in valence indicates that DASentimental is able to identify the negative dimension associated with depression.

A similar pattern was found for anxiety, with letters marked as “high anxiety” by DASentimental featuring more anxious jargon relative to pain and suffering. In the circumplex model, anxiety lives in a space with higher arousal and alarm than neutral/emotionless language; therefore, the difference in median arousal between high- and low-anxiety letters indicates that DASentimental is able to identify the alarming and arousing dimensions associated with anxiety.

The absence of differences for stress might indicate that DASentimental is not powerful enough to detect these differences; if this is the case, further research and larger datasets are required. Nonetheless, the signals of enhanced negativity and alarm detected by DASentimental lay the foundation for detecting stress, anxiety, and depression in texts via emotional recall data.

5. Discussion

In this study, we trained a neural network, DASentimental, to predict depression, anxiety, and stress using sequences of emotional words embedded in a cognitive network representation of semantic memory. DASentimental achieved cross-validated predictions for depression (R = 0.7), anxiety (R = 0.44), and stress (R = 0.52) in line with previous approaches using additional valence data [8]. This state-of-the-art performance suggests that even without explicitly encoding valence ratings for each word, DASentimental is able to achieve good explanatory power. This success stems from the cognitive embedding of recalled concepts—that is, concepts at shorter/longer network distance from key ideas such as “depression”, “sad”, and “happy” that are commonly used to describe emotional distress [4,7].

Our findings suggest the importance of considering network distances between concepts in semantic memory in investigations of emotional distress. This insight provides further support to studies showing how network distances and connectivity can predict other cognitive phenomena, including creativity levels [16,18,20], semantic distance [37,38], and word production in clinical populations [36].

We noticed a significant boost in performance (+210% in R² on average) when embedding BOW representations of recall lists (see Methods) in a cognitive network of free associations [33]. A nearly tenfold boost was observed for predicting stress and anxiety, which are considerably complex distress constructs [14,40]. Our results underline the need to tie together artificial intelligence/text-mining [43] and cognitive network science [15] to achieve cutting-edge predictors in next-generation cognitive computing.

We applied DASentimental to a collection of suicide notes as a case study. Most suicide notes in the corpus [28] indicated low levels of depression, anxiety, and stress. This suggests that despite the decision to terminate their own life, the writers of suicide notes tried to avoid overwhelming their last messages with negative emotions; this is compatible with previous studies [24]. One observation gained from a close reading of suicide notes is that many writers expressed their love and gratitude to their significant others, and used euphemisms when referencing the act of suicide (e.g., “I can’t take this anymore”). Therefore, although a reader might interpret a typical suicide note as being filled with sorrow, their perception is influenced by the knowledge that the writer eventually killed themself.

Limitations and Future Research

A key limitation of DASentimental is that it cannot account for how context shifts and forges meaning and perceptions in language [43]. Furthermore, DASentimental cannot capture how the writers actually felt before, during, or after writing those last letters. Instead, DASentimental quantifies the emotions that are explicitly expressed by the authors, since it is trained on ERT data which includes expressions of emotions without context. Future research might better detect contextual knowledge through natural language processing, which has been successfully used on contextual features such as medical reports [52] and speech organization [27] to detect the risk of psychosis in clinical populations. Alternatively, community detection in feature-rich networks could provide information about the different meanings and contextual interpretations provided to concepts in cognitive networks (e.g., the different meanings of “star” [53]). Last but not least, contextual features might be detected through meso-scale network metrics such as entanglement, which was recently shown to efficiently identify nodes critical for information diffusion in a variety of techno-social networks [54].

Subjective valence ratings such as those adopted in the original ERT study [8] are not available in texts; DASentimental therefore uses cognitive network distances [45] to target words instead. Despite this difference, DASentimental’s performance is on par with the work of Li and colleagues [8]. This has two implications: First, a stronger model might use distances and valences when focusing only on fluency tasks. Second, for text analysis and even cognitive social media mining [55], DASentimental’s machine learning pipeline could be used to detect any kind of target emotion (e.g., “surprise” or “love”). Furthermore, DASentimental could be used on future datasets relating DAS levels with ERT data and demographics to explore how age, gender, or physical health can influence depression, anxiety, and stress detection.

In this first study we relied on machine learning to spot relationships between emotional recall and depression, anxiety, and stress levels. However, other statistical approaches might be employed in the future—the most promising is LASSO for semi-supervised regression [56].

As a future research direction, DASentimental could be used to investigate the cultural evolution of emotions. Emotions and their expressions are shaped by culture and learned in social contexts [57,58] and media movements [49]. What people can feel and express depends on their surrounding social norms. Previous studies have shown that large historical corpora can be used to make quantitative inferences on the rise and fall of national happiness [58]. Similarly, DASentimental could be applied to track the change of explicit expression of depression, anxiety, and stress over history, quantified through the emotions of modern individuals. This would highlight changes in norms towards emotional expression and historical events (e.g., “pandemic”), thus complementing other recent approaches in cognitive network science [9,30,59,60,61] and sentiment/emotional profiling [51,55,62,63] by bringing to the table a quantitative, automatic quantification of depression, anxiety, and stress in texts.

6. Conclusions

This work combines cognitive network science and artificial intelligence to introduce a semi-supervised machine learning model, DASentimental, that extracts depression, anxiety, and stress from written text. Cognitive data and networked representations of semantic memory powered our approach. We trained the model to spot how sequences of recalled emotion words by N = 200 individuals correlated with their responses to the DASS-21. Weighting responses according to their network centrality (degree) and measuring recalls as network walks significantly boosted regression results up to state-of-the-art approaches (cf. [8]). This quantitative approach not only makes it possible to assess emotional distress in texts (e.g., detecting the depression level expressed in a letter), but also provides a quantitative framework for testing how semantic distances of emotions correlate with distress and mental well-being. For these reasons, DASentimental will appeal to clinical researchers interested in measuring distress levels in texts in ways that are interpretable under the framework of semantic network distances (cf. [16,20,37,38]).

Author Contributions

Conceptualization, A.F. and M.S.; methodology, all authors; software, A.F.; validation, all authors; formal analysis, A.F. and M.S.; investigation, A.F. and M.S.; writing—original draft, review and editing, all authors; visualization, A.F.; supervision, M.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

The study was conducted according to the guidelines of the Declaration of Helsinki over datasets already released in previous ethically approved studies.

Informed Consent Statement

Since no novel data was generated from this study, no informed consent was required.

Data Availability Statement

We prepared a Python package containing the best model of DASentimental implemented and described in this paper. The package is available on GitHub: https://github.com/asrafaiz7/DASentimental (accessed on 21 November 2021). If using DASentimental for estimating anxiety, stress and depression levels in texts, please cite this manuscript.

Acknowledgments

We acknowledge Deborah Ain for kindly providing comments and help with manuscript editing.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Lovibond, P.F.; Lovibond, S.H. The structure of negative emotional states: Comparison of the Depression Anxiety Stress Scales (DASS) with the Beck Depression and Anxiety Inventories. Behav. Res. Ther. 1995, 33, 335–343.

- Russell, J.A.; Barrett, L.F. Core affect, prototypical emotional episodes, and other things called emotion: Dissecting the elephant. J. Personal. Soc. Psychol. 1999, 76, 805.

- O’Driscoll, C.; Buckman, J.E.; Fried, E.I.; Saunders, R.; Cohen, Z.D.; Ambler, G.; DeRubeis, R.J.; Gilbody, S.; Hollon, S.D.; Kendrick, T.; et al. The importance of transdiagnostic symptom level assessment to understanding prognosis for depressed adults: Analysis of data from six randomised control trials. BMC Med. 2021, 19, 1–14.

- Akin, A.; Çetın, B. The Depression Anxiety and Stress Scale (DASS): The study of Validity and Reliability. Educ. Sci. Theory Pract. 2007, 7, 260–268.

- Conejero, I.; Olié, E.; Calati, R.; Ducasse, D.; Courtet, P. Psychological pain, depression, and suicide: Recent evidences and future directions. Curr. Psychiatry Rep. 2018, 20, 1–9.

- Abend, R.; Bajaj, M.A.; Coppersmith, D.D.; Kircanski, K.; Haller, S.P.; Cardinale, E.M.; Salum, G.A.; Wiers, R.W.; Salemink, E.; Pettit, J.W.; et al. A computational network perspective on pediatric anxiety symptoms. Psychol. Med. 2021, 51, 1752–1762.

- Barrett, L.F. Valence is a basic building block of emotional life. J. Res. Personal. 2006, 40, 35–55.

- Li, Y.; Masitah, A.; Hills, T.T. The Emotional Recall Task: Juxtaposing recall and recognition-based affect scales. J. Exp. Psychol. Learn. Mem. Cogn. 2020, 46, 1782–1794.

- Montefinese, M.; Ambrosini, E.; Angrilli, A. Online search trends and word-related emotional response during COVID-19 lockdown in Italy: A cross-sectional online study. PeerJ 2021, 9, e11858.

- Mohammad, S. Obtaining reliable human ratings of valence, arousal, and dominance for 20,000 English words. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Melbourne, Australia, 15–20 July 2018; pp. 174–184.

- Posner, J.; Russell, J.A.; Peterson, B.S. The circumplex model of affect: An integrative approach to affective neuroscience, cognitive development, and psychopathology. Dev. Psychopathol. 2005, 17, 715–734.

- Tellegen, A. Structures of Mood and Personality and Their Relevance to Assessing Anxiety, with an Emphasis on Self-Report; Lawrence Erlbaum Associates, Inc.: Mahwah, NJ, USA, 1985.

- Watson, D.; Clark, L.A.; Tellegen, A. Development and validation of brief measures of positive and negative affect: The PANAS scales. J. Personal. Soc. Psychol. 1988, 54, 1063.

- Tugade, M.M.; Fredrickson, B.L.; Feldman Barrett, L. Psychological resilience and positive emotional granularity: Examining the benefits of positive emotions on coping and health. J. Personal. 2004, 72, 1161–1190.

- Kenett, Y.N.; Faust, M. Clinical cognitive networks: A graph theory approach. In Network Science in Cognitive Psychology; Routledge: London, UK, 2019; pp. 136–165.

- Beaty, R.E.; Zeitlen, D.C.; Baker, B.S.; Kenett, Y.N. Forward Flow and Creative Thought: Assessing Associative Cognition and its Role in Divergent Thinking. Think. Ski. Creat. 2021, 41, 100859.

- Siew, C.S.; Wulff, D.U.; Beckage, N.M.; Kenett, Y.N. Cognitive network science: A review of research on cognition through the lens of network representations, processes, and dynamics. Complexity 2019, 2019, 2108423.

- Kenett, Y.N.; Levy, O.; Kenett, D.Y.; Stanley, H.E.; Faust, M.; Havlin, S. Flexibility of thought in high creative individuals represented by percolation analysis. Proc. Natl. Acad. Sci. USA 2018, 115, 867–872.

- Kumar, A.A. Semantic memory: A review of methods, models, and current challenges. Psychon. Bull. Rev. 2021, 28, 40–80.

- Stella, M.; Kenett, Y.N. Viability in multiplex lexical networks and machine learning characterizes human creativity. Big Data Cogn. Comput. 2019, 3, 45.

- Hills, T.T.; Jones, M.N.; Todd, P.M. Optimal foraging in semantic memory. Psychol. Rev. 2012, 119, 431.

- Golino, H.F.; Epskamp, S. Exploratory graph analysis: A new approach for estimating the number of dimensions in psychological research. PLoS ONE 2017, 12, e0174035.

- Tohalino, J.A.; Quispe, L.V.; Amancio, D.R. Analyzing the relationship between text features and grants productivity. Scientometrics 2021, 126, 4255–4275.

- Teixeira, A.S.; Talaga, S.; Swanson, T.J.; Stella, M. Revealing semantic and emotional structure of suicide notes with cognitive network science. arXiv 2020, arXiv:2007.12053.

- Zemla, J.C.; Cao, K.; Mueller, K.D.; Austerweil, J.L. SNAFU: The semantic network and fluency utility. Behav. Res. Methods 2020, 52, 1681–1699.

- Morgan, S.E.; Diederen, K.; Vertes, P.E.; Ip, S.H.; Wang, B.; Thompson, B.; Demjaha, A.; De Micheli, A.; Oliver, D.; Liakata, M.; et al. Assessing psychosis risk using quantitative markers of disorganised speech. medRxiv 2021.

- Morgan, S.; Diederen, K.; Vértes, P.; Ip, S.; Wang, B.; Thompson, B.; Demjaha, A.; De Micheli, A.; Oliver, D.; Liakata, M.; et al. Natural Language Processing markers in first episode psychosis and people at clinical high-risk. Transl. Psychiatry 2021.

- Schoene, A.M.; Dethlefs, N. Automatic identification of suicide notes from linguistic and sentiment features. In Proceedings of the 10th SIGHUM Workshop on Language Technology for Cultural Heritage, Social Sciences, and Humanities, Berlin, Germany, 11 August 2016; pp. 128–133.

- Schoene, A.M.; Turner, A.; De Mel, G.R.; Dethlefs, N. Hierarchical Multiscale Recurrent Neural Networks for Detecting Suicide Notes. IEEE Trans. Affect. Comput. 2021.

- Stella, M.; Restocchi, V.; De Deyne, S. #lockdown: Network-enhanced emotional profiling in the time of COVID-19. Big Data Cogn. Comput. 2020, 4, 14.

- Pachur, T.; Hertwig, R.; Steinmann, F. How do people judge risks: Availability heuristic, affect heuristic, or both? J. Exp. Psychol. Appl. 2012, 18, 314.

- Tversky, A.; Kahneman, D. Availability: A heuristic for judging frequency and probability. Cogn. Psychol. 1973, 5, 207–232.

- De Deyne, S.; Navarro, D.J.; Perfors, A.; Brysbaert, M.; Storms, G. The “Small World of Words” English word association norms for over 12,000 cue words. Behav. Res. Methods 2019, 51, 987–1006.

- Hills, T.T.; Maouene, M.; Maouene, J.; Sheya, A.; Smith, L. Longitudinal analysis of early semantic networks: Preferential attachment or preferential acquisition? Psychol. Sci. 2009, 20, 729–739.

- Stella, M.; Beckage, N.M.; Brede, M. Multiplex lexical networks reveal patterns in early word acquisition in children. Sci. Rep. 2017, 7, 1–10.

- Castro, N.; Stella, M.; Siew, C.S. Quantifying the interplay of semantics and phonology during failures of word retrieval by people with aphasia using a multiplex lexical network. Cogn. Sci. 2020, 44, e12881.

- Kumar, A.A.; Balota, D.A.; Steyvers, M. Distant connectivity and multiple-step priming in large-scale semantic networks. J. Exp. Psychol. Learn. Mem. Cogn. 2020, 46, 2261.

- Kenett, Y.N.; Levi, E.; Anaki, D.; Faust, M. The semantic distance task: Quantifying semantic distance with semantic network path length. J. Exp. Psychol. Learn. Mem. Cogn. 2017, 43, 1470.

- De Deyne, S.; Navarro, D.J.; Storms, G. Better explanations of lexical and semantic cognition using networks derived from continued rather than single-word associations. Behav. Res. Methods 2013, 45, 480–498.

- Smith, A.M.; Hughes, G.I.; Davis, F.C.; Thomas, A.K. Acute stress enhances general-knowledge semantic memory. Horm. Behav. 2019, 109, 38–43. [Google Scholar] [CrossRef]

- Kenett, Y.; Baker, B.; Hills, T.; Hart, Y.; Beaty, R. Creative Foraging: Examining Relations Between Foraging Styles, Semantic Memory Structure, and Creative Thinking. In Proceedings of the Annual Meeting of the Cognitive Science Society, Vienna, Austria, 25–29 July 2021; Volume 43. [Google Scholar]

- Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V.; et al. Scikit-learn: Machine learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830.

- Hassani, H.; Beneki, C.; Unger, S.; Mazinani, M.T.; Yeganegi, M.R. Text mining in big data analytics. Big Data Cogn. Comput. 2020, 4, 1.

- Hills, T.T.; Kenett, Y.N. Networks of the Mind: How Can Network Science Elucidate Our Understanding of Cognition? Top. Cogn. Sci. 2021.

- Stella, M.; De Domenico, M. Distance entropy cartography characterises centrality in complex networks. Entropy 2018, 20, 268.

- Alpaydin, E. Introduction to Machine Learning; MIT Press: Cambridge, MA, USA, 2020.

- Gardner, M.W.; Dorling, S. Artificial neural networks (the multilayer perceptron)—A review of applications in the atmospheric sciences. Atmos. Environ. 1998, 32, 2627–2636.

- Vankrunkelsven, H.; Verheyen, S.; De Deyne, S.; Storms, G. Predicting lexical norms using a word association corpus. In Proceedings of the 37th Annual Conference of the Cognitive Science Society, Pasadena, CA, USA, 22–25 July 2015; pp. 2463–2468.

- Amancio, D.R.; Oliveira, O.N., Jr.; da Fontoura Costa, L. Identification of literary movements using complex networks to represent texts. New J. Phys. 2012, 14, 043029.

- Stella, M. Text-mining forma mentis networks reconstruct public perception of the STEM gender gap in social media. PeerJ Comput. Sci. 2020, 6, e295.

- Mohammad, S.M. Sentiment analysis: Automatically detecting valence, emotions, and other affectual states from text. In Emotion Measurement; Elsevier: Amsterdam, The Netherlands, 2021; pp. 323–379.

- Irving, J.; Patel, R.; Oliver, D.; Colling, C.; Pritchard, M.; Broadbent, M.; Baldwin, H.; Stahl, D.; Stewart, R.; Fusar-Poli, P. Using natural language processing on electronic health records to enhance detection and prediction of psychosis risk. Schizophr. Bull. 2021, 47, 405–414.

- Citraro, S.; Rossetti, G. Identifying and exploiting homogeneous communities in labeled networks. Appl. Netw. Sci. 2020, 5, 1–20.

- Ghavasieh, A.; Stella, M.; Biamonte, J.; De Domenico, M. Unraveling the effects of multiscale network entanglement on empirical systems. Commun. Phys. 2021, 4, 1–10.

- Stella, M. Cognitive network science for understanding online social cognitions: A brief review. Top. Cogn. Sci. 2021.

- Jung, A.; Vesselinova, N. Analysis of network lasso for semi-supervised regression. In Proceedings of the 22nd International Conference on Artificial Intelligence and Statistics, Naha, Japan, 16–18 April 2019; pp. 380–387.

- Morini, V.; Pollacci, L.; Rossetti, G. Toward a Standard Approach for Echo Chamber Detection: Reddit Case Study. Appl. Sci. 2021, 11, 5390.

- Hills, T.T.; Proto, E.; Sgroi, D.; Seresinhe, C.I. Historical analysis of national subjective wellbeing using millions of digitized books. Nat. Hum. Behav. 2019, 3, 1271–1275.

- Simon, F.M.; Camargo, C.Q. Autopsy of a metaphor: The origins, use and blind spots of the ‘infodemic’. New Media Soc. 2021.

- Li, Y.; Luan, S.; Li, Y.; Hertwig, R. Changing emotions in the COVID-19 pandemic: A four-wave longitudinal study in the United States and China. Soc. Sci. Med. 2021, 285, 114222.

- Cinelli, M.; Quattrociocchi, W.; Galeazzi, A.; Valensise, C.M.; Brugnoli, E.; Schmidt, A.L.; Zola, P.; Zollo, F.; Scala, A. The COVID-19 social media infodemic. Sci. Rep. 2020, 10, 1–10.

- Semeraro, A.; Vilella, S.; Ruffo, G. PyPlutchik: Visualising and comparing emotion-annotated corpora. PLoS ONE 2021.

- Radicioni, T.; Squartini, T.; Pavan, E.; Saracco, F. Networked partisanship and framing: A socio-semantic network analysis of the Italian debate on migration. arXiv 2021, arXiv:2103.04653.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).