1. Introduction

From e-commerce platforms and social media networks to healthcare systems and financial institutions, organizations across diverse domains rely on big data analytics to glean actionable insights, drive informed decision-making, and gain a competitive edge in the market. This proliferation of big data applications has prompted a paradigm shift in data architecture, with traditional approaches giving way to more agile, scalable, and versatile frameworks. The evolution of big data architecture began with the emergence of the Hadoop-based data stack, which introduced revolutionary concepts such as distributed storage and parallel processing, enabling organizations to efficiently store, process, and analyze massive volumes of data across distributed computing clusters [1]. However, in recent years, the landscape has shifted towards the adoption of modern data stacks, characterized by their flexibility, agility, and diverse toolsets. Unlike the monolithic nature of the Hadoop stack, the modern data stack allows organizations to tailor their data processing pipelines with a mix of open-source and proprietary tools, selecting the best-fit solutions for their specific use cases [2]. In parallel, cloud computing has emerged as a key enabler for big data, offering scalable, elastic, and integrated services tailored to large-scale data processing. The cloud-based data stack thus marks a new paradigm, emphasizing flexibility and managed infrastructure.

Despite the benefits offered by these distinct data stack paradigms, navigating the landscape of big data technologies can be daunting. While the Hadoop stack remains a stalwart choice for many organizations, the rise of modern data stacks and cloud-based solutions has introduced new considerations and trade-offs. Factors such as data integration, implementation costs, ease of deployment, and data security play pivotal roles in determining the most suitable architecture for a given use case [1, 3]. In response to the evolving landscape of big data technologies, there is a growing need for practical studies that provide comprehensive comparisons and actionable insights. While existing literature offers valuable insights into individual data stack paradigms, there remains a dearth of studies that offer practical, in-depth comparisons of these paradigms across the entire big data value chain.

To bridge this gap, we propose an exhaustive architectural analysis coupled with a practical implementation on a real-world use case, comparing the three main big data stacks: Hadoop, Modern, and Cloud-Based. The objective is to provide researchers and practitioners with a clear understanding of each stack’s strengths, limitations, and suitability for big data processing.

For this, we undertake an exhaustive and empirical comparative study of the three big data stacks, spanning the entire big data value chain from data acquisition to visualization, and encompassing crucial phases such as preprocessing, data quality, storage, analysis, machine learning, and data orchestration and management. Moreover, our comparisons will extend beyond mere architectural considerations to encompass an end-to-end use case implementation covering all the previously cited phases to evaluate each stack comprehensively. Thus, we will implement the different data stack scenarios through a comparative implementation using a large dataset of Amazon reviews that will be processed through the entire big data value chain, queried, analyzed using sentiment analysis, and finally visualized. Furthermore, we delve into deeper comparisons, exploring critical factors such as data integration, implementation costs, and ease of deployment. Through this thorough and objective analysis, we aim to furnish researchers and practitioners with a pertinent and updated reference study for navigating the intricate landscape of big data technologies and making informed decisions about their data strategies.

The rest of this paper is organized as follows:

Section 2 introduces the big data value chain and its constituent phases. In Section 3, we review the most recent studies that have tackled big data stacks: Hadoop-based, Cloud-based, and the Modern Data Stack.

Section 4 describes our comparative framework of big data stacks based on different features, including the data pipeline, data quality, data security, ease of integration, and deployment costs.

Section 5 presents the comparative implementation we performed on the three stacks and the results obtained regarding accuracy, scalability, ease of integration, and deployment costs. Finally, conclusions are made, and future work directions are highlighted.

2. Defining the Big Data Value Chain: A Framework for Comparative Analysis

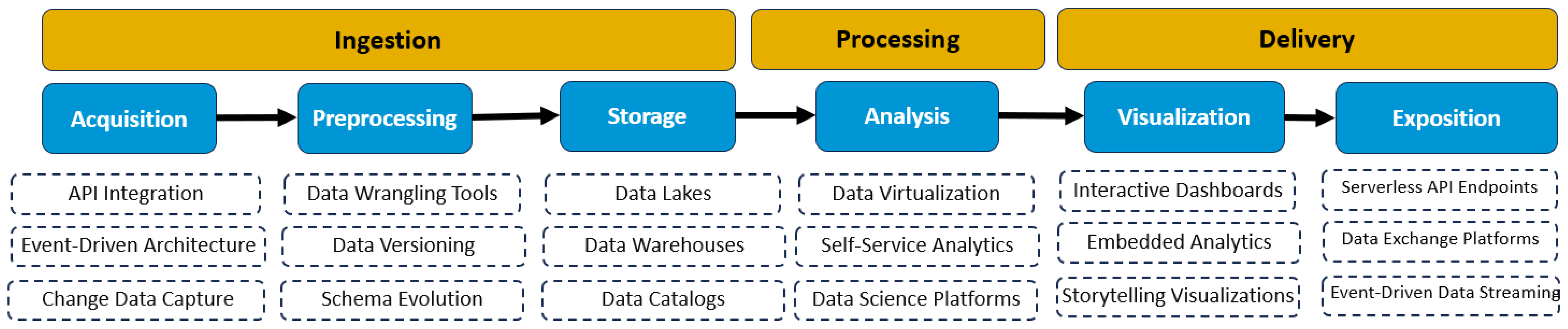

Big data has become ubiquitous in today’s digital landscape, revolutionizing how organizations operate and make decisions. However, the sheer volume, velocity, variety, and veracity of data, commonly referred to as the big data V’s, pose significant challenges for data management and analysis. These characteristics of big data underscore the need for specialized processing approaches distinct from traditional data management methods. To comprehensively address the complexities of big data management, organizations employ a robust big data pipeline that integrates various components, allowing them to transform raw data into valuable insights. Thus, the big data pipeline serves as a structured framework for extracting value from unstructured datasets, encompassing the following interconnected components, as shown in Figure 1:

Acquisition & Ingestion: The process begins with data acquisition, sourcing data from various internal and external sources. Once acquired, the data undergoes ingestion, where it is imported into the data processing system. This seamless transition from acquisition to ingestion ensures that data is efficiently collected and prepared for further processing.

Preprocessing & Storage: Following ingestion, the data undergoes preprocessing to clean, transform, and prepare it for analysis. Preprocessed data is then stored in suitable storage systems or databases, ensuring that it is readily accessible for analysis. The integration between preprocessing and storage guarantees that high-quality data is readily available for analysis, enabling organizations to derive meaningful insights.

Analysis & Visualization: With data stored and prepared, the analysis phase begins, applying various statistical, machine learning, and data mining techniques to extract insights. Once insights are derived, visualization techniques are employed to represent complex datasets intuitively. The integration between analysis and visualization ensures that insights are effectively communicated to stakeholders, facilitating informed decision-making.

Exposition & Data Strategy: Finally, the analyzed and visualized data is presented to relevant stakeholders in a comprehensible format. This may include generating reports, dashboards, or interactive visualizations that convey insights and recommendations. Additionally, data strategy guides the entire process, defining goals and initiatives related to data acquisition, management, analysis, and utilization. The alignment between exposition and data strategy ensures that insights derived from data analysis support organizational objectives and drive strategic initiatives.

The different stages of the big data value chain are managed by a data management component that ensures the effective orchestration, organization, and optimization of data processes throughout the entire lifecycle. In a big data context, data management is based on the following components:

Data Governance & Data Security: Data governance establishes policies and standards governing data usage, management, and protection. This framework ensures that data security measures, such as encryption and access controls, are aligned with governance policies to protect sensitive information from unauthorized access or disclosure.

Data Quality: Quality assurance in the context of big data involves ensuring the accuracy, completeness, consistency, and reliability of data throughout its lifecycle. This component encompasses various processes and techniques, including data validation, data profiling, data cleansing, and data enrichment. Quality assurance is particularly crucial during the preprocessing stage of the big data pipeline, where raw data is cleaned, transformed, and prepared for analysis.

Metadata Management: Metadata management involves capturing, storing, and maintaining metadata to provide context and lineage information for data assets. It enables data discovery, understanding, and governance, intersecting with various stages of the big data value chain.

DataOps: DataOps combines DevOps principles with data engineering and management practices to streamline and automate the data lifecycle. It focuses on collaboration, agility, and automation to accelerate data delivery and optimize data operations.
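To make the data quality component more concrete, the following minimal Python sketch illustrates three of the techniques named above (profiling, validation, and cleansing) on a handful of in-memory records. The record fields (`review_id`, `rating`, `text`), the 1 to 5 rating rule, and the function names are illustrative assumptions, not part of any stack discussed in this paper.

```python
from collections import Counter

# Hypothetical schema: these field names are assumptions for illustration.
REQUIRED_FIELDS = ("review_id", "rating", "text")


def profile(records):
    """Profiling: count missing values per required field."""
    missing = Counter()
    for record in records:
        for field in REQUIRED_FIELDS:
            if record.get(field) in (None, ""):
                missing[field] += 1
    return dict(missing)


def is_valid(record):
    """Validation: every field is present and rating is an int in 1..5."""
    if any(record.get(f) in (None, "") for f in REQUIRED_FIELDS):
        return False
    rating = record["rating"]
    return isinstance(rating, int) and 1 <= rating <= 5


def cleanse(records):
    """Cleansing: drop invalid records and duplicates (by review_id)."""
    seen, clean = set(), []
    for record in records:
        if is_valid(record) and record["review_id"] not in seen:
            seen.add(record["review_id"])
            clean.append(record)
    return clean


sample = [
    {"review_id": "r1", "rating": 5, "text": "Great"},
    {"review_id": "r1", "rating": 5, "text": "Great"},      # duplicate
    {"review_id": "r2", "rating": 9, "text": "Bad scale"},  # rating out of range
    {"review_id": "r3", "rating": 4, "text": ""},           # missing text
]

print(profile(sample))
print([r["review_id"] for r in cleanse(sample)])
```

In a real pipeline, such rules would typically run during preprocessing, before the data reaches storage.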

These components interact with each other throughout the big data pipeline, ensuring that data is effectively managed, governed, secured, and optimized to meet organizational objectives and regulatory requirements. Over the years, the implementation of the big data pipeline has evolved across various technological stacks, a progression we examine in Section 3 through a review of recent state-of-the-art contributions.

3. Literature Review: Insights into Hadoop, Modern, and Cloud-Based Big Data Stacks

3.1. Hadoop Data Stack

The emergence of big data has brought new challenges, particularly in processing and analyzing vast amounts of information efficiently. With traditional methods proving inadequate, the spotlight has turned to innovative solutions, among which Hadoop stands out. Renowned for its scalability and distributed computing capabilities, Hadoop has become instrumental in managing large datasets with relative ease. Consequently, numerous studies have explored the Hadoop data stack [4, 5, 6, 7, 8, 9, 10], highlighting its strengths in scalability, performance optimization, and security mechanisms. These contributions propose a range of enhancements aimed at improving the efficiency and reliability of Hadoop-based processing. For instance, autonomic architectures such as KERMIT dynamically tune Spark and Hadoop parameters in real time to improve performance and workload adaptation. Other works focus on caching strategies, offering intelligent classification models and hybrid approaches to accelerate data access and job execution. On the security side, several studies investigate authentication and authorization frameworks, including role-based access control and auditing mechanisms to secure distributed data environments. Some research also illustrates practical use cases of Hadoop, particularly in public service and academic settings (e.g., library systems), showcasing its utility in managing large-scale unstructured data. However, while these studies provide valuable insights, they tend to focus on individual components or isolated technical aspects, such as MapReduce tuning, HDFS optimization, or access control, and rarely assess the Hadoop stack as a whole. As such, end-to-end architectural analyses that span the full big data value chain in real-world scenarios remain scarce.

3.2. Cloud Data Stack

With the emergence of cloud computing and its widespread adoption, a transformative shift has occurred in the realm of big data. The cloud has introduced novel possibilities for scalability and performance, revolutionizing how large datasets are managed and analyzed. Consequently, a growing body of literature has examined the integration of cloud computing into big data architectures [11, 12, 13, 14], emphasizing its potential to enhance scalability, performance, and cost-efficiency. These studies collectively recognize cloud computing as a transformative enabler for big data processing, thanks to its elasticity, flexibility, and broad availability of managed services. Several contributions provide general overviews of the cloud–big data ecosystem, exploring how cloud service models, including IaaS, PaaS, and SaaS, can be leveraged to address core big data challenges such as data volume, velocity, and variety [11]. Others delve deeper into technical and organizational issues, such as availability, data quality, integration, and legal or regulatory compliance [12]. Security also emerges as a recurring theme, with studies proposing architectural solutions to mitigate privacy and access control risks, especially when handling sensitive or large-scale datasets. In parallel, researchers have explored the convergence of big data analytics and cloud platforms, illustrating how real-time, cloud-native solutions enable faster insights and decision-making, particularly in fast-moving industries [13]. Applications in industrial settings have also been investigated, where cloud infrastructures were shown to improve efficiency, security, and data lifecycle management in high-volume environments [14]. Overall, these studies confirm the practical viability and scalability of cloud-based big data solutions, but most analyses remain conceptual or domain-specific, lacking end-to-end comparative evaluations of full cloud-based data stacks in real-world scenarios.

3.3. Modern Data Stack

In recent years, a novel approach to data management has gained prominence, known as the modern data stack. This concept revolves around the integration of diverse data management tools, allowing for the creation of comprehensive end-to-end architectures to support the entire big data value chain. Unlike Hadoop and Cloud-based stacks, which are typically confined to their native ecosystems, the Modern Data Stack can integrate heterogeneous tools from both environments. Its defining characteristic is this flexibility: components from Hadoop or cloud platforms can be combined within a unified and modular architecture. However, despite its potential, the modern data stack remains in its nascent stages of development. Only a limited number of studies have delved into its intricacies, highlighting the need for further exploration and research to unlock its full capabilities. The studies that do exist have investigated the modern data stack (MDS) as a promising alternative for building modular and scalable big data architectures [15, 16, 17, 18]. Some studies focus on its application in specific contexts such as public sector data consolidation, where tools like Fivetran are used for ingestion and warehouse integration to streamline decision-making processes [15]. Other contributions explore the use of cloud-native orchestration platforms, combining Kubernetes, Argo Workflows, MinIO, and Dremio to build lakehouse-style architectures aligned with DataOps practices [16]. These platforms aim to ensure agility, scalability, and continuous delivery within modern analytics environments. Further research presents more customizable and flexible big data stacks, designed to integrate diverse components for petabyte-scale storage and elastic distributed computing, significantly reducing the time required for complex data queries [17]. On a more advanced level, some studies examine the use of federated learning and personalization techniques within modern stacks, especially in IoT-enabled systems, where models are locally tuned and optimized for real-time analytics [18]. While these studies demonstrate the potential of MDS in a variety of real-world use cases, the majority of them remain exploratory or architecture-focused, and lack comprehensive end-to-end evaluations of modern stacks across the full big data value chain.

Identified Research Gaps

After conducting a comprehensive review of the state of the art, it is evident that big data management stacks have undergone significant evolution with the advent of new technologies. Presently, Hadoop remains the most widely used tool in the field. However, cloud computing has also gained considerable traction in recent years, offering alternative solutions to traditional on-premises setups. By contrast, the modern data stack has received relatively little attention in the literature, despite its potential advantages. Overall, we noticed the following gaps in the reviewed state of the art:

One notable gap identified is the lack of comprehensive studies focusing on the modern data stack, particularly concerning its application in big data contexts. Additionally, there is a notable absence of end-to-end analyses that integrate modern data stacks into the broader big data value chain.

While individual components of each stack have been extensively researched and compared, there is a dearth of exhaustive, end-to-end comparisons that consider the entire big data value chain.

Another gap observed in the literature is the lack of comprehensive studies evaluating the performance and suitability of different big data stacks in real-world scenarios. Therefore, there is a need for research that goes beyond theoretical discussions and provides actionable insights for organizations.

To address these gaps, this paper presents an exhaustive study of big data stacks with the following contributions:

We perform an in-depth exploration of the modern data stack’s effectiveness within the realm of big data, emphasizing its seamless integration throughout the entire value chain, offering novel insights into its practical implementation and impact.

We provide an end-to-end architectural comparison of the three main big data stacks, covering the full big data value chain from data acquisition to exposition, including preprocessing, data quality, storage, analysis, machine learning, and data orchestration and management.

We go beyond architectural considerations by implementing end-to-end use case scenarios and exploring critical factors such as data integration, implementation costs, and ease of deployment, aiming to provide researchers and practitioners with a comprehensive reference of big data stacks.

To delve deeper into the contributions cited above, we present in Section 4 our comparative framework of big data stacks based on different features, including the data pipeline, data quality, data security, ease of integration, and deployment costs.

4. Comparative Framework: Analyzing Architecture, Governance, and Costs Across Big Data Stacks

For an in-depth analysis, each selected source was analyzed using six consistent criteria derived from the big data lifecycle: (1) acquisition and ingestion, (2) preprocessing and transformation, (3) storage, (4) analysis and visualization, (5) data quality, security, and governance, and (6) integration and cost efficiency. These same dimensions are used to structure the comparative analysis in Section 4 and the experimental evaluation in Section 5. By applying this shared framework, the paper ensures that comparisons across Hadoop, MDS, and Cloud stacks follow the same evaluation logic and are directly comparable in scope.

4.1. Data Pipeline Architecture

The evolution of the big data value chain has been significantly influenced by advancements in data stack architecture. Initially, Hadoop-based data stacks provided a distributed computing framework to process large-scale datasets efficiently. Over time, the emergence of the modern data stack introduced a more modular and cloud-compatible approach, prioritizing flexibility, real-time processing, and ease of integration. More recently, cloud-based data stacks have taken this further by fully leveraging cloud-native services, offering enhanced scalability, managed services, and cost optimization. Each of these architectures has reshaped how different phases of the big data value chain are executed, particularly in data acquisition, preprocessing, storage, analysis, visualization, and exposition.

Table 1 provides a macro-level comparison of how these phases are approached in the three data stack paradigms.

This comparative analysis underscores the evolution of big data architectures from batch-oriented, infrastructure-heavy models (Hadoop) to modular, real-time frameworks (Modern Data Stack), and ultimately to fully managed, cloud-native solutions. While Hadoop pioneered distributed batch processing, its rigid infrastructure limits adaptability to real-time demands. The modern data stack introduced event-driven processing and decoupled storage, enabling greater flexibility and responsiveness. Cloud-based stacks take this further by leveraging serverless computing and managed services, offering seamless scalability, automation, and reduced operational overhead. This shift reflects a broader trend toward agile, efficient, and real-time data architectures.

Section 4.2 explores the technical structures and tools supporting each of these paradigms.

4.2. Data Stack Architectures and Tools

Big data architectures differ across Hadoop-based, modern, and cloud-based data stacks, each offering unique approaches to handling data ingestion, processing, and delivery. In this section, we describe the architecture of each data stack following three macro steps that structure the big data value chain:

Ingestion: Combining acquisition, preprocessing, and storage into a unified phase where raw data is collected, cleaned, transformed, and stored for further processing.

Processing: Focusing on analytics, computations, and business intelligence applied to data for extracting insights and enabling decision-making.

Data Delivery: Encompassing visualization and exposition, ensuring that processed data is made accessible, interpretable, and actionable for business users, applications, or external systems.

Each of the following subsections details the architectural design, techniques, and tools used in Hadoop, modern, and cloud-based data stacks in the context of big data processing.

4.2.1. Hadoop Data Stack

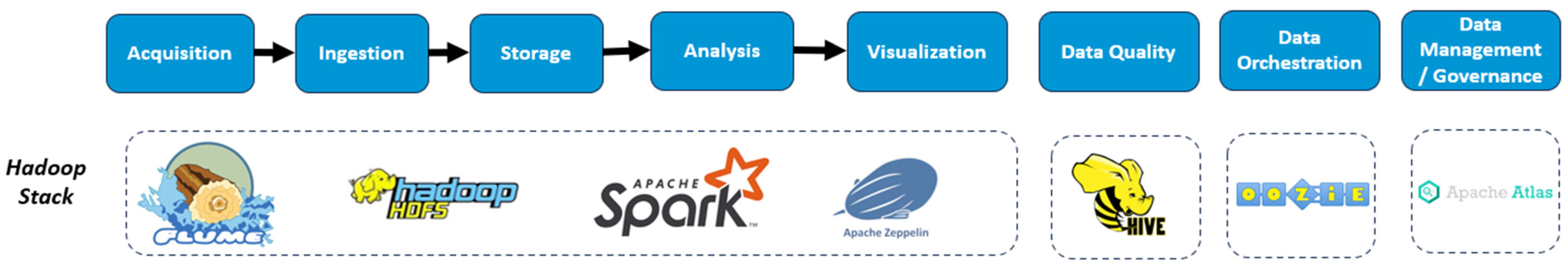

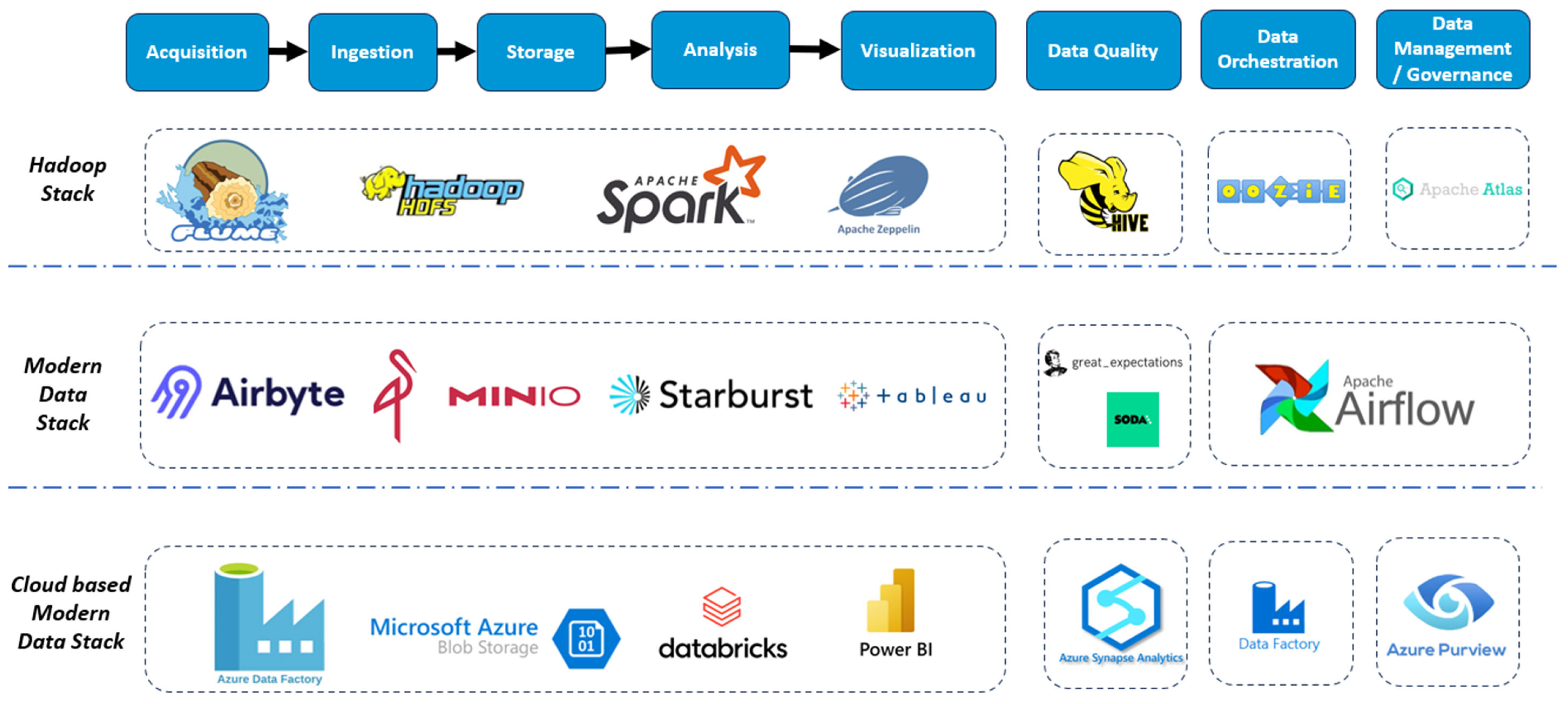

The Hadoop data stack (Figure 2) was designed as a batch-oriented, distributed system to handle large-scale datasets efficiently across clusters of commodity hardware. It follows a monolithic architecture, where each component tightly integrates with others, often relying on MapReduce for processing. Hadoop’s ecosystem includes a distributed file system (HDFS) for storage, batch-based ingestion tools, and query engines for analysis. Despite its scalability, Hadoop faces challenges related to complex cluster management, high-latency batch jobs, and limited flexibility in real-time processing [19]. Below, we explore how Hadoop structures its data pipeline across the three macro steps.

Ingestion: In the Hadoop data stack, ingestion is mainly batch-oriented, involving the scheduled collection of data from sources such as relational databases, logs, and messaging systems. For data acquisition, Apache Flume is commonly used for collecting and aggregating log data before loading it into HDFS. Apache Kafka is also used in this phase, acting as a distributed publish-subscribe system that buffers streaming data for later processing. Kafka thus allows real-time data processing in the Hadoop ecosystem and also serves as a staging layer for batch jobs. Once ingested, the data is preprocessed using Apache Pig, which allows users to write data flow scripts for filtering, transforming, and joining datasets, and Apache Hive, which provides an SQL interface for querying and structuring large data volumes. These tools complement each other, with Pig supporting procedural workflows and Hive enabling declarative analytics. The processed data is then stored in HDFS, the Hadoop Distributed File System, which ensures durability and scalability by distributing data across clusters with a master-slave NameNode/DataNode architecture. This approach is robust for high-volume batch processing; however, it is less suited for latency-sensitive workloads.

Processing: In the Hadoop data stack, data processing is mainly performed using the MapReduce programming model. This model divides a task into two main phases: the Map phase, where the job is broken down into smaller parallel computations, and the Reduce phase, where the outputs of these computations are aggregated to produce the final result. MapReduce is highly scalable and fault-tolerant, but it often suffers from high latency, especially when executing iterative or complex queries. To address these limitations, Apache Spark (3.5) is frequently integrated into the Hadoop ecosystem. Spark provides in-memory processing capabilities and follows a Directed Acyclic Graph (DAG) execution model, which significantly reduces processing time compared to MapReduce [20]. In addition to batch jobs, Spark also supports machine learning, graph processing, and streaming analytics, making it a versatile tool within the stack. Apache Hive continues to play an important role at this layer by offering an SQL-based interface that translates user queries into MapReduce or Spark jobs. In this way, Hive acts as a bridge between data engineers and analysts, allowing users with limited programming knowledge to perform structured queries on large datasets.

Data Delivery: In the Hadoop ecosystem, data delivery is primarily batch-oriented, with processed results exported on a periodic basis for downstream consumption. Apache Sqoop is widely used to transfer data between HDFS and external relational databases, facilitating integration with existing enterprise systems. Another tool, Apache NiFi, provides a more flexible and user-friendly interface for designing and automating end-to-end data flows, enabling tasks such as routing, transformation, and system integration. For visualization and business intelligence, platforms like Apache Zeppelin and Apache Superset are often employed, allowing users to build interactive dashboards and notebooks directly connected to Hive or Spark. These tools make it easier for analysts and decision-makers to access insights from big data. However, because the delivery pipeline in Hadoop is mainly scheduled and periodic, it lacks the low-latency and event-driven features required for real-time operational analytics. As a result, Hadoop’s delivery approach is effective for reporting and historical analysis but less suitable for scenarios that demand immediate responses.
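The Map and Reduce phases described in the Processing step above can be illustrated with a word count, the canonical MapReduce example. The sketch below is a single-process Python approximation of the programming model only: it does not use the Hadoop API, and the function names (`map_phase`, `shuffle`, `reduce_phase`) are illustrative. In a real cluster, each input split would be mapped on a different node and the shuffle would move data across the network.

```python
from collections import defaultdict


def map_phase(document):
    """Map: emit a (word, 1) pair for every word in one input split."""
    return [(word.lower(), 1) for word in document.split()]


def shuffle(pairs):
    """Shuffle: group all emitted values by key before reduction."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key, values):
    """Reduce: aggregate the values collected for one key."""
    return key, sum(values)


def word_count(documents):
    # Each document stands in for an input split processed independently.
    pairs = [pair for doc in documents for pair in map_phase(doc)]
    return dict(reduce_phase(k, v) for k, v in shuffle(pairs).items())


counts = word_count(["big data", "Big clusters"])
print(counts)
```

Because each call to `map_phase` depends only on its own split, the map work can be parallelized freely. A distributed implementation adds the cost of materializing intermediate results between the two phases, which is one source of the latency noted above.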

4.2.2. Modern Data Stack

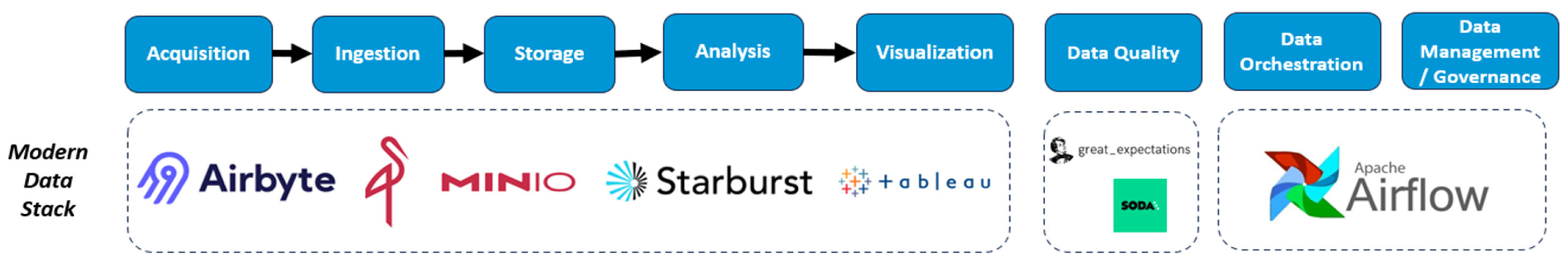

The modern data stack (MDS) (Figure 3) shifts away from Hadoop’s monolithic and batch-oriented model, favoring modular, scalable, and cloud-compatible architectures. Instead of a rigid ecosystem, MDS allows organizations to select and integrate best-of-breed tools, prioritizing real-time event-driven processing, decoupled storage, and low-latency analytics. This flexibility enables faster, more interactive analytics while reducing operational overhead through managed and serverless solutions.

Ingestion: In the modern data stack, ingestion is usually real-time rather than batch-only, as in Hadoop. Data can be captured from many sources, such as databases, APIs, SaaS applications, and IoT devices. Kafka remains central to the MDS, since it serves as the backbone for streaming events. In addition, change data capture tools like Debezium are used to capture incremental changes in databases and stream them out. Managed ELT services such as Fivetran are also integrated, automating the extraction of data from multiple sources and loading it directly into cloud data warehouses. Once ingested, transformations are usually performed using dbt (data build tool), which applies modular SQL transformations directly in the warehouse environment [21]. This approach ensures that raw data remains available while curated layers of transformed data can be easily maintained and versioned. For storage, cloud-native solutions such as Snowflake, Amazon Redshift, Google BigQuery, and Azure Synapse are widely adopted, offering scalable and highly performant query engines. In addition, data catalogs like Amundsen or DataHub are employed to provide metadata management, lineage tracking, and discoverability, ensuring transparency and governance throughout the ingestion pipeline.

Processing: Processing in the modern data stack is centered on real-time, distributed, and self-service analytics, moving away from traditional batch-oriented models. It leverages SQL-based engines like Presto, Starburst, and Apache Drill, which enable federated queries across multiple heterogeneous data sources, such as data lakes, warehouses, and external APIs, without requiring data duplication or movement. Presto acts as a high-performance distributed SQL query engine, while Starburst extends it with enterprise features like advanced caching and security, and Apache Drill provides schema-free querying for semi-structured data. Together, they empower both analysts and business users to access and analyze data directly from its source, promoting true data democratization. Real-time access is further enhanced by data virtualization, which abstracts the physical location of the data, allowing users to query multiple systems through a unified interface. This query layer integrates naturally with AI and machine learning platforms such as DataRobot, an automated machine learning tool, SageMaker, Amazon’s end-to-end ML service, and Vertex AI, Google’s unified ML development platform [22]. These tools connect to the same query layer or underlying storage systems, enabling advanced analytics and predictive modeling to be conducted directly on curated datasets without needing complex data pipelines, ensuring tight integration across the data and ML workflow.

Data Delivery: The modern data stack delivers data through real-time, API-driven mechanisms tightly integrated with BI and visualization tools. Platforms like Tableau, Power BI, and Looker connect directly to cloud data warehouses, enabling live querying, interactive dashboards, and role-based data exploration without data extraction. These tools translate business logic and visualizations into queries that run on high-performance engines like BigQuery or Snowflake, ensuring up-to-date insights. For broader dissemination, insights are exposed via RESTful APIs, GraphQL endpoints, and event buses, allowing external applications and services to consume data in real time. Kafka and Pulsar, both distributed messaging systems, act as backbone event streaming platforms for real-time data delivery, while AWS EventBridge offers event routing across AWS services and third-party applications. These systems work in tandem to ensure that insights, alerts, or data updates are pushed proactively to systems or users.
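The ELT pattern described in the Ingestion step above, where raw data is loaded unchanged and a curated layer is defined in SQL inside the warehouse, can be sketched as follows. This is a minimal illustration under stated assumptions: an in-memory SQLite database stands in for a cloud data warehouse, the table and column names are hypothetical, and the `CREATE VIEW` statement only mimics the idea of a SQL transformation layer; it is not dbt itself.

```python
import sqlite3

# Assumption: SQLite stands in for a cloud warehouse such as those named above.
conn = sqlite3.connect(":memory:")

# Load step: raw records land unchanged in a raw table.
conn.execute(
    "CREATE TABLE raw_reviews (review_id TEXT, rating INTEGER, body TEXT)"
)
conn.executemany(
    "INSERT INTO raw_reviews VALUES (?, ?, ?)",
    [
        ("r1", 5, "  Great product "),
        ("r2", 1, "Broke quickly"),
        ("r3", None, "No rating given"),
    ],
)

# Transform step: the curated layer is a SELECT over the raw layer,
# so the raw data stays available and the transformation is plain SQL.
conn.execute(
    """
    CREATE VIEW curated_reviews AS
    SELECT review_id, rating, TRIM(body) AS body
    FROM raw_reviews
    WHERE rating IS NOT NULL
    """
)

raw_count = conn.execute("SELECT COUNT(*) FROM raw_reviews").fetchone()[0]
curated = conn.execute(
    "SELECT review_id, body FROM curated_reviews ORDER BY review_id"
).fetchall()

print(raw_count)
print(curated)
```

Keeping the transformation as a query over the raw table means the curated layer can be redefined and rebuilt at any time without re-ingesting the source data.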

4.2.3. Cloud-Based Data Stack

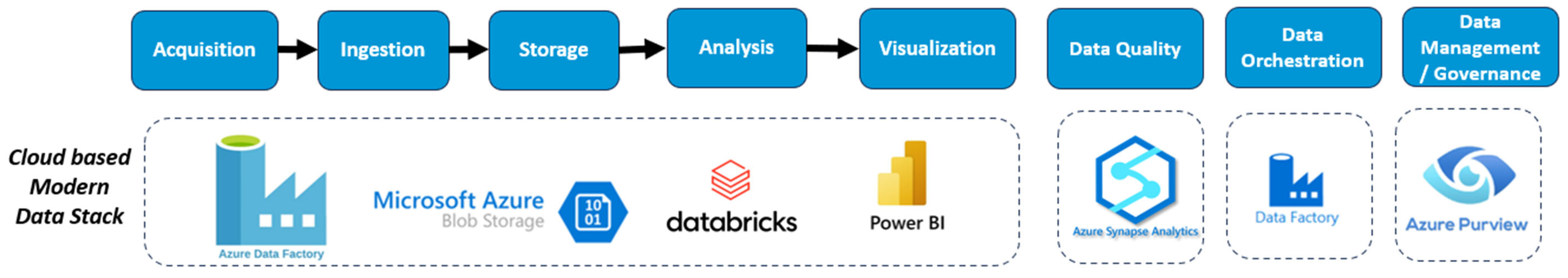

The cloud-based data stack (Figure 4) is a fully cloud-native approach to big data processing, leveraging serverless computing, managed services, and scalable storage solutions. Unlike Hadoop, which requires manual cluster management, cloud stacks offload infrastructure management to cloud providers, reducing operational complexity and cost. Cloud-based architectures are designed for elasticity, automatically scaling resources based on demand while ensuring high availability and security.

Ingestion: In the cloud-based data stack, ingestion is serverless, scalable, and fully cloud-native, leveraging managed services to enable automated real-time data collection across distributed environments. Tools like AWS Kinesis, Azure Event Hubs, and Google Pub/Sub handle high-throughput data streaming with elastic scaling, while ETL and transformation are orchestrated via serverless tools such as AWS Glue, Azure Data Factory, and Google Dataflow, which abstract away infrastructure concerns [23]. Data is then stored using object storage solutions like Amazon S3, Azure Blob Storage, and Google Cloud Storage, which offer high durability and native integration with compute services. For analytical workloads, cloud-native data warehouses like BigQuery, Redshift, and Snowflake provide columnar storage, fast query performance, and built-in governance features to ensure data quality and compliance.

Processing: Processing in cloud-based stacks is entirely managed and serverless, relying on auto-scaling compute services that allocate resources dynamically based on demand. Tools like Google BigQuery, AWS Athena, and Azure Synapse enable SQL-based analysis across large datasets without the need for server provisioning. For real-time, event-driven processing, distributed engines such as Databricks and Apache Beam support complex streaming workflows. Cloud platforms also offer integrated machine learning services like SageMaker, Azure ML, and Google AI Platform, allowing organizations to train, deploy, and infer ML models directly within their data pipelines—making AI-driven insights a seamless part of the architecture.

Data Delivery: Data delivery in cloud-based stacks is designed for real-time, global accessibility, enabled through API-first, event-driven architectures. BI tools such as AWS QuickSight, Google Data Studio, and Power BI integrate with cloud warehouses to offer live dashboards, dynamic reporting, and interactive visualizations [24]. Processed data is exposed through fully managed API gateways, including AWS API Gateway, Azure API Management, and Google Cloud Endpoints, which provide secure, scalable access. Additionally, services like AWS Lambda, Azure Functions, and Google Cloud Functions support real-time data dissemination through event-based automation and workflow orchestration.

The transition from Hadoop-based to modern and cloud-based data stacks highlights a shift toward real-time, flexible, and serverless data architectures. While Hadoop remains a robust solution for batch processing of large-scale datasets, its high-latency and infrastructure-heavy nature make it less adaptable to today’s fast-paced data environments. The modern data stack improves on this by introducing event-driven workflows, interactive analytics, and API-driven integrations, offering a more modular and scalable approach. The cloud-based data stack goes even further, eliminating infrastructure management, enhancing scalability, and integrating AI-driven insights, making it the preferred choice for businesses seeking cost-effective, fully managed, and highly automated data solutions.

Figure 5 shows a comparison of the three big data stacks in terms of tools.

In the next section, we discuss data governance across the three data stacks, examining how security, compliance, access control, and data quality management are handled in Hadoop, modern, and cloud-based environments.

4.3. Governance and Security

Data governance and security are foundational elements of any big data architecture, ensuring that data remains trustworthy, compliant, and protected throughout its lifecycle. Although these concerns are common across all stacks, the approaches and tooling differ significantly between Hadoop, Modern, and Cloud-Based architectures due to their underlying design philosophies and levels of abstraction. The following comparison outlines how each paradigm addresses governance and security, highlighting their respective strengths and limitations.

4.3.1. Hadoop Data Stack

Governance in the Hadoop stack is primarily handled through tools like Apache Atlas, which support metadata management, lineage tracking, and policy enforcement. However, its implementation often requires manual configuration and custom integration, making it less straightforward than newer paradigms. On the security side, Hadoop relies on Kerberos for authentication and Apache Ranger for fine-grained access control and auditing. While these tools are robust, their integration and maintenance across distributed environments can be complex and resource-intensive.

4.3.2. Modern Data Stack

Governance in the modern data stack is more workflow-oriented, typically supported by tools such as Apache Airflow and dbt, which allow organizations to orchestrate pipelines while embedding governance policies into transformation processes. These tools enable monitoring, scheduling, and lineage tracking, though integration can be challenging due to tool heterogeneity and the need for custom connectors. From a security perspective, MDS incorporates modern practices such as encryption (at rest and in transit), data tokenization, and RBAC/ABAC for granular access control. Nevertheless, ensuring seamless interoperability between various tools can introduce security and compatibility issues.

4.3.3. Cloud-Based Data Stack

Cloud-based stacks benefit from natively integrated governance and security services provided by platforms like AWS and Azure. Governance is streamlined through centralized dashboards, automated lineage tracking, and compliance monitoring using services like Azure Data Factory and AWS Data Pipeline. For security, cloud platforms offer Identity and Access Management (IAM), encryption key services (AWS KMS, Azure Key Vault), and network security policies, all of which are tightly coupled with compliance standards [25]. While these built-in features reduce configuration complexity, they require careful policy alignment and ongoing oversight to ensure security objectives are fully met.

4.4. Cost and Scalability Considerations

Cost considerations and scalability are key factors to evaluate when choosing a big data stack. In the Hadoop data stack, the initial setup costs can be significant, as organizations need to invest in hardware infrastructure and skilled personnel to deploy and manage the Hadoop cluster. For comparison, according to Flexera’s 2023 report, migrating to the cloud can reduce infrastructure costs by up to 30% [26]. Additionally, the scalability of the Hadoop stack is constrained by physical hardware limitations, requiring organizations to plan for future capacity needs and invest in additional resources as data volumes grow. A Databricks analysis of Hadoop TCO highlights that data center management, which includes administration, infrastructure maintenance, and related support, can represent nearly 50% of the total cost of ownership [27]. Thus, while open-source technologies like Hadoop offer cost savings compared to proprietary solutions, organizations must also consider the total cost of ownership, including maintenance, support, and upgrades, which add to long-term operational expenditure in some enterprise environments.

In modern data stacks, cost considerations are often more flexible due to the availability of a wide range of tools and technologies, including both open-source and commercial options. Modern data stacks offer scalability through cloud-based infrastructure, allowing organizations to scale resources up or down based on demand. Research suggests that transitioning to modern, decoupled data architectures can result in cost reductions of 15–40% for some organizations compared to legacy systems [28]. However, the use of multiple tools and technologies within the modern data stack can lead to higher integration and management costs, as organizations may need to invest in custom integrations. A 2024 industry survey found that 40% of respondents perceived maintenance of integrations between data tools as their largest cost driver. Additionally, integration-related maintenance may add an extra 15–18% atop base licensing costs, for instance, an additional $300 K on a $2 M contract [29].
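The integration overhead quoted above can be checked with simple arithmetic. The following is a minimal sketch, not part of the cited survey: the function name and the use of integer percentages are our own illustrative choices, and the only inputs taken from the text are the 15–18% range and the $2 M contract value.

```python
def integration_overhead(base_license_usd: int, percent: int) -> float:
    """Integration-related maintenance cost for a given overhead percentage."""
    return base_license_usd * percent / 100


# Values taken from the paragraph above: a $2 M contract and a 15-18% overhead.
base_contract = 2_000_000
low_estimate = integration_overhead(base_contract, 15)
high_estimate = integration_overhead(base_contract, 18)

print(f"Integration overhead: ${low_estimate:,.0f} to ${high_estimate:,.0f}")
```

Under these figures, the $300 K quoted in the text corresponds to the lower bound of the range, while the upper bound would be $360 K on the same contract.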

Cloud-based data stacks offer a pay-as-you-go model, where organizations only pay for the resources they consume, making it a cost-effective option for many use cases. Cloud platforms provide built-in scalability features, allowing organizations to dynamically scale resources based on workload demands. According to Flexera’s 2023 State of the Cloud Report [30], public cloud spending exceeded budgets by an average of 18%, often due to suboptimal storage and compute usage. As data volumes grow, the costs of storing and processing large datasets in the cloud can escalate, requiring organizations to carefully monitor and optimize resource usage to control costs. For example, Gartner has observed that most customers spend 10% to 15% of their cloud bill on egress charges [31], especially for data-intensive analytics workloads. Additionally, organizations must consider factors such as network bandwidth costs and vendor lock-in when evaluating the total cost of ownership of a cloud-based data stack. Thus, while cloud-based data stacks offer scalability and flexibility, organizations must carefully consider their cost requirements and usage patterns to choose the most cost-effective solution for their needs. In summary, Hadoop remains cost-effective for on-premises environments, MDS offers flexibility for hybrid contexts, and cloud-native stacks excel in automation and elasticity.

The three big data stacks, namely Hadoop, the Modern Data Stack (MDS), and the Cloud-Based Stack, present distinct architectural philosophies that shape their operational characteristics and trade-offs. The Hadoop ecosystem remains foundational in large-scale distributed computing, prioritizing scalability and fault tolerance through tightly coupled storage and compute layers such as HDFS and YARN. However, its reliance on complex cluster management and hardware resources introduces significant setup and maintenance overhead. In contrast, the Modern Data Stack introduces modularity and tool flexibility, often built on cloud infrastructure but maintaining open-source interoperability. It supports modern paradigms such as Data Mesh and reverse ETL pipelines, improving governance and data accessibility. Nevertheless, the abundance of tools and integration points can create operational complexity and increase the risk of vendor lock-in. Finally, Cloud-Based Stacks fully leverage managed and serverless services, offering built-in scalability, elasticity, and governance. Research emphasizes their efficiency in dynamic resource allocation and ingestion optimization. However, they also face challenges including data egress costs, latency, and dependency on specific cloud platforms.

Table 2 summarizes this comparative analysis across twelve operational dimensions.

For an exhaustive comparison, we go beyond architectural considerations in Section 5 by implementing end-to-end use case scenarios and exploring critical factors such as data integration, implementation costs, and ease of deployment, aiming to provide researchers and practitioners with a comprehensive reference of big data stacks.

5. Practical Implementation: Testing Hadoop, Modern, and Cloud Stacks with Real-World Data

5.1. Implementation Design

5.1.1. Use Case Description

We aim to conduct a comprehensive performance comparison among three prominent implementation modes: Hadoop stack, modern data stack, and cloud-based data stack, using the Amazon Books Reviews Dataset [32], which contains 142 million reviews spanning from May 1996 to 2014. Our approach focuses on executing typical data processing tasks across these modes, encompassing data ingestion, processing, query execution, parallel processing, data visualization, and data export. By measuring scalability and execution time, we assess the efficiency and effectiveness of each implementation mode in handling large-scale data processing tasks. This comparative study sheds light on the strengths and limitations of each mode, thus facilitating informed decision-making in big data analytics.

5.1.2. Architecture Tools

Hadoop Stack

For our implementation on the Hadoop stack, we leveraged a suite of Hadoop-based tools within the Cloudera Data Platform (CDP), as shown in Figure 6, which offers an integrated environment for big data processing. We began by acquiring the Amazon Books Reviews Dataset, which includes attributes such as book titles, prices, user IDs, review scores, and summaries, using Apache Flume, a tool for collecting and aggregating large volumes of data. We then ingested and stored the dataset in HDFS, the Hadoop distributed file system, to ensure scalability and fault tolerance in our data storage layer, which is crucial given the volume of data at hand. To process the data, we used Apache Spark for its fast distributed processing capabilities. Combining the parallel processing of Apache Spark with the distributed storage of HDFS allows us to evaluate the efficiency and scalability of the Hadoop stack against the other implementation modes, particularly for parallel processing of big data. We also conducted a data quality check using Apache Hive, whose querying and validation functionalities help ensure data integrity and reliability and also serve as a basis for comparing query execution performance with the other modes. The whole process was orchestrated by Apache Oozie, a workflow scheduler within the Hadoop ecosystem that coordinates and manages data processing tasks. Finally, we incorporated a data management and governance component using Apache Atlas, a metadata management and governance tool, to maintain data integrity throughout our implementation. These tools, integrated within the Cloudera platform, served as the foundation for our Hadoop stack implementation.

Modern Data Stack

To build our modern data stack architecture, we selected a set of tools based on their compatibility with big data systems and their availability as open-source solutions, as shown in Figure 7. For data acquisition, we adopted Airbyte, an ingestion tool that gathers data flows into our architecture. To store the gathered data, we used MinIO, a scalable storage system based on object storage technology. MinIO is designed to emulate Amazon S3 (Simple Storage Service), offering a self-hosted open-source alternative compatible with the S3 API. Once stored, the data are processed using Starburst, a commonly used processing tool known for its ability to handle complex queries and massive datasets. For data quality, Great Expectations validates the data fetched from Starburst against a set of quality check functions to ensure integrity and accuracy. Our processing tasks are orchestrated within a streamlined data pipeline using Apache Airflow, a workflow management tool that allows monitoring of data pipelines and integrates with Starburst operations. Finally, for data visualization, we used Tableau for dashboarding.

Cloud-Based Data Stack

To implement the cloud-based data stack, we opted for Microsoft Azure, a leading cloud provider offering advanced features for managing big data workloads. Based on Azure’s ecosystem, we built a cloud-based big data architecture covering the whole big data value chain, as shown in Figure 8. First, the Amazon Books Reviews Dataset was acquired and ingested using Azure Data Factory, allowing efficient and fast data ingestion at scale. For storage, Azure offers a range of options, including data lakes and blob storage. In this implementation, we opted for Blob Storage, whose object-storage architecture is well suited to housing large-scale datasets. For data analysis, we used Azure Databricks, a platform built on Apache Spark that offers a unified interface for building, deploying, and running processing and analysis workloads on large datasets in a scalable manner. As for the other implementation modes, we performed a data quality check, this time using Azure Synapse Analytics, which we used for data profiling, cleansing, and validation to ensure data integrity and reliability. For data management, we used Azure Purview, widely used in Azure stacks as it provides end-to-end visibility into data lineage, classification, and usage across the entire data lifecycle. Finally, for data visualization and insights generation, we used Power BI, leveraging its intuitive interface and advanced analytics capabilities to create interactive dashboards and reports. We chose this architecture, powered by Azure’s cloud services, because it offers one of the most powerful stacks in the cloud scenario, supporting an accurate comparison of the different implementations.

Figure 9 shows an overall comparison of the three big data stack architectures throughout the big data value chain.

5.1.3. Data Pipeline

To compare the three data stacks, we built an exhaustive data pipeline that implements common data tasks as follows:

Ingestion—Data reading and exploring: The first step of our data pipeline is reading the content of the dataset. In the Hadoop stack, this step is performed using Apache Zeppelin, namely SparkContext object functions, which display the content of the dataset in a table format. In the Azure stack, this is performed using the preview function of Azure Data Factory. In the modern data stack, the source file was set up as a source connector in Airbyte, with Starburst Galaxy as the destination connector. Data shape is also explored in this step: through standard functions of Apache Zeppelin in the Hadoop stack, through the ready-made metrics dashboard that Azure Data Factory offers for ingested data, and through the SHOW STATS commands of the Trino SQL interface integrated with Starburst in the modern data stack.

Preprocessing—Data cleaning: The next step of our data pipeline is data cleaning, which drops anomalous data such as duplicate entries and missing values. In the Hadoop stack, this is performed using Hive queries with constructs such as DISTINCT and LENGTH. In Azure, data cleaning is performed using the components of the Data Transformation functionality of Azure Synapse Analytics, such as the Remove Duplicate Rows component, which identifies duplicates after the key columns are selected. In the modern data stack, it is performed using Starburst connectors, which allow advanced queries to catch anomalies in the dataset.
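To make the cleaning step concrete, the sketch below illustrates the general DISTINCT and LENGTH pattern on a toy table. It is only an illustration under stated assumptions: it uses SQLite through Python's standard `sqlite3` module as a stand-in for Hive, and the table name, column names, and sample rows are hypothetical rather than taken from the actual dataset schema.

```python
import sqlite3

# Hypothetical miniature of the reviews table; column names are assumptions.
sample_rows = [
    ("B001", "u1", 5.0, "Great read"),
    ("B001", "u1", 5.0, "Great read"),      # exact duplicate entry
    ("B002", "u2", 3.0, ""),                # empty review text
    ("B003", "u3", None, "Missing score"),  # missing value
    ("B004", "u4", 4.0, "Solid intro"),
]

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE reviews (book_id TEXT, user_id TEXT, score REAL, review_text TEXT)"
)
conn.executemany("INSERT INTO reviews VALUES (?, ?, ?, ?)", sample_rows)

# DISTINCT removes exact duplicates; the WHERE clause drops rows with
# missing scores or empty review texts.
cleaned = conn.execute(
    """
    SELECT DISTINCT book_id, user_id, score, review_text
    FROM reviews
    WHERE score IS NOT NULL
      AND review_text IS NOT NULL
      AND LENGTH(TRIM(review_text)) > 0
    ORDER BY book_id
    """
).fetchall()

print(cleaned)
```

On the toy data, only the deduplicated `B001` row and the `B004` row survive. The equivalent Hive or Trino statements may differ in function names and null handling.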

Analysis—Querying: One of the most frequently performed tasks in data analysis is querying to extract insights from the dataset. In this pipeline, we performed the following queries in the different data stacks:

Query 1: Books Type Distribution

Query 2: Titles, authors, and publishers of books in the “Computers” category between 2000 and 2023 that contain ‘distributed’ in their title.

Query 3: Top 10 books with the highest average rating among those published in the 2000 with at least 500 ratings.

Query 4: Most Reviewed Books by word Count

Query 5: Most Frequent words in book reviews
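As an illustration of the query patterns listed above, the following sketch reproduces the shape of Query 3 (top-rated books above a minimum number of ratings) and Query 5 (most frequent words in reviews) on a toy table. It is a simplified stand-in, assuming SQLite via Python's `sqlite3` module rather than Hive, Trino, or Spark SQL; the schema, sample rows, and function names are hypothetical, the publication-year filter of Query 3 is omitted, and the rating threshold is lowered to suit the toy data.

```python
import re
import sqlite3
from collections import Counter

# Hypothetical sample rows; the real dataset's schema may differ.
sample_reviews = [
    ("Distributed Systems", 5.0, "clear and practical"),
    ("Distributed Systems", 4.0, "practical examples"),
    ("Graph Theory", 5.0, "dense but clear"),
    ("Cooking Basics", 2.0, "too basic"),
    ("Cooking Basics", 3.0, "basic but clear"),
]

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE reviews (title TEXT, score REAL, review_text TEXT)")
conn.executemany("INSERT INTO reviews VALUES (?, ?, ?)", sample_reviews)


def top_rated(min_ratings: int, limit: int = 10):
    """Query 3 pattern: highest average score among books with enough ratings."""
    return conn.execute(
        """
        SELECT title, AVG(score) AS avg_score, COUNT(*) AS n_ratings
        FROM reviews
        GROUP BY title
        HAVING COUNT(*) >= ?
        ORDER BY avg_score DESC, title
        LIMIT ?
        """,
        (min_ratings, limit),
    ).fetchall()


def most_frequent_words(top_n: int):
    """Query 5 pattern: word frequencies computed over all review texts."""
    counts = Counter()
    for (text,) in conn.execute("SELECT review_text FROM reviews"):
        counts.update(re.findall(r"[a-z']+", text.lower()))
    return counts.most_common(top_n)


top_books = top_rated(min_ratings=2)
top_words = most_frequent_words(1)
print(top_books)
print(top_words)
```

The `HAVING` clause plays the role of the "at least 500 ratings" condition in Query 3. In a distributed engine, the word count would typically be expressed as a split-and-aggregate operation rather than a Python loop.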

Analysis—Sentiment Analysis: To perform advanced analysis tasks, we also implemented sentiment analysis on the dataset in the different stacks. In the Hadoop data stack, we used Spark NLP, which offers a sentiment analysis library. In the Azure stack, the ai_analyze_sentiment() function of Azure Databricks was applied to all book reviews. In the modern data stack, the PySpark API is used to implement the sentiment analysis process through the PyStarburst integration that Starburst offers.

SA-Query 1: Distribution of Negative, Neutral and Positive Sentiment of the whole reviews

SA-Query 2: Books with the greatest number of positive reviews

SA-Query 3: Books with the greatest number of negative reviews
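The three sentiment queries above are aggregations over already-labeled reviews. The sketch below shows that aggregation logic in plain Python, assuming the sentiment labels have already been produced by the stack's engine; the sample pairs, function names, and label strings are hypothetical and do not reflect the actual pipeline code.

```python
from collections import Counter

# Hypothetical (title, label) pairs, assumed to come from a sentiment engine.
labeled_reviews = [
    ("Book A", "positive"),
    ("Book A", "positive"),
    ("Book A", "negative"),
    ("Book B", "negative"),
    ("Book B", "negative"),
    ("Book C", "neutral"),
]


def sentiment_distribution(pairs):
    """SA-Query 1 pattern: share of each sentiment label over all reviews."""
    counts = Counter(label for _, label in pairs)
    total = sum(counts.values())
    return {
        label: counts[label] / total
        for label in ("negative", "neutral", "positive")
    }


def top_book(pairs, label):
    """SA-Query 2 and SA-Query 3 pattern: book with the most reviews of a label."""
    counts = Counter(title for title, review_label in pairs if review_label == label)
    return counts.most_common(1)[0]


distribution = sentiment_distribution(labeled_reviews)
most_positive = top_book(labeled_reviews, "positive")
most_negative = top_book(labeled_reviews, "negative")
print(distribution, most_positive, most_negative)
```

SA-Query 1 depends directly on every individual label, whereas SA-Query 2 and SA-Query 3 only depend on which book ranks first, which makes them less sensitive to small classification differences between engines.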

Visualization—Data Visualization: Finally, we have visualized the obtained results of the performed queries as well as the sentiment analysis operations using the corresponding visualization tool of each stack.

5.2. Results and Discussion

5.2.1. Scalability

One key metric to compare data stacks in the context of big data is scalability, which refers to the system’s ability to handle increasing data volumes and workload demands efficiently. To assess the scalability of the three data stacks, we conducted a series of experiments measuring the execution time of each step in a unified data pipeline, built using the respective stack technologies. The pipeline consisted of five stages: data ingestion, preprocessing, structured querying, sentiment analysis (SA), and visualization. Each stage was executed using a dataset of consistent size and structure across all stacks, ensuring comparability. The execution times were recorded under similar computing conditions, and included the time required for data transport, transformation, computation, and result generation. To ensure reproducibility and fairness in comparing stack scalability, all experiments were executed under the same computing environment. The implementations were deployed on a virtual machine running Ubuntu 22.04 LTS, equipped with 16 vCPUs, 64 GB RAM, and 500 GB SSD storage. No dedicated GPU was used. Hadoop (Cloudera CDP 7.x), the Modern Data Stack (Docker-based deployment including Airbyte, MinIO, Starburst, Airflow), and the Cloud-based stack (Azure Data Factory, Azure Databricks, Azure Synapse Analytics, and Power BI) were all configured with equivalent resource allocations. Each experiment was executed three times, and the reported times represent averaged results. The obtained results are shown in Table 3.
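The measurement protocol described above (three runs per stage, averaged) can be sketched as a small timing harness. This is an illustrative sketch only: the helper name `time_stage` and the placeholder workload are hypothetical, and the actual experiments timed full stack-specific jobs rather than in-process Python functions.

```python
import time
from statistics import mean


def time_stage(stage, runs: int = 3) -> float:
    """Run a stage several times and return the mean wall-clock time in seconds."""
    durations = []
    for _ in range(runs):
        start = time.perf_counter()
        stage()
        durations.append(time.perf_counter() - start)
    return mean(durations)


def placeholder_preprocessing():
    # Stand-in workload for a real pipeline stage.
    sum(i * i for i in range(100_000))


stages = {"preprocessing": placeholder_preprocessing}
timings = {name: time_stage(stage) for name, stage in stages.items()}

for name, seconds in timings.items():
    print(f"{name}: {seconds:.4f} s (mean of 3 runs)")
```

Using a monotonic clock such as `time.perf_counter` avoids distortions from system clock adjustments. Reporting the spread across runs alongside the mean would additionally indicate how stable each measurement is.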

5.2.2. Accuracy

Another key metric we have evaluated is accuracy, which reflects the correctness and reliability of the outcomes produced by each data stack. Specifically, we assessed accuracy in two main phases of the big data value chain: preprocessing and analysis. For preprocessing, accuracy was measured by comparing the proportion of correctly cleaned and structured records against a validated ground truth dataset. This included detecting and resolving missing values, inconsistencies, and formatting errors. For the analysis tasks, we evaluated the accuracy of both structured querying and sentiment analysis operations. Sentiment analysis was evaluated by measuring the percentage of correctly classified text samples based on predefined sentiment labels (positive, neutral, negative) using a manually verified benchmark set.
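The accuracy measure described above reduces to the percentage of outputs that match a verified reference. A minimal sketch follows, assuming predictions and reference labels are aligned lists; the function name and the sample labels are hypothetical and are not drawn from the benchmark set used in the study.

```python
def accuracy(predicted, expected) -> float:
    """Percentage of positions where the prediction matches the reference label."""
    if len(predicted) != len(expected):
        raise ValueError("predicted and expected must have the same length")
    if not expected:
        raise ValueError("benchmark set is empty")
    correct = sum(p == e for p, e in zip(predicted, expected))
    return 100.0 * correct / len(expected)


# Hypothetical manually verified labels and engine outputs.
benchmark_labels = ["positive", "neutral", "negative", "positive"]
predicted_labels = ["positive", "neutral", "positive", "positive"]

score = accuracy(predicted_labels, benchmark_labels)
print(f"accuracy: {score:.2f}%")
```

The same function applies to the preprocessing stage if each cleaned record is compared against its counterpart in the ground truth dataset.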

Table 4 shows the obtained accuracy rates for the different processes of the three big data stacks.

The high accuracy values obtained in Table 4 can be explained by the nature of the tasks being evaluated. For data cleaning, the accuracy corresponds to the proportion of correctly standardized, deduplicated, and validated records when compared with a manually verified subset of the dataset. Minor differences between stacks (e.g., 99.89–99.94%) arise from variations in how each tool handles borderline cases such as conflicting duplicate entries or irregular formatting.

For sentiment analysis, the two queries that reached 100% accuracy (SA-Query 2 and SA-Query 3) are deterministic, counting-based operations. These tasks identify the books with the greatest number of positive or negative reviews, and the results can be directly validated by reviewing the counted occurrences in the dataset. Because these queries do not rely on probabilistic classification, they naturally achieve 100% correctness across all stacks.

Although the sentiment-classification outputs generated by the different engines (Spark NLP, Azure Databricks sentiment API, and PyStarburst) show slight variations, these differences do not significantly alter the identification of the books with the highest proportion of positive or negative reviews. These particular queries were intentionally chosen to verify whether variations in the Modern Data Stack could lead to major discrepancies in downstream results. It is worth mentioning that the primary objective here is not to benchmark the intrinsic accuracy of each individual stack, but to highlight the extent to which differences between the stacks may or may not lead to divergences in the produced results. Based on our observations, the minor classification differences do not produce misleading or contradictory outcomes. All stacks consistently identify the same books as having the most positive or most negative reviews.

Overall, the accuracy levels reflect both the deterministic nature of certain queries (leading to 100% accuracy) and the small, tool-specific variations in NLP processing during SA-Query 1, which remain too minor to affect the final conclusions.

5.2.3. Ease of Integration

Hadoop: The use of the Cloudera Data Platform simplified the integration process, as it offers a pre-integrated solution that combines all the tools needed for data processing. Using the different tools thus only requires starting them in the Ambari interface. Apache Spark, a fast and general-purpose distributed computing engine, interacts with HDFS to read and write data through Hadoop’s InputFormat and OutputFormat interfaces. This integration allows Spark to directly access data stored in HDFS without data movement overhead. Apache Zeppelin also interfaces with HDFS to enable data exploration and analysis: Zeppelin notebooks execute Spark jobs and Hive queries directly against data residing in HDFS.

Modern Data Stack: The implementation of the modern data stack involves configuring the connections between the different tools. Airbyte provides a Docker container and a REST API through which Apache Airflow can trigger data jobs, allowing data synchronization workflows to be automated and orchestrated within Airflow. For data storage, Airbyte’s configuration allows a MinIO instance to be specified as the destination, which involves providing the MinIO endpoint and access credentials. Triggering SQL queries in Starburst involves configuring a connection to the Starburst cluster within Airflow’s connection settings. Starburst itself typically does not integrate directly with MinIO; instead, it queries data stored in MinIO buckets through the S3 protocol. To achieve this, Starburst is configured with an S3 connector that allows it to communicate with MinIO using the same protocol and interface as it would with Amazon S3. This connector abstracts away the underlying storage mechanism, allowing Starburst to query and analyze data stored in MinIO buckets just like any other data source accessible via S3.

Cloud Data Stack: In our cloud-based data stack implementation on Microsoft Azure, the communication between different components was seamless, facilitated by Azure’s interconnected ecosystem of services and built-in integrations. ADF provides built-in connectors specifically designed to interact with Blob Storage. These connectors enable ADF to perform operations such as reading data from and writing data to Blob Storage. When integrating with Azure Databricks, Azure Data Factory uses its Databricks Linked Service, which acts as a bridge between the two services. This linked service configuration allows ADF to establish a connection to Databricks clusters and execute data processing activities such as running notebooks or submitting jobs. Thus, ADF can trigger Databricks jobs or notebooks based on predefined schedules or event triggers. Similarly, Azure Synapse Analytics can be seamlessly integrated with Azure Data Factory through Synapse Linked Services.

5.2.4. Deployment Costs

Deployment costs vary across the different data stacks, influenced by factors such as tool licensing fees, infrastructure costs, and maintenance expenses. Our evaluation reveals that the modern data stack offers the most cost-effective deployment solution, thanks to its flexibility in tool selection and customization. In contrast, the Hadoop stack and cloud-based stack exhibit higher deployment costs, with the former often requiring substantial hardware investments and the latter involving pay-as-you-go or subscription-based pricing models from cloud providers. Organizations must weigh these cost considerations against their specific use case requirements and budget constraints when selecting a suitable data stack for deployment.

6. Discussion: Scalability, Accuracy, and Cost Implications of Big Data Stacks

As detailed previously, the same data pipeline was executed across the three distinct stacks for comparative analysis. The pipeline first preprocessed the data to clean anomalies such as duplicates and missing values. Subsequently, analysis was conducted by querying the dataset to extract informative insights. Then, sentiment analysis was performed to gain further insights into the dataset, including the distribution of positive, neutral, and negative sentiments and the books with the highest number of positive and negative reviews. Finally, the obtained results were visualized using the corresponding visualization tool of each stack.

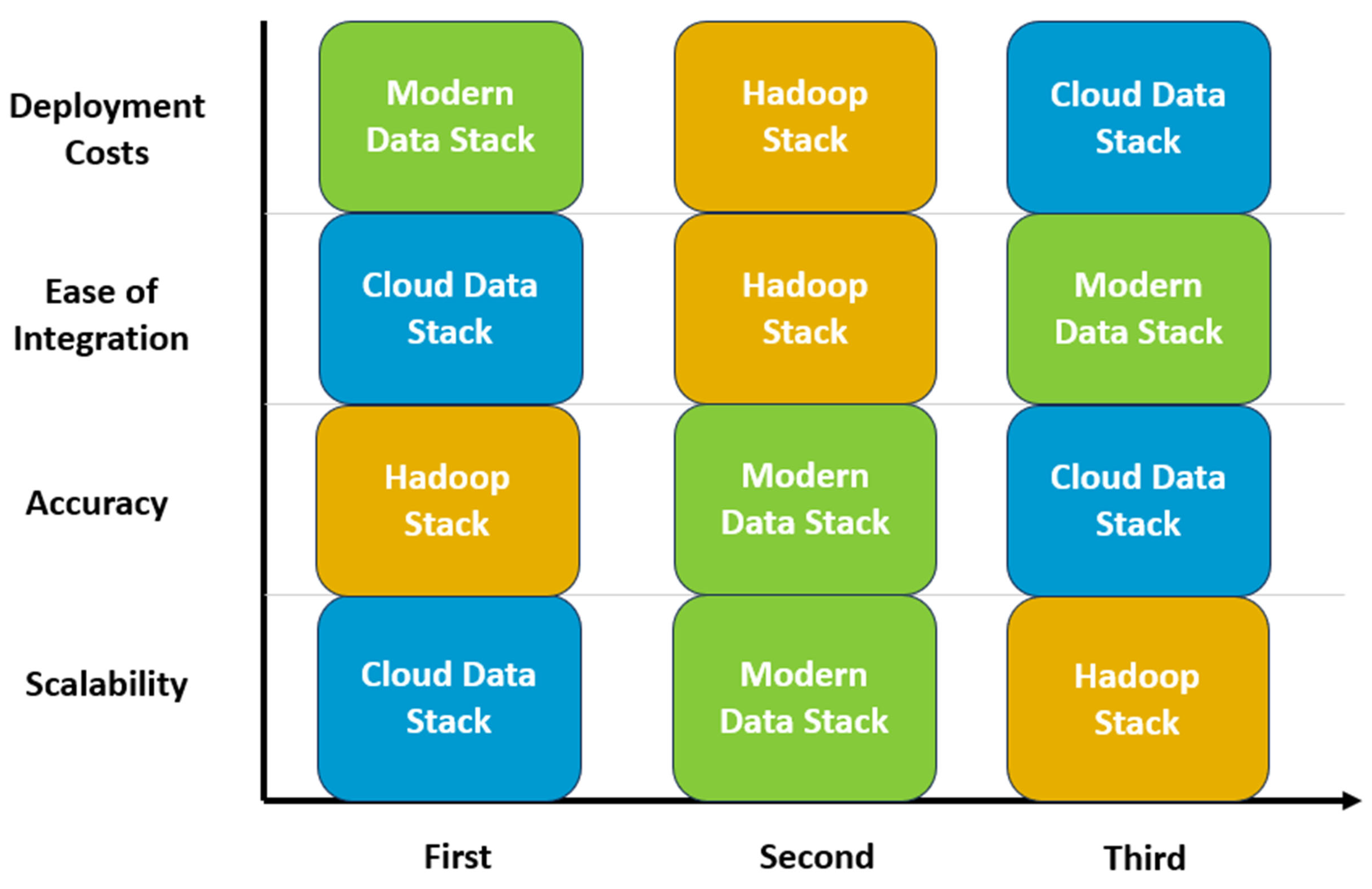

The scalability analysis across the three data stacks reveals distinct performance trends. The Hadoop stack demonstrates moderate scalability, with efficient data loading and preprocessing but encountering challenges in complex analytical tasks, as evidenced by higher execution times in querying and sentiment analysis phases. In contrast, the modern data stack exhibits improved scalability, particularly in preprocessing and querying phases, indicating enhanced capability in handling data transformation and analytical queries. However, scalability limitations become apparent in advanced analytical tasks, reflected in slightly higher execution times for sentiment analysis. Conversely, the cloud-based data stack demonstrates superior scalability across all phases, leveraging the elasticity and scalability of cloud infrastructure to achieve significantly reduced execution times. This scalability advantage positions the cloud-based stack as a favorable choice for organizations requiring scalable big data solutions, offering efficient data processing capabilities across the entire data pipeline. These findings support our architectural analysis, which anticipated the cloud stack’s advantages in elasticity and resource optimization.

Concerning accuracy, the obtained results demonstrate high levels of accuracy across all three data stacks in various tasks, including data cleaning, querying, and sentiment analysis. In the cleaning phase, all stacks achieved exceptionally high accuracy rates, with Hadoop, Modern Data Stack, and Cloud achieving accuracy rates of 99.94%, 99.89%, and 99.93%, respectively. This indicates that the preprocessing techniques utilized in each stack effectively removed anomalies such as duplicate entries and missing values, ensuring data quality and integrity. Similarly, in the querying phase, all stacks returned the same correct query results, thus attaining perfect accuracy rates of 100% across all queries (Q1–Q5). In the first sentiment analysis query (SA-Q1), regarding the distribution of negative, neutral, and positive sentiments over the entire reviews dataset, we observed slightly different accuracy rates among the three data stacks. This variation underscores the nuanced differences in the sentiment analysis techniques employed by each data stack, with Hadoop leveraging sophisticated libraries to achieve the highest accuracy, followed by the Modern Data Stack, while the Cloud-based solution trailed slightly behind, utilizing built-in Azure features. For the two other queries (SA-Q2 and SA-Q3), which involved selecting specific records (books with the greatest number of positive or negative reviews), all three data stacks achieved correct results. This suggests similar accuracy performance across the stacks for queries targeting specific records, whereas slight differences may arise when querying non-specific results such as distributions.

In terms of ease of integration, our assessment ranks the cloud data stack as the most straightforward, thanks to its built-in connectors and integrations that facilitate seamless communication between components. Following closely behind is the Hadoop stack, leveraging the Cloudera Data Platform to provide a pre-integrated solution that simplifies tool usage and configuration, with slightly more complexity than the cloud-based approach. In contrast, the modern data stack presents the most challenging integration process, requiring meticulous configuration of connections between various tools to enable interoperability and workflow automation. Regarding deployment costs, the modern data stack emerges as the most cost-effective option due to its flexible tool selection and customization. In contrast, Hadoop and cloud-based stacks typically incur higher deployment costs, influenced by factors like licensing fees and infrastructure expenses.

It is important to note that performance differences across the three implementations may be partially affected by tool-specific characteristics rather than architectural choices alone. Although each stack was configured to use the same dataset, execution logic, and equivalent computational resources, the tools available within each paradigm differ in maturity, internal optimization, and execution model. To mitigate this limitation, we selected widely adopted and representative tools for each paradigm (Spark for Hadoop, Starburst/Trino for MDS, and Databricks/Synapse for Cloud) and ensured that comparable processing logic was applied to each pipeline stage. While this approach improves practical comparability, we acknowledge that the measured performance reflects an interplay between architecture and tooling rather than architecture in isolation. Nevertheless, all obtained results were within acceptable operational ranges for the chosen workloads, and this comparative study primarily aims to share the practical insights gained from implementing each paradigm end-to-end under realistic conditions.

Figure 10 shows a comparative matrix of the three big data stacks.

Overall, the choice of a data stack should be guided by a holistic assessment that takes into account multiple factors such as costs, scalability, the evolving size of data, architectural requirements, accuracy, and availability of technical resources. While each data stack has its strengths and weaknesses, organizations must weigh these considerations against their specific use case requirements and long-term strategic objectives. For example, while the modern data stack offers flexibility and customization options, it may require more technical expertise and effort to integrate and manage effectively. On the other hand, the cloud-based data stack provides scalability and ease of deployment but may incur higher costs over time, particularly for large-scale deployments. Therefore, a comprehensive evaluation considering all these factors is essential to make an informed decision that aligns with the organization’s goals and constraints. These observations pave the way for the conclusions and future directions presented in Section 7, where we reflect on the broader implications of our findings and propose avenues for further research.
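One way to make such a holistic assessment concrete is a weighted decision matrix. The following Python sketch is only an illustration: the function name `rank_stacks`, the 1–3 ordinal scores, and the weights are all placeholders that loosely follow the qualitative ordering discussed in this section. They are not measured results, and an organization would substitute its own criteria and values.

```python
def rank_stacks(scores, weights):
    """Rank stacks by the weighted average of their per-criterion scores."""
    total_weight = sum(weights.values())
    totals = {
        stack: sum(weights[c] * per_criterion[c] for c in weights) / total_weight
        for stack, per_criterion in scores.items()
    }
    # Highest weighted score first.
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)


# Placeholder ordinal scores (3 = most favorable); illustrative only, not measurements.
scores = {
    "hadoop": {"scalability": 1, "integration": 2, "cost": 1},
    "modern": {"scalability": 2, "integration": 1, "cost": 3},
    "cloud": {"scalability": 3, "integration": 3, "cost": 1},
}

# Two hypothetical organizational profiles with different priorities.
cost_sensitive = {"scalability": 1, "integration": 1, "cost": 3}
scale_first = {"scalability": 3, "integration": 2, "cost": 1}

print(rank_stacks(scores, cost_sensitive))
print(rank_stacks(scores, scale_first))
```

With these placeholder values, the cost-sensitive profile ranks the modern data stack first, whereas the scale-first profile ranks the cloud-based stack first. The point of the sketch is that the preferred stack depends on how an organization weights the criteria, not that any particular ranking holds in general.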

7. Conclusions

This study has provided an in-depth comparative analysis of three dominant big data stack paradigms across the full data value chain, from acquisition to exposition. By evaluating both architectural design and experimental implementation, we identified clear distinctions in scalability, accuracy, integration complexity, and operational costs. Notably, our results highlight that cloud-based stacks outperform the other two paradigms in scalability and automation, while the modern data stack excels in flexibility and cost-efficiency, particularly in hybrid environments. Conversely, the Hadoop stack, though robust, suffers from high latency and infrastructure overhead, limiting its suitability for real-time applications.

Based on these findings, we propose several directions for future research:

- We plan to conduct longitudinal evaluations to observe how the three data stacks evolve over time under varying workloads, growing data volumes, and shifting operational constraints.

- We intend to explore emerging technologies, such as data lakehouses, serverless analytics, and AI-native pipelines, which show potential to unify batch and real-time processing within a single architecture.

- We also seek to investigate the influence of evolving regulatory frameworks and data sovereignty policies on the adoption, design, and governance of big data infrastructures.

Ultimately, this study offers a practical reference point for organizations and researchers seeking to navigate the increasingly complex and fragmented big data ecosystem. By aligning technical choices with strategic goals, stakeholders can make more informed decisions and build data infrastructures that are both scalable and future-proof.