1. Introduction

The penetration of wind power in energy networks is gaining momentum, as shown by the trend in installed capacity, with sustainability and security of supply being crucial goals in green energy transition roadmaps. Consequently, future decarbonization scenarios depend on wind energy exploitation for a plurality of reasons. Among them, it is worth mentioning the growth of power-per-unit in wind turbines (WTs) [1], wind farm maturity, and the quest for Levelized Cost of Energy (LCOE) competitiveness, mostly in floating offshore configurations [2,3,4]. Enhancing the overall efficiency, reliability, and availability of wind farms is critical [5], in view of wind power becoming the backbone of modern electric grids. Recent developments focus on multiple possible outcomes, from layout optimization to reduce wake losses [6], to modeling blade erosion to evaluate energy losses [7], up to novel data-driven techniques to efficiently manage Operation and Maintenance (O&M) in large wind farm fleets [8,9].

Notably, from an economic viewpoint, O&M costs can account for 30% to 40% of a wind farm's life-cycle cost in offshore installations, with the most frequent faults affecting the electric and control systems, followed by blades and hydraulic groups [10,11]. In addition, failures (typically in generators and gearboxes) entail high repair and replacement costs and result in long downtimes with significant loss of production.

The task of operation monitoring is customarily delegated to sensor networks in Supervisory Control And Data Acquisition (SCADA) systems, which collect, store, and display all relevant parameters of individual wind turbines, substations, and meteorological stations. In the wind technology arena, SCADA data are usually collected at 10-min intervals, with standard systems providing four statistical measures (mean, standard deviation, maximum, and minimum) for hundreds of wind turbine signals, together with information about energy output, availability, and error logs [12].
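As a rough illustration of this 10-min aggregation, the following Python sketch condenses a synthetic 1 Hz signal into the four statistics listed above. The 1 Hz source rate, the signal name `wind_speed`, and the values are assumptions made purely for illustration; actual SCADA systems may aggregate differently.

```python
import numpy as np
import pandas as pd

# Synthetic 1 Hz wind speed signal over one hour (illustrative values only).
index = pd.date_range("2016-01-01 00:00:00", periods=3600, freq="s")
rng = np.random.default_rng(0)
raw = pd.Series(8.0 + rng.normal(0.0, 1.0, size=len(index)), index=index, name="wind_speed")

# Condense each 10-min interval into the four statistics stored by standard SCADA systems.
scada_10min = raw.resample("10min").agg(["mean", "std", "max", "min"])
print(scada_10min)
```

Each row of `scada_10min` then corresponds to one 10-min SCADA record of this signal.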

Modern approaches to O&M challenges, therefore, advocate Condition-Based Monitoring (CBM) strategies capable of the early detection and isolation of incipient faults through a variety of methods [13]. Owing to the lack of a comprehensive physical or mathematical model of WT operations, CBM techniques must rely heavily on data-driven methods based on 10-min SCADA data (see [14] for a systematic review), since probabilistic approaches fail to properly model temporal dependencies in sensor networks [15]. As a consequence, wind turbine prediction science is rapidly developing around the paradigm of exploiting high-frequency SCADA datasets to nowcast potential faults, the main ingredient of CBM strategies, and to forecast energy production in order to manage responsive wind farm operations.

Data-driven tools emerge as key ingredients not only from an O&M perspective but also, and possibly more importantly, in asset integrity management. Since wind energy assets are made of numerous individual components with possibly different useful lives, component-specific life extension strategies can only rely on data-driven tools designed to integrate prediction techniques with the daily operation of power systems [16]. The ability to predict wind farm operations with good accuracy gives wind power the flexibility required for grid-connected or off-grid uses (e.g., power-to-X applications) and enables cost-efficient operation and maintenance as well as asset condition assessment.

That said, CBM strategies suffer from several limitations, as they depend on the processing of vast quantities of SCADA data. These datasets consist of raw data with a degree of unreliability, containing erroneous data in the form of inaccurate and incomplete entries, missing values, or noisy sensor measurements [17]. Such abnormal entries may directly affect the data-driven strategies [18] that process signals from the turbine sensor network; moreover, handling large amounts of data can lead to overfitting and make processing highly time-consuming [19].
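A minimal sketch of basic integrity checks addressing these issues, assuming one 10-min signal stored in a pandas `Series`. The helper `flag_data_quality`, the physical bounds, and the stuck-sensor run length are hypothetical choices rather than part of the original framework.

```python
import numpy as np
import pandas as pd


def flag_data_quality(signal: pd.Series, lower: float, upper: float,
                      max_flat_run: int) -> pd.DataFrame:
    """Flag missing, out-of-range, and frozen samples in one 10-min SCADA signal.

    `lower`, `upper`, and `max_flat_run` are hypothetical, user-chosen thresholds.
    """
    missing = signal.isna()
    out_of_range = signal.notna() & ((signal < lower) | (signal > upper))
    # Label runs of identical consecutive values; NaN never equals its neighbour,
    # so each missing sample forms its own run.
    run_id = (signal != signal.shift()).cumsum()
    run_length = run_id.map(run_id.value_counts())
    # A value repeated for at least `max_flat_run` records suggests a stuck sensor.
    frozen = signal.notna() & (run_length >= max_flat_run)
    return pd.DataFrame({"missing": missing, "out_of_range": out_of_range, "frozen": frozen})


# Usage: a hypothetical bearing temperature in degrees Celsius.
temps = pd.Series([20.0, 21.0, np.nan, 150.0, 22.0, 22.0, 22.0, 22.0])
flags = flag_data_quality(temps, lower=-20.0, upper=120.0, max_flat_run=4)
print(flags)
```

Flagged samples can then be removed or imputed before the data reach any learning model.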

In addition to data curation, a second significant challenge in designing data-driven methods is accounting for the mutual non-linearity of the signals and the causal dependencies among WT components, which prompts the use of Artificial Neural Network (NN) models [20].

When looking at early fault detection, the customary assumption is that failure occurrences reflect a change in the correlation among SCADA signals. In this respect, NNs are used to learn an operator describing normal operations (so-called normal behavior models), against which incipient faults can be detected by analyzing the deviation of real-time data from the nowcasted values, which results in high reconstruction errors (in the multivariate framework of interest). A survey of the open literature shows that several machine learning methods [21], as well as multiple neural architectures, have been proposed as tools to capture time series anomalies based on prediction errors in the regression of a univariate target variable from a multivariate input. To mention but a few, the proposed approaches include Convolutional NNs (CNNs) [22] and NN-based space–time fusion combining convolutional kernels with recurrent units, such as Recurrent NNs (RNNs) or Gated Recurrent Units (GRUs) [23,24,25]. Typical shortcomings of these neural architectures range from the limited capability of regressing the general space–time domain dynamics (i.e., CNNs) to possible error accumulation and high computational costs of recurrent NNs due to sequential learning [26].

Recently, autoencoders (AEs) have been proposed as an alternative to regression models in anomaly detection, in view of their ability to extract salient features of normal operating conditions from multivariate time series (e.g., deep AEs, denoising AEs, and CNN-based AEs [27,28,29,30,31]).

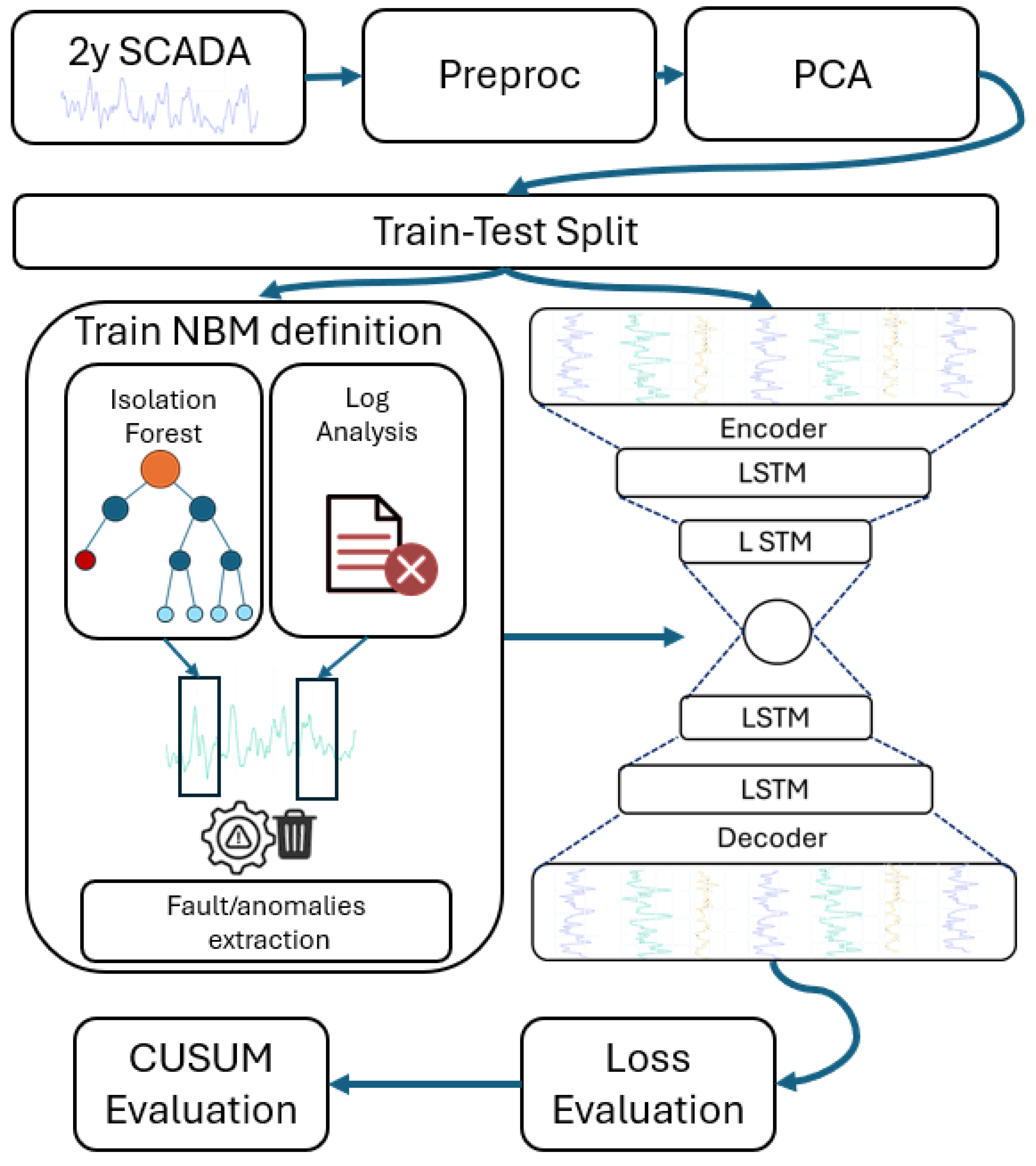

In this paper, we propose a multi-modal anomaly detection framework orchestrating an unsupervised module (i.e., a tree-based method, the Isolation Forest) with a supervised one (i.e., a BI-LSTM AE). The proposed framework processes a dataset containing not only raw SCADA signals but also detailed logs of failure events and alarms triggered by the SCADA system on a wide range of subsystems, from gearboxes to transformers and generators. These logs prove instrumental in validating the model's findings and defining the reconstruction task for the autoencoder. Before being fed into the BI-LSTM AE, the SCADA data undergo a series of crucial preprocessing steps, from data cleaning to data integrity checks. Next, feature engineering techniques are employed to enhance the information content of the dataset by creating new derived variables. Finally, Principal Component Analysis (PCA) is applied to reduce the dimensionality of the data, retaining the principal components that capture 98% of the total variance. To complement the warning log information, an unsupervised anomaly detection method, i.e., an Isolation Forest algorithm, labels additional anomalies emerging from the preprocessed dataset. To perform anomaly detection, the AE is trained to learn the Normal Behavior Model (NBM) of the system, and warnings are triggered by evaluating indicators based on the model's reconstruction errors. We tested the model on SCADA data gathered from one WT of an offshore wind farm located in the Gulf of Guinea, covering a two-year period [32]. Results show that the proposed model successfully predicts 21 out of 23 SCADA log alarms involving some of the most critical components, with 4 false alarms triggered.
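The 98% variance criterion maps directly onto scikit-learn's `PCA`, where a fractional `n_components` keeps the smallest number of components whose cumulative explained variance exceeds that fraction. The sketch below uses synthetic correlated signals as a stand-in for the 30 preprocessed SCADA variables; it illustrates the criterion and is not the authors' implementation.

```python
import numpy as np
from sklearn.decomposition import PCA

# Synthetic stand-in for a preprocessed SCADA matrix: 1000 records of 30
# signals driven by 5 hidden factors plus a little noise.
rng = np.random.default_rng(42)
factors = rng.normal(size=(1000, 5))
mixing = rng.normal(size=(5, 30))
X = factors @ mixing + 0.05 * rng.normal(size=(1000, 30))

# A float in (0, 1) keeps the smallest number of components whose cumulative
# explained variance exceeds that fraction (here 98%).
pca = PCA(n_components=0.98, svd_solver="full")
X_reduced = pca.fit_transform(X)
print(X_reduced.shape, round(pca.explained_variance_ratio_.sum(), 4))
```

Because the synthetic signals share only five underlying factors, at most five components are needed to reach the 98% target.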

This article is a revised version of a paper presented at the 16th European Conference on Turbomachinery Fluid dynamics & Thermodynamics, Hannover, 24–28 March 2025 [33].

The rest of the paper is organized as follows. In Section 2, we present the proposed BI-LSTM autoencoder neural architecture together with the building blocks of the deep anomaly detection framework. Section 3 describes the case study, and Section 4 presents the obtained results. Finally, Section 5 summarizes the conclusions.

3. Case Study

Data are derived from one turbine of an offshore wind farm located in the Gulf of Guinea and cover the period from January 2016 to December 2017. The turbine considered, rated class 2 according to the IEC 61400 standard [45], is bottom-fixed, with a rotor diameter of 90 m, a maximum rotor speed of 14.9 rpm, and a rated power of 2 MW at a nominal wind speed of 12 m/s.

Table 1 summarizes the technical specifications of the wind turbine under scrutiny.

Data recording and visualization are made possible by a dense network of sensors located throughout the nacelle of the wind turbine. Sensors for wind speed, wind direction, shaft rotation speed, and numerous other quantities collect data and transfer them to the Programmable Logic Controller (PLC), enabling operators to control the generation profile in real time. WTs are equipped with a SCADA system for monitoring multiple parameters collected from the main components, together with ambient measurements, which are recorded every 10 min in terms of the mean, minimum, maximum, and standard deviation. From the complete dataset, which includes 84 features, 30 signals have been selected, as listed in Table 2. The features excluded from the analysis are the minima, maxima, and standard deviations of the original features, along with most grid-related variables (such as frequency and reactive power on the grid side) and the active and reactive power for each phase of the generator. In the table, “Temp.” is short for “temperature”, “gen.” abbreviates “generator”, and “trans.” stands for “transformer”. Regarding bearing locations, two bearings are monitored at the generator: one at the drive end (DE) and one at the non-drive end (NDE). At the gearbox, a bearing is monitored at the high-speed shaft (HSS).

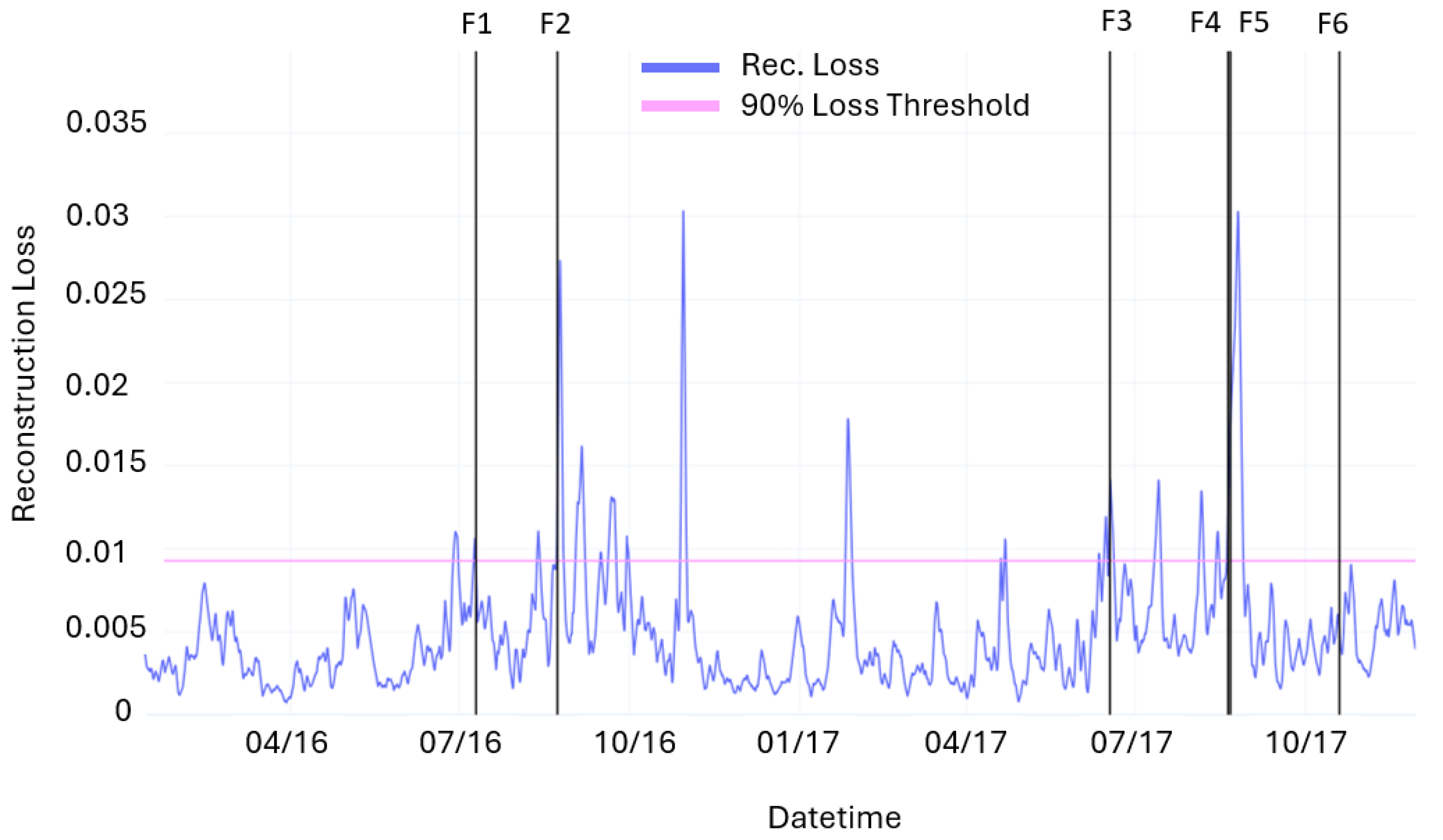

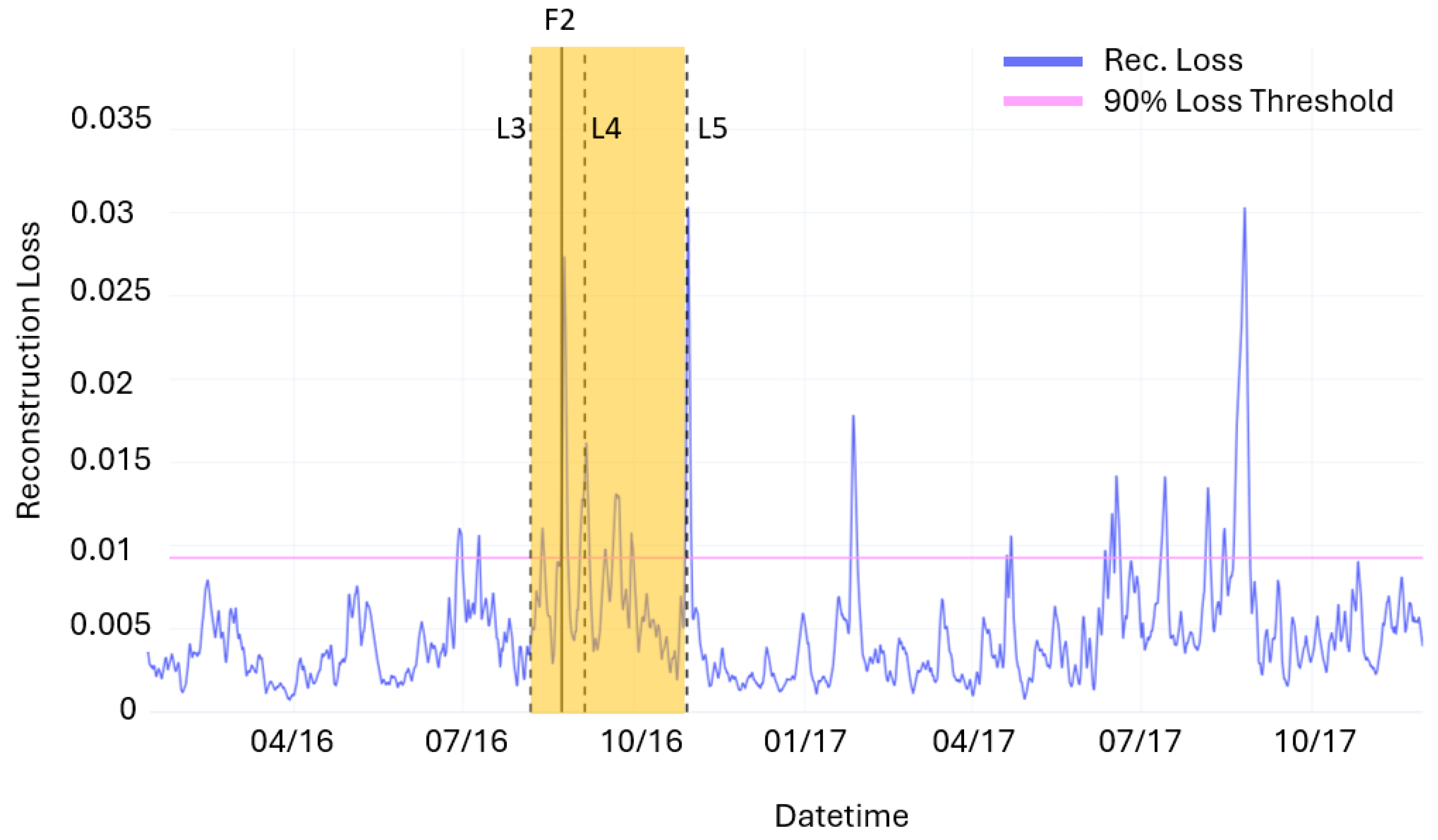

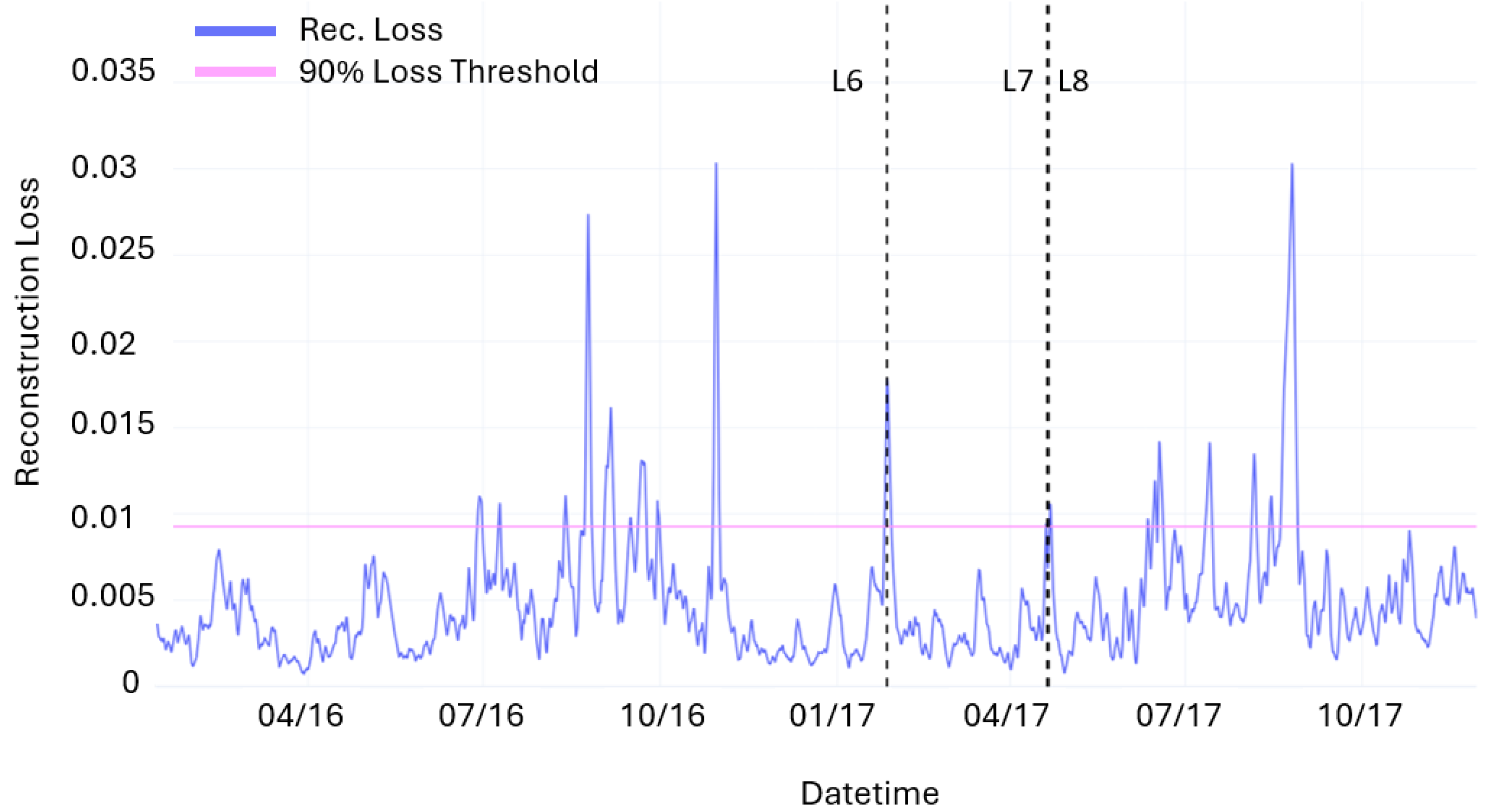

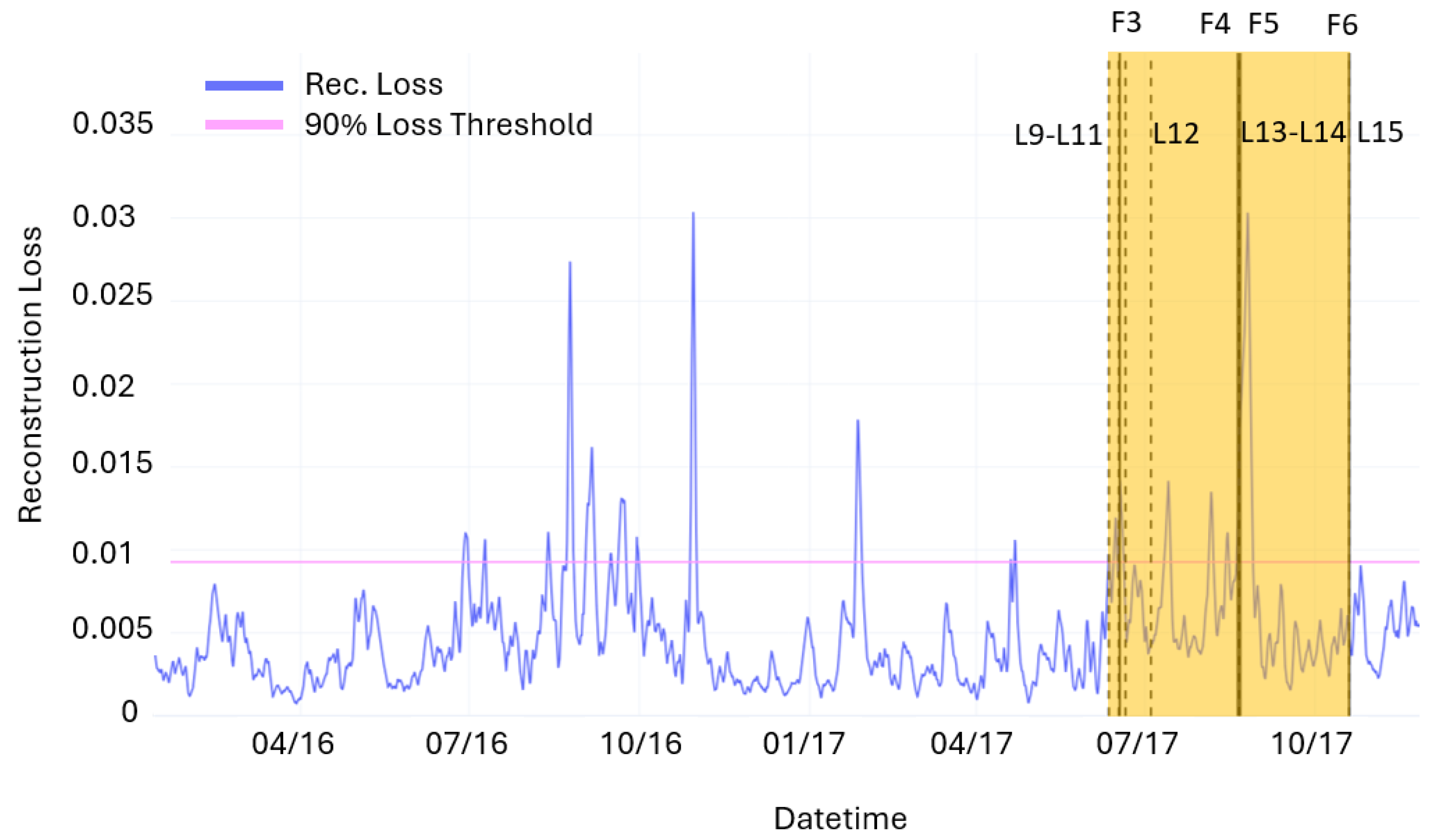

Datasets are completed with event logs recording the list of alarms during the period of interest as summarized in

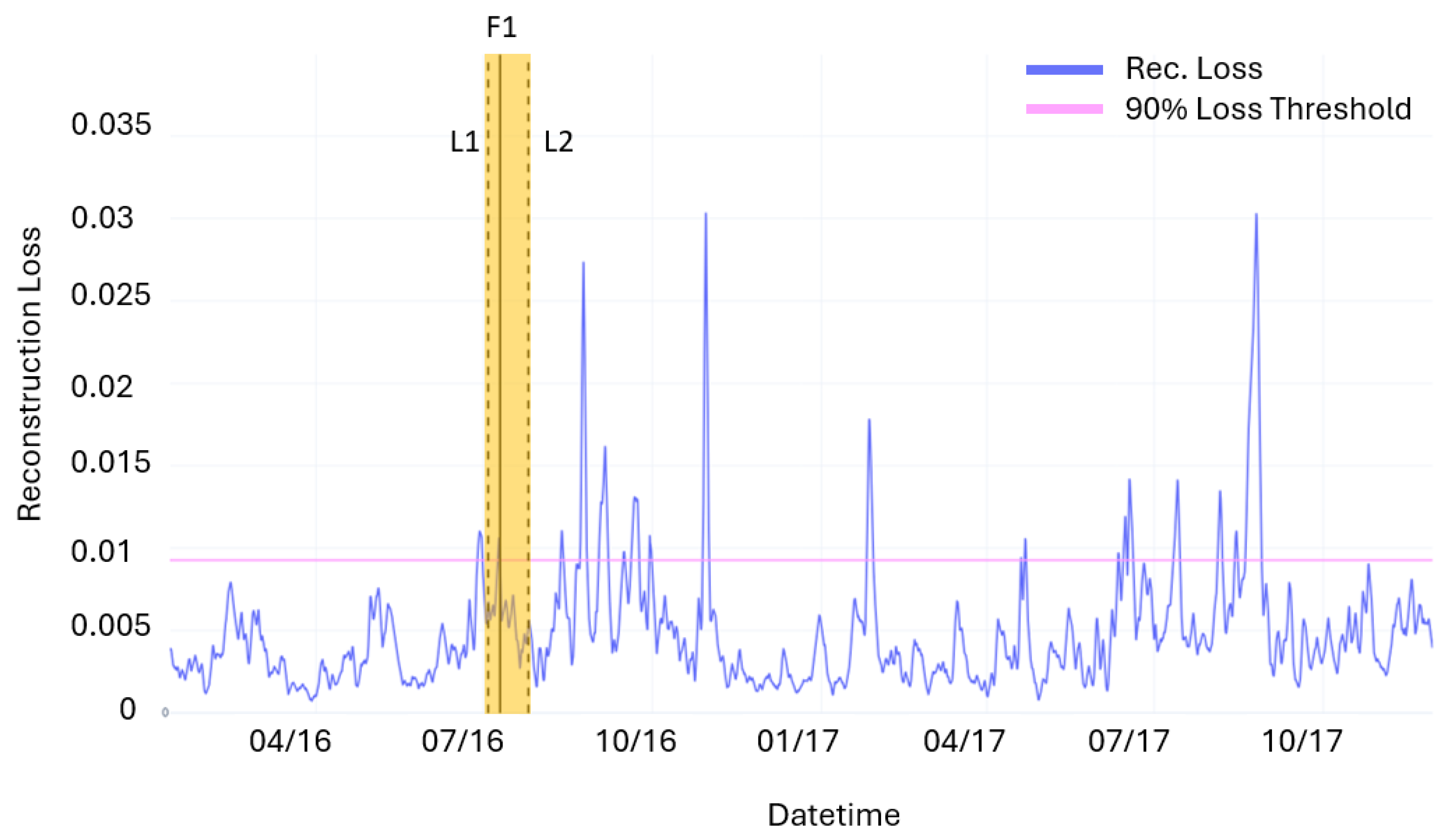

Table 3. The lists counting 21 events include all the events considered in the present anomaly detection study. These are obtained from the list of failure events made available by the files “Historical-Failure-Logbook-2016” and “opendata-wind-failures-2017” (denoted by the letter F in the table) and from the event log (denoted by the letter L in the table) contained in the files “Wind-Turbines-Logs-2016” and “Wind-Turbines-Logs-2017”, which includes all events recorded by the SCADA system on the wind turbine during the reported period [

32]. Following Reliawind turbine taxonomy [

46], the event log includes anomalies categorized at assembly and sub-assembly levels but only a few alarms at the component/part level. In addition, we filtered out all false alarms and minor events not leading to repair or replacement actions. When looking closely at the alarm logs, it is noticeable that the majority of events relate to WT drive train and electric sub-systems. In particular, the turbine had seven alarms relating to high temperature of the transformer (10 July and 23 August 2016) and a major failure of the generator on 21 August 2017.

For model training, the portion of the dataset spanning January 2016 to June 2017 is used. Moreover, the periods surrounding recorded failures and anomalies detected by the Isolation Forest (IF) are removed from the training dataset. Specifically, for anomalies detected by the IF, the 24 h preceding and following each event are excluded, while for failures, the 24 h preceding and the 72 h following each failure are excluded.
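The exclusion rules can be sketched as a boolean mask over the 10-min timestamps. The helper `exclusion_mask`, the event times, and the inclusive window bounds are illustrative assumptions.

```python
import pandas as pd


def exclusion_mask(index: pd.DatetimeIndex, events, before: pd.Timedelta,
                   after: pd.Timedelta) -> pd.Series:
    """True for timestamps in [event - before, event + after] around any event."""
    mask = pd.Series(False, index=index)
    for t in pd.to_datetime(events):
        mask = mask | ((index >= t - before) & (index <= t + after))
    return mask


# One month of 10-min timestamps and hypothetical event times.
index = pd.date_range("2016-01-01 00:00", "2016-01-31 23:50", freq="10min")
if_anomalies = ["2016-01-05 12:00"]   # flagged by the Isolation Forest
failures = ["2016-01-20 00:00"]       # taken from the failure log

excluded = (
    exclusion_mask(index, if_anomalies, pd.Timedelta(hours=24), pd.Timedelta(hours=24))
    | exclusion_mask(index, failures, pd.Timedelta(hours=24), pd.Timedelta(hours=72))
)
train_index = index[~excluded.to_numpy()]
print(int(excluded.sum()), len(train_index))
```

With inclusive bounds, the 48 h window around the IF anomaly covers 289 records and the 96 h window around the failure covers 577 records.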

5. Conclusions

The present work proposes a semi-supervised anomaly detection framework for horizontal-axis wind turbines, based on artificial intelligence techniques applied to SCADA data. Specifically, real-world open data provided by EDP Renewables, collected from offshore wind turbines operating in the Gulf of Guinea, are used. In addition to SCADA data covering two years of operations of a single turbine, event and failure logs, also made available in open format, are considered.

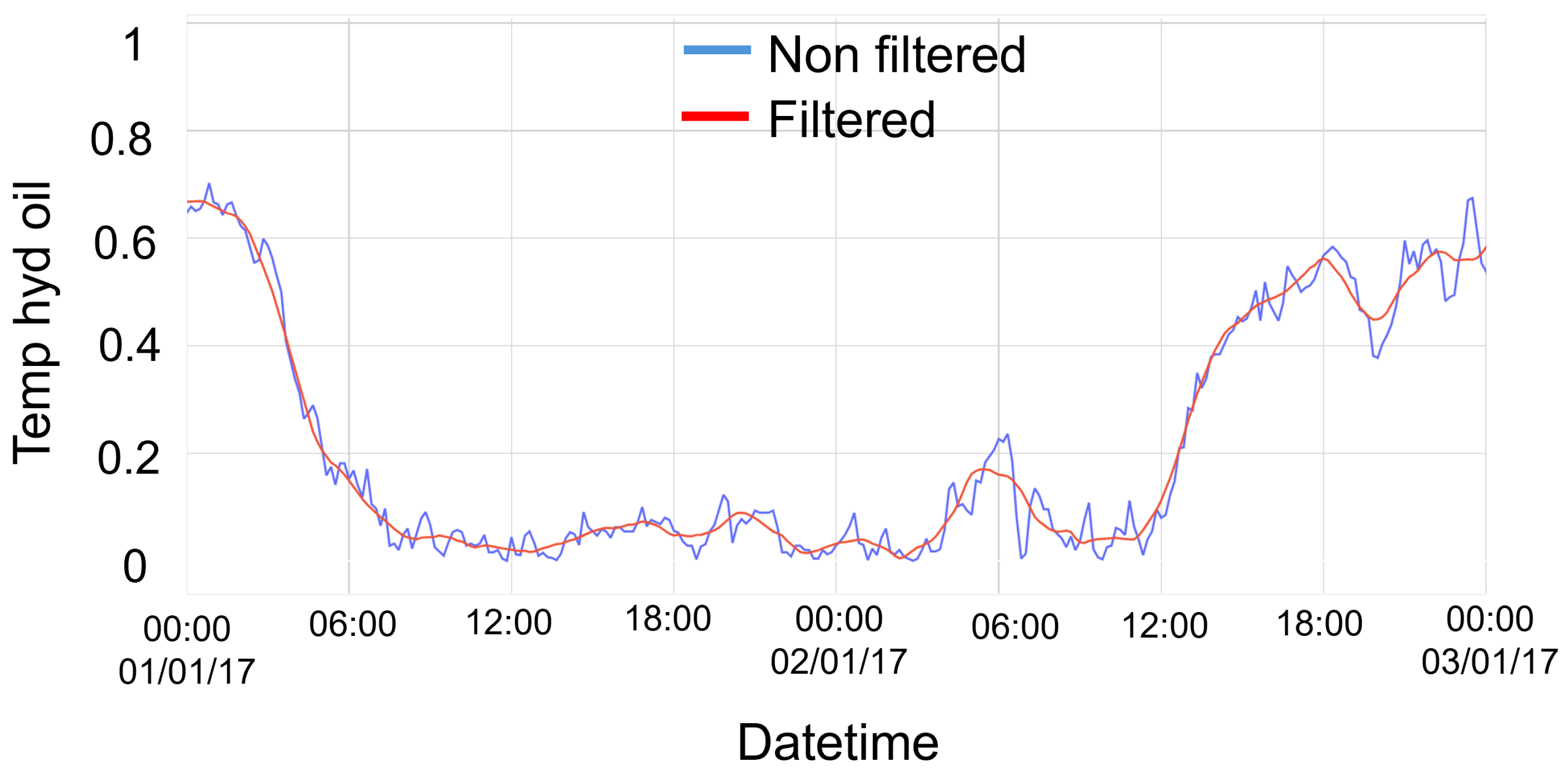

The first step of the work consists of preprocessing the SCADA data. Specifically, a subset of 30 significant variables is selected. The Savitzky–Golay filter is applied to smooth the time series and reduce noise. The ambient temperature is subtracted from all temperature signals to eliminate the strong seasonal trend in the data. After the data are scaled using MinMax scaling, the strong dependency of the operational variables on the wind speed (e.g., power, rotational speed, or drive-train temperatures) is neutralized in a similar fashion. From the resulting dataset, the first 70% is selected as the training dataset so that, during inference, the model is exposed to data different from those on which it was trained.
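A minimal sketch of this preprocessing chain follows, assuming the data sit in a pandas `DataFrame` indexed by timestamp. The Savitzky–Golay window length and polynomial order, the column names, and the reading of "neutralized in a similar fashion" as subtracting the scaled wind speed from wind-dependent signals are all assumptions; the scaler is fitted before the chronological split, mirroring the order described in the text.

```python
import numpy as np
import pandas as pd
from scipy.signal import savgol_filter
from sklearn.preprocessing import MinMaxScaler


def preprocess(df, temp_cols, ambient_col, wind_col, wind_dependent_cols,
               window_length=11, polyorder=2, train_fraction=0.7):
    """Sketch of the preprocessing chain; settings and column names are hypothetical."""
    # 1) Savitzky-Golay smoothing of each signal along the time axis.
    smoothed = pd.DataFrame(
        savgol_filter(df.to_numpy(dtype=float), window_length, polyorder, axis=0),
        index=df.index, columns=df.columns,
    )
    # 2) Remove the seasonal trend: subtract ambient temperature from component temperatures.
    smoothed[temp_cols] = smoothed[temp_cols].sub(smoothed[ambient_col], axis=0)
    # 3) Min-max scaling of every signal to [0, 1] (fitted on the full dataset, as in the text).
    scaled = pd.DataFrame(MinMaxScaler().fit_transform(smoothed),
                          index=smoothed.index, columns=smoothed.columns)
    # 4) Assumed reading of "neutralized in a similar fashion": subtract the scaled wind speed.
    scaled[wind_dependent_cols] = scaled[wind_dependent_cols].sub(scaled[wind_col], axis=0)
    # 5) Chronological split: the first `train_fraction` of the records is used for training.
    n_train = int(train_fraction * len(scaled))
    return scaled.iloc[:n_train], scaled.iloc[n_train:]


# Usage on synthetic 10-min data (illustrative values only).
idx = pd.date_range("2016-01-01", periods=200, freq="10min")
rng = np.random.default_rng(1)
wind = 8 + 2 * np.sin(np.linspace(0, 6, 200)) + rng.normal(0, 0.3, 200)
ambient = 25 + rng.normal(0, 0.5, 200)
df = pd.DataFrame({
    "wind_speed": wind,
    "power": 150 * wind + rng.normal(0, 20, 200),
    "gen_bearing_temp": ambient + 15 + 0.5 * wind,
    "ambient_temp": ambient,
}, index=idx)
train, test = preprocess(df, ["gen_bearing_temp"], "ambient_temp",
                         "wind_speed", ["power"])
print(train.shape, test.shape)
```

Keeping the split chronological, rather than shuffling, ensures that the inference period lies entirely after the training period.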

The reconstruction loss of an autoencoder based on Bidirectional Long Short-Term Memory (BI-LSTM) cells is chosen as the alarm signal. Since the autoencoder must be trained on time series representative of the normal behavior of the wind turbine, the interval from 24 h before to 72 h after each failure event is removed from the training dataset. Moreover, the unsupervised Isolation Forest method is used to eliminate additional anomalous operating points from the training data: with the contamination rate set to 0.001, the intervals from 24 h before to 24 h after each detected anomaly are removed. The resulting dataset is then used to train the autoencoder, whose encoder consists of a BI-LSTM layer that maps the input data into a 128-dimensional latent space, while the decoder reconstructs the input sequence from this latent representation.
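The Isolation Forest step can be sketched with scikit-learn's `IsolationForest`, using the contamination rate of 0.001 reported above. The synthetic data, the two injected outliers, and the column names `pc0` to `pc3` are hypothetical; the flagged timestamps would then feed the 24 h exclusion windows.

```python
import numpy as np
import pandas as pd
from sklearn.ensemble import IsolationForest

# Synthetic stand-in for the preprocessed dataset: 5000 ten-minute records of
# four hypothetical principal components, with two outliers injected.
rng = np.random.default_rng(0)
idx = pd.date_range("2016-01-01", periods=5000, freq="10min")
X = pd.DataFrame(rng.normal(size=(5000, 4)), index=idx,
                 columns=["pc0", "pc1", "pc2", "pc3"])
X.iloc[[1000, 3000]] += 8.0

# contamination=0.001 flags roughly 0.1% of the records as anomalous.
iso = IsolationForest(contamination=0.001, random_state=0)
labels = iso.fit_predict(X)           # -1 = anomaly, 1 = normal
anomaly_times = X.index[labels == -1]
print(anomaly_times)
```

With 5000 records, a contamination of 0.001 corresponds to about five flagged points, so the threshold stays very conservative.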

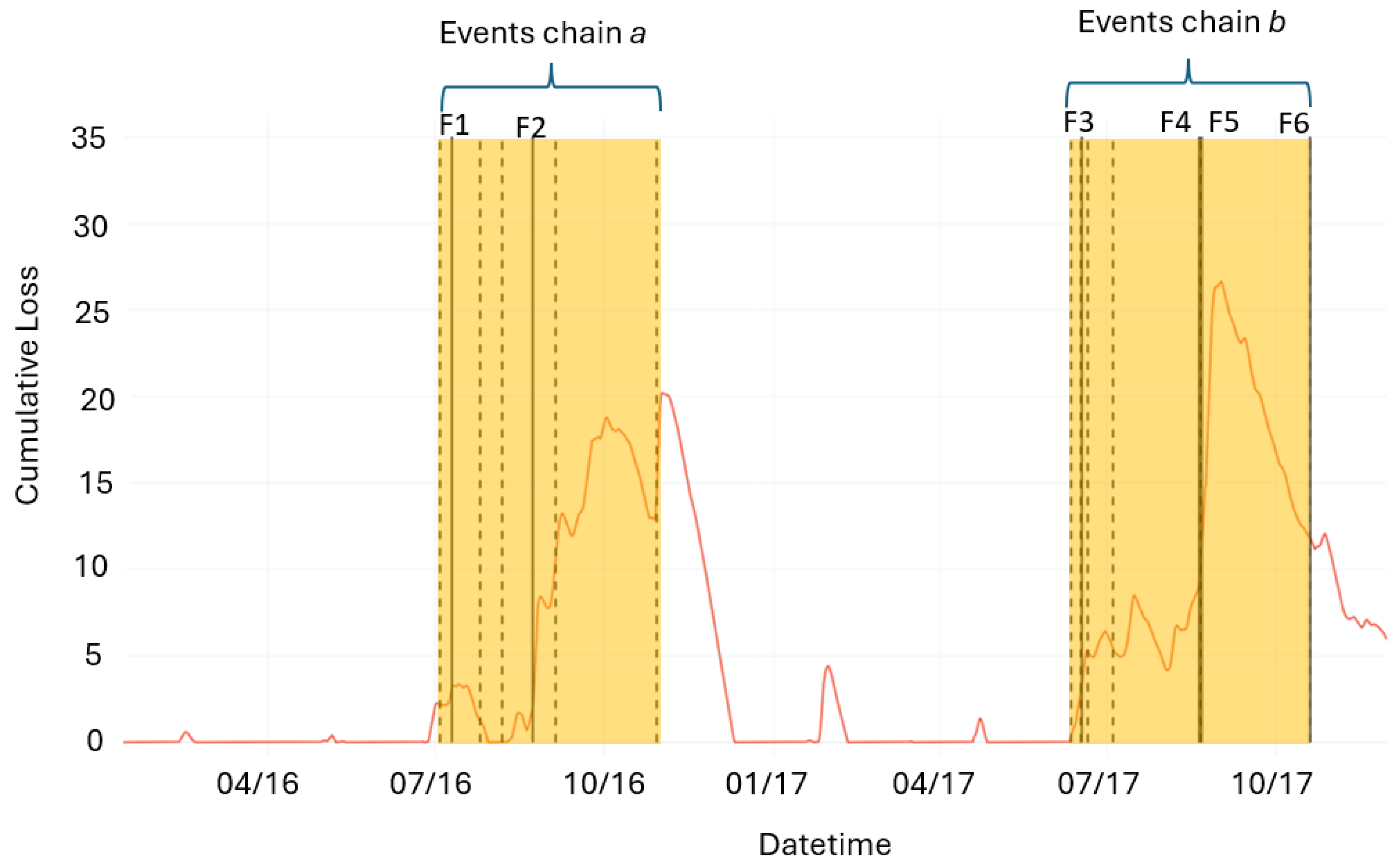

During the inference phase, the entire dataset, including the two years of turbine operations and the anomalous points identified by the failure logs and the Isolation Forest, is used. Spikes in the reconstruction loss are used to trigger fault alarms. Specifically, the alarm threshold is set at the 90th percentile of the reconstruction loss. Considering a time window of 4320 samples (1 month, i.e., 30 days of 10-min records), predicted faults are classified as follows:

True Positive (TP): a model warning that is followed by an event within the time window;

False Positive (FP): a model warning that is not followed by an event within the time window;

False Negative (FN): an event that is not preceded by any warning.
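The window-based definitions above can be sketched as follows. Two assumptions are made that the text does not state: a warning is taken to be the onset of each run of consecutive samples above the 90th-percentile threshold, and the FN check uses the same window as the TP/FP checks. The function `match_warnings` and the synthetic example are hypothetical. In the case study, `window` would correspond to `pd.Timedelta(minutes=10) * 4320`, i.e., 30 days; the example uses 2 h to stay short.

```python
import pandas as pd


def match_warnings(loss: pd.Series, events, window: pd.Timedelta, q: float = 0.90):
    """Count TP and FP warnings and FN events (hypothetical helper, sketch only)."""
    threshold = loss.quantile(q)
    above = loss > threshold
    # Assumption: a warning is the onset of each run of samples above the threshold.
    onset = above & ~above.shift(1, fill_value=False)
    warning_times = loss.index[onset.to_numpy()]
    events = pd.to_datetime(events)
    tp = fp = 0
    for w in warning_times:
        # TP if at least one event occurs within `window` after the warning.
        if ((events >= w) & (events <= w + window)).any():
            tp += 1
        else:
            fp += 1
    # FN if no warning occurs within `window` before the event (assumed window).
    fn = sum(not ((warning_times <= e) & (warning_times >= e - window)).any() for e in events)
    return tp, fp, fn


# Synthetic loss with three spikes and two logged events.
idx = pd.date_range("2016-01-01", periods=100, freq="10min")
loss = pd.Series(0.0, index=idx)
loss.iloc[10:14] = 1.0   # spike starting at 01:40
loss.iloc[40:43] = 1.0   # spike starting at 06:40
loss.iloc[80:83] = 1.0   # spike starting at 13:20
events = ["2016-01-01 02:30", "2016-01-01 12:00"]
print(match_warnings(loss, events, window=pd.Timedelta(hours=2)))  # → (1, 2, 1)
```

Only the first spike is followed by an event within 2 h, and the 12:00 event has no warning in the preceding 2 h, hence one TP, two FPs, and one FN.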

In this way, 4 FPs, 12 TPs, and 2 FNs are obtained. The model's performance is then expressed through the well-known metrics of precision (P), recall (R), and F1 score (F1), yielding P = 0.75, R = 0.86, and F1 = 0.80.
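For reference, the reported scores follow from the standard definitions applied to these counts:

$$
P = \frac{TP}{TP + FP} = \frac{12}{12 + 4} = 0.75, \qquad
R = \frac{TP}{TP + FN} = \frac{12}{12 + 2} \approx 0.86, \qquad
F_1 = \frac{2PR}{P + R} = \frac{2\,TP}{2\,TP + FP + FN} = \frac{24}{30} = 0.80
$$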

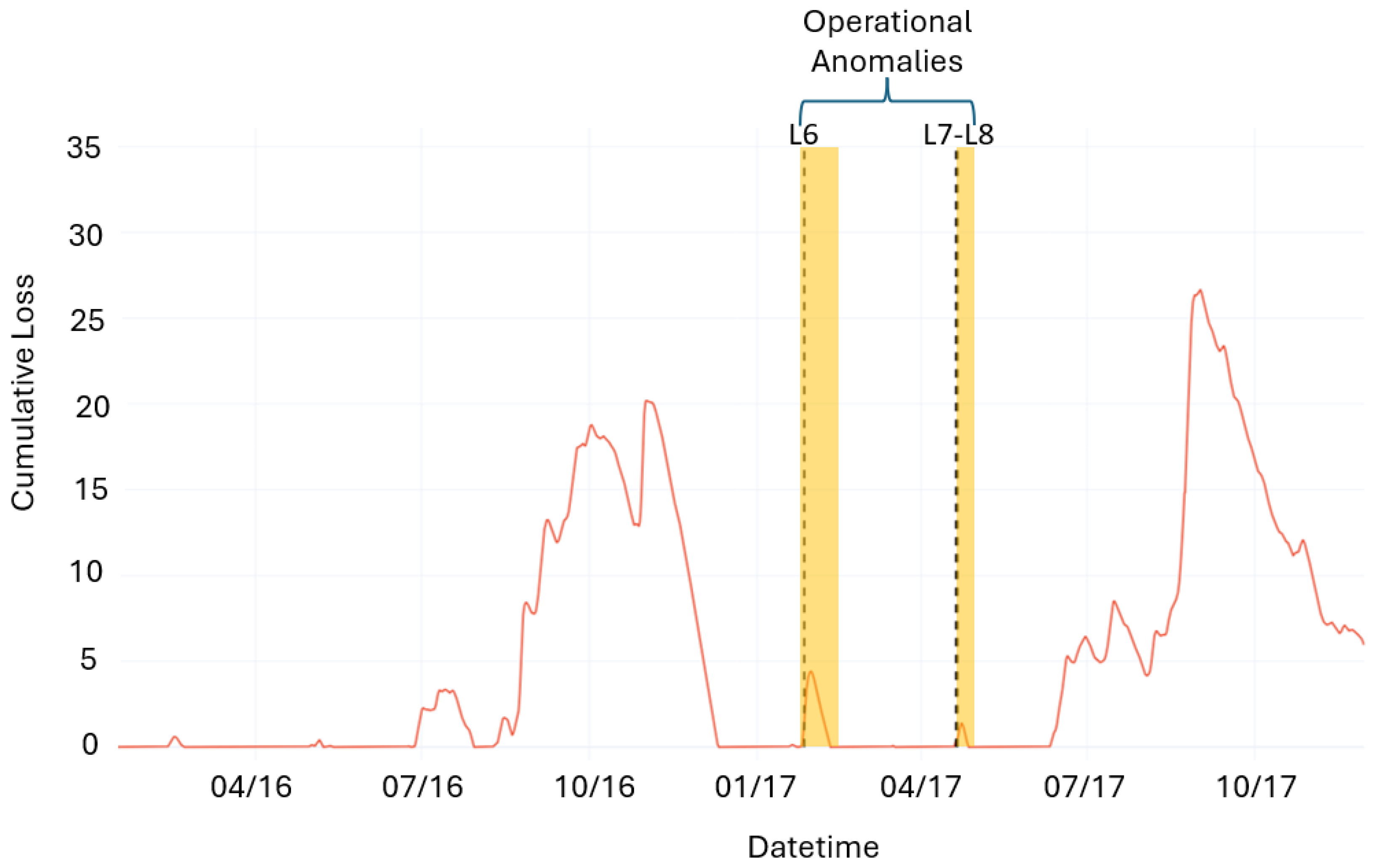

However, the reconstruction loss alone does not measure the persistence, and therefore the severity, of the anomalies. To better understand each alarm triggered by the loss, a CUSUM control chart based on the loss is used. This approach makes it possible to identify two fault chains, corresponding to periods in which the CUSUM values remain persistently above 0. Conversely, two operational anomalies are identified in which, despite the presence of loss spikes, the CUSUM value returns to 0 immediately after the event. In these cases, the reconstruction errors are attributed not to the onset of faults but rather to momentary anomalous operating conditions.
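The text does not specify the CUSUM formulation; a common choice is the one-sided (upper) tabular CUSUM, `S_t = max(0, S_{t-1} + x_t - target - slack)`, sketched below on a reconstruction-loss series. The reference value `target`, the allowance `slack`, and the helper `positive_runs` used to measure persistence are assumptions for illustration.

```python
import numpy as np


def upper_cusum(x, target: float, slack: float) -> np.ndarray:
    """One-sided tabular CUSUM: S_t = max(0, S_{t-1} + x_t - target - slack)."""
    s = np.zeros(len(x))
    prev = 0.0
    for t, value in enumerate(x):
        prev = max(0.0, prev + value - target - slack)
        s[t] = prev
    return s


def positive_runs(s):
    """(start index, length) of each run of strictly positive CUSUM values."""
    runs, start = [], None
    for t, value in enumerate(s):
        if value > 0 and start is None:
            start = t
        elif value <= 0 and start is not None:
            runs.append((start, t - start))
            start = None
    if start is not None:
        runs.append((start, len(s) - start))
    return runs


# Hypothetical reconstruction loss: an isolated spike, then a persistent rise.
loss = np.array([0.0, 0.0, 0.5, 0.0, 0.0, 0.5, 0.5, 0.5, 0.5, 0.0])
s = upper_cusum(loss, target=0.2, slack=0.1)
print(positive_runs(s))  # → [(2, 1), (5, 5)]
```

The isolated spike produces a single positive CUSUM value that resets at the next sample, whereas the sustained rise keeps the statistic above 0, mirroring the distinction between momentary operational anomalies and fault chains.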