Assessing Cognitive Load Using EEG and Eye-Tracking in 3-D Learning Environments: A Systematic Review

Abstract

1. Introduction

1.1. Overview

1.2. Learning & 3-D Technologies

1.3. Cognitive Load

1.4. Psychophysiological Measurements

1.4.1. EEG

1.4.2. Eye-Tracking

1.5. Objectives and Research Questions (RQs)

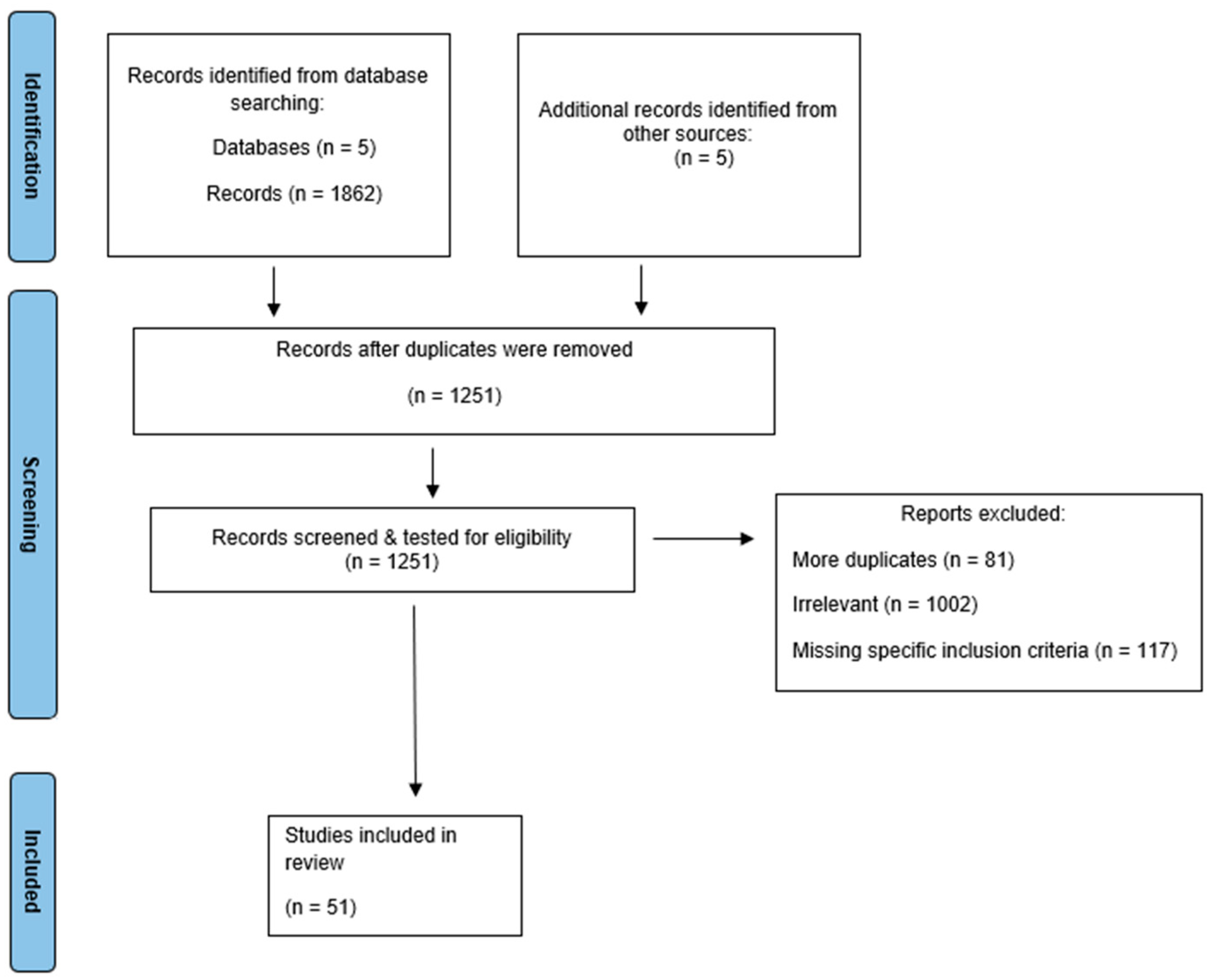

2. Materials and Methods

2.1. Registration of the Study

2.2. Search Strategy

2.3. Study Selection

2.4. Inclusion Criteria

2.5. Exclusion Criteria

2.6. Screening

2.7. Psychophysiological Methods

2.7.1. EEG

2.7.2. Eye-Tracking

2.8. Data Extraction

2.9. Quality Appraisal

3. Results

3.1. Overview

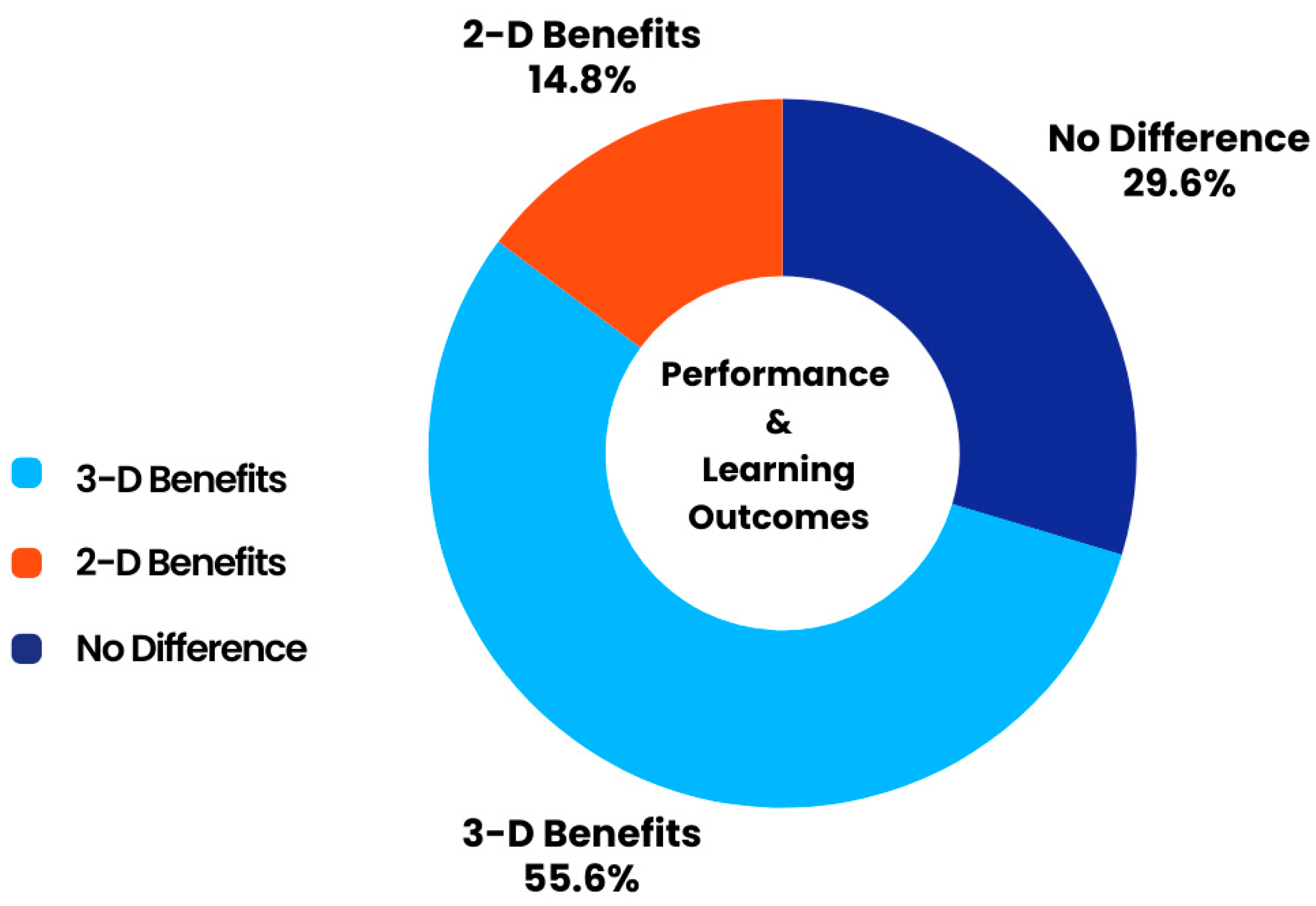

3.2. RQ1: Learning and Performance Is Enhanced by 3-D Technologies

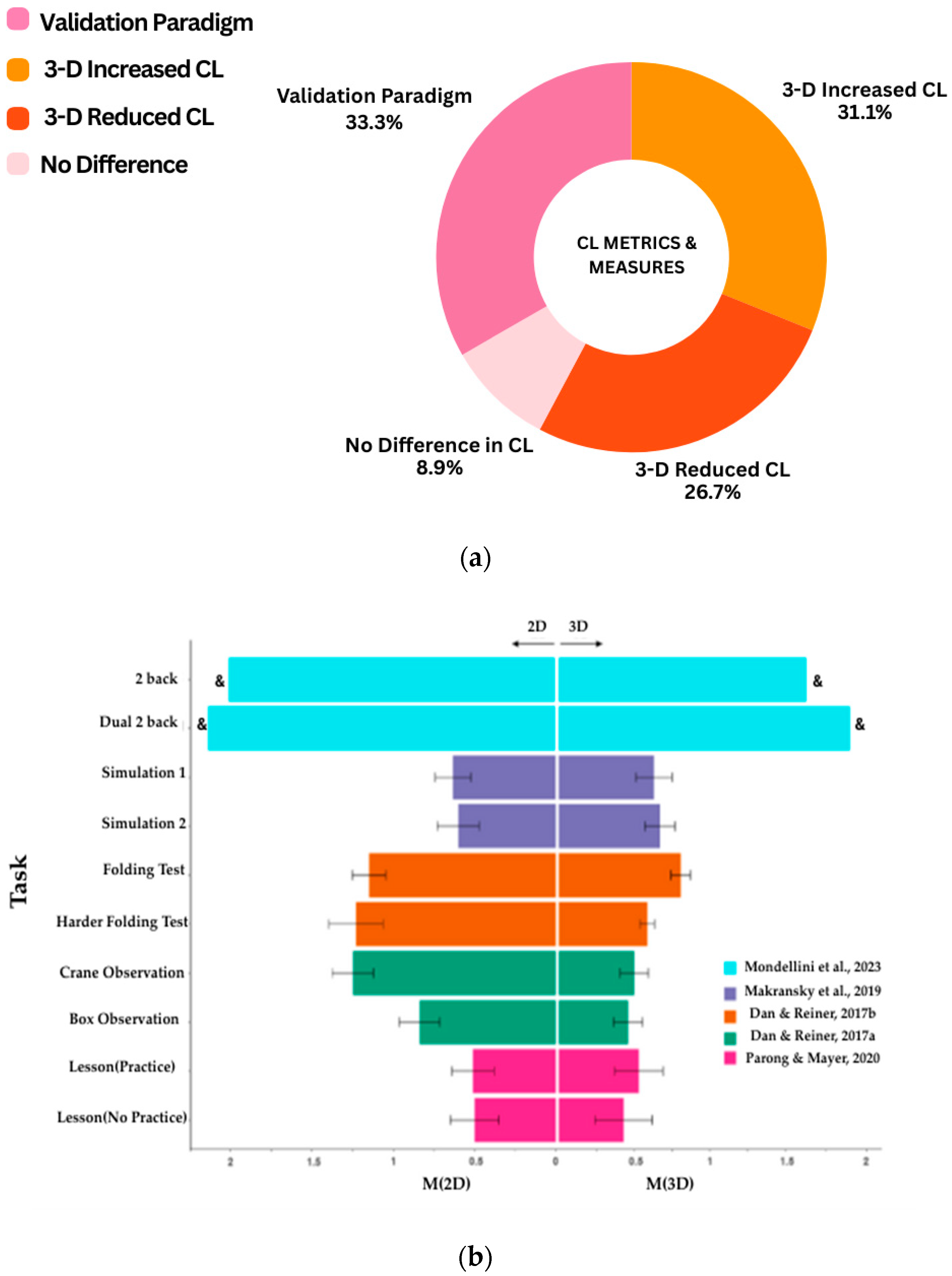

3.3. RQ2: Task Dependent Psychophysiological Measures on Levels of Cognitive Load

4. Discussion

4.1. Overview

4.2. Differences in Cognitive Load as Reflected by Psychophysiological Measures

4.2.1. EEG

4.2.2. Eye-Tracking

4.3. Does 3-D Technology Improve Learning?

4.4. Limitations

4.5. Future Directions

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| 3-D/3D | Three-Dimensional |
| 2-D/2D | Two-Dimensional |
| AR | Augmented Reality |
| CTML | Cognitive Theory of Multimedia Learning |
| CDA | Contralateral Delay Activity |
| CL | Cognitive Load |
| CLI | Cognitive Load Index |
| CLT | Cognitive Load Theory |
| ECG | Electrocardiogram |
| EEG | Electroencephalography |
| EOG | Electrooculogram |
| ERPs | Event Related Potentials |
| fMRI | Functional Magnetic Resonance Imaging |
| GSR | Galvanic Skin Response |
| HMD | Head Mounted Device |
| HRV | Heart Rate Variability |
| HSA | High Spatial Ability |
| IVR | Immersive Virtual Reality |
| LSA | Low Spatial Ability |
| LSTM | Long Short-Term Memory |
| MOT | Multiple Object Tracking |
| MWLI | Mental Workload Index |
| MR | Mixed Reality |
| PPT | PowerPoint |
| STEM | Science, Technology, Engineering, Mathematics |
| RCTs | Randomized Controlled Trials |
| VR | Virtual Reality |
| VRLS | Virtual Reality Laparoscopic Simulator |
| XR | Extended Reality |

Appendix A. Search Strategy

Appendix B

| Paper | Authors | Journal | Year | Number of Participants | Gender | Design | Task | Task Description | Experimental Group | Comparison Group | 3-D Technology | Control Technology | Psychophysiological Measure | Research Question (RQ) | Cognitive Load Measure | Other Measure(s) | Conclusion |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Reduced Mental Load in learning a motor visual task with virtual 3D method [14] | Dan, Reiner | Journal of Computer Assisted Learning | 2017a | 14 | 10 M, 4 F | Within Subjects | Folding Test | Participants watched origami folding demos in 2-D or 3-D and then participated in a folding test | 3-D Origami | 2-D Origami | Stereoscopic 3-D headset | 2-D video | EEG | 2 | Cognitive Load Index | NASA-TLX | Findings suggest a benefit of the 3-D presentation over the 2-D presentation |

| The Effect of a virtual reality learning environment on learners’ spatial ability [23] | Sun, Wu, Cai | Virtual Reality | 2019 | 28 | 13 M, 15 F | Between Subjects | Auditory test (single stimulus paradigm) | Discriminate standard from non-target tone | VR based learning group | Presentation Slides learning group | XR Headset (Google Cardboard) | Presentation Slides | EEG | 1 | ERP: N1 & P2 | Learning outcome test, spatial ability test, astronomy knowledge test | Low Spatial Ability participants had reduced cognitive load and improved performance in the VR-based learning environment. High Spatial Ability learners did not show differences in their cognitive load between the 2-D & 3-D environments, but they showed lower learning performance in the VR-based environment. |

| Frontal Alpha Oscillations and Attentional Control: A Virtual Reality Neurofeedback Study [80] | Berger and Davelaar | Neuroscience | 2017 | 22 | 14 M, 8 F | Between Subjects | Stroop Task | Used neurofeedback training to train alpha responses in a cognitive control task | 3-D Neurofeedback | 2-D Neurofeedback | XR Headset (Oculus Rift Development Kit 2) | 2-D Screen | EEG | 1 | Mean correct response times & accuracy on Stroop task | N/A | Increase in frontal alpha was associated with enhanced attentional processing. Learning slopes were higher in participants who received 3-D feedback. Alpha oscillations can be a useful measure of cortical processing and efficiency. |

| Investigating the Redundancy principle in immersive reality environments: An eye-tracking & EEG Study [98] | Baceviciute, Lucas, Terkildsen, Makransky | Journal of Computer Assisted Learning | 2021a | 73 | 44 F, 29 M | Between Subjects | Knowledge retention & transfer tests | After exposure to one of three conditions (written, auditory, or redundant), participants' knowledge retention and transfer were tested | Redundant (written + auditory) | 2 Groups: Written, auditory | XR Headset (HTC Vive) | N/A | Eye-tracking and EEG | 2 | Self-reported Cognitive Load Scales (Ayrnes; Cierniak; Salomon; Leppink; Paas; Ayres), EEG measurements (Theta + Alpha), Eye-Tracking (fixation & Saccades) | Pre-test survey, post-test questionnaires, Retention & Transfer test scores | More mental effort was required for the auditory group; there are benefits of redundant learning content in an IVR context as opposed to a standard context |

| Changes in brain activity of trainees during laparoscopic surgical virtual training assessed with electroencephalography [93] | Suarez, Gramann, Ochoa, Toro, Mejia, Hernandez | Brain Research | 2022 | 16 | 9 F, 7 M | Within Subjects | Motor training and then post tests for tasks: coordination, peg-transfer, and grasping | Use of XR technology to train in surgical/motor tasks | Use of XR technology to perform 3 basic tasks | N/A | XR simulator (LapSim) | N/A | EEG | 1, 2 | EEG: changes in Frontal Midline Theta and Central Parietal Alpha, NASA-TLX questionnaire | Performance in coordination, grasping & peg transfer | The EEG data indicate that brain rhythms are linked to distinct cognitive processes regarding training and the acquisition of skills. Training in VR reduced frontal midline theta and increased performance. Furthermore, there was a positive correlation with central parietal alpha and improved performance |

| An intelligent Man-Machine-Interface- Multi Robot Control adapted for Task engagement based on single trial detectability of P300 [106] | Kirchner, Kim, Tabie, Wohrle, Maurus, & Kirchner | Frontiers in Human Neuroscience | 2016 | 6 | 6 M | Within Subjects | Task engagement with Man-Machine Interfaces (MMI) | Participants controlled robots and their task engagement and task load were assessed | Subjects controlled several simulated robots and needed to complete 30 tasks | N/A | A virtual environment using MARS (Machina Arte Robotum Simulans) in 3-D and 2-D. The Man-Machine Interface (MMI) makes use of a Cave Automatic Virtual Environment (CAVE) | N/A | EEG | 2 | Changes in the interstimulus interval (ISI), Changes in P300 related activity | | MMIs can be used to adapt training protocols based on participants’ ERP (P300) measures for mental workload. |

| Efficacy of a Single-Task ERP Measure to Evaluate Cognitive Workload During a Novel Exergame [101] | Ghani, Signal, Niazi, Taylor | Frontiers in Human Neuroscience | 2021 | 16 | 11 F, 13 M | Within Subjects | Exergame Rehabilitation Task | Participants had to play a tilt ball game on a balance board that was controlled through an android phone. The task was varied on three levels of difficulty | Three levels of difficulty (easy, medium, hard) | N/A | Computer screen projection while playing on tilt board | N/A | EEG | 2 | ERP (N1) | Task performance parameters: goals scored, difficulty level; Subjective Ratings of Difficulty | The amplitude of the N1 ERP component decreased significantly with an increase in task difficulty |

| Remediating learning from non-immersive to immersive media: Using EEG to investigate the effects of environmental embeddedness on reading in Virtual Reality [78] | Baceviciute, Terkildsen, & Makransky | Computers & Education | 2021b | 48 | 27 F, 21 M | Between Subjects | Real Reading vs. Embodied Reading in VR followed by a Knowledge Test and Transfer Test | Participants had to read information about sarcoma cancer either in a real-reading condition or VR reading condition and were tested on their learning outcomes afterwards | Educational Text from a physical booklet | Identical Text in virtual booklet | XR Headset (HTC Vive) | Physical Booklet | EEG | 1, 2 | Mental effort (Paas Scale), extraneous cognitive load, intrinsic cognitive load, germane load | N/A | XR promoted knowledge transfer, but there was no significant difference in knowledge retention. EEG measures suggested that cognitive load was increased in XR |

| EEG-based cognitive load of processing events in 3D virtual worlds is lower than processing events in 2D displays [15] | Dan & Reiner | International Journal of Psychophysiology | 2017b | 17 | 11 M, 6 F | Between Subjects | Folding Test | Participants watched origami folding demos of an instructor in 2-D or 3-D and then participated in a folding test where they either needed to fold an origami box or origami crane. | First folded a box after 2-D instruction, then a crane after 3-D instruction | First folded a box after 3-D instruction, then a crane after 2-D instruction | 3-D NVIDIA glasses | 2-D Video | EEG | 1, 2 | Cognitive Load Index (Frontal Theta & Parietal Alpha) | VZ-2 paper folding test to assess spatial ability | Cognitive load of visually processing 2-D video was higher than in 3-D virtual worlds. During both the easier task of box folding and the harder task of crane folding, the cognitive load index was lower for all participants in the 3-D world and most for those with lower spatial ability |

| Towards real world neuroscience using mobile EEG and augmented reality [109] | Krugliak & Clarke | Scientific Reports | 2022 | 8 | 4 F, 4 M | Within Subjects | Face processing tasks: Computer-based face processing task; viewing of upright and inverted photos of faces along the walls of a corridor; viewing of virtual faces along a corridor (upright and inverted) | The characteristic face inversion effect was investigated in conjunction with a head-mounted AR device | Task 1: Computer Based, Task 2: mEEG + photos, Task 3: mEEG + XR | N/A | XR Headset (Microsoft Hololens) | N/A | EEG | 2 | Epoch based analysis, GLM based analysis | N/A | There was increased low-frequency power over posterior electrodes for inverted faces compared to upright faces, demonstrating the characteristic face inversion effect. This study revealed that face inversion effects can be identified during free-moving EEG paradigms. The authors argue that EEG and XR are a feasible approach to studying cognitive processes in various types of environments. |

| A comparative experimental study of visual brain event related potentials to a working memory task: virtual reality head-mounted display versus a desktop computer screen [76] | Aksoy, Ufodiama, Bateson, Martin, Asghar | Experimental Brain Research | 2021 | 21 | 7 F, 14 M | Within Subjects | N-back task | A working memory task in which participants needed to remember visual stimuli from a sequence either 1 trial back (1-back) or 2 trials back (2-back) in either a VR or desktop condition | Single Wall VR condition | Desktop | XR Headset (HTC Vive) | Desktop | EEG | 1, 2 | Anterior N1, Posterior P1 and P3 ERPs | response time, accuracy rate of 1-back and 2-back tasks. | The P3 waveform was similar in the XR condition and the desktop environment. N1 peak amplitude was higher in the XR HMD environment. |

| Enhancing Cognitive Function Using Perceptual-Cognitive Training [89] | Parsons, Magill, Boucher, Zhang, Zogbo, Bérubé, Scheffer, Beauregard, and Faubert | Clinical EEG & Neuroscience | 2014 | 20 | Not Reported | Between Subjects | 3D Multiple Object Tracking (MOT) | Participants performed a 3-D MOT with or without training | Training | Non-active Control | Neurotracker (glasses) | N/A | EEG | 1 | EEG: Alpha, Beta, Gamma and Theta power | MOT session scores, Integrated Visual and Auditory Continuous Performance Test, selected subtests from the Wechsler Adult Intelligence Scale, Delis-Kaplan Executive Functions System Color-Word Interference Test | 3D-MOT can improve cognitive function and can have an effect on attention, working memory, and visual information processing speed as demonstrated by EEG measures. 3-D MOT training can improve cognitive outcomes compared to a non-active control group. |

| Estimating Cognitive Workload in an Interactive Virtual Reality Environment Using EEG [117] | Tremmel, Herff, Sato, Tetsuya, Rechowicz, Yamani, Krusienski | Frontiers in Human Neuroscience | 2019 | 15 | 4 F, 11 M | Within Subjects | N-back task in virtual environment | Participants had to virtually move colored balls that were presented n-trials before and move them to the target area | Three experimental blocks in randomized order: 0-back, 1-back, 2-back | N/A | XR Headset (HTC Vive) | N/A | EEG | 2 | Differences in average spectral amplitude of EEG bands across workload levels, Task performance | N/A | Cognitive workload during an interactive XR task can be estimated via scalp recordings |

| Being present in a real or virtual world: A EEG study [112] | Petukhov, Glazyrin, Gorokhov, Steshina, Tanryverdiev | International Journal of Medical Informatics | 2020 | 5 | ALL M | Within Subjects | Real life skiing and virtual skiing | Five experienced skiers first performed a controlled downhill skiing task while wearing EEG equipment and afterwards performed virtual downhill skiing task using a headset, and a simulated downhill skiing task using a desktop | Downhill skiing task followed by virtual downhill skiing task and desktop skiing task | N/A | XR Headset (HTC Vive) | Desktop | EEG | 2 | Frontal Activation Pattern Analysis and measure of cognitive expenditure during task | Electrocardiogram, Electrooculogram, Electromyogram, respiration analysis | This study revealed greater stable neuropatterns present in the virtual and physical environment compared to the desktop application. The authors argue that similar brain activity in the virtual and physical environments could help to understand the sense of presence in XR. In all cases (desktop, XR, physical) there was a high level of activation in the frontal lobe, which was indicative of processing of sensory and cognitive information. |

| Cortical correlate of spatial presence in 2D and 3D interactive virtual reality: An EEG Study [108] | Silvia Erika Kober, Jurgen Kurzmann, Christa Neuper | International Journal of Psychophysiology | 2011 | 30 | 15 F, 15 M | Between Subjects | Spatial Navigation Task | Participants performed the task in a less immersive desktop condition on a small screen or a more immersive XR condition on a large screen while their EEG data were recorded | Single Wall XR condition | Desktop VR condition | 3-D screen | Desktop | EEG | 2 | Task Related Power Analysis | Regional activity and connectivity, Presence Ratings | There was a greater feeling of presence and increased parietal activation in the more immersive XR condition compared to the less immersive desktop condition. The decreased presence experience was accompanied by stronger functional connectivity between frontal and parietal brain regions |

| Adding immersive virtual reality to a science lab simulation causes more presence but less learning [2] | Makransky, Terkildsen, Mayer | Learning & Instruction | 2019 | 52 | 22 M, 30 F | Between Subjects and Within Subjects | Four different versions of a virtual laboratory simulation followed by Knowledge & Transfer tests | The participants' learning outcomes were measured post simulation; they were assessed on their retention of information through a knowledge test and their ability to transfer their learning to another context through a transfer test | Part 1 (Between Subjects): Text; Part 2 (Within Subjects): VR -> Desktop | Part 1 (Between Subjects): Text + Narration; Part 2 (Within Subjects): Desktop -> VR | XR headset (Samsung GearVR) | Part 2: Desktop | EEG | 2 | Workload metric | Performance on tests, participant questionnaire, self-report survey | The study shows that immersive instructional media may indeed be fun and increase presence but does not necessarily increase student learning. High-immersion XR can increase processing demands on working memory and decrease knowledge acquisition. The authors argue that this should be taken into account before utilizing immersive XR for instructional learning |

| Cognitive and affective processes for learning science in immersive virtual reality [88] | Jocelyn Parong, Richard Mayer | Journal of Computer Assisted Learning | 2020 | 61 | 40 F, 20 M, 1 Other | Between Subjects | A biology lesson which varied in terms of the instructional media and the practice testing administered to participants | Participants were tested on their learning outcomes through retention and transfer post-tests administered after the lesson which was either virtual reality or desktop | VR | Desktop | XR headset (HTC Vive) | Desktop | EEG | 2 | EEG, Heart Rate Variability, Skin Conductance | Retention Score, Transfer Score, self report | Participants performed better on transfer tests after viewing a biology lesson in a PowerPoint as opposed to in IVR. IVR was rated as more distracting based on self-report measures and EEG-based measures than the PowerPoint. IVR led to more distraction, which was linked to weaker performance on learning outcomes |

| Stereoscopic perception of women in real and virtual environments: A study towards educational neuroscience [118] | Zacharis, Mikropoulos, Priovolou | Themes in Science & Technology Education | 2013 | 36 | 36 F | Within Subjects | Stereoscopic perception of women was examined in three different environments while their electrical brain activity was recorded | Participants viewed a real environment, a 2-D environment, or a 3-D stereoscopic environment | Real desktop, 2-D virtual desktop, 3-D virtual desktop | N/A | Desktop with active 3-D glasses (Sony) | Desktop without active 3-D glasses | EEG | 2 | Theta, Alpha, Beta and Gamma activity | N/A | Brain activity was similar in the real and 3-D environments compared to 2-D. The real (stereoscopic) environment required the least mental effort compared to the other two environments |

| Impacts of Cues on Learning and Attention in Immersive 360-Degree Video: An Eye-Tracking Study [57] | Liu, Xu, Yang, Li, Huang | Frontiers in Psychology | 2022 | 110 | 74 F, 36 M | Between Subjects | Textual and visual cue response task | Participants watched a 360-degree video on an intracellular environment and were assigned to one of 4 conditions: (1) no cues; (2) textual cues in the field of vision (FOV); (3) textual cues outside of the FOV; (4) textual cues outside of the FOV with visual cues | Textual cues in the initial FOV (TCIIF) group, textual cues outside the initial FOV (TCOIF) group, textual cues outside the initial FOV + visual cues (TCOIF + VC) group | No cues (NC) group | XR headset (HTC Vive), Eye-Tracker (Tobii Pro) | N/A | Eye-tracking | 1, 2 | Main eye movement indicators: total fixation duration, fixation duration on annotation areas of interest (AOIs), fixation duration on initial FOV AOIs and fixation heatmaps | Spatial ability tests, prior knowledge test, semi-structured interview | Due to the limited field of vision in immersive environments, visual cues in the field of vision (FOV) help guide the learner. However, visual cues and annotations may increase cognitive load; therefore, appropriate use of visual cues is necessary for instructional design in immersive environments. |

| From 2D to VR Film: A Research on the Load of Different Cutting Rates Based on EEG Data Processing [116] | Tian, Zhang and Li | Information | 2021 | 40 | 27 M, 13 F | Between Subjects | Visual Task | Participants watched the film in either a 2-D or 3-D (VR) condition at three different cutting rates | XR Group | 2-D Group | XR Headset (HTC Vive) | Desktop | EEG | 2 | Alpha, Beta, and Theta Frequency bands and power values, NASA-TLX | PANAS questionnaire | EEG analysis and topographical maps showed that the energy of the alpha, beta, and theta waves of the XR film group was higher than that of the 2D film group. The NASA-TLX results also showed that the subjective load of the XR film group was higher than that of the 2D film group |

| Cognitive load and attentional demands during objects’ position change in real and digital environments [119] | Zacharis, Mikropoulos, Kalyvioti | Themes in Science & Technology Education | 2016 | 36 | ALL F | Within Subjects | Observation of changes in object position and attentional demand in real and digital environments | Participants were placed into three different environments (real, 2-D, and 3-D) and observed the objects before and after changes in their position | Real Environment (Before & After), Virtual Environment (Before & After), Virtual environment using 3-D Glasses (Before & After) | N/A | Desktop with active 3-D glasses | Desktop | EEG | 2 | Theta, Alpha, Beta, and Gamma frequency and power analysis | Performance in different environments | Cognitive load associated with the task was higher in all environments before the change of the objects' position. The cognitive load appeared to decrease after the change |

| Mental Workload Drives Different Reorganizations of Functional Cortical Connectivity Between 2D and 3D Simulated Flight Experiments [105] | Kakkos, Dimitrakopoulos, Gao, Zhang, Qi, Matsopoulos, Thakor, Bezerianos, Sun | IEEE Transactions on Neural Systems and Rehabilitation Engineering | 2019 | 33 | 33 M | Within Subjects | Flight simulation with three levels of mental workload | Participants completed the simulation session in both a desktop condition and an XR condition | XR interface | 2-D interface | XR headset (Oculus Rift) | Desktop | EEG | 2 | Frequency Analysis (Delta, Theta, Alpha, Beta, Gamma), Workload Metric, Task difficulty performance | Network Topology Analysis, Functional connectivity, Classification using support vector machine (SVM) analysis | The study validated different levels of workload in a simulation setting and found differences in 2-D vs. XR conditions. The XR interface had higher mental workload. Theta band edges had higher overall connectivity in the XR condition with increasing mental load. This may be a result of higher presence and active cognitive processing in the XR condition |

| The impact of 3D and 2D TV watching on neurophysiological responses and cognitive functioning in adults [84] | Jeong, Ko, Han, Oh, Park, Kim, Ko | European Journal of Public Health | 2015 | 72 | 40 F, 32 M | Between Subjects | Administered various neurocognitive tests, i.e., simple reaction time (SRT), choice reaction task (CRT), digit classification task (DC) | Participants did neurocognitive tests while physiological measurements were being taken in either a 2-D TV watching condition or 3-D TV watching condition | 3-D TV watching | 2-D TV watching | 3-D stereoscopic TV (with polarized glasses) | Regular TV | EEG | 1 | Mean electrical activity across Theta, Alpha, beta, gamma, delta bands in frontal and occipital regions | Psychophysiological Measures: heart rate, respiratory rate, electromyography, galvanic skin response, temperature; neurocognitive measures: simple reaction time (SRT), choice reaction time (CRT), color word vigilance (CWV), digit classification (DC), digit addition (DA), digit symbol substitution (DSS), memory forward digit span (FDS), backward digit span (BDS), and finger tapping test (FT); Simulator Sickness Questionnaire | Contrary to belief that 3-D TV watching is harmful and may negatively affect neurocognitive outcomes, findings reveal it is equivalent to its 2-D counterpart with no dangers to cognitive functioning or differences between the two conditions in neurocognitive outcomes |

| EEG Alpha Power Is Modulated by Attentional Changes during Cognitive Tasks and Virtual Reality Immersion [111] | Magosso, Crescenzio, Ricci, Piastra, and Ursino | Computational Intelligence & Neuroscience | 2019 | EXP 1: 30, EXP 2: 41 | EXP 1: 10 F, 20 M, EXP 2: 9 F, 32 M | Within Subjects | EXP 1: Arithmetic Task & Reading Numbers Task. EXP 2: Immersion in a VR airplane cabin: relaxation without VR, first static immersion in the VR, interactive exploration of VR, and second static immersion in the VR. | EXP 1: participants had to solve arithmetic operations vs. mentally read the arithmetic operations (order randomized). EXP 2: participants passively and actively explored a VR environment. Twenty-four participants additionally performed an arithmetic task in VR | Separate participants for each experiment | N/A | Cave Automatic Virtual Environment (CAVE) with shutter glasses | Desktop | EEG | 2 | Alpha Power Computation as a function of task demands | N/A | Attention to immersive XR environments and an arithmetic task both create a significant ERD compared to a previous relaxation phase, especially in parieto-occipital regions, which is indicative of mental effort/cognitive load. XR environments have a sense of realism and attention-grabbing influence as reflected in alpha rhythms. The use of XR with objective physiological measures is a useful tool in studying various aspects of cognition and behavior |

| An Augmented Reality Based Mobile Photography Application to Improve Learning Gain, Decrease Cognitive Load, and Achieve Better Emotional State [95] | Zhao, Zhang, Chu, Zhu, Hu, He & Yang | International Journal of Human Computer Interaction | 2022 | 28 | 7 M, 21 F | Between Subjects | Photography Task | Participants performed the task in a 2D mobile photography app vs. a 3D XR photography application | AR App | 2-D App | Android mobile AR application (Huawei LYA-AL00) | Android mobile application (Huawei LYA-AL00) | EEG | 1, 2 | Cheng Scale, Comparison of pre to post performance test score | Emotional State Indicators | Both groups improved their knowledge of photography. However, ARMPA participants performed better and had the greatest learning gain. From these findings the authors propose that AR imposes lower cognitive load and, according to their brain analyses, that students in the AR group showed greater interest |

| Comparing virtual reality, desktop-based 3D, and 2D versions of a category learning experiment [79] | Robin Colin Alexander Barrett, Rollin Poe, Justin William OCamb, Cal Woodruff, Scott Marcus Harrison, Katerina Dolguikh, Christine Chuong, Amanda Dawn Klassen, Ruilin Zhang, Rohan Ben Joseph, Mark Randal Blair | Plos One | 2022 | 179 | N/A | Between Subjects | Category Learning Task | Participants performed a category learning task in either a 2-D projection, 3-D projection on a desktop, or 3-D projection using XR | 3-D Stimuli using XR | 3-D Stimuli using Desktop & 2-D Stimuli using Desktop | XR Headset (HTC Vive) | Desktop | Eye-tracking | 1, 2 | Improvements in Accuracy, Fixation Counts & Average Fixation Duration | Response Time, Optimization, and information sampling | There were no significant differences in learning outcomes between the three groups, but there were longer fixations and longer response times in the XR condition |

| Cognitive workload evaluation of landmarks and routes using virtual reality [96] | Usman Alhaji Abdurrahman, Lirong Zheng, Shih-Ching Yeh | Plos One | 2022 | 79 | 36 M, 43 F | Between Subjects | Landmark Route & Navigational Task | Assessment via an XR driving system of whether landmarks and routes affect navigational efficiency and learning transfer | Part 1: Sufficient Landmarks and Easy Routes; Part 2: Insufficient Landmarks and Easy Routes | Part 1: Sufficient Landmarks and Difficult Routes; Part 2: Insufficient Landmarks and Difficult Routes | XR Driving System with Logitech G-27 Steering wheel controller | N/A | Eye-tracking | 2 | Fixation rate, blink rate, blink duration, cognitive workload obtained through combining information on pupil size, eye gaze, heart rate, and performance into classifiers (support vector machine (SVM), artificial neural networks (ANNs), naïve Bayes (NB), K-nearest neighbor (KNN), and a decision tree (DT)) | Mean Task Completion Time | Sufficient landmarks increase navigational efficiency. Navigation with insufficient landmarks increased pupil size |

| Augmented reality on industrial assembly line: Impact on effectiveness and mental workload [83] | Mathilde Drouot, Nathalie Le Bigot, Emmanuel Bricard, Jean-Louis de Bougrenet, Vincent Nourrit | Applied Ergonomics | 2022 | 27 | 22 M, 5 F | Within Subjects | Dual Task Paradigm: Main Assembly Task and Auditory Stimulus Detection Task | Participants performed assembly tasks (picking, positioning, tool use, and handling) as accurately as possible while doing a secondary beep detection task | AR Complex, AR Simple | Computer Complex, Computer Simple | XR Headset (Microsoft Hololens 2) | Desktop | Eye-tracking | 1, 2 | Pupil size, Blink rate, Blink duration, NASA-TLX | Reaction Time | Lower blink rate and higher mental workload when using XR |

| Visual short term memory-related EEG components in Virtual Reality set-up [107] | Felix Klotzsche, Michael Gaebler, Arno Villringer, Werner Sommer, Vadim Nikulin, Sven Oh | Psychophysiology | 2023 | 26 | 14 M, 12 F | Within Subjects | Change Detection Task | The visual memory of varying loads was tested in a change detection task with bilateral stimulus arrays of either two or four items while varying the horizontal eccentricity of the memory arrays | XR Group | N/A | XR Headset (HTC Vive) | N/A | Eye tracking, EEG | 2 | Blink rate, saccades, alpha activity, memory performance, CDA power | Contralateral Delay Activity Analysis | Increasing memory load resulted in diminished memory performance and we observed both a pronounced CDA and a lateralization of alpha power during the retention interval. Findings encourage the use of XR to measure EEG signals related to visual short-term memory and attention (e.g., the CDA or the lateralization of alpha power) |

| A Multimodal Approach Exploiting EEG to Investigate the Effects of VR Environment on Mental Workload [86] | Marta Mondellini, Ileana Pirovano, Vera Colombo, Sara Arlati, Marco Sacco, Giovanna Rizzo, and Alfonso Mastropietro | International Journal of Human-Computer Interaction | 2023 | 27 | 16 M, 11 F | Within Subjects | N-Back Task | Working memory task with varying levels of load; participants were asked to indicate if the image was the same as the one presented ‘n’ trials ago. There was also a dual task version with visual + audio stimuli | XR Group | Desktop Group | XR Headset (HTC Vive Pro) | Desktop | EEG | 1, 2 | Mental Workload Index, NASA-TLX | Performance assessment | No significant differences between XR and desktop in terms of mental workload or performance assessments |

| Does stereopsis improve face identification? A study using a virtual reality display with integrated eye-tracking and pupillometry [49] | Liu, Laeng, Czajkowski | Acta Psychologica | 2020 | 32 | 19 F, 13 M | Within Subjects | Sample to match face identification task | Stereoscopic vs. monoscopic images of faces were presented and accuracy of face identification was assessed in frontal, intermediate and profile views | Stereoscopic & Monoscopic images | N/A | XR headset (HTC Vive) + SMI Eye-tracker | N/A | Eye-tracking and pupillometry | 1, 2 | Gaze information, pupil size variation | Response time | The accuracy rate was higher in the stereoscopic condition compared to the monoscopic condition for both frontal and intermediate views. |

| Enhanced Interactivity in VR-based Telerobotics: An Eye-tracking Investigation of Human Performance and Workload [87] | Federica Nenna, Davide Zanardi, Luciano Gamberini | International Journal of Human Computer Studies | 2023 | 24 | 13 M, 11 F | Within Subjects | An arithmetic task, a pick-and-place task, physical actions, a dual-task | 5 tasks using VR: arithmetic calculations in a virtual environment; picking and placing items using a robotic arm (one task via a button, the other by manually moving the arm in VR); in the dual task, the arithmetic and pick-and-place tasks were performed simultaneously | VR Group | N/A | XR Headset (HTC Vive Pro Eye Headset) | N/A | Eye-tracking | 1 | NASA-TLX, Pupil Size Variation | Operation time, Error rate | Improved performance and reduced cognitive load in an XR-based action control system |

| Modulation of cortical activity in 2D versus 3D virtual reality environments: An EEG study [92] | Slobounov, Ray, Johnson, Slobounov, Newell | International Journal of Psychophysiology | 2015 | EXP 1: 12, EXP 2: 15 | 6 M, 6 F; 8 M, 7 F | Within Subjects | 3-D Spatial navigation Task | Navigation in a 3-D or 2-D projected virtual corridor | EXP 1: 3-D TV; EXP 2: 3-D VR moving room | EXP 1: 2-D TV; EXP 2: 2-D VR moving room | 3D television with CrystalEyes stereo glasses | N/A | EEG | 1, 2 | EEG frontal midline Theta Power Analysis | Postural Movement Analysis; accuracy of the task performance, number of trials and time needed to complete the test | VR has a higher sense of presence, especially in 3-D conditions compared to 2-D. Navigation performance was better in 3-D condition compared to 2-D condition. FM-theta was higher in encoding rather than retrieval |

| A comparative analysis of face and object perception in 2D laboratory and virtual reality settings: insights from induced oscillatory responses [91] | Merle Sagehorn, Joanna Kisker, Marike Johnsdorf, Thomas Gruber, Benjamin Schöne | Experimental Brain Research | 2024 | 55 | 38 F, 17 M | Within Subjects | Face & Object Perception & (Car) Standard Stimulus Discrimination paradigm | Viewing of face stimuli and car stimuli in either 2-D or 3-D version and then pressing of button to identify target and then feedback on whether response was correct or not | XR Condition | PC condition | XR Headset (HTC Vive Pro 2) | Desktop | EEG | 1, 2 | Posterior induced alpha band response (iABR) and midfrontal induced theta band response (iTBR), Response Times | Induced Beta Band response (iBBR) | Cognitive load was higher in the 2D setting, as midfrontal theta was higher, and reaction times were faster in the virtual condition |

| Tracking visual attention during learning of complex science concepts with augmented 3D visualizations [24] | Fang-Ying Yang, Hui-Yun Wang | Computers & Education | 2023 | 32 | 15 M, 17 F | Within Subjects | AR Chemistry Learning App | 3 different 3-D visualizations in AR app: static, dynamic and interactive 3D modes with descriptions of molecular shapes and instructions on how to interact with them. After playing with AR app and doing activities participants could take post-test. | XR group | N/A | App developed on Unity and downloaded onto an Android tablet | N/A | Eye-tracking | 1, 2 | Total Fixation Duration (TFD), Average Fixation Duration (AFD), Saccade Duration (SD) | Pre & Post test results | Students significantly increased their understanding of study concepts after AR learning. Interactive 3D mode captured attention in basic stages but dynamic modes were better in advanced stages. Higher fixation durations for static and interactive modes |

| Impact of Visual Game-Like Features on Cognitive Performance in a Virtual Reality Working Memory Task: Within-Subjects Experiment [90] | Eric Redlinger, Bernhard Glas, Yang Rong | JMIR Serious Games | 2022 | 20 | 6 F, 14 M | Within Subjects | Visual Working Memory Task | Participant sees a screen with 4 distractor images in the corners and a center image. In order to proceed the participant needs to identify which image was present previously in the center—1-back condition. | XR condition | N/A | XR Headset (HTC Vive) | N/A | EEG | 1 | Task Performance: Accuracy & Reaction Time | Spectral EEG power | Visually distracting 3D background has no observable effect on reaction speed but slight impact on accuracy |

| Cognitive processes during virtual reality learning: A study of brain wave [115] | Dadan Sumardani, Chih Hung Lin | Education & Information Technologies | 2023 | 9 | 4 M, 5 F | Within Subjects | Attention differences between reading activity and virtual reality activity | Reading material about the International Space Station (ISS) and experiencing virtual reality simulation about the ISS | XR Learning | Reading | Unknown | Reading Material | EEG | 2 | Resting State EEG | Meditation in EEG vs. XR | Reading had higher level of attention than XR Learning |

| Dynamic Cognitive Load Assessment in Virtual Reality [100] | Rachel Elkin, Jeff M. Beaubien, Nathaniel Damaghi, Todd P. Chang, and David O. Kessler | Simulation & Gaming | 2024 | 12 | 6 F, 6 M | Mixed (within & between subjects design) | VR paediatric resuscitation task | Participants acted as team leader in four immersive VR emergency scenarios (status epilepticus vs. anaphylaxis) with low vs. high distraction manipulations, requiring airway management, medication administration, and stabilization. | Novice paediatric residents (PGY1–2) | Expert paediatric emergency fellows/attendings | XR Headset (Oculus Rift) | None (all scenarios delivered in VR) | EEG & ECG | 2 | EEG + ECG (denoised with accelerometry); combined into a composite workload index using previously validated classifier models; NASA-TLX; Performance: Time to critical action, number of errors. | ECG | Feasible to measure CL unobtrusively in real time during VR resuscitation simulations; experts showed lower CL than novices, consistent with NASA-TLX |

| Understanding Pedestrian Cognition Workload in Traffic Environments Using Virtual Reality and Electroencephalography [85] | Francisco Luque, Víctor Armada, Luca Piovano, Rosa Jurado-Barba, Asunción Santamaría | Electronics | 2024 | 12 | 2 F, 10 M | Within Subjects | VR pedestrian crossing with embedded auditory oddball (dual-task paradigm). | Participants performed road-crossing decisions in a VR traffic environment while simultaneously completing an auditory oddball task to assess attentional load | Dual task (VR Crossing + oddball) | N/A | XR Headset (Oculus Quest 2) | None | EEG | 1, 2 | P3 amplitude, Early-CNV, time-to-arrival estimation | NASA-TLX | Virtual environments are valuable for exploring cognitive load |

| Identification of Language Induced Mental Load from Eye Behaviors in Virtual Reality [114] | Johannes Schirm, Andrés Roberto Gómez-Vargas, Monica Perusquía-Hernández, Richard T. Skarbez, Naoya Isoyama, Hideaki Uchiyama, Kiyoshi Kiyokawa | Sensors | 2023 | EXP 1: 30 EXP 2: 15 | EXP 1: 9 F, 21 M EXP 2: 1 F, 14 M | Within Subjects | Listening Comprehension Task | Participants listened to four speech samples (native vs. foreign; familiar vs. unfamiliar) to vary mental load. | Participants under higher cognitive load (listening to unfamiliar/foreign language speech) | Same participants under lower cognitive load (listening to familiar/native speech) | XR Headset (HTC Vive Pro Eye) | None | Eye-tracking | 2 | Gaze points, gaze targets, pupil size, focus point, fixation frequency, saccades | NASA-TLX | Eye-tracking metrics (pupil size, fixations, focus offset) reflected changes in cognitive load during the VR listening task |

| Visual information processing of 2D, virtual 3D and real-world objects marked by theta band responses: Visuospatial processing and cognitive load as a function of modality [104] | Joanna Kisker, Marike Johnsdorf, Merle Sagehorn, Thomas Hofmann, Thomas Gruber, Benjamin Schöne | European Journal of Neuroscience | 2024 | 99 | 61 F, 38 M | Between Subjects | Delayed Matching to Sample Task | Participants viewed abstract objects in 2D (PC), VR, or real-world (3D print) and judged whether pairs were identical or different, while EEG theta band responses were recorded to compare sensory vs. cognitive processing across modalities. | VR condition (virtual 3D objects presented via HMD) | PC condition (2D objects on a monitor), RL condition (real-world 3D printed objects) | XR Headset (HTC Vive Pro 2) | Desktop | EEG | 2 | Discrimination performance, induced Theta band response (iTBR) | Evoked Theta Band Response (eTBR) | Cognitive load was higher for 2D than VR or real 3D, with VR closely matching real-world processing |

| Comparative Analysis of Teleportation and Joystick Locomotion in Virtual Reality Navigation with Different Postures: A Comprehensive Examination of Mental Workload [103] | Reza Kazemi, Naveen Kumar & Seul Chan Lee | International Journal of Human-Computer Interaction | 2024 | 60 | 20 F, 40 M | Between Subjects | Navigation Task | Locomotion in VR navigation through joystick and teleportation methods and postures: sitting and standing | Teleportation (seated + standing) | Joystick (seated + standing) | XR Headset (Oculus Quest 2) | None | EEG | 2 | Alpha & Theta Band Activity | NASA-TLX, ECG | Teleportation locomotion and standing posture both increased mental workload compared to joystick and seated conditions. Joystick + seated produced the lowest workload and better task performance, while teleportation + standing showed the highest workload. |

| The impact of virtual agents’ multimodal communication on brain activity and cognitive load in Virtual Reality [81] | Zhuang Chang, Huidong Bai, Li Zhang, Kunal Gupta, Weiping He, and Mark Billinghurst. | Frontiers in Virtual Reality | 2022 | 11 | 1 F, 10 M | Within Subjects | VR desert survival decision making game | Participants ranked 15 survival items in collaboration with a virtual agent in VR, across three IVA communication conditions (voice-only, embodied with gaze, gestural with pointing). | Virtual agents with embodied multimodal communication—speech + gaze (Embodied Agent) or speech + gaze + pointing (Gestural Agent). | Virtual agent with voice-only communication | XR Headset (HTC Vive Pro Eye) | None | EEG | 1, 2 | Alpha Band Activity (Spectral power + ERD/ERS), Survival Ranking score | NASA-TLX, Co-presence questionnaire | Embodied and gestural VR agents influenced neural load; alpha activity showed embodied agents eased attentional demand while gestures increased it, though survival ranking performance was unaffected |

| A Neurophysiological Evaluation of Cognitive Load during Augmented Reality Interactions in Various Industrial Maintenance and Assembly Tasks [77] | Faisal M. Alessa, Mohammed H. Alhaag, Ibrahim M. Al-harkan, Mohamed Z. Ramadan, and Fahad M. Alqahtani. | Sensors | 2023 | 28 | 28 M | Mixed (between + within) | Piston pump assembly/maintenance task | Participants performed pump maintenance (gearbox repair = high demand; seal check = low demand) using either AR-based or paper-based instructions. | AR-based instructions | Paper based instructions | XR Headset (Microsoft HoloLens) | Paper Manuals | EEG | 1, 2 | Theta, alpha, beta band activity; total task time | NASA-TLX | AR increased mental workload (theta, alpha; beta), especially during high-demand tasks, but reduced completion time versus paper |

| Event Related Brain Responses Reveal the Impact of Spatial Augmented Reality Predictive Cues on Mental Effort [94] | Benjamin Volmer, James Baumeister, Stewart Von Itzstein, Matthias Schlesewsky, Ina Bornkessel-Schlesewsky, and Bruce H. Thomas. | Transactions on Visualization and Computer Graphics | 2023 | 23 | 2 F, 21 M | Between Subjects | N-Back task and button pressing procedural task | Button-pressing sequences with instructions shown via monitor or SAR (baseline highlight or predictive cues: ARC, ARROW, LINE, BLINK, COLOR), while performing a dual-task auditory oddball to index mental effort. | SAR with predictive cues (ARC, ARROW, LINE, COLOR, BLINK). | 1. Monitor-based (2D screen instructions). 2. SAR baseline without predictive cues. | Spatial Augmented Reality (SAR) projected onto a dome shape | Desktop | EEG | 1, 2 | Mismatch negativity (MMN), P3 component, Response times, accuracy | EOG | Using spatial AR predictive cues improves task performance and can lower cognitive load, but not all cues are equal—ARC and ARROW balance speed and low brain effort best, while LINE is fastest but not the most cognitively efficient |

| Designing and Evaluating an Adaptive Virtual Reality System using EEG Frequencies to Balance Internal and External Attention States [82] | Francesco Chiossi, Changkun Ou, Carolina Gerhardt, Felix Putze, and Sven Mayer | International Journal of Human-Computer Studies | 2025 | 24 | 12 F, 12 M | Within Subjects | Visual Monitoring Task & N-Back Task | Participants performed a visual monitoring task (tracking target avatars) and a 2-back task with colored spheres. The VR system adjusted the number of distractor characters in real time according to EEG signals, either to support internal attention (positive adaptation) or to bias toward external attention (negative adaptation). | EEG-adaptive (positive & negative). | Non-adaptive n-back + visual monitoring baseline. | XR Headset (HTC Vive Pro) | None | EEG | 1, 2 | Alpha & Theta Band Activity; Accuracy scores, reaction times | NASA-TLX, Gaming Experience Questionnaire (GEQ) | Positive (internal-attention) adaptation improved 2-back accuracy and lowered workload versus negative adaptation; negative adaptation sped responses but hurt accuracy and increased workload. EEG alpha/theta can drive useful real-time VR adaptations |

| Cognitive Load Estimation in VR Flight Simulator [102] | P. Archana Hebbar, Sanjana Vinod, Aumkar Kishore Shah, Abhay A. Pashilkar, and Pradipta Biswas. | Journal of Eye Movement Research | 2023 | 12 | 12 M | Within Subjects | VR flight simulation (dogfight scenarios against AI-controlled enemy aircraft). | Twelve Air Force test pilots flew VR F-16 simulations, engaging AI aircraft across five scenarios. They controlled the aircraft using HOTAS (Hands-On Throttle and Stick) and fired missiles when targets were in range. | More AI guidance (higher automation support) and shorter radar latency (system responding quickly). | Less AI guidance (lower automation support) and longer radar latency (slower system response). | XR Headset (HTC Vive Pro Eye) | None | EEG, Eye-tracking | 2 | EEG Spectral power (Theta + Alpha); Ocular Parameters (pupil dilation, gaze fixation) | None | Pupil diameter, fixation rate, and EEG workload indices increased with task difficulty and workload manipulation, while engagement measures decreased, showing these physiological signals reliably estimate cognitive load in VR flight scenarios and support VR simulators for cockpit evaluation |

| Unraveling the Dynamics of Mental and Visuospatial Workload in Virtual Reality Environments [99] | Gonzalo Bernal, Hyejin Jung, Ilayda Ece Yassi, Nicolás Hidalgo, Yonathan Alemu, Theresa Barnes-Diana, and Pattie Maes. | Computers | 2024 | 34 | 16 F, 15 M | Within Subjects | VR Tetris Game | Participants played VR Tetris with and without a “helper event” (a ball piece that cleared rows when the stack reached 60%). | Tetris with helper event | Tetris without helper event | XR Headset (Valve Index) | None | EEG | 2 | Alpha, Beta, Theta spectral activity; performance improvement | Photoplethysmography (PPG), Heart Rate Variability (HRV) | The VR helper event reduced cognitive load and arousal while boosting visuospatial engagement. Performance improved with helper events, supporting adaptive VR for workload optimization |

| Measuring Cognitive Load in Virtual Reality Training via Pupillometry [110] | Joy Yeonjoo Lee, Nynke de Jong, Jeroen Donkers, Halszka Jarodzka, and Jeroen J. G. van Merriënboer | Transactions on Learning Technologies | 2023 | 14 | 12 F, 2 M | Within Subjects | Health care observation task | Participants engaged in a 9-minute VR home-healthcare scenario. In the easy condition, they observed the provider’s actions; in the difficult condition, they identified patient symptoms and evaluated the provider’s performance. | Difficult Observation Task | Easy Observation Task | XR Headset (HTC Vive Pro Eye) | None | Eye-tracking | 2 | Task Evoked Pupillary Responses (TEPR); Task score | Paas Scale | TEPRs reliably indexed increases in cognitive load with task difficulty and correlated with self-reported effort |

| Estimating mental workload through event-related fluctuations of pupil area during a task in a virtual world [113] | Miriam Reiner, Tatiana M Gelfeld | International Journal of Psychophysiology | 2014 | 31 | 15 F, 16 M | Between Subjects | Motor task: hitting cubes | Participants used a VR stylus-hand to strike cubes under two conditions: a congruent task (cube size matched weight) and a random task (size–weight association unpredictable). | 6 congruent trials, 6 random trials | 12 congruent trials, 6 random trials | Stereoscopic Shutter Glasses | None | Eye-tracking | 2 | Pupil size, Power Spectrum Density (PSD) | None | Pupil-based indices (low/high frequency ratio and high-frequency power) reliably tracked changes in workload. Mental load decreased with repeated congruent trials but increased when task demands became unpredictable, supporting pupil metrics as valid, non-intrusive measures for adaptive VR/BCI systems |

| Effects of augmented reality glasses on the cognitive load of assembly operators in the automotive industry [97] | Hilal Atici-Ulusu, Yagmur Dila Ikiz, Ozlem Taskapilioglu & Tulin Gunduz | International Journal of Computer Integrated Manufacturing | 2021 | 4 | 2 M, 2 F | Within Subjects | Assembly line diffusion task | Operators on an automotive assembly line performed a diffusion task (selecting and sorting materials into carts for assembly preparation). | Assembly with AR glasses | Standard manual procedure with paper instructions | XR Glasses (Sony Smart Eyeglass Sed-1) | Paper instructions | EEG | 2 | Area under the curve (µV × s), focusing on frontal/temporal/occipital beta–gamma oscillations (attention, decision-making). | NASA-TLX | AR glasses significantly reduced operators’ cognitive load compared to standard procedures. Workers adapted immediately—no extra burden between first and last days—suggesting AR can enhance accuracy and efficiency in assembly preparation without increasing mental effort |

Appendix C. Quality Assessment

| Study | Bias Arising from the Randomization Process | Bias Due to Deviations from Intended Interventions | Bias Due to Missing Outcome Data | Bias in Measurement of Outcome | Bias in Selection of the Reported Result | Overall Risk of Bias |

|---|---|---|---|---|---|---|

| Dan & Reiner (a) | + | + | + | + | + | + |

| Kober, Kurzmann, Neuper | + | + | + | ? | + | + |

| Sun, Wu, & Cai | + | + | + | ? | + | + |

| Ghani, Signal, Niazi, Taylor | + | + | + | ? | ? | + |

| Makransky, Terkildsen, Mayer | + | + | + | ? | + | + |

| Parong & Mayer | + | + | + | ? | + | + |

| Berger & Davelaar | + | + | + | ? | + | + |

| Baceviciute, Lucas, Terkildsen, Makransky (a) | + | + | + | ? | + | + |

| Liu, Xu, Yang, Li, Huang | + | + | + | ? | + | + |

| Parsons, Magill, Boucher, Zhang, Zogbo, Bérubé, Scheffer, Beauregard, Faubert | + | + | + | ? | + | + |

| Dan & Reiner (b) | + | ? | + | ? | + | + |

| Baceviciute, Terkildsen, Makransky (b) | + | + | + | ? | + | + |

| Tian, Zhang, Li | + | + | + | ? | + | + |

| Jeong, Ko, Han, Oh, Park, Kim, Ko | + | + | + | ? | + | + |

| Zhao, Zhang, Chu, Zhu, Hu, He & Yang | + | + | + | ? | + | + |

| Liu, Laeng, Czajkowski | ? | + | + | ? | + | + |

| Nenna, Zanardi, Gamberini | ? | + | + | ? | ? | ? |

| Drouot, Bigot, Bricard, Bougrenet, Nourrit | + | + | + | ? | ? | ? |

| Mondellini, Pirovano, Colombo, Arlati, Sacco, Rizzo, Mastropietro | + | + | + | ? | + | + |

| Abdurrahman, Zheng, Yeh | + | + | + | ? | + | + |

| Barrett, Poe, O’Camb, Woodruff, Harrison, Dolguikh, Chuong, Klassen, Zhang, Joseph, Blair | + | + | + | + | ? | + |

| Redlinger, Glas, Rong | + | + | + | + | + | + |

| Sagehorn, Kisker, Johnsdorf, Gruber, Schöne | ? | + | + | + | + | + |

| Sumardani & Lin | ? | + | + | - | + | - |

| Luque, Armada, Piovano, Jurado-Barba, Santamaría | ? | + | + | + | + | + |

| Kisker, Sagehorn, Hofmann, Gruber, Schöne | + | + | + | + | + | + |

| Schirm, Gómez-Vargas, Perusquía-Hernández, Skarbez, Isoyama, Uchiyama | ? | + | + | + | ? | ? |

| Volmer, Baumeister, Von Itzstein, Schlesewsky, Bornkessel-Schlesewsky, Thomas | ? | + | + | + | + | + |

| Alessa, Alhaag, Al-harkan, Ramadan, Alqahtani | ? | + | + | + | + | + |

| Chang, Bai, Zhang, Gupta, He, Billinghurst | ? | + | + | + | + | + |

| Lee, De Jong, Donkers, Jarodzka, Van Merriënboer | ? | + | + | ? | + | ? |

| Kazemi, Kumar, Lee | + | + | + | + | + | + |

ROBINS-I Risk of Bias Assessment

| Study | Confounding | Selection of Participants | Classification of Interventions | Deviations from Intended Interventions | Missing Data | Measurement of Outcomes | Selection of Reported Result | Overall Risk of Bias |

|---|---|---|---|---|---|---|---|---|

| Magosso, Crescenzio, Ricci, Piastra, & Ursino | + | + | + | + | + | ? | + | + |

| Kakkos, Dimitrakopoulos, Gao, Zhang, Qi, Matsopoulos, Thakor, Bezerianos, Sun | + | + | + | + | + | ? | + | + |

| Zacharis, Mikropoulos, Priovolou | ? | - | + | + | + | ? | ? | - |

| Slobounov, Ray, Johnson, Slobounov, Newell | + | + | + | + | + | + | + | + |

| Zacharis, Mikropoulos, Kalyvioti | ? | - | + | + | + | ? | ? | ? |

| Tremmel, Herff, Sato, Tetsuya, Rechowicz, Yamani, Krusienski | + | + | + | + | + | + | + | + |

| Petukhov, Glazyrin, Gorokhov, Steeshina, Tanyrverdev | ? | - | + | + | + | ? | ? | - |

| Aksoy, Ufodiama, Bateson, Martin, Asghar | + | + | + | + | + | + | + | + |

| Kirchner, Kim, Tabie, Wohrle, Maurus, & Kirchner | ? | - | + | + | + | + | ? | ? |

| Suarez, Grasmann, Ochoa, Toro, Mejia, Hernandez | ? | ? | + | + | + | + | + | + |

| Krugliak & Clarke | ? | ? | + | + | + | + | + | + |

| Yang & Wang | ? | ? | + | + | + | + | + | ? |

| Elkin, Beaubien, Damaghi, Chang, Kessler | ? | + | + | + | + | + | + | + |

| Bernal, Jung, Yassi, Hidalgo, Alemu, Barnes-Diana, Maes | ? | + | + | + | + | + | + | + |

| Hebbar, Vinod, Shah, Pashilkar, Biswas | ? | + | + | + | + | + | + | + |

| Chiossi, Ou, Gerhardt, Putze, Mayer | ? | + | + | + | + | + | + | + |

| Atici-Ulusu, Ikiz, Taskapilioglu, Gunduz | - | - | + | + | + | + | ? | - |

| Reiner & Gelfeld | ? | + | + | + | + | ? | ? | ? |

References

- Skulmowski, A.; Xu, K.M. Understanding Cognitive Load in Digital and Online Learning: A New Perspective on Extraneous Cognitive Load. Educ. Psychol. Rev. 2022, 34, 171–196. [Google Scholar] [CrossRef]

- Makransky, G.; Terkildsen, T.S.; Mayer, R.E. Adding immersive virtual reality to a science lab simulation causes more presence but less learning. Learn. Instr. 2019, 60, 225–236. [Google Scholar] [CrossRef]

- Mayer, R.E. (Ed.) Multimedia Learning; Cambridge University Press: Cambridge, UK, 2001. [Google Scholar]

- Mayer, R.E. (Ed.) Multimedia Learning, 2nd ed.; Cambridge University Press: Cambridge, UK, 2009. [Google Scholar]

- Slater, M.; Wilbur, S. A framework for immersive virtual environments (FIVE): Speculations on the role of presence in virtual environments. Presence Teleoperators Virtual Environ. 1997, 6, 603–616. [Google Scholar] [CrossRef]

- Dede, C. Immersive interfaces for engagement and learning. Science 2009, 323, 66–69. [Google Scholar] [CrossRef] [PubMed]

- Makransky, G.; Lilleholt, L. A structural equation modeling investigation of the emotional value of immersive virtual reality in education. Educ. Technol. Res. Dev. 2018, 66, 1141–1164. [Google Scholar] [CrossRef]

- Radianti, J.; Majchrzak, T.; Fromm, J.; Wohlgenannt, I. A Systematic Review of Immersive Virtual Reality Applications for Higher Education: Design Elements, Lessons Learned, and Research Agenda. Comput. Educ. 2020, 147, 103778. [Google Scholar] [CrossRef]

- Calabuig-Moreno, F.; Haist, M.; García-Sánchez, M.; Fombona, J.; García-Tascón, M. The emergence of technology in physical education: A general bibliometric analysis with a focus on virtual and augmented reality. Sustainability 2020, 12, 2728. [Google Scholar] [CrossRef]

- Woods, A.J.; Docherty, T.; Koch, R.M. Image distortions in stereoscopic video systems. In Stereoscopic Displays and Applications IV; SPIE: Bellingham, WA, USA, 1993; Volume 1915, pp. 36–48. [Google Scholar] [CrossRef]

- LaValle, S.M. (Ed.) Virtual Reality; Cambridge University Press: Cambridge, UK, 2020. [Google Scholar]

- Keenan, I.D.; Powell, M. Interdimensional travel: Visualisation of 3D–2D transitions in anatomy learning. In Biomedical Visualization; Rea, P., Ed.; Advances in Experimental Medicine and Biology; Springer: Cham, Switzerland, 2020; Volume 1235, pp. 55–69. [Google Scholar] [CrossRef]

- Choi, B.; Baek, Y. Exploring factors of media characteristic influencing flow in learning through virtual worlds. Comput. Educ. 2011, 57, 2382–2394. [Google Scholar] [CrossRef]

- Dan, A.; Reiner, M. Reduced mental load in learning a motor visual task with virtual 3D method. J. Comput. Assist. Learn. 2017, 34, 12216. [Google Scholar] [CrossRef]

- Dan, A.; Reiner, M. EEG-based cognitive load of processing events in 3D virtual worlds is lower than processing events in 2D displays. Int. J. Psychophysiol. 2017, 113, 16–24. [Google Scholar] [CrossRef]

- Kang, M.L.; Wong, C.M.J.; Tan, H.; Bohari, A.; Han, T.O.; Soon, Y. A secondary learning curve in 3D versus 2D imaging in laparoscopic training of surgical novices. Surg. Endosc. 2020, 34, 2663–2672. [Google Scholar] [CrossRef] [PubMed]

- Kunert, W.; Storz, P.; Dietz, N.; Axt, S.; Falch, C.; Kirschniak, A.; Wilhelm, P. Learning curves, potential and speed in training of laparoscopic skills: A randomised comparative study in a box trainer. Surg. Endosc. 2021, 35, 1624–1635. [Google Scholar] [CrossRef] [PubMed]

- Savva, M.; Chang, A.X.; Agrawala, M. SceneSuggest: Context-Driven 3D Scene Design. arXiv 2017, arXiv:1703.00061. Available online: https://arxiv.org/abs/1703.00061 (accessed on 15 September 2025).

- Bogomolova, K.; van der Ham, I.J.M.; Dankbaar, M.E.W.; van den Broek, W.W.; Hovius, S.E.R.; van der Hage, J.A.; Hierck, B.P. The effect of stereoscopic augmented reality visualization on learning anatomy and the modifying effect of visual-spatial abilities: A double-center randomized controlled trial. Anat. Sci. Educ. 2020, 13, 558–567. [Google Scholar] [CrossRef] [PubMed]

- Chikha, A.B.; Khacharem, A.; Trabelsi, K.; Bragazzi, N.L. The effect of spatial ability in learning from static and dynamic visualizations: A moderation analysis in 6-year-old children. Front. Psychol. 2021, 12, 583968. [Google Scholar] [CrossRef]

- Dalgarno, B.; Lee, M.J.W. What are the learning affordances of 3-D virtual environments? Br. J. Educ. Technol. 2010, 41, 10–32. [Google Scholar] [CrossRef]

- Dengel, A.; Maegdefrau, J. Immersive Learning Predicted: Presence, Prior Knowledge, and School Performance Influence Learning Outcomes in Immersive Educational Virtual Environments. In Proceedings of the 2020 6th International Conference of the Immersive Learning Research Network (iLRN), San Luis Obispo, CA, USA, 21–25 June 2020; IEEE: Piscataway, NJ, USA; pp. 14–20. [Google Scholar]

- Sun, R.; Wu, Y.J.; Cai, Q. The effect of a virtual reality learning environment on learners’ spatial ability. Virtual Real. 2019, 23, 385–398. [Google Scholar] [CrossRef]

- Yang, F.-Y.; Wang, H.-Y. Tracking visual attention during learning of complex science concepts with augmented 3D visualizations. Comput. Educ. 2023, 191, 104659. [Google Scholar] [CrossRef]

- Sweller, J. Cognitive load during problem solving: Effects on learning. Cogn. Sci. 1988, 12, 257–285. [Google Scholar] [CrossRef]

- Paas, F.; Renkl, A.; Sweller, J. Cognitive load theory and instructional design: Recent developments. Educ. Psychol. 2003, 38, 1–4. [Google Scholar] [CrossRef]

- Van Merriënboer, J.J.G.; Sweller, J. Cognitive load theory and complex learning: Recent developments and future directions. Educ. Psychol. Rev. 2005, 17, 147–177. [Google Scholar] [CrossRef]

- Paas, F.; Renkl, A.; Sweller, J. Cognitive Load Theory: New Conceptualizations, Specifications, and Integrated Research Perspectives. Educ. Psychol. Rev. 2010, 22, 115–121. [Google Scholar] [CrossRef]

- Choi, Y.K.; Kim, J. Learning analytics for diagnosing cognitive load in e-learning using Bayesian network analysis. Sustainability 2021, 13, 10149. [Google Scholar] [CrossRef]

- Debue, N.; Van de Leemput, C. What does germane load mean? An empirical contribution to the cognitive load theory. Front. Psychol. 2014, 5, 1099. [Google Scholar] [CrossRef] [PubMed]

- Klepsch, M.; Seufert, T.; Sailer, M. Development and Validation of Two Instruments Measuring Intrinsic, Extraneous, and Germane Cognitive Load. Front. Psychol. 2017, 8, 1997. [Google Scholar] [CrossRef]

- De Jong, T. Cognitive load theory, educational research, and instructional design: Some food for thought. Instr. Sci. 2010, 38, 105–134. [Google Scholar] [CrossRef]

- Antonenko, P.; Paas, F.; Grabner, R.; van Gog, T. Using electroencephalography to measure cognitive load. Educ. Psychol. Rev. 2010, 22, 425–438. [Google Scholar] [CrossRef]

- Kim, H.J.; Park, Y.; Lee, J. The Validity of Heart Rate Variability (HRV) in Educational Research and a Synthesis of Recommendations. Educ. Psychol. Rev. 2024, 36, 42. [Google Scholar] [CrossRef]

- Berger, H. Über das Elektrenkephalogramm des Menschen. Arch. Psychiatr. Nervenkr. 1929, 87, 527–570. [Google Scholar] [CrossRef]

- Başar, E. Oscillations in the brain and cognitive functions. In Brain Function and Oscillations: IV; Springer: Berlin/Heidelberg, Germany, 2001. [Google Scholar]

- Buzsáki, G. (Ed.) Rhythms of the Brain; Oxford University Press: Oxford, UK, 2006. [Google Scholar]

- Gevins, A.; Smith, M.E. Neurophysiological measures of cognitive workload during human-computer interactions. Theor. Issues Erg. Sci. 2003, 4, 113–131. [Google Scholar] [CrossRef]

- Klimesch, W. EEG alpha and theta oscillations reflect cognitive and memory performance: A review and analysis. Brain Res. Rev. 1999, 29, 169–195. [Google Scholar] [CrossRef]

- Klimesch, W.; Schack, B.; Sauseng, P. The functional significance of theta and upper alpha oscillations for working memory: A review. Exp. Psychol. 2005, 52, 99–108. [Google Scholar] [CrossRef] [PubMed]

- Puma, S.; Matton, N.; Paubel, P.V.; Raufaste, É.; El-Yagoubi, R. Using theta and alpha band power to assess cognitive workload in multitasking environments. Int. J. Psychophysiol. 2018, 123, 111–120. [Google Scholar] [CrossRef] [PubMed]

- Cavanagh, J.F.; Frank, M.J. Frontal theta as a mechanism for cognitive control. Trends Cogn. Sci. 2014, 18, 414–421. [Google Scholar] [CrossRef] [PubMed]

- Raufi, B.; Longo, L. An evaluation of the EEG alpha-to-theta and theta-to-alpha band ratios as indexes of mental workload. Front. Neuroinform. 2022, 16, 861967. [Google Scholar] [CrossRef]

- Luck, S.J. An Introduction to the Event-Related Potential Technique, 2nd ed.; MIT Press: Cambridge, MA, USA, 2014. [Google Scholar]

- Polich, J. Updating P300: An integrative theory of P3a and P3b. Clin. Neurophysiol. 2007, 118, 2128–2148. [Google Scholar] [CrossRef]

- Holmqvist, K.; Nyström, M.; Andersson, R.; Dewhurst, R.; Jarodzka, H.; van de Weijer, J. (Eds.) Eye Tracking: A Comprehensive Guide to Methods and Measures; Oxford University Press: Oxford, UK, 2011. [Google Scholar]

- Duchowski, A.T. (Ed.) Eye Tracking Methodology: Theory and Practice, 3rd ed.; Springer International Publishing: Berlin/Heidelberg, Germany, 2017. [Google Scholar] [CrossRef]

- Lin, C.J.; Prasetyo, Y.T.; Widyaningrum, R. Eye movement parameters for performance evaluation in projection-based stereoscopic display. J. Eye Mov. Res. 2018, 11, 3. [Google Scholar] [CrossRef]

- Liu, H.; Laeng, B.; Czajkowski, N.O. Does stereopsis improve face identification? A study using a virtual reality display with integrated eye-tracking and pupillometry. Acta Psychol. 2020, 210, 103142. [Google Scholar] [CrossRef]

- Salehi Fadardi, M.; Salehi Fadardi, J.; Mahjoob, M.; Doosti, H. Post-saccadic Eye Movement Indices Under Cognitive Load: A Path Analysis to Determine Visual Performance. J. Ophthalmic Vis. Res. 2022, 17, 397–404. [Google Scholar] [CrossRef]

- Di Stasi, L.L.; Renner, R.; Staehr, P.; Helmert, J.R.; Velichkovsky, B.M.; Cañas, J.J.; Catena, A.; Pannasch, S. Saccadic peak velocity sensitivity to variations in mental workload. Aviat. Space Environ. Med. 2010, 81, 413–417. [Google Scholar] [CrossRef]

- Hess, E.H.; Polt, J.M. Pupil size in relation to mental activity during simple problem-solving. Science 1964, 143, 1190–1192. [Google Scholar] [CrossRef]

- Beatty, J.; Lucero-Wagoner, B. The pupillary system. In Handbook of Psychophysiology; Cacioppo, J.T., Tassinary, L.G., Berntson, G.G., Eds.; Cambridge University Press: Cambridge, UK, 2000; pp. 142–162.

- Mitre-Hernandez, H.; Carrillo, R.; Lara-Alvarez, C. Pupillary responses for cognitive load measurement to classify difficulty levels in an educational video game: Empirical study. JMIR Serious Games 2020, 9, e21620.

- Winn, B.; Whitaker, D.; Elliott, D.B.; Phillips, N.J. Factors affecting light-adapted pupil size in normal human subjects. Invest. Ophthalmol. Vis. Sci. 1994, 35, 1132–1137.

- Bradley, M.M.; Miccoli, L.; Escrig, M.A.; Lang, P.J. The pupil as a measure of emotional arousal and autonomic activation. Psychophysiology 2008, 45, 602–607.

- Liu, X.; Yang, L.; Huang, Y. Impacts of cues on learning and attention in immersive 360-degree video: An eye-tracking study. Front. Psychol. 2022, 12, 792069.

- Ouzzani, M.; Hammady, H.; Fedorowicz, Z.; Elmagarmid, A. Rayyan—A web and mobile app for systematic reviews. Syst. Rev. 2016, 5, 210.

- Klimesch, W.; Doppelmayr, M.; Stadler, W.; Pollhuber, D.; Sauseng, P.; Rohm, D. Episodic retrieval is reflected by a process specific increase in human electroencephalographic theta activity. Neurosci. Lett. 2001, 302, 49–52.

- Ergenoglu, T.; Demiralp, T.; Bayraktaroglu, Z.; Ergen, M.; Beydagi, H.; Uresin, Y. Alpha rhythm of the EEG modulates visual detection performance in humans. Cogn. Brain Res. 2004, 20, 376–383.

- Grabner, R.H.; Fink, A.; Stipacek, A.; Neuper, C.; Neubauer, A.C. Intelligence and working memory systems: Evidence of neural efficiency in alpha band ERD. Cogn. Brain Res. 2004, 20, 212–225.

- Käthner, I.; Wriessnegger, S.C.; Müller-Putz, G.R.; Kübler, A.; Halder, S. Effects of mental workload and fatigue on the P300, alpha and theta band power during operation of an ERP (P300) brain-computer interface. Biol. Psychol. 2014, 102, 118–129.

- Xie, J.; Xu, G.; Wang, J.; Li, M.; Han, C.; Jia, Y. Effects of mental load and fatigue on steady-state evoked potential based brain-computer interface tasks: A comparison of periodic flickering and motion-reversal based visual attention. PLoS ONE 2016, 11, e0163426.

- Fournier, L.R.; Wilson, G.F.; Swain, C.R. Electrophysiological, behavioral, and subjective indexes of workload when performing multiple tasks: Manipulations of task difficulty and training. Int. J. Psychophysiol. 1999, 31, 129–145.

- Berka, C.; Levendowski, D.J.; Lumicao, M.N.; Yau, A.; Davis, G.; Zivkovic, V.T.; Olmstead, R.E.; Tremoulet, P.D.; Craven, P.L. EEG correlates of task engagement and mental workload in vigilance, learning, and memory tasks. Aviat. Space Environ. Med. 2007, 78 (Suppl. 5), B231–B244.

- Mikinka, E.; Siwak, M. Recent advances in electromagnetic shielding properties of carbon-fibre-reinforced polymer composites: A topical review. J. Mater. Sci. Mater. Electron. 2021, 32, 21832–21853.

- D’Angelo, G.; Rampone, S. Feature extraction and soft computing methods for aerospace structure defect classification. Measurement 2016, 85, 192–209.

- Laganà, F.; Bibbò, L.; Calcagno, S.; De Carlo, D.; Pullano, S.A.; Pratticò, D.; Angiulli, G. Smart electronic device-based monitoring of SAR and temperature variations in indoor human tissue interaction. Sensors 2023, 23, 2439.

- Broadbent, D.P.; Iwanski, G.D.; Ellmers, T.J.; Parsler, J.; Szameitat, A.J.; Bishop, D.T. Cognitive load, working memory capacity and driving performance: A preliminary fNIRS and eye tracking study. Transp. Res. F Traffic Psychol. Behav. 2023, 92, 121–132.

- Park, B.K.; Plass, J.L.; Brünken, R. Emotional design and positive emotions in multimedia learning: An eye-tracking study on the use of anthropomorphisms. Comput. Educ. 2015, 86, 30–42.

- Stern, J.A.; Boyer, D.; Schroeder, D. Blink rate: A possible measure of fatigue. Hum. Factors 1994, 36, 285–297.

- Zagermann, J.; Pfeil, U.; Reiterer, H. Measuring cognitive load using eye tracking technology in visual computing. In Proceedings of the Sixth Workshop on Beyond Time and Errors: Novel Evaluation Methods for Visualization; ACM Press: Baltimore, MD, USA, 2016; pp. 78–85.

- John, B. Pupil diameter as a measure of emotion and sickness in VR. In Proceedings of the 11th ACM Symposium on Eye Tracking Research & Applications (ETRA), Denver, CO, USA, 25–28 June 2019; pp. 1–3.

- Sterne, J.A.C.; Savović, J.; Page, M.J.; Elbers, R.G.; Blencowe, N.S.; Boutron, I.; Cates, C.J.; Cheng, H.Y.; Corbett, M.S.; Eldridge, S.M.; et al. RoB 2: A revised tool for assessing risk of bias in randomized trials. BMJ 2019, 366, l4898.

- Sterne, J.A.C.; Hernán, M.A.; Reeves, B.C.; Savović, J.; Berkman, N.D.; Viswanathan, M.; Henry, D.; Altman, D.G.; Ansari, M.T.; Boutron, I.; et al. ROBINS-I: A tool for assessing risk of bias in non-randomized studies of interventions. BMJ 2016, 355, i4919.

- Aksoy, M.; Ufodiama, C.E.; Bateson, A.D.; Martin, S.; Asghar, A.U.R. A comparative experimental study of visual brain event-related potentials to a working memory task: Virtual reality head-mounted display versus a desktop computer screen. Exp. Brain Res. 2021, 239, 3463–3475.

- Alessa, F.M.; Alhaag, M.H.; Al-harkan, I.M.; Ramadan, M.Z.; Alqahtani, F.M. A neurophysiological evaluation of cognitive load during augmented reality interactions in various industrial maintenance and assembly tasks. Sensors 2023, 23, 7698.

- Baceviciute, S.; Terkildsen, T.; Makransky, G. Remediating learning from non-immersive to immersive media: Using EEG to investigate the effects of environmental embeddedness on reading in Virtual Reality. Comput. Educ. 2021, 164, 104122.

- Barrett, R.C.A.; Poe, R.; O’Camb, J.W.; Woodruff, C.; Harrison, S.M.; Dolguikh, K.; Chuong, C.; Klassen, A.D.; Zhang, R.; Ben Joseph, R.; et al. Comparing virtual reality, desktop-based 3D, and 2D versions of a category learning experiment. PLoS ONE 2022, 17, e0275119.

- Berger, A.M.; Davelaar, E.J. Frontal alpha oscillations and attentional control: A virtual reality neurofeedback study. Neuroscience 2018, 378, 189–197.

- Chang, Z.; Bai, H.; Zhang, L.; Gupta, K.; He, W.; Billinghurst, M. The impact of virtual agents’ multimodal communication on brain activity and cognitive load in Virtual Reality. Front. Virtual Real. 2022, 3, 995090.

- Chiossi, F.; Ou, C.; Gerhardt, C.; Putze, F.; Mayer, S. Designing and evaluating an adaptive virtual reality system using EEG frequencies to balance internal and external attention states. Int. J. Hum. Comput. Stud. 2025, 196, 103433.

- Drouot, M.; Bricard, E.; de Bougrenet, J.; Nourrit, V. Augmented reality on industrial assembly line: Impact on effectiveness and mental workload. Appl. Ergon. 2022, 103, 103793.

- Jeong, H.-G.; Ko, Y.-H.; Han, C.; Oh, S.-Y.; Park, K.W.; Kim, T.; Ko, D. The impact of 3D and 2D TV watching on neurophysiological responses and cognitive functioning in adults. Eur. J. Public Health 2015, 25, 1047–1052.

- Luque, F.; Armada, V.; Piovano, L.; Jurado-Barba, R.; Santamaría, A. Understanding pedestrian cognition workload in traffic environments using virtual reality and electroencephalography. Electronics 2024, 13, 1453.

- Mondellini, M.; Pirovano, I.; Colombo, V.; Arlati, S.; Sacco, M.; Rizzo, G.; Mastropietro, A. A multimodal approach exploiting EEG to investigate the effects of VR environment on mental workload. Int. J. Hum. Comput. Interact. 2023, 39, 643–658.

- Nenna, F.; Zorzi, D.; Gamberini, L. Enhanced interactivity in VR-based telerobotics: An eye-tracking investigation of human performance and workload. Int. J. Hum. Comput. Stud. 2023, 177, 103079.

- Parong, J.; Mayer, R.E. Cognitive and affective processes for learning science in immersive virtual reality. J. Comput. Assist. Learn. 2021, 37, 226–241.

- Parsons, B.; Magill, T.; Boucher, A.; Zhang, M.; Zogbo, K.; Bérubé, S.; Scheffer, O.; Beauregard, M.; Faubert, J. Enhancing cognitive function using perceptual-cognitive training. Clin. EEG Neurosci. 2016, 47, 37–47.

- Redlinger, E.; Glas, B.; Rong, Y. Impact of visual game-like features on cognitive performance in a virtual reality working memory task: Within-subjects experiment. JMIR Serious Games 2022, 10, e35295.

- Sagehorn, M.; Kisker, J.; Johnsdorf, M.; Gruber, T.; Schöne, B. A comparative analysis of face and object perception in 2D laboratory and virtual reality settings: Insights from induced oscillatory responses. Exp. Brain Res. 2024, 242, 2765–2783.

- Slobounov, S.M.; Ray, W.; Johnson, B.; Slobounov, E.; Newell, K.M. Modulation of cortical activity in 2D versus 3D virtual reality environments: An EEG study. Int. J. Psychophysiol. 2015, 95, 254–260.

- Suárez, J.X.; Gutiérrez, K.; Ochoa, J.F.; Toro, J.P.; Mejía, A.M.; Hernández, A.M. Changes in brain activity of trainees during laparoscopic surgical virtual training assessed with electroencephalography. Brain Res. 2022, 1783, 147836.

- Volmer, B.; Baumeister, J.; Von Itzstein, S.; Schlesewsky, M.; Bornkessel-Schlesewsky, I.; Thomas, B.H. Event related brain responses reveal the impact of spatial augmented reality predictive cues on mental effort. IEEE Trans. Vis. Comput. Graph. 2023, 29, 2384–2397.

- Zhao, G.; Zhu, W.; Hu, B.; He, H.; Yang, L. An augmented reality-based mobile photography application to improve learning gain, decrease cognitive load, and achieve better emotional state. Int. J. Hum. Comput. Interact. 2022, 39, 643–658.

- Abdurrahman, U.A.; Zhang, L.; Yeh, S.-C. Cognitive workload evaluation of landmarks and routes using virtual reality. PLoS ONE 2022, 17, e0268399.

- Atici-Ulusu, H.; Ikiz, Y.D.; Taskapilioglu, Ö.; Gunduz, T. Effects of augmented reality glasses on the cognitive load of assembly operators in the automotive industry. Int. J. Comput. Integr. Manuf. 2021, 34, 487–499.

- Baceviciute, S.; Laugwitz, G.; Terkildsen, T.; Makransky, G. Investigating the redundancy principle in immersive virtual reality environments: An eye-tracking and EEG study. J. Comput. Assist. Learn. 2021, 38, 120–136.

- Bernal, G.; Jung, H.; Yassı, İ.E.; Hidalgo, N.; Alemu, Y.; Barnes-Diana, T.; Maes, P. Unraveling the dynamics of mental and visuospatial workload in Virtual Reality environments. Computers 2024, 13, 246.

- Elkin, R.; Beaubien, J.M.; Damaghi, N.; Chang, T.P.; Kessler, D.O. Dynamic cognitive load assessment in virtual reality. Simul. Gaming 2024, 55, 89–108.

- Ghani, U.; Niazi, I.K.; Taylor, D. Efficacy of a single-task ERP measure to evaluate cognitive workload during a novel exergame. Front. Hum. Neurosci. 2021, 15, 742384.

- Hebbar, P.A.; Vinod, S.; Shah, A.K.; Pashilkar, A.A.; Biswas, P. Cognitive load estimation in VR flight simulator. J. Eye Mov. Res. 2023, 15, 11.

- Kazemi, R.; Kumar, N.; Lee, S.C. Comparative analysis of teleportation and joystick locomotion in virtual reality navigation with different postures: A comprehensive examination of mental workload. Int. J. Hum. Comput. Interact. 2024, 41, 947–9958.

- Kisker, J.; Johnsdorf, M.; Sagehorn, M.; Hofmann, T.; Gruber, T.; Schöne, B. Visual information processing of 2D, virtual 3D and real-world objects marked by theta band responses: Visuospatial processing and cognitive load as a function of modality. Eur. J. Neurosci. 2024, 61, e16634.

- Kakkos, I.; Dimitrakopoulos, G.N.; Gao, L.; Zhang, Y.; Qi, P.; Matsopoulos, G.K.; Thakor, N.; Bezerianos, A.; Sun, Y. Mental workload drives different reorganizations of functional cortical connectivity between 2D and 3D simulated flight experiments. IEEE Trans. Neural Syst. Rehabil. Eng. 2019, 27, 1704–1713.

- Kirchner, E.A.; Tabie, M.; Wöhrle, H.; Maurus, M.; Kim, S.K.; Kirchner, F. An intelligent man-machine interface—Multi-robot control adapted for task engagement based on single-trial detectability of P300. Front. Hum. Neurosci. 2016, 10, 291.

- Klotzsche, F.; Gollmitzer, M.; Villringer, A.; Sommer, W.; Nikulin, V.V.; Ohl, S. Visual short-term memory-related EEG components in a virtual reality setup. Psychophysiology 2023, 60, e14378.

- Kober, K.; Neuper, C. Cortical correlate of spatial presence in 2D and 3D interactive virtual reality: An EEG study. Int. J. Psychophysiol. 2012, 83, 365–374.