A Review of Socially Assistive Robotics in Supporting Children with Autism Spectrum Disorder

Abstract

1. Introduction

1.1. Types, Diagnosis, and Treatment of Autism

1.2. Robot-Assisted Therapy (RAT)

1.3. Existing Review

2. Materials and Methods

2.1. Research Questions

- RQ1: What social robots have been employed for RAT in children with ASD, and how do they differ in terms of functionality, performance, and cost?

- RQ2: How are social robots integrated into therapeutic interventions for individuals with ASD, and which deployment strategies are the most effective?

- RQ3: Which developmental areas, communication modalities, and target behaviors are primarily targeted by robot-assisted interventions for ASD?

2.2. Search Approach

- S1—(“humanoid robots” OR “social robots”) AND “autism treatment”

- S2—“Robot-assisted therapy” AND “autism spectrum disorder”

- S3—“Assistive technology” AND “social skills” AND “autism”

- S4—“Socially assistive robots” AND “autism treatment”

- S5—“Robots” AND “autism treatment”
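The search strings above can be captured in a small script so that the same queries are issued to every database and the merged results are de-duplicated before screening. This is only a minimal sketch: the helper names (`combined_query`, `deduplicate`) and the record shape (a dictionary with a `title` key) are assumptions made for illustration and are not taken from the review's actual workflow.

```python
# Search strings S1-S5 from Section 2.2; everything else here is illustrative.
SEARCH_STRINGS = {
    "S1": '("humanoid robots" OR "social robots") AND "autism treatment"',
    "S2": '"Robot-assisted therapy" AND "autism spectrum disorder"',
    "S3": '"Assistive technology" AND "social skills" AND "autism"',
    "S4": '"Socially assistive robots" AND "autism treatment"',
    "S5": '"Robots" AND "autism treatment"',
}


def combined_query(strings):
    """Join every search string into one OR-ed query, parenthesising each part."""
    return " OR ".join(f"({query})" for query in strings.values())


def deduplicate(records):
    """Keep the first record for each title, comparing case- and space-insensitively.

    `records` is a hypothetical list of dicts that each carry a "title" key.
    """
    seen = set()
    unique = []
    for record in records:
        key = " ".join(record["title"].lower().split())
        if key not in seen:
            seen.add(key)
            unique.append(record)
    return unique
```

Matching on normalised titles is a deliberately simple choice; a real pipeline would more likely compare DOIs where they are available.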

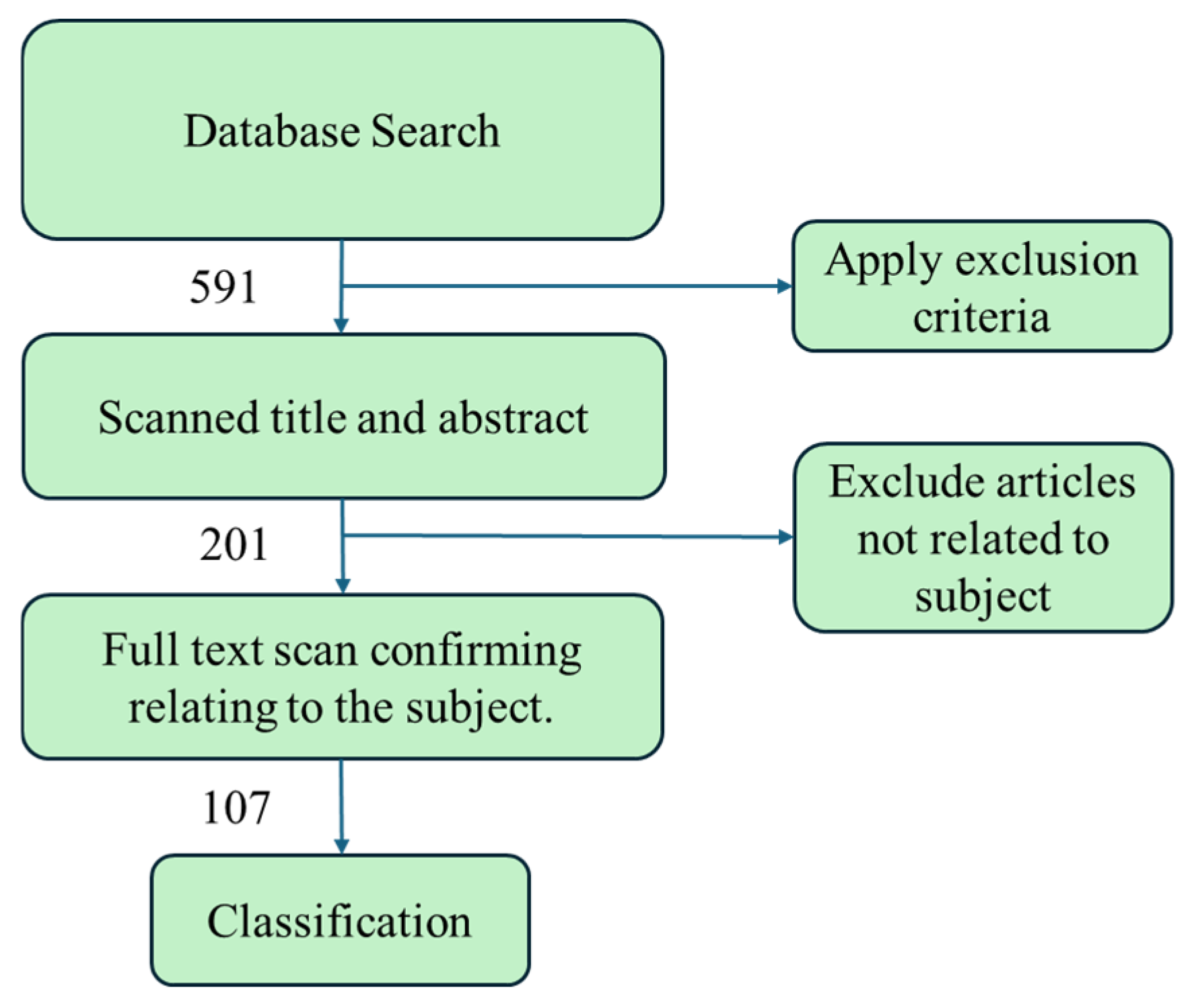

2.3. Inclusion and Exclusion Criteria

- I1: Original research articles published in English.

- I2: Explicitly focused on the use of robots in therapeutic interventions for children with ASD.

- I3: Involved robot-assisted therapy targeting developmental or behavioral outcomes relevant to ASD.

- E1: Did not involve either ASD or robotic applications.

- E2: Non-peer-reviewed sources, such as preprints, theses, and technical reports.

- E3: Review articles, editorials, commentaries, and other secondary sources.
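One way to apply the criteria above consistently is to encode them as a screening function that reports which criteria a candidate article fails. The sketch below is hypothetical: the `Record` fields are invented for illustration, and E1 is interpreted as excluding any article that lacks either an ASD focus or a robotic application, which is an assumption about the intended reading.

```python
from dataclasses import dataclass


@dataclass
class Record:
    """Hypothetical metadata for one candidate article."""
    in_english: bool
    original_research: bool     # False for reviews, editorials, commentaries
    peer_reviewed: bool         # False for preprints, theses, technical reports
    involves_asd: bool
    involves_robot: bool
    child_therapy_focus: bool   # robot used in therapy for children with ASD
    targets_asd_outcomes: bool  # developmental or behavioral outcomes


def screen(record: Record) -> list[str]:
    """Return the codes of every criterion the record fails; empty means include."""
    failed = []
    if not (record.in_english and record.original_research):
        failed.append("I1")
    if not record.child_therapy_focus:
        failed.append("I2")
    if not record.targets_asd_outcomes:
        failed.append("I3")
    # Assumption: E1 excludes articles lacking ASD or lacking a robotic application.
    if not (record.involves_asd and record.involves_robot):
        failed.append("E1")
    if not record.peer_reviewed:
        failed.append("E2")
    if not record.original_research:
        failed.append("E3")
    return failed
```

Returning the failed codes rather than a single boolean keeps a record of why each article was excluded, which is the kind of information a PRISMA flowchart summarises.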

2.4. PRISMA Flowchart

2.5. Data Extraction and Synthesis

2.6. Quality Assessment

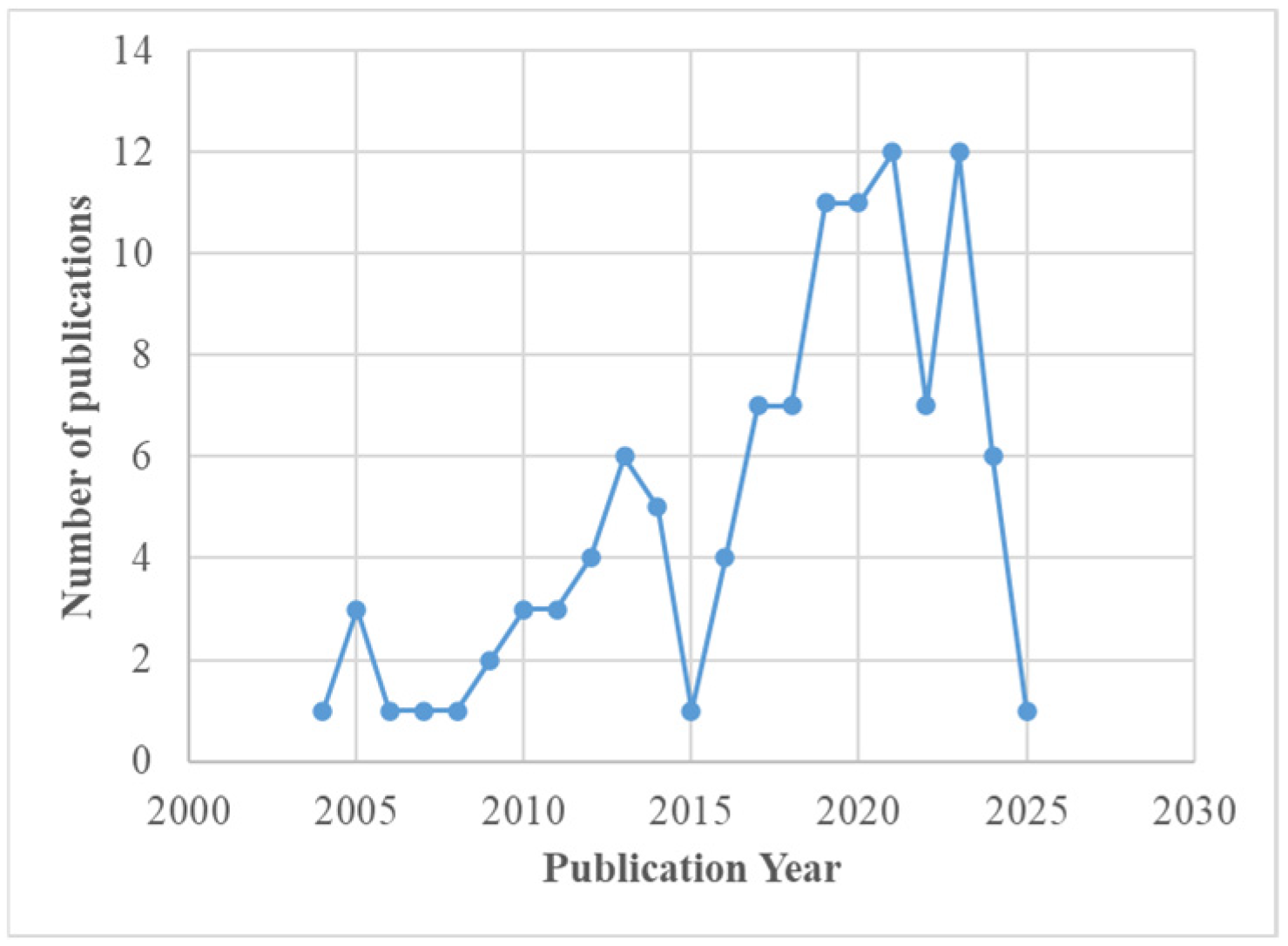

2.7. Journal and Conference Publications

2.8. Publication Map

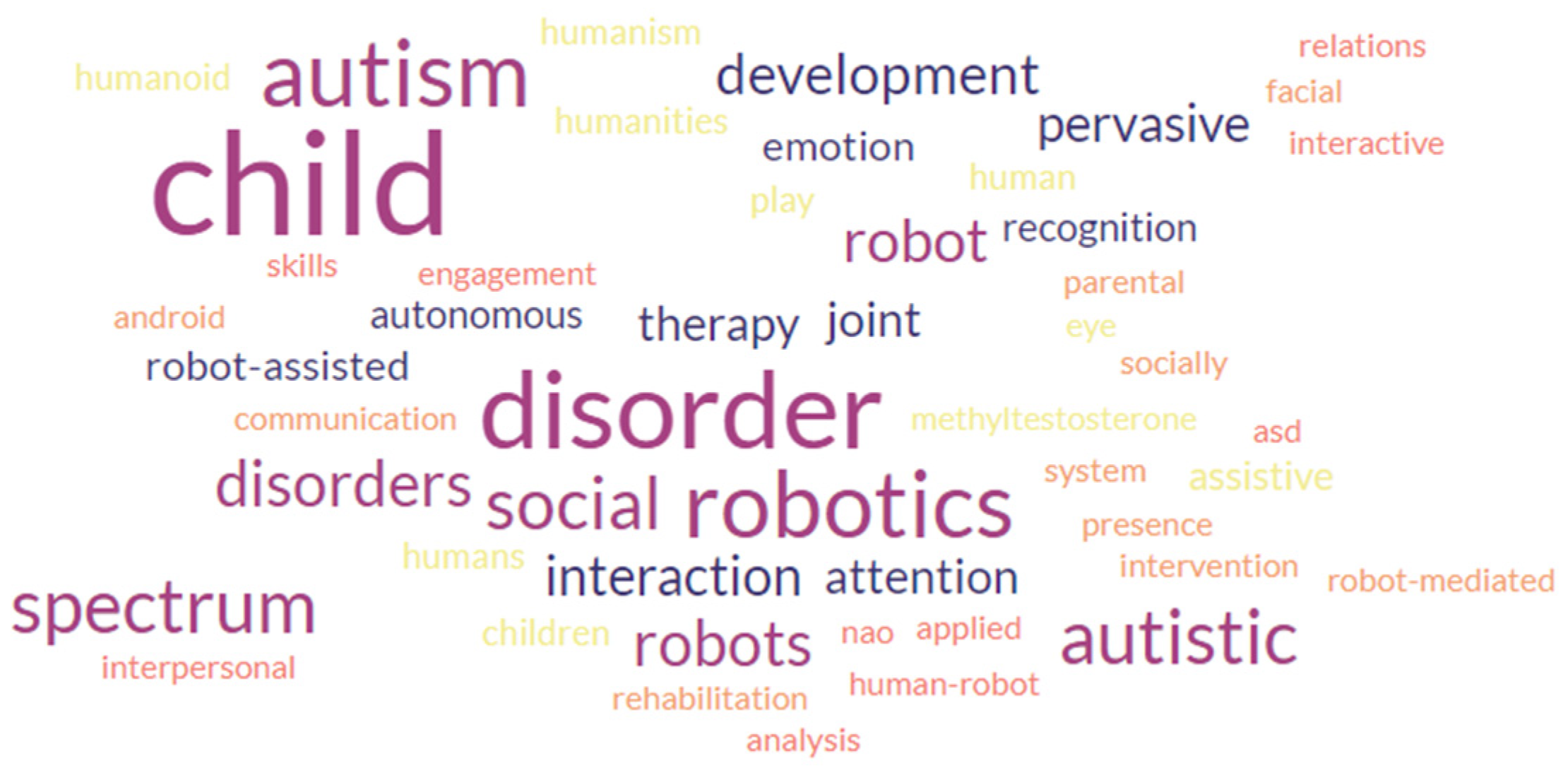

2.9. Frequency Analysis of the Keywords

3. Results

3.1. Social Robots for Treating ASD

3.2. Humanoid Robots

3.2.1. Zeno

3.2.2. Furhat

3.2.3. Milo

3.2.4. Pepper

3.2.5. KASPAR

3.2.6. NAO

3.2.7. QTrobot

3.2.8. FACE

3.3. Animal Robots

3.3.1. Robo Parrot

3.3.2. Probo

3.3.3. JARI

3.3.4. Kiwi

3.3.5. iCat

3.3.6. Paro

3.4. Toy Robots

3.4.1. Robosapien

3.4.2. DREAM

3.4.3. Lego NXT

3.5. Sensory Integration Techniques Used in Social Robots for Treating Autism

3.5.1. Multisensory Processing

3.5.2. Personalized Interventions

3.5.3. Interactive and Engaging Environments

3.5.4. Data Collection and Analysis

3.6. Comparison of Physical Features of Social Robots

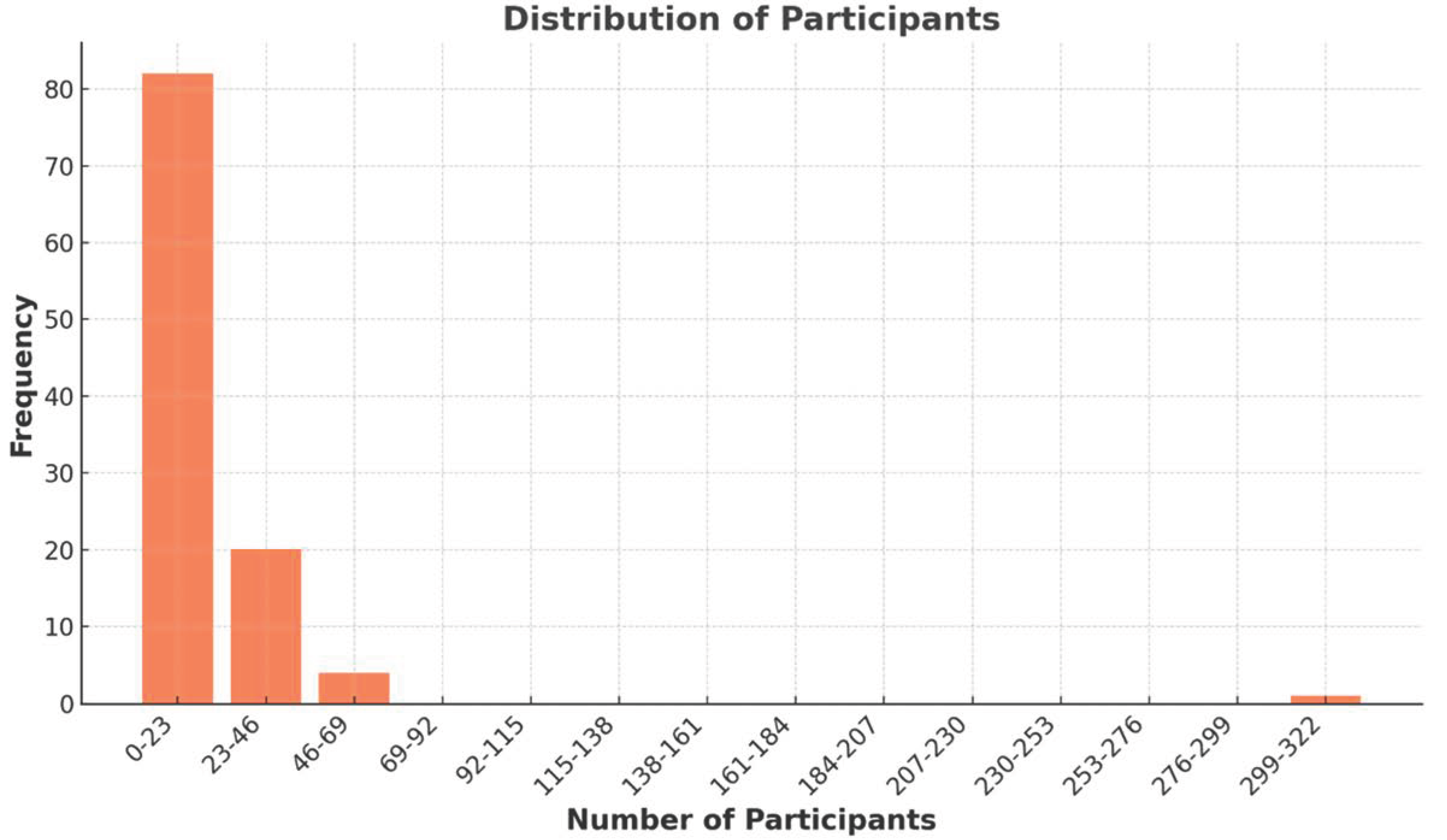

3.7. Analysis by Participant Types

3.8. Classification Based on Robot Used

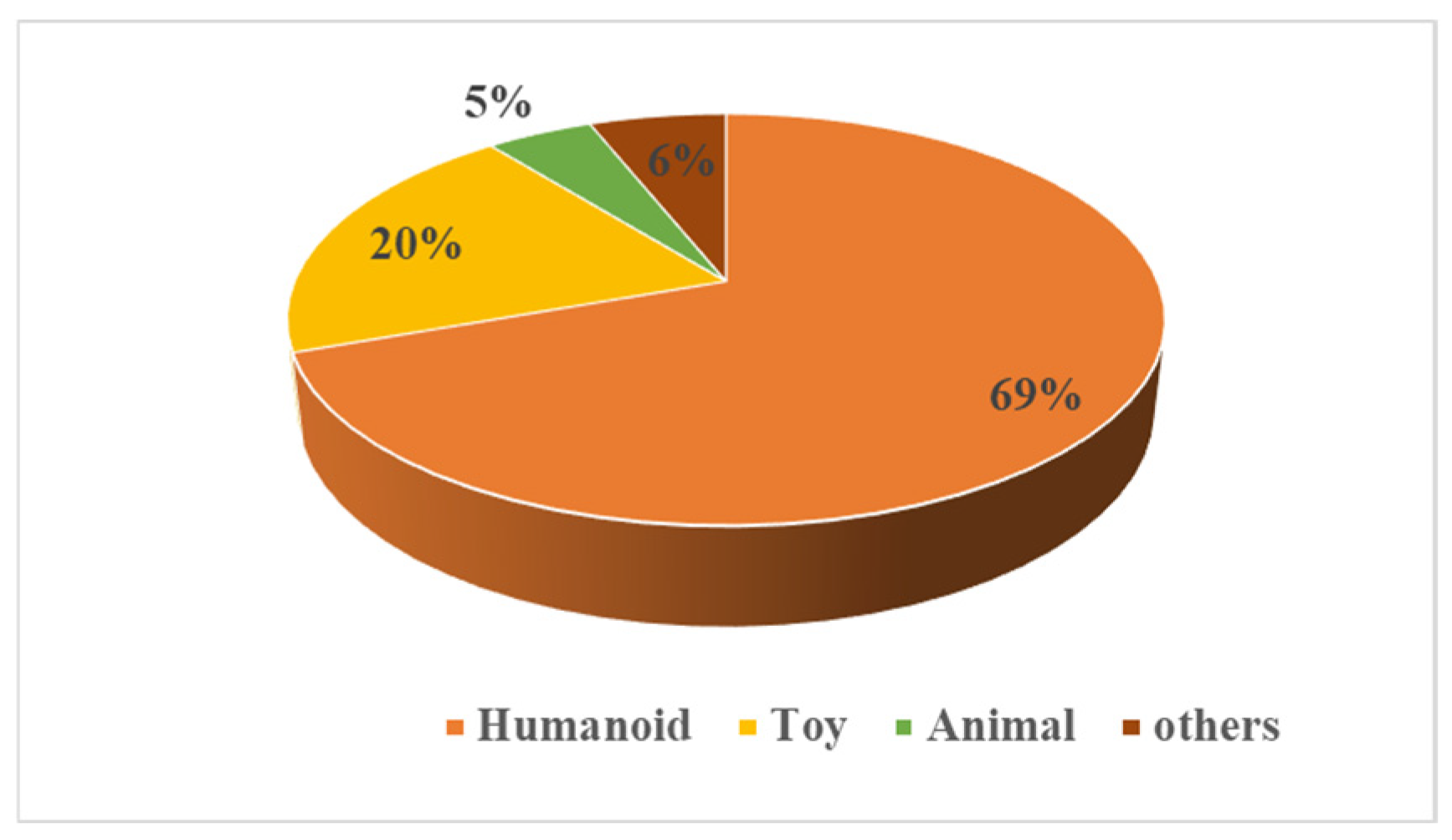

3.9. Classification Based on Robot Shape

3.10. Article Classification by Children’s Developmental Area

3.11. Article Classification Based on Communication Methodologies

3.12. Categorization Based on Target Behavior

3.12.1. Joint Attention

3.12.2. Eye Contact

3.12.3. Imitation

3.12.4. Turn-Taking

3.12.5. Emotion Recognition and Expression

3.12.6. Social Interaction

3.13. Challenges in Human–Robot Interaction and Autism Therapy

3.13.1. Variability in Child Behavior

3.13.2. Managing Repetitive Behaviors

3.13.3. Meaningful Social Interaction

3.13.4. Technological Limitations

3.13.5. Social Cues

3.13.6. Design and Engineering Challenges

3.13.7. Intensive Programming

3.13.8. Coordination Among Participants

3.13.9. Replication

4. Discussion

4.1. Critical Analysis and Limitations of the Study

4.2. Future Research

4.2.1. Expansion of Sample Size and Diversity

4.2.2. Enhancing Personalization and Adaptive AI for Therapy

4.2.3. Investigating Long-Term Efficacy and Generalization

4.2.4. Exploring Multimodal and Multi-Robot Therapy Approaches

4.2.5. Investigating Ethical, Psychological, and Safety Concerns

4.2.6. Real-World Applications and Integration into Home and School Environments

4.3. Contribution to the Field

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


- Anhuaman, A.; Granados, C.; Meza, W.; Raez, R. Cogui: Interactive Social Robot for Autism Spectrum Disorder Children: A Wonderful Partner for ASD Children. In Proceedings of the Companion of the 2023 ACM/IEEE International Conference on Human-Robot Interaction, Stockholm Sweden, 13–16 March 2023; pp. 861–864. [Google Scholar]

- So, W.C.; Cheng, C.H.; Lam, W.Y.; Huang, Y.; Ng, K.C.; Tung, H.C.; Wong, W. A robot-based play-drama intervention may improve the joint attention and functional play behaviors of chinese-speaking preschoolers with autism spectrum disorder: A pilot study. J. Autism Dev. Disord. 2020, 50, 467–481. [Google Scholar] [CrossRef]

- Bhargavi, Y.; Bini, D.; Prince, S. AI-based Emotion Therapy Bot for Children with Autism Spectrum Disorder (ASD). In Proceedings of the 2023 9th International Conference on Advanced Computing and Communication Systems (ICACCS), Coimbatore, India, 17–18 March 2023; IEEE: New York, NY, USA, 2023; pp. 1895–1899. [Google Scholar]

- Saadatzi, M.N.; Pennington, R.C.; Welch, K.C.; Graham, J.H. Small-group technology-assisted instruction: Virtual teacher and robot peer for individuals with autism spectrum disorder. J. Autism Dev. Disord. 2018, 48, 3816–3830. [Google Scholar] [CrossRef]

- Kim, E.S.; Berkovits, L.D.; Bernier, E.P.; Leyzberg, D.; Shic, F.; Paul, R.; Scassellati, B. Social robots as embedded reinforcers of social behavior in children with autism. J. Autism Dev. Disord. 2013, 43, 1038–1049. [Google Scholar] [CrossRef]

- Telisheva, Z.; Amirova, A.; Rakhymbayeva, N.; Zhanatkyzy, A.; Sandygulova, A. The Quantitative Case-by-Case Analyses of the Socio-Emotional Outcomes of Children with ASD in Robot-Assisted Autism Therapy. Multimodal Technol. Interact. 2022, 6, 46. [Google Scholar] [CrossRef]

- Melo, F.S.; Sardinha, A.; Belo, D.; Couto, M.; Faria, M.; Farias, A.; Gamboa, H.; Jesus, C.; Kinarullathil, M.; Lima, P.; et al. Project INSIDE: Towards autonomous semi-unstructured human–robot social interaction in autism therapy. Artif. Intell. Med. 2019, 96, 198–216. [Google Scholar] [CrossRef] [PubMed]

- Kostrubiec, V.; Kruck, J. Collaborative research project: Developing and testing a robot-assisted intervention for children with autism. Front. Robot. AI 2020, 7, 37. [Google Scholar] [CrossRef]

- Ali, S.; Mehmood, F.; Dancey, D.; Ayaz, Y.; Khan, M.J.; Naseer, N.; Amadeu, R.D.C.; Sadia, H.; Nawaz, R. An adaptive multi-robot therapy for improving joint attention and imitation of ASD children. IEEE Access 2019, 7, 81808–81825. [Google Scholar] [CrossRef]

- Marino, F.; Chilà, P.; Sfrazzetto, S.T.; Carrozza, C.; Crimi, I.; Failla, C.; Busà, M.; Bernava, G.; Tartarisco, G.; Vagni, D.; et al. Outcomes of a robot-assisted social-emotional understanding intervention for young children with autism spectrum disorders. J. Autism Dev. Disord. 2020, 50, 1973–1987. [Google Scholar] [CrossRef] [PubMed]

- Srinivasan, S.M.; Eigsti, I.-M.; Neelly, L.; Bhat, A.N. The effects of embodied rhythm and robotic interventions on the spontaneous and responsive social attention patterns of children with autism spectrum disorder (ASD): A pilot randomized controlled trial. Res. Autism Spectr. Disord. 2016, 27, 54–72. [Google Scholar] [CrossRef]

- Srinivasan, S.M.; Eigsti, I.-M.; Gifford, T.; Bhat, A.N. The effects of embodied rhythm and robotic interventions on the spontaneous and responsive verbal communication skills of children with Autism Spectrum Disorder (ASD): A further outcome of a pilot randomized controlled trial. Res. Autism Spectr. Disord. 2016, 27, 73–87. [Google Scholar] [CrossRef]

- Mehmood, F.; Mahzoon, H.; Yoshikawa, Y.; Ishiguro, H.; Sadia, H.; Ali, S.; Ayaz, Y. Attentional behavior of children with ASD in response to robotic agents. IEEE Access 2021, 9, 31946–31955. [Google Scholar] [CrossRef]

- Kobayashi, T.; Toyamaya, T.; Shafait, F.; Iwamura, M.; Kise, K.; Dengel, A. Recognizing words in scenes with a head-mounted eye-tracker. In Proceedings of the 2012 10th IAPR International Workshop on Document Analysis Systems, Gold Coast, Australia, 27–29 March 2012; IEEE: New York, NY, USA, 2012; pp. 333–338. [Google Scholar][Green Version]

- Chadsey, J. ‘Promoting Social Communication: Children With Developmental Disabilities From Birth to Adolescence,’ by Howard Goldstein, Louise A. Kaczmarek, and Kristina M. English (Book Review). Ment. Retard. 2003, 41, 301. [Google Scholar] [CrossRef]

- Stanton-Chapman, T.L.; Snell, M.E. Promoting turn-taking skills in preschool children with disabilities: The effects of a peer-based social communication intervention. Early Child. Res. Q. 2011, 26, 303–319. [Google Scholar] [CrossRef]

- Broz, F.; Nehaniv, C.L.; Kose, H.; Dautenhahn, K. Interaction histories and short-term memory: Enactive development of turn-taking behaviours in a childlike humanoid robot. Philosophies 2019, 4, 26. [Google Scholar] [CrossRef]

- Leekam, S. Social cognitive impairment and autism: What are we trying to explain? Philos. Trans. R. Soc. B Biol. Sci. 2016, 371, 20150082. [Google Scholar] [CrossRef] [PubMed]

- Pennisi, P.; Tonacci, A.; Tartarisco, G.; Billeci, L.; Ruta, L.; Gangemi, S.; Pioggia, G. Autism and social robotics: A systematic review. Autism Res. 2016, 9, 165–183. [Google Scholar] [CrossRef]

- Bird, G.; Leighton, J.; Press, C.; Heyes, C. Intact automatic imitation of human and robot actions in autism spectrum disorders. Proc. R. Soc. B Biol. Sci. 2007, 274, 3027–3031. [Google Scholar] [CrossRef]

- Fuentes-Alvarez, R.; Morfin-Santana, A.; Ibañez, K.; Chairez, I.; Salazar, S. Energetic optimization of an autonomous mobile socially assistive robot for autism spectrum disorder. Front. Robot. AI 2023, 9, 372. [Google Scholar] [CrossRef] [PubMed]

- Mottron, L.; Bzdok, D. Autism spectrum heterogeneity: Fact or artifact? Mol. Psychiatry 2020, 25, 3178–3185. [Google Scholar] [CrossRef]

- Kozima, H.; Nakagawa, C. Interactive robots as facilitators of childrens social development. In Mobile Robots: Towards New Applications; Citeseer; I-Tech Education and Publishing: Vienna, Austria, 2006. [Google Scholar]

- Wang, C.-P. Training children with autism spectrum disorder, and children in general with AI robots related to the automatic organization of sentence menus and interaction design evaluation. Expert Syst. Appl. 2023, 229, 120527. [Google Scholar] [CrossRef]

- Hume, K.; Steinbrenner, J.R.; Odom, S.L.; Morin, K.L.; Nowell, S.W.; Tomaszewski, B.; Szendrey, S.; McIntyre, N.S.; Yücesoy-Özkan, S.; Savage, M.N. Evidence-based practices for children, youth, and young adults with autism: Third generation review. J. Autism Dev. Disord. 2021, 51, 4013–4032. [Google Scholar] [CrossRef]

- Sandoval, J.; Gutiérrez, B.M.A.; Jiménez-Pérez, L.; Robles, S.R. Integration of school technologies for language learning for students with autism spectrum disorder: A systematic review. Int. J. Technol. Knowl. Soc. 2024, 20, 23. [Google Scholar] [CrossRef]

- Lorenzo, G.; Lledó, A.; Pérez-Vázquez, E.; Lorenzo-Lledó, A. Action protocol for the use of robotics in students with Autism Spectrum Disoders: A systematic-review. Educ. Inf. Technol. 2021, 26, 4111–4126. [Google Scholar] [CrossRef]

- Ivanova, G.; Ivanov, A.; Kirilova, M. Design and Development of a 3D Social Mobile Robot with Artificial Intelligence for Teaching with Assistive Technology. In Proceedings of the 2025 24th International Symposium INFOTEH-JAHORINA (INFOTEH), East Sarajevo, Bosnia and Herzegovina, 19–21 March 2025; IEEE: New York, NY, USA, 2025; pp. 1–6. [Google Scholar]

- Jung, Y.; Jung, G.; Jeong, S.; Kim, C.; Woo, W.; Hong, H.; Lee, U. ‘Enjoy, but Moderately!’: Designing a Social Companion Robot for Social Engagement and Behavior Moderation in Solitary Drinking Context. Proc. ACM Hum Comput. Interact. 2023, 7, 1–24. [Google Scholar] [CrossRef]

- Richardson, K.; Coeckelbergh, M.; Wakunuma, K.; Billing, E.; Ziemke, T.; Gomez, P.; Vanderborght, B.; Belpaeme, T. Robot enhanced therapy for children with autism (DREAM): A social model of autism. IEEE Technol. Soc. Mag. 2018, 37, 30–39. [Google Scholar] [CrossRef]

- da Silva, N.I.; Lebrun, I. Autism spectrum disorder: History, concept and future perspective. J. Neurol. Res. Rev. Rep. 2023, 173, 5–8. [Google Scholar] [CrossRef]

| Robot | Height | Weight | Processor | DoF | Sensors |
|---|---|---|---|---|---|
| Pepper | 120 cm | 28 kg | Intel Atom E3845 | 19 | 2 HD cameras, 3D sensors, 4 microphones, 2 touch sensors, 2 sonars, 6 lasers, 3 bumper sensors, gyroscope |
| NAO | 58 cm | 5.48 kg | Intel Atom 1.6 GHz | 25 | 2 cameras, 4 microphones, 4 sonars, gyroscope, touch sensors, IMU |
| Jibo | 28 cm | 3 kg | ARM Cortex-A8 | 3 | Touch sensors, cameras, microphones |
| Paro | 57 cm | 2.7 kg | 32-bit RISC | 7 | Light, sound, touch, temperature sensors, microphone arrays |
| Kuri | 50 cm | 6.3 kg | ARM-based processor | 4 | HD camera, microphones, speakers |
| Temi | 100 cm | 11 kg | ARM-based processor | 3 | Cameras, depth sensors, lidar, microphones, touch sensors |
| QTrobot | 60 cm | 5 kg | Intel® NUC i5/i7 PC | 4 | Cameras, microphones |
| Zeno | 63.5 cm | 6.5 kg | Dual-core 1.5 GHz ARM Cortex A | 36 | Cameras, 3 microphones, 2 IR, 2 bumper, gyroscope, accelerometer |
| Furhat | 41 cm | 3.5 kg | Intel Core i5-7260U | 3 | 1080p RGB camera, stereo microphones, RFID reader |
| Sophia | 167 cm | 20 kg | Intel i7 3 GHz with GPU | 83 | 3 cameras, force sensors, touch sensors, microphone, speaker, IMU |
| Milo | 60 cm | 5 kg | VIA Mini-ITX, 600 MHz | 13 | Facial recognition, microphones, touch sensors |
| RoboParrot | 50 cm | 1.5 kg | ATmega16 | - | Cameras, microphones, touch sensors |
| Probo | 66 cm | 5.7 kg | Intel Core i3/i5 | 20 | Cameras, microphones, touch sensors |
| Zoomer | 26 cm | 0.8 kg | Microcontroller | - | Microphone, speaker |
| JARI | 60 cm | 15 kg | Raspberry Pi 3 B+ and Arduino Nano | 3 | Camera, microphone, ultrasonic sensor |
| Charli | 141 cm | 12.1 kg | Intel Atom 1.6 GHz | 25 | Camera, gyroscope, accelerometer, 2 microphones |
| LEGO Mindstorms NXT | - | 2.1 kg | 32-bit microprocessor | 3 | 2 touch sensors, 1 ultrasonic sensor, 1 color/light sensor |
| Keepon | 27.5 cm | 1.5 kg | Microcontrollers | 4 | Cameras, 2 microphones, array of touch sensors |
| Pleo | 17.8 cm | 1.6 kg | 7 CPUs | 15 | Color camera, IR sensor, temperature sensor, RFID reader, 2 microphones, foot, orientation, and touch sensors |
| Paro | 16 cm | 2.7 kg | 32-bit RISC processors | 7 | Light sensor, temperature sensor, tactile sensors, microphone array |
| Aibo | 29.3 cm | 2.2 kg | Qualcomm Snapdragon 820, 64-bit quad-core | 22 | 2 cameras, time-of-flight sensors, 2 IR sensors, 4 microphones, capacitive touch sensors, 2 motion and 4 paw contact sensors |
| KASPAR | 55 cm | 15 kg | Onboard mini-PC | 17 | Cameras in eyes, force-sensing resistor or capacitive touch sensors |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Nadeem, M.; Barakat, J.M.H.; Daas, D.; Potams, A. A Review of Socially Assistive Robotics in Supporting Children with Autism Spectrum Disorder. Multimodal Technol. Interact. 2025, 9, 98. https://doi.org/10.3390/mti9090098
