1. Introduction

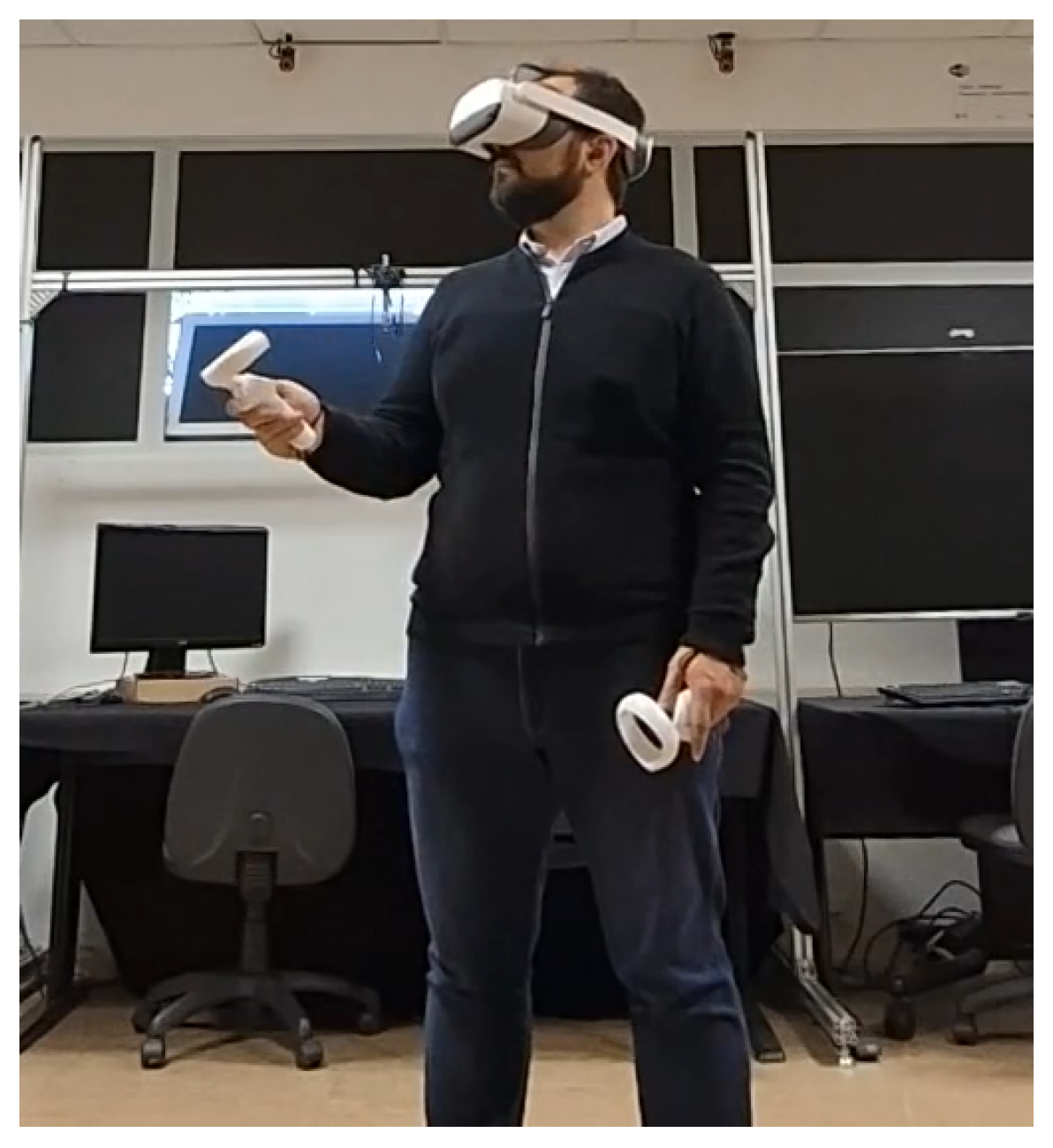

With affordable HMDs now incorporating eye tracking, effective gaze-dominant interfaces have become crucial for VR environments (see Figure 1). Multitasking in VR poses challenges, as traditional gaze- or hand-based controls often compromise precision or limit the freedom to look around. We present a novel gaze–hand steering technique that addresses these issues with eye tracking and hand pointing.

In VR, navigation techniques are essential for effective user interaction, as they enable users to reach the locations of the objects they will select and manipulate within the virtual environment. Wayfinding is the component of navigation that helps users understand their position and plan their paths, often through visual cues or maps. Travel, the motor component of navigation, relies on specific techniques that let users control the speed and direction of their viewpoint as it moves through virtual space. Selection and manipulation are frequently treated separately from navigation, on the assumption that the user finishes navigating before starting to manipulate; however, it is not uncommon for applications to demand selection and manipulation during locomotion, that is, during travel. Selection, for example, lets users choose objects or destinations with gaze, gestures, or controllers while in motion. Together, these elements let users explore and interact seamlessly within VR, enhancing immersion and spatial awareness.

Room size often limits the physical movement available to a user during VR locomotion. Techniques such as joystick-based control, gaze-directed movement, and walking in place have been employed to address this constraint and enhance immersion without requiring large physical spaces. One relatively successful technique is Magic Carpet [1], which applies a flying-carpet metaphor to separate direction and speed control into two phases. It works with speed control methods such as a joystick, a speed circle, and walking in place, combined with head gaze as the pointing technique for direction control. This combines the naturalness of having a ground reference with the speed of flying. However, techniques relying solely on gaze direction for control have limitations. While gaze-oriented movement can provide precise and effortless steering [2], it can also restrict users’ ability to explore the environment freely, as gaze-based navigation often leads to unintentional actions, a phenomenon known as the “Midas Touch” problem [3]. Users cannot look around without unintentionally changing direction, which reduces their overall navigation freedom.

Hand-pointing techniques, in turn, offer precision but prevent users from simultaneously using their hands for secondary tasks, such as selection or manipulation, which reduces their practicality in multitasking scenarios. Adding a trigger to switch between navigation pointing and selection or manipulation pointing can make interactions cumbersome and increase the likelihood of errors.

In response to these limitations, our study proposes a technique that combines eye gaze and hand pointing to enhance speed control and steering precision while supporting multitasking and offering a free-look capability. This combined technique builds on the strengths of gaze- and hand-directed methods [4,5,6,7,8], enabling more seamless navigation that retains performance even during complex or multitasking activities.

Hence, we investigate the hypothesis that combining gaze control techniques with pointing techniques can significantly improve speed and steering control. If so, performance should remain unaffected when users explore the environment or perform other tasks while navigating, and travel in the virtual environment should be more accurate, since gaze-oriented steering is considered the most efficient approach when more complex movements are involved [9,10].

We therefore focus on answering the following research question: How does combining gaze-directed steering with hand-pointing techniques allow exploration and multitasking without compromising performance?

To answer this question, we conducted an experimental user study to assess the user experience and travel efficiency achieved with the proposed technique. We evaluate our method in combination with two different speed control techniques, joystick and speed circle, and introduce new tasks that exercise the free-look capability and the selection interactions that support multitasking.

While inspired by prior flying techniques such as Magic Carpet [1], our approach introduces gaze–hand steering that supports multitasking during travel, such as popping balloons or interacting with virtual objects, as well as a free-look mode during travel. This design lets users look around freely and use their hands for other tasks while traveling, which is not possible with techniques that rely on continuous hand or gaze control.

2. Related Work

Travel is a critical task in VR, encompassing directional control and movement through virtual spaces. Steering-based methods are commonly used for VR navigation; they let users control the direction of movement as if steering a vehicle. However, traditional approaches that rely solely on gaze or hand input often suffer from limitations such as physical fatigue or imprecise control. Recent studies address these limitations by integrating gaze for quick directional indications with hand movements for refined speed and direction adjustments [4]. Despite these innovations, there is still a need for techniques that allow users to navigate while multitasking without compromising control precision or causing physical discomfort. Previous work has focused primarily on selection or on navigation, but has not adequately addressed how users can multitask during flight locomotion.

Medeiros et al. [1] studied flight locomotion by dividing the process into direction indication and speed control, using a floor proxy with a full-body representation. In the direction study, three techniques (elevator+steering, gaze-oriented steering, and hand steering) were evaluated with 18 participants, who provided feedback through questionnaires assessing preference, comfort, embodiment, and immersion. The elevator+steering technique enabled horizontal navigation through gaze and vertical navigation via buttons. The gaze-oriented and hand-oriented techniques controlled direction through head rotation and dominant-hand movement, respectively. For the speed study, they explored three techniques: joystick, speed circle, and walking in place (WIP). The joystick controlled speed with an analog stick. The speed circle used the body as an analog stick, derived from a virtual circle metaphor [11,12]. The last technique, WIP, adapted from Bruno et al. [13], used knee movement to reach a maximum velocity of 5 m/s.

The experiment involved navigating through a city scene from the Unity3D Asset Store, with rings indicating the direction of movement. The first experiment tested steering control with speed controlled by a button, and the second focused on speed control, using a hand technique for direction. In the first experiment, gaze- and hand-oriented steering produced similar results, with gaze yielding shorter path lengths and total times and the hand technique yielding fewer collisions. In the second experiment, only the hand technique was used for direction control because the gaze method limited visual exploration.

Lai et al. [14] investigated the effect of gaze-directed steering on user comfort in virtual reality environments. Previous research has been inconclusive about which technique is best: some studies found gaze-directed steering better than hand-directed steering [9,15], while others found the opposite [16,17]. Lai et al. compared gaze-, hand-, and torso-directed steering and found that gaze-directed methods significantly reduced simulator sickness symptoms such as nausea and dizziness. Zeleznik et al. [18] explored gaze in virtual reality interactions, testing whether gaze-oriented steering provides benefits over existing techniques. They argued that hand input in VR interactions is often redundant with gaze information, so relying on gaze could reduce arm fatigue and improve interactions. Focusing on terrain navigation, they identified speed control with an analog joystick as a main problem and offered fixed speeds. Another issue was the inability to orbit around a region of interest, so they proposed using gaze to lock onto areas of interest and orbiting with a joystick or tablet gestures.

Lai et al. [19] replicated a dual-task methodology to compare steering-based techniques with target-based ones, finding that steering techniques afford greater spatial awareness owing to continuous motion. However, steering techniques may increase cybersickness [20]. VR developers are advised to offer gaze-directed steering as an intermediate option between novice-friendly teleportation and expert-friendly hand-directed steering.

Zielasko et al. [21] highlighted the advantages of seated VR for ergonomics and long-term use. They found torso and gaze steering effective for direction control, whereas leaning caused fatigue and cybersickness. However, gaze- or view-directed steering makes it difficult to inspect the environment independently of the movement direction, commonly called the “Midas Touch” problem [3], in which gaze interactions trigger unintended actions. Tregillus et al. [22] addressed this by introducing head-tilt motions for independent “free-look” control.

Combining gaze with other input modalities, particularly hand gestures, has emerged as a way to overcome the limitations of gaze-only interaction. Techniques that use gaze for initial selection and confirm actions with a secondary hand gesture have effectively reduced the “Midas Touch” effect by decoupling selection from confirmation [7,8]. Lystbæk et al. [7] introduced gaze–hand alignment, in which the alignment of gaze and hand inputs triggers selection, offering a technique suited to tasks such as menu selection in AR. This technique leverages the natural coordination of gaze and hand, allowing users to pre-select a target with their gaze and confirm it by aligning their hand, thereby reducing physical strain and increasing interaction speed [6]. Recent advances, such as the study by Chen et al. [5], extend this approach by combining gaze rays with controller rays, disambiguating target selection to reduce selection time and improve accuracy. Similarly, Kang et al. [4] combine gaze for initial direction setting with hand-based adjustments, allowing users to control navigation speed and direction without unwanted activations. In addition, HMDs can affect spatial awareness and task performance, especially when body representation and perspective are manipulated [23].

The integration of gaze and hand inputs has brought significant improvements over single-modality systems, providing more natural, efficient, and accurate interaction in VR environments. Despite these advances, multitasking during navigation, particularly during flight locomotion, needs further development. Our approach extends the gaze–hand-directed steering techniques explored in previous studies, e.g., Chen et al. [5], Kang et al. [4], and Medeiros et al. [1], introducing a mechanism that supports flight locomotion and lets users navigate efficiently without losing orientation while multitasking.

3. Our Gaze–Hand Steering Mechanism

Hand pointing is an affordable and convenient way to indicate the direction of travel. At the same time, many HMDs now incorporate eye-tracking capabilities, and several eye-dominant techniques are prevalent. For example, industry is adopting gaze as the default dominant input paradigm, as with the high-end Apple Vision Pro (Apple, Cupertino, CA, USA) consumer device. However, a major constraint of eye-gaze-directed techniques is the free-look or “Midas Touch” problem [3,22], which makes it difficult for users to look around without affecting the direction of movement. To address this, we implemented a mechanism that switches between free look (exploring the environment with gaze without changing the movement direction) and directed look (gaze changes the movement direction), allowing multitasking while traveling. This mechanism combines eye-tracking gaze techniques with hand steering, using the two vectors together to determine the direction of travel. Inspired by the “gaze as a helping hand” study [18], we combine eye gaze and hand pointing to lock the direction and let users explore while maintaining their movement direction. The approach uses an invisible-string metaphor: the direction locks when the eye-gaze ray meets the hand-pointing target, so users can keep moving while looking around.

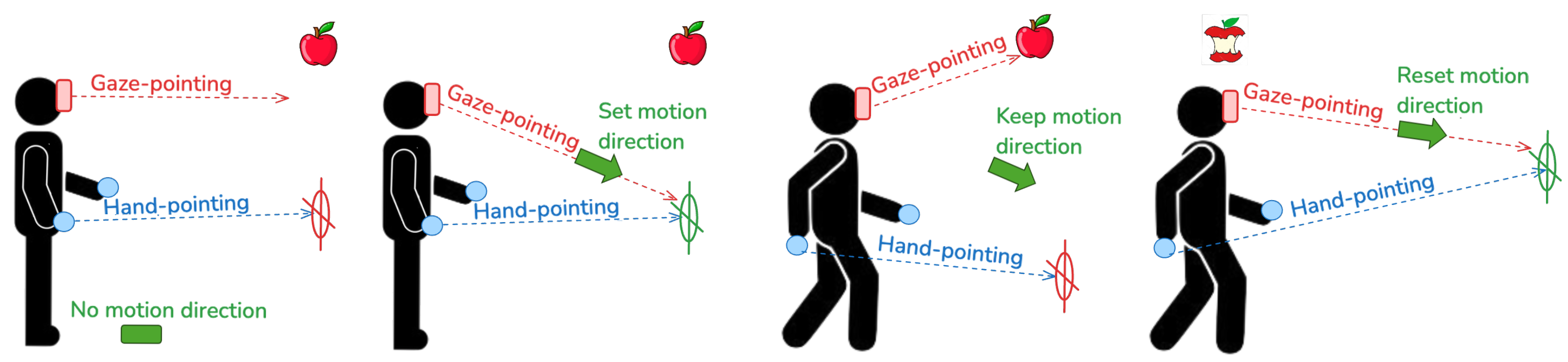

3.1. Pointing Direction

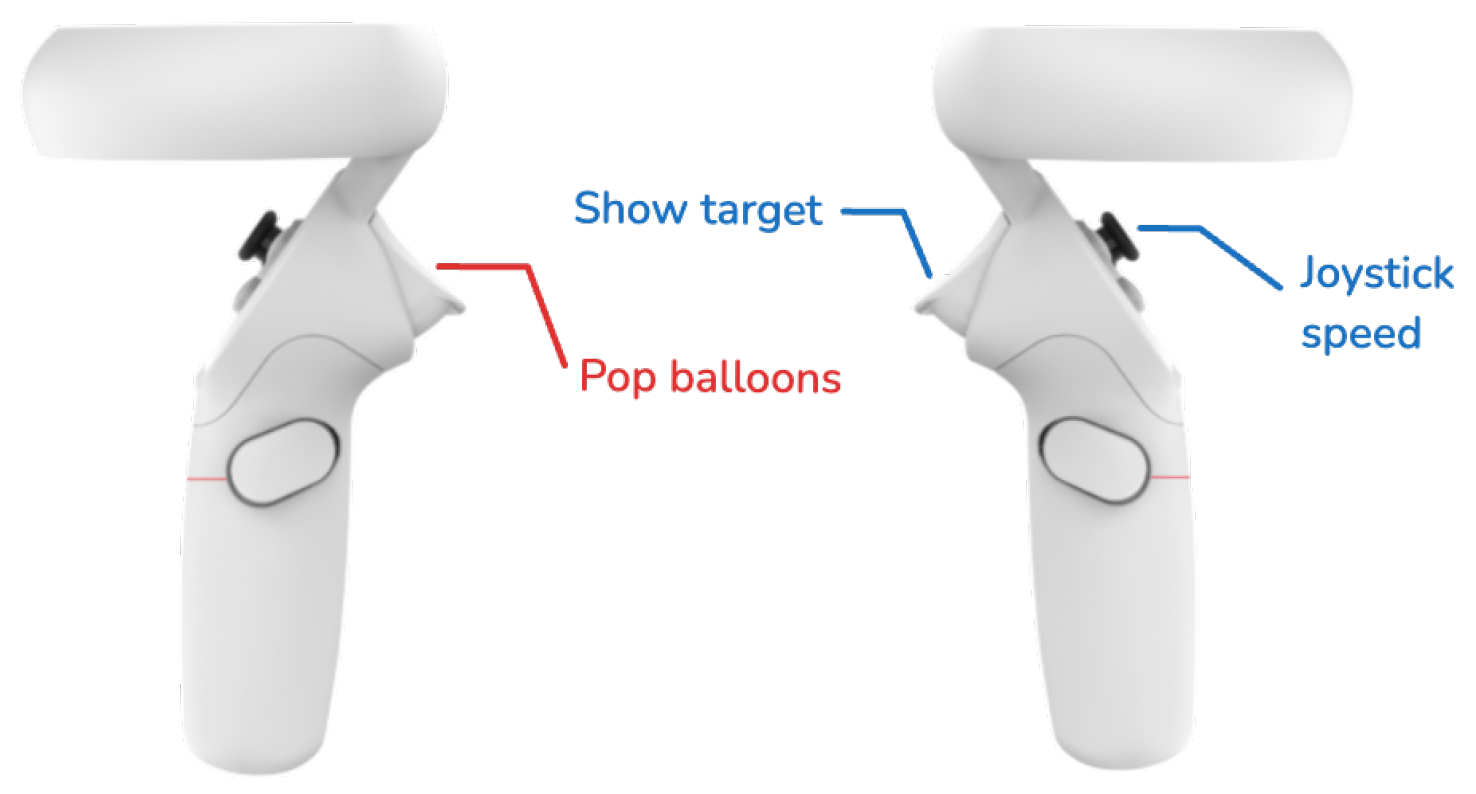

To determine the travel direction, the system combines hand pointing and eye gaze. At the start, the direction is null and no travel is possible. Pressing the dominant-hand trigger displays a target object 1 m wide at a distance of 5 m along the hand-pointing direction. A ray is then cast along the eye-gaze direction, and if this ray intersects the hand-driven target object, a new travel direction is set. The chosen direction is that of the eye gaze, which provides a more egocentric definition of the direction to follow. Once the direction is locked, the user can release the trigger and look freely in any direction without affecting movement, entering ‘free-look’ mode. Eye gaze and hands remain available for other tasks, such as selecting or manipulating objects, at any time. A new direction is set only when the trigger is pressed again and gaze and hand align, reentering ‘directed look’ mode. This mechanism is illustrated in Figure 1, which shows how gaze–hand alignment enables navigation while preserving freedom for multitasking.
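The alignment rule above can be sketched as a small per-frame update. This is a minimal Python sketch under stated assumptions, not the authors’ Unity implementation: the 1 m wide target is modeled as a sphere of radius 0.5 m, vectors are plain (x, y, z) tuples in metres, and the names `GazeHandSteering` and `ray_hits_sphere` are hypothetical. Only the 5 m target distance, the null initial direction, and the rule that the gaze direction (not the hand direction) becomes the travel direction come from the text.

```python
import math
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]

TARGET_DISTANCE_M = 5.0  # target shown 5 m along the hand ray (from the text)
TARGET_RADIUS_M = 0.5    # 1 m wide target, modeled here as a sphere (assumption)


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _normalize(v: Vec3) -> Vec3:
    length = math.sqrt(_dot(v, v))
    if length == 0.0:
        raise ValueError("direction vector must be non-zero")
    return (v[0] / length, v[1] / length, v[2] / length)


def ray_hits_sphere(origin: Vec3, unit_dir: Vec3, center: Vec3, radius: float) -> bool:
    """True if a ray from origin along unit_dir passes within radius of center."""
    to_center = (center[0] - origin[0], center[1] - origin[1], center[2] - origin[2])
    t = _dot(to_center, unit_dir)  # distance along the ray to the point of closest approach
    if t < 0.0:
        return False  # the target lies behind the viewer
    closest_sq = _dot(to_center, to_center) - t * t
    return closest_sq <= radius * radius


class GazeHandSteering:
    """Hypothetical sketch of gaze-hand direction locking (directed look vs. free look)."""

    def __init__(self) -> None:
        self.direction: Optional[Vec3] = None  # null at start: no travel possible

    def update(self, trigger_held: bool, hand_origin: Vec3, hand_dir: Vec3,
               eye_origin: Vec3, gaze_dir: Vec3) -> Optional[Vec3]:
        if trigger_held:
            hand_dir = _normalize(hand_dir)
            gaze_dir = _normalize(gaze_dir)
            target = (hand_origin[0] + TARGET_DISTANCE_M * hand_dir[0],
                      hand_origin[1] + TARGET_DISTANCE_M * hand_dir[1],
                      hand_origin[2] + TARGET_DISTANCE_M * hand_dir[2])
            if ray_hits_sphere(eye_origin, gaze_dir, target, TARGET_RADIUS_M):
                # Directed look: gaze meets the hand target, so adopt the gaze direction.
                self.direction = gaze_dir
        # Trigger released or no alignment: the locked direction is kept (free look).
        return self.direction
```

Because the stored direction is only overwritten inside the trigger-held branch, and only when the gaze ray meets the hand target, looking elsewhere after release leaves travel unaffected, which is the free-look behavior described above.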

3.2. Controlling Speed

Once a direction is set, travel speed determines the amount of motion in that direction. The literature offers many solutions; we tested two simple techniques to control speed: the joystick and the speed circle. The joystick uses the analog thumbstick to set a forward or backward velocity smoothly. Pushing the stick fully sets the maximum speed of 5 m/s, the same value used by Magic Carpet (see Figure 2). The speed circle, in turn, uses the user’s body as a joystick: leaning forward or backward sets a speed proportional to the amount of leaning (see Figure 3). Returning to the initial neutral position sets the speed to zero.
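A minimal sketch of the joystick mapping follows. It assumes a linear map from the thumbstick’s forward/backward axis (normalized to [-1, 1]) to a signed speed capped at the 5 m/s stated above, plus a simple per-frame position update along the locked direction. The function names are hypothetical, and the text does not specify the exact response curve.

```python
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]

MAX_SPEED_MPS = 5.0  # speed at full stick deflection (from the text)


def joystick_speed(stick_y: float) -> float:
    """Linearly map the stick's forward/backward axis in [-1, 1] to a signed speed (assumed curve)."""
    stick_y = max(-1.0, min(1.0, stick_y))  # clamp out-of-range readings
    return stick_y * MAX_SPEED_MPS


def advance(position: Vec3, direction: Optional[Vec3], speed: float, dt: float) -> Vec3:
    """Move the viewpoint along the locked unit direction for one frame of dt seconds."""
    if direction is None:
        return position  # no direction set yet, so no travel
    step = speed * dt
    return (position[0] + direction[0] * step,
            position[1] + direction[1] * step,
            position[2] + direction[2] * step)
```

Keeping speed separate from direction mirrors the two-phase split described above: either speed technique can feed `advance` without changing how the direction is chosen.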

3.3. Multitasking

Because we target multitasking, the interface includes other simple actions. Eye gaze is the dominant input for selection: while it is used to select the hand-defined target for travel direction, it is also used to select other objects. One example is pointing at and popping balloons. This action is triggered when the eye gaze hits a balloon and the non-dominant-hand trigger is pulled. To shoot a target accurately, users needed to look away from the directional scope and explore the environment to locate the targets. Upon identifying a target, they had to press the trigger on the controller in their non-dominant hand only while their line of sight intersected the target object. This setup allowed users to multitask, managing navigation and target acquisition simultaneously.
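The balloon interaction can be sketched as a gaze ray test gated by the non-dominant-hand trigger. This Python sketch assumes spherical balloons, a unit-length gaze direction, and that the nearest balloon along the gaze ray is popped when several overlap; `Balloon`, `gaze_hit_distance`, and `try_pop` are hypothetical names, and a real application would typically react only on the frame in which the trigger is first pressed.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Balloon:
    center: Vec3
    radius: float
    popped: bool = False


def gaze_hit_distance(origin: Vec3, unit_dir: Vec3, center: Vec3, radius: float) -> Optional[float]:
    """Distance along a unit-length gaze ray to the near surface of a sphere, or None if missed."""
    oc = [c - o for c, o in zip(center, origin)]
    t = sum(a * b for a, b in zip(oc, unit_dir))  # closest approach along the ray
    closest_sq = sum(a * a for a in oc) - t * t
    if t < 0.0 or closest_sq > radius * radius:
        return None
    return t - math.sqrt(radius * radius - closest_sq)


def try_pop(balloons: List[Balloon], eye: Vec3, gaze_dir: Vec3,
            non_dominant_trigger: bool) -> Optional[Balloon]:
    """Pop the nearest unpopped balloon under the gaze ray, but only while the trigger is pulled."""
    if not non_dominant_trigger:
        return None  # gaze alone never pops anything, avoiding Midas Touch activations
    best, best_distance = None, math.inf
    for balloon in balloons:
        if balloon.popped:
            continue
        distance = gaze_hit_distance(eye, gaze_dir, balloon.center, balloon.radius)
        if distance is not None and distance < best_distance:
            best, best_distance = balloon, distance
    if best is not None:
        best.popped = True
    return best
```

Since the steering trigger and the popping trigger sit on different hands, this selection path never touches the locked travel direction.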

Figure 2. User navigation using a joystick.


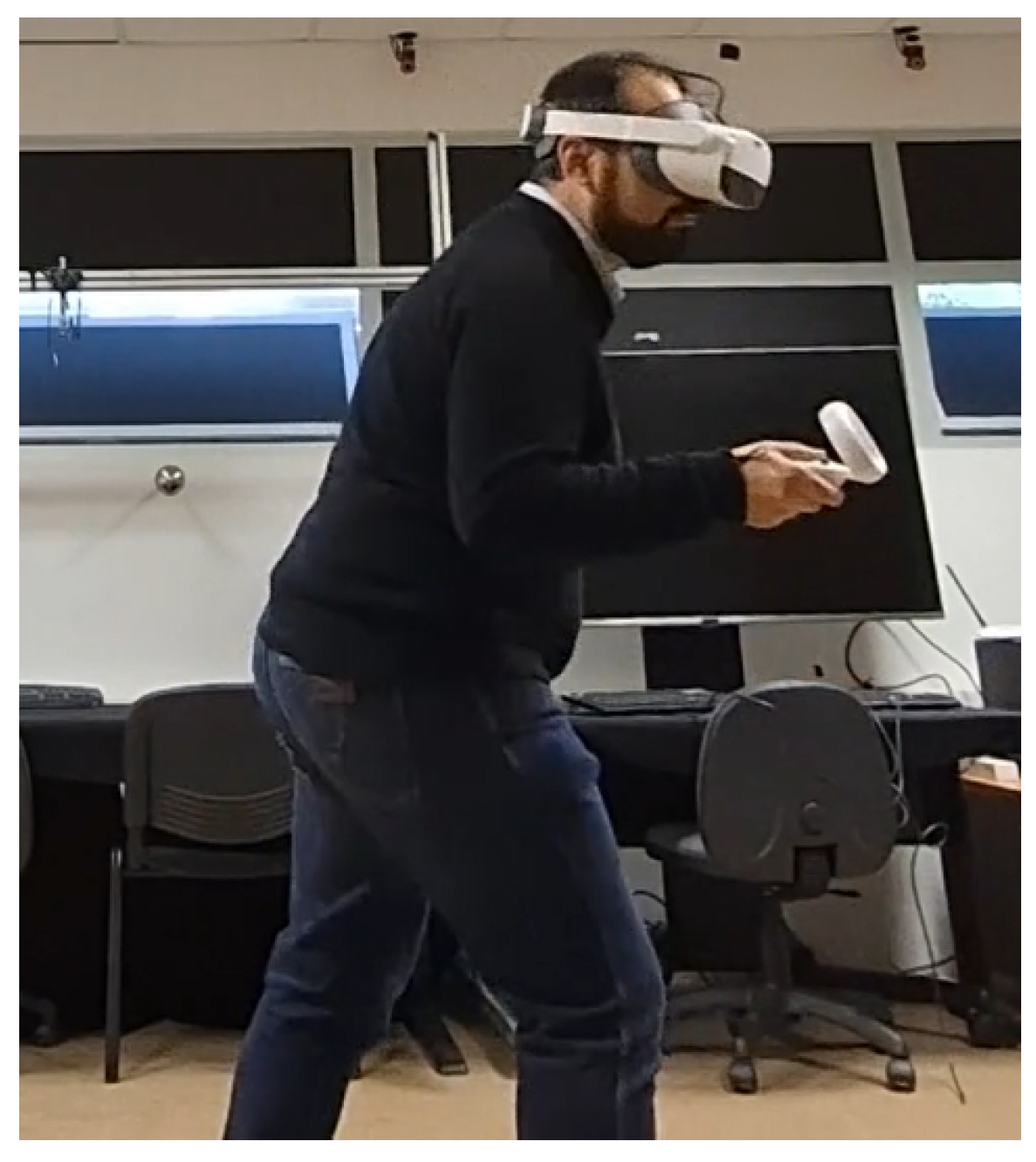

Figure 3. Navigation control with speed circle.


Figures 4 and 5 summarize the controllers and actions implemented to support the intended multitasking.

3.4. Implementation

We implemented the above techniques for the PICO Neo 3 Pro EYE VR headset (ByteDance, Beijing, China), which supports eye tracking, using its two included hand controllers. The eye-tracking API used with this device is the Tobii Extended Reality toolkit (Tobii AB, Danderyd, Sweden). However, any similar contemporary VR kit, such as the Meta Quest Pro, could be used instead.

We used Unity version 2021.3 for the virtual environment and retained most of the assets and design elements from Magic Carpet [1] to enable direct comparative evaluation. One difference is that we did not include the walking-in-place technique, owing to its subpar performance results, and therefore did not use the trackers it required. Another is that Magic Carpet was based on a CAVE, whereas we used an HMD. We therefore also refined the speed circle technique for HMD environments. Our redesign aimed to minimize user movement and eliminate confusion about the center of the circle. To do this, the speed circle was positioned at waist level and resized to dimensions of

m, aligned with the user’s physical space, which prevents abrupt accelerations during sharp turns. Capping the maximum speed at 5 m/s and making the null-velocity zone easier to reach further stabilize control and prevent unintended movements. We also added graphical elements: the semicircles were recolored and marked with plus and minus symbols to indicate the areas that increase or decrease velocity. Leaning or moving toward the green semicircle, marked with a plus sign, increases velocity, while moving toward the red semicircle, marked with a minus sign, decreases it.
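The redesigned speed circle can be sketched as a signed lean measurement with a null zone and a speed cap. In this Python sketch, the user’s tracked horizontal position is projected onto a forward axis; leaning into the plus (green) half yields a positive speed and into the minus (red) half a negative one. The 5 m/s cap comes from the text, but the circle radius, the null-zone radius, the linear ramp between them, and the treatment of negative values as backward travel are illustrative assumptions, not the study’s values.

```python
import math
from typing import Tuple

Vec2 = Tuple[float, float]  # horizontal floor coordinates (x, z) in metres

MAX_SPEED_MPS = 5.0    # speed cap stated in the text
CIRCLE_RADIUS_M = 0.5  # hypothetical circle radius (illustrative value)
NULL_ZONE_M = 0.1      # hypothetical radius of the null-velocity zone


def speed_circle_speed(user: Vec2, center: Vec2, forward: Vec2) -> float:
    """Signed speed from how far the user leans toward the plus (forward) or minus half of the circle."""
    norm = math.hypot(forward[0], forward[1])
    if norm == 0.0:
        raise ValueError("forward axis must be non-zero")
    # Project the user's offset from the circle center onto the forward axis.
    lean = ((user[0] - center[0]) * forward[0] + (user[1] - center[1]) * forward[1]) / norm
    if abs(lean) <= NULL_ZONE_M:
        return 0.0  # inside the null zone: zero velocity
    # Ramp linearly from 0 at the null-zone edge to the cap at the circle edge, then saturate.
    fraction = min(1.0, (abs(lean) - NULL_ZONE_M) / (CIRCLE_RADIUS_M - NULL_ZONE_M))
    return math.copysign(fraction * MAX_SPEED_MPS, lean)
```

The null zone gives users a forgiving region in which to stop, and the saturation reflects the fixed maximum speed; sideways offsets contribute nothing because only the forward projection is used.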

As for the experimental tasks, which are discussed further in Section 4, we adjusted visibility to keep all rings visible, minimizing wayfinding difficulties so as to focus on eye-tracking performance.

4. Evaluation

We designed and conducted a user study to assess and characterize the effectiveness and efficiency of our steering locomotion method. We seek to establish the feasibility of using eye-tracking steering and hand-pointing gestures for navigation while letting users explore the environment freely. We then compare our results with those of the Magic Carpet technique [1].

We anticipate effects on several interaction metrics, so we measured total task completion time, the number of collisions, path length traveled, presence and workload scores, and cybersickness. We also expect that the free-look capability enabled by eye tracking will not adversely affect flying or completion times. In particular, we expect flying and idle times to remain consistent across tasks, which would indicate that free-look functionality does not hinder overall performance. Additionally, we anticipate that users will perform simultaneous tasks, traversing rings and destroying targets, without degrading performance or experience relative to data in the literature.

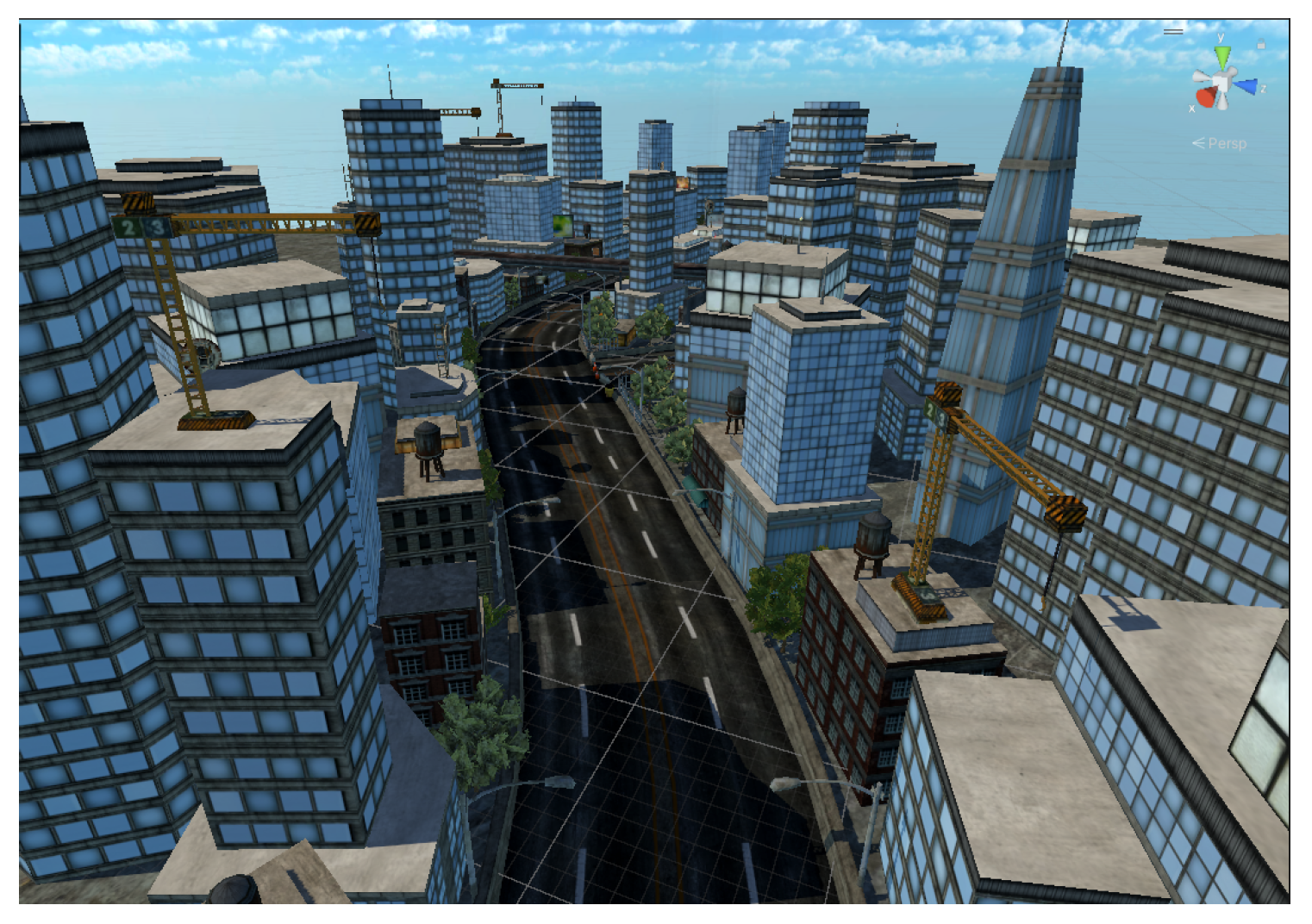

4.1. Environment

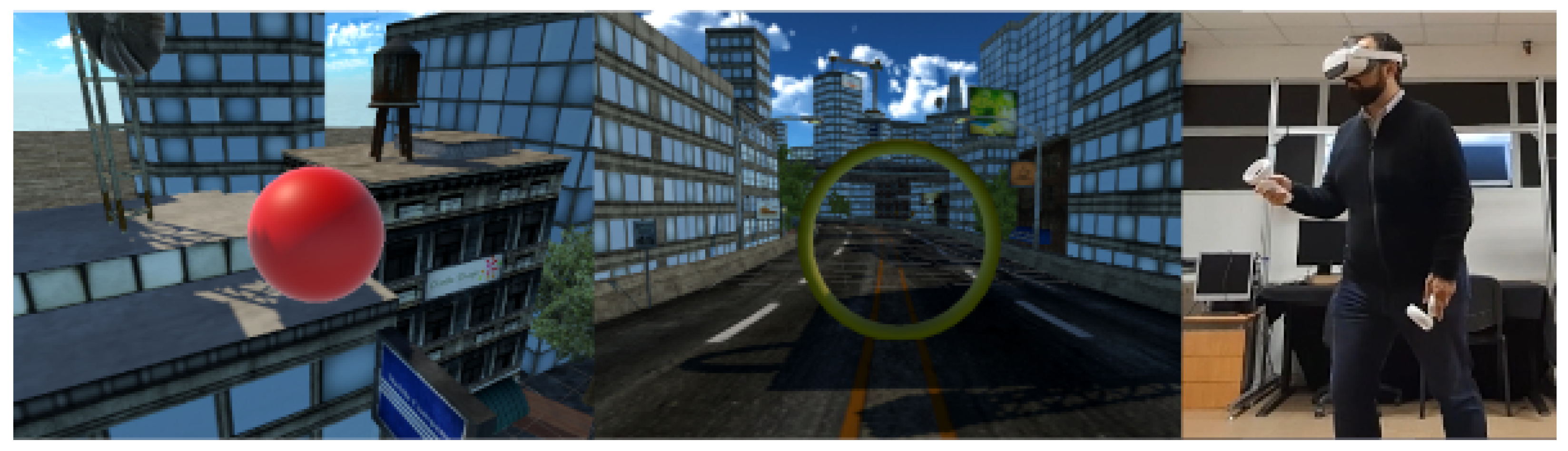

The virtual environment for this study is the same as that of the Magic Carpet study, to facilitate direct comparison. It is based on a city scene obtained from the Unity3D Asset Store, with a path of rings through which the user must fly, as seen in Figures 6 and 7. The scene was modified to remove visual clutter and present a smoother path for testing. Interaction was implemented with Unity’s XR Interaction Toolkit, a framework for implementing virtual and augmented reality functionality in Unity projects.

4.2. Conditions

We designed the experiment to evaluate the impact of gaze–hand steering in immersive environments by assigning participants specific tasks under varying conditions. Each task condition targeted a different aspect of user interaction, to assess steering and targeting capabilities more effectively. The following subsections describe the tasks that participants performed during the study.

Figure 6. Perspective of the virtual environment.


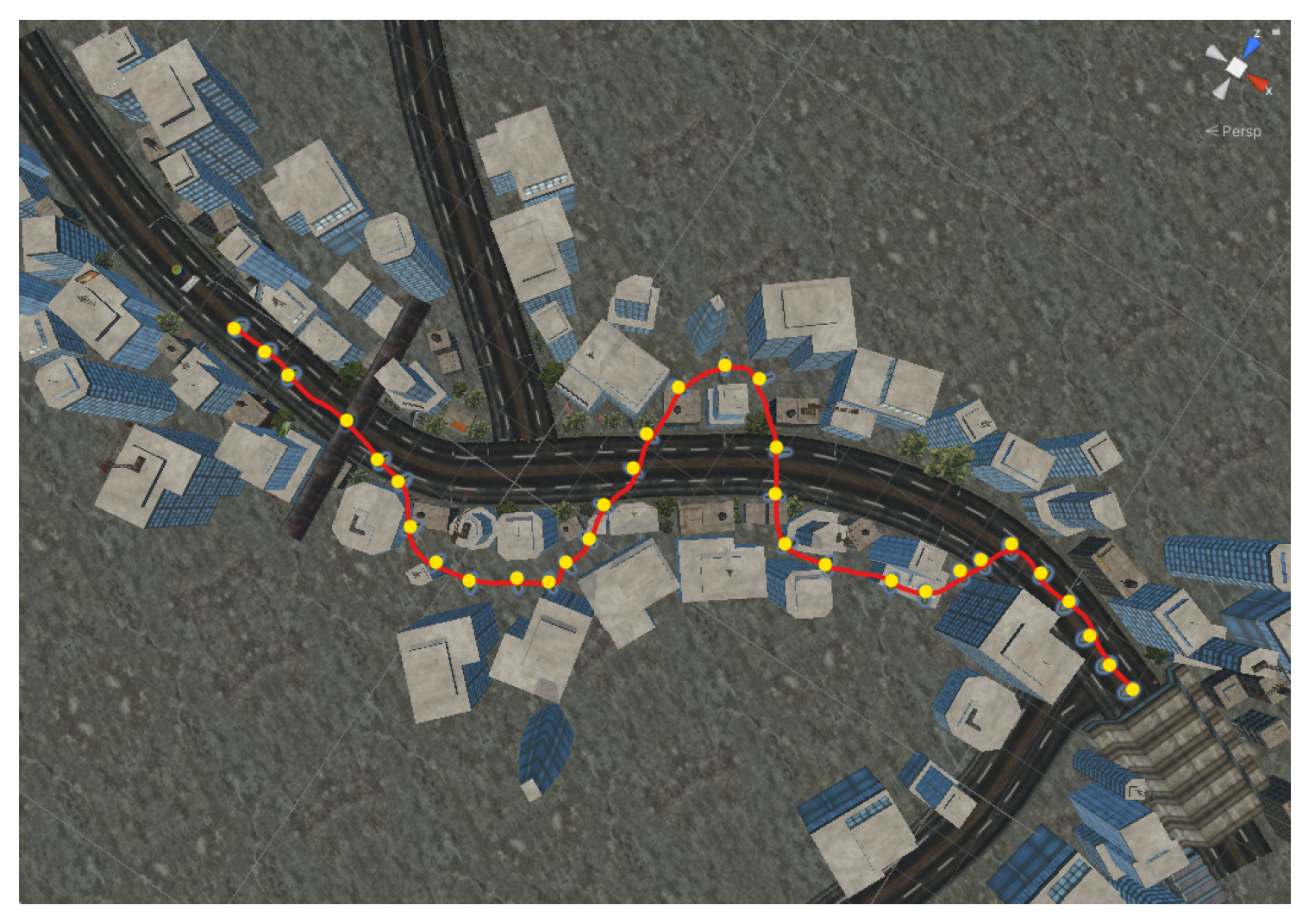

Figure 7. Path used in the experiment (350 m). Yellow dots indicate the rings’ placement.


4.2.1. Tasks

There are two task conditions. The first is a single-task race in which participants fly through rings scattered across the map, with obstacles between the rings to test whether they can steer effectively without colliding. Rings make a sound when crossed to indicate the path.

The other task condition is multitask. It involves a target practice challenge in which users navigate through rings and shoot balloon targets placed at several locations along the path. Balloons are popped by looking at them and pressing the non-dominant hand trigger. This design focused on assessing the effectiveness of gaze–hand steering in isolation, providing insights into its performance characteristics. Comparisons with traditional eye-gaze steering are discussed using published data from the Magic Carpet experiment [1].

Figure 8 provides a visual overview of the experimental tasks and setup, including a balloon target from the target shooting task, a ring used in the ring navigation task, and a participant interacting with the system using gaze–hand steering.

4.2.2. Interface Conditions

We implemented our gaze–hand steering as the only interface for travel direction. However, we use two conditions for speed control. Gaze–hand steering is coupled with one of the speed control interactions, making two interface conditions: gaze–hand steering + joystick and gaze–hand steering + speed circle.

4.2.3. Experimental Conditions

Our user study thus exposed participants to four different conditions:

Gaze–hand steering—joystick—rings.

Gaze–hand steering—joystick—targets.

Gaze–hand steering—speed circle—rings.

Gaze–hand steering—speed circle—targets.

All four conditions use gaze–hand steering to control direction. Arguably, having only one direction control condition in the protocol limits the results to findings that characterize the technique in isolation. However, we did not include other direction techniques, in order to keep the session length manageable and minimize participant fatigue. It would also be interesting to see how participants perform with simple eye-gaze steering compared with our technique. We compare the two methods in Section 5.4 using published data from the Magic Carpet experiment [1].

4.3. Apparatus

To control for extraneous factors and ensure a consistent environment, all trials were conducted in a virtual reality laboratory with controlled lighting and sound levels to minimize distractions. At the beginning of each session, participants were equipped with a head-mounted display (HMD) and calibrated hand controllers. Calibration ensured precise eye tracking, and participants were given a short training session to familiarize themselves with the gaze–hand alignment technique and to verify the alignment of the equipment.

We collected both objective and subjective measures. The system recorded performance in four aspects: task speed (time to complete), collisions (with obstacles), path length, and movement time (percentage of time spent moving rather than idling). We also administered three standardized questionnaires: the Slater–Usoh–Steed (SUS) presence questionnaire [24], the Simulator Sickness Questionnaire (SSQ) [25], and the NASA Task Load Index (NASA-TLX) [26].

Some of these instruments were explicitly chosen to allow comparison with the results of the Magic Carpet study.
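The recorded performance measures can be derived from a per-frame log of the viewpoint. The Python sketch below assumes a hypothetical log format of (timestamp, position, colliding) samples, counts a frame interval as idle when its speed falls below an arbitrary 0.05 m/s threshold, and computes average speed over the whole trial; the study’s actual logging format and thresholds are not specified in the text.

```python
import math
from typing import Dict, List, Tuple

# One logged frame: (timestamp in s, viewpoint position in m, colliding with an obstacle?)
Sample = Tuple[float, Tuple[float, float, float], bool]

IDLE_SPEED_MPS = 0.05  # hypothetical threshold below which an interval counts as idle


def summarize_trial(samples: List[Sample]) -> Dict[str, float]:
    """Completion time, path length, flying percentage, average speed, and collision time."""
    if len(samples) < 2:
        raise ValueError("need at least two samples")
    total_time = samples[-1][0] - samples[0][0]
    path_length = moving_time = collision_time = 0.0
    for (t0, p0, colliding), (t1, p1, _) in zip(samples, samples[1:]):
        dt = t1 - t0
        segment = math.dist(p0, p1)  # straight-line distance between consecutive frames
        path_length += segment
        if dt > 0 and segment / dt > IDLE_SPEED_MPS:
            moving_time += dt
        if colliding:
            collision_time += dt  # interval counted if it starts in contact
    return {
        "completion_time_s": total_time,
        "path_length_m": path_length,
        "flying_pct": 100.0 * moving_time / total_time if total_time > 0 else 0.0,
        "average_speed_mps": path_length / total_time if total_time > 0 else 0.0,
        "collision_time_s": collision_time,
    }
```

Summing per-frame segment lengths makes path length sensitive to sampling rate and tracking jitter, which is one reason an idle threshold is useful when separating flying from idling.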

4.4. Procedure

The experimental procedure followed a within-subject design in which each participant experienced each of the four conditions once. The presentation order of the interface conditions was counterbalanced, while the task order was fixed: the single-task ring condition was performed first and the multitask target condition second.
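One way to produce the condition order described above is sketched below. It assumes that both interface conditions are completed within the ring block before the target block begins, and that counterbalancing simply alternates which speed technique comes first across participants; the text does not state how conditions were interleaved, so this schedule and the function name `condition_order` are hypothetical.

```python
from typing import List, Tuple

INTERFACES = ("joystick", "speed circle")
TASKS = ("rings", "targets")  # fixed order: single task first, multitask second


def condition_order(participant_index: int) -> List[Tuple[str, str]]:
    """Hypothetical schedule: alternate which speed technique comes first, keep task order fixed."""
    interfaces = INTERFACES if participant_index % 2 == 0 else INTERFACES[::-1]
    # Task-major ordering: both interfaces for the ring task, then both for the target task.
    return [(interface, task) for task in TASKS for interface in interfaces]
```

With 20 participants, this alternation gives each interface the first position for exactly half of them.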

After a brief explanation, participants signed an informed consent form and were told they could stop at any time if they felt discomfort or cybersickness, or for any other reason. They then filled out a pre-test profile form. Next, they were introduced to the VR headset, the eye-tracking calibration was performed, and they were guided to a marked spot on the floor and allowed to perform a practice task in which they were free to explore. When they felt comfortable, they started the experimental tasks.

After exposure to all four conditions, participants completed the post-test usability and experience questionnaires described in Section 4.3 and answered a list of subjective questions about the system. We then thanked the participants and dismissed them.

Each session lasted approximately 40 min per participant, including 15 min for the briefing, training, and questionnaires.

4.5. Participants

Twenty participants, aged 17 to 35, volunteered for the study. Most had at least a bachelor’s degree. Although many participants had some VR experience, most were inexperienced, having used VR fewer than five times. Furthermore, two-thirds of the participants had never or only sometimes experienced dizziness or nausea when using VR, while the remaining third had never used VR.

5. Results

We present our results in this section according to the evidence found. We start by analyzing usability from the subjective measurements and then turn to the objective data. For the objective data, we first examine our user performance data in isolation, across tasks and interface conditions. We then compare our data with the results from Magic Carpet [1] in Section 5.4.

5.1. Subjective Scores

This section evaluates the developed system using the SUS presence, SSQ, and NASA-TLX questionnaires. It includes user impressions of each speed technique, both to compare the techniques with each other and to understand how our steering approach performs overall, and examines the corresponding statistical results. We then present users’ responses to additional general questions about the experience.

A summary of the subjective instruments’ results from the 20 participants is shown in Table 1, giving the mean, standard deviation, and 95% confidence interval (CI) for each measure.

First, the average SUS presence score is 15.54 out of 20 points (77.7%). None of the participants reported severe sickness symptoms, and only four marked at least two of the 16 symptoms as moderate. The average SSQ total score at the end of participation is 21.25; the nausea subscale is lower, at 13.87 on average, while disorientation is higher, at 30.37.

Table 2 compares these cybersickness levels with those of Magic Carpet: on average, our approach elicits less sickness by a large margin. For task load, the overall average NASA-TLX score is below 29 on a 100-point scale, which falls in the medium workload category. We could not compare SUS or TLX data with Magic Carpet because that study did not collect these measures.
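For readers unfamiliar with how SSQ subscale and total scores such as those above are obtained, the standard SSQ scoring procedure [25] can be sketched as follows. Each of the 16 symptoms is rated 0 (none) to 3 (severe) and contributes to one or two of the nausea (N), oculomotor (O), and disorientation (D) clusters; the raw cluster sums are then multiplied by fixed weights (9.54, 7.58, and 13.92, with 3.74 for the total). The item labels and function name are our own, and this is a scoring sketch, not the study’s analysis script.

```python
from typing import Dict

# Cluster membership for the 16 SSQ symptoms (N = nausea, O = oculomotor, D = disorientation).
SSQ_ITEMS = {
    "general discomfort": ("N", "O"),
    "fatigue": ("O",),
    "headache": ("O",),
    "eyestrain": ("O",),
    "difficulty focusing": ("O", "D"),
    "increased salivation": ("N",),
    "sweating": ("N",),
    "nausea": ("N", "D"),
    "difficulty concentrating": ("N", "O"),
    "fullness of head": ("D",),
    "blurred vision": ("O", "D"),
    "dizziness (eyes open)": ("D",),
    "dizziness (eyes closed)": ("D",),
    "vertigo": ("D",),
    "stomach awareness": ("N",),
    "burping": ("N",),
}
WEIGHTS = {"N": 9.54, "O": 7.58, "D": 13.92}
TOTAL_WEIGHT = 3.74


def ssq_scores(ratings: Dict[str, int]) -> Dict[str, float]:
    """Weighted SSQ subscale and total scores; symptoms missing from ratings count as 0."""
    unknown = set(ratings) - set(SSQ_ITEMS)
    if unknown:
        raise ValueError(f"unknown SSQ items: {sorted(unknown)}")
    raw = {"N": 0, "O": 0, "D": 0}
    for item, clusters in SSQ_ITEMS.items():
        score = ratings.get(item, 0)
        if not 0 <= score <= 3:
            raise ValueError(f"rating for {item!r} must be between 0 and 3")
        for cluster in clusters:
            raw[cluster] += score
    return {
        "nausea": raw["N"] * WEIGHTS["N"],
        "oculomotor": raw["O"] * WEIGHTS["O"],
        "disorientation": raw["D"] * WEIGHTS["D"],
        "total": (raw["N"] + raw["O"] + raw["D"]) * TOTAL_WEIGHT,
    }
```

Because several symptoms feed two clusters, the total is computed from the summed raw cluster scores rather than from the weighted subscales.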

5.2. Additional Subjective Impressions

Table 3 summarizes the participants’ impressions of the system on a five-point Likert scale and also reproduces the Magic Carpet responses to the same questions. The table shows only median and IQR values, but we also used Wilcoxon tests for significance and report those results here. The responses indicate that walking inside the virtual circle (Q1) was significantly easier with the joystick than with the speed circle, and that controlling speed was also significantly easier with the joystick than with the speed circle.

Moving around the virtual environment was also easier with the joystick (Wilcoxon = 12.5). Users reported a higher sense of agency with the joystick than with the speed circle, and they also felt safer inside the circle when using the joystick (Wilcoxon tests). Collectively, these results suggest that the joystick provides a more familiar user experience, as it is commonly used in video games. However, some users preferred the speed circle for its more immersive experience, which allowed direct interaction through body gestures. Notably, some users achieved better results with the speed circle. Despite this, the joystick was generally preferred for speed tasks. Some users found it easier to maintain movement with the speed circle during target practice, as the joystick required more frequent stops for slow movements. However, the speed circle was more prone to balance issues, requiring constant position adjustments when changing direction. One user commented, “I felt that with the Speed Circle I was more in control, but with the stick it was more immediate. In the Speed Circle, I was much more likely to overbalance and fall, whereas with the joystick I was not”.

No significant differences were found between the two techniques in terms of fatigue or fear of heights. Similarly, there were no significant differences in body ownership or sense of self-location. Both methods were perceived as equally effective in maintaining user immersion and safety.

5.3. Objective Measurements

This section examines task performance across techniques and tasks. The analysis reports statistics with 95% confidence intervals for each metric to compare techniques, tasks, and previous findings. Both objective metrics and subjective scores were analyzed to evaluate differences between techniques. Sample normality was assessed with the Shapiro–Wilk test. Although most conditions followed a normal distribution, the Targets_SpeedCircle condition deviated significantly from normality. For consistency and robustness, we therefore used the nonparametric Kruskal–Wallis test for all comparisons to identify significant effects. Post hoc pairwise comparisons were performed with Dunn’s test and Bonferroni correction to determine specific differences between conditions (see Table 4). The primary goal was to determine the extent to which our technique supports a secondary task without compromising performance.
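The nonparametric pipeline above can be illustrated with a dependency-free sketch of the Kruskal–Wallis H statistic (with tie correction) and Dunn’s pairwise z-tests with Bonferroni-adjusted two-sided p-values. The p-value for H itself would come from a chi-square distribution with k - 1 degrees of freedom (for example, via `scipy.stats.chi2.sf`), and `scipy.stats.kruskal` computes H directly; the hand-written helpers here are hypothetical and meant only to show the arithmetic, not to reproduce the authors’ analysis code.

```python
import math
from itertools import combinations
from typing import Dict, List, Sequence, Tuple


def pooled_ranks(groups: Sequence[Sequence[float]]) -> Tuple[List[List[float]], float]:
    """1-based ranks over all observations pooled; ties share their average rank."""
    pooled = sorted((value, g) for g, group in enumerate(groups) for value in group)
    ranks: List[List[float]] = [[] for _ in groups]
    tie_sum = 0.0  # sum of (t^3 - t) over tie blocks, used by both tie corrections
    i = 0
    while i < len(pooled):
        j = i
        while j + 1 < len(pooled) and pooled[j + 1][0] == pooled[i][0]:
            j += 1
        average_rank = (i + j) / 2 + 1
        t = j - i + 1
        tie_sum += t ** 3 - t
        for k in range(i, j + 1):
            ranks[pooled[k][1]].append(average_rank)
        i = j + 1
    return ranks, tie_sum


def kruskal_wallis_h(groups: Sequence[Sequence[float]]) -> float:
    """Tie-corrected Kruskal-Wallis H statistic."""
    ranks, tie_sum = pooled_ranks(groups)
    n = sum(len(g) for g in groups)
    if tie_sum == n ** 3 - n:
        raise ValueError("all observations are identical")
    h = 12.0 / (n * (n + 1)) * sum(sum(r) ** 2 / len(r) for r in ranks) - 3 * (n + 1)
    return h / (1 - tie_sum / (n ** 3 - n))


def dunn_bonferroni(groups: Sequence[Sequence[float]]) -> Dict[Tuple[int, int], float]:
    """Bonferroni-adjusted two-sided p-values for Dunn's test on every pair of groups."""
    ranks, tie_sum = pooled_ranks(groups)
    n = sum(len(g) for g in groups)
    mean_ranks = [sum(r) / len(r) for r in ranks]
    base_var = n * (n + 1) / 12.0 - tie_sum / (12.0 * (n - 1))
    pairs = list(combinations(range(len(groups)), 2))
    adjusted = {}
    for a, b in pairs:
        se = math.sqrt(base_var * (1 / len(groups[a]) + 1 / len(groups[b])))
        z = (mean_ranks[a] - mean_ranks[b]) / se
        p = math.erfc(abs(z) / math.sqrt(2))  # two-sided standard normal p-value
        adjusted[(a, b)] = min(1.0, p * len(pairs))
    return adjusted
```

With four conditions there are six pairwise comparisons, so each raw Dunn p-value is multiplied by six before being compared with the significance level.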

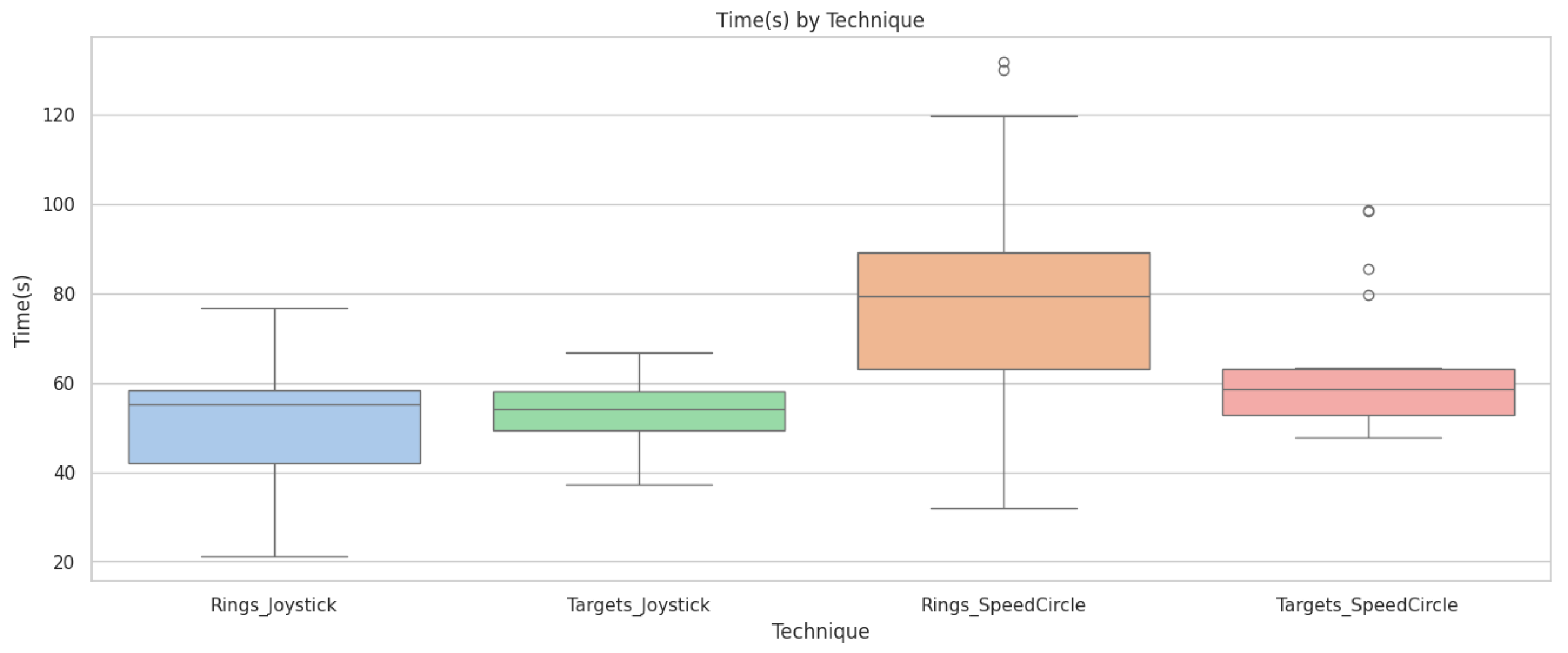

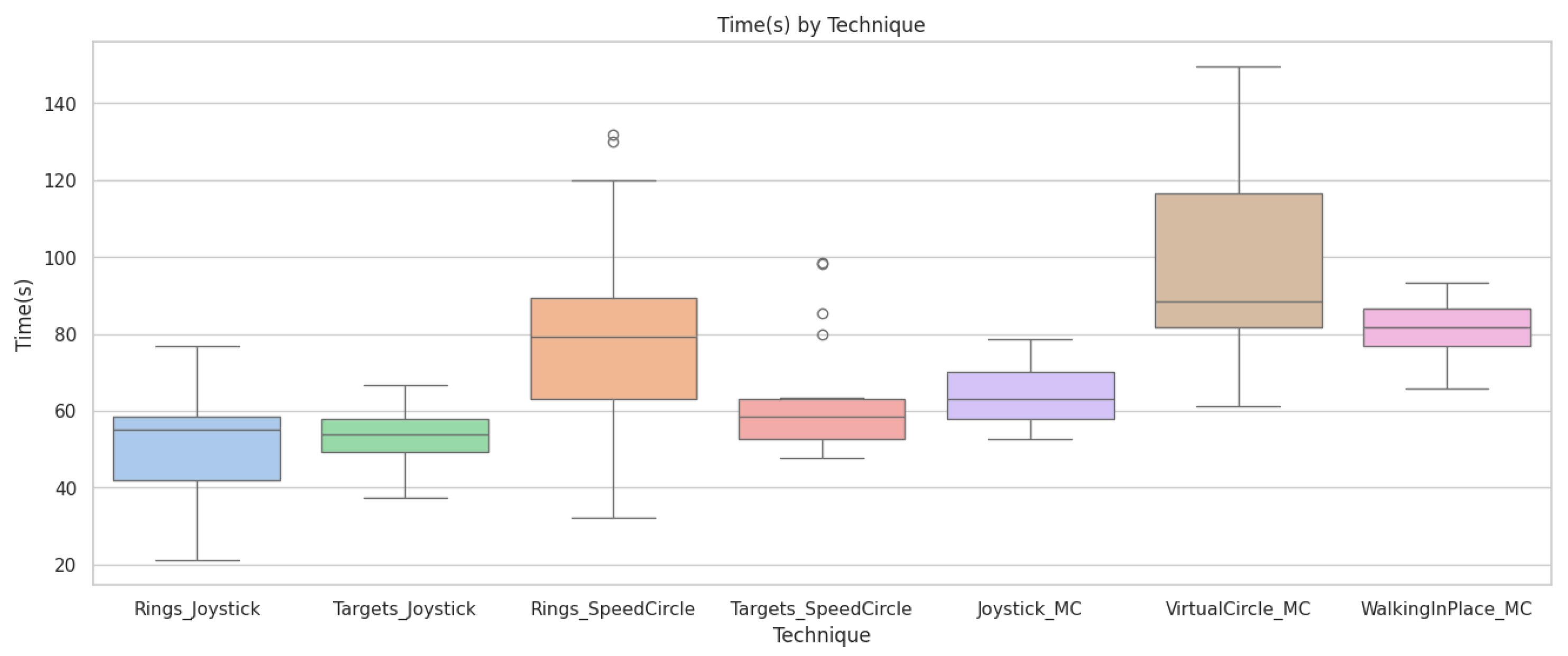

5.3.1. Time

Task completion times show that the joystick method is faster in both the ring task and the target practice task. No significant difference between tasks was found with the joystick, whereas the speed circle yielded slightly better times in the target practice task.

Figure 9 shows that in the target+joystick task, the median completion time is approximately 55 s, with the mean slightly above the median, indicating a right-skewed distribution. The IQR is relatively narrow, suggesting consistent performance among participants. In the target+speed circle task, the median completion time is around 60 s, with the mean close to the median, indicating a relatively symmetric distribution and consistent performance, with a few outliers. The rings+joystick task has a median completion time of about 50 s, with the mean close to the median; its IQR is narrow, indicating consistent performance, although a few outliers show some variability. Rings+speed circle has the highest median completion time, at around 80 s, with the mean slightly above the median, indicating a right-skewed distribution. Its IQR is the widest among the conditions, suggesting substantial variability in performance, and several outliers show that some participants took much longer to complete the task.

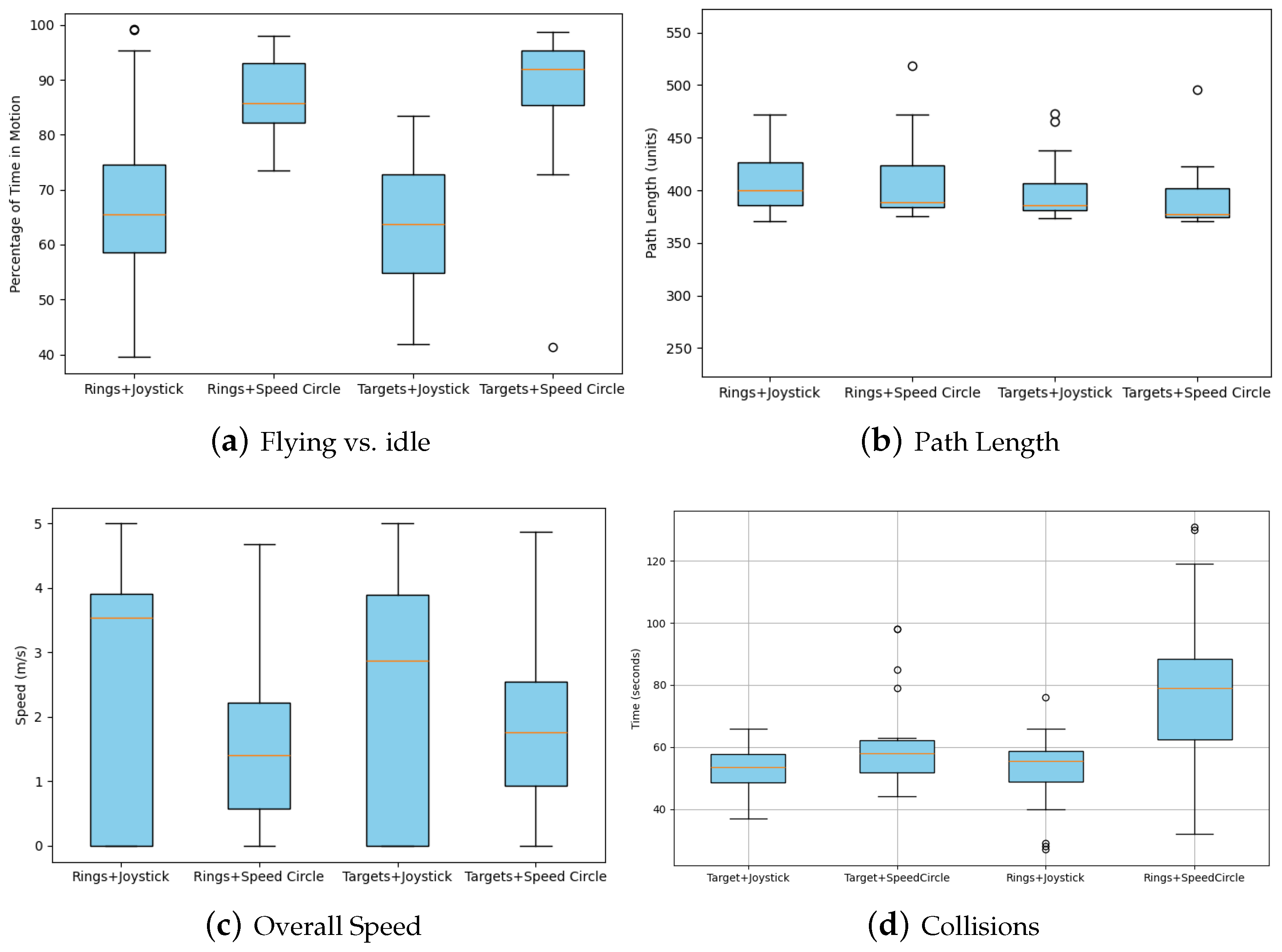

5.3.2. Flying vs. Idling

As shown in Figure 10a, the speed circle yielded higher flying percentages than the joystick in both the ring task (87.072, ) and the target task (87.928, ). Idle time was significantly higher with the joystick, indicating more continuous movement with the speed circle. Adding multitasking did not affect movement fluidity: there was no significant difference in flying and idle percentages between tasks with either the joystick () or the speed circle ().
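One way to derive flying and idle percentages from logged data is to classify each frame by its speed and weight it by the frame's duration. The sketch below assumes a hypothetical per-frame log of (frame duration in seconds, speed in m/s) pairs and a hypothetical idle threshold of 0.05 m/s; the text does not state how flying and idle were defined in the study.

```python
def flying_idle_percentages(frames, idle_threshold=0.05):
    """Return (flying %, idle %) weighted by frame duration.

    frames: iterable of (dt_seconds, speed_m_per_s) pairs (hypothetical log format).
    idle_threshold: speeds at or below this value count as idle (assumed value).
    """
    flying = idle = 0.0
    for dt, speed in frames:
        if speed > idle_threshold:
            flying += dt
        else:
            idle += dt
    total = flying + idle
    if total == 0:
        return 0.0, 0.0  # empty log: nothing to classify
    return 100 * flying / total, 100 * idle / total
```

Weighting by frame duration rather than counting frames keeps the percentages meaningful when the frame rate fluctuates.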

Figure 9.

Time to complete each task (ring and target) with each speed technique (joystick and speed circle).


Figure 10.

Comparison of performance metrics across joystick and speed circle techniques, including ring navigation and target shooting tasks, to evaluate multitasking performance during VR navigation: (a) percentage of time in motion (flying vs. idle), (b) total path length (units), (c) average navigation speed (m/s), and (d) total collision time (seconds).


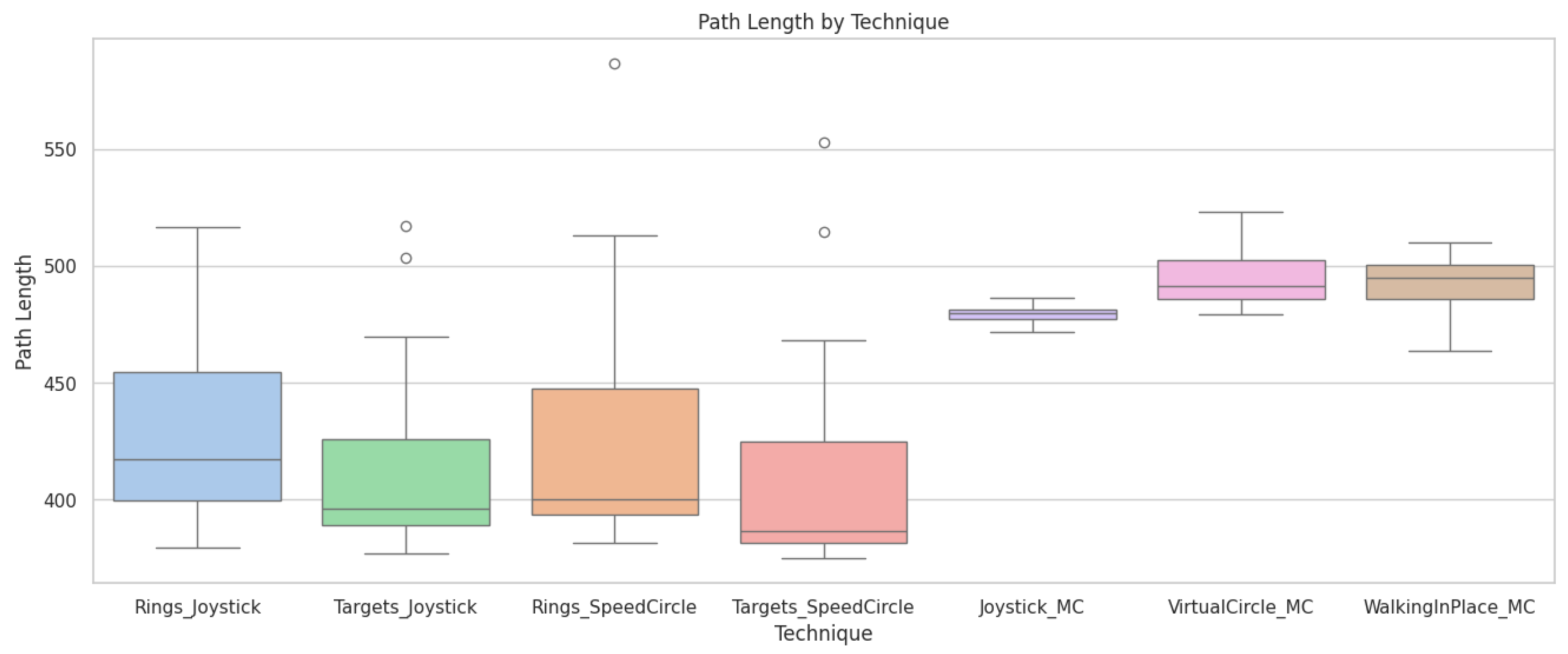

5.3.3. Path Length

As shown in Figure 10b, average path lengths were similar across tasks and conditions, ranging from 376.67 to 408.86 m. In the ring task, there was no significant difference between the joystick and speed circle techniques, although users traveled approximately 2.37% less with the joystick (), consistent with the Magic Carpet study (see Section 5.4). No significant difference in path length was found in the target practice task ().
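Path length can be computed by summing the Euclidean distances between consecutive logged positions. The sketch assumes positions sampled as (x, y, z) tuples in meters (a hypothetical log format); the `percent_shorter` helper illustrates how a relative difference such as "2.37% less" is expressed against a reference mean, where the choice of reference condition is an assumption.

```python
import math


def path_length(positions):
    """Total distance (m) along a polyline of (x, y, z) positions."""
    return sum(math.dist(p, q) for p, q in zip(positions, positions[1:]))


def percent_shorter(length, reference):
    """How much shorter `length` is than `reference`, in percent of `reference`."""
    return 100 * (reference - length) / reference
```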

5.3.4. Speed

The speed plot (Figure 10c) shows that rings+joystick had a median speed of m/s with high variability. Rings+speed circle had a lower median speed of 2 m/s with slightly more consistency, probably because users did not always reach the maximum speed. Targets+joystick had a median speed of m/s with high variability, while targets+speed circle had the highest median speed, at m/s, also with high variability. The joystick technique supported higher speeds, particularly in the ring task.
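A median navigation speed can be obtained from timestamped positions by computing the speed of each segment between samples. The sketch assumes hypothetical (t, x, y, z) samples in seconds and meters; whether the study took medians over samples or over per-participant averages is not stated, so this is one illustrative choice.

```python
import math
import statistics


def segment_speeds(samples):
    """Speeds (m/s) between consecutive (t, x, y, z) samples; zero-duration pairs are skipped."""
    speeds = []
    for (t0, x0, y0, z0), (t1, x1, y1, z1) in zip(samples, samples[1:]):
        if t1 > t0:
            speeds.append(math.dist((x0, y0, z0), (x1, y1, z1)) / (t1 - t0))
    return speeds


def median_speed(samples):
    """Median of the segment speeds, or 0.0 if there are none."""
    speeds = segment_speeds(samples)
    return statistics.median(speeds) if speeds else 0.0
```

The median is less sensitive than the mean to short bursts at maximum speed, which matters when, as noted above, users do not always reach the maximum.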

5.3.5. Collisions

Collisions did not differ significantly between the techniques (see Figure 10d) in either the ring task () or the target practice task (, ). More collisions occurred in the target practice task than in the ring task, indicating a slight decrease in performance with multitasking. This pattern holds for both the joystick () and speed circle () techniques.
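Total collision time, as plotted in Figure 10d, can be accumulated from collision enter/exit events. The sketch assumes a hypothetical, chronologically sorted event log of (kind, timestamp) pairs for one participant; overlapping contacts are merged by counting active contacts, which is an assumption about how simultaneous collisions were treated.

```python
def total_collision_time(events, end_time=None):
    """Seconds during which at least one collision contact was active.

    events: chronologically sorted (kind, t) pairs, kind in {"enter", "exit"}
    (hypothetical log format). Overlapping contacts are counted once.
    end_time: closes a contact still active at the end of the log (optional).
    """
    active = 0
    start = None
    total = 0.0
    for kind, t in events:
        if kind == "enter":
            if active == 0:
                start = t  # first contact opens a collision interval
            active += 1
        elif kind == "exit" and active > 0:
            active -= 1
            if active == 0:
                total += t - start  # last contact closes the interval
    if active > 0 and end_time is not None:
        total += end_time - start
    return total
```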

5.4. Performance Compared to Magic Carpet

With both the joystick and speed circle techniques, participants covered less distance than in the Magic Carpet study (see Figure 11). This holds even for the target task, in which a secondary subtask is active. Threading a shorter path indicates more efficient maneuverability.

Table 5 shows the significant differences identified by a Kruskal–Wallis H test followed by Dunn’s test with Bonferroni correction.

Although very few collisions occurred overall, there were significantly fewer collisions in the ring task with our technique, using either a joystick or a speed circle for speed control, than with the Magic Carpet conditions, except in the joystick comparison, where the effect is visible but not significant. The target task also distracts some participants enough to cause more collisions. However, the distribution is wider, showing that some participants are unaffected and suggesting that training could improve performance. The corresponding significance measures (Kruskal–Wallis H test followed by Dunn’s test with Bonferroni correction) are given in Table 6.

Finally, the time results show that our eye-gaze steering technique, with either a joystick or a speed circle, is more efficient than, or at least comparable to, the Magic Carpet conditions, and never worse (cf. Figure 12). Joystick_MC is not significantly different from our joystick results (see Table 7 for significance), which is likely due to participants’ lack of training with our technique, causing the samples to spread. Moreover, all participants in our experiment performed the ring task before the target task. This induced a learning effect that considerably reduced variance and also reduced the average time for the speed circle, which requires more learning than the joystick.

5.5. Discussion

The usability testing of our VR steering system yielded promising results, as evidenced by the SUS-presence, SSQ, and NASA-TLX questionnaires. The SUS scores indicated a strong sense of presence, with an average score of out of 20, suggesting that our system provides a high level of user immersion. The average total SSQ score of could be considered significant, particularly because disorientation scored higher, at . The nausea subscale, however, was lower on average. These symptoms are concerning but remain lower than those reported for similar approaches such as the Magic Carpet (joystick SSQ = ; speed circle SSQ = ), indicating that overall comfort was maintained.
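For reference, SSQ subscale and total scores are conventionally obtained by multiplying raw symptom sums by fixed weights from the standard SSQ scoring procedure (nausea 9.54, oculomotor 7.58, disorientation 13.92, total 3.74). The sketch assumes the raw subscale sums have already been computed from the 16 items rated 0 to 3; the item-to-subscale mapping is omitted, and the function name is hypothetical.

```python
# Standard SSQ weights applied to raw subscale sums.
NAUSEA_W = 9.54
OCULOMOTOR_W = 7.58
DISORIENTATION_W = 13.92
TOTAL_W = 3.74


def ssq_scores(nausea_raw, oculomotor_raw, disorientation_raw):
    """Weighted SSQ scores from raw subscale sums (items rated 0-3).

    Items shared between subscales are assumed to be included in each
    relevant raw sum, so the total uses the sum of the three raw sums.
    """
    return {
        "nausea": nausea_raw * NAUSEA_W,
        "oculomotor": oculomotor_raw * OCULOMOTOR_W,
        "disorientation": disorientation_raw * DISORIENTATION_W,
        "total": (nausea_raw + oculomotor_raw + disorientation_raw) * TOTAL_W,
    }
```

Because the disorientation weight is the largest, a few disorientation symptoms raise the weighted subscale markedly, which is consistent with disorientation being the highest-scoring factor reported above.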

The NASA-TLX total score of places the system within the medium workload category. Because multitasking was expected to further increase participants’ workload, this result suggests that gaze–hand steering successfully accommodated multitasking.
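The text does not state whether the raw or the weighted NASA-TLX was used. The sketch below shows both common variants under that uncertainty: raw TLX averages the six subscale ratings on a 0 to 100 scale, while weighted TLX uses weights from 15 pairwise comparisons. The subscale keys and function names are hypothetical.

```python
SUBSCALES = ("mental", "physical", "temporal", "performance", "effort", "frustration")


def raw_tlx(ratings):
    """Raw TLX: unweighted mean of the six subscale ratings (0-100)."""
    return sum(ratings[s] for s in SUBSCALES) / len(SUBSCALES)


def weighted_tlx(ratings, weights):
    """Weighted TLX: weights come from 15 pairwise comparisons and must sum to 15."""
    if sum(weights[s] for s in SUBSCALES) != 15:
        raise ValueError("pairwise-comparison weights must sum to 15")
    return sum(ratings[s] * weights[s] for s in SUBSCALES) / 15
```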

There were significant differences in flying and idle times between techniques, with longer idle times during both tasks when using the joystick than when using the speed circle. This difference was more pronounced in the target task. With the joystick, users stopped moving more frequently to look around. We attribute this to the greater ease of performing dual tasks with body gestures than with physical input.

There are indications of slightly lower performance in reaching the rings with the speed circle. In the target task, the mean number of targets destroyed with the speed circle () is very similar to that with the joystick (). This indicates that the choice of speed technique does not affect accuracy in the target task.

Compared with the Magic Carpet technique, our technique achieves similar overall performance while allowing a simultaneous secondary task. Some performance measures, such as collisions and distance traveled, are better with our technique, arguably owing to improvements in the speed circle and the HMD setup.

Regarding body gestures, we argue that the user’s position within the circle could be estimated more accurately from the torso position than from the head position. Users reported difficulty moving forward while looking upward, because their heads instinctively moved back. This reduced the head’s distance from the circle’s center and thus lowered the velocity.
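The following sketch illustrates how a speed-circle mapping could accept either the head or the torso position as input: the horizontal distance of the tracked point from the circle's center, beyond a small dead zone, is scaled linearly to speed and clamped at the circle's radius. The linear mapping, dead zone, radius, and maximum speed are hypothetical placeholders, not the parameters used in the study.

```python
import math


def circle_speed(tracked_xz, center_xz, radius=0.5, dead_zone=0.1, max_speed=4.0):
    """Map the horizontal distance of a tracked point from the circle center to a speed.

    tracked_xz: (x, z) floor-plane position of the head or torso in meters.
    All parameter defaults are hypothetical placeholders.
    Returns 0 inside the dead zone, then rises linearly to max_speed at the radius.
    """
    d = math.dist(tracked_xz, center_xz)
    if d <= dead_zone:
        return 0.0
    t = min(1.0, (d - dead_zone) / (radius - dead_zone))  # clamp beyond the radius
    return t * max_speed
```

Passing the torso position instead of the head position, as proposed above, would make the speed insensitive to the head moving back when the user looks upward.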

6. Conclusions

In this paper, we investigated how well a gaze-directed approach assisted by hand pointing performs relative to other known steering techniques. We designed a method that provides free look, opening possibilities for multitasking during travel in virtual environments.

We developed an application to study our technique and conducted a user study with 20 participants. Additionally, we compared our gaze-directed approach with the Magic Carpet to identify possible effects. The results help us understand how applying this method in eye-gaze-dominant applications affects exploration, since it decouples gaze from the steering direction while still allowing users to navigate at the same time.

One important finding is that performance did not decrease substantially when a secondary task was introduced during navigation. Users completed objectives such as passing through rings and shooting targets with success rates of 97.5% and 96.3%, respectively, which supports the effectiveness of our gaze-directed technique. Based on these results, we argue that the system can be used to navigate virtual environments in a range of applications.

One limitation of our study is that cybersickness, presence, and workload were not collected separately for each technique; the current results assess these variables in general rather than for specific techniques or tasks. In future work, a more detailed analysis could improve comparisons with the Magic Carpet and other studies and yield clearer conclusions. An important feature to implement is using the torso position instead of the head position for speed control. We also suggest using hand tracking instead of controllers in a new user study, as this would align with the current trend of gaze-dominant hands-free interaction in XR.