Abstract

The use of artificial intelligence in applications for human–computer multimodal dialogue systems and emotion recognition continues to grow rapidly. As a result, it is challenging for researchers to identify gaps, propose new models, and increase user satisfaction. The objective of this study is to explore and analyze potential applications based on artificial intelligence for multimodal dialogue systems that incorporate emotion recognition. Papers were selected following PRISMA, which identified 13 scientific articles whose research proposals are generally based on convolutional neural networks (CNNs), Long Short-Term Memory (LSTM), gated recurrent units (GRUs), and BERT. The models proposed in these studies include Mindlink-Eumpy, RHPRnet, Emo Fu-Sense, 3FACRNN, H-MMER, TMID, DKMD, and MatCR. The datasets used are DEAP, MAHNOB-HCI, SEED-IV, SEED-V, AMIGOS, and DREAMER. The metrics achieved by the models are also presented. We conclude that emotion recognition models such as Emo Fu-Sense, 3FACRNN, and H-MMER achieve outstanding results, with accuracies ranging from 92.62% to 98.19%, and that multimodal dialogue models such as TMID and the scene-aware model obtain BLEU4 scores of 51.59% and 29%, respectively.

1. Introduction

The use of human–computer dialogue systems, such as virtual assistants based on artificial intelligence (AI), continues to grow rapidly [1]. These interactions are expected to incorporate emotion recognition in multimodal dialogue systems [2], thereby increasing user satisfaction [3].

The problem addressed in this paper is how artificial intelligence can contribute to multimodal dialogue systems that recognize emotions during interaction between the user and the computer [4,5,6,7].

This paper is important because it examines the relationship between artificial intelligence and affective multimodal dialogue. It also identifies gaps in the existing research and proposes possible directions for future research.

This study aims to contribute to a more humanized dialogue and greater user satisfaction by drawing on multiple physical and physiological inputs, which allow subjective data to be integrated into the dialogue between users and virtual assistants [8,9].

This systematic review study aims to explore and analyze potential AI-based applications for multimodal dialogue systems [10] incorporating emotion recognition [11,12,13].

Several studies and approaches have addressed multimodal dialogue systems and emotion recognition in isolation. The physical data inputs of these systems vary widely and generally include sound, vision, and text [14,15]. For physiological data input, researchers have considered electroencephalographic [16,17,18] and electron tomography [19,20,21] signals to be the most representative.

The models used in these investigations have generally been based on convolutional neural networks (CNNs) [22,23] and their variants [24,25], as well as Long Short-Term Memory (LSTM) [2,26,27], gated recurrent units (GRUs) [28,29,30], and BERT [31,32,33].

The limitations of existing research stem from the limited data sources or datasets used to train the models [26,34,35,36,37] and from the limited integration of dialogue models with emotion recognition [38,39,40].

This systematic review study will identify the challenges and opportunities associated with artificial intelligence and provide a roadmap for future research and development.

This study will focus on analyzing various applications and models based on artificial intelligence for a multimodal dialogue system with emotion recognition, identifying the proposed architectures and limitations [41,42,43].

This research is novel because little information is available on the topic. Much research has focused on emotion recognition, sentiment analysis, and virtual assistant dialogue, but AI-based multimodal affective dialogue systems are still in their early stages [14,44,45]. Moreover, this systematic review contributes an analysis of the models proposed by researchers in previous studies [13,46].

This article is divided into several sections. The Introduction briefly explains the problem and the importance of the research topic. The Materials and Methods section explains the information search process. The Results section discusses the technologies, models, and datasets identified in the search. The Discussion interprets the results.

2. Materials and Methods

The methodology selected for this systematic literature review follows the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA). The PICO framework (P: patient or population; I: intervention; C: comparison; O: outcome) was used to formulate the research questions. The systematic review protocol was registered as a public record at https://osf.io/j7byg/ (accessed on 12 January 2025).

2.1. Research Questions

The PICO framework is used in scientific research to generate research questions in systematic literature reviews.

First, the population or problem (P) identifies the users who interact with multimodal dialogue systems. Next, the intervention (I) refers to the analysis of models widely used in emotion recognition. The comparison (C) refers to the comparative analysis of traditional models and multimodal dialogue systems. Finally, the outcome (O) refers to the evaluation of metrics for widely used models in both emotion recognition and multimodal dialogue systems. The PICO questions are presented in Table 1.

Table 1.

List of PICO questions.

2.2. Eligibility Criteria

In this section, we describe the inclusion and exclusion criteria applied to the articles found in order to guarantee their quality, as shown in Table 2.

Table 2.

Eligibility criteria.

To support a comprehensive search strategy that minimizes potential biases, the justification for each specific inclusion and exclusion criterion is presented below.

IC1: Research related to multimodal dialogue systems and emotion recognition.

Including this criterion for multimodal dialogue and emotion recognition systems is essential to improve human–machine interactions and provide a more natural and efficient experience.

For a better understanding of the context, this criterion allows the integration of data types with multiple modalities so that the system can better interpret the user’s intentions and emotional states, which is essential for recognizing emotions with greater accuracy.

The selection criteria specifically focus on systems that integrate both multimodal dialogue and emotional recognition, thus avoiding distorting the conclusions with studies that address these aspects in isolation or incompletely. The integration of AI in human–machine interfaces has highlighted the critical need to develop dialogue systems that understand the user’s emotions, driving research in interactive deep learning to improve this emotional recognition capability.

IC2: Studies that present quantifiable metrics as results.

Including studies that present quantifiable metrics as outcomes is critical to ensuring rigour and validity in evaluating the performance of emotion recognition models in multimodal dialogue systems. Emotion recognition can be a subjective task due to variability in human perception. By requiring quantifiable metrics, sole reliance on human judgement is avoided and an objective basis for model comparison is provided.

IC3: Research that presents the origin of its data sources.

Criterion IC3 requires clear documentation of the origin of the data used in the research. This ensures the transparency, reproducibility, and validity of studies on multimodal dialogue systems, allows potential biases such as availability, selection, and confirmation biases to be identified and minimized, and facilitates comparison between studies and generalization of the results.

IC4: Research that presents the architecture of the proposed solution.

Criterion IC4 requires a detailed presentation of the architecture used in research on multimodal dialogue systems with emotional recognition, ensuring reproducibility and facilitating comparison between models. Mandatory documentation of the technical architecture minimizes publication bias and allows for incremental evolution of research, avoiding duplication of efforts when developing new models.

EC1: Studies not related to artificial intelligence.

Criterion EC1 excludes non-AI-related studies to maintain the focus on modern computational techniques applicable to multimodal dialogue systems with emotional recognition, avoiding interference from traditional or non-computational approaches that could skew research results toward less relevant or non-scalable methodologies.

EC2: Studies not published between 2020 and 2024.

The EC2 criterion limits the review to studies published between 2020 and 2024 to ensure the inclusion of the most advanced AI techniques for emotional recognition in multimodal dialogues, considering the rapid evolution of models, datasets, and metrics that have significantly improved the accuracy and robustness of these systems.

EC3: Studies unrelated to multimodal dialogue systems.

Multimodal dialogue systems integrate multiple types of input, such as text, audio, images, and physiological signals, allowing for a more natural and richer interaction between humans and machines. Excluding studies that address only unimodal systems (text or voice only) avoids approaches that do not reflect the objective of this review, such as unimodal natural language processing or text-based recommendation systems. It also allows a more precise comparison of results and methodologies, avoids confusion derived from different approaches, and ensures that the review only analyzes works in which the processing and generation of responses involve multiple modalities of information.

EC4: Studies not written in English.

Excluding studies not written in English ensures global accessibility of the research, given that English is the predominant language in scientific and technological literature. This facilitates the dissemination of advances in artificial intelligence and multimodal dialogue systems, avoiding restrictions to a limited audience. Furthermore, English allows for a uniform comparison of models and metrics, reducing the risk of misinterpretations due to inaccurate translations. Including studies in multiple languages could introduce selection biases, as many articles in other languages are not indexed in international databases such as Scopus or IEEE Xplore, which would affect the consistency and replicability of the findings.

EC5: Duplicate articles.

Including duplicate articles in an analysis can lead to an overestimation of the available evidence, giving a false impression about the support for a research area. Detecting and removing duplicate references speeds up the screening phase, allowing researchers to focus on relevant content and avoiding biases in meta-analyses that could affect the accuracy of the results. Furthermore, removing duplicates optimizes the use of resources and improves the quality of the analysis, especially in studies of artificial intelligence and emotion recognition, where redundant information can make it difficult to compare and evaluate metrics in different machine learning models.

Articles identified as duplicates were recorded in Zotero, a reference management tool; this record served as the initial basis for justifying their elimination.

Duplicate articles were identified manually by comparing title, authors, DOI, abstract, and publication source. The most recent, peer-reviewed version was prioritized in case of multiple publications of the same study. Ambiguous cases were assessed by content review; among other criteria, the methodology and results sections were reviewed to identify whether the studies analyzed the same data or presented new findings.

To determine whether an article was a duplicate, the following components were analyzed:

Title: We considered the paper a duplicate if the title was identical or presented a minimal variation without affecting the meaning of the study.

Authors: We checked whether the authors fully matched or whether the article had minor variations in the list of authors.

DOI (Digital Object Identifier): We checked whether two articles shared the same DOI, and if so, they were automatically considered duplicates. In the absence of a DOI, other key elements were compared.

Abstract: If the titles and authors of candidate duplicates differed slightly, their abstracts were reviewed for coincidences in objectives, methodology, and main findings before the deletion was confirmed.

Journal or publication source: If the same study appeared in different databases, the version published in the journal with the highest impact or with the most recent revision was selected.

Publication date: The most up-to-date version of the study was prioritized in case of multiple published versions.
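The matching rules above can be expressed as a short routine. The sketch below is illustrative only: the review identified duplicates manually in Zotero, and the record fields (`title`, `authors`, `doi`, `year`, `peer_reviewed`) as well as the similarity thresholds are hypothetical choices for the example.

```python
import re
import unicodedata
from difflib import SequenceMatcher


def normalize(text):
    """Lowercase, strip accents and punctuation, and collapse whitespace."""
    text = unicodedata.normalize("NFKD", text)
    text = "".join(ch for ch in text if not unicodedata.combining(ch))
    text = re.sub(r"[^a-z0-9 ]", " ", text.lower())
    return " ".join(text.split())


def author_overlap(authors_a, authors_b):
    """Share of normalized author names that two records have in common."""
    a = {normalize(name) for name in authors_a}
    b = {normalize(name) for name in authors_b}
    if not a or not b:
        return 0.0
    return len(a & b) / max(len(a), len(b))


def is_duplicate(rec_a, rec_b, title_threshold=0.9, author_threshold=0.8):
    """Apply the DOI rule first, then fall back to title and author similarity."""
    doi_a, doi_b = rec_a.get("doi"), rec_b.get("doi")
    if doi_a and doi_b and doi_a.lower() == doi_b.lower():
        return True  # a shared DOI is treated as a definitive match
    title_sim = SequenceMatcher(None, normalize(rec_a["title"]),
                                normalize(rec_b["title"])).ratio()
    return (title_sim >= title_threshold
            and author_overlap(rec_a["authors"], rec_b["authors"]) >= author_threshold)


def preferred_version(group):
    """Keep the peer-reviewed, most recent version of a duplicated study."""
    return max(group, key=lambda r: (r.get("peer_reviewed", False), r.get("year", 0)))


def deduplicate(records):
    """Group records that match an existing group's first record, then keep one per group."""
    groups = []
    for record in records:
        for group in groups:
            if is_duplicate(record, group[0]):
                group.append(record)
                break
        else:
            groups.append([record])
    return [preferred_version(group) for group in groups]
```

Ambiguous pairs that fall just below the thresholds would still need the manual content review described above; the thresholds only narrow down which pairs a reviewer has to inspect.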

2.3. Sources of Information

ScienceDirect, Scopus, and IEEE Xplore were selected as sources of scientific data because of the quality, prestige, and importance of the high-impact articles they publish, as shown in Figure 1.

Figure 1.

Data source origins of the indexed articles.

2.4. Search Strategy

The keywords and search strings used to retrieve articles from each source are shown in Table 3.

Table 3.

Strategy for queries in different sources of information.
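Table 3 lists the actual queries. As a minimal sketch of how such search strings are commonly assembled, the function below joins synonyms within a concept group with OR and joins the groups with AND. The keyword groups in the tests are hypothetical and are not the strings used in this review; each database also adds its own field codes and syntax on top of this Boolean core.

```python
def build_query(concept_groups):
    """OR the synonyms inside each concept group, then AND the groups together."""
    clauses = []
    for terms in concept_groups:
        # Quote multi-word terms so that databases treat them as exact phrases.
        quoted = [f'"{term}"' if " " in term else term for term in terms]
        clauses.append("(" + " OR ".join(quoted) + ")")
    return " AND ".join(clauses)
```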

2.5. Study Selection Process

The following describes the selection process.

Identification. In this stage, the sources of information to be searched are identified.

Filtering. In this stage, the first filter was applied, for example, to remove duplicate articles and to screen titles and abstracts for relevance to the research topic.

Eligibility. Here, the exclusion and inclusion of articles were carried out according to the criteria considered.

Inclusion. In this final stage, the articles selected through the PRISMA process and the SNOWBALL method were included.

The search and selection process shown in Figure 2 proceeded as follows. In the identification stage, the database searches returned 700 articles from ScienceDirect, 720 from Scopus, and 40 from IEEE Xplore, and the SNOWBALL method yielded 120 articles. Removing duplicates eliminated 20 articles from the ScienceDirect results, 30 from the Scopus results, and 40 from the IEEE Xplore results. In the filtering stage, 400 of the total 1400 articles were eliminated because of unrelated content, language other than English, or inability to download the full text. In the eligibility stage, 980 of the 1000 remaining articles from the ScienceDirect, Scopus, and IEEE Xplore databases were eliminated, and of the 120 articles obtained with the SNOWBALL method, 120 were eliminated, both according to the eligibility criteria shown in Table 2. Finally, in the inclusion stage, 20 articles were selected from the ScienceDirect, Scopus, and IEEE Xplore databases and 4 through the SNOWBALL method, for a total of 24 articles.

Figure 2.

The process of searching and selecting articles from information sources according to PRISMA.
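The eligibility stage applies the criteria of Table 2 article by article. As a minimal sketch, assuming each article has already been annotated by a reviewer with hypothetical boolean fields (for example `reports_metrics` for IC2) plus `year` and `language`, the routine below records the first criterion each article fails, so that exclusions can be tallied per reason for the PRISMA flow diagram. Duplicates (EC5) are assumed to have been removed beforehand.

```python
from collections import Counter

# Hypothetical reviewer annotations: each criterion is a predicate on a record.
EXCLUSION = {
    "EC1": lambda r: not r["ai_related"],
    "EC2": lambda r: not 2020 <= r["year"] <= 2024,
    "EC3": lambda r: not r["multimodal_dialogue"],
    "EC4": lambda r: r["language"] != "en",
}
INCLUSION = {
    "IC1": lambda r: r["dialogue_and_emotion"],
    "IC2": lambda r: r["reports_metrics"],
    "IC3": lambda r: r["data_source_documented"],
    "IC4": lambda r: r["architecture_described"],
}


def first_failed_criterion(record):
    """Return the first criterion that rules the record out, or None if it is eligible."""
    for code, excluded in EXCLUSION.items():
        if excluded(record):
            return code
    for code, satisfied in INCLUSION.items():
        if not satisfied(record):
            return code
    return None


def screen(records):
    """Split records into eligible ones and a tally of exclusions per criterion."""
    eligible, reasons = [], Counter()
    for record in records:
        code = first_failed_criterion(record)
        if code is None:
            eligible.append(record)
        else:
            reasons[code] += 1
    return eligible, reasons
```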

3. Results

This section presents the articles selected through the PRISMA process; 13 scientific articles are analyzed in depth to answer the PICO research questions.

3.1. Selection of Studies

The results of the article selection search can be seen in Table 4.

Table 4.

List of articles found in different information sources following application of eligibility criteria.

3.2. Designation of Studies

This section reports the number of scientific articles found for each PICO research question, as shown in Table 5.

Table 5.

List of articles found corresponding to the PICO questions.

3.3. What Input Data and Categories Are Present in Multimodal Dialogue Systems?

As shown in Table 6, the input data in the selected articles fall into three categories: physiological signals, which arise only from biological processes; physical signals, which can be perceived by the senses; and physical–physiological signals, which combine the two. The most representative inputs are electroencephalogram (EEG) signals in the physiological category and image, sound, and text in the physical category.

Table 6.

List of the different characteristics of the data entry type in multimodal dialogue systems.
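The three input categories can be expressed as a simple lookup. The sketch below is illustrative: the modality names are the physical and physiological inputs listed in the Discussion of this review, and any other signal (for example, respiration or body temperature) would have to be assigned to one of the sets before use.

```python
# Modalities named in this review; other signals must be mapped explicitly.
PHYSICAL = {"audio", "image", "video", "text"}
PHYSIOLOGICAL = {"EEG", "EMG", "GSR", "ECG"}


def input_category(modalities):
    """Classify a model's input modalities into the three categories of Table 6."""
    mods = set(modalities)
    unknown = mods - PHYSICAL - PHYSIOLOGICAL
    if unknown:
        raise ValueError(f"unmapped modalities: {sorted(unknown)}")
    has_physical = bool(mods & PHYSICAL)
    has_physiological = bool(mods & PHYSIOLOGICAL)
    if has_physical and has_physiological:
        return "physical-physiological"
    if has_physiological:
        return "physiological"
    if has_physical:
        return "physical"
    raise ValueError("no input modalities given")
```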

3.4. What Are the Models and Architectures in Emotion Recognition Through Signals?

The models proposed for recognizing emotions from physiological signals share a common characteristic: they process electroencephalographic data with neural network models such as convolutional neural networks (CNNs) and Long Short-Term Memory (LSTM) networks, or with hybrid models combined with ensemble algorithms such as AdaBoost, as shown in Table 7.

Table 7.

List of the different proposed models found in the research.

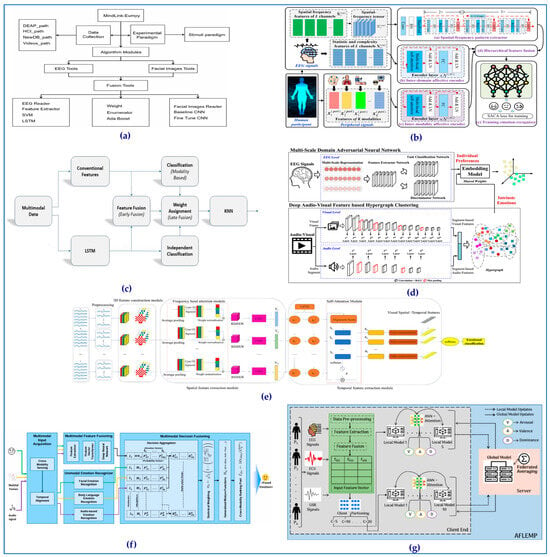

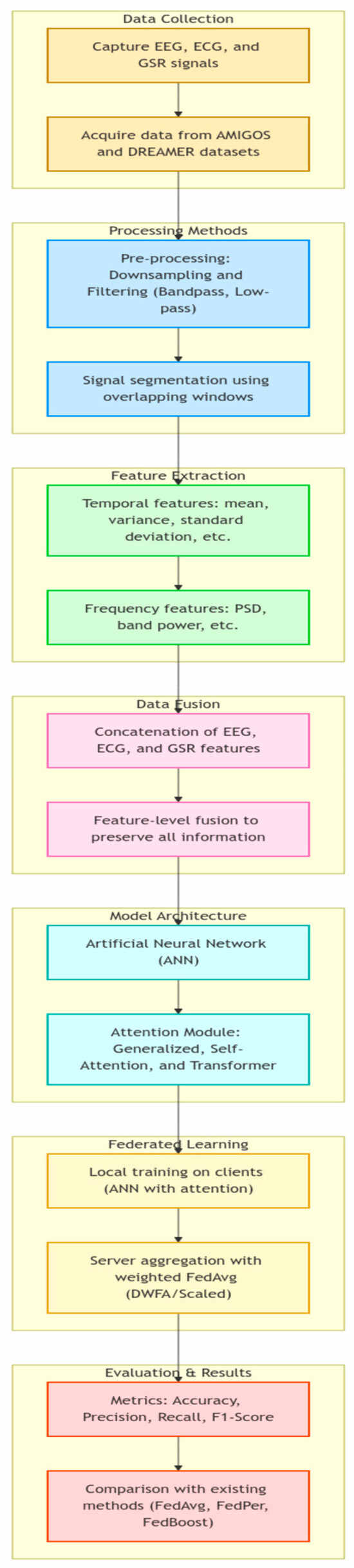
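Among the models summarized in Table 7 are hybrids that pair neural networks with ensemble algorithms such as AdaBoost. As an illustration only, the sketch below implements discrete AdaBoost with decision stumps on a single scalar feature; the feature (for instance, a hypothetical EEG band-power value produced by an upstream network) and the number of rounds are assumptions, and this is not the configuration of any reviewed model.

```python
import math


def stump_predict(x, threshold, polarity):
    """A decision stump: predict +polarity at or above the threshold, -polarity below it."""
    return polarity if x >= threshold else -polarity


def fit_stump(xs, ys, weights):
    """Pick the threshold and polarity with the lowest weighted error on a 1-D feature."""
    best = None
    for threshold in sorted(set(xs)):
        for polarity in (1, -1):
            err = sum(w for x, y, w in zip(xs, ys, weights)
                      if stump_predict(x, threshold, polarity) != y)
            if best is None or err < best[0]:
                best = (err, threshold, polarity)
    return best


def adaboost(xs, ys, rounds=10):
    """Discrete AdaBoost with decision stumps; labels must be -1 or +1."""
    weights = [1.0 / len(xs)] * len(xs)
    ensemble = []
    for _ in range(rounds):
        err, threshold, polarity = fit_stump(xs, ys, weights)
        if err >= 0.5:
            break  # no stump beats chance on the current weighting
        err = max(err, 1e-10)  # keep alpha finite when a stump is perfect
        alpha = 0.5 * math.log((1 - err) / err)
        ensemble.append((alpha, threshold, polarity))
        # Increase the weight of misclassified samples, then renormalize.
        weights = [w * math.exp(-alpha * y * stump_predict(x, threshold, polarity))
                   for x, y, w in zip(xs, ys, weights)]
        total = sum(weights)
        weights = [w / total for w in weights]
    return ensemble


def predict(ensemble, x):
    """Sign of the alpha-weighted vote of all stumps."""
    score = sum(alpha * stump_predict(x, t, p) for alpha, t, p in ensemble)
    return 1 if score >= 0 else -1
```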

The architecture of the proposed models is shown in Figure 3, which traces the process flow from data input through model training to the classification or prediction results.

Figure 3.

Visual elements of the components of the architectures of the emotion recognition models analyzed in the review. (a) Mindlink-Eumpy architecture proposed by Li et al. [2]; (b) RHPRnet architecture proposed by Tang et al. [47]; (c) Emo Fu-Sense architecture proposed by Umair et al. [41]; (d) EEG-AVE architecture proposed by Liang et al. [43]; (e) 3FACRNN architecture proposed by Du et al. [48]; (f) H-MMER architecture proposed by Razzaq et al. [42]; (g) AFLEMP architecture proposed by Gahlan and Sethia [34].

More details of the architectures of the models proposed in the research are shown in Figure 4, Figure 5, Figure 6 and Figure 7.

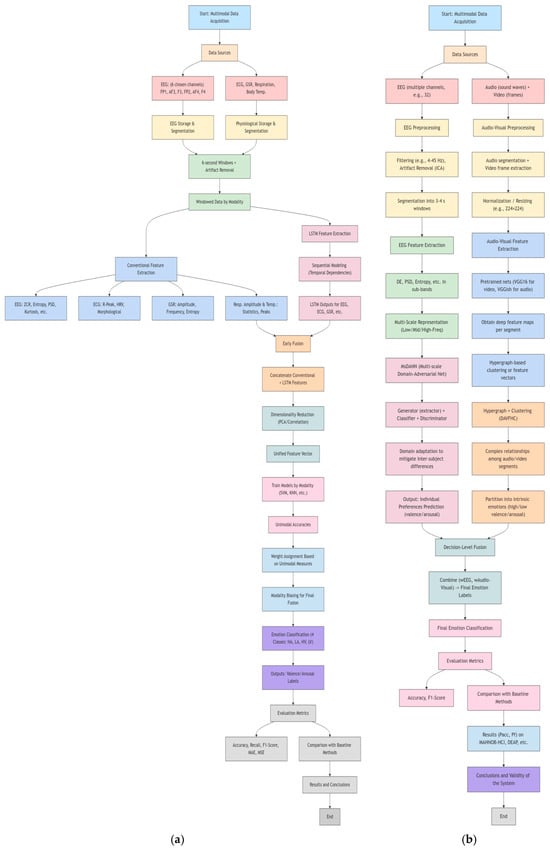

Figure 4.

Architectures of the models analyzed in the review: (a) architecture proposed by Li et al. [2]; (b) architecture proposed by Tang et al. [47].

Figure 5.

Architectures of the models analyzed in the review: (a) architecture proposed by Umair et al. [41]; (b) architecture proposed by Liang et al. [43].

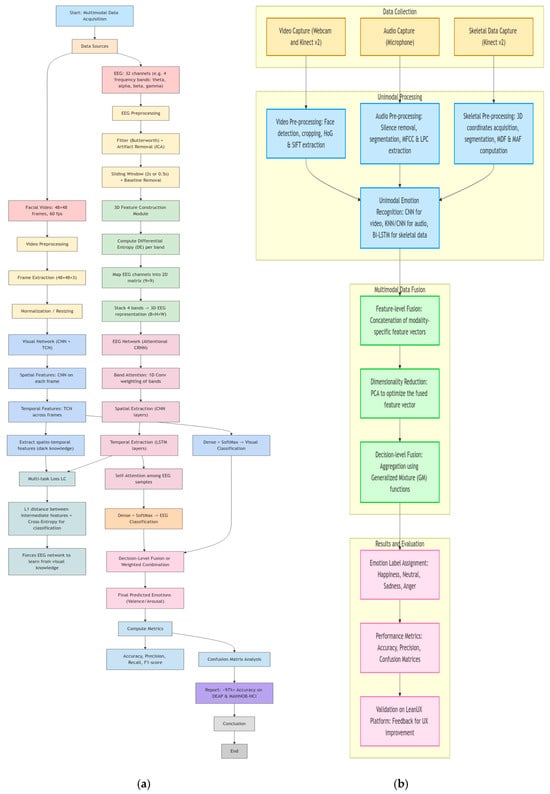

Figure 6.

Architectures of the models analyzed in the review: (a) architecture proposed by Du et al. [48]; (b) architecture proposed by Razzaq et al. [42].

Figure 7.

Architectures of the models analyzed in the review: architecture proposed by Gahlan and Sethia [34].

The methods used to combine different types of input data in the emotion recognition models found in the systematic review are analyzed in Table 8.

Table 8.

List of data fusion methods in emotion recognition models.


| Author | Data Combination Methods in the Proposed Models |
|---|---|
| Li et al. [2] | Weighting enumerator: this approach assigns a numerical value, called a “weight”, to each data element to indicate its importance within an information set. This technique achieved the best accuracy in the valence and arousal dimensions in experiments using the DEAP and MAHNOB-HCI databases. It effectively integrates the outputs of an SVM applied to EEG data and a CNN used for facial expression analysis. |
| Tang et al. [47] | Modality-level fusion: the paper describes a modality-level fusion that processes peripheral signals and EEG independently before integration, thus preserving the distinctive features of each modality. Global fusion: after modality-level integration, a global fusion phase maps the combined features into a lower-dimensional space using fully connected layers, merging information from several modalities into a single vector for emotion classification. |
| Umair et al. [41] | Early fusion: features from modalities such as ECG, GSR, EEG, respiration amplitude, and body temperature are integrated into a unified feature vector, which improves the capture of complementary information. Late fusion (decision-level fusion, DLF): the system combines the outputs of several classifiers, so that each modality is classified individually by classifiers such as Support Vector Machines, Logistic Regression, KNN, and Naïve Bayes. Combining the outputs of these classifiers according to specific rules poses design challenges. |
| Liang et al. [43] | Fusion techniques: the paper examines two main fusion methodologies, EEG–video fusion and EEG–visual–audio fusion. EEG–video fusion integrates EEG features with video data, while EEG–visual–audio fusion fuses EEG, visual, and audio data independently. Weight assignment: both fusion approaches assign weights to the modalities. In EEG–video fusion, the weights reflect the importance of EEG and video data in emotion detection; in EEG–visual–audio fusion, the weights for the EEG, visual, and audio modalities are distributed evenly. |
| Du et al. [48] | Multimodal fusion: 3FACRNN integrates EEG and visual data through a multi-task loss function rather than simple feature concatenation, exploiting the strengths of each modality. Feature construction: a 3D feature construction module decomposes EEG signals into frequency bands, capturing the spatial, temporal, and band-specific information essential for emotion recognition. Attention mechanism: the model uses attention to prioritize EEG frequency bands, focusing the network on the most informative features for accurate emotion prediction. Cascade networks: the visual network is a cascaded convolutional neural network (CNN-TCN) that processes visual data before it is integrated with EEG data. |
| Razzaq et al. [42] | Multimodal feature fusion: the framework concatenates feature vectors from modalities such as facial expressions, body language, and sound cues into a unified global representation of the emotional state. Decision-level fusion: the paper highlights decision-level fusion, which consolidates the outputs of classifiers operating on different modalities, allowing them to work independently and improving the flexibility and robustness of classification. Generalized mixture (GM) functions: the framework uses GM functions to fuse features and decisions, dynamically weighting the contribution of each modality to address data sparsity and the variability of emotional expressions, thus improving classification accuracy. Implementation: the data are preprocessed to extract relevant features from each modality, which are then fused into equal-sized vectors to ensure consistent and efficient integration of multimodal decisions. |
| Gahlan and Sethia [34] | Feature-level fusion (FLF): the framework extracts features from each physiological signal (EEG, ECG, and GSR) separately and then concatenates them, preserving the relevant information of each signal modality. |
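Several entries in Table 8 contrast feature-level (early) fusion with decision-level (late) fusion, and Li et al. [2] select fusion weights by enumeration. The sketch below illustrates these ideas under simplifying assumptions: feature vectors are plain Python lists, each classifier outputs class probabilities, the weight grid step of 0.1 is arbitrary, and `self_weighted_mixture` is only one simple example of an input-dependent (generalized mixture) aggregation, not necessarily the GM function used by Razzaq et al. [42].

```python
def early_fusion(*feature_vectors):
    """Feature-level fusion: concatenate per-modality feature vectors into one vector."""
    fused = []
    for vector in feature_vectors:
        fused.extend(vector)
    return fused


def late_fusion(probs_a, probs_b, w):
    """Decision-level fusion: weighted average of two classifiers' class probabilities."""
    return [w * a + (1 - w) * b for a, b in zip(probs_a, probs_b)]


def argmax(values):
    """Index of the largest value (the first one on ties)."""
    return max(range(len(values)), key=values.__getitem__)


def enumerate_weight(val_probs_a, val_probs_b, labels, step=0.1):
    """Try w = 0, step, 2*step, ..., 1 and keep the weight with the best validation accuracy."""
    best_w, best_acc = 0.0, -1.0
    for i in range(round(1 / step) + 1):
        w = i * step
        correct = sum(argmax(late_fusion(pa, pb, w)) == y
                      for pa, pb, y in zip(val_probs_a, val_probs_b, labels))
        acc = correct / len(labels)
        if acc > best_acc:  # strict '>' keeps the smallest w among equally good ones
            best_w, best_acc = w, acc
    return best_w, best_acc


def self_weighted_mixture(scores):
    """A GM-style aggregation with input-dependent weights w_i = x_i / sum(x)."""
    total = sum(scores)
    if total == 0:
        return 0.0
    return sum(x * x for x in scores) / total
```

In practice, the weight would be chosen on a validation split (for example, EEG-classifier probabilities as `probs_a` and facial-expression probabilities as `probs_b`) and then fixed for testing; the self-weighted mixture pulls the result toward the more confident score, whereas a plain average treats all inputs equally.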

3.5. What Are the Models and Architectures in Traditional Multimodal Dialogue Systems?

In the multimodal dialogue system models, the data inputs belong mainly to the physical category (data perceived by the senses), and the proposed models are based on Transformers and variants of convolutional neural networks (CNNs).

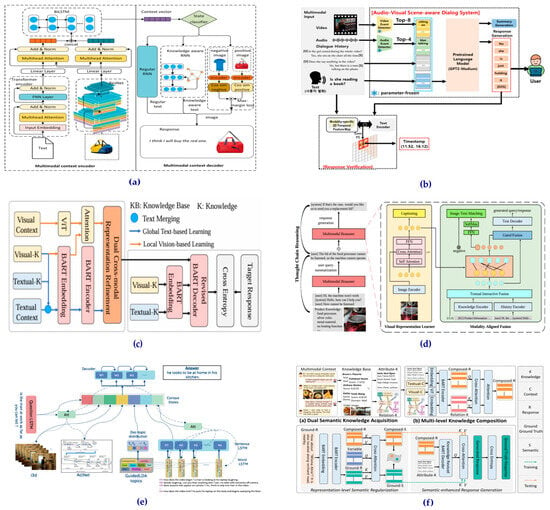

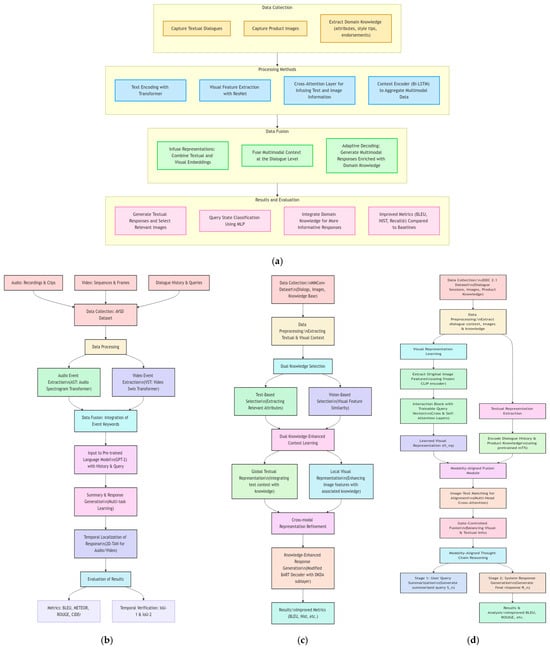

The architectures of the proposed models for the multimodal dialogue system are shown in Figure 8, Figure 9 and Figure 10.

Figure 8.

The architecture of the proposed models for multimodal dialogue systems. Visual elements of the components of the architectures of the multimodal dialogue system models analyzed in the review. (a) TMID architecture proposed by Liu et al. [49]; (b) dialogue system architecture with audiovisual recognition proposed by Heo et al. [50]; (c) DKMD architecture proposed by Chen et al. [35]; (d) MatCR architecture proposed by Liu et al. [51]; (e) ACLnet architecture proposed by Kumar et al. [10]; (f) architecture of a multimodal dialogue system composed of dual semantic knowledge proposed by Chen et al. [52].

Figure 9.

Architectures of the models analyzed in the review: (a) Architecture proposed by Liu et al. [49]. (b) Architecture proposed by Heo et al. [50]. (c) Architecture proposed by Chen et al. [35]. (d) Architecture proposed by Liu et al. [51].

Figure 10.

Architectures of the models analyzed in the review: (a) Architecture proposed by Kumar et al. [10]. (b) Architecture proposed by Chen et al. [52].

The proposed multimodal dialogue system models and the methods they use to combine different types of input data are listed in Table 9 and Table 10, respectively.

3.6. What Are the Metrics and Their Value in Emotion Recognition Models and Multimodal Dialogue Systems?

The metrics extracted from the selected and carefully analyzed articles reflect the effectiveness of the emotion recognition models, shown in Table 11, and of the multimodal dialogue system models, shown in Table 12.

Table 9.

List of proposed models in multimodal dialogue system architecture.


| Author | Proposed Models |
|---|---|
| Liu et al. [49] | Transformer-based multimodal infusion dialogue (TMID) system |
| Heo et al. [50] | Dialogue system with audiovisual scene recognition |
| Chen et al. [35] | DKMD, which stands for Dual Knowledge Generative Pretrained Language Model, is designed for task-oriented multimodal dialogue systems |
| Liu et al. [51] | Modality-aligned chain of thought reasoning (MatCR) is designed for the generation of task-oriented multimodal dialogues |
| Kumar et al. [10] | Uses an end-to-end audio classification subnet, ACLnet |
| Chen et al. [52] | MDS-S2, which stands for Dual Semantic Knowledge Composed Multimodal Dialog System, uses the pretrained generative language model BART as its backbone |

Table 10.

List of data fusion methods in multimodal dialogue system models.


| Author | Data Combination Methods in the Proposed Models |
|---|---|
| Liu et al. [49] | |
| Heo et al. [50] | The proposed system uses an advanced data fusion technique that combines audio, visual, and textual modalities. The following are the key aspects of the data fusion method: |
| Chen et al. [35] | The data fusion method integrates textual and visual information to improve the performance of the dialogue system. Key aspects include the following: |
| Liu et al. [51] | The MatCR framework’s data fusion method integrates multimodal information to improve the understanding of user intent and response optimization. |
| Kumar et al. [10] | Data fusion methods are vital for multimodal dialogue systems, particularly in audiovisual scene-aware dialogue (AVSD). The key points of data fusion methods are described below: |
| Chen et al. [52] | The data fusion method integrates several types of knowledge to improve the performance of the dialogue system. These are the key points of this method: |

Table 11.

Metrics recorded for the proposed multimodal emotion recognition models.


| Proposed Model | Precision, Valence (%) | Precision, Arousal (%) | Recall, Valence (%) | Recall, Arousal (%) | F1-Score, Valence (%) | F1-Score, Arousal (%) | Accuracy, Valence (%) | Accuracy, Arousal (%) |
|---|---|---|---|---|---|---|---|---|
| [2] | - | - | 68.18 | 69.28 | - | - | 78.56 | 77.22 |
| [47] | - | - | - | - | - | - | 74.17 | 74.34 |
| [41] | 97 | 85 | 92 | 91 | 95 | 88 | - | 92.62 |
| [43] | - | - | - | - | 90.45 | 86.55 | 90.21 | 85.59 |
| [48] | 99.13 | 98.89 | 97.26 | 96.42 | 97.33 | 97.38 | 98.37 | 97.55 |
| [42] | - | - | - | - | - | - | 98.19 | 98.19 |
| [34] | - | - | - | - | - | - | 88.3 | 88.3 |
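The metrics in Table 11 follow the standard definitions for a binary classification task, such as high versus low valence. The sketch below computes them from true and predicted labels; it assumes a single binary split, whereas the reviewed studies may average over classes, subjects, or cross-validation folds, so it is not meant to reproduce their exact figures.

```python
def binary_metrics(y_true, y_pred, positive=1):
    """Precision, recall, F1, and accuracy for a binary (e.g., high/low valence) task."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    tn = len(pairs) - tp - fp - fn
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    accuracy = (tp + tn) / len(pairs)
    return {"precision": precision, "recall": recall, "f1": f1, "accuracy": accuracy}
```

Because F1 is a harmonic mean, it stays close to the lower of precision and recall, which is why a model with high precision but modest recall (or vice versa) does not obtain a high F1-score.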

Table 12.

Metrics recorded for the proposed multimodal dialogue system models.


| Proposed Model | BLEU1 | BLEU2 | BLEU3 | BLEU4 | METEOR | ROUGE-L | CIDEr | NIST |
|---|---|---|---|---|---|---|---|---|
| [49] | 64.81 | 58.09 | 54.24 | 51.59 | - | - | - | 8.8317 |
| [50] (K = 4) | 0.6455 | 0.4889 | 0.3796 | 0.2986 | 0.2253 | 0.503 | 0.7868 | - |
| [35] | 39.59 | 31.95 | 27.26 | 23.72 | - | - | - | 4.0004 |
| [51] | 29.03 | 24.69 | 22.43 | - | - | 27.79 | - | - |
| [10] | 0.632 | 0.499 | 0.402 | 0.329 | 0.223 | 0.488 | 0.762 | - |
| [52] | 41.4 | 32.91 | 27.74 | 23.89 | - | - | - | 4.2142 |

The BLEU, METEOR, and ROUGE-L values for [50] and [10] are reported as fractions (0–1 scale), whereas those for the other models are reported as percentages.
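BLEU-n, the main metric in Table 12, combines clipped n-gram precisions (n = 1 to N) through a geometric mean and multiplies the result by a brevity penalty that punishes responses shorter than the reference. The sketch below computes sentence-level BLEU with a single reference and no smoothing, assuming whitespace tokenization; the reviewed studies most likely used corpus-level BLEU from standard evaluation toolkits, so its numbers are not directly comparable with the table. BLEU lies in [0, 1] and is often reported multiplied by 100.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    """Multiset of the n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def bleu(candidate, reference, max_n=4):
    """Sentence-level BLEU-max_n with one reference and no smoothing."""
    log_precisions = []
    for n in range(1, max_n + 1):
        cand, ref = ngrams(candidate, n), ngrams(reference, n)
        # Clip each candidate n-gram count by its count in the reference.
        overlap = sum(min(count, ref[gram]) for gram, count in cand.items())
        if overlap == 0:
            return 0.0  # unsmoothed BLEU is zero if any n-gram order has no match
        log_precisions.append(math.log(overlap / sum(cand.values())))
    c, r = len(candidate), len(reference)
    brevity_penalty = 1.0 if c > r else math.exp(1 - r / c)
    return brevity_penalty * math.exp(sum(log_precisions) / max_n)
```

For example, the candidate "the user looks happy" matches every n-gram of the reference "the user looks happy today" but is one token shorter, so its BLEU-4 equals the brevity penalty exp(1 - 5/4), about 0.78.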

4. Discussion

The PRISMA methodology was used to select the scientific research, and the articles found could be divided into two categories: emotion, with seven articles, and dialogue, with six articles. DEAP was the dataset most used for model training in the emotion category.

The type of input data in the scientific research is characterized as physical (audio, image, video, and text), physiological (EEG, EMG, GSR, and ECG), and mixed (a combination of physical and physiological).

This research set out to explore the different models proposed for affective multimodal dialogue systems, but little information was found. The closest studies found were those on artificial intelligence models for emotion recognition and on dialogue systems without emotion recognition, as shown in Table 4. Among the emotion recognition models, the best-performing ones use electroencephalogram (EEG) signals as input data, and the most prominent are those of [42,48], as shown in Table 11, which reach the highest accuracy values for arousal, at 98.19% and 97.55%, respectively, using variants of Transformer and CNN models as base algorithms, as shown in Table 7. In their proposals for traditional multimodal dialogue systems, researchers used pretrained large language models (LLMs) based on Transformers, with the models of [49,52] reaching BLEU4 values of 51.59 and 23.89, respectively.

The authors of the reviewed studies also noted limitations of their models, such as the limited data sources available for training. They also argued that individual (subject-specific) data should be incorporated, because emotional responses are traits unique to each person.

These studies emphasize technical performance metrics and lack direct assessments of energy efficiency and user satisfaction. The exception is Razzaq et al. [42], whose hybrid multimodal emotion recognition (H-MMER) framework associated high-accuracy emotion classification with a satisfactory user experience.

The researchers’ recommendations for future work include the following.

- Research integrating physiological signals with external data such as body gestures and facial expressions [47].
- Improved techniques for higher accuracy and the fusion of new multimodal data [2,10,41].
- Further research on EEG signals for emotion detection [43].
- Research on real-time emotion recognition [48].
- Integration of multimodal information with external knowledge for more robust and coherent dialogue [35,50,51].

Critical Analysis

The scientific papers reviewed highlight that many models are based on a limited number of datasets, such as DEAP, MAHNOB-HCI, SEED-IV, and others. This limited scope may reduce the generalizability of the conclusions to various real-world scenarios. Therefore, it is recommended to use other datasets that represent a variety of cultural, environmental, and demographic conditions, such as the following:

- Robustness and Improved Generalization

The analysis of datasets collected in natural ("in-the-wild") environments, such as AFEW, SFEW, MELD, and Aff-Wild2, has shown that emotion recognition models achieve greater generalization capacity and robustness under variable lighting, viewpoint, and sound quality [53]. This property is particularly valuable for real-world applications such as virtual assistants and human–machine interaction systems, where environmental conditions are highly dynamic.

- Advanced Understanding of Complex Emotions

The incorporation of datasets such as C-EXPR-DB and MELD, which capture compound emotions in conversational contexts, has enabled models with a more sophisticated and nuanced understanding of emotional states, significantly improving performance in sentiment and affect analysis [54]. This capability matters for systems that demand high precision in human interactions, particularly in sensitive areas such as mental health and AI-assisted education, where accurately interpreting emotional nuances is essential.

- Emotion Detection in High-Stress Scenarios

Emotion recognition under acute stress has progressed significantly thanks to the CEMO dataset, which captures emotional responses in crisis situations [55]. This progress is particularly valuable for developing intelligent systems in critical environments such as emergency call centers, hospital monitoring units, and customer service departments, where accurate emotional interpretation can be crucial for immediate decision making.

- Cultural Inclusion and Linguistic Diversity in Emotion Recognition

Integrating datasets in other languages, such as the Chinese-language CH-MEAD, addresses a significant research gap by capturing culturally specific patterns of emotional expression [56]. This greater diversity of data is driving the development of AI systems that can recognize and process emotional expressions across cultural and linguistic contexts, moving toward truly inclusive and globally applicable models.

- Emotion Recognition in Real Driving Contexts

The incorporation of the Real-World Driving Emotion Dataset, which captures drivers' emotional states in uncontrolled environments, has proven fundamental for developing emotion detection systems for authentic vehicular scenarios [57]. This is particularly relevant for driver assistance technologies that adapt to the driver's emotional state, enabling more effective fatigue monitoring and contextual assistance and thus contributing to a safer and more personalized driving experience.

Collaboration with industry and research institutions is critical for creating datasets that are representative and ethically sound. Possible approaches include public–private collaboration, data acquisition in authentic settings, the inclusion of cultural and linguistic diversity, ethical data collection, and synthetic data generation. Adopting these approaches is likely to improve the accuracy and relevance of AI frameworks for emotion recognition, making them more inclusive and effective in diverse contexts.

The reviewed studies have also analyzed the computational and algorithmic obstacles to real-time emotion recognition with high accuracy and efficiency. Deep neural network (DNN) models used for emotion recognition are often complex and require substantial processing power, which makes them difficult to deploy on resource-limited devices. Nevertheless, in an experimental study, Razzaq et al. [42] evaluated the ability of their framework to model four emotional states (happiness, neutrality, sadness, and anger) and found that most of them could be modeled with high accuracy using generalized mixture (GM) functions. The framework reached an average accuracy of 98.19%, a significant gain over traditional approaches, and the authors conclude that it identifies emotional states with high accuracy and increases the robustness of the emotion classification needed for user experience (UX) measurement. Power consumption is a further complication: computationally intensive models consume considerable energy, which is problematic for mobile devices and laptops with limited battery capacity.
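The generalized mixture (GM) functions named in the title of [42] are aggregation operators whose weights depend on the input values themselves. The following minimal Python sketch shows decision-level fusion with the simplest member of this family, a mixture function M(x) = Σ w(x_i)·x_i / Σ w(x_j) with the weighting function w(x) = x, applied class by class to per-modality probabilities for the four emotional states above. The weighting function, the modality names, and the probability values are illustrative assumptions, not the configuration used by Razzaq et al.

```python
EMOTIONS = ["happiness", "neutrality", "sadness", "anger"]


def mixture(values, weight=lambda v: v):
    """Mixture function: a weighted mean whose weights depend on the inputs.

    With the default weight w(v) = v, larger (more confident) scores get
    more influence than in a plain arithmetic mean.
    """
    weights = [weight(v) for v in values]
    total = sum(weights)
    if total == 0:
        return sum(values) / len(values)  # fall back to the arithmetic mean
    return sum(w * v for w, v in zip(weights, values)) / total


def fuse(modality_probs):
    """Fuse per-modality class probabilities class by class, then renormalize."""
    fused = {
        emotion: mixture([probs[emotion] for probs in modality_probs.values()])
        for emotion in EMOTIONS
    }
    norm = sum(fused.values())
    return {emotion: score / norm for emotion, score in fused.items()}


# Hypothetical classifier outputs for one sample (not taken from [42]).
outputs = {
    "face": {"happiness": 0.70, "neutrality": 0.20, "sadness": 0.05, "anger": 0.05},
    "speech": {"happiness": 0.40, "neutrality": 0.40, "sadness": 0.10, "anger": 0.10},
    "text": {"happiness": 0.25, "neutrality": 0.50, "sadness": 0.15, "anger": 0.10},
}
fused = fuse(outputs)
print(max(fused, key=fused.get))  # prints happiness
```

Because the weights grow with the scores, a modality that is confident about a class pulls the fused score toward its own value more strongly than a plain mean would (for happiness, about 0.53 instead of 0.45 before renormalization); GM functions generalize this idea by letting each weight depend on the whole input vector.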

It is also recommended to use techniques to optimize performance, such as the following:

- Lightweight models and efficient architectures: resource-efficient architectures, such as MobileNet and EfficientNet, reduce parameters and operations while maintaining accuracy.
- Model compression: pruning and quantization techniques reduce model size and improve inference speed, lowering the computational load and energy usage.
- Knowledge distillation: a smaller (student) model is trained to emulate a larger (teacher) model, reducing size and complexity with minimal performance loss.
- On-device processing (on-device inference): running models on mobile or edge devices improves privacy and reduces latency through hardware accelerators and optimizations.
- Multimodal data flow optimization: simplified preprocessing and optimized data fusion can significantly reduce processing times.
- Optimized federated learning: in distributed systems, the size of model updates and the frequency of communication should be minimized through compression techniques and sporadic updates, reducing energy and bandwidth consumption.
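As a hedged illustration of the model compression item, the sketch below applies two common compression steps to a plain list of weights: magnitude pruning (zeroing the weights with the smallest absolute values) and symmetric post-training 8-bit quantization (storing each weight as an integer in [-127, 127] plus one floating-point scale per tensor). The function names, the 50% sparsity level, and the per-tensor scale are illustrative assumptions; deep learning toolkits implement these steps with their own APIs and calibration procedures.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the given fraction of weights with the smallest magnitude."""
    k = int(len(weights) * sparsity)  # number of weights to remove
    if k == 0:
        return list(weights)
    drop = set(sorted(range(len(weights)), key=lambda i: abs(weights[i]))[:k])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]


def quantize_int8(weights):
    """Symmetric per-tensor quantization to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate floating-point weights from the int8 codes."""
    return [v * scale for v in q]


weights = [0.82, -0.03, 0.41, -0.67, 0.005, 0.12, -0.25, 0.9]
pruned = magnitude_prune(weights, sparsity=0.5)
q, scale = quantize_int8(pruned)
restored = dequantize(q, scale)
# Rounding keeps each restored weight within half a quantization step.
max_error = max(abs(a - b) for a, b in zip(pruned, restored))
```

Storing 8-bit codes instead of 32-bit floats cuts weight storage by roughly a factor of four; in practice, pruning is usually followed by fine-tuning to recover accuracy, a step this sketch omits.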

Regarding the evolution of emotion recognition systems from traditional approaches to artificial intelligence (AI), summarized in Table 13, the transition from conventional multimodal systems to AI-based models can be seen as a turning point in the field, with substantial advances in accuracy, adaptability, and generalizability across diverse application contexts.

Table 13.

List of feature differences between traditional and AI-based systems.

AI-powered multimodal models have demonstrated clear superiority over conventional systems in crucial aspects such as accuracy, adaptability, and scalability. However, these advances come with significant challenges, including the need for massive datasets, considerable computational resources, and the inherent risk of bias in the collection and processing of emotional data.

Based on the articles reviewed, several real-world applications using multimodal information integration can be identified. Examples include the following:

Health and mental health:

Recognizing emotions through physiological signals enables continuous emotional monitoring in healthcare, allowing real-time treatment adjustment for stress, anxiety, or depression.

Accurate emotional measurement improves assessments of service quality and rehabilitation effectiveness, which in turn enhances the patient experience.

Education:

Intelligent tutoring systems using multimodal dialogue can adapt to students’ emotional states, modifying content delivery to improve motivation and attention.

Real-time feedback analysis using multimodal fusion supports pedagogical strategy adjustments based on student responses.

Customer service:

Multimodal virtual assistants and chatbots provide personalized responses by integrating various multimedia formats, enhancing user interactions on e-commerce platforms.

Identifying customer emotions and intentions enables empathetic and efficient solutions in financial and transportation services, increasing user satisfaction.

These examples demonstrate that advances in multimodal integration and emotional analytics enhance technical performance and significantly improve service quality and personalization in critical sectors such as healthcare, education, and customer service.

5. Conclusions

Models that integrate EEG, facial, physiological, and audiovisual data, such as Emo Fu-Sense, 3FACRNN, and H-MMER, achieved outstanding emotion recognition results, with accuracy ranging from 92.62% to 98.19%, significantly higher than that of other models. Among the multimodal dialogue models, TMID and the scene-aware model obtained BLEU4 values of 51.59% and 29%, respectively.

Frameworks such as H-MMER and AFLEMP emphasize the importance of hierarchical fusion, federated learning, and user-adaptive strategies to improve generalization and maintain privacy.

Using attention modules, self-attention, and hierarchical strategies improves the extraction and combination of emotional signal features.
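The attention-based feature combination mentioned above can be sketched as scaled dot-product attention, softmax(QK^T/√d)V, in which the features of one modality (queries) attend to those of another (keys and values); when queries, keys, and values come from the same sequence, it reduces to self-attention. This minimal pure-Python sketch omits the learned projection matrices and multiple heads of a real Transformer layer, and the toy feature vectors are assumptions for illustration only.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V.

    queries: n_q vectors of size d; keys: n_k vectors of size d;
    values: n_k vectors of equal size. Returns n_q fused vectors.
    """
    d = len(queries[0])
    outputs = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # Each output is a convex combination of the value vectors.
        outputs.append([sum(w * v[j] for w, v in zip(weights, values))
                        for j in range(len(values[0]))])
    return outputs


# Toy features (assumptions): 2 text tokens attend to 3 visual regions.
text = [[1.0, 0.0], [0.0, 1.0]]
visual = [[0.9, 0.1], [0.1, 0.9], [0.5, 0.5]]
cross = attention(text, visual, visual)  # cross-attention: text attends to image
self_att = attention(text, text, text)   # self-attention within the text
```

Here the first text token, whose feature vector is closest to the first visual region, places its largest weight on that region. Real Transformer layers first project the inputs with learned matrices and run several attention heads in parallel.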

Advances highlight the relevance of multimodal analysis for empathic and responsive systems in fields such as user experience and mental health.

Models such as TMID, DKMD, and MaTCR integrate textual, visual, and contextual information through Transformers and cross-attention mechanisms, generating more accurate and interpretable responses.

The experimental results indicate that the multimodal approach achieves greater accuracy under both subject-dependent and subject-independent conditions [2].
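Subject-dependent evaluation trains and tests on data from the same participants, whereas subject-independent evaluation tests on participants never seen during training. A common way to implement the latter is leave-one-subject-out (LOSO) cross-validation, sketched below with hypothetical sample records; the exact protocol used in [2] may differ.

```python
from collections import defaultdict


def leave_one_subject_out(samples):
    """Yield (held_out_subject, train, test) splits for LOSO cross-validation.

    samples: list of (subject_id, features, label) tuples. Each split tests on
    exactly one subject whose data never appear in the training set.
    """
    by_subject = defaultdict(list)
    for sample in samples:
        by_subject[sample[0]].append(sample)
    for held_out in sorted(by_subject):
        train = [s for s in samples if s[0] != held_out]
        yield held_out, train, by_subject[held_out]


# Hypothetical records: (subject_id, feature_vector, emotion_label).
data = [
    ("s01", [0.2, 0.7], "happy"), ("s01", [0.1, 0.9], "sad"),
    ("s02", [0.4, 0.5], "happy"), ("s02", [0.3, 0.8], "sad"),
    ("s03", [0.6, 0.2], "happy"),
]
splits = list(leave_one_subject_out(data))
```

A classifier would be trained on each training split and evaluated on the corresponding test split, with accuracy averaged over the held-out subjects. scikit-learn's `LeaveOneGroupOut` produces the same kind of split when the subject IDs are passed as groups.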

The reviewed papers conclude that emotions are essential in many aspects of human life and that emotion classification can lead to more empathetic and responsive systems. The advantages of multimodal emotion classification over unimodal approaches underline its potential to improve human–computer communication and interaction.

The future of multimodal emotion classification is promising, with advances expected in real-time analysis, cross-cultural recognition, and accuracy [41]. In addition, future work aims to explore semantic transitions between topics from different domains to further improve response generation [42].

Author Contributions

Conceptualization, L.B. and C.A.; methodology, L.B. and C.R.; software, P.H.; validation, P.H., C.A. and C.R.; formal analysis, L.B. and P.H.; investigation, L.B. and P.H.; writing—original draft preparation, L.B., C.A. and C.R.; writing—review and editing, L.B., C.R., P.H. and C.A.; visualization, L.B.; supervision, C.A. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Conflicts of Interest

The authors declare no conflict of interest.

References

- De Andrade, I.M.; Tumelero, C. Increasing customer service efficiency through artificial intelligence chatbot. Rege-Rev. Gest. 2022, 29, 238–251.

- Li, R.; Liang, Y.; Liu, X.; Wang, B.; Huang, W.; Cai, Z.; Ye, Y.; Qiu, L.; Pan, J. MindLink-Eumpy: An Open-Source Python Toolbox for Multimodal Emotion Recognition. Front. Hum. Neurosci. 2021, 15, 621493.

- Pinochet, L.H.C.; de Gois, F.S.; Pardim, V.I.; Onusic, L.M. Experimental study on the effect of adopting humanized and non-humanized chatbots on the factors measure the intensity of the user’s perceived trust in the Yellow September campaign. Technol. Forecast. Soc. Change 2024, 204, 123414.

- Karani, R.; Desai, S. IndEmoVis: A Multimodal Repository for In-Depth Emotion Analysis in Conversational Contexts. Procedia Comput. Sci. 2024, 233, 108–118.

- Kim, H.; Hong, T. Enhancing emotion recognition using multimodal fusion of physiological, environmental, personal data. Expert Syst. Appl. 2024, 249, 123723.

- Zou, B.; Wang, Y.; Zhang, X.; Lyu, X.; Ma, H. Concordance between facial micro-expressions and physiological signals under emotion elicitation. Pattern Recognit. Lett. 2022, 164, 200–209.

- Taware, S.; Thakare, A. Critical Analysis on Multimodal Emotion Recognition in Meeting the Requirements for Next Generation Human Computer Interactions. Int. J. Recent Innov. Trends Comput. Commun. 2023, 11, 523–534.

- Zhou, H.; Liu, Z. Realization of Self-Adaptive Higher Teaching Management Based Upon Expression and Speech Multimodal Emotion Recognition. Front. Psychol. 2022, 13, 857924.

- Yang, Z.; Wang, Y.; Yamashita, K.S.; Khatibi, E.; Azimi, I.; Dutt, N.; Borelli, J.L.; Rahmani, A.M. Integrating wearable sensor data and self-reported diaries for personalized affect forecasting. Smart Health 2024, 32, 100464.

- Kumar, S.H.; Okur, E.; Sahay, S.; Huang, J.; Nachman, L. Investigating topics, audio representations and attention for multimodal scene-aware dialog. Comput. Speech Lang. 2020, 64, 101102.

- Hazer-Rau, D.; Meudt, S.; Daucher, A.; Spohrs, J.; Hoffmann, H.; Schwenker, F.; Traue, H.C. The uulmMAC Database—A Multimodal Affective Corpus for Affective Computing in Human-Computer Interaction. Sensors 2020, 20, 2308.

- Khan, M.; Gueaieb, W.; El Saddik, A.; Kwon, S. MSER: Multimodal speech emotion recognition using cross-attention with deep fusion. Expert Syst. Appl. 2024, 245, 122946.

- Shang, Y.; Fu, T. Multimodal fusion: A study on speech-text emotion recognition with the integration of deep learning. Intell. Syst. Appl. 2024, 24, 200436.

- Moore, P. Do We Understand the Relationship between Affective Computing, Emotion and Context-Awareness? Machines 2017, 5, 16.

- Shinde, S.; Kathole, A.; Wadhwa, L.; Shaikha, A.S. Breaking the silence: Innovation in wake word activation. Multidiscip. Sci. J. 2023, 6, 2024021.

- Shi, X.; She, Q.; Fang, F.; Meng, M.; Tan, T.; Zhang, Y. Enhancing cross-subject EEG emotion recognition through multi-source manifold metric transfer learning. Comput. Biol. Med. 2024, 174, 108445.

- Pei, G.; Shang, Q.; Hua, S.; Li, T.; Jin, J. EEG-based affective computing in virtual reality with a balancing of the computational efficiency and recognition accuracy. Comput. Hum. Behav. 2024, 152, 108085.

- Hsu, S.-H.; Lin, Y.; Onton, J.; Jung, T.-P.; Makeig, S. Unsupervised learning of brain state dynamics during emotion imagination using high-density EEG. NeuroImage 2022, 249, 118873.

- Na Jo, H.; Park, S.W.; Choi, H.G.; Han, S.H.; Kim, T.S. Development of an Electrooculogram (EOG) and Surface Electromyogram (sEMG)-Based Human Computer Interface (HCI) Using a Bone Conduction Headphone Integrated Bio-Signal Acquisition System. Electronics 2022, 11, 2561.

- Zhu, B.; Zhang, D.; Chu, Y.; Zhao, X.; Zhang, L.; Zhao, L. Face-Computer Interface (FCI): Intent Recognition Based on Facial Electromyography (fEMG) and Online Human-Computer Interface with Audiovisual Feedback. Front. Neurorobot. 2021, 15, 692562.

- Kim, J.; Cho, D.; Lee, K.J.; Lee, B. A Real-Time Pinch-to-Zoom Motion Detection by Means of a Surface EMG-Based Human-Computer Interface. Sensors 2014, 15, 394–407.

- Jekauc, D.; Burkart, D.; Fritsch, J.; Hesenius, M.; Meyer, O.; Sarfraz, S.; Stiefelhagen, R. Recognizing affective states from the expressive behavior of tennis players using convolutional neural networks. Knowl.-Based Syst. 2024, 295, 111856.

- Islam, M.; Nooruddin, S.; Karray, F.; Muhammad, G. Human activity recognition using tools of convolutional neural networks: A state of the art review, data sets, challenges, and future prospects. Comput. Biol. Med. 2022, 149, 106060.

- Diep, Q.B.; Phan, H.Y.; Truong, T.-C. Crossmixed convolutional neural network for digital speech recognition. PLoS ONE 2024, 19, e0302394.

- Annamalai, B.; Saravanan, P.; Varadharajan, I. ABOA-CNN: Auction-based optimization algorithm with convolutional neural network for pulmonary disease prediction. Neural Comput. Appl. 2023, 35, 7463–7474.

- Yang, Y.; Liu, J.; Zhao, L.; Yin, Y. Human-Computer Interaction Based on ASGCN Displacement Graph Neural Networks. Informatica 2024, 48, 195–208.

- Bi, W.; Xie, Y.; Dong, Z.; Li, H. Enterprise Strategic Management from the Perspective of Business Ecosystem Construction Based on Multimodal Emotion Recognition. Front. Psychol. 2022, 13, 857891.

- Wang, S.; Zhai, Y.; Xu, G.; Wang, N. Multi-Modal Affective Computing: An Application in Teaching Evaluation Based on Combined Processing of Texts and Images. Trait. Signal 2023, 40, 533–541.

- Dang, X.; Chen, Z.; Hao, Z.; Ga, M.; Han, X.; Zhang, X.; Yang, J. Wireless Sensing Technology Combined with Facial Expression to Realize Multimodal Emotion Recognition. Sensors 2022, 23, 338.

- Tashu, T.M.; Hajiyeva, S.; Horvath, T. Multimodal Emotion Recognition from Art Using Sequential Co-Attention. J. Imaging 2021, 7, 157.

- Liu, W.; Cao, S.; Zhang, S. Multimodal consistency-specificity fusion based on information bottleneck for sentiment analysis. J. King Saud Univ.-Comput. Inf. Sci. 2024, 36, 101943.

- Makhmudov, F.; Kultimuratov, A.; Cho, Y.-I. Enhancing Multimodal Emotion Recognition through Attention Mechanisms in BERT and CNN Architectures. Appl. Sci. 2024, 14, 4199.

- Gifu, D.; Pop, E. Smart Solutions to Keep Your Mental Balance. Procedia Comput. Sci. 2022, 214, 503–510.

- Gahlan, N.; Sethia, D. AFLEMP: Attention-based Federated Learning for Emotion recognition using Multi-modal Physiological data. Biomed. Signal Process. Control 2024, 94, 106353.

- Chen, X.; Song, X.; Jing, L.; Li, S.; Hu, L.; Nie, L. Multimodal Dialog Systems with Dual Knowledge-enhanced Generative Pretrained Language Model. arXiv 2024, arXiv:2207.07934.

- Singla, C.; Singh, S.; Sharma, P.; Mittal, N.; Gared, F. Emotion recognition for human–computer interaction using high-level descriptors. Sci. Rep. 2024, 14, 12122.

- Chen, W. A Novel Long Short-Term Memory Network Model for Multimodal Music Emotion Analysis in Affective Computing. J. Appl. Sci. Eng. 2022, 26, 367–376.

- Garcia-Garcia, J.M.; Lozano, M.D.; Penichet, V.M.R.; Law, E.L.-C. Building a three-level multimodal emotion recognition framework. Multimed. Tools Appl. 2023, 82, 239–269.

- Polydorou, N.; Edalat, A. An interactive VR platform with emotion recognition for self-attachment intervention. EAI Endorsed Trans. Pervasive Health Technol. 2021, 7, e5.

- Bieńkiewicz, M.M.; Smykovskyi, A.P.; Olugbade, T.; Janaqi, S.; Camurri, A.; Bianchi-Berthouze, N.; Björkman, M.; Bardy, B.G. Bridging the gap between emotion and joint action. Neurosci. Biobehav. Rev. 2021, 131, 806–833.

- Umair, M.; Rashid, N.; Khan, U.S.; Hamza, A.; Iqbal, J. Emotion Fusion-Sense (Emo Fu-Sense)—A novel multimodal emotion classification technique. Biomed. Signal Process. Control 2024, 94, 106224.

- Razzaq, M.A.; Hussain, J.; Bang, J.; Hua, C.-H.; Satti, F.A.; Rehman, U.U.; Bilal, H.S.M.; Kim, S.T.; Lee, S. A Hybrid Multimodal Emotion Recognition Framework for UX Evaluation Using Generalized Mixture Functions. Sensors 2023, 23, 4373.

- Liang, Z.; Zhang, X.; Zhou, R.; Zhang, L.; Li, L.; Huang, G.; Zhang, Z. Cross-individual affective detection using EEG signals with audio-visual embedding. Neurocomputing 2022, 510, 107–121.

- Yu, S.; Androsov, A.; Yan, H.; Chen, Y. Bridging computer and education sciences: A systematic review of automated emotion recognition in online learning environments. Comput. Educ. 2024, 220, 105111.

- Bianco, S.; Celona, L.; Ciocca, G.; Marelli, D.; Napoletano, P.; Yu, S.; Schettini, R. A Smart Mirror for Emotion Monitoring in Home Environments. Sensors 2021, 21, 7453.

- Tan, X.; Fan, Y.; Sun, M.; Zhuang, M.; Qu, F. An emotion index estimation based on facial action unit prediction. Pattern Recognit. Lett. 2022, 164, 183–190.

- Tang, J.; Ma, Z.; Gan, K.; Zhang, J.; Yin, Z. Hierarchical multimodal-fusion of physiological signals for emotion recognition with scenario adaption and contrastive alignment. Inf. Fusion 2024, 103, 102129.

- Du, Y.; Li, P.; Cheng, L.; Zhang, X.; Li, M.; Li, F. Attention-based 3D convolutional recurrent neural network model for multimodal emotion recognition. Front. Neurosci. 2024, 17, 1330077.

- Liu, B.; He, L.; Liu, Y.; Yu, T.; Xiang, Y.; Zhu, L.; Ruan, W. Transformer-Based Multimodal Infusion Dialogue Systems. Electronics 2022, 11, 3409.

- Heo, Y.; Kang, S.; Seo, J. Natural-Language-Driven Multimodal Representation Learning for Audio-Visual Scene-Aware Dialog System. Sensors 2023, 23, 7875.

- Liu, Y.; Li, L.; Zhang, B.; Huang, S.; Zha, Z.-J.; Huang, Q. MaTCR: Modality-Aligned Thought Chain Reasoning for Multimodal Task-Oriented Dialogue Generation. In Proceedings of the 31st ACM International Conference on Multimedia, Ottawa, ON, Canada, 29 October–3 November 2023; pp. 5776–5785.

- Chen, X.; Song, X.; Wei, Y.; Nie, L.; Chua, T.-S. Dual Semantic Knowledge Composed Multimodal Dialog Systems. arXiv 2023, arXiv:2305.09990.

- Aguilera, A.; Mellado, D.; Rojas, F. An Assessment of In-the-Wild Datasets for Multimodal Emotion Recognition. Sensors 2023, 23, 5184.

- Richet, N.; Belharbi, S.; Aslam, H.; Schadt, M.E.; González-González, M.; Cortal, G.; Koerich, A.L.; Pedersoli, M.; Finkel, A.; Bacon, S.; et al. Textualized and Feature-based Models for Compound Multimodal Emotion Recognition in the Wild. arXiv 2024, arXiv:2407.12927.

- Deschamps-Berger, T.; Lamel, L.; Devillers, L. Exploring Attention Mechanisms for Multimodal Emotion Recognition in an Emergency Call Center Corpus. In Proceedings of the ICASSP 2023—2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Rhodes Island, Greece, 4–10 June 2023; pp. 1–5.

- Ruan, Y.P.; Zheng, S.K.; Huang, J.; Zhang, X.; Liu, Y.; Li, T. CH-MEAD: A Chinese Multimodal Conversational Emotion Analysis Dataset with Fine-Grained Emotion Taxonomy. In Proceedings of the 2023 Asia Pacific Signal and Information Processing Association Annual Summit and Conference (APSIPA ASC), Taipei, Taiwan, 31 October–3 November 2023; pp. 498–505.

- Oh, G.; Jeong, E.; Kim, R.C.; Yang, J.H.; Hwang, S.; Lee, S.; Lim, S. Multimodal Data Collection System for Driver Emotion Recognition Based on Self-Reporting in Real-World Driving. Sensors 2022, 22, 4402.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).