Human–Machine Interface for Remote Crane Operation: A Review

Abstract

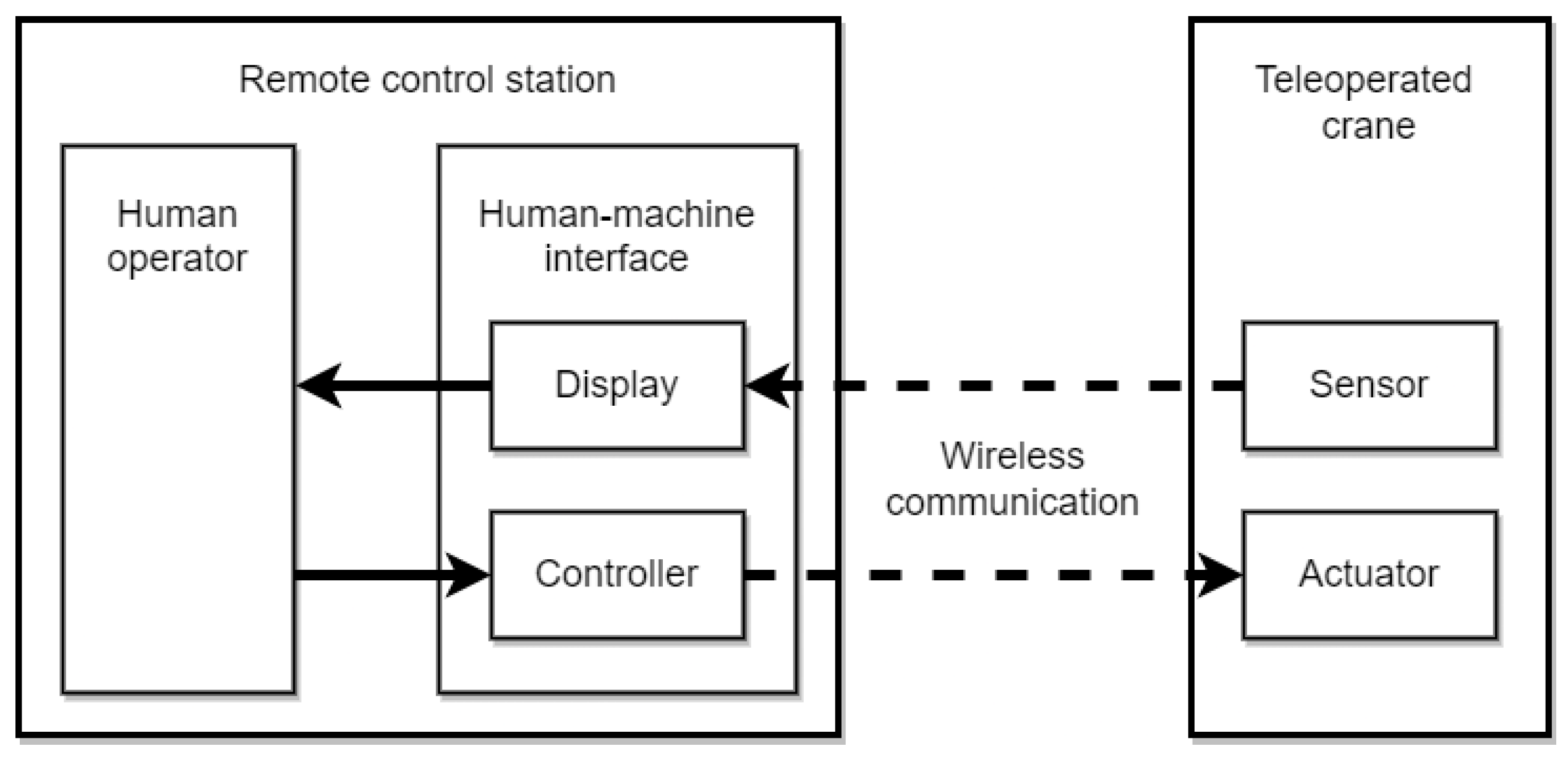

1. Introduction

2. Method

- The term crane is used to refer to species of birds;

- The term crane appears in the authors’ names or in the bibliography section only;

- The term crane appears in the body text, but only in passing (for example, it is mentioned only once or twice in the body text).
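As a rough illustration, the exclusion criteria above can be encoded as a screening function. The Python sketch below is not the procedure used in this review. The `Record` type, its fields, and the helper names are hypothetical. It also rests on three assumptions. First, the bird criterion needs human judgment, so it is a manual flag. Second, "once or twice" is read as at most two whole-word mentions. Third, the body text has already been separated from author names and the bibliography.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Assumption: "once or twice" is read as at most two mentions in the body text.
PASSING_MENTION_LIMIT = 2


@dataclass
class Record:
    """A hypothetical search result; field names are illustrative only."""
    title: str
    body_text: str         # body text with author names and bibliography removed
    refers_to_birds: bool  # set by the reviewer; word counting cannot detect this


def count_crane_mentions(text: str) -> int:
    """Count whole-word occurrences of "crane" or "cranes", ignoring case."""
    return len(re.findall(r"\bcranes?\b", text, flags=re.IGNORECASE))


def exclusion_reason(record: Record) -> Optional[str]:
    """Return why a record is excluded, or None if it passes screening."""
    if record.refers_to_birds:
        return "crane refers to birds"
    mentions = count_crane_mentions(record.body_text)
    if mentions == 0:
        # Assuming the record matched a search for "crane", the term must then
        # occur only in author names or the bibliography.
        return "crane appears only in author names or bibliography"
    if mentions <= PASSING_MENTION_LIMIT:
        return "crane mentioned only in passing"
    return None


def screen(records: list[Record]) -> list[Record]:
    """Keep only the records to which no exclusion criterion applies."""
    return [r for r in records if exclusion_reason(r) is None]


if __name__ == "__main__":
    candidates = [
        Record("Tele-operated crane UI", "A crane HMI. Crane cameras. Crane tests.", False),
        Record("Excavator study", "Excavators and one crane.", False),
        Record("Wetland survey", "Cranes nest in wetlands.", True),
    ]
    for r in screen(candidates):
        print(r.title)
```

Keeping each criterion as a separate branch that returns a reason makes it possible to report how many records each criterion removed.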

1. What kind of HMI was proposed?

2. What is the purpose of the HMI?

3. For what type of crane was the HMI proposed?

4. Was the HMI evaluated with test users?

5. What were the findings from the evaluation with test users?

3. Results

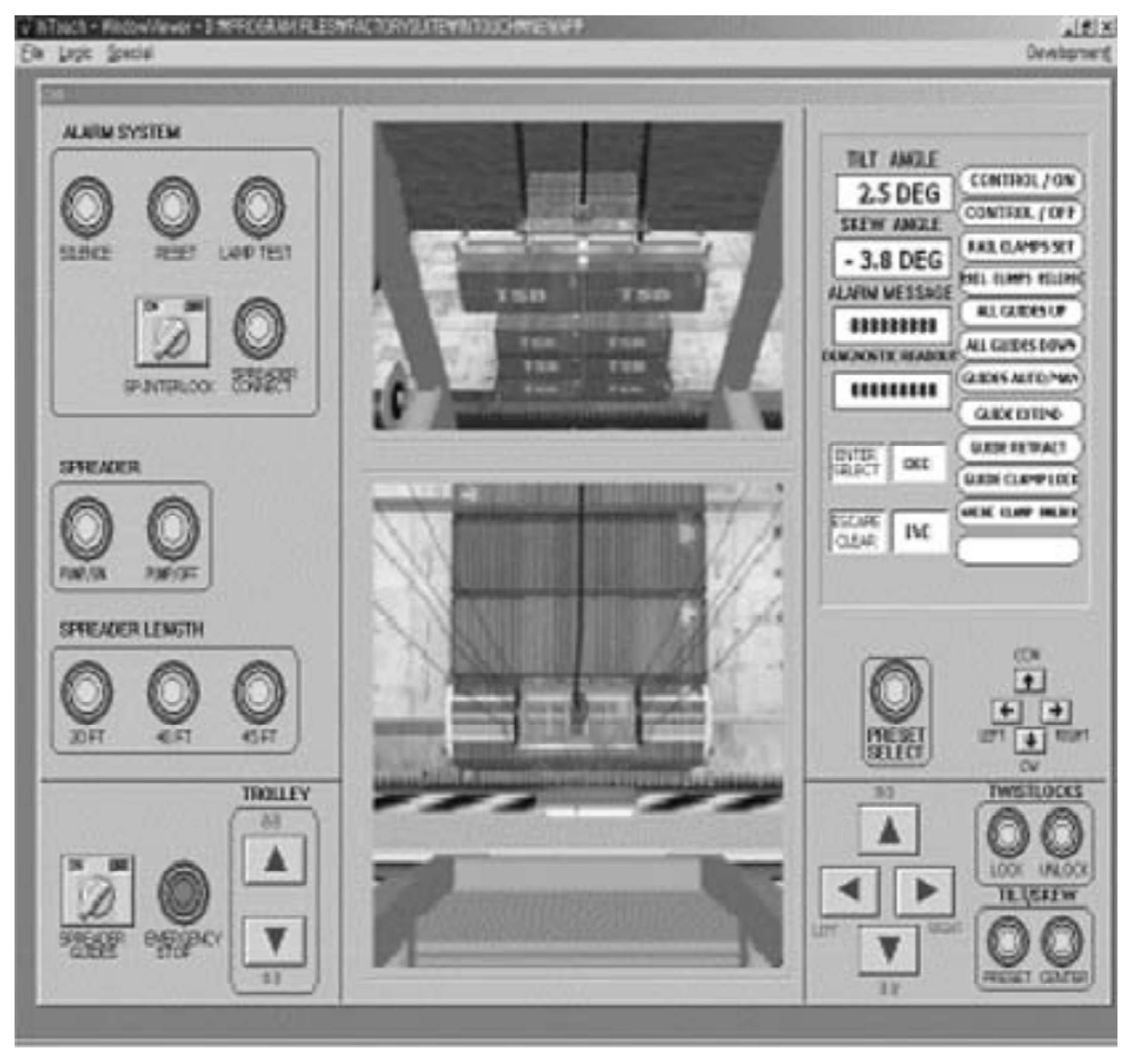

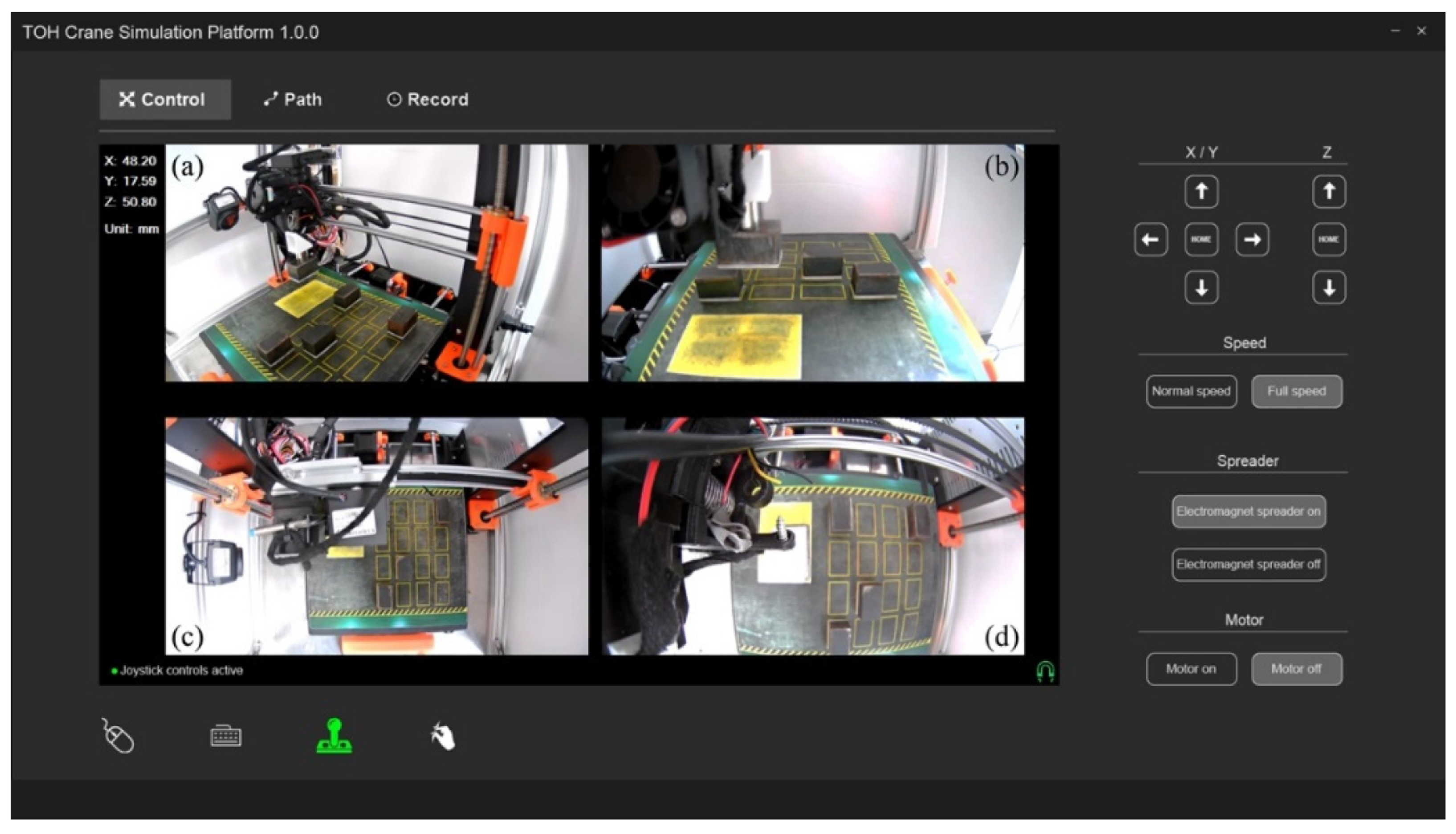

3.1. Graphical User Interfaces for Performing Teleoperation

3.2. Different Ways of Presenting Video Feed from Different Camera Views

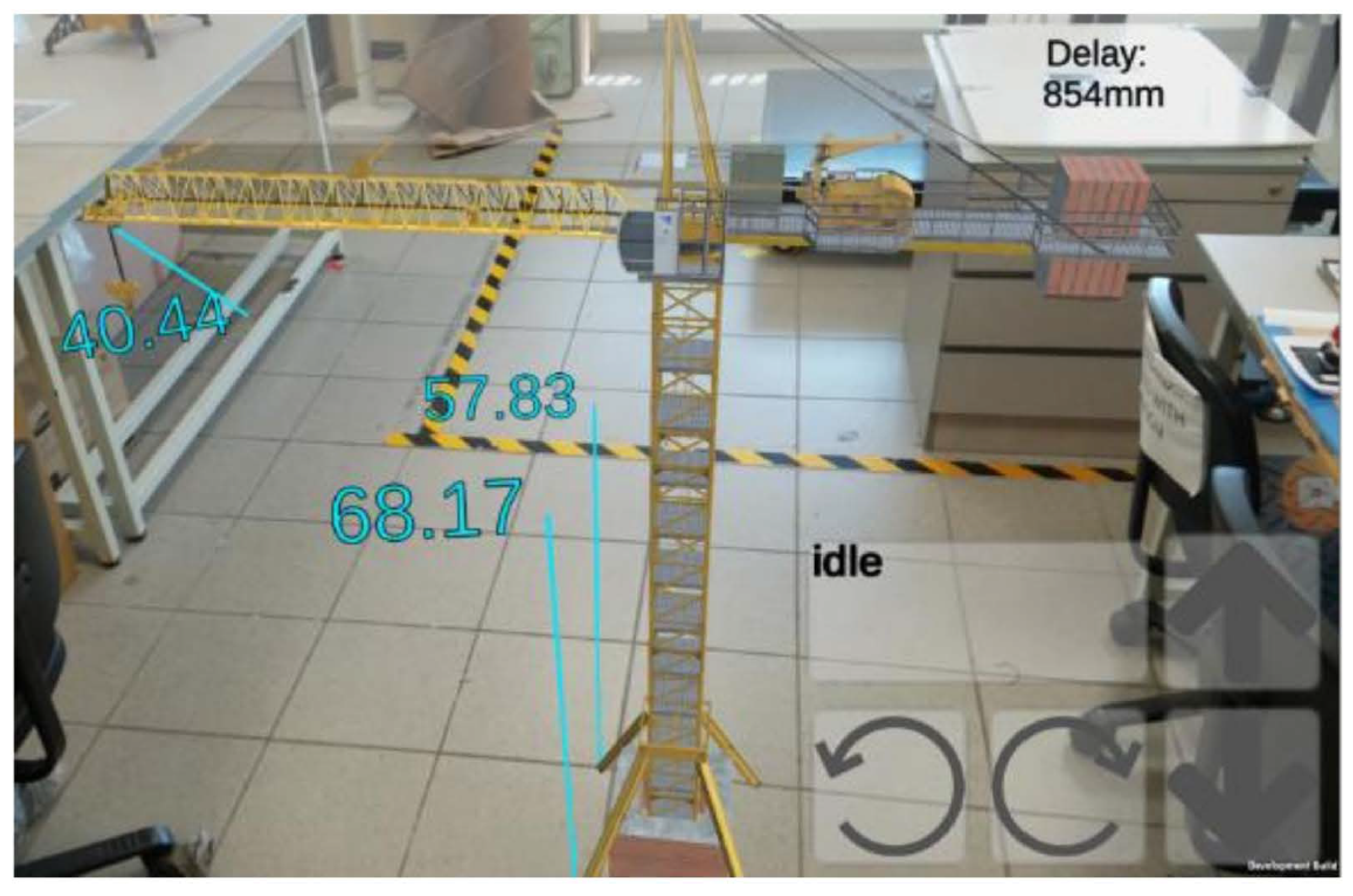

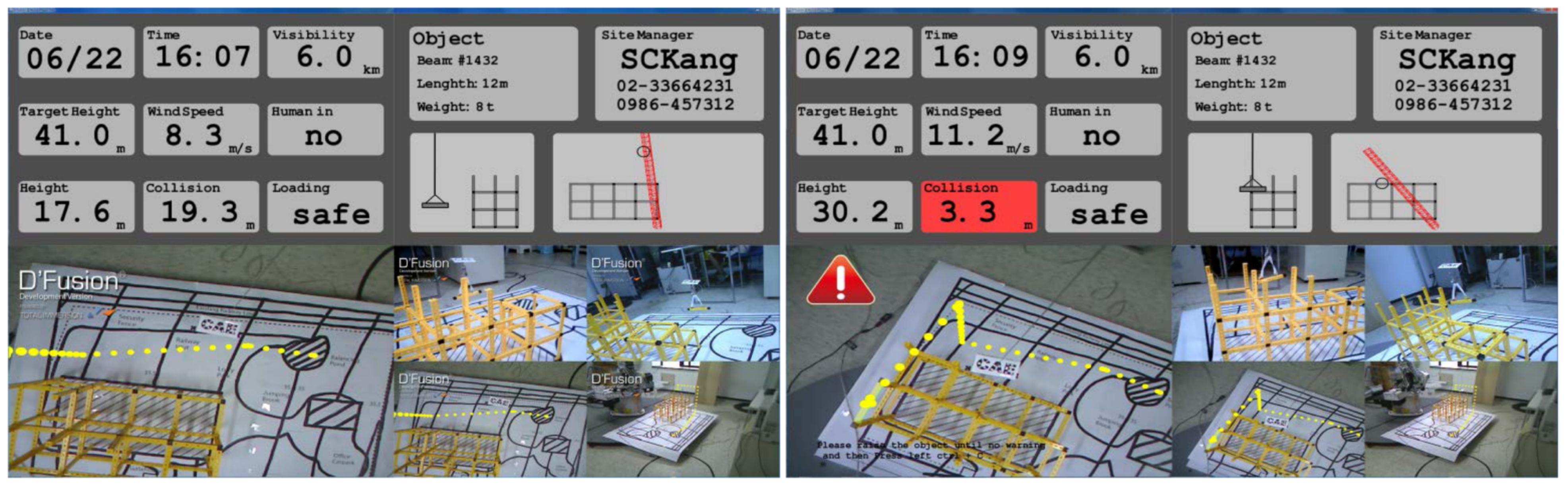

3.3. Overlay Supportive Information into Video Feed

3.4. Provide Auditory Information to Operators

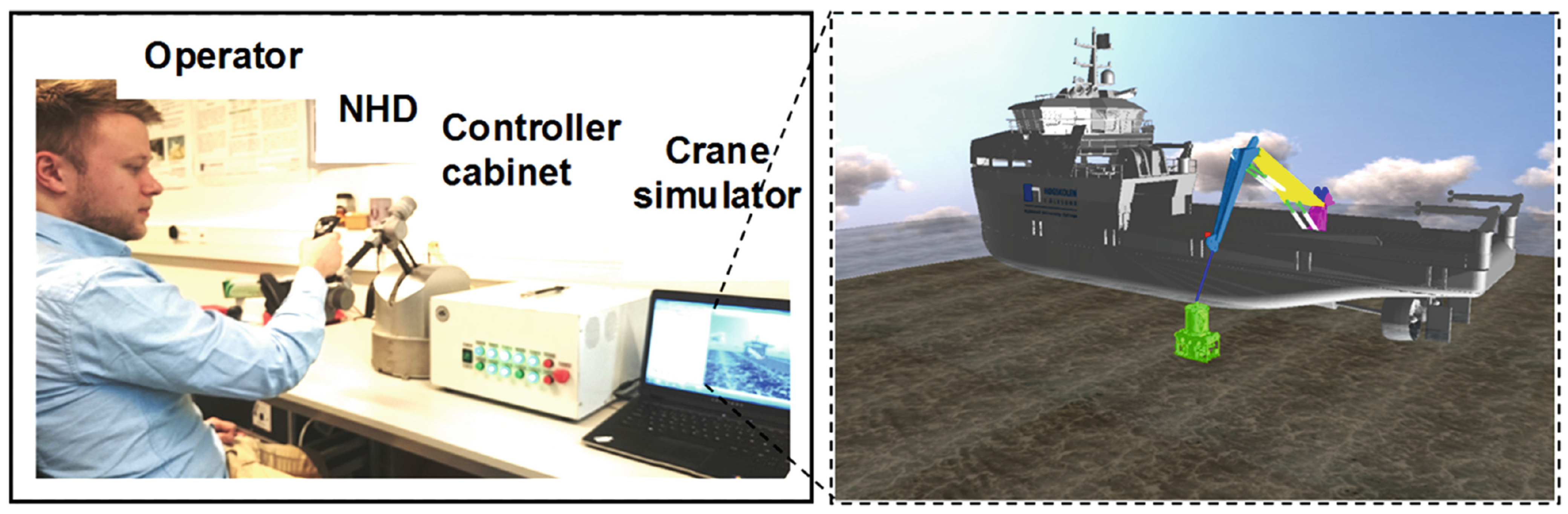

3.5. Provide Force Feedback to Operators

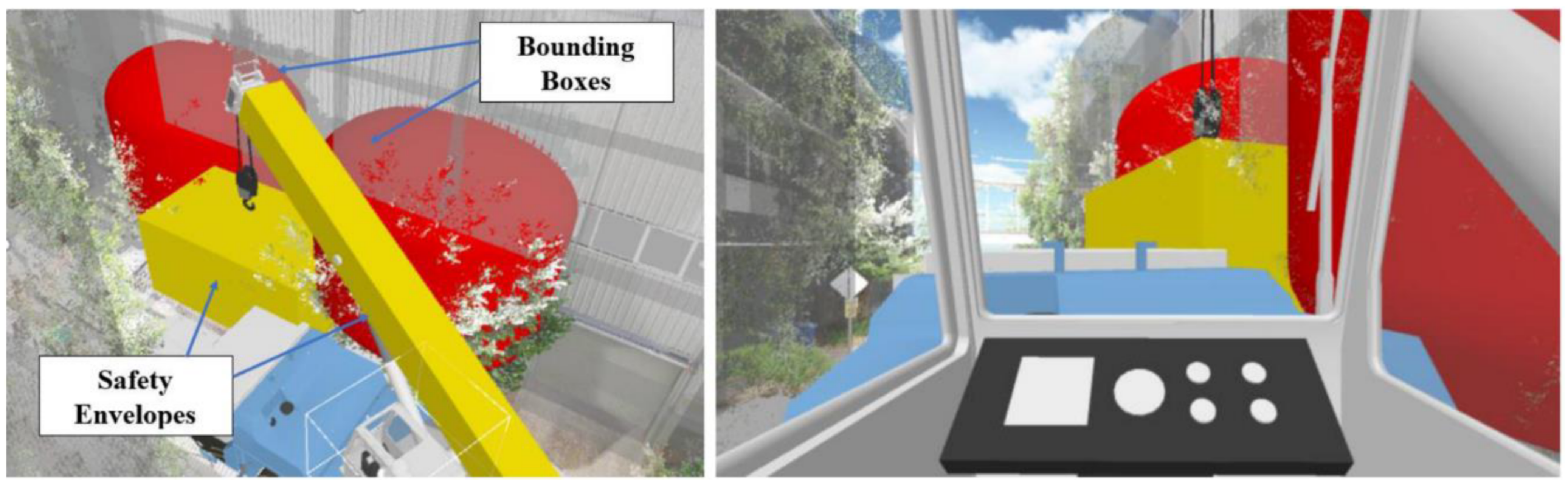

3.6. Improve Telepresence Using Immersive Technologies

3.7. Provide Different Input Techniques to Perform Teleoperation

3.8. Incorporate Higher Levels of Automation into Crane Teleoperation

1. Manual control: The operator is responsible for controlling and monitoring the crane;

2. Human-led control: The operator indicates the target lifting location and the system automatically moves the crane to the target location;

3. Machine-led control: The operator controls the crane based on the visual information provided by the system;

4. Autonomous control: The system completely controls the crane from the starting location to the target location.
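The four levels differ mainly in how control is divided between the operator and the system. The Python sketch below records that division as a small lookup table. The `Actor` and `AutomationLevel` types, their fields, and the `levels_where` helper are hypothetical names introduced for illustration. The role descriptions paraphrase the list above and do not describe any specific system from the reviewed articles.

```python
from dataclasses import dataclass
from enum import Enum


class Actor(Enum):
    OPERATOR = "operator"
    SYSTEM = "system"


@dataclass(frozen=True)
class AutomationLevel:
    rank: int           # position in the list above
    name: str
    moves_crane: Actor  # who directly drives the crane motion
    operator_role: str
    system_role: str


# Paraphrased from the four levels listed above.
LEVELS = (
    AutomationLevel(1, "Manual control", Actor.OPERATOR,
                    "controls and monitors the crane", "none"),
    AutomationLevel(2, "Human-led control", Actor.SYSTEM,
                    "indicates the target lifting location",
                    "moves the crane to the target location"),
    AutomationLevel(3, "Machine-led control", Actor.OPERATOR,
                    "controls the crane using the visual information",
                    "provides visual information"),
    AutomationLevel(4, "Autonomous control", Actor.SYSTEM,
                    "none stated",
                    "controls the crane from the starting to the target location"),
)


def levels_where(actor: Actor) -> list[str]:
    """Names of the levels at which `actor` directly moves the crane."""
    return [level.name for level in LEVELS if level.moves_crane is actor]


if __name__ == "__main__":
    print(levels_where(Actor.SYSTEM))
```

With the roles stated explicitly, it is easy to query, for instance, the levels at which the operator still drives the crane directly.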

4. Open Issues and Future Research Opportunities

4.1. Involvement of Crane Operators

4.2. Design of Teleoperation Stations

4.3. Facilitate Telepresence through Multimodal Feedback

4.4. Mitigate the Impact of Time Delay on Teleoperation

4.5. Considerations for Human-Automation Interaction

4.6. Examine the Impact of the Proposed HMIs on User Experience

5. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Sitompul, T.A.; Wallmyr, M. Using Augmented Reality to Improve Productivity and Safety for Heavy Machinery Operators: State of the Art. In Proceedings of the 17th International Conference on Virtual-Reality Continuum and Its Applications in Industry (VRCAI ’19), Brisbane, Australia, 14–16 November 2019; ACM: New York, NY, USA, 2019; pp. 8:1–8:9.

2. Yu, Z.; Luo, J.; Zhang, H.; Onchi, E.; Lee, S.H. Approaches for Motion Control Interface and Tele-Operated Overhead Crane Handling Tasks. Processes 2021, 9, 2148.

3. Spasojević Brkić, V.; Klarin, M.; Brkić, A. Ergonomic design of crane cabin interior: The path to improved safety. Saf. Sci. 2015, 73, 43–51.

4. Kushwaha, D.K.; Kane, P.V. Ergonomic assessment and workstation design of shipping crane cabin in steel industry. Int. J. Ind. Ergon. 2016, 52, 29–39.

5. Xiao, B.; Lam, K.Y.K.; Cui, J.; Kang, S.C. Perceptions for Crane Operations. In Computing in Civil Engineering 2019; ASCE: Reston, VA, USA, 2019; pp. 415–421.

6. Kim, J.Y. A TCP/IP-based remote control system for yard cranes in a port container terminal. Robotica 2006, 24, 613–620.

7. Chi, H.L.; Chen, Y.C.; Kang, S.C.; Hsieh, S.H. Development of user interface for tele-operated cranes. Adv. Eng. Inform. 2012, 26, 641–652.

8. Sitompul, T.A. Information Visualization Using Transparent Displays in Mobile Cranes and Excavators. Ph.D. Thesis, Mälardalen University, Västerås, Sweden, 2022. Available online: http://urn.kb.se/resolve?urn=urn%3Anbn%3Ase%3Amdh%3Adiva-56512 (accessed on 30 March 2022).

9. Vaughan, J.; Singhose, W. The Influence of Time Delay on Crane Operator Performance. In Delay Systems: From Theory to Numerics and Applications; Springer: Cham, Switzerland, 2014; pp. 329–342.

10. Lee, J.S.; Ham, Y. Exploring Human–Machine Interfaces for Teleoperation of Excavator. In Construction Research Congress 2022; ASCE: Reston, VA, USA, 2022; pp. 757–765.

11. Fong, T.; Thorpe, C. Vehicle Teleoperation Interfaces. Auton. Robot. 2001, 11, 9–18.

12. Alho, T.; Pettersson, T.; Haapa-Aho, M. The Path to Automation in an RTG Terminal. Technical Report. 2018. Available online: https://www.kalmarglobal.com/4948ad/globalassets/equipment/rtg-cranes/kalmar-whitepaper-autortg (accessed on 30 March 2022).

13. Page, M.J.; Moher, D.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. PRISMA 2020 explanation and elaboration: Updated guidance and exemplars for reporting systematic reviews. BMJ 2021, 372, n160.

14. Baas, J.; Schotten, M.; Plume, A.; Côté, G.; Karimi, R. Scopus as a curated, high-quality bibliometric data source for academic research in quantitative science studies. Quant. Sci. Stud. 2020, 1, 377–386.

15. Peng, K.C.C.; Singhose, W. Crane control using machine vision and wand following. In Proceedings of the 2009 IEEE International Conference on Mechatronics, Malaga, Spain, 14–17 April 2009; pp. 1–6.

16. Cardona Ujueta, D.; Peng, K.C.C.; Singhose, W.; Frakes, D. Determination of control parameters for a Radio-Frequency based crane controller. In Proceedings of the 49th IEEE Conference on Decision and Control (CDC), Atlanta, GA, USA, 15–17 December 2010; pp. 3602–3607.

17. Negishi, M.; Osumi, H.; Saito, K.; Masuda, H.; Tamura, Y. Development of crane tele-operation system using laser pointer interface. In Proceedings of the 2013 IEEE/RSJ International Conference on Intelligent Robots and Systems, Tokyo, Japan, 3–7 November 2013; pp. 5457–5462.

18. Yoneda, M.; Arai, F.; Fukuda, T.; Miyata, K.; Naito, T. Multimedia tele-operation of crane system supported by interactive adaptation interface. In Proceedings of the 5th IEEE International Workshop on Robot and Human Communication (RO-MAN’96), Tsukuba, Japan, 11–14 November 1996; pp. 135–140.

19. Moon, S.; Bernold, L.E. Graphic-Based Human–Machine Interface for Construction Manipulator Control. J. Constr. Eng. Manag. 1998, 124, 305–311.

20. Sorensen, K.L.; Spiers, J.B.; Singhose, W.E. Operational Effects of Crane Interface Devices. In Proceedings of the 2nd IEEE Conference on Industrial Electronics and Applications, Harbin, China, 23–25 May 2007; pp. 1073–1078.

21. Farkhatdinov, I.; Ryu, J.H. A Study on the Role of Force Feedback for Teleoperation of Industrial Overhead Crane. In Haptics: Perception, Devices and Scenarios; Springer: Berlin/Heidelberg, Germany, 2008; pp. 796–805.

22. Osumi, H.; Kubo, M.; Yano, S.; Saito, K. Development of tele-operation system for a crane without overshoot in positioning. In Proceedings of the 2010 IEEE/RSJ International Conference on Intelligent Robots and Systems, Taipei, Taiwan, 18–22 October 2010; pp. 5799–5805.

23. Singhose, W.; Vaughan, J.; Peng, K.C.C.; Pridgen, B.; Glauser, U.; de Juanes Márquez, J.; Hong, S.W. Use of Cranes in Education and International Collaborations. J. Robot. Mechatron. 2011, 23, 881–892.

24. Villaverde, A.F.; Raimúndez, C.; Barreiro, A. Passive internet-based crane teleoperation with haptic aids. Int. J. Control Autom. Syst. 2012, 10, 78–87.

25. Heikkinen, J.; Handroos, H.M. Haptic Controller for Mobile Machine Teleoperation. Int. Rev. Autom. Control 2013, 6, 228–235.

26. Suzuki, K.; Murakami, T. Anti-sway control of crane system by equivalent force feedback of load. In Proceedings of the 2013 IEEE International Conference on Mechatronics (ICM), Vicenza, Italy, 27 February–1 March 2013; pp. 688–693.

27. Chi, H.L.; Kang, S.C.; Hsieh, S.H.; Wang, X. Optimization and Evaluation of Automatic Rigging Path Guidance for Tele-Operated Construction Crane. In Proceedings of the 31st International Symposium on Automation and Robotics in Construction and Mining (ISARC), Sydney, Australia, 9–11 July 2014; pp. 738–745.

28. Karvonen, H.; Koskinen, H.; Tokkonen, H.; Hakulinen, J. Evaluation of User Experience Goal Fulfillment: Case Remote Operator Station. In Virtual, Augmented and Mixed Reality. Applications of Virtual and Augmented Reality; Springer: Cham, Switzerland, 2014; pp. 366–377.

29. Chen, Y.C.; Chi, H.L.; Kang, S.C.; Hsieh, S.H. Attention-Based User Interface Design for a Tele-Operated Crane. J. Comput. Civ. Eng. 2016, 30, 04015030.

30. Chu, Y.; Zhang, H.; Wang, W. Enhancement of Virtual Simulator for Marine Crane Operations via Haptic Device with Force Feedback. In Haptics: Perception, Devices, Control, and Applications; Springer: Cham, Switzerland, 2016; pp. 327–337.

31. Gao, X.; Yeh, H.G.; Marayong, P. A high-speed color-based object detection algorithm for quayside crane operator assistance system. In Proceedings of the 2017 Annual IEEE International Systems Conference (SysCon), Montreal, QC, Canada, 24–27 April 2017; pp. 1–6.

32. Goh, J.; Hu, S.; Fang, Y. Human-in-the-Loop Simulation for Crane Lift Planning in Modular Construction On-Site Assembly. In Proceedings of the Computing in Civil Engineering 2019: Visualization, Information Modeling, and Simulation, Atlanta, GA, USA, 17–19 June 2019; pp. 71–78.

33. Top, F.; Krottenthaler, J.; Fottner, J. Evaluation of Remote Crane Operation with an Intuitive Tablet Interface and Boom Tip Control. In Proceedings of the 2020 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Toronto, ON, Canada, 11–14 October 2020; pp. 3275–3282.

34. Major, P.; Li, G.; Zhang, H.; Hildre, H.P. Real-Time Digital Twin Of Research Vessel For Remote Monitoring. In Proceedings of the 35th European Council for Modeling and Simulation (ECMS), Online, 31 May–2 June 2021.

35. He, F.; Ong, S.K.; Nee, A.Y.C. An Integrated Mobile Augmented Reality Digital Twin Monitoring System. Computers 2021, 10, 99.

36. Parasuraman, R.; Sheridan, T.B.; Wickens, C.D. A model for types and levels of human interaction with automation. IEEE Trans. Syst. Man Cybern. Part A Syst. Hum. 2000, 30, 286–297.

37. Horberry, T.; Lynas, D. Human interaction with automated mining equipment: The development of an emerging technologies database. Ergon. Aust. 2012, 8, 1–6.

38. Kalmar. Be in Complete Control—The New Kalmar Remote Console. Available online: https://www.kalmar.fi/491403/globalassets/media/216100/216100_RC-desk-flyer-web.pdf (accessed on 30 March 2022).

39. Chen, J.Y.C.; Haas, E.C.; Barnes, M.J. Human Performance Issues and User Interface Design for Teleoperated Robots. IEEE Trans. Syst. Man Cybern. Part C (Appl. Rev.) 2007, 37, 1231–1245.

40. Occupational Safety and Health Branch, Labour Department. Code of Practice for Safe Use of Mobile Cranes, 2nd ed.; Labour Department: Hong Kong, China, 2017. Available online: https://www.labour.gov.hk/eng/public/os/B/MobileCrane.pdf (accessed on 30 March 2022).

41. Workplace Safety and Health Council. Workplace Safety and Health Guidelines: Safe Use of Lorry Cranes; Workplace Safety and Health Council: Singapore, 2020. Available online: https://www.tal.sg/wshc/-/media/tal/wshc/resources/publications/wsh-guidelines/files/wsh_guidelines_safe_use_of_lorry_crane.ashx (accessed on 30 March 2022).

42. Lichiardopol, S. A Survey on Teleoperation; Technical Report DCT 2007.155; Technische Universiteit Eindhoven: Eindhoven, The Netherlands, 2007. Available online: https://research.tue.nl/files/4419568/656592.pdf (accessed on 30 March 2022).

43. Georg, J.M.; Feiler, J.; Diermeyer, F.; Lienkamp, M. Teleoperated Driving, a Key Technology for Automated Driving? Comparison of Actual Test Drives with a Head Mounted Display and Conventional Monitors. In Proceedings of the 21st International Conference on Intelligent Transportation Systems (ITSC), Maui, HI, USA, 4–7 November 2018; pp. 3403–3408.

44. Georg, J.M.; Feiler, J.; Hoffmann, S.; Diermeyer, F. Sensor and Actuator Latency during Teleoperation of Automated Vehicles. In Proceedings of the 2020 IEEE Intelligent Vehicles Symposium (IV), Las Vegas, NV, USA, 19 October–13 November 2020; pp. 760–766.

45. Bidwell, J.; Holloway, A.; Davidoff, S. Measuring Operator Anticipatory Inputs in Response to Time-Delay for Teleoperated Human-Robot Interfaces. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (CHI ’14), Toronto, ON, Canada, 26 April–1 May 2014; ACM: New York, NY, USA, 2014; pp. 1467–1470.

46. Neumeier, S.; Wintersberger, P.; Frison, A.K.; Becher, A.; Facchi, C.; Riener, A. Teleoperation: The Holy Grail to Solve Problems of Automated Driving? Sure, but Latency Matters. In Proceedings of the 11th International Conference on Automotive User Interfaces and Interactive Vehicular Applications (AutomotiveUI ’19), Utrecht, The Netherlands, 22–25 September 2019; ACM: New York, NY, USA, 2019; pp. 186–197.

47. Brunnström, K.; Dima, E.; Andersson, M.; Sjöström, M.; Qureshi, T.; Johanson, M. Quality of Experience of hand controller latency in a virtual reality simulator. Electron. Imaging 2019, 2019, 218:1–218:9.

48. Thompson, J.M.; Ottensmeyer, M.P.; Sheridan, T.B. Human Factors in Telesurgery: Effects of Time Delay and Asynchrony in Video and Control Feedback with Local Manipulative Assistance. Telemed. J. 1999, 5, 129–137.

49. Zhang, T. Toward Automated Vehicle Teleoperation: Vision, Opportunities, and Challenges. IEEE Internet Things J. 2020, 7, 11347–11354.

50. Gutwin, C.; Benford, S.; Dyck, J.; Fraser, M.; Vaghi, I.; Greenhalgh, C. Revealing Delay in Collaborative Environments. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (CHI ’04), Vienna, Austria, 24–29 April 2004; ACM: New York, NY, USA, 2004; pp. 503–510.

51. Wilde, M.; Chan, M.; Kish, B. Predictive Human–Machine Interface for Teleoperation of Air and Space Vehicles over Time Delay. In Proceedings of the 2020 IEEE Aerospace Conference, Big Sky, MT, USA, 7–14 March 2020; pp. 1–14.

52. Debernard, S.; Chauvin, C.; Pokam, R.; Langlois, S. Designing Human–Machine Interface for Autonomous Vehicles. IFAC-PapersOnLine 2016, 49, 609–614.

53. Cummings, M.; Mastracchio, C.; Thornburg, K.; Mkrtchyan, A. Boredom and Distraction in Multiple Unmanned Vehicle Supervisory Control. Interact. Comput. 2013, 25, 34–47.

54. Lorenz, S.; Helmert, J.R.; Anders, R.; Wölfel, C.; Krzywinski, J. UUX Evaluation of a Digitally Advanced Human–Machine Interface for Excavators. Multimodal Technol. Interact. 2020, 4, 57.

55. Savioja, P.; Liinasuo, M.; Koskinen, H. User experience: Does it matter in complex systems? Cognit. Technol. Work 2014, 16, 429–449.

56. Knight, J.C. Safety critical systems: Challenges and directions. In Proceedings of the 24th International Conference on Software Engineering (ICSE), Orlando, FL, USA, 19–25 May 2002; pp. 547–550.

| No. | Authors | Year | Type of Crane | Type of HMI | Purpose of the HMI | User Evaluation | Evaluation Metrics |
|---|---|---|---|---|---|---|---|
| 1 | Yoneda et al. [18] | 1996 | All-terrain crane | Overlaid information, auditory feedback | Load sway reduction | With 4 participants¹ | Completion time |
| 2 | Moon and Bernold [19] | 1998 | Loader crane | GUI, automation | Collision prevention | With 4 crane operators | Completion time |
| 3 | Kim [6] | 2006 | Gantry crane | GUI | General teleoperation | - | - |
| 4 | Sorensen et al. [20] | 2007 | Bridge crane | GUI, automation | Load sway reduction | With 19 non-operators | Completion time |
| 5 | Farkhatdinov and Ryu [21] | 2008 | Bridge crane | Force feedback | Load sway reduction | With 5 non-operators | Completion time |
| 6 | Osumi et al. [22] | 2010 | Bridge crane | GUI, automation | Collision prevention | - | - |
| 7 | Singhose et al. [23] | 2011 | Bridge crane, tower crane, all-terrain crane | GUI | General teleoperation | - | - |
| 8 | Villaverde et al. [24] | 2012 | Bridge crane | Force feedback | Collision prevention | With 1 participant¹ | Completion time |
| 9 | Chi et al. [7] | 2012 | Tower crane | Multi-monitor, overlaid information | Collision avoidance | With 5 crane operators & 30 non-operators | Completion time, eye gaze, mental workload (for non-operators only) |
| 10 | Heikkinen and Handroos [25] | 2013 | Gantry crane | CAVE environment, force feedback | Load sway reduction | With 5 non-operators | Sway angle, sway speed |
| 11 | Suzuki and Murakami [26] | 2013 | Bridge crane | Force feedback | Load sway reduction | With 1 participant¹ | Completion time, sway angle |
| 12 | Chi et al. [27] | 2014 | Tower crane | Overlaid information | Collision prevention | - | - |
| 13 | Karvonen et al. [28] | 2014 | Gantry crane | GUI | General teleoperation | With 6 crane operators | Individual opinions |
| 14 | Chen et al. [29] | 2016 | Tower crane | Multi-monitor, overlaid information | Collision prevention | With 30 non-operators | Completion time, number of collisions, eye gaze |
| 15 | Chu et al. [30] | 2016 | Deck crane | Force feedback | Load sway reduction | With 3 non-operators | Completion time |
| 16 | Gao et al. [31] | 2017 | Gantry crane | Overlaid information | Collision prevention | - | - |
| 17 | Goh et al. [32] | 2019 | All-terrain crane | Virtual reality | Collision prevention | - | - |
| 18 | Top et al. [33] | 2020 | Loader crane | GUI, automation | General teleoperation | With 28 crane operators & 28 non-operators | Completion time, task accuracy |
| 19 | Major et al. [34] | 2021 | Deck crane | CAVE environment | General teleoperation | - | - |
| 20 | He et al. [35] | 2021 | Tower crane | Augmented reality | Collision prevention | With 20 non-operators | Response time, task accuracy |
| 21 | Yu et al. [2] | 2021 | Bridge crane | GUI, input techniques | General teleoperation | With 11 crane operators & 21 non-operators | Completion time, task accuracy, mental workload (for non-operators only), heart rate variation (for non-operators only) |
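The participant columns of the table can be tallied to show how often crane operators took part in the user evaluations. The Python sketch below transcribes only the reference number, first author, year, and participant counts from the table. The `Study` type and the `summarize` helper are hypothetical. Rows marked "-" are treated as having no user evaluation. Participants flagged with footnote 1, whose background the table does not give as operator or non-operator, are counted as unspecified. Counts are summed per study, so any participants shared between studies would be counted twice.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Study:
    ref: int            # reference number cited in the table
    first_author: str
    year: int
    operators: int      # crane operators in the user evaluation
    non_operators: int  # participants reported as non-operators
    unspecified: int    # participants without a stated background (footnote 1)

    @property
    def evaluated(self) -> bool:
        """True if the study reports any user evaluation."""
        return self.operators + self.non_operators + self.unspecified > 0


# Transcribed from the table; "-" (no user evaluation) becomes all zeros.
STUDIES = [
    Study(18, "Yoneda", 1996, 0, 0, 4),
    Study(19, "Moon", 1998, 4, 0, 0),
    Study(6, "Kim", 2006, 0, 0, 0),
    Study(20, "Sorensen", 2007, 0, 19, 0),
    Study(21, "Farkhatdinov", 2008, 0, 5, 0),
    Study(22, "Osumi", 2010, 0, 0, 0),
    Study(23, "Singhose", 2011, 0, 0, 0),
    Study(24, "Villaverde", 2012, 0, 0, 1),
    Study(7, "Chi", 2012, 5, 30, 0),
    Study(25, "Heikkinen", 2013, 0, 5, 0),
    Study(26, "Suzuki", 2013, 0, 0, 1),
    Study(27, "Chi", 2014, 0, 0, 0),
    Study(28, "Karvonen", 2014, 6, 0, 0),
    Study(29, "Chen", 2016, 0, 30, 0),
    Study(30, "Chu", 2016, 0, 3, 0),
    Study(31, "Gao", 2017, 0, 0, 0),
    Study(32, "Goh", 2019, 0, 0, 0),
    Study(33, "Top", 2020, 28, 28, 0),
    Study(34, "Major", 2021, 0, 0, 0),
    Study(35, "He", 2021, 0, 20, 0),
    Study(2, "Yu", 2021, 11, 21, 0),
]


def summarize(studies: list[Study]) -> dict[str, int]:
    """Aggregate simple evaluation statistics over the transcribed rows."""
    return {
        "studies": len(studies),
        "without_user_evaluation": sum(not s.evaluated for s in studies),
        "with_crane_operators": sum(s.operators > 0 for s in studies),
        "total_crane_operators": sum(s.operators for s in studies),
        "total_non_operators": sum(s.non_operators for s in studies),
    }


if __name__ == "__main__":
    for key, value in summarize(STUDIES).items():
        print(f"{key}: {value}")
```

Run on the 21 rows, the sketch reports 7 studies without a user evaluation and 5 studies that involved crane operators, 54 operators in total.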

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Sitompul, T.A. Human–Machine Interface for Remote Crane Operation: A Review. Multimodal Technol. Interact. 2022, 6, 45. https://doi.org/10.3390/mti6060045
