1. Introduction

This paper presents a case study describing the construction methods used to design and develop immersive, multimodal, interactive models of virtual nature. Using 3D data visualizations of scientific data related to plants, ecologies, terrain, and waterbodies to create large geospatial, immersive, photorealistic models at a true scale, these spatial virtual landscapes can accurately reflect real-world landscapes. As such, these virtual nature models may be displayed in augmented reality (AR) and virtual reality (VR) devices as immersive game level environments. However, unlike traditional game level environments, these visualize reality to be experienced perceptually, emotionally, and cognitively, much like an AR or VR Holodeck: walk through the virtual forest, look up to see the canopy, bend down to inspect the small details in a flower, explore wherever you want, go off trail, select a plant to open a virtual field guide, learn about the natural world. The main importance and interest in this work lie in the unique design and novel processes combined to fuse information from multiple sources to create a high information fidelity environment as a photorealistic immersive experience of nature. The human experience is perceptual, experiential, embodied, and cognitive, allowing the non-expert to “see” and experience a reality that only a domain expert could imagine, one based on deep knowledge. The non-expert’s Gestalt moment of insight is to see the world through the eyes of an expert and then develop a new intuitive understanding of knowledge about the natural world. This paper focuses on the larger of the two virtual nature applications,

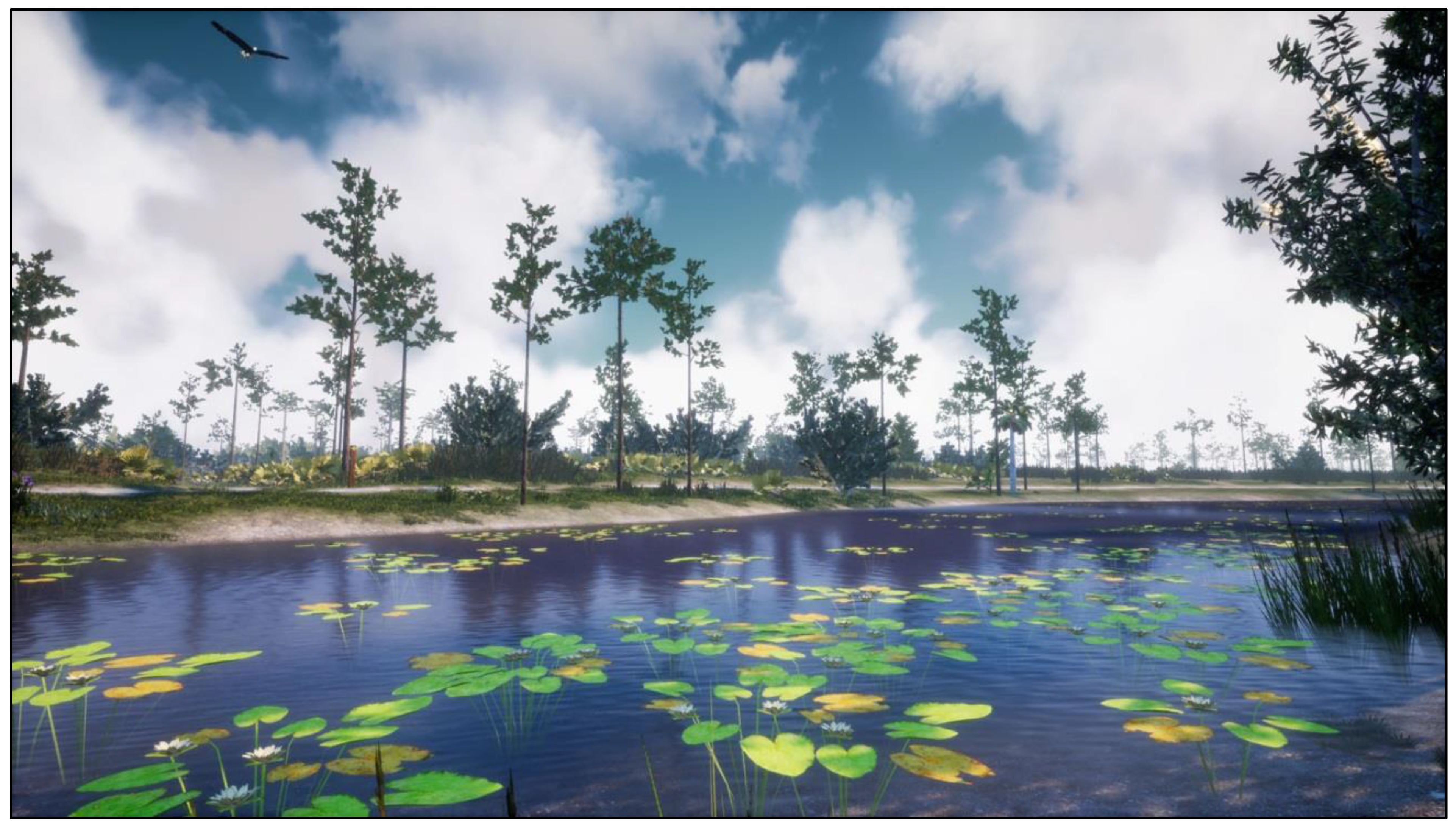

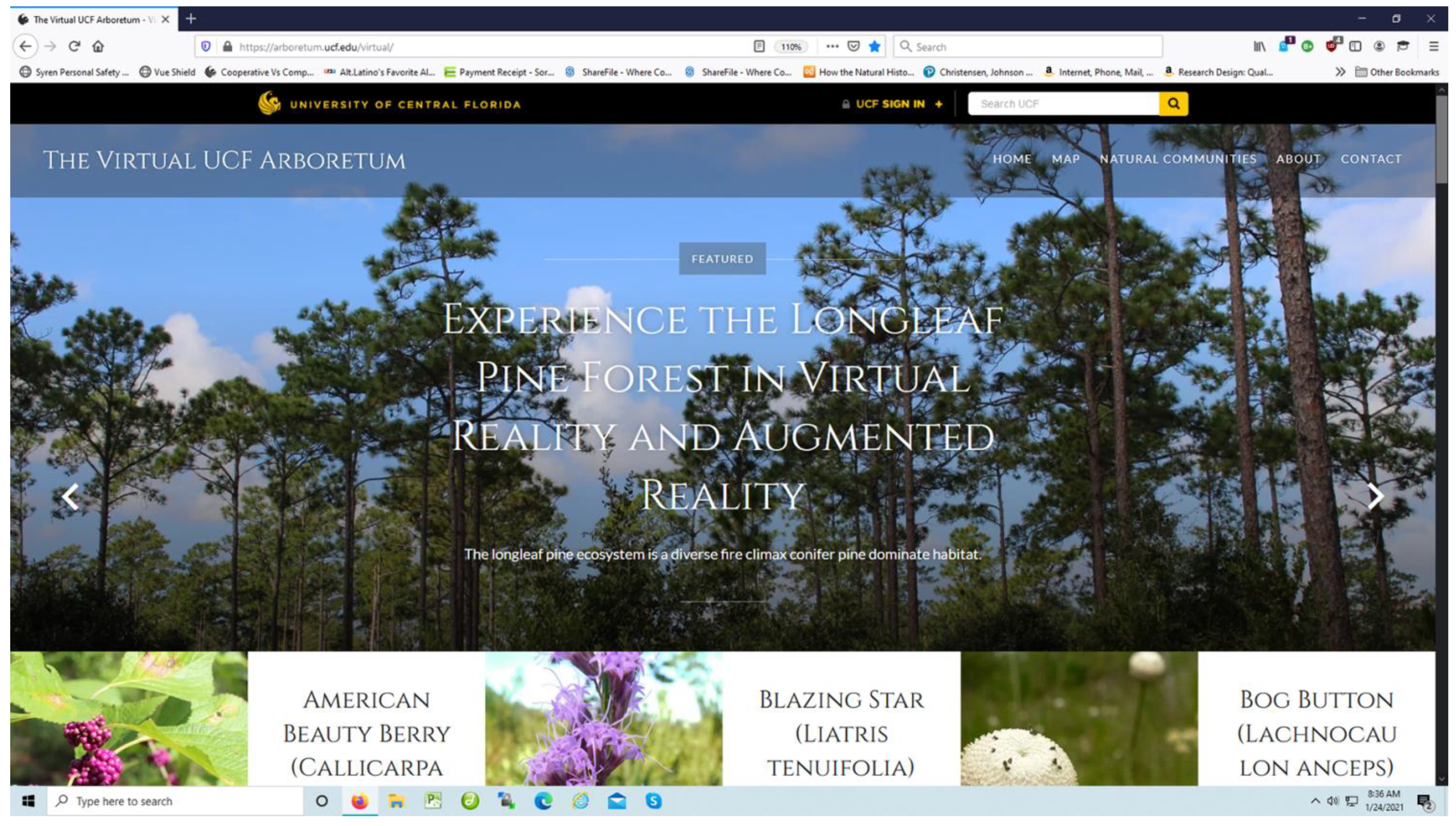

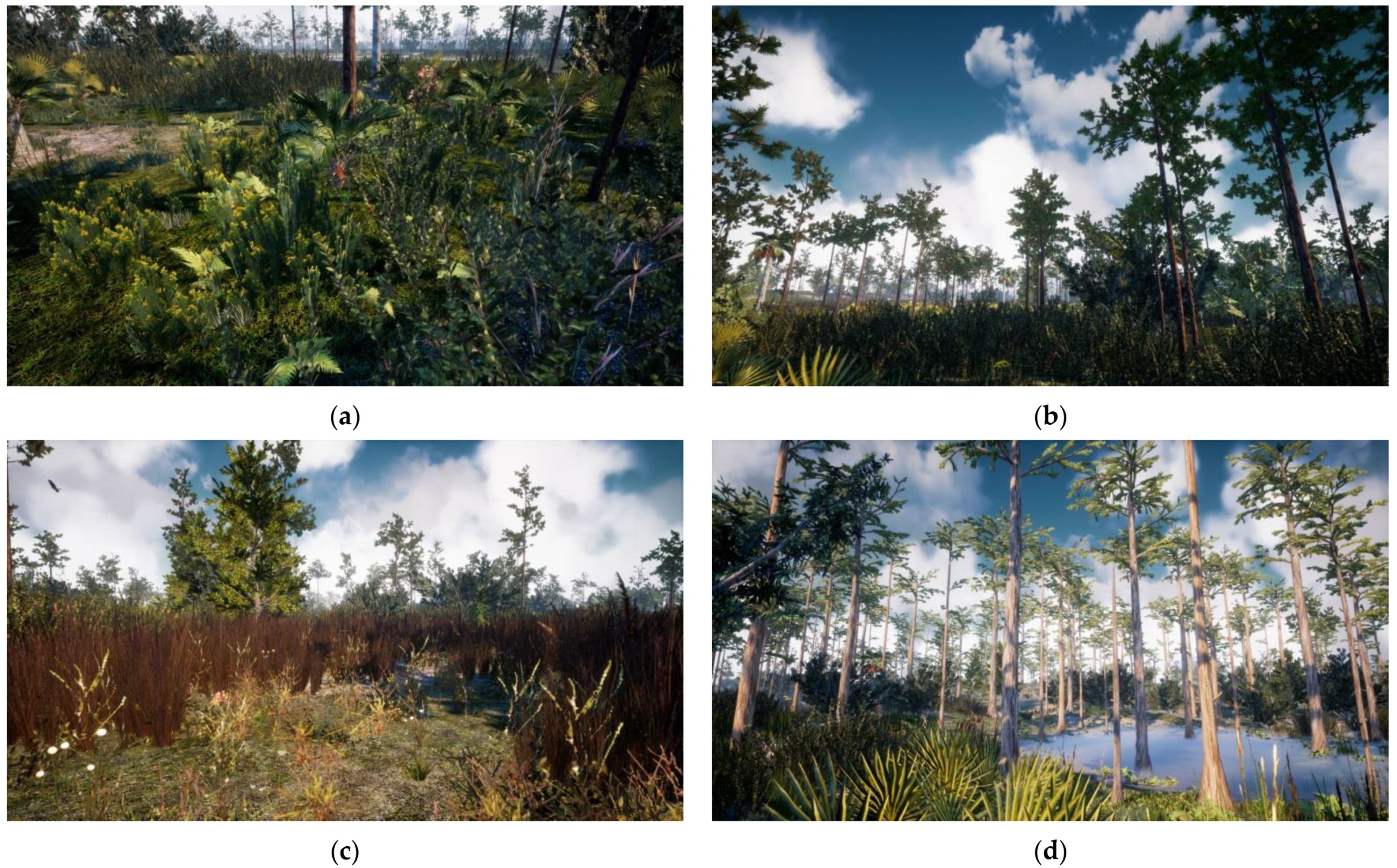

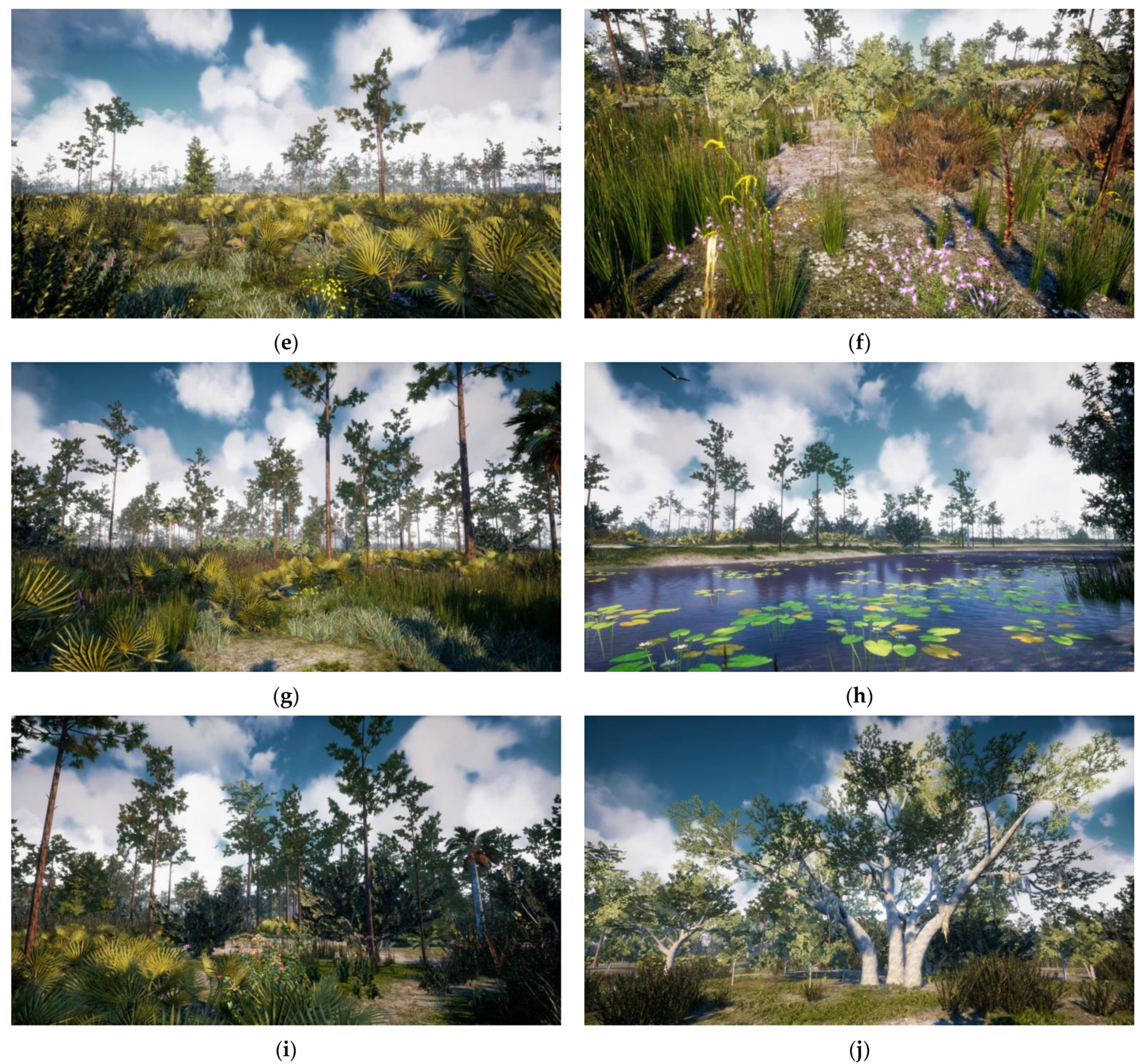

The Virtual UCF Arboretum (

Figure 1) (see

Supplementary Materials), to document the design, development, and construction process for virtual nature immersive environments and summarizes previously published results for a related project,

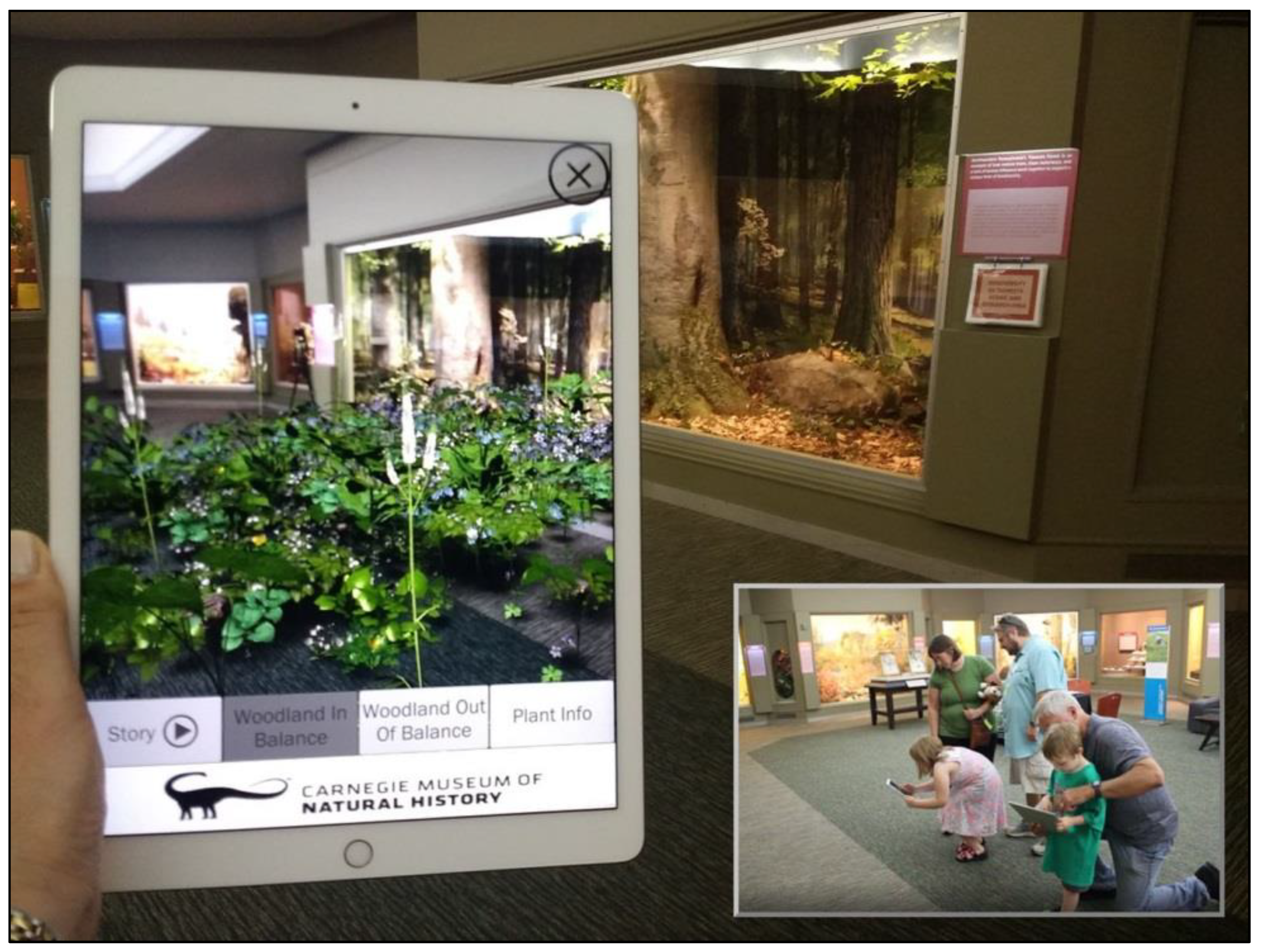

The AR Perpetual Garden (

Figure 2) (see

Supplementary Materials), to provide insight into its use as an immersive informal learning application. The primary focus of this paper is to provide a detailed document of the design, development, and construction of the virtual nature immersive application so that researchers and practitioners may replicate the work. Importantly, an argument is presented in support of factual accuracy in terms of both content and presentation for applications used for education and learning. Critically for effective educational and learning applications, the media should accurately reflect the facts. Not only should the facts, data, and educational story be accurate, but the visualization, presentation, and transmission must also be correct. Also developed is a definition of information fidelity and how it interacts with the curriculum, photorealism, and immersion to extend and define the critical design elements of concern for immersive informal learning applications, especially when modeling the natural world. The results might be helpful to developers and practitioners alike who wish to replicate the design and develop their own virtual nature immersive learning applications with AR and VR technologies.

3. Motivation and Contribution

The original motivation had two sources: the direct observation that students were highly motivated and engaged in learning activities on real field trips, and the emergence of off-the-shelf game engines that, combined with immersive hardware, can simulate large open worlds and thus construct a similar virtual learning experience. Parents often lack the knowledge to offer factually correct answers to questions on field trips or museum visits, leaving their children with misrepresentations of reality (e.g., answering “oh, that is a lizard” when it is in reality a salamander). Part of the problem with a socially constructed reality is that the “authority” is often not a domain expert and may be wrong. In the 2019 study, while mothers rested on the couch, their children explored independently, challenging the extensive literature on informal learning in museums that emphasizes social, collaborative interaction [7]. Part of the challenge was therefore to design an application that would work for a child exploring alone. When collaboration and socially constructed learning do occur, the design satisfies the curiosity of both parent and child by opening a semantically linked, authoritative factual resource. This educational story is specific to each virtual object and context, and it is important for learning correct representations of the world because it is authored, vetted, and approved for use by domain experts, which is critical for scientific communication.

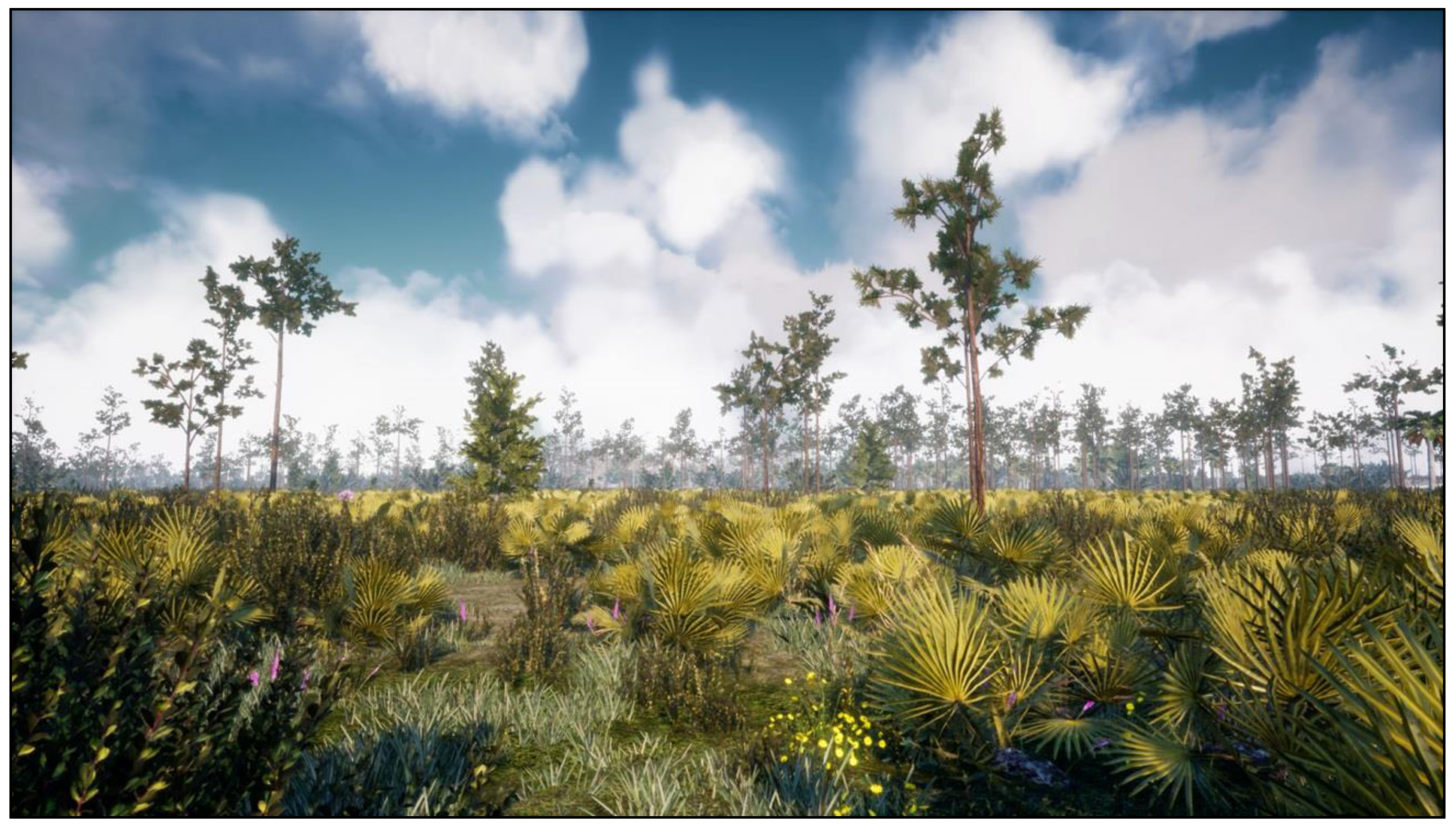

The Virtual UCF Arboretum allows the user to take a leisurely virtual hike for as long as they wish and in any direction, even off trail in this wild Central Florida environment. Much like a real hike, users experience nature from an egocentric view as a realistic visual and audio landscape based on biological research field data. It is not a typical fantasy game level, nor an immersive 2D 360 video that restricts navigation to the trail, but a new type of artificial knowledge artifact representing the plants and animals found in the real environment. It reflects the correct species inventories, population densities, and botanically correct 3D plant models as sensory patterns of data, perceived in context as ecological information. Students react to the “sharpness” of a Saw Palmetto (Serenoa repens) and are hesitant to enter the thicket. A research scientist from New York in the winter experiences the “warmth” of the Florida sun and smiles. No one enters the pond, wary of a potential alligator encounter. An American bald eagle (Haliaeetus leucocephalus) cries; a student looks up and wonders why it does not sound like a hawk, as expected. The bioacoustics are accurate to the insects and birds of each location and season, based on research data on species counts, inventories, and population densities, offering an enhanced multimodal sensory experience en route. Vision-impaired users may independently explore and experience the sounds of nature in a spatial environment in ways that are not possible in the real world. Users can virtually walk off trail through the plants and hear the crunch of plants and earth, as well as birds, frogs, and insects, as they move through the virtual world. When the application was demonstrated to the public at a science center, visitors with physical disabilities, including wheelchair users, found it especially exciting, engaging, and enjoyable.

These virtual nature applications have unusually high information fidelity because they are neither built as traditional game level environmental art using fantasy content nor “immersive” 2D 360 video VR; instead, they allow full exploration of a large open world, driven by the user rather than predetermined by the designer. As a three-dimensional, real-time, interactive data visualization of GIS data, the artifact becomes a virtual model of the plants, and when those plants are combined correctly on a terrain, a highly realistic and accurate virtual landscape is produced. It is also like walking through the glass of, and into, a diorama or exhibit in a natural history museum. Such applications may be used to reinforce, not replace, real field trips or real fieldwork [8].

The virtual nature environment is fully interactive, consistent with Slater’s theories on presence [9], and supports design affordances unique to immersive VR applications that produce place and plausibility illusions, because the content of these applications accurately represents the data that produce the ambient environmental signals with high information fidelity. The user experience is thus directly related to the perception of the transmitted signals as a whole, that is, to the overall quality of the factual content, which is based on data and expressed with minimal information loss as photorealistic and ambient audio digital signals, bounded by the immersive capacities of the output hardware.

This is very different from Bowman’s definition of “graphical fidelity” and “immersive applications” [10]. The output device alone (an iPad, HoloLens, or HTC Vive) transmits the information fidelity of the content, constrained by the device’s resolution and field of view, whether that content is a cartoon, a high-definition film, or a stylized or photorealistic fantasy game. The information fidelity is higher in the film than in the cartoon and is low in any fantasy game, independent of style, photorealism, or the “immersive” level of the device. Photorealism, or graphical fidelity, is a byproduct of high information fidelity and the software rendering capabilities, and it is enhanced by the hardware properties of the immersive devices that transmit the signals for the human to perceive. The application’s objective is to support the human in the free-choice exploration of that immersive environment for engaged and intrinsic learning. This is very different from Dede’s use of the word “immersive” [11] in the education literature, which places the learner into a structured scenario, framing perception and choices and reducing free choice.

From an information science perspective, high information fidelity is defined in terms of the accuracy, completeness, and assurance of all of the signals transmitted from the environment and perceived by the human through the sense organs. It is what makes this photorealistic, multimodal, immersive, and interactive virtual arboretum unique, different, and powerful. Past research with virtual nature found significant interaction effects between the independent variables of visual fidelity and navigational freedom on knowledge gained, with the highest actual learning gains in the combined conditions (37%) [1]. Free choice in navigation and wayfinding, not bounded to a route created at the point of recording or restricted to a game designer’s predetermined path, also appears to enhance presence and embodiment, as shown in other mixed-reality applications [12,13]. Presence appears to be enhanced by information fidelity, which, combined with freedom of exploration, produces realistic perceptions and interactions, especially when displayed on AR and VR devices that transmit signals at high resolution, with a wide field of view, and at true scale.

At individual points of personal interest, whatever an individual perceives as salient (a bright red flower, a plant with delicious fruit, a butterfly to follow), the user may select the object for more information with a simple click below the activated cross-hair indicator. This responds to a spontaneous emotional reaction to the beauty of the virtual natural world and satisfies an innate human need to know and understand the world. That salient event combines the user’s response to the signals in the virtual environment with emotions, perceptions, memory, and an instinctual decision to take action (e.g., clicking on the object), which the design supports with an interactive virtual field guide (Figure 3) and an educational story that satisfies curiosity in the moment. The VR technical design component is similar to the heads-up display (HUD) user interface (UI) common in many games and other 3D UI VR applications. Many AR applications use UI annotations as overlays on real-world objects, as a type of virtual tooltip. Such design elements provide semantically context-sensitive interaction. This definition of interaction is more closely related to Bowman’s work on immersive 3D UIs than to Slater’s. Such semantic interactions enhance presence by increasing engagement through active learning and by enhancing perception with knowledge gained about the world. To learn to see the world is to learn the meaning of what is seen.

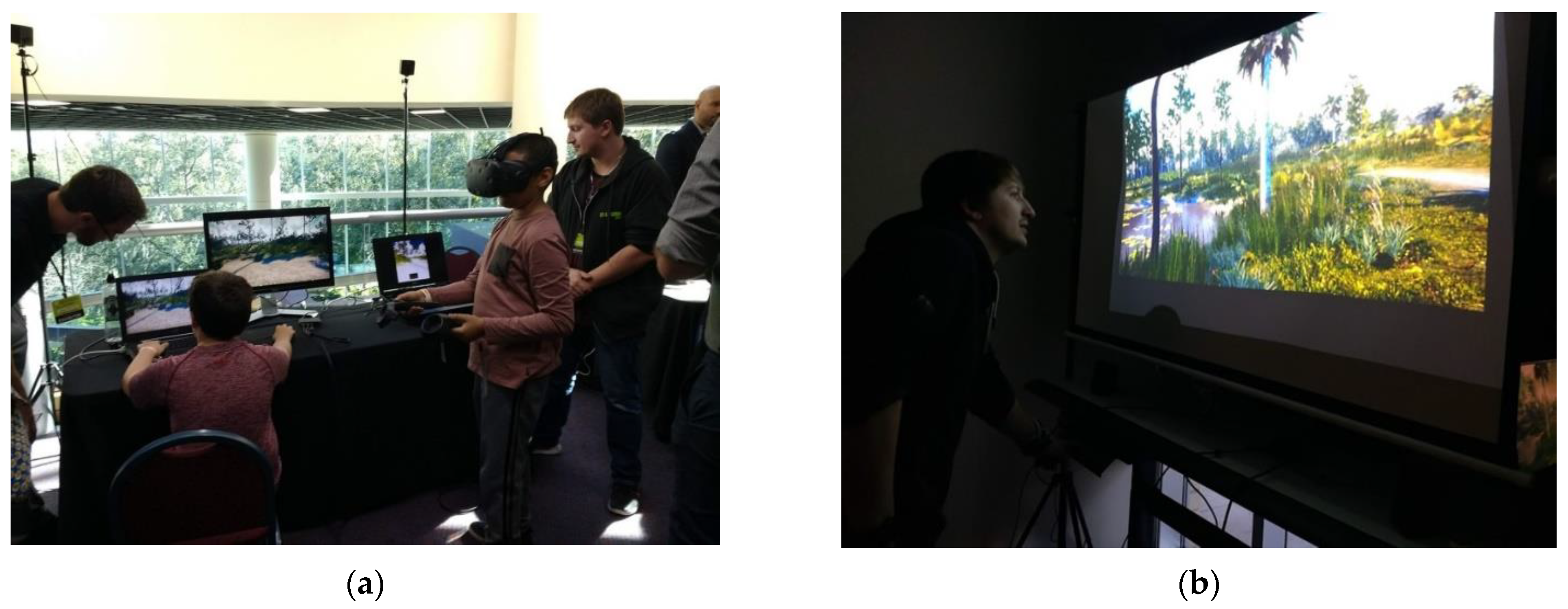

The PC version can be used on a desktop, with an immersive VR headset, or connected to a treadmill. Because the virtual nature model was developed in Unreal Engine, it was easily extended to work with a VR headset (e.g., HTC Vive) and hand controllers to study the emotional reactions needed to support learning outcomes (Figure 4a). Navigation in the VR headset version is more constrained than in the desktop or treadmill versions and relies on teleportation to avoid cybersickness. The treadmill version is expected to enhance presence through kinesthetic sensory feedback loops that increase embodiment, engagement, and emotional reactions, and to facilitate future research on spatial cognition broadly and learning specifically (Figure 4b). An immersive AR version of the arboretum, with optimized assets, is being developed for a future study.

5. Materials and Methods

5.1. Data and Software Applications Used to Develop the Game Level Environment

Many non-entertainment applications have been designed and developed with game engines. The reasons are many: the engines are free, they make it easy to construct large open worlds and environments, and they offer an optimized output pipeline for producing AR and VR applications. The current versions of the Unity and Unreal game engines offer advanced photorealistic computer graphics rendering as well as real-time, interactive, multimodal, and user interface features. Both game engines support application programming interfaces (APIs) for connecting external data sources to control in-game dynamics, such as lighting and wind effects, so that game environments can act as simulations. Both also support a wide array of user interface tools for generating overlays and visualizations that direct attention and support user interaction. Recently, both game engines have begun to offer streamlined development pipelines that make immersive AR and VR development easier than in the past and support a wider range of hardware manufacturers.

These tools support automatic terrain construction from height maps, making them well suited to visualizing terrain and GIS data. There are many ways to import real terrain data to automatically generate a realistic environment as a game level. Game engines also support location-based sound and level-of-detail (LOD) optimization, enabling high-fidelity multisensory effects across large open worlds. Unreal Engine was selected over Unity for The Virtual UCF Arboretum research portfolio because of the superior photorealistic graphics quality it required, whereas Unity was selected for the AR apps because of its tighter and easier development pipeline for AR devices.

Terrain map data may be converted into height maps that automatically generate virtual terrains for a game level environment. Environments without vegetation, such as a VR Mars, a VR Moon, or VR Earth deserts or mountains, are simple to construct. To create a lush and accurate forest, data on the plants are required. ESRI is the de facto standard GIS database used in forestry and ecology, and such data are typically displayed as abstract dots on a 2D GIS map. Although accurate, such abstract data visualizations are not emotionally engaging, immersive, or experiential, all qualities shown to be desirable for learning outcomes, such as in simulated virtual field trips.

For this project to achieve the emotional and learning design objectives, the ESRI data had to be converted into virtual plants at a true scale and displayed in patterns representative of real ecological regions in the virtual terrain. The virtual arboretum project sourced real plant inventory and population distribution data for each of the 10 natural communities of interest in collaboration with the UCF Arboretum. Once the data were converted into information the game engine could process, the rendering and LOD tools automatically displayed the virtual plants on top of the virtual terrain. The pattern of all of the plants viewed together, while not representing unique individuals, matched the real plant population inventory and population densities as statistical extrapolations of the real natural environment. Thus, the virtual nature model presents an accurate pattern of foliage to the viewer. In order to increase the information fidelity, and when the line of sight was clear, drone images were used for the precise location of individual plant models, such as the waterlilies placed in exact locations on the pond.
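The step from surveyed densities to rendered plants can be illustrated with a short sketch. This is a minimal illustration under stated assumptions, not the project's pipeline: each natural community is simplified to an axis-aligned rectangle in metres (the real boundaries come from the ESRI community map), positions are drawn uniformly at random, and output uses Unreal Engine's default scale of 1 unreal unit = 1 cm. `Community` and `scatter_species` are hypothetical names.

```python
import random
from dataclasses import dataclass
from typing import List, Tuple

UU_PER_METRE = 100.0  # Unreal Engine default scale: 1 unreal unit = 1 cm


@dataclass
class Community:
    """Hypothetical: a natural community simplified to an axis-aligned rectangle (metres)."""
    name: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    @property
    def area_m2(self) -> float:
        return (self.x_max - self.x_min) * (self.y_max - self.y_min)


def scatter_species(community: Community, density_per_m2: float,
                    rng: random.Random) -> List[Tuple[float, float]]:
    """Place one species so its instance count matches the surveyed density.

    The count is the density extrapolated to the community's area; positions
    are uniform random, so no instance stands for a real individual, but the
    overall pattern matches the inventory statistics. Returns (x, y) in
    Unreal units.
    """
    count = round(density_per_m2 * community.area_m2)
    points = []
    for _ in range(count):
        x_m = rng.uniform(community.x_min, community.x_max)
        y_m = rng.uniform(community.y_min, community.y_max)
        points.append((x_m * UU_PER_METRE, y_m * UU_PER_METRE))
    return points


# Usage with hypothetical numbers: 0.05 plants per m² over a 200 m x 100 m block.
scrub = Community("Scrub", 0.0, 0.0, 200.0, 100.0)
palmettos = scatter_species(scrub, 0.05, random.Random(42))
```

With these illustrative numbers the sketch places 1,000 instances, and the fixed seed keeps the layout reproducible between builds. Individually located plants, such as the drone-positioned waterlilies, would skip the random draw and use their surveyed coordinates directly.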

5.2. Sourced Content: Facts, Educational Story, Photographs, and 3D Plant Models

The Virtual Field Guide, covering 35 unique plant species native to Florida for the arboretum and 35 unique plant species native to Pennsylvania for the Carnegie, served three functions. First, the plant facts, photographs, and educational stories were collaboratively sourced using the WordPress platform. This process required the biologists to source, review, and vet all of the plant facts the digital media team needed to create accurate 3D plant models, such as plant height and spread and membership in natural communities. The digital media team shared the 3D plant models on SketchFab.com, creating the project’s 3D Plant Atlas, which supported the domain experts’ review, vetting, and approval process.

Second, the Virtual Field Guide built in WordPress.com functions as a stand-alone educational website available to anyone with a web browser, offering broader impact and access. Aligned to national educational standards, it was published on PBS LearningMedia in 2019 and offers browsing and searching by common name, plant metadata, Latin name, photographs, educational stories, and gardening facts. The 3D Plant Atlas component allows teachers nationwide to use any web-browser-enabled device, or low-cost Google Cardboard VR viewers and AR-enabled smartphones, to bring educational material about plants into their classrooms for free as an immersive botany lesson. Such an experience could be especially beneficial for urban youth without access to forests, who are underrepresented in STEM fields [28], by motivating interest in the science of the natural world and related careers [29].

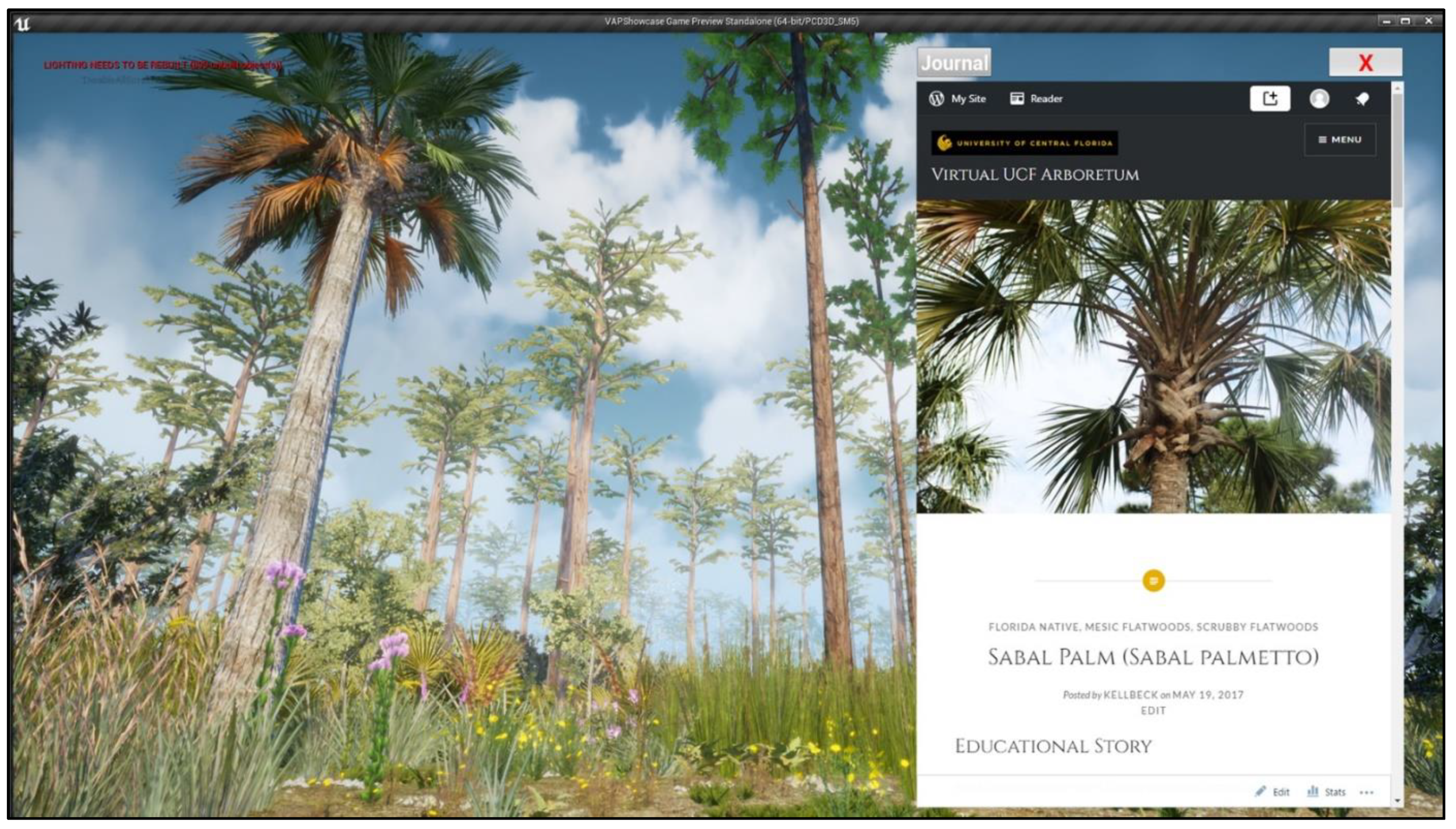

The third function was to use the entire published website as the Virtual Field Guide in-game for learning in the moment of curiosity. Experienced in-game as a type of heads-up display (HUD), it allows a user to click on a plant to open a fact card about that plant, as well as to keep a journal of all plants found while engaged in the virtual field trip and access a 2D map of the entire arboretum as a navigation and wayfinding aid. The fact that the educational content is not hardcoded or embedded inside the game is an important design innovation. This design represents a web of knowledge connected to objects inside the game level that is programmatically independent and thus easy to maintain and extend.
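Because the educational content lives outside the game, an in-game selection only needs to resolve a mesh name to an external page. The sketch below assumes a hypothetical JSON registry exported from the field guide site and hypothetical asset naming conventions (an `SM_` prefix and `_LOD0` or `_02` suffixes); the `example.org` URLs are placeholders, not the project's addresses, and all function names are illustrative.

```python
import json
from typing import Dict, Optional

# Hypothetical registry: normalized mesh name -> field guide page.
# Kept outside the game build so content can change without repackaging.
REGISTRY_JSON = """
{
  "SawPalmetto": "https://example.org/field-guide/saw-palmetto/",
  "LongleafPine": "https://example.org/field-guide/longleaf-pine/"
}
"""


def load_registry(text: str) -> Dict[str, str]:
    return json.loads(text)


def normalise_mesh_name(mesh_name: str) -> str:
    """Strip a hypothetical 'SM_' prefix and a trailing '_LODn' or '_NN' suffix."""
    name = mesh_name[3:] if mesh_name.startswith("SM_") else mesh_name
    head, sep, tail = name.rpartition("_")
    if sep and (tail.startswith("LOD") or tail.isdigit()):
        name = head
    return name


def field_guide_url(mesh_name: str, registry: Dict[str, str]) -> Optional[str]:
    """Page to open for the selected mesh, or None if no entry exists yet."""
    return registry.get(normalise_mesh_name(mesh_name))


registry = load_registry(REGISTRY_JSON)
url = field_guide_url("SM_SawPalmetto_LOD0", registry)
```

Under this arrangement, adding a species means adding a registry entry and a web page, with no change to the game build, which is the maintainability benefit the design aims for.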

The digital media team relied on data to construct accurate and realistic plant models and landscapes, representing high information fidelity. SketchFab.com was used to publish the 3D models, first for review in collaboration with the scientists and then online as a stand-alone atlas of plant models that the public can use as AR and VR models in educational activities. Although photogrammetry and laser-scanned point clouds (e.g., Megascans) will improve the efficiency of future capture and provide 3D models with higher resolution, finer detail, and greater complexity at higher polygon counts, reviews with botanists are expected to continue in order to ensure accurate identification and the level of detail required to represent each model’s salient features. Each plant went through an iterative co-creation and review process with a botanist or biologist and artists in the digital media team before it was vetted and approved for release. The scientists worked with the digital media artists to review the 3D models, correct errors, and agree on the level of information fidelity required before release and use. All of the AR and VR photorealistic 3D plant models are botanically correct.

The ambient bioacoustics were gathered and reviewed in a similar manner and accurately represent the insects, amphibians, and birds found in the environment. Limited artificial life takes the form of animated avatars. The American bald eagle’s call sounds more like a seagull than a hawk, but the hawk cry is used in many nature films because it is “prettier”. In the virtual arboretum, the eagle sounds as it should. These components, when combined, make a high information fidelity model of the real natural environment possible and open many lines of future research and development.
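One way the same survey data could drive a location- and season-specific soundscape is sketched below. This is an assumption, not the project's documented method: the next call is picked by weighted random choice over relative abundances for each natural community and season. The abundance numbers and the `species_x`/`species_y` placeholders are purely illustrative.

```python
import random
from typing import Dict, Optional, Tuple

# Illustrative relative abundances of calling species per (community, season).
# Real values would come from survey counts vetted by the domain experts.
ABUNDANCE: Dict[Tuple[str, str], Dict[str, int]] = {
    ("Waterbody", "spring"): {"green_treefrog": 30, "bald_eagle": 1},
    ("Scrub", "winter"): {"species_x": 5, "species_y": 2},
}


def next_call(community: str, season: str, rng: random.Random) -> Optional[str]:
    """Choose the species whose recorded call plays next, weighted by abundance."""
    weights = ABUNDANCE.get((community, season))
    if not weights:
        return None  # stay silent rather than play an unsupported species
    species = list(weights)
    return rng.choices(species, weights=[weights[s] for s in species], k=1)[0]
```

With these weights a rare species such as the eagle is heard only occasionally, while common callers dominate, so the soundscape's mix tracks the survey data rather than a designer's preference.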

5.3. The Design and Construction Process

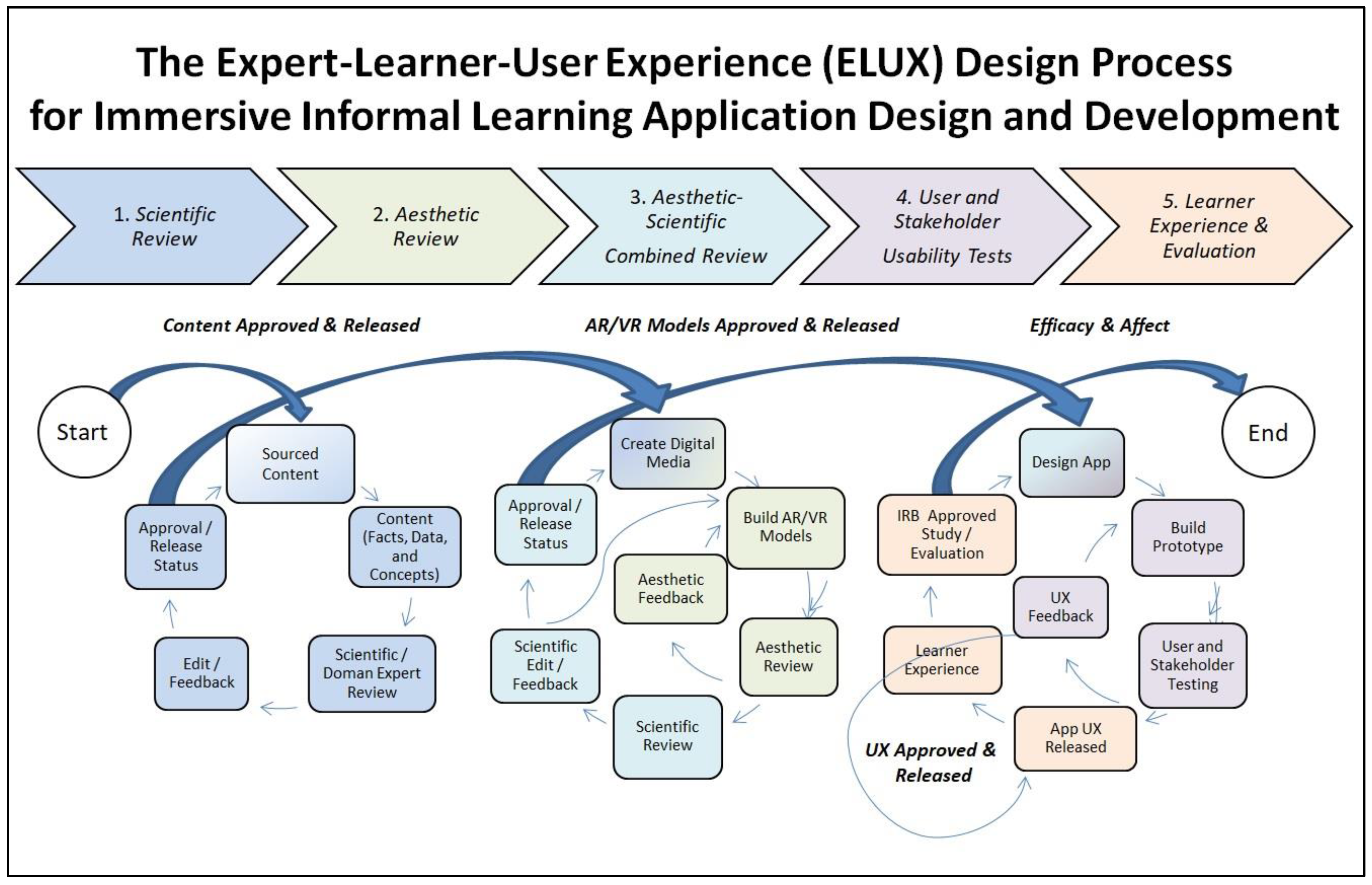

An interdisciplinary co-design process was used to integrate the requirements of scientists and artists and to carefully create these artificial knowledge artifacts for communicating material that not only engages but also teaches. Through a modified user-centered design (UCD) approach [30] integrating the Expert-Learner-User eXperience (ELUX) design process [31], holistic requirements emerged, were captured, and were used for the application design specification (Figure 5).

This iterative co-design process required multiple feedback loops:

Scientific Review: iterative source–review–correct–validate process required to authenticate all data, information, facts, and concepts for approval and release by domain experts.

Aesthetic Review: iterative creative process to construct 3D models reviewed relative to the scientific facts and the technical constraints of the AR and VR platforms.

Aesthetic-Scientific Combined Review: iterative artistic-scientific process of all digital media reviewed for information accuracy relative to the expression of those facts in the models and visualizations with collaborative correction of errors, then a joint decision and approval for release.

User and Stakeholder Usability Tests: traditional iterative software application design and development process with attention to user experience, technical constraints, and digital distribution standards.

Learner Experience and Actual Learning Outcomes: learning impact studies, approved by UCF’s Institutional Review Board (IRB), to evaluate actual learning outcomes.

5.4. Details of the ELUX Production Process

5.4.1. Data Gathering

The project started with a meeting with the arboretum biologists to gather data and maps for the plants and landscape. There are thousands of plants in the real arboretum, but only the most important ones needed to satisfy the educational objectives were selected for modeling. For each plant, an authoritative description was created, including the common name; Latin name; soil, water, and sunlight requirements; other gardening facts; and membership in each natural community. Educational stories related to the field trip outreach programs were also created, highlighting fun facts about each plant. Photographs, maps, data, plant inventories by species, and plant population densities were also collected. Then, undergraduate research assistants in the digital media program, guided by the biologists, went into the field to locate, photograph, and measure each plant of interest.
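The authoritative description gathered for each plant can be thought of as a record with required fields. The hypothetical `PlantRecord` sketch below checks a record against the ten natural communities listed in Section 5.4.2 and flags entries not yet vetted; the field names and the `vetted_by` field are assumptions, not the project's WordPress schema.

```python
from dataclasses import dataclass, field
from typing import List, Set

# The ten natural communities listed in Section 5.4.2.
NATURAL_COMMUNITIES = {
    "Basin Swamp", "Baygall", "Depression Marsh", "Dome Swamp",
    "Mesic Flatwoods", "Scrub", "Scrubby Flatwoods", "Waterbody",
    "Wet Flatwoods", "Xeric Hammock",
}

REQUIRED_TEXT_FIELDS = ("common_name", "latin_name", "soil", "water", "sunlight")


@dataclass
class PlantRecord:
    """Hypothetical schema for one authoritative plant description."""
    common_name: str
    latin_name: str
    soil: str
    water: str
    sunlight: str
    communities: Set[str] = field(default_factory=set)
    educational_story: str = ""
    vetted_by: str = ""  # hypothetical: the reviewing biologist or botanist

    def problems(self) -> List[str]:
        """List reasons the record is not ready for release (empty if none)."""
        issues = [f"missing {name}" for name in REQUIRED_TEXT_FIELDS
                  if not getattr(self, name).strip()]
        if not self.communities:
            issues.append("no natural community")
        unknown = self.communities - NATURAL_COMMUNITIES
        if unknown:
            issues.append(f"unknown communities: {sorted(unknown)}")
        if not self.vetted_by:
            issues.append("not yet vetted")
        return issues
```

A check like this mirrors the source–review–approve loop: a record is only released once `problems()` returns an empty list.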

5.4.2. The Natural Communities and Plant Content

The ten natural communities in the selected area of the real arboretum are Basin Swamp, Baygall, Depression Marsh, Dome Swamp, Mesic Flatwoods, Scrub, Scrubby Flatwoods, Waterbody, Wet Flatwoods, and Xeric Hammock.

The plants selected from those areas were: American Beauty Berry (Callicarpa americana), Blazing Star (Liatris tenuifolia), Bog Button (Lachnocaulon anceps), Bracken Fern (Pteridium aquilinum var. pseudocaudatum), Scarlet Calamint (Calamintha coccinea), Chalky Bluestem (Andropogon virginicus var. glaucus), Coastalplain Honeycombhead (Balduina angustifolia), Coontie (Zamia integrifolia), Dahoon Holly (Ilex cassine), Feay’s Palafox (Palafoxia feayi), Fetterbush (Lyonia lucida), Florida Paintbrush (Carphephorus corymbosus), Florida Tickseed (Coreopsis leavenworthii), Forked Bluecurls (Trichostema dichotomum), Fourpetal St. John’s Wort (Hypericum tetrapetalum), Goldenrod (Solidago stricta), Hooded Pitcher Plant (Sarracenia minor), Longleaf Pine (Pinus palustris), Lopsided Indiangrass (Sorghastrum secundum), Pond Cypress (Taxodium ascendens), Prickly Pear Cactus (Opuntia humifusa), Rayless Sunflower (Helianthus radula), Reindeer Moss (Cladonia spp.), Sabal Palm (Sabal palmetto), Sand Live Oak (Quercus geminata), Saw Palmetto (Serenoa repens), Scrub Rosemary (Ceratiola ericoides), Shortleaf Rosegentian (Sabatia brevifolia), Slender Flattop Goldenrod (Euthamia caroliniana), Spanish Moss (Tillandsia usneoides), Tall Jointweed (Polygonella gracilis), Titusville Balm (Dicerandra thinicola), Waterlily (Nymphaea odorata), Wax Myrtle (Myrica cerifera), and Wiregrass (Aristida stricta).

5.4.3. Field Research to Gather Data and Photographs

For each plant selected for modeling, a portrait photograph of the plant in its location was first taken as a reference. Then, a cardboard screen was placed around the plant, surrounding both sides and the back, to isolate it from the visual clutter of the background environment. The screen had a measurement grid inscribed on it to indicate height and width, which, when photographed, provided a visual reference for later use in constructing the 3D polygon structures. Each petal, leaf, and other part of the plant was then placed flat on a blue-screen cloth to photograph each separate plant component (e.g., leaves, petals, stamens, pistils, any berries, nuts, or cones, and stems) (Figure 6a). These detailed studies of the organic forms made up the texture map library for each 3D plant model. In this creative process, the undergraduate research students developed advanced technical skills in working with complex organic forms and gained new knowledge about plants.
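The inscribed grid turns each photograph into a measuring tool: a known grid spacing divided by its length in pixels gives a scale factor. A minimal sketch, assuming the measured feature lies roughly in the plane of the grid (parts nearer to or farther from the camera appear larger or smaller); the function names and numbers are hypothetical.

```python
def cm_per_pixel(grid_spacing_cm: float, grid_spacing_px: float) -> float:
    """Scale factor from the inscribed grid: known spacing / its length in pixels."""
    if grid_spacing_px <= 0:
        raise ValueError("grid spacing in pixels must be positive")
    return grid_spacing_cm / grid_spacing_px


def measure_cm(length_px: float, grid_spacing_cm: float, grid_spacing_px: float) -> float:
    """Convert a length measured in the photograph to centimetres.

    Only valid for features roughly in the plane of the grid; parts of the
    plant nearer to or farther from the camera will be over- or under-estimated.
    """
    return length_px * cm_per_pixel(grid_spacing_cm, grid_spacing_px)
```

For example, with hypothetical 10 cm grid squares spanning 250 pixels, a frond measured at 1,375 pixels would be about 55 cm tall.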

5.4.4. Research to Gather Artificial Life Digital Media Assets and Ambient Bioacoustics

The insects, amphibians, birds, and fish include American bald eagles, green treefrogs (Hyla cinerea), largemouth bass, many butterflies (e.g., monarch, gulf fritillary, zebra longwing, and giant swallowtail), and damselflies and dragonflies accurate to the location and time. These assets were either gathered directly from the environment or purchased online, and they were verified with the scientific domain experts involved in the project.

5.4.5. The Virtual Field Guide Website

The WordPress platform was used to build the Virtual Field Guide website (Figure 7) and to support collaboration within an interdisciplinary, geographically distributed virtual team. The different levels of project management, editorial, authorship, and readership roles were supported through the platform’s user role controls. Multiple virtual team members in both the scientific and digital media teams were able to create, edit, approve, and publish content. The biologists provided data about each plant (such as the common name, Latin name, educational story, facts on size and spread, gardening factors, and natural community locations) for publication and for expression as meta tags. The digital media team published the photographs and videos and embedded the 3D models once they were approved, completing each entry. The interdisciplinary team carried out the design, production, and publication in a highly controlled iterative process that supported the sourcing, review, approval, and release of the information, which was necessary for factual accuracy. Factual accuracy was required for the educational and learning objectives of the applications.

Photographs that were gathered in the field and authenticated by the scientists were published for each plant. The correct identification of each plant proved to be invaluable to the artists, who did not know the plant names. The digital media team used this information as a reference guide when building the virtual plants and environment, which was particularly important for setting each plant’s acceptable height ranges in-game to real-world scales. Conveniently, the university’s web support team standards allowed for the easy integration of the Virtual Field Guide website with the university’s arboretum website for maintenance after it was published. The production website is currently published and is an accessible resource for teachers on PBS LearningMedia indexed to national educational standards, and can be used in preparing for and reinforcing real-world field trips to the real arboretum for the university and local communities.
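Setting acceptable in-game heights amounts to converting a vetted real-world height range into a scale range for a mesh of known modeled height. A minimal sketch with hypothetical names, assuming uniform scaling and Unreal Engine's default of 1 unreal unit = 1 cm; the resulting bounds could then serve as the minimum and maximum instance scale for that plant's foliage.

```python
from typing import Tuple

CM_PER_METRE = 100.0  # Unreal Engine default: 1 unreal unit = 1 cm


def scale_range(mesh_height_cm: float, min_height_m: float,
                max_height_m: float) -> Tuple[float, float]:
    """Uniform-scale bounds that keep instances within vetted real-world heights."""
    if mesh_height_cm <= 0:
        raise ValueError("mesh height must be positive")
    if not 0 < min_height_m <= max_height_m:
        raise ValueError("expected 0 < min_height_m <= max_height_m")
    return (min_height_m * CM_PER_METRE / mesh_height_cm,
            max_height_m * CM_PER_METRE / mesh_height_cm)
```

As an illustration (not botanical data), a mesh modeled at 150 cm with a vetted range of 0.9 to 2.1 m yields a scale range of 0.6 to 1.4, so no instance can grow taller or shorter than the field guide allows.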

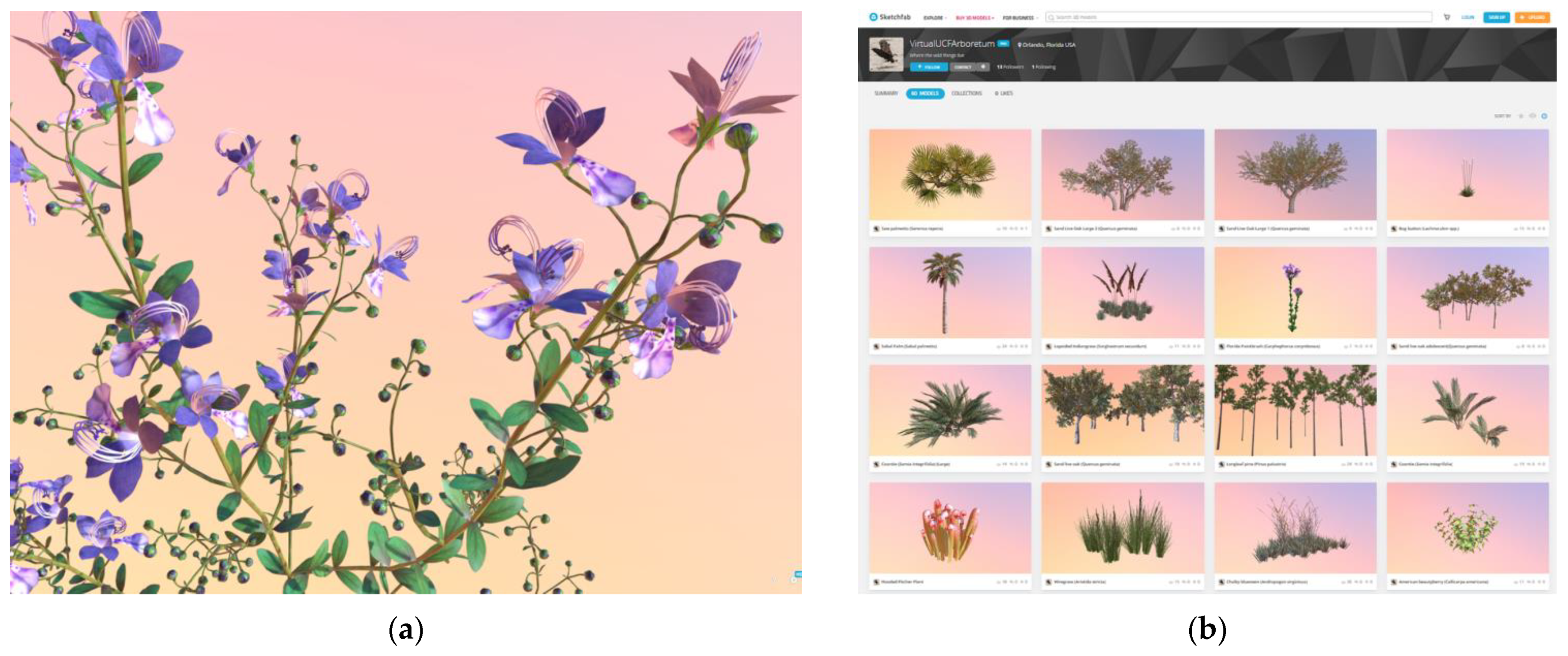

5.4.6. The 3D Plant Atlas Model Library

The photographs were processed with Photoshop, Quixel SUITE 2.0, and Substance Painter. Maya 2018 was used to create the 3D plant and flower models (Figure 8a). The Maya models were exported as FBX files and imported into Unreal Engine with their textures. Several new techniques were applied to increase realism: realistic light reflection and translucency were added to enhance the interaction of sunlight with the leaves, and bump-map techniques were used to enhance leaf and bark textures, improving both the detail and the complexity of each plant. Once a 3D plant model met the quality standards of the biologists or botanists, it was released to the publicly viewable 3D Plant Atlas on SketchFab.com (Figure 8b).

The art asset creation process relied on the Virtual Field Guide for data and on the photographs collected in the field to build each 3D plant model. The process involved a surprisingly steep learning curve for the undergraduate digital media students, who had never created organic models before. Much of the complexity in nature was difficult to see or perceive at first; many students did not know what to look for and relied heavily on iterative, highly detailed feedback from the scientists to correct errors and achieve an accurate and realistic virtual plant model. A few of the digital media modelers had to invest time in field research, such as drawing plants to better understand their structures. SketchFab.com provided the framework to share models privately and collaborate on feedback in real time. Each model’s polygon count was bounded to meet the real-time frame rates required by the AR and VR platforms. Recent advances in Unreal Engine will relax this constraint, but not for AR, owing to many factors in mobile distribution. The construction of the 3D plant models required this dynamic, collaborative, iterative review process, both artistic and scientific, to ensure content accuracy and visual quality and thereby achieve high information fidelity.
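The polygon bound can be reasoned about as a per-frame budget. The refresh rate fixes the time available per frame (about 11.1 ms at 90 Hz, the refresh rate of the original HTC Vive), and instanced foliage multiplies each model's triangle count by the number of visible instances at each LOD. The sketch below totals that cost against a triangle budget; the budget and per-species numbers are hypothetical, since real limits depend on the device, shaders, and engine version.

```python
from typing import Dict, List, Tuple

# species name -> (visible instances per LOD, triangles per LOD)
SceneLoad = Dict[str, Tuple[List[int], List[int]]]


def frame_budget_ms(refresh_hz: float) -> float:
    """Milliseconds available to render one frame at a given refresh rate."""
    return 1000.0 / refresh_hz


def species_triangles(scene: SceneLoad) -> Dict[str, int]:
    """Triangles per species: sum over LODs of visible instances x triangles."""
    return {name: sum(n * t for n, t in zip(instances, triangles))
            for name, (instances, triangles) in scene.items()}


def check_budget(scene: SceneLoad, triangle_budget: int) -> Tuple[int, bool, List[str]]:
    """Return the total, whether it fits the budget, and species ranked by cost."""
    costs = species_triangles(scene)
    total = sum(costs.values())
    ranked = sorted(costs, key=costs.get, reverse=True)
    return total, total <= triangle_budget, ranked


# Hypothetical view: two LODs per species.
scene = {
    "saw_palmetto": ([200, 800], [5000, 600]),
    "wiregrass": ([1000, 4000], [300, 40]),
}
total, fits, ranked = check_budget(scene, 2_000_000)
```

Ranking species by cost points to the models whose polygon counts or LOD settings would need trimming first when a view exceeds the budget.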

5.4.7. Selectable Plants and Interactive Points of Interest

Interaction required each virtual plant to register a user event and then respond. When a user approached a plant, the cross-hairs turned bright green to invite inquiry. The user event to select the plant depended on the user interface: a mouse click on the plant, a finger tap on the iPad, or a line cast in VR. The plant responded by opening the Virtual Field Guide in-game (Figure 3), making its facts, concepts, and educational stories visible. To achieve the desired user interaction with the content, all plant meshes in the level are of the type “Foliage Instanced Static Mesh”. A line is cast 10 times per second from the player in the direction they are looking, and it checks for collision with geometry. If the line hits a plant, a mouse click is registered by a widget that opens the plant information from the external website, selected by the name of the mesh itself, and displays it in the HUD. In this way, 3D objects in-game expose semantic metadata for real-time interaction with external data. The desktop version supported direct manipulation between the mouse, pointer, cross-hairs, and in-game object. The VR version supported a 3D UI as an in-game HUD widget that rotated to face the player and responded to input from the hand controllers, which cast a virtual line from the virtual hand to the target on the HUD menu.
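The selection logic can be sketched outside the engine. In Unreal Engine itself, the engine's line trace against collision geometry does this work; the standalone sketch below substitutes axis-aligned bounding boxes and the slab intersection test, and re-traces at most 10 times per second as described. `PlantBounds`, `Crosshair`, the mesh names, and the 500-unit trace length are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class PlantBounds:
    """Hypothetical stand-in for a plant instance's collision: an axis-aligned box."""
    mesh_name: str
    lo: Vec3  # minimum corner
    hi: Vec3  # maximum corner


def ray_hits_box(origin: Vec3, direction: Vec3, box: PlantBounds,
                 max_dist: float) -> Optional[float]:
    """Slab test: distance along the ray to the box, or None if missed."""
    t_near, t_far = 0.0, max_dist
    for o, d, lo, hi in zip(origin, direction, box.lo, box.hi):
        if abs(d) < 1e-12:  # ray parallel to this pair of slabs
            if o < lo or o > hi:
                return None
            continue
        t1, t2 = (lo - o) / d, (hi - o) / d
        if t1 > t2:
            t1, t2 = t2, t1
        t_near, t_far = max(t_near, t1), min(t_far, t2)
        if t_near > t_far:
            return None
    return t_near


def trace(origin: Vec3, direction: Vec3, plants: List[PlantBounds],
          max_dist: float = 500.0) -> Optional[PlantBounds]:
    """Nearest plant hit by the view ray within max_dist, or None."""
    best, best_t = None, math.inf
    for plant in plants:
        t = ray_hits_box(origin, direction, plant, max_dist)
        if t is not None and t < best_t:
            best, best_t = plant, t
    return best


class Crosshair:
    """Re-traces at most 10 times per second; green while a plant is targeted."""
    INTERVAL_S = 0.1

    def __init__(self) -> None:
        self.last_trace = -math.inf
        self.target: Optional[PlantBounds] = None

    def update(self, now_s: float, origin: Vec3, direction: Vec3,
               plants: List[PlantBounds]) -> str:
        if now_s - self.last_trace >= self.INTERVAL_S:
            self.last_trace = now_s
            self.target = trace(origin, direction, plants)
        return "green" if self.target is not None else "default"

    def on_click(self) -> Optional[str]:
        """Mesh name handed to the field guide lookup, if a plant is targeted."""
        return self.target.mesh_name if self.target is not None else None
```

A click then hands only the hit mesh's name to the external field guide lookup, which keeps the game logic independent of the educational content.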

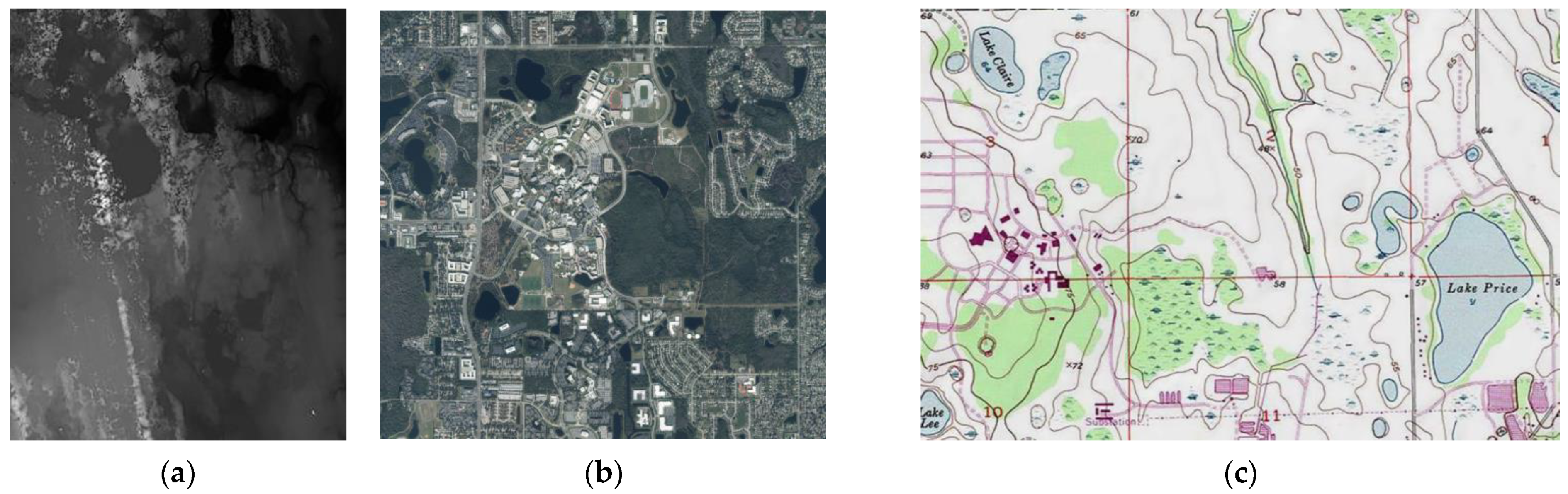

5.4.8. Terrain Maps and Data File Import

The game level required a virtual terrain scaled to match the real terrain, producing an immersive experience accurate in scale to all real-world measurements and geo-locations. Topographical terrain data were sourced from the U.S. Geological Survey (USGS) database and from an older map with greater landmark detail, and a high-resolution aerial photograph provided additional landmark references (Figure 9). These data, in the formats provided, could not be used to generate a terrain automatically in Unreal Engine. Therefore, the development team relied on terrain data from TerrainParty.com, downloaded as a PNG heightmap, to generate the game-level terrain automatically (Figure 10b). The minimum sample area this service provides is 8 km², so the 1 km² sample used for the arboretum was extracted from the original PNG. This extracted sample was scaled up to the same resolution as the landscape in Unreal Engine, so that each pixel in the PNG equaled one square meter in the engine. To remove sharp angles between tiles on the landscape, the imported PNG was also filtered with a 1-pixel Gaussian blur to smooth the surface.
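The heightmap preparation above (crop a 1 km² region, resample it so that one pixel spans one meter, then smooth it) can be sketched with NumPy. The crop offsets, the 1000-pixel target size, the bilinear resampling, and the reading of a “1-pixel Gaussian blur” as a standard deviation of one pixel are all assumptions, not the team’s documented settings.

```python
import numpy as np


def crop_square(height: np.ndarray, row0: int, col0: int, size_px: int) -> np.ndarray:
    """Cut a square sub-region (the 1 km² sample) out of the downloaded heightmap."""
    return height[row0:row0 + size_px, col0:col0 + size_px]


def resample_bilinear(height: np.ndarray, out_size: int) -> np.ndarray:
    """Resample a square heightmap to out_size x out_size with bilinear interpolation."""
    n = height.shape[0]
    coords = np.linspace(0, n - 1, out_size)
    i0 = np.floor(coords).astype(int)
    i1 = np.minimum(i0 + 1, n - 1)
    f = coords - i0
    # Interpolate between rows first, then between columns.
    rows = height[i0, :] * (1 - f)[:, None] + height[i1, :] * f[:, None]
    return rows[:, i0] * (1 - f)[None, :] + rows[:, i1] * f[None, :]


def gaussian_blur(height: np.ndarray, sigma: float = 1.0) -> np.ndarray:
    """Separable Gaussian blur with edge padding, so the output keeps the input shape."""
    radius = int(3 * sigma)
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-x ** 2 / (2 * sigma ** 2))
    kernel /= kernel.sum()
    padded = np.pad(height, radius, mode="edge")
    tmp = np.apply_along_axis(lambda r: np.convolve(r, kernel, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda c: np.convolve(c, kernel, mode="valid"), 0, tmp)


def prepare_heightmap(source: np.ndarray, row0: int, col0: int, size_px: int,
                      target_px: int = 1000, sigma: float = 1.0) -> np.ndarray:
    """Crop, upscale so one pixel covers one meter, then smooth tile edges."""
    region = crop_square(source.astype(float), row0, col0, size_px)
    return gaussian_blur(resample_bilinear(region, target_px), sigma)
```

For a 1 km² crop, `target_px=1000` yields 1000 × 1000 samples over 1000 m, so each pixel covers one square meter, matching the scale described above.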

5.4.9. The Arboretum Natural Communities Plant Data and Unreal Level Terrain

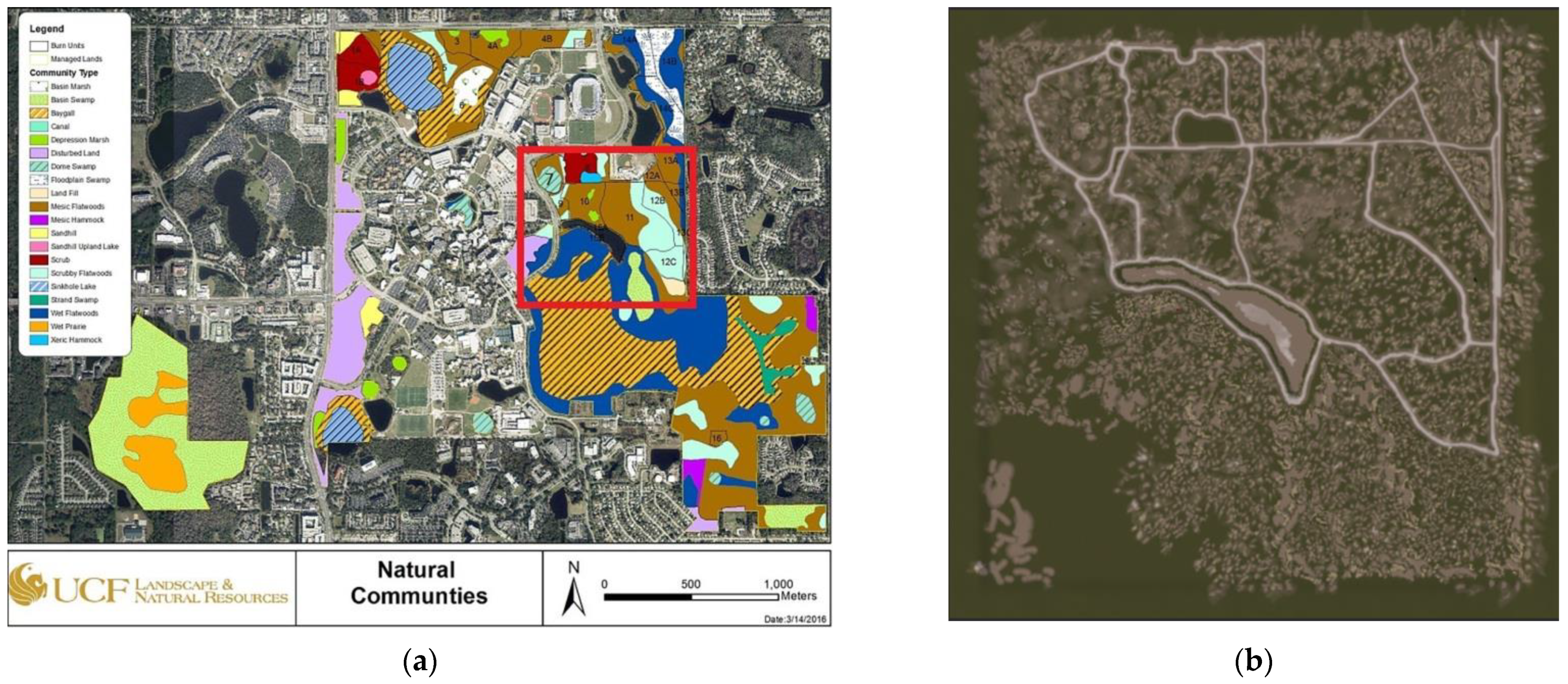

For Unreal Engine to render each virtual plant in the correct location on the virtual terrain, the real-world geo-spatial plant data had to be indexed to the virtual terrain coordinates. The identity and number of plants covering an area had to be sourced and then transformed. The plant data stored in the arboretum’s ESRI GIS database originated from biologists who gathered plant inventories (e.g., the list of plant species) and plant population densities (e.g., how many plants occur in an area) in the field, using plot sampling, a standard data-gathering method in biology and forestry. In this method, a linear transect (i.e., a line of measured distance laid across the land) guides the positioning of rectangular frames (e.g., plastic piping joined into a square and laid on the ground); these frames record the location for future data-gathering efforts and tell the biologists which plants to include in the count. This approach was applied to each of the natural communities in the real arboretum to create a plant inventory over time and by location. All field data were then entered into a long-term ESRI GIS database to chart changes in biodiversity over time. The arboretum used ESRI to generate a color-coded map of the natural communities (basin swamp, baygall, depression marsh, dome swamp, mesic flatwoods, scrub, scrubby flatwoods, waterbody, wet flatwoods, and xeric hammock), which were then indexed to the plant inventories and plant population densities (Figure 10a). The digital media development team selected a 1000 × 1000 m section of that GIS map to model in Unreal (Figure 10a, where the selected area is indicated by a red rectangle; the corresponding Unreal terrain level is shown in Figure 10b).
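Indexing geospatial records to the terrain amounts to a change of coordinates: subtract the corner of the selected 1000 × 1000 m section and convert meters to Unreal’s centimeter units. The sketch assumes a projected coordinate system in meters (such as UTM), a north-up map whose north-west corner sits at the Unreal origin, and map rows running along Unreal’s +Y axis; the `Section` class and the corner coordinates in the example are hypothetical.

```python
from dataclasses import dataclass
from typing import Tuple

CM_PER_M = 100.0  # one Unreal unit equals one centimeter


@dataclass
class Section:
    west_m: float           # easting of the section's north-west corner (hypothetical)
    north_m: float          # northing of the same corner (hypothetical)
    size_m: float = 1000.0  # side length of the modeled square


def gis_to_unreal(easting_m: float, northing_m: float, section: Section,
                  elevation_m: float = 0.0) -> Tuple[float, float, float]:
    """Map a projected GIS point (meters) to Unreal coordinates (centimeters)."""
    dx = easting_m - section.west_m
    dy = section.north_m - northing_m  # northing decreases as map rows increase
    if not (0.0 <= dx <= section.size_m and 0.0 <= dy <= section.size_m):
        raise ValueError("point lies outside the modeled section")
    return (dx * CM_PER_M, dy * CM_PER_M, elevation_m * CM_PER_M)
```

For a section corner at (500000, 3160000), a point 250 m east and 100 m south of it lands at (25000, 10000) Unreal units; points outside the modeled square are rejected rather than silently clamped.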

The population density of each plant in each of the 10 natural communities was tabulated. The dimensions and measurements in meters used for the original plant plot studies were converted to centimeters for Unreal. The real plant plot studies were converted to a 10 × 10 m virtual plot representing the real percentages of coverage, so that the 3D plant models could be rendered in the game level with the same percent coverage area associated with each natural community. Each of the 35 3D plant models was then indexed by population density to one or more of the ten natural communities for extrapolation over that region. Each natural community is represented as an irregular shape on the map (Figure 10a), but within that surface area, the virtual 10 × 10 m pattern of plants is generated to match the real distribution pattern. The plant population data set for each plant in each natural community was converted to a percentage value for use in Unreal as a type of foliage coverage for each virtual natural community mapped to each zone (Table 1).
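The conversion from plot data to Unreal inputs can be sketched as a small table transform: percent coverage per species per community becomes a covered area within the 10 × 10 m virtual plot, and the same table, read by species, indexes each plant model to the communities it occurs in. Only the dome swamp pond cypress value (20%) comes from the text; `community_x` and `species_y` are hypothetical placeholders.

```python
from typing import Dict, List

PLOT_SIDE_M = 10.0  # the virtual plot described in the text
CM_PER_M = 100.0    # Unreal units are centimeters

# Percent coverage by community and species. Only the pond cypress value for the
# dome swamp comes from the text; the other entries are hypothetical placeholders.
COVERAGE_PCT: Dict[str, Dict[str, float]] = {
    "dome swamp": {"pond cypress": 20.0},
    "community_x": {"pond cypress": 5.0, "species_y": 0.0},
}


def covered_area_m2(percent: float, plot_side_m: float = PLOT_SIDE_M) -> float:
    """Area of the virtual plot that a species covers at the given percent coverage."""
    return percent / 100.0 * plot_side_m ** 2


def plot_side_unreal(plot_side_m: float = PLOT_SIDE_M) -> float:
    """Side length of the virtual plot in Unreal units (centimeters)."""
    return plot_side_m * CM_PER_M


def communities_by_species(table: Dict[str, Dict[str, float]]) -> Dict[str, List[str]]:
    """Index each plant model to every community in which it has nonzero coverage."""
    index: Dict[str, List[str]] = {}
    for community, species_pct in table.items():
        for species, pct in species_pct.items():
            if pct > 0:
                index.setdefault(species, []).append(community)
    return index
```

For the dome swamp, `covered_area_m2(20.0)` gives 20 m² of each 100 m² plot, and the plot itself is a 1000 × 1000 unit square in Unreal.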

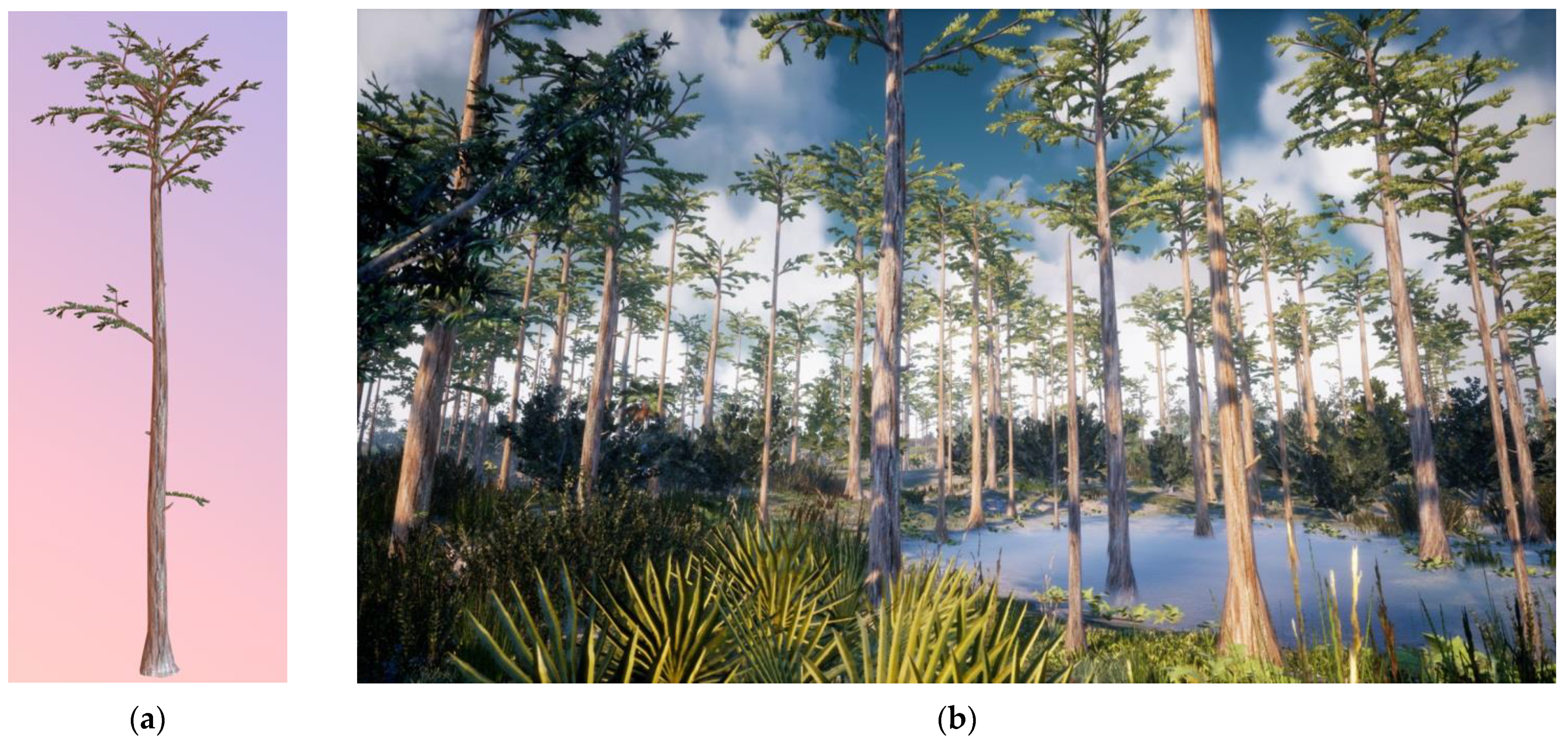

For example, in the real environment, the dome swamp area is represented on the map by a turquoise circle with green diagonal lines, labeled number 7 (Figure 10a). The plant population database showed 20% coverage of that area by the pond cypress (Taxodium ascendens) tree. This value was used to set the density parameter within Unreal Engine’s foliage tool to randomly render the 3D model (Figure 11a) of the virtual pond cypress tree over 20% of the virtual dome swamp area in the Unreal terrain, with height and spread variance reflecting each plant species (e.g., data from the Virtual Field Guide). Each foliage asset’s density value was left at 100 instances per 1000 × 1000 Unreal units (i.e., per 10 × 10 m). The result was a pattern of plants in each natural community that represented the real pattern of plants in the real environment (Figure 11b). In this way, the ESRI geospatial plant data were visualized in-game as a virtual landscape of 3D plants over the entire terrain area.
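Unreal’s foliage tool performs the random placement itself; the sketch below only illustrates the idea with a different, simpler technique, a Bernoulli draw per 1 m cell. If the density of 100 instances is read as per 1000 × 1000 Unreal units (10 × 10 m), the base rate is one candidate instance per square meter, which is why the sketch uses 1 m cells: each cell receives a plant with probability equal to the coverage fraction, jittered within the cell and scaled randomly. The scale range is a hypothetical stand-in for a species’ height and spread variance.

```python
import random
from typing import List, Tuple


def scatter_foliage(width_m: int, depth_m: int, coverage: float,
                    scale_range: Tuple[float, float], seed: int = 0
                    ) -> List[Tuple[float, float, float]]:
    """Place instances so that roughly `coverage` of the 1 m cells hold a plant.

    Returns (x_cm, y_cm, scale) tuples. This is a Bernoulli-per-cell sketch,
    not Unreal's foliage placement algorithm.
    """
    rng = random.Random(seed)  # seeded so a layout can be reproduced
    instances = []
    for ix in range(width_m):
        for iy in range(depth_m):
            if rng.random() < coverage:
                x_cm = (ix + rng.random()) * 100.0  # jitter within the cell
                y_cm = (iy + rng.random()) * 100.0
                scale = rng.uniform(*scale_range)   # hypothetical size variance
                instances.append((x_cm, y_cm, scale))
    return instances
```

With `coverage=0.20`, about a fifth of the cells in a dome swamp patch would receive a pond cypress instance; the seed makes a given layout reproducible between runs.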

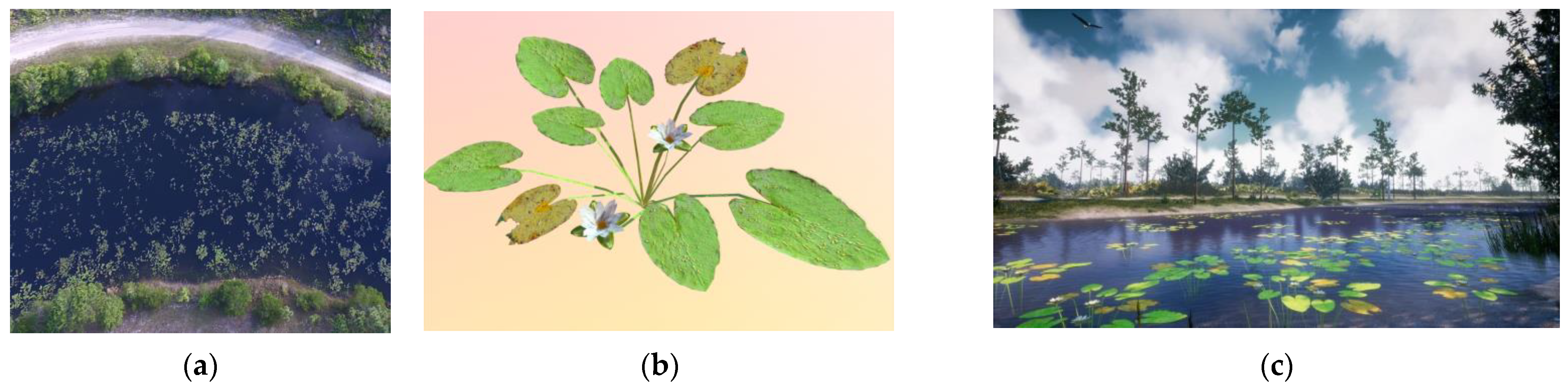

5.4.10. Drone Photographs Increased the Information Fidelity

When individual plants could be identified from the aerial drone photographs, their locations were mapped to the terrain to increase the information fidelity. For example, the drone’s line of sight was clear over the pond, where individual waterlilies (Nymphaea odorata) could be identified in the drone photograph (Figure 12a). Each 3D waterlily plant model (Figure 12b) was then accurately placed to match its real location in the virtual waterbody (Figure 12c).
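The text does not say how each waterlily was positioned. One way to turn a plant identified in a drone photograph into terrain coordinates, assuming the photographs were stitched into a georeferenced, north-up orthomosaic, is the six-coefficient affine geotransform convention used by GDAL. The geotransform values (a 5 cm ground sample distance) and the section corner below are hypothetical.

```python
from typing import Sequence, Tuple

CM_PER_M = 100.0


def pixel_to_world(col: float, row: float, gt: Sequence[float]) -> Tuple[float, float]:
    """Apply a GDAL-style geotransform (x0, dx, rx, y0, ry, dy) to pixel coordinates."""
    x = gt[0] + col * gt[1] + row * gt[2]
    y = gt[3] + col * gt[4] + row * gt[5]
    return x, y


def pixel_to_unreal(col: int, row: int, gt: Sequence[float],
                    west_m: float, north_m: float) -> Tuple[float, float]:
    """Center of a pixel, in Unreal centimeters relative to the section's north-west corner."""
    easting, northing = pixel_to_world(col + 0.5, row + 0.5, gt)
    return (easting - west_m) * CM_PER_M, (north_m - northing) * CM_PER_M


# Hypothetical north-up orthomosaic: 5 cm pixels, no rotation terms.
GEOTRANSFORM = (500000.0, 0.05, 0.0, 3160000.0, 0.0, -0.05)
```

Clicking a waterlily at pixel (1000, 2000) in this hypothetical mosaic would place its model about 50 m east and 100 m south of the section corner, i.e., near (5002.5, 10002.5) in Unreal units.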

5.4.11. Alpha Maps, Unreal Settings for Plant Population Data Sets, and Map Locations

Black-and-white alpha maps were created for each separate zone (i.e., natural community) using the GIS map of the arboretum. These alpha maps were imported into Unreal Engine as textures, and materials were made from them. The approach was neither easy nor direct, requiring multiple steps; procedural tools, now available as third-party game engine plug-ins, make this easier to accomplish. These materials were applied to a cube mesh, which was flattened and scaled on the X and Y axes to match the landscape. The brush paint density value is a multiplier for the foliage painting density (e.g., a 2% real plant density translates to a paint density of 0.02 rendered on the virtual terrain), so the plants are accurately represented per square meter. Future research could automate these procedures and construction methods.
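The alpha-map step can be sketched with NumPy: each natural community’s color in the GIS map becomes a white-on-black mask, and each real coverage percentage becomes the paint-density multiplier (2% becomes 0.02). The RGB values and the tolerance are hypothetical; hatched symbols such as the dome swamp’s diagonal lines are handled here by matching more than one color, and exporting the masks as image files for Unreal is left out.

```python
from typing import Iterable, Tuple

import numpy as np

RGB = Tuple[int, int, int]


def alpha_mask(rgb: np.ndarray, colors: Iterable[RGB], tol: int = 10) -> np.ndarray:
    """Return a uint8 mask: 255 where a pixel is within `tol` of any listed color, else 0."""
    pixels = rgb.astype(int)
    mask = np.zeros(pixels.shape[:2], dtype=bool)
    for color in colors:
        diff = np.abs(pixels - np.array(color, dtype=int)).max(axis=-1)
        mask |= diff <= tol
    return np.where(mask, 255, 0).astype(np.uint8)


def paint_density(percent_coverage: float) -> float:
    """Convert a real percent coverage into the foliage paint-density multiplier."""
    return percent_coverage / 100.0


# Hypothetical legend colors for one hatched community (fill plus hatch lines).
DOME_SWAMP_COLORS = [(64, 224, 208), (0, 128, 0)]
```

Matching on a per-channel tolerance rather than exact equality allows for the slight color shifts that anti-aliasing and image compression introduce at zone boundaries.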

7. Conclusions: Virtual Nature, Information Fidelity, and Immersive Informal Learning

Several data visualization processes are converging to produce information-rich, immersive, multimodal, and interactive virtual environments of natural landscapes in game engines. This paper introduced a new concept, information fidelity, as a defining design factor that extends the impact of photorealism, immersion, embodiment, engagement, and presence, and that is especially important when designing and constructing a virtual field trip of nature for informal learning applications.

These virtual nature environments cross the boundary into data visualization applications because they are constructed from an information fusion of GIS data, unlike traditional game art or the 2D 360° video used in the past. The design, methods, and processes presented in this paper demonstrate how an immersive data visualization of geo-spatial GIS plant data may be rendered in a game engine with high information fidelity to achieve sensory accuracy, and thus photorealism, more efficiently than prior environmental art creation methods and processes.

Game engines have been used in non-entertainment applications as general-purpose, real-time, interactive computer graphics tools, especially for cultural heritage, informal learning, and modeling, simulation, and training. However, few have developed virtual field trips about the natural world that truly exploit the design affordances of new immersive technologies to construct and present a complete virtual environment, either in VR or projected on top of the real environment in AR at an almost identical level of photorealism, so that the virtual forest merges with the real forest. Such field trips also act as a shared teaching and learning knowledge artifact, similar to the natural history museum dioramas and exhibits of the past, while using game engines as general-purpose tools. As such, they are interactive and multimodal, supporting personalized informal learning. Most AR applications only overlay an annotation, such as text, a photograph, a video, or a 3D model, on top of a real object, while most VR applications use environments that are relatively small in scale, limiting expansive, open-world exploration driven by free choice and curiosity.

Presented here is an example of a high information fidelity environment that also offers free exploration of a large landscape for individuals’ experiential learning. The ELUX design process increases the accuracy, assurance, and completeness of scientific information by integrating the domain experts’ knowledge into the virtual environment as a data visualization and augmentation of the real world. The resulting artifact can transmit an array of multisensory signals for the human to perceive, making it a new type of artificial knowledge artifact. The domain experts’ imagination is made real, tangible, and transferable to a non-expert, offering a direct and accurate experience of the reality of nature. Perception is a simultaneous top-down and bottom-up process, so a non-expert without deep domain knowledge cannot “see” the world in the same detail, complexity, or accuracy as the expert. These applications transmit a sensory experience that only an expert, drawing on deep knowledge, could imagine, allowing the non-expert to see and experience a reality they could not otherwise imagine: a type of knowledge-imagination machine. Harnessing the immersive power of the output devices, they amplify presence, embodiment, and engagement. Also shown are the emotional reactions to beautiful, salient, and peaceful places, which trigger individual curiosity, exploration, and inquiry; these are important for developing the non-expert’s own understanding from contrasts they directly perceive in reality, rather than from socially constructed views that may be full of misinformation.

As such, they represent an opportunity for future research and development in scientific communication, which is important to all science, technology, engineering, and mathematics (STEM) fields, and in informal learning research broadly, especially when authentic human reactions to expansive, complex unknowns, such as awe and wonder, are important outcomes. They also offer a different way of viewing “immersive” AR and VR application design and development, which matters when the desired outcomes are driven by learning, education, and scientific communication goals in botany, biology, ecology, forestry, or geology. The application is useful for natural history museums, botanical gardens, or arboretums with educational objectives, and can support field trips or fieldwork training. Some will appreciate the technical expertise or “craft” of this work; scientists trained in botany, biology, and ecology can appreciate the knowledge; and artists who study the natural world are awestruck by the beauty. Others will see the opportunity to automate these methods with new algorithms and to create new solutions to model and simulate the natural world. As such, these virtual nature models may support new multimodal knowledge visualizations of past and future realities, and data-driven realities of alternate scenarios of the present. These models could support the creation of a new immersive digital twin of the Earth that would be useful for integrating information into models already created for human-made systems, and for sustainable urban development as a means to optimize and visualize a more complete system of dynamic models.