Abstract

Traffic accidents pose a significant threat to public safety, resulting in numerous fatalities, injuries, and a substantial economic burden each year. Predictive models capable of real-time forecasting of post-accident impact from readily available data can play a crucial role in preventing adverse outcomes and enhancing overall safety. However, existing accident prediction models face two main challenges: first, a reliance on costly or non-real-time data, and second, the absence of a comprehensive metric that accurately measures post-accident impact. To address these limitations, this study proposes a deep neural network model known as the cascade model. It leverages readily available real-world data from Los Angeles County to predict post-accident impacts. The model consists of two components: a Long Short-Term Memory (LSTM) network and a Convolutional Neural Network (CNN). The LSTM captures temporal patterns, while the CNN extracts patterns from the sparse accident dataset. Furthermore, an external traffic congestion dataset is incorporated to derive a new feature called the “accident impact” factor, which quantifies the influence of an accident on surrounding traffic flow. Extensive experiments were conducted to demonstrate the effectiveness of the proposed hybrid machine learning method in predicting post-accident impact compared with state-of-the-art baselines. The results show higher precision in predicting minimal impacts (i.e., cases with no reported accidents) and higher recall in predicting more significant impacts (i.e., cases with reported accidents).

1. Introduction

Traffic crashes are a major public safety concern, leading to significant fatalities, injuries, and economic burdens annually. In 2020, the fatality rate on American roads saw a staggering 24% increase compared to 2019, the largest year-over-year rise since 1924, according to the National Safety Council (NSC). Understanding the causes and impacts of traffic accidents is crucial for evaluating highway safety and addressing negative consequences, including high fatality and injury rates, traffic congestion, carbon emissions, and related incidents. Despite the ample existing literature, the development of accurate and efficient accident prediction models remains a challenging and fascinating research area.

Over recent decades, a wide range of predictive and computational methods have been proposed to predict traffic accidents and their impacts [1]. These models have progressed from linear to nonlinear formulations and from traditional regression models [2,3,4] to today’s common data analytic and machine learning algorithms [5,6,7]. Most studies treat the problem as a standard binary classification task [8,9], in which the output is a categorical event (accident or non-accident) that carries no information about post-accident consequences. Moreover, existing studies that investigated post-accident impacts mostly failed to provide a compelling way to define and measure “impact”, which remains a significant challenge. The most commonly used metric for the impact of accidents on traffic flow is “duration”. In our view, however, this metric is not ideal because it is influenced by environmental factors such as the type of road and accessibility conditions. For example, if a road has accessibility issues, clearing an accident scene may take longer, resulting in a longer duration even if the effect on traffic is modest. Finally, the datasets typically used in these studies are not publicly available and cannot be employed in real-time projects.

In recent years, hybrid neural networks that integrate multiple architectures have attracted increasing attention for their ability to model complex, real-world phenomena. Marcillo et al. [10] review learning-based models for traffic accident prediction and highlight deep learning methods—particularly LSTM and CNN—as the most effective, due to their strengths in handling temporal and spatial data, respectively. This review also emphasizes the need for high-quality, heterogeneous data and effective strategies to address data imbalance and high dimensionality. Motivated by recent findings, we propose a cascaded LSTM-CNN architecture tailored to the spatiotemporal nature of traffic data. LSTM captures long-term temporal dependencies, while CNN efficiently extracts local spatial and short-term temporal patterns [11]. This hybrid model addresses key challenges such as data heterogeneity and high dimensionality, aiming to enhance predictive accuracy. Furthermore, we introduce a novel metric to quantify accident impact on surrounding traffic flow, bridging a gap in prior work that focused primarily on accident occurrence rather than its broader effects.

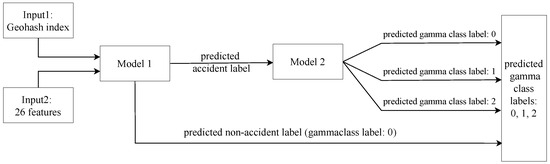

LSTM and CNN models are combined sequentially into a “cascade” model. We chose these two models specifically for their ability to handle temporal dependencies effectively. Moreover, their combination distinguishes between accident and non-accident events better than either model alone, especially on imbalanced datasets. Our model also performs better in discriminating high-impact accidents. It achieves this by first distinguishing between accident and non-accident events and then predicting the post-accident impact of the accident events. More specifically, the cascade model uses the sequence of the last $T$ time intervals (e.g., two-hour intervals) within each region (e.g., a 5 km × 5 km square on the map) to predict the probability of an accident and its post-accident impact in that region for the next time interval (i.e., interval $T+1$).
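To make the input layout concrete, the following Python sketch pairs each run of $T$ consecutive intervals in one region with the label of the interval that follows. It is a minimal sketch under stated assumptions, not the authors' implementation: the function name `make_windows`, the toy feature values, and the use of plain lists instead of tensors are all hypothetical choices made for readability.

```python
from typing import List, Sequence, Tuple

def make_windows(
    series: Sequence[Sequence[float]],  # one feature vector per interval, for one region
    labels: Sequence[int],              # one target label per interval, same region
    T: int,                             # number of past intervals fed to the model
) -> List[Tuple[List[List[float]], int]]:
    """Pair each run of T consecutive intervals with the label of the next interval."""
    if len(series) != len(labels):
        raise ValueError("series and labels must have the same length")
    samples = []
    for end in range(T, len(series)):
        window = [list(v) for v in series[end - T:end]]  # intervals end-T .. end-1
        samples.append((window, labels[end]))            # target: interval `end`
    return samples

# Toy region: 5 two-hour intervals, 2 features each, 0/1 accident labels.
series = [[0.1, 3], [0.2, 4], [0.0, 2], [0.5, 7], [0.3, 5]]
labels = [0, 0, 1, 0, 1]
samples = make_windows(series, labels, T=3)
print(len(samples))   # 2
print(samples[0][1])  # 0, the label of the fourth interval
```

With $T = 3$ and five intervals, only two complete windows exist; the first $T$ intervals serve purely as history.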

This study uses four complementary, easy-to-obtain, real-world datasets to build and evaluate the proposed prediction framework. The first dataset provides real-world accident data across the United States, including location, time, duration, distance, and severity. The second dataset offers detailed weather information such as weather conditions, wind chill, humidity, and temperature. The third dataset contains spatial attributes for each district (e.g., the number of traffic lights, stop signs, and highway junctions). The fourth dataset provides real-world traffic congestion data across the United States. The congestion dataset is also used to develop a novel target feature, gamma, representing an accident’s impact on its surrounding area. We propose a data-driven solution that defines gamma as a function of three accident-impact-related features: duration, distance, and severity. In the real world, non-accident intervals are far more prevalent than accident intervals, since accidents are relatively infrequent events. Working with such an imbalanced dataset therefore poses challenges for model development and evaluation. To address this issue, we employ a random under-sampling method to mitigate the imbalance. Using these settings and through extensive experiments and evaluations, we demonstrate the effectiveness of the cascade model in predicting post-accident impact compared to state-of-the-art models evaluated on the same datasets. It is worth noting that the datasets used in this research are generally easy to obtain, given how they were collected (more details can be found in [12]), and that the data collection processes can be replicated for other regions.
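Random under-sampling keeps every minority-class sample and discards a random subset of the majority class. The Python sketch below shows this generic technique only; the procedure actually used in this work is described in Section 5.2, and the function name `random_undersample` and its `ratio` parameter (non-accident samples kept per accident sample) are hypothetical.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

S = TypeVar("S")

def random_undersample(
    samples: Sequence[S],
    labels: Sequence[int],   # 1 = accident interval, 0 = non-accident interval
    ratio: float = 1.0,      # hypothetical: non-accident samples kept per accident sample
    seed: int = 0,
) -> Tuple[List[S], List[int]]:
    """Keep all accident samples plus a random subset of non-accident samples."""
    rng = random.Random(seed)
    pos = [i for i, y in enumerate(labels) if y == 1]
    neg = [i for i, y in enumerate(labels) if y == 0]
    n_keep = min(len(neg), int(round(ratio * len(pos))))
    kept = pos + rng.sample(neg, n_keep)  # sampling without replacement
    rng.shuffle(kept)
    return [samples[i] for i in kept], [labels[i] for i in kept]

# 3 accident intervals among 20: keep all 3 plus 3 random non-accident intervals.
labels = [1 if i in (2, 9, 15) else 0 for i in range(20)]
samples = [f"interval-{i}" for i in range(20)]
xs, ys = random_undersample(samples, labels, ratio=1.0)
print(len(xs), sum(ys))  # 6 3
```

Because only majority-class samples are dropped, no accident interval is ever lost; the trade-off is that some information in the discarded non-accident intervals is not used for training.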

The main contributions of this paper are summarized as follows:

- A real-world setup for post-accident impact prediction: This paper proposes an effective setup to use easy-to-obtain, real-world data resources (i.e., datasets on accident events, congestion incidents, weather data, and spatial information) to estimate accident impact on the surrounding area shortly after an accident occurs. Also, this framework employs a data augmentation approach to obtain accurate feature vectors from heterogeneous data sources.

- A data-driven label refinement process: This study introduces a novel feature that quantifies the impact of an accident on its surrounding traffic flow. This feature combines three factors, namely “severity”, “duration”, and “distance”, into a single composite feature that we refer to as gamma in this work.

- A cascade model for accident impact prediction: This paper proposes a cascade model to employ the power of LSTM and CNN to efficiently predict the post-accident impacts in two stages. First, it distinguishes between accident and non-accident events, then it predicts the intensity of impact for accident events.

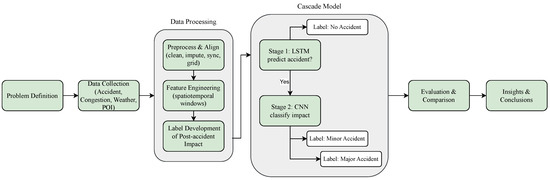

To provide a clear overview of our study design, Figure 1 presents the research workflow. The process begins with the collection and integration of four real-world datasets, followed by data preprocessing, feature engineering, and the construction of a novel post-accident impact label. The processed data is then passed through a two-stage cascade model: first, an LSTM predicts the likelihood of an accident; if an accident is detected, a CNN subsequently classifies its impact severity. The workflow concludes with model evaluation and comparison, followed by discussion of key insights and practical implications.
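The two-stage gating in this workflow can be sketched as follows. This is an illustration only: the trained LSTM and CNN are replaced by hypothetical stand-in functions (`toy_stage1`, `toy_stage2`), and the 0.5 decision threshold and the convention that impact classes start at 1 are assumptions, not values taken from this work.

```python
from typing import Callable, List, Sequence

Window = Sequence[Sequence[float]]  # T past intervals x features, for one region

def cascade_predict(
    windows: Sequence[Window],
    accident_prob: Callable[[Window], float],  # stage 1 (an LSTM in the paper)
    impact_class: Callable[[Window], int],     # stage 2 (a CNN in the paper)
    threshold: float = 0.5,                    # hypothetical decision threshold
) -> List[int]:
    """Return 0 for 'no accident'; otherwise the stage-2 impact class (assumed >= 1)."""
    outputs = []
    for w in windows:
        if accident_prob(w) < threshold:
            outputs.append(0)                # stage 1 predicts no accident: stop here
        else:
            outputs.append(impact_class(w))  # only flagged windows reach stage 2
    return outputs

# Toy stand-ins for the trained networks, for illustration only.
def toy_stage1(w: Window) -> float:
    return w[-1][0]  # "probability" = first feature of the last interval

def toy_stage2(w: Window) -> int:
    return 1 if sum(v[1] for v in w) < 10 else 2  # crude congestion score -> class

windows = [[[0.1, 2], [0.2, 3]], [[0.4, 5], [0.9, 8]], [[0.3, 1], [0.7, 2]]]
print(cascade_predict(windows, toy_stage1, toy_stage2))  # [0, 2, 1]
```

The point of the cascade is that the second stage only ever sees windows the first stage has flagged, so it can specialize in separating impact levels among likely accidents.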

Figure 1.

Overall research workflow for accident impact prediction.

The remainder of the article is organized as follows. Section 2 provides an overview of related studies, followed by a description of the data in Section 3. Section 4 describes our accident labeling approach, and the cascade model is presented in Section 5. Section 6 presents the experimental design and results, and Section 7 concludes the paper by discussing essential findings and recommendations for future studies.

2. Related Work

Traffic accident analysis and prediction have been the focus of extensive research over recent decades. Broadly, prior work in this field falls into two main categories: accident risk prediction, which seeks to estimate the likelihood or frequency of accidents occurring, and accident impact prediction, which aims to assess the consequences and disruptions caused by accidents. In the following subsections, we review key studies and advances within each of these categories, highlighting major methodological trends and identifying remaining gaps addressed by our work.

2.1. Accident Risk Prediction

Research on accident risk prediction has evolved through the application of diverse methodologies and data sources, generally aiming to (1) estimate accident rates in a region or (2) classify the likelihood of accident occurrence in specific locations or intervals [13,14].

Early works in this area focused on statistical and classical machine learning models. Regression-based techniques have been widely used for accident rate prediction, often incorporating fundamental attributes such as traffic volume, road type, and historical incident records [15,16,17]. In the context of accident detection and classification, binary classifiers such as SVMs [18] and k-nearest neighbor or Bayesian models [19] have been effectively employed. Many studies enhanced these predictions through advanced feature selection methods, such as the use of decision trees for variable filtering [18] or frequent pattern trees for uncovering important data patterns [19].

As the volume and diversity of available data have increased, a key trend has been the integration of temporal and spatial dependencies into predictive models. Several studies leveraged time-series analysis and recurrent structures to capture temporal patterns in accident data [20,21,22]. Others extended this paradigm by considering spatial correlations across road networks or urban zones, with graph-based and deep learning models offering notable improvements in cases where location and neighborhood effects are significant [16,23,24].

With the rise of deep learning, more recent works increasingly employ neural architectures to accommodate high-dimensional, heterogeneous, and sequential traffic data. Deep neural networks, including stacked autoencoders and hybrid LSTM-CNN designs, have consistently demonstrated higher accuracy and robustness for accident risk prediction than traditional methods [11,24,25,26]. Notably, these approaches often integrate multi-source data (e.g., traffic flow, weather, road characteristics) to enrich their feature spaces. For example, Moosavi et al. [27] developed a hybrid deep model for large-scale detection; Chen et al. [17] applied a stacked denoising autoencoder on GPS-derived accident severity; and Ren et al. [15] used an LSTM to predict traffic accident counts, though with a limited feature set. Expanding the diversity of input data, Chen et al. [28] and Li et al. [11] exploited novel sources such as vehicle license plate recognition and detailed signal timing.

In addition, methodological advances such as self-organizing maps have been utilized to improve model calibration and fine-tune neural networks for spatially extensive road sections, as shown by Kaffash et al. [29], who achieved high predictive accuracy over thousands of roadway points. A summary of the above studies can be found in Table 1. Taken together, the literature reveals clear progress from regression and classical machine learning toward deep learning models that systematically exploit spatial and temporal information as well as a richer array of features. However, most prior work remains focused on the occurrence or frequency of accidents, rather than the downstream effects such incidents have on traffic and congestion. Moreover, although deep models have improved predictive performance, limited attention has been paid to developing coherent, actionable metrics for post-accident impact or to integrating contextual features in a way that supports interpretability and generalization.

Table 1.

A summary of previous works on accident risk prediction.

2.2. Accident Impact Prediction

A substantial body of work has focused on analyzing and predicting the post-accident impact on surrounding areas. The majority of these studies characterize “impact” using accident duration, severity, or clearance time as core indicators, and employ a range of predictive models. Traditional approaches typically use regression techniques to estimate the duration and magnitude of post-accident disruptions, considering factors such as traffic flow, road geometry, and incident details [31,32,33,34]. As modeling techniques advanced, neural networks and other machine learning algorithms were applied to capture complex, nonlinear effects among input variables [5,35,36].

A number of recent studies have refined these paradigms by expanding data inputs and comparing multiple modeling strategies. For example, Zhang et al. [2] combined loop detector and police data to define the total impact as the sum of accident duration and clearance time and compared the predictive power of multiple linear regression to artificial neural networks. Yu et al. [37] explored a range of binary features (weather, time of day, presence of disabled vehicles, and peak hour) for predicting incident duration on freeways, finding that ANNs performed better for long-duration incidents, while SVMs were more accurate for shorter-duration cases. Ensemble methods have also been used: Ma et al. [38] reported superior interpretability and prediction accuracy for gradient boosting decision trees in forecasting clearance time compared to methods such as SVMs, neural networks, and random forests.

While most prior work defines impact in terms of duration or severity, a minority of studies have attempted to forecast downstream traffic metrics. Yu et al. [39] stands out by modeling post-accident traffic flow, although their analysis was limited to a single route. Lin et al. [40] investigated the prediction of different post-accident traffic condition states using SVM, neural network, and random forest models and incorporated incremental learning by updating predictions as new data became available during an event. Ensemble approaches continue to gain traction, with recent work demonstrating the effectiveness of CatBoost ensemble algorithms for predicting the impact range of traffic accidents and addressing common issues such as overfitting and generalization in accident impact models [41]. More recently, Ekanem [42] employed logistic regression, random forest, and XGBoost to predict accident severity in Victoria, Australia, identifying temporal features as significant predictors; however, the models faced challenges in accurately predicting rare but severe (fatal) cases, with the authors recommending resampling strategies as a remedy. A summary of the above studies is provided in Table 2.

Table 2.

A summary of previous works on accident impact prediction.

In summary, while prior research has advanced modeling techniques for accident impact prediction, most studies use narrow definitions of impact (typically limited to duration or severity) and focus on small geographic areas. Few works combine multiple impact dimensions or apply composite metrics at scale. Our study addresses these gaps by introducing a novel composite impact metric that integrates duration, severity, and blockage distance, and by evaluating it across the large and diverse region of Los Angeles County. We rely on openly available data sources, namely Microsoft BingMaps (https://www.microsoft.com/en-us/maps/ (accessed on 3 June 2025)) and MapQuest (https://www.mapquest.com/ (accessed on 3 June 2025)) for traffic, Weather Underground (https://www.wunderground.com/history (accessed on 3 June 2025)) for weather, and OpenStreetMap (https://www.openstreetmap.org (accessed on 3 June 2025)) for spatial features, which facilitates replicability and broader application. More details on dataset collection and preparation can be found in [12].

3. Dataset

This section describes all datasets used to build our accident impact prediction framework. In addition to the original datasets, the processes of data cleaning, transformation, and augmentation are described. Lastly, we briefly study the distribution of accident duration and compare it with findings reported by other researchers. Note that accident duration refers to the time span required to clear the impact of an accident, and we believe that it is highly correlated with accident impact. Studying it further therefore helps derive valuable insights for building the predictive model.

3.1. Data Sources

The data sources and study area relate to Los Angeles County, the most accident-prone county in the United States (see https://www.iihs.org/topics/fatality-statistics/detail/state-by-state (accessed on 3 June 2025) for more details). Four datasets are used to capture the factors involved in the aftermath of an accident: the accident dataset, the congestion dataset, the point of interest (POI) dataset, and the weather dataset. The following subsections describe these datasets in detail.

3.1.1. Accident Dataset

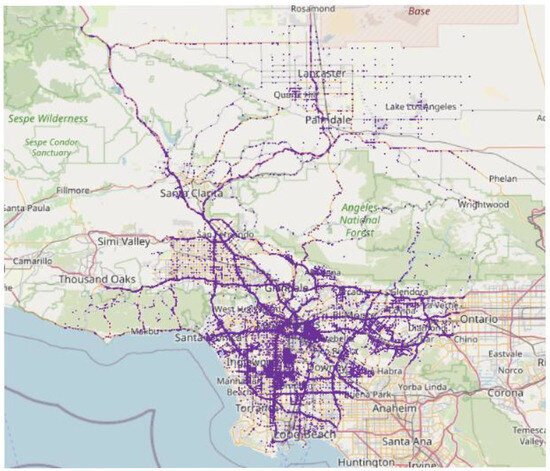

This study adopts the US-Accidents dataset [43], a large-scale traffic accident dataset compiled from data collected across the United States between 2016 and 2020. This dataset contains accident events collected from two sources, MapQuest Traffic and Microsoft BingMaps, and covers 49 states of the United States. For the study area, a total of 73,553 accident records were extracted, covering the period from August 2016 to December 2020. Figure 2 shows the spatial distribution of accident locations in the study area, which includes both freeways and urban arterial roads. Features of reported accidents are listed in Table 3. A detailed description of the features is available at smoosavi.org/datasets/us_accidents (accessed on 3 June 2025).

Figure 2.

Spatial dispersion of accident locations in Los Angeles County (2016–2020).

Table 3.

Accident dataset features.

3.1.2. Congestion Dataset

Traffic congestion events are essential both for predicting and for measuring accident impact. We use the congestion dataset provided in [12], which covers the same period and area as the accident data. This dataset includes over 600,000 congestion events collected between 2016 and 2020, where each event was recorded when traffic speed was slower than the typical speed. In addition to basic details (such as the time and location of a congestion event), the dataset offers other valuable details such as delay and average speed (the latter is given in the form of a natural-language description of the event reported by a human agent). For example, the description “Severe delays of 22 min on San Diego Fwy Northbound in LA. Average speed ten mph.” provides both the exact “delay” and the “speed” of the congestion. Such details can be extracted from the free-form text with regular expression patterns. In this work, we use the congestion data for accident impact labeling, which is discussed in detail in Section 4.
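The regular-expression extraction mentioned above could look like the Python sketch below. The patterns are hypothetical and written only for descriptions shaped like the example; real descriptions vary and would need more patterns. Note that the example spells the speed out ("ten mph"), so the sketch includes a small, assumed lookup table for number words.

```python
import re
from typing import Optional, Tuple

# Hypothetical patterns for descriptions like the example in the text.
DELAY_RE = re.compile(r"delays? of (\d+) min", re.IGNORECASE)
SPEED_RE = re.compile(r"average speed (\w+) mph", re.IGNORECASE)

# Speeds may be spelled out ("ten mph"); this partial map is an assumption.
NUMBER_WORDS = {
    "zero": 0, "one": 1, "two": 2, "three": 3, "four": 4, "five": 5,
    "six": 6, "seven": 7, "eight": 8, "nine": 9, "ten": 10,
    "fifteen": 15, "twenty": 20, "thirty": 30, "forty": 40, "fifty": 50,
}

def to_int(token: str) -> Optional[int]:
    """Convert '35' or 'ten' to an int; None for unknown words."""
    if token.isdigit():
        return int(token)
    return NUMBER_WORDS.get(token.lower())

def parse_description(text: str) -> Tuple[Optional[int], Optional[int]]:
    """Return (delay_minutes, average_speed_mph); None where a value is absent."""
    delay_m = DELAY_RE.search(text)
    speed_m = SPEED_RE.search(text)
    delay = int(delay_m.group(1)) if delay_m else None
    speed = to_int(speed_m.group(1)) if speed_m else None
    return delay, speed

desc = "Severe delays of 22 min on San Diego Fwy Northbound in LA. Average speed ten mph."
print(parse_description(desc))  # (22, 10)
```

Returning `None` for absent values, rather than zero, keeps "not reported" distinguishable from "no delay" when these fields are later aggregated.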

3.1.3. POI Dataset

As previous studies have shown, road features strongly influence the likelihood of accidents [27]. Road-network features must therefore be considered when predicting and measuring the impact of accidents. This study uses a POI dataset taken from OpenStreetMap (OSM) that provides the geographical features of each location, such as the number of railroads, speed bumps, traffic signals, and pedestrian crossings.

3.1.4. Weather Condition Dataset

Numerous studies have shown the significant impact of weather conditions on accident prediction [44,45,46]. Although airport stations may be located some distance from urban centers, they offer reliable and regionally representative weather data. This research uses weather data collected from weather stations at four airports in the Los Angeles area (i.e., Los Angeles International Airport (LAX), Hollywood Burbank Airport (BUR), Van Nuys Airport (VNY), and Whiteman Airport (KWHP)). These four airports provide hourly weather observations for the study period. Each record includes the following features: airport, date, hour, temperature, wind chill, humidity, pressure, visibility, wind speed, wind direction, precipitation, and weather conditions. The last two are categorical features, and all their possible values are shown in Table 4.

Table 4.

Details of categorical weather features.

3.2. Data Quality Assurance

Although the datasets used in this study are publicly available and widely adopted in transportation research, we conducted a set of quality checks to ensure that the input data was reliable and suitable for downstream modeling. This included reviewing all features for semantic consistency, confirming that the categorical fields used well-defined value sets, and inspecting numerical attributes for plausible ranges.

We also assessed data completeness and addressed missing values and anomalies where appropriate. For instance, missing entries in the weather dataset were handled using a k-nearest neighbors imputation approach, and outliers in numerical features were managed using standard range-based filtering. Details of these methods are described in the next section. Duplicate records were removed, and low-frequency spatial zones were excluded to reduce sparsity and improve model stability.

These quality assurance steps provided a cleaner and more coherent input space, allowing the model to learn from consistent and interpretable patterns without being affected by noise or structural inconsistencies.

3.3. Preprocessing and Preparation

This section describes the steps required to preprocess the different data sources used in this work, as well as how the input data for modeling is built by combining these sources. Preprocessing consists of the following steps:

- Remove duplicated records: Since the accident data is collected from two potentially overlapping sources, some accidents might be reported twice. Therefore, we first process the input accident data to remove duplicated cases.

- Fill missing values using k-nearest neighbors imputation: The weather data has missing values for some features (e.g., sunrise_sunset). We impute each missing value from its two nearest neighbors, using the mean for numerical features and the mode for categorical features. Neighbors are found with a distance based on time and location, which exploits the smooth variation of weather across space and time. For cases with higher missingness, more advanced imputation methods could be explored in future work.

- Treat outliers: For a numerical feature $f$, if $f < \mu_f - 3\sigma_f$ or $f > \mu_f + 3\sigma_f$, we replace it with $\mu_f - 3\sigma_f$ or $\mu_f + 3\sigma_f$, respectively. Here, $\mu_f$ and $\sigma_f$ are the mean and standard deviation of feature $f$, respectively, calculated on all weather records. This standard three-sigma rule caps extreme outlier values while leaving the vast majority of valid data unchanged.

- Omit redundant features: We remove features that satisfy either of the following conditions: (1) they are highly correlated with another feature according to the Pearson correlation; (2) they are categorical and more than 90% of their records share a single value.

- Discretize data: After data cleaning, both accident and congestion data are discretized in space and time. The temporal resolution is 2 h intervals, and the spatial resolution is 5 km × 5 km squares on a uniform grid.
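The outlier and imputation steps above can be sketched in plain Python as follows. This is a minimal sketch, not the authors' code: the record layout (keys `t` in hours, `x`/`y` in km), the function names, and the `hours_per_km` weight that combines time and space into one distance are hypothetical, since the text does not specify how the two are combined.

```python
import math
from collections import Counter
from statistics import mean, pstdev
from typing import Any, Dict, List

Record = Dict[str, Any]

def clip_three_sigma(values: List[float]) -> List[float]:
    """Cap each value at mu - 3*sigma or mu + 3*sigma if it falls outside that range."""
    mu, sigma = mean(values), pstdev(values)
    lo, hi = mu - 3 * sigma, mu + 3 * sigma
    return [min(max(v, lo), hi) for v in values]

def knn_impute(records: List[Record], feature: str, k: int = 2,
               hours_per_km: float = 1.0) -> None:
    """Fill missing (None) values of `feature` in place from the k nearest records.
    Numerical features get the neighbours' mean; categorical ones get their mode."""
    def dist(a: Record, b: Record) -> float:
        # Hypothetical metric: 1 km of separation weighs as much as `hours_per_km` hours.
        dt = a["t"] - b["t"]
        ds = math.hypot(a["x"] - b["x"], a["y"] - b["y"]) * hours_per_km
        return math.hypot(dt, ds)

    donors = [r for r in records if r[feature] is not None]  # originally observed only
    for r in records:
        if r[feature] is None:
            nearest = sorted(donors, key=lambda d: dist(r, d))[:k]
            vals = [d[feature] for d in nearest]
            if all(isinstance(v, (int, float)) for v in vals):
                r[feature] = mean(vals)
            else:
                r[feature] = Counter(vals).most_common(1)[0][0]

clipped = clip_three_sigma([10.0] * 19 + [100.0])
print(round(clipped[-1], 2))  # 73.35

records = [
    {"t": 0, "x": 0.0, "y": 0.0, "temperature": 20.0, "condition": "Clear"},
    {"t": 2, "x": 0.0, "y": 0.0, "temperature": 22.0, "condition": "Clear"},
    {"t": 1, "x": 0.0, "y": 0.0, "temperature": None, "condition": None},
    {"t": 50, "x": 30.0, "y": 0.0, "temperature": 5.0, "condition": "Rain"},
]
knn_impute(records, "temperature")
knn_impute(records, "condition")
print(records[2]["temperature"], records[2]["condition"])  # 21.0 Clear
```

Only originally observed records act as donors, so an imputed value is never used to impute another one.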

Overall, 26 input features are selected based on domain knowledge, precedent in the literature, and preliminary correlation analysis, and are refined during preprocessing by removing highly correlated or low-variance features, as discussed above.

A vector $x_t^s$ represents the input data at time $t$ in region $s$ for accident impact prediction. This vector is the input for the predictive task; labeling is described later. In this way, we convert the raw data into $x_t^s$, which consists of 5 categories comprising 26 different features obtained from the raw datasets. Table 5 details the features used to create an input vector. In addition, because accident events are rare and the data are sparse, we drop zones in which the total number of recorded accidents during the two years is below a threshold $\tau$. Setting $\tau = 75$, that is, dropping zones with fewer than 75 reported accidents in 2 years (i.e., zones where accidents were reported in less than 0.8% of time intervals), increases the ratio of time intervals with at least one reported accident (also known as accident intervals) to all intervals by 4.8%. The value of $\tau$ was set based on exploratory data analysis to ensure that each selected region contained enough accident events for robust model training while avoiding excessive data sparsity. Lower thresholds left too many regions with insufficient data for reliable prediction, while higher thresholds resulted in too few regions and reduced spatial diversity. Thus, $\tau = 75$ balances data adequacy per zone against representative spatial coverage of the study area. In Section 5.2, an under-sampling method is discussed to further balance the ratio of accident to non-accident intervals.
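The discretization into 2 h intervals and 5 km × 5 km cells, followed by the minimum-accident filter on zones, can be sketched as below. Assumptions: the grid origin, the equirectangular (flat-Earth) projection, and the function names are hypothetical choices for illustration; the text does not state how coordinates were mapped to cells.

```python
import math
from collections import Counter
from datetime import datetime
from typing import Iterable, Set, Tuple

CELL_KM = 5.0             # spatial resolution stated in the text
INTERVAL_HOURS = 2        # temporal resolution stated in the text
KM_PER_DEG_LAT = 111.32   # approximate length of one degree of latitude

Cell = Tuple[int, int]

def cell_of(lat: float, lon: float, origin: Tuple[float, float]) -> Cell:
    """Map a point to a (row, col) grid cell using an equirectangular approximation."""
    lat0, lon0 = origin
    dy_km = (lat - lat0) * KM_PER_DEG_LAT
    dx_km = (lon - lon0) * KM_PER_DEG_LAT * math.cos(math.radians(lat0))
    return int(dy_km // CELL_KM), int(dx_km // CELL_KM)

def interval_of(ts: datetime, start: datetime) -> int:
    """Index of the 2-hour interval containing `ts`, counted from `start`."""
    return int((ts - start).total_seconds() // (INTERVAL_HOURS * 3600))

def zones_to_keep(accident_cells: Iterable[Cell], min_accidents: int) -> Set[Cell]:
    """Keep only cells with at least `min_accidents` recorded accidents."""
    counts = Counter(accident_cells)
    return {cell for cell, n in counts.items() if n >= min_accidents}

origin = (33.70, -118.95)  # hypothetical south-west corner of the grid
print(cell_of(33.70, -118.95, origin))  # (0, 0)
print(cell_of(33.80, -118.95, origin))  # (2, 0): about 11.1 km north
print(interval_of(datetime(2016, 8, 1, 5, 30), datetime(2016, 8, 1)))  # 2
print(zones_to_keep([(0, 0)] * 80 + [(1, 1)] * 10, min_accidents=75))  # {(0, 0)}
```

Over a county-sized area the equirectangular approximation distorts distances only slightly, which is usually acceptable at a 5 km resolution; a projected coordinate system would be the more precise alternative.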

Table 5.

Selected features after preprocessing and preparation.

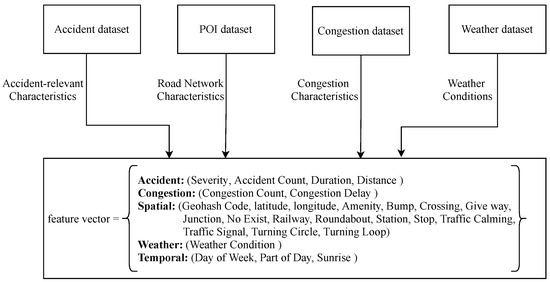

3.4. Data Augmentation

The initial accident dataset includes a variety of features. However, we use only highly accident-relevant features and further augment the data with the additional features described in Section 3.1 to create the input feature vector. Road-network characteristics of each region are added to differentiate between types of urban and suburban regions; congestion-related information is added to help the model find latent patterns between accident and congestion events; and weather data from the nearest airport, matched on the accident’s occurrence time and location, is added to encode weather conditions. Figure 3 summarizes how the input feature vector is built in this study.

Figure 3.

Forming a feature vector at time t in region s based on heterogeneous data obtained from various data sources.
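The nearest-airport weather matching described in this subsection can be sketched as follows. This is a hypothetical illustration: the airport coordinates are approximate values supplied only for the example (verify them before any real use), the lookup key `(airport code, hour)` is an assumed layout for the hourly weather table, and truncating the timestamp to the hour is one of several reasonable matching rules.

```python
import math
from datetime import datetime
from typing import Dict, Mapping, Optional, Tuple

LatLon = Tuple[float, float]

# Approximate station coordinates, for illustration only; verify before real use.
AIRPORTS: Dict[str, LatLon] = {
    "LAX": (33.9416, -118.4085),
    "BUR": (34.2007, -118.3587),
    "VNY": (34.2098, -118.4900),
    "KWHP": (34.2593, -118.4134),
}

def haversine_km(a: LatLon, b: LatLon) -> float:
    """Great-circle distance in kilometres (mean Earth radius 6371 km)."""
    lat1, lon1, lat2, lon2 = map(math.radians, (*a, *b))
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * 6371.0 * math.asin(math.sqrt(h))

def nearest_airport(point: LatLon) -> str:
    return min(AIRPORTS, key=lambda code: haversine_km(point, AIRPORTS[code]))

def weather_for(point: LatLon, ts: datetime,
                hourly: Mapping[Tuple[str, datetime], dict]) -> Optional[dict]:
    """Hourly record of the nearest airport for the hour containing `ts` (None if missing)."""
    hour = ts.replace(minute=0, second=0, microsecond=0)
    return hourly.get((nearest_airport(point), hour))

hourly = {("LAX", datetime(2019, 3, 1, 14)): {"temperature": 18.0, "condition": "Clear"}}
print(nearest_airport((34.21, -118.49)))  # VNY
print(weather_for((33.95, -118.40), datetime(2019, 3, 1, 14, 45), hourly))
```

Returning `None` when the hour is missing leaves the gap visible, so it can be filled by the k-nearest-neighbors imputation step rather than silently defaulted.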

To define a proper accident prediction task over geographical areas and time intervals, input data must also be built for accident-free time intervals. These non-accident (or accident-free) intervals are obtained by discretizing the data in space and time (described in Section 3.3). When no accident is reported for an interval, the accident-related features (i.e., severity, accident count, duration, and distance) are set to zero, and the remaining features (i.e., geographical, temporal, surrounding congestion, and weather conditions) are filled in from the available sources.
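Building the full set of (zone, interval) rows, with zeros for the accident-related features of accident-free intervals, might look like the sketch below. The feature names in `ACCIDENT_FEATURES`, the dictionary-based row format, and the function name are hypothetical; a real pipeline would more likely use a dataframe reindex, but the logic is the same.

```python
from itertools import product
from typing import Dict, Iterable, List, Tuple

Key = Tuple[int, int]  # (zone id, interval index)
# Hypothetical names for the accident-related features listed in the text.
ACCIDENT_FEATURES = ("severity", "accident_count", "duration", "distance")

def build_full_grid(
    accidents: Dict[Key, Dict[str, float]],  # only (zone, interval) pairs with accidents
    context: Dict[Key, Dict[str, float]],    # geographical, temporal, congestion, weather
    zones: Iterable[int],
    intervals: Iterable[int],
) -> List[Dict[str, float]]:
    """One row per (zone, interval); accident-free rows get zeros for accident features."""
    rows = []
    for zone, t in product(zones, intervals):
        acc = accidents.get((zone, t), {})
        row: Dict[str, float] = {"zone": zone, "interval": t}
        row.update({f: acc.get(f, 0.0) for f in ACCIDENT_FEATURES})
        row.update(context.get((zone, t), {}))  # other sources fill the remaining features
        rows.append(row)
    return rows

accidents = {(1, 2): {"severity": 2, "accident_count": 1, "duration": 45.0, "distance": 0.3}}
context = {(z, t): {"temperature": 20.0 + t} for z in (0, 1) for t in range(3)}
rows = build_full_grid(accidents, context, zones=[0, 1], intervals=range(3))
print(len(rows))                               # 6
print(sum(r["accident_count"] for r in rows))  # 1.0
```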

3.5. Accident Duration Distribution

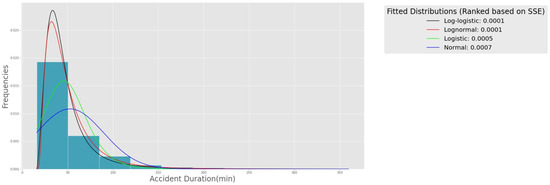

Accident duration is an essential factor in determining impact. It is therefore worth examining how its distribution in our dataset compares with the datasets used in the literature. Previous studies found that a log-normal distribution best models accident duration [2,47,48].

This section investigates this phenomenon using the input data and compares the findings with [2]. First, we divide the duration dataset into $K$ classes based on Doane’s formula [47]:

$$K = 1 + \log_2 n + \log_2\left(1 + \frac{|g_1|}{\sigma_{g_1}}\right)$$

where $n$ stands for the total number of observations and $g_1$ refers to the estimated third-moment skewness of the observations, whose standard error is:

$$\sigma_{g_1} = \sqrt{\frac{6(n-2)}{(n+1)(n+3)}}$$

Class $k$ is specified by its lower bound $l_k$ and upper bound $u_k$, so that an observation belongs to class $k$ when $l_k \le D < u_k$, where $D$ is the accident duration. Then, the frequency of each class is calculated, and the sum of squared errors between the frequency of each class and the probability density function of the fitted distribution is computed.
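The binning-and-SSE procedure can be sketched with the Python standard library. Several assumptions apply: the data are synthetic log-normal draws rather than the study's durations; only two candidate distributions with closed-form maximum-likelihood fits (log-normal and exponential) are included, since log-logistic and other families would need numerical fitting (for example with SciPy); $K$ is rounded up to an integer; and each class's relative frequency is compared with the probability the fitted distribution assigns to that class (its PDF integrated over $[l_k, u_k)$), which is one reasonable reading of the comparison described above.

```python
import math
import random
from statistics import mean, pstdev
from typing import Callable, List

Cdf = Callable[[float], float]

def doane_bins(data: List[float]) -> int:
    """Number of classes K from Doane's formula, rounded up to an integer."""
    n = len(data)
    mu, sd = mean(data), pstdev(data)
    g1 = sum(((x - mu) / sd) ** 3 for x in data) / n         # sample skewness
    sigma_g1 = math.sqrt(6 * (n - 2) / ((n + 1) * (n + 3)))  # its standard error
    return math.ceil(1 + math.log2(n) + math.log2(1 + abs(g1) / sigma_g1))

def fit_lognormal(data: List[float]) -> Cdf:
    logs = [math.log(x) for x in data]
    mu, s = mean(logs), pstdev(logs)  # maximum-likelihood estimates
    return lambda x: 0.5 * (1 + math.erf((math.log(x) - mu) / (s * math.sqrt(2))))

def fit_exponential(data: List[float]) -> Cdf:
    rate = 1 / mean(data)  # maximum-likelihood estimate
    return lambda x: 1 - math.exp(-rate * x)

def sse(data: List[float], cdf: Cdf) -> float:
    """Sum of squared differences between each class's relative frequency and
    the probability the fitted distribution assigns to that class."""
    k = doane_bins(data)
    lo, hi = min(data), max(data)
    width = (hi - lo) / k
    counts = [0] * k
    for x in data:
        counts[min(int((x - lo) / width), k - 1)] += 1  # the maximum goes in the last class
    total = 0.0
    for i, c in enumerate(counts):
        lower, upper = lo + i * width, lo + (i + 1) * width  # class bounds l_k, u_k
        total += (c / len(data) - (cdf(upper) - cdf(lower))) ** 2
    return total

# Synthetic durations in minutes, drawn from a log-normal purely for illustration.
rng = random.Random(1)
durations = [rng.lognormvariate(3.5, 0.8) for _ in range(2000)]
print("K =", doane_bins(durations))
print("log-normal SSE: ", sse(durations, fit_lognormal(durations)))
print("exponential SSE:", sse(durations, fit_exponential(durations)))
```

On data generated this way, the log-normal SSE should come out well below the exponential SSE, mirroring the kind of comparison summarized in Figure 4.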

The sum of squared errors (SSE) calculated for four selected distributions is shown in Figure 4. The log-normal and log-logistic distributions have the lowest SSE and therefore describe the accident duration data best. This is consistent with the findings of Zhang et al. [2], who used the Akaike Information Criterion (AIC) and the Bayesian Information Criterion (BIC) and found the log-normal and log-logistic distributions to be the best- and second-best-fitting distributions, respectively.

Figure 4.

Four best-fitting distributions for the accident duration data and their sums of squared errors.

4. Label Development of Post-Accident Impact

The previous section discussed data sources, preprocessing, and augmentation steps. However, we have not yet defined “accident impact” as a quantitative feature or attribute. To the best of our knowledge, there is no established measure of the impact of an accident on its surrounding traffic flow in the real world. A number of studies address the effect of traffic congestion on accidents [49,50,51], while the effect of traffic accidents on traffic congestion has rarely been studied [23,52]. Of these few studies, some used accident duration as an indicator of an accident’s impact, while others used speed changes in the surrounding traffic flow. We believe that neither approach is comprehensive or faithful enough to describe how traffic flow is affected by the occurrence of an accident.

In this study, we propose a novel “accident impact” feature based on the delay caused by accidents. This is achieved by using the congestion dataset to find a function that estimates delay from attributes in the accident dataset. The process is explained in detail below.
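The functional form of the delay estimate is not given in the text above, so the sketch below assumes, purely for illustration, a linear model $\text{delay} \approx b_0 + b_1 \cdot \text{severity} + b_2 \cdot \text{duration} + b_3 \cdot \text{distance}$ fitted by ordinary least squares. The function names, the toy records, and the linear form itself are hypothetical. The sketch only shows the general idea of learning a mapping from accident attributes to congestion delay; it is not the gamma definition used in this work.

```python
from typing import List, Sequence

def solve(a: List[List[float]], b: List[float]) -> List[float]:
    """Gaussian elimination with partial pivoting for a small square system."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x

def fit_delay_model(features: Sequence[Sequence[float]],
                    delays: Sequence[float]) -> List[float]:
    """OLS fit of delay ~ b0 + b1*severity + b2*duration + b3*distance (normal equations)."""
    rows = [[1.0, *f] for f in features]  # prepend an intercept column
    p = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(p)] for i in range(p)]
    xty = [sum(r[i] * y for r, y in zip(rows, delays)) for i in range(p)]
    return solve(xtx, xty)

def predict_delay(coef: Sequence[float], severity: float,
                  duration: float, distance: float) -> float:
    return coef[0] + coef[1] * severity + coef[2] * duration + coef[3] * distance

# Toy (severity, duration in min, distance in mi) records with noise-free delays.
features = [(1, 30, 0.0), (2, 45, 0.5), (2, 90, 1.2), (3, 60, 0.3), (4, 120, 2.0), (3, 200, 0.8)]
delays = [2 + 3 * s + 0.1 * d + 4 * x for s, d, x in features]
coef = fit_delay_model(features, delays)
print([round(c, 6) for c in coef])
print(predict_delay(coef, 2, 60, 1.0))
```

Solving the normal equations directly is fine for four coefficients; for larger or ill-conditioned problems, a QR-based least-squares solver would be the safer choice.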

4.1. Accident Impact: A Derived Factor

There are three attributes in our dataset that can be used to determine “impact”:

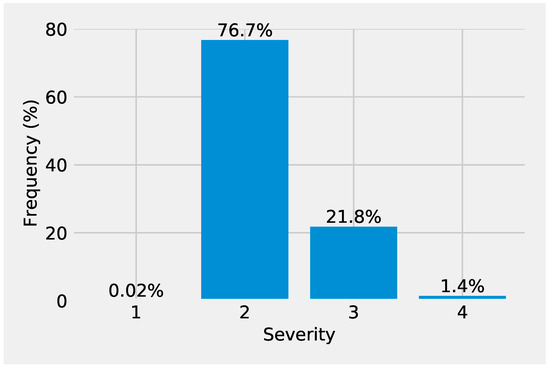

- Severity: This categorical attribute describes severity in terms of the delay to free-flow traffic caused by an accident. It is defined by the original data providers based on real-time traffic conditions and reports, where 1 indicates minimal disruption and 4 indicates significant traffic delays. Although highly relevant, its distribution is skewed (see Figure 5), and it is coarse-grained (i.e., it takes only a few categorical values).

Figure 5. Distribution of severity values in the accident dataset. Severity is an ordinal variable ranging from 1 to 4, representing the level of impact on traffic rather than physical injury or property damage as defined by the dataset providers. Severity 1 = least impact; Severity 2 = minor or manageable delay; Severity 3 = moderate or extended delay; Severity 4 = significant traffic disruption.

- Duration: The duration of a traffic accident is the period from when the accident was first reported until its impact was cleared from the road network. In this sense, duration can serve as another indicator of impact. Note that a long duration does not necessarily indicate a significant impact, as it may reflect the type of location or limited accessibility. Generally speaking, however, duration is positively correlated with impact.

- Distance: Distance is the length of road affected by an accident. Like duration, a longer distance is positively correlated with higher impact; however, a high-impact accident does not necessarily affect a long stretch of road.

All three attributes can, to some extent, represent the impact of an accident, but none of them alone suffices to define it. We therefore propose building a model that estimates accident impact from these features.

4.2. Delay as a Proxy for Impact

To estimate accident impact, we define a function $F$ that maps the three input features to a target value $\gamma$, which we refer to as “impact”:

$$\gamma = F(\text{severity}, \text{duration}, \text{distance})$$

The question, then, is how to find $F$. Although there is no straightforward way to estimate $F$ from accident data alone, fitting it on congestion data (see Section 3.1.2) lets us exploit an additional signal. For congestion events, our data include a human-reported description of each incident that states the “delay” (in minutes) relative to typical traffic flow (see Table 6 for examples). To the best of our knowledge, these descriptions are generated systematically by traffic officials, so we consider them reliable and accurate. Assuming that impact in the congestion domain is represented by delay (a fair assumption given our definition of impact and what delay represents), we can fit $F$ on congestion data using delay as the target. Note that “delay” is available only in the congestion dataset; the goal is to derive it for accidents and use it as their target value.
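Extracting the reported delay from a description string can be sketched with a regular expression. The description wording assumed here (a number followed by "min", "mins", "minute", or "minutes") is hypothetical, since the actual format of the congestion descriptions is only illustrated in Table 6; the pattern and the `extract_delay_minutes` name are illustrative and would need to be adapted to the real text.

```python
import re
from typing import Optional

# Hypothetical pattern: a (possibly decimal) number followed by a minutes unit.
DELAY_PATTERN = re.compile(r"(\d+(?:\.\d+)?)\s*(?:minutes?|mins?)\b", re.IGNORECASE)

def extract_delay_minutes(description: str) -> Optional[float]:
    """Return the first delay (in minutes) mentioned in a description, or None."""
    match = DELAY_PATTERN.search(description)
    return float(match.group(1)) if match else None

if __name__ == "__main__":
    print(extract_delay_minutes("Stationary traffic; delays of 12 minutes"))
    print(extract_delay_minutes("Road closed"))
```

Descriptions without a parsable delay return `None`, so such records can be dropped before fitting $F$.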

Table 6.

Examples of congestion events and features used to build function F.

After $F$ is fitted on congestion events, it is used to estimate the impact of accidents (represented by $\gamma$). According to our data, congestion and accident events are of the same nature, given their attributes and the sources used to collect them. Thus, fitting $F$ on one event type and applying it to the other is a reasonable design choice.

4.3. Estimating Delay

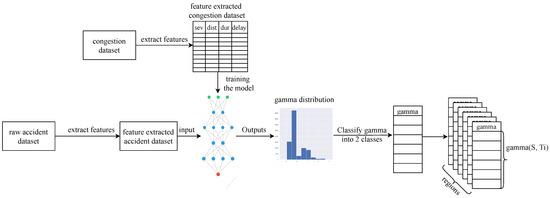

The reported delays associated with congestion events are extracted from their descriptions and used as target values. We find $F$ by fitting different models to these data and selecting the one with the lowest Mean Squared Error (MSE) and Mean Absolute Error (MAE). The overall process is described in Figure 6. Two models are used to estimate $F$:

Figure 6.

The process of fitting $F$ on congestion events and applying it to accident events to predict impact (i.e., $\gamma$).

- Linear regression (LR) model: Given our input features, a linear model is a natural first choice to estimate $F$, so we use a linear regression model for this purpose.

- Artificial Neural Network (ANN): To examine the effect of non-linearity on estimating $F$, we also used a multilayer perceptron with four layers of three neurons each and a single neuron in the last layer. We used the Adam optimizer [53] with a learning rate of and trained the model for 200 epochs.

In total, 48,109 congestion records collected between June 2018 and August 2019 are used to train these models. The algorithm uses of the data for training and for evaluation. Table 7 shows the results of the different models in predicting delay from the three input features. Based on these results, the non-linear model (i.e., ANN) is selected to estimate the delay (i.e., $\gamma$).
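The model-selection step (score each candidate by MSE and MAE on held-out data) can be sketched as follows. Only the linear-regression candidate is implemented here, via ordinary least squares with `numpy.linalg.lstsq`; the ANN would be scored with the same `evaluate` helper. The 20% test fraction is a placeholder, since the actual split is not given here, and all function names are hypothetical.

```python
import numpy as np

def mse(y_true, y_pred):
    return float(np.mean((np.asarray(y_true) - np.asarray(y_pred)) ** 2))

def mae(y_true, y_pred):
    return float(np.mean(np.abs(np.asarray(y_true) - np.asarray(y_pred))))

def holdout_split(X, y, test_fraction, seed=0):
    """Random train/test split by shuffled indices."""
    rng = np.random.default_rng(seed)
    idx = rng.permutation(len(X))
    n_test = int(round(test_fraction * len(X)))
    test, train = idx[:n_test], idx[n_test:]
    return X[train], X[test], y[train], y[test]

def fit_linear(X, y):
    """Ordinary least squares with an intercept; returns a predict function."""
    A = np.column_stack([np.ones(len(X)), X])
    coef, *_ = np.linalg.lstsq(A, y, rcond=None)
    return lambda X_new: np.column_stack([np.ones(len(X_new)), X_new]) @ coef

def evaluate(fit_fn, X, y, test_fraction=0.2):
    """Fit on the training part and report MSE/MAE on the held-out part."""
    X_tr, X_te, y_tr, y_te = holdout_split(X, y, test_fraction)
    predict = fit_fn(X_tr, y_tr)
    y_hat = predict(X_te)
    return {"MSE": mse(y_te, y_hat), "MAE": mae(y_te, y_hat)}
```

Here `X` would hold the (severity, duration, distance) columns of the congestion records and `y` the extracted delays; the candidate with the lower MSE and MAE is kept.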

Table 7.

Result of using ANN and LR models to predict delay (as a proxy for impact) on congestion data.

We now discuss why it is reasonable to fit the function on congestion events and apply it to accident events. First, in our data, accidents and congestion events resemble each other, given their attributes and the sources used to collect them. Second, because the function is trained with the reported congestion delay as its target ($\gamma$), its output for similar inputs from another type of traffic event is also a delay, which we consider a better indicator of the impact of a traffic accident than duration or speed changes.

After obtaining $\gamma$ values for accident events, we categorize them into two classes that serve as more detectable labels of accident impact: “medium severity” and “high severity”. A $\gamma$ value below the median is labeled “medium severity”, and a value above the median is labeled “high severity”. Although a finer-grained categorization (e.g., three or four classes) is possible, our empirical studies showed that two categories best represent our data.

Note that $\gamma$, as a real value, is not a suitable target for accident impact prediction: the exact delay (in seconds or minutes) depends on numerous contributing factors that cannot be collected in advance. We therefore classify $\gamma$ into two classes (i.e., “medium severity” and “high severity”) to address this inherent limitation of the accident impact prediction task.
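The median-based labeling can be sketched as follows. The integer codes (1 for “medium severity”, 2 for “high severity”, with 0 reserved for accident-free intervals) follow the gamma classes used later in the paper; how values exactly equal to the median are handled is not specified, so assigning them to “high severity” is an assumption, as is the `gamma_to_class` name.

```python
import numpy as np

MEDIUM, HIGH = 1, 2  # gamma-class codes; 0 is reserved for accident-free intervals

def gamma_to_class(gamma_values):
    """Binarize estimated delays (gamma) of accident events at their median."""
    gamma_values = np.asarray(gamma_values, dtype=float)
    median = np.median(gamma_values)
    # Assumption: values equal to the median are labeled "high severity".
    return np.where(gamma_values < median, MEDIUM, HIGH)

if __name__ == "__main__":
    print(gamma_to_class([5.0, 1.0, 3.0, 2.0, 4.0]))
```

In practice the median would be computed once on the training accidents and reused for later data, so that class boundaries do not shift between splits.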

4.4. Scope of Predicted Accident Impact

It is important to clarify that this study focuses on predicting the traffic-related consequences of accidents, not their human or medical outcomes. Specifically, our model estimates the post-accident impact on traffic flow, such as increased congestion or travel delay, using a derived indicator, $\gamma$. As outlined in Section 4.2, this indicator is estimated from severity, duration, and affected distance by a function trained on the delays reported for congestion events. Our framework does not attempt to predict health outcomes such as injuries, fatalities, or emergency response outcomes, as this would require detailed individual-level incident and medical data, which are not available in the datasets used. Instead, we focus on developing a real-time, data-driven method to quantify and forecast traffic disruption caused by accidents, a perspective aligned with applications in traffic management and urban mobility planning. Future extensions of this work could incorporate the prediction of health outcomes if appropriate and reliable data become available.

5. Accident Impact Prediction Methodology

This section presents our modeling approach for predicting the impact of traffic accidents using spatiotemporal urban data. The nature of this task poses two key challenges. First, the input data is high-dimensional and heterogeneous, combining weather, congestion, road structure, and historical accident records across both space and time. Second, the learning objective involves classifying both the occurrence and the severity of accidents in the presence of significant class imbalance. Addressing these challenges requires a model that can learn both sequential dependencies and spatial structure from complex urban data [16].

To this end, we propose a two-stage cascade model that leverages the complementary strengths of LSTM and CNN architectures. The first stage uses an LSTM network to detect the likelihood of an accident in the next interval. LSTMs are well-suited for this purpose, as they have been shown to effectively capture long-term temporal dependencies in traffic and transportation systems [54,55,56]. By learning from recent sequences of temporal features, this model identifies patterns that precede accident occurrences.

The second stage of the cascade uses a CNN to predict the impact severity of accident events identified in the first stage. CNNs are commonly used for spatial pattern recognition and have demonstrated strong performance in traffic applications where features are arranged over grids or maps [11,57,58]. In our setup, spatial and short-term temporal features such as congestion delay, road layout, and local conditions are encoded into structured input, allowing the CNN to learn discriminative patterns associated with different impact levels.

This modular design allows each component to specialize in a subtask: temporal event detection or spatial impact classification. It also improves interpretability, reduces overfitting risk by narrowing the prediction scope of each stage, and helps to mitigate the challenges associated with imbalanced data.

In the remainder of this section, we first introduce key background concepts related to LSTM and CNN architectures, then describe how input data is structured and labeled, and finally detail the model architecture and training procedure.

5.1. Basic Concepts

5.1.1. Long Short-Term Memory

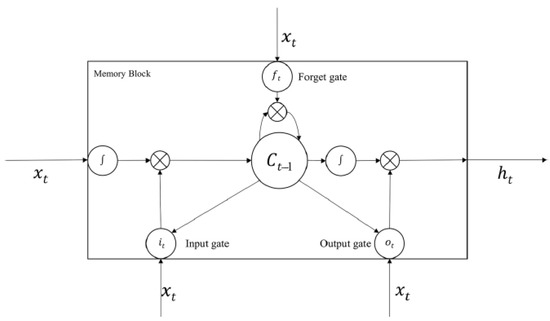

The LSTM model proposed by Hochreiter and Schmidhuber [59] is a variant of the recurrent neural network (RNN) model. It maintains a specialized memory cell whose parameters are learned with backpropagation through time, and it is designed to avoid the vanishing gradient problem of the original RNN. The key to LSTMs is the cell state, which allows information to flow along the network. The LSTM can remove information from or add information to the cell state, carefully regulated by structures called gates: the input gate, the forget gate, and the output gate. The structure of an LSTM unit at each time step is shown in Figure 7. The LSTM maps an input sequence to an output probability vector by computing the unit activations with the following equations, where $t$ is the time-step index, $C_t$ is the cell state, $h_t$ is the hidden state, and $[h_{t-1}, X_t]$ denotes concatenation:

$$
\begin{aligned}
f_t &= \sigma(W_f [h_{t-1}, X_t] + b_f) \\
i_t &= \sigma(W_i [h_{t-1}, X_t] + b_i) \\
\tilde{C}_t &= \tanh(W_C [h_{t-1}, X_t] + b_C) \\
C_t &= f_t \odot C_{t-1} + i_t \odot \tilde{C}_t \\
o_t &= \sigma(W_o [h_{t-1}, X_t] + b_o) \\
h_t &= o_t \odot \tanh(C_t)
\end{aligned}
$$

where $X$ is the input vector, and $W$ and $b$ are the weight matrices and bias vectors, respectively, learned during training. $\sigma$ and $\tanh$ are the sigmoid and hyperbolic tangent functions, respectively, and $\odot$ denotes the element-wise product of vectors. The forget gate controls the extent to which the previous memory cell is forgotten, the input gate determines how much each unit is updated, and the output gate controls the exposure of the internal memory state. Because the gate values vary at each time step, the model can learn to represent information over several time steps.
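A single LSTM time step can be sketched in NumPy using the standard (non-peephole) gate formulation; that this is the exact variant used here is an assumption, and the dictionary-of-weights layout and the `lstm_step` name are hypothetical. Libraries such as Keras implement the same computation far more efficiently.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x_t, h_prev, c_prev, W, b):
    """One LSTM time step.

    W maps gate name -> weight matrix of shape (hidden, hidden + input), applied
    to the concatenation [h_prev, x_t]; b maps gate name -> bias of shape (hidden,).
    """
    z = np.concatenate([h_prev, x_t])
    f = sigmoid(W["f"] @ z + b["f"])        # forget gate
    i = sigmoid(W["i"] @ z + b["i"])        # input gate
    c_tilde = np.tanh(W["c"] @ z + b["c"])  # candidate cell state
    o = sigmoid(W["o"] @ z + b["o"])        # output gate
    c_t = f * c_prev + i * c_tilde          # element-wise products
    h_t = o * np.tanh(c_t)                  # exposed hidden state
    return h_t, c_t
```

With all weights and biases at zero, every gate outputs 0.5 and the candidate is 0, so the cell state is simply halved; this is a quick sanity check of the wiring.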

Figure 7.

LSTM unit structure.

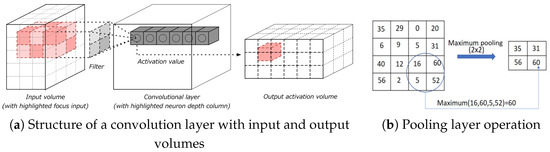

5.1.2. Convolutional Neural Network

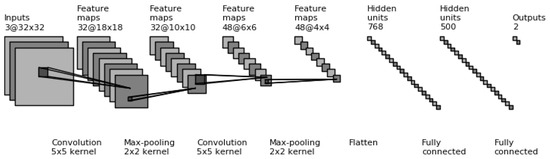

A CNN is a multi-layer neural network with two key components for feature extraction: convolution and pooling layers. Figure 8 shows the overall structure of a convolutional neural network. In the convolution layer, the input of each neuron is locally connected to the previous layer, and the layer extracts local features. The pooling layer reduces the sensitivity of these features to small local variations and performs secondary feature extraction [60]. The number of convolution and pooling layers depends on the specific problem definition and objectives. First, the model uses a convolutional layer to generate latent features from the input (Figure 9a). Then, a sub-sampling (pooling) layer is applied to the convolutional output to extract higher-level features for the classification or recognition task (Figure 9b).
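The two building blocks can be sketched in NumPy for a single-channel input: a "valid" convolution (implemented, as in most deep learning libraries, as cross-correlation) followed by non-overlapping max pooling. The function names are hypothetical, and in a real CNN the kernel values would be learned rather than fixed.

```python
import numpy as np

def conv2d_valid(x, kernel):
    """2D 'valid' convolution (cross-correlation) of a single-channel input."""
    kh, kw = kernel.shape
    out_h, out_w = x.shape[0] - kh + 1, x.shape[1] - kw + 1
    out = np.empty((out_h, out_w))
    for r in range(out_h):
        for c in range(out_w):
            # Each output depends only on a local kh x kw patch of the input.
            out[r, c] = np.sum(x[r:r + kh, c:c + kw] * kernel)
    return out

def max_pool2d(x, size=2):
    """Non-overlapping max pooling; rows/columns that do not fill a window are dropped."""
    h, w = x.shape[0] // size, x.shape[1] // size
    trimmed = x[:h * size, :w * size]
    return trimmed.reshape(h, size, w, size).max(axis=(1, 3))

if __name__ == "__main__":
    grid = np.arange(16, dtype=float).reshape(4, 4)
    features = conv2d_valid(grid, np.ones((2, 2)))  # local feature extraction
    print(features)
    print(max_pool2d(grid))                         # secondary feature extraction
```

Stacking several such convolution (and optional pooling) stages is what gives the impact model in Section 5.3 its hierarchical features.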

Figure 8.

Overall structure of a convolutional neural network.

Figure 9.

Illustration of key components in a convolutional neural network. (a) Convolution layer structure. (b) Pooling layer operation.

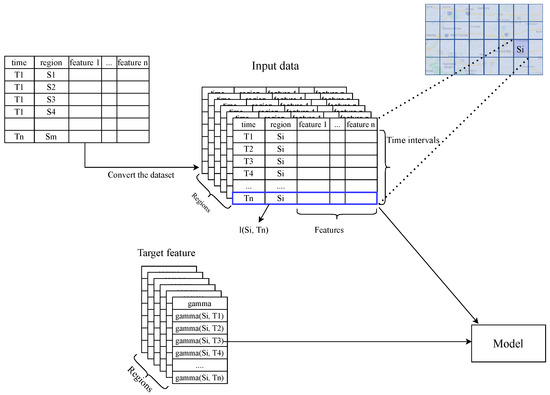

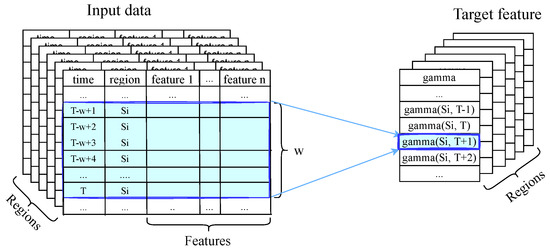

5.2. Model Input and Output

As described in Section 3.3, we create a vector representation for an accident in geographical region $s$ during time interval $t$. Each vector has a corresponding gamma-class label that indicates the intensity of the delay in traffic flow after an accident. Accident-free data vectors are labeled 0, meaning that no congestion is expected. Although this may not always hold in the real world, it simplifies the problem formulation and allows data vectors to be labeled efficiently. The model predicts the gamma class for time interval $t$ in region $s$ given the sequence of the $w$ previous time intervals in region $s$, i.e., from the input vectors for intervals $t-w, \dots, t-1$. Figure 10 shows how the dataset is converted into a 3D structure of input vectors in the time and space domains; the gamma-class labels are structured in the same order. Figure 11 shows an arbitrary input sequence and its corresponding target in this 3D data structure.
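The sliding-window construction of model inputs and targets can be sketched as below, assuming the 3D structure is stored as a NumPy array of shape (regions, intervals, features) and the gamma-class labels as an array of shape (regions, intervals). The array layout and the `build_sequences` name are assumptions; the region index is returned separately because the first cascade model consumes it through an embedding layer.

```python
import numpy as np

def build_sequences(features, labels, w):
    """features: (regions, intervals, n_features); labels: (regions, intervals).

    Returns inputs of shape (n_samples, w, n_features), targets (n_samples,),
    and the region index of each sample.
    """
    n_regions, n_intervals, _ = features.shape
    X, y, region_ids = [], [], []
    for s in range(n_regions):
        for t in range(w, n_intervals):
            X.append(features[s, t - w:t])  # the w previous intervals in region s
            y.append(labels[s, t])          # gamma class of interval t in region s
            region_ids.append(s)
    return np.stack(X), np.array(y), np.array(region_ids)
```

Each region contributes one sample per interval that has a full history of $w$ intervals, so the first $w$ intervals of every region appear only as inputs.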

Figure 10.

Converting the dataset into a 3D structure of input vectors and gamma-class labels in the time and space domains. Model inputs and outputs are extracted from this 3D structure.

Figure 11.

A sequence of input vectors as the model input and the corresponding gamma-class label as its target.

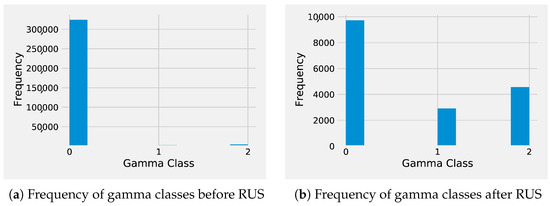

We use accidents from February 2019 to August 2019 as the training time frame. This 27-week period includes 13,026 accident representations and 319,194 non-accident representations. The frequency of each gamma class is shown in Figure 12a and indicates that the data are highly imbalanced. Various methods exist for handling class imbalance in classification or pattern recognition, including (i) removing or merging samples in the majority class, (ii) duplicating samples in minority classes, and (iii) adjusting the cost function so that misclassifying minority classes is more costly than misclassifying majority instances. In this study, random under-sampling (RUS) yielded better outcomes, so we use it to mitigate the class imbalance of the gamma classes. Using this approach, the ratio of accident to non-accident events increased from to . Figure 12b shows the frequency of each gamma class after under-sampling. In addition, we assign a weight to each class in the models' loss functions (and tune these weights during training) to further address the imbalance. The following section describes class weighting in more detail.
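Random under-sampling can be sketched as follows. The target ratio of kept majority (non-accident, class 0) samples to minority samples is a hypothetical parameter, because the exact ratios used in this study are not given in this section; all minority samples are kept, and the `random_undersample` name is illustrative.

```python
import numpy as np

def random_undersample(X, y, majority_label=0, ratio=1.0, seed=0):
    """Randomly drop majority-class samples so that
    (#majority kept) = ratio * (#minority samples), keeping every minority sample."""
    rng = np.random.default_rng(seed)
    y = np.asarray(y)
    majority_idx = np.flatnonzero(y == majority_label)
    minority_idx = np.flatnonzero(y != majority_label)
    n_keep = min(len(majority_idx), int(ratio * len(minority_idx)))
    kept = rng.choice(majority_idx, size=n_keep, replace=False)
    idx = np.sort(np.concatenate([kept, minority_idx]))  # preserve original order
    return X[idx], y[idx]
```

Under-sampling is applied only to the training data; the test period keeps its natural class distribution so that the reported precision and recall remain realistic.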

Figure 12.

Comparing the frequency distribution of gamma classes before and after random under-sampling.

5.3. Model Development

Distinguishing accidents from non-accidents is difficult because traffic accidents are affected by many complex factors, some of which cannot be observed or collected in advance (e.g., driver distraction). A single model that predicts the gamma class in one step may therefore perform poorly. Hence, we propose a cascade model with two deep neural network components, in which the output of the first model serves as the input to the second. The first component (referred to as a “layer” in our cascade design) distinguishes accidents from non-accident events; that is, it detects whether there will be an accident in the next two hours given information from the w previous intervals. If the first model classifies the input as an accident, the input is passed to the second component, which predicts the accident impact (i.e., the gamma class) for the accident events detected by the previous layer. The structure of the proposed accident impact prediction model is shown in Figure 13, and its two components are described below.
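Inference through the cascade can be sketched as follows, with the two trained models abstracted as callables. The 0.5 decision threshold and the function names are assumptions for illustration; intervals that the first stage does not flag are assigned gamma class 0.

```python
import numpy as np

def cascade_predict(x_batch, accident_prob_fn, impact_class_fn, threshold=0.5):
    """Two-stage prediction returning gamma classes in {0, 1, 2}.

    accident_prob_fn: stage 1, returns P(accident) per sample (hypothetical callable).
    impact_class_fn: stage 2, returns class 1 or 2 per sample (hypothetical callable).
    """
    p_accident = np.asarray(accident_prob_fn(x_batch))
    flagged = p_accident >= threshold
    gamma = np.zeros(len(p_accident), dtype=int)  # non-accident predictions -> class 0
    if flagged.any():
        # Only intervals flagged by stage 1 are passed to stage 2.
        gamma[flagged] = impact_class_fn(x_batch[flagged])
    return gamma
```

Because stage 2 only sees flagged intervals, a missed accident in stage 1 cannot be recovered at inference time, which is one reason the first model is weighted toward accident recall.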

Figure 13.

Cascade model overview: the first model predicts whether the next interval will have an accident (i.e., label = 1) or not (i.e., label = 0). Predictions with label = 1 are then passed to the second model, which predicts the intensity of accident impact (i.e., the gamma class). Non-accident predictions of the first model are assigned gamma class 0.

- (A)

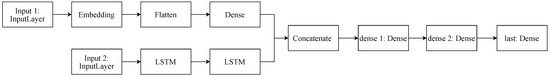

- Label Prediction: The first model is a binary LSTM classifier that predicts whether the next interval will have an accident (i.e., label = 1) or not (i.e., label = 0).

We adopt a cascade modeling approach instead of a single-step classifier to decompose the task into two more focused subtasks: detecting the occurrence of accidents and then assessing their impact. This decoupled structure allows each model to specialize (Model 1 learns patterns of accident likelihood, while Model 2 focuses on severity estimation), leading to improved performance, especially in imbalanced and noisy settings. Moreover, this setup provides greater interpretability and flexibility, enabling the detection and impact stages to be tuned and evaluated separately.

In accident prediction, it is vital that the model predict an accident in advance whenever one is likely to happen. Therefore, the first model focuses on detecting accident events, and we use a weighting mechanism in which the weight of the accident class is higher than that of the non-accident class. Class weights guide the model to compensate for the imbalance in the data, in particular by penalizing missed accident predictions more heavily than false alarms. There is no fixed theoretical formula for choosing class weights; they are typically treated as hyperparameters and tuned empirically. To this end, we conducted a grid search over weight ratios from 1:1 to 1:8 (non-accident:accident) and selected the combination that yielded the best precision–recall trade-off on a validation set. Using this grid search, we found the optimal weights to be 1 and 3 for the non-accident and accident classes, respectively. A similar process was applied to the second model, where we explored different weighting schemes to balance the three gamma classes (non-impact, medium-impact, and high-impact) and selected the weights that minimized the misclassification of impactful accidents while keeping false positives low.

The first model takes two inputs: (a) the index of a given zone, and (b) the sequence of temporal feature vectors for the w previous intervals, from which the zone index is excluded. The first input is passed through an embedding layer with one entry per cell in R, the set of all spatial grid cells, allowing the model to learn a distributed representation of regional characteristics such as spatial heterogeneity, traffic dynamics, and environmental context. The second input is a sequence of w vectors, each with 35 features, which is processed by two LSTM layers with 12 and 24 neurons, respectively. The resulting sequence encoding is concatenated with the embedded zone representation and passed through two fully connected layers, each with 25 neurons. Batch normalization is applied throughout the network to improve convergence stability and reduce internal covariate shift. The structure of the first model is shown in Figure 14.

Figure 14. Structure of the first model (layer) in the cascade model. The index of a given zone is fed into an embedding layer to learn latent features for that zone during training. The other features (e.g., weather conditions and congestion data) are fed into LSTM layers. The outputs of these layers are concatenated and passed to fully connected layers to predict the probability of an accident.

The overall architecture was selected through extensive hyperparameter tuning on a validation dataset. Simpler or shallower variants were evaluated but failed to capture the complex temporal dependencies needed for accurate accident prediction. To mitigate overfitting, we employed batch normalization and early stopping, which are well-established regularization methods in deep learning. Although the chosen architecture adds computational cost and training time, this trade-off was necessary to achieve better predictive accuracy on highly imbalanced and noisy real-world traffic data.

- (B)

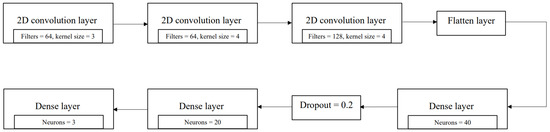

- Impact Prediction: The second model is a three-class CNN classifier that predicts the gamma class of the next interval for intervals flagged as accident-prone by the first model. Although its input consists primarily of samples predicted as accidents, we also include selected non-accident cases to help correct potential misclassifications by the first model and improve the overall reliability of the cascade.

The weight of each class is assigned based on its frequency and importance in our problem setup. Class imbalance in the impact prediction task can bias predictions toward the majority class (i.e., non-impact). To mitigate this, we incorporated class weights into the loss function to amplify the contribution of under-represented classes during training. To determine the optimal weights, we conducted an extensive grid search, varying the weights for gamma classes 1 and 2 from 1.0 to 5.0 (in increments of 0.5) while adjusting the weight of class 0 slightly, between 0.5 and 1.0, to maintain baseline stability. This search aimed to balance the model's sensitivity to impactful accidents (classes 1 and 2) against the need to avoid excessive false positives. The best-performing combination was selected based on validation performance, prioritizing recall for impactful cases. The optimal weights were found to be 0.7, 4.5, and 3.5 for gamma classes 0, 1, and 2, respectively.

This CNN consists of three convolutional layers, followed by a flattening operation and three fully connected (dense) layers, with one dropout layer applied before the final two layers. The architecture is designed to extract hierarchical spatial and short-term temporal patterns from the input feature maps, enabling the model to distinguish between different levels of post-accident impact. The specific configuration was determined through extensive hyperparameter tuning on a validation set. Simpler architectures with fewer convolutional layers or reduced capacity were tested but performed worse, particularly in capturing the nuances of medium- and high-impact events. To mitigate overfitting and stabilize training, we used early stopping and dropout layers, both of which are widely used and effective in deep learning models [57]. Although the depth of the network increases training time, we found this to be an acceptable trade-off for improved performance. In future work, we aim to investigate lighter-weight alternatives, such as MobileNet-style CNNs or dilated convolutions, to reduce computational cost without compromising prediction quality. The structure of the model is illustrated in Figure 15.

Figure 15. Structure of the second model (layer) in the cascade model; three consecutive convolutional layers extract spatial latent features from the data, and three fully connected layers then convert the output of the convolutional layers into a probability vector over the gamma classes.

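The class weighting used for both cascade components can be sketched in plain NumPy as a class-weighted cross-entropy, together with an enumeration of the candidate weight triples for the impact model. This is an illustration, not the authors' implementation: the 0.1 step for the class-0 weight is an assumption (the text only says it was adjusted between 0.5 and 1.0), and `score_fn` stands in for a hypothetical routine that trains the CNN with the given weights and returns a validation score. Keras exposes the same idea through the `class_weight` argument of `Model.fit`.

```python
import itertools
import numpy as np

def weighted_cross_entropy(probs, labels, class_weights):
    """Mean class-weighted cross-entropy.

    probs: (n, n_classes) predicted probabilities; labels: (n,) integer classes;
    class_weights: dict class -> weight, e.g. {0: 1.0, 1: 3.0} for the first model.
    """
    probs = np.clip(np.asarray(probs, dtype=float), 1e-12, 1.0)
    labels = np.asarray(labels)
    w = np.array([class_weights[int(c)] for c in labels])
    nll = -np.log(probs[np.arange(len(labels)), labels])  # per-sample loss
    return float(np.mean(w * nll))

def weight_grid():
    """Candidate (w0, w1, w2) triples for the impact model.
    Classes 1 and 2: 1.0 to 5.0 in steps of 0.5; class 0: 0.5 to 1.0 (0.1 step assumed)."""
    w0_values = [round(0.5 + 0.1 * k, 1) for k in range(6)]
    w12_values = [1.0 + 0.5 * k for k in range(9)]
    return list(itertools.product(w0_values, w12_values, w12_values))

def grid_search(score_fn):
    """Return the weight triple with the highest validation score."""
    return max(weight_grid(), key=score_fn)
```

Up-weighting a class multiplies its per-sample loss, so misclassifying a rare, impactful accident moves the parameters more than misclassifying a common non-impact interval.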

To the best of our knowledge, the proposed model is the first cascade model that can be applied in the real world to classify time intervals as accident or no-accident and then predict the severity of accidents using data available in real time. Moreover, given the type of data used, we believe that this model can be deployed in practice to make useful predictions.

6. Experiments and Results

In this section, we first describe our evaluation metrics, then provide details on the experimental setup, and lastly present our results followed by discussions.

6.1. Evaluation Metrics

Failing to predict an accident event (and its consequent impact on traffic flow) is more costly than falsely forecasting traffic delay in an area because of a false accident prediction. Hence, we focus on two objectives: (1) maximizing confidence when predicting a non-accident case (i.e., gamma class 0), and (2) minimizing the risk of failing to predict accident events (i.e., gamma classes 1 and 2). In terms of metrics, these objectives translate to (1) precision for class 0 and (2) recall for classes 1 and 2. Precision and recall for multi-class classification are formulated as follows:

$$\text{Precision}_i = \frac{n_{ii}}{\sum_{j} n_{ji}}, \qquad \text{Recall}_i = \frac{n_{ii}}{\sum_{j} n_{ij}}$$

where $n_{ij}$ is the number of samples with true class label $i$ and predicted class label $j$. We therefore focus on precision for class 0 and recall for the other two classes. That said, we still need to ensure reasonable recall for class 0 and acceptable precision for the other classes.

6.2. Experimental Setup

This section explains the experimental setup and the corresponding test runs. We used Keras, a Python-based deep learning library, to build the prediction models, and chose Adam [53] as the optimizer because its adaptive learning rates typically lead to faster and better convergence. To find the optimal model settings, we performed a grid search over the number of LSTM layers, {1, 2, 3}, the number of neurons in recurrent layers, {12, 18, 24}, the number of fully connected layers, {1, 2}, and the size of fully connected layers, {12, 25, 50}, for the first model; and over the number of convolutional layers, {1, 2, 3}, and the number of filters in each convolutional layer, {8, 16, 32, 64, 128}, for the second model. The first model (i.e., LSTM) was trained for 150 epochs, and the CNN model was trained for 25 epochs.

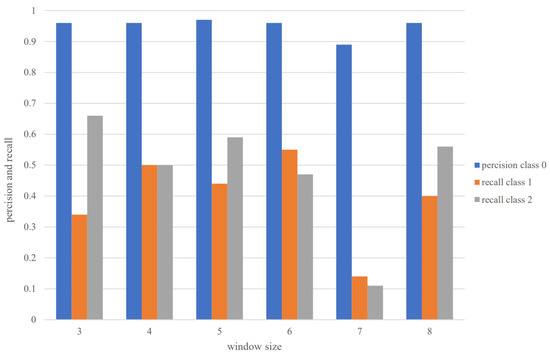

When building the input vectors, we use the past w intervals to build a vector and pass it to the cascade model to predict a label and a gamma class. What, then, is the right choice of w? To answer this question, we ran an experiment on the test data to compare the metrics introduced in the previous section across classes for different choices of w. The results are shown in Figure 16. According to these results, a value of 4 or 5 for w (i.e., information from the 8 to 10 h before the accident) is reasonable. Here, we chose to set , since it consistently provides reasonable results across all three gamma classes.

Figure 16.

Comparison of evaluation metrics (i.e., recall for classes 1 and 2 and precision for class 0) based on the cascade model for different choices of input length (or window size).

6.3. Baseline Models

In this section, random forest (RF), gradient boosting classifier (GBC), a CNN, and an LSTM are selected as baseline algorithms. RF and GBC are traditional models that generally provide satisfactory results on a variety of classification and regression problems. Furthermore, because our proposed cascade model leverages LSTM and CNN components, using these two as standalone models is a natural choice. We used Scikit-learn and Keras for off-the-shelf implementations of the baseline models. For the random forest, we used 200 estimators, a maximum depth of 12, and a minimum sample split of 2, with all other parameters set to their default values. For GBC, we used 300 estimators, a learning rate of 0.8, and a maximum depth of 2, with all other parameters set to their default values. The baseline CNN and LSTM models share the same structure as in the proposed cascade model, except that the last layer of the LSTM uses 3 neurons instead of 2, since this model predicts the three gamma classes in a single step.
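The tree-based baseline configurations translate directly to scikit-learn. The hyperparameters below are those stated above; `random_state` and the flattening of each (w × features) input sequence into a single feature vector are assumptions, since tree ensembles require 2D input, and the helper names are hypothetical.

```python
import numpy as np
from sklearn.ensemble import GradientBoostingClassifier, RandomForestClassifier

def make_baselines(random_state=0):
    """RF and GBC baselines with the reported hyperparameters;
    every unspecified parameter keeps its scikit-learn default."""
    rf = RandomForestClassifier(
        n_estimators=200, max_depth=12, min_samples_split=2, random_state=random_state
    )
    gbc = GradientBoostingClassifier(
        n_estimators=300, learning_rate=0.8, max_depth=2, random_state=random_state
    )
    return {"RF": rf, "GBC": gbc}

def flatten_sequences(X):
    """(n_samples, w, n_features) -> (n_samples, w * n_features) for tree models."""
    X = np.asarray(X)
    return X.reshape(len(X), -1)
```

Each model would then be trained with `fit(flatten_sequences(X_train), y_train)` on the three gamma classes, i.e., as a single-step classifier, which is exactly what the cascade is compared against.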

6.4. Results and Model Comparison

This section presents the evaluation results on the test data (September 2019 to November 2019), comparing our proposal against two traditional models (RF and GBC) and two deep neural network models (CNN and LSTM). A summary of the results is presented in Table 8 and Figure 17. The GBC model performed quite well in detecting non-accident events (i.e., gamma class 0), as reflected in its precision for this class. However, its results in predicting the other two classes are not satisfactory.

Table 8.

Accident impact prediction results based on precision for gamma class 0 and recall for gamma classes 1 and 2.

Figure 17.

Comparison of different models based on precision and recall in predicting gamma classes 0, 1, and 2.

The RF model does a better job of detecting accidents with medium or high impact. The LSTM model performs better than the other models (except the cascade model) in detecting accidents with medium or high impact, indicating the importance of accounting for temporal dependencies when encoding the input data. However, the standalone CNN does not perform satisfactorily as a single-step model.

The proposed cascade model provides satisfactory precision for gamma class 0 and markedly higher recall for the other two classes. These observations are important because, in accident prediction, failing to predict an accident in a zone (i.e., low recall) can have more serious consequences than falsely predicting an accident in an accident-free zone (i.e., low precision). Therefore, we generally prioritize high recall when predicting impact for accident events, together with high precision when deciding whether a time interval is associated with an accident. Note that, while high precision is still necessary for the non-zero classes (i.e., cases with reported accidents), a real-world framework must ensure acceptable recall for these cases.

6.5. Influencing Factor Analysis

In this section, we analyze the importance of different factors in our model design (i.e., components and input features). Such analysis can help improve our input features and model design, with the goal of building an effective framework for real-world applications.

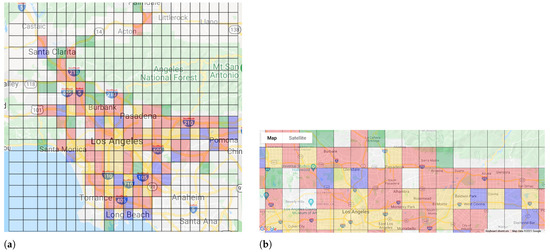

(A) Latent Location Representations: As discussed in Section 3.1 and Section 5.2, our dataset covers accident events in an extensive area containing urban road networks and high-speed roads. During model training, we derive latent representations for each region to encode essential spatiotemporal characteristics. The first analysis studies the quality of these representations and how they help distinguish between regions (or zones) with different spatial characteristics. We believe that these latent representations can show how well the model has learned the spatial factors involved in post-accident impacts. Therefore, we clustered the latent (embedding) vectors into four clusters and assigned each cluster a distinct color on a map for easier interpretation. Figure 18 shows the results of this analysis. As Figure 18a shows, most adjacent regions in our study area fall into the same cluster, indicating that they share similar accident-related characteristics, which is consistent with the principles of urban design. Moving away from the center toward the outskirts of the county, the clusters become more heterogeneous. This could result either from fewer accident reports (i.e., less data for the model to learn from and properly distinguish between regions) or from greater heterogeneity in the suburban road network. Figure 18b shows the clusters in more detail. Urban highways are mainly highlighted in yellow, while areas with a sparse road network are highlighted in green. Blue and red regions are mostly urban regions with a high density of spatial points of interest (e.g., intersections); the difference between them may lie in the intensity of traffic congestion caused by accidents.
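The clustering step can be sketched as follows. The text does not name the clustering algorithm, so this minimal sketch assumes k-means with k = 4 (Lloyd's algorithm with deterministic farthest-first seeding) in plain Python; the region IDs and two-dimensional toy embeddings are hypothetical stand-ins for the learned region embeddings.

```python
import math


def farthest_first_init(vectors, k):
    """Deterministic seeding: start from the first vector, then repeatedly add the
    vector that is farthest from its nearest already-chosen centroid."""
    centroids = [list(vectors[0])]
    while len(centroids) < k:
        far = max(vectors, key=lambda v: min(math.dist(v, c) for c in centroids))
        centroids.append(list(far))
    return centroids


def kmeans(vectors, k, max_iter=100):
    """Lloyd's k-means on a list of equal-length vectors; returns (labels, centroids)."""
    if not 0 < k <= len(vectors):
        raise ValueError("k must be between 1 and the number of vectors")
    centroids = farthest_first_init(vectors, k)
    labels = None
    for _ in range(max_iter):
        # Assignment step: each vector joins its nearest centroid (Euclidean distance).
        new_labels = [
            min(range(k), key=lambda c: math.dist(v, centroids[c])) for v in vectors
        ]
        if new_labels == labels:
            break  # assignments stopped changing, so the centroids are stable
        labels = new_labels
        # Update step: move each centroid to the mean of its member vectors.
        for c in range(k):
            members = [v for v, lab in zip(vectors, labels) if lab == c]
            if members:  # keep the previous centroid if a cluster is empty
                centroids[c] = [sum(col) / len(members) for col in zip(*members)]
    return labels, centroids


if __name__ == "__main__":
    # Hypothetical 2-D embeddings for eight regions; real ones come from the trained model.
    region_embeddings = {
        "r1": [0.0, 0.0], "r2": [0.0, 1.0],
        "r3": [10.0, 0.0], "r4": [10.0, 1.0],
        "r5": [0.0, 10.0], "r6": [1.0, 10.0],
        "r7": [10.0, 10.0], "r8": [11.0, 10.0],
    }
    ids = list(region_embeddings)
    labels, _ = kmeans([region_embeddings[r] for r in ids], k=4)
    print(dict(zip(ids, labels)))  # region -> cluster id (one map color per id)
```

Each cluster id would then be mapped to one color on the study-area map; with real embeddings, the number of clusters is a modeling choice rather than something the data dictates.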

Figure 18.

(a) An overview of different clusters of latent representations of regions. (b) Closer look at clustering of different regions and their spatial characteristics. Clustering of latent spatial representations for different regions based on road network and spatial context. Each region is colored according to its cluster assignment, derived from the embedding layer of the trained model. Yellow clusters correspond to areas dominated by arterial highways and high-speed roads. Green regions represent areas with sparse road networks and low traffic volume, typically located in suburban zones. Blue and red clusters mostly represent urban areas with dense road networks and a high concentration of spatial points of interest (e.g., intersections), with variation likely driven by differences in congestion intensity or connectivity. Transparent areas are regions excluded due to insufficient data.

In summary, the findings in this section indicate that our framework can build meaningful latent representations for different regions, which help to better predict accidents and their impact. Moreover, the clusters appear to group regions with similar characteristics. The yellow cluster mostly represents regions with a concentration of arterial highways, where an accident can be expected to cause longer delays. The green cluster, in contrast, represents regions with marginal and low traffic flow, where an accident will not cause as much delay as in regions of the yellow cluster. For regions in the red cluster, we can expect less delay (or impact) than in the yellow cluster due to lower traffic flow, but more delay than in regions of the blue cluster. Also note that regions with a sparse road network and low traffic flow (green regions) are mostly located in the suburbs, far from downtown areas.

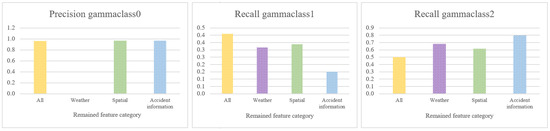

(B) Ablation study: The second analysis is an ablation study that explores the importance of each feature category when used on its own to predict accident impact. We study three categories of features (weather, spatial, and accident information) and compare the model's performance when each category is used in isolation with its performance when all categories are used, to assess the extent to which each group contributes unique and complementary information to overall prediction accuracy. Figure 19 shows the results, which reveal that removing all but one feature category significantly degrades prediction performance. Compared with the best model, using any single category as input results in greater recall for γ = 2, lower recall for γ = 1, and almost the same precision for γ = 0 (except for the weather category). Notably, using only weather features does not help identify cases where γ = 0. This may indicate either that the quality of the weather data in our dataset is insufficient (e.g., it could be finer-grained spatially and temporally) or that weather characteristics do not play an important role in our study area, since weather conditions in the Los Angeles area do not usually shift significantly throughout the year (see https://weatherspark.com/y/1705/Average-Weather-in-Los-Angeles-California-United-States-Year-Round (accessed on 3 June 2025) for more details). This analysis demonstrates that effective accident impact prediction relies on integrating all feature categories, since restricting the input to any single group yields a marked decline in model performance. The ablation results support the conclusion that weather, spatial, and accident information each provide distinct, complementary signals that are essential for maximizing predictive accuracy across the impact classes.
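The single-category ablation protocol can be expressed as a small experiment loop. In this minimal sketch, the feature column names and the `train_and_evaluate` callable are hypothetical placeholders (the study's actual features are described in earlier sections); the loop runs one experiment per category in isolation plus one with all categories combined.

```python
from typing import Callable, Dict, List, Sequence

# Hypothetical column names for illustration only; they are not the study's feature list.
FEATURE_GROUPS: Dict[str, List[str]] = {
    "weather": ["temperature", "visibility", "precipitation"],
    "spatial": ["n_intersections", "n_traffic_signals"],
    "accident": ["accident_count_lag1", "gamma_lag1"],
}


def single_group_ablation(
    groups: Dict[str, List[str]],
    train_and_evaluate: Callable[[Sequence[str]], Dict[str, float]],
) -> Dict[str, Dict[str, float]]:
    """Evaluate each feature group in isolation, plus one run with all groups combined.

    `train_and_evaluate` is a caller-supplied (hypothetical) function that trains a
    fresh model on the given columns and returns its test metrics.
    """
    if "all" in groups:
        raise ValueError("'all' is reserved for the combined configuration")
    configs = {name: list(cols) for name, cols in groups.items()}
    configs["all"] = [col for cols in groups.values() for col in cols]
    return {name: train_and_evaluate(cols) for name, cols in configs.items()}


if __name__ == "__main__":
    # Stand-in evaluator that only counts features; a real one would train the model
    # and return, e.g., precision for gamma = 0 and recall for gamma = 1 and 2.
    def count_features(cols):
        return {"n_features": float(len(cols))}

    for name, metrics in single_group_ablation(FEATURE_GROUPS, count_features).items():
        print(name, metrics)
```

Training a fresh model for every configuration keeps the comparison fair, because no configuration inherits weights learned from features it is not supposed to see.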

Figure 19.

Model performance in terms of the evaluation metrics (recall for γ = 1 and 2; precision for γ = 0) when using only one feature category (i.e., weather, spatial, or accident-related features), compared with using all feature categories as input.

While this study evaluates the importance of grouped features, we acknowledge that further investigation of individual feature contributions could provide deeper interpretability, and we propose this as a direction for future work.

7. Conclusions and Future Work

Traffic accidents are a serious public safety concern, and many studies have focused on analyzing and predicting these infrequent events. However, existing studies rely on extensive data that may not be easily available to other researchers or recorded in real time, which limits their use in real-world applications. Additionally, they fail to establish an appropriate criterion for measuring the impact of accidents. To overcome these limitations, this study proposes a cascade model that combines LSTM and CNN components for real-time traffic accident prediction. The model detects future accidents and assesses their impact on surrounding traffic flow using a novel metric called gamma (γ). Four complementary datasets (accident, congestion, weather, and spatial data) are used to construct the input data. Through extensive experiments using data from Los Angeles County, we demonstrate that our proposed model outperforms existing approaches in terms of precision for cases with minimal impact (i.e., no reported accidents, γ = 0) and recall for cases with significant impact (i.e., reported accidents, γ = 1 and 2).

This study has several implications for future research. First, we suggest expanding the framework to incorporate data from neighboring regions when predicting accident impact for a target region, thereby mitigating data sparsity. Additionally, incorporating satellite imagery could improve performance by enhancing the model's ability to distinguish between regions and capture spatial information. Future work may also consider physical or human consequences (such as injuries, fatalities, or emergency response needs) as part of accident impact, provided that reliable real-time data for these outcomes become available.

While our work makes important contributions, it also has limitations. Our model currently classifies accident impacts into only three broad categories. Future research could benefit from more granular classifications or from assigning probabilities to each accident's potential impact on road conditions. Additionally, the accuracy of our results may have been limited by the accessibility and quality of the weather data, which we obtained from a private source and which were gathered from only four airports. To enhance the accuracy of our model, future research could incorporate radar data or other more comprehensive weather data sources. Addressing these limitations will be essential to advancing our understanding of the factors that contribute to road accidents and to improving road safety.

Overall, this study provides a promising approach to real-time traffic accident prediction using a novel metric for measuring accident impact. The proposed framework has the potential to be applied in real-world settings to enhance public safety and traffic management.

Author Contributions

Conceptualization, P.S., M.Q., S.M. and E.H.; methodology, P.S. and M.Q.; software, P.S. and M.Q.; validation, P.S. and M.Q.; formal analysis, P.S. and M.Q.; investigation, P.S. and M.Q.; resources, S.M. and E.H.; data curation, P.S. and M.Q.; writing—original draft preparation, P.S., M.Q. and S.M.; writing—review and editing, P.S., M.Q., S.M. and E.H.; visualization, P.S. and M.Q.; supervision, S.M. and E.H.; project administration, E.H. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

All data generated or analysed during this study are included in the published article “Short and Long-Term Pattern Discovery Over Large-Scale Geo-Spatiotemporal Data” [12]. The Weather Condition Dataset and POI Dataset are not publicly available, but readers can refer to the article mentioned above for guidance on how to collect such datasets.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Wang, C.; Li, X.; Zhou, X.; Wang, A.; Nedjah, N. Soft computing in big data intelligent transportation systems. Appl. Soft Comput. 2016, 38, 1099–1108.

- Zhang, J.; Wang, J.; Fang, S. Prediction of urban expressway total traffic accident duration based on multiple linear regression and artificial neural network. In Proceedings of the 2019 5th International Conference on Transportation Information and Safety (ICTIS), Liverpool, UK, 14–17 July 2019; pp. 503–510.

- Austin, R.D.; Carson, J.L. An alternative accident prediction model for highway-rail interfaces. Accid. Anal. Prev. 2002, 34, 31–42.

- Oyedepo, O.; Makinde, O. Accident Prediction Models for Akure–Ondo Carriageway, Ondo State Southwest Nigeria; Using Multiple Linear Regressions. Afr. Res. Rev. 2010, 4, 30–49.

- Wei, C.H.; Lee, Y. Sequential forecast of incident duration using artificial neural network models. Accid. Anal. Prev. 2007, 39, 944–954.

- Yassin, S.S.; Pooja. Road accident prediction and model interpretation using a hybrid K-means and random forest algorithm approach. SN Appl. Sci. 2020, 2, 1576.

- Sarkar, S.; Vinay, S.; Raj, R.; Maiti, J.; Mitra, P. Application of optimized machine learning techniques for prediction of occupational accidents. Comput. Oper. Res. 2019, 106, 210–224.

- Wenqi, L.; Dongyu, L.; Menghua, Y. A model of traffic accident prediction based on convolutional neural network. In Proceedings of the 2017 2nd IEEE International Conference on Intelligent Transportation Engineering (ICITE), Singapore, 1–3 September 2017; pp. 198–202.

- Ozbayoglu, M.; Kucukayan, G.; Dogdu, E. A real-time autonomous highway accident detection model based on big data processing and computational intelligence. In Proceedings of the 2016 IEEE International Conference on Big Data (Big Data), Washington, DC, USA, 5–8 December 2016; pp. 1807–1813.

- Marcillo, P.; Valdivieso Caraguay, Á.L.; Hernández-Álvarez, M. A systematic literature review of learning-based traffic accident prediction models based on heterogeneous sources. Appl. Sci. 2022, 12, 4529.

- Li, P.; Abdel-Aty, M.; Yuan, J. Real-time crash risk prediction on arterials based on LSTM-CNN. Accid. Anal. Prev. 2020, 135, 105371.

- Moosavi, S.; Samavatian, M.H.; Nandi, A.; Parthasarathy, S.; Ramnath, R. Short and Long-Term Pattern Discovery Over Large-Scale Geo-Spatiotemporal Data. In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, KDD ’19, Anchorage, AK, USA, 4–8 August 2019; pp. 2905–2913.

- Caliendo, C.; Guida, M.; Parisi, A. A crash-prediction model for multilane roads. Accid. Anal. Prev. 2007, 39, 657–670.

- Najjar, A.; Kaneko, S.; Miyanaga, Y. Combining satellite imagery and open data to map road safety. In Proceedings of the AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017; Volume 31.

- Ren, H.; Song, Y.; Wang, J.; Hu, Y.; Lei, J. A deep learning approach to the citywide traffic accident risk prediction. In Proceedings of the 2018 21st International Conference on Intelligent Transportation Systems (ITSC), Maui, HI, USA, 4–7 November 2018; pp. 3346–3351.

- Yuan, Z.; Zhou, X.; Yang, T. Hetero-convlstm: A deep learning approach to traffic accident prediction on heterogeneous spatio-temporal data. In Proceedings of the 24th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, London, UK, 19–23 August 2018; pp. 984–992.

- Chen, Q.; Song, X.; Yamada, H.; Shibasaki, R. Learning deep representation from big and heterogeneous data for traffic accident inference. In Proceedings of the AAAI Conference on Artificial Intelligence, Phoenix, AZ, USA, 12–17 February 2016; Volume 30.

- Yu, R.; Abdel-Aty, M. Utilizing support vector machine in real-time crash risk evaluation. Accid. Anal. Prev. 2013, 51, 252–259.

- Lin, L.; Wang, Q.; Sadek, A.W. A novel variable selection method based on frequent pattern tree for real-time traffic accident risk prediction. Transp. Res. Part C Emerg. Technol. 2015, 55, 444–459.

- Bianchi, F.M.; Maiorino, E.; Kampffmeyer, M.C.; Rizzi, A.; Jenssen, R. Recurrent Neural Networks for Short-Term Load Forecasting: An Overview and Comparative Analysis; Springer: Cham, Switzerland, 2017.