A Review of Data Models and Frameworks in Urban Environments in the Context of AI

Abstract

1. Introduction

RQ: What is the nature of the need for extending and/or enriching data models and frameworks in urban environments in the era of AI?

1.1. Definitions

1.2. Objectives

1.3. Methodology

2. Literature Review

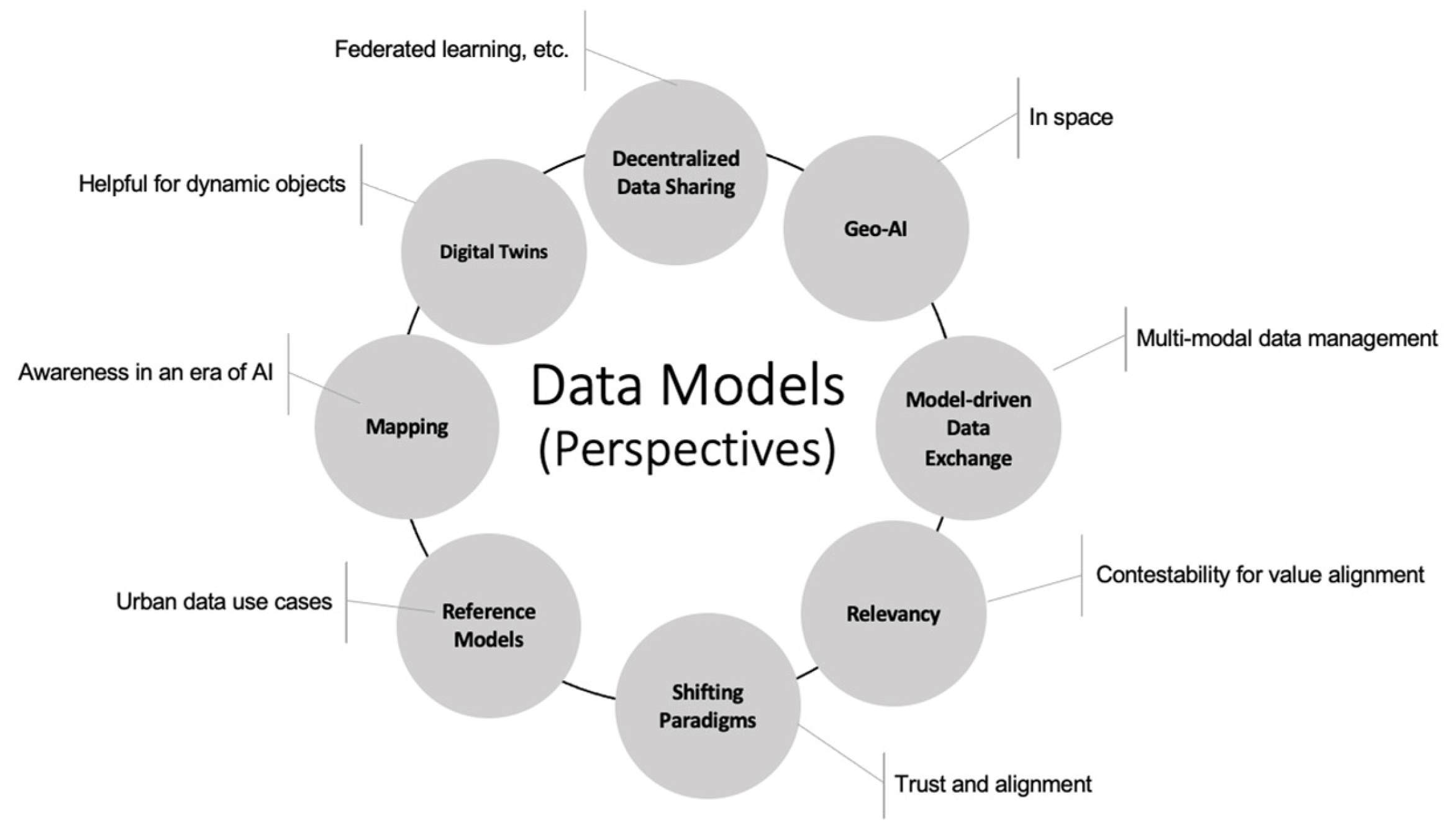

2.1. Data Models in Urban Environments in the Context of AI

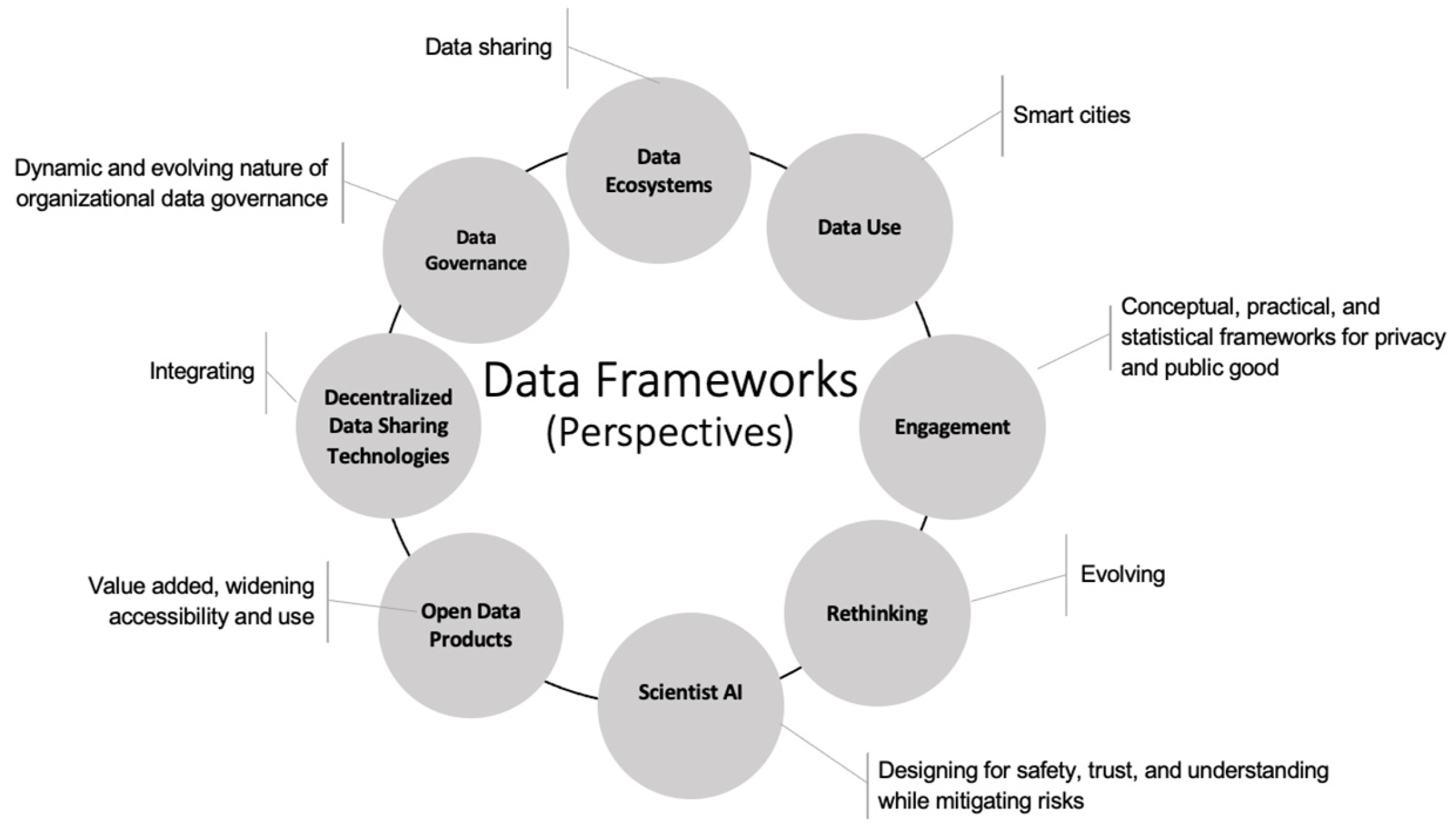

2.2. Data Frameworks in Urban Environments in the Context of AI

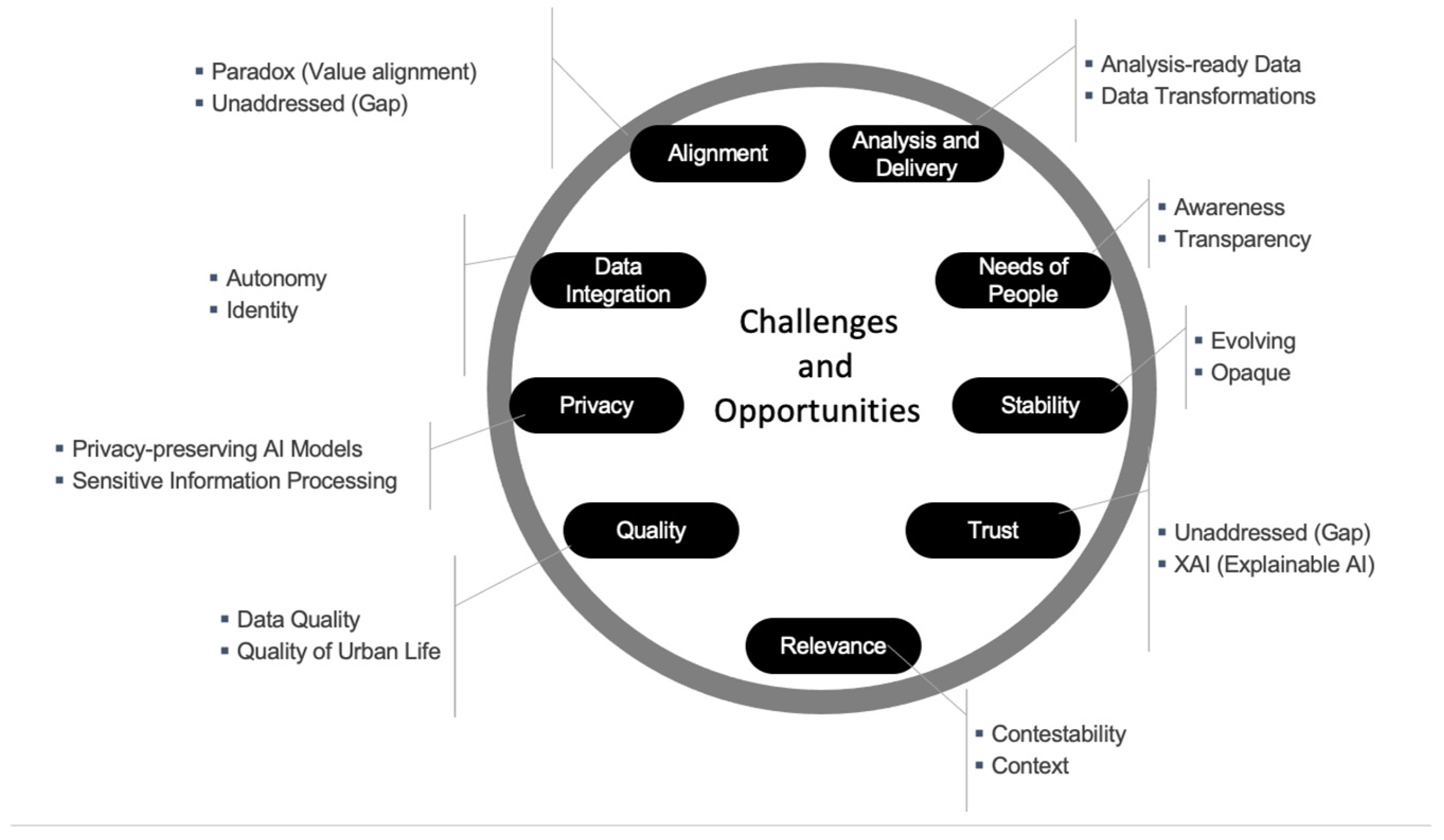

3. Challenges and Opportunities for Data Models and Frameworks in Urban Environments in the Context of AI

3.1. Gaps: Data Models and Frameworks in Urban Environments in the Context of AI

3.2. Problems: Data Models and Frameworks in Urban Environments in the Context of AI

P1: Data models and frameworks in the context of AI, in urban environments and beyond, need to be extended and enriched so that they can address catastrophic risks while ensuring safe, trustworthy, and non-agentic systems.

4. Discussion

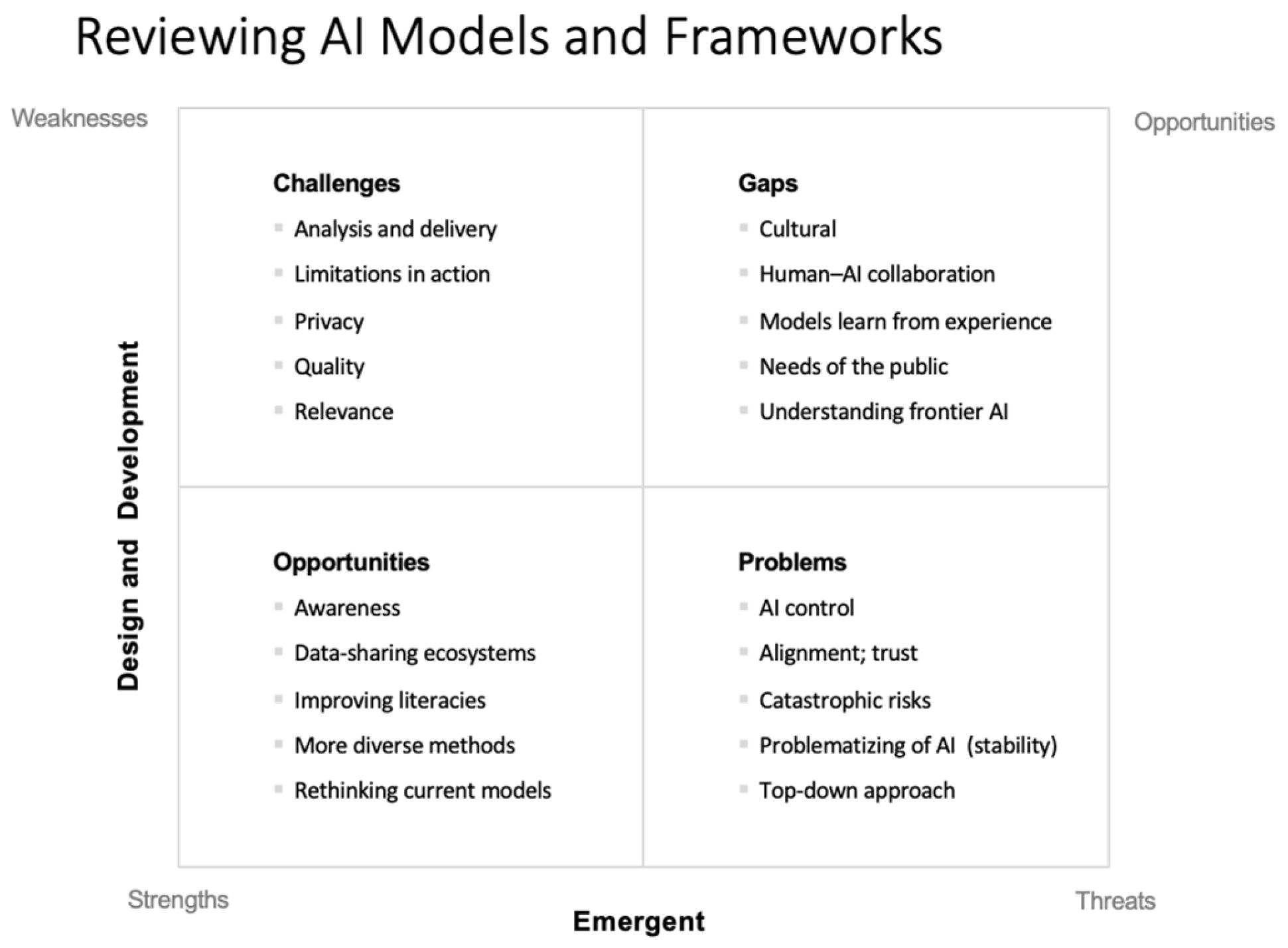

4.1. SWOT Analysis of Review Findings for Data Models and Frameworks in the Era of AI

4.1.1. Challenges/Weaknesses

4.1.2. Opportunities/Strengths

4.1.3. Gaps/Opportunities

4.1.4. Problems/Threats

4.2. Theorizing and Framework Formulation for Data in the Era of AI

4.3. Limitations and Mitigations

4.4. Future Directions

5. Conclusions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| AGI | Artificial General Intelligence |
| ASI | Artificial Superintelligence |
| ATSC | Ambient Theory for Smart Cities |
| DDS | Decentralized Data-Sharing |
| DFS | Decentralized File System |
| DT | Digital Twin |
| EU | European Union |
| FL | Federated Learning |
| FHE | Fully Homomorphic Encryption |
| GeoAI | Geographic Artificial Intelligence |
| GIScience | Geographic Information Science |
| GPT | General Purpose Technology |
| IoT | Internet of Things |
| LLMs | Large Language Models |
| MAS | Multi-Agent System |
| MIT | Massachusetts Institute of Technology |
| NYU | New York University |
| ODPs | Open Data Products |
| SCs | Smart Cities |
| SW | Semantic Web |
| SWOT | Strengths Weaknesses Opportunities Threats |
| UDTs | Urban Digital Twins |
| XAI | Explainable Artificial Intelligence |

References

- Yoo, Y.; Bryant, A.; Wigand, R.T. Designing digital communities that transform urban life: Introduction to the special section on digital cities. Commun. Assoc. Inf. Syst. 2010, 27, 637–640.

- Nam, T.; Pardo, T.A. Conceptualizing smart city with dimensions of technology, people, and institutions. In Proceedings of the 12th Annual International Digital Government Research Conference: Digital Government Innovation in Challenging Times, College Park, MD, USA, 12–15 June 2011; pp. 282–291.

- Lim, C.; Kim, K.-J.; Maglio, P.P. Smart cities with big data: Reference models, challenges, and considerations. Cities 2018, 82, 86–99.

- Curry, E.; Scerri, S.; Tuikka, T. Data spaces: Design, deployment, and future directions. In Data Spaces; Curry, E., Scerri, S., Tuikka, T., Eds.; Springer: Cham, Switzerland, 2022.

- Ullah, A.; Anwar, S.M.; Li, J.; Nadeem, L.; Mahmood, T.; Rehman, A.; Saba, T. Smart cities: The role of Internet of Things and machine learning in realizing a data-centric smart environment. Complex Intell. Syst. 2023, 10, 1607–1637.

- Batty, M. The emergence and evolution of urban AI. AI Soc. 2023, 38, 1045–1048.

- Luusua, A.; Ylipulli, J.; Foth, M.; Aurigi, A. Urban AI: Understanding the emerging role of artificial intelligence in smart cities. AI Soc. 2023, 38, 1039–1044.

- Suchman, L. The uncontroversial ‘thingness’ of AI. Big Data Soc. 2023, 10, 4.

- Dhar, V. The paradigm shifts in artificial intelligence: Even as we celebrate AI as a technology that will have far-reaching benefits for humanity, trust and alignment remain disconcertingly unaddressed. Commun. ACM 2024, 67, 50–59.

- Widder, D.G.; Whittaker, M.; West, S.M. Why ‘open’ AI systems are actually closed, and why this matters. Nature 2024, 635, 827–833.

- Böhlen, M. On the Logics of Planetary Computing: Artificial Intelligence and Geography in the Alas Mertajati; Routledge: London, UK, 2025.

- McKenna, H.P. Improving our awareness of data generation, use, and ownership: People and data interactions in AI-rich environments. In Distributed, Ambient and Pervasive Interactions; Streitz, N.A., Konomi, S., Eds.; HCII 2025. Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2025; Volume 15802.

- West, R.; Aydin, R. The AI alignment paradox: The better we align AI models with our values, the easier we may make it to realign them with opposing values. Commun. ACM 2025, 68, 24–26.

- Mollick, E. Co-Intelligence: Living and Working with AI; Portfolio; Penguin Random House: Westminster, MD, USA, 2024.

- Partelow, S. What is a framework? Understanding their purpose, value, development and use. J. Environ. Stud. Sci. 2023, 13, 510–519.

- Smaldino, P. What are models and why should we use them to understand social behavior? Code Horiz. Blog 2023. Available online: https://codehorizons.com/what-are-models-and-why-should-we-use-them-to-understand-social-behavior/ (accessed on 5 May 2025).

- Wright, L.; Davidson, S. How to tell the difference between a model and a digital twin. Adv. Model. Simul. Eng. Sci. 2020, 7, 13.

- Batty, M. Digital twins. Environ. Plan. B Urban Anal. City Sci. 2018, 45, 817–820.

- Yue, Y.; Yan, G.; Lan, T.; Cao, R.; Gao, Q.; Gao, W.; Huang, B.; Huang, G.; Huang, Z.; Kan, Z.; et al. Shaping future sustainable cities with AI-powered urban informatics: Toward human-AI symbiosis. Comput. Urban Sci. 2025, 5, 31.

- Le, F.; Srivatsa, M.; Ganti, R.; Sekar, V. Rethinking data-driven networking with foundation models: Challenges and opportunities. In Proceedings of the 21st ACM Workshop on Hot Topics in Networks, Austin, TX, USA, 14–15 November 2022; Association for Computing Machinery: New York, NY, USA, 2022.

- Klímek, J.; Koupil, P.; Škoda, P.; Bártík, J.; Stenchlák, S.; Nečaský, M. Atlas: A toolset for efficient model-driven data exchange in data spaces. In Proceedings of the 2023 ACM/IEEE International Conference on Model Driven Engineering Languages and Systems Companion (MODELS-C), Västerås, Sweden, 1–6 October 2023; pp. 4–8.

- Alsamhi, S.H.; Hawbani, A.; Kumar, S.; Timilsina, M.; Al-Qatf, M.; Haque, R. Empowering dataspace 4.0: Unveiling promise of decentralized data-sharing. IEEE Access 2024, 12, 112637–112658.

- Peldon, D.; Banihashemi, S.; LeNguyen, K.; Derrible, S. Navigating urban complexity: The transformative role of digital twins in smart city development. Sustain. Cities Soc. 2024, 111, 105583.

- Kuilman, S.K.; Siebert, L.C.; Buijsman, S.; Jonker, C.M. How to gain control and influence algorithms: Contesting AI to find relevant reasons. AI Ethics 2024, 5, 1571–1581.

- Argota Sánchez-Vaquerizo, J. Urban Digital Twins and metaverses towards city multiplicities: Uniting or dividing urban experiences? Ethics Inf. Technol. 2025, 27, 4.

- Hou, C.; Zhang, F.; Li, Y.; Li, H.; Mai, G.; Kang, Y.; Yao, L.; Yu, W.; Yao, Y.; Gao, S.; et al. Urban sensing in the era of large language models. Innovation 2025, 6, 100749.

- Lane, J.; Stodden, V.; Bender, S.; Nissenbaum, H. (Eds.) Privacy, Big Data, and the Public Good: Frameworks for Engagement; Cambridge University Press: Cambridge, UK, 2014.

- Cabrera-Barona, P.F.; Merschdorf, H. A Conceptual Urban Quality Space-Place Framework: Linking Geo-Information and Quality of Life. Urban Sci. 2018, 2, 73.

- Arribas-Bel, D.; Green, M.; Rowe, F.; Singleton, A. Open data products-A framework for creating valuable analysis ready data. J. Geogr. Syst. 2021, 23, 497–514.

- Liu, X.; Chen, M.; Claramunt, C.; Batty, M.; Kwan, M.-P.; Senousi, A.M.; Cheng, T.; Strobl, J.; Cöltekin, A.; Wilson, J.; et al. Geographic information science in the era of geospatial big data: A cyberspace perspective. Innovation 2022, 3, 100279.

- Sharma, B.M.; Verma, D.K.; Raghuwanshi, K.D.; Dubey, S.; Nair, R.; Malviya, S. Generic framework of new era artificial intelligence and its applications. In International Conference on Applied Technologies; Botto-Tobar, M., Zambrano Vizuete, M., Montes León, S., Torres-Carrión, P., Durakovic, B., Eds.; ICAT 2023. Communications in Computer and Information Science; Springer: Cham, Switzerland, 2024; Volume 2049.

- Sargiotis, D. Conclusion: The evolving landscape of data governance. In Data Governance; Springer: Cham, Switzerland, 2024.

- Bengio, Y.; Cohen, M.; Fornasiere, D.; Ghosn, J.; Greiner, P.; MacDermott, M.; Mindermann, S.; Oberman, A.; Richardson, J.; Richardson, O.; et al. Superintelligent agents pose catastrophic risks: Can Scientist AI offer a safer path? arXiv 2025, arXiv:2502.15657.

- Stephanidis, C.; Salvendy, G.; Antona, M.; Duffy, V.G.; Gao, Q.; Karwowski, W.; Konomi, S.; Nah, F.; Ntoa, S.; Rau, P.-L.P.; et al. Seven HCI grand challenges revisited: Five-year progress. Int. J. Hum.-Comput. Interact. 2025, 1–49.

- Starke, C.; Baleis, J.; Keller, B.; Marcinkowski, F. Fairness perceptions of algorithmic decision-making: A systematic review of the empirical literature. Big Data Soc. 2022, 9, 1–16.

- Nussbaum, M. Human rights and human capabilities. Harv. Hum. Rights J. 2007, 20, 21–24.

- Patidar, N.; Mishra, S.; Jain, R.; Prajapati, D.; Solanki, A.; Suthar, R.; Patel, K.; Patel, H. Transparency in AI decision making: A survey of explainable AI methods and applications. Adv. Robot. Technol. 2024, 2, 1–10.

- Kumar, S.; Datta, S.; Singh, V.; Singh, S.K.; Sharma, R. Opportunities and challenges in data-centric AI. IEEE Access 2024, 12, 33173–33189.

- Shumailov, I.; Shumaylov, Z.; Zhao, Y.; Papernot, N.; Anderson, R.; Gal, Y. AI models collapse when trained on recursively generated data. Nature 2024, 631, 755–759.

- Ameisen, E.; Lindsey, J.; Pearce, A.; Gurnee, W.; Turner, N.L.; Chen, B.; Citro, C.; Abrahams, D.; Carter, S.; Hosmer, B.; et al. Circuit tracing: Revealing computational graphs in language models. Transform. Circuits Thread 2025. Available online: https://transformer-circuits.pub/2025/attribution-graphs/methods.html (accessed on 31 March 2025).

- Lindsey, J.; Gurnee, W.; Ameisen, E.; Chen, B.; Pearce, A.; Turner, N.L.; Citro, C.; Abrahams, D.; Carter, S.; Hosmer, B.; et al. On the biology of a large language model: We investigate the internal mechanisms used by Claude 3.5 Haiku—Anthropic’s lightweight production model—In a variety of contexts, using our circuit tracing methodology. Transform. Circuits Thread 2025. Available online: https://transformer-circuits.pub/2025/attribution-graphs/biology.html (accessed on 31 March 2025).

- Alber, D.A.; Yang, Z.; Alyakin, A.; Yang, E.; Rai, S.; Valliani, A.A.; Zhang, J.; Rosenbaum, G.R.; Amend-Thomas, A.K.; Kurland, D.B.; et al. Medical large language models are vulnerable to data-poisoning attacks. Nat. Med. 2025, 31, 618–626.

- Rothman, J. Are We Taking A.I. Seriously Enough? There’s No Longer Any Scenario in Which A.I. Fades into Irrelevance. We Urgently Need Voices from Outside the Industry to Help Shape Its Future. The New Yorker, 1 April 2025. Available online: https://www.newyorker.com/culture/open-questions/are-we-taking-ai-seriously-enough (accessed on 1 April 2025).

- Anderson, J.; Rainie, L. Expert Views on the Impact of AI on the Essence of Being Human; Elon University’s Imagining the Digital Future Center: Elon, NC, USA, 2025; Available online: https://imaginingthedigitalfuture.org/wp-content/uploads/2025/03/Being-Human-in-2035-ITDF-report.pdf (accessed on 4 April 2025).

- Liu, B.; Li, X.; Zhang, J.; Wang, J.; He, T.; Hong, S.; Liu, H.; Zhang, S.; Song, K.; Zhu, K.; et al. Advances and challenges in foundation agents: From brain-inspired intelligence to evolutionary, collaborative, and safe systems. arXiv 2025, arXiv:2504.01990.

- Dorostkar, E.; Ziari, K. Integrating ancient Chinese feng shui philosophy with smart city technologies: A culturally sustainable urban planning framework for contemporary China. J. Chin. Archit. Urban. 2025, 025080018.

- Gomez, C.; Cho, S.M.; Ke, S.; Huang, C.-M.; Unberath, M. Human-AI collaboration is not very collaborative yet: A taxonomy of interaction patterns in AI-assisted decision making from a systematic review. Front. Comput. Sci. 2025, 6, 2024.

- Gartrell, A. Thinking Machines. Thinking Machines Lab Blog. 2025. Available online: https://thinkingmachines.ai (accessed on 9 April 2025).

- Silver, D.; Sutton, R.S. Welcome to the era of experience. In Designing an Intelligence; MIT Press: Cambridge, MA, USA, 2025; Available online: https://storage.googleapis.com/deepmind-media/Era-of-Experience%20/The%20Era%20of%20Experience%20Paper.pdf (accessed on 3 May 2025).

- Russell, S. If We Succeed. Daedalus 2022, 151, 43–57.

- Kim, Y.H.; Sting, F.J.; Loch, C.H. Top-down, bottom-up, or both? Toward an integrative perspective on operations strategy formation. J. Oper. Manag. 2014, 32, 462–474.

- Hendawy, M.; da Silva, I.F.K. Hybrid smartness: Seeking a balance between top-down and bottom-up smart city approaches. In Intelligence for Future Cities; Goodspeed, R., Sengupta, R., Kyttä, M., Pettit, C., Eds.; CUPUM 2023; The Urban Book Series; Springer: Cham, Switzerland, 2023.

- Ray, T. AI Has Grown Beyond Human Knowledge, Says Google’s DeepMind Unit: A New Agentic Approach Called ‘Streams’ Will Let AI Models Learn from the Experience of the Environment Without Human ‘Pre-Judgment’. ZDNet, 18 April 2025. Available online: https://www.zdnet.com/article/ai-has-grown-beyond-human-knowledge-says-googles-deepmind-unit/ (accessed on 26 April 2025).

- Teece, D.J. SWOT analysis. In The Palgrave Encyclopedia of Strategic Management; Augier, M., Teece, D.J., Eds.; Palgrave Macmillan: London, UK, 2018.

- NYU. Encryption Breakthrough Lays Groundwork for Privacy-Preserving AI Models: New AI Framework Enables Secure Neural Network Computation Without Sacrificing Accuracy. New York University, Tandon School of Engineering News. 2025. Available online: https://engineering.nyu.edu/news/encryption-breakthrough-lays-groundwork-privacy-preserving-ai-models (accessed on 20 April 2025).

- Hoffman, R.; Beato, G. Superagency: What Could Possibly Go Right with Our AI Future? Authors Equity. 2025. Available online: https://www.simonandschuster.com/books/Superagency/Reid-Hoffman/9798893310108 (accessed on 18 March 2025).

- Mayer, H.; Yee, L.; Chui, M.; Roberts, R. Superagency in the Workplace: Empowering People to Unlock AI’s Full Potential. McKinsey Digital, 28 January 2025. Available online: https://www.mckinsey.com/capabilities/mckinsey-digital/our-insights/superagency-in-the-workplace-empowering-people-to-unlock-ais-full-potential-at-work (accessed on 5 May 2025).

- Jacobs, G.; Munoz, J.M. AI and Education: Strategic Imperatives for Corporations and Academic Institutions. California Management Review Insights, 2 May 2025. Available online: https://cmr.berkeley.edu/2025/05/ai-and-education-strategic-imperatives-for-corporations-and-academic-institutions/ (accessed on 5 May 2025).

- Strickland, E. 12 graphs that explain the state of AI in 2025: Stanford’s AI index tracks performance, investment, public opinion, and more. IEEE Spectr. 2025. Available online: https://spectrum.ieee.org/ai-index-2025? (accessed on 5 May 2025).

- Caprotti, F.; Cugurullo, F.; Cook, M.; Karvonen, A.; Marvin, S.; McGuirk, P.; Valdez, A.M. Why does urban Artificial Intelligence (AI) matter for urban studies? Developing research directions in urban AI research. Urban Geogr. 2024, 45, 883–894.

- McKenna, H.P. An exploration of theory for smart spaces in everyday life: Enriching ambient theory for smart cities. In Intelligent Data-Centric Systems; Lyu, Z., Ed.; Smart Spaces; Academic Press: Cambridge, MA, USA, 2024; pp. 17–46.

| Author | Year | Data Models |
|---|---|---|
| Lim et al. | 2018 | Four reference models for urban data-use cases in SCs |
| Wright & Davidson | 2020 | Data models and digital twins |
| Le et al. | 2022 | Foundation models for rethinking data-driven networking |
| Alsamhi et al. | 2024 | Models for decentralized data-sharing (FL, DFS, SW) |
| Klímek et al. | 2023 | Atlas: model-driven data exchange and multi-modal data management |
| Dhar | 2024 | Shifting paradigms of AI: trust and alignment as unaddressed |
| Kuilman et al. | 2024 | Contestability for value alignment and relevancy in AI |
| Widder et al. | 2024 | Creation and use of meaningful alternatives to AI models |
| Argota Sánchez-Vaquerizo | 2025 | Urban digital twins and metaverses |
| Böhlen | 2025 | Geo-AI model inscrutability and opaqueness |
| Hou et al. | 2025 | Urban sensing and LLMs |
| McKenna | 2025 | Mapping of current data models for awareness in the era of AI |

| Author | Year | Data Frameworks |
|---|---|---|
| Lane et al. | 2014 | Conceptual, practical, and statistical frameworks and engagement |
| Cabrera-Barona & Merschdorf | 2018 | Urban quality space–place framework |
| Lim et al. | 2018 | Data-use frameworks for SCs |
| Arribas-Bel et al. | 2021 | Open data products framework, widening accessibility and use |
| Curry et al. | 2022 | Framework for sharing data in data ecosystems |
| Liu et al. | 2022 | GIScience in relation to geospatial data and cyberspace |
| Alsamhi et al. | 2024 | Framework for integrating decentralized data-sharing technologies |
| Sharma et al. | 2023 | Generic framework of new era AI and applications |
| Sargiotis | 2024 | Data governance at the organizational level |
| Bengio et al. | 2025 | Scientist AI framework for understanding and mitigating risks |
| McKenna | 2025 | Rethinking and evolving data frameworks in the AI era |
| Stephanidis | 2025 | Human capabilities framework in an AI context |
| Yue et al. | 2025 | Human–AI symbiosis framework |

| Author | Year | Data Models and Frameworks: Challenges and Opportunities |
|---|---|---|
| Lim et al. | 2018 | Six challenges for transforming data into information in SCs |
| Dhar | 2024 | Alignment, trust, and legislation |
| Kuilman et al. | 2024 | Relevance in relation to context, alignment, and contestability |
| Kumar et al. | 2024 | Moving from a model-centric to a data-centric approach |
| Shumailov et al. | 2024 | Model collapse and the importance of data provenance |
| Widder et al. | 2024 | Openness—needs of public vs. commercial interests |
| Alber et al. | 2025 | Data poisoning and misinformation in the healthcare sector |
| Anderson & Rainie | 2025 | Exploration of “being human” in a world of AI |
| Bateson et al. | 2025 | Method to expose behaviors of large language models |
| Böhlen | 2025 | Geo-AI model—limitations and peculiarities in action |
| Hou et al. | 2025 | Urban sensing and LLMs |
| Liu et al. | 2025 | Foundational agents and the need for safe, secure, and beneficial AI |
| Rothman | 2025 | Calling for voices outside of the AI industry to shape the future |
| West & Aydin | 2025 | AI alignment paradox |

| Authors | Gaps | Problems |
|---|---|---|
| Anderson & Rainie | Top-down/bottom-up approach | |
| Bengio et al. | Catastrophic risks | |
| Dhar | Alignment; trust | |
| Dorostkar & Ziari | Culturally sustainable urban planning framework | |
| Gomez et al. | Human–AI collaboration | |
| Ray | AI model development | |
| Russell | AI control; research | |
| Gartrell et al. | Understanding of frontier AI among the scientific community | |
| Silver & Sutton | Models that learn from experience of the environment | |
| Suchman | Problematization of AI | |
| Widder et al. | Meaningfully addressing the needs of the public | |

| Action/Focus | Community Members | Developers | Policymakers | Researchers |
|---|---|---|---|---|
| Human–AI collaboration | ✔ | ✔ | ✔ | ✔ |
| Human–AI interactions | ✔ | ✔ | ✔ | ✔ |
| Rethinking AI models/definitions | ✔ | | | |
| Risk mitigation for AI/ASI | ✔ | ✔ | ✔ | ✔ |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

McKenna, H.P. A Review of Data Models and Frameworks in Urban Environments in the Context of AI. Urban Sci. 2025, 9, 239. https://doi.org/10.3390/urbansci9070239