Exploring AI-Integrated VR Systems: A Methodological Approach to Inclusive Digital Urban Design

Abstract

1. Introduction

2. Literature Review and Background

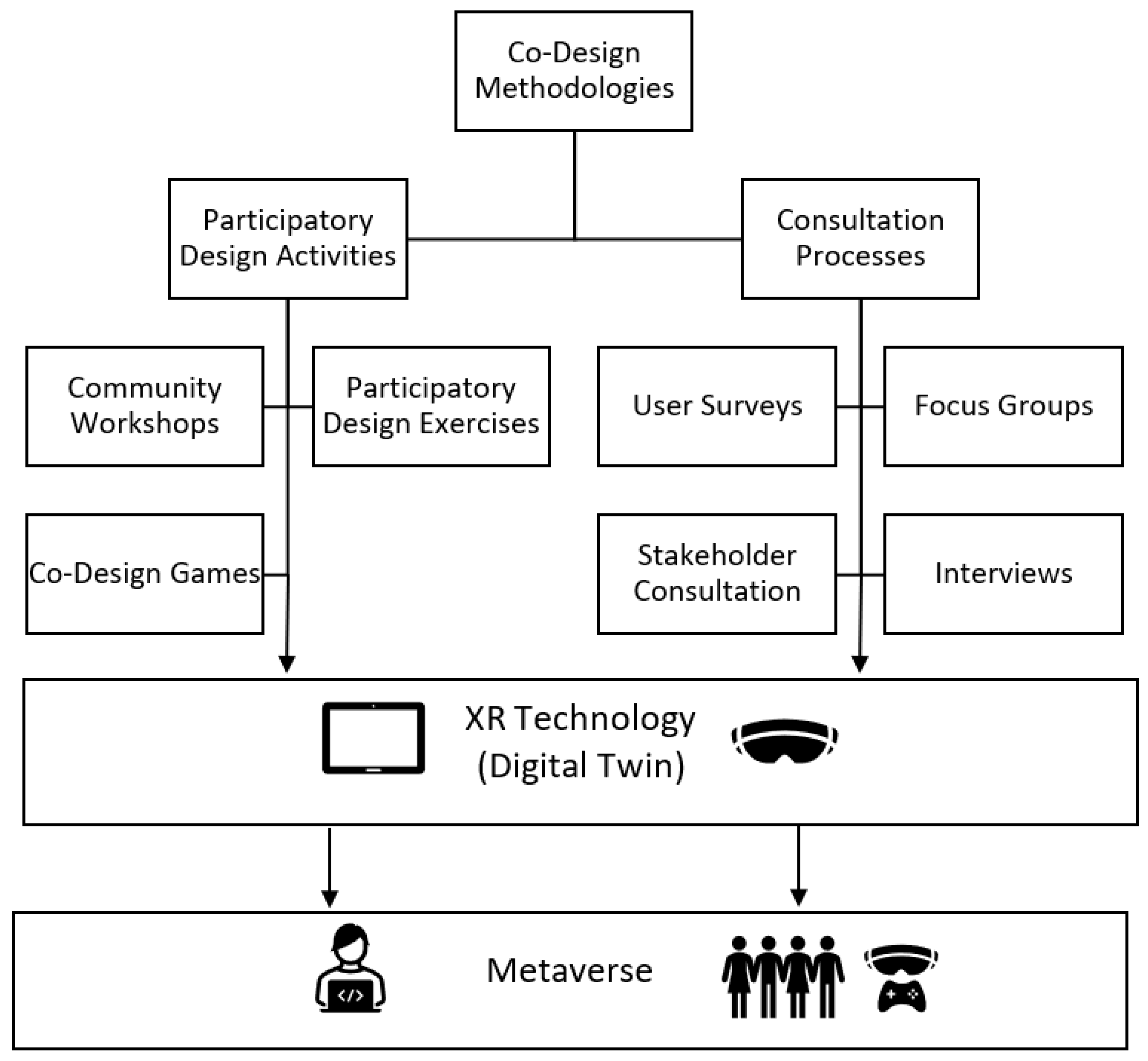

2.1. Co-Design Methods and Technological Innovations in Urban Design and Public Spaces

2.2. Virtual Reality in Urban Design: Immersion, Digital Twins, and Empathic Design

2.3. Artificial Intelligence in Urban Design

2.4. Virtual Reality Software and Game Engines for Urban Design

2.5. AI-Enhanced Urban Design Software and Tools

2.5.1. AI-Enhanced Tools for Urban Modeling, Simulation, and Procedural Generation

2.5.2. AI in Real-Time Rendering and Automated Environmental Simulation

2.5.3. AI-Driven Photogrammetry and 3D Reconstruction

2.5.4. AI-Based Climate and Environmental Simulation for Sustainable Urban Design

3. Methodology: Developing a Multi-Platform VR Model

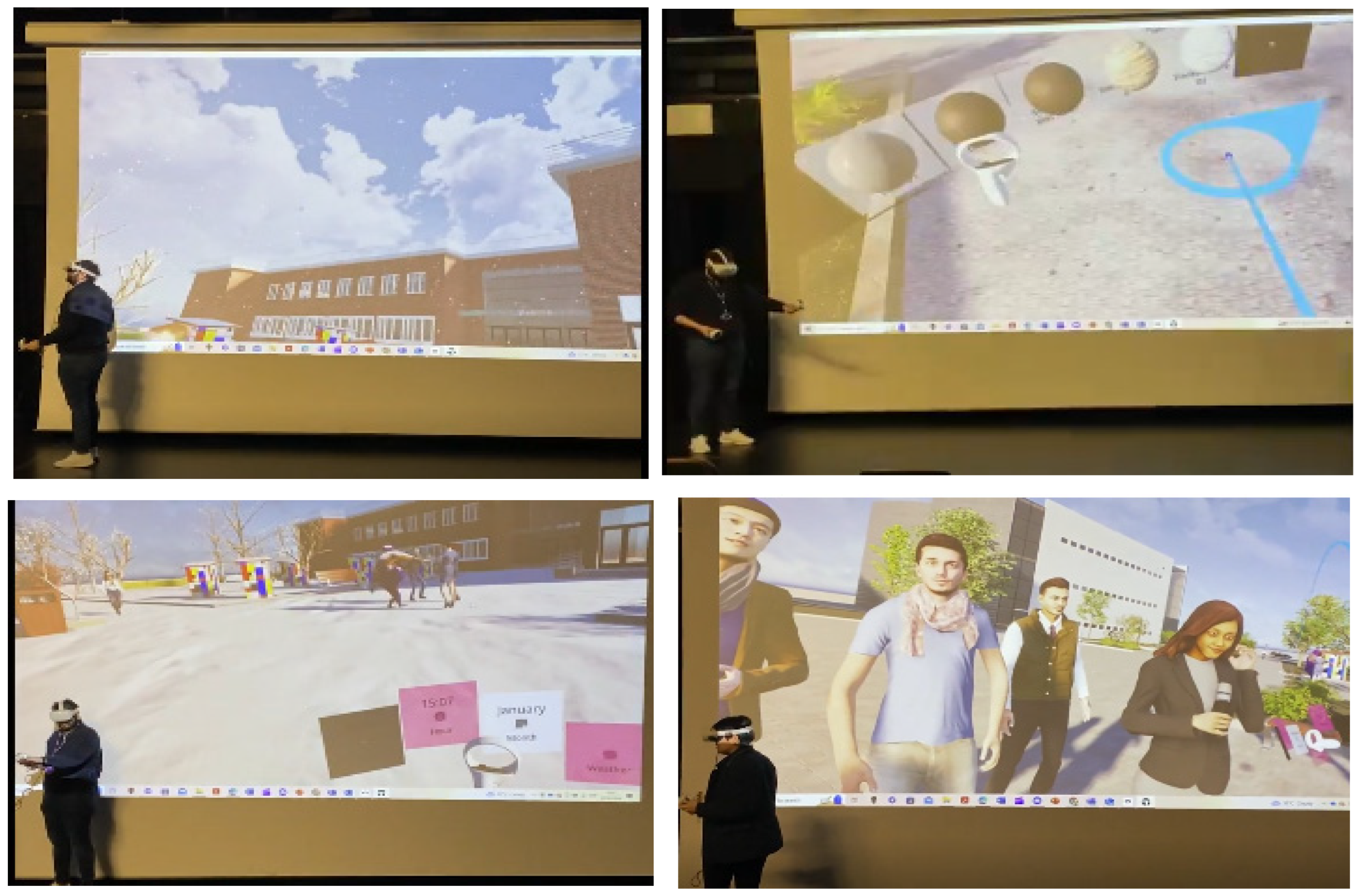

3.1. Case Study: Loughborough University Digital Model

3.2. Workflow for Implementing the Model in Different VR Platforms

3.2.1. Digital Model Development and Optimization

3.2.2. Platform Selection Justification

3.3. Evaluation Framework

4. Comparative Technical Analysis

4.1. Twinmotion

4.2. Unreal Engine

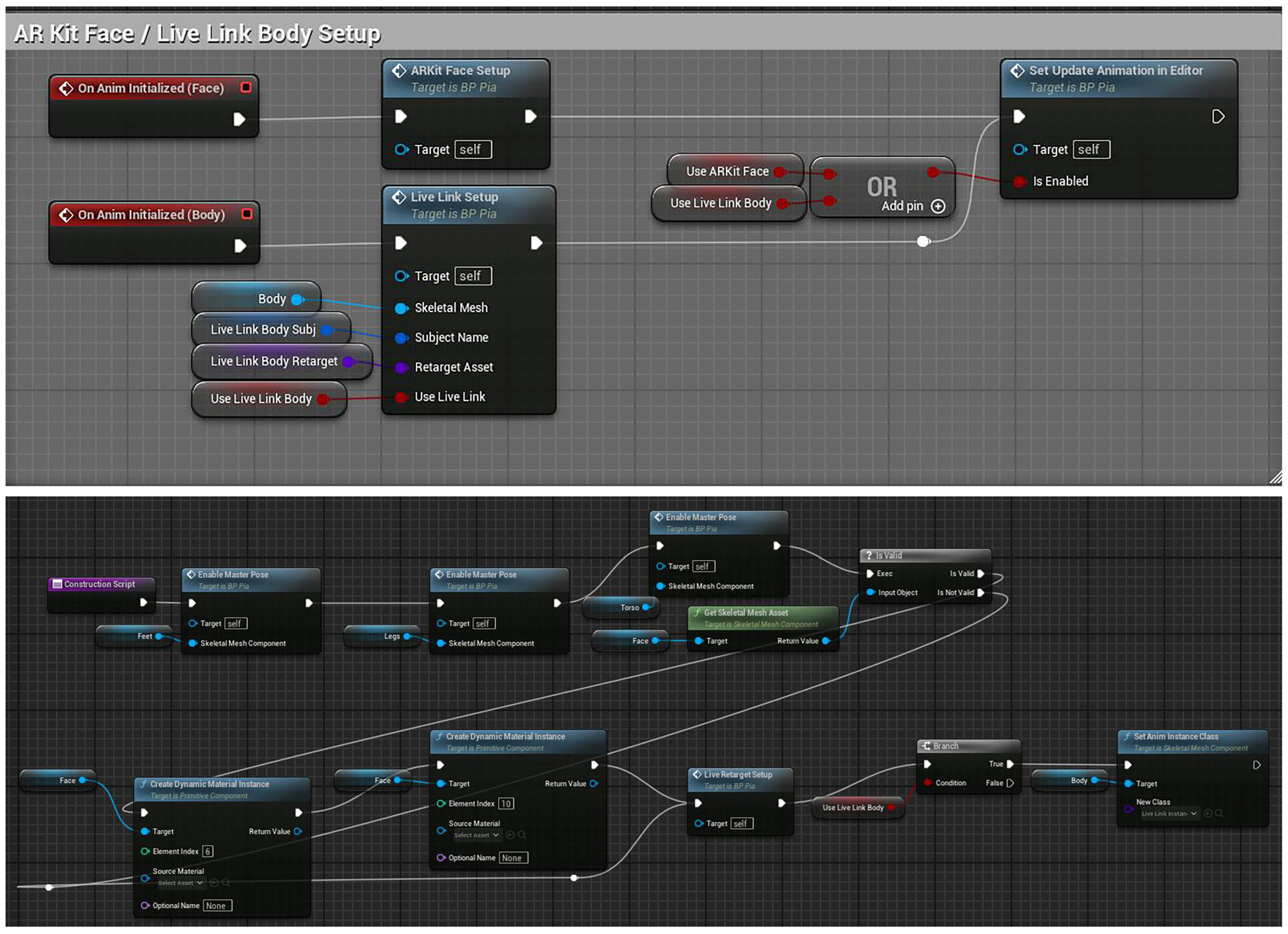

4.3. Mozilla Hubs Instance

4.4. Frame VR

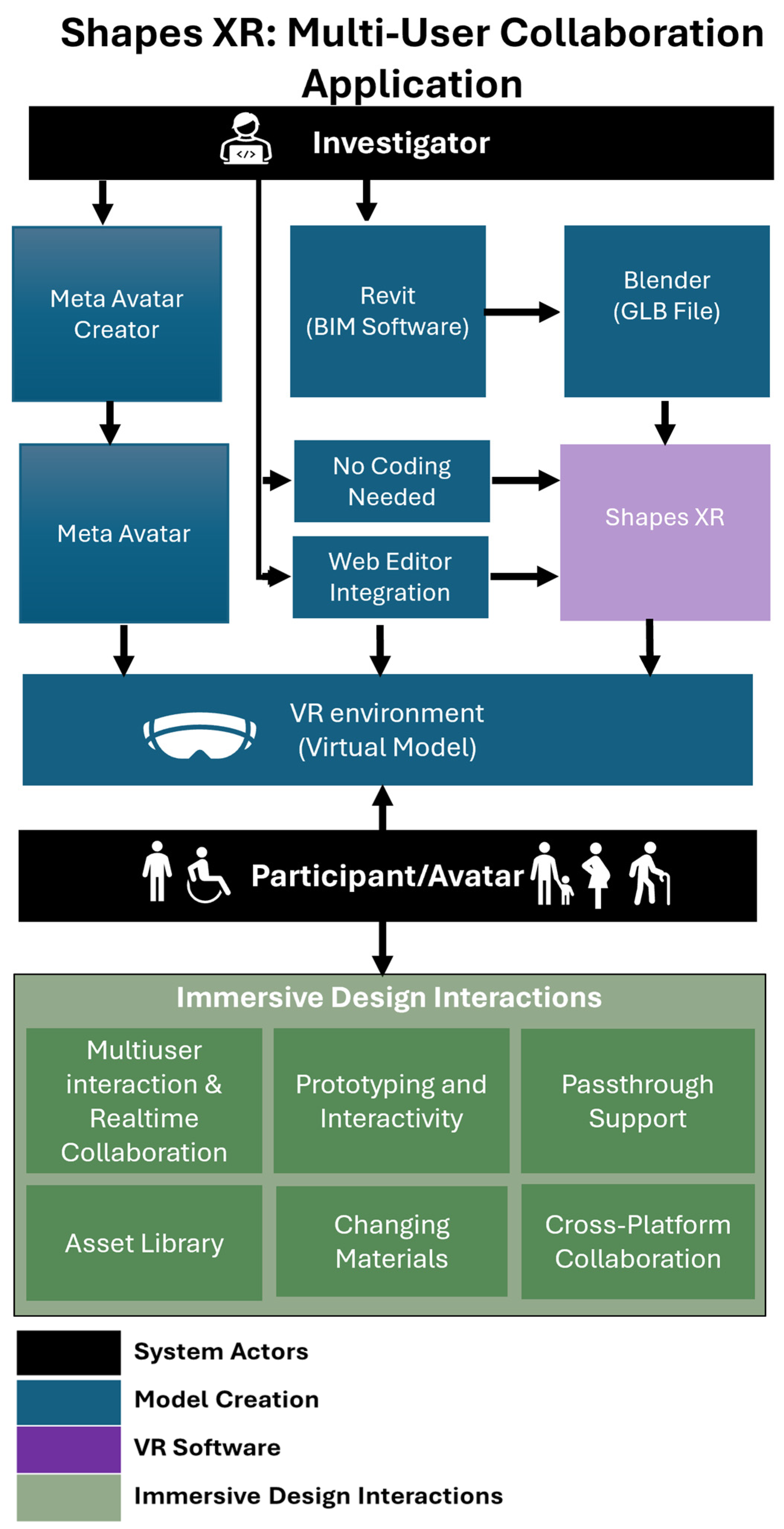

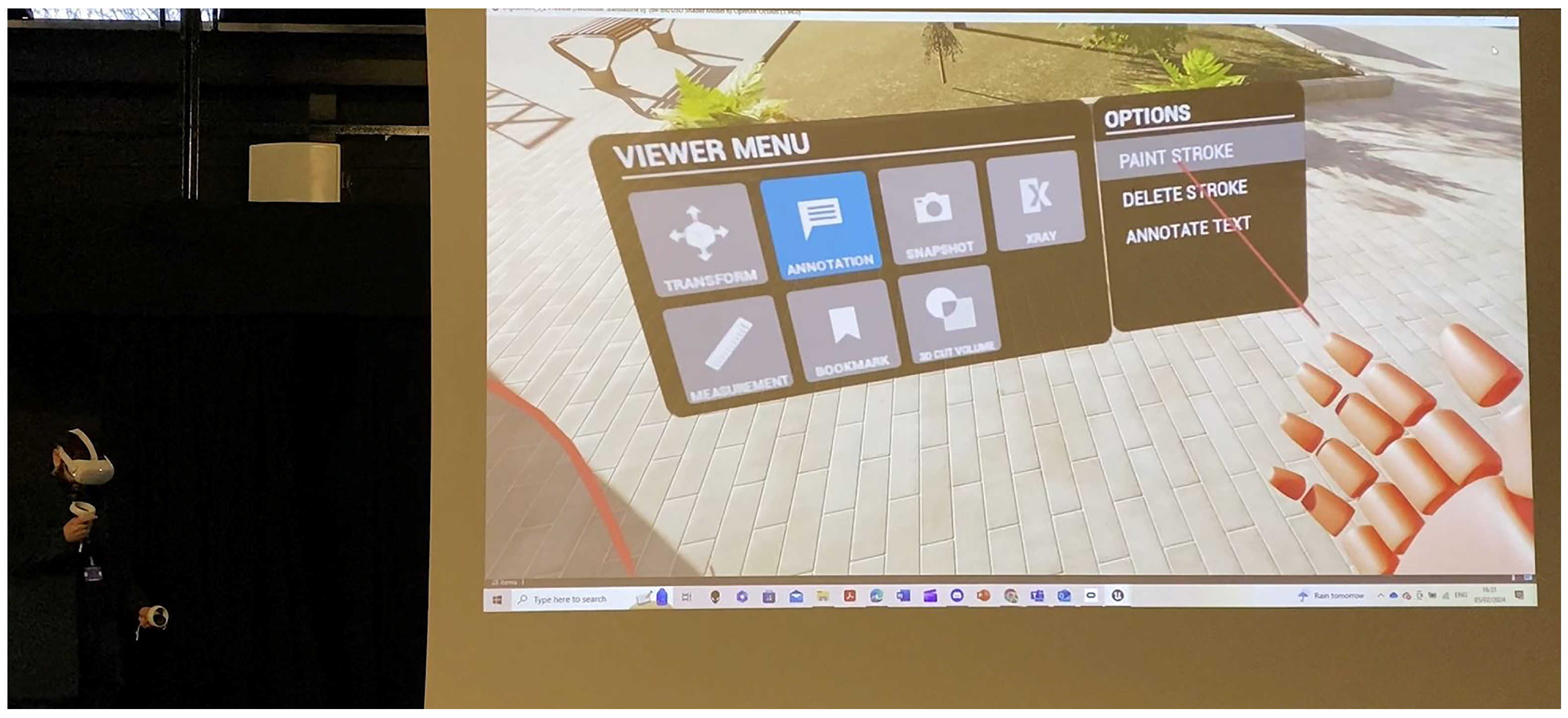

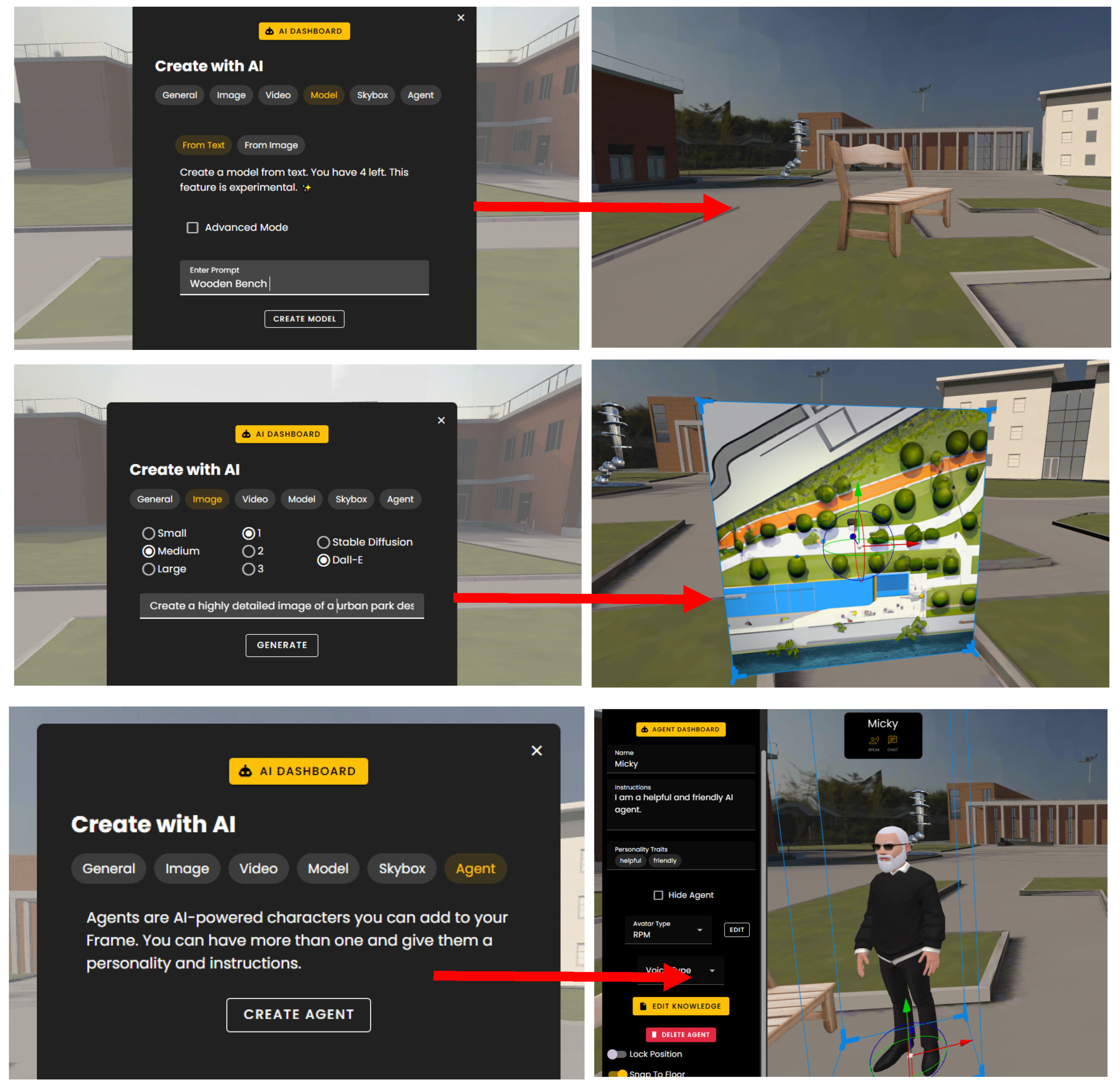

4.5. ShapesXR

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| VR | Virtual Reality |
| AR | Augmented Reality |
| MR | Mixed Reality |
| XR | Extended Reality |
| BIM | Building Information Modeling |
| NeRF | Neural Radiance Field |
| SPLAT | Spatial Point Light Approximation Technique (used in Gaussian Splatting) |
| NLP | Natural Language Processing |
| GANs | Generative Adversarial Networks |

| Name | Type | Compatible Software | Features | Source |
|---|---|---|---|---|
| Arkio | VR Standalone Application | Revit, Rhino | Immersive VR environment, real-time design modifications, absence of texture support, multi-user collaboration, 3D modeling, and presentation capabilities in virtual reality | https://www.arkio.is/ (accessed on 20 February 2025) |
| Fuzor | VR Standalone Application | Revit, Rhino | Synchronized live updates, integration of various disciplines within a virtual reality environment, clash detection, 4D simulations, and BIM data visualization | https://www.kalloctech.com/ (accessed on 25 February 2025) |
| Gravity Sketch | VR Standalone Application | Rhino | Virtual reality support, real-time editing, absence of texture support, multi-user functionality, 3D sketching and modeling in immersive environments, and export in OBJ, IGES, and FBX formats | https://gravitysketch.com/ (accessed on 20 February 2025) |
| NVIDIA Holodeck | VR Standalone Application | 3ds Max, Maya | NVIDIA Iray rendering technology, compatibility with standard NVIDIA vMaterials, high-quality visualization in virtual reality, limited connectivity with Omniverse, and AI-enhanced graphics | https://www.nvidia.com/en-gb/design-visualization/technologies/holodeck/ (accessed on 20 February 2025) |
| Twinmotion | VR Standalone Application | Revit, Rhino, 3ds Max, SketchUp, ArchiCAD, Cinema 4D | Virtual reality compatibility, real-time visualization, dynamic weather system, landscape and vegetation tools, import and export of 3D models, and efficient design exploration | https://www.twinmotion.com/en-US (accessed on 25 February 2025) |
| VU.CITY | VR Standalone Application | Rhino, Revit, SketchUp, AutoCAD | 3D city modeling, urban planning and analysis tools, interactive visualization, virtual reality support, integration with BIM data, and scenario-based planning | https://www.vu.city/news/vu-city-virtual-reality-model (accessed on 28 February 2025) |
| IrisVR / The Wild | VR Standalone Application | Rhino, Revit, Navisworks, SketchUp | Multi-user support, 3D model viewing and annotation within virtual reality, real-time collaboration, and BIM data visualization | https://irisvr.com/ (accessed on 28 February 2025) |
| Enscape | VR Plugin | Revit, SketchUp, Rhino, ArchiCAD, Vectorworks | Photorealistic rendering, interactive virtual reality environment, real-time walkthroughs, material and lighting adjustments, and efficient communication among stakeholders | https://www.chaos.com/enscape (accessed on 10 February 2025) |
| Mindesk | VR Plugin | Rhino, Revit, SolidWorks, Unreal Engine | Real-time virtual reality modeling, seamless CAD integration, streamlined design workflows, and absence of web interface and database support | https://mindeskvr.com/ (accessed on 28 February 2025) |
| Tridify | VR Plugin | Revit, ArchiCAD, Tekla Structures | BIM data visualization, virtual reality support, web-based platform, interactive 3D models, and collaboration tools | https://apps.autodesk.com/en/Publisher/PublisherHomepage?ID=ZQ8PQN75GY7D (accessed on 28 February 2025) |
| SENTIO VR | VR Plugin | SketchUp, Revit, Rhino | Virtual reality support, immersive presentations, 360-degree rendering, real-time collaboration, and integration with various design software | https://www.sentiovr.com/ (accessed on 20 February 2025) |
| Autodesk Revit Live | VR Plugin | Revit | Interactive visualization, virtual reality support, real-time design modifications, integration with BIM data, and streamlined collaboration among stakeholders | https://www.autodesk.com/products/revit-live (accessed on 20 February 2025) |
| VR Sketch | VR Plugin | SketchUp | Virtual reality support, real-time design modifications, integration with SketchUp models, navigation and presentation tools, and compatibility with various virtual reality headsets | https://vrsketch.eu/ (accessed on 25 February 2025) |
| Unity | Game Engine | FBX, OBJ, 3ds Max, Maya, Blender | Real-time rendering, support for virtual and augmented reality, 2D and 3D visualization, comprehensive asset library, scripting capabilities, integration with BIM tools, and customizable design workflows | https://unity.com/ (accessed on 10 February 2025) |
| Unreal Engine | Game Engine | FBX, OBJ, 3ds Max, Maya, Blender, SketchUp, Revit | Real-time rendering, virtual and augmented reality support, high-quality visualization, integration with BIM tools, Datasmith import, interactive experiences, and advanced material and lighting adjustments | https://www.unrealengine.com/en-US (accessed on 28 February 2025) |
| CryEngine | Game Engine | FBX, OBJ, 3ds Max, Maya, Blender | High-quality rendering, virtual reality support, real-time lighting and reflections, large-scale terrain tools, and integration with architectural visualization tools | https://www.cryengine.com/ (accessed on 28 February 2025) |
| Godot Engine | Game Engine | FBX, OBJ, Blender, Collada | 2D and 3D visualization, virtual and augmented reality support, scripting capabilities, customizable workflows, and integration with 3D modeling software | https://godotengine.org/ (accessed on 28 February 2025) |
| Mozilla Hubs | VR Standalone Chat Platform | glTF, FBX, OBJ | Browser-based virtual reality platform, real-time collaboration, 3D model importing, avatars, customizable spaces, and cross-platform compatibility | https://hubsfoundation.org/ (accessed on 28 February 2025) |
| Anyland | VR Standalone Chat Platform | N/A | Virtual reality chat platform, in-world creation tools, user-generated content, customization, and interactive environments | https://anyland.com/ (accessed on 28 February 2025) |
| VRChat | VR Standalone Chat Platform | Unity, Blender, FBX, OBJ | Virtual reality chat platform, user-generated content, avatars, interactive worlds, and integration with Unity for custom content creation | https://hello.vrchat.com/ (accessed on 20 February 2025) |
| FrameVR | VR Standalone Chat Platform | Blender, PLY, SPZ | Multi-user collaboration, 3D model import, voice chat, and browser-based immersive spatial design | https://learn.framevr.io/ (accessed on 10 May 2025) |
| ShapesXR | VR Standalone Application | OBJ, GLB, glTF | Immersive design collaboration, real-time prototyping, spatial sketching in VR, and multi-user interaction | https://www.shapesxr.com/ (accessed on 10 May 2025) |

| Software | Key Features | AI Integration | Primary Use Case | Source |
|---|---|---|---|---|
| NVIDIA Omniverse | AI-driven real-time digital twins, collaborative workflows, and automated texture enhancement | AI-powered texturing, predictive environmental adaptation, and multi-user collaboration | Interactive digital twins, real-time urban model interaction, and AI-based optimization | https://www.nvidia.com/en-gb/omniverse/ (accessed on 20 February 2025) |
| CityEngine | Procedural urban modeling and rule-based AI for generative city layouts | AI-assisted generative city modeling and automated urban landscapes | Automated procedural city generation and optimization of complex urban layouts | https://www.esri.com/en-us/arcgis/products/arcgis-cityengine/overview (accessed on 15 February 2025) |
| RealityCapture | AI-powered photogrammetry and high-accuracy 3D model reconstruction | AI-enhanced photogrammetry and real-time 3D urban reconstruction | 3D scanning of real-world urban environments for digital twins | https://www.capturingreality.com/ (accessed on 20 February 2025) |
| Meshroom | Open-source photogrammetry and AI-assisted 3D reconstruction from images | Neural network-based texture generation and point cloud reconstruction | Photogrammetry and high-accuracy 3D reconstruction from photos | https://meshroom.en.softonic.com/ (accessed on 10 February 2025) |
| Agisoft Metashape | AI-enhanced photogrammetry, texture mapping, and digital twin creation | Automated 3D model creation from aerial and satellite imagery | Urban digitization and integration into AI-based analysis workflows | https://www.agisoft.com/ (accessed on 18 February 2025) |
| Grasshopper AI | AI-assisted parametric modeling, adaptive urban forms, and passive cooling strategies | AI-driven optimization for climate-adaptive architectural designs | Climate-responsive building design and energy-efficient urban form | https://simplyrhino.co.uk/3d-modelling-software/grasshopper (accessed on 20 February 2025) |
| Ladybug Tools | AI-driven climate simulations, solar radiation, and daylight optimization | Machine learning for real-time climate modeling and energy efficiency calculations | AI-driven urban microclimate simulations and sustainability planning | https://www.ladybug.tools/ (accessed on 25 February 2025) |
| Polycam | AI-powered 3D scanning, LiDAR, and photogrammetry-based model creation | AI-enhanced 3D reconstruction and real-time processing of scan data | High-fidelity 3D scanning of urban environments and objects for design workflows | https://poly.cam/ (accessed on 10 February 2025) |
| Convai AI | AI-driven conversational agents and interactive avatars | AI-powered NPCs and digital assistants for spatial interaction | AI-driven engagement in urban simulations and interactive NPCs for digital urban spaces | https://www.convai.com/ (accessed on 10 February 2025) |
| Rodin AI | AI-powered generative architecture, automated 3D modeling, and urban planning optimization | AI-driven parametric urban design, automated massing studies, and generative urban scenarios | AI-based architectural design automation and optimization of urban form and spatial efficiency | https://www.rundown.ai/tools/rodin (accessed on 15 February 2025) |
| Cesium | 3D geospatial visualization, digital twins, and real-time rendering of massive urban datasets | AI-assisted geospatial analytics and real-time rendering of global-scale urban models | Large-scale city modeling, geospatial analysis, and real-time 3D visualization | https://cesium.com/ (accessed on 20 February 2025) |
| Autodesk Forma | Cloud-based generative design, environmental analysis, and scenario simulation | AI-powered site analysis, energy usage prediction, and generative massing tools | Early-stage urban planning, real-time performance feedback, and sustainable design | https://www.autodesk.com/company/autodesk-platform/aec (accessed on 20 February 2025) |

| Platform | Compatibility | Design and VR Features | Collaboration and Accessibility | AI Capabilities |
|---|---|---|---|---|
| Twinmotion | Revit, Rhino, 3ds Max, SketchUp, ArchiCAD, Cinema 4D, FBX, GLB, and OBJ | Lumen rendering, path tracing, material editing, real-time weather and lighting simulation, and asset library | Twinmotion Cloud, VR headset support, and browser-based viewing | Photogrammetry integration and AI-enhanced assets (external); no generative design |
| Unreal Engine | Revit, Rhino, SketchUp, 3ds Max, FBX, GLB, and Datasmith | High-fidelity rendering, Blueprint scripting, real-time design, MetaHuman, and annotations | Multi-user VR sessions and real-time walkthroughs | Convai avatars, Cesium for geodata, and AI scripting for creating characters and environments |
| Hubs Instance | GLB, FBX, and OBJ (via Blender/Spoke) | 360° media integration, spatial audio, fly navigation, annotation tools, virtual camera views, real-time object importing, and in-world sharing of PDF and PowerPoint presentations | Browser-based, WebXR, multi-user, and custom avatars | Supports AI chatbots; no native AI behavior |
| FrameVR | GLB, PLY, SPZ, and SPLAT (Blender) | Object interaction, AI avatar design, Google Street View, skybox editing, Gaussian splatting, and spatial audio | Browser-based, WebXR, multi-user, and flying navigation | Text-to-3D generation, image-to-3D generation, AI chatbot avatars, AI-generated skyboxes, AI meeting transcripts, and Gaussian splatting |
| ShapesXR | OBJ, GLB, glTF (ZIP), PNG, JPG, MP3, WAV, and Figma | Sketching, procedural design, prototyping, Holonotes, multi-user interaction and real-time collaboration, passthrough support, asset library, material changes, and cross-platform collaboration | Up to 8 users, web access, voice chat, Meta avatars, and browser editor | Export to Unity/Unreal for AI integration |
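
As a small illustration of how the Compatibility column above might inform platform selection, the Python sketch below encodes only the 3D file formats listed for each platform (authoring tools such as Revit are omitted, and notes such as "via Blender/Spoke" are dropped) and answers two questions: which platforms list a given format, and which formats a chosen set of platforms all share. The dictionary and function names are hypothetical, and the sets reflect the table as written rather than each vendor's current documentation.

```python
# Hypothetical sketch: 3D import formats per platform, copied from the
# "Compatibility" column of the comparison table (not from any vendor API).
PLATFORM_FORMATS: dict[str, set[str]] = {
    "Twinmotion": {"FBX", "GLB", "OBJ"},
    "Unreal Engine": {"FBX", "GLB", "DATASMITH"},
    "Hubs Instance": {"GLB", "FBX", "OBJ"},       # via Blender/Spoke
    "FrameVR": {"GLB", "PLY", "SPZ", "SPLAT"},
    "ShapesXR": {"OBJ", "GLB", "GLTF"},           # glTF supplied as ZIP
}


def platforms_accepting(fmt: str) -> list[str]:
    """Return, sorted by name, the platforms whose listed formats include fmt.

    The comparison is case-insensitive because every stored format is upper-case.
    """
    key = fmt.strip().upper()
    return sorted(name for name, formats in PLATFORM_FORMATS.items() if key in formats)


def shared_formats(platforms: list[str]) -> set[str]:
    """Return the formats listed for every platform in `platforms` (empty set if none given)."""
    sets = [PLATFORM_FORMATS[p] for p in platforms]
    return set.intersection(*sets) if sets else set()


if __name__ == "__main__":
    print(platforms_accepting("ply"))
    print(shared_formats(list(PLATFORM_FORMATS)))
```

For example, `platforms_accepting("ply")` returns only FrameVR, while `shared_formats` over all five platforms returns `{"GLB"}`, which, as the table is written, points to GLB as the one exchange format common to every compared platform.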

| Platform | Use Cases in Inclusive Urban Design | Strengths | Weaknesses and Limitations |
|---|---|---|---|
| Twinmotion | | | |
| Unreal Engine | | | |
| Hubs Instance | | | |
| FrameVR | | | |
| ShapesXR | | | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ehab, A.; Aladawi, A.; Burnett, G. Exploring AI-Integrated VR Systems: A Methodological Approach to Inclusive Digital Urban Design. Urban Sci. 2025, 9, 196. https://doi.org/10.3390/urbansci9060196