1. Introduction

The processing of sinusoidal signals is a fundamental technique in modern measurement and instrumentation systems, playing a critical role in the performance evaluation of electronic devices and the extraction of key system parameters. Fitting sinusoidal models to empirical data is widely used in engineering fields, including the testing of analog-to-digital converters (ADCs), signal integrity analysis, precision metrology, and communication system characterization [1]. The popularity of sinusoidal processing stems from the natural occurrence of sinusoidal waveforms in physical systems and their deliberate use as controlled excitation signals in measurement setups. Sinusoidal signals are also common in physics, for example in nuclear magnetic resonance spectroscopy, in particle accelerators, where particles are forced to move in a circle by a magnetic field, and in gravitational wave detection, where the oscillations of the fabric of spacetime are often modeled as sinusoids. The computation of the root mean square (RMS) of the residuals can be used to detect faint signals in noisy data.

The mathematical foundation of the current work is robust and general, and the application is a matter of mapping the concepts to the specific domain in question. The proposed analytical expressions serve as a versatile statistical tool. Their adaptation to a new domain primarily requires identifying the physical source of phase noise or timing jitter within that system’s measurement process. The core advantage remains consistent across all fields: providing a computationally efficient, analytical method to quantify uncertainty and assess model fidelity for any process that can be described by a sinusoidal model.

Apart from mere curve fitting, sinusoidal analysis is also a standard technique for investigating device behavior under controlled conditions. If a pure sinusoidal input is presented to a system, the output will display fundamental characteristics like linearity, nonlinear distortion, noise performance, and dynamic range limits. The residuals, resulting from subtracting the fitted sinusoidal model from measurement data, represent non-ideal effects, such as thermal noise, quantization errors, harmonic distortion, and timing jitter.

The RMS value of residuals is a metric that provides direct information on key system parameters, including signal-to-noise ratio (SNR), effective number of bits (ENOB), and noise floor characteristics [2]. Such parameters are critical to assess the performance of measurement equipment, data acquisition systems, and signal processing algorithms. For high-precision applications, accurate analysis of residuals enables engineers to establish confidence intervals, optimize system designs, and balance measurement accuracy and acquisition time.

Sinusoidal fitting is particularly crucial in ADC testing, where standard test procedures are based on sine wave stimuli to assess converter performance. The IEEE Standard 1241-2000 [3] specifies least-squares sine-wave fitting methods for the extraction of dynamic performance metrics. In precision oscillator characterization and frequency metrology, sinusoidal fitting is used to quantify phase noise and frequency stability, parameters that are of paramount importance to telecommunications and scientific instrumentation [4].

Despite its widespread use, sinusoidal fitting in practical measurements is complicated by non-ideal conditions, including phase noise and sampling jitter, both of which contribute substantial uncertainty. Phase noise, which denotes stochastic variations in the phase of the waveform, appears as deviations from ideal periodicity and is therefore hard to distinguish from other sources of noise [5]. Phase noise is caused by a multitude of sources, including thermal noise in active devices, flicker noise in semiconductor junctions, and external perturbations like vibrations and temperature fluctuations. These effects propagate through reference oscillators, frequency synthesizers, and phase-locked loops, causing correlated errors that bias parameter estimates and increase perceived noise levels. Sampling jitter (time uncertainty in the ADC sampling instants) additionally complicates measurements by introducing amplitude errors in the digitized samples. The magnitude of these errors depends on the input signal amplitude, the frequency, and the RMS jitter value, establishing intricate dependencies that complicate the interpretation of results [6]. In the mathematical analysis that follows, both phase noise and jitter can be treated with the same formalism.

Although there has been much research aimed at refining sinusoidal fitting algorithms, few studies give detailed analytical foundations for residual prediction in the presence of phase noise and jitter. Giaquinto and Trotta [1] created improved sine-wave fitting methods for ADC testing without explicitly considering the effects of phase noise. Likewise, multiharmonic sine-fitting (MHSF) algorithms [7] consider harmonic distortion without theoretical consideration of correlated noise sources. Phase noise measurement methods, e.g., cross-correlation techniques [8], have pushed precision metrology forward but are largely unexploited in more general sinusoidal fitting applications. The lack of analytical models for the residual statistics restricts uncertainty budgeting, measurement optimization, and confidence interval estimation, especially in calibration and standards laboratories [9]. This paper fills these gaps by deriving analytical formulas for the mean and variance of residual RMS values in the presence of phase noise and jitter. These closed-form solutions allow for fast uncertainty assessment without lengthy Monte Carlo-type simulations, providing insight into noise dependencies and measurement optimization.

Validated by numerical simulations, the framework lends itself to applications from ADC testing to oscillator characterization. It also offers a basis for future studies of complicated noise situations, non-stationary effects, and nonlinear system behavior.

The impact of phase noise in measurement systems has been treated from several distinct viewpoints in the literature, each adding vital insights to specific aspects while leaving other areas less adequately covered. In the context of analog-to-digital conversion, significant work has focused on the impact of sampling jitter on signal-to-noise ratio (SNR) and spurious-free dynamic range (SFDR) degradation in high-speed converters. Brannon [10] performed an extensive analysis of the effects of jitter in data conversion systems, deriving equations for jitter-induced noise power as a function of input signal characteristics and jitter statistics. While this work provided a fundamental link between timing uncertainty and amplitude error, it did not address the residuals of sinusoidal fitting. Phase noise in communication systems has been studied quite thoroughly, particularly with regard to digital modulation methods and carrier recovery mechanisms. Meyr et al. [11] developed detailed mathematical models for describing phase noise in phase-locked loops and carrier tracking systems, from which analytical expressions were derived for the degradation in bit error rates under various phase noise conditions. Although this work provides important information on the statistical properties of sinusoidal signals corrupted by phase distortion, its focus on communication systems means that it does not directly address the unique requirements of measurement and instrumentation applications.

Robust parameter estimation of sinusoidal signals in noise has been a topic of study in signal processing for many years. Kay [12] presented a comprehensive treatment of spectral analysis and parameter estimation, including Cramér–Rao lower bounds for sinusoidal parameter estimation in additive white Gaussian noise. Although this work establishes ultimate accuracy limits on frequency, amplitude, and phase estimation, it does not address phase noise or residual fit statistics specifically. More recent research by Pintelon and Schoukens [13] has developed system identification methods with sinusoidal excitation, including techniques to deal with colored noise and nonlinear distortions. Their approach encompasses uncertainty analysis for frequency-domain measurements but does not fully deal with phase noise and jitter in time-domain fitting.

This research fills essential gaps in the literature through the derivation of analytical formulas for the mean and variance of root mean square (RMS) sinusoidal fitting residuals in the presence of phase noise and jitter. For the first time, this theoretical model rigorously describes the impact of these noise sources on residual statistics and measurement uncertainty, which in the future can be used in the estimation of metrics such as SNR and effective number of bits (ENOB). The analytical strategy employed here has important benefits over numerical techniques. Firstly, closed-form solutions provide fast uncertainty calculation, a feature especially valuable for real-time adaptive measurements. Secondly, the formalism employed discloses functional dependencies among noise properties, signal parameters, and uncertainty, which makes it possible to optimize systems from first principles instead of from empirical information.

The author has published several papers that deal with the effects of phase noise or sampling jitter. The one that is closest to the current work is [14]. It deals only with the expected value of the RMS value estimation and does not address the estimator’s standard deviation. Furthermore, that study of the expected value of RMS estimation results in a simple, approximate linear expression that is valid only for small values of phase noise standard deviation (less than 0.5 rad) and not for the complete range of possible values, as is done in the current work. The author has devoted his career to studying the uncertainty associated with diverse measurement methods. This includes techniques such as the time-domain polarization method for characterization of soils [15], as well as numerical and experimental validation of measurement methods using Monte Carlo-type procedures.

In this work, we derive two analytical expressions, one for the mean and one for the variance of the root mean square estimation of the sine-fitting residuals in the presence of phase noise or jitter. These expressions take into account the noise standard deviation, the number of data points, and the sinusoidal amplitude.

Section 2 recapitulates the least-squares sine-fitting algorithm, and Section 3 calculates the statistics of the sinusoidal amplitude estimator under phase noise and jitter. Section 4 deals with the analytical derivation of the formulas for the statistics of the residuals, and Section 5 applies those findings to calculate the statistics of the RMS value of the residuals. Section 6 gives the findings of numerical simulations that confirm the analytical formulas introduced. Lastly, Section 7 draws some conclusions and suggests future work.

2. Sine-Fitting

In the following, we present the analytical derivation of expressions that allow us to determine the mean and standard deviation of the RMS value of the sine-fitting residuals. The first step is to describe the sinusoidal model used. We thus express the ideal sample values using

$$y_i^{\mathrm{ideal}} = C + A\cos(\omega t_i + \varphi), \qquad (1)$$

where $C$ is the sinusoidal offset, $A$ is the amplitude, and $\varphi$ is the initial phase. The sampling instants are given by $t_i$, where the sample index, $i$, goes from 0 to $M-1$, where $M$ is the number of samples. The sinusoidal frequency is assumed to be known and is, thus, not estimated in the context of this work. It is given by $f$, and the corresponding angular frequency is given by $\omega = 2\pi f$.

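Here, we study the case where phase noise affects the sample values, so that the actual sample values are given by

$$y_i = C + A\cos(\omega t_i + \varphi + \theta_i), \qquad (2)$$

where $\theta_i$ is the phase noise affecting sample $i$, and $C$, $A$, $\varphi$, and $\omega$ are the offset, amplitude, initial phase, and angular frequency of the sinusoid. Note that, since we are considering that the sinusoidal frequency is known, the analysis presented next is also applicable to the case where sampling jitter is present instead of phase noise. In this case, we just need to make the correspondence

$$\theta_i = \omega\,\delta_i, \qquad (3)$$

where $\delta_i$ is the amount of sampling jitter present. Naturally, the standard deviations of those two variables are related by

$$\sigma_\theta = \omega\,\sigma_\delta. \qquad (4)$$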
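As a worked example of the jitter-to-phase-noise correspondence, the sketch below assumes the usual relation in which the phase-noise standard deviation equals the angular frequency times the jitter standard deviation. The function name and the numerical values (a 1 MHz tone and 10 ps of RMS jitter) are illustrative assumptions, not values taken from this work.

```python
import math


def phase_noise_std_from_jitter(freq_hz, jitter_std_s):
    """Equivalent phase-noise standard deviation (rad) for a given RMS jitter.

    Assumes a known signal frequency, so that a timing error delta maps to a
    phase error omega * delta.
    """
    omega = 2.0 * math.pi * freq_hz
    return omega * jitter_std_s


# Hypothetical example: 1 MHz sine wave sampled with 10 ps RMS jitter.
sigma_theta = phase_noise_std_from_jitter(1e6, 10e-12)
print(f"equivalent phase noise: {sigma_theta:.3e} rad")
```

Because the mapping is linear in frequency, the same jitter produces ten times more equivalent phase noise on a tone that is ten times faster.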

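Considering this set of data points, corrupted by noise, we wish to estimate the three unknown parameters that describe the sinusoid: offset ($\hat{C}$), amplitude ($\hat{A}$), and initial phase ($\hat{\varphi}$). Note the “hat” symbol used over the estimated quantities. We can thus use a three-parameter sine-fitting algorithm, which minimizes the squared difference between the data points and the sinusoidal model; that is, it minimizes the summed squared residuals (SSR) given by

$$\mathrm{SSR} = \sum_{i=0}^{M-1} \left(y_i - \hat{y}_i\right)^2, \qquad (5)$$

where $y_i$ are the $M$ sample values and the estimated sinusoidal points are given by

$$\hat{y}_i = \hat{C} + \hat{A}\cos(\omega_a t_i + \hat{\varphi}). \qquad (6)$$

We are going to write the estimated SSR value as

$$\mathrm{SSR} = \sum_{i=0}^{M-1} r_i^2, \qquad (7)$$

where

$$r_i = y_i - \hat{y}_i \qquad (8)$$

are the residuals.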

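The three estimated parameters of the sinusoidal model are determined from the $M$ samples $y_i$, taken at instants $t_i$, using

$$\hat{C} = \frac{1}{M}\sum_{i=0}^{M-1} y_i \qquad (9)$$

for the offset,

$$\hat{A} = \sqrt{\hat{A}_I^2 + \hat{A}_Q^2} \qquad (10)$$

for the amplitude, and

$$\hat{\varphi} = \operatorname{atan2}\!\left(-\hat{A}_Q, \hat{A}_I\right) \qquad (11)$$

for the initial phase, where $\hat{A}_I$ and $\hat{A}_Q$ are the in-phase and in-quadrature amplitudes computed using

$$\hat{A}_I = \frac{2}{M}\sum_{i=0}^{M-1} y_i\cos(\omega_a t_i) \qquad (12)$$

and

$$\hat{A}_Q = \frac{2}{M}\sum_{i=0}^{M-1} y_i\sin(\omega_a t_i). \qquad (13)$$

Note that $\omega_a$ is the angular frequency used in the least-squares sine-fitting procedure, which we assumed to be known in this work, so that we have

$$\omega_a = \omega. \qquad (14)$$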

The next step is going to be the derivation of an analytical expression for the expected value of the square of the residuals. This is presented in the next section. Afterwards, we will focus on the expected value of the residuals and, from it, study the variance of the root mean square value of the residuals.
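The fitting procedure of this section can be sketched in a few lines. This is a minimal illustration, assuming coherent sampling (an integer number of periods in the record), unit sampling interval, and the cosine model with the sign convention `offset + amplitude*cos(omega*t + phase)`; the function names and the test signal are assumptions introduced here, not the author's implementation.

```python
import math


def sine_fit_3p(y, omega, ts):
    """Three-parameter sine fit at a known angular frequency.

    Uses closed-form estimators that coincide with least squares when the
    record covers an integer number of periods (coherent sampling).
    """
    m = len(y)
    offset = sum(y) / m
    # In-phase and in-quadrature amplitudes.
    a_i = 2.0 / m * sum(v * math.cos(omega * t) for v, t in zip(y, ts))
    a_q = 2.0 / m * sum(v * math.sin(omega * t) for v, t in zip(y, ts))
    amplitude = math.hypot(a_i, a_q)
    # Sign follows from A*cos(wt + p) = A*cos(p)*cos(wt) - A*sin(p)*sin(wt).
    phase = math.atan2(-a_q, a_i)
    return offset, amplitude, phase


def residual_rms(y, omega, ts):
    """RMS of the residuals between the samples and the fitted sine wave."""
    offset, amplitude, phase = sine_fit_3p(y, omega, ts)
    ssr = sum(
        (v - (offset + amplitude * math.cos(omega * t + phase))) ** 2
        for v, t in zip(y, ts)
    )
    return math.sqrt(ssr / len(y))


# Hypothetical noise-free record: 100 samples covering 7 full periods.
M, PERIODS = 100, 7
OMEGA = 2.0 * math.pi * PERIODS / M
TS = list(range(M))
clean = [0.5 + 3.0 * math.cos(OMEGA * t + 0.3) for t in TS]
fit = sine_fit_3p(clean, OMEGA, TS)
print(fit, residual_rms(clean, OMEGA, TS))
```

On noise-free data the fit recovers the offset, amplitude, and phase to within rounding error, so any residual RMS observed on real data is attributable to the non-ideal effects.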

3. Statistics of the Amplitude Estimator

Here, we derive the expected value and standard deviation of the estimated sinusoidal amplitude in the presence of phase noise or jitter. Later on, these results will be used to compute the statistics of the root mean square value of the sine-fitting residuals.

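Considering the phase noise, $\theta$, to be normally distributed with null mean and variance $\sigma_\theta^2$, that is, $\theta \sim \mathcal{N}(0, \sigma_\theta^2)$, the characteristic function is

$$E\!\left[e^{jt\theta}\right] = e^{-\sigma_\theta^2 t^2/2}. \qquad (16)$$

We now use the Euler formula to write the complex exponential in (16) as

$$E[\cos(t\theta)] + jE[\sin(t\theta)] = e^{-\sigma_\theta^2 t^2/2}. \qquad (17)$$

Since the right side of this equation is real, we must have

$$E[\sin(t\theta)] = 0 \qquad (18)$$

and thus

$$E[\cos(t\theta)] = e^{-\sigma_\theta^2 t^2/2}. \qquad (19)$$

If we set $t = 1$ now, we obtain

$$E[\cos\theta] = e^{-\sigma_\theta^2/2}. \qquad (20)$$

Later on, we will also need the expected value of the square of the cosine of $\theta$. We will compute it now by writing the square of the cosine as

$$\cos^2\theta = \frac{1 + \cos(2\theta)}{2}. \qquad (21)$$

The expected value is thus

$$E[\cos^2\theta] = \frac{1}{2} + \frac{1}{2}E[\cos(2\theta)]. \qquad (22)$$

To compute the expected value of $\cos(2\theta)$, we use the same procedure as before, but now setting $t = 2$ in (19) such that

$$E[\cos(2\theta)] = e^{-2\sigma_\theta^2}. \qquad (23)$$

Inserting this back into (22) leads to

$$E[\cos^2\theta] = \frac{1 + e^{-2\sigma_\theta^2}}{2}. \qquad (24)$$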
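The two cosine moments can be checked with a short Monte Carlo run. For zero-mean Gaussian phase noise with standard deviation `sigma`, the characteristic-function argument gives `exp(-sigma**2 / 2)` for the mean of the cosine and `(1 + exp(-2 * sigma**2)) / 2` for the mean of its square. The sample size, seed, and the value `sigma = 0.8` are arbitrary choices made for this sketch.

```python
import math
import random


def mc_cosine_moments(sigma, n=200_000, seed=1):
    """Monte Carlo estimates of E[cos(theta)] and E[cos(theta)**2]."""
    rng = random.Random(seed)
    sum_cos = 0.0
    sum_cos_sq = 0.0
    for _ in range(n):
        c = math.cos(rng.gauss(0.0, sigma))
        sum_cos += c
        sum_cos_sq += c * c
    return sum_cos / n, sum_cos_sq / n


sigma = 0.8
mc_mean, mc_mean_sq = mc_cosine_moments(sigma)
theory_mean = math.exp(-sigma ** 2 / 2.0)
theory_mean_sq = 0.5 * (1.0 + math.exp(-2.0 * sigma ** 2))
print(mc_mean, theory_mean)
print(mc_mean_sq, theory_mean_sq)
```

The simulated and closed-form values should agree to within the Monte Carlo scatter, which shrinks as the number of draws grows.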

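Focusing now on the bias of the amplitude estimation, we will use the fact that, for samples $y_i$ taken at instants $t_i$, the sinusoidal amplitude can be estimated using

$$\hat{A} = \frac{2}{M}\sum_{i=0}^{M-1} y_i\cos(\omega_a t_i + \hat{\varphi}). \qquad (25)$$

Taking the expectation value and assuming that the initial phase estimation is unbiased, that is,

$$E[\hat{\varphi}] = \varphi, \qquad (26)$$

we have

$$E[\hat{A}] = A\,E[\cos\theta]. \qquad (27)$$

Making use of (20) leads to

$$E[\hat{A}] = A\,e^{-\sigma_\theta^2/2}, \qquad (28)$$

where $A$ is the true amplitude and $\sigma_\theta$ is the phase noise standard deviation.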
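The amplitude bias can be illustrated numerically. The sketch below assumes coherent sampling, unit sampling interval, and independent zero-mean Gaussian phase noise on each sample, and compares the average fitted amplitude with the true amplitude multiplied by `exp(-sigma**2 / 2)`. The function names and the parameter values are assumptions made for illustration.

```python
import math
import random


def fitted_amplitude(y, omega):
    """Amplitude from the in-phase and in-quadrature components."""
    m = len(y)
    a_i = 2.0 / m * sum(v * math.cos(omega * i) for i, v in enumerate(y))
    a_q = 2.0 / m * sum(v * math.sin(omega * i) for i, v in enumerate(y))
    return math.hypot(a_i, a_q)


def mean_fitted_amplitude(amplitude, sigma, m=100, periods=7, reps=500, seed=2):
    """Average fitted amplitude over many phase-noisy records."""
    rng = random.Random(seed)
    omega = 2.0 * math.pi * periods / m
    total = 0.0
    for _ in range(reps):
        y = [
            amplitude * math.cos(omega * i + 0.3 + rng.gauss(0.0, sigma))
            for i in range(m)
        ]
        total += fitted_amplitude(y, omega)
    return total / reps


simulated = mean_fitted_amplitude(amplitude=3.0, sigma=0.5)
predicted = 3.0 * math.exp(-0.5 ** 2 / 2.0)
print(simulated, predicted)
```

The fitted amplitude is systematically smaller than the true one: phase noise moves part of the signal power out of the fundamental and into the residuals.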

The previous two sections (Section 2 and Section 3) contain material that is well known in statistics and estimation theory. It is included here to serve as context and reference for the novel derivations, which constitute the main contribution of this work and which are presented in Section 4 and Section 5.

4. Statistics of the Residuals

The sine-fitting residuals, given by (8), are computed as the difference between the sample values and the values of the fitted sine wave at the same instants in time. The former depend directly on the phase noise or jitter present and are considered random variables. The latter depend on the sine-fitting parameters, namely amplitude, initial phase, and offset. These three parameters, since they are estimated from the data points, are, strictly speaking, also random variables. In the following theoretical analysis, however, we consider them to be deterministic variables, albeit with a biased amplitude given by (28). This simplifies the derivations, and it will be shown, using numerical simulations, that the resulting analytical expression proposed for the variance of the RMS value of the residuals is, in fact, very accurate despite this simplification.

Note that the analysis is conditional on the parameter estimation process. We are not analyzing the joint distribution of the data and the unknown parameters, but rather the distribution of the residuals given the fitted model. In mathematical terms, we are computing $E[r_i^2 \mid \hat{A}, \hat{\varphi}, \hat{C}]$ and $E[r_i^4 \mid \hat{A}, \hat{\varphi}, \hat{C}]$, where $\hat{A}$, $\hat{\varphi}$, and $\hat{C}$ are the estimated parameters. Once these parameters are estimated from the data, they become fixed values for the purpose of analyzing the residual statistics.

Our derivation makes several key assumptions about the parameter estimation process. The first one is that the initial phase and offset estimations are unbiased; that is, $E[\hat{\varphi}] = \varphi$ and $E[\hat{C}] = C$. This means that, on average, the phase and offset estimation procedure recovers the true phase and offset:

$$\hat{\varphi} = \varphi \qquad (29)$$

and

$$\hat{C} = C. \qquad (30)$$

For least-squares fitting of sinusoidal data with phase noise, this assumption is generally valid. We explicitly account for the amplitude estimation bias, as determined by (28), and consider that the sinusoidal amplitude is given by

$$\hat{A} = A\,e^{-\sigma_\theta^2/2}, \qquad (31)$$

where $A$ is the true amplitude and $\sigma_\theta$ is the phase noise standard deviation.

This bias is deterministic and depends only on the phase noise variance, not on random realizations. Finally, we assume that for sufficiently large datasets, the parameter estimates converge to their expected values, making the conditioning assumption more accurate.

4.1. Second Raw Moment Calculation

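The starting point of the derivation is writing the cosine function found in (2) for the sample value affected by phase noise,

$$y_i = C + A\cos(\omega t_i + \varphi + \theta_i), \qquad (32)$$

using the trigonometric identity

$$\cos(a + b) = \cos a\cos b - \sin a\sin b$$

for any generic variables $a$ and $b$. Applying this to our case results in

$$\cos(\omega t_i + \varphi + \theta_i) = \cos(\omega t_i + \varphi)\cos\theta_i - \sin(\omega t_i + \varphi)\sin\theta_i.$$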

The actual sample values, provided by (32), become

The residual, provided by (8), can then be written as

where we have made use of

given by (6). Assuming now that

and

, we have

Inserting the expected value of the amplitude, provided by (28), leads to

Computing now the square of the residual results in

We can now compute the expected value of the square of this residual by computing the expected value of each of the three terms:

The expected value in the first term of the left side of (41) is, computing the square,

The first expected value on the right side was computed in (24), while the second one was computed in (20). Using them both leads to

Moving on to the second expected value in (41) we have

Introducing (24) leads to

Finally, computing the third term in (41) leads to

Since the sine function is an odd function, the second term is null.

Writing the product of the cosine and sine functions as the sine of double the argument leads to

For the same reason as above, this term is null. We thus have

Inserting into (41) the terms given in (44), (46), and (50) leads to

Using

, we can write

We will now carry out a similar analysis for the case of the fourth raw moment of the residuals.
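A numerical cross-check of the second raw moment is sketched below. It assumes zero-mean Gaussian phase noise, an exact offset and phase, and a fitted amplitude fixed at its biased expected value, so that one residual is `A*cos(alpha + theta) - A*exp(-sigma**2/2)*cos(alpha)` with `alpha` the noise-free phase at that sample. The closed form in `second_moment_closed_form` was worked out here from the two cosine moments under these assumptions and before any degrees-of-freedom correction; it is a reconstruction for illustration, and the names and values are hypothetical.

```python
import math
import random


def second_moment_closed_form(amplitude, sigma, alpha):
    """E[r**2] for one residual, fit parameters held at their expected values."""
    e1 = math.exp(-sigma ** 2)
    e2 = math.exp(-2.0 * sigma ** 2)
    return amplitude ** 2 * (0.5 * (1.0 - e2) + math.cos(alpha) ** 2 * (e2 - e1))


def second_moment_monte_carlo(amplitude, sigma, alpha, n=200_000, seed=6):
    """Monte Carlo estimate of the same quantity."""
    rng = random.Random(seed)
    biased_amplitude = amplitude * math.exp(-sigma ** 2 / 2.0)
    total = 0.0
    for _ in range(n):
        theta = rng.gauss(0.0, sigma)
        r = amplitude * math.cos(alpha + theta) - biased_amplitude * math.cos(alpha)
        total += r * r
    return total / n


closed = second_moment_closed_form(3.0, 1.0, 0.7)
simulated = second_moment_monte_carlo(3.0, 1.0, 0.7)
print(closed, simulated)
```

Two limits give a quick sanity check: the moment vanishes when there is no phase noise, and it tends to half the squared amplitude when the phase is fully randomized.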

4.2. Fourth Raw Moment Calculation

In this section, we proceed to compute the expected value, as in the previous section, but now not of the square of the residuals but of their fourth power. This will be necessary later on when computing the variance of the summed squared residuals (SSRs).

Computing the binomial expansion leads to

where

is given by (6).

We now have to compute each of the four expected values. The first one results in

The second one results in

The fourth one results in

Inserting these four terms, together with (29), (30), and (31), into (55) leads to

Simplifying, the final expression for the fourth raw moment of the residual values is

This is going to be used later on when computing the statistics of SSR and then the RMS estimation of the residuals.

4.3. Degrees of Freedom Correction

In statistics, the number of degrees of freedom is the number of values in the final calculation of statistics that are free to vary. When we use a set of data to estimate parameters in a model, we “use up” some of those degrees of freedom. Each parameter we estimate from the data reduces the number of degrees of freedom by one.

Imagine you have a set of $M$ data points, and you calculate their sample mean. The sum of the deviations of each data point from the sample mean is always zero. This means that if you know the first $M - 1$ deviations, the last one is fixed. You have lost one degree of freedom in the process of estimating the mean. This is why, when we calculate the sample variance (which is the average of the squared residuals from the mean), we divide by $M - 1$ instead of $M$ to obtain an unbiased estimate of the true population variance. This is known as Bessel’s correction.
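The sample-mean example can be made concrete with a few lines of code. The data set below is synthetic (eight Gaussian draws with an arbitrary seed); the point is only that the deviations from the sample mean sum to zero and that dividing by one less than the number of points reproduces the unbiased sample variance.

```python
import random
import statistics

rng = random.Random(3)
data = [rng.gauss(10.0, 2.0) for _ in range(8)]

mean = statistics.mean(data)
deviations = [x - mean for x in data]

# The deviations are constrained to sum to zero: one degree of freedom is lost.
deviation_sum = sum(deviations)

sum_sq = sum(d * d for d in deviations)
biased_variance = sum_sq / len(data)          # divides by the number of points
unbiased_variance = sum_sq / (len(data) - 1)  # Bessel's correction

print(deviation_sum, biased_variance, unbiased_variance)
```

The standard library follows the same convention: `statistics.variance` applies Bessel's correction, while `statistics.pvariance` does not.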

Applying this to the three-parameter sine fit considered here, the sinusoidal amplitude, initial phase, and offset are estimated from the $M$ data points. Doing so imposes three constraints on the data, which means that three degrees of freedom are lost. It will thus be necessary, for the correct estimation of the variance of the summed squared residuals (SSR), to take into account this reduction in the number of degrees of freedom by multiplying the values obtained by the factor

In particular, the analytical expression derived previously for the expected value of the residuals, namely Equation (53), needs to be corrected, resulting in

The same needs to be performed for the expected value of the fourth power of the residuals given by (61),

Later on, when validating the analytical expressions derived in this work using numerical simulations, it will be evident that the results of the simulation will not match the analytical expressions derived if this correction factor is not included.
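The loss of three degrees of freedom can be seen in a simplified setting. The sketch below swaps phase noise for additive white Gaussian noise, a case where the result is exact for a linear least-squares fit: the expected SSR after fitting three parameters to `m` points is `(m - 3) * sigma**2`, not `m * sigma**2`. Coherent sampling and unit sampling interval are assumed, and all names and values are illustrative.

```python
import math
import random


def ssr_after_fit(y, omega):
    """SSR after fitting offset, in-phase and in-quadrature amplitudes."""
    m = len(y)
    offset = sum(y) / m
    a_i = 2.0 / m * sum(v * math.cos(omega * i) for i, v in enumerate(y))
    a_q = 2.0 / m * sum(v * math.sin(omega * i) for i, v in enumerate(y))
    return sum(
        (v - offset - a_i * math.cos(omega * i) - a_q * math.sin(omega * i)) ** 2
        for i, v in enumerate(y)
    )


def mean_ssr(m=20, periods=3, sigma=1.0, reps=4000, seed=5):
    """Average SSR when the record contains only white Gaussian noise."""
    rng = random.Random(seed)
    omega = 2.0 * math.pi * periods / m
    total = 0.0
    for _ in range(reps):
        y = [rng.gauss(0.0, sigma) for _ in range(m)]
        total += ssr_after_fit(y, omega)
    return total / reps


average_ssr = mean_ssr()
print(average_ssr)  # expected to be close to 17 = (20 - 3) * 1.0**2, not 20
```

With only 20 points the gap between 17 and 20 is a 15% effect, which is why the correction matters most for short records.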

4.4. Expected Value of SSR

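The expected value of the sum of the squares of the residuals, $r_i$, is obtained from (7) by summing the expected values of all the squared residuals:

$$E[\mathrm{SSR}] = \sum_{i=0}^{M-1} E\!\left[r_i^2\right]. \qquad (66)$$

Considering that the $M$ data points cover an integer number of periods of the sinusoid (coherent sampling), the summation of the square of the cosine function becomes

$$\sum_{i=0}^{M-1} \cos^2(\omega t_i + \varphi) = \frac{M}{2}. \qquad (67)$$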
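Under coherent sampling, the sum of the squared cosine over the record equals half the number of samples, whatever the initial phase. A short numerical check is sketched below, assuming unit sampling interval; the function name and the example values are illustrative, and the non-integer case is included only to show that the identity relies on the record covering whole periods.

```python
import math


def sum_cos_squared(m, periods, phase):
    """Sum of cos^2 over m samples spanning the given number of periods."""
    omega = 2.0 * math.pi * periods / m
    return sum(math.cos(omega * i + phase) ** 2 for i in range(m))


coherent = sum_cos_squared(100, 7, 0.3)        # integer number of periods
non_coherent = sum_cos_squared(100, 7.3, 0.3)  # record ends mid-period
print(coherent, non_coherent)
```

The coherent result is 50 to within rounding error, while the non-coherent one deviates from it by an amount that depends on the phase.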

Inserting this into (66) leads to

which simplifies to

4.5. Second Raw Moment of SSR

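In order to compute the variance of the SSR, it will also be necessary to determine the second raw moment of the SSR. Proceeding in a similar manner as in the previous section, we start from (7) and write the square of the SSR in terms of the residuals, $r_i$:

$$\mathrm{SSR}^2 = \left(\sum_{i} r_i^2\right)^2. \qquad (70)$$

The square of the summation can be written as a double summation (omitting the limits of the summation for now):

$$\mathrm{SSR}^2 = \sum_{i}\sum_{j} r_i^2 r_j^2. \qquad (71)$$

Separating the diagonal terms from the off-diagonal terms leads to

$$\mathrm{SSR}^2 = \sum_{i} r_i^4 + \sum_{i}\sum_{j \neq i} r_i^2 r_j^2. \qquad (72)$$

Taking the expected value of both sides leads to

$$E\!\left[\mathrm{SSR}^2\right] = E\!\left[\sum_{i} r_i^4\right] + E\!\left[\sum_{i}\sum_{j \neq i} r_i^2 r_j^2\right]. \qquad (73)$$

Moving the expected values on the right side past the summations leads to

$$E\!\left[\mathrm{SSR}^2\right] = \sum_{i} E\!\left[r_i^4\right] + \sum_{i}\sum_{j \neq i} E\!\left[r_i^2 r_j^2\right]. \qquad (74)$$

Assuming that the residuals are independent, we have

$$E\!\left[r_i^2 r_j^2\right] = E\!\left[r_i^2\right]E\!\left[r_j^2\right], \quad i \neq j. \qquad (75)$$

Inserting this into (74) leads to

$$E\!\left[\mathrm{SSR}^2\right] = \sum_{i} E\!\left[r_i^4\right] + \sum_{i}\sum_{j \neq i} E\!\left[r_i^2\right]E\!\left[r_j^2\right]. \qquad (76)$$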

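We will now simplify the double summation, which can be written as

$$\sum_{i}\sum_{j \neq i} E\!\left[r_i^2\right]E\!\left[r_j^2\right] = \left(\sum_{i} E\!\left[r_i^2\right]\right)^2 - \sum_{i}\left(E\!\left[r_i^2\right]\right)^2.$$

Inserting this back into (76) leads to

$$E\!\left[\mathrm{SSR}^2\right] = \underbrace{\sum_{i} E\!\left[r_i^4\right]}_{A} + \underbrace{\left(\sum_{i} E\!\left[r_i^2\right]\right)^2}_{B} \underbrace{{}- \sum_{i}\left(E\!\left[r_i^2\right]\right)^2}_{C}. \qquad (80)$$

We split this expression into three parts ($A$, $B$, and $C$) that we will compute next in turn.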

4.5.1. Computation of Part A

Here, we will compute the term $A$ of (80),

Considering that summations cover an integer number of periods of the signal, the second, third, and fifth terms become 0. The first, fourth, and fifth summations become

,

and

respectively, leading to

4.5.2. Computation of Part B

Here, we will compute the term $B$ of (80),

We will start with the square root of this part and, after simplification, compute the square:

Moving the summation into the square brackets leads to

The summation over an integer number of periods of the sinewave results in

, leading to

Finally, computing the square, one obtains

4.5.3. Computation of Part C

Computing the square of the square brackets leads to

Moving the summation into the square bracket leads to

Using

and

in (95) leads to

Moving the factor of

leads to

4.5.4. Bringing the Three Parts Together

Adding the three parts of (80), using (84), (91) and (100) leads to

4.6. Computing the Variance

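The variance of the SSR estimation, given by (7), is the second raw moment minus the square of the mean:

$$\operatorname{Var}[\mathrm{SSR}] = E\!\left[\mathrm{SSR}^2\right] - \left(E[\mathrm{SSR}]\right)^2.$$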

Inserting (102) and (69) into this leads to

We have now derived analytical expressions for the mean and variance of the SSR estimation, given by Equations (69) and (105), respectively.

6. Validation Using Numerical Simulations

At this point in the presentation, the two derived analytical expressions will be put to the test by numerically simulating a set of data points that are corrupted by phase noise, fitting them to the sinusoidal model described above, determining the resultant residuals, and computing their root mean square value. Three parameters are going to be varied in this study, namely the injected phase noise standard deviation, the number of data points, and the sinusoidal amplitude, all of which are parameters that these two analytical expressions depend on. In general, for each case, we will present the average and the standard deviation of the RMS estimation, together with the values given by the analytical expressions derived here. The numerically obtained values are presented in a graphical form using error bars, which correspond to a confidence interval computed for the confidence level of 99.9%. The values obtained with the analytical expressions will be plotted in the same charts using a solid line. The goal is to have all the error bars located around the solid lines. As we will see, this happens in almost all instances, showcasing the range of validity of the analytical expressions derived. A second set of charts will present the difference between the numerical simulation values and the ones given by the analytical expressions derived here.
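The validation procedure described above can be sketched as follows. This is a minimal illustration and not the simulation code used to produce the figures: it assumes coherent sampling with unit sampling interval, independent Gaussian phase noise on each sample, and an RMS defined as the square root of the SSR divided by the number of samples. The function names, the seed, and the default values are assumptions.

```python
import math
import random
import statistics


def residual_rms(y, omega):
    """Fit offset and in-phase/in-quadrature amplitudes, return residual RMS."""
    m = len(y)
    offset = sum(y) / m
    a_i = 2.0 / m * sum(v * math.cos(omega * i) for i, v in enumerate(y))
    a_q = 2.0 / m * sum(v * math.sin(omega * i) for i, v in enumerate(y))
    ssr = 0.0
    for i, v in enumerate(y):
        fitted = offset + a_i * math.cos(omega * i) + a_q * math.sin(omega * i)
        ssr += (v - fitted) ** 2
    return math.sqrt(ssr / m)


def simulate_rms(amplitude, sigma, m=100, periods=7, reps=200, seed=4):
    """Mean and standard deviation of the residual RMS over `reps` records."""
    rng = random.Random(seed)
    omega = 2.0 * math.pi * periods / m
    values = []
    for _ in range(reps):
        y = [
            amplitude * math.cos(omega * i + 0.3 + rng.gauss(0.0, sigma))
            for i in range(m)
        ]
        values.append(residual_rms(y, omega))
    return statistics.mean(values), statistics.stdev(values)


rms_mean, rms_std = simulate_rms(amplitude=3.0, sigma=0.5)
print(rms_mean, rms_std)
```

Sweeping `sigma`, `m`, or `amplitude` in a loop around `simulate_rms` reproduces the three studies described in this section; the analytical expressions would then be evaluated at the same parameter values for comparison.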

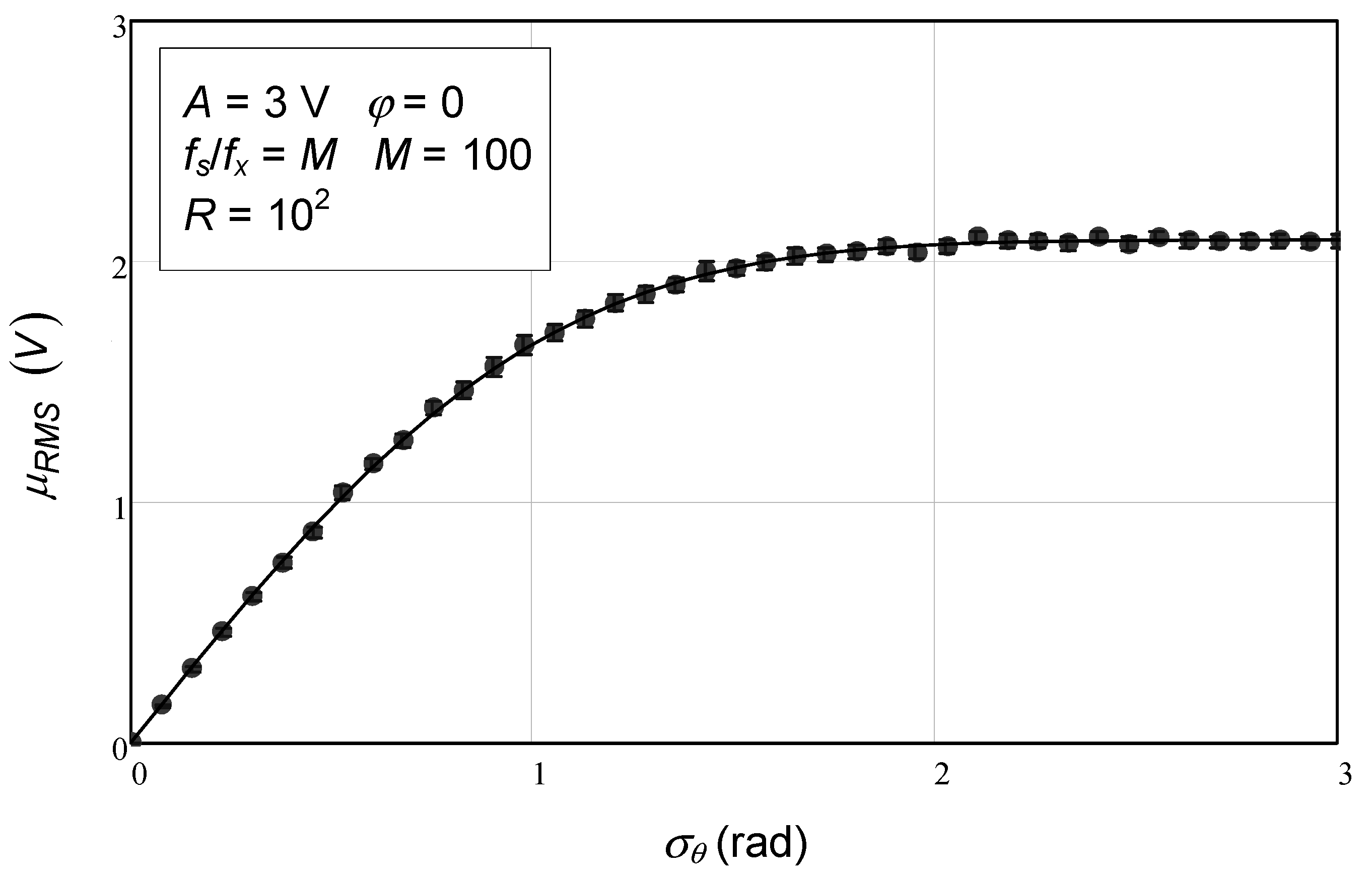

The first study presented here concerns the use of different amounts of injected phase noise. In Figure 1, we observe the mean values of RMS estimation as a function of phase noise standard deviation over a range up to 3 rad. In this instance, the error bars are so short that they are practically invisible. In any case, the circles representing the middle point of the error bars closely follow the values given by the analytical expression. In this example, a sinusoidal amplitude of 3 V and 100 samples (M) were used. Note that the number of simulation repetitions used to compute the error bars (R) was, in this case, 100. The more repetitions are used, the shorter the error bars become, but the longer the numerical simulation takes.
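The trade-off between the number of repetitions and the length of the error bars can be quantified with a short sketch. It assumes that the error bars are normal-approximation confidence intervals for a mean, with half-width equal to the normal quantile times the sample standard deviation divided by the square root of the number of repetitions; how the intervals were actually computed is not stated here, so this is an assumption, as is the function name.

```python
import math
from statistics import NormalDist


def ci_half_width(sample_std, reps, level=0.999):
    """Half-width of a two-sided normal confidence interval for a mean."""
    z = NormalDist().inv_cdf(0.5 + level / 2.0)
    return z * sample_std / math.sqrt(reps)


# Same scatter, ten times more repetitions.
width_100 = ci_half_width(1.0, 100)
width_1000 = ci_half_width(1.0, 1000)
print(width_100, width_1000, width_100 / width_1000)
```

Under this assumption, going from 100 to 1000 repetitions shortens the error bars by a factor of about 3.16 (the square root of 10) at ten times the simulation cost.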

In this figure, we confirm the complete agreement between the numerical simulations and the theoretical expression (116). Furthermore, in Figure 2, we plot the difference between the values from numerical simulation and the ones from the analytical expression. Once again, complete agreement is observed, since in this instance all error bars are around 0, which shows that the analytical expression accurately reproduces the relationship between the phase noise or jitter standard deviation and the expected value of the estimated RMS value of the residuals.

In Table 1, one can see the theoretical and numerical mean values of RMS for some of the phase noise standard deviation cases.

The range of values tried in the numerical simulation is far greater than the ones shown here; however, since no substantive difference in conclusions is warranted, and for the sake of conciseness, we choose to present just a few illustrative cases.

In

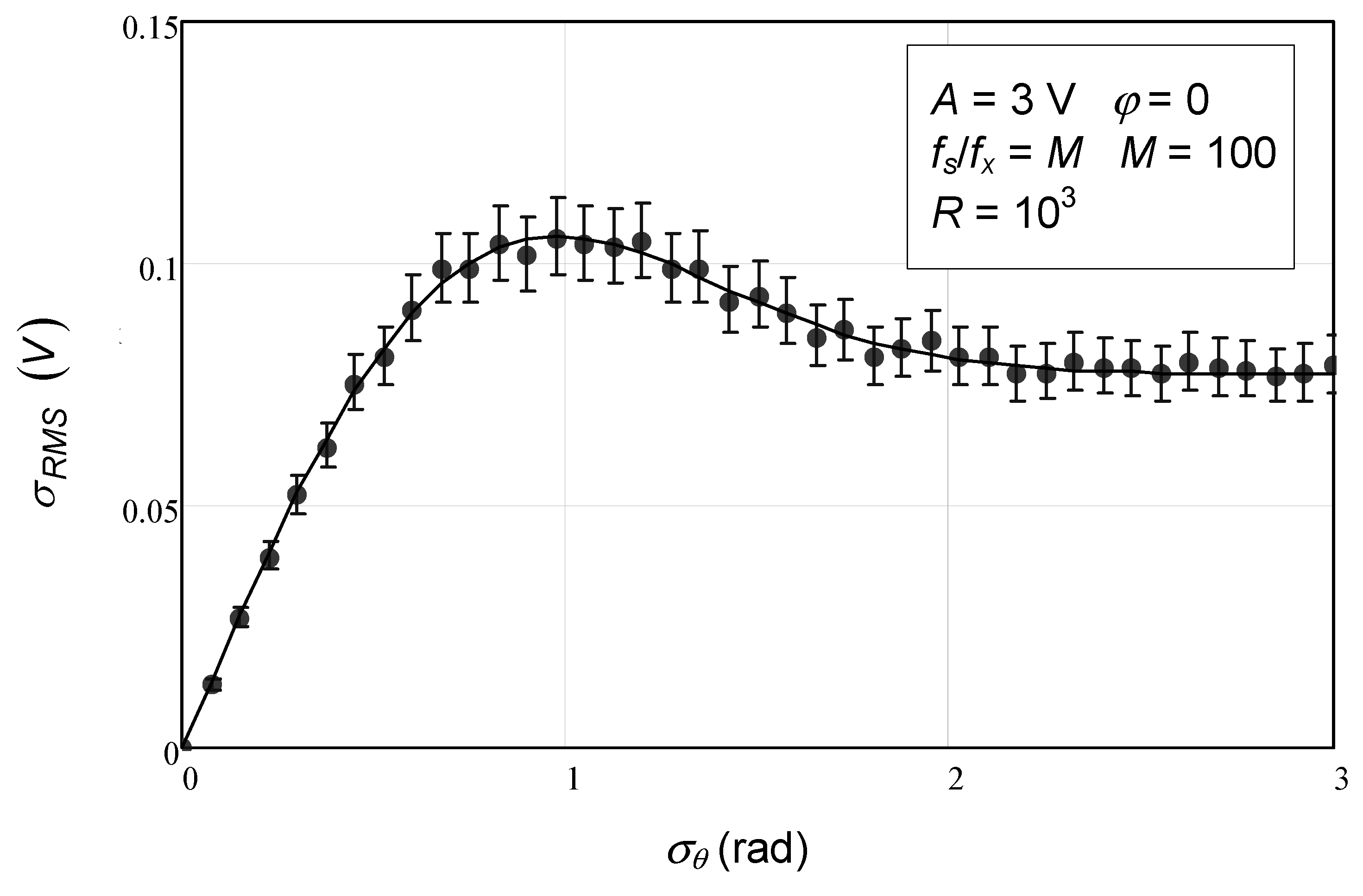

Figure 3 and

Figure 4, we observe the equivalent results for the estimated RMS standard deviation. In this case, the theoretical expression is the one presented in (120). Again, complete agreement is found. In

Figure 3, one can observe the theoretical expression depicted with the solid line and the error bars from the numerical simulations made with 1000 repetitions.

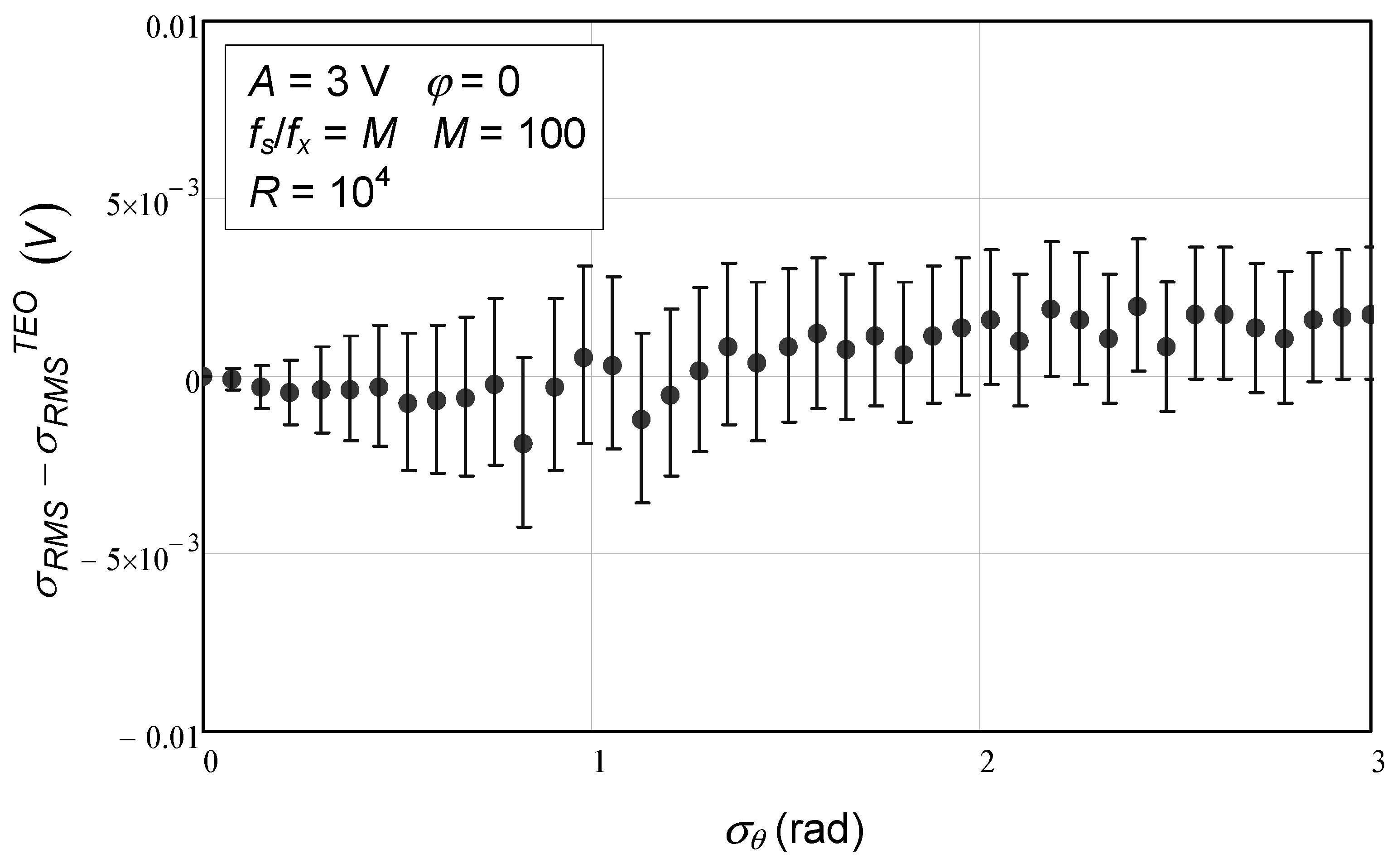

In Figure 4, one can see the difference between the analytical expression and the numerical simulation results. The error bars are very close to 0, which means that the analytical expression for the standard deviation of the RMS value of the residuals provides an excellent approximation. It is not exact because it relies on a Taylor series approximation with just one term.

In Table 2, one can see the theoretical and numerical standard deviation values of RMS estimation for some of the phase noise standard deviation cases.

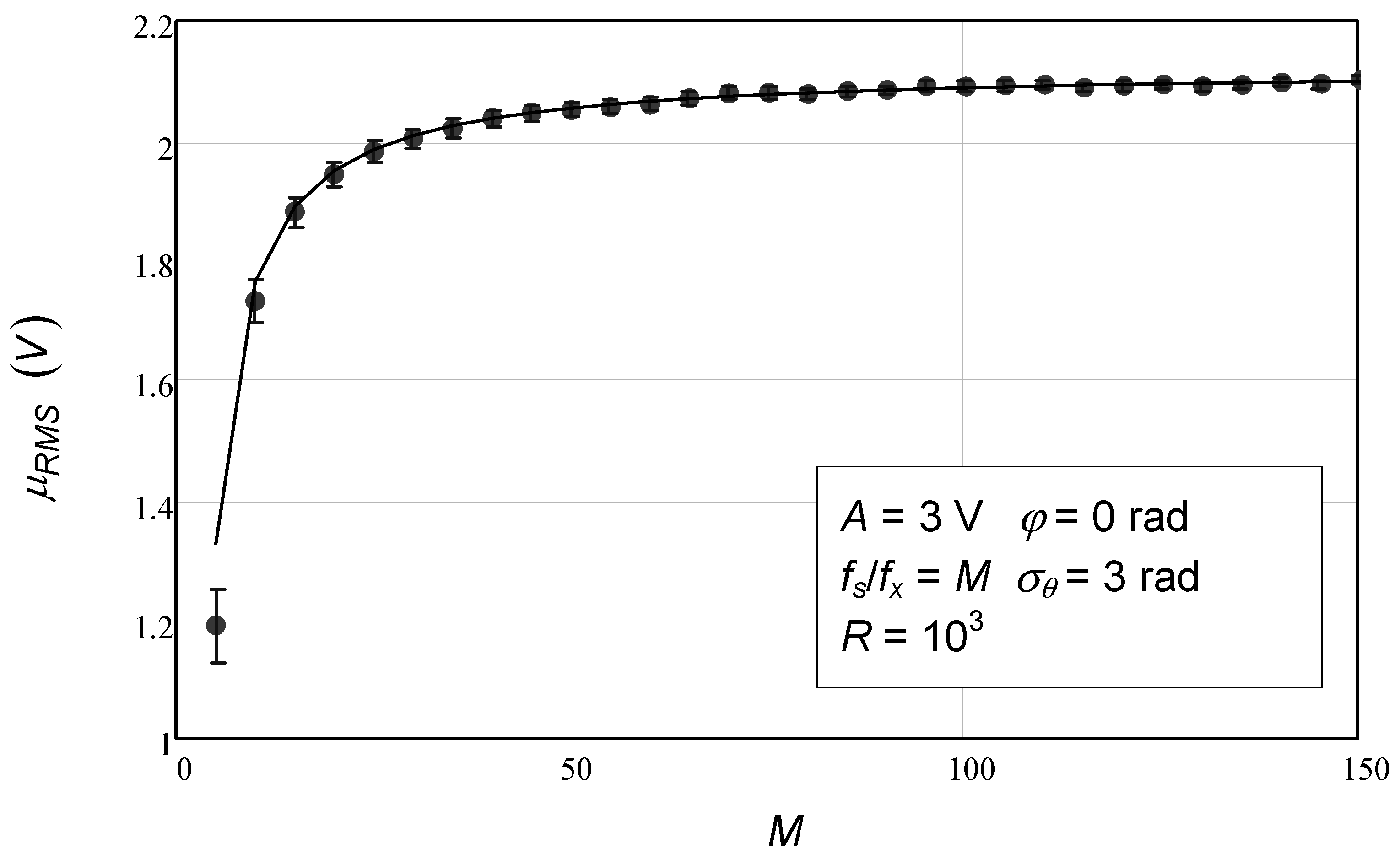

In the next set of charts, the number of samples was varied from 5 to 150, and the phase noise standard deviation was kept constant at 3 rad. Other values were also used, but the conclusions are the same, and so we refrain from showing them here.

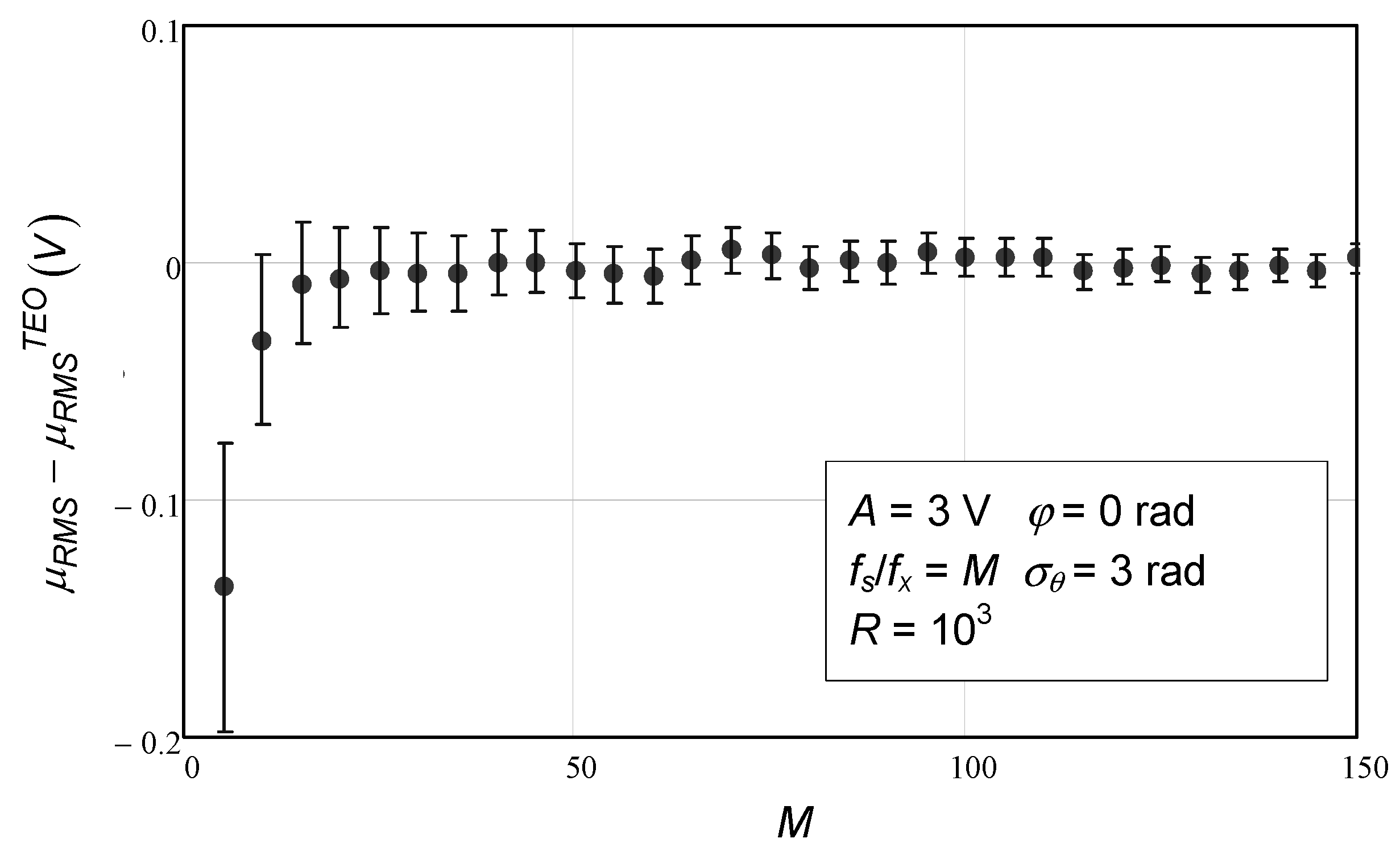

Figure 5 shows the mean value of the RMS value estimation as a function of the number of samples. The agreement between numerical simulation values and the analytical expression (solid line) is strong, except when the number of data points is very low (5). Recall that some approximations were performed during the theoretical derivation in order to obtain analytical expressions that were as simple as possible but still fairly accurate.

Figure 6 shows the difference between numerical simulation values and the ones provided by the analytical expression for varying numbers of data points. Again, we see that for a very small number of data points, the difference is not negligible.

In

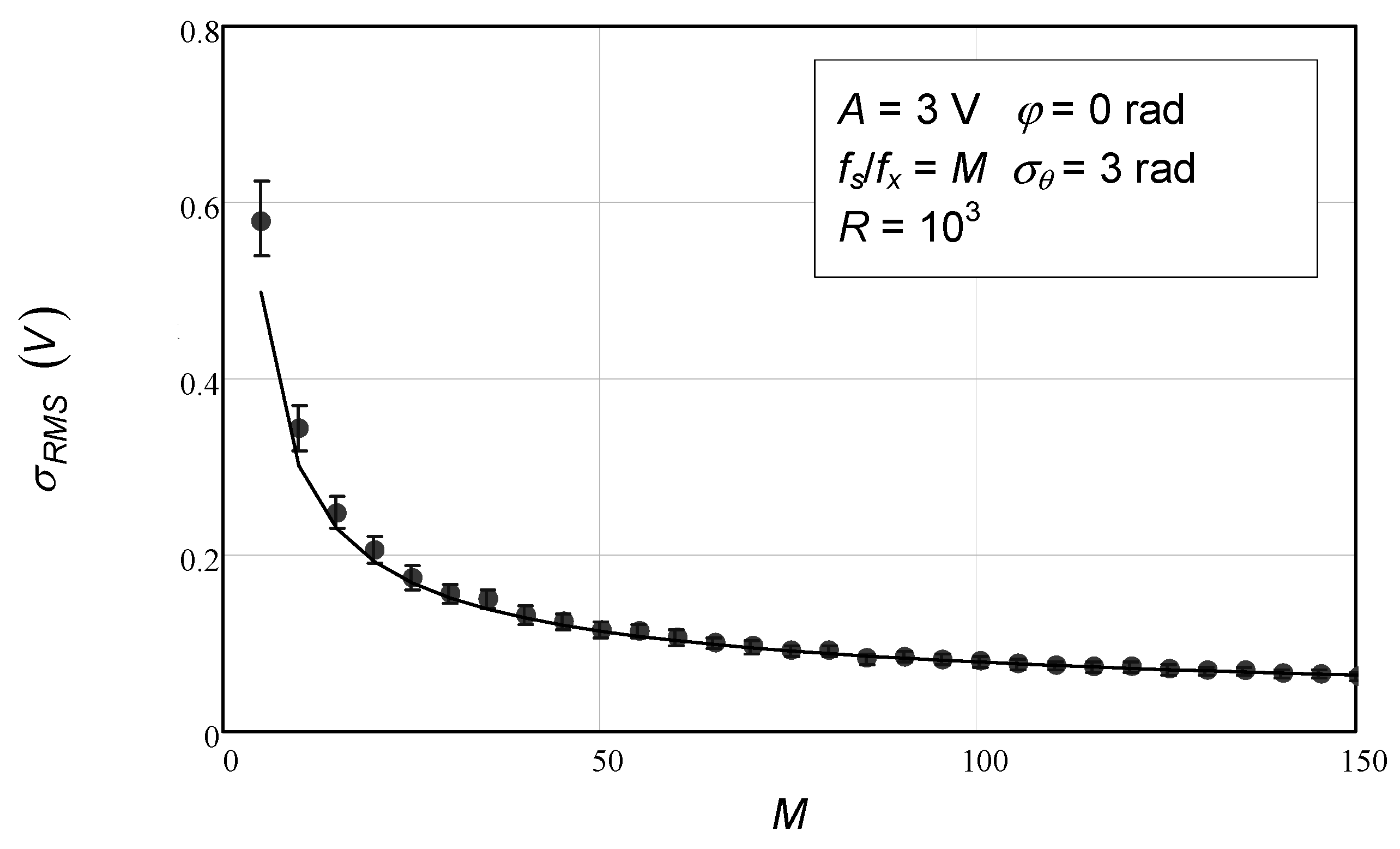

Figure 7 and

Figure 8, we see the behavior of the standard deviation of the RMS value estimation as a function of the number of data points. Again, strong agreement is found, except for the first data points that correspond to a very low number of samples. In

Figure 7, we see the standard deviation of the RMS value of the residuals as a function of the number of data points. The solid line, corresponding to the theoretical expression, lies around the error bars, which in some cases (large number of samples) are so small that they become invisible, and only the middle point (circles) can be observed. The exception is in the first four data points.

In Figure 8, we observe the difference between numerical simulation values and theoretical ones. Here, we can observe more clearly the discrepancy in the first four data points. As expected, the smaller the number of data points, the worse the Taylor series approximation. One could have used more terms of the Taylor series to achieve a better agreement, but that would lead to a more complex and cumbersome analytical expression.
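The way a one-term Taylor approximation degrades for short records can be illustrated with a stand-in problem that has an exact answer. The sketch below replaces the phase-noise residuals with independent unit-variance Gaussian samples, for which the RMS follows a scaled chi distribution, and compares the exact standard deviation of the RMS with the first-order (delta-method) value obtained by linearizing the square root around the mean square. This is an analogy chosen for illustration, not the derivation used in this work, and the function names are assumptions.

```python
import math


def exact_rms_std(m):
    """Exact std of the RMS of m unit-variance Gaussian samples (chi_m / sqrt(m))."""
    log_ratio = math.lgamma((m + 1) / 2.0) - math.lgamma(m / 2.0)
    mean_chi = math.sqrt(2.0) * math.exp(log_ratio)
    var_chi = m - mean_chi ** 2
    return math.sqrt(var_chi / m)


def first_order_rms_std(m):
    """One-term Taylor value: std of the mean square divided by 2*sqrt(mean)."""
    mean_square_std = math.sqrt(2.0 / m)  # the mean square has mean 1, variance 2/m
    return 0.5 * mean_square_std


def relative_error(m):
    return first_order_rms_std(m) / exact_rms_std(m) - 1.0


for m in (5, 10, 50, 150):
    print(m, exact_rms_std(m), first_order_rms_std(m), relative_error(m))
```

In this stand-in case the one-term approximation overestimates the standard deviation by a few percent at 5 samples and by well under one percent at 150 samples, the same qualitative trend as the discrepancy seen for the smallest records.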

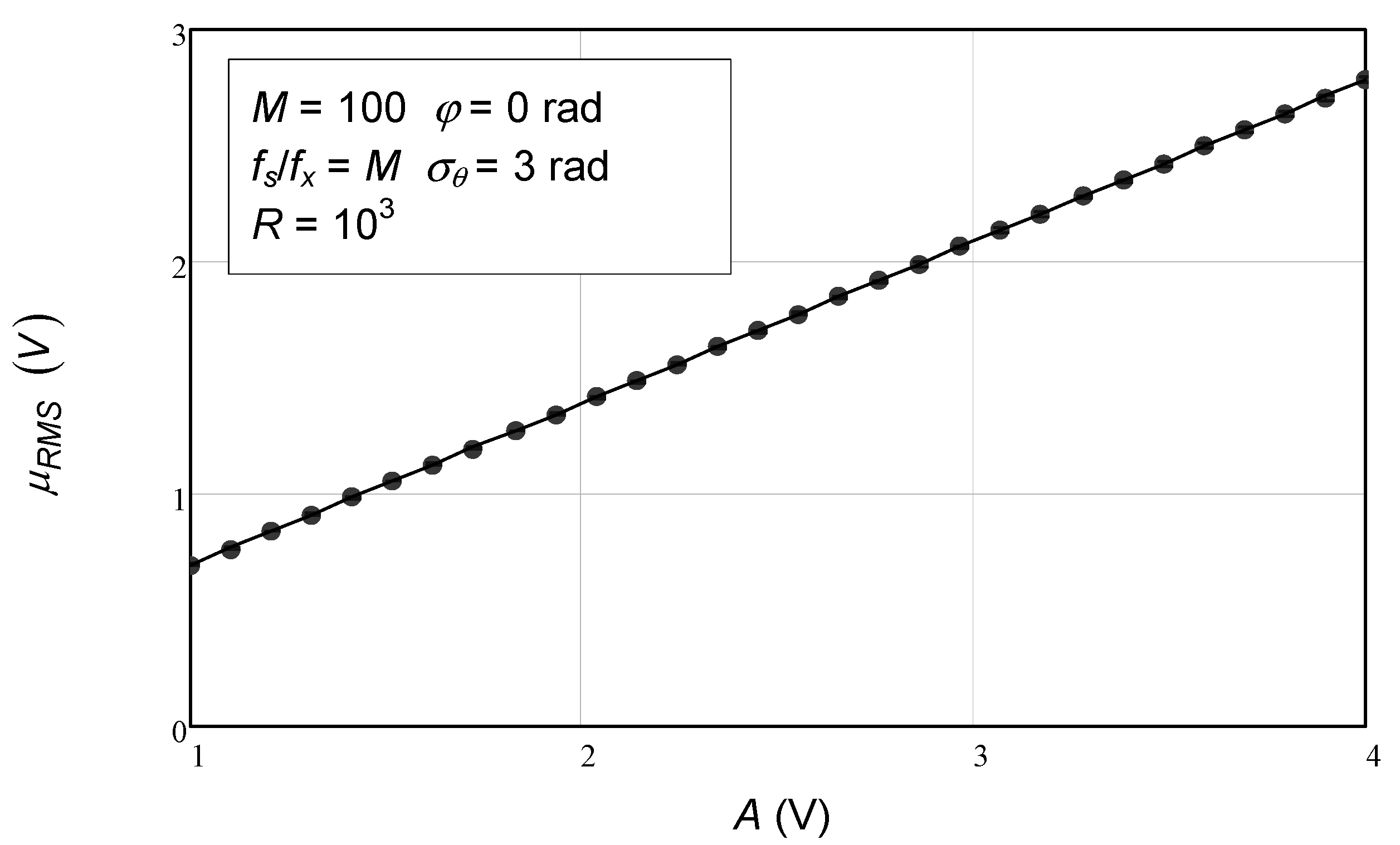

The final set of numerical simulation results pertains to varying sinusoidal amplitude. In Figure 9, we observe the linear behavior provided by the analytical expression for the dependence of the mean value of the estimated residual RMS on the sinusoidal amplitude, as expressed by Equation (116). Again, for the number of repetitions used, R = 1000, the error bars for the numerical simulation results are invisible, and only the middle points (circles) are visible. As observed, all the circles are on top of the solid line. Note that this simulation was made for 100 samples. As mentioned before, if the number of samples is less than 10, the agreement deteriorates significantly.

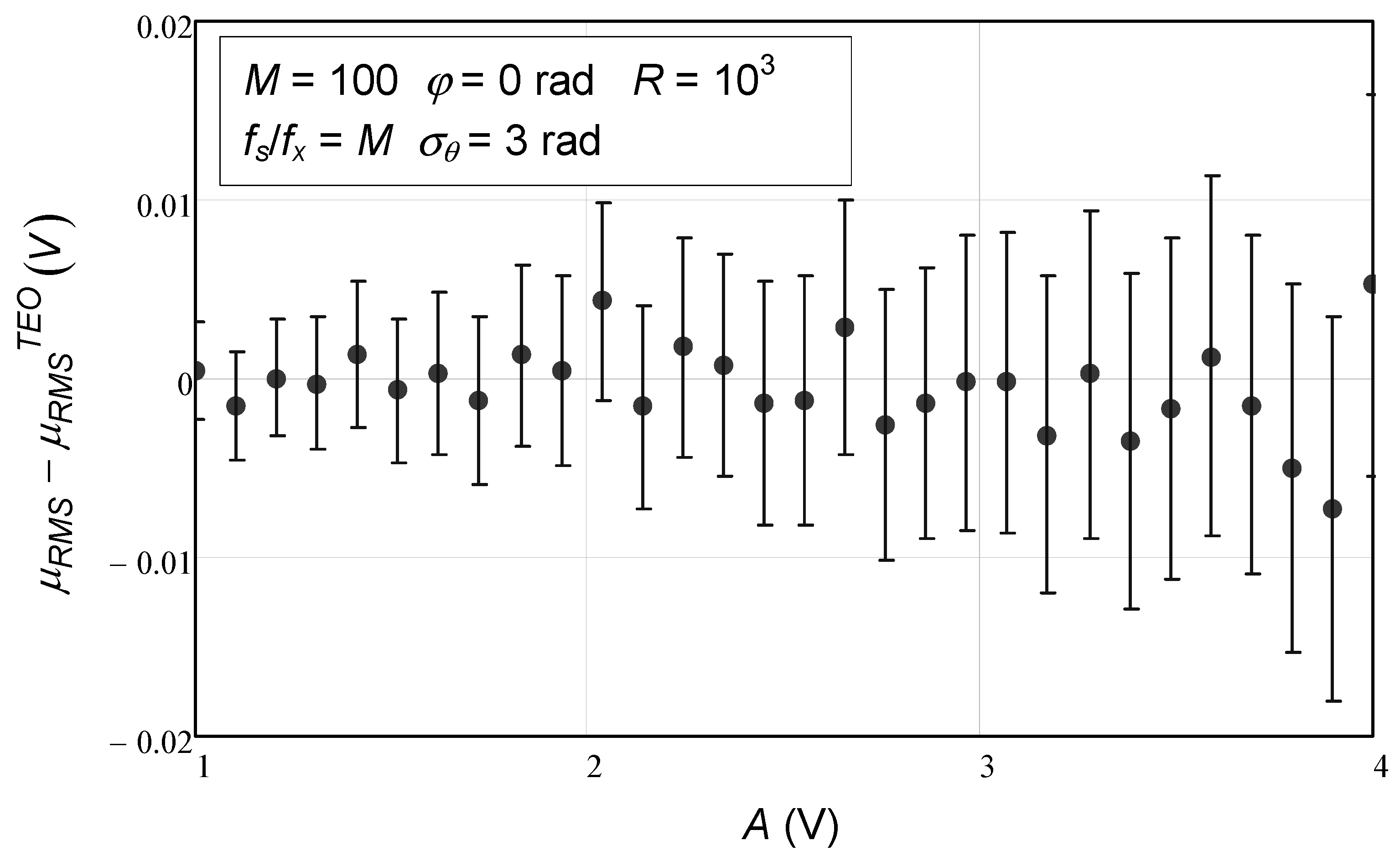

In Figure 10, we see more clearly that the numerical simulation values and the analytical expression agree for different values of sinusoidal amplitude, since all the error bars are located around 0 for this specific case, where M = 100 samples were used.

In

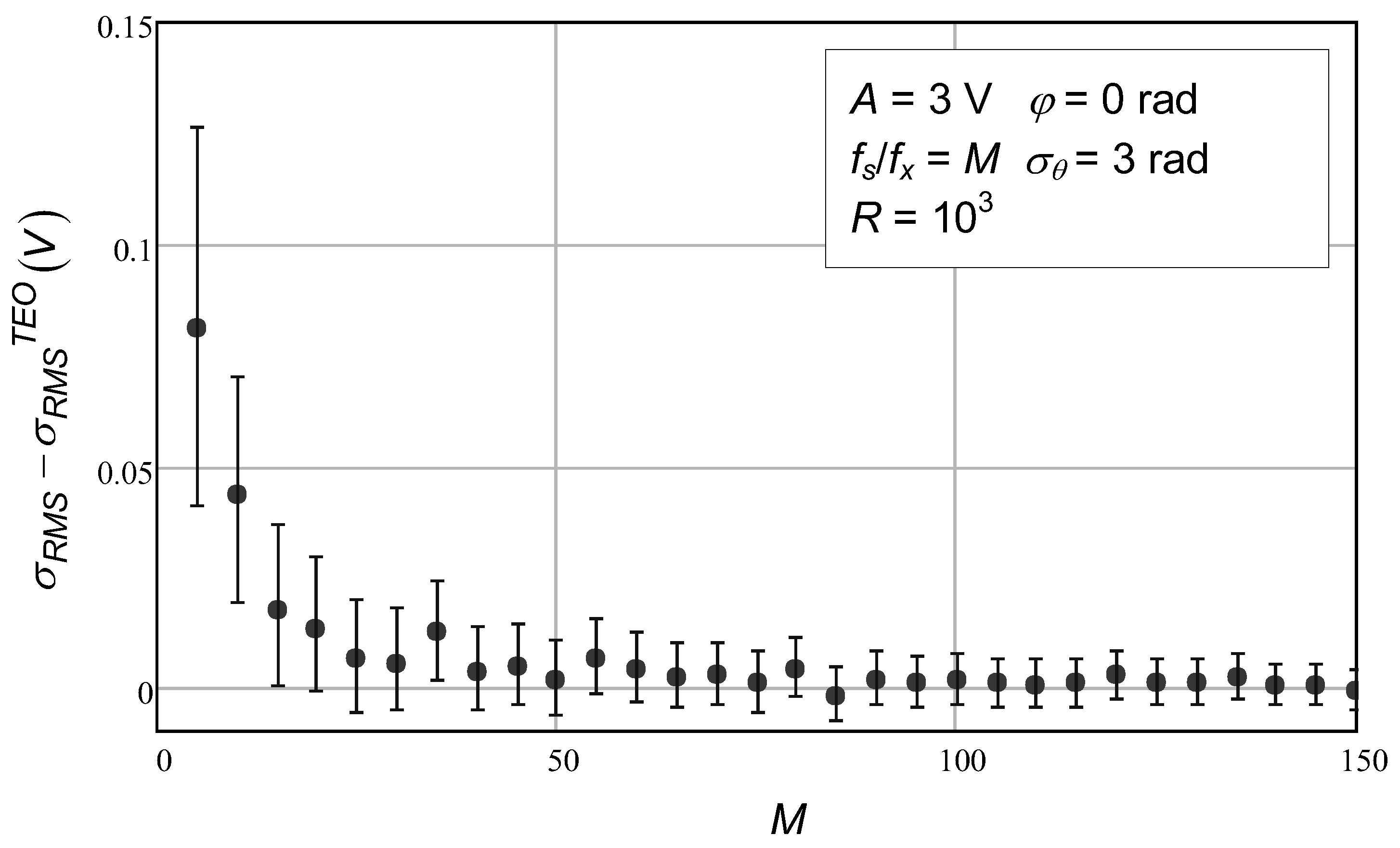

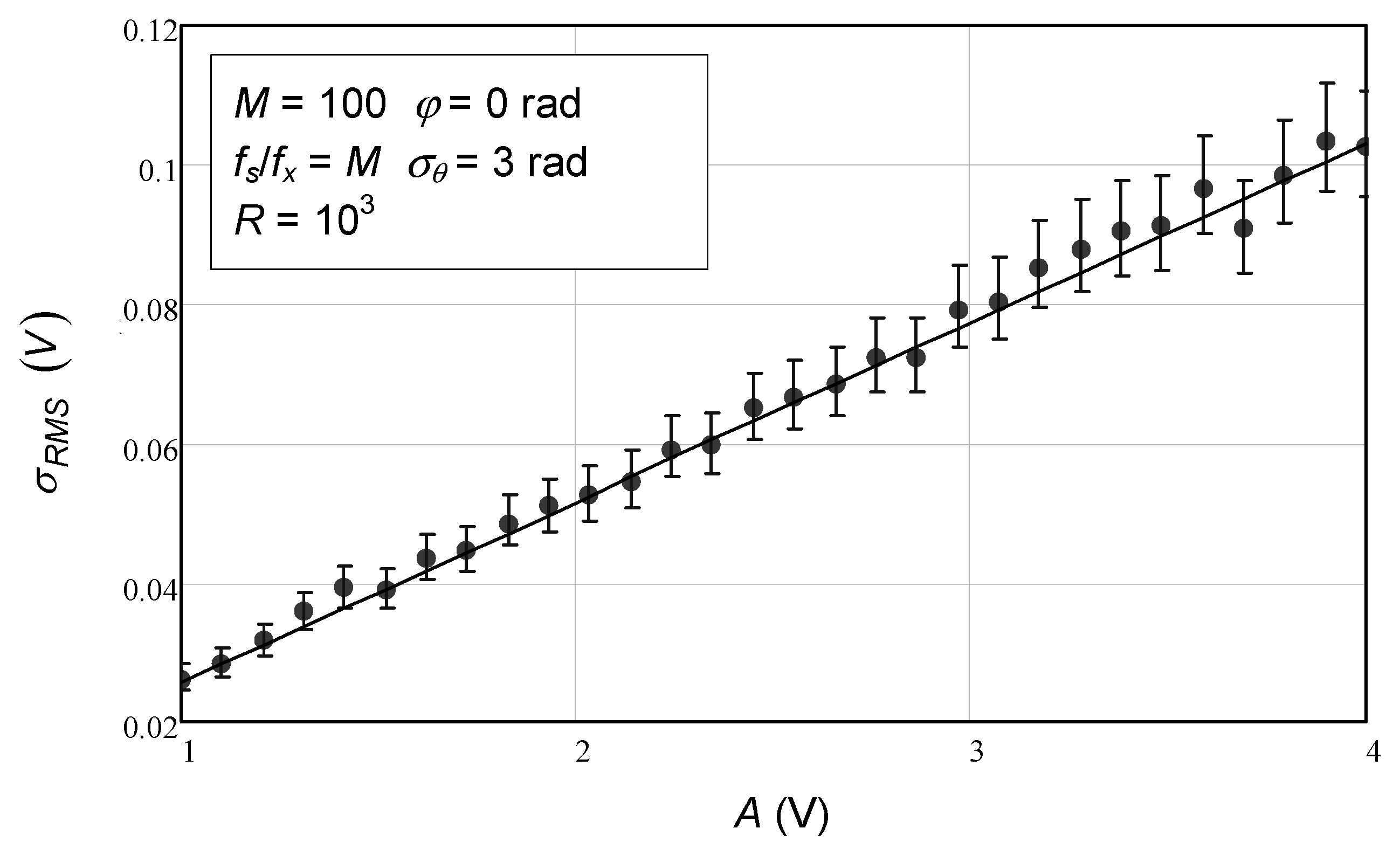

Figure 11 and

Figure 12, we see the last set of results that pertain to the RMS estimation standard deviation as a function of the sinusoidal amplitude. Again, the agreement with the theoretical expression derived here and presented in Equation (120) is strong. In

Figure 11, all the vertical bars corresponding to the numerical simulation results are on top of the solid line representing the analytical expression.

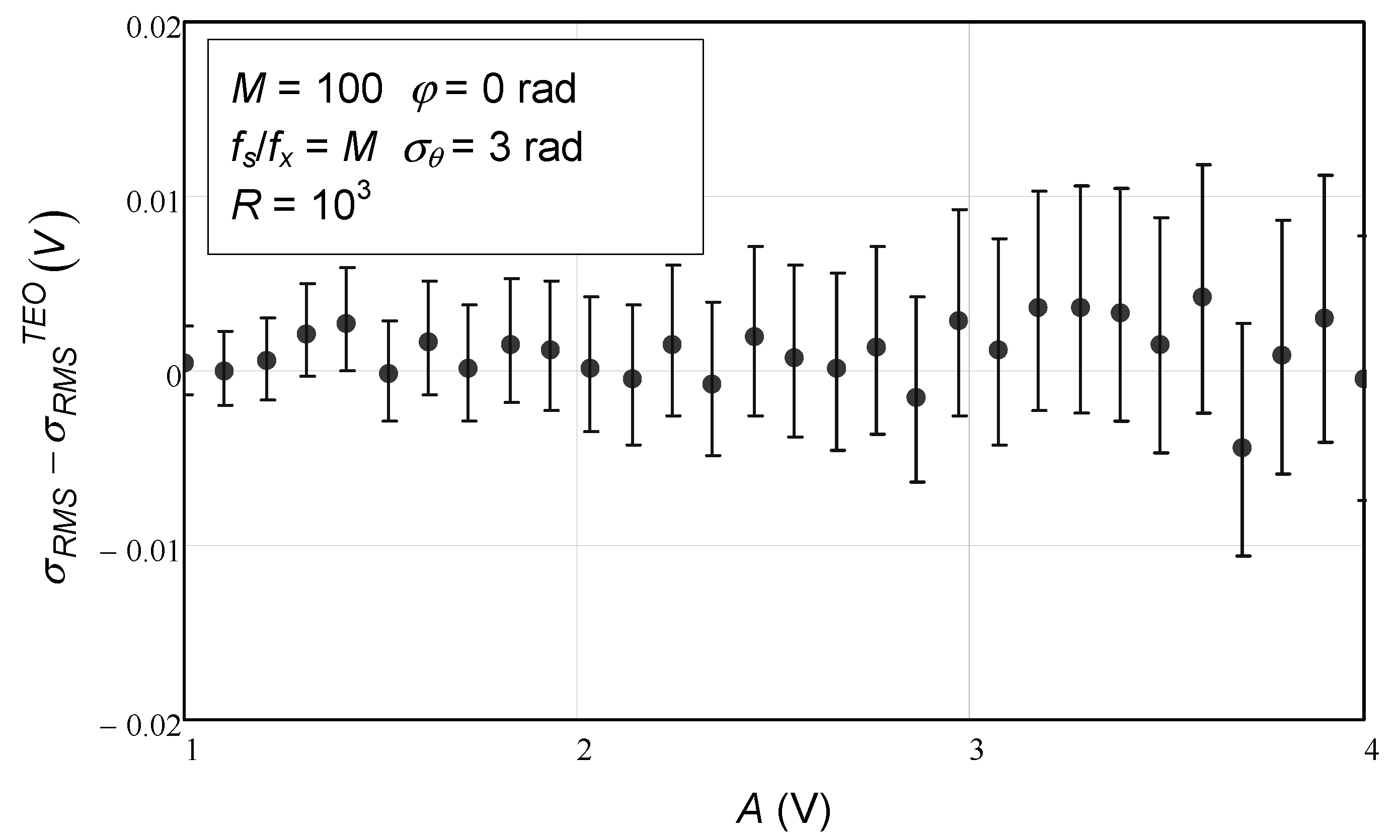

In Figure 12, the difference between the theoretical values and those from the numerical simulations is more clearly seen. As expected, the error bars are all around 0.

The numerical simulation results presented in this section completely validate the analytical derivations presented in this work. This provides assurance to the engineer that the two analytical expressions obtained (for the mean and for the standard deviation of RMS value estimation) can be used with confidence.

7. Conclusions

This paper has developed a theoretical framework to understand and quantify the influence of phase noise and sampling jitter on sinusoidal fitting residuals. The derivation of closed-form analytical expressions for the mean and variance of the root mean square (RMS) estimation of sine-fitting residuals is a lasting contribution to the measurement science literature and furthers the knowledge of measurement uncertainty when considering either generator phase noise or sampling jitter. The numerical validation through Monte Carlo simulations provides considerable confidence that the derived expressions accurately represent the actual behavior of the residuals over diverse operating conditions. The agreement between the analytical predictions and the simulations, shown for varying amounts of phase noise, different numbers of data points, and different signal amplitudes, illustrates that the developed theory has wide applicability for real-world measurement purposes. Additionally, the validation confirmed that the assumptions and approximations made during the analytical derivation were reasonable for the intended domain of application. The theory nevertheless rests on assumptions that limit its domain of application; in particular, the agreement deteriorates when the number of data points is very small.

In the future, the results presented here can be used as a starting point to study the uncertainty of other estimators that are based on sine-fitting residuals, like signal-to-noise ratio, noise floor, or effective number of bits, for example.

As a final comment, the author wants to reiterate that the knowledge of the standard deviation value of an estimator is important for an engineer who, when performing a measurement or numerical estimation of some kind where random phenomena are at play, must always specify the confidence intervals for their estimates.