Data Fusion of Scanned Black and White Aerial Photographs with Multispectral Satellite Images

Abstract

1. Introduction

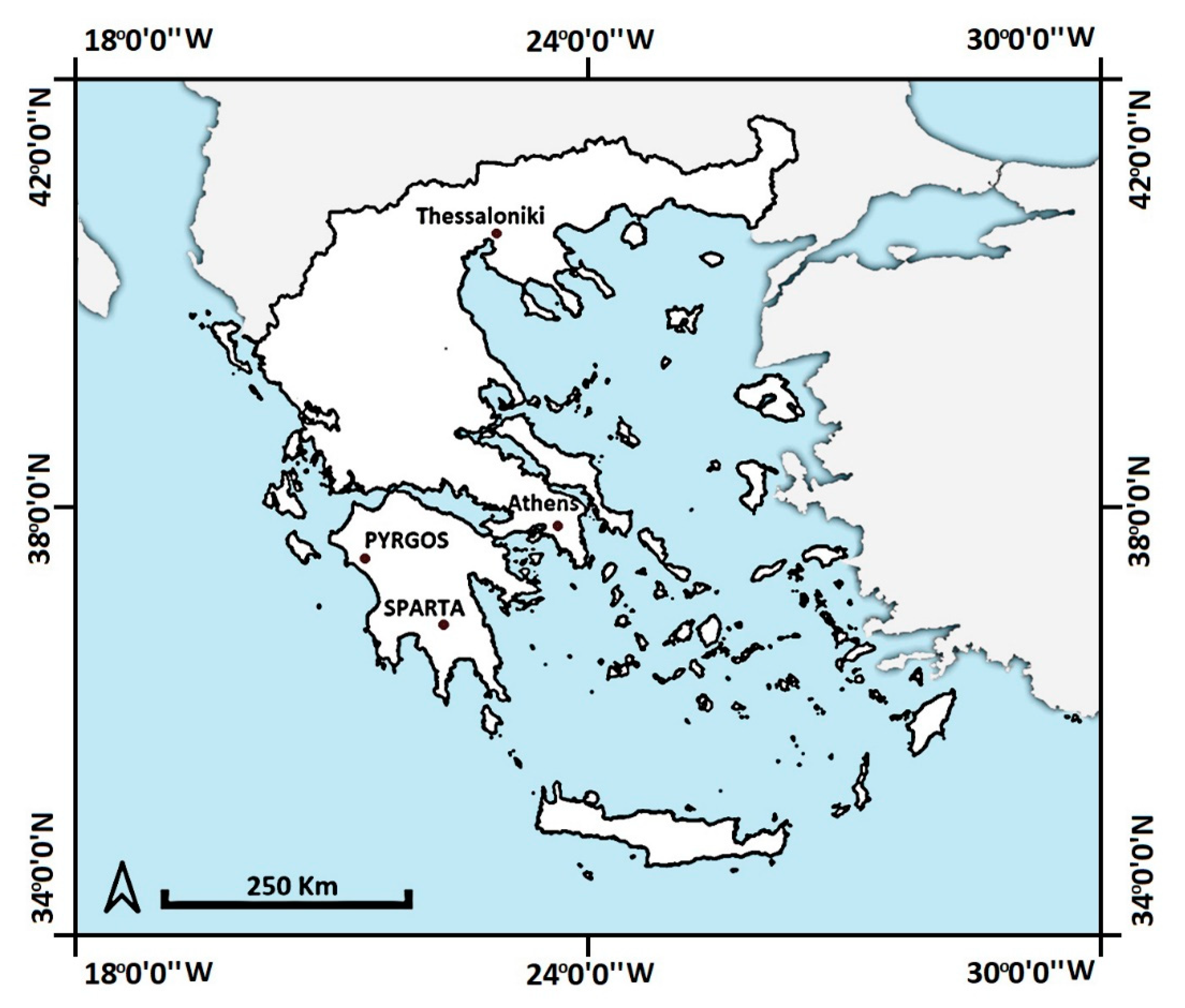

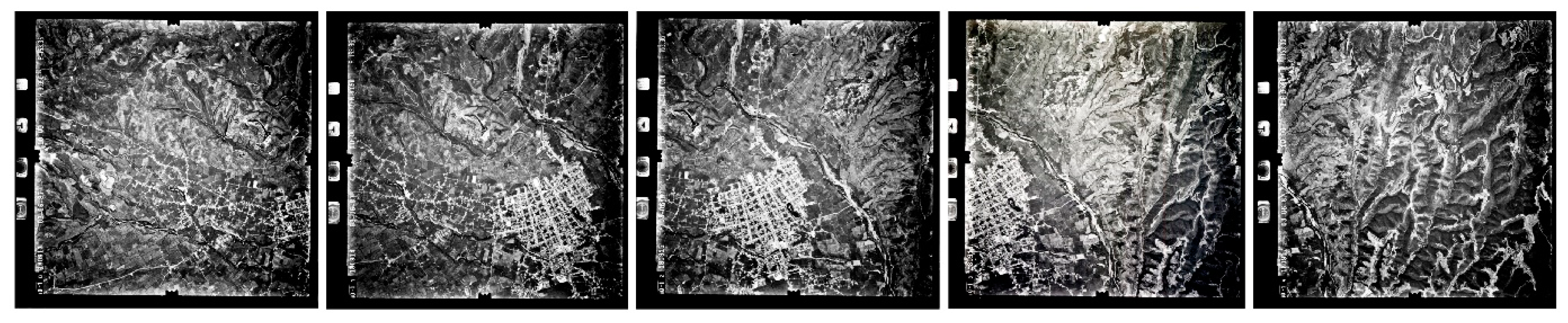

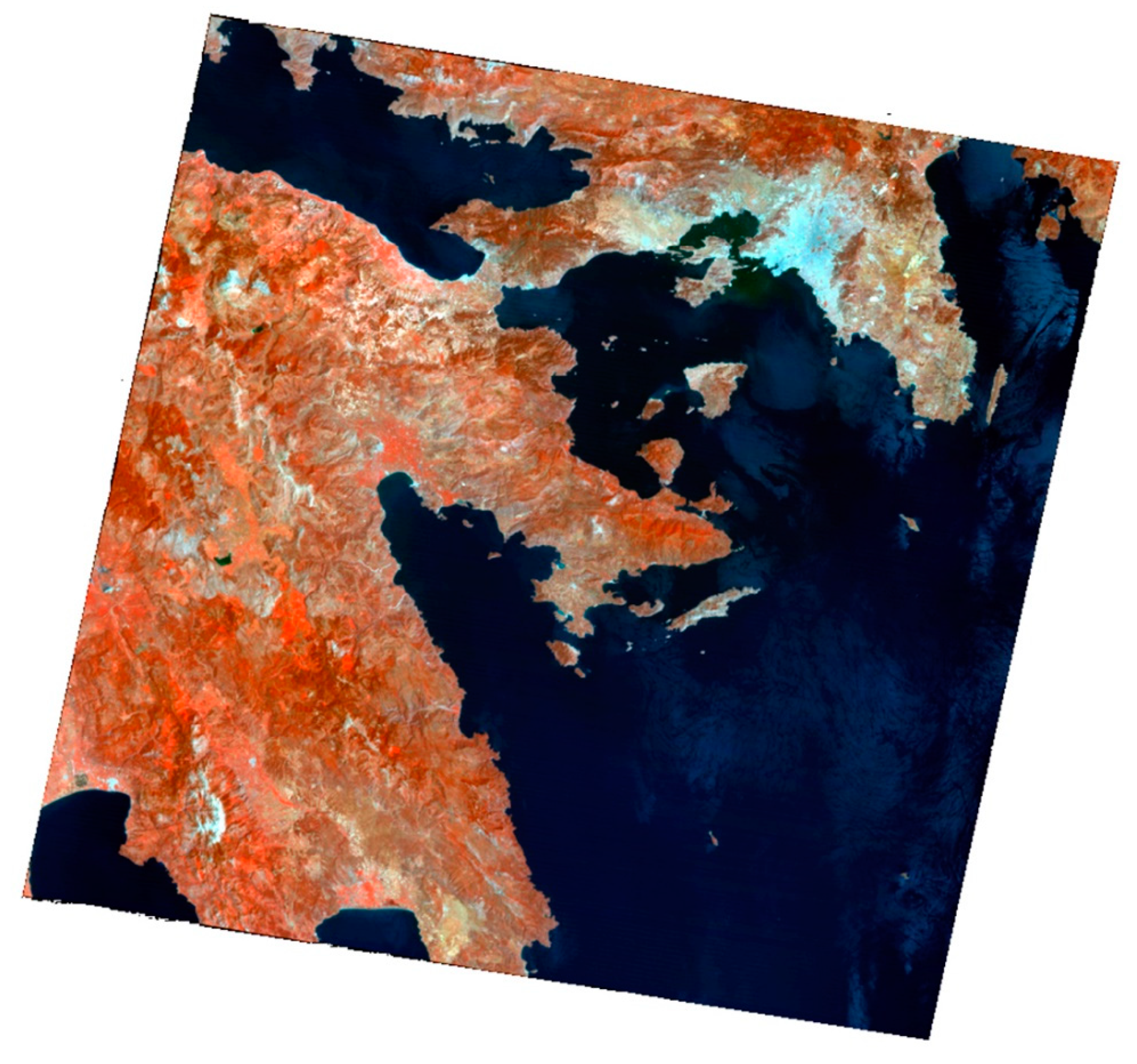

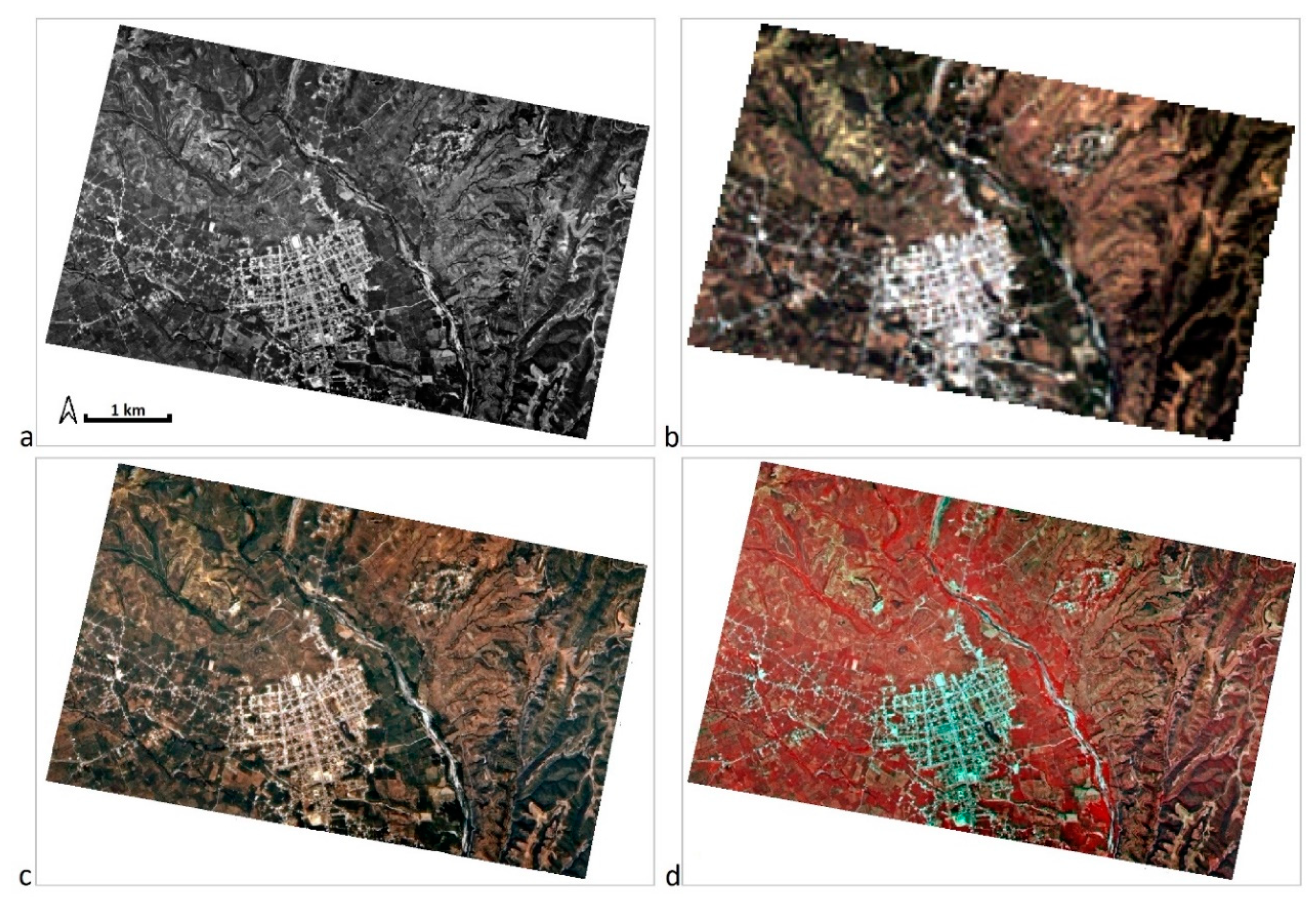

2. Data

3. Methodology and Processing of Data/Products

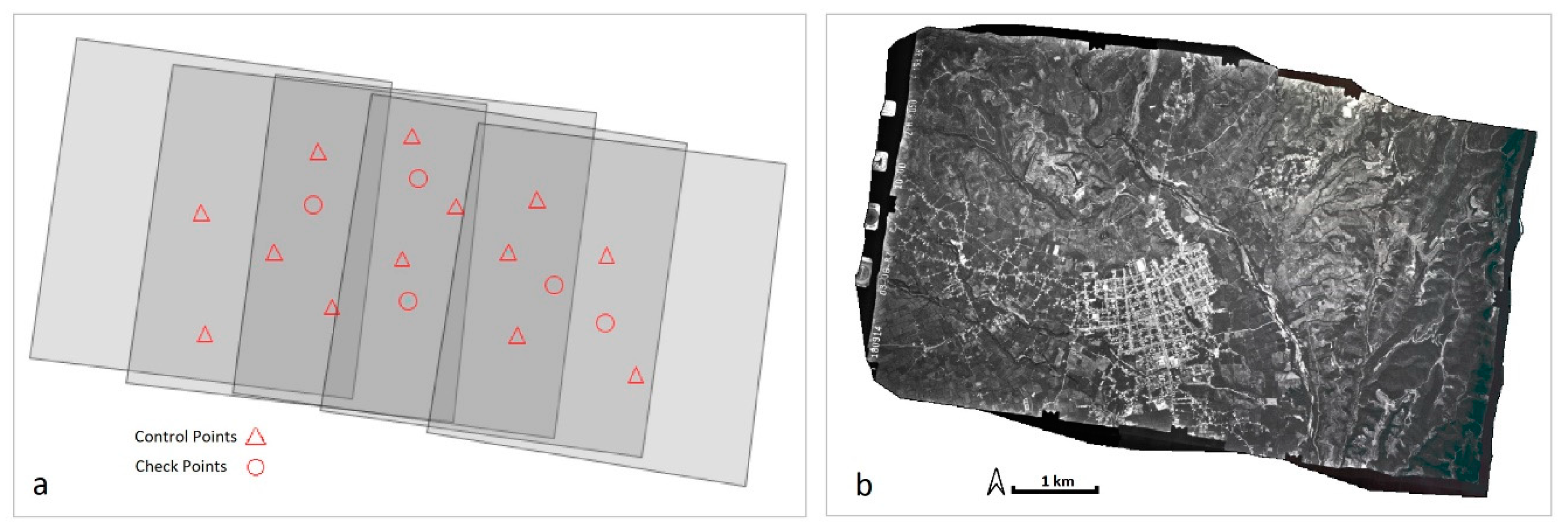

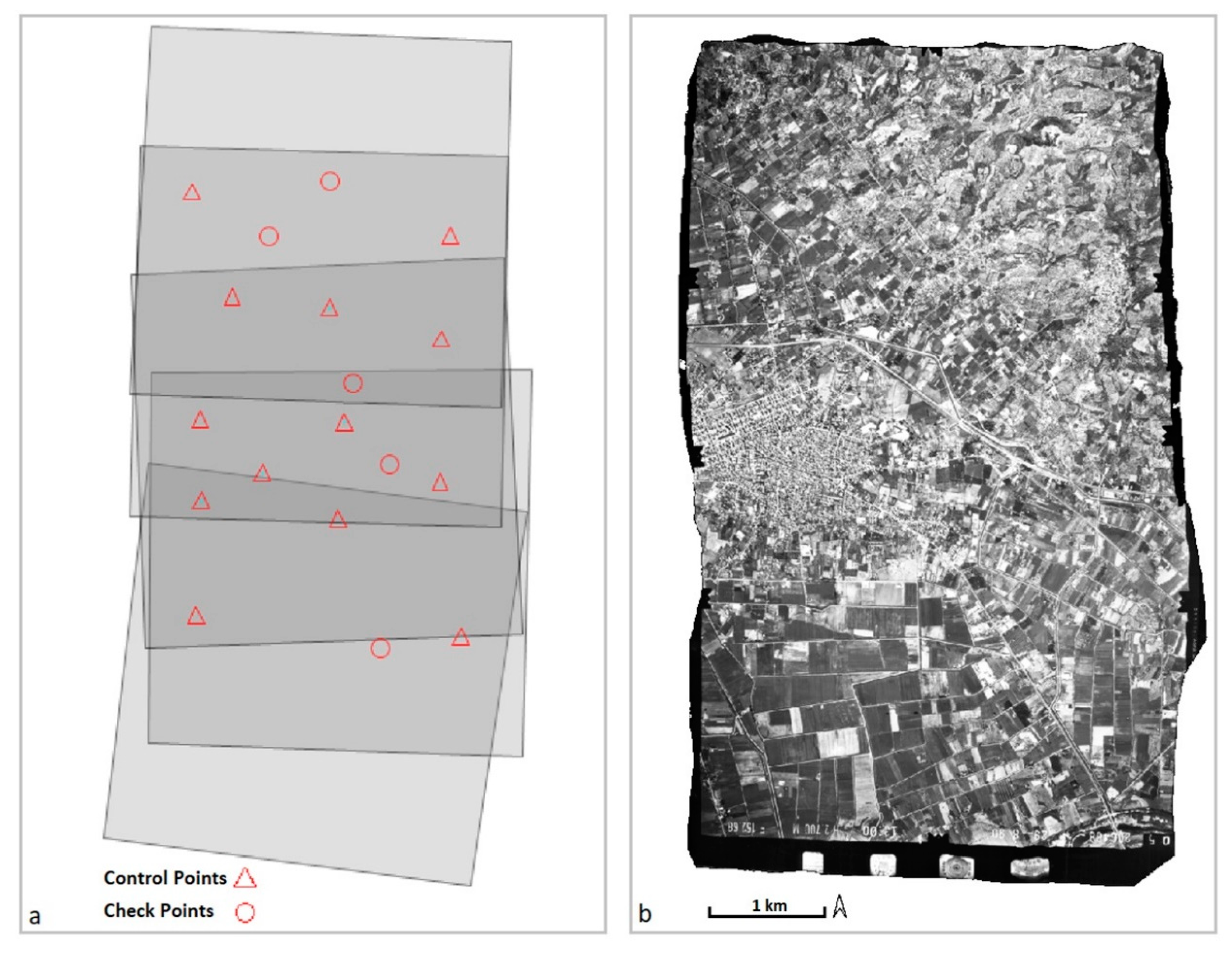

3.1. Aerial Triangulation

3.2. Geometric Correction of Satellite Images

3.3. Fusion of Images

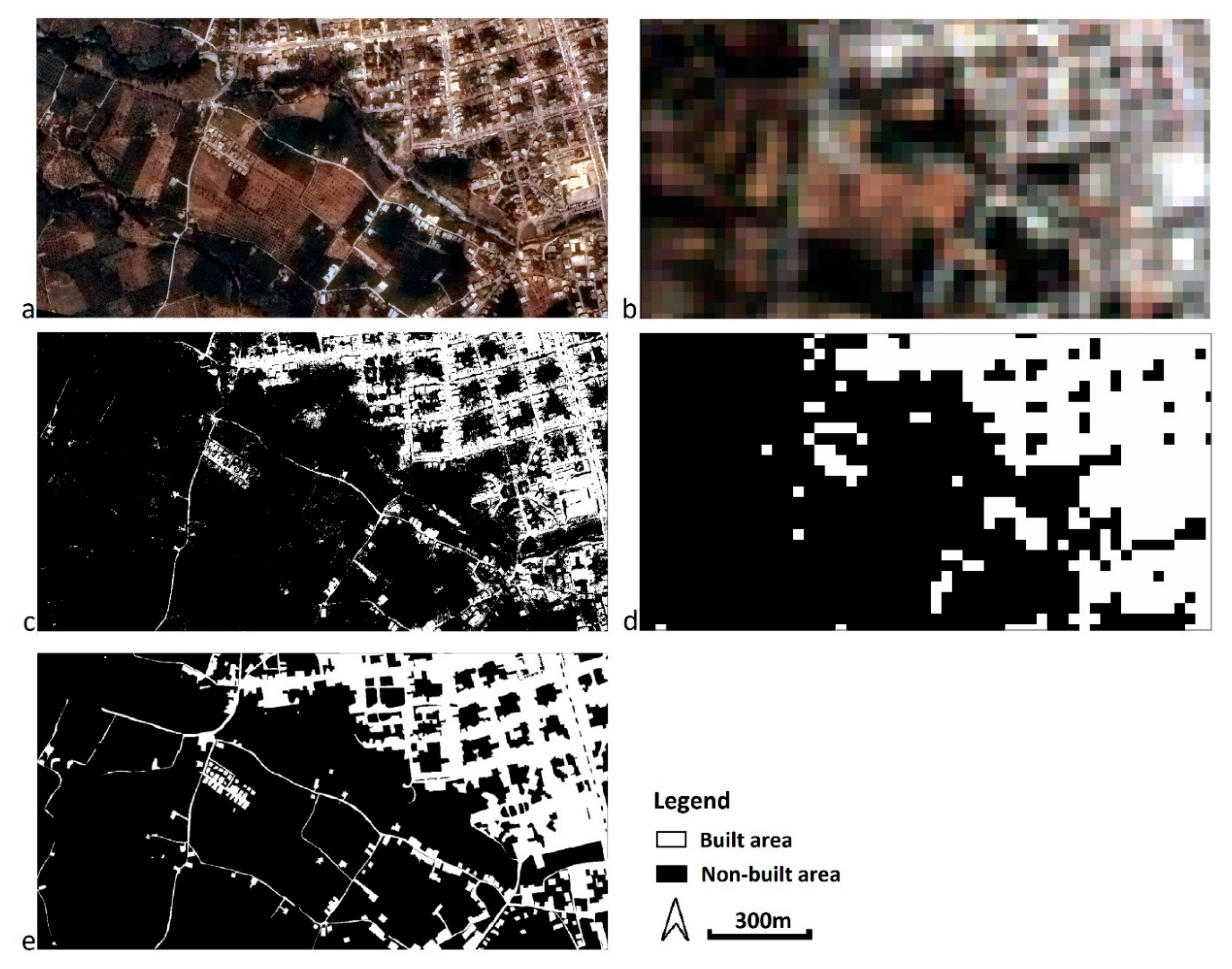

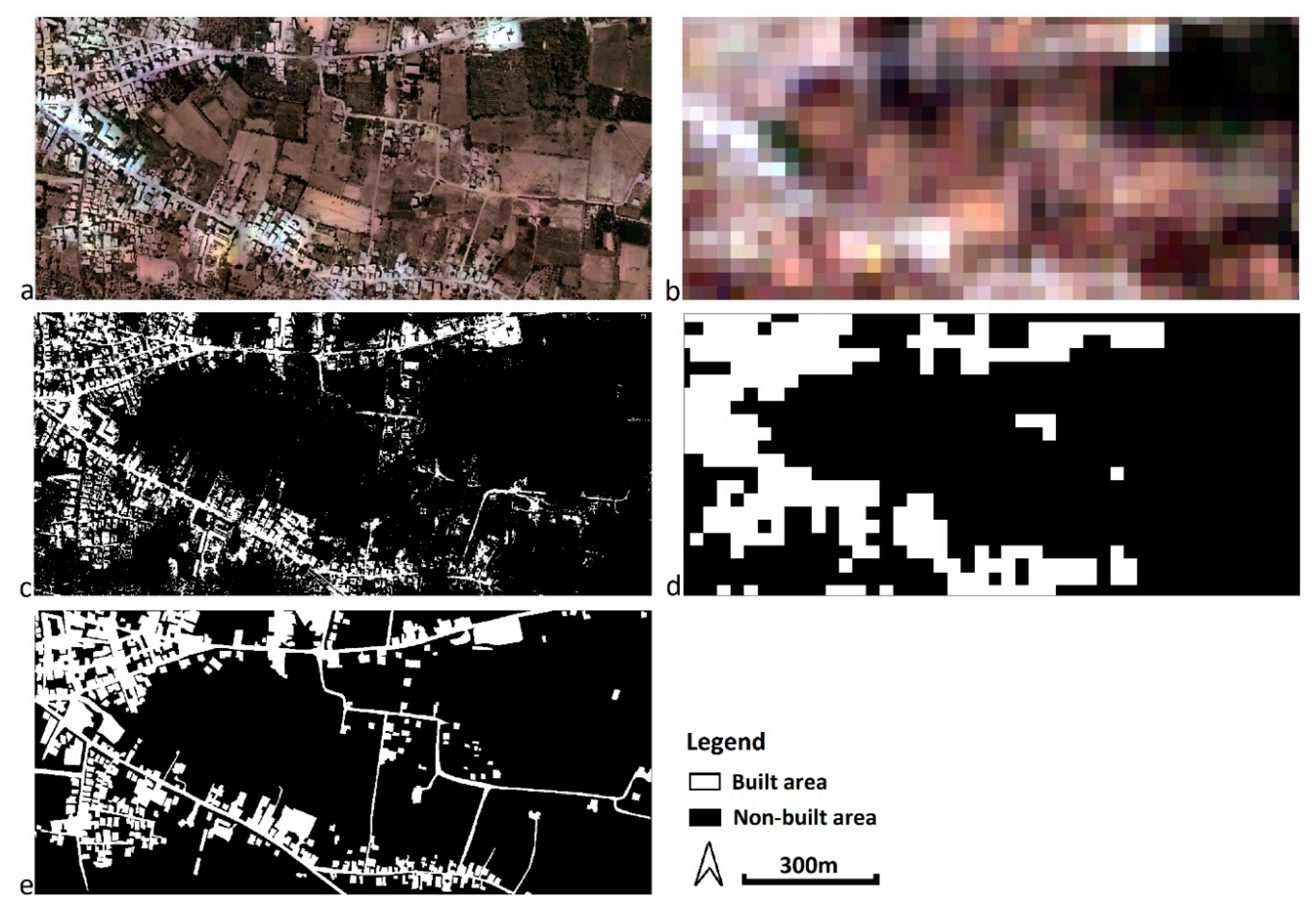

3.4. Classifications and Area Measurements

4. Results

5. Discussion

6. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Data | Location | Number of images | Date of capture (DD/MM/YYYY) | Spectral resolution | Spatial resolution | Radiometric resolution |
|---|---|---|---|---|---|---|
| Aerial photographs | Sparta | 5 | 03/06/1987 | b/w, visible spectrum | 0.50 m | 8 bit |
| Aerial photographs | Pyrgos | 5 | 29/08/1990 | b/w, visible spectrum | 0.50 m | 8 bit |
| Landsat 5 satellite images | Sparta | 1 | 10/06/1987 | 6 bands: R-G-B-NIR-SWIR1-SWIR2 | 30 m | 8 bit |
| Landsat 5 satellite images | Pyrgos | 1 | 28/08/1990 | 6 bands: R-G-B-NIR-SWIR1-SWIR2 | 30 m | 8 bit |

| Estimated index (m) | Orthophoto mosaic from aerial photographs: Sparta | Orthophoto mosaic from aerial photographs: Pyrgos | Orthoimagery from satellite images: Sparta | Orthoimagery from satellite images: Pyrgos |
|---|---|---|---|---|
| $RMSE_X$ | 2.1 | 1.0 | 9.5 | 7.2 |
| $RMSE_Y$ | 2.4 | 1.0 | 9.2 | 8.3 |
|  | 1.5 | 0.4 | 3.6 | 5.7 |
|  | 1.8 | 0.3 | 4.2 | 4.8 |

where $RMSE_X = \sqrt{\frac{1}{n}\sum_{i=1}^{n} \Delta X_i^{2}}$, with $\Delta X_i = X_{o,i} - X_{a,i}$ the difference of check point (CP) $i$ in the X axis between the orthoimage and the actual value, $X_{o,i}$ the value of the CP in the X axis in the orthoimage, $X_{a,i}$ the actual value of the CP in the X axis, and $n$ the number of observations ($n = 5$). $RMSE_Y$ is defined analogously for the Y axis.
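The RMSE values above follow directly from the check-point differences. A minimal Python sketch, assuming the CP coordinates along one axis are available as plain lists in matching order; the function name `rmse` and the coordinates in the usage example are hypothetical and are not the paper's measurements:

```python
import math
from typing import Sequence


def rmse(ortho: Sequence[float], actual: Sequence[float]) -> float:
    """RMSE of check-point coordinates along one axis (e.g. X).

    ortho  -- CP coordinates measured on the orthoimage (X_o)
    actual -- actual (reference) CP coordinates (X_a), in the same order
    """
    if len(ortho) != len(actual) or len(ortho) == 0:
        raise ValueError("need equally long, non-empty coordinate lists")
    # Delta X_i = X_o,i - X_a,i for every check point.
    diffs = [o - a for o, a in zip(ortho, actual)]
    # Mean of the squared differences over the n observations, then square root.
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))


if __name__ == "__main__":
    # Hypothetical X coordinates (m) of five CPs, for illustration only.
    x_ortho = [100.0, 200.0, 300.0, 400.0, 500.0]
    x_actual = [101.0, 199.0, 302.0, 398.0, 500.0]
    print(round(rmse(x_ortho, x_actual), 3))  # 1.414
```

Calling the same function with the Y coordinates gives $RMSE_Y$.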

| Bands | Landsat 5 Blue | Landsat 5 Green | Landsat 5 Red | Landsat 5 NIR | Landsat 5 SWIR1 | Landsat 5 SWIR2 | Data fusion Blue | Data fusion Green | Data fusion Red | Data fusion NIR | Data fusion SWIR1 | Data fusion SWIR2 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Landsat 5 Blue | 1 | 0.979 | 0.927 | 0.203 | 0.751 | 0.883 | 0.697 | 0.750 | 0.774 | 0.193 | 0.584 | 0.775 |
| Landsat 5 Green | 0.979 | 1 | 0.969 | 0.239 | 0.827 | 0.925 | 0.693 | 0.770 | 0.815 | 0.240 | 0.656 | 0.815 |
| Landsat 5 Red | 0.927 | 0.969 | 1 | 0.204 | 0.894 | 0.943 | 0.658 | 0.751 | 0.843 | 0.239 | 0.721 | 0.833 |
| Landsat 5 NIR | 0.203 | 0.239 | 0.204 | 1 | 0.373 | 0.154 | −0.010 | 0.048 | 0.068 | 0.528 | 0.146 | 0.028 |
| Landsat 5 SWIR1 | 0.751 | 0.827 | 0.894 | 0.373 | 1 | 0.914 | 0.467 | 0.581 | 0.705 | 0.297 | 0.735 | 0.742 |
| Landsat 5 SWIR2 | 0.883 | 0.925 | 0.943 | 0.154 | 0.914 | 1 | 0.612 | 0.701 | 0.781 | 0.179 | 0.707 | 0.849 |
| Data fusion Blue | 0.697 | 0.693 | 0.658 | −0.010 | 0.467 | 0.612 | 1 | 0.978 | 0.909 | 0.564 | 0.803 | 0.878 |
| Data fusion Green | 0.750 | 0.770 | 0.751 | 0.048 | 0.581 | 0.701 | 0.978 | 1 | 0.964 | 0.565 | 0.866 | 0.934 |
| Data fusion Red | 0.774 | 0.815 | 0.843 | 0.068 | 0.705 | 0.781 | 0.909 | 0.964 | 1 | 0.505 | 0.914 | 0.963 |
| Data fusion NIR | 0.193 | 0.240 | 0.239 | 0.528 | 0.297 | 0.179 | 0.564 | 0.565 | 0.505 | 1 | 0.656 | 0.442 |
| Data fusion SWIR1 | 0.584 | 0.656 | 0.721 | 0.146 | 0.735 | 0.707 | 0.803 | 0.866 | 0.914 | 0.656 | 1 | 0.913 |
| Data fusion SWIR2 | 0.775 | 0.815 | 0.833 | 0.028 | 0.742 | 0.849 | 0.878 | 0.934 | 0.963 | 0.442 | 0.913 | 1 |

| Bands | Landsat 5 Blue | Landsat 5 Green | Landsat 5 Red | Landsat 5 NIR | Landsat 5 SWIR1 | Landsat 5 SWIR2 | Data fusion Blue | Data fusion Green | Data fusion Red | Data fusion NIR | Data fusion SWIR1 | Data fusion SWIR2 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Landsat 5 Blue | 1 | 0.967 | 0.947 | −0.153 | 0.707 | 0.832 | 0.811 | 0.773 | 0.726 | −0.290 | 0.553 | 0.595 |
| Landsat 5 Green | 0.967 | 1 | 0.963 | −0.092 | 0.738 | 0.855 | 0.800 | 0.818 | 0.756 | −0.255 | 0.582 | 0.622 |
| Landsat 5 Red | 0.947 | 0.963 | 1 | −0.244 | 0.818 | 0.921 | 0.792 | 0.786 | 0.789 | −0.399 | 0.668 | 0.702 |
| Landsat 5 NIR | −0.153 | −0.092 | −0.244 | 1 | −0.106 | −0.260 | −0.179 | −0.110 | −0.225 | 0.870 | −0.228 | −0.288 |
| Landsat 5 SWIR1 | 0.707 | 0.738 | 0.818 | −0.106 | 1 | 0.926 | 0.568 | 0.580 | 0.639 | −0.279 | 0.737 | 0.681 |
| Landsat 5 SWIR2 | 0.832 | 0.855 | 0.921 | −0.260 | 0.926 | 1 | 0.676 | 0.681 | 0.716 | −0.402 | 0.715 | 0.736 |
| Data fusion Blue | 0.811 | 0.800 | 0.792 | −0.179 | 0.568 | 0.676 | 1 | 0.952 | 0.947 | −0.421 | 0.757 | 0.809 |
| Data fusion Green | 0.773 | 0.818 | 0.786 | −0.110 | 0.580 | 0.681 | 0.952 | 1 | 0.948 | −0.348 | 0.743 | 0.793 |
| Data fusion Red | 0.726 | 0.756 | 0.789 | −0.225 | 0.639 | 0.716 | 0.947 | 0.948 | 1 | −0.500 | 0.872 | 0.910 |
| Data fusion NIR | −0.290 | −0.255 | −0.399 | 0.870 | −0.279 | −0.402 | −0.421 | −0.348 | −0.500 | 1 | −0.524 | −0.569 |
| Data fusion SWIR1 | 0.553 | 0.582 | 0.668 | −0.228 | 0.737 | 0.715 | 0.757 | 0.743 | 0.872 | −0.524 | 1 | 0.938 |
| Data fusion SWIR2 | 0.595 | 0.622 | 0.702 | −0.288 | 0.681 | 0.736 | 0.809 | 0.793 | 0.910 | −0.569 | 0.938 | 1 |
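The two matrices above relate the six Landsat 5 bands to the six bands of the fused product. As a minimal sketch, assuming Pearson's r and two co-registered NumPy stacks of shape (bands, rows, cols) on a common pixel grid, such a matrix can be computed with `np.corrcoef`. How the 30 m Landsat bands and the fused bands were brought to one grid is not described in this section; the nearest-neighbour helper `upsample_nearest` is a hypothetical choice (with a 0.50 m fused pixel the factor would be 60), and both function names are illustrative.

```python
import numpy as np


def upsample_nearest(stack: np.ndarray, factor: int) -> np.ndarray:
    """Hypothetical helper: nearest-neighbour upsampling of a (bands, rows, cols) stack."""
    # Repeat every pixel `factor` times along the row and column axes.
    return stack.repeat(factor, axis=1).repeat(factor, axis=2)


def band_correlation_matrix(original: np.ndarray, fused: np.ndarray) -> np.ndarray:
    """Pearson correlation between all bands of two co-registered stacks.

    Both inputs have shape (bands, rows, cols) on the same pixel grid. The result is a
    (2 * bands, 2 * bands) matrix ordered [original bands..., fused bands...], the same
    block layout as the tables above.
    """
    if original.shape != fused.shape:
        raise ValueError("stacks must share the same shape; resample one of them first")
    n_bands = original.shape[0]
    # Each row is one band flattened to a vector of pixel values (rows = variables).
    variables = np.concatenate(
        [original.reshape(n_bands, -1), fused.reshape(n_bands, -1)]
    ).astype(np.float64)
    return np.corrcoef(variables)


if __name__ == "__main__":
    rng = np.random.default_rng(seed=0)
    # Synthetic 6-band stacks standing in for Landsat 5 (coarse) and a fused product (fine).
    landsat = rng.random((6, 4, 4))
    fused = upsample_nearest(landsat, 3) + 0.1 * rng.random((6, 12, 12))
    r = band_correlation_matrix(upsample_nearest(landsat, 3), fused)
    print(r.shape)              # (12, 12)
    print(np.allclose(r, r.T))  # True: the matrix is symmetric
```

Because a correlation matrix is symmetric, every value below the diagonal must equal its mirror above it; running `np.allclose(r, r.T)` on a transcribed table is a quick way to catch copying errors.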

| Study area | Surface | Digitization in GIS: area (m²) | Classification of Landsat 5: area (m²) | Classification of Landsat 5: difference (%) | Classification of data fusion: area (m²) | Classification of data fusion: difference (%) |
|---|---|---|---|---|---|---|
| Sparta | Built surface | 349,332.31 | 453,600.00 | 29.80 | 274,119.00 | −21.5 |
| Sparta | Open surface | 1,022,910.195 | 918,642.50 | −10.19 | 1,098,123.50 | 7.40 |
| Pyrgos | Built surface | 159,466.43 | 211,500.00 | 32.60 | 129,493.75 | −18.80 |
| Pyrgos | Open surface | 676,364.56 | 624,330.99 | −7.70 | 706,337.24 | 4.43 |
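The difference columns are consistent with the relative difference of each classified area with respect to the area digitized in GIS, $(A_{class} - A_{GIS}) / A_{GIS} \times 100$. The short sketch below recomputes them from the tabulated areas (the function name `percent_difference` is illustrative); rounded to one decimal place, the results agree with the table.

```python
def percent_difference(classified_area: float, digitized_area: float) -> float:
    """Relative difference (%) of a classified area with respect to the GIS-digitized area."""
    return (classified_area - digitized_area) / digitized_area * 100.0


# Areas in m^2 copied from the table: (digitization in GIS, Landsat 5, data fusion).
AREAS = {
    ("Sparta", "Built surface"): (349_332.31, 453_600.00, 274_119.00),
    ("Sparta", "Open surface"): (1_022_910.195, 918_642.50, 1_098_123.50),
    ("Pyrgos", "Built surface"): (159_466.43, 211_500.00, 129_493.75),
    ("Pyrgos", "Open surface"): (676_364.56, 624_330.99, 706_337.24),
}

if __name__ == "__main__":
    for (site, surface), (gis, landsat, fusion) in AREAS.items():
        print(
            f"{site} {surface}: Landsat 5 {percent_difference(landsat, gis):+.1f} %, "
            f"data fusion {percent_difference(fusion, gis):+.1f} %"
        )
```

A positive value means the classification overestimates the digitized area, a negative value that it underestimates it.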

© 2020 by the authors. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/4.0/).


Kaimaris, D.; Patias, P.; Mallinis, G.; Georgiadis, C. Data Fusion of Scanned Black and White Aerial Photographs with Multispectral Satellite Images. Sci 2020, 2, 29. https://doi.org/10.3390/sci2020029


Kaimaris, Dimitris, Petros Patias, Giorgos Mallinis, and Charalampos Georgiadis. 2020. "Data Fusion of Scanned Black and White Aerial Photographs with Multispectral Satellite Images." Sci 2, no. 2: 29. https://doi.org/10.3390/sci2020029