1. Introduction

Dams, as critical infrastructure in hydraulic engineering, play essential roles in flood control, irrigation, power generation, and water supply. However, prolonged natural erosion, environmental changes, and construction quality issues gradually lead to structural damage such as surface cracks [1]. These cracks not only undermine the stability of dams but may also result in catastrophic consequences [2]. Therefore, accurately detecting surface cracks is vital for the safe operation of dams.

Traditional crack detection in dams mainly depends on manual inspection, which is often time-consuming, costly, and prone to subjective bias. These limitations make it difficult to achieve accurate and comprehensive assessment [3]. Computer vision techniques, particularly convolutional neural networks (CNNs), have demonstrated exceptional performance in image feature extraction and pattern recognition, enabling the automatic extraction of crack edge features and precise segmentation [4,5,6]. Despite the significant progress of CNNs in crack segmentation tasks, they still face certain limitations. For instance, CNNs excel at extracting local features but struggle with tasks like dam crack segmentation, which require modeling long-range dependencies. The local convolution kernels of CNNs are less effective in capturing global information in large-scale cracks.

Originally developed for natural language processing, the Transformer architecture utilizes a multi-head self-attention mechanism to effectively capture long-range dependencies within sequences [7,8]. Researchers have since introduced Transformers into the computer vision domain, achieving groundbreaking results. Unlike CNNs, Transformers excel at capturing global features, making them highly effective in modeling global contextual information in large-scale images. This is particularly beneficial for crack detection, where global information is crucial. Transformers have shown immense potential in this field [9].

Given this context, this study proposes a dam surface crack segmentation model, named CCT Net (Crack CNN-Transformer Net), which integrates the complementary strengths of CNNs and Transformers. The model is designed to leverage the local feature extraction capability of CNNs and the global context modeling of Transformers, thereby enhancing the accuracy and robustness of dam surface crack segmentation. Specifically, CCT Net first utilizes CNNs for initial local feature extraction from input images. Subsequently, a Transformer module is introduced to perform global modeling on the extracted features, enhancing the model’s ability to detect large-scale and structurally complex cracks. By complementing the strengths of CNNs and Transformers, the model ensures the precise capture of crack details while effectively improving global information awareness. This model not only holds significant theoretical value but also has promising prospects for practical engineering applications.

2. Related Works

The accurate detection and segmentation of dam surface cracks are critical tasks in structural health monitoring. With the development of deep learning techniques, substantial progress has been achieved in this area. This section reviews related work, focusing on traditional crack detection methods, CNN-based approaches, Transformer-based techniques, and recent efforts that integrate these methods to improve segmentation performance.

2.1. Traditional Methods for Crack Detection

Before the advent of deep learning, traditional image processing techniques were widely used for crack detection. These methods often relied on manual feature engineering and statistical analysis. Techniques such as edge detection algorithms, including Canny and Sobel operators, were used to highlight crack edges based on intensity gradients [10,11]. Morphological operations, including dilation and erosion, were subsequently applied to refine the detected edges [12]. While these methods achieved reasonable results under controlled conditions, they were highly sensitive to noise and environmental factors such as lighting variations and background textures, making them unsuitable for large-scale dam crack detection tasks.

Machine learning techniques, such as support vector machines (SVMs) and random forests, have also been explored for crack classification and segmentation [13,14]. These approaches utilized handcrafted features, including texture, color, and shape descriptors, to distinguish cracks from non-crack regions. However, their reliance on manual feature extraction limited their generalizability and scalability to complex scenarios with diverse crack patterns and backgrounds.

2.2. CNN-Based Crack Segmentation

Convolutional neural networks (CNNs) have brought transformative advances to the field of computer vision, demonstrating remarkable effectiveness in image feature extraction and pattern recognition tasks [15,16]. For structural health monitoring (SHM) tasks, CNNs have been widely adopted owing to their ability to automatically learn hierarchical representations of image features [17,18]. Architectures such as U-Net and DeepLab have been extensively employed in various segmentation applications, including the detection of cracks on dam surfaces.

U-Net, introduced for biomedical image segmentation, utilizes an encoder–decoder architecture with skip connections to recover spatial information lost during downsampling [19]. This architecture has been adapted for crack segmentation, achieving notable improvements in Precision and Recall. However, U-Net’s reliance on local convolution operations limits its ability to capture long-range dependencies, which are crucial for detecting large-scale and interconnected crack patterns. DeepLab, which incorporates atrous convolution and conditional random fields (CRFs) for capturing multi-scale contextual information, has also shown promise in crack segmentation tasks [20]. Despite its strengths, DeepLab struggles with the fine-grained details required for precise crack boundary detection.

CNNs are also structurally constrained by their receptive field, which is bounded by the size of the convolution kernel. Even when the receptive field is expanded by stacking multiple convolutional layers, it remains difficult to fully capture the global information of cracks. This bottleneck is most apparent for cracks with complex morphology and for cracks that span large portions of the image [21].

2.3. Transformer-Based Approaches

Originally developed for natural language processing tasks, the Transformer architecture has recently gained substantial attention in the field of computer vision due to its strong capability to model global contextual relationships via self-attention mechanisms [22]. Vision Transformers (ViTs) and their variants, such as the Swin Transformer, have achieved state-of-the-art performance across a range of vision tasks, including object detection, image classification, and semantic segmentation [8,23].

In the context of crack segmentation, Transformer-based models offer a notable advantage over traditional CNNs by effectively capturing long-range dependencies and global contextual information, which are crucial for identifying elongated and irregular crack patterns [24,25]. Recent SHM studies have adopted Transformer-based models for surface damage assessment and crack analysis, reporting improved context modeling and segmentation quality compared with pure CNNs [26,27]. However, their high computational cost and sensitivity to noise, particularly in backgrounds with complex textures, pose significant challenges [28].

2.4. Hybrid Models Combining CNN and Transformer

Recent research has focused on combining the strengths of CNNs and Transformers to overcome their individual limitations [29,30]. These hybrid models leverage the local feature extraction capabilities of CNNs and the global modeling power of Transformers, enabling comprehensive feature representation for complex tasks like crack segmentation.

For example, works integrating CNN backbones with Transformer modules have demonstrated improved performance in various segmentation tasks. These models typically employ CNNs for initial feature extraction and Transformers for refining global contextual information. The complementary nature of these architectures allows for precise crack boundary detection while maintaining robustness against background interference.

Feature fusion strategies play a pivotal role in these hybrid models [31]. Techniques such as attention-based fusion and multi-scale feature aggregation have been proposed to integrate local and global features effectively [32]. These strategies facilitate the simultaneous capture of fine-grained local details and long-range contextual dependencies, thereby contributing to improved overall segmentation accuracy.

2.5. Applications in Structural Health Monitoring

In the domain of structural health monitoring, deep learning models have been applied to various tasks beyond crack detection, including surface damage classification, defect localization, and anomaly detection. The integration of CNNs and Transformers has further extended these applications by enabling the more accurate and reliable analysis of structural conditions.

For dam surface crack segmentation, the unique challenges include the irregular shapes of cracks, significant class imbalance between cracks and the background, and environmental factors such as lighting variations and surface contamination. Hybrid models combining CNNs and Transformers address these challenges by providing a balance between local precision and global contextual understanding [33]. Furthermore, the incorporation of advanced loss functions, such as composite losses that combine binary cross-entropy and Dice loss, has contributed to enhanced segmentation performance by effectively addressing class imbalance and promoting spatial consistency.

2.6. Summary and Motivation

Despite substantial advancements in crack segmentation achieved through traditional methods and CNN- and Transformer-based approaches, existing techniques continue to exhibit limitations when dealing with complex and large-scale crack patterns. Hybrid models that integrate CNNs and Transformers are increasingly being adopted as a promising solution for addressing these challenges. By harnessing the complementary strengths of both architectures, such models facilitate a more holistic representation of crack features, thereby enabling more accurate and robust segmentation performance.

The proposed CCT Net builds upon this foundation by introducing a novel Feature Complementary Fusion Module (FCFM) to integrate local and global features effectively. This approach not only enhances segmentation accuracy but also improves robustness against environmental variations, making it a valuable tool for dam surface crack detection in structural health monitoring.

3. Methodology

3.1. Architecture of CCT Net

The proposed CCT Net adopts a structure similar to conventional segmentation models, comprising an encoder and a decoder, as illustrated in Figure 1. The key distinction is that CCT Net employs two encoders for feature extraction: a CNN encoder and a Transformer encoder. The input image, an RGB image with a resolution of 512 × 512, is processed through five CNN modules in the CNN encoder and four Transformer modules in the Transformer encoder. Each encoder performs four downsampling operations, with each step reducing the spatial resolution of the feature maps by half. To compensate for the potential semantic information loss resulting from this reduction, the number of channels in the output feature maps is doubled after each downsampling stage.

In each downsampling stage, the CNN and Transformer encoders produce two separate feature maps. These feature maps are then integrated using a complementary fusion module, continuing until all four downsampling stages are completed. The dimensions of the feature maps in each stage follow this progression: [B, C, H, W] → [B, 4C, H/4, W/4] → [B, 8C, H/8, W/8] → [B, 16C, H/16, W/16] → [B, 32C, H/32, W/32].
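The shape progression listed above can be tabulated with a short sketch. The batch size of 4 matches the training setup reported later, while the base width `channels=16` is a hypothetical value chosen only for illustration; the multipliers and strides are copied from the progression in the text.

```python
def stage_shapes(batch, channels, height, width):
    """Return the input shape followed by the four fused-stage shapes from the text."""
    shapes = [(batch, channels, height, width)]
    # Channel multipliers and spatial strides exactly as listed in the progression.
    for factor in (4, 8, 16, 32):
        shapes.append((batch, channels * factor, height // factor, width // factor))
    return shapes


# channels=16 is an assumed base width, not a value stated in the paper.
shapes = stage_shapes(batch=4, channels=16, height=512, width=512)
for shape in shapes:
    print(shape)
```

For a 512 × 512 input, the last stage therefore works on a 16 × 16 grid, which is the resolution at which decoding starts.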

Subsequently, the four multi-scale feature maps are forwarded to the decoder for reconstruction. The decoding process begins with the feature map produced by the final encoder layer, which has already undergone complementary fusion. The decoder performs four successive upsampling operations, where each step doubles the spatial resolution and reduces the number of channels by half. Following each upsampling operation, the resulting feature map is concatenated along the channel dimension with the corresponding feature map obtained from the feature fusion module. This concatenated output is then fed into the subsequent upsampling stage. Upon completing the four upsampling steps, five feature maps with spatial dimensions identical to the input are obtained. These feature maps are concatenated along the channel axis and subsequently transformed to match the number of target output classes.

Within the downsampling–feature fusion–upsampling framework of CCT Net, crack features in the original image are effectively extracted. The CNN encoder excels at capturing fine-grained crack details, while the Transformer encoder leverages its powerful long-range contextual modeling capabilities to extract the elongated and irregular structural features of cracks. The complementary fusion module integrates the feature maps from both encoders. During upsampling, the outputs from each stage are concatenated with the feature maps of the same dimensions generated by the Feature Complementary Fusion Module (FCFM). This integration maximizes the utilization of both deep positional information and shallow semantic details, allowing the decoder to reconstruct more precise predictions. Finally, all feature maps generated during the upsampling process are concatenated, and the channels are adjusted to produce the final segmentation output.

3.2. CNN-Based Encoder

Although Transformers offer powerful long-range modeling capabilities that are absent in conventional CNNs—facilitating the precise extraction of elongated crack features by leveraging global contextual information—cracks usually occupy only a small portion of the overall image. Consequently, using a Transformer alone is susceptible to interference from background noise. The limited receptive field of CNNs, while a drawback in some contexts, becomes advantageous here. It allows the convolutional layers to focus more effectively on local crack features. Incorporating a CNN encoder compensates for the limitations of the Transformer structure.

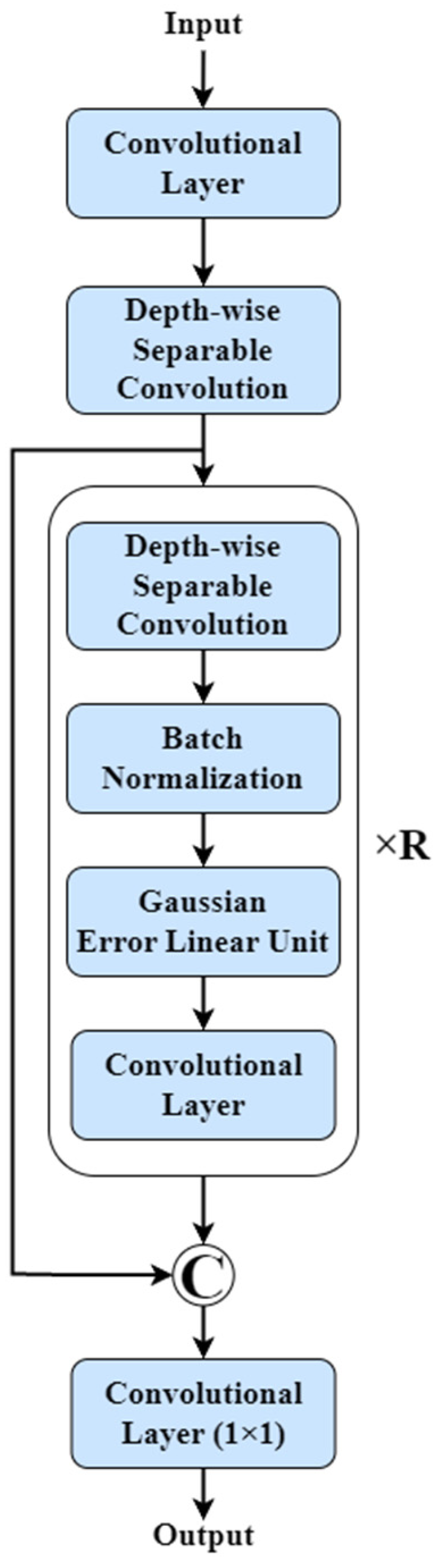

The convolutional layer serves as the fundamental building block of convolutional neural networks, playing a central role in feature extraction while also accounting for the majority of the network’s computational burden. As shown in Figure 1, the CNN encoder comprises five CNN modules. Among these, modules 1 through 4 share a similar structure, consisting of depthwise separable convolution, batch normalization, and a Gaussian Error Linear Unit (GELU) activation function. The structure of these first four CNN modules is illustrated in Figure 2.

Initially, the raw input image undergoes preliminary semantic feature extraction through a simple convolutional layer. This is followed by a depthwise separable convolution for scale transformation, in which a stride of 2 halves the width and height of the feature map and thereby reduces the computational cost. The extracted features are then processed through deeper sub-blocks consisting of conventional convolutions and depthwise separable convolutions, enabling multi-level feature extraction. Finally, the output is concatenated with the residual block along the channel dimension and refined using a 1 × 1 pointwise convolution to adjust the number of output channels, producing the final feature map.

The first four CNN modules are structurally similar but differ in the number of convolutional layers stacked within each module. The repetition count R for these modules is 2, 2, 4, and 2, respectively. The final convolutional module only adopts depthwise separable convolution for downsampling, which consists of the first two parts of Figure 2 without repetition.
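To make the cost argument for depthwise separable convolution concrete, the following sketch compares weight counts for a standard convolution and its depthwise separable counterpart. The channel sizes (64 in, 128 out) and the 3 × 3 kernel are illustrative assumptions, not values reported for CCT Net, and bias terms are ignored.

```python
def standard_conv_params(c_in, c_out, k):
    """Weights of a dense k x k convolution (bias ignored)."""
    return k * k * c_in * c_out


def depthwise_separable_params(c_in, c_out, k):
    """Weights of a k x k depthwise convolution followed by a 1 x 1 pointwise convolution."""
    depthwise = k * k * c_in   # one k x k filter per input channel
    pointwise = c_in * c_out   # 1 x 1 convolution that mixes channels
    return depthwise + pointwise


# Hypothetical layer sizes chosen only for illustration.
std = standard_conv_params(64, 128, 3)
dws = depthwise_separable_params(64, 128, 3)
print(std, dws, round(std / dws, 2))
```

With these assumed sizes the separable form needs 8768 weights instead of 73728, which illustrates why such layers keep the encoder lightweight.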

3.3. Transformer-Based Encoder

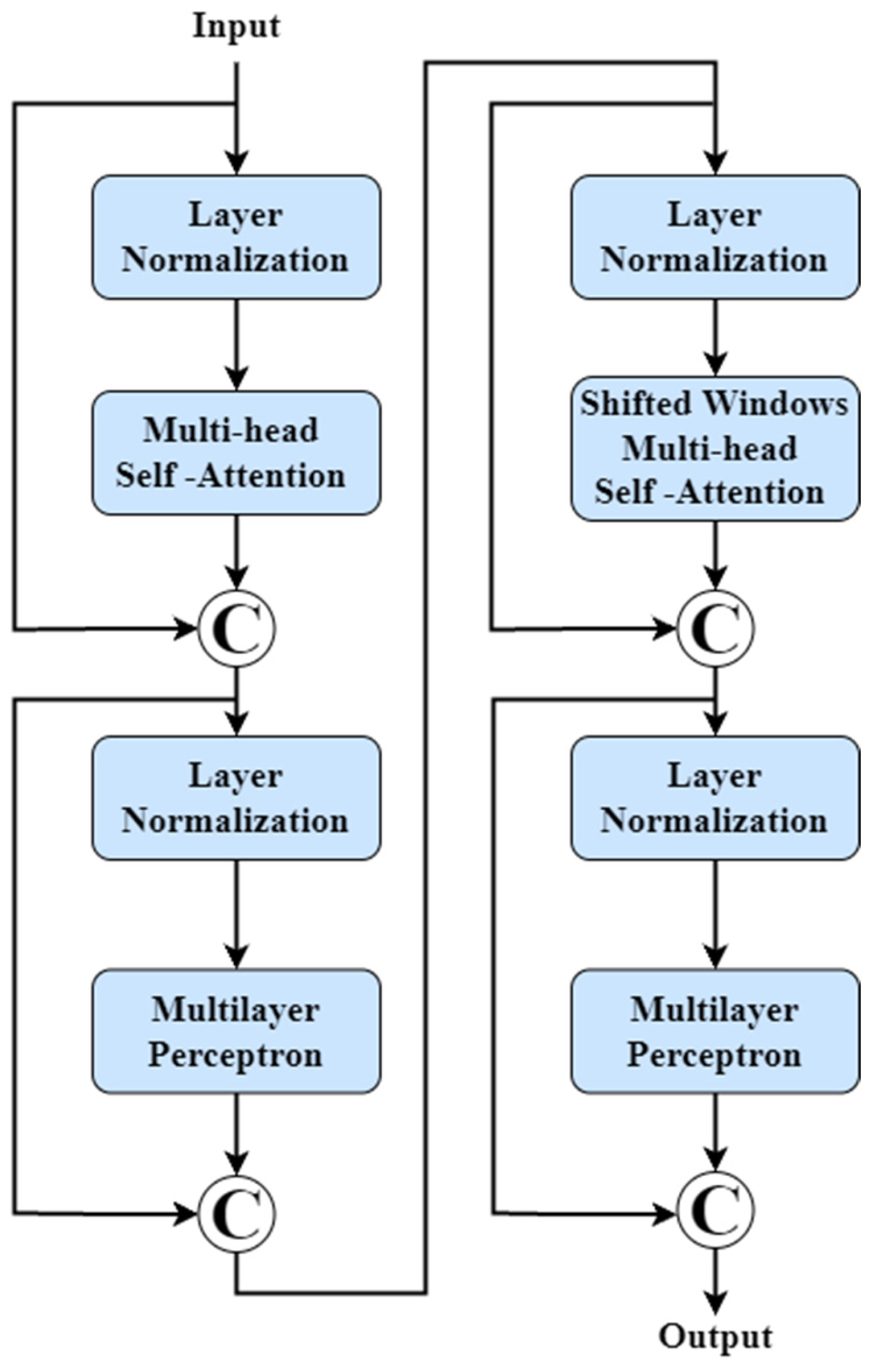

Leveraging the powerful long-range modeling capabilities of Transformers, which enable the precise extraction of elongated crack features by contextualizing information, this study incorporates Transformers as part of the CCT Net encoder. As illustrated in Figure 1, the Transformer encoder is composed of four sequential modules.

Each Transformer module is composed of two consecutive sub-blocks. The first sub-block includes layer normalization (LN), a multi-head self-attention mechanism (MSA), a multi-layer perceptron (MLP), and residual connections. The second sub-block shares a similar structure, except that the standard multi-head self-attention mechanism is replaced by a shifted-window-based multi-head self-attention mechanism (Swin-MSA).

To enhance the Transformer model’s long-range modeling capabilities, a patch partitioning step is introduced before the first Transformer module, dividing the input image into patches. Additionally, patch merging is performed before the subsequent three Transformer modules, combining the previously divided patches before passing them into the next module.

As illustrated in Figure 3, the Transformer encoder follows a straightforward yet highly effective logical structure for extracting crack features. Initially, the input image is partitioned into 4 × 4 patches and processed by the first Transformer module for preliminary feature extraction. The resulting feature map is subsequently aggregated by patch merging and forwarded to the next Transformer module to enable deeper representation learning. This merge-and-forward step is applied for each of the remaining three modules, enabling the encoder to effectively learn and represent both deep positional information and shallow semantic information in the feature maps across different layers.
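The token bookkeeping implied by patch partitioning and patch merging can be sketched as follows. The sketch assumes that each patch-merging step halves the token grid along each side, as in the standard Swin Transformer design; the function name `token_grid_sizes` is hypothetical.

```python
def token_grid_sizes(image_size=512, patch=4, num_stages=4):
    """Return (rows, cols, tokens) of the token grid entering each Transformer module."""
    side = image_size // patch  # 4 x 4 patch partitioning before the first module
    sizes = []
    for stage in range(num_stages):
        if stage > 0:
            side //= 2  # assumed effect of patch merging before modules 2 to 4
        sizes.append((side, side, side * side))
    return sizes


for rows, cols, tokens in token_grid_sizes():
    print(rows, cols, tokens)
```

Under these assumptions a 512 × 512 image yields 16384 tokens in the first module and 256 tokens in the last, which shows how the sequence length shrinks as the representation deepens.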

3.4. Feature Complementary Fusion Network

In CCT Net, the CNN encoder and Transformer encoder capitalize on their respective strengths in capturing local and global features of images, generating eight semantic and positional feature maps. However, effectively fusing feature maps from different levels remains a key challenge. To address this, we propose the Feature Complementary Fusion Module (FCFM), designed to integrate the output feature maps from the CNN and Transformer encoders.

As illustrated in Figure 4, the fusion process involves the following steps:

Each pair of feature maps, Inputs 1 and 2, from the CNN and Transformer encoders undergoes global average pooling to produce two 1 × 1 × C feature maps. Simultaneously, Inputs 1 and 2 are reshaped into two 2D feature maps with dimensions (H × W) × C.

The pooled and reshaped feature maps are cross-concatenated to form two new feature maps, each with dimensions (H × W + 1) × C. These are then passed through a Swin Transformer module for further feature extraction.

The resulting feature maps are reshaped back into H × W × C dimensions and multiplied elementwise (Hadamard product) with Inputs 1 and 2, respectively, to generate a new feature map.

This feature map is concatenated with the original feature maps to produce an H × W × 3C feature map.

The concatenated feature map is further processed through a CBR module, which consists of a convolutional layer, batch normalization, and a ReLU activation function, to enhance feature fusion. A pointwise convolution operation is then applied to adjust the channel dimensions. The adjusted feature map is elementwise summed with the original feature maps to produce the fused feature map.

Finally, the fused feature map is reshaped into a 2D feature map with dimensions (H × W) × C, which serves as the output.

Figure 4. Feature Complementary Fusion Module.


The CBR module is designed to suppress insignificant features while reducing the number of parameters, thereby ensuring efficient and effective feature integration. This systematic fusion process enables CCT Net to fully leverage the complementary characteristics of CNN and Transformer encoders, thereby enhancing its capability to capture both fine-grained local details and global contextual crack features.

The entire Feature Complementary Fusion Module (FCFM) achieves cross-domain and cross-feature fusion of the feature maps from two encoders, enhancing the characteristics derived from the Transformer and CNN encoders in their respective domains. The feature fusion process can be described mathematically using the following equations:

where Reshape represents the reshaping of the feature maps; STB represents the Swin Transformer Block, responsible for feature refinement; Cat represents concatenation along the channel dimension; GAP represents global average pooling, which condenses spatial information into a single value for each channel; HP represents the Hadamard product (elementwise multiplication); CBR is a module consisting of convolution, batch normalization, and ReLU activation; and the pointwise convolution operator is used for channel adjustment.

These operations facilitate a systematic and efficient fusion of feature maps by harnessing the strengths of both local and global representations, thereby enhancing the model’s effectiveness in capturing intricate and large-scale crack patterns.
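The first two fusion steps (global average pooling, reshaping, and cross-concatenation) can be illustrated with a minimal shape-level sketch. It assumes that "cross-concatenated" means the pooled descriptor of one encoder is attached to the token sequence of the other, and that the descriptor is placed first; both points are assumptions, and the later steps (Swin Transformer block, Hadamard product, CBR) are not modeled here.

```python
def global_average_pool(tokens):
    """Average an (H*W) x C token list over its spatial axis, giving one C-vector."""
    count = len(tokens)
    return [sum(column) / count for column in zip(*tokens)]


def cross_concat(cnn_tokens, transformer_tokens):
    """Attach each branch's pooled descriptor to the other branch's tokens.

    Inputs are (H*W) x C nested lists; outputs are (H*W + 1) x C nested lists.
    Prepending (rather than appending) the descriptor is an assumed convention.
    """
    cnn_seq = [global_average_pool(transformer_tokens)] + cnn_tokens
    transformer_seq = [global_average_pool(cnn_tokens)] + transformer_tokens
    return cnn_seq, transformer_seq


# Toy feature maps with H*W = 2 positions and C = 2 channels.
cnn_map = [[1.0, 2.0], [3.0, 4.0]]
transformer_map = [[10.0, 20.0], [30.0, 40.0]]
cnn_seq, transformer_seq = cross_concat(cnn_map, transformer_map)
print(len(cnn_seq), len(cnn_seq[0]))
```

Each output sequence carries one extra token, which accounts for the (H × W + 1) × C dimensions stated in the fusion steps.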

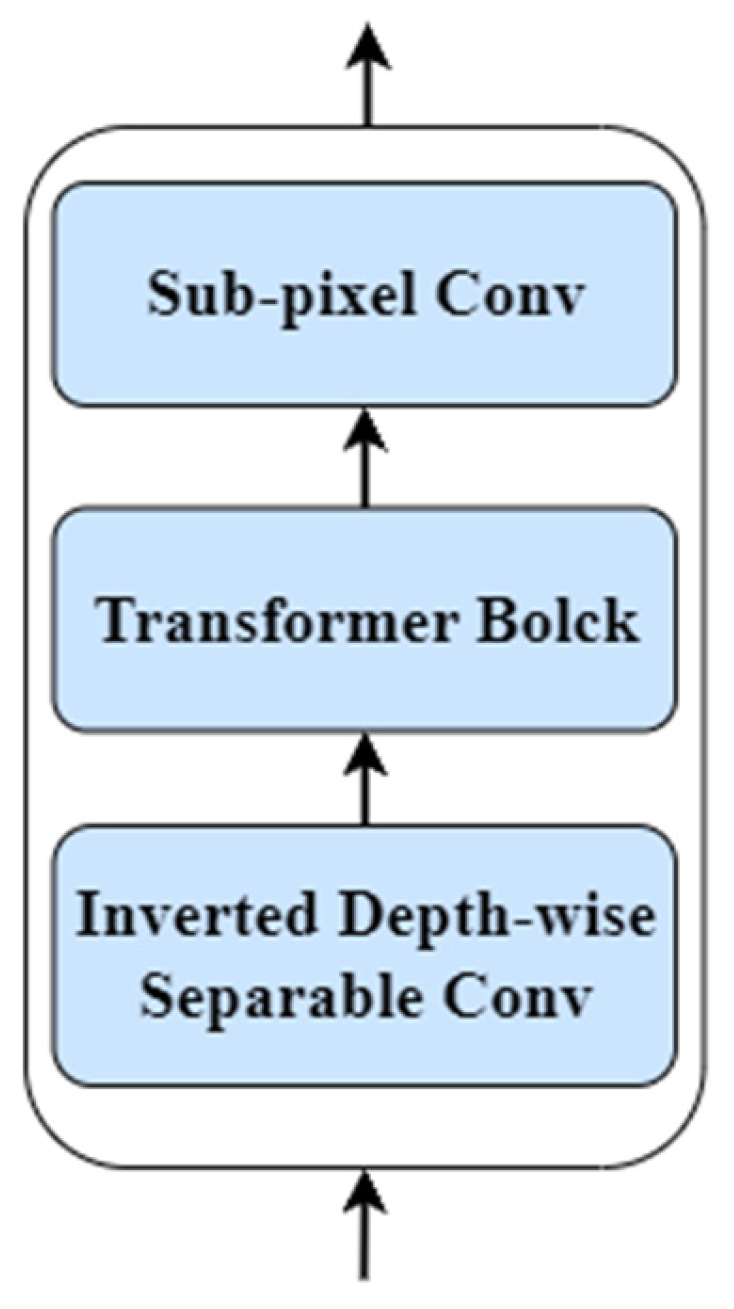

3.5. The Decoder

The primary function of the decoder is to progressively reconstruct the segmentation output based on the hierarchical features extracted by the encoder. Within the decoder, upsampling operations are typically employed to gradually restore the spatial resolution of the original image. In CCT Net, sub-pixel convolution is employed for upsampling. Compared to commonly used methods such as linear interpolation, bilinear interpolation, and transposed convolution, sub-pixel convolution offers a simpler structure, requires fewer computational parameters, and significantly reduces computational complexity.
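The rearrangement at the core of sub-pixel convolution (often called pixel shuffle) can be sketched in plain Python. This is a generic illustration of the operation under the common channel-ordering convention, not the decoder code of CCT Net; the convolution that normally precedes the rearrangement is omitted.

```python
def pixel_shuffle(x, r=2):
    """Rearrange a (C*r*r, H, W) nested list into (C, H*r, W*r).

    Output pixel (i, j) of channel c is read from input channel
    c*r*r + (i % r)*r + (j % r) at spatial position (i // r, j // r).
    """
    in_channels, height, width = len(x), len(x[0]), len(x[0][0])
    assert in_channels % (r * r) == 0, "channel count must be divisible by r*r"
    out_channels = in_channels // (r * r)
    out = [[[None] * (width * r) for _ in range(height * r)] for _ in range(out_channels)]
    for c in range(out_channels):
        for i in range(height * r):
            for j in range(width * r):
                src = c * r * r + (i % r) * r + (j % r)
                out[c][i][j] = x[src][i // r][j // r]
    return out


# Four 2 x 2 channels become one 4 x 4 channel.
x = [[[0, 1], [2, 3]], [[4, 5], [6, 7]], [[8, 9], [10, 11]], [[12, 13], [14, 15]]]
y = pixel_shuffle(x, r=2)
print(y[0])
```

The rearrangement itself has no learnable weights: the spatial resolution doubles purely by moving values from the channel axis into the spatial axes.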

The design of the upsampling modules in the decoder is illustrated in Figure 5. Each layer of the decoder receives fused feature maps from the corresponding Feature Complementary Fusion Module (FCFM). These feature maps are then processed through the upsampling modules for image reconstruction. Among them, Upsampling Module 1 performs only sub-pixel convolution operations, and Upsampling Modules 2, 3, and 4 utilize Transformer modules to further decode the fused feature maps and the upsampling results from the lower layers of the decoder. Sub-pixel convolution is applied at the end of each of these modules to generate the output feature maps. This approach effectively leverages the semantic and spatial information learned by the encoder, enabling efficient reconstruction of the segmentation results. A total of five feature maps are generated through this process.

Finally, the feature maps are concatenated along the channel dimension and refined through a pointwise convolution to adjust the number of channels, thereby generating the final pixel-level classification results.

3.6. Loss Function

In semantic segmentation, the loss function plays a crucial role in guiding model training by measuring the discrepancy between the predicted segmentation results for each pixel and the ground truth labels. For the CCT Net architecture, this study adopts a composite loss function that combines binary cross-entropy loss and Dice loss.

Binary cross-entropy (BCE) loss is commonly employed in binary classification tasks to quantify the divergence between the predicted probability distribution and the ground truth distribution at the pixel level [34]. In semantic segmentation, BCE loss handles pixel-level classification effectively. Its definition is as follows:

$$L_{\mathrm{BCE}} = -\frac{1}{N}\sum_{i=1}^{N}\left[y_i \log p_i + (1 - y_i)\log(1 - p_i)\right]$$

where $N$ is the total number of pixels, $y_i$ is the ground truth label for pixel $i$, and $p_i$ is the predicted probability for pixel $i$ belonging to the foreground class.

Dice loss optimizes the model by penalizing insufficient overlap between the predicted segmentation region and the corresponding ground truth, thereby encouraging better spatial alignment [35]. It is defined as follows:

$$L_{\mathrm{Dice}} = 1 - \frac{2\,|A \cap B|}{|A| + |B|}$$

where $A$ is the predicted segmentation region, $B$ is the ground truth segmentation region, and $|A \cap B|$ represents the intersection of the predicted and ground truth regions.

For dam surface crack segmentation, the area containing cracks is significantly smaller than the background, leading to a severe class imbalance between foreground and background pixels. During training, binary cross-entropy loss emphasizes optimizing the model at the pixel level, ensuring accurate local predictions, and Dice loss focuses on global optimization by encouraging greater overlap between the predicted and ground truth regions. These two components are complementary, addressing both local and global optimization needs. To balance these aspects, the composite loss function is defined as a combination of the BCE loss and the Dice loss.

This combined approach ensures that the model pays attention to both local and global features, which is essential for accurately segmenting dam surface cracks.
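A minimal sketch of such a composite loss is given below for flattened probability maps. The weights `w_bce` and `w_dice` are hypothetical (equal weighting is assumed here, since the weighting used in the paper is not stated in this text), and the soft Dice form with a small smoothing constant is a common implementation choice rather than a confirmed detail.

```python
import math


def bce_loss(probs, targets, eps=1e-7):
    """Mean binary cross-entropy over flattened pixels."""
    total = 0.0
    for p, y in zip(probs, targets):
        p = min(max(p, eps), 1.0 - eps)  # clip to avoid log(0)
        total += y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return -total / len(probs)


def dice_loss(probs, targets, eps=1e-7):
    """Soft Dice loss: 1 - 2|A ∩ B| / (|A| + |B|), with probabilities as soft membership."""
    intersection = sum(p * y for p, y in zip(probs, targets))
    denominator = sum(probs) + sum(targets)
    return 1.0 - (2.0 * intersection + eps) / (denominator + eps)


def composite_loss(probs, targets, w_bce=1.0, w_dice=1.0):
    """Weighted sum of BCE and Dice; the equal weights are an assumption."""
    return w_bce * bce_loss(probs, targets) + w_dice * dice_loss(probs, targets)


targets = [1, 0, 0, 0]
good = [0.9, 0.1, 0.1, 0.1]
bad = [0.1, 0.9, 0.9, 0.9]
print(composite_loss(good, targets), composite_loss(bad, targets))
```

The BCE term reacts to every misclassified pixel, whereas the Dice term depends only on region overlap, so it is not diluted by the large number of easy background pixels.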

4. Experiments

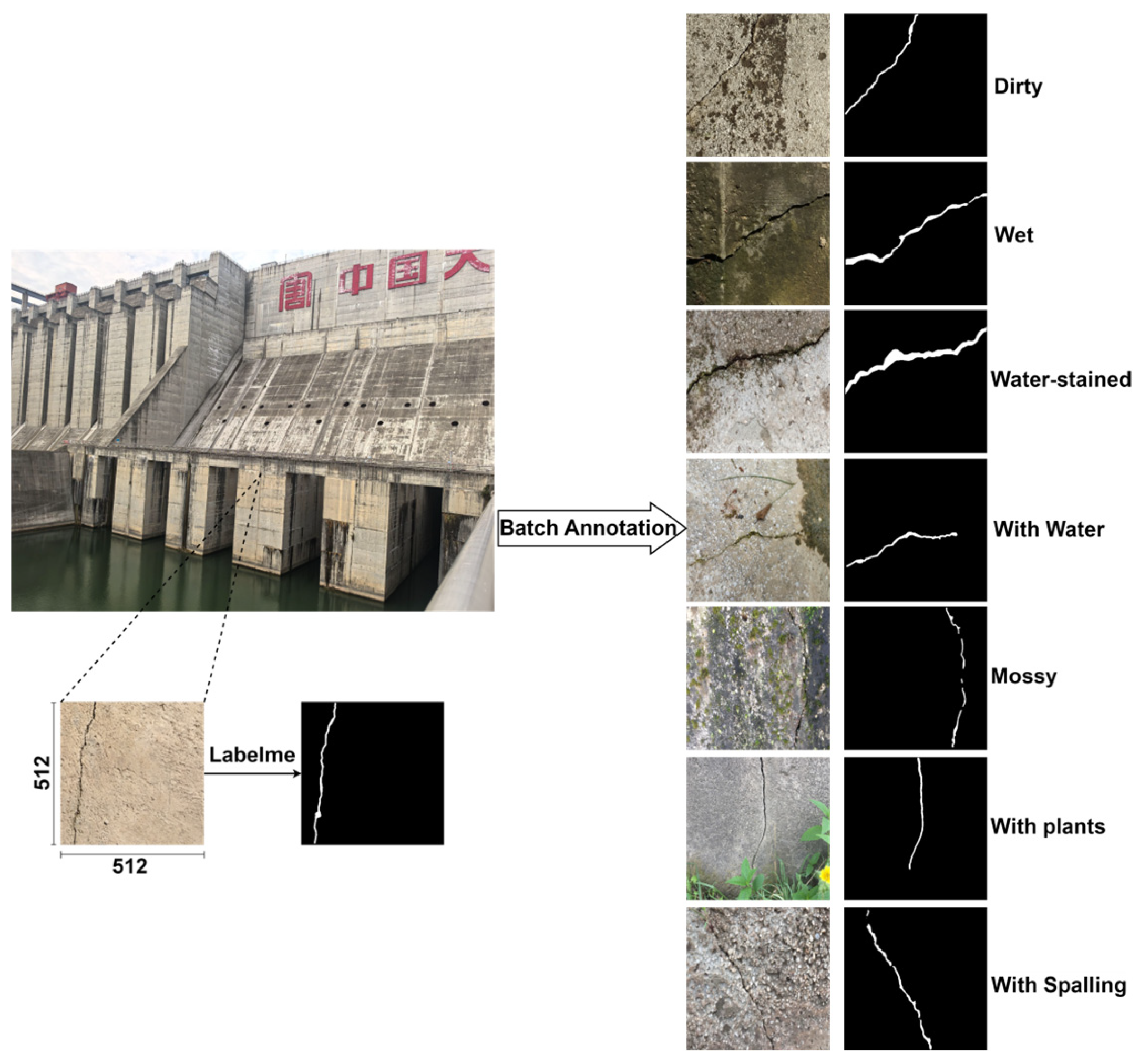

4.1. Dataset Construction

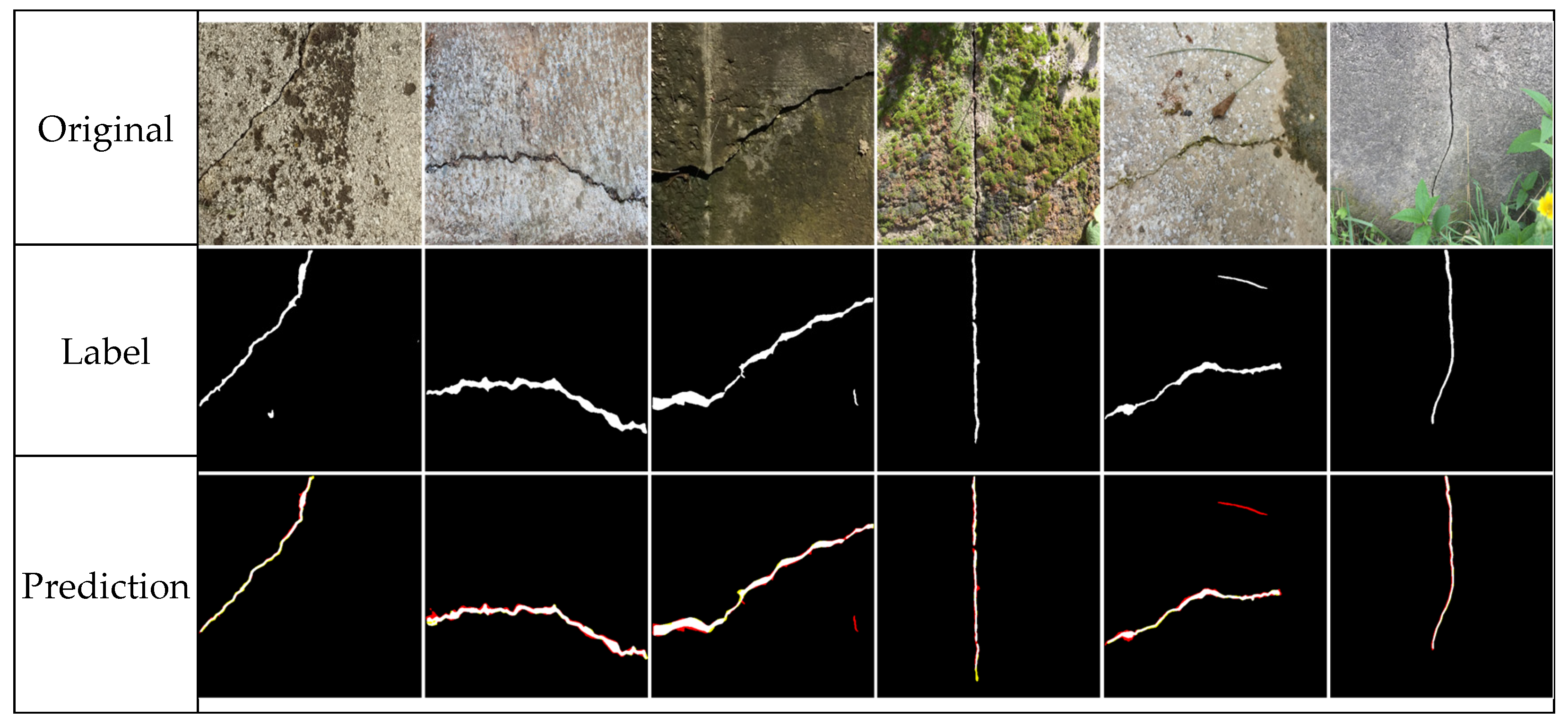

The dataset is the foundation of deep learning model training. In this study, in addition to some open-source data, crack images of a specific dam’s downstream area (including the dam surface and downstream auxiliary concrete structures) were captured using mobile phones and cameras [36]. The collected photos were then annotated at the pixel level using the image segmentation annotation software Labelme 5.6.0. Finally, the images and their labels were cropped to 512 × 512 pixels, resulting in a total of 400 labeled images that include complex backgrounds such as moisture, water stains, and moss. Examples of label files are shown in Figure 6.

4.2. Experimental Implementation Details

The training process of the model follows standard machine learning practice. The dataset is partitioned into training, validation, and test subsets with a ratio of 7:2:1. The training set is used for learning multi-level features from the input images, the validation set is used for tuning hyperparameters and preventing overfitting, and the test set is reserved for evaluating the final performance of the trained model. CCT Net is trained with 4 images per batch, for a total of 400 epochs on the training dataset. During training, the model is validated on the validation dataset every 10 epochs. L2 weight decay is applied in the Adam optimizer to penalize large weight values and improve generalization. Early stopping is adopted by monitoring the validation loss, halting training if no improvement is observed for 20 consecutive epochs.
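As a rough illustration of the 7:2:1 partition, the sketch below splits the 400 image indices into three disjoint subsets. The shuffling seed and the helper name `split_indices` are hypothetical; the paper does not state how the split was randomized.

```python
import random


def split_indices(n_samples, ratios=(7, 2, 1), seed=0):
    """Shuffle sample indices and split them into train/validation/test subsets."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)  # seed is an arbitrary, assumed choice
    total = sum(ratios)
    n_train = n_samples * ratios[0] // total
    n_val = n_samples * ratios[1] // total
    train = indices[:n_train]
    val = indices[n_train:n_train + n_val]
    test = indices[n_train + n_val:]  # remainder goes to the test subset
    return train, val, test


train_ids, val_ids, test_ids = split_indices(400)
print(len(train_ids), len(val_ids), len(test_ids))
```

For 400 images this gives 280 training, 80 validation, and 40 test images.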

To improve generalization and mitigate the severe foreground–background imbalance inherent in crack segmentation, we applied a set of online data augmentation techniques during training. These included random horizontal and vertical flips, 90° rotations, and brightness/contrast adjustments within a ±20% range. These augmentations increase the diversity of crack appearances and background contexts, reducing the likelihood of overfitting to dominant background patterns. In addition to data augmentation, we employed an online hard-example mining strategy to further address the imbalance between the small proportion of crack pixels and the dominant background pixels. For each mini-batch, the per-pixel loss was computed and ranked, and only the top 20% hardest pixels—those with the highest loss values—were retained for backpropagation. This ensures that the model focuses its learning on challenging regions, such as thin cracks, blurred edges, and low-contrast areas, rather than being dominated by abundant, easily classified background pixels.
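The hard-example mining step described above can be sketched as follows. The sketch assumes that the per-pixel loss is binary cross-entropy and that the retained losses are averaged; the text does not specify either detail, and the function names are hypothetical.

```python
import math


def per_pixel_bce(probs, targets, eps=1e-7):
    """Binary cross-entropy of each pixel in a flattened mini-batch."""
    losses = []
    for p, y in zip(probs, targets):
        p = min(max(p, eps), 1.0 - eps)  # clip to avoid log(0)
        losses.append(-(y * math.log(p) + (1 - y) * math.log(1.0 - p)))
    return losses


def ohem_loss(probs, targets, keep_ratio=0.2):
    """Average only the hardest pixels, i.e. the top `keep_ratio` by loss value."""
    losses = sorted(per_pixel_bce(probs, targets), reverse=True)
    keep = max(1, int(round(keep_ratio * len(losses))))
    return sum(losses[:keep]) / keep


# Ten background pixels: eight easy (p = 0.1) and two hard (p = 0.9).
probs = [0.1] * 8 + [0.9] * 2
targets = [0] * 10
print(ohem_loss(probs, targets))
```

In this toy batch only the two confidently wrong pixels contribute to the loss, so the eight easy background pixels no longer dilute the training signal.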

4.3. The Prediction of the Model

The performance of CCT Net on the test dataset is shown in Figure 7. It can be observed that, even with different background interferences (such as moss, sludge, and concrete spalling), CCT Net is able to accurately segment both the main body of the cracks and their finer branches from the original image.

5. Results and Discussion

5.1. Model Performance Evaluation Metrics

To quantify the performance differences between segmentation models, four evaluation metrics are used: Precision, Recall, F1 score, and mean Intersection over Union (mIoU). These metrics have practical physical significance, reflecting the crack segmentation performance from different perspectives. The Precision and Recall represent the proportions of crack pixels correctly and comprehensively detected in the predicted results and label files, respectively; F1 score is the harmonic mean of Precision and Recall; and mIoU measures the overlap between the predicted results and the ground truth, indicating the degree of matching between them.

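The calculation methods for these metrics are as follows:

$$\mathrm{Precision} = \frac{TP}{TP + FP}$$

$$\mathrm{Recall} = \frac{TP}{TP + FN}$$

$$F1 = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$$

$$\mathrm{IoU} = \frac{|A \cap B|}{|A \cup B|}$$

where $TP$ is the number of true positive samples, $FP$ is the number of false positive samples, $FN$ is the number of false negative samples, $A$ is the set of ground truth pixels, and $B$ is the set of pixels predicted by the model. The mIoU is obtained by averaging the IoU over the classes.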
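The metrics described in this subsection can be computed from a pair of flattened binary masks, as in the sketch below. It reports the IoU of the crack class only (averaging with the background IoU would give a two-class mIoU), returns 0.0 when a denominator is zero, and uses the hypothetical helper name `crack_metrics`; these are illustrative choices rather than the evaluation code used in the paper.

```python
def crack_metrics(pred, label):
    """Precision, Recall, F1, and crack-class IoU for flattened 0/1 masks."""
    tp = sum(1 for p, y in zip(pred, label) if p == 1 and y == 1)
    fp = sum(1 for p, y in zip(pred, label) if p == 1 and y == 0)
    fn = sum(1 for p, y in zip(pred, label) if p == 0 and y == 1)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    iou = tp / (tp + fp + fn) if tp + fp + fn else 0.0  # |A ∩ B| / |A ∪ B|
    return {"precision": precision, "recall": recall, "f1": f1, "iou": iou}


# Toy masks: two true positives, one false positive, one false negative.
pred = [1, 1, 1, 0, 0, 0]
label = [1, 1, 0, 1, 0, 0]
metrics = crack_metrics(pred, label)
print(metrics)
```

In this toy case Precision, Recall, and F1 all equal 2/3 while the IoU is 0.5, which illustrates that IoU penalizes both error types at once and is therefore never higher than F1.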

5.2. Model Performance Comparison

5.2.1. Comparison with the Classical Model

This section presents a comparative analysis of the performance of CCT Net against the segmentation model U-Net, based on convolutional neural networks [19], and Swin Transformer, based on Transformer architecture [23].

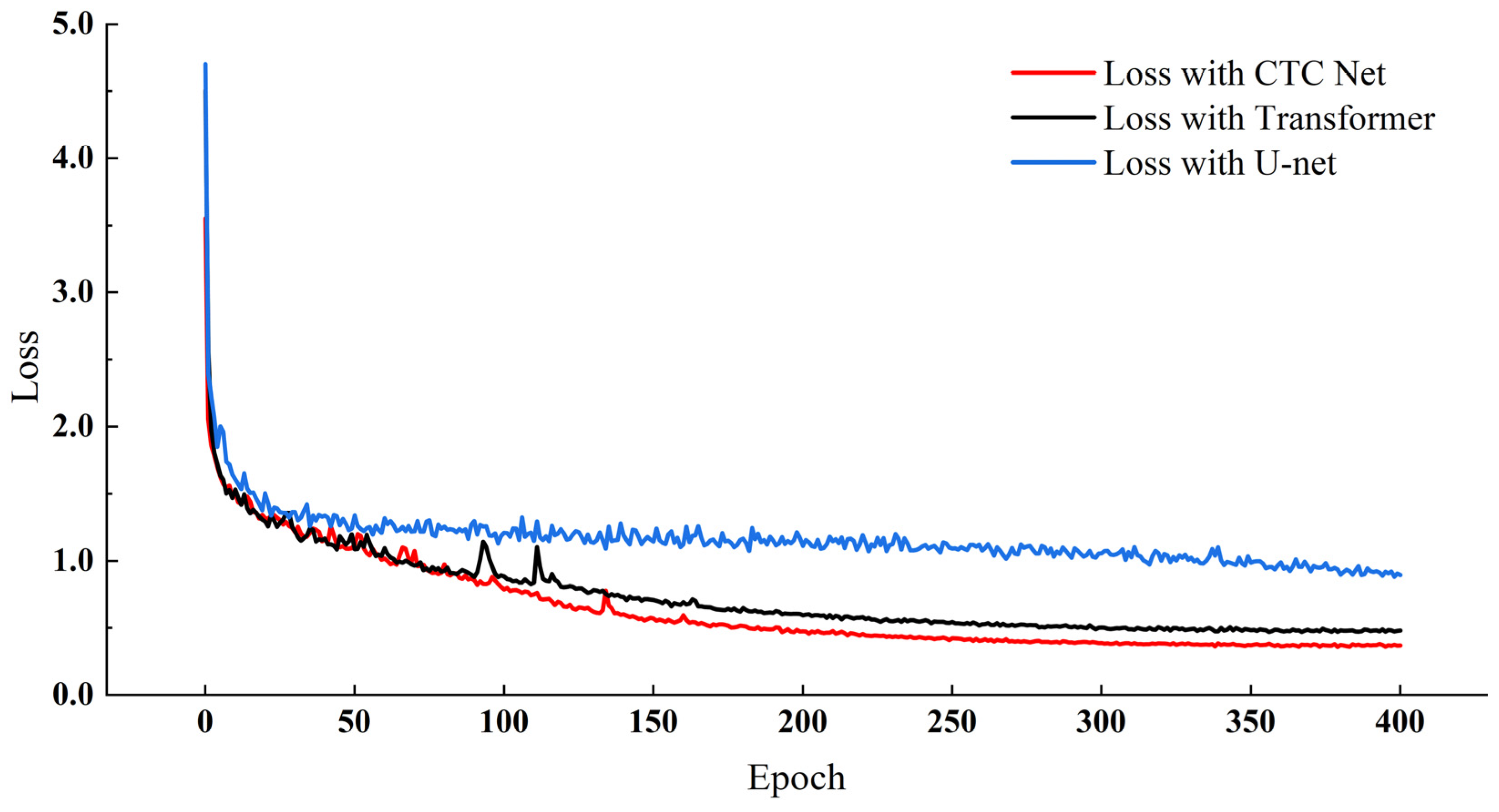

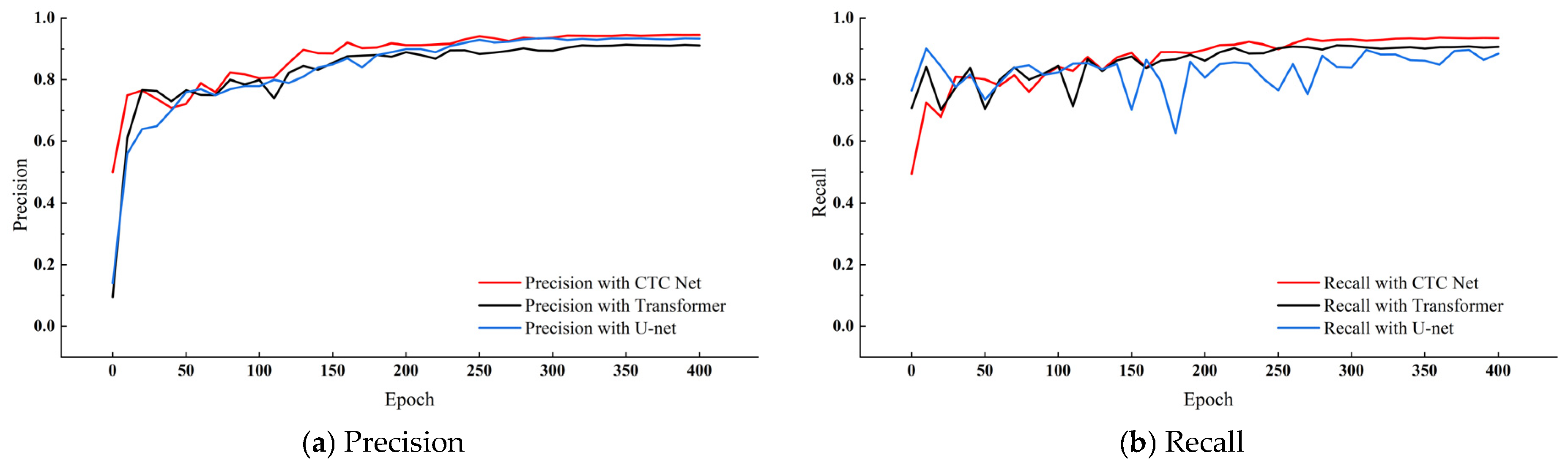

Figure 8 illustrates the loss variation during training for the three models, while Figure 9 shows the evaluation metrics on the validation dataset throughout the training process.

During training, the losses of CCT Net and Swin Transformer decrease rapidly and converge quickly, with their loss values being very close. By contrast, U-Net also converges quickly but maintains a higher loss, indicating that its ability to extract crack features has reached a bottleneck. When comparing the evaluation metrics of the three network structures on the validation dataset, CCT Net consistently performs the best, demonstrating superior and stable performance.

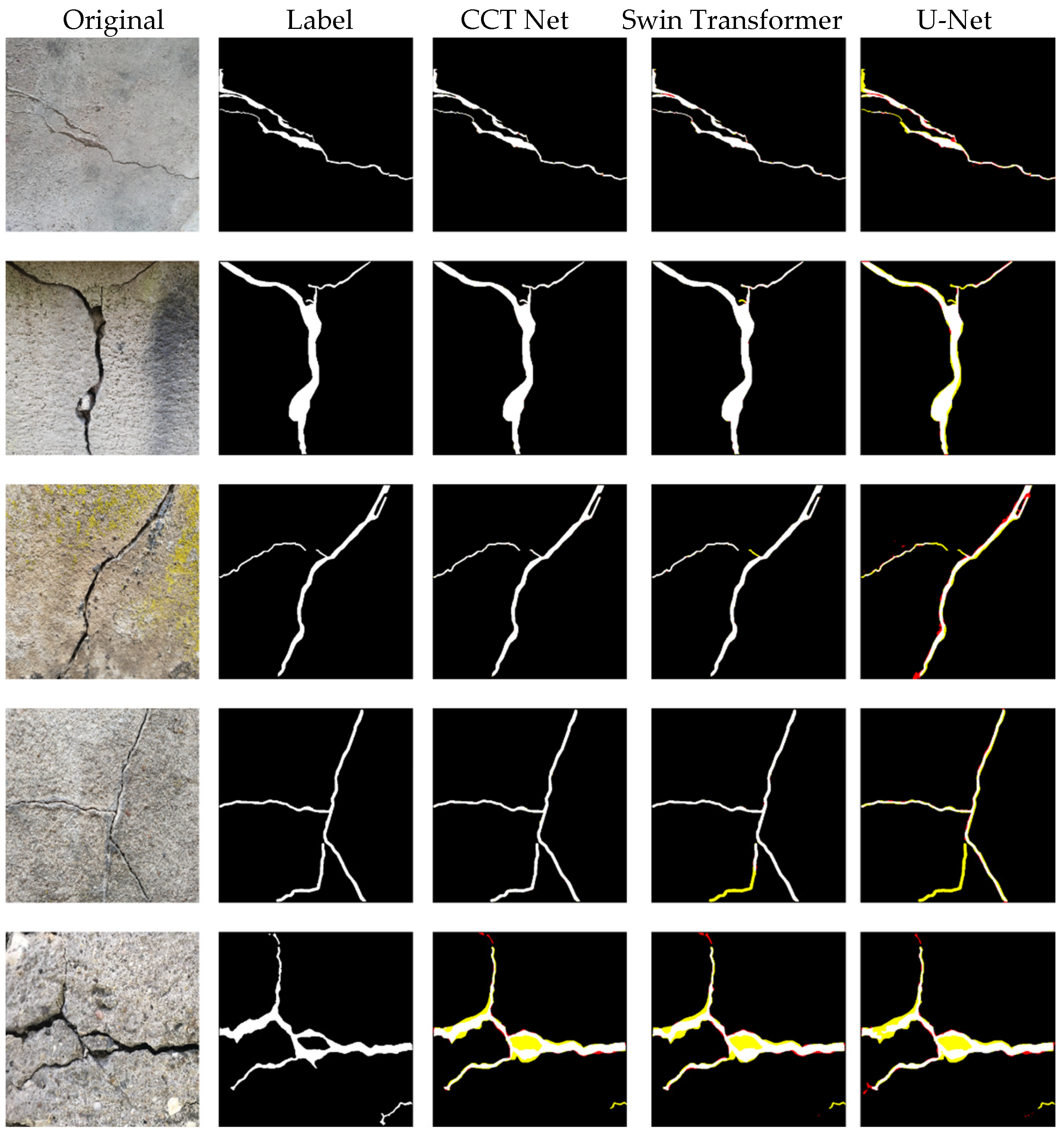

Representative segmentation results on the test dataset are illustrated in Figure 10. In these visualizations, white pixels indicate correctly segmented regions, red pixels represent false positives (i.e., background misclassified as cracks), and yellow pixels denote false negatives (i.e., cracks misclassified as background). As observed, the proposed network demonstrates strong performance in accurately segmenting crack regions. In comparison, Swin Transformer misses certain small, developing cracks, while U-Net also fails to detect some minor cracks and shows instances of pixel misclassification.

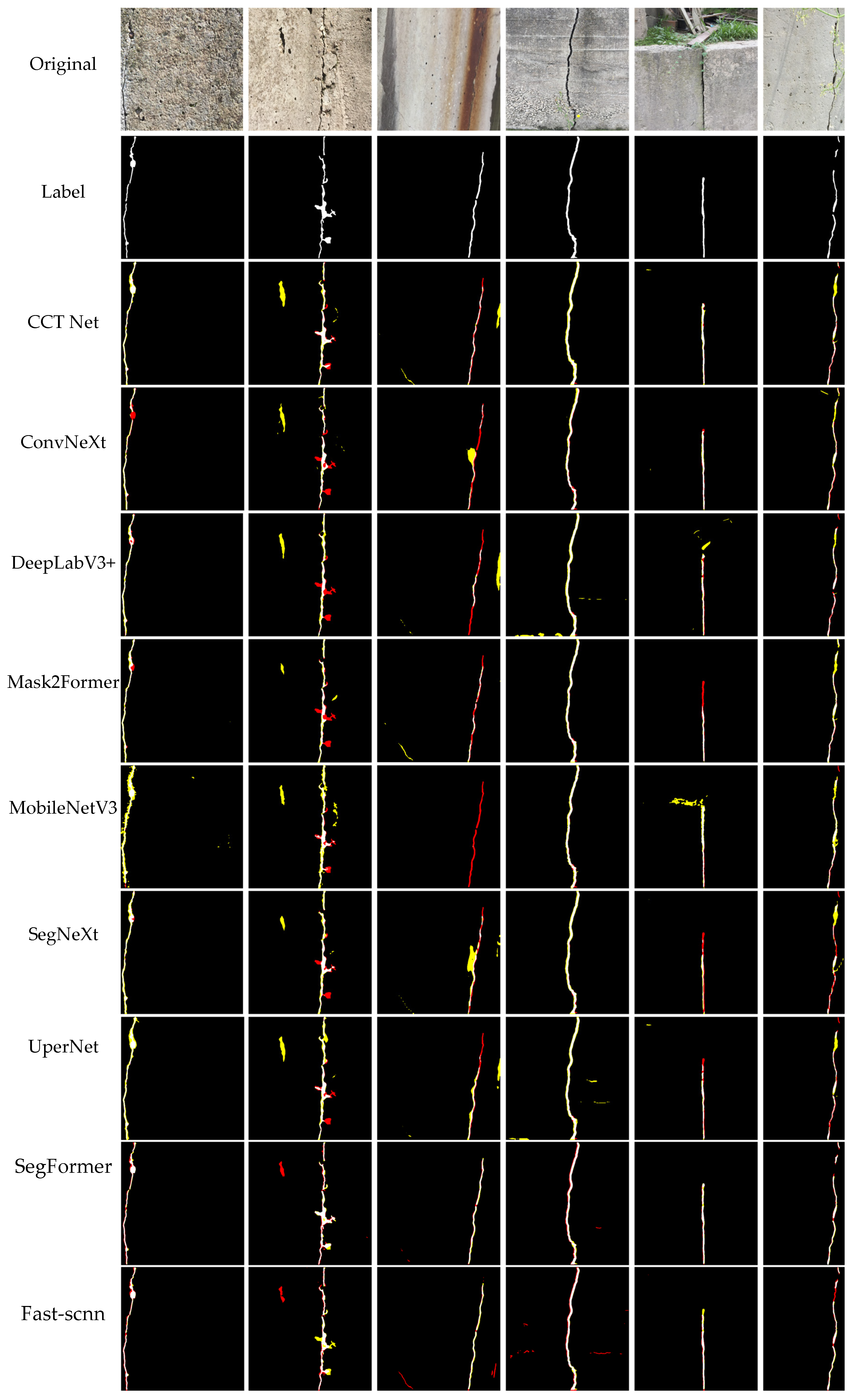

5.2.2. Comparison with Existing Models

With the rapid development of deep learning, a large number of segmentation models have been proposed. However, in order to improve the performance of crack segmentation in engineering applications, research on more accurate and effective models continues. In this context, CCT Net presents a novel architecture that attempts to improve the semantic segmentation performance by fusing the advantages of two different types of segmentation networks. To verify the performance of CCT Net, this section compares it with eight other existing segmentation models: ConvNeXt [37], DeepLabV3+ [38], Mask2Former [39], MobileNetV3 [40], SegNeXt [41], UperNet [42], SegFormer [43], and Fast-SCNN [44]. Each model is evaluated in a series of experiments, and the performance of each network model is shown in Table 1.

As shown in Table 1, among the nine segmentation models, CCT Net demonstrates outstanding performance. It ranks first in Recall, F1 score, and Intersection over Union (IoU) and is surpassed only in Precision, which reflects the strength of the CCT Net architecture.
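For reference, the four metrics reported in Table 1 can be computed from pixel counts as sketched below. This is an illustrative implementation under stated assumptions: masks are flattened sequences of 0/1 labels, counts are pooled over all pixels passed in, and the function name `segmentation_metrics` is hypothetical. The section does not state whether the paper pools pixels over the test set or averages per image.

```python
def segmentation_metrics(pred, truth):
    """Compute Precision, Recall, F1 and IoU for the crack class.

    pred and truth are equal-length sequences of 0/1 pixel labels.
    """
    if len(pred) != len(truth):
        raise ValueError("pred and truth must have the same length")
    tp = fp = fn = 0
    for p, t in zip(pred, truth):
        if p and t:
            tp += 1          # crack correctly detected
        elif p and not t:
            fp += 1          # background labelled as crack
        elif t and not p:
            fn += 1          # crack missed
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    iou = tp / (tp + fp + fn) if (tp + fp + fn) else 0.0
    return {"precision": precision, "recall": recall, "f1": f1, "iou": iou}
```

When all four values are computed from the same pooled counts, F1 and IoU are tied by IoU = F1 / (2 − F1), so a model that leads in F1 also leads in IoU.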

Figure 11 visually presents the prediction results of various models on selected images from the test set. For images with simple backgrounds, all models can accurately segment cracks, as there is minimal visual interference with the target objects. However, for images with more complex backgrounds and disturbances, the performance of different models varies significantly. The presence of numerous unrelated visual elements makes it difficult for the network to distinguish between the foreground and background.

5.2.3. Computational Cost and Inference Analysis

To comprehensively evaluate the deployment feasibility of the proposed model, we compared multiple semantic segmentation models in terms of parameter count (Params), floating-point operations (FLOPs), average inference latency (Latency), and frames per second (FPS). All experiments were conducted on the same hardware platform (NVIDIA RTX 4060Ti 16 GB GPU) with an input resolution of 512 × 512. The results are presented in Table 2.

From the comparison, Fast-SCNN exhibits the smallest model size (1.11 M parameters) and lowest FLOPs (3.98 G), achieving the highest FPS (79.15) due to its highly optimized lightweight convolutional design. MobileNetV3 also demonstrates competitive efficiency (11.21 M parameters, 45.73 G FLOPs, 52.76 FPS) owing to its streamlined depthwise separable convolutions. By contrast, high-capacity models such as ConvNeXt (60.24 M parameters, 234.94 G FLOPs) and UperNet (58.92 M parameters, 229.48 G FLOPs) have a lower FPS (30.08 and 28.26, respectively) due to their heavier backbones. Interestingly, CCT Net, despite having a relatively small parameter count (5.63 M) and low FLOPs (25.49 G), does not achieve the highest FPS (35.20). This is attributed to its Transformer-based architecture, where self-attention operations involve frequent tensor reshaping, memory access, and non-convolutional operations, which are less optimized for GPU parallelism compared to convolution-heavy architectures. These operations tend to be memory-bound rather than compute-bound, leading to a higher latency than expected from FLOPs alone.
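Average latency and FPS of the kind reported in Table 2 are typically obtained with a warm-up phase followed by timed repetitions. The sketch below shows that pattern for an arbitrary callable; it is not the authors' benchmarking script, and the function name `benchmark` and the default `warmup` and `runs` values are assumptions. On a GPU, kernels execute asynchronously, so the device would need to be synchronized before each clock reading (for example with `torch.cuda.synchronize()` in PyTorch).

```python
import time


def benchmark(fn, warmup=5, runs=20):
    """Return (average latency in ms, frames per second) for calling fn()."""
    # Warm-up calls are excluded from timing (caches, lazy initialisation).
    for _ in range(warmup):
        fn()
    start = time.perf_counter()
    for _ in range(runs):
        fn()
    elapsed = time.perf_counter() - start
    if elapsed <= 0:
        raise RuntimeError("workload too short to time reliably")
    latency_ms = 1000.0 * elapsed / runs
    fps = runs / elapsed
    return latency_ms, fps
```

With one image per call, FPS is simply 1000 divided by the latency in milliseconds. Under that assumption, the 35.20 FPS reported for CCT Net corresponds to roughly 28.4 ms per 512 × 512 image.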

Overall, lightweight CNN-based architectures such as Fast-SCNN and MobileNetV3 remain the most efficient for real-time applications, while Transformer-based models tend to trade inference speed for improved accuracy and global context modeling. Despite not achieving the highest FPS, the proposed CCT Net still maintains a competitive inference speed (35.20 FPS), which is sufficient for real-time structural health monitoring tasks.

5.3. Ablation Study

5.3.1. Ablation Experiment on Loss Function

Given the significant imbalance between crack pixels and background pixels in dam images, the choice of an appropriate loss function plays a critical role in guiding effective model training. As shown in Table 3, this section investigates the impact of different loss functions, namely binary cross-entropy (BCE), Dice loss, and a composite loss combining both, on the training performance of the proposed CCT Net.

The results demonstrate that employing the composite loss function leads to a notable improvement in the performance of CCT Net, yielding the highest scores across all four evaluation metrics: a Precision of 94.5%, Recall of 93.5%, F1 score of 94.0%, and mean Intersection over Union (mIoU) of 88.7%.

The binary cross-entropy loss focuses on correctly classifying individual pixels but overlooks the spatial coherence of cracks and their development areas, which may lead to discontinuous crack predictions. Dice loss regularizes the training process by calculating the overlap ratio between class pixels, emphasizing the reasoning ability for continuous regions. In the task of semantic segmentation for dam cracks, where there is severe class imbalance, it is essential to ensure the accuracy of each pixel’s classification while maintaining the spatial consistency of crack regions. Combining binary cross-entropy loss and Dice loss into a composite loss function significantly enhances the model’s reasoning ability, ensuring better segmentation results.
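The composite loss described above can be sketched as a weighted sum of the two terms. This is a minimal illustration rather than the authors' implementation: the equal weights, the smoothing constant, and the function names are assumptions, since this section does not specify how the two terms are combined. Inputs are flattened sequences of predicted crack probabilities and 0/1 ground-truth labels.

```python
import math


def bce_loss(probs, targets, eps=1e-7):
    """Mean binary cross-entropy over pixels."""
    total = 0.0
    for p, t in zip(probs, targets):
        p = min(max(p, eps), 1.0 - eps)   # clamp to avoid log(0)
        total -= t * math.log(p) + (1 - t) * math.log(1.0 - p)
    return total / len(probs)


def dice_loss(probs, targets, smooth=1.0):
    """Soft Dice loss: 1 minus the smoothed overlap ratio."""
    intersection = sum(p * t for p, t in zip(probs, targets))
    denominator = sum(probs) + sum(targets)
    return 1.0 - (2.0 * intersection + smooth) / (denominator + smooth)


def composite_loss(probs, targets, bce_weight=0.5, dice_weight=0.5):
    """Weighted sum of BCE and Dice loss (weights are assumed values)."""
    return (bce_weight * bce_loss(probs, targets)
            + dice_weight * dice_loss(probs, targets))
```

The Dice term is normalized by the size of the predicted and true crack regions rather than by the total pixel count, which is why it is less dominated by the abundant background pixels than BCE alone.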

5.3.2. Ablation Experiment on Feature Complementary Fusion

The Feature Complementary Fusion Module (FCFM) is a critical component of CCT Net. Since the feature maps extracted by the two encoders have different focuses, it is essential to design a method for effectively integrating these features. To verify the necessity of the FCFM, a comparison was conducted to evaluate the impact of including or excluding this module. The performance of the model on the test dataset is summarized in Table 4. The results indicate that integrating the FCFM substantially enhances the model’s performance, resulting in an improvement of nearly 5% across all four evaluation metrics.

The rationale for employing both CNN and Transformer architectures as encoders in CCT Net stems from their complementary strengths in capturing diverse image features. By integrating these features, the network achieves a more comprehensive understanding of images through multi-perspective and deep representation learning. This emphasizes the critical information in the features and enables the model to distinguish between different categories more effectively. Consequently, the FCFM enhances the model’s robustness and segmentation performance.

5.4. Analysis of Model Advantages

In the task of dam crack segmentation, differences in model architectures directly affect segmentation performance. U-Net is a classical convolutional neural network (CNN) architecture that learns the appearance features of cracks through successive convolutional layers. Because each convolutional kernel has a limited receptive field, the network concentrates on local regions of the image and captures edge and texture features through many stacked convolutions, which makes it well suited to detecting fine crack structures. However, the limited receptive field also makes it difficult to capture global contextual information, leading to potential discontinuities or missed detections when dealing with large-scale cracks, especially in complex scenes where background noise can interfere.

The Swin Transformer introduces a hierarchical structure and a shifted window-based self-attention mechanism, which provides excellent global modeling capabilities. Based on the self-attention mechanism, it can effectively model long-range dependencies between each pixel and the rest of the image, making it suitable for handling long and complex dam crack images. However, its excessive focus on the correlation between each region and all other regions in the image can hinder its ability to capture local detailed features, creating a bottleneck in semantic crack segmentation.

CCT Net combines the strengths of both U-Net and Swin Transformer by introducing the Feature Complementary Fusion Module (FCFM) to achieve deep integration of local and global features. Compared to the two aforementioned models, CCT Net offers the following advantages:

- ① It fully leverages the CNN’s capability to capture fine crack edges, enhancing local feature extraction;

- ② It utilizes the Transformer’s strength in capturing global information, overcoming U-Net’s limited receptive field;

- ③ The hybrid structure reduces model complexity while maintaining high accuracy, thereby optimizing computational efficiency.

6. Conclusions and Future Research

This paper proposes CCT Net, a dam surface crack segmentation model that combines the complementary strengths of the CNN and Transformer. Considering the unique characteristics of concrete dam cracks, CCT Net fully leverages the CNN’s advantage in local feature extraction and Transformer’s capability in capturing global information. Through a two-stage feature extraction network, it effectively extracts crack features from the dam surface from both local and global perspectives.

For different feature maps, this study introduces a Feature Complementary Fusion Module (FCFM), which enables the deep fusion of features extracted in the two stages. This significantly enhances the segmentation performance of the model, achieving impressive results with a Precision of 94.5%, Recall of 93.5%, F1 score of 94.0%, and mean IoU of 88.7%.

Two ablation studies were conducted to evaluate the impact of the loss function and the Feature Complementary Fusion Module (FCFM) on the performance of the proposed CCT Net model. Comparative experiments with U-Net, a representative CNN-based segmentation model, and Swin Transformer, a Transformer-based architecture, demonstrate that CCT Net achieves superior performance across all evaluation metrics. Furthermore, comparisons with other state-of-the-art segmentation models confirm that CCT Net consistently exhibits outstanding performance. In addition to its high accuracy, CCT Net achieves competitive computational efficiency, with only 5.63 M parameters, 25.49 G FLOPs, and an inference speed of 35.20 FPS, making it well-suited for real-time dam crack monitoring applications.

Future work will focus on two aspects. First, because the dataset used in this study is drawn entirely from dry environments, the segmentation performance for dam surface cracks located below the waterline is not ideal, primarily due to insufficient underwater lighting and water turbidity. In addition, the current dataset does not provide a balanced number of samples across different crack types (e.g., hairline, transverse, diagonal, alligator cracks), which limits type-specific performance analysis. Future dataset expansion will address both environmental diversity and crack-type balance, enabling more comprehensive evaluations and improving the generalizability of the proposed method. Second, although CCT Net demonstrates excellent performance, its segmentation speed can be further improved to meet more stringent real-time requirements. Future work will explore lightweight optimization strategies, such as model pruning, quantization, or knowledge distillation, to reduce computational cost while maintaining high segmentation accuracy.

Author Contributions

Conceptualization, F.S.; methodology, H.L.; software, H.L.; validation, F.S.; formal analysis, F.S.; investigation, H.L.; resources, H.L.; data curation, F.S.; writing—original draft preparation, H.L.; writing—review and editing, F.S.; visualization, H.L.; supervision, F.S.; project administration, F.S.; funding acquisition, F.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Key R&D Program of China, grant number 2021YFC3090102.

Data Availability Statement

Data will be made available on request.

Conflicts of Interest

The affiliations of Dr. Hongzheng Ling (Datang Sichuan Power Generation Co., Ltd.) and Dr. Futing Sun (Power China Huadong Engineering Co., Ltd.) jointly supported the research under the National Key R&D Program of China. Beyond this collaboration, there are no potential conflicts of interest.

References

1. Kang, F.; Li, J.; Zhao, S.; Wang, Y. Structural health monitoring of concrete dams using long-term air temperature for thermal effect simulation. Eng. Struct. 2019, 180, 642–653.

2. Ye, X.-W.; Jin, T.; Li, Z.; Ma, S.; Ding, Y.; Ou, Y. Structural crack detection from benchmark data sets using pruned fully convolutional networks. J. Struct. Eng. 2021, 147, 04721008.

3. Hamishebahar, Y.; Guan, H.; So, S.; Jo, J. A comprehensive review of deep learning-based crack detection approaches. Appl. Sci. 2022, 12, 1374.

4. Shi, P.; Shao, S.; Fan, X.; Zhou, Z.; Xin, Y. MCL-CrackNet: A Concrete Crack Segmentation Network Using Multi-level Contrastive Learning. IEEE Trans. Instrum. Meas. 2023, 72, 5030415.

5. Chen, B.; Zhang, H.; Wang, G.; Huo, J.; Li, Y.; Li, L. Automatic concrete infrastructure crack semantic segmentation using deep learning. Autom. Constr. 2023, 152, 104950.

6. O’Shea, K. An introduction to convolutional neural networks. arXiv 2015, arXiv:1511.08458.

7. Vaswani, A. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30, 5998–6008.

8. Dosovitskiy, A. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

9. Zhao, S.; Kang, F.; Li, J. Intelligent segmentation method for blurred cracks and 3D mapping of width nephograms in concrete dams using UAV photogrammetry. Autom. Constr. 2024, 157, 105145.

10. Canny, J. A computational approach to edge detection. IEEE Trans. Pattern Anal. Mach. Intell. 1986, PAMI-8, 679–698.

11. Gonzales, R.C.; Wintz, P. Digital Image Processing; Addison-Wesley Longman Publishing Co., Inc.: Upper Saddle River, NJ, USA, 1987.

12. Li, X.; Tang, Y.; Zhu, W.; Wang, X.; Zhang, C.; Liu, X.; Zhu, L. Automatic measurement and characterization of surface crack defect in steel based on morphological features. In Proceedings of the 2016 4th International Conference on Machinery, Materials and Computing Technology, Hangzhou, China, 23–24 January 2016; pp. 753–756.

13. Lingfang, S.; Guocheng, F.; Wei, M. A Novel SVM for Crack Edge Checking of Coal CT Image. In Proceedings of the 2010 International Conference on Innovative Computing and Communication and 2010 Asia-Pacific Conference on Information Technology and Ocean Engineering, Macao, China, 30–31 January 2010; pp. 75–78.

14. Shi, Y.; Cui, L.; Qi, Z.; Meng, F.; Chen, Z. Automatic road crack detection using random structured forests. IEEE Trans. Intell. Transp. Syst. 2016, 17, 3434–3445.

15. Ali, R.; Chuah, J.H.; Talip, M.S.A.; Mokhtar, N.; Shoaib, M.A. Structural crack detection using deep convolutional neural networks. Autom. Constr. 2022, 133, 103989.

16. Huang, H.; Zhao, S.; Zhang, D.; Chen, J. Deep learning-based instance segmentation of cracks from shield tunnel lining images. Struct. Infrastruct. Eng. 2022, 18, 183–196.

17. Wang, R.; Chencho; An, S.; Li, J.; Li, L.; Hao, H.; Liu, W. Deep residual network framework for structural health monitoring. Struct. Health Monit. 2021, 20, 1443–1461.

18. Wang, W.; Su, C.; Han, G.; Dong, Y. Efficient segmentation of water leakage in shield tunnel lining with convolutional neural network. Struct. Health Monit. 2024, 23, 671–685.

19. Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Medical Image Computing and Computer-Assisted Intervention—MICCAI 2015: 18th International Conference, Munich, Germany, 5–9 October 2015, Proceedings, part III 18; Springer: Cham, Switzerland, 2015; pp. 234–241.

20. Chen, L.-C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. Deeplab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected crfs. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 834–848.

21. Wang, J.; Zeng, Z.; Sharma, P.K.; Alfarraj, O.; Tolba, A.; Zhang, J.; Wang, L. Dual-path network combining CNN and transformer for pavement crack segmentation. Autom. Constr. 2024, 158, 105217.

22. Ai, H.; Wang, K.; Wang, Z.; Lu, H.; Tian, J.; Luo, Y.; Xing, P.; Huang, J.-Y.; Li, H. Dynamic Pyramid Network for Efficient Multimodal Large Language Model. arXiv 2025, arXiv:2503.20322.

23. Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 10012–10022.

24. Wang, W.; Su, C. Transformer-based crack segmentation for concrete structures in complex scenarios. Struct. Concr. 2025.

25. Zhang, J.; Yang, X.; Wang, W.; Brilakis, I.; Davletshina, D.; Wang, H. Robust ELM-PID tracing control on autonomous mobile robot via transformer-based pavement crack segmentation. Measurement 2025, 242, 116045.

26. Wang, R.; Shao, Y.; Li, Q.; Li, L.; Li, J.; Hao, H. A novel transformer-based semantic segmentation framework for structural condition assessment. Struct. Health Monit. 2024, 23, 1170–1183.

27. Wu, Y.; Li, S.; Zhang, J.; Li, Y.; Li, Y.; Zhang, Y. Dual attention transformer network for pixel-level concrete crack segmentation considering camera placement. Autom. Constr. 2024, 157, 105166.

28. Li, H.; Wang, W.; Wang, M.; Li, L.; Vimlund, V. A review of deep learning methods for pixel-level crack detection. J. Traffic Transp. Eng. Engl. Ed. 2022, 9, 945–968.

29. Zheng, S.; Lu, J.; Zhao, H.; Zhu, X.; Luo, Z.; Wang, Y.; Fu, Y.; Feng, J.; Xiang, T.; Torr, P.H. Rethinking semantic segmentation from a sequence-to-sequence perspective with transformers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 6881–6890.

30. Wang, W.; Xie, E.; Li, X.; Fan, D.-P.; Song, K.; Liang, D.; Lu, T.; Luo, P.; Shao, L. Pyramid vision transformer: A versatile backbone for dense prediction without convolutions. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 568–578.

31. Bai, S.; Ma, M.; Yang, L.; Liu, Y. Pixel-wise crack defect segmentation with dual-encoder fusion network. Constr. Build. Mater. 2024, 426, 136179.

32. Wu, M.; Jia, M.; Wang, J. TMCrack-Net: A U-shaped network with a feature pyramid and transformer for mural crack segmentation. Appl. Sci. 2022, 12, 10940.

33. Zhou, Y.; Ali, R.; Mokhtar, N.; Harun, S.W.; Iwahashi, M. MixSegNet: A novel crack segmentation network combining CNN and Transformer. IEEE Access 2024, 12, 111535–111545.

34. Ruby, U.; Yendapalli, V. Binary cross entropy with deep learning technique for image classification. Int. J. Adv. Trends Comput. Sci. Eng. 2020, 9, 5393–5397.

35. Li, X.; Sun, X.; Meng, Y.; Liang, J.; Wu, F.; Li, J. Dice loss for data-imbalanced NLP tasks. arXiv 2019, arXiv:1911.02855.

36. Çelik, F.; König, M. A sigmoid-optimized encoder–decoder network for crack segmentation with copy-edit-paste transfer learning. Comput.-Aided Civ. Infrastruct. Eng. 2022, 37, 1875–1890.

37. Liu, Z.; Mao, H.; Wu, C.-Y.; Feichtenhofer, C.; Darrell, T.; Xie, S. A convnet for the 2020s. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 11976–11986.

38. Chen, L.-C.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-decoder with atrous separable convolution for semantic image segmentation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 801–818.

39. Cheng, B.; Misra, I.; Schwing, A.G.; Kirillov, A.; Girdhar, R. Masked-attention mask transformer for universal image segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 1290–1299.

40. Howard, A.; Sandler, M.; Chu, G.; Chen, L.-C.; Chen, B.; Tan, M.; Wang, W.; Zhu, Y.; Pang, R.; Vasudevan, V. Searching for mobilenetv3. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1314–1324.

41. Guo, M.-H.; Lu, C.-Z.; Hou, Q.; Liu, Z.; Cheng, M.-M.; Hu, S.-M. Segnext: Rethinking convolutional attention design for semantic segmentation. Adv. Neural Inf. Process. Syst. 2022, 35, 1140–1156.

42. Xiao, T.; Liu, Y.; Zhou, B.; Jiang, Y.; Sun, J. Unified perceptual parsing for scene understanding. In Proceedings of the European Conference on Computer Vision (ECCV), Milan, Italy, 29 September–4 October 2018; pp. 418–434.

43. Xie, E.; Wang, W.; Yu, Z.; Anandkumar, A.; Alvarez, J.M.; Luo, P. SegFormer: Simple and efficient design for semantic segmentation with transformers. Adv. Neural Inf. Process. Syst. 2021, 34, 12077–12090.

44. Poudel, R.P.; Liwicki, S.; Cipolla, R. Fast-scnn: Fast semantic segmentation network. arXiv 2019, arXiv:1902.04502.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).