1. Introduction

Enhancing low-light images in construction scenarios is crucial for reliable structural health monitoring and automated defect detection in building projects. During nighttime inspections or in dimly lit indoor environments—such as basements or industrial facilities—images of structures often suffer from poor visual quality due to inadequate natural light or limited lighting equipment. These issues manifest as poor visibility, low brightness, reduced contrast, and blurred textures. Such degradation not only hampers inspectors’ visual assessments but also significantly diminishes the effectiveness of computer vision-based automated defect detection systems in construction. Many computer vision tasks for building inspection depend on clear, visible image inputs. Therefore, capturing sharp structural images in nighttime or low-light conditions is a significant technical challenge in industrial inspection and structural health monitoring.

This paper investigates and validates low-light enhancement methods for building inspection images, using indoor concrete walls as a representative research subject. Concrete walls are among the most common structural components in buildings, so concrete wall defect detection is highly representative and well suited to validating the applicability of the proposed method to building inspection. When images of concrete walls are captured in indoor low-light environments, the imaging process can be modeled as

$$y = \phi\left(x \circledast k + n\right),$$

where $y$ is the observed low-light wall image and $x$ is a clear reference wall image that contains defects such as cracks and peeling. The point spread function (PSF) blur kernel [1], denoted $k$, results from the long exposure needed to compensate for insufficient illumination or from the shaking of a handheld inspection device. The term $n$ is additive sensor noise, which includes both shot noise and readout noise and is notably amplified in low-light conditions. Finally, $\phi(\cdot)$ is the function that governs the dynamic range and pixel saturation, and $\circledast$ is the convolution operator.
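To illustrate this imaging model (a clear image convolved with a PSF, corrupted by additive noise, and passed through a saturation function), the NumPy sketch below synthesizes a degraded observation. It is not the acquisition pipeline behind the paper's dataset. The horizontal motion PSF, the circular (FFT) boundary handling, the Poisson-plus-Gaussian noise model, the `photons` and `read_sigma` parameters, and the use of clipping as the saturation function are all illustrative assumptions, and the helper names are hypothetical.

```python
import numpy as np


def motion_blur_kernel(length: int) -> np.ndarray:
    """Horizontal linear-motion PSF k (an illustrative choice), normalized to sum to 1."""
    k = np.zeros((length, length))
    k[length // 2, :] = 1.0
    return k / k.sum()


def convolve2d_circular(x: np.ndarray, k: np.ndarray) -> np.ndarray:
    """Compute x (*) k with circular boundary handling via the 2D FFT."""
    kh, kw = k.shape
    padded = np.zeros_like(x, dtype=float)
    padded[:kh, :kw] = k
    # Move the kernel center to pixel (0, 0) so the output is not spatially shifted.
    padded = np.roll(padded, (-(kh // 2), -(kw // 2)), axis=(0, 1))
    return np.real(np.fft.ifft2(np.fft.fft2(x) * np.fft.fft2(padded)))


def degrade(x, k, photons=50.0, read_sigma=0.01, seed=0):
    """Simulate y = phi(x (*) k + n) for a grayscale image x with values in [0, 1]."""
    rng = np.random.default_rng(seed)
    blurred = convolve2d_circular(x, k)
    # Shot noise: Poisson photon counts; a small photon budget mimics dim lighting.
    shot = rng.poisson(np.clip(blurred, 0.0, None) * photons) / photons
    # Read noise: zero-mean Gaussian added by the sensor electronics.
    noisy = shot + rng.normal(0.0, read_sigma, size=x.shape)
    # phi: limited dynamic range, modeled here as clipping (saturation) to [0, 1].
    return np.clip(noisy, 0.0, 1.0)


# Usage: degrade a dim synthetic "wall" patch with a 9-pixel horizontal motion blur.
clean = np.random.default_rng(1).uniform(0.0, 0.3, size=(64, 64))
observed = degrade(clean, motion_blur_kernel(9))
```

Lowering `photons` increases the relative shot noise, which mirrors the statement that sensor noise is amplified in low light.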

Compared with images captured in bright daylight, indoor low-light wall images exhibit three major degradation characteristics, which constitute the core challenges in defect detection [2]. First, a “flooding effect” occurs because dim lighting strongly compresses the grayscale gradient of crack edges [3] (to only 1/6 to 1/8 of its value under normal light), drastically reducing the contrast between peeling areas and the background. As a result, very small cracks (width < 0.2 mm) become nearly invisible, severely impairing manual recognition accuracy. Second, structural destruction arises from prolonged exposure. To capture discernible images at night or in low light, the exposure time must be extended to gather sufficient light; however, even slight device movement during a long exposure can cause artifacts, ghosting, and motion blur [4], which blur linear cracks, disrupt their continuity, and substantially increase the rate of missed crack detections [5]. Third, feature distortion results from equipment limitations. Handheld devices commonly used in industrial photography, such as mobile phones and portable cameras, are constrained by small sensors and fixed apertures, and their low-light noise reduction algorithms overly smooth high-frequency details, so tiny defects such as honeycomb surfaces are misinterpreted as uniform background.

These degradation phenomena significantly compromise the reliability and effectiveness of automated wall defect detection [2]. Traditional threshold segmentation algorithms have false detection rates exceeding 60% because defects and background have similar grayscale values. Deep learning models such as YOLOv8 [6] lose more than 30% in mean average precision (mAP) when the signal-to-noise ratio (SNR) of the input image falls below 10 dB. Combining low-light enhancement [7] with deblurring as a preprocessing step is therefore crucial for overcoming the coupled low-light and blur degradation and for ensuring accurate defect detection. To meet this challenge, we propose an efficient convolutional neural network (CNN) that processes spatial and frequency-domain information concurrently. In the spatial domain, large kernel convolution [8] built on the inception architecture [9] captures the multidirectional characteristics [10,11,12] of cracks, and a gating mechanism [13] dynamically suppresses noise, counteracting the loss of defect continuity caused by blur. In the frequency domain, amplitude spectrum adjustment enhances defect-related high-frequency components, such as the energy spectrum peak at crack edges [3], and balances global illumination without over-smoothing high-frequency details.

The main contributions of this work are summarized below:

A lightweight neural network was developed that integrates frequency-domain [14] attention with large-receptive-field spatial attention. By combining frequency-domain and spatial information, the network enhances defect characteristics such as crack edges, honeycomb surfaces, holes, and spalling boundaries on wall surfaces.

Our model demonstrated outstanding performance on a custom indoor concrete wall dataset of low-light defect images, improving the PSNR by 1.13 dB and the SSIM by 0.06 over traditional methods. It also increased the mean average precision (mAP) of a downstream YOLOv8 defect detection [2] model by 7.1% and reduced the computational cost [15] by over 50% compared with existing multitask methods.

Using this model, we establish a new benchmark for low-light enhancement [7] in wall defect detection [2], achieving an optimized balance between enhancement quality and support for downstream detection.

2. Related Work

Low-Light Image Enhancement: Early methods relied primarily on image statistics or prior information, often rooted in the well-known Retinex theory [16]. With the rise of deep learning [6], modern low-light enhancement approaches have largely adopted convolutional neural networks (CNNs) [17]; notable examples include RetinexNet (together with its associated LOL dataset), Zero-DCE, and SCI. More recent research has explored transformers, such as RetinexFormer, as well as specialized techniques such as FourLLIE, which adjusts the image amplitude in the Fourier frequency domain to enhance illumination. These advances have also produced more complex architectures. For instance, DiffDark [18], which is based on a diffusion model, extracts color priors through a residual decomposition network and illumination priors via histogram equalization. It uses a conditional correlation module to mine the correlations between these priors, an attention module to optimize illumination, and non-uniform sampling to accelerate the diffusion process. Wang et al. [19] introduced a zero-reference framework that builds a physical quadruple prior from light transport theory to extract illumination-invariant features; the framework integrates a pretrained diffusion model for prior-to-image mapping and can be distilled into lightweight versions. However, these methods are not designed specifically for building defect detection, where preserving defect features and minimizing redundant wall background information are crucial. In this work, we address wall background noise and blurred defect features under low-light conditions in building environments. We developed a feature refinement feedforward network that reduces background redundancy, combined with a frequency response module that dynamically enhances high-frequency defect components in the frequency domain while balancing overall illumination.

Image Deblurring: Image deblurring techniques are generally divided into blind and non-blind approaches. Non-blind methods use a known blur kernel [1] (or point spread function, PSF) during restoration, whereas blind methods operate without prior knowledge of the blur degradation process. Deep learning [6]-based techniques have recently surged in both categories and outperform traditional methods. End-to-end learning-based blind deblurring offers significant advantages: it requires only blurry–clear image pairs for training, with no need for PSF estimation or sensor-related information. Most such methods are sensor-independent and enhance sRGB images from various camera systems. Currently, mainstream methods in this field rely on CNNs [17]. For instance, DeblurGAN employs generative adversarial networks (GANs) [20,21] to tackle deblurring, and iterative methods and diffusion models have also been applied. In this work, we tackle blurred cracks and the loss of spatial continuity in low-light architectural environments by designing the InceptionDWConv2d module to capture multidirectional, multiscale crack features. A gating mechanism dynamically suppresses noise and restores the spatial continuity of defects, facilitating clear image restoration for real-time defect detection.
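To make the non-blind setting concrete, the sketch below applies classical Wiener deconvolution, which assumes that the blur kernel is known. It is a textbook baseline, not one of the learning-based methods discussed above. The circular boundary model, the Gaussian PSF in the usage lines, and the noise-to-signal ratio `nsr` are assumptions, and the function names are hypothetical.

```python
import numpy as np


def psf_to_otf(k: np.ndarray, shape) -> np.ndarray:
    """Zero-pad the PSF to the image size, center it at (0, 0), and take its 2D FFT."""
    padded = np.zeros(shape)
    kh, kw = k.shape
    padded[:kh, :kw] = k
    padded = np.roll(padded, (-(kh // 2), -(kw // 2)), axis=(0, 1))
    return np.fft.fft2(padded)


def blur(x: np.ndarray, k: np.ndarray) -> np.ndarray:
    """Forward model: circular convolution of x with the PSF k."""
    return np.real(np.fft.ifft2(np.fft.fft2(x) * psf_to_otf(k, x.shape)))


def wiener_deconvolve(y: np.ndarray, k: np.ndarray, nsr: float = 1e-3) -> np.ndarray:
    """Non-blind deblurring with a known kernel k and an assumed noise-to-signal ratio."""
    H = psf_to_otf(k, y.shape)
    # Wiener filter conj(H) / (|H|^2 + NSR): inverts the blur where H is strong and is
    # damped where H is near zero, so noise is not amplified without bound.
    G = np.conj(H) / (np.abs(H) ** 2 + nsr)
    return np.real(np.fft.ifft2(np.fft.fft2(y) * G))


# Usage: blur a random image with a 7 x 7 Gaussian PSF, then deconvolve with the same PSF.
rng = np.random.default_rng(0)
sharp = rng.uniform(size=(64, 64))
t = np.arange(-3, 4)
g = np.exp(-t ** 2 / 2.0)
psf = np.outer(g, g) / np.outer(g, g).sum()
blurry = blur(sharp, psf)
restored = wiener_deconvolve(blurry, psf)
```

A blind method would additionally have to estimate `psf` from `blurry`, which is exactly the step that end-to-end learned models avoid.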

Low-Light Blurry Image Enhancement: Enhancing blurry images captured in low-light conditions is a complex task that has not been extensively explored in the literature [22,23,24,25]. NBDN [22] introduced a non-blind network for restoring saturated nighttime images; it must account for noise and saturation when deconvolving images, which highlights the limitations of previous methods in this setting. LEDNet [25] addressed the joint enhancement of images affected by both blur and overexposure in low-light settings, a scenario common on smartphones that use long exposure times in dim environments. To this end, its authors developed an encoder–decoder network and introduced the widely used LOLBlur dataset. We exploit the strong relationship between low light and blur in architectural scenarios to propose an efficient and robust frequency-domain restoration network (FSRNet). Through dual-domain collaboration, in which the encoder adjusts the frequency-domain amplitude and the decoder captures spatial-domain defect features, FSRNet jointly optimizes low-light enhancement, deblurring, and defect detail preservation. Its lightweight design is suitable for real-time processing on the edge devices used in architectural inspections.

3. Materials and Methods

Enhancing low-light images in architectural contexts necessitates addressing the diverse and complex nature of building structures. To thoroughly explore this issue and validate our approach, we focus on indoor concrete wall low-light defect detection as a representative application in architectural settings. Concrete wall defect detection is particularly significant in these contexts for several reasons: First, concrete is a fundamental and widely used material in construction, directly impacting building safety. Second, enhancing low-light images of wall defects—such as cracks, spalling, and honeycombing—addresses key technical challenges in architectural low-light enhancement, including detail preservation, noise reduction, and contrast enhancement. Lastly, the relatively uniform structure of walls offers an ideal platform for in-depth analysis of low-light enhancement techniques in architectural scenes.

We base our network design on the Metaformer architecture [26], simplifying it into a fundamental module with two key components: global attention, which acts as a feature mixer, and a feedforward network. In the general Metaformer form, each component is applied to a normalized input and added back through a residual connection:

$$X' = X + \mathrm{Mixer}\left(\mathrm{Norm}(X)\right), \qquad Y = X' + \mathrm{FFN}\left(\mathrm{Norm}(X')\right),$$

where the input feature $X$ contains low-light image features of wall defects and $Y$ is the module output. Similar to mainstream image restoration models such as NAFNet [27], we tailored the low-light enhancement and deblurring modules to the requirements of low-light enhancement for indoor wall defect detection. Our method integrates frequency-domain and spatial-domain collaborative refinement modules with the large kernel convolution [8] of the inception structure.

Low-light image enhancement: Low-light image enhancement can be achieved efficiently through frequency-domain processing. Studies [28,29] have shown a strong correlation between low-light conditions and the amplitude component of an image in the Fourier domain. By enhancing only the amplitude while preserving the phase, substantial illumination correction can be achieved. This relationship is consistent across resolutions [28], so the illumination enhancement can be estimated efficiently at a lower resolution before being applied.
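The amplitude/phase argument can be checked on an idealized case. In the NumPy sketch below, the "dark" image is simply a globally scaled copy of the bright one, which is an assumption (real low-light captures also involve noise and nonlinear tone curves). Under that assumption, combining the bright image's amplitude with the dark image's phase recovers the bright image. Variable and function names are hypothetical.

```python
import numpy as np


def amplitude_phase(img):
    """Return the Fourier amplitude and phase spectra of a 2D image."""
    spectrum = np.fft.fft2(img)
    return np.abs(spectrum), np.angle(spectrum)


def recombine(amplitude, phase):
    """Rebuild a real image from amplitude and phase spectra."""
    return np.real(np.fft.ifft2(amplitude * np.exp(1j * phase)))


rng = np.random.default_rng(0)
bright = rng.uniform(0.2, 1.0, size=(64, 64))
dark = 0.15 * bright  # idealized low-light copy: a pure global gain

amp_bright, _ = amplitude_phase(bright)
amp_dark, phase_dark = amplitude_phase(dark)
# Keep the dark image's phase (structure) and swap in the bright amplitude (illumination).
restored = recombine(amp_bright, phase_dark)
print(np.allclose(restored, bright))  # True
# The amplitude ratio is the same gain at every frequency.
print(np.allclose(amp_dark, 0.15 * amp_bright))  # True
```

Because a global gain changes only the amplitude, the phase alone already carries the spatial structure; real low-light images deviate from this ideal, which is why the amplitude adjustment is learned rather than fixed.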

Image deblurring: Effective image sharpening and deblurring typically require a large receptive field. NAFNet obtains one by extracting deep features through repeated downsampling. Alternatively, dilated convolution enlarges the receptive field, but its skip-sampling mechanism often leads to gridding artifacts. Some models instead use standard large kernel convolution [8], which increases computational complexity and memory demands.
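The trade-off between dilated and large kernel convolutions can be illustrated with simple bookkeeping. The plain-Python sketch below lists which input offsets (in 1D, for readability) a stack of dilated convolutions can reach, and counts the weights of dense and depth-wise convolutions. The layer sizes are arbitrary examples, not FSRNet's configuration, and the function names are hypothetical.

```python
from itertools import product


def reachable_offsets(kernel_size, dilations):
    """1D input offsets that influence one output position through stacked dilated convs."""
    half = kernel_size // 2
    offsets = {0}
    for d in dilations:
        # Each layer adds one of its dilated taps (-half..half) * d to every offset so far.
        offsets = {o + t * d for o, t in product(offsets, range(-half, half + 1))}
    return sorted(offsets)


def conv_weights(c_in, c_out, k, depthwise=False):
    """Weight count of a k x k convolution (bias ignored; depth-wise assumes c_in == c_out)."""
    return c_in * k * k if depthwise else c_in * c_out * k * k


# Two dilation-2 layers skip every odd offset: the "gridding" pattern.
print(reachable_offsets(3, [2, 2]))  # [-4, -2, 0, 2, 4]
# A dense 11 x 11 kernel on 64 channels is far more expensive than a depth-wise one.
print(conv_weights(64, 64, 11), conv_weights(64, 64, 11, depthwise=True))  # 495616 7744
```

Mixing dilation rates (for example 1, 2, 4) fills the gaps, which is one known remedy; depth-wise large kernels avoid the gaps altogether at a much lower weight count than dense ones.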

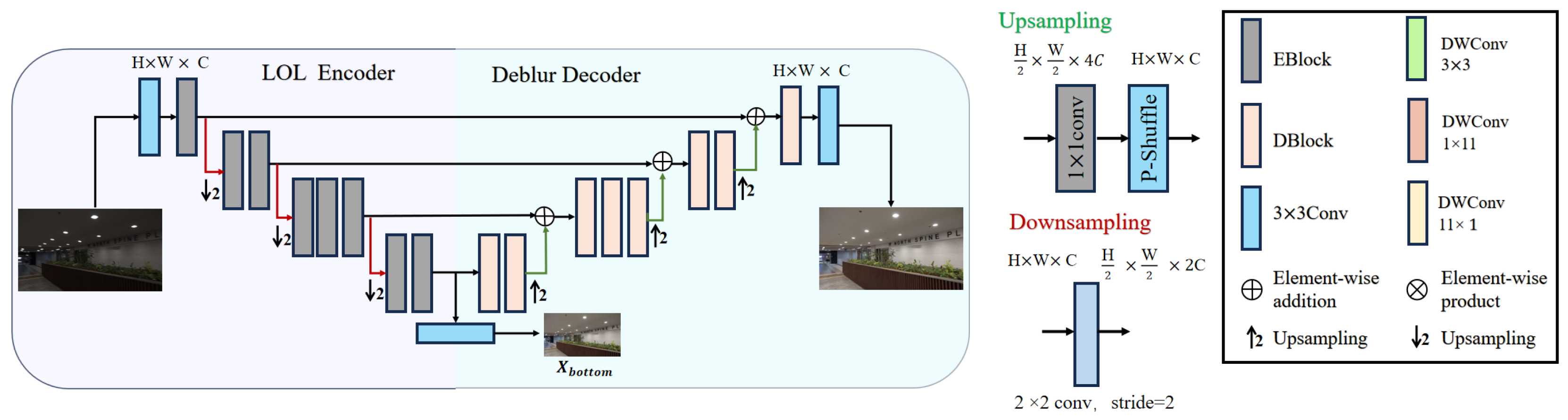

In summary, the proposed network architecture is depicted in Figure 1. Unlike traditional methods, we introduce two separate modules specifically for the encoder and the decoder. This asymmetric design arises from the need to enhance low-light conditions on low-resolution images within the encoder. The decoder then uses the enhanced illumination features from the encoder to upscale and sharpen the reconstructed output, a strategy similar to that of LEDNet [25].

To enhance low-light images effectively, the encoder module operates in the Fourier domain and uses convolutional layers to linearly combine the encoded features into an intermediate low-resolution image representation. We apply an additional loss to this intermediate representation to regularize the model, which ensures effective amplitude enhancement in the Fourier domain and yields a robust low-resolution representation. The decoder module emphasizes spatial processing through large kernel convolutions with an extensive receptive field, based on an improved inception architecture [9]. Because each module is task-specific, the total number of modules can be kept small, which substantially reduces both the parameter count and the computational cost in MACs and FLOPs.

3.1. Low-Light Enhancement Encoder

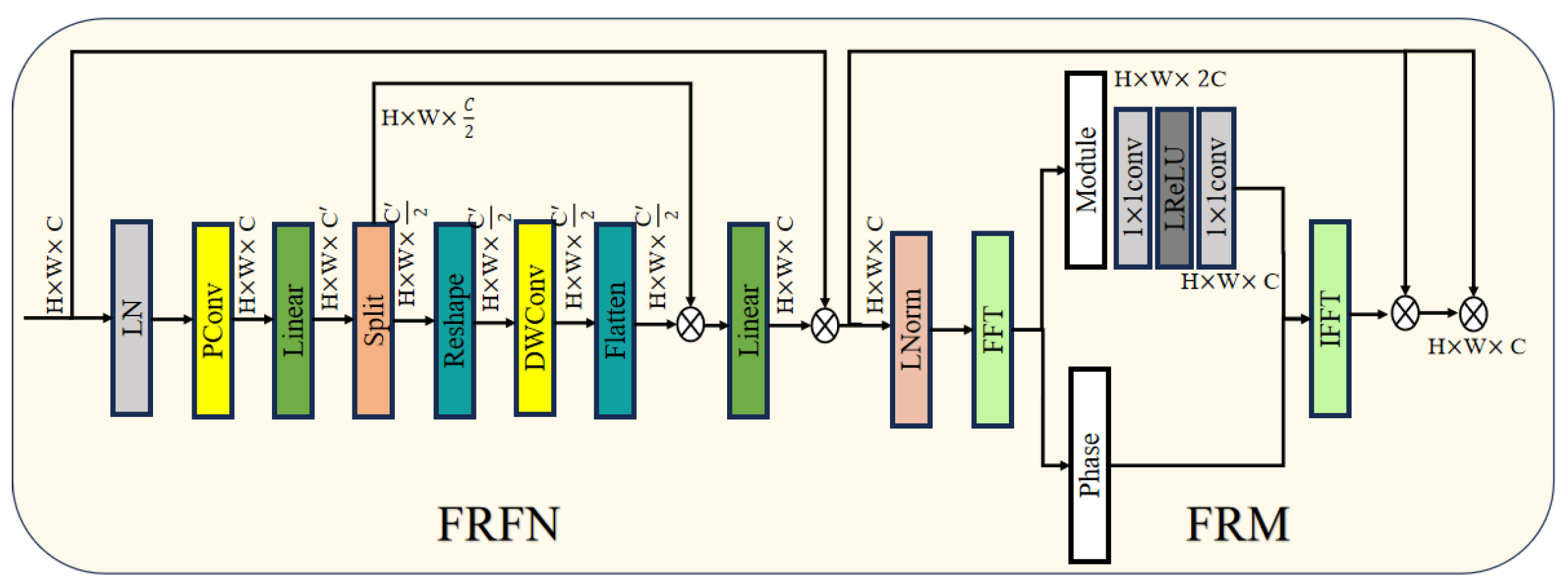

Encoder blocks (EBlocks) aim to improve the visibility of indoor concrete wall images under low-light conditions by exploiting Fourier information, following the Metaformer [26] framework. The central structure comprises two main components, a feature refinement feedforward network (FRFN) and a frequency response module (FRM), as illustrated in Figure 2.

The feature refinement feedforward network (FRFN), the core of spatial feature processing [31], is designed specifically to address the feature disparities between defects and backgrounds in low-light wall images. Through hierarchical processing, it enhances defect features while dynamically suppressing [32] background redundancy. The features entering the encoder block contain blurred defects such as cracks and spalling along with the cement background texture. The FRFN first performs differential enhancement using partial convolution (PConv): a 3 × 3 convolution is applied to the high-frequency edge channels associated with defects, such as crack edges and spalling boundaries, to enhance local detail, while the low-frequency, smooth channels that dominate the background, such as large cement areas, receive only shallow processing to avoid amplifying dark noise. After linear projection and activation, the features are split into two branches: the first retains the potential defect feature information, and the second uses depth-wise convolution [33] (DWConv) to capture local spatial correlations and generate gating signals that suppress redundant wall-background channels, such as uniform grayscale cement areas. Finally, element-wise multiplication of the two branches preserves defect features [34] and suppresses background noise [35]; in the refined output, the contrast of blurred crack edges under low illumination is enhanced and the noise in uniform background areas is reduced. This gives the subsequent frequency-domain enhancement a more accurate feature basis and prevents irrelevant background information from interfering with illumination correction. For ease of reproduction and theoretical analysis, the FRFN computation can be formalized as follows:

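$$\hat{X} = \sigma\left(W_1\,\mathrm{PConv}(X)\right), \qquad [\hat{X}_1, \hat{X}_2] = \mathrm{Split}(\hat{X}), \qquad X_{\mathrm{out}} = W_2\left(\hat{X}_1 \odot \mathcal{F}\left(\mathrm{DWConv}\left(\mathcal{R}(\hat{X}_2)\right)\right)\right)$$

where $W_1$ and $W_2$ are linear projection matrices, $\sigma(\cdot)$ is the activation function, $\mathrm{PConv}(\cdot)$ denotes the partial convolution operation, $\mathrm{DWConv}(\cdot)$ signifies depth-wise convolution, $\mathcal{R}(\cdot)$ and $\mathcal{F}(\cdot)$ refer to the reshape and flatten operations, and $\odot$ indicates element-wise multiplication.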
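The NumPy sketch below follows the FRFN steps in order: partial convolution, projection and activation, split, depth-wise gating, and output projection. It is a forward-pass illustration with random weights, not the authors' implementation. The GELU activation, the (C, H, W) layout, the hidden width, the number of convolved channels (one quarter of the channels, as in FasterNet's PConv), and all function and parameter names are assumptions.

```python
import numpy as np


def conv2d_same(x, w):
    """Dense cross-correlation, zero 'same' padding. x: (C_in, H, W); w: (C_out, C_in, k, k)."""
    k = w.shape[-1]
    p = k // 2
    h, wd = x.shape[1:]
    xp = np.pad(x, ((0, 0), (p, p), (p, p)))
    out = np.zeros((w.shape[0], h, wd))
    for i in range(k):
        for j in range(k):
            out += np.einsum("oc,chw->ohw", w[:, :, i, j], xp[:, i:i + h, j:j + wd])
    return out


def depthwise_conv(x, w):
    """Depth-wise cross-correlation: one k x k filter per channel. w: (C, k, k)."""
    k = w.shape[-1]
    p = k // 2
    h, wd = x.shape[1:]
    xp = np.pad(x, ((0, 0), (p, p), (p, p)))
    out = np.zeros_like(x, dtype=float)
    for i in range(k):
        for j in range(k):
            out += w[:, i, j][:, None, None] * xp[:, i:i + h, j:j + wd]
    return out


def gelu(x):
    """Tanh approximation of GELU (assumed activation)."""
    return 0.5 * x * (1.0 + np.tanh(np.sqrt(2.0 / np.pi) * (x + 0.044715 * x ** 3)))


def partial_conv(x, w, n_conv):
    """PConv: convolve only the first n_conv channels; the rest pass through unchanged."""
    return np.concatenate([conv2d_same(x[:n_conv], w), x[n_conv:]], axis=0)


def frfn(x, params, n_conv):
    """FRFN forward pass on a (C, H, W) feature map; returns the same shape."""
    c, h, wd = x.shape
    x = partial_conv(x, params["pconv"], n_conv)
    tokens = x.reshape(c, h * wd).T                     # flatten to (HW, C) tokens
    hidden = gelu(tokens @ params["w1"])                # W1 projection + activation
    x1, x2 = np.split(hidden, 2, axis=1)                # defect branch / gate branch
    m = x2.shape[1]
    gate = depthwise_conv(x2.T.reshape(m, h, wd), params["dw"])  # R, then DWConv
    gated = x1 * gate.reshape(m, h * wd).T              # F (flatten), element-wise product
    return (gated @ params["w2"]).T.reshape(c, h, wd)   # W2 projection, back to (C, H, W)


rng = np.random.default_rng(0)
C, H, W, M, N_CONV = 8, 6, 5, 12, 2  # hypothetical sizes
params = {
    "pconv": 0.1 * rng.normal(size=(N_CONV, N_CONV, 3, 3)),
    "w1": 0.1 * rng.normal(size=(C, 2 * M)),
    "dw": 0.1 * rng.normal(size=(M, 3, 3)),
    "w2": 0.1 * rng.normal(size=(M, C)),
}
out = frfn(rng.normal(size=(C, H, W)), params, N_CONV)
```

Setting the depth-wise gate weights to zero closes the gate and zeroes the output, which is the mechanism the text relies on to suppress uniform background channels.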

The frequency response module (FRM) enhances illumination [30] by exploiting Fourier-domain characteristics. Previous studies have shown that low-light information is carried primarily by the amplitude in the frequency domain. This is especially important for wall images, where noise often obscures the high-frequency features of defects, so that the amplitude spectrum energy is much lower than under normal lighting. To address this, a fast Fourier transform (FFT) is applied to the feature map produced by the FRFN. The FRM selectively enhances defect-related high-frequency elements of the amplitude spectrum, such as energy peaks at crack edges, while keeping the phase spectrum unchanged to prevent distortion of the spatial structure. The enhanced spectrum is then transformed back into the spatial domain with the inverse fast Fourier transform (IFFT), providing both illumination correction and defect feature preservation [34]. The calculation process is as follows:

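$$\mathcal{A} = \left|\mathrm{FFT}(X)\right|, \quad \mathcal{P} = \angle\,\mathrm{FFT}(X), \quad X_{f} = \mathrm{IFFT}\left((W \odot \mathcal{A})\, e^{\,j\mathcal{P}}\right), \quad X_{\mathrm{out}} = \alpha X + \beta X_{f}$$

where $X$ is the feature map produced by the FRFN, $\mathcal{A}$ and $\mathcal{P}$ are its amplitude and phase spectra, $W$ is the learnable frequency-domain weight, $\odot$ denotes element-wise multiplication, and $\alpha$ and $\beta$ are the fusion coefficients. The resulting $X_{\mathrm{out}}$ integrates the defect-enhancing features of the spatial domain with the illumination-compensating features of the frequency domain, achieving high-fidelity restoration of low-illumination wall defects.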
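A minimal NumPy sketch of an amplitude-only frequency response follows. It assumes a per-channel real weight map on the `rfft2` half-spectrum and scalar fusion coefficients `alpha` and `beta`; these parameterizations and the function name are hypothetical, since the text does not specify them.

```python
import numpy as np


def frm(x: np.ndarray, weight: np.ndarray, alpha: float, beta: float) -> np.ndarray:
    """Amplitude-only frequency response on a (C, H, W) feature map (sketch)."""
    spectrum = np.fft.rfft2(x, axes=(-2, -1))
    amplitude, phase = np.abs(spectrum), np.angle(spectrum)
    # Rescale the amplitude with a (learnable) weight; the phase is left untouched.
    enhanced = (weight * amplitude) * np.exp(1j * phase)
    x_freq = np.fft.irfft2(enhanced, s=x.shape[-2:], axes=(-2, -1))
    # Fuse the spatial input with the frequency-domain branch.
    return alpha * x + beta * x_freq


rng = np.random.default_rng(0)
feat = rng.normal(size=(4, 16, 15))
# The weight must match the half-spectrum shape (C, H, W // 2 + 1).
w = np.ones((4, 16, 15 // 2 + 1))
out = frm(feat, 1.5 * w, alpha=0.5, beta=0.5)
```

Using `rfft2`/`irfft2` guarantees a real-valued output of the original spatial size; with a uniform weight the module reduces to a global gain, while a frequency-dependent weight can emphasize high-frequency bands.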

The encoder uses strided convolution for downsampling, halving the feature resolution at each level. This allows additional modules to be placed in deeper layers without significantly increasing the computational cost. The module also adapts to the feature distribution of wall images, which consist of extensive backgrounds with sparse defects, preserving multiscale [36] defect features while compressing redundant information. Ultimately, the encoder passes illumination enhancement features [30] to the decoder and produces an estimated clear wall image at low resolution (1/8 of the input image size). Despite the reduced resolution, the multiscale consistency of illumination and amplitude keeps defect features coherent, which makes the decoder's deblurring robust.
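The 1/8 output size corresponds to three stride-2 downsampling stages. The sketch below checks this with the standard convolution output-size formula. Kernel size 3 with padding 1 (or kernel 2 without padding) is an assumption, since the text states only that strided convolution halves the resolution; the function names are hypothetical.

```python
def conv_out_size(size, kernel=3, stride=2, padding=1):
    """Spatial output size of a convolution (dilation 1)."""
    return (size + 2 * padding - kernel) // stride + 1


def encoder_sizes(size, levels=3, **conv_args):
    """Feature-map side length after each stride-2 encoder level."""
    sizes = [size]
    for _ in range(levels):
        sizes.append(conv_out_size(sizes[-1], **conv_args))
    return sizes


print(encoder_sizes(384))  # [384, 192, 96, 48] -> 48 / 384 = 1/8
```

Inputs whose side is not divisible by 8 do not halve cleanly (for example, 385 becomes 193), so padding inputs to a multiple of 8 is a common precaution.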

3.2. Deblurring Decoder

Decoder blocks (DBlocks) are designed to remove the blur from indoor concrete wall images. Their primary function is to restore spatial continuity and sharpen defects such as cracks and spalling, building on the illumination-corrected features from the encoder, which are progressively upsampled. Their structure likewise extends the Metaformer framework [26].

The InceptionDWConv2d module is designed to capture multidirectional crack features, drawing on prior studies [11,12] that highlight the importance of extracting multidirectional features for detecting defect types such as cracks and spalling. Inspired by the large kernel attention (LKA) mechanism [37] and the Inception architecture [38], InceptionDWConv2d uses multi-branch depth-wise convolution [28] to capture differentiated features. Following the Inception design, a single large kernel is decomposed into three small-kernel depth-wise convolution branches: a horizontal $1 \times k_b$ band kernel (where $k_b$ is the band length) targets the linear continuity of horizontal cracks, a vertical $k_b \times 1$ band kernel enhances the edge features of vertical cracks, and a 3 × 3 kernel captures the local texture of diagonal cracks and spalling areas. This avoids the over-smoothing of small defects typical of traditional large kernel convolution. Each branch uses depth-wise convolution to minimize parameters, with batch normalization and the ReLU activation function to strengthen nonlinear feature representation. The design thus keeps the large-receptive-field advantage of large kernel convolution while avoiding its computational inefficiency. After the multi-branch features are fused, a simplified channel attention mechanism (SGM) dynamically adjusts the channel weights according to the grayscale difference between crack edges and the background, suppressing redundant responses in uniform cement wall areas. This ensures that defect features are retained across different directions and scales. The calculation process can be expressed as:

$$X_{hw},\, X_{w},\, X_{h},\, X_{id} = \mathrm{Split}(X) = X_{:g},\; X_{g:2g},\; X_{2g:3g},\; X_{3g:}, \qquad g = \lfloor rC \rfloor$$

In this context, $g$ represents the channel count per convolutional branch, $C$ denotes the total number of input channels, and $r$ is the allocation ratio coefficient. In this step, the input feature $X$ is partitioned into four groups along the channel dimension, each corresponding to a feature extraction task with a different orientation and shape.

Next, the branches use direction-aware convolution kernels to extract features:

$$X'_{hw} = \mathrm{DWConv}_{k_s \times k_s}(X_{hw}), \quad X'_{w} = \mathrm{DWConv}_{1 \times k_b}(X_{w}), \quad X'_{h} = \mathrm{DWConv}_{k_b \times 1}(X_{h}), \quad X'_{id} = X_{id}$$

The $k_s \times k_s$ kernel is a small square kernel designed to capture oblique cracks and local textures. The $1 \times k_b$ band kernel is specialized for extracting long horizontal crack features, and the $k_b \times 1$ kernel targets the continuous edge structures of vertical cracks. The identity branch $X_{id}$ serves as a direct residual connection that preserves the global context of the input features. This configuration allows each branch to respond independently to defects oriented in different spatial directions.

Finally, the branch outputs are concatenated along the channel dimension:

$$X' = \mathrm{Concat}\left(X'_{hw}, X'_{w}, X'_{h}, X'_{id}\right)$$

The resulting feature map integrates multidirectional and multiscale information. It retains the receptive-field benefits of large kernel convolution while substantially reducing computation, and it strengthens the responses to subtle cracks and extensive spalling areas.
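The split, direction-aware branches, and concatenation can be sketched in NumPy as follows. The square kernel size 3, band length 11, and branch ratio 1/8 are assumed values taken from the defaults of the InceptionNeXt reference implementation, since FSRNet's values are not stated here; batch normalization and activation are omitted, and the function names are hypothetical.

```python
import numpy as np


def dwconv(x, w):
    """Depth-wise cross-correlation, zero 'same' padding. x: (C, H, W); w: (C, kh, kw), odd sizes."""
    c, h, wd = x.shape
    _, kh, kw = w.shape
    xp = np.pad(x, ((0, 0), (kh // 2, kh // 2), (kw // 2, kw // 2)))
    out = np.zeros((c, h, wd))
    for i in range(kh):
        for j in range(kw):
            out += w[:, i, j][:, None, None] * xp[:, i:i + h, j:j + wd]
    return out


def inception_dwconv2d(x, w_hw, w_w, w_h, ratio=0.125):
    """Split channels into square, horizontal-band, vertical-band, and identity groups."""
    g = int(x.shape[0] * ratio)  # channels per convolutional branch
    x_hw, x_w, x_h, x_id = x[:g], x[g:2 * g], x[2 * g:3 * g], x[3 * g:]
    return np.concatenate([
        dwconv(x_hw, w_hw),  # k_s x k_s square kernel: oblique cracks, local texture
        dwconv(x_w, w_w),    # 1 x k_b band kernel: horizontal cracks
        dwconv(x_h, w_h),    # k_b x 1 band kernel: vertical cracks
        x_id,                # identity branch: keeps global context
    ], axis=0)


rng = np.random.default_rng(0)
C, H, W = 32, 10, 12
g = int(C * 0.125)  # 4 channels per convolutional branch
x = rng.normal(size=(C, H, W))
y = inception_dwconv2d(
    x,
    w_hw=rng.normal(size=(g, 3, 3)),  # square kernels
    w_w=rng.normal(size=(g, 1, 11)),  # horizontal band kernels
    w_h=rng.normal(size=(g, 11, 1)),  # vertical band kernels
)
```

Each band kernel spans 11 pixels along one axis with only 11 weights per channel, whereas an 11 × 11 depth-wise kernel would need 121.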

To further reduce background noise, the SGM employs a gating mechanism [13] for adaptive feature refinement. After the features output by InceptionDWConv2d are linearly projected, they are divided into a defect feature branch and a gate signal branch. The gate branch applies a 3 × 3 depth-wise convolution to capture local texture variations on the wall surface and generate a targeted gating signal. Element-wise multiplication then suppresses background noise channels while preserving and enhancing the high-frequency responses of crack edges, so that defect details such as crack bifurcations and spalling boundaries remain intact during upsampling.

The decoder uses transposed convolution for upsampling, doubling the feature resolution at each level to produce a clear wall image that matches the original input dimensions. This design combines the multidirectional feature capture of InceptionDWConv2d with the noise suppression capability [35] of the SGM. It removes the blur caused by long exposures while accurately preserving the linear features of cracks and the boundaries of spalled areas, thereby providing highly recognizable inputs for subsequent defect detection models [2]. The decoder architecture is illustrated in Figure 3.
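Three transposed-convolution stages that each double the resolution bring 1/8-resolution features back to the input size. The sketch uses the usual transposed-convolution output-size formula with dilation 1 (the one documented for PyTorch's `ConvTranspose2d`); kernel 2, stride 2, and no padding are assumed values, and the function names are hypothetical.

```python
def conv_transpose_out_size(size, kernel=2, stride=2, padding=0, output_padding=0):
    """Spatial output size of a transposed convolution (dilation 1)."""
    return (size - 1) * stride - 2 * padding + kernel + output_padding


def decoder_sizes(size, levels=3, **deconv_args):
    """Feature-map side length after each upsampling level."""
    sizes = [size]
    for _ in range(levels):
        sizes.append(conv_transpose_out_size(sizes[-1], **deconv_args))
    return sizes


print(decoder_sizes(48))  # [48, 96, 192, 384]
```

A kernel of 4 with stride 2 and padding 1 also doubles the size exactly, so either choice mirrors the stride-2 encoder.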

3.3. Loss Function

Beyond the module design, the loss function is crucial to the effectiveness of our method. We optimize the model by combining a distortion loss with a perceptual loss [39]. To ensure high fidelity, we first apply a pixel-wise loss $\mathcal{L}_{1} = \lVert \hat{y} - y \rVert_{1}$, where $\hat{y}$ and $y$ are the enhanced wall image and the true clear wall image, respectively; that is, the pixel-wise term is the $L_1$ loss.

While the $L_1$ loss maintains fidelity, perceptual similarity is encouraged through a perceptual loss [39], $\mathcal{L}_{\mathrm{per}}$, computed as the perceptual distance between image features using the VGG19-based [40] LPIPS metric.

By incorporating this loss, we ensure that the images produced by the network maintain high visual quality and closely resemble the clear reference wall images. Additionally, we introduce a gradient edge loss, $\mathcal{L}_{\mathrm{edge}}$, to improve the consistency and accuracy with which high-frequency features such as crack edges are reconstructed.

Finally, an architecture-guided loss, $\mathcal{L}_{\mathrm{lr}}$, is introduced to keep the encoder focused on the low-light enhancement objective [7]. This loss is applied directly to the encoder's low-resolution output, $\hat{x}_{\mathrm{lr}}$, which is compared with the reference image downsampled by a factor of 8 through bilinear interpolation.

The final total loss is a weighted combination of these terms, with the loss weights $\lambda_1$, $\lambda_2$, and $\lambda_3$ set empirically to 1, $10^{-3}$, and 60, respectively.
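The NumPy sketch below assembles a total loss of this kind. Several parts are assumptions because the corresponding equations are not given here: the edge term is a hypothetical L1 distance between finite-difference gradients, the low-resolution term is assumed to use L1, the LPIPS value is passed in precomputed from an external implementation, and the mapping of weights to terms is left to the caller. The 8× downsampling reproduces half-pixel-centered bilinear interpolation without antialiasing, which for an exact factor of 8 averages pixels 8i+3 and 8i+4 along each axis.

```python
import numpy as np


def l1(a, b):
    """Mean absolute error."""
    return float(np.mean(np.abs(a - b)))


def gradient_edge_loss(pred, target):
    """Hypothetical edge term: L1 distance between finite-difference image gradients."""
    loss = 0.0
    for axis in (-1, -2):  # horizontal, then vertical differences
        loss += l1(np.diff(pred, axis=axis), np.diff(target, axis=axis))
    return loss


def bilinear_down8(img):
    """8x bilinear downsampling with half-pixel centers and no antialiasing."""
    if img.shape[-1] % 8 or img.shape[-2] % 8:
        raise ValueError("height and width must be divisible by 8")
    # Output sample i sits at input coordinate 8i + 3.5, midway between pixels 8i+3 and 8i+4.
    return 0.25 * (img[..., 3::8, 3::8] + img[..., 4::8, 3::8]
                   + img[..., 3::8, 4::8] + img[..., 4::8, 4::8])


def total_loss(pred, target, pred_lr, perceptual, weights):
    """Weighted sum of pixel, perceptual, edge, and low-resolution guidance terms."""
    w_pix, w_per, w_edge, w_lr = weights  # hypothetical mapping of weights to terms
    return (w_pix * l1(pred, target)
            + w_per * perceptual  # LPIPS value computed elsewhere
            + w_edge * gradient_edge_loss(pred, target)
            + w_lr * l1(pred_lr, bilinear_down8(target)))
```

Passing LPIPS as a number keeps the sketch dependency-free; in training it would be computed on the same image pair by a differentiable LPIPS implementation.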

4. Results

4.1. Dataset and Preprocessing

To evaluate the proposed low-light enhancement method [7], which combines frequency-domain [14] and spatial-domain [41] collaborative refinement with multidirectional feature optimization, we built the indoor–concrete–LLI–defect dataset (ICLDD). The images were collected in various industrial buildings and laboratories with a high-sensitivity portable industrial camera (ISO 320, fixed aperture f/2.8) and with common inspection devices such as mobile phones and portable cameras, under illumination ranging from 0.5 to 15 lux. The dataset contains 5200 images in total: 2600 low-light degraded images and 2600 corresponding clear reference images. They cover a range of defect types, including fine cracks (<0.2 mm wide), long cracks (>50 mm wide), spalling, honeycombing, and holes. The dataset was divided into a training set of 3640 images (70%), a validation set of 520 images (10%), and a test set of 1040 images (20%).
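Because each low-light image has a matching clear reference, the 70/10/20 split is naturally applied to the 2600 pairs rather than to the 5200 individual images, so that no pair straddles two subsets. The sketch below is one leakage-free way to do this; the text does not state how the split was actually drawn, and the function name and seed are hypothetical.

```python
import numpy as np


def split_pairs(n_pairs: int, fractions=(0.7, 0.1, 0.2), seed: int = 0):
    """Shuffle pair indices and split them so each low-light/clear pair stays in one subset."""
    rng = np.random.default_rng(seed)
    idx = rng.permutation(n_pairs)
    n_train = round(fractions[0] * n_pairs)
    n_val = round(fractions[1] * n_pairs)
    return idx[:n_train], idx[n_train:n_train + n_val], idx[n_train + n_val:]


train, val, test = split_pairs(2600)
# 1820 / 260 / 520 pairs correspond to 3640 / 520 / 1040 images.
print(2 * len(train), 2 * len(val), 2 * len(test))
```

If several images show the same wall region, splitting by capture site rather than by pair would further reduce the risk of near-duplicates across subsets.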

To assess the model's generalizability, we performed transfer tests on the public LOLBlur and LOLv2 Real datasets, along with a qualitative visual analysis of unpaired real-world low-light wall data. Images were processed at their original resolution; during training, they were randomly cropped to 384 × 384 and augmented with random horizontal and vertical flips as well as 90° rotations.
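For paired restoration data, every augmentation must be applied identically to the low-light input and its clear reference. The NumPy sketch below does this for the crop, flips, and rotations described above; the 0.5 flip probabilities, the uniform choice among 0°, 90°, 180°, and 270°, and the function name are assumptions.

```python
import numpy as np


def paired_augment(low, clear, crop=384, rng=None):
    """Apply the same random crop, flips, and 90-degree rotation to a low-light/clear pair."""
    rng = np.random.default_rng() if rng is None else rng
    h, w = low.shape[:2]
    top = rng.integers(0, h - crop + 1)
    left = rng.integers(0, w - crop + 1)
    pair = [im[top:top + crop, left:left + crop] for im in (low, clear)]
    if rng.random() < 0.5:  # horizontal flip
        pair = [im[:, ::-1] for im in pair]
    if rng.random() < 0.5:  # vertical flip
        pair = [im[::-1, :] for im in pair]
    k = rng.integers(0, 4)  # rotate by k * 90 degrees
    pair = [np.rot90(im, k, axes=(0, 1)) for im in pair]
    return pair[0], pair[1]


low = np.random.default_rng(1).uniform(size=(400, 500, 3))
clear = 2.0 * low  # stand-in reference so the pairing can be checked
low_crop, clear_crop = paired_augment(low, clear, rng=np.random.default_rng(3))
```

Drawing every random decision once and reusing it for both images is what keeps the pair pixel-aligned.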

4.2. Parameter Settings and Evaluation Indicators

The experiments were conducted on a single NVIDIA RTX 4090 GPU with PyTorch 1.8.1 and a batch size of 32. The optimizer was AdamW ($\beta_1 = 0.9$, $\beta_2 = 0.9$, weight decay $= 1 \times 10^{-3}$, initial learning rate $= 5 \times 10^{-4}$) with a cosine annealing schedule and a minimum learning rate of $1 \times 10^{-6}$. The three training loss weights were set to 1, 1, and 50, and the remaining loss term was given a weight of 1. Training ran for 500 epochs. The peak signal-to-noise ratio (PSNR) and structural similarity (SSIM) were used as objective quality metrics for low-light enhancement [7], and LPIPS was used to measure perceptual quality. To verify the improvement in defect detection [2], the enhanced results were fed into the YOLOv8 defect detection model, and the mean average precision (mAP) at an intersection-over-union threshold of 0.5 and the fine crack recognition [42] rate were recorded.
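For reference, the cosine annealing schedule and the PSNR metric can each be written in a few lines. The sketch assumes the schedule is evaluated per epoch over the 500 training epochs (the text does not say whether it steps per epoch or per iteration) and that images are scaled to [0, 1]; the function names are hypothetical. The schedule follows the standard closed form also used by PyTorch's `CosineAnnealingLR`.

```python
import math

import numpy as np


def cosine_lr(epoch, total=500, lr_max=5e-4, lr_min=1e-6):
    """Cosine annealing from lr_max at epoch 0 to lr_min at the final epoch."""
    return lr_min + 0.5 * (lr_max - lr_min) * (1 + math.cos(math.pi * epoch / total))


def psnr(pred, target, max_val=1.0):
    """Peak signal-to-noise ratio in dB for images scaled to [0, max_val]."""
    mse = np.mean((np.asarray(pred, float) - np.asarray(target, float)) ** 2)
    return float("inf") if mse == 0 else 10 * math.log10(max_val ** 2 / mse)


# A uniform error of 0.1 on a [0, 1] image gives an MSE of 0.01, i.e. 20 dB.
print(round(psnr(np.zeros(4), np.full(4, 0.1)), 6))  # 20.0
```

On this scale, the 1.13 dB PSNR gain reported in the introduction corresponds to roughly a 23% reduction in mean squared error, since $10^{-0.113} \approx 0.77$.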

4.3. Comparative Experiment

4.3.1. ICLDD Dataset

This experiment served as the central verification of this study. The proposed method was evaluated using both quantitative and qualitative assessments on the self-constructed indoor concrete wall low-illuminance defect dataset (ICLDD).

The evaluation covers image quality [43] metrics (PSNR, SSIM, and LPIPS), defect detection [2] performance metrics (mAP@0.5 and fine crack recall), and model efficiency metrics (parameter count and computational complexity [44]).

Table 1 compares the overall performance of our method with that of mainstream low-light enhancement [7] methods on ICLDD. For a fair comparison, all competing methods were run with their publicly available code and recommended parameter settings.

Our method achieves the best overall results on the ICLDD dataset. Compared with the state-of-the-art methods DiffDark [18] and Wang & Zhang [19], FSRNet achieves a higher peak signal-to-noise ratio and mAP@0.5 while using significantly fewer parameters, which underscores its efficiency and effectiveness in low-light architectural applications. The PSNR reached 27.58 dB, the SSIM reached 0.901, and the LPIPS decreased to 0.128, all state-of-the-art levels. The mAP@0.5 reached 83.4%, and the fine crack recall reached 80.2%, a 7.2% increase over the baseline, demonstrating significant improvements in key engineering metrics. With only 3.75 M parameters and a computational complexity of 8.8 GMACs [44], the method achieves an optimal balance between a lightweight design and high performance. We also visualized the image quality [43] metrics on the ICLDD dataset; as shown in Figure 4, our method has a clear advantage.


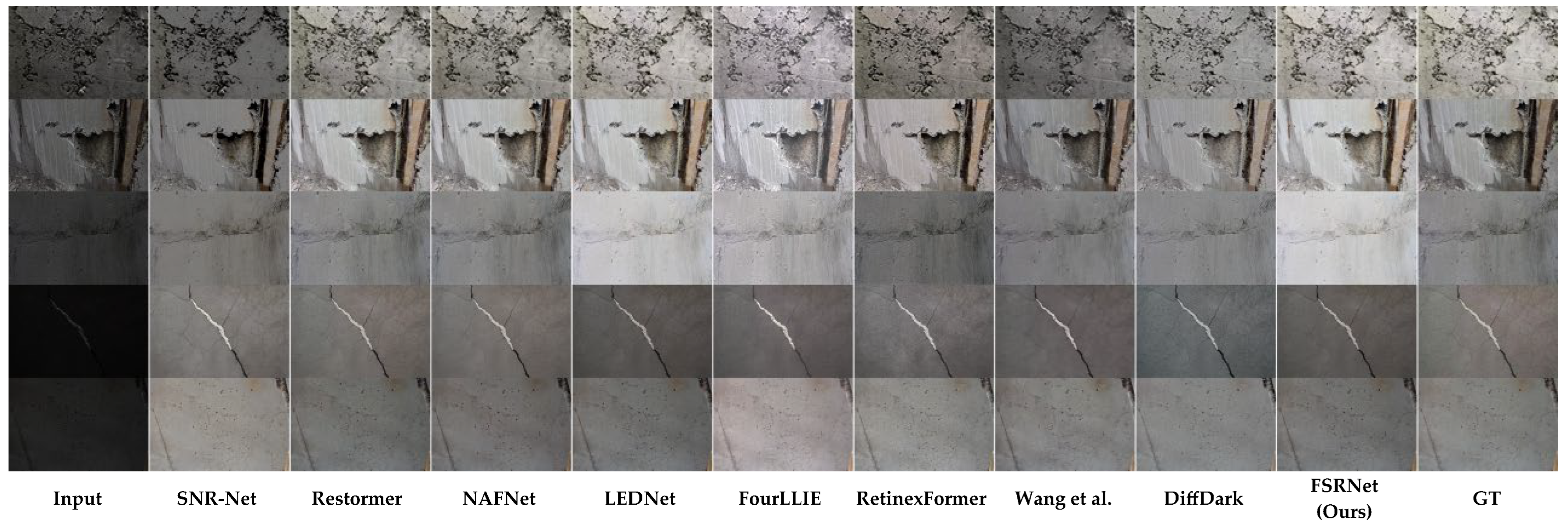

Qualitative analysis

Figure 5 shows a visual comparison on real low-light wall images from ICLDD. These samples demonstrate the robustness of our method to handheld motion blur [4], sensor noise [50], pixel saturation, and insufficient illumination.

Figure 5 demonstrates that FSRNet surpasses other methods in restoring fine crack details and reducing motion blur artifacts. This is attributed to the InceptionDWConv2d module’s capability to capture multidirectional defect features.

FSRNet demonstrated outstanding performance on the ICLDD dataset, achieving a PSNR of 27.58 dB and an SSIM of 0.901. We attribute this to its dual-domain collaborative mechanism, which enhances high-frequency defect features while suppressing background noise. Compared with RetinexFormer and Restormer, FSRNet's lightweight design, with only 3.75 million parameters, significantly reduces computational overhead without compromising restoration quality. This efficiency makes it well suited to deployment on resource-constrained edge devices in engineering scenarios such as construction. However, in extremely noisy environments (SNR < 5 dB), the method may degrade slightly, which suggests room for further optimization.

We evaluate the methods in terms of both image quality metrics and computational efficiency. Table 1 lists the number of parameters (Params) and the frames per second (FPS) for each method. The FPS of each competing method depends on its model size and computational cost (MACs) and was measured on a GPU with low-resolution input. Heavyweight models such as Restormer [48] and the diffusion-based DiffDark [18] deliver high image quality but incur significant computational overhead, which makes them unsuitable for real-time applications. Conversely, lightweight methods such as Uformer [47] and Zero-DCE [38] run extremely fast (>100 FPS) but compromise image quality. FSRNet strikes a balance between the two, running at 113.7 FPS on an NVIDIA RTX 4090 GPU. It significantly outperforms all lightweight methods in PSNR and SSIM while remaining fast enough for near-real-time applications, and it surpasses strong competitors such as RetinexFormer [49] and DiffDark [18].
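FPS figures depend on how timing is done. A minimal, framework-agnostic way to measure throughput is sketched below. `benchmark` is a hypothetical helper, and the warm-up and run counts are arbitrary choices. On a GPU, kernels launch asynchronously, so with PyTorch one would call `torch.cuda.synchronize()` before reading the clock (for example, at the end of the timed function) so that the numbers reflect actual compute time.

```python
import time


def benchmark(fn, inputs, warmup=10, runs=100):
    """Time fn(inputs) and return (frames per second, mean latency in ms).

    Warm-up calls are excluded so that one-off costs (lazy initialization,
    memory allocation, kernel compilation) do not distort the measurement.
    """
    for _ in range(warmup):
        fn(inputs)
    start = time.perf_counter()
    for _ in range(runs):
        fn(inputs)
    elapsed = time.perf_counter() - start
    latency_ms = 1000.0 * elapsed / runs
    return 1000.0 / latency_ms, latency_ms


# Stand-in workload: sorting a reversed list plays the role of one forward pass.
fps, latency = benchmark(sorted, list(range(20_000, 0, -1)), warmup=2, runs=20)
print(f"{fps:.1f} FPS, {latency:.3f} ms per frame")
```

Reporting the batch size and input resolution alongside such numbers matters, since both change FPS substantially.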

4.3.2. LOLBlur Dataset

LOLBlur is a benchmark for joint low-light enhancement and deblurring. It contains 10,200 training pairs and 1,800 test pairs, synthesized by darkening and blurring normal-light images. This experiment primarily evaluates the cross-dataset generalization of the method, which indicates how well it can be expected to perform in demanding environments and practical applications.
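The LOLBlur synthesis pipeline itself is not described here. However, the general recipe of darkening and blurring a normal-light image follows the degradation model in the Introduction: convolution with a PSF blur kernel plus sensor noise. The NumPy sketch below is a hypothetical stand-in, not the LOLBlur procedure. The horizontal linear-motion kernel, the scale-and-gamma darkening and the Poisson shot noise plus Gaussian read noise parameters are all assumed values.

```python
import numpy as np


def motion_kernel(length=9):
    """Horizontal linear-motion PSF of odd `length`, normalized to sum to 1."""
    k = np.zeros((length, length))
    k[length // 2, :] = 1.0
    return k / k.sum()


def convolve2d_same(img, k):
    """2-D convolution with edge padding; the output has the same shape as `img`."""
    kh, kw = k.shape
    ph, pw = kh // 2, kw // 2
    padded = np.pad(img, ((ph, ph), (pw, pw)), mode="edge")
    flipped = k[::-1, ::-1]  # true convolution flips the kernel
    h, w = img.shape
    out = np.zeros((h, w), dtype=np.float64)
    for i in range(kh):
        for j in range(kw):
            out += flipped[i, j] * padded[i:i + h, j:j + w]
    return out


def synthesize_low_light_blur(clean, scale=0.1, gamma=2.0, peak=200.0,
                              read_std=0.01, seed=0):
    """Map a clean image in [0, 1] to a dark, blurred, noisy observation (assumed model)."""
    rng = np.random.default_rng(seed)
    blurred = convolve2d_same(clean, motion_kernel())
    dark = scale * blurred ** gamma                       # lower brightness and contrast
    shot = rng.poisson(dark * peak) / peak                # signal-dependent shot noise
    noisy = shot + rng.normal(0.0, read_std, dark.shape)  # signal-independent read noise
    return np.clip(noisy, 0.0, 1.0)


clean = np.full((64, 64), 0.5)
observed = synthesize_low_light_blur(clean)
```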

Table 2 shows the generalization performance of our method on the public benchmark dataset LOLBlur.

Our method generalizes well across domains on LOLBlur. Its PSNR reaches 27.12 dB, 0.4 dB higher than that of the next-best method, Restormer, while its SSIM and LPIPS scores are balanced overall. These results support the effectiveness of the frequency-spatial synergy mechanism in handling different types of low-light blur degradation.
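PSNR margins such as the 0.4 dB gain over Restormer are easier to interpret in terms of mean squared error. With a fixed peak value, PSNR = 10 log10(MAX² / MSE), so a gain of Δ dB multiplies the MSE by 10^(-Δ/10). The sketch below implements both relations for a single image pair; the helper names are hypothetical. Benchmarks usually average PSNR over a test set, so the conversion is only approximate at the dataset level.

```python
import numpy as np


def psnr(reference, estimate, max_value=1.0):
    """Peak signal-to-noise ratio in dB for images scaled to [0, max_value]."""
    diff = np.asarray(reference, dtype=float) - np.asarray(estimate, dtype=float)
    mse = np.mean(diff ** 2)
    return float("inf") if mse == 0 else 10.0 * np.log10(max_value ** 2 / mse)


def mse_ratio_for_gain(delta_db):
    """Factor by which the MSE shrinks when PSNR rises by delta_db (same max_value)."""
    return 10.0 ** (-delta_db / 10.0)


ref = np.zeros((8, 8))
print(psnr(ref, ref + 0.1))  # MSE = 0.01, so approximately 20 dB
for gain in (0.4, 0.65):
    print(f"+{gain} dB PSNR -> MSE x {mse_ratio_for_gain(gain):.3f}")
```

A 0.4 dB gain therefore corresponds to roughly 8.8% lower MSE, and the 0.65 dB gain reported on LOLv2 Real to roughly 13.9%.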

2. Qualitative analysis

Figure 6 shows the enhancement results of our method compared with those of the other methods on the LOLBlur dataset; our method achieves the best visual performance.

4.3.3. LOLv2 Real Dataset

This dataset contains low-light images of real scenes. LOLv2 (Real) provides 689 image pairs for training and 100 pairs for testing. It is an important benchmark for verifying the effectiveness of a method in real environments.

Table 3 shows the performance metrics of our method on the LOLv2 Real dataset. The experimental results consider both image quality [43] and computational efficiency.

Our method achieves a significant improvement on the LOLv2 Real dataset: a PSNR of 23.45 dB, 0.65 dB higher than that of the next-best method, RetinexFormer, giving the best restoration results in realistic low-light scenes. Its computational complexity [44] is only 8.8 GMACs and its parameter count is 3.31 M, both significantly lower than those of other high-performing methods. These results demonstrate the adaptability of the method to complex real-world noise and its feasibility for engineering deployment.

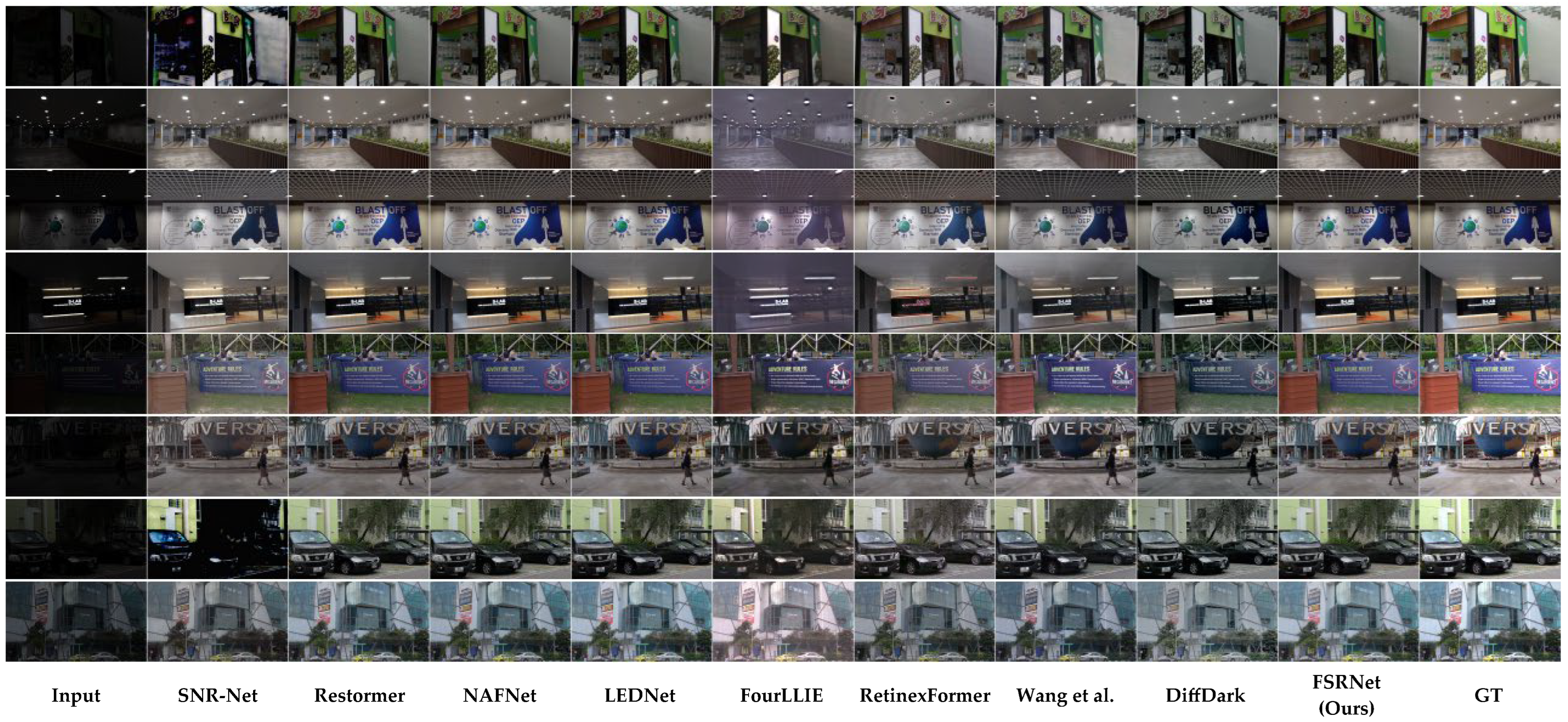

2. Qualitative analysis

Figure 7 shows visual comparisons on the LOLv2 Real dataset. The results produced by our method more closely resemble the reference images. In terms of denoising, our method also recovers more detail from severely degraded, noisy inputs.

4.4. Ablation Experiments

4.4.1. Network Structure Module Ablation

Ablation experiments are standard practice in deep learning [6] studies for verifying the rationale of a design and for understanding how each part of the model works.

Table 4 verifies the contribution of each module to the overall performance by systematically removing key components of the model:

The ablation experiments confirm the effectiveness of each module. Removing the FRFN reduces the PSNR by 1.13 dB, removing the FRM by 1.56 dB, removing InceptionDWConv2d by 1.37 dB and removing the SGM by 1.25 dB. Removing any single module therefore causes a significant drop in performance, with the FRM contributing the most.

4.4.2. Loss Function Ablation Experiment

Table 5 illustrates how different combinations of loss functions impact the model’s effectiveness. This analysis aids in comprehending the role of each loss term and in identifying the optimal combination strategy.

The loss-function ablation shows that the complete loss achieves the highest PSNR, 26.9 dB, and that the individual loss terms are complementary. This supports the multi-objective optimization strategy.

4.5. Computational Complexity Analysis

To assess whether FSRNet can be deployed on edge devices in building environments, this section quantifies the model’s complexity along two dimensions: the number of parameters and the computational cost in multiply-accumulate operations (MACs). It also explains how FSRNet’s architecture gives rise to its lightweight design.
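Parameter and MAC counts for convolutional layers follow directly from the layer shapes. The helper below (`conv2d_cost`, a hypothetical name) counts them for a 2-D convolution with optional grouping. It uses the common convention that MACs exclude bias additions, which not all profiling tools share. The example contrasts a standard 3 × 3 convolution with a depthwise-plus-pointwise pair; the channel count and resolution are arbitrary.

```python
def conv2d_cost(c_in, c_out, k_h, k_w, h_out, w_out, groups=1, bias=True):
    """Return (parameters, MACs) of one 2-D convolution layer.

    Each output element needs (c_in / groups) * k_h * k_w multiply-accumulates.
    """
    assert c_in % groups == 0 and c_out % groups == 0
    weights = c_out * (c_in // groups) * k_h * k_w
    params = weights + (c_out if bias else 0)
    macs = weights * h_out * w_out
    return params, macs


# 64 -> 64 channels at a 128 x 128 output resolution (arbitrary example values).
std = conv2d_cost(64, 64, 3, 3, 128, 128)             # standard 3 x 3
dw = conv2d_cost(64, 64, 3, 3, 128, 128, groups=64)   # depthwise 3 x 3
pw = conv2d_cost(64, 64, 1, 1, 128, 128)              # pointwise 1 x 1
print(std, dw, pw)
print((dw[1] + pw[1]) / std[1])
```

The depthwise-separable pair needs about 1/9 + 1/64 ≈ 12.7% of the standard convolution’s MACs, the familiar 1/k² + 1/C_out ratio.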

FSRNet’s low cost follows from design choices made in each of its core modules.

Table 6 presents the parameter and computational overhead ratios for each core module, assuming an input image size of 256 × 256 with an initial 3-channel configuration.

Table 6 shows that the feature refinement feedforward network (FRFN) and InceptionDWConv2d are the most expensive components of FSRNet, accounting for 35.0% and 42.8% of the total parameters and 37.2% and 39.2% of the total computation, respectively. The FRFN uses a reduce-then-expand structure that halves the number of intermediate channels, together with a sigmoid gating mechanism that dynamically suppresses redundant features and avoids unnecessary computation. InceptionDWConv2d reduces computation by 60% compared with a conventional large-kernel convolution by replacing the 11 × 11 kernel with three smaller depthwise convolutions. The input encoding layer is lightweight, accounting for 4.7% of the parameters and 3.3% of the computation. It uses a 3 × 3 convolution with stride 2 to halve the spatial resolution of the feature map (from 512 × 512 to 256 × 256), which reduces the computational cost of all subsequent layers. The frequency response module (FRM) keeps its complexity low, with 9.3% of the parameters and 12.7% of the computation, by adjusting only the amplitude spectrum and halving the number of channels with 1 × 1 convolutions. The output decoding layer is the least complex, using 0.7% of the parameters and 3.2% of the computation, because it relies solely on 1 × 1 convolutions and upsampling for channel mapping and resizing. Overall, FSRNet requires 3.75 M parameters and 8.8 GMACs, balancing accuracy against computational efficiency and enabling real-time deployment on edge devices in building scenarios.
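The saving from replacing one large depthwise kernel with smaller ones depends on how many channels each branch processes, so the sketch below makes that an explicit parameter. It compares an 11 × 11 depthwise convolution with a 3 × 3 + 1 × 11 + 11 × 1 decomposition. The branch kernel sizes and the `branch_ratio` values are assumptions, because the text states only that three smaller depthwise convolutions replace the 11 × 11 kernel. The sketch therefore illustrates the accounting rather than reproducing the 60% reduction reported for FSRNet.

```python
def dw_macs(channels, k_h, k_w, h, w):
    """MACs of a depthwise convolution: one k_h x k_w filter per channel, 'same' output."""
    return channels * k_h * k_w * h * w


def decomposition_saving(channels, h, w, band=11, square=3, branch_ratio=1.0):
    """Relative MAC saving of square + 1 x band + band x 1 depthwise branches
    versus a single band x band depthwise convolution over all channels.

    branch_ratio (hypothetical) is the fraction of input channels that EACH
    convolutional branch processes.
    """
    gc = int(channels * branch_ratio)
    full = dw_macs(channels, band, band, h, w)
    split = (dw_macs(gc, square, square, h, w)
             + dw_macs(gc, 1, band, h, w)
             + dw_macs(gc, band, 1, h, w))
    return 1.0 - split / full


print(f"{decomposition_saving(64, 256, 256):.3f}")                      # every branch sees all channels
print(f"{decomposition_saving(64, 256, 256, branch_ratio=0.125):.3f}")  # an eighth per branch
```

With every branch seeing all channels the saving is 1 - 31/121, about 74%; routing only an eighth of the channels to each branch pushes it to about 97%. Smaller savings arise if the decomposition is compared against other baselines or if extra layers are counted.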

4.6. Cross-Task Validation Experiments

This experiment assesses the practical value and versatility of the method by comparing object detection accuracy before and after enhancement.

Table 7 reports how the proposed low-light enhancement [7] method improves the performance of different object detection models.

Cross-task validation highlights the method’s versatility and practical value. Three detectors with distinct architectures (YOLOv8, YOLOv5 and RT-DETR) all show mAP improvements of 7.0–8.0% after enhancement, and this consistency indicates that the method is stable across detectors. A gain of up to 8% in detection accuracy is important for engineering applications, particularly for inspecting safety-critical infrastructure. Because the improvement does not depend on a particular detector, the enhancement increases the generalizability of detection models and leaves practitioners free to choose the detector best suited to their application.

4.7. Light Fluctuation [57] Test

To verify the method’s stability under diverse low-light conditions, we simulated the lighting changes that occur in real engineering environments. Table 8 reports the method’s robustness across a range of illumination intensities.

The illumination robustness test confirms the method’s stability. Over an illumination range of 0.5–10 lux, the PSNR varies by only 0.23 dB (from 27.58 to 27.35 dB) and the SSIM by only 0.002. Even in extremely low light (0.5–1 lux), performance remains within this narrow range. This consistency underscores the method’s effectiveness in challenging engineering scenarios, provides reliable support for real-world applications and reduces the risk of unstable detection caused by changes in ambient illumination.
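One common way to simulate illumination changes is to scale the expected photon count and add sensor noise. The sketch below shows why low illumination is hard: with Poisson shot noise and Gaussian read noise, the SNR falls as the signal shrinks. The linear `ELECTRONS_PER_LUX` mapping, the read-noise level and the amplitude-ratio SNR definition are hypothetical choices for illustration; the paper’s own simulation settings and SNR definition are not given here.

```python
import numpy as np

ELECTRONS_PER_LUX = 20.0  # hypothetical linear mapping from illuminance to signal


def simulated_snr_db(mean_signal_electrons, read_noise_electrons=3.0, n=100_000, seed=0):
    """Empirical SNR (dB) of a flat patch under Poisson shot noise plus Gaussian read noise."""
    rng = np.random.default_rng(seed)
    samples = (rng.poisson(mean_signal_electrons, n)
               + rng.normal(0.0, read_noise_electrons, n))
    return 20.0 * np.log10(samples.mean() / samples.std())


def theoretical_snr_db(mean_signal_electrons, read_noise_electrons=3.0):
    """Closed form S / sqrt(S + sigma_read^2), since the shot-noise variance equals S."""
    s = mean_signal_electrons
    return 20.0 * np.log10(s / np.sqrt(s + read_noise_electrons ** 2))


for lux in (0.5, 1.0, 5.0, 10.0):
    s = lux * ELECTRONS_PER_LUX
    print(f"{lux:>4} lux: simulated {simulated_snr_db(s):.1f} dB, "
          f"theory {theoretical_snr_db(s):.1f} dB")
```

Under this model, halving the light raises the relative noise level, and read noise dominates once the signal drops to a few electrons per pixel.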

4.8. Real-Time and Latency Testing

This test evaluates the method on hardware configurations representative of edge devices and engineering sites, which bear directly on its practicality and deployment feasibility.

Table 9 reports the deployment performance of the method on actual engineering hardware platforms.

Real-time testing confirms the feasibility of engineering deployment. The method achieves 28 FPS with 35.7 ms latency on the resource-constrained NVIDIA Jetson Xavier NX, and 54 FPS with 18.5 ms latency on an engineering PC. Both configurations meet real-time processing requirements. The lightweight design, with 3.75 million parameters and 8.8 GMACs, ensures smooth operation across hardware platforms and lays a solid foundation for large-scale engineering deployment on edge devices.
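For a single stream processed frame by frame, with no batching or pipelining, latency and throughput are reciprocal: latency in ms = 1000 / FPS. The short check below reproduces the latencies reported above from the measured frame rates and adds the 113.7 FPS RTX 4090 figure for comparison. `latency_ms` is a hypothetical helper. Whether a rate counts as real-time depends on the camera; for example, a 30 FPS stream leaves a budget of about 33.3 ms per frame.

```python
def latency_ms(fps):
    """Per-frame latency in milliseconds implied by a sustained frame rate (batch size 1)."""
    return 1000.0 / fps


for platform, fps in [("Xavier NX", 28), ("engineering PC", 54), ("RTX 4090", 113.7)]:
    print(f"{platform}: {latency_ms(fps):.1f} ms")
# prints 35.7 ms, 18.5 ms and 8.8 ms respectively
```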

6. Conclusions

This paper addresses low-light image enhancement in architectural scenes by proposing a lightweight solution based on collaboration between the frequency and spatial domains. The InceptionDWConv2d module is designed to capture the multi-directional characteristics of building structures, while the FRFN module combines frequency-domain noise suppression with spatial-domain detail enhancement. Together, they significantly improve the detectability of subtle structures in architectural scenes. Validation on typical cases such as indoor concrete wall defect detection yields 83.4% mAP@.5 detection accuracy on the ICLDD dataset and a PSNR of 23.40 dB on the LOLv2-Real dataset, demonstrating the advantages of low-light enhancement in these settings. For engineering deployment, the model has only 3.75 M parameters and 8.8 GMACs of computation and runs at 28–54 FPS, meeting the dual requirements of light weight and real-time performance for detection in architectural scenes. Future work will explore multimodal fusion to improve robustness in extremely dark scenes, build real building-defect datasets to overcome the limitations of synthetic data, optimize adaptive strategies for balancing multiple tasks, and develop versions suited to edge AI chips to support large-scale industrial application in smart building monitoring.