A Computational Framework for Procedural Abduction Done by Smart Cyber-Physical Systems

Abstract

1. Introduction

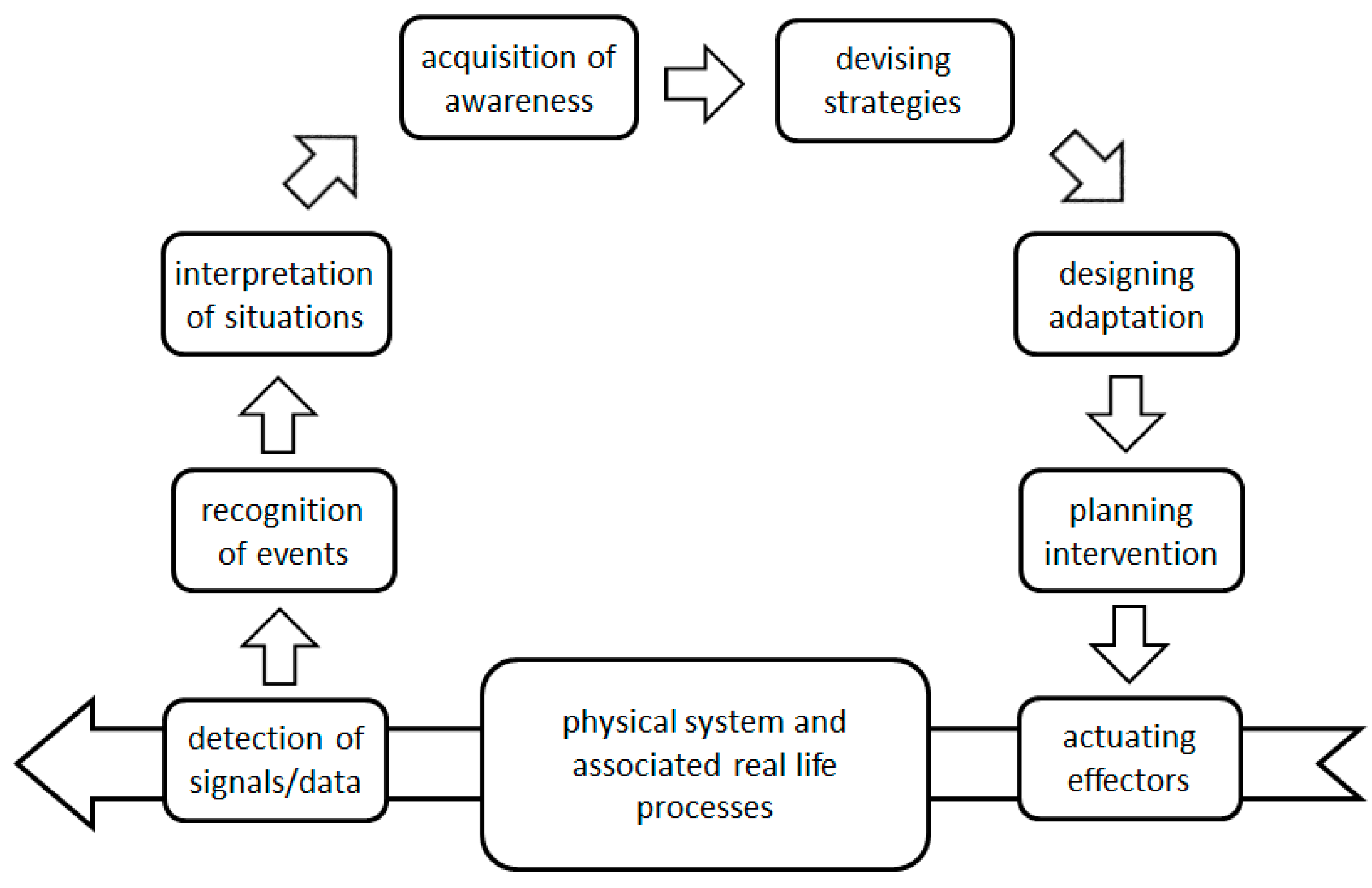

1.1. An Evolutionary View on Cyber-Physical Systems

1.2. On the Background Research and the Research Issue Addressed in This Article

- Based on what theoretical and methodological foundations can system-level smartness be implemented in next-generation cyber-physical systems?

- In what way can dynamic context information processing be extended to provide semantically enriched awareness representation?

- In what forms can procedural abduction be implemented as a system-level reasoning mechanism of smart CPSs?

- Based on what knowledge can an active engineering framework support transdisciplinary development of compositional CPSs?

- What do human/system-in-the-loop and supervisory/operative control mean in the context of smart CPSs?

1.3. Content and Structure of the Article

2. Current State of the Art

2.1. Theoretical Understanding of Mind-Like Behavior of Artefactual Systems

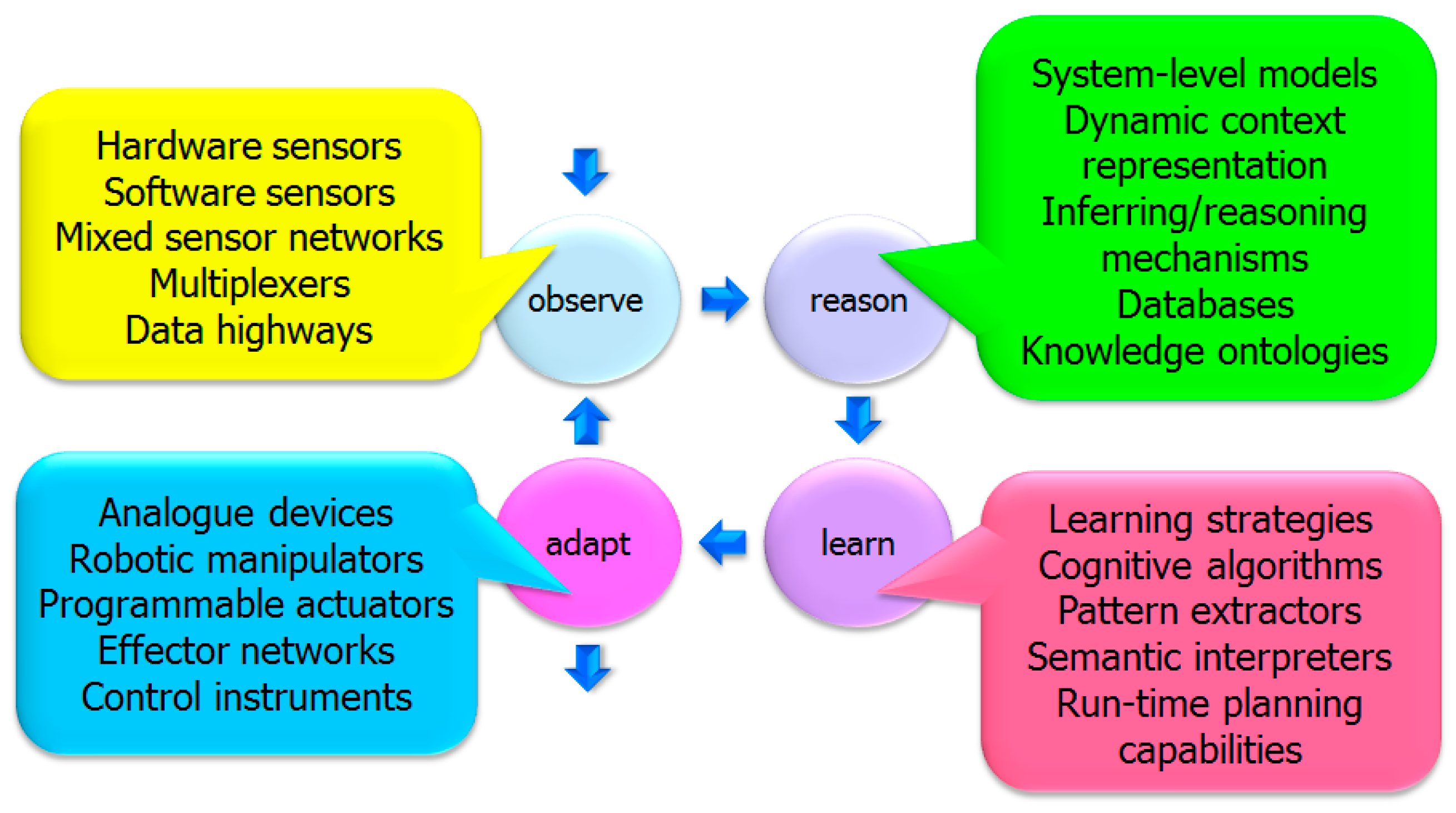

2.2. Potential Enabling Technologies for Smart CPSs

2.3. Achieving System-Level Holism in Reasoning and Decision Making

2.4. Possible Logical Bases of System-Level Ampliative Reasoning Mechanisms

2.5. Recent Efforts to Exploit Abduction as an Ampliative Computational Mechanism

2.6. Synthesis of the Major Findings

3. Pilot Systems Hinting at Necessary Constituents of Procedural Abduction

3.1. Forerunning Projects and Their Outcomes

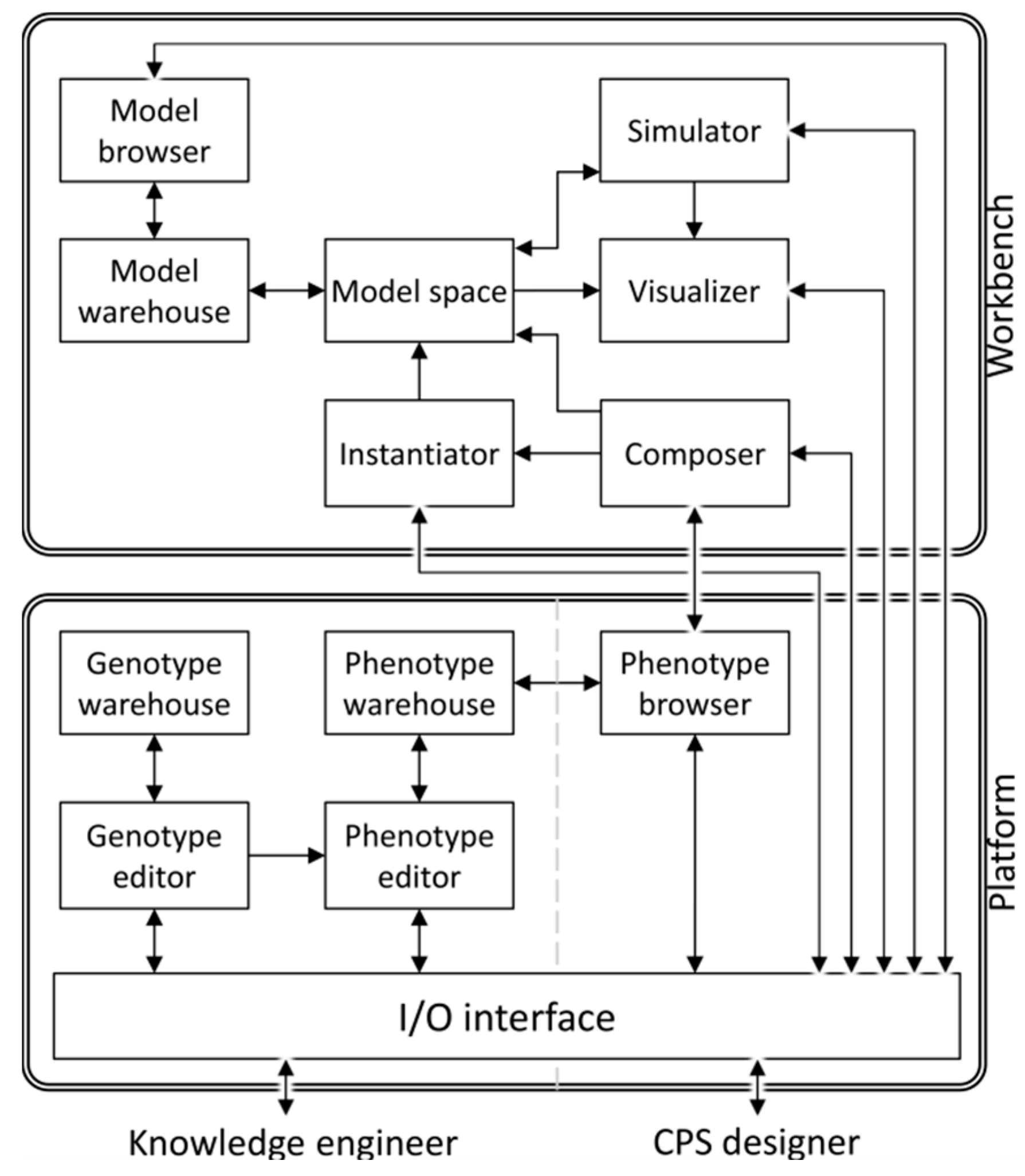

3.2. System-Level Feature-Based Conceptualization and Modeling of First Generation Cyber-Physical Systems

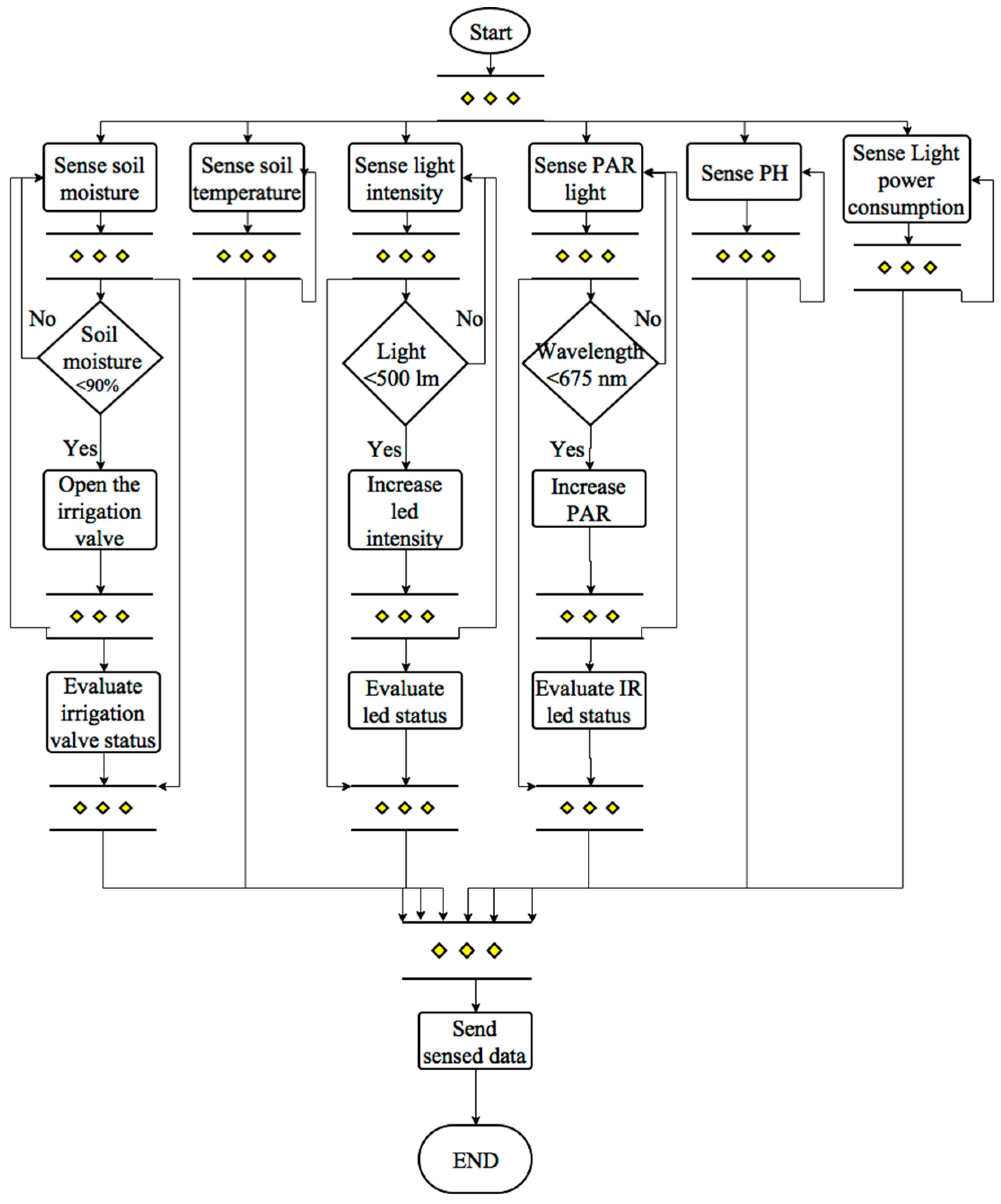

3.3. Identification and Forecasting Failures in a Resilient Cyber-Physical Greenhouse Testbed System

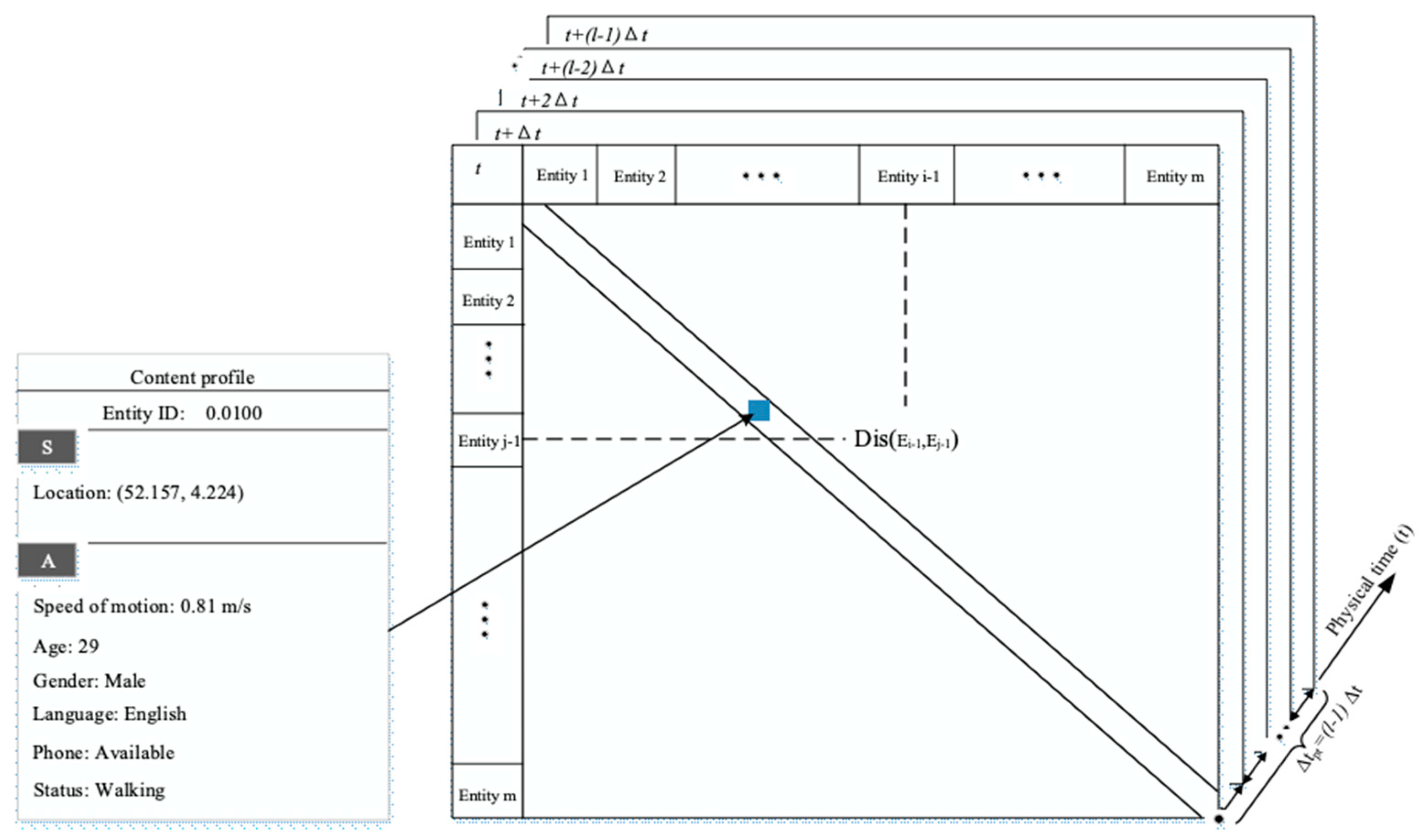

3.4. Representation and Reasoning with Dynamic Context Knowledge in a Fire Evacuation Aiding System

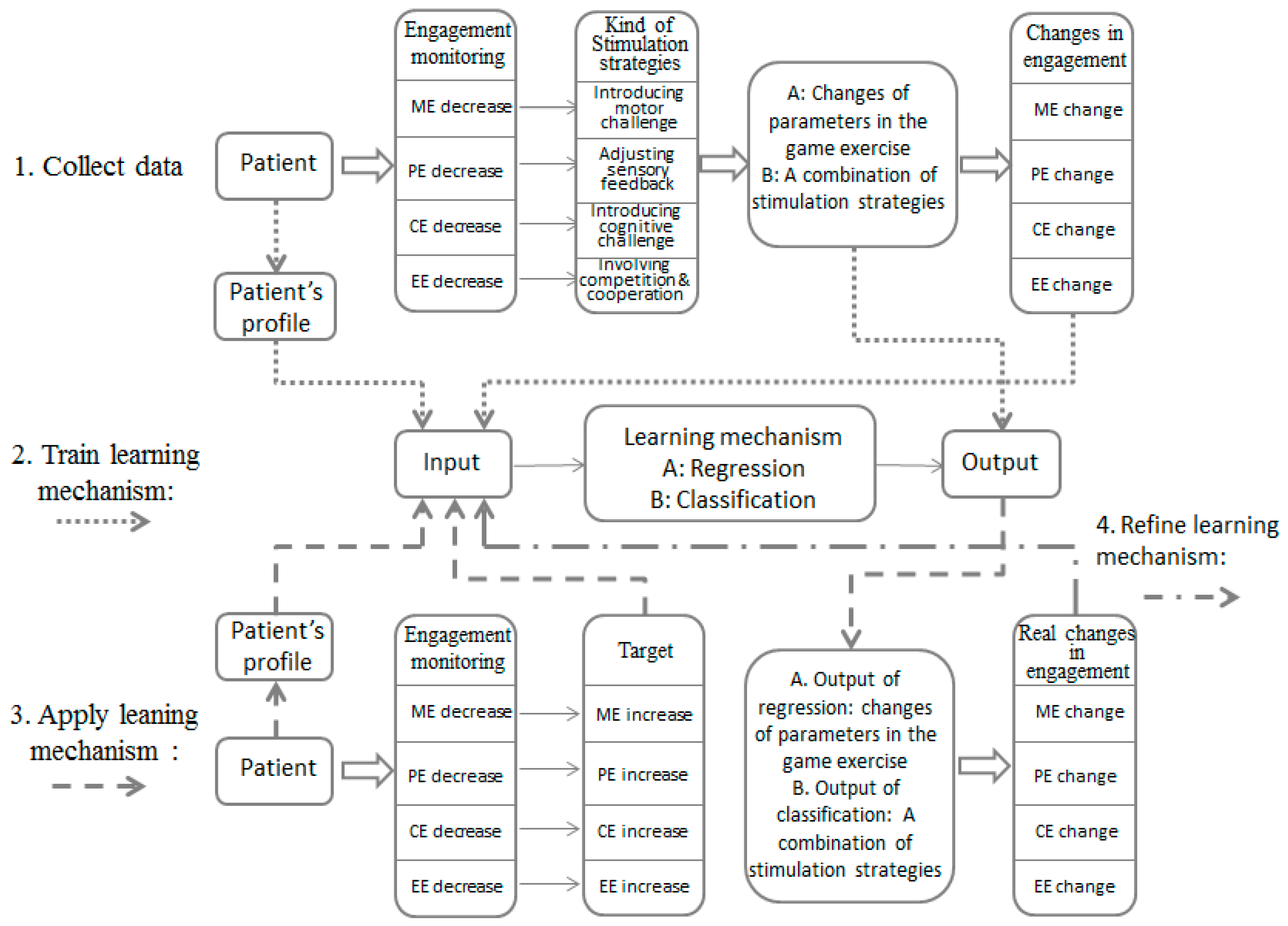

3.5. Reasoning and Adaptation in an Engagement Monitoring and Enhancing System for Stroke Rehabilitation

4. The Framework of Computational Implementation of Procedural Abduction

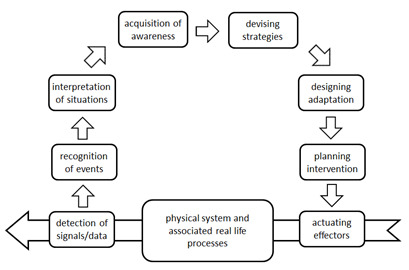

4.1. The General Workflow and the Underpinning Knowledge of Procedural Abduction

4.2. From Enabling Operators to Transforming Algorithms

4.3. Transforming Algorithms Needed for the Operators

5. Some Conclusions and Future Research Opportunities

5.1. Reflection on the Approach

- Some phenomenon concerning the operation of the system is observed;

- If a particular explanation were true, then the phenomenon would be a matter of course;

- Hence, there is reason to suspect that the particular explanation is correct (true).
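
The three-step schema above can be illustrated with a minimal sketch. It is not the framework's actual implementation: the `Rule` type, the `abduce` function, and the greenhouse-style explanation and phenomenon names are all hypothetical and chosen only for illustration. The sketch assumes that knowledge is available as simple "explanation implies phenomenon" pairs and returns every explanation under which the observed phenomenon would be a matter of course.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Rule:
    """Hypothetical knowledge item: if `explanation` held, `phenomenon` would follow."""
    explanation: str
    phenomenon: str


def abduce(observed: str, rules: List[Rule]) -> List[str]:
    """Return the explanations that would make the observed phenomenon expected.

    Step 1: a phenomenon is observed (the `observed` argument).
    Step 2: find explanations that, if true, would entail the phenomenon.
    Step 3: report them as suspected (not proven) explanations.
    """
    return [rule.explanation for rule in rules if rule.phenomenon == observed]


# Illustrative rule base (assumed, not taken from the article).
RULES = [
    Rule("heater_failure", "temperature_drop"),
    Rule("open_vent", "temperature_drop"),
    Rule("pump_failure", "low_water_flow"),
]

suspects = abduce("temperature_drop", RULES)
print(suspects)  # ['heater_failure', 'open_vent']
```

Note that the result is a set of candidate explanations rather than a conclusion: abduction is ampliative, so a further ranking or testing step would be needed to select among the suspects.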

5.2. Future Research Opportunities

Funding

Acknowledgments

Conflicts of Interest

© 2018 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Horváth, I. A Computational Framework for Procedural Abduction Done by Smart Cyber-Physical Systems. Designs 2019, 3, 1. https://doi.org/10.3390/designs3010001