Comparative Assessment of Large Language Models in Optics and Refractive Surgery: Performance on Multiple-Choice Questions

Abstract

1. Introduction

2. Methods

2.1. Large Language Model-Based Software

2.2. Evaluation Dataset

2.3. Statistical Analysis

3. Results

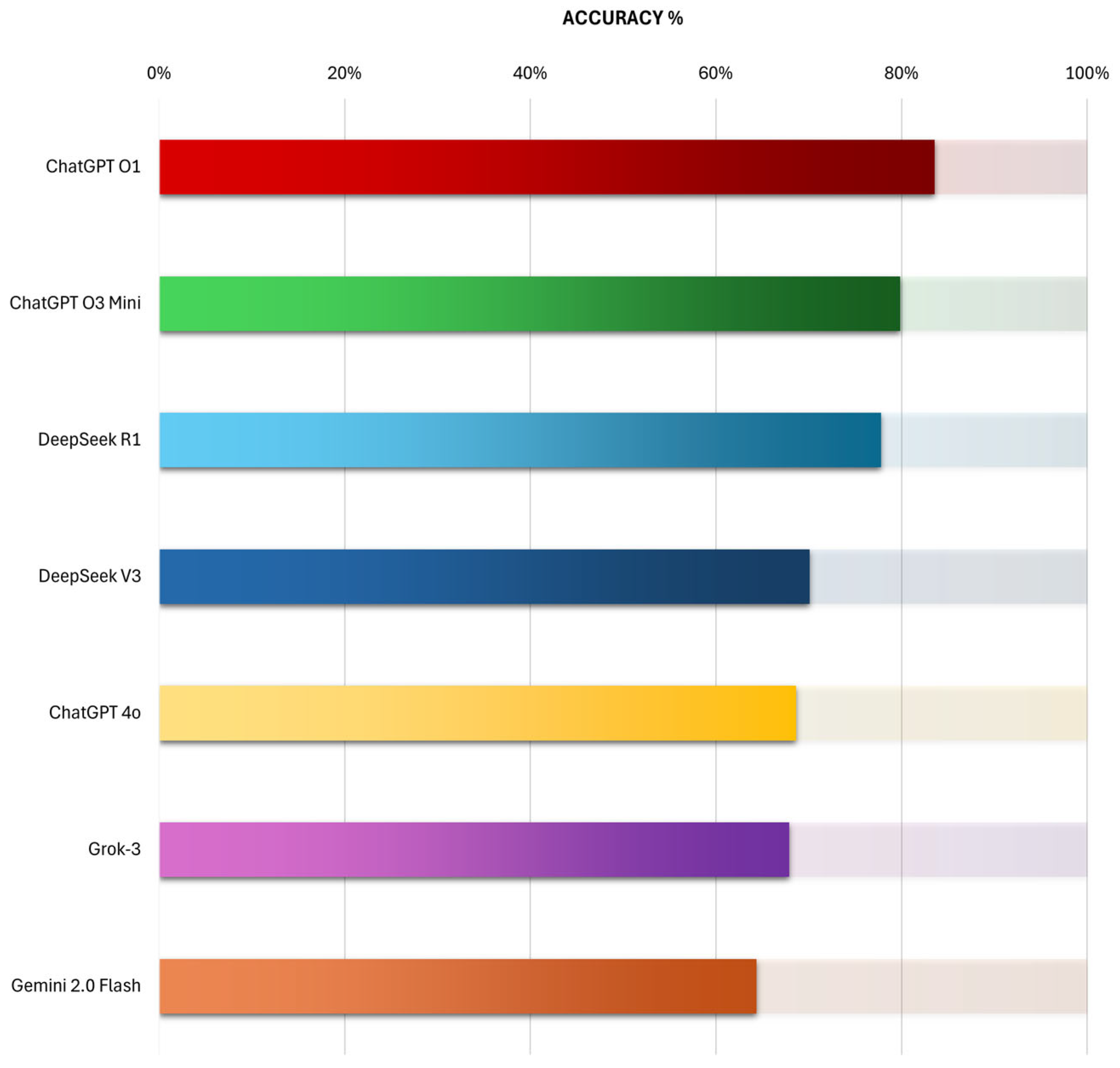

3.1. Overall Performance

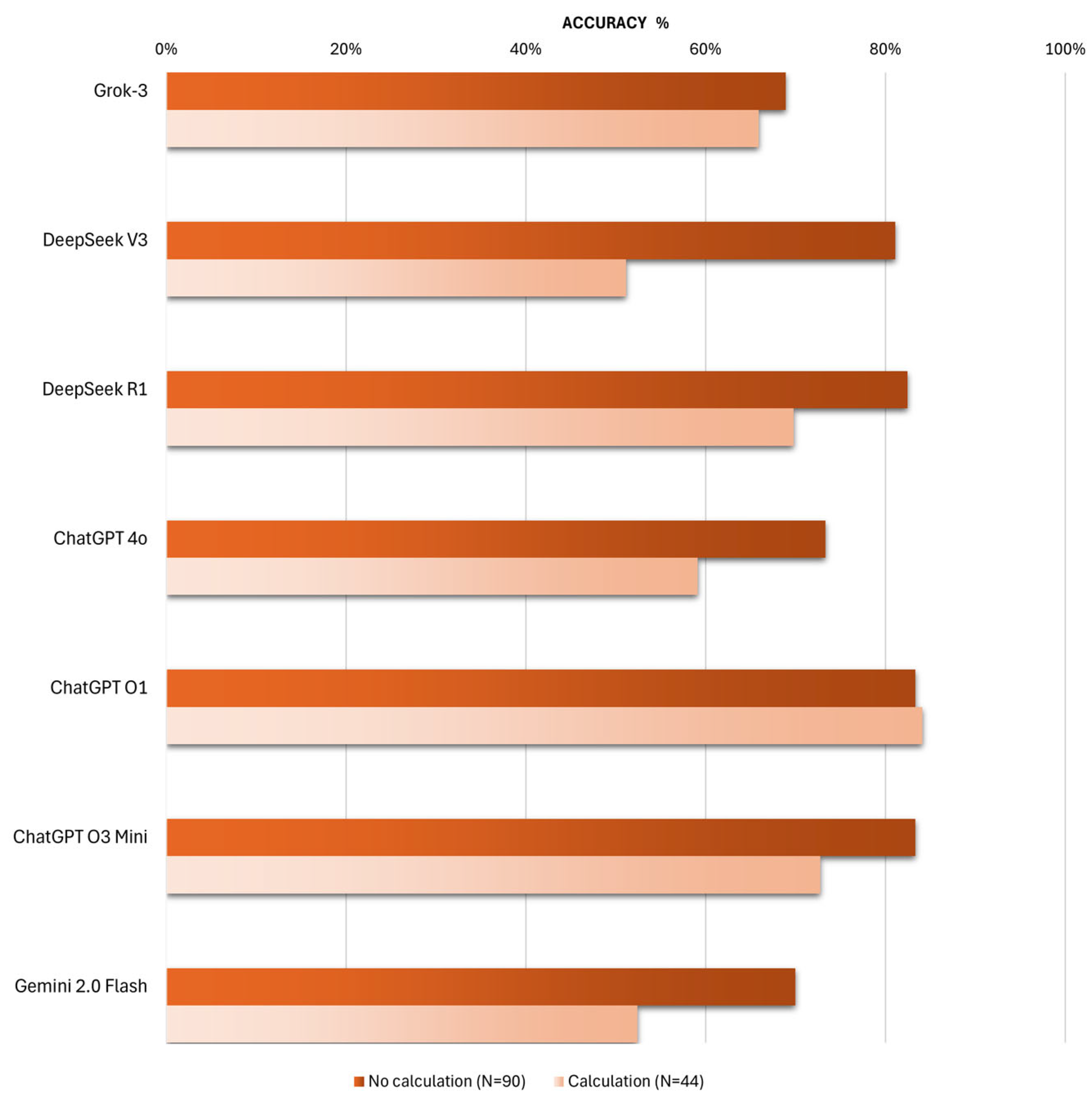

3.2. Performance by Need for Calculations

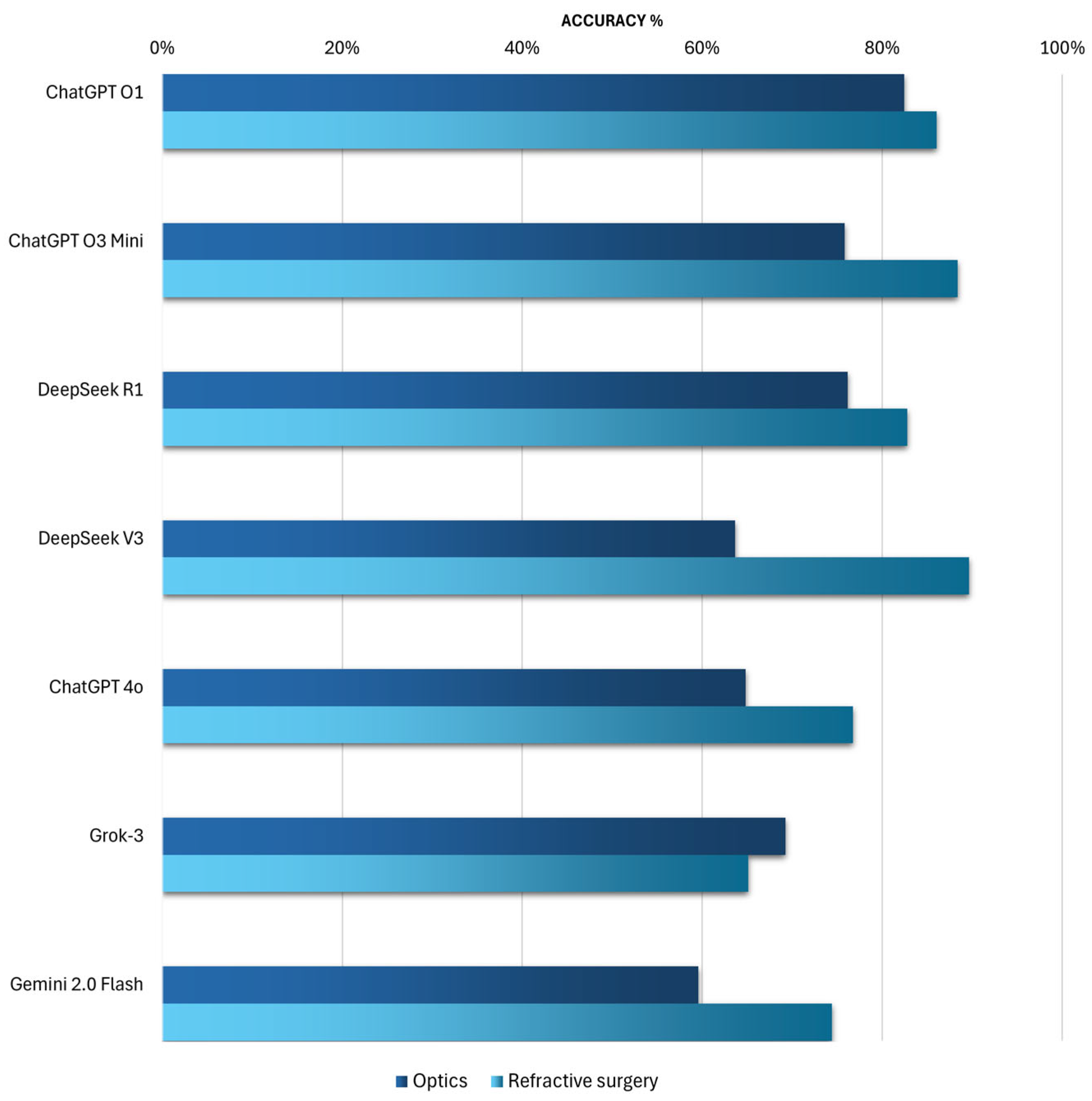

3.3. Performance by Subspecialty

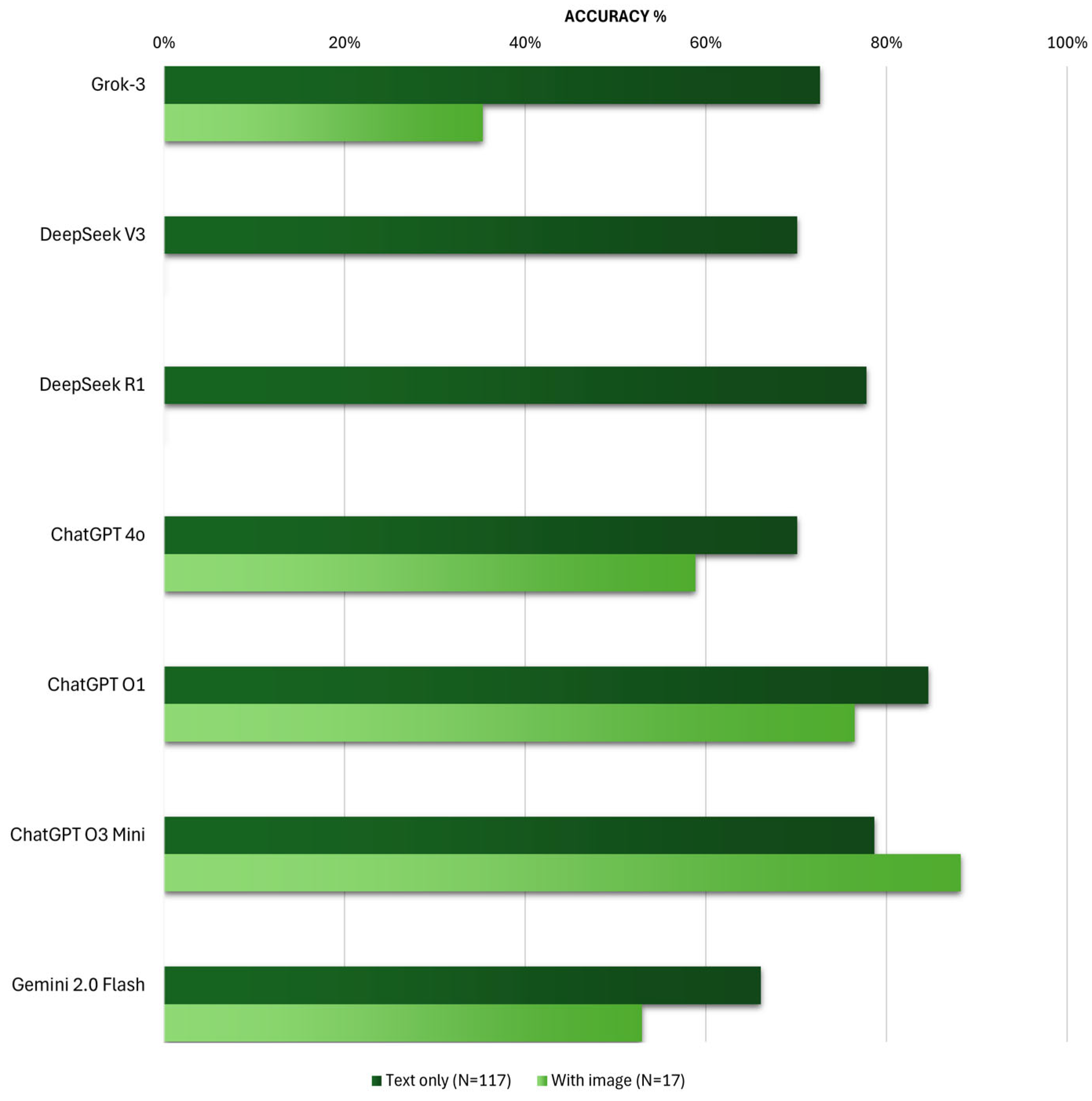

3.4. Performance by Question Format

4. Discussion

4.1. Comparative Accuracy of LLMs in Optics and Refractive Surgery

4.2. Evaluation of LLMs for Calculation-Based Questions

4.3. Comparative Performance of LLMs Across Subspecialties

4.4. Evaluation of Image Processing Capabilities

4.5. AI and Optics: A New Era in Medical Education

4.6. Limitations

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

| Baseline | Accuracy | Contender | Accuracy | All Responses | Odds Ratio | 95% CI | p-Value | Phi (Φ) |
|---|---|---|---|---|---|---|---|---|
| Overall comparison | | | | | | | | |
| ChatGPT O1 | 112/134 (83.6%) | Grok-3 | 91/134 (67.9%) | 268 | 0.42 | 0.23–0.75 | 0.003 | 0.19 |
| | | DeepSeek V3 | 82/117 (70.1%) | 251 | 0.47 | 0.25–0.84 | 0.011 | 0.17 |
| | | DeepSeek R1 | 91/117 (77.8%) | 251 | 0.69 | 0.37–1.29 | 0.244 | 0.08 |
| | | ChatGPT 4o | 92/134 (68.7%) | 268 | 0.44 | 0.24–0.77 | 0.005 | 0.18 |
| | | ChatGPT O3 Mini | 107/134 (79.9%) | 268 | 0.78 | 0.42–1.45 | 0.430 | 0.05 |
| | | Gemini 2.0 Flash | 85/132 (64.4%) | 266 | 0.36 | 0.2–0.63 | <0.001 | 0.22 |
| Comparison by topics | | | | | | | | |
| ChatGPT O1 (Optics) | 75/91 (82.4%) | Grok-3 | 63/91 (69.2%) | 182 | 0.48 | 0.24–0.97 | 0.038 | 0.16 |
| | | DeepSeek V3 | 56/88 (63.6%) | 179 | 0.38 | 0.19–0.75 | 0.005 | 0.22 |
| | | DeepSeek R1 | 67/88 (76.1%) | 179 | 0.69 | 0.33–1.41 | 0.300 | 0.08 |
| | | ChatGPT 4o | 59/91 (64.8%) | 182 | 0.40 | 0.2–0.78 | 0.008 | 0.20 |
| | | ChatGPT O3 Mini | 69/91 (75.8%) | 182 | 0.67 | 0.32–1.38 | 0.274 | 0.09 |
| | | Gemini 2.0 Flash | 53/89 (59.6%) | 180 | 0.32 | 0.16–0.62 | <0.001 | 0.26 |
| ChatGPT O1 (Refractive) | 37/43 (86%) | Grok-3 | 28/43 (65.1%) | 86 | 0.31 | 0.1–0.88 | 0.024 | 0.25 |
| | | DeepSeek V3 | 26/29 (89.7%) | 72 | 1.41 | 0.32–6.14 | 0.650 | 0.06 |
| | | DeepSeek R1 | 24/29 (82.8%) | 72 | 0.78 | 0.21–2.84 | 0.704 | 0.05 |
| | | ChatGPT 4o | 33/43 (76.7%) | 86 | 0.54 | 0.18–1.63 | 0.268 | 0.12 |
| | | ChatGPT O3 Mini | 38/43 (88.4%) | 86 | 1.24 | 0.35–4.39 | 0.747 | 0.04 |
| | | Gemini 2.0 Flash | 32/43 (74.4%) | 86 | 0.48 | 0.16–1.42 | 0.176 | 0.15 |
| Comparison by calculation-based questions | | | | | | | | |
| ChatGPT O1 (No calculation) | 75/90 (83.3%) | Grok-3 | 62/90 (68.9%) | 180 | 0.45 | 0.22–0.9 | 0.024 | 0.17 |
| | | DeepSeek V3 | 60/74 (81.1%) | 164 | 0.86 | 0.38–1.91 | 0.707 | 0.03 |
| | | DeepSeek R1 | 61/74 (82.4%) | 164 | 0.94 | 0.41–2.12 | 0.879 | 0.02 |
| | | ChatGPT 4o | 66/90 (73.3%) | 180 | 0.55 | 0.27–1.14 | 0.104 | 0.13 |
| | | ChatGPT O3 Mini | 75/90 (83.3%) | 180 | 1.00 | 0.46–2.19 | 1.000 | 0.00 |
| | | Gemini 2.0 Flash | 63/90 (70%) | 180 | 0.47 | 0.23–0.95 | 0.035 | 0.16 |
| ChatGPT O1 (Calculation) | | Grok-3 | 29/44 (65.9%) | 88 | 0.37 | 0.13–1.01 | 0.049 | 0.21 |
| | | DeepSeek V3 | 22/43 (51.2%) | 87 | 0.20 | 0.07–0.54 | 0.002 | 0.36 |
| | | DeepSeek R1 | 30/43 (69.8%) | 87 | 0.44 | 0.15–1.23 | 0.113 | 0.18 |
| | | ChatGPT 4o | 26/44 (59.1%) | 88 | 0.28 | 0.1–0.75 | 0.010 | 0.28 |
| | | ChatGPT O3 Mini | 32/44 (72.7%) | 88 | 0.51 | 0.18–1.44 | 0.196 | 0.14 |
| | | Gemini 2.0 Flash | 22/42 (52.4%) | 86 | 0.21 | 0.08–0.57 | 0.002 | 0.35 |
| Comparison of text-only vs. image-based questions | | | | | | | | |
| ChatGPT O1 (Text only) | 99/117 (84.6%) | Grok-3 | 85/117 (72.6%) | 234 | 0.49 | 0.25–0.92 | 0.026 | 0.15 |
| | | DeepSeek V3 | 82/117 (70.1%) | 234 | 0.43 | 0.22–0.81 | 0.008 | 0.18 |
| | | DeepSeek R1 | 91/117 (77.8%) | 234 | 0.64 | 0.33–1.24 | 0.181 | 0.09 |
| | | ChatGPT 4o | 82/117 (70.1%) | 234 | 0.43 | 0.22–0.81 | 0.008 | 0.18 |
| | | ChatGPT O3 Mini | 92/117 (78.6%) | 234 | 0.67 | 0.34–1.31 | 0.238 | 0.08 |
| | | Gemini 2.0 Flash | 76/115 (66.1%) | 232 | 0.36 | 0.19–0.67 | 0.002 | 0.22 |
| ChatGPT O1 (With image) | 13/17 (76.5%) | Grok-3 | 6/17 (35.3%) | 34 | 0.17 | 0.04–0.75 | 0.016 | 0.42 |
| | | DeepSeek V3 | - | 17 | - | - | - | - |
| | | DeepSeek R1 | - | 17 | - | - | - | - |
| | | ChatGPT 4o | 10/17 (58.8%) | 34 | 0.44 | 0.1–1.93 | 0.272 | 0.19 |
| | | ChatGPT O3 Mini | 15/17 (88.2%) | 34 | 2.31 | 0.36–14.72 | 0.369 | 0.16 |
| | | Gemini 2.0 Flash | 9/17 (52.9%) | 34 | 0.35 | 0.08–1.51 | 0.152 | 0.25 |
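
The odds ratios and confidence intervals in the table can be sanity-checked from the raw counts. The sketch below is only an illustration: the text of the statistical methods section is not available here, so the Wald (log-scale) interval is an assumption rather than the authors' confirmed procedure, and the function name `odds_ratio_with_ci` is hypothetical. It treats the odds ratio as the contender's odds of a correct answer divided by the baseline's odds.

```python
import math


def odds_ratio_with_ci(base_correct, base_total, cont_correct, cont_total, z=1.96):
    """Odds ratio (contender vs. baseline) with a Wald confidence interval.

    Assumption: a standard log-scale Wald interval; the paper may have
    used a different method (for example, an exact interval).
    """
    a = cont_correct                 # contender, correct
    b = cont_total - cont_correct    # contender, incorrect
    c = base_correct                 # baseline, correct
    d = base_total - base_correct    # baseline, incorrect
    if min(a, b, c, d) == 0:
        raise ValueError("a zero cell makes the Wald interval undefined")

    odds_ratio = (a / b) / (c / d)
    # Standard error of log(OR) for a 2x2 table.
    se = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    log_or = math.log(odds_ratio)
    return odds_ratio, math.exp(log_or - z * se), math.exp(log_or + z * se)


# First row of the table: ChatGPT O1 (112/134) vs. Grok-3 (91/134).
or_value, ci_low, ci_high = odds_ratio_with_ci(112, 134, 91, 134)
print(f"OR = {or_value:.2f}, 95% CI {ci_low:.2f} to {ci_high:.2f}")
```

Under these assumptions the first row's counts reproduce the reported odds ratio and interval to two decimal places. Rows with very small cells (such as the image-based subset of 17 questions) are where a Wald interval is least reliable, so small discrepancies there would not be surprising.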

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Attal, L.; Shvartz, E.; Gorenshtein, A.; Pincovich, S.; Bahir, D. Comparative Assessment of Large Language Models in Optics and Refractive Surgery: Performance on Multiple-Choice Questions. Vision 2025, 9, 85. https://doi.org/10.3390/vision9040085
