1. Introduction

The capacity limits of various perceptual and cognitive processes have been, and continue to be, important topics of study in psychological research. From the classic work of Miller [1], who established the “magic number” seven for the capacity of working memory, to Cowan’s work [2] on visual working memory capacity, quantifying these capacities is paramount in establishing the capabilities and limitations of the human brain. While Miller’s and Cowan’s work was unimodal in nature, more recent research has focused on how presentation of stimuli in multiple modalities can influence perception of individual stimuli. This includes numerous combinations of modalities, including the integration of auditory and visual information. Some of the earliest work on audiovisual integration was that of Sumby and Pollack [3], who found that speech perception in a noisy environment is made easier by being able to watch the speaker’s lips. The demonstration of stimuli in one modality promoting better perception in a different modality led to a burgeoning field of research, much of which will be discussed within this special issue of Vision.

Multisensory integration plays an important role in the way we perceive and interact with the world. Attending to a single stimulus in our noisy environment can be challenging but can be facilitated by the presentation of a simultaneous (or near-simultaneous) tone [4,5,6]. In a study by Van der Burg et al. [6] of the “pip and pop” effect, visual targets were presented among an array of similar distractor stimuli, in cases where it is normally very difficult to locate the target. They found that presenting a spatially uninformative auditory event drastically decreased the time needed for participants to complete this type of serial search task. These results demonstrated the possible involvement of stimulus-driven multisensory integration in attentional selection using a difficult visual search task. They also showed that presentation of a visual warning signal provided no facilitation in the pip-and-pop task, which indicates that the integration of an auditory tone with a specific visual presentation leads it to “pop out” of the display, allowing that particular visual presentation to be prioritized for further processing. Additional research showed that the observed facilitatory effect was not due to general alerting, nor was it due to top-down cueing of the visual change [7]. Instead, the authors proposed that the binding of synchronized audiovisual signals occurs rapidly, automatically, and effortlessly, with the auditory signal attaching to the visual signal relatively early in the perceptual process. As a result, the visual target becomes more salient within its dynamic, cluttered environment.

While much of the early work in audiovisual integration involved considering how temporal (e.g., [

8,

9,

10,

11]) and stimulus congruency (e.g., [

12,

13,

14]) factors play a role in one-to-one integration, recent attention has turned to quantifying the capacity of audiovisual integration. Van der Burg et al. [

15] suggested that the capacity is strictly limited to a single item, while work from our lab has proposed that the capacity is flexible, can exceed one, and modulates based on numerous factors [

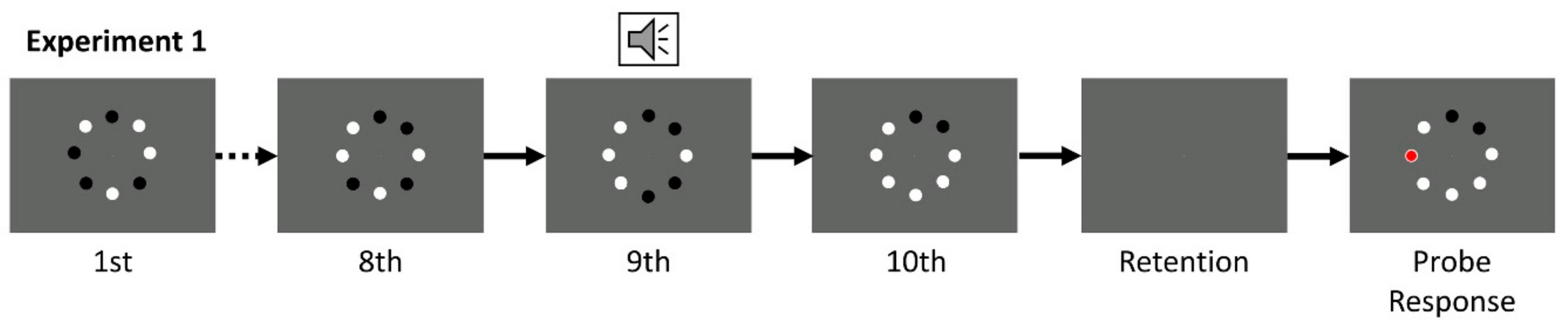

16,

17]. In this series of experiments, participants were presented with eight visual stimuli (dots) arranged along an imaginary circle. Each of these dots was initially black or white, and then a number of the dots (between one and four, dependent on trial) changed polarity from black to white, or vice versa. A total of 10 dot presentations occurred wherein a different subset of dots changed polarity, and on one of the dot presentations, a tone was presented, with the understanding that participants would be able to note which dots changed in synchrony with the tone through a process of audiovisual integration. Participants were asked to respond to a probed location, as to whether the dot in that location did (or did not) change in synchrony with the tone. Results from our work showed that participants were able to integrate more than one visual stimulus with an auditory stimulus, and that capacity modulated as a function of factors such as cross-modal congruency or dots being connected by real or illusory polygons. The specific improvement provided by audiovisual integration in these studies was confirmed through comparison of audiovisual conditions to visual cueing conditions ([

16], experiment 5 [

18], experiments 1b and 2b). In these conditions, rather than a tone, participants were presented with a spatially uninformative visual stimulus to indicate the dots changing at the critical presentation. These visual cues were found to be inferior to audiovisual integration trials, confirming the unique contribution of audiovisual integration.

In considering the limitations of audiovisual integration, we have found that at particularly short durations of visual presentation, capacity was severely limited. This was the case in Van der Burg et al.’s work [15], where the rates of presentation were set at 150 or 200 ms. We replicated their findings at 200 ms and extended the findings with a 700 ms condition that led to capacity exceeding one item. In the majority of our experimental variations, we found that average capacity estimates in our populations did not exceed one item at 200 ms. We also confirmed that at this duration of presentation, there is no significant modulation in visual N1 amplitude when more than one dot was changing, suggesting that participants were unable to successfully perceive the incoming visual stimuli, and were therefore also unable to integrate more than one visual stimulus with an auditory stimulus ([16], experiment 4). That said, certain individuals did demonstrate the ability to integrate more than one item at the fastest rate of presentation. Putting aside the question of whether capacity can reliably exceed one item or not, our recent focus has been on understanding how other unimodal and multimodal processes may be able to account for a wide range of capacity differences between individuals. An initial investigation into this question revealed that we can account for as much as a quarter of the variation present in audiovisual integration capacity estimates by considering an individual’s ability to track multiple objects, their tendency for global precedence on a Navon task, and their susceptibility to location conflict and alerting on the Attention Network Test [19].

In seeking to go beyond these lab-based measures, and in attempting to account for more than a quarter of the variation in estimates of audiovisual integration capacity, the current research sought to examine a type of auditory expertise that may further contribute to an individual’s capacity: musical training. Lee and Noppeney [20] provided insight on how musicians’ brains are fine-tuned for predictive action based on audiovisual timing. The researchers hypothesized that, compared with non-musicians, musically-trained individuals should display a narrower window of temporal integration for audiovisual stimuli. Participants were asked to judge the audiovisual synchrony of speech sentences and piano music at 13 levels of stimulus-onset asynchrony, and musicians were found to have a narrower temporal integration window for music, but not for speech. These findings suggested that piano training narrows the temporal integration window selectively for musical stimuli. Bishop and Goebl [21] demonstrated that the ability to perceive audiovisual asynchronies is strengthened through musicianship, but most prominently when the asynchronous presentation involved the musician’s instrument (pianists performed best when shown asynchronous presentations of piano playing). Similarly, Petrini et al. [22] demonstrated the relationship between memory of learned actions and the ability to draw upon those memories to make associations between visual stimuli and auditory signals, even when one of the stimuli is incomplete. For instance, when hearing a particular snare or cymbal sound combined with the visual image of the drumstick hitting the drum, experienced drummers would perform better than novices in replicating the required movement.

Another study hypothesized that musicians, compared with non-musicians, would have a significantly enhanced temporal binding window, with greater accuracy on audiovisual integration tasks [23]. When discussing facets of multisensory perception such as the temporal binding window, it is commonly held that a ‘wider’ window (e.g., binding stimuli that are more disparate in time) is indicative of inferior function. Participants were subjected to a sound-induced flash illusion paradigm (cf. [24]). Of the two groups, the musicians showed lower susceptibility to perceiving the illusory flashes, particularly at shorter stimulus onset asynchronies (SOAs), where the illusion is most likely to occur. The results also showed that non-musicians’ temporal binding window was 2–3 times longer than that of musicians. A study by Landry and Champoux [25] further illustrated that trained musicians are fine-tuned to have stronger perceptual abilities than non-musicians. They pointed out that musicians have more developed cortical areas in the regions that process tactile, auditory and visual information, due to the impact of years of experience and training on neuroplasticity.

Talamini et al. [26] sought to determine whether the working memory capacity of musicians is advanced compared to that of non-musicians. Digit span recollection relies on the articulatory rehearsal loop, the phonological buffer, and subvocalization. Concurrent articulation (rehearsing anything else verbally while attempting to memorize a series of numerical or alphabetical structures) has been shown to reduce working memory capacity. The digit span test was administered to test the working memory of musicians vs. non-musicians, with musicians performing better than non-musicians when no concurrent articulation was required (but with musicians performing worse when concurrent articulation was required). Further to the study of audiovisual processing in musicians, Proverbio et al. [27] found that musicians are not subject to the McGurk effect [28], a perceptual illusion that incorrectly combines auditory with visual stimuli, in the same way non-musicians are. This ability to selectively integrate (or in the case of the McGurk effect, not integrate) auditory and visual information speaks further to the superiority of musicians in audiovisual processing.

Based on the extant literature, including some from our laboratory, it is clear that the ability to identify the location of dots that changed polarity at a specific moment is increased by the presentation of a simultaneous tone. The ability of individuals to integrate this tone with the visual stimuli presented is an example of multisensory modulation of vision. It is also clear that there are underlying abilities that may further modulate this capacity, and that one of these abilities that has been found to influence other forms of multisensory integration is having had musical training in one’s life. The current study seeks to examine whether an individual’s experience (or lack thereof) with musical training contributes to a greater capacity for integration of audiovisual information. We expect to find that audiovisual integration capacity will be increased for those individuals with a high level of musical experience, as compared to more musically naïve individuals. This hypothesis is based on the literature discussed above, which suggests that musical training leads to an improvement in performance on both auditory and audiovisual tasks.

3. Results

We calculated the proportion correct for each combination of conditions and for each participant, and then subjected the raw data to a model-fitting procedure analogous to Cowan’s K [2]. The data for this experiment are available at: https://osf.io/g3ks2/. According to this model, if the number of visual stimuli changing (n) is less than or equal to an individual’s capacity (K), the probability of successful integration approaches certainty (i.e., if $n \le K$, then $p = 1$). Under conditions where an individual’s capacity is less than the number of visual stimuli changing (i.e., $n > K$), the probability of integration is modelled based on the equation: $p = \frac{K}{2n} + 0.5$. The fitting procedure involves using K as the free parameter and optimizing this value to minimize root-mean-square error between the raw proportion of correct responses for each number of locations changing and the ideal model. As the fitting procedure uses the values for each number of locations changing, along with the proportion of correct responses for each condition, capacity estimates (K) are obtained for each duration of presentation with no further consideration of number of locations changing or proportion correct responding.
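The fitting procedure described above can be sketched in a few lines of Python. This is a minimal illustration only, not the authors' analysis code: the function names, the grid-search optimizer, the upper bound of four (the largest number of locations changing), and the example proportions are all assumptions made for the sketch.

```python
import math

def predicted_accuracy(k, n):
    """Model-predicted proportion correct for capacity k and n changing locations."""
    if n <= k:
        return 1.0               # all changing items fall within capacity
    return k / (2 * n) + 0.5     # p = K/(2n) + 0.5 when n > K

def rmse(k, observed):
    """Root-mean-square error between observed proportions and the model."""
    squared = [(p - predicted_accuracy(k, n)) ** 2 for n, p in observed.items()]
    return math.sqrt(sum(squared) / len(squared))

def fit_capacity(observed, k_max=4.0, step=0.001):
    """Grid-search the K in [0, k_max] that minimizes RMSE (assumed optimizer)."""
    steps = int(round(k_max / step))
    candidates = [i * step for i in range(steps + 1)]
    return min(candidates, key=lambda k: rmse(k, observed))

# Hypothetical proportions correct for 1-4 changing locations at one duration.
example = {1: 0.95, 2: 0.80, 3: 0.70, 4: 0.65}
k_hat = fit_capacity(example)
print(f"Estimated capacity K = {k_hat:.3f}")
```

Running `fit_capacity` once per participant and presentation duration would yield one capacity estimate per cell, which is the quantity carried forward into the analyses that follow.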

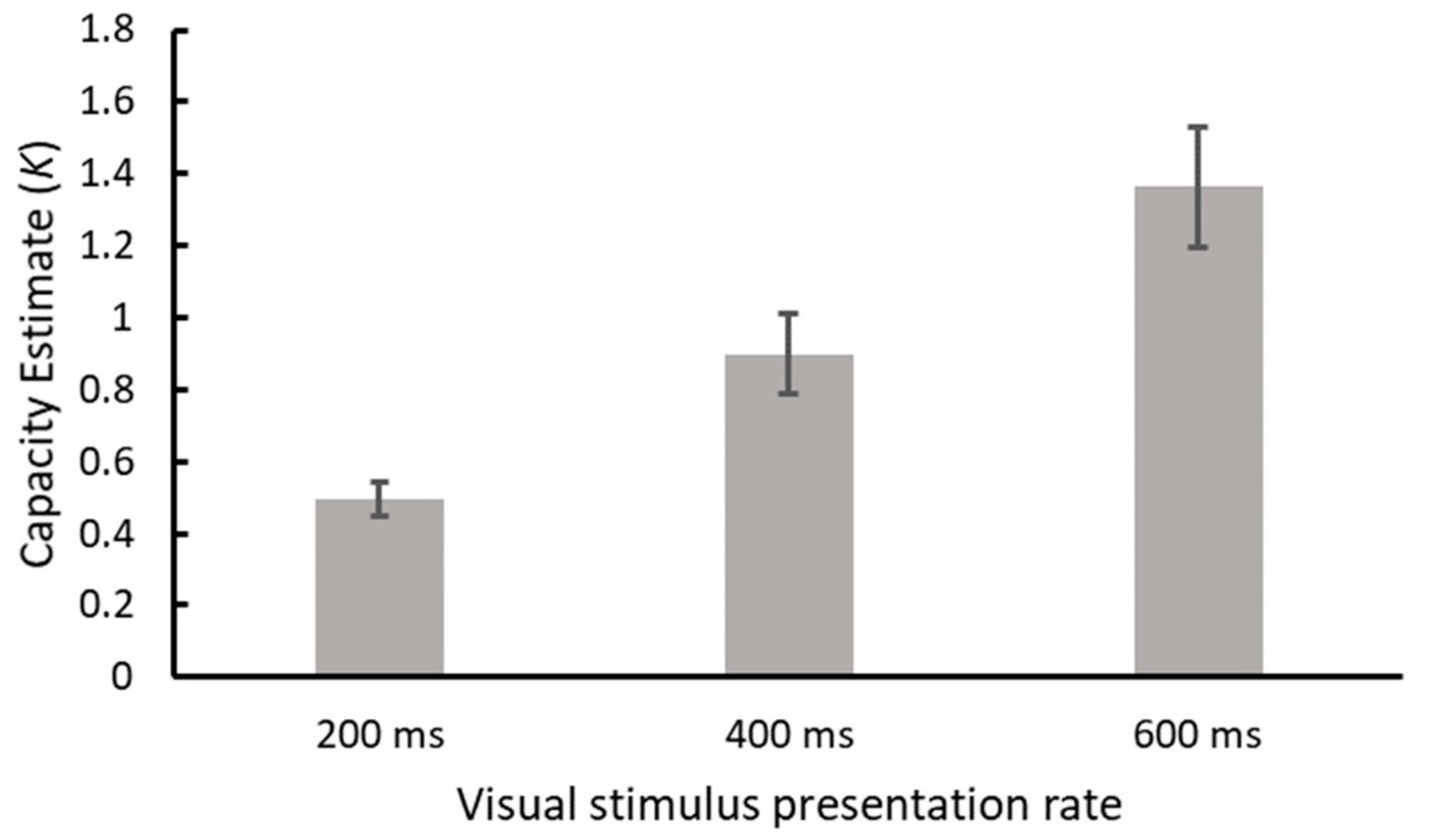

An initial analysis was conducted by means of one-way ANOVA examining capacity estimates for each duration of presentation. A significant main effect of presentation duration was observed, F(2,38) = 29.951, MSE = 0.126, p < 0.001, $\eta_p^2$ = 0.612, indicating an increase in capacity with slowing rates of presentation. Means and standard errors are displayed in Figure 2, and post hoc testing with Tukey’s HSD (p < 0.05) confirmed that each level of presentation duration yielded a capacity estimate that was significantly different from the others. Having established that capacity in this sample increased as a function of visual stimulus presentation duration, we subsequently determined whether an individual’s level of musical training was correlated with their capacity estimates. Capacity measures for each presentation duration (200, 400, and 600 ms) were entered into Spearman correlations with the following subscores from the Goldsmith MSI: active engagement, perceptual abilities, and musical training. Spearman correlations were used as the data were found to be non-parametric, and a Bonferroni-adjusted significance cut-off of p < 0.017 was employed, due to each score being used for three comparisons (0.05/3 ≈ 0.017). Full results of the correlations are displayed in Table 1. Only one correlation reached statistical significance: that between capacity at 200 ms and the musical training subscore (r = 0.536, p = 0.015). We expected to find significant correlations between capacity estimates and the other subscales of the MSI, but we will discuss further in the following section why this result is particularly interesting.
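The correlational step can be illustrated with a short sketch of a rank-based (Spearman) correlation and the Bonferroni-adjusted cut-off. The helper names are hypothetical, the software actually used for the analysis is not stated in the text, and the computation of the p value itself is omitted here; only the reported p = 0.015 is compared with the adjusted threshold.

```python
import math

def average_ranks(values):
    """Rank values from 1..N, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j across the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for idx in order[i:j + 1]:
            ranks[idx] = mean_rank
        i = j + 1
    return ranks

def spearman_rho(x, y):
    """Spearman correlation: the Pearson correlation of the two rank vectors."""
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(rx)
    mx, my = sum(rx) / n, sum(ry) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = math.sqrt(sum((a - mx) ** 2 for a in rx))
    sy = math.sqrt(sum((b - my) ** 2 for b in ry))
    return cov / (sx * sy)

def bonferroni_alpha(alpha, n_comparisons):
    """Per-test significance cut-off after Bonferroni adjustment."""
    return alpha / n_comparisons

threshold = bonferroni_alpha(0.05, 3)   # three comparisons per MSI subscore
reported_p = 0.015                      # capacity at 200 ms vs. musical training
significant = reported_p < threshold
print(f"cut-off = {threshold:.3f}, significant = {significant}")
```

Because Spearman's coefficient depends only on ranks, it is unaffected by monotonic transformations of either variable, which is why it suits data that violate the assumptions of a Pearson correlation.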

4. Discussion

The purpose of this study was to determine whether the modulation of visual perception through audiovisual integration would be further augmented by musical training. Our initial finding confirmed what we have found previously: that audiovisual integration capacity is flexible and increases as a function of slower rates of presentation. Our findings with regard to the relationship between capacity and musical training were more nuanced. Correlational analysis revealed that higher levels of musical training were associated with higher estimates of audiovisual integration capacity, specifically for the fastest (200 ms) duration of visual presentation. There was a small positive correlation between musical training and integration capacity at the other presentation rates, but only at 200 ms was the correlation statistically significant. While this was not exactly the pattern of results that we had expected, it is certainly one worth discussing. In our previous work, integration capacity at 200 ms was only able to exceed one item when it was further boosted by perceptual chunking of visual locations [17]. If individuals with higher levels of musical training have greater capacity estimates at 200 ms than individuals with less musical training, it would be worthwhile to recruit a group of highly trained musicians to see if this training allows them to reliably integrate more than one item at this relatively short duration of presentation. Previous research has shown that presenting stimuli with a greater perceptual load, as indexed by number of stimuli [30,31] or presentation rate [32], leads to decreases in performance on tasks related to perception. Our previous work has shown that reducing the effective number of items to be tracked leads to an increase in capacity estimates [17,18], suggesting that perceptual load plays a similar role in audiovisual integration. Additionally, our previous research with electrophysiological recording showed that participants were unable to process incoming information sufficiently at a presentation duration of 200 ms, as indexed by a lack of modulation in N1 amplitude [16]. While perceptual load seems to serve as a limiting factor in an individual’s capacity to integrate auditory and visual information, the current data suggest that musical training may allow an individual to overcome this challenge. This could be confirmed in future studies by conducting an EEG study on trained musicians, compared to a group of non-musicians, and comparing components such as the N1 or contralateral delay activity (CDA; [31]).

The “pip-and-pop” effect [6], on which the current research is loosely based, is described as being automatic, rapid, and effortless in individuals being presented with stimuli. That is to say, it occurs outside of the individual’s conscious understanding, at a perceptual level. This is in accord with what we have found previously in both behavioural [17,18] and electrophysiological [16] work. While this effect may well be automatic, it is also likely that previous experience and expertise would have an effect on an individual’s ability to integrate auditory and visual information. In recently completed work [19], we determined that a number of perceptual and cognitive functions can be shown to contribute to individual differences in audiovisual integration capacity. While these relatively low-level effects have been found to play a role, the current research has shown that higher level factors such as lifelong training can also play a role. While this is the first such experiment to show differences in audiovisual integration capacity related to musical training, there is previous literature showing similar differences in audiovisual synchrony perception [21], width of temporal binding window [23], McGurk susceptibility [27], and working memory capacity [26].

It would be of interest, moving forward, to employ more ecologically valid stimuli, as it has been shown that results from experiments using artificial tones do not generalize to natural sounds [33,34]. For example, the current experiment used a pure tone (single frequency), but it would be of additional interest to use intermediate types of tones, such as instrumental tones and other ecologically valid stimuli, which might also further promote the advantage of musicians, as they would likely have more experience with such stimuli through their practice as musicians. This would also be in alignment with work from Lee and Noppeney [20], who used musical stimuli in their experiments and found that musically trained individuals had a narrower (that is, superior) temporal binding window than those without musical training. Our findings showed limited differences between individuals with relatively greater or lesser amounts of musical training on an audiovisual integration capacity task using abstract stimuli (sine tones and dots). It follows that we may observe greater differences based on musical training if we were to employ more ecologically valid stimuli.

The current experiment is part of an ongoing program of research in our lab in which we are seeking to identify factors that may influence variability in audiovisual integration capacity. In addition to the previously completed research on underlying perceptual and cognitive factors [19], work is underway to examine differences stemming from variability in traits related to autism spectrum disorder, as well as major depressive disorder. This work is currently in the initial stages, but the data will first be used in building an increasingly efficient predictive model of audiovisual integration capacity. Having done so, it will be of interest to consider applications of audiovisual integration capacity as a potential early diagnostic system for autism, as the sensory and perceptual differences in autism can, in many cases, be observed earlier than traditional diagnostic systems can be employed. This is of utmost importance in the field of autism spectrum disorder, as earlier diagnosis of ASD has been associated with better treatment outcomes in the long term [35]. The results of the current experiment, however, add to the corpus of literature showing that vision can be affected positively by other sensory inputs, and that this phenomenon can be further modulated through the lifetime experience of an individual.