Tennis Timing Assessment by a Machine Learning-Based Acoustic Detection System: A Pilot Study

Abstract

1. Introduction

2. Materials and Methods

2.1. Data Collection

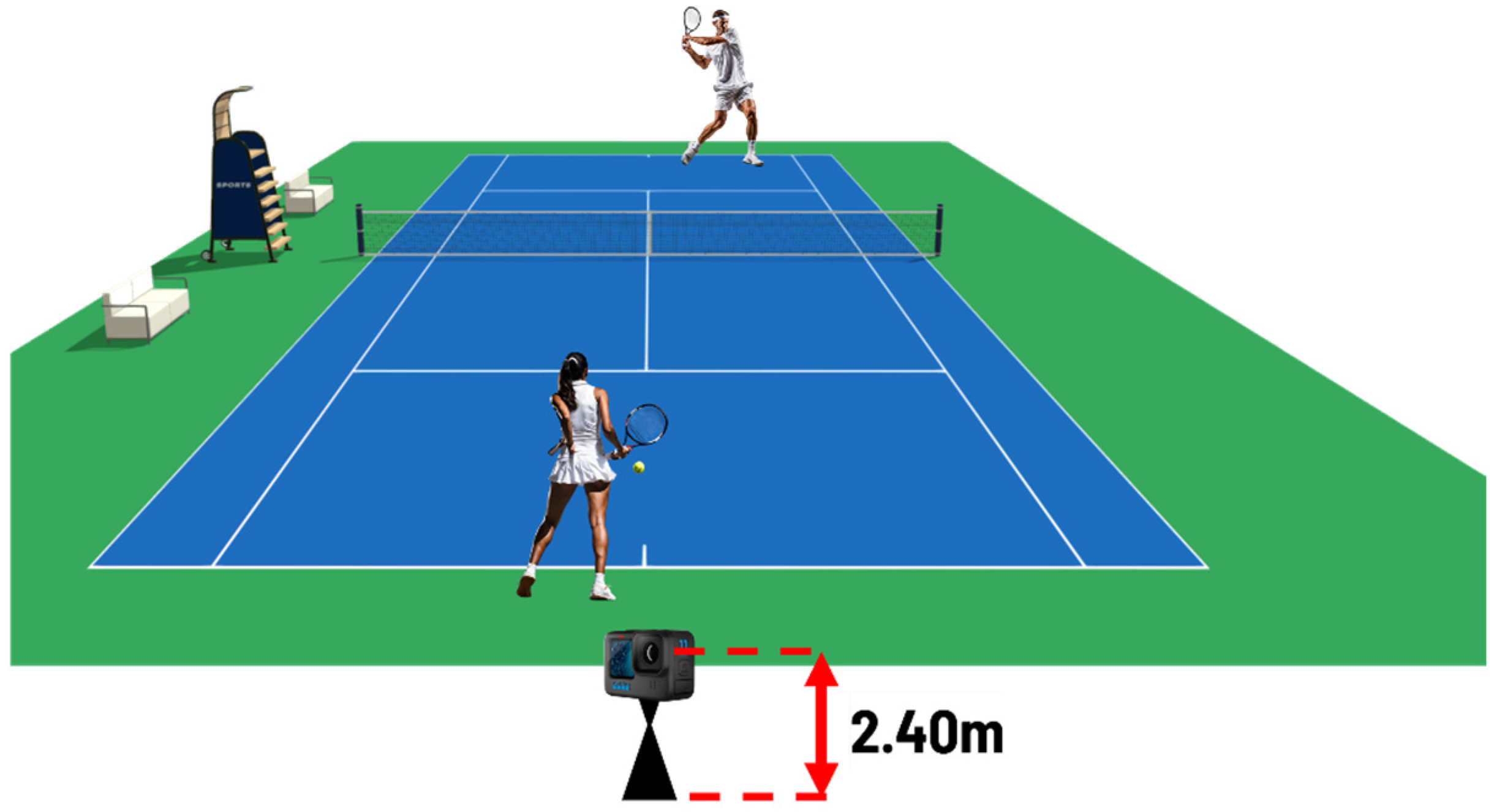

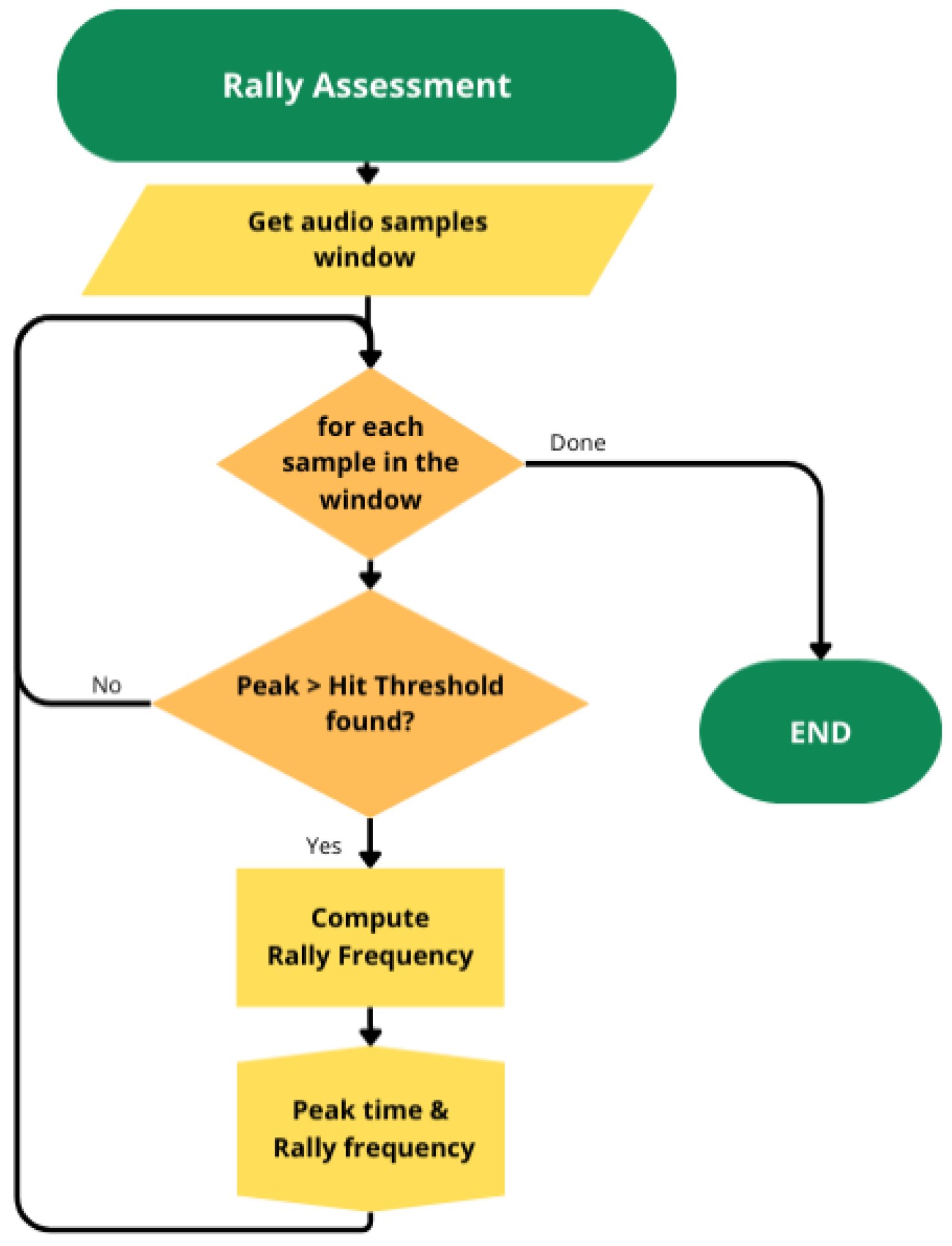

2.1.1. Rally Assessment

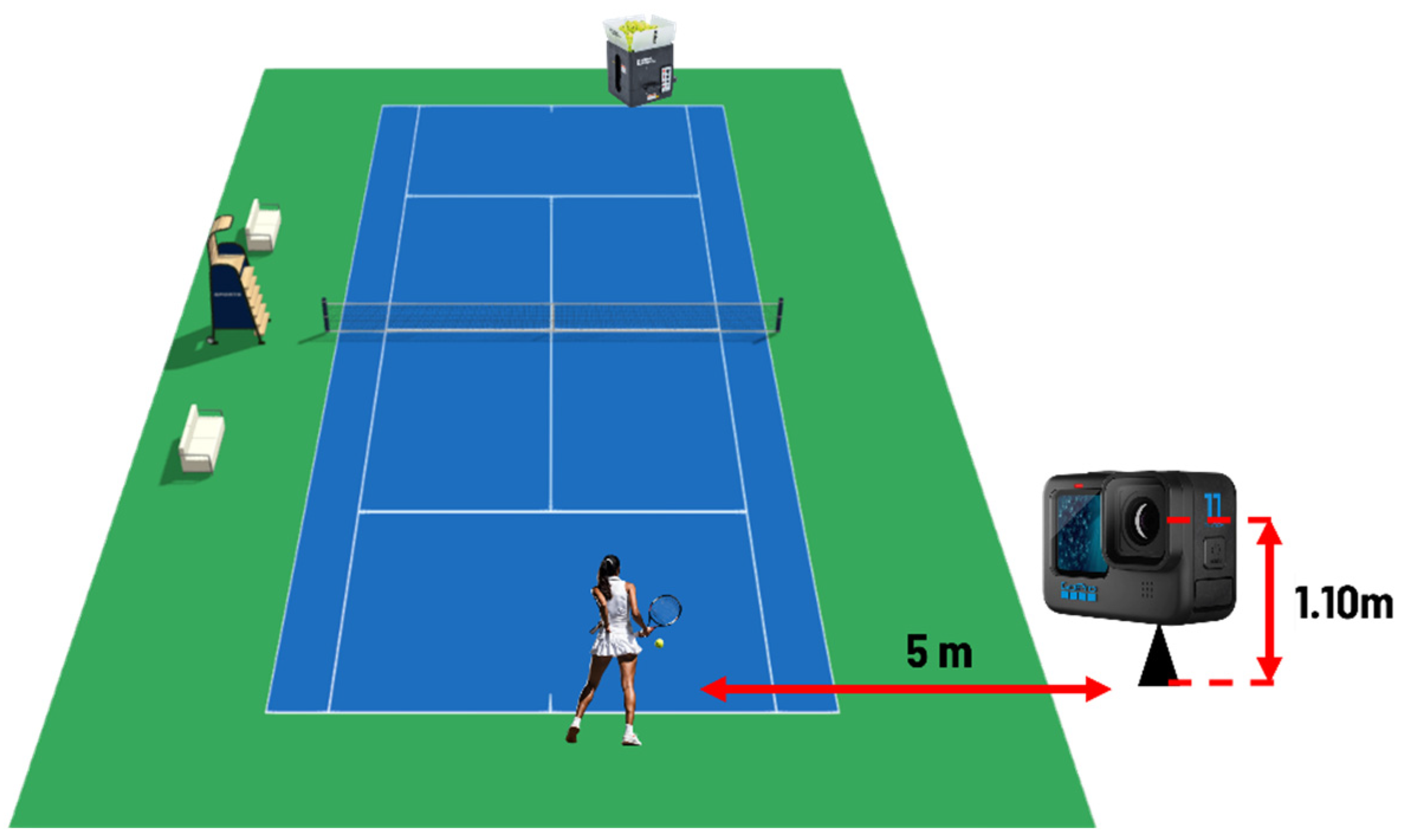

2.1.2. Groundstrokes Assessment

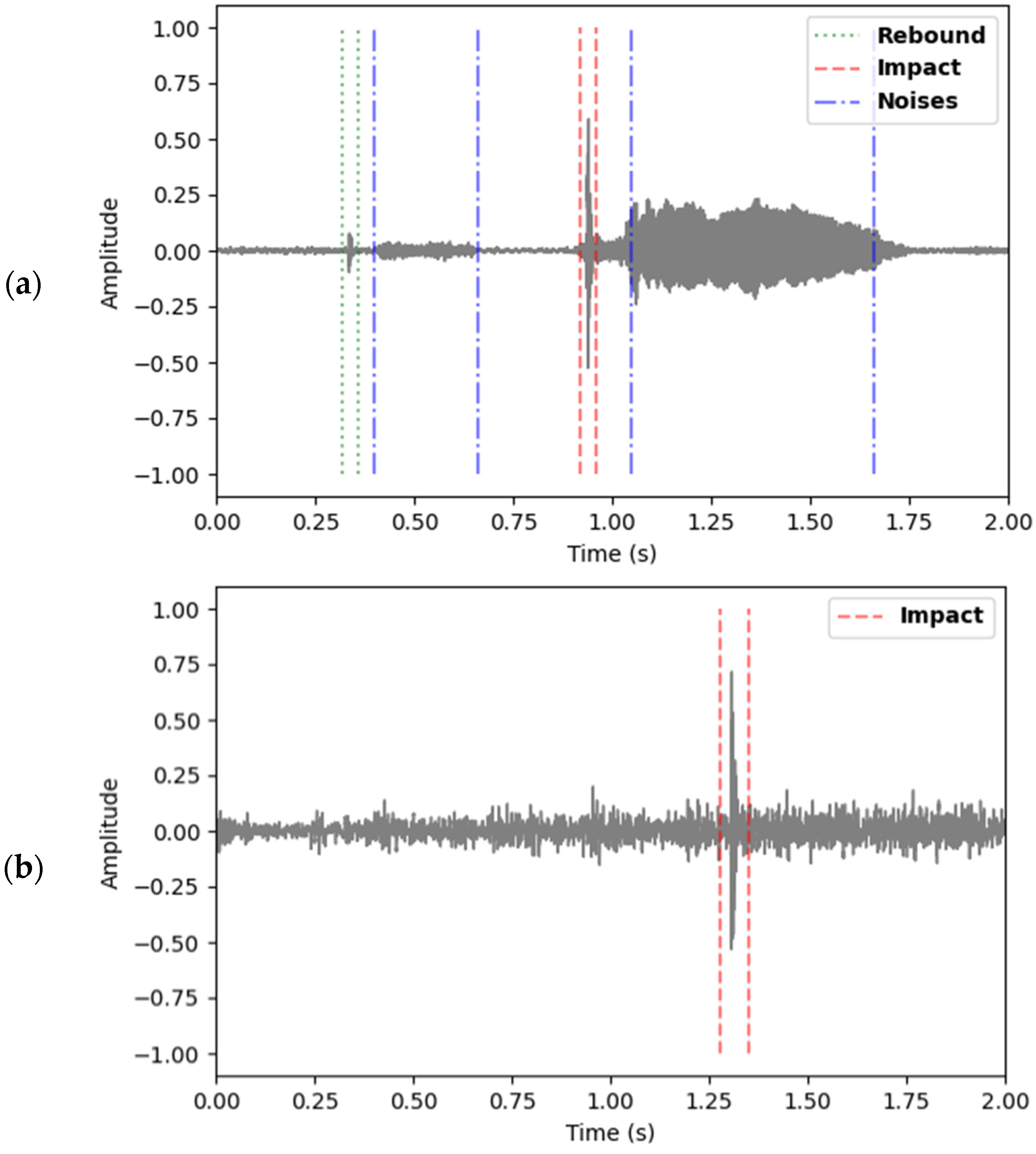

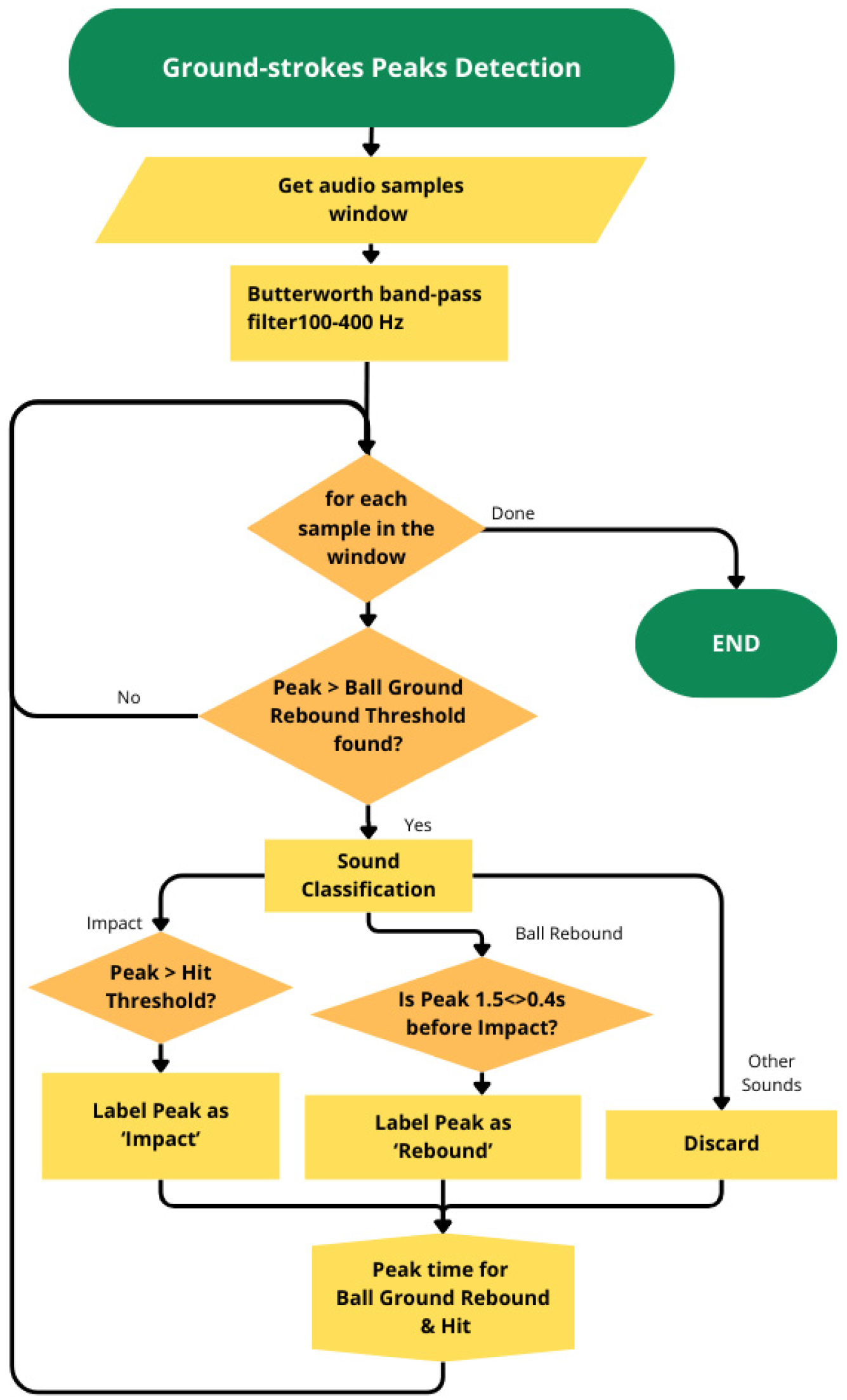

2.2. Peaks Detection

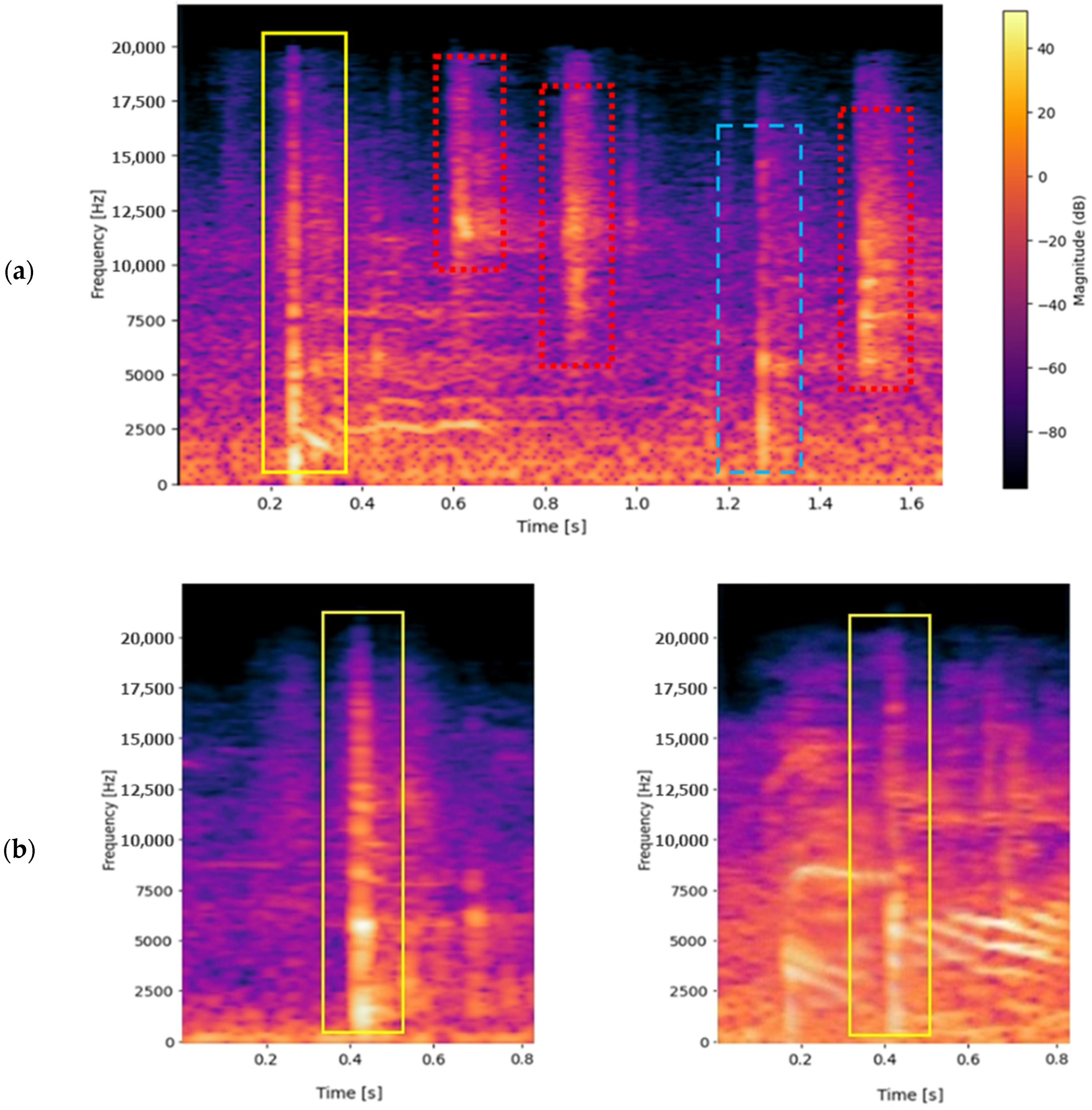

2.3. Machine Learning Sound Classification

2.3.1. Data Collection and Feature Extraction

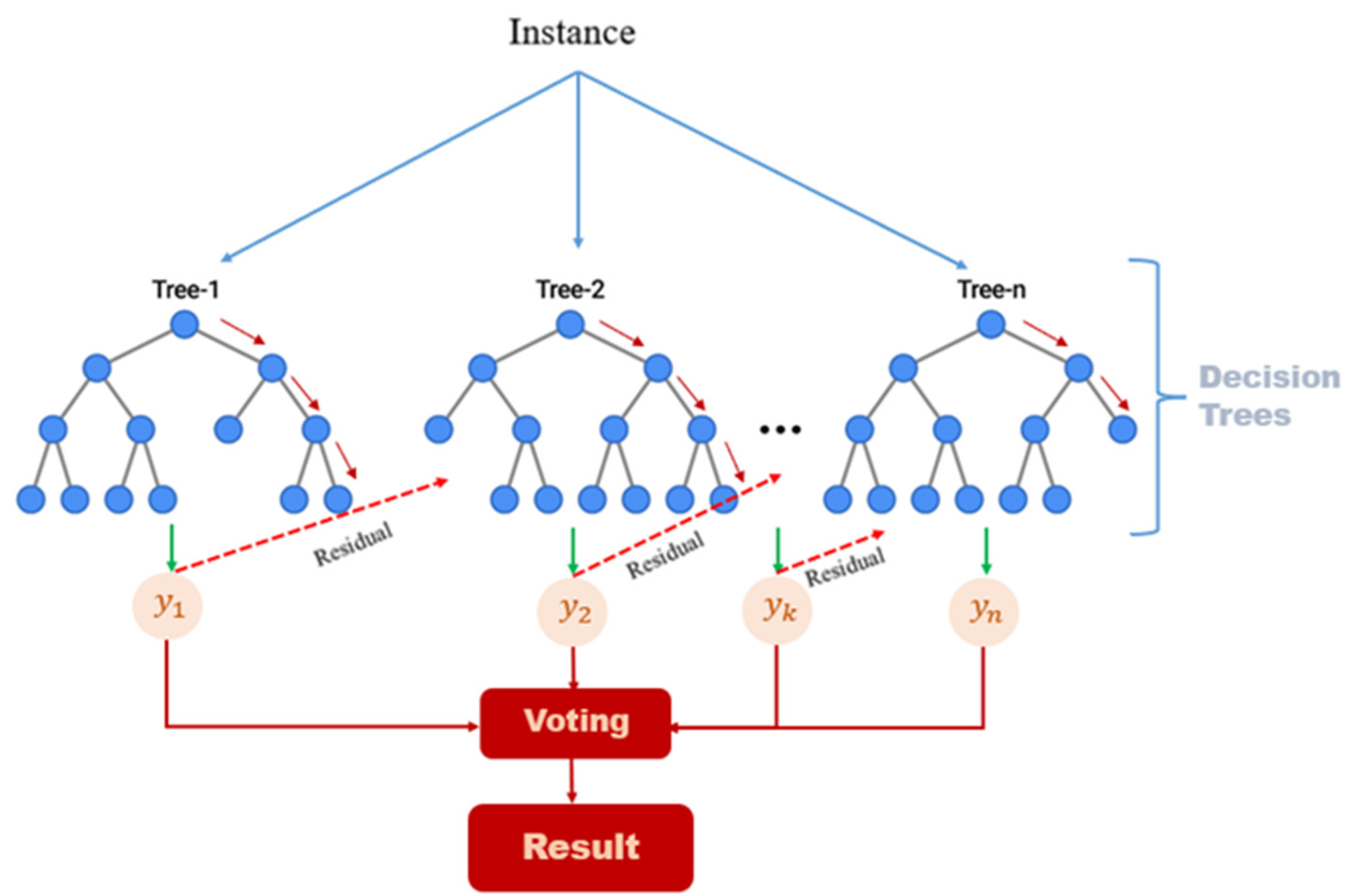

2.3.2. Sound Classification Using Machine Learning

2.3.3. Model Training

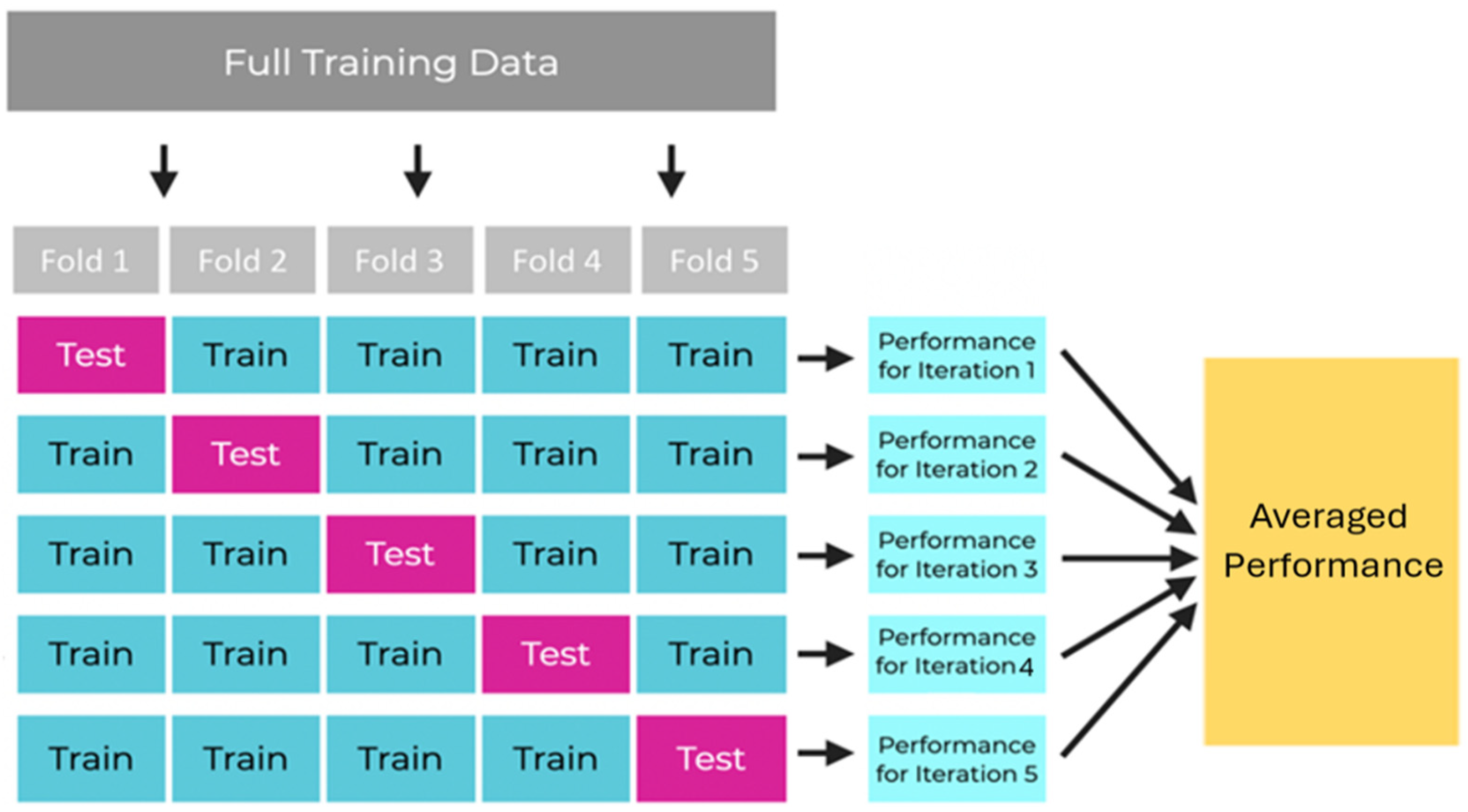

2.3.4. Cross-Validation to Avoid Overfitting

- Split the data into five folds.

- Train the model on four of the five folds (i.e., on all folds except one).

- Evaluate the model on the remaining (fifth) fold by computing its accuracy.

- Rotate the folds and repeat the training and evaluation steps with a new holdout fold, until each of the five folds has been used as the holdout fold exactly once.

- Average the model accuracy across all five iterations.
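The five-step procedure above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the authors' implementation. The nearest-centroid classifier (`fit_centroids`, `predict_centroids`) and the toy two-dimensional feature vectors are hypothetical stand-ins for the trained sound classifiers and extracted audio features. Assigning folds by shuffling once and then taking every fifth index is only one common way to build folds.

```python
import random
from statistics import mean


def make_folds(n_samples, k=5, seed=0):
    """Shuffle the sample indices once, then deal them into k near-equal folds."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    return [indices[fold::k] for fold in range(k)]


def cross_validate(X, y, fit, predict, k=5, seed=0):
    """Return the mean holdout accuracy over k train/evaluate rotations."""
    folds = make_folds(len(X), k, seed)
    accuracies = []
    for holdout in range(k):
        test_idx = folds[holdout]
        # Train on the k - 1 folds that are not held out.
        train_idx = [i for f in range(k) if f != holdout for i in folds[f]]
        model = fit([X[i] for i in train_idx], [y[i] for i in train_idx])
        # Evaluate on the holdout fold by computing accuracy.
        predictions = predict(model, [X[i] for i in test_idx])
        correct = sum(p == y[i] for p, i in zip(predictions, test_idx))
        accuracies.append(correct / len(test_idx))
    # Average the per-fold accuracies.
    return mean(accuracies)


# Hypothetical stand-in classifier: assign each sample to the class whose
# mean feature vector (centroid) is closest in squared Euclidean distance.
def fit_centroids(X, y):
    by_class = {}
    for features, label in zip(X, y):
        by_class.setdefault(label, []).append(features)
    return {label: [mean(col) for col in zip(*rows)] for label, rows in by_class.items()}


def predict_centroids(centroids, X):
    def sq_dist(a, b):
        return sum((ai - bi) ** 2 for ai, bi in zip(a, b))
    return [min(centroids, key=lambda label: sq_dist(x, centroids[label])) for x in X]


if __name__ == "__main__":
    # Toy, clearly separated feature vectors (hypothetical, not study data).
    X = [(0.01 * i, 0.0) for i in range(10)] + [(5.0 + 0.01 * i, 5.0) for i in range(10)]
    y = ["rebound"] * 10 + ["impact"] * 10
    print(cross_validate(X, y, fit_centroids, predict_centroids))  # prints 1.0
```

Because every sample is held out exactly once, the averaged accuracy estimates performance on sounds the model has not seen during training. A stratified split, which keeps class proportions equal across folds, is a common refinement when the classes are imbalanced.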

2.4. System Accuracy

2.5. Statistical Analysis

3. Results

3.1. Machine Learning Processing

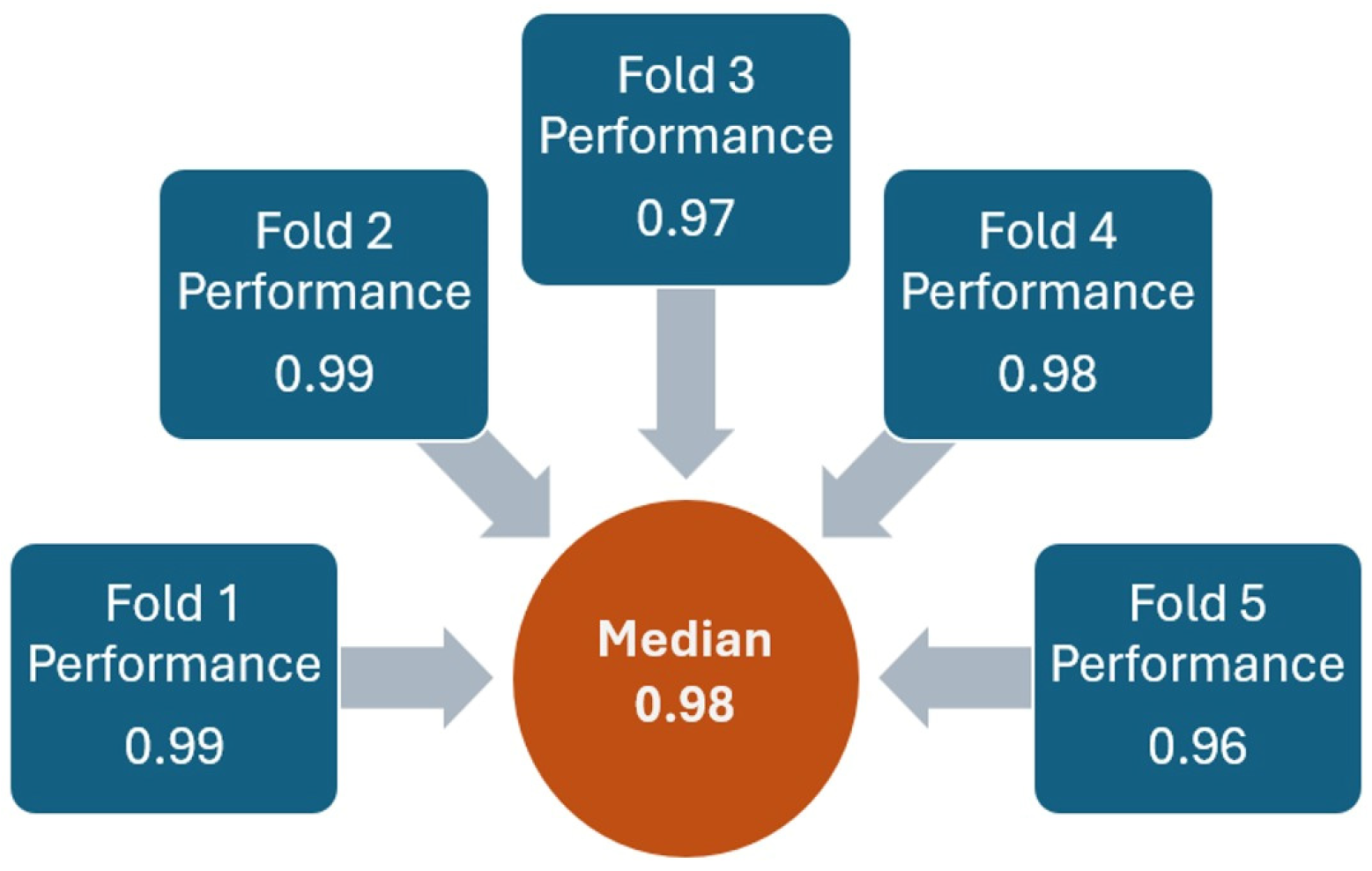

3.1.1. First Model Results

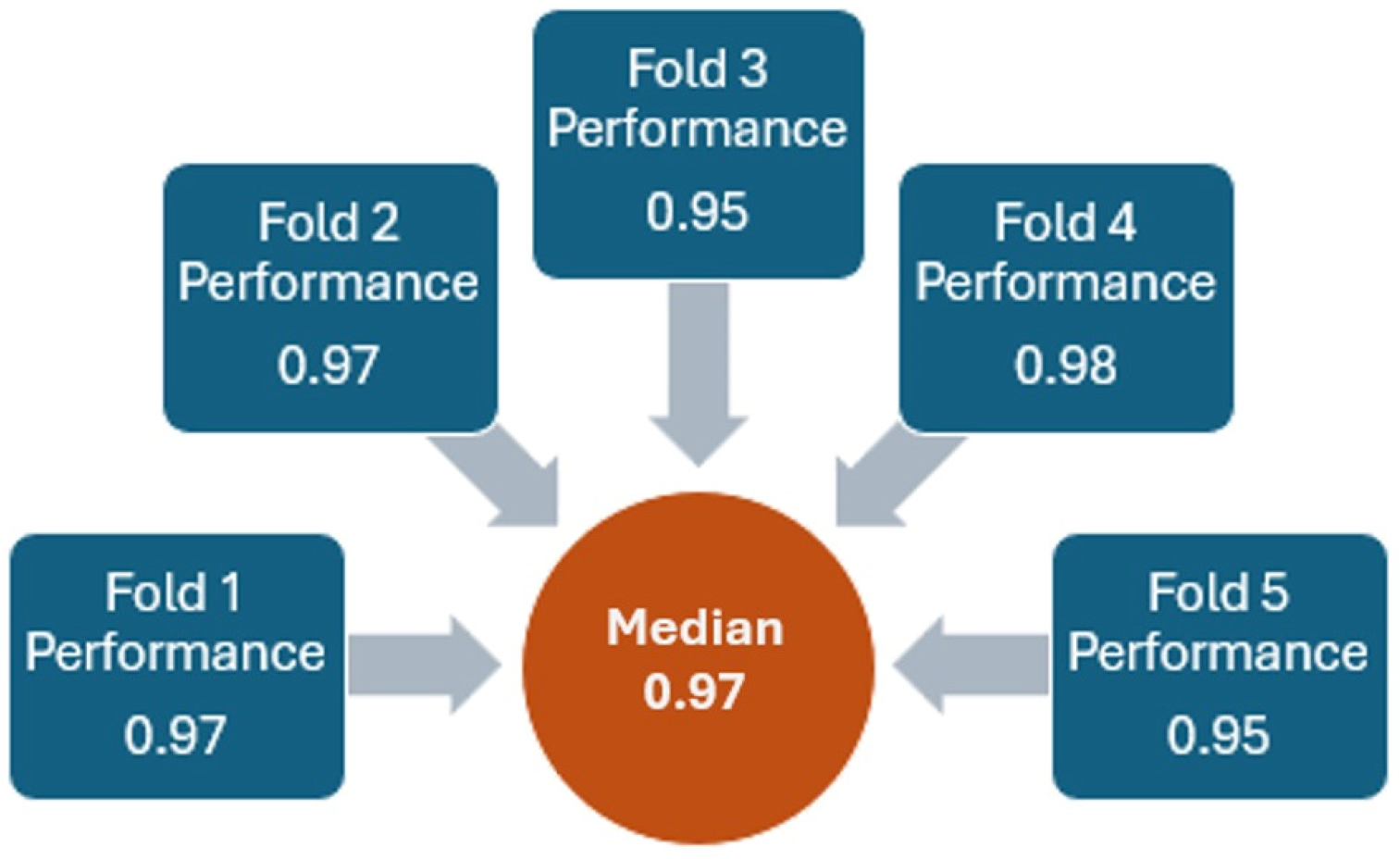

3.1.2. Second Model Results

3.2. Video Analysis Repeatability

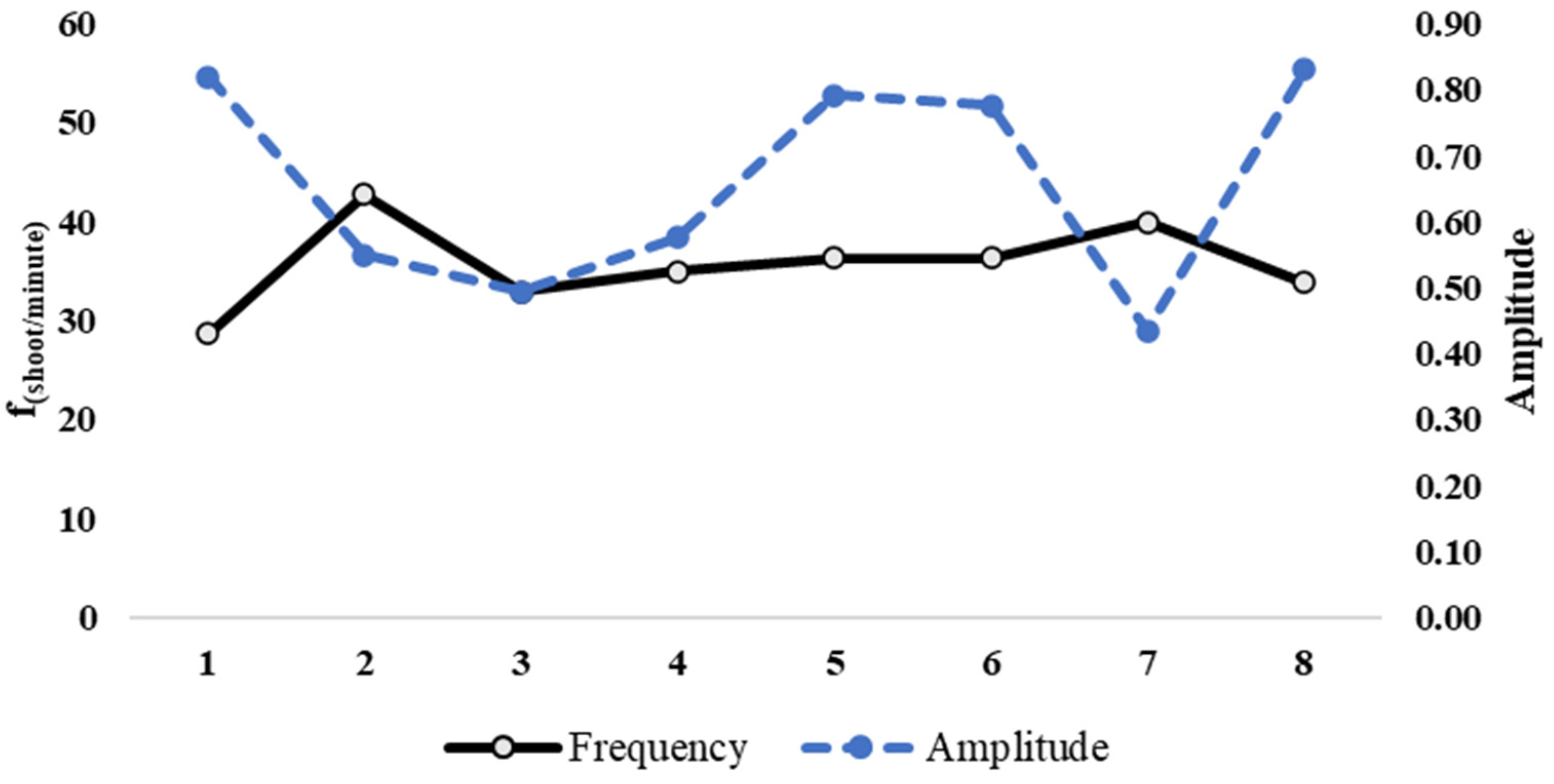

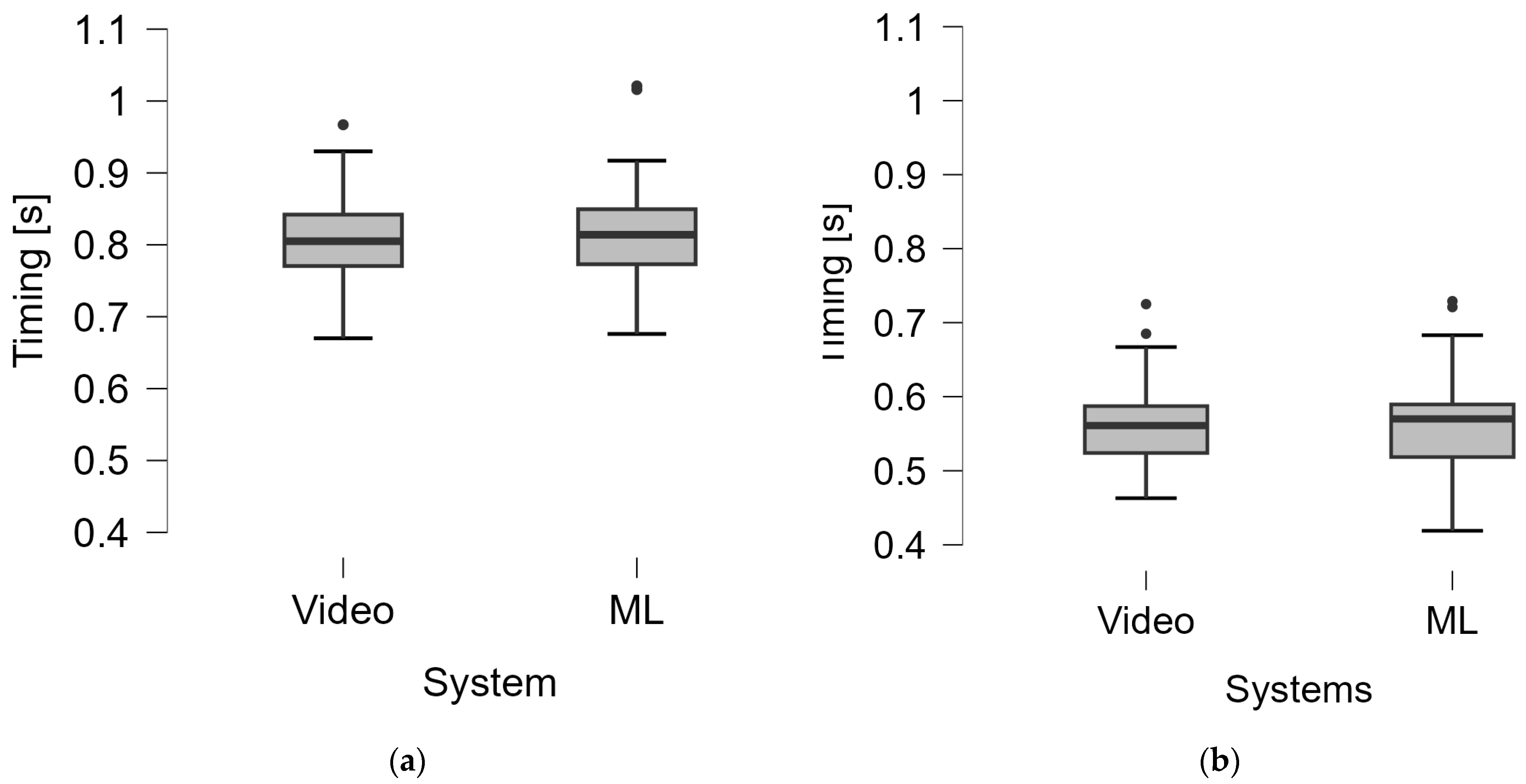

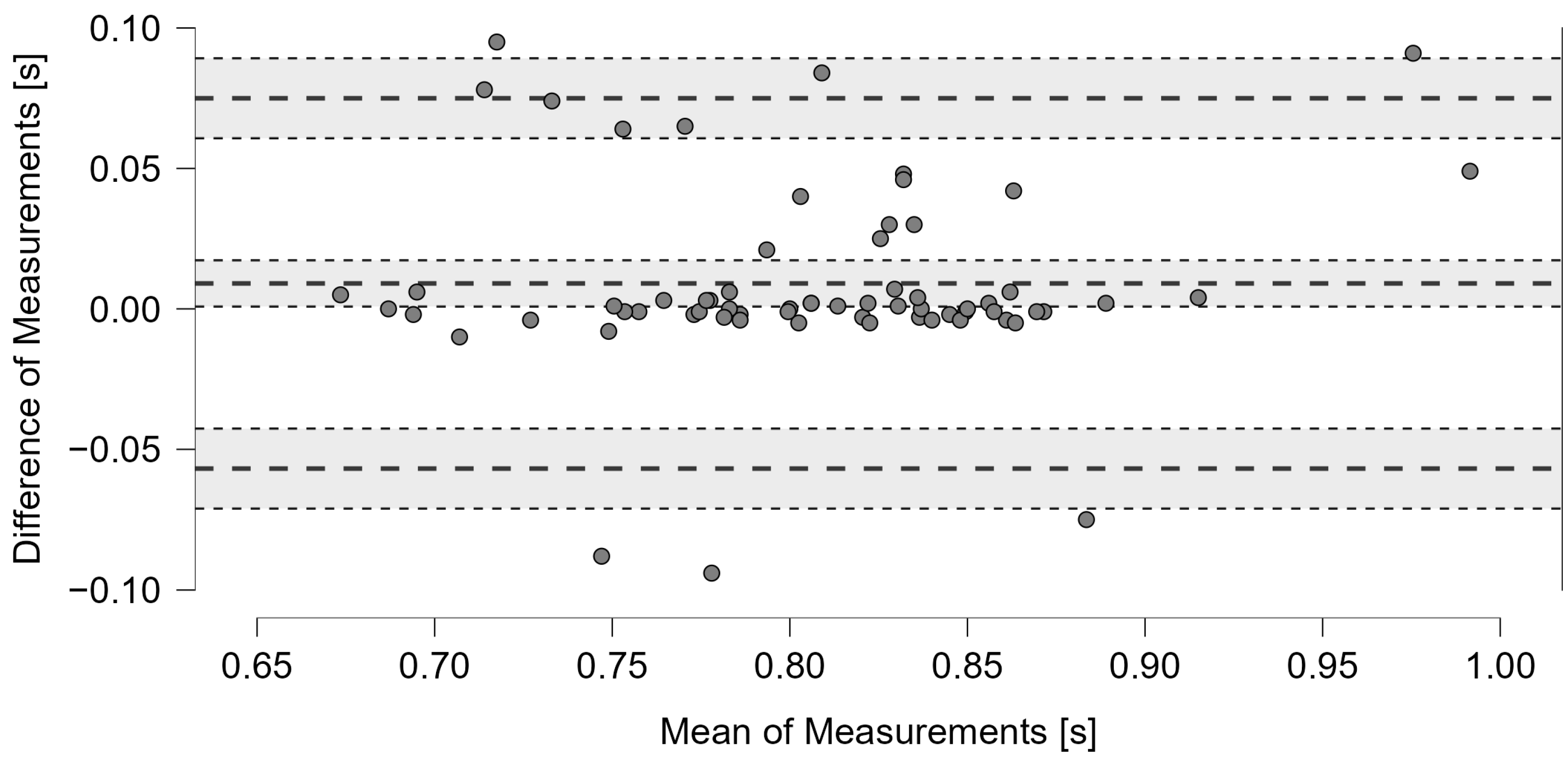

3.3. Rally Assessment

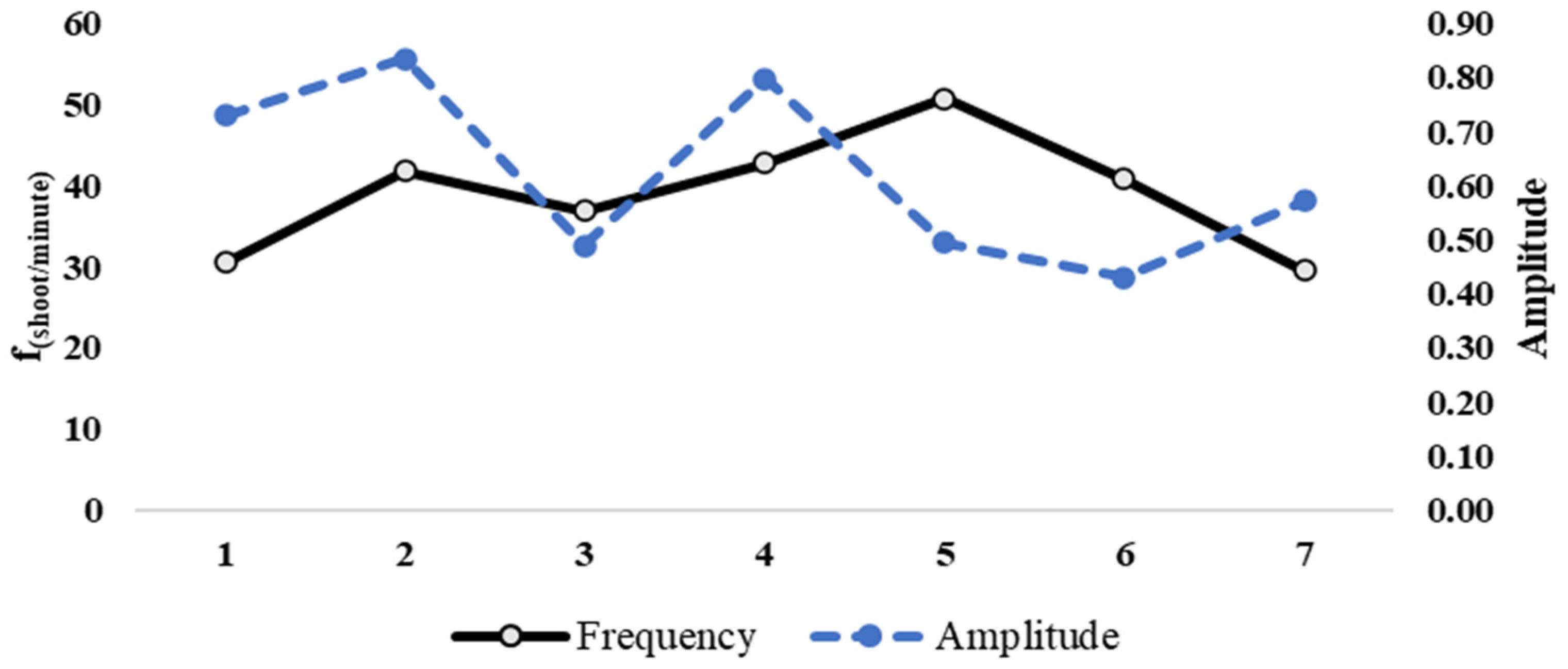

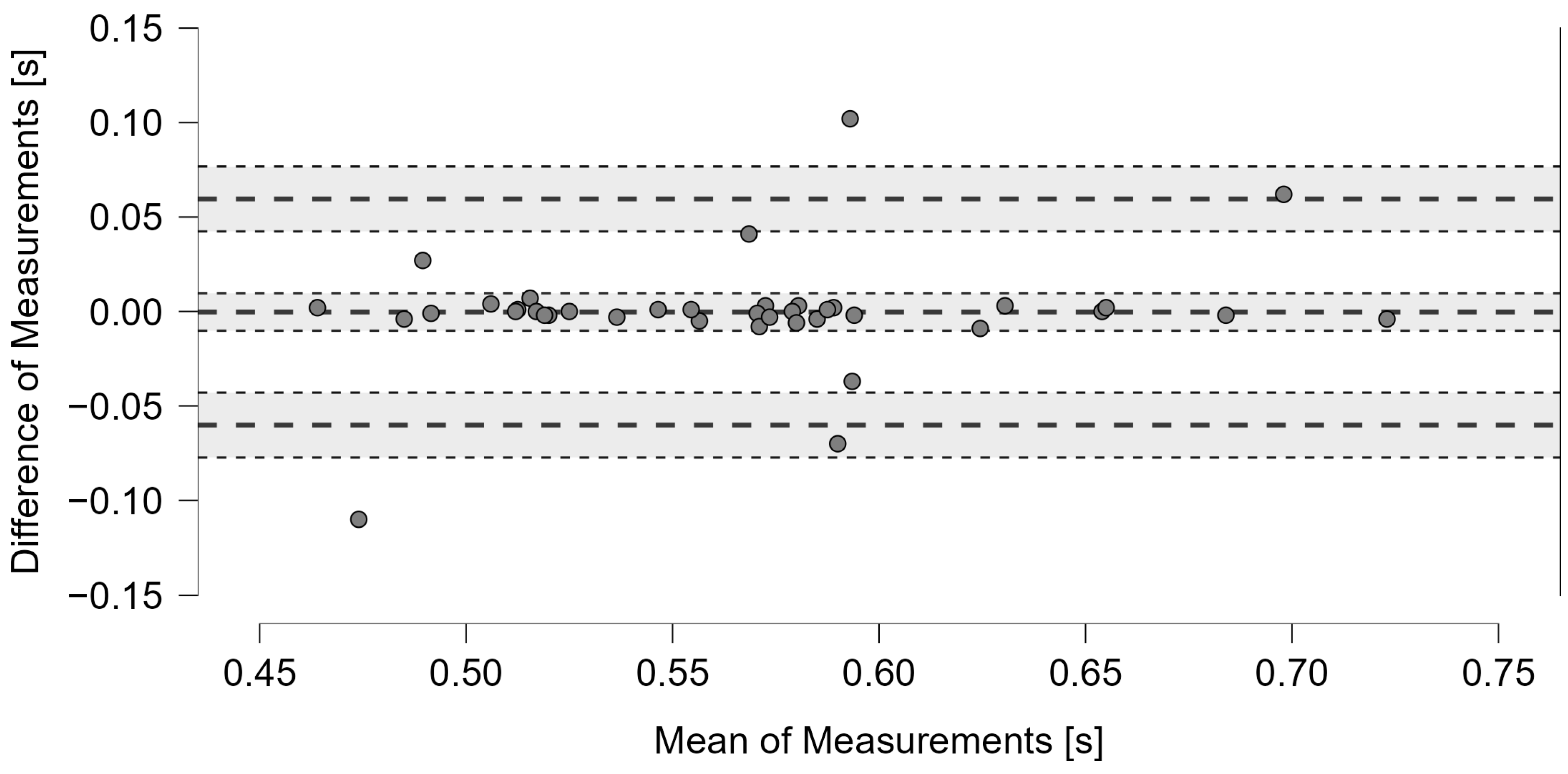

3.4. Groundstrokes Assessment

3.4.1. System Accuracy

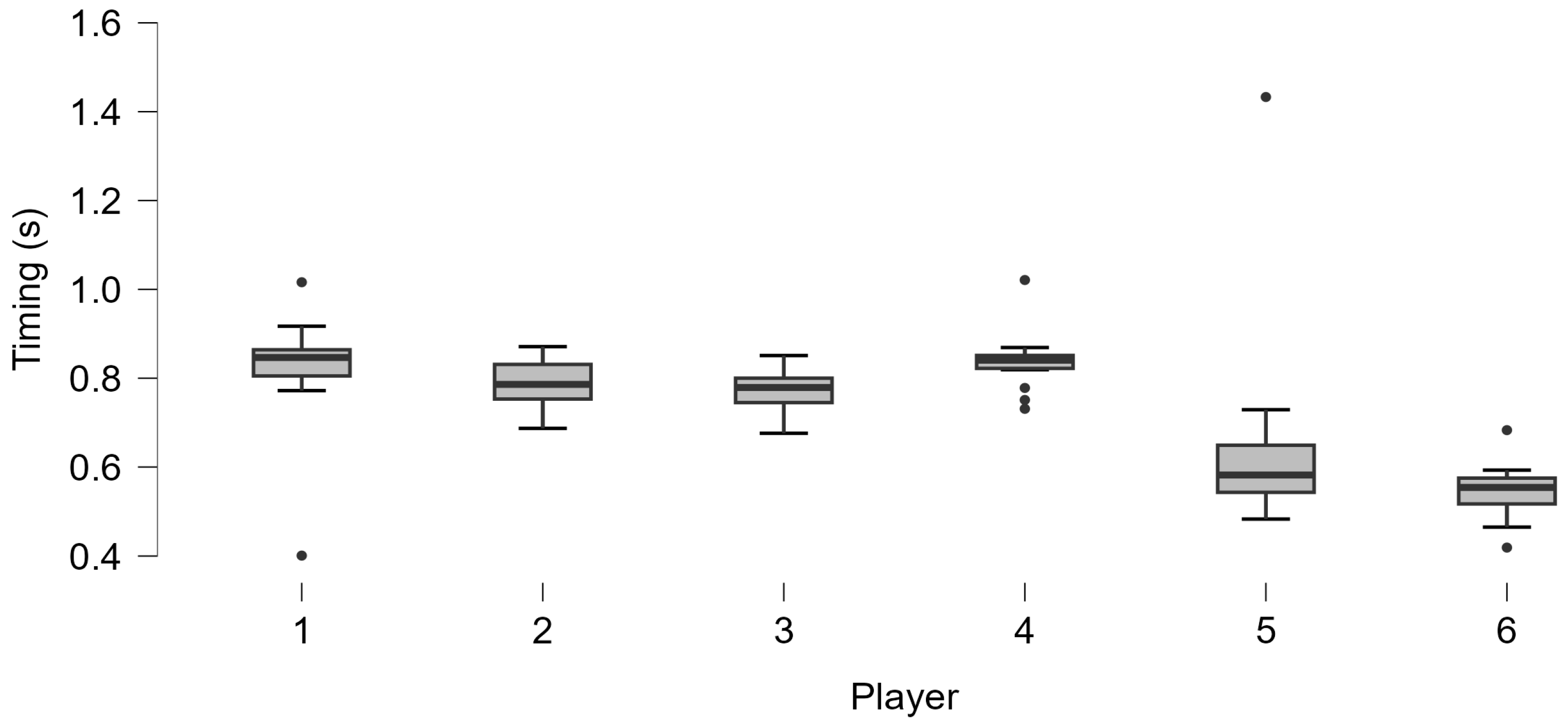

3.4.2. Technical Assessment

4. Discussion

4.1. System Accuracy

4.2. Groundstrokes Technical Assessment

4.3. Limitations of the Study

4.4. Future Perspectives

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Group | Event | Detection System n | Detection System Timing (s), Median; IQR | Video n | Video Timing (s), Median; IQR | ρ | CV% ᵃ | ICC(3,1) | 95% CI | ML k | ML SE |
|---|---|---|---|---|---|---|---|---|---|---|---|
| G1 | Rebound detection | 80 | 0.81; 0.07 | 69 | 0.81; 0.08 | 0.88 *** | 3.09 | 0.88 | 0.83 to 0.91 | 0.872 | 0.038 |
| G1 | Impact detection | 80 | | 78 | | | | | | | |
| G2 | Rebound detection | 48 | 0.56; 0.06 | 40 | 0.57; 0.07 | 0.90 *** | 3.90 | 0.87 | 0.82 to 0.91 | 0.904 | 0.046 |
| G2 | Impact detection | 48 | | 48 | | | | | | | |
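The ICC(3,1) column follows the Shrout and Fleiss convention: a two-way mixed-effects model, consistency definition, single measurement, computed as (BMS - EMS) / (BMS + (k - 1) * EMS) from the between-targets mean square (BMS) and the residual mean square (EMS). The sketch below assumes that each row pairs one timing value from the detection system with the corresponding video-based value; the function name `icc_3_1` is illustrative, it is not necessarily the routine used in the study, and it omits the 95% confidence interval. On the six-target, four-judge worked example of Shrout and Fleiss (1979) it returns approximately 0.71, the value they report.

```python
from statistics import mean


def icc_3_1(ratings):
    """ICC(3,1), Shrout & Fleiss: two-way mixed model, consistency, single measure.

    ratings: one row per target (e.g., one stroke), each row holding the k
    measurements of that target (e.g., [detection_system_s, video_s]).
    """
    n, k = len(ratings), len(ratings[0])
    grand = mean(v for row in ratings for v in row)
    row_means = [mean(row) for row in ratings]
    col_means = [mean(col) for col in zip(*ratings)]

    ss_total = sum((v - grand) ** 2 for row in ratings for v in row)
    ss_targets = k * sum((m - grand) ** 2 for m in row_means)  # between targets
    ss_raters = n * sum((m - grand) ** 2 for m in col_means)   # between methods
    ss_error = ss_total - ss_targets - ss_raters               # residual

    bms = ss_targets / (n - 1)
    ems = ss_error / ((n - 1) * (k - 1))
    return (bms - ems) / (bms + (k - 1) * ems)


if __name__ == "__main__":
    # Worked example from Shrout & Fleiss (1979): 6 targets rated by 4 judges.
    example = [[9, 2, 5, 8], [6, 1, 3, 2], [8, 4, 6, 8],
               [7, 1, 2, 6], [10, 5, 6, 9], [6, 2, 4, 7]]
    print(round(icc_3_1(example), 2))  # prints 0.71
```

Because ICC(3,1) uses the consistency definition, a constant offset between the two methods (for example, a fixed latency in one of them) does not lower the coefficient; an absolute-agreement ICC would penalize such an offset.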

| Player | N (Valid; Missing) | Timing (s), Median; IQR | Experience (Years) | Shapiro–Wilk p-Value |
|---|---|---|---|---|
| #1 | 18; 2 | 0.85; 0.06 | 1 | <0.001 * |
| #2 | 17; 3 | 0.79; 0.08 | 2.5 | 0.848 |
| #3 | 17; 3 | 0.78; 0.06 | 2.5 | 0.339 |
| #4 | 17; 4 | 0.84; 0.03 | 1 | 0.004 * |
| #5 | 19; 5 | 0.58; 0.11 | 1.5 | <0.001 * |
| #6 | 21; 3 | 0.55; 0.06 | 5 | 0.275 |
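Each player's timing is summarized as a median and interquartile range (IQR), alongside a Shapiro–Wilk normality p-value. A minimal sketch of the median/IQR summary using only Python's standard library is shown below; the timing values are hypothetical. Quartile definitions differ between software packages, so this sketch assumes `statistics.quantiles` with `method="inclusive"` (linear interpolation between order statistics), which may not match the convention behind the table exactly. The Shapiro–Wilk test itself is not reproduced here; `scipy.stats.shapiro` is one widely used implementation.

```python
from statistics import median, quantiles


def median_iqr(timings_s):
    """Return (median, interquartile range) of a sequence of timings in seconds."""
    # quantiles(..., n=4) returns the three quartile cut points Q1, Q2, Q3.
    q1, _, q3 = quantiles(timings_s, n=4, method="inclusive")
    return median(timings_s), q3 - q1


if __name__ == "__main__":
    # Hypothetical timings for one player (not study data).
    timings = [0.78, 0.81, 0.79, 0.84, 0.80, 0.83, 0.77, 0.82]
    med, iqr = median_iqr(timings)
    print(f"Median; IQR = {med:.2f}; {iqr:.2f}")
```

Median and IQR are robust to the occasional outlying stroke, which suits samples like those flagged as non-normal by the Shapiro–Wilk test.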

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Caprioli, L.; Najlaoui, A.; Campoli, F.; Dhanasekaran, A.; Edriss, S.; Romagnoli, C.; Zanela, A.; Padua, E.; Bonaiuto, V.; Annino, G. Tennis Timing Assessment by a Machine Learning-Based Acoustic Detection System: A Pilot Study. J. Funct. Morphol. Kinesiol. 2025, 10, 47. https://doi.org/10.3390/jfmk10010047

Caprioli L, Najlaoui A, Campoli F, Dhanasekaran A, Edriss S, Romagnoli C, Zanela A, Padua E, Bonaiuto V, Annino G. Tennis Timing Assessment by a Machine Learning-Based Acoustic Detection System: A Pilot Study. Journal of Functional Morphology and Kinesiology. 2025; 10(1):47. https://doi.org/10.3390/jfmk10010047

Chicago/Turabian Style: Caprioli, Lucio, Amani Najlaoui, Francesca Campoli, Aatheethyaa Dhanasekaran, Saeid Edriss, Cristian Romagnoli, Andrea Zanela, Elvira Padua, Vincenzo Bonaiuto, and Giuseppe Annino. 2025. "Tennis Timing Assessment by a Machine Learning-Based Acoustic Detection System: A Pilot Study" Journal of Functional Morphology and Kinesiology 10, no. 1: 47. https://doi.org/10.3390/jfmk10010047

APA Style: Caprioli, L., Najlaoui, A., Campoli, F., Dhanasekaran, A., Edriss, S., Romagnoli, C., Zanela, A., Padua, E., Bonaiuto, V., & Annino, G. (2025). Tennis Timing Assessment by a Machine Learning-Based Acoustic Detection System: A Pilot Study. Journal of Functional Morphology and Kinesiology, 10(1), 47. https://doi.org/10.3390/jfmk10010047