Image Moment-Based Features for Mass Detection in Breast US Images via Machine Learning and Neural Network Classification Models

Abstract

1. Introduction

2. Related Work

3. Materials and Method

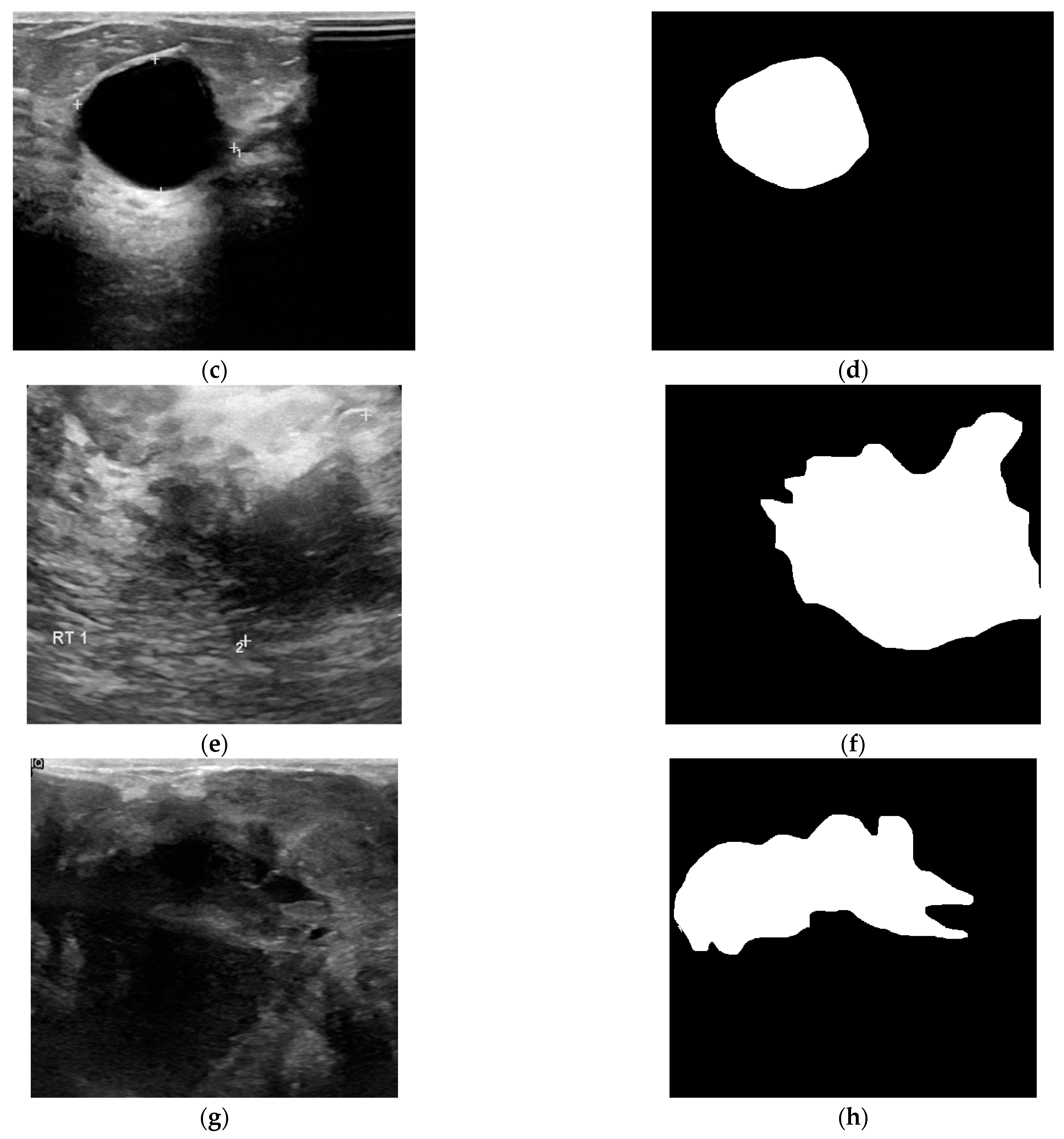

3.1. Dataset

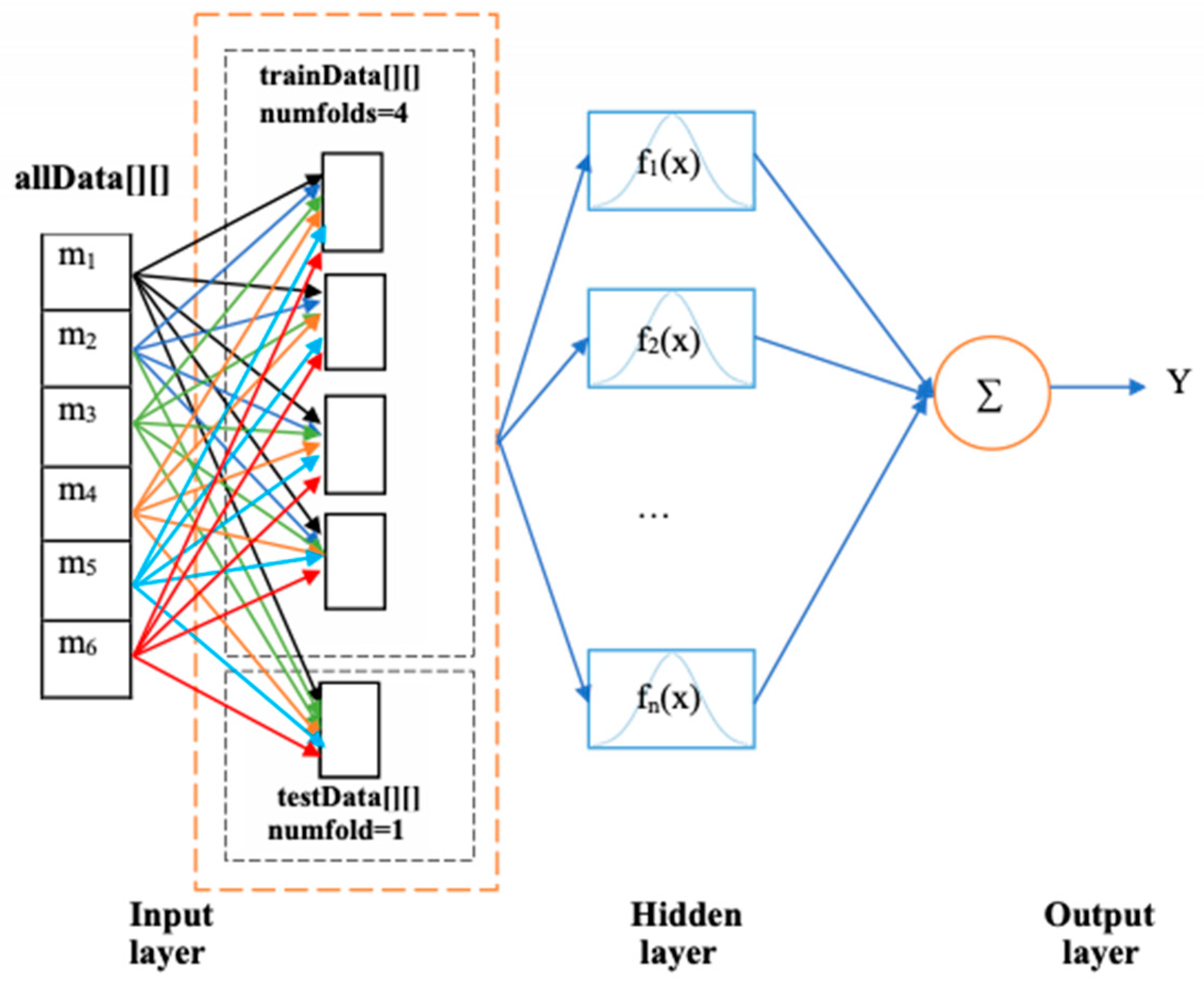

3.2. Feature Extraction and Selection

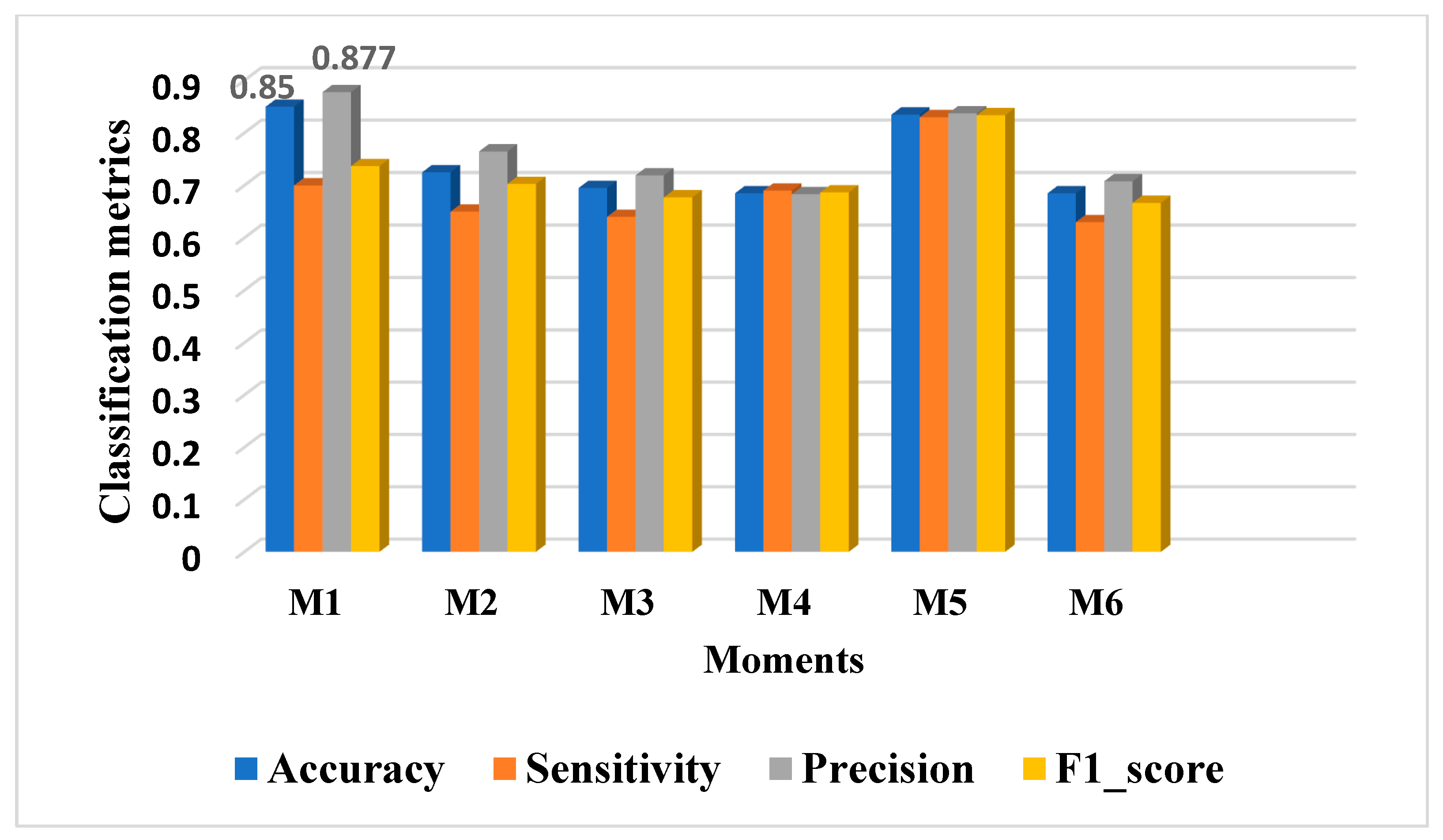

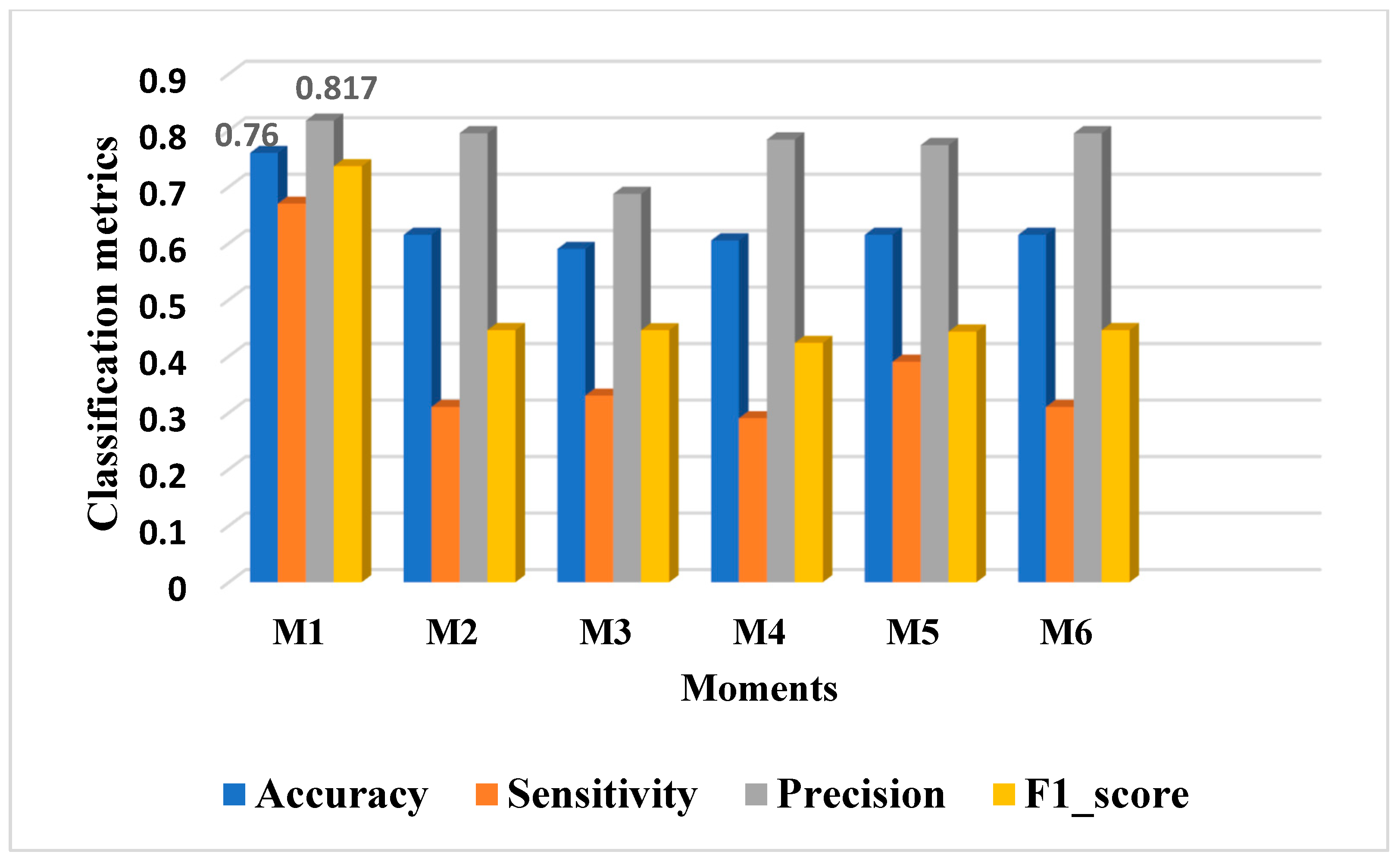

4. Results and Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Sung, H.; Ferlay, J.; Siegel, R.L.; Laversanne, M.; Soerjomataram, I.; Jemal, A.; Bray, F. Global Cancer Statistics 2020: GLOBOCAN Estimates of Incidence and Mortality Worldwide for 36 Cancers in 185 Countries. CA A Cancer J. Clin. 2021, 71, 209–249.

2. Qi, X.; Zhang, L.; Chen, Y.; Pi, Y.; Chen, Y.; Lv, Q.; Yi, Z. Automated diagnosis of breast ultrasonography images using deep neural networks. Med. Image Anal. 2019, 52, 185–198.

3. Evans, A.; Trimboli, R.M.; Athanasiou, A.; Balleyguier, C.; Baltzer, P.A.; Bick, U.; Camps Herrero, J.; Clauser, P.; Colin, C.; Cornford, E.; et al. Breast ultrasound: Recommendations for information to women and referring physicians by the European Society of Breast Imaging. Insights Imaging 2018, 9, 449–461.

4. Wu, G.-G.; Zhou, L.-Q.; Xu, J.-W.; Wang, J.-Y.; Wei, Q.; Deng, Y.-B.; Cui, X.-W.; Dietrich, C.-F. Artificial intelligence in breast ultrasound. World J. Radiol. 2019, 11, 19–26.

5. Yao, A.D.; Cheng, D.L.; Pan, I.; Kitamura, F. Deep learning in neuroradiology: A systematic review of current algorithms and approaches for the new wave of imaging technology. Radiol. Artif. Intell. 2020, 2, e190026.

6. Liu, H.; Cui, G.; Luo, Y.; Guo, Y.; Zhao, L.; Wang, Y.; Subasi, A.; Dogan, S.; Tuncer, T. Artificial Intelligence-Based Breast Cancer Diagnosis Using Ultrasound Images and Grid-Based Deep Feature Generator. Int. J. Gen. Med. 2022, 15, 2271–2282.

7. Thummalapalem, G.D.; Pradesh, A.; Vaddeswaram, G.D. Automated detection, segmentation and classification using deep learning methods for mammograms-a review. Int. J. Pure Appl. Math. 2018, 119, 627–666.

8. Wang, H.-Y.; Jiang, Y.-X.; Zhu, Q.-L.; Zhang, J.; Xiao, M.-S.; Liu, H.; Dai, Q.; Li, J.-C.; Sun, Q. Automated Breast Volume Scanning: Identifying 3-D Coronal Plane Imaging Features May Help Categorize Complex Cysts. Ultrasound Med. Biol. 2016, 42, 689–698.

9. Berbar, M.A. Hybrid methods for feature extraction for breast masses classification. Egypt. Inform. J. 2018, 19, 63–73.

10. Lou, J.-Y.; Yang, X.-L.; Cao, A.-Z. A Spatial Shape Constrained Clustering Method for Mammographic Mass Segmentation. Comput. Math. Methods Med. 2015, 2015, 891692.

11. Liang, X.; Yu, J.; Liao, J.; Chen, Z. Convolutional Neural Network for Breast and Thyroid Nodules Diagnosis in Ultrasound Imaging. BioMed Res. Int. 2020, 2020, e1763803.

12. Hassan, S.A.; Sayed, M.S.; Abdalla, M.I.; Rashwan, M.A. Detection of breast cancer mass using MSER detector and features matching. Multimed. Tools Appl. 2019, 78, 20239–20262.

13. Hu, M.-K. Visual pattern recognition by moment invariants. IEEE Trans. Inf. Theory 1962, 8, 179–187.

14. Wason, J.M.; Mander, A.P. The choice of test in phase II cancer trials assessing continuous tumour shrinkage when complete responses are expected. Stat. Methods Med. Res. 2015, 24, 909–919.

15. Cherif, W. Optimization of K-NN algorithm by clustering and reliability coefficients: Application to breast-cancer diagnosis. Procedia Comput. Sci. 2018, 127, 293–299.

16. Sharma, S.; Mehra, R. Conventional Machine Learning and Deep Learning Approach for Multi-Classification of Breast Cancer Histopathology Images—A Comparative Insight. J. Digit. Imaging 2020, 33, 632–654.

17. Podgornova, Y.A.; Sadykov, S.S. Comparative analysis of segmentation algorithms for the allocation of microcalcifications on mammograms. Inf. Technol. Nanotechnol. 2019, 2391, 121–127.

18. Zhang, X.; Yang, J.; Nguyen, E. Breast cancer detection via Hu moment invariant and feedforward neural network. AIP Conf. Proc. 2018, 1954, 030014.

19. Mata, B.N.B.U.; Meenakshi, D.M. Mammogram Image Segmentation by Watershed Algorithm and Classification through k-NN Classifier. Bonfring Int. J. Adv. Image Process. 2018, 8, 1–7.

20. Sadhukhan, S.; Upadhyay, N.; Chakraborty, P. Breast Cancer Diagnosis Using Image Processing and Machine Learning. Emerg. Technol. Model. Graph. 2020, 937, 113–127.

21. Hao, Y.; Qiao, S.; Zhang, L.; Xu, T.; Bai, Y.; Hu, H.; Zhang, W.; Zhang, G. Breast Cancer Histopathological Images Recognition Based on Low Dimensional Three-Channel Features. Front. Oncol. 2021, 11, 2018.

22. Hao, Y.; Zhang, L.; Qiao, S.; Bai, Y.; Cheng, R.; Xue, H.; Hou, Y.; Zhang, W.; Zhang, G. Breast cancer histopathological images classification based on deep semantic features and gray level co-occurrence matrix. PLoS ONE 2022, 17, e0267955.

23. Joshi, A.; Mehta, A. Analysis of K-Nearest Neighbor Technique for Breast Cancer Disease Classification. Int. J. Recent Sci. Res. 2018, 9, 26126–26130.

24. Alshammari, M.M.; Almuhanna, A.; Alhiyafi, J. Mammography Image-Based Diagnosis of Breast Cancer Using Machine Learning: A Pilot Study. Sensors 2022, 22, 203.

25. Agaba, A.J.; Abdullahi, M.; Junaidu, S.B.; Hassan Ibrahim, H.; Chiroma, H. Improved multi-classification of breast cancer histopathological images using handcrafted features and deep neural network (dense layer). Intell. Syst. Appl. 2022, 14, 200066–200076.

26. Xie, W.; Li, Y.; Ma, Y. Breast mass classification in digital mammography based on extreme learning machine. Neurocomputing 2016, 173, 930–941.

27. Zhuang, Z.; Yang, Z.; Zhuang, S.; Joseph Raj, A.N.; Yuan, Y.; Nersisson, R. Multi-Features-Based Automated Breast Tumor Diagnosis Using Ultrasound Image and Support Vector Machine. Comput. Intell. Neurosci. 2021, 2021, 9980326.

28. Shia, W.-C.; Chen, D.-R. Classification of malignant tumors in breast ultrasound using a pretrained deep residual network model and support vector machine. Comput. Med. Imaging Graph. 2021, 87, 101829–101835.

29. Wan, K.W.; Wong, C.H.; Ip, H.F.; Fan, D.; Yuen, P.L.; Fong, H.Y.; Ying, M. Evaluation of the performance of traditional machine learning algorithms, convolutional neural network and AutoML Vision in ultrasound breast lesions classification: A comparative study. Quant. Imaging Med. Surg. 2021, 11, 1381–1393.

30. Moldovanu, S.; Damian Michis, F.A.; Biswas, K.C.; Culea-Florescu, A.; Moraru, L. Skin Lesion Classification Based on Surface Fractal Dimensions and Statistical Color Cluster Features Using an Ensemble of Machine Learning Techniques. Cancers 2021, 13, 5256.

31. Damian, F.A.; Moldovanu, S.; Dey, N.; Ashour, A.S.; Moraru, L. Feature Selection of Non-Dermoscopic Skin Lesion Images for Nevus and Melanoma Classification. Computation 2020, 8, 41.

32. Al-Dhabyani, W.; Gomaa, M.; Khaled, H.; Fahmy, A. Dataset of breast ultrasound images. Data Brief 2020, 28, 104863.

33. Labuda, N.; Seeliger, J.; Gedrande, T.; Kozak, K. Selecting Adaptive Number of Nearest Neighbors in k-Nearest Neighbor Classifier Apply Diabetes Data. J. Math. Stat. Sci. 2017, 2017, 1–13.

| Moments | M1 | M2 | M3 | M4 | M5 | M6 | M7 |
|---|---|---|---|---|---|---|---|
| p-value | 0.04 | <0.01 | <0.01 | <0.01 | <0.01 | <0.01 | 0.90 |
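The table above reports one p-value per moment feature M1 to M7. Assuming these are Hu's seven moment invariants [13] computed on the mass region (the excerpt itself does not define them), the following minimal sketch shows how such features can be obtained with NumPy from a grayscale region or binary mask, using the scale-normalised central moments $\eta_{pq} = \mu_{pq} / \mu_{00}^{1 + (p+q)/2}$. It then applies a simple threshold to the reported p-values. The helper name `hu_moments` and the significance level `ALPHA = 0.05` are assumptions for illustration; this is not the authors' code or their stated selection rule.

```python
import numpy as np


def hu_moments(image):
    """Hu's seven moment invariants of a 2-D image (hypothetical helper).

    `image` may be a grayscale region or a binary mass mask; pixel values
    act as weights. x is the column index and y the row index.
    """
    img = np.asarray(image, dtype=float)
    y, x = np.indices(img.shape)
    m00 = img.sum()
    if m00 == 0:
        raise ValueError("image has zero total intensity")
    xc, yc = (x * img).sum() / m00, (y * img).sum() / m00  # centroid

    def eta(p, q):
        # central moment mu_pq, normalised by m00 ** (1 + (p + q) / 2)
        mu = ((x - xc) ** p * (y - yc) ** q * img).sum()
        return mu / m00 ** (1 + (p + q) / 2)

    n20, n02, n11 = eta(2, 0), eta(0, 2), eta(1, 1)
    n30, n03, n21, n12 = eta(3, 0), eta(0, 3), eta(2, 1), eta(1, 2)
    a, b = n30 + n12, n21 + n03          # recurring sums
    c, d = n30 - 3 * n12, 3 * n21 - n03  # recurring differences

    return np.array([
        n20 + n02,
        (n20 - n02) ** 2 + 4 * n11 ** 2,
        c ** 2 + d ** 2,
        a ** 2 + b ** 2,
        c * a * (a ** 2 - 3 * b ** 2) + d * b * (3 * a ** 2 - b ** 2),
        (n20 - n02) * (a ** 2 - b ** 2) + 4 * n11 * a * b,
        d * a * (a ** 2 - 3 * b ** 2) - c * b * (3 * a ** 2 - b ** 2),
    ])


# p-values from the table above; "<0.01" is stored as its upper bound 0.01,
# which is enough for a comparison against ALPHA.
P_VALUES = {"M1": 0.04, "M2": 0.01, "M3": 0.01, "M4": 0.01,
            "M5": 0.01, "M6": 0.01, "M7": 0.90}
ALPHA = 0.05  # assumed significance level, not stated in the excerpt

selected = [name for name, p in P_VALUES.items() if p < ALPHA]
print(selected)  # ['M1', 'M2', 'M3', 'M4', 'M5', 'M6']
```

Under this assumed threshold only M7 (p = 0.90) would be discarded. Because the invariants depend only on central moments, shifting or rotating the mask by 90 degrees leaves the seven values unchanged up to rounding.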

| Model | Features | Accuracy | Sensitivity | Precision | F1-Score | Ref. |
|---|---|---|---|---|---|---|
| Hu’s moment + colored histogram + Haralick texture + SVM (linear kernel and C = 5) + VGG16 | Hu’s moment, colored histogram, and Haralick texture | 0.8182 | 0.82 | 0.85 | 0.81 | [16] |
| Hu’s moment + Haralick texture + colored histogram + DNN | Hu’s moment, Haralick texture, and colored histogram | 0.98 | 0.98 | 0.97 | 0.97 | [25] |
| Multiple features + SVM | Hu’s moment | 0.925 | 0.95 | 0.905 | 0.927 | [27] |
| Hu’s moment + colored histogram + Haralick texture + CNN | Hu’s moment, colored histogram, and Haralick texture | 0.91 | 0.82 | 0.88 | 0.87 | [29] |
| Hu’s moment + K-NN | Hu’s moment | 0.89 | 0.83 | 0.87 | 0.83 | Our model |
| Hu’s moment + RBFNN | Hu’s moment | 0.76 | 0.67 | 0.817 | 0.73 | Our model |
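The comparison table reports accuracy, sensitivity, precision, and F1-score, including for a Hu-moment K-NN classifier. The minimal sketch below pairs a textbook K-NN (Euclidean distance, majority vote) with the four metrics computed from binary confusion-matrix counts. The value `k = 3`, the Euclidean metric, the label encoding (1 for the positive class, e.g. a malignant mass), the toy data, and the helper names `knn_predict` and `binary_metrics` are all assumptions for illustration; the excerpt does not state the authors' settings, and this is not their implementation.

```python
import numpy as np


def knn_predict(X_train, y_train, X_test, k=3):
    """Textbook k-NN: Euclidean distance, majority vote (hypothetical helper)."""
    X_train = np.asarray(X_train, dtype=float)
    X_test = np.asarray(X_test, dtype=float)
    y_train = np.asarray(y_train)
    # pairwise Euclidean distances, shape (n_test, n_train)
    dist = np.linalg.norm(X_test[:, None, :] - X_train[None, :, :], axis=2)
    nearest = np.argsort(dist, axis=1)[:, :k]
    preds = []
    for idx in nearest:
        labels, counts = np.unique(y_train[idx], return_counts=True)
        preds.append(labels[np.argmax(counts)])  # a tie goes to the smallest label
    return np.array(preds)


def binary_metrics(y_true, y_pred, positive=1):
    """Accuracy, sensitivity (recall), precision and F1 for one positive class."""
    y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)
    tp = np.sum((y_pred == positive) & (y_true == positive))
    fp = np.sum((y_pred == positive) & (y_true != positive))
    fn = np.sum((y_pred != positive) & (y_true == positive))
    tn = np.sum((y_pred != positive) & (y_true != positive))
    sensitivity = tp / (tp + fn) if tp + fn else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    f1 = (2 * precision * sensitivity / (precision + sensitivity)
          if precision + sensitivity else 0.0)
    return {"accuracy": (tp + tn) / len(y_true), "sensitivity": sensitivity,
            "precision": precision, "f1": f1}


# Toy 2-D feature vectors standing in for selected moment features.
X_train = [[0, 0], [0, 1], [1, 0], [5, 5], [5, 6], [6, 5]]
y_train = [0, 0, 0, 1, 1, 1]
pred = knn_predict(X_train, y_train, [[0.5, 0.5], [5.5, 5.5]], k=3)
print(pred)  # [0 1]
print(binary_metrics([1, 1, 1, 0, 0], [1, 1, 0, 1, 0]))
```

Here F1 is the harmonic mean 2PR/(P + R) of precision P and sensitivity R. When metrics are averaged over classes, the averaged F1 generally differs from the harmonic mean of the averaged precision and sensitivity.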

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Anghelache Nastase, I.-N.; Moldovanu, S.; Moraru, L. Image Moment-Based Features for Mass Detection in Breast US Images via Machine Learning and Neural Network Classification Models. Inventions 2022, 7, 42. https://doi.org/10.3390/inventions7020042


Chicago/Turabian Style: Anghelache Nastase, Iulia-Nela, Simona Moldovanu, and Luminita Moraru. 2022. "Image Moment-Based Features for Mass Detection in Breast US Images via Machine Learning and Neural Network Classification Models" Inventions 7, no. 2: 42. https://doi.org/10.3390/inventions7020042

APA Style: Anghelache Nastase, I.-N., Moldovanu, S., & Moraru, L. (2022). Image Moment-Based Features for Mass Detection in Breast US Images via Machine Learning and Neural Network Classification Models. Inventions, 7(2), 42. https://doi.org/10.3390/inventions7020042