Abstract

This note describes the application of Gaussian mixture regression to track fitting with a Gaussian mixture model of the position errors. The mixture model is assumed to have two components with identical component means. Under the premise that the association of each measurement to a specific mixture component is known, the Gaussian mixture regression is shown to have consistently better resolution than weighted linear regression with equivalent homoskedastic errors. The improvement that can be achieved is systematically investigated over a wide range of mixture distributions. The results confirm that, with constant homoskedastic variance, the gain is larger for a larger mixture weight of the narrow component and for a smaller ratio of the width of the narrow component to the width of the wide component.

1. Introduction

In the data analysis of high-energy physics experiments, and particularly in track fitting, Gaussian models of measurement errors or Gaussian models of stochastic processes such as multiple Coulomb scattering and energy loss of electrons by bremsstrahlung may turn out to be too simplistic or downright invalid. In these circumstances Gaussian mixture models (GMMs) can be used in the data analysis to model outliers or tails in position measurements of tracks [1,2], to model the tails of the multiple Coulomb scattering distribution [3,4], or to approximate the distribution of the energy loss of electrons by bremsstrahlung [5]. In these applications it is not known to which component an observation or a physical process corresponds, and it is up to the track fit via the Deterministic Annealing Filter or the Gaussian-sum Filter to determine the association probabilities [6,7,8,9]. The standard method for concurrent maximum-likelihood estimation of the regression parameters and the association probabilities is the EM algorithm [10,11].

In a typical tracking detector such as a silicon strip tracker, the spatial resolution of the measured position is not necessarily uniform across the sensors and depends on the crossing angle of the track and on the type of the cluster created by the particle. By a careful calibration of the sensors in the tracking detector a GMM of the position measurements can be formulated, see [12,13,14]. In such a model the component from which an observation is drawn is known. This can significantly improve the precision of the estimated regression/track parameters with respect to weighted least-squares, as shown below.

This introduction is followed by a recapitulation of weighted regression in linear and nonlinear Gaussian models in Section 2, both for the homoskedastic and for the heteroskedastic case. Section 3 introduces the concept of Gaussian mixture regression (GMR) and derives some elementary results on the covariance matrices of the estimated regression parameters. Section 4 presents the results of simulation studies that apply GMR to track fitting in a simplified tracking detector. The gain in precision that can be obtained by using a GMM rather than a simple Gaussian model strongly depends on the shape of the mixture distribution of the position errors. This shape can be described concisely by two characteristic quantities: the weight p of the wide component, and the ratio r of the smaller to the larger standard deviation. The study covers a wide range of p and r and shows the gain as a function of the number of measurements within a fixed tracker volume. It also investigates the effect of multiple Coulomb scattering on the gain in precision. Finally, Section 5 gives a summary of the results and discusses them in the context of the related work in [12,13,14].

2. Linear Regression in Gaussian Models

This section briefly recapitulates the properties of linear Gaussian regression models and the associated estimation procedures.

2.1. Linear Gaussian Regression Models

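The general linear Gaussian regression model (LGRM) has the following form:

$$\boldsymbol{y} = \mathbf{X}\boldsymbol{\theta} + \boldsymbol{c} + \boldsymbol{\varepsilon}, \qquad \boldsymbol{\varepsilon} \sim \mathcal{N}(\boldsymbol{0}, \mathbf{V}) \tag{1}$$

where $\boldsymbol{y}$ is the $n$-vector of observations, $\boldsymbol{\theta}$ is the $m$-vector of unknown regression parameters, $\mathbf{X}$ is the known $n \times m$ model matrix, $\boldsymbol{c}$ is a known vector of constants, and $\boldsymbol{\varepsilon}$ is the vector of observation errors, distributed according to the multivariate normal distribution with mean $\boldsymbol{0}$ and covariance matrix $\mathbf{V}$. In the following it is assumed that $m \le n$ and $\operatorname{rank}(\mathbf{X}) = m$.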

If the covariance matrix $\mathbf{V}$ is diagonal, i.e., the observation errors are independent, two cases are distinguished in the literature. The errors in Equation (1) and the model are called homoskedastic if $\mathbf{V}$ is a multiple of the identity matrix:

$$\mathbf{V} = \sigma^2\,\mathbf{I}$$

Otherwise, the errors in Equation (1) and the model are called heteroskedastic. If $\mathbf{V}$ is not diagonal, the observation errors are correlated; in this case the model is called homoskedastic if all diagonal elements of $\mathbf{V}$ are identical, and heteroskedastic otherwise.

2.2. Estimation, Fisher Information Matrix and Efficiency

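In least-squares (LS) estimation, the regression parameters are estimated by minimizing the following quadratic form with respect to $\boldsymbol{\theta}$:

$$S(\boldsymbol{\theta}) = (\boldsymbol{y} - \boldsymbol{c} - \mathbf{X}\boldsymbol{\theta})^\mathsf{T}\,\mathbf{G}\,(\boldsymbol{y} - \boldsymbol{c} - \mathbf{X}\boldsymbol{\theta}), \qquad \mathbf{G} = \mathbf{V}^{-1}$$

Setting the gradient of $S$ to zero gives the LS estimate $\hat{\boldsymbol{\theta}}$ of $\boldsymbol{\theta}$, and linear error propagation yields its covariance matrix $\mathbf{C}$:

$$\hat{\boldsymbol{\theta}} = (\mathbf{X}^\mathsf{T}\mathbf{G}\mathbf{X})^{-1}\mathbf{X}^\mathsf{T}\mathbf{G}\,(\boldsymbol{y} - \boldsymbol{c}), \qquad \mathbf{C} = (\mathbf{X}^\mathsf{T}\mathbf{G}\mathbf{X})^{-1} \tag{4}$$

It is easy to see that $\hat{\boldsymbol{\theta}}$ is an unbiased estimate of the unknown parameter $\boldsymbol{\theta}$: since $\mathrm{E}[\boldsymbol{y} - \boldsymbol{c}] = \mathbf{X}\boldsymbol{\theta}$, it follows that $\mathrm{E}[\hat{\boldsymbol{\theta}}] = (\mathbf{X}^\mathsf{T}\mathbf{G}\mathbf{X})^{-1}\mathbf{X}^\mathsf{T}\mathbf{G}\mathbf{X}\boldsymbol{\theta} = \boldsymbol{\theta}$.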
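A minimal NumPy sketch of Equation (4) may help make the estimator concrete. The function name `ls_estimate` and the straight-line example (five planes with alternating variances) are illustrative assumptions, not part of the original study; explicit matrix inverses are used for readability rather than numerical robustness.

```python
import numpy as np


def ls_estimate(X, y, c, V):
    """Weighted LS / ML estimate in the LGRM y = X theta + c + eps, eps ~ N(0, V).

    Returns (theta_hat, C) as in Equation (4). X^T G X is also the Fisher
    information matrix, so C is its inverse.
    """
    G = np.linalg.inv(V)                 # weight matrix G = V^{-1}
    fisher = X.T @ G @ X                 # X^T G X
    C = np.linalg.inv(fisher)            # covariance matrix of the estimate
    theta_hat = C @ X.T @ G @ (y - c)    # zero of the gradient of S
    return theta_hat, C


# Hypothetical straight line (intercept d, slope k) measured in five planes.
x = np.linspace(0.0, 1.0, 5)
X = np.column_stack([np.ones_like(x), x])
c = np.zeros(5)
V = np.diag([1.0, 4.0, 1.0, 4.0, 1.0])
theta_true = np.array([0.5, -2.0])
theta_hat, C = ls_estimate(X, X @ theta_true + c, c, V)  # noise-free data
```

With noise-free data the estimate reproduces `theta_true` up to rounding, which is a quick consistency check of the implementation.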

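In the LGRM, the LS estimate is equal to the maximum-likelihood (ML) estimate. The likelihood function $L(\boldsymbol{\theta})$ and its logarithm $\ell(\boldsymbol{\theta})$ are given by:

$$L(\boldsymbol{\theta}) = \frac{1}{\sqrt{\det(2\pi\mathbf{V})}}\exp\!\left[-\tfrac{1}{2}(\boldsymbol{y} - \boldsymbol{c} - \mathbf{X}\boldsymbol{\theta})^\mathsf{T}\,\mathbf{G}\,(\boldsymbol{y} - \boldsymbol{c} - \mathbf{X}\boldsymbol{\theta})\right], \qquad \ell(\boldsymbol{\theta}) = -\tfrac{1}{2}(\boldsymbol{y} - \boldsymbol{c} - \mathbf{X}\boldsymbol{\theta})^\mathsf{T}\,\mathbf{G}\,(\boldsymbol{y} - \boldsymbol{c} - \mathbf{X}\boldsymbol{\theta}) + \text{const.}$$

The gradient is equal to:

$$\nabla_{\boldsymbol{\theta}}\,\ell(\boldsymbol{\theta}) = \mathbf{X}^\mathsf{T}\mathbf{G}\,(\boldsymbol{y} - \boldsymbol{c} - \mathbf{X}\boldsymbol{\theta})$$

and its matrix of second derivatives, the Hesse matrix, is equal to:

$$\nabla^2_{\boldsymbol{\theta}}\,\ell(\boldsymbol{\theta}) = -\mathbf{X}^\mathsf{T}\mathbf{G}\mathbf{X}$$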

Setting the gradient to zero yields the ML estimate, which is identical to the LS estimate.

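Under mild regularity conditions, the covariance matrix of an unbiased estimator cannot be smaller than the inverse of the Fisher information matrix; this is the famous Cramér-Rao inequality [15]. The (expected) Fisher information matrix $\mathcal{I}(\boldsymbol{\theta})$ of the parameters is defined as the negative expectation of the Hesse matrix of the log-likelihood function:

$$\mathcal{I}(\boldsymbol{\theta}) = -\mathrm{E}\!\left[\nabla^2_{\boldsymbol{\theta}}\,\ell(\boldsymbol{\theta})\right] = \mathbf{X}^\mathsf{T}\mathbf{G}\mathbf{X}$$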

Equation (4) shows that in the LGRM the covariance matrix of the LS/ML estimate is equal to the inverse of the Fisher information matrix. The LS/ML estimator is therefore efficient: no other unbiased estimator of can have a smaller covariance matrix.

2.3. Nonlinear Gaussian Regression Models

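In most applications to track fitting, the track model that describes the dependence of the observed track positions on the track parameters is nonlinear. The general nonlinear Gaussian regression model has the following form:

$$\boldsymbol{y} = \boldsymbol{f}(\boldsymbol{\theta}) + \boldsymbol{\varepsilon}, \qquad \boldsymbol{\varepsilon} \sim \mathcal{N}(\boldsymbol{0}, \mathbf{V})$$

where $\boldsymbol{f}$ is a nonlinear smooth function. The estimation of $\boldsymbol{\theta}$ usually proceeds via Taylor expansion of the model function to first order:

$$\boldsymbol{f}(\boldsymbol{\theta}) \approx \boldsymbol{f}(\tilde{\boldsymbol{\theta}}) + \mathbf{F}\,(\boldsymbol{\theta} - \tilde{\boldsymbol{\theta}}) = \mathbf{F}\boldsymbol{\theta} + \boldsymbol{c}, \qquad \mathbf{F} = \left.\frac{\partial \boldsymbol{f}}{\partial \boldsymbol{\theta}}\right|_{\tilde{\boldsymbol{\theta}}}, \qquad \boldsymbol{c} = \boldsymbol{f}(\tilde{\boldsymbol{\theta}}) - \mathbf{F}\tilde{\boldsymbol{\theta}}$$

where $\tilde{\boldsymbol{\theta}}$ is a suitable expansion point and the Jacobian $\mathbf{F}$ is evaluated at $\tilde{\boldsymbol{\theta}}$. The linearized model has the form of Equation (1) with $\mathbf{X} = \mathbf{F}$, so the estimate $\hat{\boldsymbol{\theta}}$ is obtained according to Equation (4). The model function is then re-expanded at $\hat{\boldsymbol{\theta}}$, and the procedure is iterated until convergence.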
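The iteration can be sketched in a few lines of NumPy. The track model below is a hypothetical simplification chosen only for illustration: a circle through the origin in the bending plane with two parameters, the direction angle `phi0` at the origin and the curvature `kappa`, measured as the arc length $R\,\Phi(R)$ on a cylinder of radius $R$, with $\Phi(R) = \phi_0 + \arcsin(\kappa R/2)$. It is not the three-parameter model used in Section 4.2, and the function names are assumptions.

```python
import numpy as np


def model(theta, R):
    """Hypothetical track model: circle through the origin with direction
    phi0 at the origin and curvature kappa, measured as R * azimuth."""
    phi0, kappa = theta
    return R * (phi0 + np.arcsin(kappa * R / 2.0))


def jacobian(theta, R):
    """Derivatives of model() with respect to (phi0, kappa)."""
    _, kappa = theta
    a = kappa * R / 2.0
    return np.column_stack([R, R * (R / 2.0) / np.sqrt(1.0 - a**2)])


def gauss_newton(y, R, V, theta0, tol=1e-12, max_iter=50):
    """Iterated linearization of Section 2.3: expand at theta_tilde, solve
    the linear problem with Equation (4), re-expand at the new estimate."""
    G = np.linalg.inv(V)
    theta = np.asarray(theta0, dtype=float)
    for _ in range(max_iter):
        F = jacobian(theta, R)
        c = model(theta, R) - F @ theta          # c = f(theta_tilde) - F theta_tilde
        C = np.linalg.inv(F.T @ G @ F)
        theta_new = C @ F.T @ G @ (y - c)
        converged = np.max(np.abs(theta_new - theta)) < tol
        theta = theta_new
        if converged:
            break
    return theta, C


R = np.linspace(0.1, 1.0, 10)                    # cylinder radii in m (illustrative)
theta_true = np.array([0.3, 0.5])                # phi0 in rad, kappa in 1/m
V = (10e-6) ** 2 * np.eye(len(R))                # 10 um resolution (illustrative)
y = model(theta_true, R)                         # noise-free measurements
theta_hat, C = gauss_newton(y, R, V, theta0=[0.0, 0.0])
```

Because the model is almost linear in `kappa` for small `kappa * R`, a start at zero curvature converges in a few iterations; a strongly curved track would need a better starting point.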

3. Linear Regression in Gaussian Mixture Models

If the observation errors do not follow a normal distribution, the Gaussian linear model can be generalized to a GMM, which is more flexible but still retains some of the computational advantages of the Gaussian model. Applications of Gaussian mixtures can be traced back to Karl Pearson more than a century ago [16]. In view of the applications in Section 4, the following discussion is restricted to Gaussian mixtures with two components with identical means. The probability density function (PDF) of such a mixture has the following form:

$$g(x) = (1-p)\,\varphi(x; \mu, \sigma_0^2) + p\,\varphi(x; \mu, \sigma_1^2), \qquad \sigma_0 < \sigma_1 \tag{13}$$

where $\varphi(x; \mu, \sigma^2)$ denotes the normal PDF with mean $\mu$ and variance $\sigma^2$. The Gaussian mixture (GM) with this PDF is denoted by $\mathcal{GM}(\mu; p, \sigma_0, \sigma_1)$. In the following, the component with index 0 is called the narrow component and the component with index 1 the wide component; $p$ is the weight of the wide component, and $r = \sigma_0/\sigma_1$ is the ratio of the two standard deviations.

3.1. Homoskedastic Mixture Models

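We first consider the following linear GMM:

$$\boldsymbol{y} = \mathbf{X}\boldsymbol{\theta} + \boldsymbol{c} + \boldsymbol{\varepsilon}, \qquad \varepsilon_i \sim \mathcal{GM}(0; p, \sigma_0, \sigma_1), \quad i = 1, \ldots, n \tag{14}$$

where $\boldsymbol{\varepsilon} = (\varepsilon_1, \ldots, \varepsilon_n)^\mathsf{T}$. The variance of $\varepsilon_i$ is equal to:

$$\sigma^2 = \operatorname{var}(\varepsilon_i) = (1-p)\,\sigma_0^2 + p\,\sigma_1^2$$

and the joint covariance matrix of $\boldsymbol{\varepsilon}$ is equal to

$$\mathbf{V} = \sigma^2\,\mathbf{I}$$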
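Drawing errors from the two-component mixture of Equation (13) and checking the total variance numerically takes only a few lines. The function `sample_gm` and the parameter values are illustrative assumptions; the labels `z` returned alongside the errors are the component associations used in the rest of this section.

```python
import numpy as np


def sample_gm(rng, size, p, sigma0, sigma1):
    """Draw zero-mean errors from (1 - p) N(0, sigma0^2) + p N(0, sigma1^2).

    Returns the errors and the component labels z (0 = narrow, 1 = wide).
    """
    z = (rng.random(size) < p).astype(int)            # Bernoulli(p) labels
    sigma = np.where(z == 1, sigma1, sigma0)           # width of the chosen component
    return rng.normal(0.0, 1.0, size) * sigma, z


rng = np.random.default_rng(1)
p, sigma0, sigma1 = 0.2, 5.0, 20.0                     # illustrative values
eps, z = sample_gm(rng, 200_000, p, sigma0, sigma1)
total_var = (1 - p) * sigma0**2 + p * sigma1**2        # total variance sigma^2
```

The sample variance of `eps` scatters around `total_var`, which equals 100 for these values.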

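As the total variance is the same for all $i$, the GMM is called homoskedastic. By using just the total variances, it can be approximated by a homoskedastic LGRM, and the estimation of the regression parameters proceeds as in Equation (4), which, with the total variance $\sigma^2$, simplifies to:

$$\hat{\boldsymbol{\theta}}_{\mathrm{hom}} = (\mathbf{X}^\mathsf{T}\mathbf{X})^{-1}\mathbf{X}^\mathsf{T}(\boldsymbol{y} - \boldsymbol{c}), \qquad \mathbf{C}_{\mathrm{hom}} = \sigma^2\,(\mathbf{X}^\mathsf{T}\mathbf{X})^{-1} \tag{17}$$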

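If the association of the observations to the components is known, it can be encoded in a binary vector $\boldsymbol{z} = (z_1, \ldots, z_n)$, where $z_i = k$ indicates the component with variance $\sigma_k^2$, for $k = 0, 1$. In the case of independent draws from the mixture PDF in Equation (13), the $z_i$ are independent Bernoulli variables with $P(z_i = 1) = p$. The probability of $\boldsymbol{z}$ is therefore equal to:

$$P(\boldsymbol{z}) = \prod_{i=1}^{n} p^{z_i}(1-p)^{1-z_i} = p^{|\boldsymbol{z}|}\,(1-p)^{n-|\boldsymbol{z}|}, \qquad |\boldsymbol{z}| = \sum_{i=1}^{n} z_i$$

Conditional on the known associations $\boldsymbol{z}$, the model can be interpreted as a heteroskedastic LGRM with a diagonal covariance matrix $\mathbf{V}_{\boldsymbol{z}}$:

$$\mathbf{V}_{\boldsymbol{z}} = \operatorname{diag}\left(\sigma_{z_1}^2, \ldots, \sigma_{z_n}^2\right)$$

It follows from the binomial theorem that:

$$\sum_{\boldsymbol{z} \in \{0,1\}^n} P(\boldsymbol{z}) = \sum_{k=0}^{n}\binom{n}{k}\,p^k(1-p)^{n-k} = \left[p + (1-p)\right]^n = 1$$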
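The normalization of $P(\boldsymbol{z})$ can be checked by brute-force enumeration of all $2^n$ association vectors and compared with the binomial form. The helper name `prob_z` and the values of `n` and `p` are hypothetical.

```python
import itertools
import math


def prob_z(z, p):
    """P(z) for independent Bernoulli labels; z_i = 1 marks the wide component."""
    k = sum(z)
    return p**k * (1 - p) ** (len(z) - k)


n, p = 6, 0.3
# Brute-force sum over all 2^n association vectors z in {0, 1}^n.
total = sum(prob_z(z, p) for z in itertools.product((0, 1), repeat=n))
# Same sum grouped by the number k of wide-component hits (binomial form).
total_binomial = sum(math.comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(n + 1))
```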

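The GMR is thus a mixture of heteroskedastic Gaussian linear regressions with mixture weights $P(\boldsymbol{z})$. In each of the heteroskedastic LGRMs the regression parameters are estimated by:

$$\hat{\boldsymbol{\theta}}_{\boldsymbol{z}} = \mathbf{C}_{\boldsymbol{z}}\,\mathbf{X}^\mathsf{T}\mathbf{G}_{\boldsymbol{z}}(\boldsymbol{y} - \boldsymbol{c}), \qquad \mathbf{C}_{\boldsymbol{z}} = (\mathbf{X}^\mathsf{T}\mathbf{G}_{\boldsymbol{z}}\mathbf{X})^{-1}, \qquad \mathbf{G}_{\boldsymbol{z}} = \mathbf{V}_{\boldsymbol{z}}^{-1} \tag{21}$$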

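Conditional on $\boldsymbol{z}$, the estimator $\hat{\boldsymbol{\theta}}_{\mathrm{het}} = \hat{\boldsymbol{\theta}}_{\boldsymbol{z}}$ is normally distributed with mean $\boldsymbol{\theta}$ and covariance matrix $\mathbf{C}_{\boldsymbol{z}}$, and therefore unbiased and efficient. The unconditional distribution of $\hat{\boldsymbol{\theta}}_{\mathrm{het}}$ is obtained by summing over all $\boldsymbol{z}$ with weights $P(\boldsymbol{z})$. Its density therefore reads:

$$h(\hat{\boldsymbol{\theta}}_{\mathrm{het}}) = \sum_{\boldsymbol{z} \in \{0,1\}^n} P(\boldsymbol{z})\,\varphi(\hat{\boldsymbol{\theta}}_{\mathrm{het}}; \boldsymbol{\theta}, \mathbf{C}_{\boldsymbol{z}})$$

where $\varphi(\boldsymbol{x}; \boldsymbol{\mu}, \mathbf{C})$ is the multivariate normal density of $\boldsymbol{x}$ with mean $\boldsymbol{\mu}$ and covariance matrix $\mathbf{C}$. The estimator $\hat{\boldsymbol{\theta}}_{\mathrm{het}}$ is therefore unbiased, and its covariance matrix is obtained by summing over the possible values of $\boldsymbol{z}$ with weights $P(\boldsymbol{z})$:

$$\mathbf{C}_{\mathrm{het}} = \sum_{\boldsymbol{z} \in \{0,1\}^n} P(\boldsymbol{z})\,\mathbf{C}_{\boldsymbol{z}} \tag{23}$$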
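For small $n$, Equation (23) can be evaluated exactly by enumerating all $2^n$ association vectors. The sketch below does this for a straight-line design matrix; the helper name `exact_covariances`, the plane positions on [0, 1] and the mixture parameters are illustrative assumptions. The cost grows as $2^n$, so for larger $n$ one would have to sample $\boldsymbol{z}$ instead.

```python
import itertools

import numpy as np


def exact_covariances(X, p, sigma0, sigma1):
    """Return (C_hom, C_het) from Equations (17) and (23).

    C_het is computed by enumerating all 2^n association vectors z, so this
    is only feasible for small n.
    """
    n, m = X.shape
    sigma2 = (1 - p) * sigma0**2 + p * sigma1**2          # total variance
    C_hom = sigma2 * np.linalg.inv(X.T @ X)
    C_het = np.zeros((m, m))
    for z in itertools.product((0, 1), repeat=n):
        z = np.array(z)
        var_z = np.where(z == 1, sigma1**2, sigma0**2)     # diagonal of V_z
        C_z = np.linalg.inv(X.T @ (X / var_z[:, None]))    # (X^T G_z X)^{-1}
        n_wide = z.sum()
        C_het += p**n_wide * (1 - p) ** (n - n_wide) * C_z  # weight P(z)
    return C_hom, C_het


x = np.linspace(0.0, 1.0, 8)                  # 8 equally spaced planes (illustrative)
X = np.column_stack([np.ones_like(x), x])     # parameters: intercept d, slope k
C_hom, C_het = exact_covariances(X, p=0.2, sigma0=5.0, sigma1=20.0)
ratio = np.sqrt(np.diag(C_het) / np.diag(C_hom))
```

`ratio` is the exact counterpart of the relative standard deviations shown in the simulation studies.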

The unconditional estimator $\hat{\boldsymbol{\theta}}_{\mathrm{het}}$ has minimum variance among all estimators that are conditionally unbiased for all $\boldsymbol{z}$, as shown by the following theorem.

Theorem 1.

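Let $\tilde{\boldsymbol{\theta}}$ be an estimator of $\boldsymbol{\theta}$ with $\mathrm{E}[\tilde{\boldsymbol{\theta}} \mid \boldsymbol{z}] = \boldsymbol{\theta}$ for all $\boldsymbol{z}$. Then its covariance matrix $\tilde{\mathbf{C}}$ is not smaller than $\mathbf{C}_{\mathrm{het}}$ in the Loewner partial order:

$$\tilde{\mathbf{C}} \succeq \mathbf{C}_{\mathrm{het}}, \quad \text{i.e., } \tilde{\mathbf{C}} - \mathbf{C}_{\mathrm{het}} \text{ is positive semidefinite}$$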

Proof.

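As $\mathrm{E}[\tilde{\boldsymbol{\theta}} \mid \boldsymbol{z}] = \boldsymbol{\theta}$ does not depend on $\boldsymbol{z}$, the law of total covariance reduces to the expectation of the conditional covariance matrices, and $\tilde{\mathbf{C}}$ can be written in the following form:

$$\tilde{\mathbf{C}} = \sum_{\boldsymbol{z}} P(\boldsymbol{z})\,\tilde{\mathbf{C}}_{\boldsymbol{z}}, \qquad \tilde{\mathbf{C}}_{\boldsymbol{z}} = \operatorname{cov}(\tilde{\boldsymbol{\theta}} \mid \boldsymbol{z})$$

As $\hat{\boldsymbol{\theta}}_{\boldsymbol{z}}$ is efficient conditional on $\boldsymbol{z}$ and $\tilde{\boldsymbol{\theta}}$ is conditionally unbiased, it follows that

$$\tilde{\mathbf{C}}_{\boldsymbol{z}} \succeq \mathbf{C}_{\boldsymbol{z}} \quad \text{for all } \boldsymbol{z}$$

Summing over all $\boldsymbol{z}$ with weights $P(\boldsymbol{z})$ proves the theorem. □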

Corollary.

$\mathbf{C}_{\mathrm{hom}} \succeq \mathbf{C}_{\mathrm{het}}$, with equality if $\sigma_0 = \sigma_1$.

Proof.

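As $\mathrm{E}[\hat{\boldsymbol{\theta}}_{\mathrm{hom}} \mid \boldsymbol{z}] = \boldsymbol{\theta}$ for all $\boldsymbol{z}$, the estimator $\hat{\boldsymbol{\theta}}_{\mathrm{hom}}$ fulfills the premise of the theorem. Its conditional covariance matrix is obtained by linear error propagation:

$$\mathbf{C}_{\mathrm{hom},\boldsymbol{z}} = (\mathbf{X}^\mathsf{T}\mathbf{X})^{-1}\mathbf{X}^\mathsf{T}\,\mathbf{V}_{\boldsymbol{z}}\,\mathbf{X}(\mathbf{X}^\mathsf{T}\mathbf{X})^{-1}$$

As $\hat{\boldsymbol{\theta}}_{\boldsymbol{z}}$ is efficient, $\mathbf{C}_{\mathrm{hom},\boldsymbol{z}} \succeq \mathbf{C}_{\boldsymbol{z}}$; summing with weights $P(\boldsymbol{z})$ and using $\sum_{\boldsymbol{z}} P(\boldsymbol{z})\,\mathbf{V}_{\boldsymbol{z}} = \sigma^2\mathbf{I}$ gives $\mathbf{C}_{\mathrm{hom}} \succeq \mathbf{C}_{\mathrm{het}}$. If $\sigma_0 = \sigma_1$, $\mathbf{V}_{\boldsymbol{z}}$ is homoskedastic for every $\boldsymbol{z}$ and $\mathbf{C}_{\mathrm{hom},\boldsymbol{z}} = \mathbf{C}_{\boldsymbol{z}}$. □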
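The two steps of the corollary's proof can be verified numerically for a small design: every $\mathbf{C}_{\mathrm{hom},\boldsymbol{z}} - \mathbf{C}_{\boldsymbol{z}}$ must be positive semidefinite, and the weighted sum of the $\mathbf{C}_{\mathrm{hom},\boldsymbol{z}}$ must reproduce $\mathbf{C}_{\mathrm{hom}}$. This is a sanity check under illustrative assumptions (function name, designs and parameter values are hypothetical), not a proof.

```python
import itertools

import numpy as np


def check_corollary(X, p, sigma0, sigma1, tol=1e-9):
    """Numerically check the corollary's proof steps for a small design X.

    For every z: C_hom,z - C_z must be positive semidefinite; and the
    P(z)-weighted sum of the C_hom,z must reproduce C_hom of Equation (17).
    """
    n = X.shape[0]
    A = np.linalg.inv(X.T @ X) @ X.T                  # theta_hom = A (y - c)
    sigma2 = (1 - p) * sigma0**2 + p * sigma1**2
    C_hom = sigma2 * np.linalg.inv(X.T @ X)
    weighted_sum = np.zeros_like(C_hom)
    for z in itertools.product((0, 1), repeat=n):
        z = np.array(z)
        V_z = np.diag(np.where(z == 1, sigma1**2, sigma0**2))
        C_hom_z = A @ V_z @ A.T                       # linear error propagation
        C_z = np.linalg.inv(X.T @ np.linalg.inv(V_z) @ X)
        if np.linalg.eigvalsh(C_hom_z - C_z).min() < -tol * np.abs(C_z).max():
            return False
        n_wide = z.sum()
        weighted_sum += p**n_wide * (1 - p) ** (n - n_wide) * C_hom_z
    return bool(np.allclose(weighted_sum, C_hom))


x = np.linspace(0.0, 1.0, 6)
X = np.column_stack([np.ones_like(x), x])
corollary_holds = check_corollary(X, p=0.2, sigma0=5.0, sigma1=20.0)
```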

The effective improvement in precision of the heteroskedastic estimator with respect to the homoskedastic estimator is difficult to quantify by simple formulas, but easy to assess by simulation studies. The results of three such studies in the context of track fitting are presented in Section 4.1, Section 4.2 and Section 4.3, respectively.

3.2. Heteroskedastic Mixture Models

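The GMM in Equation (14) can be further generalized to the heteroskedastic case, where the mixture parameters depend on $i$:

$$\boldsymbol{y} = \mathbf{X}\boldsymbol{\theta} + \boldsymbol{c} + \boldsymbol{\varepsilon}, \qquad \varepsilon_i \sim \mathcal{GM}(0; p_i, \sigma_{0,i}, \sigma_{1,i}), \quad i = 1, \ldots, n$$

The joint covariance matrix of $\boldsymbol{\varepsilon}$ is now diagonal with

$$V_{ii} = \sigma_i^2 = (1-p_i)\,\sigma_{0,i}^2 + p_i\,\sigma_{1,i}^2$$

The approximating LGRM that uses just the total variances is now heteroskedastic with covariance matrix $\mathbf{V} = \operatorname{diag}(\sigma_i^2)$. The corresponding estimator $\hat{\boldsymbol{\theta}}_{\mathrm{tot}}$ with its covariance matrix $\mathbf{C}_{\mathrm{tot}}$ is computed as in Equation (4).

Conditional on the associations coded in $\boldsymbol{z}$, the covariance matrix $\mathbf{V}_{\boldsymbol{z}}$ now reads:

$$\mathbf{V}_{\boldsymbol{z}} = \operatorname{diag}\left(\sigma_{z_1,1}^2, \ldots, \sigma_{z_n,n}^2\right)$$

and the probability of $\boldsymbol{z}$ is $P(\boldsymbol{z}) = \prod_{i=1}^{n} p_i^{z_i}(1-p_i)^{1-z_i}$. As in Section 3.1, the unconditional covariance matrix $\mathbf{C}_{\mathrm{het}}$ is the weighted sum of the conditional covariance matrices $\mathbf{C}_{\boldsymbol{z}} = (\mathbf{X}^\mathsf{T}\mathbf{V}_{\boldsymbol{z}}^{-1}\mathbf{X})^{-1}$:

$$\mathbf{C}_{\mathrm{het}} = \sum_{\boldsymbol{z} \in \{0,1\}^n} P(\boldsymbol{z})\,\mathbf{C}_{\boldsymbol{z}}$$

It can be proved as before that $\mathbf{C}_{\mathrm{tot}} \succeq \mathbf{C}_{\mathrm{het}}$.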
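The enumeration carries over directly to the heteroskedastic mixture with layer-dependent parameters. In this sketch, `C_tot` denotes the covariance matrix of the estimator that uses only the total variances $\sigma_i^2$; the function name and the per-layer values of a six-layer detector are hypothetical.

```python
import itertools

import numpy as np


def exact_covariances_layers(X, p, sigma0, sigma1):
    """Heteroskedastic mixture of Section 3.2 with per-layer arrays p_i,
    sigma0_i, sigma1_i. Returns (C_tot, C_het), where C_tot belongs to the
    estimator that uses only the total variances sigma_i^2."""
    p, sigma0, sigma1 = (np.asarray(a, dtype=float) for a in (p, sigma0, sigma1))
    var_tot = (1 - p) * sigma0**2 + p * sigma1**2        # diagonal of V
    C_tot = np.linalg.inv(X.T @ (X / var_tot[:, None]))
    C_het = np.zeros_like(C_tot)
    for z in itertools.product((0, 1), repeat=X.shape[0]):
        z = np.array(z)
        var_z = np.where(z == 1, sigma1**2, sigma0**2)   # diagonal of V_z
        weight = np.prod(np.where(z == 1, p, 1 - p))     # P(z) = prod p_i^z_i (1 - p_i)^(1 - z_i)
        C_het += weight * np.linalg.inv(X.T @ (X / var_z[:, None]))
    return C_tot, C_het


# Hypothetical six-layer detector whose mixture parameters vary by layer.
x = np.linspace(0.0, 1.0, 6)
X = np.column_stack([np.ones_like(x), x])
C_tot, C_het = exact_covariances_layers(
    X,
    p=[0.1, 0.2, 0.3, 0.1, 0.2, 0.3],
    sigma0=[4.0, 5.0, 6.0, 4.0, 5.0, 6.0],
    sigma1=[20.0, 25.0, 30.0, 20.0, 25.0, 30.0],
)
```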

In the application to track fitting, heteroskedastic mixture models can be useful in non-uniform tracking detectors, in which the mixture model obtained by the calibration depends on the layer, on the sensor, or on the cluster type.

A nonlinear GMM can be approximated by a linear GMM in the same way as a nonlinear Gaussian model can be approximated by an LGRM, see Section 2.3.

4. Track Fitting with Gaussian Mixture Models

4.1. Simulation Study with Straight Tracks

The setting of the first simulation study is a toy detector that consists of n equally spaced sensor planes in the interval from to . The track model is a straight line in the -plane. No material effects are simulated. The track position is recorded in each sensor plane, with a position error generated from a Gaussian mixture, see Equation (13). The total variance in each layer is equal to , corresponding to a standard deviation of . As the main objective of the simulation study is the comparison of the homoskedastic estimator of Equation (17) with the heteroskedastic estimator of Equation (21), the actual value of $\sigma$ is irrelevant.

tracks were generated for each of 20 combinations of p and $r$: . The corresponding values of $\sigma_0$ and $\sigma_1$ are collected in Table 1. The number n of planes varied from 3 to 20. For each pair $(p, r)$ and each value of n the exact covariance matrix $\mathbf{C}_{\mathrm{het}}$ was computed according to Equation (23).
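For a fixed total standard deviation $\sigma$, the component widths follow from $\sigma^2 = (1-p)\sigma_0^2 + p\sigma_1^2$ and $\sigma_0 = r\sigma_1$, i.e., $\sigma_1 = \sigma/\sqrt{(1-p)r^2 + p}$. The sketch below shows one way to perform this conversion; the function name is hypothetical, and the example values are illustrative and not taken from Table 1.

```python
import math


def component_widths(sigma, p, r):
    """Return (sigma0, sigma1) of the narrow and wide components for a total
    standard deviation sigma, wide-component weight p and ratio r = sigma0/sigma1."""
    sigma1 = sigma / math.sqrt((1 - p) * r**2 + p)
    return r * sigma1, sigma1


sigma0, sigma1 = component_widths(sigma=10.0, p=0.2, r=0.25)   # illustrative values
```

For these inputs the widths come out as 5 and 20, and the total variance is 100, as required.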

Table 1.

Values of $\sigma_0$ and $\sigma_1$ (in µm) for and .

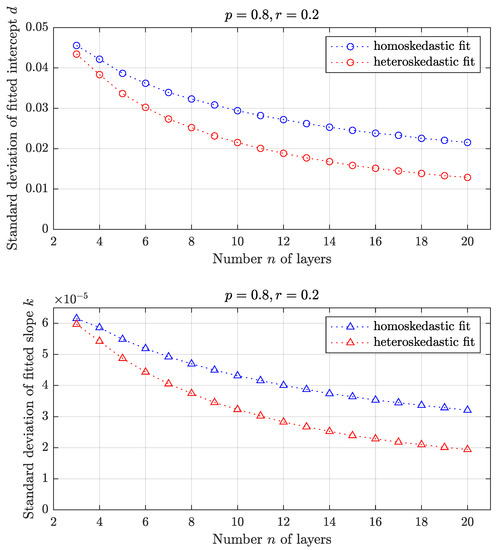

The track parameters were chosen as the intercept d and the slope k. All tracks were fitted with the homoskedastic and with the heteroskedastic error model. Figure 1 shows the estimated standard deviation (rms) of both fits, along with the exact values, for the intercept d and the slope k, with in the range . The standard deviations of the heteroskedastic fit are not only smaller, but also fall at a faster rate than those of the homoskedastic fit, i.e., faster than $1/\sqrt{n}$. The agreement between the estimated and the exact standard deviations is excellent.
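A toy version of this comparison can be written compactly with NumPy. The geometry (n planes on [0, 1] in arbitrary units), the zero true track, the mixture parameters and the function name are assumptions for illustration only; the heteroskedastic fit uses the known component labels, as in Equation (21), and the homoskedastic rms is compared with the exact value from Equation (17).

```python
import numpy as np


def simulate_straight_tracks(n, p, sigma0, sigma1, n_tracks, rng):
    """Toy straight-track study: n equally spaced planes on [0, 1], true
    intercept and slope both zero, mixture errors with known labels.
    Returns the rms of the fitted (d, k) for the hom and the het fit."""
    x = np.linspace(0.0, 1.0, n)
    X = np.column_stack([np.ones(n), x])
    wide = rng.random((n_tracks, n)) < p                # known associations z
    sig = np.where(wide, sigma1, sigma0)
    y = rng.normal(size=(n_tracks, n)) * sig            # true track: d = k = 0
    # Homoskedastic fit (Equation (17)): one common weight for all hits.
    theta_hom = y @ np.linalg.solve(X.T @ X, X.T).T
    # Heteroskedastic fit (Equation (21)): weight 1/sigma_{z_i}^2 per hit.
    W = 1.0 / sig**2
    XtWX = np.einsum("ia,ti,ib->tab", X, W, X)
    XtWy = np.einsum("ia,ti,ti->ta", X, W, y)
    theta_het = np.linalg.solve(XtWX, XtWy[..., None])[..., 0]
    rms_hom = np.sqrt(np.mean(theta_hom**2, axis=0))
    rms_het = np.sqrt(np.mean(theta_het**2, axis=0))
    return rms_hom, rms_het


rng = np.random.default_rng(2020)
rms_hom, rms_het = simulate_straight_tracks(8, 0.2, 5.0, 20.0, 20_000, rng)

# Exact homoskedastic standard deviations, Equation (17), with sigma^2 = 100.
x = np.linspace(0.0, 1.0, 8)
X = np.column_stack([np.ones(8), x])
exact_hom = np.sqrt(np.diag(100.0 * np.linalg.inv(X.T @ X)))
```

The batched `np.linalg.solve` call fits all tracks at once; the trailing axis added to `XtWy` keeps the call unambiguous across NumPy versions.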

Figure 1.

Top: estimated rms and exact standard deviation of the fitted intercept d; bottom: estimated rms and exact standard deviation of the fitted slope k, for in the range .

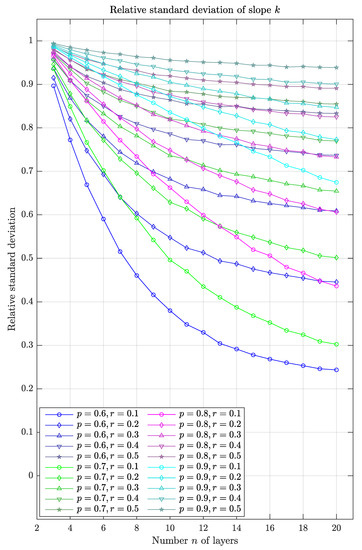

Figure 2 shows the relative standard deviation (heteroskedastic rms over homoskedastic rms) for the slope k and all 20 combinations of p and r. The corresponding plot for the intercept d looks very similar. A clear trend can be observed: the ratio gets smaller with decreasing p and with decreasing r. The largest improvement is therefore seen for , where the probability of very precise measurements () is the largest ().

Figure 2.

Relative standard deviation (heteroskedastic rms over homoskedastic rms) of the fitted slope k for all 20 combinations of p and r in the range .

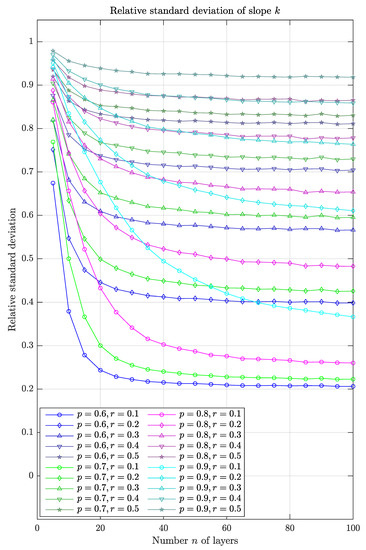

For large values of n the ratio curves approach a constant level, because asymptotically both rms values fall at the same rate, namely as $1/\sqrt{n}$, see Figure 3. In the experimentally relevant region the ratio is already close to its lower limit in most, but not all, cases. In these cases the superior performance of the heteroskedastic fit is already fully exploited, and adding more measurements does not reduce the ratio much further. The exception is the case , where the limit is reached much later, at in the extreme case.
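The levelling-off can be checked without enumerating all $2^n$ association vectors by sampling $\boldsymbol{z}$ from $P(\boldsymbol{z})$ and averaging $\mathbf{C}_{\boldsymbol{z}}$. For large $n$, a law-of-large-numbers argument suggests (heuristically, this is not a result from the text) that the ratio approaches $1/\sqrt{\sigma^2\,[(1-p)/\sigma_0^2 + p/\sigma_1^2]}$, about 0.555 for the illustrative values $p = 0.2$, $\sigma_0 = 5$, $\sigma_1 = 20$. The straight-line geometry on [0, 1] and the function name are assumptions.

```python
import numpy as np


def slope_ratio(n, p, sigma0, sigma1, n_samples, rng):
    """Estimate sqrt(C_het / C_hom) for the slope of a straight line through n
    equally spaced planes on [0, 1] by sampling z from P(z) (Monte Carlo
    average of C_z instead of enumerating all 2^n association vectors)."""
    x = np.linspace(0.0, 1.0, n)
    X = np.column_stack([np.ones(n), x])
    sigma2 = (1 - p) * sigma0**2 + p * sigma1**2
    c_hom = sigma2 * np.linalg.inv(X.T @ X)[1, 1]
    wide = rng.random((n_samples, n)) < p              # sampled associations
    W = 1.0 / np.where(wide, sigma1, sigma0) ** 2      # diagonals of G_z
    XtWX = np.einsum("ia,ti,ib->tab", X, W, X)         # X^T G_z X per sample
    c_het = np.linalg.inv(XtWX)[:, 1, 1].mean()        # average slope element of C_z
    return float(np.sqrt(c_het / c_hom))


rng = np.random.default_rng(7)
ns = (5, 20, 100, 500)
ratios = [slope_ratio(n, 0.2, 5.0, 20.0, 4000, rng) for n in ns]
asymptote = 1.0 / np.sqrt(100.0 * (0.8 / 5.0**2 + 0.2 / 20.0**2))   # heuristic limit
```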

Figure 3.

Relative standard deviation (heteroskedastic rms over homoskedastic rms) of the fitted slope k for all 20 combinations of p and r in the range .

4.2. Simulation Study with Circular Tracks

The setting of the second simulation study is a toy detector that consists of n equally spaced concentric cylinders with radii in the interval , with . The track model is a helix through the origin. Only the parameters in the bending plane (-plane) are estimated. The track parameters are: , the azimuth of the track position at ; , the azimuth of the track direction at ; and , the curvature of the circle.

tracks were generated for each of the same 20 combinations of p and $r$ as in Section 4.1. The track position in the bending plane was recorded in each cylinder, with a position error generated from the Gaussian mixtures in Table 1. Each sample of tracks consists of 10 subsamples with circles of 10 different radii, namely . The number n of cylinders varied from 3 to 20.

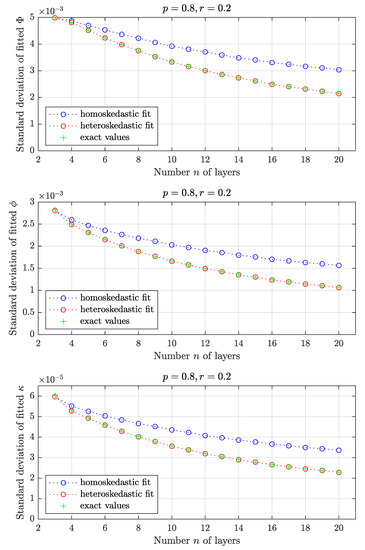

As the track model is nonlinear, estimation was performed according to the procedure in Section 2.3. All tracks were fitted with the homoskedastic and with the heteroskedastic error model, and for each pair $(p, r)$ and each value of n the exact covariance matrix was computed according to Equation (23). Figure 4 shows the estimated standard deviation (rms) of both fits, along with the exact values, for , and , with in the range . The standard deviations of the heteroskedastic fit are not only smaller, but also fall at a faster rate than those of the homoskedastic fit, i.e., faster than $1/\sqrt{n}$. The agreement between the estimated and the exact standard deviations is again excellent.

Figure 4.

Top: estimated rms and exact standard deviation of the fitted angle ; center: estimated rms and exact standard deviation of the fitted angle ; bottom: estimated rms and exact standard deviation of the fitted curvature , for in the range .

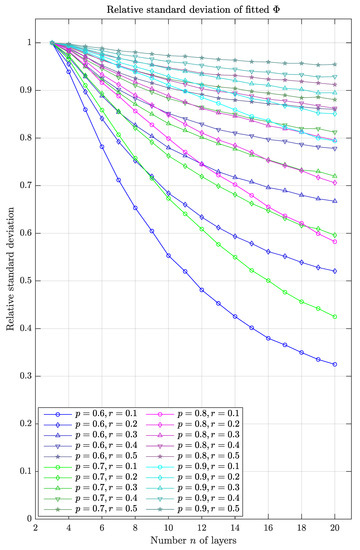

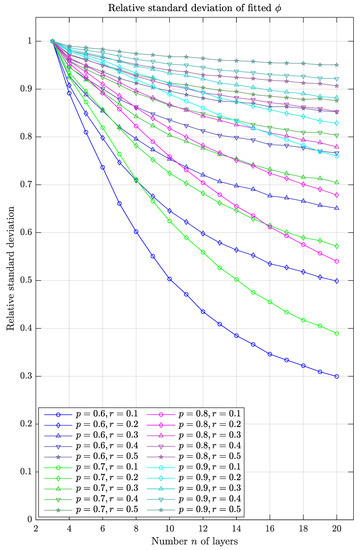

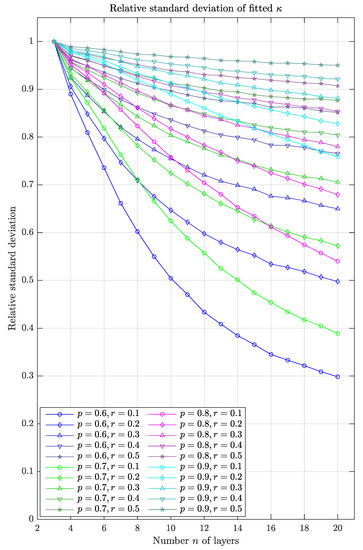

Figure 5, Figure 6 and Figure 7 show the relative standard deviation for the three track parameters and all 20 combinations of p and r. Because of the large correlation between and , Figure 6 and Figure 7 are virtually identical. The same trend as before can be observed: the ratio gets smaller with decreasing p and with decreasing r. The largest improvement is again seen for , where the probability of very precise measurements () is the largest (). Compared to the results of the straight-line fits, the gain in precision is somewhat smaller.

Figure 5.

Relative standard deviation (heteroskedastic rms over homoskedastic rms) of the fitted angle for all 20 combinations of p and r in the range .

Figure 6.

Relative standard deviation (heteroskedastic rms over homoskedastic rms) of the fitted angle for all 20 combinations of p and r in the range .

Figure 7.

Relative standard deviation (heteroskedastic rms over homoskedastic rms) of the fitted curvature for all 20 combinations of p and r in the range .

4.3. Simulation Study with Circular Tracks and Multiple Scattering

The third study investigates the effect of multiple Coulomb scattering (MCS) on the results presented in the previous subsection. To this end, every layer of the toy detector is assumed to have a thickness of 1% of a radiation length. The simulation and the reconstruction of the tracks are modified accordingly, see for example [17].

tracks were generated for each of four values of the transverse momentum, i.e., and for each of the 20 combinations of p and $r$ as in Section 4.2. The dip angle of the tracks was set to . The track position in the bending plane was recorded in each cylinder, with a position error generated from the Gaussian mixtures in Table 1 plus a random deviation due to MCS. The number n of cylinders varied from 3 to 20.

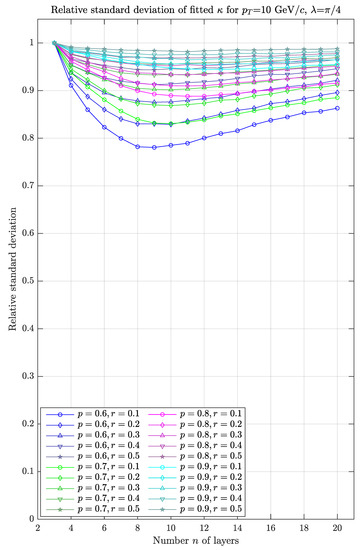

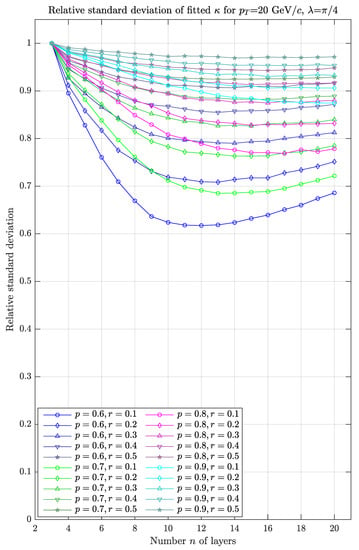

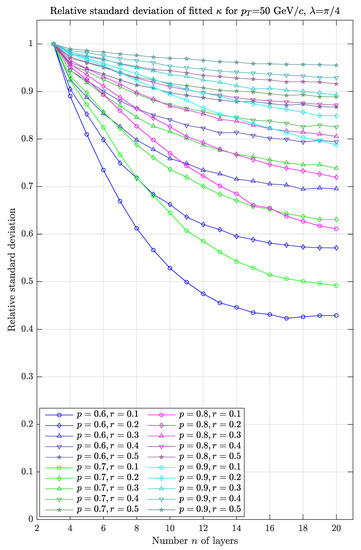

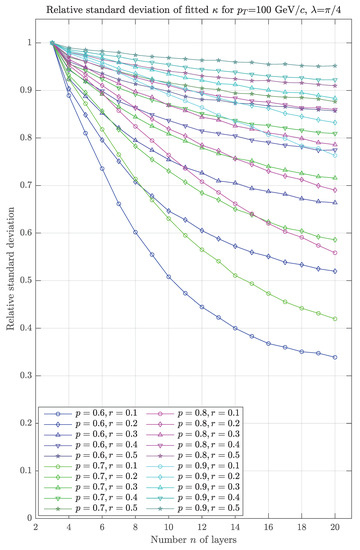

It is to be expected that the benefit provided by the heteroskedastic regression is diminished by the introduction of MCS, because the MCS contribution to the position errors is correlated across layers and does not depend on the known component associations. This is confirmed by the results in Figure 8, Figure 9, Figure 10 and Figure 11, which show the relative standard deviation of the curvature for the four values of the transverse momentum and the 20 combinations of p and r. Although the gain in precision of the heteroskedastic estimator is rather poor for the lowest transverse momentum, see Figure 8, it steadily improves with increasing transverse momentum and approaches the optimal level without MCS for the highest transverse momentum, see Figure 7 and Figure 11.
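The loss of gain at low momentum can be illustrated with a simplified sketch in which an MCS covariance matrix is added to $\mathbf{V}_{\boldsymbol{z}}$. Several simplifications are assumptions of this sketch and are not taken from the text: straight tracks instead of circles, normal incidence, β = 1 and unit charge, scattering angles from the Highland approximation $\theta_0 \approx (13.6\,\mathrm{MeV}/p)\sqrt{x/X_0}\,[1 + 0.038\ln(x/X_0)]$, and scattering in each layer displacing only the outer layers. Both fits use the full, non-diagonal covariance matrix, so the comparison isolates the effect of knowing $\boldsymbol{z}$. All names and numerical values are hypothetical.

```python
import itertools
import math

import numpy as np


def highland_theta0(p_gev, x_over_x0):
    """Highland approximation of the rms projected scattering angle (rad),
    assuming beta = 1 and unit charge."""
    return 0.0136 / p_gev * math.sqrt(x_over_x0) * (1.0 + 0.038 * math.log(x_over_x0))


def mcs_covariance(R, theta0):
    """Covariance of the position offsets from scattering in each layer,
    assuming straight propagation and scattering after each measurement."""
    V = np.zeros((len(R), len(R)))
    for Rk in R:
        d = np.where(R > Rk, R - Rk, 0.0)            # lever arms of layer k
        V += theta0**2 * np.outer(d, d)
    return V


def ratio_with_mcs(R, p, sigma0, sigma1, V_ms):
    """Exact sqrt(C_het / C_hom) for the slope of a straight line when both
    fits use the full covariance (mixture part + V_ms); small n only."""
    X = np.column_stack([np.ones_like(R), R])
    n = len(R)
    sigma2 = (1 - p) * sigma0**2 + p * sigma1**2

    def slope_var(V):
        return np.linalg.inv(X.T @ np.linalg.solve(V, X))[1, 1]

    c_hom = slope_var(sigma2 * np.eye(n) + V_ms)
    c_het = 0.0
    for z in itertools.product((0, 1), repeat=n):
        z = np.array(z)
        V_z = np.diag(np.where(z == 1, sigma1**2, sigma0**2))
        c_het += p ** z.sum() * (1 - p) ** (n - z.sum()) * slope_var(V_z + V_ms)
    return math.sqrt(c_het / c_hom)


R = np.linspace(0.1, 0.5, 6)                          # layer radii in m (illustrative)
ratios = {
    p_gev: ratio_with_mcs(R, 0.2, 5e-6, 20e-6, mcs_covariance(R, highland_theta0(p_gev, 0.01)))
    for p_gev in (1.0, 10.0, 100.0)
}
ratio_no_mcs = ratio_with_mcs(R, 0.2, 5e-6, 20e-6, np.zeros((6, 6)))
```

At low momentum the MCS term dominates the covariance matrix and the ratio moves towards 1, while at high momentum it moves back towards the value without MCS.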

Figure 8.

Relative standard deviation (heteroskedastic rms over homoskedastic rms) of the curvature for , and all 20 combinations of p and r in the range .

Figure 9.

Relative standard deviation (heteroskedastic rms over homoskedastic rms) of the curvature for , and all 20 combinations of p and r in the range .

Figure 10.

Relative standard deviation (heteroskedastic rms over homoskedastic rms) of the curvature for , and all 20 combinations of p and r in the range .

Figure 11.

Relative standard deviation (heteroskedastic rms over homoskedastic rms) of the curvature for , and all 20 combinations of p and r in the range .

5. Summary and Conclusions

We have evaluated the gain in precision that can be achieved by GMR in track fitting using a mixture model of position errors rather than a simple Gaussian model with an average resolution. The results, both with straight and with circular tracks, show the expected behavior: the gain rises if the mixture weight (proportion) of the narrow component becomes larger, and it rises when the ratio of the width of the narrow component to the width of the wide component becomes smaller. Unsurprisingly, the gain is reduced by MCS, depending on the track momentum and on the amount of material.

It is also instructive to look at the results in the light of the findings reported in [12,13,14], in particular the claim that the heteroskedastic regression, which is in fact the GMR described here, achieves a linear growth of the resolution with the number n of detecting layers, instead of the usual $\sqrt{n}$ behavior [13]. A glance at Figure 3 shows that the heteroskedastic standard deviations indeed initially fall faster than the homoskedastic ones, i.e., faster than $1/\sqrt{n}$. Eventually, however, the relative standard deviations level out at a constant ratio, showing that asymptotically both standard deviations fall with n as $1/\sqrt{n}$, as expected. The observation reported in [13] is clearly a small-sample effect, which, in contrast to most other cases, is very significant in the mixture with , the one assumed in [13]. Fortunately, this effect occurs in the range of n that is relevant for realistic tracking detectors, i.e., somewhere between 5 and 20 for a silicon tracker. It therefore seems well worthwhile to optimize the local reconstruction and error calibration of the position measurements as far as possible.

Funding

This research received no external funding.

Acknowledgments

I thank Gregorio Landi for interesting and instructive discussions. I also thank the anonymous reviewers for valuable comments and corrections, and in particular for suggesting to investigate the effects of multiple Coulomb scattering.

Conflicts of Interest

The author declares no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
|---|---|
| GM | Gaussian Mixture |
| GMM | Gaussian Mixture Model |
| GMR | Gaussian Mixture Regression |
| LGRM | Linear Gaussian Regression Model |
| LS | Least-Squares |
| MCS | Multiple Coulomb Scattering |
| PDF | Probability Density Function |

References

- Frühwirth, R. Track fitting with non-Gaussian noise. Comput. Phys. Commun. 1997, 100, 1.

- Frühwirth, R.; Strandlie, A. Track fitting with ambiguities and noise: A study of elastic tracking and nonlinear filters. Comput. Phys. Commun. 1999, 120, 197.

- Frühwirth, R.; Regler, M. On the quantitative modelling of core and tails of multiple scattering by Gaussian mixtures. Nucl. Instrum. Meth. Phys. Res. A 2001, 456, 369.

- Frühwirth, R.; Liendl, M. Mixture models of multiple scattering: Computation and simulation. Comput. Phys. Commun. 2001, 141, 230.

- Frühwirth, R. A Gaussian-mixture approximation of the Bethe-Heitler model of electron energy loss by bremsstrahlung. Comput. Phys. Commun. 2003, 154, 131.

- Adam, W.; Frühwirth, R.; Strandlie, A.; Todorov, T. Reconstruction of electrons with the Gaussian-sum filter in the CMS tracker at the LHC. J. Phys. G Nucl. Part. Phys. 2005, 31, N9.

- Strandlie, A.; Frühwirth, R. Reconstruction of charged tracks in the presence of large amounts of background and noise. Nucl. Instrum. Meth. Phys. Res. A 2006, 566, 157.

- The ATLAS Collaboration. Improved Electron Reconstruction in ATLAS Using the Gaussian Sum Filter-Based Model for Bremsstrahlung; Technical Report ATLAS-CONF-2012-047; CERN: Geneva, Switzerland, 2012.

- Strandlie, A.; Frühwirth, R. Track and vertex reconstruction: From classical to adaptive methods. Rev. Mod. Phys. 2010, 82, 1419.

- Dempster, A.P.; Laird, N.M.; Rubin, D.B. Maximum likelihood from incomplete data via the EM algorithm. J. Royal Stat. Soc. Ser. B 1977, 39, 1.

- Borman, S. The Expectation Maximization Algorithm—A Short Tutorial; Technical Report; University of Utah: Salt Lake City, UT, USA, 2004; Available online: tinyurl.com/BormanEM (accessed on 30 August 2020).

- Landi, G.; Landi, G. Optimizing Momentum Resolution with a New Fitting Method for Silicon-Strip Detectors. Instruments 2018, 2, 22.

- Landi, G.; Landi, G. Beyond the Limit of the Least Squares Resolution and the Lucky Model. 2018. Available online: http://xxx.lanl.gov/abs/1808.06708 (accessed on 20 August 2020).

- Landi, G.; Landi, G. The Cramer–Rao Inequality to Improve the Resolution of the Least-Squares Method in Track Fitting. Instruments 2020, 4, 2.

- Kendall, M.; Stuart, A. The Advanced Theory of Statistics, 3rd ed.; Charles Griffin: London, UK, 1973; Volume 2.

- Pearson, K. Contributions to the mathematical theory of evolution. Philos. Trans. R. Soc. Lond. A 1894, 185.

- Brondolin, E.; Frühwirth, R.; Strandlie, A. Pattern recognition and reconstruction. In Particle Physics Reference Library Volume 2: Detectors for Particles and Radiation; Fabjan, C., Schopper, H., Eds.; Springer International Publishing: Cham, Switzerland, 2020.

© 2020 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).