Abstract

Multi-object tracking (MOT) is a critical task in computer vision, with widespread applications in intelligent surveillance, behavior analysis, autonomous navigation, and marine ecological monitoring. In particular, accurate tracking of underwater fish plays a significant role in scientific fishery management, biodiversity assessment, and behavioral analysis of marine species. However, underwater MOT remains particularly challenging due to low visibility, frequent occlusions, and the highly nonlinear, burst-like motion of fish. To address these challenges, this paper proposes an improved tracking framework that integrates an Interacting Multiple Model Kalman Filter (IMM-KF) into DeepSORT, forming a self-adaptive multi-object tracking algorithm tailored for underwater fish. First, a lightweight YOLOv8n (You Only Look Once v8 nano) detector is employed for target localization, chosen for its balance between detection accuracy and real-time efficiency in resource-constrained underwater scenarios. The tracking stage then incorporates two complementary motion models: Constant Velocity (CV) for regular cruising and Constant Acceleration (CA) for rapid burst swimming. At every frame, the IMM mechanism evaluates the posterior probability of each model given the observations and fuses the model predictions accordingly, maintaining both responsiveness and stability. The proposed method is evaluated on a real-world underwater fish dataset collected in the East China Sea, comprising 19 species of marine fish annotated in YOLO format. Experimental results show that the IMM-DeepSORT framework outperforms the original DeepSORT in terms of MOTA, MOTP, and IDF1. In particular, it halves the number of identity switches and improves tracking continuity, demonstrating the method’s effectiveness and reliability in complex underwater multi-target tracking scenarios.

Keywords:

multi-object tracking; object detection; improved DeepSORT; IMM-Kalman filter; adaptive algorithm

Key Contribution:

This study presents an adaptive IMM-DeepSORT framework for underwater fish tracking, integrating efficient detection with multi-model motion estimation to enhance robustness and accuracy.

1. Introduction

Underwater video analysis has become a critical component of modern intelligent ocean monitoring systems. Within such systems, Multi-Object Tracking (MOT) plays a vital role in applications such as fish behavior analysis, population estimation, and marine ecological conservation. However, the complex and dynamic nature of underwater environments, characterized by illumination variations, turbidity, occlusions, and nonlinear behavioral shifts [1], poses significant challenges to traditional tracking algorithms. In particular, fish frequently switch between cruising and burst swimming, which degrades the stability and accuracy of predictions made by approaches based on a single motion model.

As a core task in computer vision, MOT [2] has been applied extensively in fields such as region-of-interest (ROI) identification, autonomous driving, smart surveillance systems, and intelligent traffic control. Despite substantial progress in visual tracking technologies, real-world scenes with background clutter, lighting variation, rapid object motion, abrupt motion shifts, and frequent occlusions continue to challenge the robustness and precision of MOT algorithms, especially in dynamic environments [3].

Classical approaches based on Kalman Filters (KFs) and their variants have demonstrated promising performance in single-object tracking tasks. However, extending them to MOT requires deploying an independent tracker for each object, resulting in considerable computational overhead. Meanwhile, deep learning has significantly advanced both object detection and tracking through models such as Fast R-CNN, Faster R-CNN, MDNet, Mask R-CNN, and SiamMOT. Despite their high accuracy, these methods often offer limited real-time performance because of their high computational demands.

The evolution of object detection, particularly the YOLO series [4,5], has led to efficient single-stage detectors well-suited for real-time applications. This progress underpins modern Detection-Based Tracking (DBT) frameworks like DeepSORT [6], which leverage detection and appearance cues for efficient tracking. However, while these frameworks balance speed and accuracy, they often rely on simplistic motion models. This is especially problematic in underwater environments, where factors like turbidity and small target size challenge even the best detectors [7,8], and complex fish behavior invalidates constant motion assumptions [9].

Within the YOLO detection family, successive versions from YOLOv5 and YOLOv7 to YOLOv8 have brought marked improvements in both accuracy and inference speed. Building on such detectors, detection-based MOT frameworks such as SORT and DeepSORT [10] use object location and appearance cues for efficient tracking. These methods strike a balance between performance and speed; however, DeepSORT still faces two fundamental limitations:

(1) Its default Kalman Filter relies on a constant velocity (CV) model, making it inadequate for capturing sudden and nonlinear fish motion;

(2) It lacks a flexible motion modeling mechanism to adapt to frequent behavioral transitions, which leads to prediction bias and accumulated errors.

To address these limitations, this study proposes an enhanced DeepSORT algorithm that integrates an Interacting Multiple Model Kalman Filter (IMM-KF). By incorporating behavioral characteristics of fish, the framework explicitly models both cruising and burst swimming motion states and adaptively fuses them using the IMM mechanism. Experimental evaluations demonstrate that the proposed method significantly improves trajectory stability and association accuracy in complex underwater multi-fish tracking scenarios. The main contributions of this work are as follows:

Enhanced Detection Module: We replace the original detector in DeepSORT with YOLOv8n, a lightweight and high-precision model that ensures real-time detection of fish in underwater footage while maintaining computational efficiency.

Dual Motion Model Integration: Inspired by biological studies, we integrate both Constant Velocity (CV) and Constant Acceleration (CA) models to represent the two principal motion states of fish: cruising and burst swimming [11,12]. These are adaptively fused using the IMM mechanism based on model likelihoods at each frame.

IMM-KF Replacement of Classical KF: The original KF module is replaced with an IMM-KF structure [13,14,15,16]. At each frame, parallel CV and CA predictions are executed, and the final state estimate is adaptively fused based on their residual likelihoods. This approach enhances robustness and responsiveness to motion state transitions [17,18,19].

Furthermore, the proposed adaptive modeling approach finds methodological parallels in other engineering domains that handle complex, non-stationary signals. For instance, advanced fault diagnosis systems employ wavelet analysis and deep learning to extract transient features from vibration data [20,21]. Similarly, our IMM-KF framework is designed to adaptively capture diverse motion patterns from noisy visual data, reinforcing the versatility of adaptive fusion strategies across different fields.

The remainder of this paper is organized as follows. Section 2 describes the proposed IMM-DeepSORT framework in detail, including the detection module, the dual motion models, and the IMM-KF integration, together with the dataset, evaluation metrics, and experimental setup. Section 3 presents the detection and tracking comparisons and the ablation study on motion models under various underwater scenarios. Finally, Section 4 concludes the paper and discusses potential directions for future research.

2. Materials and Methods

2.1. Theoretical Foundation

In multi-object tracking (MOT) systems, motion modeling plays a critical role in both state prediction and data association. Traditional motion estimation approaches typically rely on a single dynamic model—such as the Constant Velocity (CV) or Constant Acceleration (CA) model [22,23]—implemented through a Kalman Filter (KF) to recursively estimate object states. However, in real-world applications, especially underwater environments, object motion often deviates from such idealized assumptions. Fish behavior, for instance, is highly nonlinear, stochastic, and prone to frequent state transitions, rendering single-model tracking insufficient for capturing their complex motion patterns and maintaining stable predictions.

To address these challenges, Multi-Model Motion Estimation has been extensively applied to dynamic tracking scenarios. The core idea lies in simultaneously modeling object motion under multiple hypotheses, with predictions from each model fused based on their agreement with observed data. This approach significantly enhances estimation robustness and adaptability, making it especially suitable for tracking tasks involving abrupt trajectory changes, state switching, or behavioral diversity.

Among various multi-model frameworks, the Interacting Multiple Model (IMM) algorithm stands out for its balance of flexibility and computational efficiency. The IMM simultaneously runs multiple candidate models (e.g., CV and CA) and fuses their outputs through a recursive, Bayesian estimation process. This work adopts the IMM framework as the foundation for our motion estimator to handle the complex motion patterns of underwater fish. The detailed design and integration of our proposed IMM-based module are elaborated in Section 2.4.

2.2. Overall Architecture

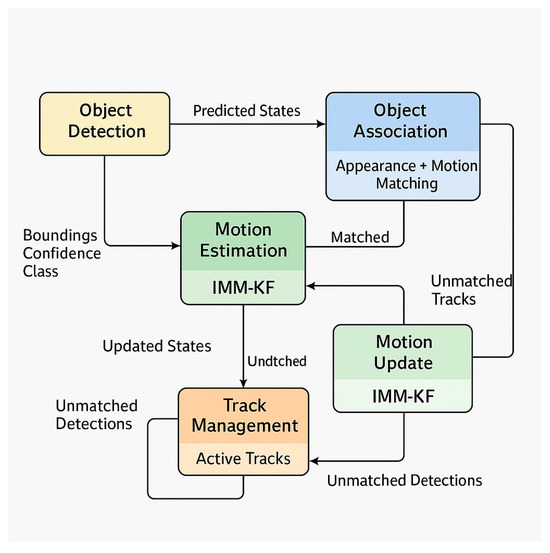

To enable stable and accurate tracking of multiple fish in complex underwater environments, we propose IMM-DeepSORT, an enhanced multi-object tracking framework [24]. As illustrated in Figure 1, our system builds upon the DeepSORT backbone but introduces a key change: the standard Kalman Filter is replaced with an Interacting Multiple Model Kalman Filter (IMM-KF) [25,26,27] for robust state estimation. This adaptation is designed specifically to handle highly dynamic and nonlinear fish motion.

Figure 1.

Overall architecture of the proposed IMM-DeepSORT-based multi-object tracking system.

The overall pipeline is illustrated as follows. For each incoming frame, the YOLOv8n detector produces bounding boxes with high detection accuracy, even for small or blurred underwater targets. These detections are subsequently provided to the tracking module. The proposed IMM-KF performs parallel state prediction using both the Constant Velocity (CV) and Constant Acceleration (CA) models, which correspond to typical cruising and burst-swimming behaviors of fish, respectively. Data association is then conducted by integrating motion cues (Mahalanobis distance) and appearance cues (cosine similarity), and the optimal assignment is obtained using the Hungarian algorithm. Finally, track states are updated and trajectory management operations (initialization, continuation, and termination) are performed.
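The association step described above can be sketched as follows. This is a minimal illustration that assumes the cost combination of the original DeepSORT formulation: a weighted sum of the squared Mahalanobis distance and the cosine appearance distance, with pairs outside a chi-square gate marked inadmissible. The weight `lam`, the appearance gate, and the function name `associate` are hypothetical choices, and the sketch omits DeepSORT's matching cascade and its IoU fallback for unconfirmed tracks. SciPy's `linear_sum_assignment` solves the same linear assignment problem as the Hungarian algorithm.

```python
import numpy as np
from scipy.optimize import linear_sum_assignment

# 0.95 quantile of the chi-square distribution with 4 degrees of freedom (x, y, w, h)
CHI2_GATE_4DOF = 9.4877
# Cost assigned to inadmissible pairs so the solver avoids them
INF_COST = 1e5


def associate(maha_sq, cos_dist, lam=0.5, cos_gate=0.2):
    """Match tracks (rows) to detections (columns) with a gated, combined cost.

    maha_sq:  (T, D) squared Mahalanobis distances between predicted tracks and detections
    cos_dist: (T, D) cosine distances between track and detection appearance features
    lam:      weight of the motion term (illustrative value)
    cos_gate: maximum admissible appearance distance (illustrative value)
    """
    maha_sq = np.asarray(maha_sq, dtype=float)
    cos_dist = np.asarray(cos_dist, dtype=float)
    cost = lam * maha_sq + (1.0 - lam) * cos_dist
    # A pair is admissible only if it passes both the motion gate and the appearance gate
    admissible = (maha_sq <= CHI2_GATE_4DOF) & (cos_dist <= cos_gate)
    cost = np.where(admissible, cost, INF_COST)
    rows, cols = linear_sum_assignment(cost)
    # Discard assignments the solver was forced to make through inadmissible pairs
    matches = [(int(r), int(c)) for r, c in zip(rows, cols) if admissible[r, c]]
    matched_tracks = {r for r, _ in matches}
    matched_dets = {c for _, c in matches}
    unmatched_tracks = [t for t in range(cost.shape[0]) if t not in matched_tracks]
    unmatched_dets = [d for d in range(cost.shape[1]) if d not in matched_dets]
    return matches, unmatched_tracks, unmatched_dets
```

Unmatched tracks and detections returned here would feed the track-management step (continuation, termination, and initialization).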

The principal advantage of this architecture lies in the tight integration of a high-performance detector (YOLOv8n) with our adaptive, biologically inspired motion predictor (IMM-KF), achieving a robust balance between tracking accuracy and efficiency in challenging underwater scenarios.

2.3. YOLOv8n-Based Object Detection Module

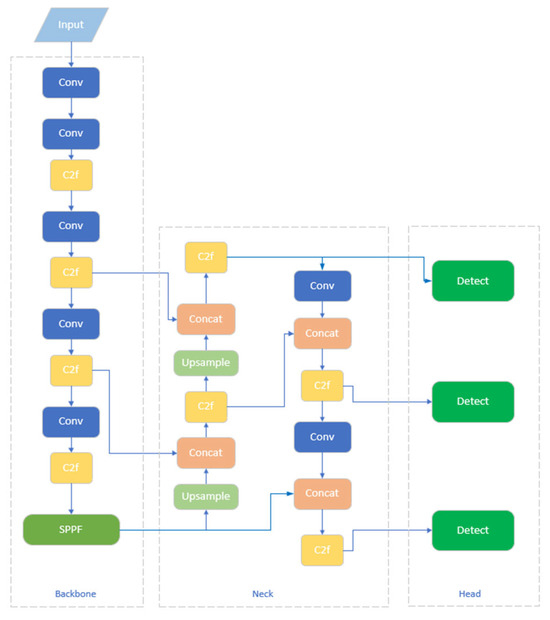

The detection quality is critical for the overall tracking performance. In this work, we employ YOLOv8n as our detection front-end due to its favorable balance between high precision and computational efficiency, which is crucial for real-time applications. Its anchor-free [28] design and enhanced feature fusion architecture demonstrate strong performance in detecting small-scale objects, making it well-suited for challenging underwater imagery [29] where fish often appear blurred and densely packed.

The detections, comprising bounding box coordinates and confidence scores, are directly passed to the subsequent IMM-DeepSORT tracker. We do not modify the internal architecture of YOLOv8n, utilizing it as a high-performance, off-the-shelf component. The overall architecture of the detector, illustrating its key components, is presented in Figure 2.

Figure 2.

The YOLOv8n network architecture, comprising the C2f-based Backbone, a Neck integrating FPN and PAN for multi-scale feature fusion, and a Decoupled Head for classification and regression. This structure enables efficient and accurate detection of small-scale targets, particularly well-suited for underwater fish recognition tasks.
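To illustrate the hand-off between detector and tracker, the sketch below converts corner-format boxes (x1, y1, x2, y2) with confidence scores into center-size measurement vectors and discards detections below the 0.25 confidence threshold reported in Section 2.5.3. The center-size (cx, cy, w, h) layout and the function name `to_measurements` are assumptions for illustration; the measurement layout used by the authors may differ.

```python
import numpy as np

CONF_THRESHOLD = 0.25  # detection confidence threshold reported in the experimental setup


def to_measurements(boxes_xyxy, scores, conf_threshold=CONF_THRESHOLD):
    """Convert (N, 4) corner boxes into (M, 4) center-size measurements z = (cx, cy, w, h)."""
    boxes = np.asarray(boxes_xyxy, dtype=float).reshape(-1, 4)
    scores = np.asarray(scores, dtype=float).reshape(-1)
    keep = scores >= conf_threshold          # drop low-confidence detections
    boxes = boxes[keep]
    w = boxes[:, 2] - boxes[:, 0]
    h = boxes[:, 3] - boxes[:, 1]
    cx = boxes[:, 0] + 0.5 * w               # box center from the top-left corner
    cy = boxes[:, 1] + 0.5 * h
    return np.stack([cx, cy, w, h], axis=1), scores[keep]
```

With the Ultralytics package, corner boxes and scores of a prediction result are available as `boxes.xyxy` and `boxes.conf` (and center-format boxes directly as `boxes.xywh`).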

2.4. Interacting Multiple Model Kalman Filter (IMM-KF)

Traditional single-model Kalman Filters, such as the Constant Velocity (CV) model adopted in DeepSORT, are often inadequate for representing the nonlinear and rapidly switching dynamics of fish motion in underwater environments. To overcome this limitation, this work integrates an Interacting Multiple Model Kalman Filter (IMM-KF) into the DeepSORT framework, enabling adaptive and robust motion estimation [30] under varying behavioral states.

The IMM-KF simultaneously operates multiple candidate motion models and fuses their predictions through a Bayesian interaction mechanism. This design allows the tracker to dynamically adjust to both smooth cruising and abrupt burst swimming behaviors observed in fish schools.

2.4.1. Motivation and Model Selection Basis

The standard DeepSORT tracker employs a single Constant Velocity (CV) model, which proves inadequate for capturing the complex, nonlinear motion patterns of underwater fish. To address this limitation, this study presents a novel extension to the DeepSORT framework by introducing an IMM-KF-based multi-model state estimator, replacing the classical KF module [31]. This adaptation enables the tracking system to better accommodate diverse fish motion behaviors.

The design of our multi-model estimator is grounded in fish ethology, from which we distinguish two primary, behaviorally distinct movement states:

- Cruising: Characterized by low speed and stable directionality. This steady-state motion is well suited to a Constant Velocity (CV) model.

- Burst Swimming: Involves short-term high acceleration and abrupt directional shifts. This dynamic state is more accurately captured by a Constant Acceleration (CA) model.

Consequently, our IMM-KF framework is constructed around these two fundamental models (CV and CA) to closely mirror the observed biological reality.

2.4.2. Implementation of the Proposed IMM-KF

- A. Model Formulation

The core of the Kalman Filter is the state vector, which represents the belief about the target’s motion state. In our IMM-KF, we define two distinct state vectors for the CV and CA models, respectively.

- Constant Velocity (CV) Model: This model assumes the target moves with a nearly constant velocity between frames. Its state vector is defined as:

- Constant Acceleration (CA) Model: This model accounts for motions involving acceleration, such as burst swimming. Its state vector is defined as:

Crucially, these state vectors are neither “trained” from image features nor directly fused with them. Instead, they are recursively estimated and updated by the Kalman filter from the sequence of bounding box observations (the box position together with its width w and height h) provided by the YOLOv8n detector. The fusion occurs at the estimation level [32] through the IMM algorithm’s probabilistic weighting, not at the feature level.
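One common way to build the two models is sketched below under explicit assumptions (not necessarily the authors' exact state layout): both models share a 12-dimensional state holding the position, velocity, and acceleration of each measured quantity (x, y, w, h), the frame interval is dt = 1, and the CV model simply zeroes the acceleration block. Sharing one state dimension lets the IMM mix the two models' estimates directly. The function name `make_models` is hypothetical.

```python
import numpy as np

N_MEAS = 4  # measured quantities: x, y, w, h (assumed layout)


def make_models(dt=1.0):
    """Return (F_cv, F_ca, H) on a shared state [pos(4), vel(4), acc(4)]."""
    # 1-D kinematic blocks acting on (position, velocity, acceleration)
    cv_1d = np.array([[1.0, dt, 0.0],
                      [0.0, 1.0, 0.0],
                      [0.0, 0.0, 0.0]])  # acceleration forced to zero: constant velocity
    ca_1d = np.array([[1.0, dt, 0.5 * dt ** 2],
                      [0.0, 1.0, dt],
                      [0.0, 0.0, 1.0]])  # acceleration propagated: constant acceleration
    eye = np.eye(N_MEAS)
    # Kronecker product applies the same 1-D block to x, y, w and h independently,
    # giving the state order [x, y, w, h, vx, vy, vw, vh, ax, ay, aw, ah]
    F_cv = np.kron(cv_1d, eye)
    F_ca = np.kron(ca_1d, eye)
    # Observation matrix selects the position block (x, y, w, h)
    H = np.kron(np.array([[1.0, 0.0, 0.0]]), eye)
    return F_cv, F_ca, H
```

Zeroing the acceleration inside the CV model is a design choice: an alternative is to keep separate 8- and 12-dimensional states and map between them during mixing.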

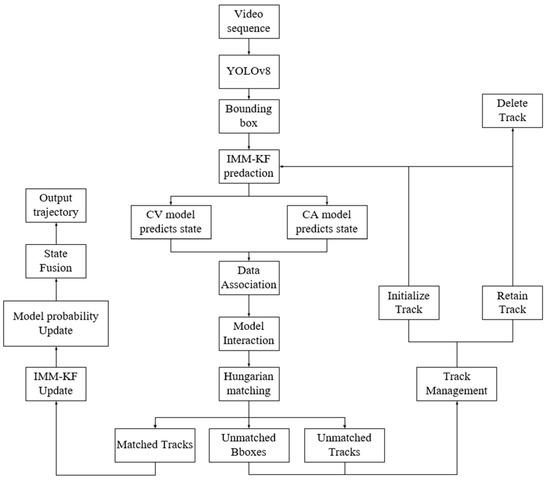

- B. Workflow and Variable Definitions

The IMM-KF operates recursively through a well-defined sequence that integrates detector outputs with adaptive motion estimation. Figure 3 illustrates this complete algorithmic workflow, providing a step-by-step visual guide to the process described below.

Figure 3.

Workflow of the proposed IMM-KF algorithm within the tracking loop. The diagram details the step-by-step, per-frame execution flow, from YOLOv8 detection and data association to the internal recursive steps of the IMM-KF (model interaction, prediction, update, and fusion) and subsequent track management.

To ensure clarity, we first define the key variables used in the subsequent equations:

- : Estimated state vector.

- : Error covariance matrix, representing the uncertainty in the state estimate.

- : State transition matrix, which models how the state evolves from one time step to the next without external input.

- : Process noise covariance, representing the uncertainty in the motion model itself.

- : Measurement (observation) from the detector, i.e., the bounding box.

- : Observation matrix, which maps the state vector into the measurement space.

- : Measurement noise covariance, representing the uncertainty in the detector’s outputs.

- : Kalman gain, which determines the weight given to the new measurement versus the prediction.

For each track, the IMM-KF module recursively executes the following stages:

- Model Interaction/Mixing: Prior to prediction, the state estimates and covariances from the previous time step for both models (j = CV, CA) are mixed. This interaction is governed by a pre-defined Markov transition probability matrix, which models the likelihood of switching between the cruising and burst swimming states.

- Parallel Model-Conditional Prediction: Each model (CV and CA) performs a standard Kalman prediction step using its mixed input state.


- Model-Conditional Update: Upon receiving a new measurement (from the YOLOv8n detector), each model independently updates its prediction.

The Kalman gain is computed to balance the prediction and the new observation, and the state and covariance are then updated accordingly. Here, the Kalman gain, the observation matrix, and the measurement noise covariance are all specific to model j.
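The model-conditional prediction and update steps follow the standard Kalman filter equations. The sketch below writes them in conventional notation (F, Q, H, R, K), which is our assumption rather than the paper's own symbols, and also returns the Gaussian likelihood of the innovation that the IMM uses to reweight the models.

```python
import numpy as np


def kf_predict(x, P, F, Q):
    """Prediction: x' = F x, P' = F P F^T + Q."""
    return F @ x, F @ P @ F.T + Q


def kf_update(x_pred, P_pred, z, H, R):
    """Update with measurement z; returns updated state, covariance, and innovation likelihood."""
    y = z - H @ x_pred                    # innovation (measurement residual)
    S = H @ P_pred @ H.T + R              # innovation covariance
    K = P_pred @ H.T @ np.linalg.inv(S)   # Kalman gain
    x_new = x_pred + K @ y
    P_new = (np.eye(len(x_pred)) - K @ H) @ P_pred
    # Gaussian likelihood N(y; 0, S), later used to update the model probabilities
    d = len(z)
    maha = float(y @ np.linalg.solve(S, y))
    likelihood = np.exp(-0.5 * maha) / np.sqrt((2 * np.pi) ** d * np.linalg.det(S))
    return x_new, P_new, float(likelihood)
```

In an IMM step, each model calls `kf_predict` with its own mixed input and transition matrix, then `kf_update` with the shared detection.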

- C. Model Interaction and Fusion Mechanism

The “Interacting” part of IMM is the key to its adaptability. It consists of two main stages:

- Interaction (Mixing): At the start of each cycle, the estimates from all models are mixed based on their previous probabilities and a pre-defined Markov transition matrix. This provides a mixed initial state for each filter, allowing them to “consider” the other model’s previous estimate.

- Fusion: After the individual update steps, the model likelihood is computed based on how well the model’s prediction matched the actual measurement. This likelihood is then used to update the model’s posterior probability.

Both stages rely on the Markov transition probabilities, which give the probability of switching from one model to another between consecutive frames.
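The interaction (mixing) stage can be written compactly. The sketch below implements the textbook IMM mixing equations: predicted model probabilities c_j = sum_i p_ij mu_i, mixing weights mu_{i|j} = p_ij mu_i / c_j, and mixed initial means and covariances that include a spread-of-means term. Variable names are illustrative, and the sketch assumes all models share one state dimension.

```python
import numpy as np


def imm_mix(mu, trans, means, covs):
    """Standard IMM interaction step.

    mu:    (M,) model probabilities from the previous frame
    trans: (M, M) Markov matrix, trans[i, j] = P(model j now | model i before)
    means: list of M state vectors (shared dimension)
    covs:  list of M covariance matrices
    Returns predicted model probabilities c and the mixed (mean, cov) for each model.
    """
    mu = np.asarray(mu, dtype=float)
    trans = np.asarray(trans, dtype=float)
    c = trans.T @ mu                       # c[j] = sum_i trans[i, j] * mu[i]
    w = trans * mu[:, None] / c[None, :]   # w[i, j] = mixing weight mu_{i|j}
    mixed = []
    for j in range(len(mu)):
        x0 = sum(w[i, j] * means[i] for i in range(len(mu)))
        # Mixed covariance: weighted covariances plus the spread of the means around x0
        P0 = sum(
            w[i, j] * (covs[i] + np.outer(means[i] - x0, means[i] - x0))
            for i in range(len(mu))
        )
        mixed.append((x0, P0))
    return c, mixed
```

Each mixed pair then serves as the starting point of the corresponding model's Kalman prediction.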

The final, fused state estimate and its covariance for the track are computed as the weighted sum of the individual updated model estimates, with the updated model probabilities serving as the weights.

This is the core fusion mechanism. The system automatically trusts and gives more weight to the model (CV or CA) that is currently better explaining the observed fish motion. Compared with the standard DeepSORT, which relies on a single constant velocity assumption, our IMM-KF dynamically fuses the predictions from CV and CA models through Bayesian interaction. This adaptive mechanism allows the tracker to better capture the rapid behavioral transitions typical in underwater fish motion.
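The probability update and final fusion can be sketched under the standard IMM formulation: each model's predicted probability c_j is multiplied by its innovation likelihood and renormalized, and the track estimate is the probability-weighted combination of the model estimates, with a spread-of-means term added to the covariance. The function names and the underflow fallback are illustrative choices, not taken from the paper.

```python
import numpy as np


def imm_update_probs(c, likelihoods):
    """Posterior model probabilities: mu_j proportional to likelihood_j * c_j."""
    c = np.asarray(c, dtype=float)
    unnorm = np.asarray(likelihoods, dtype=float) * c
    total = unnorm.sum()
    if total <= 0.0:
        # All likelihoods underflowed: fall back to the predicted probabilities
        return c / c.sum()
    return unnorm / total


def imm_combine(mu, means, covs):
    """Fused estimate: probability-weighted mean and covariance (with spread of means)."""
    x = sum(m_j * x_j for m_j, x_j in zip(mu, means))
    P = sum(
        m_j * (P_j + np.outer(x_j - x, x_j - x))
        for m_j, x_j, P_j in zip(mu, means, covs)
    )
    return x, P
```

A model whose innovation is small relative to its innovation covariance receives a larger likelihood and therefore a larger weight, which is the behavior described above.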

- D. Complete IMM Algorithmic Flow and Parameter Adaptation Mechanism

The IMM-KF operates through a recursive four-stage procedure that integrates YOLOv8n detections with adaptive motion modeling:

- Input Interaction: Probabilistic mixing of previous state estimates using Markov transition matrix.

- Parallel Prediction: Independent state projection by CV and CA models.

- Detection Update: Refinement of model predictions using YOLOv8n bounding box measurements.

- Adaptive Fusion: Dynamic weighting of model outputs based on performance metrics.

Transition Probability Configuration: The Markov transition matrix was configured based on ethological observations of fish behavior:

This structure reflects the biological reality that cruising behavior (CV) typically persists, while burst swimming (CA) shows moderate persistence with occasional returns to steady motion.
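To make the persistence argument concrete, the sketch below uses hypothetical values (not the authors' configuration) for a two-state Markov matrix ordered (CV, CA). For a state with self-transition probability p, the number of consecutive frames spent in that state is geometric with mean 1/(1 - p), so a larger diagonal entry encodes longer-lasting behavior; the stationary distribution gives the long-run share of frames attributed to each state.

```python
import numpy as np

# Hypothetical transition matrix, rows/cols ordered (CV = cruising, CA = burst swimming).
# Row i gives the probabilities of moving from model i to each model in the next frame.
TRANS = np.array([[0.95, 0.05],
                  [0.20, 0.80]])


def expected_dwell_frames(trans):
    """Mean number of consecutive frames spent in each state: 1 / (1 - p_ii)."""
    return 1.0 / (1.0 - np.diag(trans))


def stationary_distribution(trans):
    """Long-run share of frames in each state (left eigenvector for eigenvalue 1)."""
    vals, vecs = np.linalg.eig(trans.T)
    v = np.real(vecs[:, np.argmin(np.abs(vals - 1.0))])
    return v / v.sum()
```

With these illustrative values, a cruising episode lasts 20 frames on average and a burst 5 frames, and the chain spends about 80% of frames in the cruising state.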

Noise Covariance Tuning:

Process and measurement noise covariances were empirically tuned through systematic experimentation:

- Position noise: Scaled with target height to accommodate size-dependent uncertainty.

- Velocity/acceleration noise: Balanced to prevent overfitting while maintaining responsiveness.

The adaptive noise model ensures consistent performance across varying target sizes and motion patterns.
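One way to realize height-scaled noise is the scheme used in the reference DeepSORT implementation, where the standard deviations of position and velocity noise are fixed fractions of the box height (1/20 and 1/160). The sketch below extends that idea to the assumed 12-dimensional CV/CA state; the acceleration weight `STD_ACC` is a hypothetical value, scaling all four measured quantities by the height is an assumption, and the authors' tuned coefficients may differ.

```python
import numpy as np

STD_POS = 1.0 / 20   # DeepSORT's position weight (fraction of box height)
STD_VEL = 1.0 / 160  # DeepSORT's velocity weight
STD_ACC = 1.0 / 320  # hypothetical acceleration weight (assumption)


def process_noise(h):
    """Diagonal Q for state [pos(4), vel(4), acc(4)], scaled by box height h."""
    std = np.concatenate([np.full(4, STD_POS * h),
                          np.full(4, STD_VEL * h),
                          np.full(4, STD_ACC * h)])
    return np.diag(std ** 2)


def measurement_noise(h):
    """Diagonal R for measurement (x, y, w, h), scaled by box height h."""
    return np.diag(np.full(4, STD_POS * h) ** 2)
```

Because both matrices grow with the box height, a large (near) fish tolerates larger absolute residuals than a small (distant) one, which keeps gating and model likelihoods comparable across target sizes.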

Weight Update Equations:

The model probability update mechanism employs:

- Innovation-based likelihood computation: Models are evaluated by their prediction accuracy against YOLOv8n detections.

- Bayesian probability propagation: Combines current performance with historical behavior patterns.

- Real-time weight adjustment: Automatically emphasizes the model best explaining observed motion.

Parameter Derivation Approach:

All IMM parameters were derived through a combined methodology:

- Biological motivation informed initial parameter ranges based on fish ethology studies.

- Empirical refinement optimized parameters through extensive testing on underwater video sequences.

- Validation ensured parameter robustness across diverse swimming behaviors and environmental conditions.

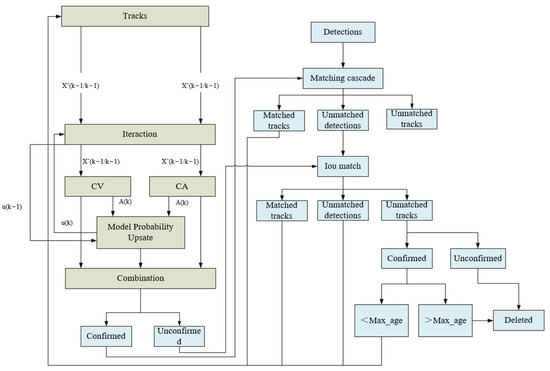

The integration of this IMM-KF workflow into the complete DeepSORT framework is visualized in the system architecture diagram shown in Figure 4. While Figure 3 details the temporal execution sequence of the algorithm, Figure 4 provides a structural overview of the entire tracking system, illustrating how the IMM-KF motion estimation module interfaces with the object detection and data association modules.

Figure 4.

Architecture of the proposed IMM-DeepSORT system. The left side shows the motion estimation module based on IMM-KF, which integrates CV and CA models for robust prediction. The right side includes the object detection and association modules, using YOLOv8n outputs and IoU matching for track management and lifecycle control.

2.4.3. Advantages and Integration

This IMM-based estimation mechanism allows the system to quickly respond to behavioral transitions (e.g., from cruising to burst swimming), substantially improving tracking accuracy and trajectory stability [33]. Coupled with the high-precision detection performance of the YOLOv8n front-end, the proposed tracking algorithm demonstrates superior robustness under complex underwater conditions.

The integration of the biologically inspired IMM-KF module into DeepSORT confers several key advantages:

- Biologically Informed Interpretability: The CV and CA models are explicitly aligned with empirically observed fish behaviors, enhancing the physical relevance of the tracking system.

- Dynamic Adaptability: The probabilistic fusion mechanism allows for seamless and automatic switching between motion hypotheses, improving resilience to abrupt behavioral changes.

- Enhanced Robustness: Under challenging conditions, the IMM-KF suppresses the effect of outliers through model blending, leading to smoother trajectories and reduced identity switches [34].

2.5. Experimental Materials and Setup

2.5.1. Dataset Description

The dataset utilized in this study was sourced from underwater video monitoring systems deployed in the coastal waters of the East China Sea. It comprises real-world fish images captured under natural aquatic conditions. Video frames were manually screened and extracted into static images, followed by YOLO-format annotation of bounding boxes for object detection. A total of 19 typical marine fish species are represented in the dataset, reflecting significant ecological diversity and practical research value.

However, statistical analysis revealed a severe class imbalance across species. For instance, the rare species Stephanolepis cirrhifer is represented by only 12 images, while more common species such as Neopomacentrus violascens have over 10,000 annotated instances, an imbalance ratio of up to 894:1. This extreme disparity in sample distribution poses substantial challenges for training deep learning models, potentially causing gradient bias and skewed decision boundaries that reduce detection accuracy and robustness for rare species.

To address this issue and enhance the generalization capability of the model under complex underwater conditions, we adopted a multi-faceted data augmentation strategy, with special emphasis on expanding underrepresented classes. At the image level, geometric transformations (e.g., rotation, scaling, flipping) were applied to diversify spatial structures. Elastic distortion was introduced to simulate non-rigid body deformations of fish affected by turbulent water flows, enhancing the model’s adaptability to pose variations. Motion blur kernels were applied to mimic the blur produced by swimming movement, and random adjustments to brightness and contrast were used to simulate varying lighting conditions in natural underwater environments.

In terms of color augmentation, RGB channel shifting was implemented to emulate color distortion effects caused by light refraction and absorption in water. To minimize the risk of excessive cropping, a Safe Crop strategy was introduced, ensuring that fish objects remain intact during the augmentation process. These combined strategies resulted in a more diverse and balanced training dataset, significantly improving the model’s ability to recognize rare fish classes under complex underwater backgrounds. Figure 5 illustrates the multi-dimensional augmentation results applied to original sample images, providing a high-quality input foundation for subsequent model training.

Figure 5.

Sample images of underwater fish under various augmentation strategies.
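Two of the transformations above are simple enough to show directly. The sketch below is illustrative only (the augmentation library and parameters used in this study are not reproduced): a horizontal flip, which for YOLO-format labels (class, x_center, y_center, width, height, all normalized to [0, 1]) only requires replacing x_center with 1 - x_center, and an RGB channel shift clipped to the valid 8-bit range. Function names and shift values are hypothetical.

```python
import numpy as np


def hflip_yolo(image, labels):
    """Flip an HxWxC image left-right and update normalized YOLO labels."""
    flipped = image[:, ::-1, :].copy()
    labels = np.array(labels, dtype=float).reshape(-1, 5)
    labels[:, 1] = 1.0 - labels[:, 1]  # mirror the normalized x-center; y, w, h unchanged
    return flipped, labels


def rgb_shift(image, shifts):
    """Add a per-channel offset to a uint8 RGB image, clipping to [0, 255]."""
    out = image.astype(np.int16) + np.asarray(shifts, dtype=np.int16)
    return np.clip(out, 0, 255).astype(np.uint8)
```

Casting to a wider integer type before adding the offsets avoids the silent wrap-around that uint8 arithmetic would otherwise produce.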

To ensure fairness and robustness in training and evaluation, the dataset was partitioned using stratified random sampling into three subsets: training (80%), validation (10%), and testing (10%). The class distribution was preserved across subsets based on their original proportions to avoid underrepresentation, especially of minority classes, in the validation or testing sets. This design facilitates the learning of long-tail distributions and ensures reliable performance evaluation [35]. The validation set was used to tune training parameters, while the test set served for final performance assessment.
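A stratified 80/10/10 split can be sketched in a few lines. Because detection images may contain several species, the sketch assumes (this is not stated in the text) that each image is assigned one stratification label, for example its rarest class, and shuffles and splits each class separately so that even minority classes reach the validation and test sets. Names and the seed are illustrative.

```python
import random
from collections import defaultdict


def stratified_split(image_ids, strata, fractions=(0.8, 0.1, 0.1), seed=0):
    """Split image ids into train/val/test while preserving per-class proportions."""
    by_class = defaultdict(list)
    for img, cls in zip(image_ids, strata):
        by_class[cls].append(img)
    rng = random.Random(seed)
    train, val, test = [], [], []
    for cls in sorted(by_class):          # deterministic class order
        items = by_class[cls]
        rng.shuffle(items)
        n = len(items)
        n_train = round(fractions[0] * n)
        n_val = round(fractions[1] * n)
        train += items[:n_train]
        val += items[n_train:n_train + n_val]
        test += items[n_train + n_val:]   # remainder goes to the test set
    return train, val, test
```

For a class with 12 images, this yields 10 training, 1 validation, and 1 test image.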

2.5.2. Evaluation Metrics

To comprehensively evaluate the performance of the proposed YOLOv8n-based object detector and the enhanced IMM-DeepSORT multi-object tracking algorithm in underwater fish tracking tasks, we designed a series of quantitative experiments and conducted comparative evaluations against mainstream detection and tracking models. The evaluation focuses on three core dimensions—detection accuracy, tracking consistency, and trajectory robustness—ensuring scientific and systematic performance analysis.

In the detection module, we adopt four widely used metrics: Precision, Recall, mAP@0.5 (mean Average Precision at IoU = 0.5), and mAP@0.5:0.95 (mean AP averaged over multiple IoU thresholds). Precision and Recall are defined as:

$$\mathrm{Precision} = \frac{TP}{TP + FP}, \qquad \mathrm{Recall} = \frac{TP}{TP + FN}$$

where TP denotes true positives (correctly detected objects), FP represents false positives (incorrect detections), and FN denotes false negatives (missed detections).

The mean Average Precision (mAP) evaluates overall detection performance across categories and IoU thresholds. mAP@0.5 is the AP averaged over classes with the IoU threshold fixed at 0.5, while mAP@0.5:0.95 further averages AP over IoU thresholds from 0.5 to 0.95 in steps of 0.05. The per-class AP and the mAP are computed as

$$\mathrm{AP}_i = \int_0^1 p_i(r)\,\mathrm{d}r, \qquad \mathrm{mAP} = \frac{1}{N}\sum_{i=1}^{N} \mathrm{AP}_i$$

where $p_i(r)$ denotes the precision-recall curve of class $i$, and $N$ is the number of object categories.
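In practice the integral is evaluated numerically. The sketch below uses all-point interpolation (precision made monotonically non-increasing, then summed over recall steps), as in PASCAL VOC 2010 and later; COCO-style evaluation instead samples 101 recall points, so values can differ slightly. It is an illustration, not the evaluation code used in this study.

```python
import numpy as np


def average_precision(recall, precision):
    """All-point interpolated AP from a PR curve sorted by increasing recall."""
    r = np.concatenate(([0.0], np.asarray(recall, dtype=float), [1.0]))
    p = np.concatenate(([0.0], np.asarray(precision, dtype=float), [0.0]))
    # Precision envelope: make precision non-increasing from right to left
    p = np.maximum.accumulate(p[::-1])[::-1]
    # Sum rectangle areas wherever recall changes
    idx = np.where(r[1:] != r[:-1])[0]
    return float(np.sum((r[idx + 1] - r[idx]) * p[idx + 1]))


def mean_ap(per_class_ap):
    """mAP = average of the per-class AP values over the N categories."""
    return float(np.mean(per_class_ap))
```

For mAP@0.5:0.95, the same computation is repeated at each of the ten IoU thresholds and the results are averaged.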

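For the tracking module, we adopt three standard MOT metrics [36]: MOTA (Multi-Object Tracking Accuracy), MOTP (Multi-Object Tracking Precision), and IDF1 (ID-based F1-score). MOTA measures overall tracking accuracy by accounting for false positives (FP), false negatives (FN), and identity switches (ID Sw.):

$$\mathrm{MOTA} = 1 - \frac{\sum_t \left(\mathrm{FN}_t + \mathrm{FP}_t + \mathrm{IDSW}_t\right)}{\sum_t \mathrm{GT}_t}$$

where $\mathrm{IDSW}_t$ is the number of identity switches and $\mathrm{GT}_t$ the number of ground-truth objects in frame $t$.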

MOTP evaluates the average spatial misalignment between matched prediction-ground truth pairs:

$$\mathrm{MOTP} = \frac{\sum_{t,i} d_t^i}{\sum_t c_t}$$

where $d_t^i$ is the alignment error for object $i$ at time $t$, and $c_t$ is the number of correct matches in frame $t$.

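IDF1 balances identification precision and recall for identity preservation:

$$\mathrm{IDF1} = \frac{2\,\mathrm{IDTP}}{2\,\mathrm{IDTP} + \mathrm{IDFP} + \mathrm{IDFN}}$$

where IDTP, IDFP, and IDFN are the numbers of true positive, false positive, and false negative identity assignments under the optimal one-to-one mapping between predicted and ground-truth trajectories.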

This evaluation framework comprehensively covers model performance in detection accuracy, localization precision, and identity continuity, offering a robust basis for analyzing tracking performance under challenging underwater conditions.
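Once per-frame matching has been carried out (typically by an evaluation toolkit), the three tracking scores reduce to ratios of accumulated counts. The sketch below assumes those counts are already available. In the MOTChallenge convention, the MOTP numerator accumulates the IoU overlap of matched pairs, so higher values are better; the sketch follows that convention as an assumption. The counts in the checks are made up for illustration.

```python
def mota(fn, fp, idsw, num_gt):
    """MOTA = 1 - (FN + FP + IDSW) / GT, with all counts summed over frames."""
    return 1.0 - (fn + fp + idsw) / num_gt


def motp(sum_overlap, num_matches):
    """MOTP as the mean overlap (e.g., IoU) of matched prediction-ground truth pairs."""
    return sum_overlap / num_matches


def idf1(idtp, idfp, idfn):
    """IDF1 = 2 IDTP / (2 IDTP + IDFP + IDFN)."""
    return 2.0 * idtp / (2.0 * idtp + idfp + idfn)
```

Note that MOTA is unbounded below: when the total number of errors exceeds the number of ground-truth objects, it becomes negative.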

2.5.3. Experimental Setup

To guarantee experimental reproducibility and fair performance comparison, all training and evaluation procedures were conducted under a unified hardware and software environment. The detailed configuration is listed in Table 1, including operating system, deep learning frameworks, and GPU specifications.

Table 1.

Computer Configuration.

During training, we used the YOLOv8n detector with 100 training epochs and a batch size of 16. All input images were resized to a uniform resolution of 640 × 640 pixels to ensure consistent input dimensions. Training employed YOLO’s built-in automatic optimizer strategy, which adjusts optimization parameters dynamically [37]. The random seed was fixed at 0 for reproducibility, and close_mosaic was set to 10, which disables mosaic augmentation for the final 10 epochs to stabilize convergence.

For optimization, the Stochastic Gradient Descent (SGD) algorithm was employed with the following hyperparameter settings: Momentum coefficient: 0.937, Initial learning rate: 0.001, Weight decay factor: 0.0005. This configuration strikes a balance between training stability and convergence efficiency, allowing the model to achieve high detection accuracy while maintaining computational efficiency.
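For reference, the settings above map onto the training arguments of the Ultralytics YOLOv8 package as sketched below. This is an illustrative configuration, not the authors' script, and the dataset file `fish.yaml` is a hypothetical name. In Ultralytics, `optimizer="auto"` chooses the optimizer and learning rate automatically and ignores user-supplied `lr0` and `momentum`, so fixing the SGD values listed above requires `optimizer="SGD"`.

```python
# Training arguments corresponding to the reported settings (dataset path is hypothetical)
TRAIN_ARGS = dict(
    data="fish.yaml",     # hypothetical dataset description file
    epochs=100,
    batch=16,
    imgsz=640,
    seed=0,
    close_mosaic=10,      # disable mosaic augmentation for the final 10 epochs
    optimizer="SGD",      # explicit choice so that lr0 and momentum below are honored
    lr0=0.001,
    momentum=0.937,
    weight_decay=0.0005,
)


def train_detector(weights="yolov8n.pt"):
    """Launch training; requires the `ultralytics` package and the dataset on disk."""
    from ultralytics import YOLO  # imported lazily so this module loads without it

    model = YOLO(weights)
    return model.train(**TRAIN_ARGS)
```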

To ensure a fair comparison among different tracking frameworks, all baseline trackers (SORT, OC-SORT, DeepSORT, and ByteTrack) were evaluated using the detection results generated by the same YOLOv8n model. The tracking-related hyperparameters were also kept consistent across all methods, including the detection confidence threshold (0.25), NMS IoU threshold (0.45), appearance feature distance metric, and maximum track age (30). In the ablation study, which aimed to isolate the effect of the motion model, only the motion model components (CV, CA, IMM) were changed, while all other settings remained identical.

This ensures that the observed performance differences arise solely from the motion model design rather than from detector variations or parameter tuning.
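The shared track-management settings can be illustrated with a small lifecycle sketch. It follows the rules of the original DeepSORT implementation: a new track stays tentative until it has accumulated `n_init` hits (3 by default there) and is deleted on its first miss while tentative, or after more than `max_age` frames without a match once confirmed. `max_age = 30` is the value reported above; the class and field names are hypothetical.

```python
from dataclasses import dataclass

TENTATIVE, CONFIRMED, DELETED = "tentative", "confirmed", "deleted"


@dataclass
class TrackState:
    """Hypothetical bookkeeping for one track, mirroring DeepSORT's lifecycle rules."""
    n_init: int = 3      # DeepSORT default number of hits needed to confirm a track
    max_age: int = 30    # maximum frames without a match (value from the experimental setup)
    hits: int = 1        # a track is created from one matched detection
    time_since_update: int = 0
    state: str = TENTATIVE

    def predict(self):
        # Called once per frame before association
        self.time_since_update += 1

    def mark_matched(self):
        self.hits += 1
        self.time_since_update = 0
        if self.state == TENTATIVE and self.hits >= self.n_init:
            self.state = CONFIRMED

    def mark_missed(self):
        # Tentative tracks are dropped on the first miss; confirmed ones after max_age misses
        if self.state == TENTATIVE or self.time_since_update > self.max_age:
            self.state = DELETED
```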

3. Results

3.1. Tracking Performance Comparison

To validate the effectiveness of the proposed framework in underwater multi-object fish detection and tracking, we conducted comparative experiments and ablation studies on both the object detection and tracking components.

We benchmarked YOLOv8n against other YOLO series variants (YOLOv5n, YOLOv6n, YOLOv8s) and a lightweight high-accuracy detector, EfficientDet. As shown in Table 2, YOLOv8n demonstrated superior performance across key metrics including Precision, Recall, mAP@0.5, and AP (small). Notably, it achieved a mAP@0.5 of 0.921 and a mAP@0.5:0.95 of 0.69, representing a 1.6% improvement over YOLOv5n. YOLOv8n also exhibited strong robustness in small-object detection, reaching an AP (small) of 0.67 and outperforming both YOLOv6n and EfficientDet. It achieves this performance with only 3.15 M parameters, roughly 3.5 times fewer than YOLOv8s (10.97 M) and about 20% fewer than EfficientDet (3.95 M), highlighting its advantage for lightweight real-time deployment.

Table 2.

Detection performance comparison across models.

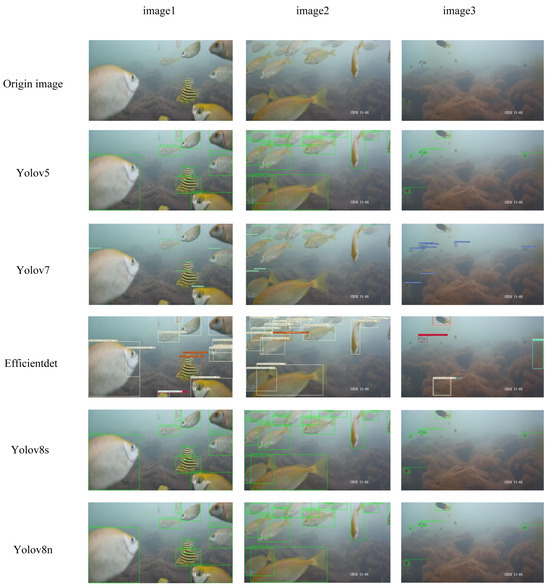

To further compare bounding box quality across detectors in real underwater scenes, representative frames were selected and the detection results of the YOLOv5, YOLOv7, and YOLOv8 variants and of EfficientDet were visualized side by side. Figure 6 presents three frames from different underwater scenes together with the corresponding outputs of each model. YOLOv8n yields more precise bounding boxes, recognizes small objects more reliably, and is more robust to occlusion, indicating greater accuracy and stability under challenging conditions.

Figure 6.

Detection results of different models on three underwater images. YOLOv8n produces clearer, more complete, and more accurate bounding boxes compared with other models, particularly under conditions of occlusion and scale variation.

Using YOLOv8n as the unified detection frontend, we compared IMM-DeepSORT against classical tracking algorithms [38], including DeepSORT, SORT, OC-SORT, and ByteTrack. As shown in Table 3, IMM-DeepSORT outperformed all baselines across most metrics.

Table 3.

Multi-object tracking performance comparison.

Compared with the original DeepSORT, IMM-DeepSORT raised MOTA from 49.0 to 62.2 and MOTP from 71.3 to 72.6. Although IDF1 fluctuated slightly, it remained high (77.9), and the number of ID switches was halved (from 32 to 16), indicating that the IMM-based motion model improves identity continuity during tracking.
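The metrics in Table 3 follow standard definitions: MOTA (CLEAR MOT) penalizes misses (FN), false positives (FP), and identity switches (IDSW) relative to the number of ground-truth boxes, while IDF1 is the F1 score of identity-consistent matches. The sketch below assumes the counts have already been accumulated over a sequence by a matching step not shown here; the counts in the usage lines are hypothetical and are not taken from this study.

```python
def mota(fn: int, fp: int, idsw: int, num_gt: int) -> float:
    """CLEAR MOT accuracy: 1 - (FN + FP + IDSW) / number of ground-truth boxes."""
    if num_gt <= 0:
        raise ValueError("num_gt must be positive")
    return 1.0 - (fn + fp + idsw) / num_gt

def idf1(idtp: int, idfp: int, idfn: int) -> float:
    """Identity F1: 2 * IDTP / (2 * IDTP + IDFP + IDFN)."""
    return 2.0 * idtp / (2.0 * idtp + idfp + idfn)

# Hypothetical counts for one sequence (not from this study), scaled x100 as in Table 3
print(round(100 * mota(fn=300, fp=50, idsw=16, num_gt=1000), 1))
print(round(100 * idf1(idtp=800, idfp=100, idfn=120), 1))
```

Because FP and IDSW both appear in the MOTA numerator, reductions in false positives and identity switches raise MOTA directly, whereas IDF1 rewards keeping the same identity on a target over time.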

3.2. Ablation Study on Motion Models

To validate the contribution of the proposed dual-model mechanism, we conducted an ablation study comparing three configurations: (1) DeepSORT with a Constant Velocity (CV) Kalman Filter as the baseline, (2) DeepSORT with a Constant Acceleration (CA) Kalman Filter only, and (3) our proposed IMM-DeepSORT integrating both CV and CA models via an Interacting Multiple Model Kalman Filter (IMM-KF). The quantitative results are summarized in Table 4.
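To make the two motion hypotheses concrete, the sketch below writes the CV and CA transition matrices for a single image axis with frame interval `dt`. As an assumption for illustration, both models share a [position, velocity, acceleration] state so that an IMM can mix them, and the CV model simply forces acceleration to zero. DeepSORT's own Kalman filter uses an 8-dimensional CV state (box center, aspect ratio, height, and their velocities); the exact state layout used in this study may differ.

```python
import numpy as np

def cv_matrix(dt: float = 1.0) -> np.ndarray:
    """Constant Velocity transition for one axis, embedded in a
    [position, velocity, acceleration] state; acceleration is forced to zero."""
    return np.array([[1.0, dt, 0.0],
                     [0.0, 1.0, 0.0],
                     [0.0, 0.0, 0.0]])

def ca_matrix(dt: float = 1.0) -> np.ndarray:
    """Constant Acceleration transition for one axis."""
    return np.array([[1.0, dt, 0.5 * dt * dt],
                     [0.0, 1.0, dt],
                     [0.0, 0.0, 1.0]])

x = np.array([0.0, 1.0, 2.0])  # position, velocity, acceleration (pixels, per frame)
print(cv_matrix() @ x)  # [1. 1. 0.]
print(ca_matrix() @ x)  # [2. 3. 2.]
```

For a fish that starts bursting (nonzero acceleration), the CV prediction lags behind the true position by about a·dt²/2 per frame plus an accumulating velocity error, which is the mismatch the CA model is meant to absorb.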

Table 4.

Ablation study on motion model impact.

Using CA alone improved MOTP but lowered IDF1, because the CA model over-reacts to burst motions. In contrast, IMM-KF fuses the CV and CA predictions through Bayesian model weighting, yielding a 13.2-point increase in MOTA over the CV baseline and reducing false positives from 103 to 15.
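The Bayesian fusion step can be illustrated with one cycle of the standard IMM algorithm (Blom and Bar-Shalom): mix the previous model-conditioned estimates, run a Kalman filter under each model, update the model probabilities from the innovation likelihoods, and fuse the outputs. The sketch below is a minimal single-axis version under stated assumptions: the noise levels, the switching matrix `Pi`, and the helper names are hypothetical and are not the parameters used in this study. The demo feeds it a noise-free track that cruises for 20 frames and then accelerates, so the CA model's probability should rise once the burst begins.

```python
import numpy as np

def log_gaussian(y: np.ndarray, S: np.ndarray) -> float:
    """log N(y; 0, S): log-likelihood of innovation y under covariance S."""
    _, logdet = np.linalg.slogdet(S)
    return -0.5 * (float(y @ np.linalg.solve(S, y)) + logdet + len(y) * np.log(2 * np.pi))

def imm_step(xs, Ps, mu, Pi, Fs, Qs, H, R, z):
    """One IMM cycle: mixing, model-matched Kalman filtering, probability update, fusion.

    xs, Ps: per-model means and covariances from the previous frame
    mu:     previous model probabilities; Pi[i, j] = P(model j now | model i before)
    Fs, Qs: per-model transition / process-noise matrices; H, R: measurement model
    """
    r = len(xs)
    c = Pi.T @ mu                           # predicted model probabilities
    w = (Pi * mu[:, None]) / c[None, :]     # w[i, j] = P(model i before | model j now)
    new_xs, new_Ps, loglik = [], [], np.zeros(r)
    for j in range(r):
        # Mixed initial condition for filter j (includes the spread of the estimates)
        x0 = sum(w[i, j] * xs[i] for i in range(r))
        P0 = sum(w[i, j] * (Ps[i] + np.outer(xs[i] - x0, xs[i] - x0)) for i in range(r))
        # Standard Kalman predict/update under model j
        xp = Fs[j] @ x0
        Pp = Fs[j] @ P0 @ Fs[j].T + Qs[j]
        y = z - H @ xp                      # innovation
        S = H @ Pp @ H.T + R                # innovation covariance
        K = Pp @ H.T @ np.linalg.inv(S)     # Kalman gain
        new_xs.append(xp + K @ y)
        new_Ps.append((np.eye(len(xp)) - K @ H) @ Pp)
        loglik[j] = log_gaussian(y, S)
    # Bayes rule: posterior model probability is proportional to likelihood * prior
    post = c * np.exp(loglik - loglik.max())
    mu_new = post / post.sum()
    # Fused estimate handed to data association
    x = sum(mu_new[j] * new_xs[j] for j in range(r))
    P = sum(mu_new[j] * (new_Ps[j] + np.outer(new_xs[j] - x, new_xs[j] - x)) for j in range(r))
    return new_xs, new_Ps, mu_new, x, P

# Hypothetical 1D demo: state = [position, velocity, acceleration] along one image axis
F_cv = np.array([[1.0, 1.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 0.0]])  # CV, dt = 1 frame
F_ca = np.array([[1.0, 1.0, 0.5], [0.0, 1.0, 1.0], [0.0, 0.0, 1.0]])  # CA, dt = 1 frame
Q_cv = np.diag([0.01, 0.01, 1e-4])           # hypothetical noise levels
Q_ca = np.diag([0.01, 0.01, 0.5])
H = np.array([[1.0, 0.0, 0.0]])              # only position is observed
R = np.array([[1.0]])
Pi = np.array([[0.95, 0.05], [0.05, 0.95]])  # hypothetical switching probabilities

xs, Ps, mu = [np.array([0.0, 1.0, 0.0])] * 2, [np.eye(3)] * 2, np.array([0.5, 0.5])
p, v, history = 0.0, 1.0, []
for k in range(30):
    a = 0.0 if k < 20 else 3.0               # 20 frames of cruising, then a burst
    p, v = p + v + 0.5 * a, v + a            # noise-free ground truth
    xs, Ps, mu, x, P = imm_step(xs, Ps, mu, Pi, [F_cv, F_ca], [Q_cv, Q_ca], H, R, np.array([p]))
    history.append(mu.copy())

print("P(CA) after cruising:", round(float(history[19][1]), 3))
print("P(CA) after burst:   ", round(float(history[29][1]), 3))
```

Mixing lets a filter that was down-weighted during cruising start the burst from a sensible state, and the fused covariance `P` includes a spread term that grows when the two models disagree, so the prediction handed to data association reflects the extra uncertainty of a maneuver.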

To quantify the computational overhead of the dual-filter IMM structure, we measured runtime in frames per second (FPS). All timing benchmarks were run on the same hardware platform (NVIDIA GeForce RTX A16) to ensure a fair comparison. As presented in Table 4, the baseline DeepSORT with a single CV model runs at 50.5 FPS, whereas IMM-DeepSORT, which maintains and updates two parallel Kalman filters, runs at 54.1 FPS. The IMM variant is therefore not slower; its slight speed advantage is attributed to implementation optimizations rather than to the additional filter. Despite its greater model complexity, the IMM-KF thus does not hinder real-time underwater monitoring.
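Throughput figures such as those in Table 4 depend on what the timed loop includes. A minimal timing harness is sketched below, assuming a hypothetical per-frame callable `step` (for example, detection plus association for one frame); it excludes warm-up iterations and uses a monotonic high-resolution clock. If `step` launches GPU work asynchronously, the device must be synchronized before each clock read for the measurement to be meaningful.

```python
import time
from typing import Any, Callable, Sequence

def measure_fps(step: Callable[[Any], Any], frames: Sequence[Any], warmup: int = 10) -> float:
    """Average throughput of `step` in frames per second, excluding warm-up frames."""
    for frame in frames[:warmup]:
        step(frame)                           # warm-up: lazy initialization, caches
    timed = frames[warmup:]
    if not timed:
        raise ValueError("need more frames than warm-up iterations")
    start = time.perf_counter()               # monotonic, high-resolution clock
    for frame in timed:
        step(frame)
    return len(timed) / (time.perf_counter() - start)

def ms_per_frame(fps: float) -> float:
    """Convert throughput to per-frame latency in milliseconds."""
    return 1000.0 / fps

# Per-frame latency implied by the FPS values reported in Table 4
print(f"{ms_per_frame(50.5):.1f} ms vs {ms_per_frame(54.1):.1f} ms")
```

Expressed as latency, 50.5 FPS and 54.1 FPS correspond to roughly 19.8 ms and 18.5 ms per frame, respectively.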

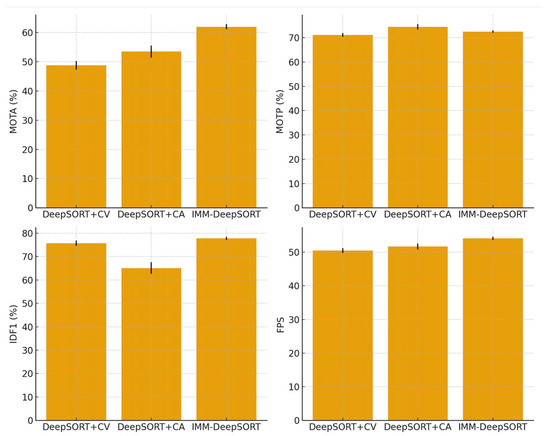

To further assess the robustness and generalization of the proposed IMM-based motion model, each configuration was evaluated on five independent underwater video sequences. Metrics are reported as mean ± standard deviation, and the corresponding variations are illustrated in Figure 7. The IMM-DeepSORT framework achieves not only higher average accuracy (MOTA and IDF1) but also noticeably lower variance than the single-model CV and CA baselines, indicating that the adaptive motion mechanism yields more stable tracking across diverse motion behaviors and scene conditions.
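The mean ± std values can be computed per metric across the five sequences as sketched below. Whether the study uses the sample (n − 1) or population (n) standard deviation is not stated; this sketch assumes the sample form, and the per-sequence values are hypothetical placeholders, not results from this paper.

```python
import statistics
from typing import Sequence, Tuple

def mean_std(values: Sequence[float]) -> Tuple[float, float]:
    """Mean and sample standard deviation (n - 1 denominator) over sequences."""
    return statistics.mean(values), statistics.stdev(values)

# Hypothetical per-sequence MOTA scores for five sequences (placeholders, not results)
m, s = mean_std([60.0, 62.0, 61.0, 64.0, 63.0])
print(f"MOTA = {m:.1f} ± {s:.1f}")  # MOTA = 62.0 ± 1.6
```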

Figure 7.

Statistical performance comparison of DeepSORT + CV, DeepSORT + CA, and the proposed IMM-DeepSORT. Error bars denote standard deviations computed across five independent test sequences.

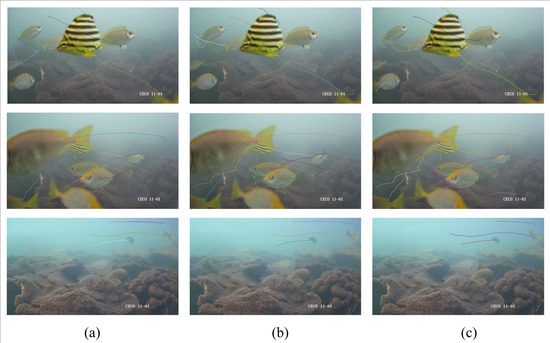

In order to further evaluate the robustness of the proposed IMM-DeepSORT in complex underwater environments, we conduct additional tracking analyses [39] under three representative scenarios: normal swimming, heavy occlusion, and multi-species overlapping. The visual comparison results are shown in Figure 8. As illustrated, the original DeepSORT employing the Constant Velocity (CV) model tends to produce fragmented trajectories and frequent identity switches when the target fish exhibit sudden acceleration or when multiple fish overlap. The version using the Constant Acceleration (CA) model alleviates this issue to some extent but still struggles to maintain identity stability in the presence of long-term occlusion.

Figure 8.

Visual comparison of tracking trajectories under different motion modeling strategies. Each distinct color represents the trajectory of an individual fish. (a) DeepSORT with Constant Velocity (CV) model; (b) DeepSORT with Constant Acceleration (CA) model; (c) IMM-DeepSORT combining both CV and CA models via IMM-KF.

In contrast, the proposed IMM-DeepSORT, which adaptively weights the CV and CA motion models through the IMM-KF framework, produces noticeably more stable and continuous trajectories. This is consistent with the quantitative results in Table 3, where IMM-DeepSORT has the fewest ID switches (16) among all compared methods. The reduction in identity reassignments suggests that the IMM mechanism captures the non-linear and heterogeneous motion patterns common in fish schooling behavior [40]. The improved trajectory continuity further suggests that IMM-DeepSORT maintains reliable target association despite visual ambiguity and inter-species interference. Together, these results support the robustness and practical applicability of the proposed framework for real underwater multi-target tracking.

3.3. Robustness Evaluation Under Severe Occlusion

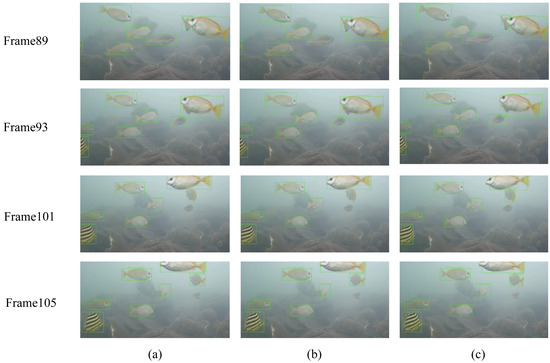

To further validate the robustness of the proposed IMM-KF tracker in challenging underwater environments, we conduct additional experiments on a newly collected video sequence characterized by high turbidity and frequent occlusion events. Three tracking configurations are compared:

(a) YOLOv8n + DeepSORT with the standard (Constant Velocity) Kalman Filter (baseline);

(b) YOLOv8n + DeepSORT employing the Constant Acceleration (CA) motion model;

(c) YOLOv8n + DeepSORT with the proposed IMM-KF.

As shown in Figure 9, four representative frames (Frames 89, 93, 101, and 105) illustrate a complete occlusion-reappearance process involving two closely interacting fish. In Frame 89, all methods correctly detect both targets and assign them unique IDs. By Frame 93, one fish is fully occluded by the other. When the occluded fish reappears in Frame 101, both the baseline and the CA-based tracker fail to recover its original identity, resulting in an ID switch. In contrast, the proposed IMM-KF re-identifies the reappearing fish with its correct ID, illustrating robustness to temporary disappearance. By Frame 105, all methods again track both fish, but only IMM-KF has maintained consistent identities throughout the sequence.
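Whether a track survives an occlusion like this one depends on track bookkeeping as well as on the motion model. A minimal, hypothetical sketch of the age counter follows, assuming the maximum track age of 30 frames used in all experiments: each frame without a matched detection increments `time_since_update`, and the track is deleted once that counter exceeds `max_age`. An occlusion of roughly eight frames (Frame 93 to Frame 101) is well within this limit, so the identity is recoverable in principle; whether it is actually recovered depends on how well the propagated prediction matches the reappearing detection. DeepSORT's real `Track` class additionally distinguishes tentative and confirmed tracks, which this sketch omits.

```python
from dataclasses import dataclass

@dataclass
class TrackAge:
    """Hypothetical, simplified track bookkeeping (not DeepSORT's full Track class)."""
    track_id: int
    max_age: int = 30             # maximum track age used in all experiments
    time_since_update: int = 0
    deleted: bool = False

    def mark_missed(self) -> None:
        # No detection was associated this frame; only the motion prediction is available
        self.time_since_update += 1
        if self.time_since_update > self.max_age:
            self.deleted = True

    def mark_matched(self) -> None:
        # A detection was associated: reset the age counter
        self.time_since_update = 0

track = TrackAge(track_id=2)
for _ in range(8):                # roughly the occlusion gap in Figure 9
    track.mark_missed()
print(track.deleted)              # False: 8 missed frames <= max_age
track.mark_matched()
```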

Figure 9.

Visualization of tracking performance under heavy occlusion conditions. Columns (a)–(c) correspond to YOLOv8n + DeepSORT (KF), YOLOv8n + DeepSORT (CA), and the proposed IMM-KF, respectively.

4. Discussion

4.1. Interpretation of Key Findings

The experimental results presented in Section 3 demonstrate the advantages of the proposed IMM-DeepSORT framework. The ablation study (Table 4) reveals a key insight: while the Constant Acceleration (CA) model alone improves localization precision (MOTP), it degrades identity consistency (IDF1) because it is oversensitive to transient bursts. This underscores the limitation of single-model approaches in dynamic environments. In contrast, the IMM-KF mechanism, which probabilistically fuses the Constant Velocity (CV) and CA models, increases MOTA by 13.2 points and halves the number of identity switches relative to the standard DeepSORT. These results indicate that adaptively fusing multiple motion hypotheses is an effective route to robust tracking, as it mitigates the prediction bias inherent in any single model.

The robustness of this adaptive mechanism is further supported by visual evidence. In Figures 8 and 9, the fragmented trajectories and frequent ID switches of the CV-only and CA-only trackers during occlusion and nonlinear motion are visibly reduced by IMM-DeepSORT. We attribute this to the Bayesian fusion within the IMM-KF, which provides a more accurate prior state estimate when targets reappear after occlusion and thereby reduces data association ambiguity.
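The link between a better prior and easier association can be made concrete with DeepSORT's motion gate, which rejects a track-detection pair when the squared Mahalanobis distance between the detection and the track's predicted measurement exceeds the 95% chi-square quantile for 4 degrees of freedom (9.4877). The sketch below is a minimal illustration; the box values and covariances are hypothetical, with measurements in DeepSORT's (center x, center y, aspect ratio, height) form. It shows that the same 8-pixel displacement is rejected under a tight predicted covariance but accepted under a wider one, for example a covariance that accounts for possible acceleration.

```python
import numpy as np

CHI2_95_4DOF = 9.4877  # 95% chi-square quantile with 4 degrees of freedom

def mahalanobis_sq(z: np.ndarray, z_pred: np.ndarray, S: np.ndarray) -> float:
    """Squared Mahalanobis distance between a detection z and a predicted measurement."""
    d = z - z_pred
    return float(d @ np.linalg.solve(S, d))

def within_gate(z: np.ndarray, z_pred: np.ndarray, S: np.ndarray,
                threshold: float = CHI2_95_4DOF) -> bool:
    """True if the pair is admissible for association (not gated out)."""
    return mahalanobis_sq(z, z_pred, S) <= threshold

# Hypothetical values: (center x, center y, aspect ratio, height)
z_pred = np.array([100.0, 100.0, 0.5, 40.0])
z_det = np.array([108.0, 100.0, 0.5, 40.0])        # fish reappears 8 px from the prediction
S_tight = np.diag([4.0, 4.0, 1e-4, 4.0])           # overconfident prediction
S_wide = np.diag([16.0, 16.0, 1e-4, 16.0])         # uncertainty that allows for a maneuver
print(within_gate(z_det, z_pred, S_tight))  # False: 64 / 4 = 16 > 9.4877
print(within_gate(z_det, z_pred, S_wide))   # True:  64 / 16 = 4 <= 9.4877
```

A prior that is both well centered and honest about its uncertainty therefore keeps the correct detection admissible without letting distant, wrong detections through.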

4.2. Practical Implications and Performance Trade-Offs

Computational efficiency is a paramount consideration for real-world underwater monitoring. The dual-filter IMM structure adds negligible runtime overhead: it runs at 54.1 FPS, compared with 50.5 FPS for the single-model baseline. The gains in tracking accuracy and robustness are therefore achieved without compromising real-time operation. Furthermore, the lower variance of the performance metrics across multiple video sequences (Figure 7) indicates that the proposed method performs more stably and predictably, which is crucial for deployment in diverse and unpredictable underwater environments.

5. Conclusions

In summary, this study developed the IMM-DeepSORT framework, which enhances multi-fish tracking in complex underwater environments by integrating a biologically inspired Interacting Multiple Model (IMM) estimator. Its superior performance, particularly in reducing identity switches and maintaining trajectory continuity, validates this design. The key to the improvement lies in the system’s ability to adapt to complex fish motion by probabilistically fusing the Constant Velocity (CV) and Constant Acceleration (CA) models. This approach addresses the limitation of single-model filters in handling the frequent transitions between “Cruising” and “Burst Swimming” behaviors, a primary source of tracking failure in conventional methods.

Despite its effectiveness, this work has limitations. Its reliance on 2D motion models ignores three-dimensional movement within the water column, which may lead to state estimation errors.

In future work, we plan to conduct cross-domain evaluation and domain generalization studies using data from different sea regions and environmental conditions (e.g., varying lighting, turbidity, and species composition) to further improve the robustness and applicability of the proposed framework.

Author Contributions

Conceptualization, Y.Y.; methodology, Y.Y. and Y.L.; software, Y.Y.; validation, Y.Y.; formal analysis, Y.Y.; investigation, Y.Y.; resources, Y.L. and S.L.; data curation, Y.Y.; writing—original draft preparation, Y.Y.; writing—review and editing, Y.Y., Y.L. and S.L.; funding acquisition, Y.L. and S.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China under Grant Nos. 42176194, 42206196, and U23A20645.

Institutional Review Board Statement

Not applicable.

Data Availability Statement

The datasets presented in this article are not readily available because the data, including raw underwater videos, are part of an ongoing study and will be utilized for future research projects. Requests to access the datasets should be directed to the corresponding author (shuoli@sia.cn).

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Peng, Y.T.; Cao, K.; Cosman, P.C. Generalization of the dark channel prior for single image restoration. IEEE Trans. Image Process. 2018, 27, 2856–2868.

- Geiger, A.; Lenz, P.; Urtasun, R. Are we ready for autonomous driving? The KITTI vision benchmark suite. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; IEEE: New York, NY, USA, 2012; pp. 3354–3361.

- Li, C.Y.; Guo, J.C.; Cong, R.M.; Pang, Y.W.; Wang, B. Underwater image enhancement by dehazing with minimum information loss and histogram distribution prior. IEEE Trans. Image Process. 2016, 25, 5664–5677.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

- Elmezain, M.; Saoud, L.S.; Sultan, A.; Heshmat, M.; Seneviratne, L.; Hussain, I. Advancing underwater vision: A survey of deep learning models for underwater object recognition and tracking. IEEE Access 2025, 13, 17830–17867.

- Kaur, R.; Singh, S. A comprehensive review of object detection with deep learning. Digit. Signal Process. 2023, 132, 103812.

- Wang, Z.; Zheng, L.; Liu, Y.; Li, Y.; Wang, S. Towards real-time multi-object tracking. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; Springer International Publishing: Cham, Switzerland, 2020; pp. 107–122.

- Wang, G.; Song, M.; Hwang, J.N. Recent advances in embedding methods for multi-object tracking: A survey. arXiv 2022, arXiv:2205.10766.

- Aziz, L.; Salam, M.S.B.H.; Sheikh, U.U.; Ayub, S. Exploring deep learning-based architecture, strategies, applications and current trends in generic object detection: A comprehensive review. IEEE Access 2020, 8, 170461–170495.

- Wojke, N.; Bewley, A.; Paulus, D. Simple online and realtime tracking with a deep association metric. In Proceedings of the 2017 IEEE International Conference on Image Processing (ICIP), Beijing, China, 17–20 September 2017; IEEE: New York, NY, USA, 2017; pp. 3645–3649.

- Butail, S.; Paley, D.A. Three-dimensional reconstruction of the fast-start swimming kinematics of densely schooling fish. J. R. Soc. Interface 2012, 9, 77–88.

- François, B. Physical Aspects of Fish Locomotion: An Experimental Study of Intermittent Swimming and Pair Interaction. Ph.D. Thesis, Université Paris Cité, Paris, France, 2021.

- Zhang, W.; Zhou, H.; Sun, S.; Wang, Z.; Shi, J.; Loy, C.C. Robust multi-modality multi-object tracking. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 2365–2374.

- Chen, C.; Tay, C.; Laugier, C.; Mekhnacha, K. Dynamic environment modeling with gridmap: A multiple-object tracking application. In Proceedings of the 2006 9th International Conference on Control, Automation, Robotics and Vision, Singapore, 5–8 December 2006; IEEE: New York, NY, USA, 2006; pp. 1–6.

- ElTobgui, R.M.F. Visual Perception of Underwater Robotic Swarms. Master’s Thesis, Khalifa University of Science, Abu Dhabi, United Arab Emirates, 2024.

- Bochinski, E.; Eiselein, V.; Sikora, T. High-speed tracking-by-detection without using image information. In Proceedings of the 2017 14th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS), Lecce, Italy, 29 August–1 September 2017; IEEE: New York, NY, USA, 2017; pp. 1–6.

- Zhang, Y.; Sun, P.; Jiang, Y.; Yu, D.; Weng, F.; Yuan, Z.; Luo, P.; Liu, W.; Wang, X. ByteTrack: Multi-object tracking by associating every detection box. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; Springer Nature Switzerland: Cham, Switzerland, 2022; pp. 1–21.

- Yu, C.H.; Choi, J.W. Interacting multiple model filter-based distributed target tracking algorithm in underwater wireless sensor networks. Int. J. Control Autom. Syst. 2014, 12, 618–627.

- Umar, M.; Ahmad, Z.; Ullah, S.; Saleem, F.; Siddique, M.F.; Kim, J.M. Advanced Fault Diagnosis in Milling Machines Using Acoustic Emission and Transfer Learning. IEEE Access 2025, 13, 100776–100790.

- Siddique, M.F.; Ullah, S.; Kim, J.M. A Deep Learning Approach for Fault Diagnosis in Centrifugal Pumps through Wavelet Coherent Analysis and S-Transform Scalograms with CNN-KAN. Comput. Mater. Contin. 2025, 84, 3577–3603.

- Li, X.R.; Jilkov, V.P. Survey of maneuvering target tracking. Part V. Multiple-model methods. IEEE Trans. Aerosp. Electron. Syst. 2005, 41, 1255–1321.

- Blom, H.A.P.; Bar-Shalom, Y. The interacting multiple model algorithm for systems with Markovian switching coefficients. IEEE Trans. Autom. Control 1988, 33, 780–783.

- Du, Y.; Zhao, Z.; Song, Y.; Zhao, Y.; Su, F.; Gong, T.; Meng, H. StrongSORT: Make DeepSORT great again. IEEE Trans. Multimed. 2023, 25, 8725–8737.

- Zhang, C.; Liu, L.; Huang, G.; Wen, H.; Zhou, X.; Wang, Y. WebUOT-1M: Advancing deep underwater object tracking with a million-scale benchmark. Adv. Neural Inf. Process. Syst. 2024, 37, 50152–50167.

- Mazor, E.; Averbuch, A.; Bar-Shalom, Y.; Dayan, J. Interacting multiple model methods in target tracking: A survey. IEEE Trans. Aerosp. Electron. Syst. 1998, 34, 103–123.

- Bar-Shalom, Y.; Li, X.R.; Kirubarajan, T. Estimation with Applications to Tracking and Navigation: Theory Algorithms and Software; John Wiley & Sons: Hoboken, NJ, USA, 2001.

- Milan, A.; Leal-Taixé, L.; Reid, I.; Roth, S.; Schindler, K. MOT16: A benchmark for multiobject tracking. arXiv 2016, arXiv:1603.00831.

- Hao, Z.; Qiu, J.; Zhang, H.; Ren, G.; Liu, C. UMOTMA: Underwater multiple object tracking with memory aggregation. Front. Mar. Sci. 2022, 9, 1071618.

- Lin, K.; Guo, Z.; Yang, F.; Huang, J.; Zhang, Y. Kalman filter-based multi-object tracking algorithm by collaborative multi-feature. In Proceedings of the 2017 IEEE 2nd Advanced Information Technology, Electronic and Automation Control Conference (IAEAC), Chongqing, China, 25–26 March 2017; IEEE: New York, NY, USA, 2017; pp. 1239–1244.

- Gwak, J. Multi-object tracking through learning relational appearance features and motion patterns. Comput. Vis. Image Underst. 2017, 162, 103–115.

- Bukey, C.M.; Kulkarni, S.V.; Chavan, R.A. Multi-object tracking using Kalman filter and particle filter. In Proceedings of the 2017 IEEE International Conference on Power, Control, Signals and Instrumentation Engineering (ICPCSI), Chennai, India, 21–22 September 2017; IEEE: New York, NY, USA, 2017; pp. 1688–1692.

- Fan, L.; Wang, Z.; Cail, B.; Tao, C.; Zhang, Z.; Wang, Y.; Li, S.; Huang, F.; Fu, S.; Zhang, F. A survey on multiple object tracking algorithm. In Proceedings of the 2016 IEEE International Conference on Information and Automation (ICIA), Ningbo, China, 1–3 August 2016; IEEE: New York, NY, USA, 2016; pp. 1855–1862.

- Hassan, S.; Mujtaba, G.; Rajput, A.; Fatima, N. Multi-object tracking: A systematic literature review. Multimed. Tools Appl. 2024, 83, 43439–43492.

- Sudderth, E.B. Graphical Models for Visual Object Recognition and Tracking. Ph.D. Thesis, Massachusetts Institute of Technology, Cambridge, MA, USA, 2006.

- Li, W.; Li, F.; Li, Z. CMFTNet: Multiple fish tracking based on counterpoised JointNet. Comput. Electron. Agric. 2022, 198, 107018.

- Ristic, B.; Vo, B.N.; Clark, D.; Vo, B.T. A metric for performance evaluation of multi-target tracking algorithms. IEEE Trans. Signal Process. 2011, 59, 3452–3457.

- Pal, S.K.; Pramanik, A.; Maiti, J.; Mitra, P. Deep learning in multi-object detection and tracking: State of the art. Appl. Intell. 2021, 51, 6400–6429.

- Zhao, Z.Q.; Zheng, P.; Xu, S.; Wu, X. Object detection with deep learning: A review. IEEE Trans. Neural Netw. Learn. Syst. 2019, 30, 3212–3232.

- Dardagan, N.; Brđanin, A.; Džigal, D.; Akagić, A. Multiple object trackers in OpenCV: A benchmark. In Proceedings of the 2021 IEEE 30th International Symposium on Industrial Electronics (ISIE), Kyoto, Japan, 20–23 June 2021; IEEE: New York, NY, USA, 2021; pp. 1–6.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).