An Optimized Framework for Detecting Suspicious Accounts in the Ethereum Blockchain Network

Abstract

1. Introduction

- Hybrid Optimization Framework: We present the first integrated framework that applies PSO for feature selection and GA for hyperparameter tuning across three state-of-the-art classifiers (XGBoost, SVM, Isolation Forest).

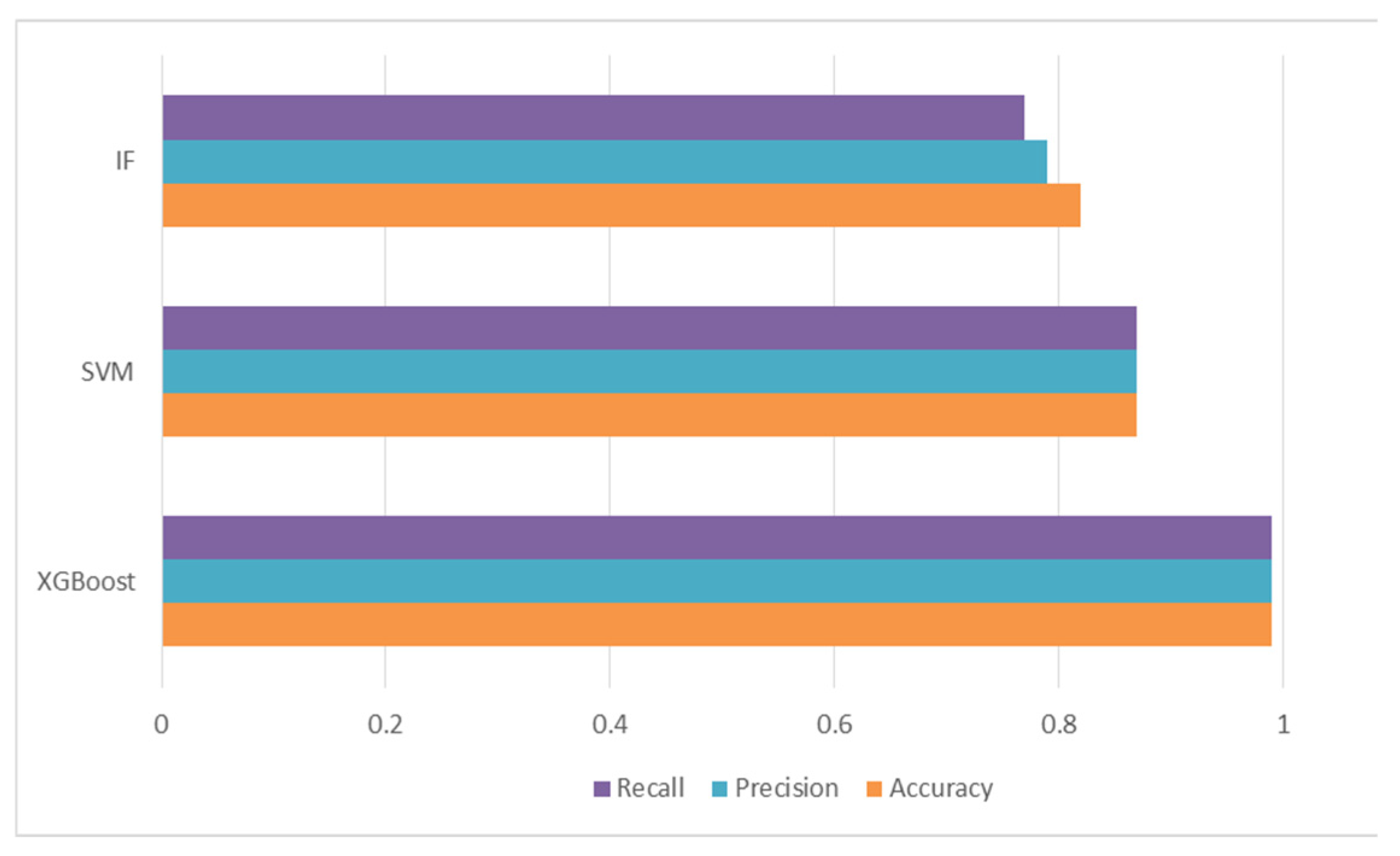

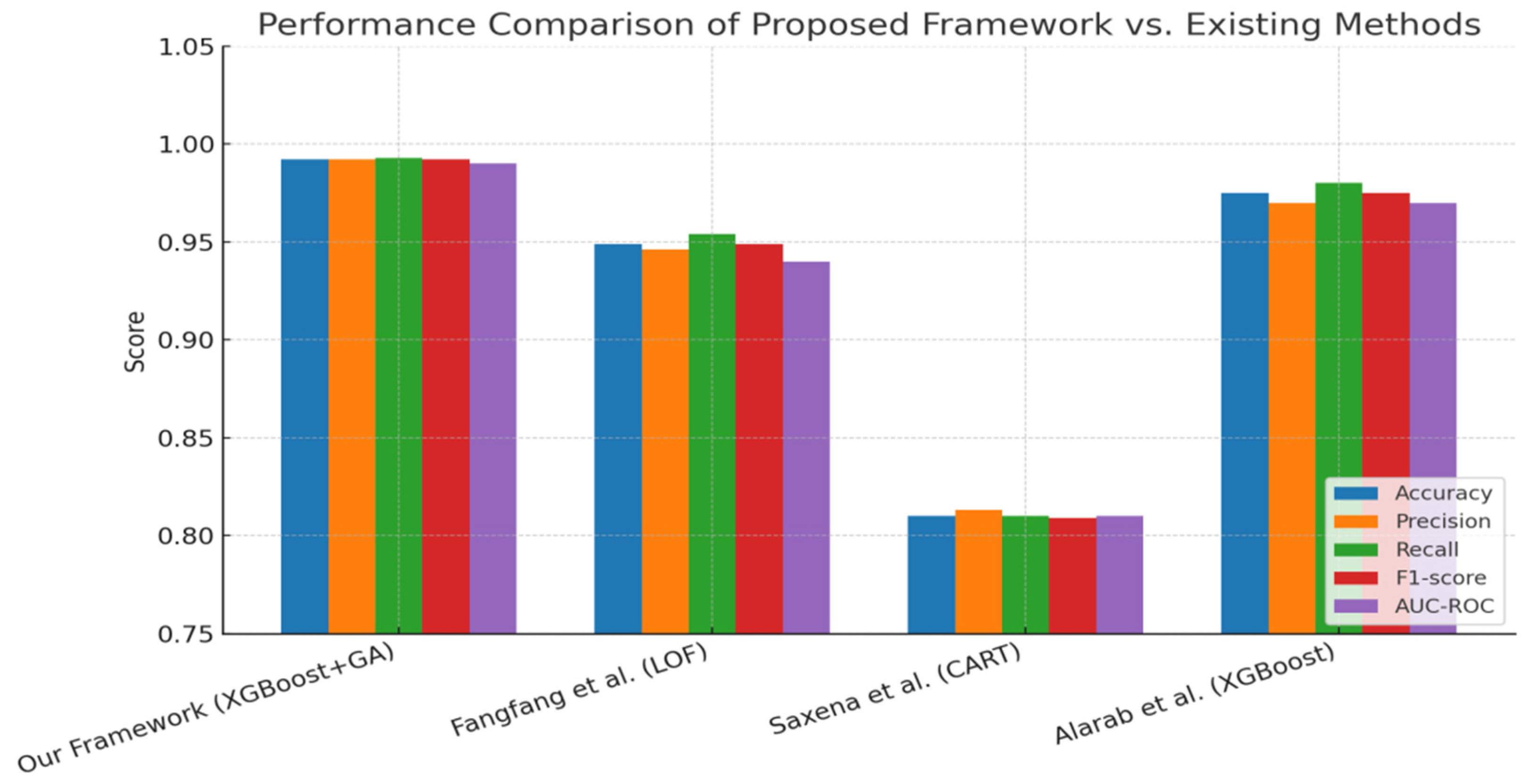

- Performance Gains on Real Data: Experimental results show that the GA-tuned XGBoost outperforms the baseline methods compared in this study, achieving 99.2% accuracy and 99.3% recall, which surpasses reported benchmarks in recent studies.

- Scalability and Robustness: The proposed approach demonstrates strong scalability to large Ethereum datasets and maintains robustness even in the presence of noise and incomplete features.

- Comparative Validation: A thorough baseline comparison with established methods such as Local Outlier Factor (LOF) and Classification and Regression Trees (CART) illustrates clear improvements, reinforcing the originality and practical utility of the proposed framework.

2. Literature Review

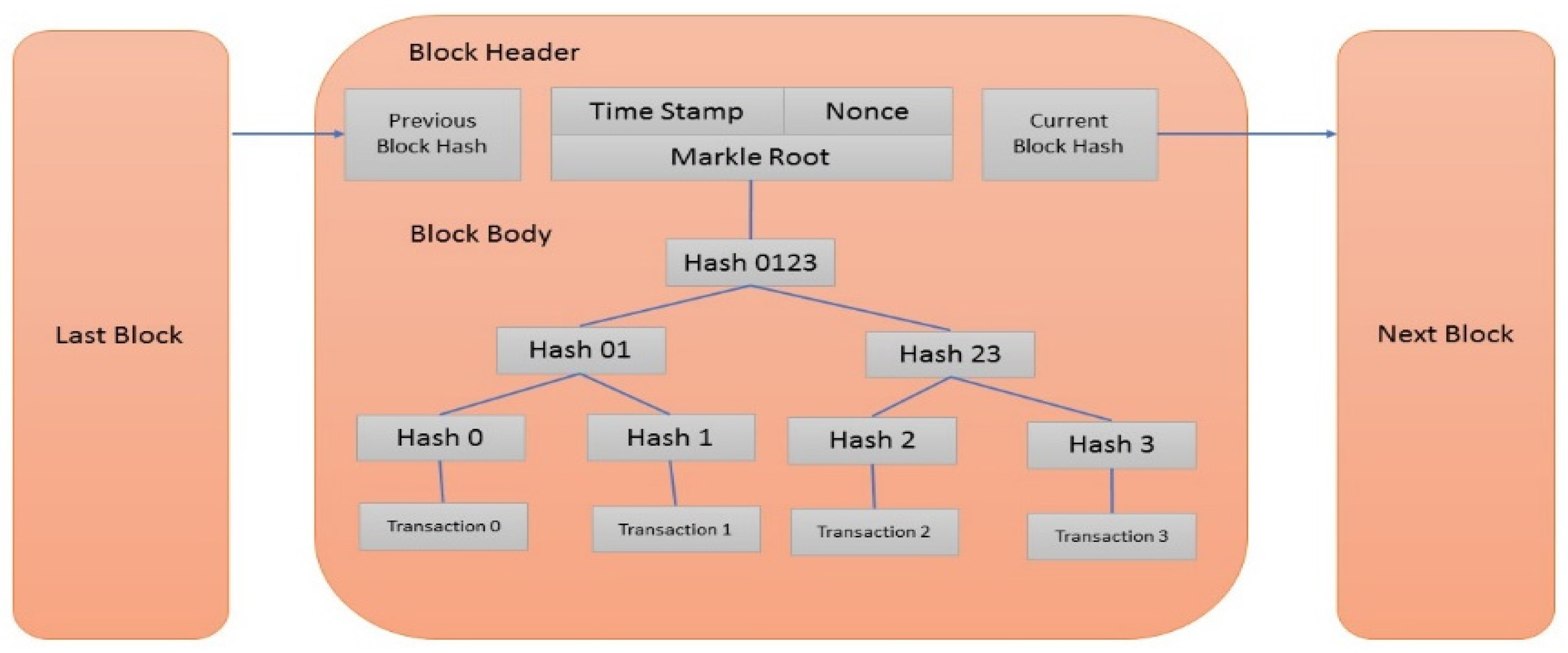

3. The Proposed Ethereum System Model

- Incorporating mined transactions into fresh blocks and uploading these blocks to the Ethereum ledger.

- Notifying other miners about newly mined blocks.

- Accepting newly discovered blocks from other miners and keeping their ledger instances up to date.

Money Laundering Threats in Ethereum Networks

- Money-Mixing attack: In this scheme, a dishonest node leverages a service to blend potentially traceable cryptocurrency funds, masking their origins and rendering them untraceable.

- Pump and Dump attack: A dishonest node artificially inflates the value of an asset or cryptocurrency by creating a false impression of interest.

- Wash-trading attack: A dishonest node rapidly buys and sells a cryptocurrency or asset to create the illusion of market activity.

- Whale Wall Spoofing attack: Similar to wash trading, a large entity (a ‘whale’) places significant buy or sell orders to manipulate the market.

4. The Proposed Detection Model

4.1. Data Collection Stage

4.2. Data Preprocessing Stage

4.2.1. Data Cleaning

4.2.2. Standardization

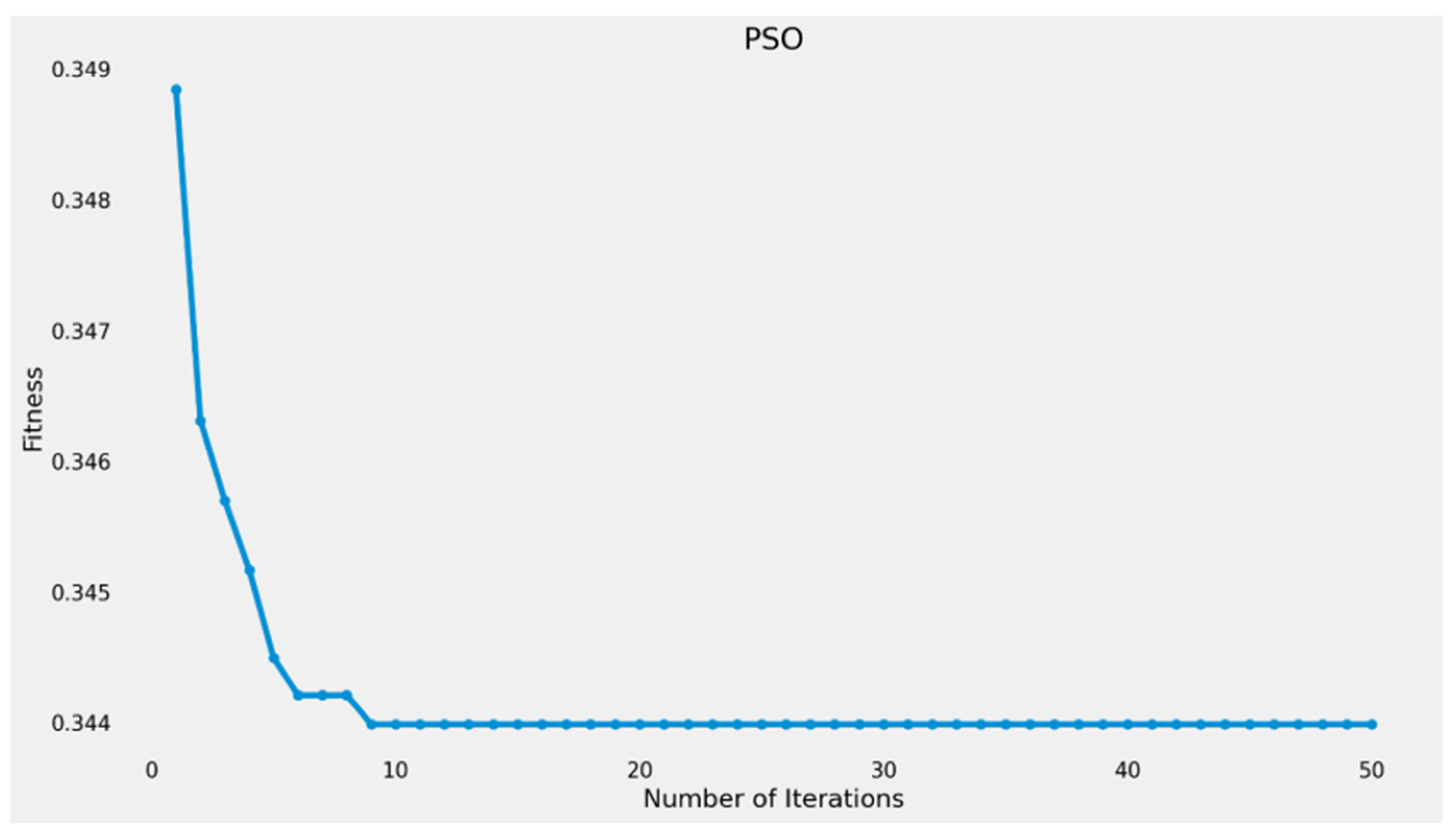

4.2.3. Feature Selection Using Particle Swarm Optimization (PSO)

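| Algorithm 1: PSO algorithm | |
|---|---|
| 1: | Initialization parameters: acceleration coefficients $c_1$ and $c_2$, inertia weight $W$, and velocity bounds $v_{\min}$ and $v_{\max}$ |
| 2: | Population Initialization: Initialize the population with particles having random positions $x_i$ and velocities $v_i$. |
| 3: | For j = 1 to maximum generation |
| 4: | For i = 1 to the number of particles |
| 5: | If fitness($x_i$) > fitness($pbest_i$) then |
| 6: | Update $pbest_i = x_i$ |
| 7: | Update $gbest$ if fitness($pbest_i$) > fitness($gbest$) |
| 8: | End If |
| 9: | For d = 1 to the number of dimensions |
| 10: | Update Velocity: |
| 11: | $v_{i,d} = W v_{i,d} + c_1 r_1 (pbest_{i,d} - x_{i,d}) + c_2 r_2 (gbest_d - x_{i,d})$ |
| 12: | where $r_1, r_2 \sim U(0, 1)$ |
| 13: | Return new Velocity |
| 14: | If $v_{i,d} > v_{\max}$ then $v_{i,d} = v_{\max}$ |
| 15: | Else If $v_{i,d} < v_{\min}$ then $v_{i,d} = v_{\min}$ |
| 16: | End If |
| 17: | Update Positions: $x_{i,d} = x_{i,d} + v_{i,d}$ |
| 18: | If $x_{i,d} > x_{\max}$ then $x_{i,d} = x_{\max}$ |
| 19: | Else If $x_{i,d} < x_{\min}$ then $x_{i,d} = x_{\min}$ |
| 20: | End If |
| 21: | End For |
| 22: | End For |
| 23: | End |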
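The loop structure of Algorithm 1 can be illustrated with a minimal sketch. It assumes a binary variant of PSO, in which each particle is a 0/1 feature mask and a sigmoid transfer function converts velocities into bit probabilities; this variant is a common choice for feature selection, not necessarily the one used in this work. The function name `binary_pso`, the default coefficients, and the toy fitness (hypothetical relevance scores minus a per-feature penalty) are illustrative assumptions.

```python
import math
import random


def binary_pso(fitness, n_features, n_particles=20, n_generations=30,
               w=0.7, c1=1.5, c2=1.5, v_max=4.0, seed=0):
    """Maximize fitness(mask) over 0/1 feature masks (illustrative sketch)."""
    rng = random.Random(seed)
    # Random initial positions (feature masks) and velocities.
    pos = [[rng.randint(0, 1) for _ in range(n_features)]
           for _ in range(n_particles)]
    vel = [[rng.uniform(-1.0, 1.0) for _ in range(n_features)]
           for _ in range(n_particles)]
    pbest = [p[:] for p in pos]
    pbest_fit = [fitness(p) for p in pos]
    g = max(range(n_particles), key=lambda i: pbest_fit[i])
    gbest, gbest_fit = pbest[g][:], pbest_fit[g]

    for _ in range(n_generations):
        for i in range(n_particles):
            for d in range(n_features):
                r1, r2 = rng.random(), rng.random()
                v = (w * vel[i][d]
                     + c1 * r1 * (pbest[i][d] - pos[i][d])
                     + c2 * r2 * (gbest[d] - pos[i][d]))
                # Clamp the velocity to [-v_max, v_max].
                vel[i][d] = max(-v_max, min(v_max, v))
                # Sigmoid transfer: probability that feature d is selected.
                prob = 1.0 / (1.0 + math.exp(-vel[i][d]))
                pos[i][d] = 1 if rng.random() < prob else 0
            fit = fitness(pos[i])
            if fit > pbest_fit[i]:          # update personal best
                pbest[i], pbest_fit[i] = pos[i][:], fit
                if fit > gbest_fit:         # update global best
                    gbest, gbest_fit = pos[i][:], fit
    return gbest, gbest_fit


# Hypothetical per-feature relevance scores (not taken from the dataset).
RELEVANCE = [0.9, 0.1, 0.8, 0.05, 0.7, 0.02]


def toy_fitness(mask):
    # Reward relevant features, penalize the size of the subset.
    return sum(r * m for r, m in zip(RELEVANCE, mask)) - 0.3 * sum(mask)


best_mask, best_fit = binary_pso(toy_fitness, n_features=len(RELEVANCE))
print(best_mask, round(best_fit, 3))
```

In practice the toy fitness would be replaced by a cross-validated classifier score computed on the columns selected by the mask.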

4.2.4. Data Balancing

4.2.5. Data Splitting

4.3. Classification Stage

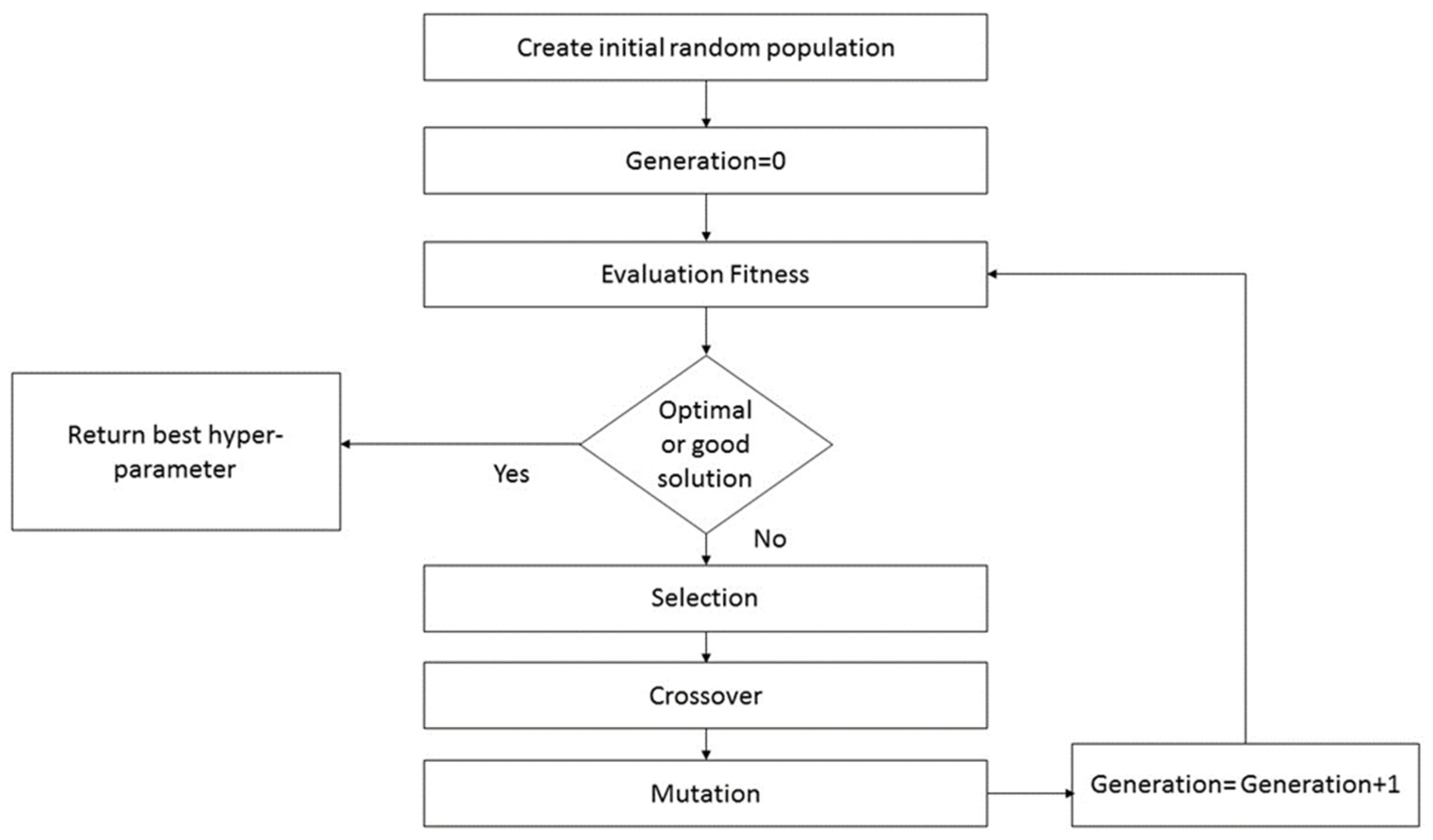

| Algorithm 2: Genetic algorithm for hyperparameter tuning | |
|---|---|
| 1: | Def genetic_algorithm(hyperparameter_bounds, number_of_generations, number_of_parents, offspring_size, mutation_rate) |
| 2: | population = initialize_population(number_of_parents, hyperparameter_bounds) |
| 3: | Def fitness_function() |
| 4: | While current_generation < J (maximum number of generations) |
| 5: | fitness_scores = calculate_fitness(population) |
| 6: | parents = selection(population, fitness_scores, number_of_parents) |
| 7: | offspring = crossover(parents, offspring_size) |
| 8: | population = parents + mutation(offspring, mutation_rate, hyperparameter_bounds) |
| 9: | best_hyperparameters = max(population, key = fitness_function) |
| 10: | End While |
| 11: | Return best_hyperparameters |
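Algorithm 2 can be sketched in a few lines of plain Python. The sketch below assumes real-valued hyperparameters, truncation selection, uniform crossover, and resampling mutation; these operator choices, the bounds, and the toy fitness function are illustrative assumptions rather than the configuration used in the experiments.

```python
import random


def genetic_algorithm(fitness, bounds, n_generations=20, n_parents=10,
                      offspring_size=20, mutation_rate=0.2, seed=0):
    """Maximize fitness(individual) within per-parameter bounds (sketch)."""
    rng = random.Random(seed)
    names = list(bounds)

    def random_individual():
        return {k: rng.uniform(*bounds[k]) for k in names}

    population = [random_individual()
                  for _ in range(n_parents + offspring_size)]
    for _ in range(n_generations):
        # Selection: keep the fittest individuals as parents.
        parents = sorted(population, key=fitness, reverse=True)[:n_parents]
        offspring = []
        for _ in range(offspring_size):
            a, b = rng.sample(parents, 2)
            # Uniform crossover: each gene comes from one of the two parents.
            child = {k: (a[k] if rng.random() < 0.5 else b[k]) for k in names}
            # Mutation: resample a gene uniformly inside its bounds.
            for k in names:
                if rng.random() < mutation_rate:
                    child[k] = rng.uniform(*bounds[k])
            offspring.append(child)
        # Parents survive unchanged, so the best score never decreases.
        population = parents + offspring
    return max(population, key=fitness)


# Hypothetical search space and objective with a known optimum.
BOUNDS = {"learning_rate": (0.01, 1.0), "max_depth": (2.0, 10.0)}


def toy_fitness(ind):
    return -((ind["learning_rate"] - 0.3) ** 2
             + ((ind["max_depth"] - 5.0) ** 2) / 100.0)


best = genetic_algorithm(toy_fitness, BOUNDS)
print({k: round(v, 2) for k, v in best.items()})
```

When tuning a real classifier, `toy_fitness` would be replaced by a validation score of the model trained with the candidate hyperparameters, and integer-valued parameters such as the tree depth would be rounded before use.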

| Algorithm 3: XGBoost algorithm | |
|---|---|
| 1: | Initialize parameters: learning rate (η), number of trees (n_trees), maximum depth of trees (max_depth), and regularization parameters (λ, γ). |
| 2: | Initialize the model predictions $\hat{y}_i^{(0)}$ to a constant value, typically the mean of the target values. |
| 3: | For each tree t in range (1, n_trees + 1) |
| 4: | Calculate the gradient: $g_i = \partial L(y_i, \hat{y}_i^{(t-1)}) / \partial \hat{y}_i^{(t-1)}$ |
| 5: | Calculate the hessian: $h_i = \partial^2 L(y_i, \hat{y}_i^{(t-1)}) / \partial (\hat{y}_i^{(t-1)})^2$ |
| 6: | Construct a new decision tree $f_t$ (gradient, hessian) |
| 7: | Apply the regularization parameters (λ, γ) to control the complexity of the tree. |
| 8: | Return new_tree. |
| 9: | Update the model predictions: $\hat{y}_i^{(t)} = \hat{y}_i^{(t-1)} + \eta f_t(x_i)$ |
| 10: | Return new_model. |
| 11: | End For |
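The gradient, hessian, and update steps of Algorithm 3 can be made concrete with a small worked example. The sketch assumes the logistic loss (so $g_i = p_i - y_i$ and $h_i = p_i(1 - p_i)$) and replaces the tree by a single leaf whose weight is the regularized optimum $-G/(H + \lambda)$; both simplifications are assumptions made for illustration, and the helper names are hypothetical.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def grad_hess(y_true, raw_pred):
    """First and second derivatives of the logistic loss w.r.t. the raw score."""
    p = sigmoid(raw_pred)
    return p - y_true, p * (1.0 - p)


def leaf_weight(grads, hessians, reg_lambda):
    """Optimal weight of one leaf: -G / (H + lambda)."""
    return -sum(grads) / (sum(hessians) + reg_lambda)


def boosting_round(ys, raw_preds, eta, reg_lambda):
    """One boosting round with a single-leaf 'tree' (no splits)."""
    pairs = [grad_hess(y, f) for y, f in zip(ys, raw_preds)]
    w = leaf_weight([g for g, _ in pairs], [h for _, h in pairs], reg_lambda)
    # Shrink the new tree's output by the learning rate before adding it.
    return [f + eta * w for f in raw_preds]


labels = [1, 1, 1, 0]
scores = [0.0, 0.0, 0.0, 0.0]   # constant initial prediction
scores = boosting_round(labels, scores, eta=0.3, reg_lambda=1.0)
print([round(s, 2) for s in scores])  # [0.15, 0.15, 0.15, 0.15]
```

With all raw scores at zero, every $p_i$ is 0.5, so $G = -1$ and $H = 1$; the leaf weight is $1/(1 + 1) = 0.5$, and the learning rate of 0.3 moves each score to 0.15.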

| Algorithm 4: Support vector machine algorithm | |
|---|---|
| 1: | Initialize parameters: C, γ, max_iter. |
| 2: | Initialize parameters: Weight (w), Bias (b) → small random values. |
| 3: | For iteration k = 1 to max_iter |
| 4: | For each data point ($x_i$, $y_i$) |
| | margin = $y_i (w \cdot x_i + b)$ |
| 5: | If margin ≥ 1 Then |
| | $w_{new} = w - \gamma w$ |
| 6: | End If |
| | If margin < 1 |
| 7: | $w_{new} = w - \gamma (w - C\, y_i x_i)$ |
| 8: | End If |
| 9: | $b = b + \gamma\, C\, y_i$ |
| 10: | End For |
| 11: | End For |
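The update rule in Algorithm 4 corresponds to subgradient descent on the regularized hinge loss of a linear SVM. The sketch below follows that reading under stated assumptions: labels are in {-1, +1}, the weights start at zero rather than at small random values, the step size is called `lr` to avoid confusion with the kernel parameter, and the bias is updated only for margin-violating points. The toy data are hypothetical.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def train_linear_svm(data, C=1.0, lr=0.01, max_iter=100):
    """data: list of (features, label) pairs with label in {-1, +1}."""
    w = [0.0] * len(data[0][0])
    b = 0.0
    for _ in range(max_iter):
        for x, y in data:
            margin = y * (dot(w, x) + b)
            if margin >= 1:
                # Correct side with enough margin: only shrink the weights.
                w = [wi - lr * wi for wi in w]
            else:
                # Margin violated: shrink and move toward the example.
                w = [wi - lr * (wi - C * y * xi) for wi, xi in zip(w, x)]
                b += lr * C * y
    return w, b


def predict(w, b, x):
    return 1 if dot(w, x) + b >= 0 else -1


# Hypothetical linearly separable toy data.
toy = [([2.0, 2.0], 1), ([3.0, 3.0], 1),
       ([-2.0, -2.0], -1), ([-3.0, -1.0], -1)]
w, b = train_linear_svm(toy)
print([predict(w, b, x) for x, _ in toy])
```

This linear sketch does not cover kernel SVMs; a kernelized model, such as one using the radial basis function kernel listed among the tuned parameters, requires a dual formulation.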

| Algorithm 5: Isolation forest algorithm | |
|---|---|
| 1: | Initialize parameters: n_estimators, max_samples, contamination, max_depth, threshold (θ) |
| 2: | Initialize an empty list to store all isolation trees. |
| 3: | For t = 1 to n_estimators |
| 4: | Randomly select max_samples data points from the dataset without replacement. |
| 5: | BuildTree(data, depth) |
| 6: | If depth ≥ max_depth |
| 7: | Return a leaf node with the current depth |
| 8: | Else |
| 9: | Randomly select a feature f from the data features |
| 10: | Randomly select a split value s within the range of feature f for the current data points. |
| 11: | Partition the data into two subsets: |
| 12: | Left subset = {x : $x_f$ < s} |
| 13: | Right subset = {x : $x_f$ ≥ s} |
| 14: | Left child = BuildTree(Left subset, depth + 1) |
| 15: | Right child = BuildTree(Right subset, depth + 1) |
| 16: | Return a node with the selected feature f, split s, left child, and right child. |
| 17: | End If |
| 18: | Add the root of the built isolation tree to the list of trees. |
| 19: | End For |
| 20: | Calculate path length (data point x) |
| 21: | path_length = 0 |
| 22: | For t = 1 to n_estimators |
| 23: | Increment path_length by one at each node that x passes through |
| 24: | If x reaches a leaf node, record the final path length and stop |
| 25: | End For |
| 26: | Return path_length |
| 27: | Calculate the expected path length for each data point x |
| 28: | $E(h(x)) = \frac{1}{T} \sum_{t=1}^{T} h_t(x)$, where T = n_estimators |
| 29: | Return expected path length |
| 30: | Define an anomaly score function s(x) for x |
| | $s(x) = 2^{-E(h(x)) / c(n)}$, where $c(n) = 2H(n-1) - 2(n-1)/n$, $H(i) \approx \ln(i) + 0.5772$, and n = max_samples |
| 31: | Classify each data point x based on its anomaly score s(x): |
| 32: | If s(x) > θ: classify x as an anomaly. |
| 33: | Else: classify x as normal. |
| 34: | End If |
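The scoring stage of Algorithm 5 can be illustrated in isolation from tree construction. The sketch below assumes the standard Isolation Forest definition, in which the mean path length is normalized by $c(n)$, the average path length of an unsuccessful search in a binary search tree built on n points. The path lengths passed in are hypothetical values, not measurements from the dataset.

```python
import math

EULER_GAMMA = 0.5772156649


def average_path_length(n):
    """c(n): normalizing constant for a subsample of n points."""
    if n <= 1:
        return 0.0
    if n == 2:
        return 1.0
    harmonic = math.log(n - 1) + EULER_GAMMA   # approximation of H(n - 1)
    return 2.0 * harmonic - 2.0 * (n - 1) / n


def anomaly_score(path_lengths, n):
    """s(x) = 2 ** (-E[h(x)] / c(n)); scores near 1 indicate anomalies."""
    expected = sum(path_lengths) / len(path_lengths)
    return 2.0 ** (-expected / average_path_length(n))


n = 256  # subsample size per tree (max_samples)
short_paths = [2, 3, 2]     # isolated quickly in every tree
long_paths = [12, 11, 13]   # needs many splits to isolate
print(anomaly_score(short_paths, n) > anomaly_score(long_paths, n))  # True
```

A point whose mean path length equals $c(n)$ receives a score of exactly 0.5; shorter paths push the score toward 1 and longer paths toward 0, which is what the threshold θ in the algorithm separates.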

| Algorithm 6: Proposed detection model | |

| 1: | Load the dataset |

| 2: | Call the PSO algorithm on the whole dataset |

| 3: | Return the best feature subset |

| 4: | Call XGBoost: |

| 5: | Apply GA for Hyperparameter tuning |

| 6: | Train the XGBoost model using the optimal hyperparameters obtained from GA. |

| 7: | Return accuracy rate and confusion matrix |

| 8: | Call SVM: |

| 9: | Apply GA for Hyperparameter tuning |

| 10: | Train the SVM model using the optimal hyperparameters obtained from GA. |

| 11: | Return accuracy rate and confusion matrix |

| 12: | Call Isolation Forest: |

| 13: | Apply GA for Hyperparameter tuning |

| 14: | Train the Isolation Forest model using the optimal hyperparameters obtained from GA. |

| 15: | Return accuracy rate and confusion matrix |

| 16: | End |

5. Results and Discussion

6. Conclusions and Future Work

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A

| Ref. | Year | Contribution | Dataset Name | Class Balance | Data Type | Label | Evaluation Metrics Used | Limitation |
|---|---|---|---|---|---|---|---|---|
| [9] | 2024 | Proposed XBlockFlow framework for detecting crypto-asset money laundering. | | Benign = 95%, Suspicious = 5% | Structured | Accounts | Precision = 90%, Recall = 96.2% | This framework is not suitable for all cryptocurrency Ethereum datasets. |
| [10] | 2024 | Proposed framework for the account risk rating and detection of illicit transactions and accounts using graph-based fraud rating. | | Benign = 69%, Suspicious = 31% | Structured | Accounts | Trustworthiness = 99%, Reliability for abnormal = 86.8%, Reliability for benign = 71.8% | This model needs to improve preprocessing and enhance results. |
| [11] | 2024 | Proposed DenseFlow framework to detect and trace money laundering accounts using graph-based anomaly detection. | | Benign = 95%, Suspicious = 5% | Structured | Accounts | Precision = 99%, Precision = 55.7%, Precision = 60.4%, Precision = 69.9% | This framework is not suitable for all cryptocurrency Ethereum datasets. This framework needs to improve preprocessing. |
| [12] | 2024 | Proposed anomaly detection for suspicious transactions and accounts using a combination of Local Outlier Factor and cluster algorithms. | | Benign = 92%, Suspicious = 8% | Structured | Accounts and transactions | Accuracy = 95%, Recall = 63.16%, Precision = 2.40% | The preprocessing steps are not clear. |
| [13] | 2023 | Proposed detection model for money laundering using heuristic algorithms. | | Benign = 79%, Suspicious = 21% | Structured | Accounts | Accuracy = 43.6% | The proposed system needs to increase the number of accounts. Additionally, it needs to enhance the accuracy and efficiency of money laundering detection. |
| [14] | 2023 | Proposed model using supervised learning, including linear, nonlinear, and ensemble models, for classifying and detecting malicious and non-malicious transactions. The model used linear regression (LR), classification and regression trees (CART), and random forest (RF). | | Benign = 95%, Suspicious = 5% | Structured | Accounts and transactions | Accuracy: LR = 60%, CART = 60.9%, RF = 56.14% | This model needs to improve preprocessing and enhance results. |
| [18] | 2023 | Proposed anomaly detection system to detect money laundering using Long Short-Term Memory (LSTM) and Graph Convolutional Network (GCN). | | Benign = 95%, Suspicious = 5% | Structured | Transactions | Precision = 85.42%, Recall = 71.2%, F1 = 77% | The dataset is unclear regarding the number of transactions and accounts. |
| [15] | 2022 | Proposed model for detecting illicit transactions or accounts using XGBoost and Random Forest, applying various resampling techniques such as SMOTE, K-means SMOTE, ENN, and OSCCD. | | Benign = 95%, Suspicious = 5% | Structured | Accounts and transactions | Bitcoin dataset (Random Forest): Accuracy = 99.42%, F1-score = 93.93%, AUC-score = 91.90%. Ethereum dataset (XGBoost): Accuracy = 99.38%, F1-score = 98.34%, AUC-score = 99.70% | The dataset is unclear regarding the number of transactions and accounts. |
| [19] | 2021 | Proposed anti-money laundering system using shallow neural networks and decision trees. Shallow neural networks (SNN) used Scaled Conjugate Gradient Backpropagation, and decision trees (DT) used Bayesian Gradient Optimization to optimize performance. | Number of transactions = 200,000. | Benign = 95%, Suspicious = 5% | Structured | Transactions | SNN = 89.9%, DT = 93.4% | This system classifies transactions only; it does not determine the suspicious accounts. The system can classify transactions as unknown. |
| [16] | 2021 | Proposed anomaly detection system for suspicious transactions and accounts using GPU-accelerated machine learning methods: Support Vector Machine (SVM), Random Forest (RF), and Logistic Regression (LR). | Ethereum Database and Bitcoin Database. Used 100,000 benign accounts and 988 suspicious accounts. | Benign = 99%, Suspicious = 1% | Structured | Accounts and transactions | Ethereum dataset accuracy: SVM = 82.6%, RF = 83.4%, LR = 81.8%. Bitcoin dataset accuracy: SVM = 98.7%, RF = 93%, LR = 89.7% | |
| [17] | 2020 | A proposed system for detecting suspicious accounts using the XGBoost classifier. The model identified the top three features with the most considerable impact on the final model output as ‘Time difference between first and last (Minutes),’ ‘Total Ether balance,’ and ‘Minimum value received.’ | Ethereum Database. Number of malicious accounts = 2179. Number of benign accounts = 2502. | Benign = 55%, Suspicious = 45% | Structured | Accounts | Accuracy = 96.3% | The proposed system needs to increase the number of accounts. |

| Feature | Description |

|---|---|

| Index | The index number of a row. |

| Address | The address of the Ethereum account. |

| Flag | Whether the account is labeled Suspicious or Benign. |

| Avg Min Between Sent Transaction | Average time (in minutes) between sent transactions for the account. |

| Avg Min Between Received Transactions. | Average time (in minutes) between received transactions for the account. |

| Time Difference Between First and Last (Mins) | Time difference (in minutes) between the first and last transaction |

| Sent Transaction. | Total number of benign transactions sent. |

| Received Transaction. | Total number of benign transactions received. |

| Number of Created Contracts | Total number of created contract transactions |

| Unique Received From Addresses | Total number of unique addresses from which the account received transactions. |

| Unique Sent To Addresses | Total number of unique addresses to which the account sent transactions |

| Min Value Received | Minimum value in Ether ever received |

| Max Value Received | Maximum value in Ether ever received |

| Avg Value Received | The average value in Ether ever received |

| Min Value Sent | Minimum value of Ether ever sent |

| Max Value Sent | Maximum value of Ether ever sent |

| Avg Value Sent | The average value of Ether ever sent from the account |

| Min Value Sent to Contract | Minimum value of Ether sent to a contract |

| Max Value Sent to Contract | Maximum value of Ether sent to a contract |

| Avg Value Sent to Contract | The average value of Ether sent to contracts |

| Total Transactions (including contract creation) | Total number of transactions, including those to create contracts. |

| Total Ether Sent | Total Ether sent from the account address |

| Total Ether Received | Total Ether received for the account address |

| Total Ether Sent Contracts | Total Ether sent to contract addresses |

| Total Ether Balance | Total Ether balance following enacted transactions |

| Total ERC20 Transactions | Total number of ERC20 token transfer transactions. |

| Total ERC20 Received (in Ether) | Total ERC20 token received transactions in Ether |

| Total ERC20 Sent (in Ether) | The total value of the ERC20 token sent in Ether |

| Total ERC20 Sent Contract (in Ether) | Total ERC20 token transfer to other contracts in Ether |

| Unique ERC20 Sent Address | Number of unique addresses that received ERC20 token transactions. |

| Unique ERC20 Received Address | Number of ERC20 token transactions received from Unique addresses |

| Unique ERC20 Received Contract Address | Number of ERC20 token transactions received from Unique contract addresses |

| Avg Time Between Sent Transaction (ERC20) | Average time (in minutes) between ERC20 token sent transactions |

| Avg Time Between Received Transaction (ERC20) | Average time (in minutes) between ERC20 token received transactions |

| Avg Time Between Contract Transaction (ERC20) | Average time (in minutes) between ERC20 token transactions involving contracts |

| Min Value Received (ERC20) | Minimum value (in Ether) received from ERC20 token transactions for the account. |

| Max Value Received (ERC20) | Maximum value (in Ether) received from ERC20 token transactions for the account. |

| Avg Value Received (ERC20) | Average value (in Ether) received from ERC20 token transactions for the account. |

| Minimum Value Sent (ERC20) | The minimum value (in Ether) sent from ERC20 token transactions for the account. |

| Maximum Value Sent (ERC20) | The maximum value (in Ether) sent from ERC20 token transactions for the account. |

| Average Value Sent (ERC20) | Average value (in Ether) sent from ERC20 token transactions for the account. |

| Min Value Sent Contract (ERC20) | The minimum value (in Ether) sent from ERC20 token transactions for the contract. |

| Max Value Sent Contract(ERC20) | The maximum value (in Ether) sent from ERC20 token transactions for the contract. |

| Avg Value Sent Contract (ERC20) | Average value (in Ether) sent from ERC20 token transactions for the contract. |

| Unique Sent Token Name (ERC20) | Number of Unique ERC20 tokens transferred |

| Unique Received Token Name (ERC20) | Number of Unique ERC20 tokens received |

| Most Sent Token Type (ERC20) | Most sent tokens for the account via an ERC20 transaction |

| Most Received Token Type (ERC20) | Most received tokens for the account via ERC20 transactions |

References

- Kumar, S.; Lim, W.M.; Sivarajah, U.; Kaur, J. Artificial intelligence and blockchain integration in business: Trends from a bibliometric-content analysis. Inf. Syst. Front. 2023, 25, 871–896.

- Duy, P.T.; Hien, D.T.T.; Hien, D.H.; Pham, V.H. A survey on opportunities and challenges of Blockchain technology adoption for revolutionary innovation. In Proceedings of the 9th International Symposium on Information and Communication Technology, Da Nang City, Viet Nam, 6–7 December 2018.

- Global Blockchain Market Overview 2024–2028. PR Newswire. 2024. Available online: https://www.reportlinker.com/p04226790/?utm_source=PRN (accessed on 29 June 2024).

- Samanta, S.; Mohanta, B.K.; Pati, S.P.; Jena, D. A framework to build user profile on cryptocurrency data for detection of money laundering activities. In Proceedings of the 2019 International Conference on Information Technology (ICIT), Saratov, Russia, 7–8 February 2019; pp. 425–429.

- Lorenz, J.; Silva, M.I.; Aparício, D.; Ascensão, J.T.; Bizarro, P. Machine learning methods to detect money laundering in the bitcoin blockchain in the presence of label scarcity. In Proceedings of the First ACM International Conference on AI in Finance, New York, NY, USA, 15–16 October 2020; pp. 1–8.

- Calafos, M.W.; Dimitoglou, G. Cyber laundering: Money laundering from fiat money to cryptocurrency. In Principles and Practice of Blockchains; Springer: Cham, Switzerland, 2022; pp. 271–300.

- Sayadi, S.; Rejeb, S.B.; Choukair, Z. Anomaly detection model over blockchain electronic transactions. In Proceedings of the 2019 15th International Wireless Communications & Mobile Computing Conference (IWCMC), Tangier, Morocco, 24–28 June 2019; pp. 895–900.

- Ashfaq, T.; Khalid, R.; Yahaya, A.S.; Aslam, S.; Azar, A.T.; Alsafari, S.; Hameed, I.A. A machine learning and blockchain-based efficient fraud detection mechanism. Sensors 2022, 22, 7162.

- Wu, J.; Lin, D.; Fu, Q.; Yang, S.; Chen, T.; Zheng, Z.; Song, B. Towards understanding asset flows in crypto money laundering through the lenses of Ethereum heists. IEEE Trans. Inf. Forensics Secur. 2023, 19, 1994–2009.

- Fu, Q.; Lin, D.; Wu, J.; Zheng, Z. A general framework for the account risk rating on Ethereum: Toward safer blockchain technology. IEEE Trans. Comput. Soc. Syst. 2024, 11, 1865–1875.

- Lin, D.; Wu, J.; Yu, Y.; Fu, Q.; Zheng, Z.; Yang, C. DenseFlow: Spotting cryptocurrency money laundering in Ethereum transaction graphs. In Proceedings of the ACM on Web Conference, Singapore, 13–17 May 2024; pp. 4429–4438.

- Zhou, F.; Chen, Y.; Zhu, C.; Jiang, L.; Liao, X.; Zhong, Z.; Chen, X.; Chen, Y.; Zhao, Y. Visual analysis of money laundering in cryptocurrency exchange. IEEE Trans. Comput. Soc. Syst. 2024, 11, 731–745.

- Lv, W.; Liu, J.; Zhou, L. Detection of money laundering address over the Ethereum blockchain. In Proceedings of the 2023 5th International Conference on Frontiers Technology of Information and Computer (ICFTIC), Qingdao, China, 17–19 November 2023; pp. 866–869.

- Saxena, R.; Arora, D.; Nagar, V. Classifying transactional addresses using supervised learning approaches over Ethereum blockchain. Procedia Comput. Sci. 2023, 218, 2018–2025.

- Alarab, I.; Prakoonwit, S. Effect of data resampling on feature importance in imbalanced blockchain data: Comparison studies of resampling techniques. Data Sci. Manag. 2022, 5, 66–76.

- Elmougy, Y.; Manzi, O. Anomaly detection on Bitcoin and Ethereum networks using GPU-accelerated machine learning methods. In Proceedings of the 2021 31st International Conference on Computer Theory and Applications (ICCTA), Alexandria, Egypt, 11–13 December 2021; pp. 166–171.

- Farrugia, S.; Ellul, J.; Azzopardi, G. Detection of illicit accounts over the Ethereum blockchain. Expert Syst. Appl. 2020, 150, 113318.

- Yang, G.; Liu, X.; Li, B. Anti-money laundering supervision by intelligent algorithm. Comput. Secur. 2023, 132, 103344.

- Badawi, A.A.; Al-Haija, Q.A. Detection of money laundering in bitcoin transactions. In Proceedings of the 4th Smart Cities Symposium, Online, 21–23 November 2021; pp. 458–464.

- Arigela, S.S.D.; Voola, P. Blockchain open source tools: Ethereum and Hyperledger Fabric. In Proceedings of the 2023 International Conference on Artificial Intelligence and Knowledge Discovery in Concurrent Engineering (ICECONF), Chennai, India, 5–7 January 2023; pp. 1–8.

- Sarathchandra, T.; Jayawikrama, D. A decentralized social network architecture. In Proceedings of the 2021 International Research Conference on Smart Computing and Systems Engineering (SCSE), Colombo, Sri Lanka, 16 September 2021; pp. 251–257.

- Maleh, Y.; Shojafar, M.; Alazab, M.; Romdhani, I. Blockchain for Cybersecurity and Privacy: Architectures, Challenges, and Applications; CRC Press: Boca Raton, FL, USA, 2020.

- Wen, B.; Wang, Y.; Ding, Y.; Zheng, H.; Qin, B.; Yang, C. Security and privacy protection technologies in securing blockchain applications. Inf. Sci. 2023, 645, 119322.

- Sanka, A.I.; Irfan, M.; Huang, I.; Cheung, R.C. A survey of breakthrough in blockchain technology: Adoptions, applications, challenges and future research. Comput. Commun. 2021, 169, 179–201.

- Raj, P.; Saini, K.; Surianarayanan, C. Edge/Fog Computing Paradigm: The Concept, Platforms and Applications; Academic Press: Cambridge, MA, USA; Elsevier Inc.: Amsterdam, The Netherlands, 2022.

- Aggarwal, S.; Kumar, N. Architecture of blockchain. In The Blockchain Technology for Secure and Smart Applications Across Industry Verticals; Advances in Computers; Academic Press: Cambridge, MA, USA, 2021; Volume 121, pp. 171–192.

- Mohanta, B.K.; Jena, D.; Panda, S.S.; Sobhanayak, S. Blockchain technology: A survey on applications and security privacy challenges. Internet Things 2019, 8, 100107.

- Reyna, A.; Martín, C.; Chen, J.; Soler, E.; Díaz, M. On blockchain and its integration with IoT: Challenges and opportunities. Future Gener. Comput. Syst. 2018, 88, 173–190.

- Chithanuru, V.; Ramaiah, M. An anomaly detection on blockchain infrastructure using artificial intelligence techniques: Challenges and future directions—A review. Concurr. Comput. Pract. Exp. 2023, 35, e7724.

- Huynh, T.T.; Nguyen, T.D.; Tan, H. A survey on security and privacy issues of blockchain technology. In Proceedings of the 2019 International Conference on System Science and Engineering (ICSSE), Dong Hoi, Vietnam, 20–21 July 2019; pp. 362–367.

- Joshi, A.P.; Han, M.; Wang, Y. A survey on security and privacy issues of blockchain technology. Math. Found. Comput. 2018, 1, 121–147.

- Singh, S.; Hosen, A.S.M.S.; Yoon, B. Blockchain security attacks, challenges, and solutions for the future distributed IoT network. IEEE Access 2021, 9, 13938–13959.

- Homoliak, I.; Venugopalan, S.; Hum, Q.; Szalachowski, P. A security reference architecture for blockchains. In Proceedings of the 2019 IEEE International Conference on Blockchain (Blockchain), Atlanta, GA, USA, 14–17 July 2019; pp. 390–397.

- Jain, V.K.; Tripathi, M. Multi-objective approach for detecting vulnerabilities in Ethereum smart contracts. In Proceedings of the 2023 International Conference on Emerging Trends in Networks and Computer Communications (ETNCC), Windhoek, Namibia, 16–18 August 2023; pp. 1–6.

- Chen, T.; Zhu, Y.; Li, Z.; Chen, J.; Li, X.; Luo, X.; Lin, X.; Zhang, X. Understanding Ethereum via graph analysis. ACM Trans. Internet Technol. (TOIT) 2020, 20, 1–32.

- Etherscan. Available online: https://etherscan.io (accessed on 20 February 2024).

- Cabello-Solorzano, K.; de Araujo, I.O.; Peña, M.; Correia, L.; Tallón-Ballesteros, A.J. The impact of data normalization on the accuracy of machine learning algorithms: A comparative analysis. In Proceedings of the International Conference on Soft Computing Models in Industrial and Environmental Applications, Salamanca, Spain, 5–7 September 2023; pp. 344–353.

- Kennedy, J.; Eberhart, R. Particle swarm optimization. In Proceedings of the ICNN’95-International Conference on Neural Networks, Perth, WA, Australia, 27 November–1 December 1995; Volume 4, pp. 1942–1948.

- Yang, X.-S. Nature-Inspired Optimization Algorithms; Academic Press: Cambridge, MA, USA, 2020.

- Chawla, N.V.; Bowyer, K.W.; Hall, L.O.; Kegelmeyer, W.P. SMOTE: Synthetic minority over-sampling technique. J. Artif. Intell. Res. 2002, 16, 321–357.

- Nguyen, Q.M.; Le, N.K.; Nguyen, L.M. Scalable and secure federated xgboost. In Proceedings of the 2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Rhodes Island, Greece, 4–10 June 2023; pp. 1–5.

- Liu, F.T.; Ting, K.M.; Zhou, Z.H. Isolation forest. In Proceedings of the 2008 8th IEEE International Conference on Data Mining, Pisa, Italy, 15–19 December 2008; pp. 413–422.

- Shanthi, D.L.; Chethan, N. Genetic algorithm-based hyper-parameter tuning to improve the performance of machine learning models. SN Comput. Sci. 2022, 4, 119.

- Alibrahim, A.; Ludwig, S.A. Hyperparameter optimization: Comparing genetic algorithm against grid search and Bayesian optimization. In Proceedings of the IEEE Congress on Evolutionary Computation, Kraków, Poland, 28 June–1 July 2021; pp. 1551–1559.

- Gen, M.; Lin, L. Genetic algorithms and their applications. In Springer Handbook of Engineering Statistics; Springer: London, UK, 2023; pp. 635–674.

- Li, H. Support vector machine. In Machine Learning Methods; Springer Nature: Singapore, 2023; pp. 127–177.

- Susmaga, R. Confusion matrix visualization. In Intelligent Information Processing and Web Mining, Proceedings of the International IIS: IIPWM ‘04 Conference, Zakopane, Poland, 17–20 May 2004; Springer: Berlin/Heidelberg, Germany, 2004; pp. 107–116.

| Algorithm | Parameter | Description |
|---|---|---|
| XGBoost | Learning rate (η) | The step size shrinkage used in updates to prevent overfitting. |
| | n_estimators | The number of boosting rounds. |
| | max_depth | The maximum depth of a tree, used to control overfitting. |
| | min_child_weight | The minimum sum of weights of all observations required in a child. |
| | reg_alpha | The L1 regularization term on weights; used in high-dimensionality cases to speed up the algorithm. |
| | colsample_bytree | The subsample ratio of columns when constructing each tree. |
| | reg_lambda (λ) | The L2 regularization term on weights. |
| | Gamma (γ) | The minimum loss reduction required to make a split, dependent on the loss function. |
| SVM | C | Controls the trade-off between achieving low training error and low testing error. |
| | Kernel parameters | Parameters specific to the chosen kernel function, such as gamma for the radial basis function kernel. |
| IF | n_estimators | The number of trees. |
| | max_samples | The number of samples drawn to train each tree. |
| | Contamination | The proportion of outliers in the dataset. |

| Feature | Description |

|---|---|

| Avg Min Between Received Transactions | Average time (in minutes) between received transactions for the account. |

| Time Difference Between First and Last (Mins) | Time difference (in minutes) between the first and last transaction |

| Sent Transaction | Total number of benign transactions sent. |

| Received Transaction | Total number of benign transactions received. |

| Unique Received From Addresses | Total number of unique addresses from which the account received transactions. |

| Unique Sent To Addresses | Total number of unique addresses to which the account sent transactions. |

| Avg Value Sent | The average value of Ether ever sent from the account |

| Total Transactions (including contract creation) | Total number of transactions, including those to create contracts. |

| Total ERC20 Transactions | Total number of ERC20 token transfer transactions |

| Total ERC20 sent (in Ether) | The total value of the ERC20 token sent in Ether |

| Unique ERC20 Sent address | Number of unique addresses that received ERC20 token transactions. |

| Minimum Value Sent (ERC20) | The minimum value (in Ether) sent from ERC20 token transactions for the account. |

| Maximum Value Sent (ERC20) | The maximum value (in Ether) sent from ERC20 token transactions for the account. |

| Average Value Sent (ERC20) | Average value (in Ether) sent from ERC20 token transactions for the account. |

| Parameters | Description |

|---|---|

| Gen_no | The generation number. |

| N_evals | The number of individuals evaluated in that generation. |

| Avg | The average fitness value of the population in that generation. |

| Std | The standard deviation of the fitness values indicates the variability within the population. |

| Min | The minimum fitness value in the population. |

| Max | The maximum fitness value in the population. |

| Gen_no | N_evals | Avg | Std | Min | Max |

|---|---|---|---|---|---|

| 0 | 50 | 0.878 | 0.013 | 0.853 | 0.8997 |

| 1 | 33 | 0.890 | 0.005 | 0.874 | 0.9002 |

| 2 | 30 | 0.894 | 0.004 | 0.887 | 0.9004 |

| 3 | 32 | 0.897 | 0.003 | 0.889 | 0.9024 |

| 4 | 32 | 0.899 | 0.0002 | 0.893 | 0.9035 |

| 5 | 29 | 0.901 | 0.0019 | 0.894 | 0.9063 |

| 6 | 23 | 0.902 | 0.0027 | 0.982 | 0.9098 |

| 7 | 24 | 0.905 | 0.0024 | 0.901 | 0.9098 |

| 8 | 20 | 0.906 | 0.0019 | 0.902 | 0.9104 |

| 9 | 34 | 0.907 | 0.0012 | 0.905 | 0.9104 |

| 10 | 28 | 0.908 | 0.001 | 0.906 | 0.9106 |

| 11 | 21 | 0.909 | 0.0009 | 0.908 | 0.9123 |

| 12 | 31 | 0.910 | 0.0012 | 0.9066 | 0.9152 |

| 13 | 38 | 0.911 | 0.1524 | 0.9087 | 0.9161 |

| 14 | 28 | 0.912 | 0.0018 | 0.9088 | 0.9179 |

| 15 | 27 | 0.914 | 0.0018 | 0.9087 | 0.9178 |

| 16 | 25 | 0.915 | 0.0014 | 0.9113 | 0.9174 |

| 17 | 25 | 0.915 | 0.0009 | 0.9126 | 0.9178 |

| 18 | 33 | 0.916 | 0.0007 | 0.9121 | 0.9183 |

| 19 | 22 | 0.917 | 0.0007 | 0.9162 | 0.9183 |

| 20 | 31 | 0.918 | 0.0005 | 0.9162 | 0.9183 |

| Hyperparameters | N_estimators | Gamma | Min_child_weight | Colsample_bytree | Max_depth | Reg_lambda | Learning Rate |

|---|---|---|---|---|---|---|---|

| Default | 100 | 0 | 1 | 1 | 6 | 1 | 0.3 |

| Tuning with GA | 79 | 0.28 | 1.94 | 0.58 | 5 | 0.77 | 0.55 |

| Hyperparameters | C | Gamma |

|---|---|---|

| Default | 0.1 | 0.1 |

| Tuning with GA | 10.92 | 13.262 |

| Hyperparameters | Contamination | Max_samples | N_estimators |

|---|---|---|---|

| Default | 0.1 | ‘Auto’ = 256 | 100 |

| Tuning with GA | 0.3 | 1000 | 89 |

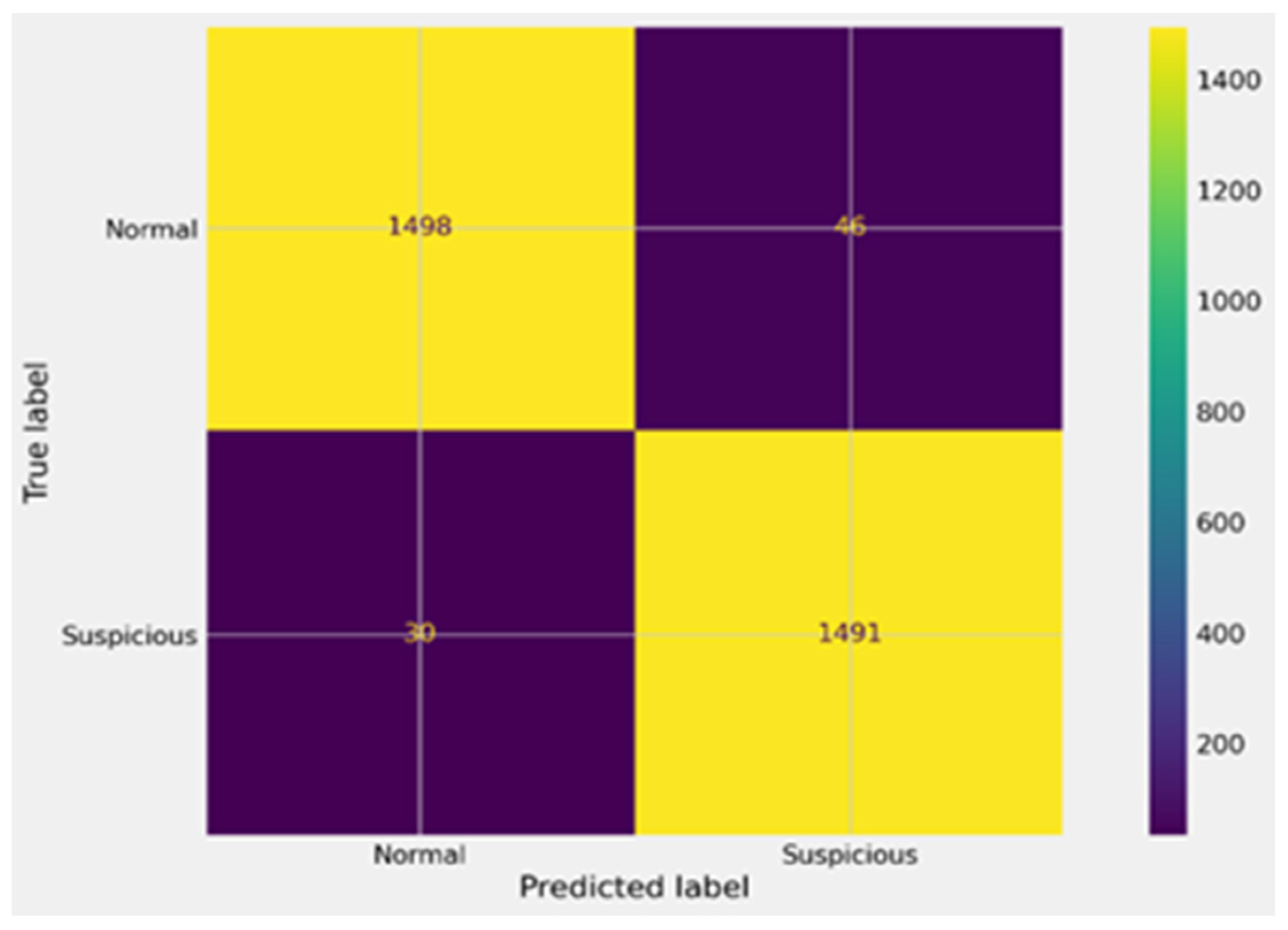

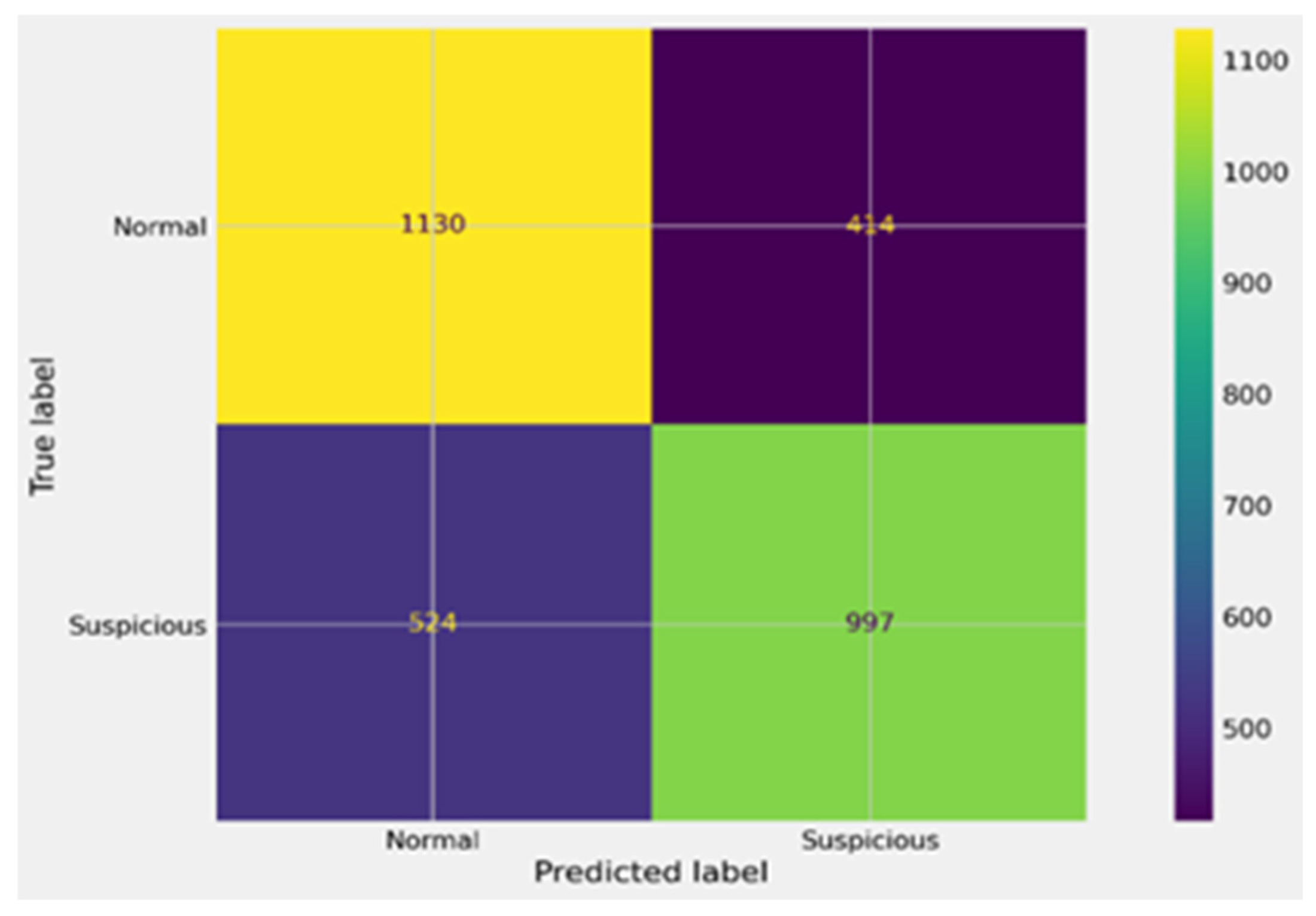

| Algorithm | Accuracy | MAE | Precision | Recall | F1-Score | AUC-ROC | TP | FP | TN | FN |

|---|---|---|---|---|---|---|---|---|---|---|

| XGBoost | 0.975 | 0.03 | 0.97 | 0.98 | 0.975 | 0.97 | 1498 | 46 | 1491 | 30 |

| SVM | 0.744 | 0.26 | 0.826 | 0.713 | 0.765 | 0.71 | 1275 | 269 | 1008 | 513 |

| IF | 0.694 | 0.3 | 0.732 | 0.683 | 0.707 | 0.53 | 1130 | 414 | 997 | 524 |

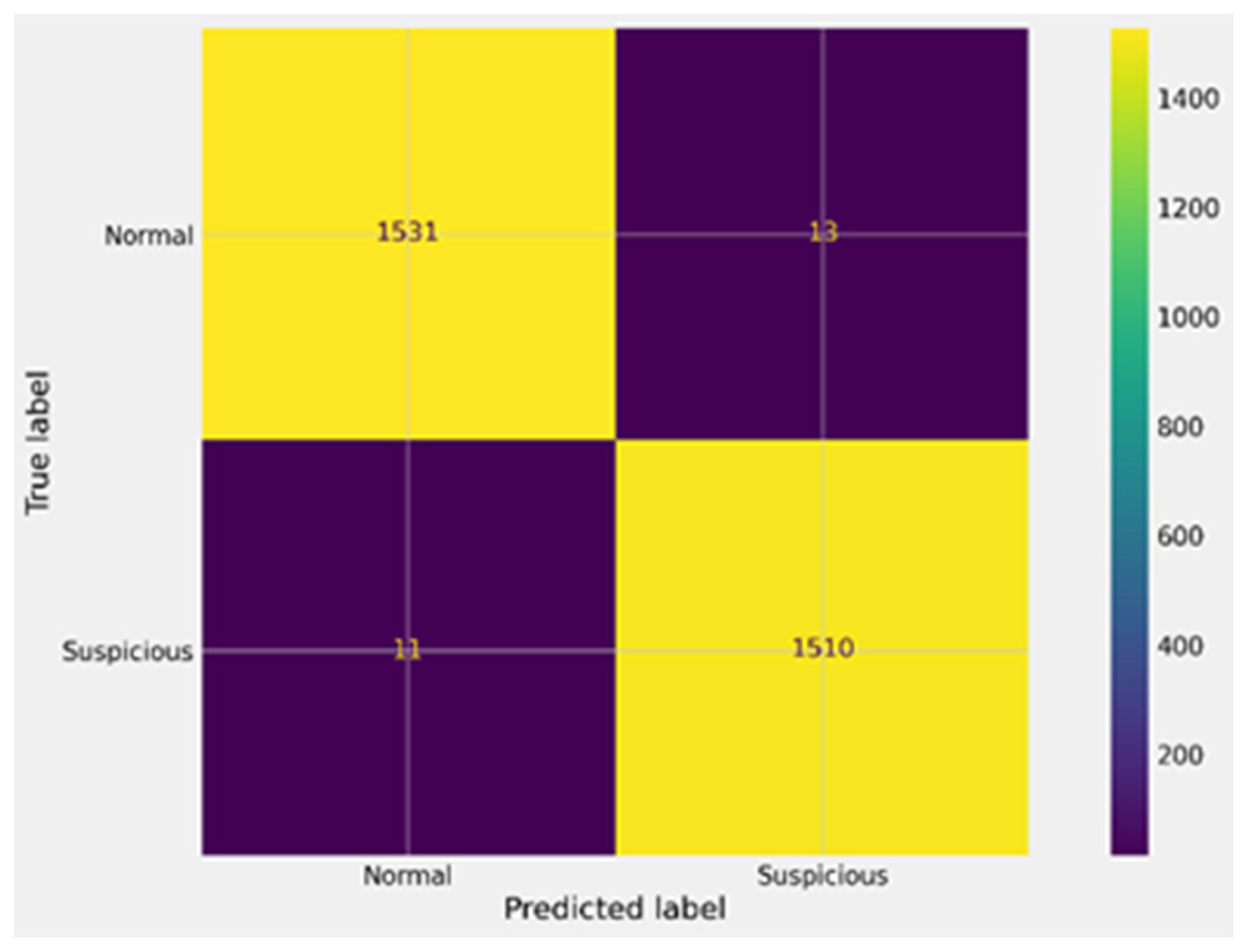

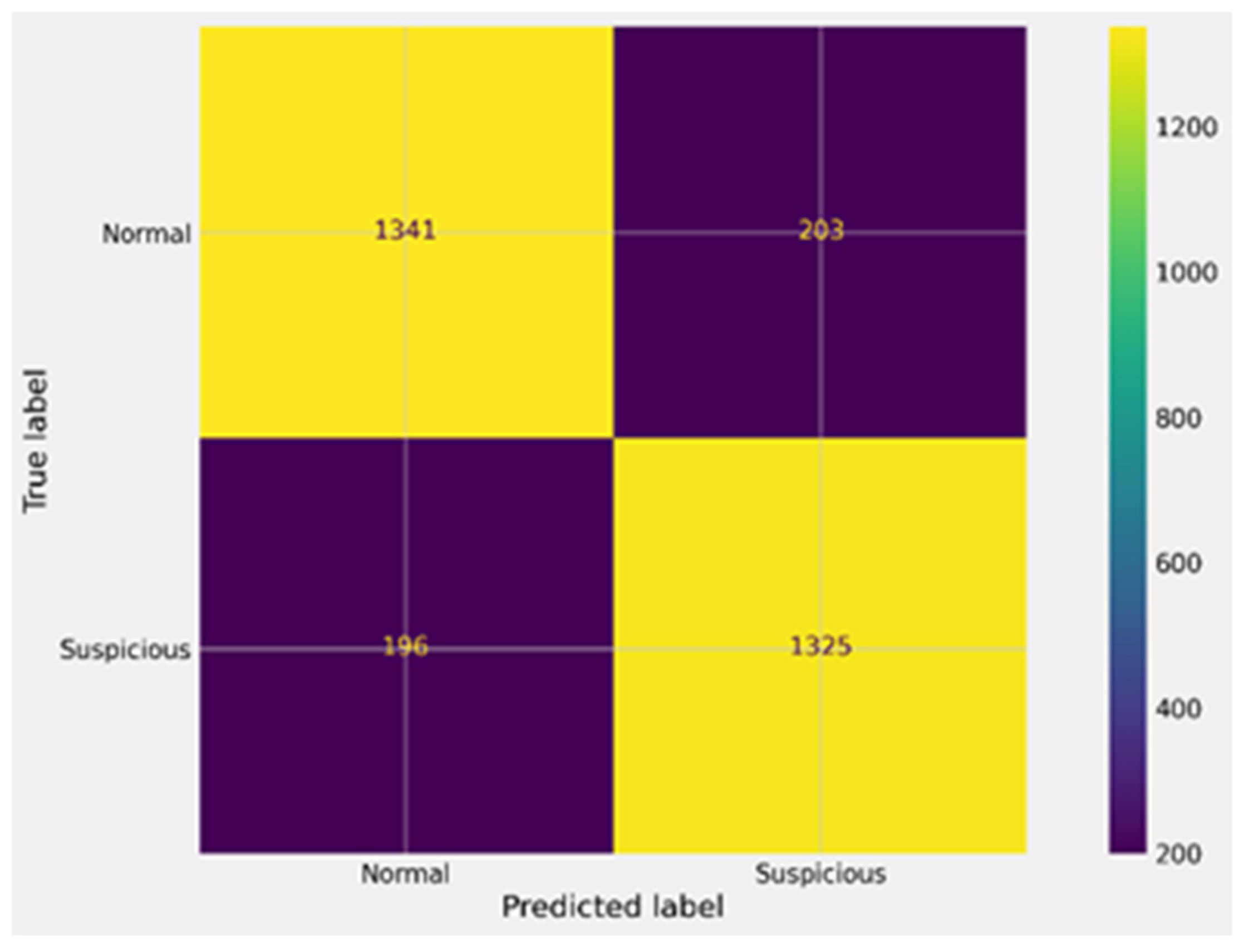

| Algorithm | Accuracy | MAE | Precision | Recall | F1-Score | AUC-ROC | TP | FP | TN | FN |

|---|---|---|---|---|---|---|---|---|---|---|

| XGBoost after GA | 0.992 | 0.01 | 0.992 | 0.993 | 0.992 | 0.99 | 1531 | 13 | 1510 | 11 |

| SVM after GA | 0.87 | 0.13 | 0.869 | 0.872 | 0.87 | 0.86 | 1341 | 203 | 1325 | 196 |

| IF after GA | 0.824 | 0.18 | 0.824 | 0.827 | 0.825 | 0.79 | 1273 | 271 | 1254 | 267 |
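The accuracy, precision, recall, and F1-score columns can be recomputed from the confusion-matrix counts (TP, FP, TN, FN) reported in the same rows. The short check below does this for the "XGBoost after GA" row; the helper name `confusion_metrics` is illustrative.

```python
def confusion_metrics(tp, fp, tn, fn):
    """Derive the standard metrics from confusion-matrix counts."""
    total = tp + fp + tn + fn
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return {
        "accuracy": (tp + tn) / total,
        "precision": precision,
        "recall": recall,
        "f1": 2 * precision * recall / (precision + recall),
    }


# Counts for "XGBoost after GA" as reported in the table above.
m = confusion_metrics(tp=1531, fp=13, tn=1510, fn=11)
print({k: round(v, 3) for k, v in m.items()})
# {'accuracy': 0.992, 'precision': 0.992, 'recall': 0.993, 'f1': 0.992}
```

The recomputed values agree with the tabulated ones to three decimal places, and the same function reproduces the other rows from their respective counts.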

| Method | Algorithm | Accuracy | MAE | Precision | Recall | F1-Score | AUC-ROC | Standard Deviation of Accuracy |

|---|---|---|---|---|---|---|---|---|

| Our Proposed Framework | XGBoost with GA | 0.992 | 0.01 | 0.992 | 0.993 | 0.992 | 0.99 | 0.109 |

| Zhou et al. [12] | LOF | 0.949 | 0.06 | 0.946 | 0.954 | 0.949 | 0.94 | 0.103 |

| Saxena et al. [14] | CART | 0.810 | 0.22 | 0.813 | 0.810 | 0.809 | 0.81 | 0.035 |

| Alarab et al. [15] | XGBoost | 0.975 | 0.03 | 0.970 | 0.980 | 0.975 | 0.97 | 0.107 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

El-Attar, N.E.; Salama, M.H.; Abdelfattah, M.; Taha, S. An Optimized Framework for Detecting Suspicious Accounts in the Ethereum Blockchain Network. Cryptography 2025, 9, 63. https://doi.org/10.3390/cryptography9040063
