Post-Quantum and Code-Based Cryptography—Some Prospective Research Directions

Abstract

1. Introduction

2. Quantum Computing

Shor’s 1994 and Grover’s 1996 Algorithms

3. Post-Quantum Cryptography

3.1. Post-Quantum Cryptography Candidates

3.1.1. Hash-Based Cryptography

3.1.2. Code-Based Cryptography

3.1.3. Multivariate Cryptography

3.1.4. Lattice-Based Cryptography

3.1.5. Isogeny-Based Cryptography

3.1.6. Comparison of Post-Quantum Cryptography Algorithms

3.2. Industry Adoption of Post-Quantum Cryptography

- Industry survey of post-quantum cryptography,

- Revenue Assessment of post-quantum cryptography,

- Industry initiatives in PQC—PQC R&D, PQC-based products, PQC products, PQC consulting.

3.2.1. Industry Survey

- Awareness and understanding of PQC among industry professionals,

- Industry professionals’ predictions of the timelines by which quantum computers would break the existing modular-arithmetic cryptographic algorithms,

- Industry professionals’ understanding of the significance of the threat posed by quantum computing to existing cryptographic algorithms,

- Industry readiness to adopt PQC.

3.2.2. Revenue Assessment of Post-Quantum Cryptography

3.2.3. Industry Initiatives in PQC: PQC R&D, PQC-Based Products, PQC Products, PQC Consulting

- Development of post-quantum-based products: for example, Avaya has partnered with Post-Quantum (a leading organization developing post-quantum solutions) to incorporate post-quantum security into its products.

- PQC products: organizations such as Infineon, Qualcomm (OnBoard Security), Thales, and Envieta have developed post-quantum security hardware/software products [50].

- Post-quantum consulting: Utimaco is one of the leading players providing post-quantum cryptography consulting.

3.3. Standardization Efforts in PQC

3.3.1. NIST

3.3.2. International Telecommunication Union (ITU)

3.3.3. European Telecommunications Standards Institute (ETSI)

3.3.4. ISO

3.3.5. CRYPTREC

- libpqcrypto [66] is a new cryptographic software library produced by the PQCRYPTO project. libpqcrypto collects this software into an integrated library, with (i) a unified compilation framework; (ii) an automatic test framework; (iii) automatic selection of the fastest implementation of each system; (iv) a unified C interface following the NaCl/TweetNaCl/SUPERCOP/libsodium API; (v) a unified Python interface; (vi) command-line signature/verification/encryption/decryption tools; and (vii) command-line benchmarking tools.

- The Cloud Security Alliance Quantum-Safe Security Working Group’s [67] goal is to address key generation and transmission methods that will aid the industry in understanding quantum-safe methods for protecting data through quantum key distribution (QKD) and post-quantum cryptography (PQC). The working group also aims to support the quantum-safe cryptography community in the development and deployment of a framework to protect data, whether in transit or at rest. It has published several reports and whitepapers on quantum-safe cryptography.

- NSA is publicly sharing guidance on quantum key distribution (QKD) and quantum cryptography (QC) as it relates to secure National Security Systems (NSS). NSA is responsible for the cybersecurity of NSS, i.e., systems that transmit classified and/or otherwise sensitive data. Due to the nature of these systems, NSS owners require especially robust assurance in their cryptographic solutions; some amount of uncertainty may be acceptable for other system owners, but not for NSS. While QKD has great theoretical interest, and has been the subject of many widely publicized demonstrations, it suffers from limitations and implementation challenges that make it impractical for use in NSS operational networks.

3.4. Post-Quantum Cryptography Tools and Technology

3.4.1. Codecrypt

- Encrypt, decrypt, sign and verify data

- Key management operations

- Input and output from standard I/O, as well as files

- View options including help and ASCII formatting

3.4.2. Open Quantum Safe

3.4.3. jLBC

3.4.4. Microsoft’s Lattice Cryptography Library

3.4.5. libPQP

4. Code-Based Cryptography

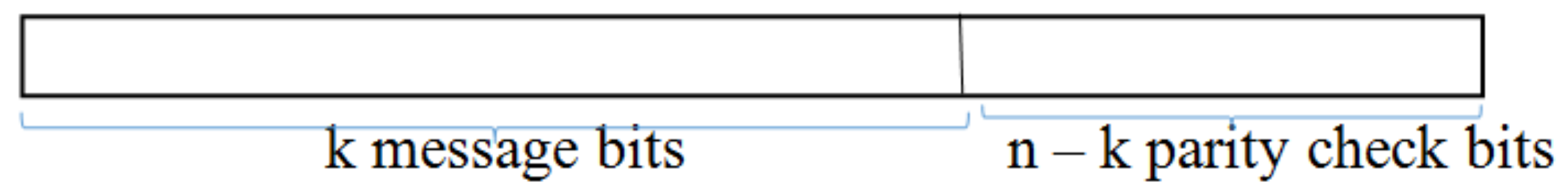

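- A generator matrix G of an $[n, k]$ code C is a $k \times n$ matrix G such that $C = \{xG : x \in \mathbb{F}_q^k\}$. In systematic form, the generator matrix is $G = [I_k \mid Q]$, where $I_k$ is the $k \times k$ identity matrix and Q is a $k \times (n-k)$ matrix (redundant part).

- A parity-check matrix H of an $[n, k]$ code C is an $(n-k) \times n$ matrix H such that $C = \{c \in \mathbb{F}_q^n : Hc^T = 0\}$.

- The parity-check matrix H is generated from the generator matrix $G = [I_k \mid Q]$ as $H = [-Q^T \mid I_{n-k}]$.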

- List Decoding: Given C and x, output the list $\{c \in C : d_H(x, c) \le t\}$ of all codewords at distance at most t from the vector x, where t is the decoding radius.

- Minimum Distance Decoding: Minimum distance decoding (MDD), also known as nearest neighbour decoding, tries to find the codeword $c \in C$ that minimizes the Hamming distance $d_H(x, c)$ to a received word $x$.

- Maximum Likelihood Decoding: Given a received word $x$, maximum likelihood decoding (MLD) tries to find the codeword $y \in C$ that maximizes the probability that $x$ was received, given that $y$ was sent.

- Syndrome Decoding: For an $[n, k]$ code C, we can assume that the parity-check matrix H is given. The syndrome is $S = HY^T$, where $Y = c + e$ is the received word, $c$ is the transmitted codeword, and $e$ is the error vector. Since $Hc^T = 0$, the syndrome depends only on the error: $S = He^T$.
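The definitions above can be illustrated with a minimal Python sketch. It assumes the binary $[7, 4]$ Hamming code with one particular choice of the redundant part Q; the matrix, the helper names (`encode`, `syndrome`, `decode`) and the lookup table are illustrative choices, not taken from any library or from the surveyed schemes.

```python
# Redundant part Q of a systematic generator matrix G = [I_4 | Q] (illustrative choice).
Q = [
    [1, 1, 0],
    [1, 0, 1],
    [0, 1, 1],
    [1, 1, 1],
]
K = len(Q)        # code dimension k
R = len(Q[0])     # redundancy n - k
N = K + R         # code length n


def identity(size):
    """Return the size x size identity matrix as a list of rows."""
    return [[int(i == j) for j in range(size)] for i in range(size)]


# G = [I_k | Q]; over GF(2), -Q^T = Q^T, so H = [Q^T | I_{n-k}].
G = [identity(K)[i] + Q[i] for i in range(K)]
H = [[Q[i][j] for i in range(K)] + identity(R)[j] for j in range(R)]


def encode(message):
    """Map a k-bit message x to the codeword xG over GF(2)."""
    return [sum(m * g for m, g in zip(message, column)) % 2 for column in zip(*G)]


def syndrome(word):
    """Return the syndrome H * word^T over GF(2) as a tuple."""
    return tuple(sum(h * w for h, w in zip(row, word)) % 2 for row in H)


# Each single-bit error pattern has a distinct nonzero syndrome (a column of H).
ERROR_POSITION = {}
for position in range(N):
    error = [0] * N
    error[position] = 1
    ERROR_POSITION[syndrome(error)] = position


def decode(received):
    """Correct at most one bit error and return the k message bits."""
    corrected = list(received)
    s = syndrome(received)
    if any(s):
        corrected[ERROR_POSITION[s]] ^= 1
    return corrected[:K]


codeword = encode([1, 0, 1, 1])
received = list(codeword)
received[2] ^= 1  # single bit error introduced by the channel
print(codeword, syndrome(codeword), decode(received))
```

Because the code is systematic, the message is read off the first k corrected bits. The table-based decoder only works here because every single-bit error has its own syndrome; for the large codes used in cryptography such a table is infeasible, which is the basis of the hardness assumption.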

4.1. Different Types of Error-Correcting Codes

4.2. Operations on Codes

- An $[n, k]$ code is punctured by deleting any of its parity bits to become an $[n-1, k]$ code.

- An $[n, k]$ code is extended by adding an additional parity bit to become an $[n+1, k]$ code.

- An $[n, k]$ code is shortened by deleting any of its information bits to become an $[n-1, k-1]$ code.

- An $[n, k]$ code is lengthened by adding an additional information bit to become an $[n+1, k+1]$ code.

- An $[n, k]$ code is expurgated by deleting some of its codewords. If half of the codewords are deleted such that the remainder form a linear subcode, then the code becomes an $[n, k-1]$ code.

- An $[n, k]$ code is augmented by adding new codewords. If the number of codewords added is $2^k$ such that the resulting code is linear, then the code becomes an $[n, k+1]$ code.
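As a hedged illustration of these operations, the sketch below applies them to the binary $[7, 4, 3]$ Hamming code by brute-force enumeration of codewords. The generator matrix and all function names are assumptions made for this example; enumeration is only practical for toy parameters.

```python
from itertools import product

# Systematic generator matrix of a binary [7,4,3] Hamming code (illustrative choice).
G_HAMMING = [
    [1, 0, 0, 0, 1, 1, 0],
    [0, 1, 0, 0, 1, 0, 1],
    [0, 0, 1, 0, 0, 1, 1],
    [0, 0, 0, 1, 1, 1, 1],
]


def codewords(generator):
    """Enumerate all codewords xG over GF(2) as a set of tuples."""
    k = len(generator)
    return {
        tuple(sum(m * g for m, g in zip(message, column)) % 2 for column in zip(*generator))
        for message in product([0, 1], repeat=k)
    }


def parameters(code):
    """Return (n, k, d) of a binary linear code given as a set of codewords."""
    n = len(next(iter(code)))
    k = len(code).bit_length() - 1          # |C| = 2^k
    d = min(sum(word) for word in code if any(word))
    return n, k, d


def puncture(code, position):
    """Delete one coordinate from every codeword."""
    return {word[:position] + word[position + 1:] for word in code}


def extend(code):
    """Append an overall parity bit to every codeword."""
    return {word + (sum(word) % 2,) for word in code}


def shorten(code, position):
    """Keep codewords that are 0 at `position`, then delete that coordinate."""
    return {word[:position] + word[position + 1:] for word in code if word[position] == 0}


def expurgate_even(code):
    """Keep only the even-weight codewords (a linear subcode)."""
    return {word for word in code if sum(word) % 2 == 0}


def augment_with_all_ones(code):
    """Add the coset obtained by complementing every codeword."""
    return code | {tuple(bit ^ 1 for bit in word) for word in code}


hamming = codewords(G_HAMMING)
for name, code in [
    ("original", hamming),
    ("punctured", puncture(hamming, 6)),
    ("extended", extend(hamming)),
    ("shortened", shorten(hamming, 0)),
    ("expurgated", expurgate_even(hamming)),
    ("augmented", augment_with_all_ones(expurgate_even(hamming))),
]:
    print(name, parameters(code))
```

Augmentation is applied to the expurgated code rather than to the Hamming code itself, because this Hamming code already contains the all-ones word and would be left unchanged.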

4.3. Properties to Be Fulfilled by Linear Codes

4.3.1. Hamming Metric

- The Hamming Distance between two vectors in $\mathbb{F}_q^n$ is the number of coordinates where they differ.

- The Hamming Weight of a vector is the number of its non-zero coordinates.

- The Minimum Distance $d_{min}$ of a linear code C is the smallest Hamming weight of a nonzero codeword in C.

- A code is called a maximum distance separable (MDS) code when its $d_{min}$ is equal to $n - k + 1$.

- Plotkin bound [72]: For any $[n, k]$ binary linear block code, $d_{min} \le \frac{n \cdot 2^{k-1}}{2^k - 1}$; that is, the minimum distance of a code cannot exceed the average weight of all nonzero codewords.

- Gilbert–Varshamov bound [72]: For fixed values of n and k, the Gilbert–Varshamov bound gives a lower bound on $d_{min}$. According to this bound, if $\sum_{i=0}^{d-2} \binom{n-1}{i} < 2^{n-k}$, then there exists an $[n, k]$ binary linear block code whose minimum distance is at least $d$.

- A Hamming sphere of radius t contains all possible received vectors that are at a Hamming distance of at most t from a codeword. The size of a Hamming sphere for an $[n, k]$ binary linear block code is $V(n, t) = \sum_{j=0}^{t} \binom{n}{j}$, where $\binom{n}{j} = \frac{n!}{j!\,(n-j)!}$.

- The Hamming bound: A t-error-correcting $[n, k]$ binary linear block code must have redundancy $n - k$ such that $n - k \ge \log_2 V(n, t)$. An $[n, k]$ binary linear block code that satisfies the Hamming bound with equality is called a perfect code.
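The bounds in this list are simple to evaluate numerically. The following sketch, with hypothetical helper names, checks them for a few well-known binary codes; the comparisons use integers ($2^{n-k}$ against the sphere size) instead of logarithms to avoid floating-point rounding.

```python
from math import comb


def sphere_volume(n, t):
    """Number of binary vectors within Hamming distance t of a fixed vector."""
    return sum(comb(n, j) for j in range(t + 1))


def satisfies_hamming_bound(n, k, t):
    """Hamming bound n - k >= log2 V(n, t), written as 2^(n-k) >= V(n, t)."""
    return 2 ** (n - k) >= sphere_volume(n, t)


def is_perfect(n, k, t):
    """A code is perfect when the Hamming bound holds with equality."""
    return 2 ** (n - k) == sphere_volume(n, t)


def plotkin_upper_bound(n, k):
    """Average weight of the nonzero codewords, an upper bound on d_min."""
    return n * 2 ** (k - 1) / (2 ** k - 1)


def gilbert_varshamov_guarantees(n, k, d):
    """True if the Gilbert-Varshamov condition guarantees an [n, k] code with distance >= d."""
    return sum(comb(n - 1, i) for i in range(d - 1)) < 2 ** (n - k)


def is_mds(n, k, d):
    """MDS codes meet the Singleton bound d = n - k + 1."""
    return d == n - k + 1


print(is_perfect(7, 4, 1))                    # [7,4,3] Hamming code, t = 1
print(is_perfect(23, 12, 3))                  # [23,12,7] binary Golay code, t = 3
print(plotkin_upper_bound(7, 4))              # must be at least d_min = 3
print(gilbert_varshamov_guarantees(7, 4, 3))  # existence of a [7,4] code with d >= 3
print(is_mds(3, 1, 3))                        # [3,1,3] repetition code
```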

4.3.2. Rank Metric

4.3.3. Lee Metric

4.4. Relationship between Codes

4.5. Common Code-Based Cryptographic Algorithms

4.5.1. Code-Based Encryption

4.5.2. Code-Based Signature Schemes

4.6. Attacks in Code-Based Cryptography

- Critical Attacks:

1. Broadcast attack: This attack [104] aims to recover a single message sent to several recipients. Here, the cryptanalyst knows only several ciphertexts of the same message. Because the same message is encrypted under several public keys, the message can be recovered. This attack has been used to break the Niederreiter and HyMES (Hybrid McEliece Encryption Scheme) cryptosystems.

2. Known partial plaintext attack: A known partial plaintext attack [105] is an attack for which only a part of the plaintext is known.

3. Sidelnikov–Shestakov attack: This attack [106] aims to recover an alternative private key from the public key.

4. Generalised known partial plaintext attack: This attack [102] recovers the plaintext from knowledge of some bit positions of the original message.

5. Message-resend attack: A message-resend condition [102] is given if the same message is encrypted and sent twice (or several times) with two different random error vectors to the same recipient.

6. Related-message attack: In a related-message attack against a cryptosystem, the attacker obtains several ciphertexts such that there exists a known relation between the corresponding plaintexts.

7. Chosen-plaintext attack (CPA): A chosen-plaintext attack [102] is an attack model for cryptanalysis which presumes that the attacker can choose arbitrary plaintexts to be encrypted and obtain the corresponding ciphertexts. The goal of the attack is to gain some further information that reduces the security of the encryption scheme. In the worst case, a chosen-plaintext attack could reveal the scheme’s secret key. For some chosen-plaintext attacks, only a small part of the plaintext needs to be chosen by the attacker: such attacks are known as plaintext injection attacks. Two forms of chosen-plaintext attack can be distinguished: the batch chosen-plaintext attack, where the cryptanalyst chooses all plaintexts before any of them are encrypted, and the adaptive chosen-plaintext attack, where the cryptanalyst makes a series of interactive queries, choosing subsequent plaintexts based on the information from the previous encryptions.

8. Chosen-ciphertext attack (CCA): In a chosen-ciphertext attack [102], an attacker has access to a decryption oracle that allows decrypting any chosen ciphertext (except the one that the attacker attempts to reveal). In the general setting, the attacker has to choose all ciphertexts in advance before querying the oracle. In the adaptive chosen-ciphertext attack, formalized by Rackoff and Simon (1991), one can adapt this selection depending on the interaction with the oracle. An especially noted variant of the chosen-ciphertext attack is the lunchtime, midnight, or indifferent attack, in which an attacker may make adaptive chosen-ciphertext queries, but only up until a certain point, after which the attacker must demonstrate some improved ability to attack the system.

9. Reaction attack: This attack [107] can be considered a weaker version of the chosen-ciphertext attack. Here, instead of receiving the decrypted ciphertexts from the oracle, the attacker only observes the oracle’s reaction. Usually, this means whether the oracle was able to decrypt the ciphertext.

10. Malleability attack: A cryptosystem is vulnerable to a malleability attack [107] on its ciphertexts if an attacker can create new valid ciphertexts from a given one, and if the new ciphertexts decrypt to a plaintext that is related to the original message.
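To see why the message-resend condition is dangerous in a McEliece-style scheme, note that two encryptions $c_1 = mG + e_1$ and $c_2 = mG + e_2$ of the same message satisfy $c_1 + c_2 = e_1 + e_2$, so the attacker learns where errors were probably placed. The sketch below is a simplified illustration under stated assumptions: a random bit vector stands in for the codeword $mG$, and the length `N`, weight `T` and helper names are hypothetical.

```python
import random

rng = random.Random(2021)  # fixed seed so the run is reproducible
N, T = 32, 3               # illustrative code length and error weight


def random_error(length, weight):
    """Return a random binary vector of the given length and Hamming weight."""
    positions = set(rng.sample(range(length), weight))
    return [int(i in positions) for i in range(length)]


def xor(a, b):
    """Component-wise addition over GF(2)."""
    return [x ^ y for x, y in zip(a, b)]


# Stand-in for the codeword mG; the observation below does not depend on the code.
codeword = [rng.randint(0, 1) for _ in range(N)]
e1, e2 = random_error(N, T), random_error(N, T)
c1, c2 = xor(codeword, e1), xor(codeword, e2)

# The message part cancels, leaving only the sum of the two error vectors.
difference = xor(c1, c2)
suspect_positions = [i for i, bit in enumerate(difference) if bit]
print(suspect_positions)
```

Positions where `difference` is zero are error-free in both ciphertexts unless the two error vectors happen to overlap there, which gives the attacker a large set of likely clean coordinates to decode from.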

- Non-Critical Attacks:

1. Information Set Decoding (ISD) Attack: ISD algorithms [103] are the most efficient attacks against code-based cryptosystems. They attempt to solve the general decoding problem. That is, if m is a plaintext and $c = mG + e$ is a ciphertext, where e is a vector of weight t and G a generator matrix, then ISD algorithms take c as input and recover m (or, equivalently, e).

2. Generalized Birthday Algorithm (GBA): The GBA algorithm [108] is named after the famous birthday paradox, which allows one to quickly find common entries in lists. This algorithm tries to solve the syndrome decoding problem. That is, for a given parity-check matrix H, syndrome s, and integer t, it tries to find a vector e of weight t such that $He^T = s^T$. In other words, it tries to find a set of columns of H whose weighted sum equals the given syndrome.

3. Support Splitting Algorithm (SSA): The SSA algorithm [109] decides whether two given codes are permutation equivalent, i.e., whether one can be obtained from the other by permuting the coordinates. For this, SSA makes use of invariants and signatures (not digital signatures). An invariant is a property of a code that is invariant under permutation, while a signature is a local property of a code and one of its coordinates. The difficulty of using invariants and signatures to decide whether two codes are permutation equivalent is that most invariants are either too coarse (i.e., they take the same value for too many codes which are not permutation equivalent) or too costly to compute. The SSA solves this issue by starting with a coarse signature and adaptively refining it in every iteration.
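The simplest member of the ISD family is Prange's algorithm: guess k coordinates that are hopefully error-free, solve for the message from those coordinates, and accept if the implied error has weight at most t. The sketch below is a toy version under stated assumptions (a $[7, 4, 3]$ Hamming code, t = 1, hypothetical function names); it is not one of the optimized ISD variants used to estimate real security levels.

```python
import random

# Systematic generator matrix of a binary [7,4,3] Hamming code (illustrative choice).
G = [
    [1, 0, 0, 0, 1, 1, 0],
    [0, 1, 0, 0, 1, 0, 1],
    [0, 0, 1, 0, 0, 1, 1],
    [0, 0, 0, 1, 1, 1, 1],
]


def encode(message, generator):
    """Compute the codeword mG over GF(2)."""
    return [sum(m & g for m, g in zip(message, column)) % 2 for column in zip(*generator)]


def solve_gf2(matrix, rhs):
    """Solve matrix * x = rhs over GF(2); return x, or None if matrix is singular."""
    size = len(matrix)
    rows = [list(row) + [bit] for row, bit in zip(matrix, rhs)]  # augmented system
    for col in range(size):
        pivot = next((r for r in range(col, size) if rows[r][col]), None)
        if pivot is None:
            return None
        rows[col], rows[pivot] = rows[pivot], rows[col]
        for r in range(size):
            if r != col and rows[r][col]:
                rows[r] = [a ^ b for a, b in zip(rows[r], rows[col])]
    return [row[-1] for row in rows]


def prange_isd(generator, ciphertext, weight, rng, max_iterations=10000):
    """Recover (m, e) with c = mG + e and wt(e) <= weight by random information sets."""
    k, n = len(generator), len(generator[0])
    for _ in range(max_iterations):
        info_set = rng.sample(range(n), k)
        # One equation per chosen coordinate j: sum_i m_i * G[i][j] = c_j.
        system = [[generator[i][j] for i in range(k)] for j in info_set]
        target = [ciphertext[j] for j in info_set]
        candidate = solve_gf2(system, target)
        if candidate is None:
            continue  # chosen columns are linearly dependent, try again
        error = [a ^ b for a, b in zip(ciphertext, encode(candidate, generator))]
        if sum(error) <= weight:
            return candidate, error
    return None


message = [1, 0, 1, 1]
ciphertext = encode(message, G)
ciphertext[5] ^= 1  # error vector e of weight t = 1
print(prange_isd(G, ciphertext, 1, random.Random(0)))
```

The weight check is what makes the guess verifiable: because t = 1 is within the unique decoding radius of this code, any accepted candidate is the original message. The expected number of iterations grows with the probability that a random information set misses all error positions, which is why realistic parameters make this search infeasible.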

4.7. Related Work

- reduction of key size—large key size is one of the important limitations of CBC and reducing the key size is an important research direction explored

- use of new kinds of linear and non-linear codes in CBC, viz. QC-MDPC, QC-LDPC, etc.—recently CBC using these kinds of codes have been proposed to overcome various kinds of attacks

- algorithms for resolving new kinds of security attacks—there are various security attacks possible in CBC and various techniques and algorithms to counteract the same have been proposed

- evolving new signature schemes—signature schemes using CBC were a recent addition to CBC research

5. Research Directions Identified in Code-Based Cryptography

5.1. Dynamic Code-Based Cryptographic Algorithms

5.2. Use of Other Types of Codes in Code-Based Cryptography

5.3. Privacy-Preserving Code-Based Cryptography

5.4. Prospective Applicability of Codes with Lattice-Based Cryptography

1. Typical lattice-based cryptographic schemes have used q-ary lattices to solve SIS and LWE problems [133]. A linear code of length n and dimension k over $\mathbb{F}_q$ is a linear subspace, which is called a q-ary code. The possibility of using q-ary lattices [134] to implement ternary codes, i.e., q-ary codes, in code-based cryptographic schemes is an unexplored area. It may be noted here that DNA cryptography uses a quaternary code, which the authors have explored; only its resistance to quantum attacks remains to be ascertained.

2. There is a major lattice algorithmic technique that has no clear counterpart for codes, namely basis reduction. There seem to be no analogous notions of reduction for codes, or at least they are neither explicit nor associated with reduction algorithms. We are also unaware of any study of how such reduced bases would help with decoding tasks. This observation leads to two questions.

- Is there an algorithmic reduction theory for codes, analogous to the one for lattices?

- If so, can it be useful for decoding tasks?

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Shor, P.W. Polynomial-Time Algorithms for Prime Factorization and Discrete Logarithms on a Quantum Computer. SIAM J. Comput. 1997, 26, 1484–1509.

- Yan, S.Y. Integer factorization and discrete logarithms. In Primality Testing and Integer Factorization in Public-Key Cryptography; Springer: Berlin/Heidelberg, Germany, 2004; pp. 139–191.

- Rivest, R.; Shamir, A.; Adleman, L. A method for obtaining digital signatures and public-key cryptosystems. Commun. ACM 1978, 21, 120–126.

- Kuwakado, H.; Morii, M. Quantum distinguisher between the 3-round Feistel cipher and the random permutation. In Proceedings of the IEEE International Symposium on Information Theory, Austin, TX, USA, 12–18 June 2010; pp. 2682–2685.

- McEliece, R.J. A public-key cryptosystem based on algebraic coding theory. DSN Prog. Rep. 1978, 42–44, 114–116.

- Merkle, R. Secrecy, Authentication, and Public Key Systems; Computer Science Series; UMI Research Press: Ann Arbor, MI, USA, 1982.

- Patarin, J. Hidden fields equations (HFE) and isomorphisms of polynomials (IP): Two new families of asymmetric algorithms. In Proceedings of the International Conference on the Theory and Applications of Cryptographic Techniques, Saragossa, Spain, 12–16 May 1996; pp. 33–48.

- Hoffstein, J.; Pipher, J.; Silverman, J.H. NTRU: A ring-based public key cryptosystem. In International Algorithmic Number Theory Symposium; Springer: Berlin/Heidelberg, Germany, 1998; pp. 267–288.

- Regev, O. On lattices, learning with errors, random linear codes, and cryptography. JACM 2009, 56, 34.

- Jao, D.; Feo, L.D. Towards Quantum-Resistant Cryptosystems from Supersingular Elliptic Curve Isogenies. PQCrypto 2011, 7071, 19–34.

- Nielsen, M.A.; Chuang, I. Quantum Computation and Quantum Information; Cambridge University Press: Cambridge, UK, 2002.

- Benioff, P. The computer as a physical system: A microscopic quantum mechanical Hamiltonian model of computers as represented by Turing machines. J. Stat. Phys. 1980, 22, 563–591.

- Manin, Y. Mathematics and Physics; American Mathematical Society: Providence, RI, USA, 1981.

- Feynman, R.P. Simulating physics with computers. Int. J. Theor. Phys. 1982, 21, 467–488.

- Deutsch, D. Quantum Theory, the Church-Turing Principle and the Universal Quantum Computer. Proc. R. Soc. Lond. 1985, A400, 97–117.

- Shor, P.W. Algorithms for quantum computation: Discrete Logarithms and Factoring. In Proceedings of the 35th Annual Symposium on Foundations of Computer Science, Santa Fe, NM, USA, 20–22 November 1994; pp. 124–134.

- Grover, L.K. A fast quantum mechanical algorithm for database search. In Proceedings of the 28th Annual ACM Symposium on Theory of Computing, Philadelphia, PA, USA, 22–24 May 1996; pp. 212–219.

- Buchmann, J.; Lauter, K.; Mosca, M. Postquantum Cryptography-State of the Art. IEEE Secur. Priv. 2017, 15, 12–13.

- Umana, V.G. Post Quantum Cryptography. Ph.D. Thesis, Technical University of Denmark, Lyngby, Denmark, 2011.

- Beullens, W.; D’Anvers, J.; Hülsing, A.; Lange, T.; Panny, L.; Guilhem, C.d.S.; Smart, N.P. Post-Quantum Cryptography: Current State and Quantum Mitigation; Technical Report; European Union Agency for Cybersecurity: Athens, Greece, 2021.

- Merkle, R. A certified digital signature. In Advances in Cryptology – CRYPTO’89; Springer: Berlin/Heidelberg, Germany, 1989; pp. 218–238.

- Butin, D. Hash-based signatures: State of play. IEEE Secur. Priv. 2017, 15, 37–43.

- Bernstein, D.J.; Hülsing, A.; Kölbl, S.; Niederhagen, R.; Rijneveld, J.; Schwabe, P. The SPHINCS+ Signature Framework. Available online: http://www.informationweek.com/news/201202317 (accessed on 20 November 2020).

- NIST. PQC Standardization Process: Third Round Candidate Announcement. 2020. Available online: https://csrc.nist.gov/News/2020/pqc-third-round-candidate-announcement (accessed on 20 November 2020).

- Cayrel, P.L.; ElYousfi, M.; Hoffmann, G.; Meziani, M.; Niebuhr, R. Recent Progress in Code-Based Cryptography. In International Conference on Information Security and Assurance; Springer: Berlin/Heidelberg, Germany, 2011; pp. 21–32.

- Sendrier, N. Code-Based Cryptography: State of the Art and Perspectives. IEEE Secur. Priv. 2017, 15, 44–50.

- Ding, J.; Petzoldt, A. Current state of multivariate cryptography. IEEE Secur. Priv. 2017, 15, 28–36.

- Chen, M.; Ding, J.; Kannwischer, M.; Patarin, J.; Petzoldt, A.; Schmidt, D.; Yang, B. Rainbow Signature. Available online: https://www.pqcrainbow.org/ (accessed on 27 August 2020).

- Casanova, A.; Faugère, J.C.; Macario-Rat, G.; Patarin, J.; Perret, L.; Ryckeghem, J. GeMSS: A great multivariate short signature. Available online: https://www-polsys.lip6.fr/Links/NIST/GeMSS.html (accessed on 8 December 2020).

- Chi, D.P.; Choi, J.W.; Kim, J.S.; Kim, T. Lattice Based Cryptography for Beginners. Available online: https://eprint.iacr.org/2015/938 (accessed on 20 November 2020).

- Lepoint, T. Design and Implementation of Lattice-Based Cryptography. Ph.D. Thesis, École Normale Supérieure de Paris (ENS), Paris, France, 2014.

- Alkim, D.; Ducas, L.; Pöppelmann, T.; Schwabe, P. Post-Quantum Key Exchange—A New Hope. Available online: https://eprint.iacr.org/2015/1092 (accessed on 20 November 2020).

- Ducas, L.; Durmus, A.; Lepoint, T.; Lyubashevsky, V. Lattice Signatures and Bimodal Gaussians. Available online: https://eprint.iacr.org/2013/383 (accessed on 20 November 2020).

- Bos, J.; Ducas, L.; Kiltz, E.; Lepoint, T.; Lyubashevsky, V.; Schanck, J.M.; Schwabe, P.; Seiler, G.; Stehlé, D. Cryptology ePrint Archive: Report 2017/634. Available online: https://eprint.iacr.org/2017/634/20170627:201157 (accessed on 20 November 2020).

- Chen, C.; Danba, O.; Hoffstein, J.; Hülsing, A.; Rijneveld, J.; Saito, T.; Schanck, J.M.; Schwabe, P.; Whyte, W.; Xagawa, K.; et al. NTRU: A Submission to the NIST Post-Quantum Standardization Effort. Available online: https://ntru.org/ (accessed on 12 July 2020).

- D’Anvers, J.P.; Karmakar, A.; Roy, S.S.; Vercauteren, F. Saber: Module-LWR Based Key Exchange, CPA-Secure Encryption and CCA-Secure KEM. Available online: https://eprint.iacr.org/2018/230/20181026:121404 (accessed on 20 November 2020).

- Bernstein, D.J.; Chuengsatiansup, C.; Lange, T.; Vredendaal, C.V. NTRU Prime: Reducing Attack Surface at Low Cost. Available online: https://eprint.iacr.org/2016/461 (accessed on 20 November 2020).

- Ducas, L.; Lepoint, T.; Lyubashevsky, V.; Schwabe, P.; Seiler, G.; Stehle, D. CRYSTALS—Dilithium: Digital Signatures from Module Lattices. Available online: https://eprint.iacr.org/2017/633 (accessed on 20 November 2020).

- Fouque, P.A.; Hoffstein, J.; Kirchner, P.; Lyubashevsky, V.; Pornin, T.; Prest, T.; Ricosset, T.; Seiler, G.; Whyte, W.; Zhang, Z. Falcon: Fast-Fourier Lattice-Based Compact Signatures over NTRU. Available online: https://www.di.ens.fr/~prest/Publications/falcon.pdf (accessed on 3 January 2021).

- Supersingular Isogeny Diffie–Hellman Key Exchange (SIDH). Available online: https://en.wikipedia.org/wiki/Supersingular_isogeny_key_exchange (accessed on 4 February 2021).

- Costello, C.; Longa, P.; Naehrig, M. Efficient algorithms for supersingular isogeny Diffie-Hellman. In Proceedings of the Annual International Cryptology Conference, Santa Barbara, CA, USA, 14–18 August 2016.

- Valyukh, V. Performance and Comparison of Post-Quantum Cryptographic Algorithms. 2017. Available online: http://www.liu.se (accessed on 18 August 2020).

- Gaithuru, J.N.; Bakhtiari, M. Insight into the operation of NTRU and a comparative study of NTRU, RSA and ECC public key cryptosystems. In Proceedings of the 2014 8th Malaysian Software Engineering Conference (MySEC), Langkawi, Malaysia, 23–24 September 2014; pp. 273–278.

- Espitau, T.; Fouque, P.A.; Gérard, B.; Tibouchi, M. Side-channel attacks on BLISS lattice-based signatures. In Proceedings of the ACM SIGSAC Conference, Dallas, TX, USA, 30 October–3 November 2017; pp. 1857–1874.

- Petzoldt, A.; Bulygin, S.; Buchmann, J. Selecting parameters for the rainbow signature scheme. Lect. Notes Comput. Sci. 2010, 6061, 218–240.

- Bernstein, D.J.; Hopwood, D.; Hülsing, A.; Lange, T.; Niederhagen, R.; Papachristodoulou, L.; Schneider, M.; Schwabe, P.; Wilcox-O’hearn, Z. SPHINCS: Practical stateless hash-based signatures. Lect. Notes Comput. Sci. 2015, 9056, 368–397.

- Bernstein, D.J.; Dobraunig, C.; Eichlseder, M.; Fluhrer, S.; Gazdag, S.I.; Kampanakis, P.; Lange, T.; Lauridsen, M.M.; Mendel, F.; Niederhagen, R.; et al. SPHINCS+: Submission to the NIST Post-Quantum Project. 2019. Available online: https://sphincs.org/data/sphincs+-round2-specification.pdf#page=6&zoom=100,0,254 (accessed on 15 July 2020).

- Tillich, J. Attacks in Code Based Cryptography: A Survey, New Results and Open Problems. 2018. Available online: www.math.fau.edu/april09.code.based.survey.pdf (accessed on 12 September 2020).

- Repka, M. McELIECE PKC calculator. J. Electr. Eng. 2014, 65, 342–348.

- Post-Quantum Cryptography: A Ten-Year Market and Technology Forecast. 2020. Available online: https://www.researchandmarkets.com/reports/4700915/post-quantum-cryptography-a-ten-year-market-and#relb0-5118342 (accessed on 5 July 2020).

- IBM. Post-Quantum Cryptography. Available online: https://researcher.watson.ibm.com/researcher/view_group.php?id=8231 (accessed on 11 November 2020).

- Microsoft. Post Quantum Cryptography. Available online: https://www.microsoft.com/en-us/research/project/post-quantum-cryptography/ (accessed on 11 November 2020).

- Avaya. Post-Quantum to Team on Identity-as-a-Service. Available online: https://www.avaya.com/en/about-avaya/newsroom/pr-us-1803012c/ (accessed on 28 February 2021).

- Envieta. Post Quantum Consulting. Available online: https://envieta.com/post-quantum-consulting (accessed on 28 February 2021).

- Google and Cloudflare are Testing Post-Quantum Cryptography. Available online: https://www.revyuh.com/news/hardware-and-gadgets/google-cloudflare-testing-post-quantum-cryptography/ (accessed on 9 February 2021).

- Infineon Technologies. Post-Quantum Cryptography: Cybersecurity in Post-Quantum Computer World. Available online: https://www.infineon.com/cms/en/product/promopages/post-quantum-cryptography/ (accessed on 9 February 2021).

- Security Innovation Announces Intent to Create OnBoard Security Inc. Available online: https://www.globenewswire.com/news-release/2017/02/14/917023/0/en/Security-Innovation-Announces-Intent-to-Create-OnBoard-Security-Inc.html (accessed on 10 February 2021).

- Post-Quantum Cryptography|CSRC. Available online: https://csrc.nist.gov/projects/post-quantum-cryptography/post-quantum-cryptography-standardization (accessed on 7 February 2021).

- Alagic, G.; Alperin-Sheriff, J.; Apon, D.; Cooper, D.; Dang, Q.; Kelsey, J.; Liu, Y.; Miller, C.; Moody, D.; Peralta, R.; et al. Status Report on the Second Round of the NIST Post-Quantum Cryptography Standardization Process. Available online: https://csrc.nist.gov/publications/detail/nistir/8309/final (accessed on 3 February 2021).

- Qin, H. Standardization of Quantum Cryptography in ITU-T and ISO/IEC, Qcrypt. 2020. Available online: https://2020.qcrypt.net/slides/Qcrypt2020_ITU_ISO.pdf (accessed on 5 February 2021).

- ETSI ICT Standards. Available online: https://www.etsi.org/standards (accessed on 5 February 2021).

- Chen, L. Preparation of Standardization of Quantum-Resistant Cryptography in ISO/IEC JTC1 SC27. 2018. Available online: https://docbox.etsi.org/Workshop/2018/201811_ETSI_IQC_QUANTUMSAFE/TECHNICAL_TRACK/01worldtour/NICT_Moriai.pdf (accessed on 5 February 2021).

- Shinohara, N.; Moriai, S. Trends in Post-Quantum Cryptography: Cryptosystems for the Quantum Computing Era. 2019. Available online: https://www.ituaj.jp/wp-content/uploads/2019/01/nb31-1_web-05-Special-TrendsPostQuantum.pdf (accessed on 14 July 2020).

- Framework to Integrate Post-quantum Key Exchanges into Internet Key Exchange Protocol Version 2 (IKEv2). Available online: https://tools.ietf.org/id/draft-tjhai-ipsecme-hybrid-qske-ikev2-03.html (accessed on 15 October 2020).

- Paterson, K. Post-Quantum Crypto Standardisation in IETF/IRTF. Available online: www.isg.rhul.ac.uk/~kp (accessed on 3 February 2021).

- libpqcrypto: Intro. Available online: https://libpqcrypto.org/ (accessed on 18 January 2021).

- Quantum-Safe Security|Cloud Security Alliance. Available online: https://cloudsecurityalliance.org/research/working-groups/quantum-safe-security/ (accessed on 5 February 2021).

- Kratochvíl, M. Implementation of Cryptosystem Based on Error-Correcting Codes; Technical Report; Faculty of Mathematics and Physics, Charles University in Prague: Prague, Czech Republic, 2013.

- Stebila, D.; Mosca, M. Post-quantum key exchange for the Internet and the Open Quantum Safe project. Sel. Areas Cryptogr. 2016, 10532, 1–24.

- Minihold, M. Linear Codes and Applications in Cryptography. Master’s Thesis, Vienna University of Technology, Vienna, Austria, 2013.

- Londahl, C. Some Notes on Code-Based Cryptography. Ph.D. Thesis, Lund University, Lund, Sweden, 2015.

- MacWilliams, F.J.; Sloane, N.J.A. The Theory of Error-Correcting Codes; North Holland Publishing Company: Amsterdam, The Netherlands, 1977.

- Gadouleau, M.; Yan, Z. Properties of Codes with Rank Metric. In Proceedings of the IEEE Globecom 2006, San Francisco, CA, USA, 27 November–1 December 2006.

- Penaz, U.M. On the Similarities between Rank and Hamming Weights and their Applications to Network Coding. IEEE Trans. Inf. Theory 2016, 62, 4081–4095.

- Alderson, T.L.; Huntemann, S. On Maximum Lee Distance Codes. J. Discret. Math. 2013, 2013, 625912.

- Valentijn, A. Goppa Codes and Their Use in the McEliece Cryptosystems. 2015. Available online: https://surface.syr.edu/honors_capstone/845/ (accessed on 20 November 2020).

- Maliky, S.A.; Sattar, B.; Abbas, N.A. Multidisciplinary perspectives in cryptology and information security. In Multidisciplinary Perspectives in Cryptology and Information Security; IGI Global: Philadelphia, PA, USA, 2014; pp. 1–443.

- Joachim, R. An Overview to Code Based Cryptography. 2016. Available online: hkumath.hku.hk/~ghan/WAM/Joachim.pdf (accessed on 7 June 2020).

- Roering, C. Coding Theory-Based Cryptography: McEliece Cryptosystems in Sage. 2015. Available online: https://digitalcommons.csbsju.edu/honors_theses/17/ (accessed on 20 November 2020).

- Niederreiter, H. Knapsack-type Cryptosystems and algebraic coding theory. Probl. Control. Inf. Theory 1986, 15, 157–166.

- Heyse, S.; von Maurich, I.; Güneysu, T. Smaller Keys for Code-Based Cryptography: QC-MDPC McEliece Implementations on Embedded Devices. In Cryptographic Hardware and Embedded Systems, CHES 2013. Lecture Notes in Computer Science; Bertoni, G., Ed.; Springer: Berlin/Heidelberg, Germany, 2013; Volume 8086, pp. 273–292.

- von Maurich, I.; Güneysu, T. Lightweight code-based cryptography: QC-MDPC McEliece encryption on reconfigurable devices. In Proceedings of the 2014 Design, Automation Test in Europe Conference Exhibition (DATE), Dresden, Germany, 24–28 March 2014; pp. 1–6.

- Heyse, S.; Güneysu, T. Code-based cryptography on reconfigurable hardware: Tweaking Niederreiter encryption for performance. J. Cryptogr. Eng. 2013, 3, 29–43.

- Courtois, N.T.; Finiasz, M.; Sendrier, N. How to Achieve a McEliece-Based Digital Signature Scheme. In Advances in Cryptology – ASIACRYPT 2001; Springer: Berlin/Heidelberg, Germany, 2001; pp. 157–174.

- Dallot, L. Towards a Concrete Security Proof of Courtois, Finiasz and Sendrier Signature Scheme. In Research in Cryptology WEWoRC 2007; Springer: Berlin/Heidelberg, Germany, 2008; pp. 65–77.

- Stern, J. A new identification scheme based on syndrome decoding. In Advances in Cryptology – CRYPTO’93; Springer: Berlin/Heidelberg, Germany, 1994; pp. 13–21.

- Jain, A.; Krenn, S.; Pietrzak, K.; Tentes, A. Commitments and efficient zero-knowledge proofs from learning parity with noise. In Proceedings of the International Conference on the Theory and Application of Cryptology and Information Security, Beijing, China, 2–6 December 2012; pp. 663–680.

- Cayrel, P.; Alaoui, S.M.E.Y.; Hoffmann, G.; Véron, P. An improved threshold ring signature scheme based on error correcting codes. In Proceedings of the 4th International Conference on Arithmetic of Finite Fields, Bochum, Germany, 16–19 June 2012; pp. 45–63.

- Roy, P.S.; Morozov, K.; Fukushima, K. Evaluation of Code-Based Signature Schemes. 2019. Available online: https://eprint.iacr.org/2019/544 (accessed on 21 January 2021).

- Gaborit, P.; Ruatta, O.; Schrek, J.; Zémor, G. New Results for Rank-Based Cryptography. In Progress in Cryptology—AFRICACRYPT 2014; Springer: Berlin/Heidelberg, Germany, 2014; pp. 1–12.

- Debris-Alazard, T.; Tillich, J. Two attacks on rank metric code based schemes: Ranksign and an IBE scheme. In Advances in Cryptology—ASIACRYPT 2018; Springer: Berlin/Heidelberg, Germany, 2018; pp. 62–92. [Google Scholar]

- Fukushima, K.; Roy, P.S.; Xu, R.; Kiyomoto, K.M.S.; Takagi, T. RaCoSS: Random Code-Based Signature Scheme, 2017. Available online: https://eprint.iacr.org/2018/831 (accessed on 20 November 2020).

- Bernstein, D.J.; Hulsing, A.; Lange, T.; Panny, L. Comments on RaCoSS, a Submission to NIST’s PQC Competition, 2017. Available online: https://helaas.org/racoss/ (accessed on 20 November 2020).

- Roy, P.S.; Morozov, K.; Fukushima, K.; Kiyomoto, S.; Takagi, T. Code-Based Signature Scheme Without Trapdoors. Int. Assoc. Cryptologic Res. 2018. Available online: https://eprint.iacr.org/2021/294 (accessed on 20 November 2020).

- Xagawa, K. Practical Attack on RaCoSS-R. Int. Assoc. Cryptologic Res. 2018, 831. Available online: https://eprint.iacr.org/2018/831 (accessed on 20 November 2020).

- Persichetti, E. Efficient one-time signatures from quasi-cyclic codes: A full treatment. Cryptography 2018, 2, 30. [Google Scholar] [CrossRef] [Green Version]

- Li, Z.; Xing, C.; Yeo, S.L. A New Code Based Signature Scheme without Trapdoors. Available online: https://eprint.iacr.org/2020/1250 (accessed on 20 November 2020).

- Santini, P.; Baldi, M.; Chiaraluce, F. Cryptanalysis of a one-time code-based digital signature scheme. In Proceedings of the IEEE International Symposium on Information Theory, Paris, France, 7–12 September 2019; pp. 2594–2598. [Google Scholar]

- Deneuville, J.; Gaborit, P. Cryptanalysis of a code-based one-time signature. Des. Codes Cryptogr. 2020, 88, 1857–1866. [Google Scholar] [CrossRef]

- Aragon, N.; Blazy, O.; Gaborit, P.; Hauteville, A.; Zémor, G. Durandal: A rank metric based signature scheme. In Advances in Cryptology—EUROCRYPT 2019; Springer: Berlin/Heidelberg, Germany, 2019; pp. 728–758. [Google Scholar]

- Debris-Alazard, T.; Sendrier, N.; Tillich, J. Wave: A new family of trapdoor one-way preimage sampleable functions based on codes. In Advances in Cryptology—ASIACRYPT 2019; Springer: Berlin/Heidelberg, Germany, 2019; pp. 21–51. [Google Scholar]

- Cayrel, P.; Gueye, C.T.; Ndiaye, O.; Niebuhr, R. Critical attacks in Code based cryptography. Int. J. Inf. Coding Theory 2015, 3, 158–176. [Google Scholar] [CrossRef]

- Niebuhr, R. Attacking and Defending Code-based Cryptosystems. Ph.D. Thesis, Vom Fachbereich Informatik der Technischen Universität Darmstadt, Darmstadt, Germany, 2012. [Google Scholar]

- Niebuhr, R.; Cayrel, P.L. Broadcast attacks against code-based encryption schemes. In Research in Cryptology; Springer: Berlin/Heidelberg, Germany, 2011. [Google Scholar]

- Niebuhr, R. Application of Algebraic-Geometric Codes in Cryptography. Technical Report; Fachbereich Mathematik der Technischen Universität Darmstadt: Darmstadt, Germany, 2006. [Google Scholar]

- Sidelnikov, V.; Shestakov, S. On the insecurity of cryptosystems based on generalized Reed-Solomon Codes. Discret. Math. 1992, 1, 439–444. [Google Scholar] [CrossRef]

- Kobara, K.; Imai, H. Semantically Secure McEliece Public-key Cryptosystems—Conversions for McEliece PKC. In Proceedings of the 4th International Workshop on Practice and Theory in Public Key Cryptosystems, Cheju Island, Korea, 13–15 February 2001; pp. 19–35. [Google Scholar]

- Wagner, D. A generalized birthday problem. In Advances in Cryptology—CRYPTO 2002; Springer: Berlin/Heidelberg, Germany, 2002; pp. 288–303. [Google Scholar]

- Sendrier, N. Finding the permutation between equivalent linear codes: The support splitting algorithm. IEEE Trans. Inf. Theory 2000, 46, 1193–1203. [Google Scholar] [CrossRef]

- Overbeck, R.; Sendrier, N. Code-Based Cryptography; Springer: Berlin/Heidelberg, Germany, 2008; pp. 95–146. [Google Scholar]

- Bernstein, D.J.; Buchmann, J.; Dahmen, E. Post-Quantum Cryptography; Springer: Berlin/Heidelberg, Germany, 2008. [Google Scholar]

- Repka, M.; Cayrel, P.L. Cryptography Based on Error Correcting Codes: A Survey; IGI Global: Philadelphia, PA, USA, 2014. [Google Scholar]

- PQCRYPTO. Post-Quantum Cryptography for Long-Term Security; Technical Report; Project number: Horizon 2020 ICT-645622; Technische Universiteit Eindhoven: Eindhoven, The Netherlands, 2015. [Google Scholar]

- Bucerzan, D.; Dragoi, V.; Kalachi, H. Evolution of the McEliece Public Key Encryption Scheme. In International Conference for Information Technology and Communications SecITC 2017; Springer: Berlin/Heidelberg, Germany, 2017; pp. 129–149. [Google Scholar]

- Drăgoi, V.; Richmond, T.; Bucerzan, D.; Legay, A. Survey on Cryptanalysis of Code-Based Cryptography: From Theoretical to Physical Attacks. In Proceedings of the 7th International Conference on Computers Communications and Control (ICCCC), Oradea, Romania, 11–15 May 2018. [Google Scholar]

- Best Codes: 27 Steps—Instructables. Available online: https://www.instructables.com/Best-Codes/ (accessed on 18 November 2020).

- Hussain, U.N. A Novel String Matrix Modeling Based DNA Computing Inspired Cryptosystem. Ph.D. Thesis, Pondicherry University, Puducherry, India, 2016. [Google Scholar]

- Kumar, P.V.; Aluvalu, R. Key policy attribute based encryption (KP-ABE): A review. Int. J. Innov. Emerg. Res. Eng. 2015, 2, 49–52. [Google Scholar]

- Gentry, C. A Fully Homomorphic Encryption Scheme. Ph.D. Thesis, Stanford University, Stanford, CA, USA, 2009. [Google Scholar]

- Zhao, C.; Yang, Y.-T.; Li, Z.-C. The homomorphic properties of McEliece public-key cryptosystem. In Proceedings of the 2012 Fourth International Conference on Multimedia Information Networking and Security, Nanjing, China, 2–4 November 2012; pp. 39–42. [Google Scholar]

- Line Coding Techniques. Available online: https://technologyuk.net/telecommunications/telecom-principles/line-coding-techniques.shtml (accessed on 16 November 2020).

- Huffman, D.A. A method for the construction of minimum-redundancy codes. Proc. IRE 1952, 40, 1098–1101. [Google Scholar] [CrossRef]

- Carron, L.P. Morse Code: The Essential Language; Radio Amateur’s Library, American Radio Relay League: Newington, CT, USA, 1986; ISBN 0-87259-035-6. [Google Scholar]

- ASCII—Wikipedia. Available online: https://en.wikipedia.org/wiki/ASCII (accessed on 20 November 2020).

- Unicode—Wikipedia. Available online: https://en.wikipedia.org/wiki/Unicode (accessed on 18 November 2020).

- Number Systems (Binary, Octal, Decimal, Hexadecimal). Available online: https://www.mathemania.com/lesson/number-systems/ (accessed on 19 November 2020).

- HTML Codes. Available online: https://www.html.am/html-codes/ (accessed on 18 November 2020).

- QR Code—Wikipedia. Available online: https://en.wikipedia.org/wiki/QR_code (accessed on 18 November 2020).

- Barcode—Wikipedia. Available online: https://en.wikipedia.org/wiki/Barcode (accessed on 19 November 2020).

- Categorical Data Encoding Techniques to Boost Your Model in Python! Available online: https://www.analyticsvidhya.com/blog/2020/08/types-of-categorical-data-encoding/ (accessed on 16 November 2020).

- Categorical Feature Encoding in SAS (Bayesian Encoders)—Selerity. Available online: https://seleritysas.com/blog/2021/02/19/categorical-feature-encoding-in-sas-bayesian-encoders/ (accessed on 16 November 2020).

- Braille—Wikipedia. Available online: https://en.wikipedia.org/wiki/Braille (accessed on 20 November 2020).

- Peikert, C. A Decade of Lattice Cryptography; Now Foundations and Trends: Norwell, MA, USA, 2016. [Google Scholar]

- Campello, A.; Jorge, G.; Costa, S. Decoding q-ary lattices in the Lee metric. In Proceedings of the 2011 IEEE Information Theory Workshop, Paraty, Brazil, 16–20 October 2011. [Google Scholar] [CrossRef]

| Serial Number | Post-Quantum Algorithm Category | Post-Quantum Algorithms Available in This Category | Name of Most Prevalent Algorithm | Type of Algorithm (E = Encryption, S = Signature, K = Key Exchange) | Public Key Size | Private Key Size | Signature Size | Strengths | Weaknesses | Included in Open-Source Library liboqs | Attacks | Other Detailed Comparisons |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | Lattice-Based Cryptography | 1. Encryption/Decryption 2. Signature 3. Key Exchange (RLWE) | NTRU Encrypt | E | 6130 B | 6743 B | – | 1. More efficient encryption and decryption in both hardware and software implementations 2. Much faster key generation, allowing the use of disposable keys 3. Low memory use, which suits applications such as mobile devices and smart cards | 1. Complexity is high in NTRU 2. A validly created ciphertext may fail to decrypt | ✓ | Brute-force attack, meet-in-the-middle attack, lattice reduction attack, chosen-ciphertext attack | Gaithru et al. 2014 [43] |
| | | | BLISS II (Bimodal Lattice Signature Scheme) | S | 7 KB | 2 KB | 5 KB | | | ✗ | Side-channel attack, branch tracing attack, rejection sampling, scalar product leakage | Espitau et al. 2017 [44] |
| 2 | Multivariate | Signature only | Rainbow | S | 124 KB | 95 KB | 424 KB | Based on the difficulty of solving systems of multivariate equations | Only a signature scheme is available | ✓ | Direct attack, MinRank attack, HighRank attack, UOV attack, UOV reconciliation attack, attacks against the hash function | Petzoldt et al. 2010 [45] |
| 3 | Hash-Based Signature | Signature only | SPHINCS | S | 1 KB | 1 KB | 41 KB | 1. Best alternative to number-theoretic signatures 2. Small and medium-size signatures 3. Small key size | 1. Only a signature scheme is available 2. Speed | ✗ | Subset resilience, one-wayness, second-preimage resistance, PRG, PRF and undetectability, fault injection attacks | Bernstein et al. 2015 [46] |
| | | | SPHINCS+ | S | 32 B | 64 B | 8 KB | | | ✓ | Distinct-function multi-target second-preimage resistance, pseudorandomness (of function families), interleaved target subset resilience, timing attack, differential and fault attacks | Bernstein et al. 2019 [47] |
| 4 | Supersingular elliptic curve isogeny cryptography | Key exchange only | Supersingular Isogeny Diffie-Hellman (SIDH) | K | 751 B; 564 (compressed SIDH) | 48 B; 48 (compressed SIDH) | – | Based on the difficulty of computing isogenies between supersingular elliptic curves, which is immune to quantum attacks | Cannot be used for non-interactive key exchange; can only be safely used with CCA2 protection | ✓ | Side-channel attacks, auxiliary points active attack, adaptive attack | Costello et al. 2016 [41] |
| 5 | Code-Based Cryptography | 1. Encryption/Decryption 2. Signature | Classic McEliece Cryptosystem | E | 1 MB | 11.5 KB | – | One of the cryptosystems that advanced to the third round of the NIST post-quantum algorithm standardization process | Very large key size | ✓ | Structural attack, key recovery attack, squaring attack, power analysis attack, side-channel attack, reaction attack, distinguishing attack, message recovery attack | Tillich 2018 [48], Repka et al. 2014 [49] |

| Serial Number | Name of the Organization | Country | Type of PQC Work Involved | Algorithms Used | Collaborator | PQC Product Developed |
|---|---|---|---|---|---|---|
| 1. | Avaya [53] | USA | PQC-based products | | Post-Quantum | Quantum-safe messaging, voice calls and document sharing |
| 2. | Envieta Systems [54] | USA | PQC products, PQC consulting | | – | Hardware and software post-quantum implementation cores, including cores for embedded systems |
| 3. | Google [55] | USA | PQC products | HRSS-SXY (variant of NTRU encryption) and SIKE (supersingular isogeny key exchange) | Cloudflare | Post-quantum cryptography encryption and signature methods for the Chrome browser |
| 4. | IBM [51] | Switzerland | R&D | Lattice-based cryptography | – | Quantum-safe cloud and systems |
| 5. | Infineon [56] | Germany | PQC products | Variant of the New Hope algorithm | – | Implemented a post-quantum key exchange scheme on a commercially available contactless smart card chip; post-quantum security for government identity documents, ICT technology, automotive security, communication protocols |
| 6. | Isara [56] | Canada | PQC products | Hierarchical Signature Scheme (HSS) and eXtended Merkle Signature Scheme (XMSS) | Futurex, Post-Quantum | ISARA Radiate Quantum-safe Toolkit, a high-performance, lightweight, standards-based quantum-safe software development kit built for developers who want to test and integrate next-generation post-quantum cryptography into their commercial products |
| 7. | Microsoft Research [52] | USA | PQC products | FrodoKEM, SIKE, Picnic, qTESLA | – | Post-quantum SSH, TLS, VPN |
| 8. | Qualcomm/OnBoard Security [57] | USA | PQC-based products | pqNTRUsign | OnBoard Security | OnBoard Security has developed a digital signature algorithm that can resist all known quantum computing attacks. pqNTRUsign will replace RSA and ECDSA, the most commonly used quantum-vulnerable signature schemes. |

| Post-Quantum Algorithm Type | Third Round Finalist | Technology | Alternate Candidates | Technology |
|---|---|---|---|---|
| Public Key Encryption/Key Encapsulation Mechanisms | Classic McEliece | Code | BIKE | Code |
| | CRYSTALS-KYBER | Lattice | FrodoKEM | Lattice |
| | NTRU | Lattice | HQC | Code |
| | SABER | Lattice | SIKE | Supersingular Isogeny |
| Digital Signature Algorithms | CRYSTALS-DILITHIUM | Lattice | GeMSS | Multivariate Polynomial |
| | FALCON | Lattice | PICNIC | Other |
| | RAINBOW | Multivariate Polynomial | SPHINCS+ | Hash |

| Standardization Body | Country | Focus Area of Standardization in PQC | Function Standards/QoS Standards | Status |
|---|---|---|---|---|
| NIST | USA | Quantum-resistant algorithm standardization for cryptography, key encapsulation mechanisms, digital signatures | Function standards | Rounds 1 and 2 of the standardization process are completed; Round 3 is in progress |
| ETSI | Europe | Quantum-safe cryptography, quantum key distribution | Function standards | Published standards |
| ISO | NA (a non-governmental organization) | Quantum key distribution | Function standards | Work in progress; standards yet to be published |
| ITU | A specialized agency of the United Nations | Quantum key distribution | Function and QoS standards | Only two standards published; others are work in progress |
| CRYPTREC | Japan | Quantum-resistant algorithm standardization for cryptography, key encapsulation mechanisms, digital signatures | Function standards | The list of standardized algorithms is expected to be published between 2022 and 2024 |

| Serial Number | Code-Based Cryptography | Technique & Codes Used | Applied to |
|---|---|---|---|
| 1 | McEliece | Binary Goppa codes, GRS, concatenated codes, product codes, quasi-cyclic codes, Reed-Muller codes, rank metric (Gabidulin) codes, LDPC, MDPC, generalized Srivastava codes | Computing systems, embedded devices [81], FPGA systems [82] |
| 2 | Niederreiter | GRS codes, quasi-cyclic codes, binary Goppa codes | Computing systems, FPGA systems [83] |

| | Stern | Jain et al. | Cayrel et al. |
|---|---|---|---|
| Keygen | 0.0170 ms | 0.0201 ms | 0.339 ms |
| Sign | 31.5 ms | 16.5 ms | 24.3 ms |
| Verify | 2.27 ms | 135 ms | 9.81 ms |
| sk | 1.24 bits | 1536 bits | 1840 bits |
| pk | 512 bits | 1024 bits | 920 bits |
| System params | 65.5 kB | 65.5 kB | 229 kB |
| Signature | 245 kB | 263 kB | 229 kB |

| Serial Number | Purpose of Encoding | Code | Type of Code | Properties to Be Fulfilled for Encoding | | | | | | | | | Support |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | | | | Complete Character Set Encoding | Representation, Manipulation and Storage in Digital Systems | Data Hiding | Support Multiple Data Types | Dynamic Encoding | Variable Encoding | Hard Decoding | Randomness of Encoding | Possible for Decoding | |
| 1 | To encode data for digital data communication | All codes generated using line coding techniques [121] | Binary data encoded to digital signals | ✓ | ✓ | ✓ | ✓ | ✗ | ✗ | ✗ | ✗ | ✓ | ✗ |
| 2 | To encode data for digital data communication with error correction capabilities [121] | All codes generated using block codes and convolutional coding techniques [72] | Binary data encoded to linear or non-linear codes with error detection and correction capabilities | ✓ | ✓ | ✓ | ✓ | ✗ | ✗ | ✓ | ✗ | ✓ | ✗ |
| 3 | To encode data in a compressed format for faster message communication | Huffman Codes [122] | Alphabets/character set encoded to a compressed code | ✓ | ✓ | ✓ | NA | ✗ | ✗ | ✓ | ✗ | ✓ | ✗ |
| | | Morse Code [123] | (a) Alphabets/character set encoded to a compressed code; (b) Alphabets/character set encoded to image(s)/symbol(s)/pattern(s) | ✓ | ✓ | ✓ | NA | ✗ | ✗ | ✓ | ✓ | ✗ | |
| 4 | To represent data in a digital system | ASCII, Unicode, … [124,125] | Alphabets/character set encoded to a number | ✓ | ✓ | ✓ | ✗ | ✗ | ✗ | ✗ | ✗ | ✓ | ✗ |
| 5 | To store and manipulate data in a digital system | Binary, BCD, Hexadecimal, Octal [126] | Alphabets/character set encoded to binary/BCD/hexadecimal/octal through ordinal encoding | ✓ | ✓ | ✓ | ✓ | ✗ | ✗ | ✗ | ✗ | ✓ | ✗ |
| 6 | Programmatic representation of character set | HTML Code [127] | Alphabets/character set encoded to a hexadecimal number | ✓ | ✗ | ✓ | ✗ | ✗ | ✗ | ✗ | ✗ | ✓ | ✗ |
| 7 | To communicate digital data confidentially | Atbash, Caesar Cipher, Columnar Cipher, Combination Cipher, Grid Transposition Cipher, Keyboard Code, Phone Code, Rot Cipher, Rout Cipher [116] | Alphabets encoded to another alphabet | ✗ | ✓ | ✓ | ✗ | ✗ | ✗ | ✗ | ✗ | ✓ | ✗ |
| | | A1Z26 | Alphabets encoded to a number | ✗ | ✓ | ✓ | ✗ | ✗ | ✗ | ✗ | ✗ | ✓ | ✗ |
| | | QR Code, Bar Code, Dice Cipher, Digraph Cipher, Dorabella Cipher, Rosicrucian Cipher, Pigpen Cipher [116,128,129] | Alphabets/character set encoded to image(s)/symbol(s)/pattern(s) | ✗ | ✓ | ✓ | ✗ | ✗ | ✗ | ✗ | ✗ | ✓ | ✗ |
| | | Francis Bacon Code [116] | Alphabets/character set encoded to a sequence of alphabets | ✗ | ✓ | ✓ | ✗ | ✗ | ✗ | ✗ | ✗ | ✓ | ✗ |
| | | DNA Code [117] | Alphabets/character set encoded to a sequence of alphabets | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| 8 | To represent data or data set features to be used in machine learning | Ordinal Code or Label Code | Data encoded to a non-numerical label | NA | ✓ | ✓ | NA | NA | NA | NA | NA | ✓ | ✗ |
| | | One Hot Encoding, Dummy Encoding, Effect Encoding, Binary Encoding, Base N Encoding, Multi-Label Binarizer, DictVectorizer [130] | Data is converted to a vector | NA | ✓ | ✓ | NA | NA | NA | NA | NA | ✓ | ✗ |
| | | Hash Encoding [130] | Data is converted to its hash value | NA | ✓ | ✓ | NA | NA | NA | NA | NA | ✗ | ✗ |
| | | Bayesian Encoding [131] | Data is encoded to its average value | NA | ✓ | NA | NA | NA | NA | NA | NA | ✗ | |
| 9 | To represent data in a format comprehensible for visually challenged persons | Braille Code [132] | Alphabets/character set encoded to image(s)/symbol(s)/pattern(s) | ✓ | ✓ | ✓ | NA | ✗ | ✗ | ✗ | ✗ | ✓ | ✗ |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Balamurugan, C.; Singh, K.; Ganesan, G.; Rajarajan, M. Post-Quantum and Code-Based Cryptography—Some Prospective Research Directions. Cryptography 2021, 5, 38. https://doi.org/10.3390/cryptography5040038