1. Introduction

Life is rich with challenges, decision-making, and questions we pose to ourselves. Decision-making occurs within a context whose characteristics we will refer to as The Setting.

Ethics is a discipline concerned with moral values and norms, that is, with what is right and wrong. Norms define standards of acceptable behavior within groups. Specific ethical systems, through their norms (computable conventions), constrain and partially solve the problem of life. The importance of ethics for society is paramount, as no social group can stay cohesive and in existence if there are no constraints on the behavior of individuals. For example, frequent, unprovoked escalations and attacks that kill or injure others would dissolve any group. The authors of [1] refer to morality as pro- or anti-social norms with direct benefit or cost to others (e.g., theft, murder, generosity, sharing).

Significant technological and cultural advancements have occurred throughout the last millennia of human history, and the speed with which these changes arrived was accelerating. However, it was still a pedestrian pace compared to the changes coming with greater connectivity (internet), stronger computation (Moore’s and descendant laws), cognitively powerful non-human entities (artificial intelligence), and many other disruptive technologies made possible by those. Strong computation and algorithms introduce powerful, flexible, and fast-changing entities into society, while connectivity diffuses the effects of their actions to all corners of the world. All social groups will become paired with these artificial entities, and social adaptation and integration will, due to the speed of changes, be tested as never before. Technology that is the source of difficulties in the first place can, through its dual use, also be used to help alleviate the problem. Wittgenstein suggested a pragmatic view on language development through language games [2]. We wish to pursue a similar line of thought with ethics and investigate its properties from the computational perspective. We shall tease out different properties that might help in modeling, simulating, and potentially innovating ethical systems that will circumvent issues and deliver us to the good side of future history.

Cooperation has been thoroughly researched and surveyed from the perspective of the social [3,4] and natural sciences [5]. The former have approached the problem from the top through empirical studies on people. They face interpretation problems because scarce results under-constrain the studied complex setting and leave a multitude of plausible interpretations. Social physicists have approached the problem bottom-up by researching the evolution of cooperation in a simplified utilitarian setting of social dilemma games with low strategic complexity. This narrow focus has enabled them to establish a wealth of rigorous conclusions. However, their applicability to realistic cases is quite limited for several reasons. Simplifying assumptions that need to be made for computational reasons also limit the transfer of results to other situations. Cooperation in social dilemma games is only one form of a more general class of moral behavior [6]. Preferences of agents in some situations cannot be entirely explained by the monetary outcomes of games; following personal norms can offer a better explanation [7]. Moreover, Bowles [8] claims that incentives and social preferences are not separable, and that the former affect the latter. Additionally, Broome [9] criticizes approaches that assume a single objective that affects each agent’s decision-making. Although cooperation is now much better understood, there are no conclusive answers to essential questions about cooperation and ethics.

Design for values (value-sensitive and ethically aligned) is an application of ethics that calls for responsible innovation in the face of accelerating progress that strains the existing social fabric [10,11]. We hold that with the increasing complexity of technology, we are hitting the limits of inference, such as unverifiability and limits to explainability [12]. These limits make that well-intentioned proposition infeasible in the long term in its current form, because value alignment with cognitively superior agents is still a wide-open problem.

The contributions of this paper are:

We offer a review of work in different fields related to investigating cooperation and ethics.

We pivot from the existing practice by focusing on ethics as the first-class mechanism, teasing out its general properties to provide common ground for future interdisciplinary investigations. Additionally, we enrich the description with a computational perspective that relates to computational efficiency. The research in social physics has been narrowly focused and sometimes off-mark by focusing on problems that do not possess these properties. It aimed to show conditions and mechanisms under which cooperation emerges from unbiased and simplified non-cooperative agents. Such generality is a vital a priori requirement, and the found conditions may not even be aligned with our current situation. On the other hand, we accept and carefully describe the current position where humans have significant prosocial bias.

We argue for more intentional moral innovation to prepare for coexistence with cognitively superior agents. Current ethics, which has so far emerged collaterally, has some deficient properties that make value alignment with advanced technologies even more challenging. We can even use technology for meet-in-the-middle approaches to value alignment. Based on computational complexity considerations, we provide a few pointers regarding how this can be done.

Section 2 deals with the basic properties of ethics. Section 3 considers modeling situations/decisions posing ethical dilemmas through game theory and multi-agent systems. Section 4 deals with ethics and its importance in group coordination. Furthermore, it elaborates on the algorithmic role and utility of human values in group coordination. Evolutionary game theory as a modeling basis for ethics is described in Section 5. In Section 6, we dive into the advanced properties of ethics that deal with global inconsistencies and the fine balance between competition and cooperation in groups. Conclusions are drawn in Section 7 and future directions are proposed in Section 8.

3. Ethics, Multiagency and Games

Game theory is a branch of science that deals with interactions between different actors, which is precisely the level at which ethics operates. Classical Game Theory (CGT) is based on rationality and just-in-time computation interleaved with acting, and it assumes an unrealistic amount of information and computing. CGT enables simple interepisodic learning (memory) on the level of an individual. Evolutionary Game Theory (EGT) in the classical form is an application of game theory to evolving populations, and it does not require rationality. It is a form of evolutionary policy search where the genotype completely describes the lifetime behavior (phenotype). Hence, “learning” in EGT is populational and intergenerational. Multi-Agent Reinforcement Learning (MARL) [38,39] is a more modern framework than the previous two. It enables more complex and structured strategic learning on the level of individuals during their lifetime. It scales to more complex group dynamics and strategies than CGT, and, compared to EGT, it adds individual and lifetime learning.

It is plausible that ethics has arisen due to evolutionary processes that game theory can model. Therefore, it can be represented by an evolutionary model containing a representation of the population’s state and a dynamic set of laws influencing the state changes over time. Different mechanisms have been used to explain the rise of cooperation, norms, and ethics in societies: kinship altruism, direct reciprocity, indirect reciprocity, network reciprocity, group selection, and many others [6,40,41]. They have been analyzed from different perspectives, including those of biologists, political scientists, anthropologists, sociologists, social physicists, and economists. The following three concepts are crucial for our exposition. A Nash equilibrium is a strategy profile from which unilateral deviation would not be profitable for any player. An Evolutionarily Stable Strategy (ESS) is a refinement of Nash equilibrium that is evolutionarily stable: a population adopting it cannot be invaded by a mutant strategy through natural selection. Finally, correlated equilibrium is a generalization of Nash equilibrium that emerges in the presence of a correlation device.
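The definition of Nash equilibrium above can be checked mechanically for small games. The following is a minimal sketch, not a method from the cited literature: the function name `pure_nash_equilibria` is hypothetical, and the Prisoner’s Dilemma payoffs (5, 3, 1, 0) are assumed purely for illustration. It enumerates pure-strategy profiles and keeps those from which no player gains by deviating unilaterally.

```python
from itertools import product


def pure_nash_equilibria(payoff_row, payoff_col):
    """Return all pure-strategy profiles (i, j) from which neither
    player can gain by a unilateral deviation."""
    n_rows, n_cols = len(payoff_row), len(payoff_row[0])
    equilibria = []
    for i, j in product(range(n_rows), range(n_cols)):
        # Row player compares strategy i with every alternative k, holding j fixed.
        row_ok = all(payoff_row[i][j] >= payoff_row[k][j] for k in range(n_rows))
        # Column player compares strategy j with every alternative k, holding i fixed.
        col_ok = all(payoff_col[i][j] >= payoff_col[i][k] for k in range(n_cols))
        if row_ok and col_ok:
            equilibria.append((i, j))
    return equilibria


# Assumed Prisoner's Dilemma payoffs; strategy 0 = cooperate, 1 = defect.
PD_ROW = [[3, 0], [5, 1]]
PD_COL = [[3, 5], [0, 1]]

pd_equilibria = pure_nash_equilibria(PD_ROW, PD_COL)
print(pd_equilibria)
```

Under these assumed payoffs, mutual defection is the only pure-strategy equilibrium of the one-shot game, which is the baseline against which the cooperation mechanisms listed above are usually studied.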

3.1. Examples of Games

Many games are used in the literature for theoretical analysis [5,7] and behavioral experiments [4]. Here, we give several examples with results obtained from them.

The Prisoner’s Dilemma (PD) is one of the fundamental problems of game theory, and it shows a remarkable property that can be connected to emergent ethics based on direct reciprocity [17]. This problem exemplifies pure competition, which is the most challenging environment for cooperation. Namely, in the case of a single-iteration PD game, the maximum benefit comes from selfish play, that is, from betraying a cooperating partner. However, when the problem is changed to a multi-iteration prisoner’s dilemma, we can get cooperation between partners as stable and optimal behavior. By the folk theorem, iterated PD has an abundance of Nash equilibria, and which of them is reached depends sensitively on the specifics of the environment [5,42], with both defection/extortion [43] and generosity [44] being possible dominant solutions.
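The contrast between the single-iteration and the multi-iteration game can be illustrated with a short simulation. This is a sketch under stated assumptions: the payoff values (5, 3, 1, 0) and the two strategies, tit-for-tat and unconditional defection, are chosen for illustration and are not taken from the cited works.

```python
# Assumed payoffs from the point of view of the first move in the key:
# temptation 5, reward 3, punishment 1, sucker's payoff 0.
PAYOFF = {("C", "C"): 3, ("C", "D"): 0, ("D", "C"): 5, ("D", "D"): 1}


def tit_for_tat(own_history, other_history):
    """Cooperate first, then copy the partner's previous move."""
    return "C" if not other_history else other_history[-1]


def always_defect(own_history, other_history):
    """Defect unconditionally."""
    return "D"


def play(strategy_a, strategy_b, rounds):
    """Play an iterated Prisoner's Dilemma and return both total scores."""
    hist_a, hist_b = [], []
    score_a = score_b = 0
    for _ in range(rounds):
        move_a = strategy_a(hist_a, hist_b)
        move_b = strategy_b(hist_b, hist_a)
        score_a += PAYOFF[(move_a, move_b)]
        score_b += PAYOFF[(move_b, move_a)]
        hist_a.append(move_a)
        hist_b.append(move_b)
    return score_a, score_b


print(play(always_defect, tit_for_tat, 1))   # one-shot: defection pays
print(play(always_defect, tit_for_tat, 10))  # repeated: defection is reciprocated
print(play(tit_for_tat, tit_for_tat, 10))    # repeated: mutual cooperation
```

In the one-shot case the defector earns more than mutual cooperation would give, while over ten rounds a defector facing a reciprocating partner earns less than two reciprocators earn together, which is the direct-reciprocity effect described above.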

Cooperative behavior can also be observed in different contexts, such as where neighbors settle disputes in ways that are not achievable between strangers [45]. In repeated play, selfishness is penalized because the partner has insight into the player’s past moves and can reciprocate directly, which makes selfishness unprofitable. Nowadays, however, we also have tools such as Internet reputations and social media ratings that are publicly available, giving us insight into players’ past moves without having previously played with them.

The Stag Hunt (SH) problem in game theory originated from Rousseau’s Discourse on Inequality as a prototype of the social contract. It describes the trade-off between safety and cooperation to achieve a larger individual gain [46]. Unlike the PD problem, where an individual’s rationality and mutual benefit are in conflict, in the SH problem the rational decision is almost entirely a product of beliefs about what the other player will do. If both players employ the same strategy, both mutual stag hunting and mutual hare hunting are stable outcomes, with stag hunting yielding the larger payoff. However, a player who chooses to hunt stag risks that the other player will not cooperate. On the other hand, a player choosing to hunt hare is not faced with such a risk since the other player’s actions do not influence his outcome, meaning rational players face a dilemma between mutual benefit and personal risk [47].
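The dependence of the rational choice on beliefs can be made explicit with a small calculation. This is a minimal sketch; the payoff constants (4 for a joint stag hunt, 0 for hunting stag alone, 3 for hare regardless of the partner) are assumed for illustration only, and the function names are hypothetical.

```python
from fractions import Fraction

# Assumed payoffs: hare pays the same whatever the partner does.
STAG_TOGETHER = 4
STAG_ALONE = 0
HARE = 3


def expected_payoffs(p_other_hunts_stag):
    """Expected payoff of (stag, hare) given the belief that the
    partner hunts stag with probability p."""
    p = Fraction(p_other_hunts_stag)
    stag = p * STAG_TOGETHER + (1 - p) * STAG_ALONE
    return stag, Fraction(HARE)


def best_response(p_other_hunts_stag):
    """Return the action with the higher expected payoff."""
    stag, hare = expected_payoffs(p_other_hunts_stag)
    if stag > hare:
        return "stag"
    if stag < hare:
        return "hare"
    return "indifferent"


# Belief at which both actions have the same expected payoff.
threshold = Fraction(HARE - STAG_ALONE, STAG_TOGETHER - STAG_ALONE)

print(threshold)
print(best_response(Fraction(9, 10)), best_response(Fraction(1, 2)))
```

With these numbers, hunting stag is the better reply only when the player is sufficiently confident that the partner will also hunt stag; below the threshold belief, the safe option dominates.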

Fair division theory deals with procedures for dividing a bundle of goods among n players where each has equal rights to the goods. Comparing which procedure is the most equitable gives insight into popular notions of equity [48]. The modern theories of fair division are used for various purposes, such as division of inheritance, divorce settlement, and frequency allocation in electronics. The most common division procedure is divide and choose, used for a fair division of continuous resources. Steinhaus describes it with an example of dividing a cake between two people, where the first person cuts the cake into two pieces and the second person selects one of the pieces; the first person then receives the remaining piece [49]. Such a game belongs to the field of mechanism design, where the setting of the game gives players an incentive to achieve the desired outcome [50]. However, the procedure proposed by Steinhaus does not always yield fairness in more complex settings since a person might behave more greedily to acquire more of the goods he desires. A procedure is considered fair if the goods are allocated in such a manner that no person prefers the other person’s share [51].
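The divide-and-choose procedure and the no-envy criterion can be sketched on a discretized cake. The setup below is an assumption made for illustration: the cake is a row of slices, each person has a private value for each slice, the cutter may only cut between slices, and the function names are hypothetical. With a discrete cake the cutter cannot always split exactly in half, so the example uses valuations for which an exact split exists.

```python
def piece_value(valuations, piece):
    """Value of a contiguous piece (start, end) under the given valuations."""
    start, end = piece
    return sum(valuations[start:end])


def divide_and_choose(cutter_vals, chooser_vals):
    """Cutter splits the row of slices as evenly as possible by the cutter's
    own valuation; chooser takes the piece the chooser values more."""
    n = len(cutter_vals)
    cut = min(
        range(1, n),
        key=lambda k: abs(sum(cutter_vals[:k]) - sum(cutter_vals[k:])),
    )
    left, right = (0, cut), (cut, n)
    if piece_value(chooser_vals, left) >= piece_value(chooser_vals, right):
        return right, left  # (cutter_piece, chooser_piece)
    return left, right


def is_envy_free(cutter_vals, chooser_vals, cutter_piece, chooser_piece):
    """True if neither person values the other's piece above their own."""
    cutter_ok = piece_value(cutter_vals, cutter_piece) >= piece_value(cutter_vals, chooser_piece)
    chooser_ok = piece_value(chooser_vals, chooser_piece) >= piece_value(chooser_vals, cutter_piece)
    return cutter_ok and chooser_ok


cutter = [1, 1, 1, 1]   # assumed: cutter values all slices equally
chooser = [3, 1, 0, 0]  # assumed: chooser cares mostly about the first slice
cutter_piece, chooser_piece = divide_and_choose(cutter, chooser)
print(cutter_piece, chooser_piece, is_envy_free(cutter, chooser, cutter_piece, chooser_piece))
```

The incentive structure is visible in the code: the cutter minimizes the difference between the pieces because the chooser moves second and will take whichever piece the chooser prefers.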

EGT shows in several examples, e.g., PD, Hawk-Dove, and Stag Hunt [15], that cooperation tends to be the better approach in the long run (an iterated, relational game), whereas selfishness tends to be better in the single-step (transactional) version of the game. These results for repeated games depend on the settings of the problems and on the use of different mechanisms that support the emergence of cooperation [6].

3.2. Modelling Choices

Two main choices are given when representing the population: continuous or discrete models. Continuous (aggregative) models describe the population using global statistics. The distribution of the genotypes and phenotypes in the population represents the individual’s inherited behavior and the influence of the environment on the individual, respectively. Since the population’s state is described as frequency data, the differences between individuals are lost in such a model. On the other hand, the discrete (agent-based) models maintain each individual’s genotype/phenotype information in addition to other properties such as the location in the social network and spatial position [52].

The fundamental difference between the two models is in computational complexity. Aggregative models can be expressed as a set of differential/difference equations, making it possible to find the solution analytically. On the other hand, solving problems solely using analytical techniques is not feasible with discrete (agent-based) models. Therefore, one must run a series of computer simulations and employ Monte Carlo methods to obtain the solution (i.e., the convergence behavior). However, despite being computationally less demanding and heavily utilized in solving multiplayer games, aggregative models cannot be used for modeling structured relations. Human interactions within society are structured, i.e., humans are constrained to a network of social relationships. That means interactions with close ones and their respective groups have a much larger impact on future behavior than interactions with random strangers [53,54]. Therefore, using aggregative models for modeling human interactions would be detrimental because structured interactions between individuals produce different outcomes compared to unstructured interactions [55].
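An aggregative model in the sense above can be written as a single difference equation over strategy frequencies. The sketch below uses the replicator dynamics for a Hawk-Dove game, which is a common choice in EGT but an assumption here rather than the model of any cited work; the resource value, fight cost, step size, and function name are likewise illustrative.

```python
def replicator_hawk_dove(x0, value=2.0, cost=4.0, dt=0.1, steps=2000):
    """Iterate a discretized replicator equation for the fraction x of
    hawks in a well-mixed population (no individual agents are tracked)."""
    x = x0
    for _ in range(steps):
        # Expected payoffs against a random member of the population.
        fitness_hawk = x * (value - cost) / 2 + (1 - x) * value
        fitness_dove = (1 - x) * value / 2
        mean_fitness = x * fitness_hawk + (1 - x) * fitness_dove
        # Strategies with above-average payoff grow in frequency.
        x += dt * x * (fitness_hawk - mean_fitness)
    return x


print(replicator_hawk_dove(0.1))
print(replicator_hawk_dove(0.9))
```

Both starting points converge to the same mixed population, with the hawk fraction approaching value/cost. The whole population is summarized by one number, which is what makes the model cheap and also why it cannot express who interacts with whom.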

The introduction of structure into evolutionary game-theoretic models dramatically influences the models’ long-term behavior [5,52]. Embedding a human-like social interaction structure into agent-based models enables forecasting less divergent long-term behavior, which resembles that of the actual human population. Therefore, such evolutionary game-theoretic models can account for a wide variety of human behaviors, predicting the outcomes of many of the cooperative ethical dilemma games elaborated above, such as the Prisoner’s Dilemma, Stag Hunt, and fair division in the Nash bargaining game [5,56]. It can be observed that, ultimately, the structure of society heavily influences the evolution of social norms [52].

4. Ethics and Coordination

If we put actors into (limited) material circumstances, we can expect that better-performing actors gain an advantage. In such circumstances, moral and ethical rules arise spontaneously to enable cooperation since larger coordinated groups are more effective than individuals if they have a similar developmental basis [17]. It is argued that cooperation, in contrast to competitiveness, helped the human race survive [57]. Cooperation has been the basic organizational unit of the development of civilization since the time of hunter-gatherers [27].

Traffic is an excellent example of written and unwritten rules of conduct [58,59]. It is in the interest of every driver to travel from A to B as quickly and safely as possible. By refusing to follow the written rules, the driver risks being stopped by the police and losing his driving license (which, in this case, means expulsion from the game or losing the opportunity to participate). Failure to follow the unwritten rules carries the risk of condemnation, i.e., ill will from other players or their refusal to cooperate. Well-engineered traffic rules enable the transport system to work effectively and at increased performance.

The Prisoner’s Dilemma applies here in the same way. If the driver of car X drives in an unknown place to which he will never return, selfish behavior, such as taking the right of way and not letting other vehicles through, will bring him maximum short-term benefit. However, when the driver of car X does the same in the community where he is known, such behavior will bring him a bad reputation. Such stigma will negatively impact future rides through legal penalties and through consequences outside the ride, e.g., degraded relations with community members. Whether a person has selfish or altruistic interests, both know that, in expectation, it is most profitable to follow the rules [60]. Violation of the rules can bring a one-time benefit, e.g., overtaking in the opposite lane across a solid line, if the necessary conditions are met and the person is not fined or physically punished for this maneuver. If a person repeats this maneuver, the chances of a positive outcome are reduced, and the person risks being excluded from traffic and being punished with some form of legal penalty, which means that in the long run, it is unprofitable to break the set rules consistently. In this case, it is opportune to follow the rules to get a satisfactory result, i.e., to reach the ride’s goal. Legally binding instructions in the form of prohibitions, permissions, or warnings are found on almost every part of the road, making traffic an excellent example of legally enforceable and supervised ethics. Behavioral rules of individuals in society are another, more subtle, example, where ethical rules are unwritten. Laws, on the other hand, are an example of written applied ethics; however, they under-define human interactions, which are further honed by unwritten (traditional, habitual) rules.

Another example of ethics (and law) is community standards and rules, for example, in online settings. Facebook uses agent-based models to simulate the effects of different rules [61]. Ethicists try out different rules and test for consequences in the system. This is a form of consequentialist exploration whereby deontology is derived from the consequences of rules. However, consequentialism itself demands cognitive resources that are unattainable for limited agents, especially in real time. On the other hand, a simple set of rules is easy to follow, even in real time, for a limited agent. Therefore, it makes sense to invest considerable effort in moral innovation to pre-calculate, offline, straightforward sets of rules that can be quickly followed under stricter limitations. The principle of offline pre-calculation of ethical rules, in conditions with enough time and computation resources, is similar to planning ahead and then acting under time constraints.

Algorithmic Role and Utility of Human Values in Coordination

In addition to moral and legal obligations, there is also the issue of human values. Coordination is non-trivial and often hard to achieve, a statement that mathematical-computational models and their analysis support [62,63]. For example, the problem of finding a Nash equilibrium is PPAD-complete; hence solving it might take prohibitively long. This is the case in both single-shot [63] and iterated [64] settings, despite the folk theorem and the abundance of Nash equilibria in the latter. Additionally, Nash equilibrium is achieved by rational actors only if they share beliefs about how the game is played. The rational actor model has no inherent mechanisms to enforce shared beliefs, so complex Nash equilibria do not arise spontaneously between rational agents. On the other hand, correlated equilibrium is an appropriate equilibrium concept for social theory [65]. It is a generalization of Nash equilibrium that includes in the model a correlation device that induces correlated beliefs between the agents. Correlation devices can take the form of shared playing history, selection of players, public signals (like group symbols), etc. [66]. Additionally, finding a correlated equilibrium is much easier than finding a Nash equilibrium, as it can be done in polynomial time for any number of players and strategies in a broad class of games by using linear programming, even though finding the optimal one is still NP-hard [67].
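The conditions that define a correlated equilibrium are linear inequalities in the probabilities of the correlation device, which is why linear programming applies. The sketch below does not solve the linear program; it only verifies those inequalities for a given distribution. The game of Chicken with payoffs 6, 7, 2, 0 and the "traffic light" style device are assumed for illustration, and the function names are hypothetical.

```python
from fractions import Fraction

ACTIONS = ("C", "D")
# Assumed Chicken payoffs: PAYOFFS[(row_action, col_action)] = (row, col).
PAYOFFS = {
    ("C", "C"): (6, 6),
    ("C", "D"): (2, 7),
    ("D", "C"): (7, 2),
    ("D", "D"): (0, 0),
}


def is_correlated_equilibrium(dist):
    """Check that no player gains by deviating from any recommended action,
    where dist maps action profiles to the device's probabilities."""
    for player in (0, 1):
        for recommended in ACTIONS:
            for deviation in ACTIONS:
                gain = Fraction(0)
                for other in ACTIONS:
                    follow = (recommended, other) if player == 0 else (other, recommended)
                    deviate = (deviation, other) if player == 0 else (other, deviation)
                    gain += dist.get(follow, 0) * (
                        PAYOFFS[deviate][player] - PAYOFFS[follow][player]
                    )
                if gain > 0:  # one linear incentive constraint is violated
                    return False
    return True


def expected_payoff_pair(dist):
    """Expected payoff of each player under the device's distribution."""
    return tuple(
        sum(prob * PAYOFFS[profile][i] for profile, prob in dist.items())
        for i in (0, 1)
    )


third = Fraction(1, 3)
signal = {("C", "C"): third, ("C", "D"): third, ("D", "C"): third}
uniform = {profile: Fraction(1, 4) for profile in PAYOFFS}

print(is_correlated_equilibrium(signal), expected_payoff_pair(signal))
print(is_correlated_equilibrium(uniform))
```

The device that never recommends the mutually destructive outcome satisfies every constraint, whereas independent uniform randomization does not, which illustrates how shared signals can sustain coordination that uncorrelated choices cannot.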

Values are legally and morally undefined items that individuals elevate, value, and cultivate because of cultural and personal prejudices [68]. We hypothesize that shared values ingrained in us through culture are an emergent phenomenon that helps us coordinate in a fast, heuristic fashion. This is in line with results suggesting that moral judgments are driven at least partly by imprecise heuristics and emotions [69]. The authors of [7] have mathematically modeled moral preferences by augmenting a single-objective utility function with a weighted (scalarized) term for following personal norms (in addition to monetary outcomes).
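A utility function augmented with a weighted norm term can be sketched as follows. This is only an illustration of the scalarization idea and not the model of [7]: the dictator-game setting, the equal-split norm, the absolute-deviation penalty, and all names and constants are assumptions.

```python
ENDOWMENT = 10  # assumed amount the decision-maker may divide
NORM_GIFT = 5   # assumed personal norm: give half


def utility(gift, norm_weight):
    """Monetary outcome minus a weighted penalty for deviating from the norm."""
    monetary = ENDOWMENT - gift
    norm_deviation = abs(gift - NORM_GIFT)
    return monetary - norm_weight * norm_deviation


def best_gift(norm_weight):
    """Gift that maximizes the scalarized utility."""
    return max(range(ENDOWMENT + 1), key=lambda gift: utility(gift, norm_weight))


print(best_gift(0))  # purely monetary agent
print(best_gift(2))  # agent that weighs the norm heavily
```

With zero weight on the norm, the agent keeps everything; with a sufficiently large weight, the same agent splits equally. A single weight thus interpolates between purely monetary and norm-following behavior.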

Human values are an emerging concept that allows for easier coordination among like-minded people within a community. Suppose a person makes judgments based on a pre-judgment created by the human values defined above. There is an increased chance that the foundation will lead the person to a different conclusion from someone with different human values. If we have correlated values, we have a similar basis for decision-making, hence heuristically aiming for a correlated equilibrium.

Norms as sets of rules and conventions could also be a correlating device if they are simple enough to follow [17]. They should at least be explainable and comprehensible [70,71,72,73]. However, following many rules is certainly computationally hard, as constraint satisfaction problems from computer science can attest [74]. Using continuous fields of values enables approximate, continuous reasoning instead of combinatorial reasoning, making it a very effective mechanism that can be seen in today’s deep neural networks. If we were to use combinatorial reasoning in complex and fast situations, we would be paralyzed in decision-making under our cognitive limits, and coordination would be rare [75]. Even worse would be trying to calculate Nash equilibrium on the fly, outside the realm of games with a choreographer.

From a philosophical and psychological point of view, human values can be represented as a mixture of clustered criteria individuals use to evaluate actions, people, and events. Moreover, the Values Theory identifies ten distinct value orientations common among people in all cultures. Those values are derived from the human condition’s three universal requirements: individuals’ biological needs, requisites of coordinated social interaction, and groups’ survival and welfare needs [76]. Individuals communicate these ten values with the remainder of the group to pursue their goals. According to the Values Theory, these goals are described as trans-situational and of varying importance, serving as guiding principles in people’s lives [77].

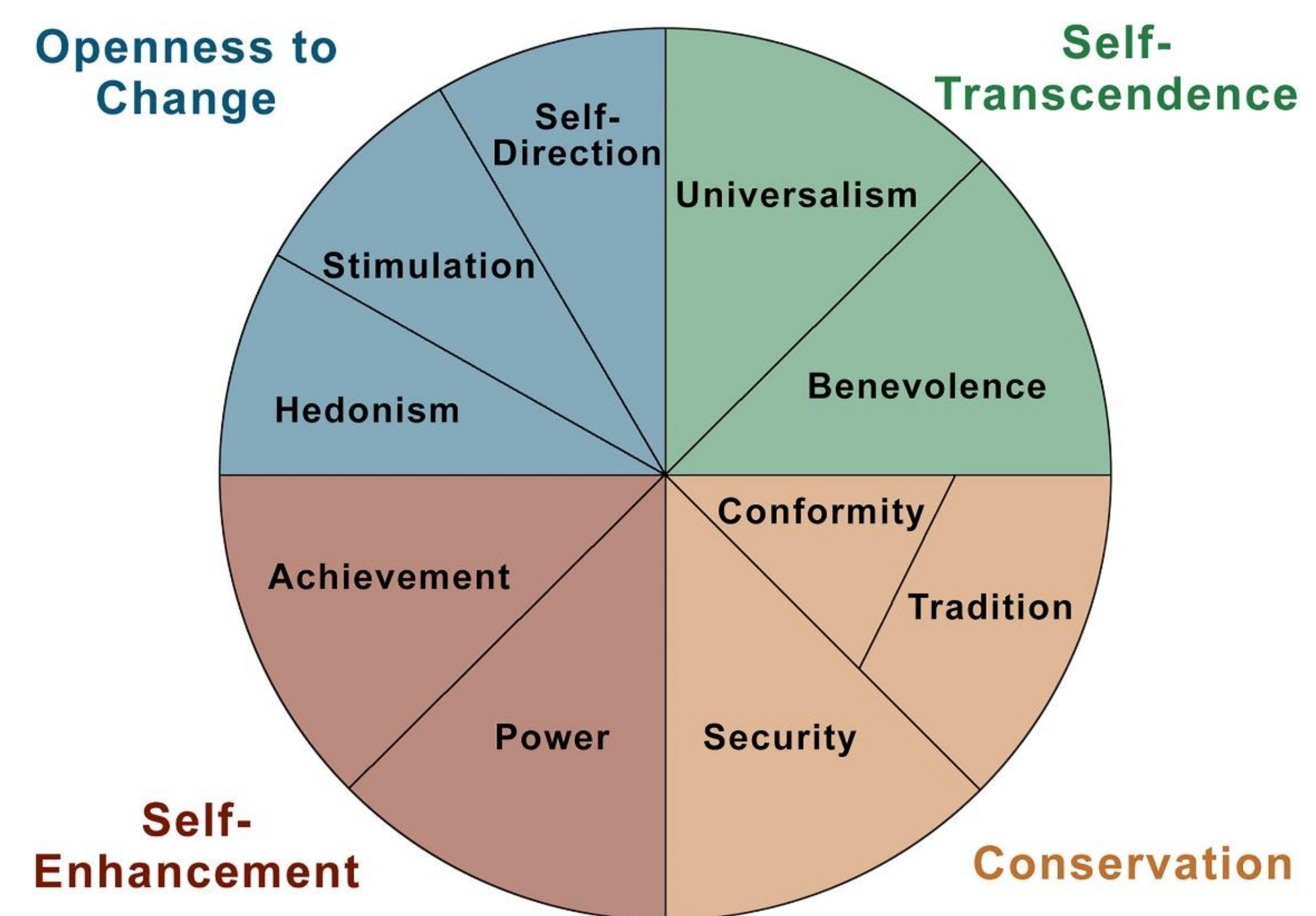

Figure 1 depicts the ten values in a circular arrangement in which the distance between two values reflects the antagonism of their underlying motivations, i.e., two close values share a similar motivation, and two opposite values have opposing motivations. Moreover, values can be organized along two dimensions: self-enhancement (pursuit of self-interest) versus self-transcendence (concern for the interests of others) and openness (independence and openness to new experiences) versus conservation (resistance to change).

5. EGT as Modeling Basis

A commonly accepted hypothesis is that evolutionary processes shaped life on earth. Similarly, EGT can be used to determine the influence of external forces on heterogeneous ethics as its underlying component. Therefore, a possible explanation of those external forces is the necessity for cooperation as the basis of heterogeneous ethics on which all today’s civilizations are built. It is argued that EGT is a realistic explanation of the material circumstances that preceded the creation of the first unwritten moral rules of cooperation [78,79].

EGT rests on three main pillars: (1) higher-payoff strategies replace lower-payoff strategies over time, also known as the “survival of the fittest”; (2) evolutionary change does not happen rapidly; (3) players’ future actions are chosen reflexively without reasoning [80]. Biologists and mathematicians initially developed evolutionary game theory to resolve open questions in evolutionary biology [81]. However, it has far-reaching implications in many areas, such as economics, ethics, industrial organization, policy analysis, and law. In general, evolutionary game models are suitable for systems where agents’ behavior changes over time and where agents interact with and influence the behavior of other agents. However, the other agents must not collectively influence the behavior of an individual agent, and all decisions must be made reflexively [80]. One downside of the original EGT is that players (agents) are born with a particular strategy that cannot be changed during their lifespan [82], making it unrealistic for modeling humans, who evolve and change their strategy of social interactions over time. However, EGT, as it is currently defined, is suitable for modeling reptiles, which do not have strong learning capabilities [83]. Simple, parametric forms of learning through memory and reputation mechanisms have been implemented in EGT, but it does not include richer lifetime learning due to computational complexity concerns. For modeling humans, extensions of EGT should be investigated to find concepts that interpolate between evolutionarily stable strategies and Nash equilibria, since the former are reactive (without deliberation), whereas the latter are unrealistically rational and computationally demanding. Correlated equilibrium is a promising direction with a good balance of power and efficiency.

The use of EGT as a basis for modeling morality has been discussed extensively by Alexander in The Structural Evolution of Morality, where he recognized the deficiencies of using EGT alone and proposed combining EGT, the theory of bounded rationality, and research in psychology [52]. Although EGT enables the identification of behavior that maximizes the expected long-term utility, the motivation behind this behavior and the subsequent action that complies with the moral theory remain unexplained. To maximize an individual’s lifetime utility, his actions must be bounded by rationality, requiring reliance on moral heuristics such as fair split and cooperation. Consequently, incorporating bounded rationality into one’s actions complies with moral theory [84].

Authors working on the extended evolutionary synthesis propose improving systems focused on genetic evolution by considering the co-evolution of genome and culture. Cultural evolution alters the environment faced by genes, indirectly influencing natural selection. Adding social norms with the possibility of arbitration can substantially widen the range of successful cooperation [85]. This can explain the ultra-sociality of the human species. This co-evolution supposedly creates multiple equilibria, among which many are group-beneficial.

According to this line of thinking, in-group competition solves the free-rider problem with punishments, reputation, and signaling, which are mechanisms for large-scale cooperation. It sustains adherence to norms and settles the group into some correlated equilibrium. What is unique about these mechanisms is that they can sustain any costly behavior with or without communal benefit. They can sustain social norms that need not necessarily be cooperative [4].

Cultural evolution is a much faster and more innovative information processing system than genetic evolution. Unlike genetic evolution, where there are two models for recombining traits, in cultural evolution there are many more models from which cultural traits are simultaneously drawn and combined. Additionally, transmission fidelity is much lower, and selection is strongly influenced by psychological processes, which drives greater innovation [4]. As is known, the success of strategies in a population is conditional on the populational distribution of other strategies, and these conditions can shift quickly in changing environments. Using cultural learning, in particular imitation learning, individuals can quickly adapt behavior to circumstances for which genetic learning is too slow and can keep cooperation from collapsing. Hence, culture may have created prolonged cooperation based on indirect reciprocity, which may have been just enough for genetic evolution to pick it up and develop supportive psychology to perpetuate it.

Cultural evolution is more likely to create inter-group competition since it is fast, noisy, and nonvertical compared to genetic evolution [86]. This competition pits groups against each other performance-wise, and it tends to lead to more prosocial norms and institutions. Competition at a lower level (of smaller groups) can help cooperation at higher levels (of greater collectives), and vice versa: stronger cooperation at a lower level can be detrimental to cooperation at a higher level [87]. Sometimes inter-group competition weakens kin bonds, reducing effectiveness at lower scales to promote effectiveness at higher scales [4].

EGT is somewhat successful in modeling social phenomena that arise from interactions between individuals trying to maximize utility. An EGT account of the emergence of altruism in an n-player prisoner’s dilemma is proposed in [88]. The authors suggest that an EGT approach helps in understanding the inherited similarities between weak and strong altruism. The influence of social learning on human adaptability is discussed in [89], where the authors support the hypothesis by using the EGT approach to model the social learning of individuals through selective imitation. The development of social norms as an evolutionary process is another example of modeling social phenomena. Evolutionary psychologists argue that humans lack logical problem-solving skills [90]. Therefore, when faced with a reasoning task, humans do not deduce what is true or false; they match known patterns to the particular case. In [91], it was shown that human development is more consistent with humans being cumulative cultural learners than with Machiavellian intelligence that tries to outmaneuver an opponent strategically. People will use previously learned reasoning that includes obligated, permitted, or forbidden actions. Social norms and, consequently, the inheritance of such reasoning can be justified using EGT [92]. The authors of [31] describe how social norms, through sanctions, transform mixed-incentive games with social dilemmas, where cooperative outcomes are unstable, into easier coordination problems.

ESS conditioned on cues from public signals have been proven to be correlated equilibria of the game [93], and these equilibria can be found by repeated play [94]. The authors of [58,66] model social norms that act as “choreographers” that induce correlated beliefs in agents, allowing them to coordinate on a correlated equilibrium of the game.

EGT and its extension to genetic-cultural co-evolution can model dynamics, progress, and limits. What is necessary is to incorporate cognition and more complex learning and strategies into cultural processes to build more precise models of dynamic change. Additionally, a mixture of games should be modeled on a set of players, with uncertainty surrounding the specifics of the game played and its outcomes. Such players would have evolving interests that depend on a selection model that mirrors the one in humans. Something along that line of thinking, but outside of ethical considerations, was done in machine learning to solve a large set of tasks with the same agent [95]. In social physics, some progress has been achieved in multi-games [96] and in modeling more complex group dynamics with higher-order interactions [53].

6. Advanced Properties of Ethics

In this section, we cover the views found in the literature on the advanced properties of ethics. We deal with the structure underlying ethics as a patchwork of norms that are locally consistent. From this follow further dilemmas, arising from global inconsistencies that jeopardize coordination, especially in novel situations. Finally, we cover the importance of tension and balance between cooperation and competition in well-functioning societies. Although the two seem to be purely opposing forces, competition plays an important role in innovation and in cohesion within cooperation.

6.1. Social Norms as Behavioral Patterns

Ethics consists of behavioral patterns or regularities (social conventions, of which norms are a subset) that can be observed in the resolutions of recurring coordination problems [

23,

31] in a society of agents with similar capabilities. These patterns emerge over time from interactions in the environment. Under the assumption of evolutionary-guided changes (e.g., genetic-cultural co-evolution), circumstances that recurred often over time exerted selective pressure [

4]. For these reasons, such norms are expected to be locally consistent and to perform well in the frequent circumstances that led to their creation. Authors in [

66] have shown that natural selection can be a blind choreographer that spontaneously creates beliefs and norms from stochastic events to serve as correlated equilibria without sophisticated knowledge or external enforcement. These beliefs and norms can be sustained using simpler and, later, more complex mechanisms [

97].

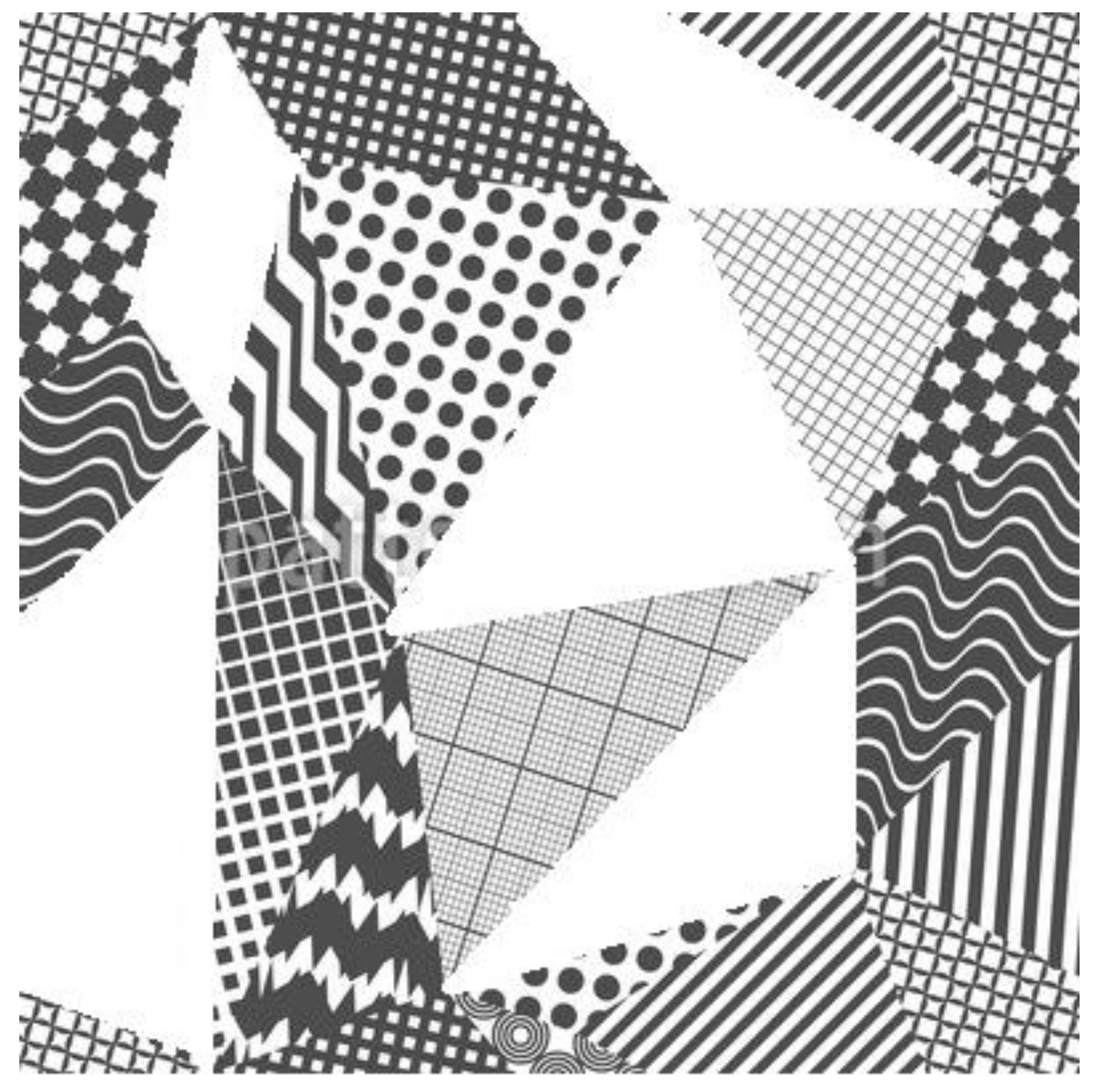

Throughout their lifetime, humans face various situations in which decisions must be made. Such situations are simply a part of life, and we cannot avoid them. However, making decisions and confronting their consequences is within our power. Throughout the evolution of humankind, individuals have been confronted with various decisions that were passed on to and replicated by others over generations. Over time, the aggregation of these decisions led to the development of ethics, which can be compared to a patchwork, as depicted in

Figure 2. Every patch in the patchwork represents a set of similar situations (episodic games) and the norms that belong to them. Two neighboring patches are similar in problem space but are governed by different norms. Additionally, the white patches represent the absence of norms in certain areas due to the absence of lived experience in that space. Examples of such white patches might involve significantly novel and impactful technology (such as super-intelligence). In that case, humans have to extrapolate norms from neighboring patches, i.e., similar ethical settings. The extrapolation, if it can be done uniquely at all, is not guaranteed to perform well or to be relevant.

6.2. Consistency of Ethics

In addition to multicriteriality as a source of dilemmas for all normative ethics, deontological ethical systems may also experience dilemmas through inconsistencies. Two mechanisms yield inconsistency: norm confusion and faulty execution.

When extrapolating to substantially new situations from existing patterns, we might get norm confusion, i.e., inconsistencies between different patches of locally consistent patterns. It is then unclear which norm should be applied, and a dilemma arises [

31]. These inconsistencies are problematic for algorithmization and alignment with future AI systems. All existing ethics contain inconsistencies, with evident contradictions if looked at from a high-enough level. Such inconsistencies are not necessarily visible locally.

Faulty execution yields moral inconsistency in humans that is based purely on the emotions associated with a particular case, i.e., a moral dilemma, and not on formal inconsistencies. Such inconsistencies arise when an individual, faced with dilemmas, treats the same moral cases differently. Moral learning is the process of learning from such mistakes and of self-improvement, allowing the individual to maintain consistency with the moral norms shared within a society. However, avoiding moral inconsistencies through moral learning is not always straightforward because of conflicts with self-interest. Moreover, moral norms are generic, i.e., applied to a wide array of cases, and consequently there will always be exceptions. To learn from moral problems on their own, an individual (learning agent) must consider them from the perspective of others. For example, using Bayesian reasoning, one can derive a clear moral rule based on the judgments of other individuals [

98].

To avoid biases when dealing with ethical decisions, philosopher John Rawls proposed the Veil of Ignorance as a tool for increasing personal consistency regarding some forms of faulty execution. Here, one imagines sitting behind a veil of ignorance that hides one's own identity and personal circumstances. Being ignorant in this way, one can make decisions objectively. This would lead to a society that helps those who are socially or economically lagging behind [

99] because robust optimization under total ignorance yields a maximin solution.
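
The maximin argument can be sketched in a few lines. The candidate societies and their payoff values below are purely hypothetical and serve only to contrast the maximin rule with a simple expected-value rule, which assumes each position is equally likely. The function names are illustrative.

```python
def maximin_choice(options):
    """Pick the option whose worst-off position is best (maximin rule)."""
    return max(options, key=lambda name: min(options[name]))


def expected_value_choice(options):
    """Pick the option with the highest mean payoff over equally likely positions."""
    return max(options, key=lambda name: sum(options[name]) / len(options[name]))


# Hypothetical well-being levels of the positions one could end up in.
societies = {
    "unequal": [1, 5, 30],      # mean 12, worst position 1
    "moderate": [4, 8, 15],     # mean 9,  worst position 4
    "egalitarian": [6, 7, 8],   # mean 7,  worst position 6
}

print(maximin_choice(societies))
print(expected_value_choice(societies))
```

In this toy setting, a decision-maker who cannot know which position they will occupy and who guards against the worst case selects the society with the best minimum, even though another society has a higher average.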

6.3. Cooperation vs. Competition

The question of the place of competition within well-functioning societies is open for investigation. It is argued that ethics based on cooperation brings more significant progress in the long run [

100,

101]. However, the relationship and the balance between the two are complex, even if the desired final goal is worldwide cooperation. Results from social physics show that the emergence of the two is related in a complex way that is very sensitive to the setting of the problem. Competition in society plays both an innovative and a cohesive role in cooperation.

In addition to being the basis for the development of civilization, cooperation incorporates individual and social interests and helps create a balance among community members. On the micro-level, in civilized societies and everyday activities, cooperation with other community members is more profitable in the long run due to the installed norms and institutions. This makes personal goals faster and easier to reach while achieving greater communal well-being. The opposite of cooperation is competition, which in itself is not bad. Competition is one of the drivers of innovation, while cooperation is more effective at operational issues in repeated situations. It is good to compete with, for example, a past version of ourselves, to set personal goals, and to fight to achieve them. Additionally, competition is a cohesive element of cooperation.

According to the extended evolutionary synthesis, in-group competition is vital to solving the free-rider problem through punishment, reputation, and signaling mechanisms. Hence, it improves adherence to group norms. This efficiently leads to the correlated equilibrium.

Inter-group competition is important solely for correlated equilibrium selection, i.e., search. In line with theoretical results, searching for an optimal correlated equilibrium is a painfully slow process. It can fail to remove group-damaging norms completely, especially when these are entangled with important cooperative norms. Additionally, the balance and distribution of competition and cooperation are sensitive: competition at lower levels can favor cooperation at higher levels, and stricter cooperation at lower levels can lead to collapse at a higher level. Such complex group dynamics can be modeled and tested on graphs [

54] and hypergraphs [

53].

In the long term, cooperation outweighs competition when relying on scarce resources. This is best described in Hardin’s The Tragedy of the Commons [

102], where each individual consumes resources at the expense of the others in a rivalrous fashion. If everyone acted solely upon their self-interest, the result would be a depletion of the common resources to everyone’s detriment. The solution to the posed problem is the introduction of regulations by a higher authority or collective agreement, which leads to the correlated equilibrium [

103]. Regulations could directly control the resource pool by excluding individuals who consume excessively or by regulating consumption. On the other hand, self-organized cooperative arrangements among individuals (with a punishment mechanism for deviators) can rapidly overcome the problem. Here, the individuals share a common sense of collectivism, which makes it in their interest not to deplete all resources selfishly [

102].
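
The depletion dynamics can be illustrated with a minimal simulation sketch. It assumes a shared stock that regrows logistically and a fixed demand per agent. The growth rule, all parameter values, and the function name `simulate_commons` are assumptions made here for illustration and are not taken from the cited works.

```python
def simulate_commons(demand_per_agent, n_agents=10, steps=50,
                     capacity=100.0, growth_rate=0.3):
    """Simulate a shared, logistically regrowing resource.

    Returns (final_stock, total_harvest). All parameters are illustrative.
    """
    stock = capacity
    total_harvest = 0.0
    for _ in range(steps):
        # Logistic regrowth: largest at half capacity, zero at 0 and at capacity.
        regrowth = growth_rate * stock * (1.0 - stock / capacity)
        available = stock + regrowth
        # Agents cannot take more than what is available.
        harvest = min(n_agents * demand_per_agent, available)
        stock = available - harvest
        total_harvest += harvest
    return stock, total_harvest


# Unrestrained self-interest: total demand exceeds the maximum regrowth.
selfish_stock, selfish_total = simulate_commons(demand_per_agent=2.0)
# A hypothetical quota that keeps total demand below the maximum regrowth.
quota_stock, quota_total = simulate_commons(demand_per_agent=0.5)

print(f"selfish: stock={selfish_stock:.1f}, harvested={selfish_total:.1f}")
print(f"quota:   stock={quota_stock:.1f}, harvested={quota_total:.1f}")
```

With these assumed parameters, the unrestrained group exhausts the stock within a few steps and harvests less in total than the group bound by a quota, whose stock settles at a sustainable level.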

7. Conclusions

We have looked at ethics through an analytical prism to find some of its constitutive properties. The problem that ethics tries to solve is improving group performance in a setting that is multi-criteria, dynamic, and beset by uncertainties. Ethics operates on a large societal scale, making for a complex setting in which adaptability is crucial. Ethics emerged collaterally through cultural evolution on a longer time scale, meaning all changes have been slow and gradual. This seems to have worked well, but the shortening of timescales and greater societal perturbations due to rapid technological advances are jeopardizing the effectiveness of such an unguided process. Furthermore, current ethical systems are globally inconsistent, though they are locally consistent. This can lead to additional dilemmas that pose a further risk to coordination, especially in novel situations. We then proceeded in a direction that could help future work on guiding that process and reducing inherent risks: modeling and general computational/algorithmic issues. We must pick the appropriate model type and be wary of flaws in models of certain systems so that they can be removed. Additionally, appropriate algorithmic approaches must be selected to circumvent the problems of computational complexity that could render the guiding efforts infeasible.

We argue that ethics is related to multi-agent interaction, so that game theory, especially variants of evolutionary game theory, can adequately model it. Moreover, correlated equilibrium is an important and appropriate concept that can be found computationally efficiently in the presence of a shared correlation device. Honed behavioral patterns (social norms) can play the role of a correlation device if they are simple enough to follow. However, following many rules is certainly computationally hard, as constraint satisfaction problems from computer science can attest [

74]. Values can approximate complex ethical norms and thereby help the coordination by offering better correlation devices that reduce computational complexity.

Ethics is focused on cooperation, but it also depends on competition for efficiency and adaptability. Moreover, the balance between competition and cooperation is delicate. The levels at which competition takes place significantly impact the level at which beneficial cooperation emerges, if at all. Mechanisms such as reputation, signaling, and punishment are elements of in-group competition that drive group cohesion. Within in-group competition, social norms play a crucial role as a correlation device that enables a group to find, in a computationally efficient way, a correlated equilibrium into which it may settle. This is in stark contrast to the problem of finding a Nash equilibrium, which is PPAD-complete, so solving it might take a long time. However, there are no guarantees that the correlated equilibrium found benefits its group. Inter-group competition drives a slow search for better equilibria. This slowness is in line with the results of computational complexity theory. The optimal ethical system could in principle be found computationally, though at an impractically high computational cost.