Provably Safe Artificial General Intelligence via Interactive Proofs

Abstract

1. Introduction

1.1. ‘Hard Take-Off’ and Automated AGI Government

1.2. Intrinsic and Extrinsic AGI Control Systems

Our goal is to design self-organizing systems, comprising networks of interacting human and autonomous agents, that sustainably optimize for one or more objective functions. … Our challenge is to choose the right incentives, rules, and interfaces such that agents in our system, whom we do not control, self-organize in a way that fulfills the purpose of our protocol. (Ramirez, The Graph [13])

1.3. Preserving Safety and Control Transitively across AGI Generations

1.4. Lack of Proof of Safe AGI or Methods to Prove Safe AGI

1.5. Defining “Safe AGI”; Value-Alignment; Ethics and Morality

- Most humans seek to impose their values on others.

- Most humans shift their values depending on circumstances (‘situation ethics’).

2. The Fundamental Problem of Asymmetric Technological Ability

3. Interactive Proof Systems Solve the General Technological Asymmetry Problem

4. Interactive Proof Systems Provide a Transitive Methodology to Prove AGIn Safety

- Identifying a property of AGI, e.g., value-alignment, to which to apply IPS.

- Identifying or creating an IPS method to apply to the property.

- Developing a representation of the property to which a method is applicable.

- Using a pseudo-random number generator compatible with the method [33].

5. The Extreme Generality of Interactive Proof Systems

6. Correct Interpretation of the Probability of the Proof

7. Epistemology: Undecidability, Incompleteness, Inconsistency, Unprovable Theorems

8. Properties of Interactive Proof Systems

- The methods used by the Prover are unspecified and unbounded.

- The Prover is assumed to have greater computational power than the Verifier.

- The Verifier accepts a proof when the chance that the proof is incorrect, or that the Verifier has been fooled by the Prover, is arbitrarily small.
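The last property can be made concrete with a short calculation. The sketch below assumes, purely for illustration, that a cheating Prover survives any single independent round with probability at most 1/2; that bound and the function names are assumptions, not values taken from this article. Under that assumption, the chance of fooling the Verifier in every one of k rounds is at most 2^-k, so the Verifier can pick k to reach any target error.

```python
import math


def soundness_error(rounds: int, per_round_error: float = 0.5) -> float:
    """Upper bound on the chance that a cheating Prover survives every round.

    Assumes rounds are independent and each has error at most per_round_error.
    """
    if rounds < 0 or not 0.0 < per_round_error < 1.0:
        raise ValueError("need rounds >= 0 and 0 < per_round_error < 1")
    return per_round_error ** rounds


def rounds_needed(target_error: float, per_round_error: float = 0.5) -> int:
    """Smallest number of rounds whose error bound is at or below target_error."""
    if not 0.0 < target_error < 1.0 or not 0.0 < per_round_error < 1.0:
        raise ValueError("both probabilities must lie strictly between 0 and 1")
    return math.ceil(math.log(target_error) / math.log(per_round_error))


if __name__ == "__main__":
    k = rounds_needed(1e-9)
    print(k, soundness_error(k))
```

The error bound falls exponentially in the number of rounds, while the Verifier's work grows only linearly, which is why an "arbitrarily small" chance of error is affordable for a computationally weaker Verifier.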

9. Multiple Prover Interactive Proof Systems (MIP)

10. Random vs. Non-Random Sampling, Prover’s Exploitation of Bias

11. Applying IPS to Proving Safe AGI: Examples

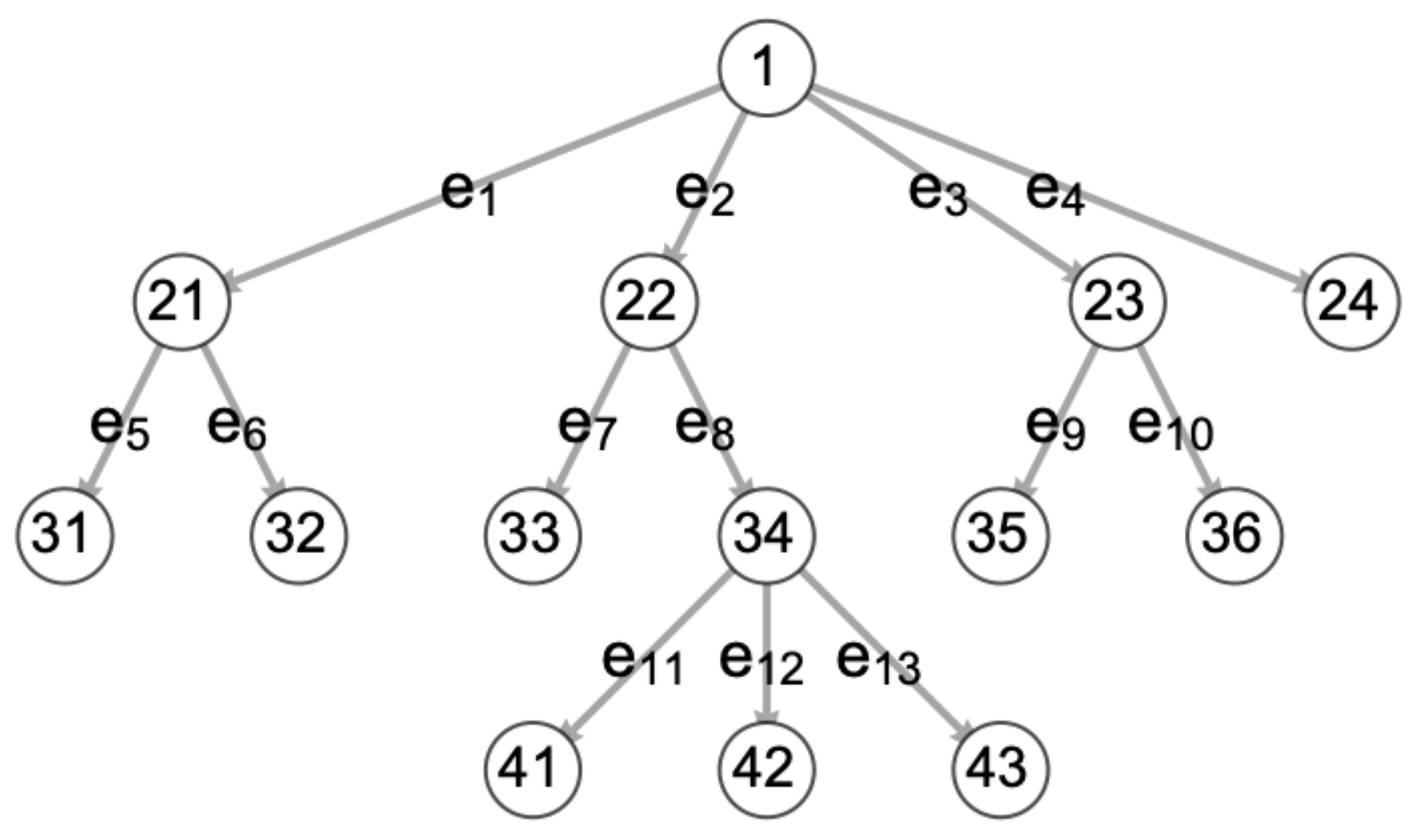

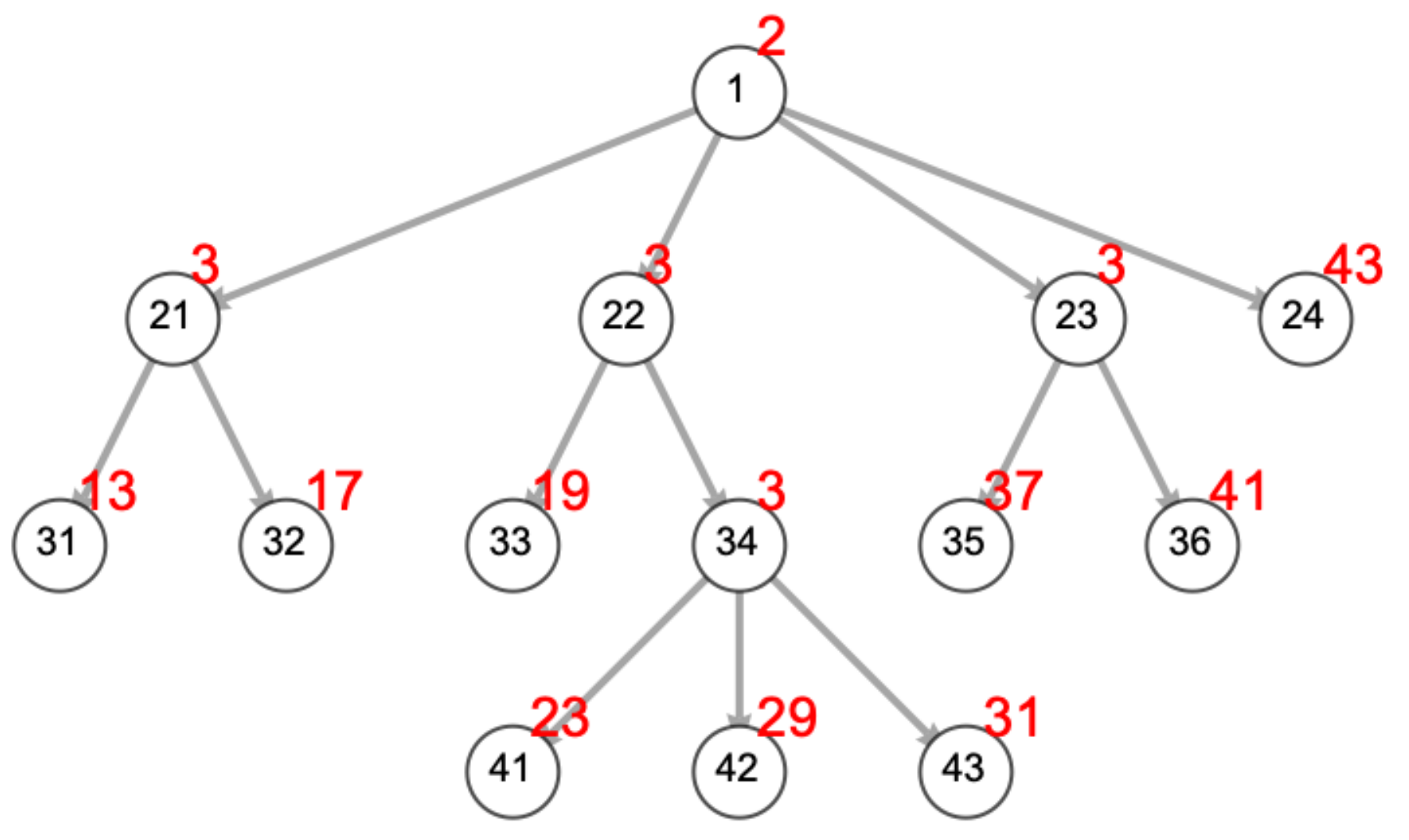

11.1. Detection of Behavior Control System (BCS) Forgery via Acyclic Graphs

- Verifier selects elements a1 through am randomly from F.

- Prover evaluates the assigned polynomials p1 and p2 at a1 through am.

- If p1(a1, …, am) = p2(a1, …, am), the Verifier accepts; otherwise, it rejects.
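The three steps above follow the pattern of randomized polynomial identity testing. The following is a minimal sketch under stated assumptions: the field F is taken to be the integers modulo the prime 2^31 - 1, the polynomials are ordinary Python functions, and a single function plays both the Verifier (choosing points) and the Prover (evaluating). The names `probably_identical`, `expanded`, `factored`, and `forged` are hypothetical and chosen only for this example.

```python
import random
from typing import Callable, Optional, Sequence

PRIME = 2_147_483_647  # 2^31 - 1; an assumed field size for illustration

Polynomial = Callable[[Sequence[int]], int]


def probably_identical(
    p1: Polynomial,
    p2: Polynomial,
    num_vars: int,
    trials: int = 20,
    rng: Optional[random.Random] = None,
) -> bool:
    """Accept if p1 and p2 agree at every randomly chosen point of F^num_vars."""
    rng = rng or random.Random()
    for _ in range(trials):
        # Verifier step: pick a1..am uniformly at random from F.
        point = [rng.randrange(PRIME) for _ in range(num_vars)]
        # Prover step: evaluate both polynomials at that point (mod PRIME).
        if p1(point) % PRIME != p2(point) % PRIME:
            return False  # a single disagreement proves the two differ
    return True  # agreement everywhere sampled: accept with small error


def expanded(a: Sequence[int]) -> int:
    x, y = a
    return x * x + 2 * x * y + y * y


def factored(a: Sequence[int]) -> int:
    x, y = a
    return (x + y) ** 2


def forged(a: Sequence[int]) -> int:
    x, y = a
    return x * x + 2 * x * y + y * y + x  # differs from expanded by the term x


if __name__ == "__main__":
    print(probably_identical(expanded, factored, 2))  # same polynomial
    print(probably_identical(expanded, forged, 2))    # altered polynomial
```

Two distinct low-degree polynomials can agree at only a small fraction of points in a large field, so a forged polynomial is caught with high probability after very few samples. A real Verifier would also need to keep its random choices unpredictable to the Prover; Python's `random` module is used here only for readability.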

11.2. Program-Checking via Graph Nonisomorphism

11.3. Axiomatic System Representations

- Axioms A = {a1, a2, a3, …, ai}.

- Transformation rules R = {r1, r2, r3, …, rj}.

- Compositions of axioms and inference rules C = {c1, c2, c3, …, ck}, e.g.,

- .

- .

11.4. Checking for Ethical or Moral Behavior

11.5. BPP Method 1: Random Sampling of Behaviors

11.6. BPP Method 2: Random Sampling of Formulae

11.7. BPP Methods 3 and 4: Multiple-Prover Versions of #1 and #2

11.8. BPP Method 5: Behavior Program Correctness

11.9. BPP Method 6: A SAT Representation of Behavior Control

12. Probabilistically Checkable Proofs (PCP Theorem)

13. If ‘Safety’ Can Never Be Described Precisely or Perilous Paths Are Overlooked

14. Securing Ethics Modules via Distributed Ledger Technology

- A safe AGI ethics module E1 is developed via simulation in the sandbox.

- The safe AGI ethics E1 is encrypted and stored as an immutable reference copy E1R via DLT.

- All AGIs of a given computational class are endowed with E1.

- Altering the archived reference copy E1R requires a strong level S1 of consensus.

- Altering AGIn’s personal copy of its ethics BT, E1i, requires a strong level S2 of consensus, where S2 ≤ S1.

- The smart contract IPS compares AGIn’s E1i with E1R.
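The comparison and consensus rules in this list can be sketched in a few lines. The example below is an illustration only: it swaps in a SHA-256 digest comparison for the smart-contract IPS, treats consensus levels as fractions of approving participants, and uses an in-memory record in place of a ledger. The names `ReferenceRecord`, `matches_reference`, and `may_alter_personal_copy` are hypothetical.

```python
import hashlib
from dataclasses import dataclass


def digest(module_bytes: bytes) -> str:
    """SHA-256 fingerprint of an ethics module's serialized form."""
    return hashlib.sha256(module_bytes).hexdigest()


@dataclass(frozen=True)
class ReferenceRecord:
    """Stand-in for the immutable ledger entry that holds E1R."""

    reference_digest: str
    s1: float  # consensus fraction required to alter E1R
    s2: float  # consensus fraction required to alter a personal copy E1i

    def __post_init__(self) -> None:
        # The list above requires S2 <= S1.
        if not 0.0 <= self.s2 <= self.s1 <= 1.0:
            raise ValueError("thresholds must satisfy 0 <= S2 <= S1 <= 1")


def matches_reference(record: ReferenceRecord, personal_copy: bytes) -> bool:
    """True if the personal copy E1i is byte-identical to the archived E1R."""
    return digest(personal_copy) == record.reference_digest


def may_alter_personal_copy(record: ReferenceRecord, approving: float) -> bool:
    """True if the approving fraction reaches the S2 threshold."""
    return approving >= record.s2


if __name__ == "__main__":
    record = ReferenceRecord(digest(b"E1 module contents"), s1=0.9, s2=0.75)
    print(matches_reference(record, b"E1 module contents"))
    print(matches_reference(record, b"E1 module contents, tampered"))
```

A digest comparison detects any change to the personal copy but does not say what changed; richer checks, such as the interactive proofs discussed in Section 11, would be needed to reason about whether a modified module is still safe.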

15. Interactive Proof Procedure with Multiple Provers in the Sandbox

- Initialization of multiple provers. A number of identical AGIs from a generation are initialized with different conditions. The OII… tetratic progression of their individual state-space trajectories will quickly diverge.

- A smart contract requires and records their identity credentials [63].

- The Verifiers ask the Provers to attempt proofs (tree-traversals) of identical, randomly chosen formulae (behaviors).

- The proofs will be different, but if the ethics and behavior control system are valid, the behaviors (theorems) will be within circumscribed limits.
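As a toy illustration of the procedure above, the sketch below gives several Provers the same starting formula and different random seeds, lets each traverse a small behavior tree, and has the Verifier check that every resulting behavior falls inside an allowed set. The tree, the behavior names, and the functions `prove` and `verify` are all hypothetical; a real behavior control system would be far larger, and the smart-contract and credential steps are omitted.

```python
import random
from typing import Dict, Iterable, List, Set

# Hypothetical behavior tree: inner nodes map to children; leaves are behaviors.
TREE: Dict[str, List[str]] = {
    "root": ["assist", "defer"],
    "assist": ["answer_question", "fetch_data"],
    "defer": ["ask_human", "wait"],
}

# Hypothetical circumscribed limits on acceptable behaviors.
ALLOWED: Set[str] = {"answer_question", "fetch_data", "ask_human", "wait"}


def prove(seed: int, start: str = "root") -> List[str]:
    """One Prover's proof: a path from the start node to a leaf behavior."""
    rng = random.Random(seed)  # different initial conditions per Prover
    path = [start]
    while path[-1] in TREE:
        path.append(rng.choice(TREE[path[-1]]))
    return path


def verify(paths: Iterable[List[str]], allowed: Set[str]) -> bool:
    """Accept only if every Prover's final behavior is within the limits."""
    return all(path[-1] in allowed for path in paths)


if __name__ == "__main__":
    paths = [prove(seed) for seed in range(5)]
    for path in paths:
        print(" -> ".join(path))
    print("accepted:", verify(paths, ALLOWED))
```

The paths may differ from Prover to Prover, which mirrors the point that the proofs will be different while the behaviors they reach are expected to stay within the same limits.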

16. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest

Appendix A

Appendix A.1. Logical Foundations of IPS

Appendix A.2. Deterministic Turing Machine

Appendix A.3. Probabilistic and Nondeterministic Turing Machines

Appendix A.4. Bounded Probabilistic Polynomial Time (BPP)

Appendix A.5. Interactive Proof Systems

References

- Yampolskiy, R.; Sotala, K. Risks of the Journey to the Singularity. In The Technological Singularity; Callaghan, V., Miller, J., Yampolskiy, R., Armstrong, S., Eds.; Springer: Berlin, Germany, 2017; pp. 11–24.

- Yampolskiy, R. Taxonomy of Pathways to Dangerous Artificial Intelligence. In Proceedings of the Workshops of the 30th AAAI Conference on AI, Ethics, and Society, Louisville, AL, USA, 12–13 February 2016; pp. 143–148.

- Bostrom, N. Superintelligence: Paths, Dangers, Strategies; Oxford University Press: Oxford, UK, 2014; p. 415.

- Babcock, J.; Krámar, J.; Yampolskiy, R.V. Guidelines for Artificial Intelligence Containment. 2017, p. 13. Available online: https://www.cambridge.org/core/books/abs/nextgeneration-ethics/guidelines-for-artificial-intelligence-ppcontainment/9A75BAFDE4FEEAA92EBE84C7B9EF8F21 (accessed on 4 October 2021).

- Callaghan, V.; Miller, J.; Yampolskiy, R.; Armstrong, S. The Technological Singularity: Managing the Journey; Springer: Berlin, Germany, 2017.

- Turchin, A. A Map: AGI Failures Modes and Levels. LessWrong 2015 [Cited 5 February 2018]. Available online: http://immortality-roadmap.com/AIfails.pdf (accessed on 4 October 2021).

- Yampolskiy, R.; Duettman, A. Artificial Superintelligence: Coordination & Strategy; MDPI: Basel, Switzerland, 2020; p. 197.

- Yampolskiy, R. On controllability of artificial intelligence. 2020. Available online: https://philpapers.org/archive/YAMOCO.pdf (accessed on 4 October 2021).

- Yudkowsky, E. Artificial Intelligence as a Positive and Negative Factor in Global Risk. In Global Catastrophic Risks; Bostrom, N., Ćirković, M.M., Eds.; Oxford University Press: New York, NY, USA, 2008; pp. 308–345.

- Carlson, K.W. Safe Artificial General Intelligence via Distributed Ledger Technology. Big Data Cogn. Comput. 2019, 3, 40.

- Yampolskiy, R.V. From Seed AI to Technological Singularity via Recursively Self-Improving Software. arXiv 2015, arXiv:1502.06512.

- Good, I.J. Speculations concerning the first ultraintelligent machine. Adv. Comput. 1965, 6, 31–61.

- Ramirez, B. Modeling Cryptoeconomic Protocols as Complex Systems - Part 1 (thegraph.com). Available online: https://thegraph.com/blog/modeling-cryptoeconomic-protocols-as-complex-systems-part-1 (accessed on 4 October 2021).

- Armstrong, S. AGI Chaining. 2007. Available online: https://www.lesswrong.com/tag/agi-chaining (accessed on 9 September 2021).

- Omohundro, S. Autonomous technology and the greater human good. J. Exp. Theor. Artif. Intell. 2014, 26, 303–315.

- Russell, S.J. Human Compatible: Artificial Intelligence and the Problem of Control; Viking: New York, NY, USA, 2019.

- Yampolskiy, R.V. What are the ultimate limits to computational techniques: Verifier theory and unverifiability. Phys. Scr. 2017, 92, 1–8.

- Williams, R.; Yampolskiy, R. Understanding and Avoiding AI Failures: A Practical Guide. Philosophies 2021, 6, 53.

- Tegmark, M. Life 3.0: Being Human in the Age of Artificial Intelligence, 1st ed.; Alfred A. Knopf: New York, NY, USA, 2017.

- Soares, N. The value learning problem. In Proceedings of the Ethics for Artificial Intelligence Workshop at 25th IJCAI, New York, NY, USA, 9 July 2016.

- Silver, D.; Singh, S.; Precup, D.; Sutton, R.S. Reward is Enough. Artif. Intell. 2021, 299.

- Yampolskiy, R. Artificial Intelligence Safety Engineering: Why Machine Ethics Is a Wrong Approach. In Philosophy and Theory of Artificial Intelligence; Müller, V.C., Ed.; Springer: Berlin, Germany, 2012; pp. 389–396.

- Soares, N.; Fallenstein, B. Agent Foundations for Aligning Machine Intelligence with Human Interests: A Technical Research Agenda. Mach. Intell. Res. Inst. 2014.

- Future of Life Institute. ASILOMAR AI Principles. 2017. Available online: https://futureoflife.org/ai-principles/ (accessed on 22 December 2018).

- Hanson, R. Prefer Law to Values. 2009. Available online: http://www.overcomingbias.com/2009/10/prefer-law-to-values.html (accessed on 4 October 2021).

- Rothbard, M.N. Man, Economy, and State: A Treatise on Economic Principles; Ludwig Von Mises Institute: Auburn, AL, USA, 1993; p. 987.

- Aharonov, D.; Vazirani, U.V. Is Quantum Mechanics Falsifiable? A Computational Perspective on the Foundations of Quantum Mechanics. In Computability: Turing, Gödel, Church, and Beyond; Copeland, B.J., Posy, C.J., Shagrir, O., Eds.; MIT Press: Cambridge, MA, USA, 2015; pp. 329–394.

- Feynman, R.P. Quantum Mechanical Computers. Opt. News 1985, 11, 11–20.

- Goldwasser, S.; Micali, S.; Rackoff, C. The Knowledge Complexity of Interactive Proof Systems. SIAM J. Comput. 1989, 18, 186–208.

- Babai, L. Trading Group Theory for Randomness. In Proceedings of the Seventeenth Annual ACM Symposium on Theory of Computing, Providence, RI, USA, 6–8 May 1985; pp. 421–429.

- Yampolskiy, R.V. Unpredictability of AI: On the impossibility of accurately predicting all actions of a smarter agent. J. Artificial Intell. Conscious. 2020, 7, 109–118.

- Arora, S.; Barak, B. Computational Complexity: A Modern Approach; Cambridge Univ. Press: Cambridge, UK, 2009; p. 579.

- Sipser, M. Introduction to the Theory of Computation, 3rd ed.; Course Technology Cengage Learning: Boston, MA, USA, 2012.

- Rabin, M. A Probabilistic Algorithm for Testing Primality. J. Number Theory 1980, 12, 128–138.

- Wagon, S. Mathematica in Action: Problem Solving through Visualization and Computation, 3rd ed.; Springer: New York, NY, USA, 2010; p. 578.

- Ribenboim, P. The Little Book of Bigger Primes; Springer: New York, NY, USA, 2004; p. 1.

- LeVeque, W.J. Fundamentals of Number Theory; Dover: New York, NY, USA, 1996; p. 280.

- Wolfram, S. A New Kind of Science; Wolfram Media: Champaign, IL, USA, 2002; p. 1197.

- Calude, C.S.; Jürgensen, H. Is complexity a source of incompleteness? Adv. Appl. Math. 2005, 35, 1–15.

- Chaitin, G.J. The Unknowable. Springer Series in Discrete Mathematics and Theoretical Computer Science; Springer: New York, NY, USA, 1999; p. 122.

- Calude, C.S.; Rudeanu, S. Proving as a computable procedure. Fundam. Inform. 2005, 64, 1–10.

- Boolos, G.; Jeffrey, R.C. Computability and Logic, 3rd ed.; Cambridge University Press: Cambridge, UK, 1989; p. 304.

- Davis, M. The Undecidable; Basic Papers on Undecidable Propositions; Unsolvable Problems and Computable Functions; Raven Press: Hewlett, NY, USA, 1965; p. 440.

- Chaitin, G.J. Meta Math!: The Quest for Omega, 1st ed.; Pantheon Books: New York, NY, USA, 2005; p. 220.

- Calude, C.; Păun, G. Finite versus Infinite: Contributions to an Eternal Dilemma. Discrete Mathematics and Theoretical Computer Science; Springer: London, UK, 2000; p. 371.

- Newell, A. Unified Theories of Cognition. William James Lectures; Harvard Univ. Press: Cambridge, MA, USA, 1990; p. 549.

- Goodstein, R. Transfinite ordinals in recursive number theory. J. Symb. Log. 1947, 12, 123–129.

- Potapov, A.; Svitenkov, A.; Vinogradov, Y. Differences between Kolmogorov Complexity and Solomonoff Probability: Consequences for AGI. In Artificial General Intelligence; Springer: Berlin, Germany, 2012.

- Babai, L.; Fortnow, L.; Lund, C. Non-deterministic exponential time has two-prover interactive protocols. Comput. Complex. 1991, 1, 3–40.

- Miller, J.D.; Yampolskiy, R.; Häggström, O. An AGI modifying its utility function in violation of the strong orthogonality thesis. Philosophies 2020, 5, 40.

- Howe, W.J.; Yampolskiy, R.V. Impossibility of unambiguous communication as a source of failure in AI systems. 2020. Available online: https://www.researchgate.net/profile/Roman-Yampolskiy/publication/343812839_Impossibility_of_Unambiguous_Communication_as_a_Source_of_Failure_in_AI_Systems/links/5f411ebb299bf13404e0b7c5/Impossibility-of-Unambiguous-Communication-as-a-Source-of-Failure-in-AI-Systems.pdf (accessed on 4 October 2021).

- Horowitz, E. Programming Languages, a Grand Tour: A Collection of Papers, Computer software engineering series, 2nd ed.; Computer Science Press: Rockville, MD, USA, 1985; p. 758.

- DeLong, H. A Profile of Mathematical Logic. In Addison-Wesley series in mathematics; Addison-Wesley: Reading, MA, USA, 1970; p. 304.

- Enderton, H.B. A Mathematical Introduction to Logic; Academic Press: New York, NY, USA, 1972; p. 295.

- Iovino, M.; Scukins, E.; Styrud, J.; Ögren, P.; Smith, C. A survey of behavior trees in robotics and AI. arXiv 2020, arXiv:2005.05842v2.

- Defense Innovation Board. AI Principles: Recommendations on the Ethical Use of Artificial Intelligence by the Department of Defense. U.S. Department of Defense; 2019. Available online: https://media.defense.gov/2019/Oct/31/2002204458/-1/-1/0/DIB_AI_PRINCIPLES_PRIMARY_DOCUMENT.PDF (accessed on 4 October 2021).

- Karp, R.M. Reducibility among Combinatorial Problems. In Complexity of Computer Computations; Miller, R.E., Thatcher, J.W., Bohlinger, J.D., Eds.; Springer: Boston, MA, USA, 1972.

- Garey, M.R.; Johnson, D.S. Computers and Intractability: A Guide to the Theory of NP-Completeness; W. H. Freeman: San Francisco, CA, USA, 1979; p. 338.

- Arora, S.; Safra, S. Probabilistic checking of proofs: A new characterization of NP. JACM 1998, 45, 70–122.

- Asimov, I. I, Robot; Gnome Press: New York, NY, USA, 1950; p. 253.

- Yudkowsky, E. Complex Value Systems in Friendly AI. In Artificial General Intelligence; Schmidhuber, J., Thórisson, K.R., Looks, M., Eds.; Springer: Berlin, Germany, 2011; pp. 389–393.

- Yampolskiy, R.V. Leakproofing singularity—Artificial intelligence confinement problem. J. Conscious. Stud. 2012, 19, 194–214.

- Yampolskiy, R.V. Behavioral Biometrics for Verification and Recognition of AI Programs. In Proceedings of the SPIE—The International Society for Optical Engineering, Buffalo, NY, USA, 20–23 January 2008.

- Bore, N.K. Promoting distributed trust in machine learning and computational simulation via a blockchain network. arXiv 2018, arXiv:1810.11126.

- Hind, M. Increasing trust in AI services through Supplier’s Declarations of Conformity. arXiv 2018, arXiv:1808.0726129.

| Interaction type | Definition |
|---|---|
| Value-aligned interaction | Voluntary, non-fraudulent transactions driven by individual value-sets |
| Value mis-aligned interaction | A set of values preferred by ≥1 agent(s) forced on ≥1 agent(s) |

| Syntactical Symbol | Prime | Model |
|---|---|---|
| a1 | p1 | 2 |
| a2 | p2 | 3 |
| a3 | p3 | 5 |
| … | … | … |
| an | pn | pn |
| r1 | pn+1 | pn+1 |
| r2 | pn+2 | pn+2 |
| r3 | pn+3 | pn+3 |
| … | … | … |
| rn | pm | pm |
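The table assigns consecutive primes first to the axioms and then to the transformation rules. A minimal sketch of that assignment follows. One further step is an assumption made here for illustration and is not stated in the table: a composition is encoded as the product of the primes of the symbols it uses, which records which symbols appear but not their order. The function names are hypothetical.

```python
from itertools import count
from typing import Dict, Iterator, List, Sequence


def primes() -> Iterator[int]:
    """Yield 2, 3, 5, ... by trial division (adequate for small tables)."""
    found: List[int] = []
    for n in count(2):
        if all(n % p for p in found):
            found.append(n)
            yield n


def assign_primes(axioms: Sequence[str], rules: Sequence[str]) -> Dict[str, int]:
    """Map axioms, then rules, to consecutive primes as in the table."""
    gen = primes()
    return {symbol: next(gen) for symbol in [*axioms, *rules]}


def encode(symbols: Sequence[str], table: Dict[str, int]) -> int:
    """Assumed encoding: the product of the primes of the symbols used."""
    code = 1
    for symbol in symbols:
        code *= table[symbol]
    return code


def uses(code: int, symbol: str, table: Dict[str, int]) -> bool:
    """True if the symbol's prime divides the code."""
    return code % table[symbol] == 0


if __name__ == "__main__":
    table = assign_primes(["a1", "a2", "a3"], ["r1", "r2"])
    print(table)
    print(encode(["a1", "a2", "r1"], table))
```

Because prime factorization is unique, the set of symbols in a composition can always be recovered from its code under this assumed encoding.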


© 2021 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Carlson, K. Provably Safe Artificial General Intelligence via Interactive Proofs. Philosophies 2021, 6, 83. https://doi.org/10.3390/philosophies6040083