1. Introduction

With the advent of the information age, we have witnessed an unprecedented surge in data volume across various domains, ranging from engineering [1] to ecology [2] and from information technology [3] to manufacturing [4] and management [5]. The complexity of the problems in these fields is increasing and is often characterized by multiple objectives [6] and high dimensionality [7]. The high dimensionality and redundancy inherent in raw datasets can lead to excessive consumption of computational resources and degrade the efficacy of learning algorithms. Thus, selecting the more important data, even in datasets with a limited amount of data, is essential for increasing classification success. Feature selection (FS) has emerged as an essential data preprocessing technique that has garnered substantial interest over recent decades. It increases the classification accuracy and reduces the data size by selecting the most appropriate subset of features from the original dataset [8].

FS encompasses a spectrum of methods, broadly classified into filter, wrapper, and hybrid approaches [9]. Filters are faster than wrappers, but they ignore the relationships among features and cannot deal with redundant information. Although wrappers are computationally more expensive, they can attain better results than filters because they use a learning algorithm in the evaluation process [10]. The search for the best feature subset is the foundation of FS, and its outcome depends heavily on the search method and on the strategy used to evaluate candidate features. Optimization in FS has moved from traditional full search, random search, sequential search, and incremental search methods to metaheuristic search approaches [8]. Metaheuristic algorithms (MAs) have become prevalent because of their ability to navigate large search spaces efficiently and to avoid local optima while seeking global optima [11,12]. Various metaheuristic methods, including several recent algorithms, have been applied to FS problems [13,14,15,16,17,18]. Hybridized or improved optimizers have also been proposed for FS [19,20,21,22,23]. So many works on FS have appeared because no single FS technique can address all varieties of FS problems. Hence, there remains ample room to develop new, efficient models for FS [24].

Beluga whale optimization (BWO) is a recently proposed population-based metaheuristic with promising optimization capabilities for continuous problems [25]. BWO is inspired mainly by the behaviours of beluga whales, including swimming, preying, and whale fall. It is a derivative-free optimization technique that is easy to implement. Compared with the whale optimization algorithm (WOA) [26], the grey wolf optimizer (GWO) [27], particle swarm optimization (PSO) [28], and other algorithms, BWO has solid local exploitation capabilities. The main merit of this optimizer is its balance between exploration and exploitation, which supports global convergence. Owing to these advantages, BWO and its modified versions have been employed in many fields, such as image semantic segmentation [29], cluster routing in wireless sensor networks [30], landslide susceptibility modelling [31], speech emotion recognition [32], short-term hydrothermal scheduling [33], and demand-side management [34]. In addition, some modified versions of BWO have been developed for specific optimization problems [35,36,37]. However, as a novel optimizer, BWO has not yet been studied on a wide range of problems. Although it is an effective optimizer, it still faces challenges in improving its search ability, accelerating its convergence rate, and addressing complex optimization problems. It is therefore worthwhile to extend the application fields of BWO.

Although the BWO algorithm can achieve a certain optimization effect in its early stages, in the later stages, owing to insufficient population diversity and a single search perspective, it often has difficulty obtaining better solutions and is prone to falling into local optima. Furthermore, as the problem's dimension and complexity increase, the optimization capability, search accuracy, and convergence speed of BWO all decrease, and it becomes difficult to find other high-quality solutions [35,37]. The food chain embodies the principle of survival of the fittest in nature, and each organism has certain limitations in its survival strategy [22]. These limitations motivate a careful analysis and improvement of the mathematical models of biological behaviour when designing evolutionary algorithms that simulate biological habits. Although existing evolutionary algorithms can address many optimization problems, refining the mathematical models of biological habits and the underlying mathematical theory has the potential to further enhance their performance [38].

To improve the effectiveness of the original BWO and help it overcome some of these limitations, this paper introduces several mathematical strategies. First, improved circle mapping (ICM) [39] and dynamic opposition-based learning (DOBL) [40] are introduced to increase population diversity during the search, thereby reducing the risk of falling into local optima and enhancing search efficiency and accuracy. Second, the EP strategy, inspired by GWO [27], is integrated to maintain a subpopulation composed of the best individuals, which guides the main population towards the global optimum and enhances the algorithm's ability to escape from local optima. Third, the SLFSUP strategy is integrated so that MSBWO can conduct a more detailed search within local areas, enhancing the rigor and accuracy of local exploitation. Finally, Gold-SA [41] is introduced to update the population, which accelerates convergence while maintaining population diversity and improving solution quality. We tested the resulting algorithm, MSBWO, on twenty-three continuous benchmark problems. We also applied it to the feature selection problem to evaluate the proposed approach.

The main contributions of this paper are as follows:

- Four improvement strategies, namely, ICMDOBL population initialization, EP, SLFSUP, and Gold-SA, were used to improve the optimization performance of the BWO algorithm.

- Twenty-three global optimization benchmark tasks were used to evaluate the proposed MSBWO and compare it with conventional and state-of-the-art (SOTA) metaheuristic approaches.

- The developed MSBWO was transformed into a binary model for tackling FS problems for the first time. Furthermore, the binary MSBWO was compared with other FS techniques on several UCI datasets.

The structure of this article is as follows: Section 2 describes the exploration and exploitation process of the standard BWO in detail. Section 3 introduces MSBWO, which incorporates several strategies, and proposes a binary MSBWO for feature selection tasks. Section 4 presents the experimental setup and the analysis of the results. Finally, Section 5 gives the conclusions and a summary of the work. To aid understanding, the abbreviations used in this article are summarized in Table 1.

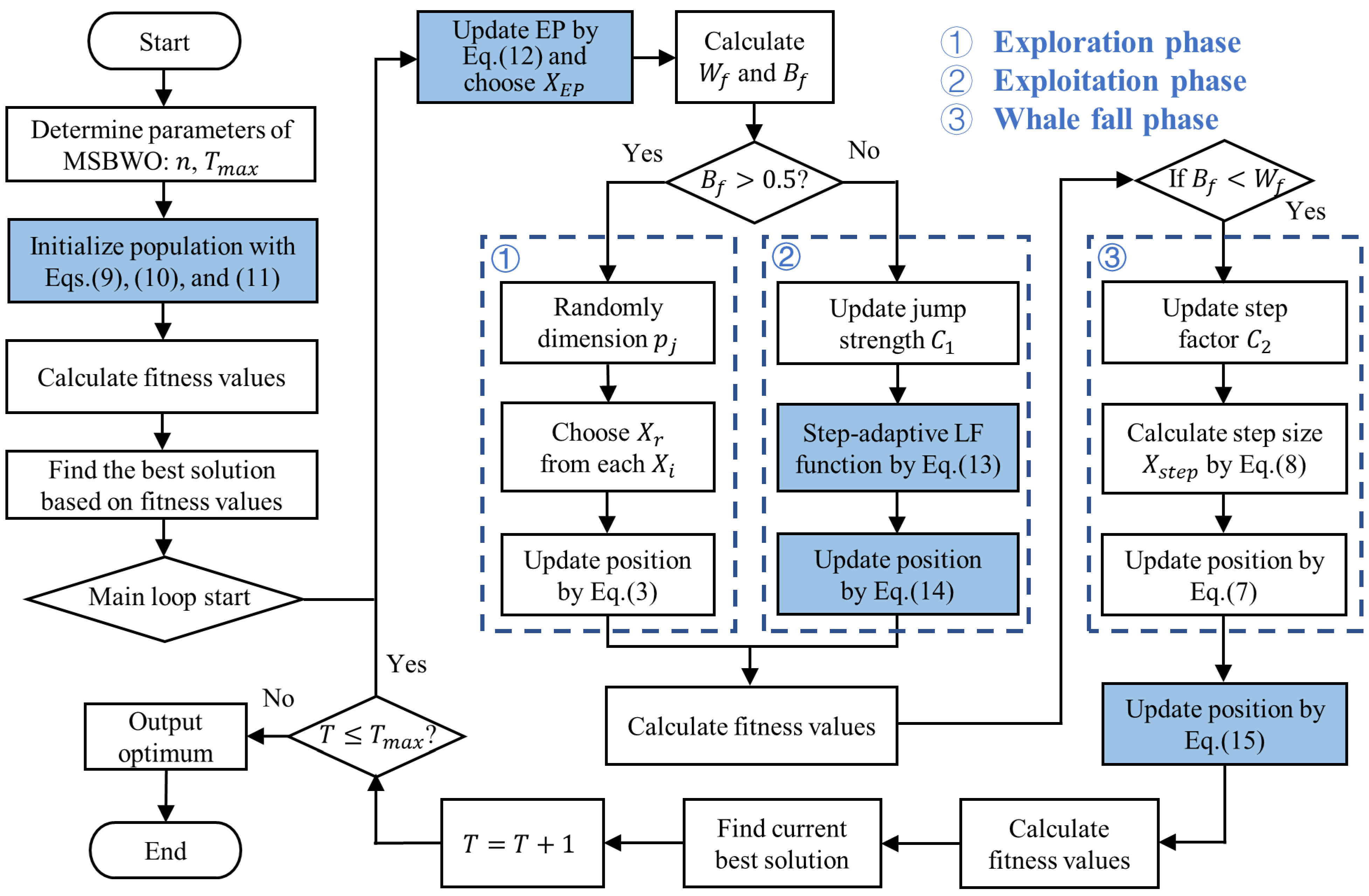

3. Proposed MSBWO

The proposed MSBWO introduces four strategies: (1) the ICMDOBL population diversity strategy, (2) the EP mechanism, (3) the SLFSUP strategy, and (4) the Gold-SA population update mechanism. In addition, the feature selection problem can be solved by converting MSBWO to a binary version.

3.1. ICMDOBL Population Diversity

Because the BWO algorithm generates its population randomly, the population distribution can be uneven, which may reduce population diversity and quality and thereby affect the convergence of the algorithm. Chaotic mapping is characterized by uncertainty, irreducibility, and unpredictability [43], which can lead to a more uniform population distribution than probability-dependent random generation. MSBWO therefore generates its initial population with chaotic mapping to increase the diversity of potential solutions. Common chaotic mappings include logistic mapping, tent mapping [44], sine mapping [45], and circle mapping [46]. Circle mapping is more stable and has a higher coverage rate of chaotic values. Because circle mapping takes values more densely in [0.2, 0.6], the circle mapping formula is improved as follows:

where

represents the sequence value of the

ith beluga whale on the

jth dimension, and

is the chaotic sequence value of the (

i+1)th beluga whale on the

jth dimension. Then, the values are scaled and shifted to generate

with values between

and

for each dimension:

DOBL [40,47] is introduced to further increase population diversity and improve the quality of the initial solutions. The specific formula is expressed as follows:

where

is the population established with the ICM method, as shown in Equation (10), and

and

are random numbers between (0, 1). The DOBL generates

and an opposition population

and then merges these two populations into a new population,

. The fitness values of

are calculated, and the greedy strategy is used for full competition within the new population. The best

N individuals are then selected as the initial population. Using ICMDOBL, MSBWO starts iterating from individuals with better fitness, thereby enhancing the convergence.
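The initialization procedure described in this subsection can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation. The exact improved circle map is not reproduced in this text, so the coefficients used here (3.85, 0.4, 0.7) are illustrative assumptions. The DOBL rule x + r1·(r2·(lb + ub − x) − x) is a commonly used dynamic-opposite form and is likewise assumed, as are the scalar bounds and the function names `improved_circle_sequence` and `icmdobl_init`.

```python
import math
import random


def improved_circle_sequence(length, x0, a=3.85, b=0.4, c=0.7):
    """Chaotic sequence in [0, 1) from an ASSUMED improved circle map."""
    seq = []
    x = x0
    for _ in range(length):
        # The argument stays positive for x in [0, 1), so the result is in [0, 1).
        x = (a * x + b - (c / (a * math.pi)) * math.sin(a * math.pi * x)) % 1.0
        seq.append(x)
    return seq


def icmdobl_init(fitness, n, dim, lb, ub, rng=None):
    """Return n agents: chaotic init, opposition, merge, greedy selection."""
    rng = rng or random.Random()
    # One chaotic sequence per dimension; value i of the sequence feeds agent i.
    columns = [improved_circle_sequence(n, rng.random()) for _ in range(dim)]
    # Scale and shift the chaotic values into [lb, ub].
    icm_pop = [[lb + columns[j][i] * (ub - lb) for j in range(dim)]
               for i in range(n)]

    # ASSUMED dynamic opposition rule, with results clipped to the bounds.
    opp_pop = []
    for agent in icm_pop:
        r1, r2 = rng.random(), rng.random()
        opp = [x + r1 * (r2 * (lb + ub - x) - x) for x in agent]
        opp_pop.append([min(max(v, lb), ub) for v in opp])

    # Greedy competition over the merged population (minimisation).
    merged = icm_pop + opp_pop
    merged.sort(key=fitness)
    return merged[:n]
```

Sorting the merged population and keeping the first N agents is one simple way to realize the greedy competition; any selection that keeps the N fittest agents would serve the same purpose.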

3.2. EP Strategy

During position updating, beluga whales always use the best whale as prey. If the prey has fallen into a local optimum, all subsequent search agents will converge to it, leading to premature convergence of the algorithm. In the GWO algorithm [27], a hierarchical system was proposed that updates the positions according to the mean position of the three best grey wolves, which avoids the shortcomings of being guided by a single search agent.

Inspired by GWO, the EP strategy is integrated into MSBWO. The three best solutions obtained thus far, together with their weighted average, are included as the candidate elites in the EP. The three best solutions are conducive to exploration, whereas the weighted average position represents the evolutionary trend of the entire superior population, which is beneficial for exploitation. Position updating is guided by an agent randomly selected from the EP, improving the algorithm's ability to escape from local optima.

The EP strategy is modelled as:

where

is the fitness value, and

is set to 3.
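As a rough illustration of the EP idea described above, the following Python sketch builds a pool from the three best agents and their weighted average, and then draws a guide at random. The weighting scheme is an assumption made for this sketch (lower fitness receives a larger weight, for a minimisation problem); the paper's own weight formula is not reproduced in this text. The names `build_elite_pool` and `pick_guide` are hypothetical.

```python
import random


def build_elite_pool(population, fitness_values, k=3):
    """Return the k best agents plus their weighted average (minimisation)."""
    order = sorted(range(len(population)), key=lambda i: fitness_values[i])[:k]
    elites = [population[i] for i in order]
    fits = [fitness_values[i] for i in order]

    # ASSUMED weights: better (lower) fitness gets a larger, non-zero weight.
    worst, best = max(fits), min(fits)
    spread = worst - best
    raw = [(worst - f) + spread + 1e-12 for f in fits]
    total = sum(raw)
    weights = [r / total for r in raw]

    dim = len(elites[0])
    weighted_mean = [sum(w * e[j] for w, e in zip(weights, elites))
                     for j in range(dim)]
    return elites + [weighted_mean]


def pick_guide(pool, rng=random):
    """Choose the guiding agent uniformly at random from the elite pool."""
    return rng.choice(pool)
```

Drawing the guide from four candidates rather than always using the single best agent is what reduces the pull towards one possibly local optimum.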

3.3. SLFSUP Strategy

In the exploitation phase, BWO uses Lévy flight (LF) with a fixed step to improve its convergence. However, the desirable LF step varies across the stages of the algorithm. A larger LF step covers the search space faster but reduces the search precision, whereas a smaller step gives higher search precision but slows the search. Therefore, a step-adaptive LF strategy is used in MSBWO to improve its exploitation and convergence accuracy. In the early stages of iteration, MSBWO uses LF with a larger step so that it can search the solution space widely, whereas in the later stages the LF step decreases and the search becomes more refined. The step-adaptive LF strategy is calculated as follows:

As stated in Equation (4), position updating in the exploitation phase of BWO involves a random agent, the best agent, and the current agent, so possible solutions may still be missed. In WOA [26], the spiral updating position strategy moves the whale along a spiral around the prey, namely, the best solution obtained so far, adjusting its distance to the prey as it searches. Such a strategy can make full use of regional information and improve the search capability. Therefore, MSBWO introduces this method to enhance the rigor and accuracy of local exploitation and to strengthen the local search ability.

The position updating model in the exploitation process based on the SLFSUP is as follows:

where

is the position of an agent randomly selected from

,

is the current position for a random beluga whale to maintain its diversity,

b is a constant for defining the shape of the spiral, and

is a random number in [−1, 1].

is set to 1 in MSBWO.
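The two ingredients of this strategy, a Lévy step whose scale shrinks over the iterations and a WOA-style spiral move around a guide, can be sketched in Python as below. This is only a sketch under assumptions: the Lévy step uses Mantegna's algorithm with β = 1.5, the linear decay in `adaptive_scale` and its end points are hypothetical stand-ins for the paper's adaptive formula, and the way the paper combines these terms into one update equation is not reproduced here. All function names are illustrative.

```python
import math
import random


def levy_step(beta=1.5, rng=random):
    """One Levy-distributed step via Mantegna's algorithm."""
    num = math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
    den = math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2)
    sigma = (num / den) ** (1 / beta)
    u = rng.gauss(0.0, sigma)
    v = rng.gauss(0.0, 1.0)
    while v == 0.0:  # guard against division by zero
        v = rng.gauss(0.0, 1.0)
    return u / abs(v) ** (1 / beta)


def adaptive_scale(t, t_max, start=0.1, end=0.001):
    """ASSUMED schedule: the step scale shrinks linearly from start to end."""
    return start + (end - start) * t / t_max


def spiral_move(current, guide, b=1.0, rng=random):
    """WOA-style logarithmic spiral around a guide position."""
    l = rng.uniform(-1.0, 1.0)  # random spiral parameter in [-1, 1]
    factor = math.exp(b * l) * math.cos(2 * math.pi * l)
    return [abs(g - c) * factor + g for c, g in zip(current, guide)]
```

A step for iteration t could then be formed as `adaptive_scale(t, t_max) * levy_step()`, which gives large jumps early and fine adjustments late.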

3.4. Gold-SA Update Mechanism

Inspired by the relationship between the sine function and the unit circle, the Gold-SA [41] scans all values of the sine function, which gives the algorithm strong global search capabilities. The golden section ratio is used in the position updating of Gold-SA so that it can scan the search space as thoroughly as possible, thus accelerating convergence and helping to escape from local optima.

In the MSBWO, the Gold-SA mechanism is utilized to update the beluga whale population to increase the global search ability. The position updating with Gold-SA is given as follows:

where

is a random number in the range

and where

is a random number in the range

.

and

are the coefficients obtained via the golden section method, which aims at narrowing the search space and allowing the current value to approach the target value. They can be expressed as follows:

where

is the golden number, and the initial values of

and

are

and

, respectively.
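For orientation, a Gold-SA position update can be sketched in Python as follows. The sketch follows the commonly published form of the golden sine algorithm and rests on several assumptions that are not confirmed by this text: the random numbers are drawn from [0, 2π] and [0, π], the golden number is (√5 − 1)/2, the interval end points start at −π and π, and the coefficients are the two golden section points of that interval. The shrinking of the interval during the iterations is omitted, and the names `golden_coefficients` and `gold_sa_update` are hypothetical.

```python
import math
import random

TAU = (math.sqrt(5) - 1) / 2  # golden number, about 0.618


def golden_coefficients(a=-math.pi, b=math.pi):
    """Golden section points of [a, b] (ASSUMED initial interval)."""
    x1 = a + (1 - TAU) * (b - a)
    x2 = a + TAU * (b - a)
    return x1, x2


def gold_sa_update(position, best, x1, x2, rng=random):
    """One Gold-SA style move of `position` relative to the best agent."""
    r1 = rng.uniform(0.0, 2 * math.pi)
    r2 = rng.uniform(0.0, math.pi)
    return [
        p * abs(math.sin(r1)) - r2 * math.sin(r1) * abs(x1 * g - x2 * p)
        for p, g in zip(position, best)
    ]
```

With the assumed interval, the two coefficients are symmetric about zero (about −0.742 and 0.742), so the update contracts the agent towards the best position while the sine term varies the direction.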

The proposed improvement strategies are applied to BWO, and the flow chart of the MSBWO is shown in Figure 1.

3.5. Binary MSBWO

FS is a binary decision optimization problem whose number of candidate solutions grows exponentially with the number of features, where 1 represents the selection of a feature and 0 represents its non-selection. As an improved version of the original BWO, the proposed MSBWO has a greatly improved search performance. Therefore, this study applies it to obtain a better feature subset. However, to apply MSBWO to the FS problem, the search space of the agents needs to be restricted. Moreover, a binary transformation is required to map the continuous values to the corresponding binary values [48].

To address the above issues, Equations (17) and (18) are used for initialization:

where

is the

jth component of the

ith agent,

is the size of the features, and

is a random number between (0, 1).

After position updating, the sigmoid function is used for discretization. The transfer function and position updating equation selected in this paper are shown in Equations (19) and (20).

As a combinatorial optimization problem, FS has two main goals. One is to improve the classification performance, and the other is to minimize the number of selected features. Therefore, the fitness function is defined as shown in Equation (21).

where

represents the classification error rate of the RF classifier,

D denotes the number of features in the original dataset, and

denotes the length of the selected feature subset.

and

are used to balance the relationship between the error rate and the ratio of selected features;

,

.
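The binary conversion and the two-goal fitness described in this subsection can be illustrated with the short Python sketch below. It assumes the usual sigmoid transfer rule (a bit is set to 1 when a uniform random number falls below the sigmoid of the continuous value) and a weighted sum of the error rate and the ratio of selected features in which the two weights sum to 1. The default weight of 0.9 on the error term is an assumption made for illustration, and the names `binarize` and `fs_fitness` are hypothetical.

```python
import math
import random


def sigmoid(x):
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def binarize(position, rng=random):
    """Map a continuous position to a 0/1 feature mask via the sigmoid."""
    return [1 if rng.random() < sigmoid(x) else 0 for x in position]


def fs_fitness(error_rate, mask, alpha=0.9):
    """Weighted sum of error rate and selected-feature ratio (lower is better)."""
    beta = 1.0 - alpha  # ASSUMED complementary weight
    return alpha * error_rate + beta * sum(mask) / len(mask)
```

For example, a mask that selects 2 of 4 features with a 10% error rate scores 0.9 × 0.1 + 0.1 × 0.5 = 0.14 under these assumed weights. In practice the error rate would come from the classifier trained on the selected features.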

3.6. Computational Complexity

To gain a better understanding of the implementation process of the MSBWO algorithm proposed in this paper, the computational complexity of MSBWO is analyzed as follows. The computational complexity of the MSBWO relies on three main steps: initialization, fitness evaluation, and updating of the beluga whale. In the initialization phase of MSBWO, the computational complexity of each agent is assumed to be , where is the dimension of a particular problem. The computational complexity of ICMDOBL is , where is the population size. After entering the iteration, the computational complexity of EP is . In the exploration and exploitation phases, the novel exploitation mechanism replaces the original exploration mechanism, and the computational complexity is similar to that of BWO, which is represented as . The computation of the whale fall phase can also be approximated as , similar to BWO. Additionally, the Gold-SA is an extra searching strategy whose computational complexity is . Therefore, the total computational complexity of MSBWO is evaluated approximately as . Thus, the MSBWO algorithm proposed in this paper has greater computational complexity than the original BWO algorithm.

4. Experiments and Results Analysis

To confirm the performance of MSBWO, a set of targeted experiments was performed in this work, and the comparative results are discussed in a comprehensive analysis. To decrease the influence of external factors, every task in this work was conducted in the same setting. With respect to the parameter settings for the metaheuristic algorithms, a total of 50 search agents were used, except in the FS experiments, and multiple iterations were completed. To reduce the impact of experimental randomness, each algorithm was executed on each benchmark function 30 times.

We applied two statistical performance measures, the mean and the standard deviation (std), to assess the optimization ability of MSBWO; the std reflects the robustness of the tested methods. Furthermore, statistical significance tests were used to assess the success of MSBWO. In this study, we utilized the Wilcoxon rank-sum test to analyze the significance of the differences among the compared approaches, with the significance level set to 0.05. In the results of the Wilcoxon rank-sum test, the rows identified by ‘+/=/−’ summarize the significance analysis. The symbol ‘+’ indicates that MSBWO significantly outperforms the compared approach, ‘=’ indicates that there is no significant difference between MSBWO and the compared approach, and ‘−’ indicates that MSBWO is significantly worse than the compared approach. Additionally, the Friedman test was applied to obtain the average ranking value (denoted as ARV) of all the compared approaches for further statistical comparison.
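The ‘+/=/−’ marking can be illustrated with a small, dependency-free Python sketch. It computes a two-sided Wilcoxon rank-sum p value with the normal approximation (without tie correction) and then assigns the symbol at the 0.05 level. Deciding the direction by comparing the sample means of a minimisation problem is an assumption of this sketch, and the names `rank_sum_p_value` and `significance_mark` are hypothetical. The experiments themselves were run in MATLAB, so this is not the authors' code.

```python
import math


def rank_sum_p_value(x, y):
    """Two-sided Wilcoxon rank-sum p value (normal approximation)."""
    pooled = sorted([(v, 0) for v in x] + [(v, 1) for v in y])
    ranks = [0.0] * len(pooled)
    i = 0
    while i < len(pooled):
        j = i
        while j + 1 < len(pooled) and pooled[j + 1][0] == pooled[i][0]:
            j += 1
        for k in range(i, j + 1):
            ranks[k] = (i + j) / 2 + 1  # average rank for tied values
        i = j + 1
    n1, n2 = len(x), len(y)
    w = sum(r for r, (_, g) in zip(ranks, pooled) if g == 0)
    mean = n1 * (n1 + n2 + 1) / 2
    sd = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (w - mean) / sd
    return math.erfc(abs(z) / math.sqrt(2))


def significance_mark(msbwo_runs, other_runs, level=0.05):
    """Return '+', '=' or '-' for a minimisation problem."""
    if rank_sum_p_value(msbwo_runs, other_runs) >= level:
        return "="
    mean_a = sum(msbwo_runs) / len(msbwo_runs)
    mean_b = sum(other_runs) / len(other_runs)
    return "+" if mean_a < mean_b else "-"
```

Each argument would hold the final objective values of the 30 runs of one algorithm on one function; counting the marks over all functions gives a ‘+/=/−’ summary row.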

Section 4.1 presents an extensive scalability analysis to investigate the efficiency of MSBWO on the CEC2005 benchmark problems [49], as shown in Table 2, Table 3 and Table 4. Section 4.2 investigates the impact of the different optimization strategies on the final search for the global optimal solution. In Section 4.3, MSBWO is compared with other conventional MAs in terms of convergence speed and accuracy on the benchmark functions. Section 4.4 compares MSBWO with the SOTA metaheuristic approaches that were introduced in 2024. In Section 4.5, 10 datasets are selected from the UCI machine learning repository to test the performance of the binary MSBWO in FS.

All the experiments were performed on a 2.60 GHz Intel i7-10750H CPU equipped with 16 GB of RAM and Windows 10 OS and were programmed in MATLAB R2023b.

4.1. Scalability Analysis of MSBWO

The dimensions of the optimization problems affect the efficiency of the algorithm. Therefore, it is necessary to conduct an extensive scalability analysis to perform a more comprehensive investigation into the efficiency of MSBWO. The purpose of the scalability evaluation experiment is to compare the performance of MSBWO with that of BWO as the number of dimensions increases. In this section, we test the first 13 of 23 benchmark problems with dimensions of 100, 200, 500, 1000, and 2000.

In each experiment, 30 independent runs were applied to each method to reduce the influence of randomness on the experimental results. Additionally, the maximum number of iterations was set to 500, and the population size was set to 50. The parameter initialization of all the methods was the same as in their original references.

Table 5 presents the statistical values obtained for the 13 problems in each dimension. According to the statistical results in Table 5, MSBWO is more successful than BWO in addressing the optimization problems in each dimension. Although the number of statistically significant results at 1000/2000 dimensions is somewhat lower than at 100/200/500 dimensions, the solutions obtained by MSBWO at 1000/2000 dimensions are much closer to the optimal solution than those of BWO, according to the statistical measures (average values and standard deviations).

For the unimodal problems (F1–F7), MSBWO outperforms BWO, except for F5 and F6 at 1000/2000 dimensions, which indicates that MSBWO significantly strengthens the exploitative ability of BWO at 100/200/500 dimensions. For the multimodal problems (F8–F13), MSBWO is better than BWO for F8 in each dimension. MSBWO shows no difference from BWO when addressing F9–F11, and both attain the optimal solutions. However, BWO is superior to MSBWO for F12 and F13 at 1000 and 2000 dimensions. That is, at 1000/2000 dimensions, the advantage of MSBWO over the original BWO is not as pronounced as it is at 100/200/500 dimensions.

From the standard deviation perspective, the standard deviations of MSBWO in each dimension are lower than those of BWO on functions F2, F4, and F7 and equal to those of BWO on functions F1, F3, F9, F10, and F11. This indicates that the stability of MSBWO is no worse than that of BWO and is not significantly affected by the number of dimensions. Although the performance of MSBWO is not superior to that of BWO on F8, MSBWO can find a satisfactory solution. Moreover, MSBWO attains a lower ‘ARV’ than BWO does in every dimension, which reveals the superiority of MSBWO regardless of dimension.

It can be concluded that the strategies integrated into MSBWO facilitate the balance of exploration and exploitation and significantly enhance the search ability in different dimensions for specific problems.

4.2. Cross-Evaluation of the Proposed MSBWO

To verify the contributions of various improvement strategies to MSBWO, this section compares the original BWO algorithm with five incomplete versions of the MSBWO algorithm.

Sections 3.1–3.4 introduce four strategies, namely, ICMDOBL, EP, SLFSUP, and Gold-SA, into the original BWO. In this section, the performance of different combinations of these strategies is tested and compared. In Table 6, “1” means that the mechanism was selected, and “0” means that it was not. We refer to the BWO combined with ICMDOBL as ICMDOBL_BWO, and the fusion of the BWO and EP strategies as EP_BWO. The combination of BWO and SLFSUP is denoted as SLFSUP_BWO, and the fusion of BWO and Gold-SA is denoted as GSA_BWO. In addition, EP_GSA_BWO integrates BWO with the EP and Gold-SA strategies. The dimension was set to 30 for all methods. Each algorithm was executed on all 23 benchmark functions 30 times.

From the horizontal comparison in Table 7, the improvement strategies introduced into MSBWO enhance the performance of BWO to varying degrees. ICMDOBL_BWO outperforms the original BWO on the test functions except for F12. This mechanism helps the algorithm start searching from a broader solution space and converge stably to the optimal solution. EP_BWO emphasizes the inheritance of excellent agents while maintaining population diversity, which helps the algorithm quickly converge to a high-quality area in the solution space. EP_BWO significantly outperforms BWO on the F1–F6, F12–F15, and F21–F23 functions, showing good performance in maintaining population diversity and accelerating convergence. SLFSUP_BWO improves the algorithm's search capability by adaptively adjusting the search step size, and it significantly outperforms BWO on the F1–F4 functions. GSA_BWO updates the population positions by simulating the dynamic changes in sine waves. This strategy improves the algorithm's global search capability and helps find the global optimal solution. GSA_BWO significantly outperforms BWO on all functions except F7–F11 and F15. Each improvement strategy has unique advantages and is suitable for different types of problems. EP_GSA_BWO performs best on both the multimodal and the fixed-dimensional multimodal problems, as it integrates the advantages of the EP and Gold-SA strategies.

As shown by the std, EP_GSA_BWO has the best stability on most test functions. Its std is generally zero or very small, except for F8. However, GSA_BWO and EP_GSA_BWO have larger stds on F8, indicating that the Gold-SA strategy has a significant fluctuation in performance on F8. EP_BWO generally has a smaller std on the test functions, indicating good stability. The std of ICMDOBL_BWO is generally similar to that of BWO, indicating that the improvement strategy has little impact on stability.

It can be seen from the Wilcoxon rank-sum test and the ARV that the BWO variants clearly enhance the performance of BWO, although each improvement strategy has its own advantages and applicable scenarios.

4.3. Comparison with Conventional MAs

To further assess the optimization performance of MSBWO, in addition to BWO, we selected four well-known MAs for comparison, namely, the dung beetle optimizer (DBO) [50], GWO [27], WOA [26], and PSO [28]. The parameter initialization of all the algorithms was the same as in their original references. The population size was 50, the dimension was 30, and the maximum number of iterations was 500. Each algorithm was executed 30 times on each function.

Table 8 presents the mean and standard deviation (marked by ‘std’) of the proposed MSBWO and the other selected algorithms on the 23 benchmark problems. The statistical significance of the values in Table 8 is shown in Table 9.

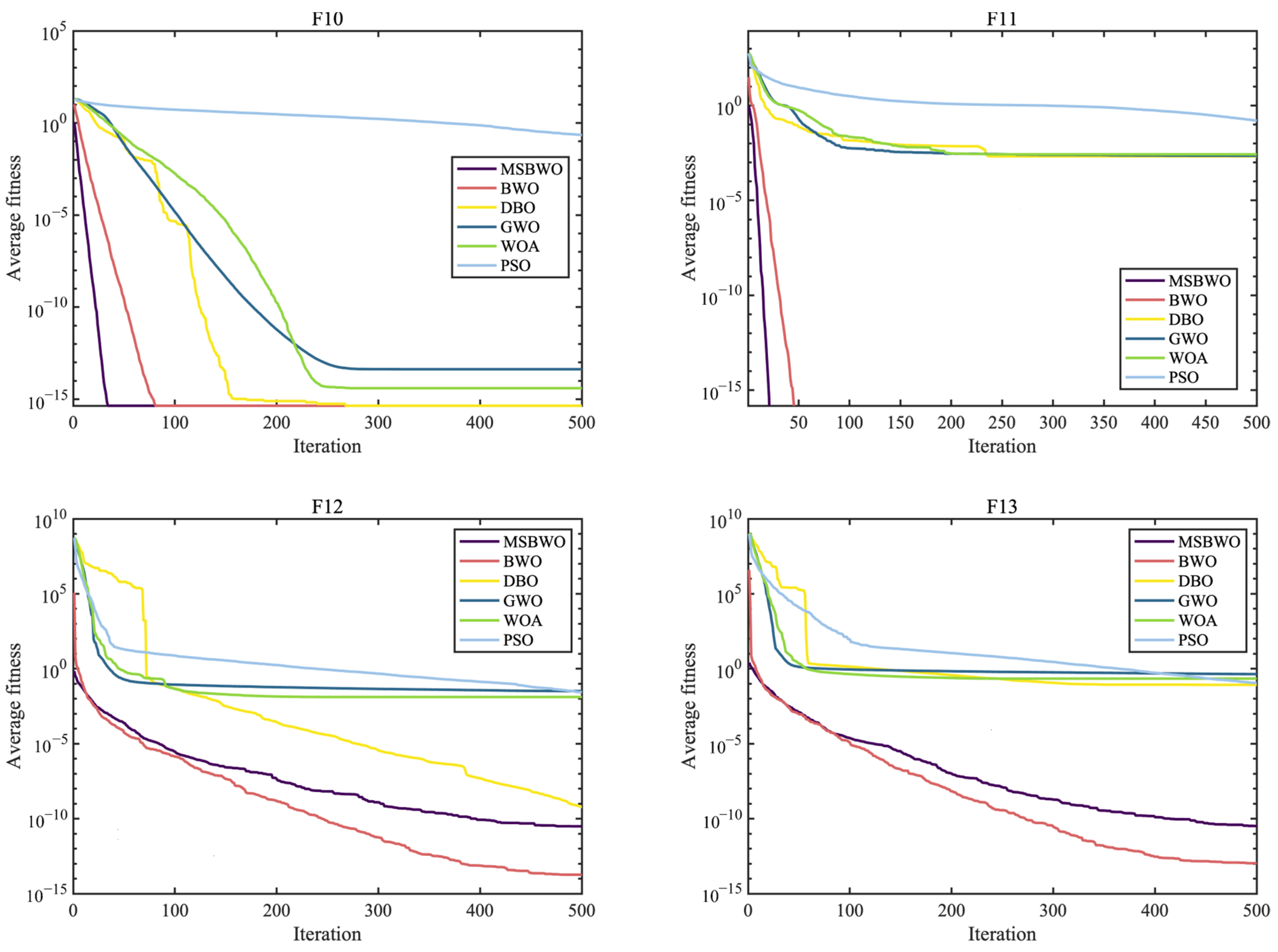

Figure 2, Figure 3 and Figure 4 present the convergence curves of the algorithms on the three categories of functions.

According to the statistical results listed in Table 8, MSBWO finds the best solutions, and sometimes the optimal solutions, on most of the functions, the exceptions being F12, F13, F17, and F19. For F12 and F13, MSBWO performs worse than BWO; however, its results are close to those of BWO and far better than those of the other four methods. For F17 and F19, MSBWO ranks second only to the best methods, namely, DBO and PSO on F17 and PSO on F19. All algorithms obtain similar best average values for F16–F19 but different standard deviations.

According to the p values of the Wilcoxon rank-sum test in Table 9, which analyzes the significance of the differences between paired algorithms, MSBWO differs significantly and positively from the other compared methods on these four functions. Over all the functions, in the worst case MSBWO produces 14 significantly better, 7 equal, and 2 significantly worse results than PSO does, and in the best case MSBWO outperforms GWO on almost all of the functions. Consistently, MSBWO obtains the best ARV of 1.4565 in the Friedman test. Therefore, it can be concluded that the proposed MSBWO has considerable advantages over the five competitive swarm-based algorithms.

From the standard deviation perspective, the standard deviations of MSBWO are the lowest for 15 functions, although they are not the lowest for F1, F3, and F9–F11. These results indicate that the optimization ability of MSBWO is more stable than that of the other algorithms. The performance of MSBWO is not superior on F12, F13, F17, or F19; however, MSBWO can still find satisfactory solutions to these functions.

The curves in Figure 2, Figure 3 and Figure 4 illustrate the convergence rates of the proposed MSBWO, BWO, DBO, GWO, WOA, and PSO on the unimodal (F1, F2, F6, and F7), multimodal (F10–F13), and composition (F15, F21–F23) problems. According to these figures, MSBWO has a clear advantage in convergence rate over the other approaches on F1, F2, F10, F11, F15, and F21–F23. The other approaches, especially DBO, GWO, WOA, and PSO, stagnate in local optima early in the optimization on F1, F2, F6, F11, F12, and F15, whereas MSBWO has the fastest convergence rate and obtains the best solutions on these functions. These trends confirm the improvement brought by MSBWO in most cases of the unimodal, multimodal, and composition tasks.

Accordingly, these experimental results verify that the developed MSBWO has an efficient searching ability and an accelerated convergence speed, which benefit mainly from the ICMDOBL and EP strategies. The ICMDOBL strategy gives the algorithm better initial agents: the individuals are scattered more evenly over the global space and have a better chance of approaching the global optimal solution. Moreover, the SLFSUP mechanism allows the algorithm to adjust the step size during the search, which helps achieve a reasonable balance between exploitation and exploration. Gold-SA also accelerates convergence and helps the algorithm escape from local optima.

4.4. Comparison with SOTA Algorithms

To further investigate the advantages of the proposed MSBWO, the algorithm was compared against five SOTA MAs that were introduced in 2024, namely, the horned lizard optimization algorithm (HLOA) [

51], hippopotamus optimization (HO) [

52], parrot optimizer (PO) [

53], crested porcupine optimizer (CPO) [

54], and black-winged kite algorithm (BKA) [

55]. The simulation results, including the Wilcoxon test and the Friedman test results, can be seen in

Table 10.

Table 11 records the

p values of the Wilcoxon test, which were used to investigate the significant differences between MSBWO and one of the compared algorithms. The statistical significances of values in

Table 10 are shown in

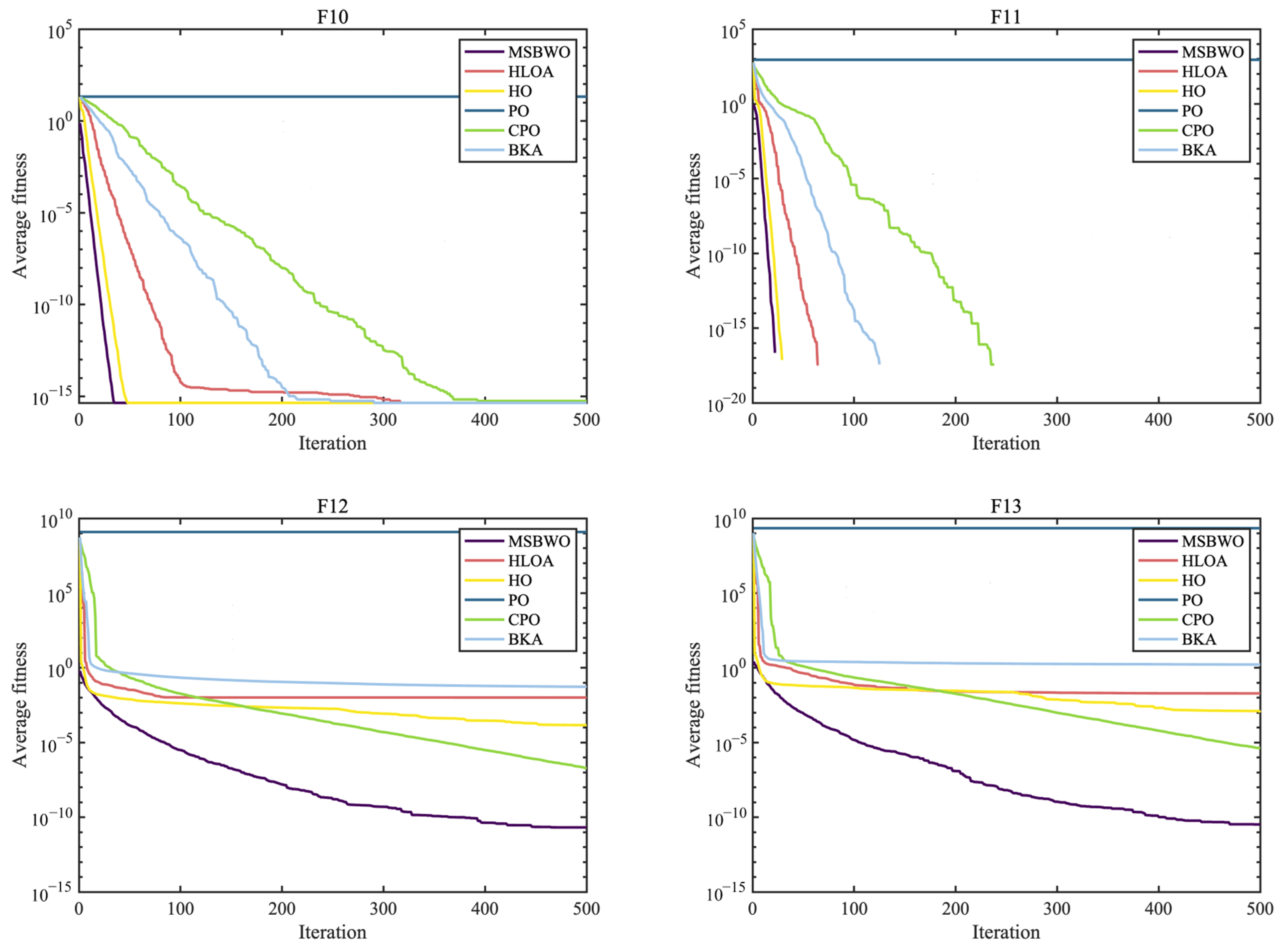

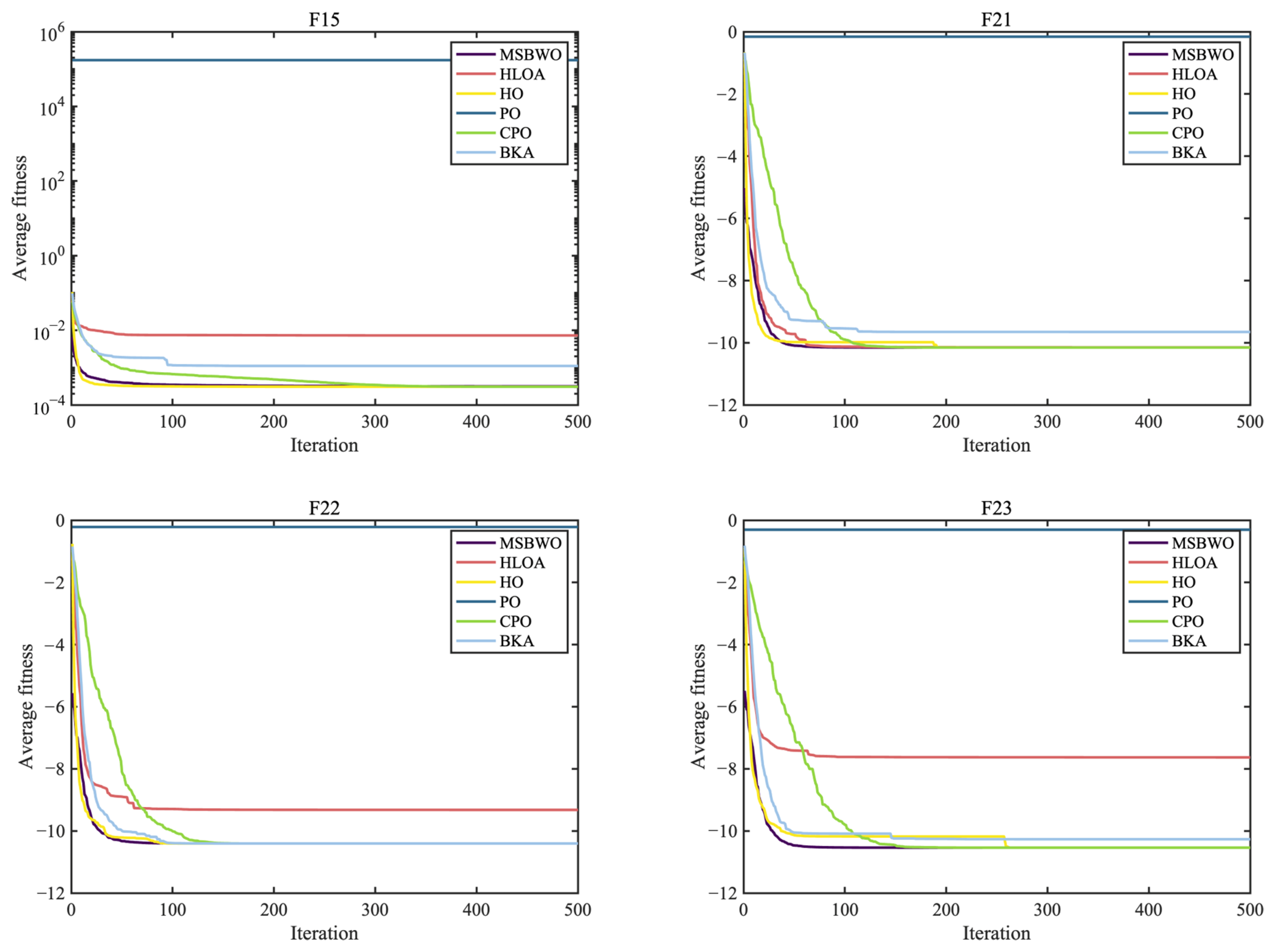

Table 11. These results are clearly illustrated in the convergence curves in

Figure 5,

Figure 6 and

Figure 7.

As reported in Table 10, MSBWO achieves the best solutions for approximately 78% of the functions, except for F7, F14, F15, and F20. For F7, MSBWO is worse only than PO. On F14, MSBWO performs worse than HO, CPO, and BKA. For F15, the means of HO, PO, and CPO are better than that of MSBWO. Nevertheless, according to the p values in Table 11, there is no significant difference between MSBWO and PO on F15. For F20, the solution obtained by MSBWO is close to the best solutions.

According to the ARV, the proposed MSBWO is ranked the best, with a value of 2.2174. Additionally, from Table 11, we observe that MSBWO significantly outperforms the other competitors in general on F1–F13, except for F9–F11, for which all the algorithms achieve the best solutions. This indicates that MSBWO is significantly better than the other five algorithms in optimizing both unimodal and multimodal problems, which reflects its excellent exploitation and exploration abilities. For F16–F23, MSBWO shows competitive optimization capability, which shows that its performance in handling composition problems is not worse than that of the above advanced methods. According to the above investigations, the performance of MSBWO is superior to that of these optimizers from an overall perspective.

The convergence curves further confirm the merits of MSBWO. As shown in

Figure 5 for unimodal functions (F1, F3, F6, and F7) and

Figure 6 for multimodal functions (F10–F13), MSBWO clearly achieves the best outcome and the fastest convergence rate. In contrast, other competitors, including HO, stagnate in local optima during the early stage on F6, F12, and F13. For the fixed-dimensional functions in

Figure 7, although MSBWO does not achieve the fastest convergence rate, its convergence speed does not differ significantly from that of the compared algorithms, and it also obtains the global optimal solution.

Therefore, the multiple strategies integrated into MSBWO strengthen the balance between diversification and intensification. As a result, MSBWO achieves a faster convergence rate or better search ability than advanced optimizers such as HLOA, HO, PO, CPO, and BKA.

4.5. Feature Selection Experiment

This section studies the proposed MSBWO in binary form on feature selection (FS) problems. Several distinct datasets were used to test the proposed approach. They are available from the UCI repository at

https://archive.ics.uci.edu/datasets (accessed on 5 May 2024). The details of the datasets used for feature selection are shown in

Table 12. As revealed in

Table 12, the datasets differ in their numbers of features and instances and belong to different subject areas. This diversity helps to test the proposed method from different viewpoints.

In this study, we chose the commonly used RF classifier. Four other FS approaches, namely binary GWO (BGWO), binary WOA (BWOA), binary DBO (BDBO), and binary BWO (BBWO), serve as competitors to the proposed binary MSBWO (BMSBWO) to confirm its efficiency. In the fitness function, the weighting parameter is set to 0.9. The number of decision trees in the RF classifier is set to 20. The population size is 20, and the maximum number of iterations is 50. Each experiment was executed 30 times.
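The role of the weighting parameter can be sketched as follows. This is not the paper's exact formula, which is not reproduced in this section. It assumes the formulation common in wrapper-based FS, a weighted sum of the classification error rate and the fraction of selected features, with the weight of 0.9 applied to the error term. The function name `fs_fitness` and its arguments are hypothetical.

```python
from typing import Sequence


def fs_fitness(error_rate: float, mask: Sequence[int], weight: float = 0.9) -> float:
    """Weighted sum of error rate and fraction of selected features (lower is better).

    Assumption: `weight` multiplies the error term and (1 - weight) the size term.
    """
    n_total = len(mask)
    n_selected = sum(1 for bit in mask if bit)
    if n_selected == 0:
        # An empty subset cannot train a classifier; assign the worst possible score.
        return 1.0
    ratio = n_selected / n_total
    return weight * error_rate + (1.0 - weight) * ratio


# Example: 10% error with 2 of 10 features selected.
example = fs_fitness(error_rate=0.10, mask=[1, 0, 0, 1, 0, 0, 0, 0, 0, 0])
```

Under this assumed form, the error rate would come from the RF classifier trained on the selected columns only. A weight of 0.9 lets accuracy dominate while still rewarding smaller subsets.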

The numerical results of comparing BMSBWO with BGWO, BWOA, BDBO, and BBWO on each dataset for FS problems are recorded in

Table 13,

Table 14,

Table 15 and

Table 16 in terms of fitness, error rate, mean feature selection size, and average running time, respectively. The mean feature selection size is reported as a ratio: the number of selected features divided by the total number of features in the original dataset.
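As a small illustration of how these four metrics could be aggregated over independent runs, consider the sketch below. The record layout (`fitness`, `error_rate`, `mask`, `time_s`) and the numbers are assumptions made for this example and are not taken from Tables 13 to 16.

```python
from statistics import mean
from typing import Dict, List, Sequence


def selection_ratio(mask: Sequence[int]) -> float:
    """Number of selected features divided by the total number of features."""
    return sum(1 for bit in mask if bit) / len(mask)


def summarise_runs(runs: List[Dict]) -> Dict[str, float]:
    """Average the four reported metrics over independent runs of one algorithm."""
    return {
        "fitness": mean(r["fitness"] for r in runs),
        "error_rate": mean(r["error_rate"] for r in runs),
        "selection_ratio": mean(selection_ratio(r["mask"]) for r in runs),
        "time_s": mean(r["time_s"] for r in runs),
    }


# Two made-up runs on a hypothetical 8-feature dataset (illustration only).
runs = [
    {"fitness": 0.12, "error_rate": 0.10, "mask": [1, 1, 0, 0, 1, 1, 0, 0], "time_s": 3.0},
    {"fitness": 0.08, "error_rate": 0.06, "mask": [1, 0, 0, 0, 1, 0, 0, 0], "time_s": 5.0},
]
summary = summarise_runs(runs)
```

Reporting the size as a ratio rather than a raw count makes the values comparable across datasets with very different numbers of features.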

As outlined in

Table 13, BMSBWO is clearly superior to BGWO, BWOA, BDBO, and BBWO on the high-dimensional datasets S5-S10 in terms of fitness. For the S1-S4 datasets, BMSBWO is not the sole best, but it still demonstrates good performance. Notably, BBWO attains fitness values on the S1-S4 datasets that are as good as those of BMSBWO. According to the final ARV, the average fitness values obtained by BMSBWO are lower than those of its peers, which shows that BMSBWO performs better than the other algorithms. According to the final rank values in

Table 14, the classification accuracy obtained by BMSBWO also exceeds that of the other algorithms. Except for S1, S4, and S8, the classification error rates of BMSBWO are lower than those of its rivals.

The ultimate goal of feature selection is to improve the prediction accuracy while reducing the dimensionality of the data. Obtaining the optimal feature subset by eliminating features with little or no predictive information and strongly correlated redundant features is the core of this work.

Table 15 shows that BMSBWO obtains the feature subset with the lowest dimensionality on each dataset, indicating that it has a better feature selection capability. Combined with the classification error rates in

Table 14, this shows that BMSBWO consistently selects fewer features while keeping the error rate low. Furthermore, BMSBWO achieves a 0% error rate on S4 while selecting the fewest features.

A comparison of the time consumption results in

Table 16 reveals that BMSBWO ranks fifth among the five binary optimizers, which shows that it generally takes more time than its competitors. This is because the improved strategies, such as EP, SLFSUP, and Golden-SA, add to the time cost, as can also be seen from the computational complexity of MSBWO. Although BMSBWO has a greater time cost, this cost is worthwhile given its overall performance in

Table 13,

Table 14 and

Table 15, where BMSBWO outperforms the other four binary optimizers in handling the feature selection problem. Reducing the computing time of BMSBWO while maintaining its performance remains a direction for our future research.

The best fitness values during the iterative process are presented below as convergence curves to make the experimental results more intuitive and clearer.

Figure 8 shows the convergence curves of the algorithms on the 10 datasets. The Y-axis shows the average fitness value over ten independent executions, and the X-axis indicates the number of iterations. The convergence values of BMSBWO are much smaller than those of the other algorithms on approximately 80% of the benchmark datasets. It can also be observed that BMSBWO is not prone to falling into local optima and demonstrates stronger exploration capability on the S5 dataset. These results stem from the variety of update methods provided by the ICMDOBL, EP, and SLFSUP strategies, which maintain the diversity of the population and give the algorithm more opportunities to explore optimal regions.

Handling the balance between the global exploration and local exploitation search phases is a significant factor that makes BMSBWO superior to the other algorithms. The experimental results indicate that its powerful search capability enables the BMSBWO to perform excellently on a wide range of complex problems.

5. Conclusions and Future Works

In this paper, a novel improved BWO was constructed to improve the diversity of population positions and remedy the exploration–exploitation imbalance of the original BWO. The proposed optimizer, called MSBWO, contains an initialization stage and an updating stage. In the updating stage, the EP, SLFSUP, and Golden-SA strategies were integrated with BWO to improve the rigor and accuracy of the algorithm in local space exploration, enhancing its local search capability and accelerating its convergence.

The algorithm was applied to CEC2005 global optimization problems. The global optimization performance of MSBWO was verified by comparing it with the conventional algorithms DBO, GWO, WOA, and PSO, as well as the SOTA algorithms HLOA, HO, PO, CPO, and BKA. The comprehensive experimental results indicated that MSBWO has excellent exploration abilities, which help the algorithm escape local optima and accurately explore more promising regions in most cases. Thus, it is better than the other optimizers in terms of search ability and convergence speed when tackling global optimization problems.

In addition, we mapped the continuous version of MSBWO into binary space via a mapping function to obtain a feature selection technique. Ten UCI datasets of different dimensions were utilized to benchmark the performance of binary MSBWO (BMSBWO) in feature selection. The experimental results verified that BMSBWO outperforms the other investigated methods with respect to fitness, mean feature selection size, and error rate. This has important implications for reducing the data dimensionality and improving the computing performance.
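The idea of mapping a continuous position into binary space can be illustrated with a short sketch. The mapping function used by BMSBWO is defined elsewhere in the paper and may differ. The code below assumes the widely used S-shaped (sigmoid) transfer function with stochastic thresholding, and the names `sigmoid` and `to_binary` are hypothetical.

```python
import math
import random
from typing import List


def sigmoid(x: float) -> float:
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def to_binary(position: List[float], rng: random.Random) -> List[int]:
    """Map a continuous position to a 0/1 feature mask (assumed S-shaped transfer).

    Each dimension is selected (1) with probability sigmoid(x).
    """
    return [1 if rng.random() < sigmoid(x) else 0 for x in position]


# Illustration only: a hypothetical 4-dimensional position vector.
rng = random.Random(0)
mask = to_binary([50.0, -50.0, 0.3, -1.2], rng)
```

In such a scheme, a bit equal to 1 means that the corresponding feature is kept, so the resulting mask can be passed directly to the classifier-based fitness evaluation.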

Accordingly, we can regard the proposed MSBWO algorithm as a potential global optimization method as well as a promising feature selection technique. However, the integrated improvement strategies, while enhancing the performance of the original BWO, increase the time needed to attain high-quality solutions. Therefore, it is necessary to balance efficiency against accuracy when tackling practical problems. In future studies, a promising direction is to use the proposed method in multi-objective optimization tasks. We can also extend the application of this method to more real-life problems in machine learning, medicine, finance, and engineering optimization. Moreover, integrating the novel BWO algorithm with other strategies to build an even better optimizer is a worthwhile endeavour.