1. Introduction

With evolving market demands and increasing competition, industries face growing pressure for shorter product delivery times and higher customization requirements. The manufacturing sector, particularly in processing and assembly-oriented enterprises, increasingly emphasizes producing multiple varieties in small batches, often facilitated by batch flow technology [1].

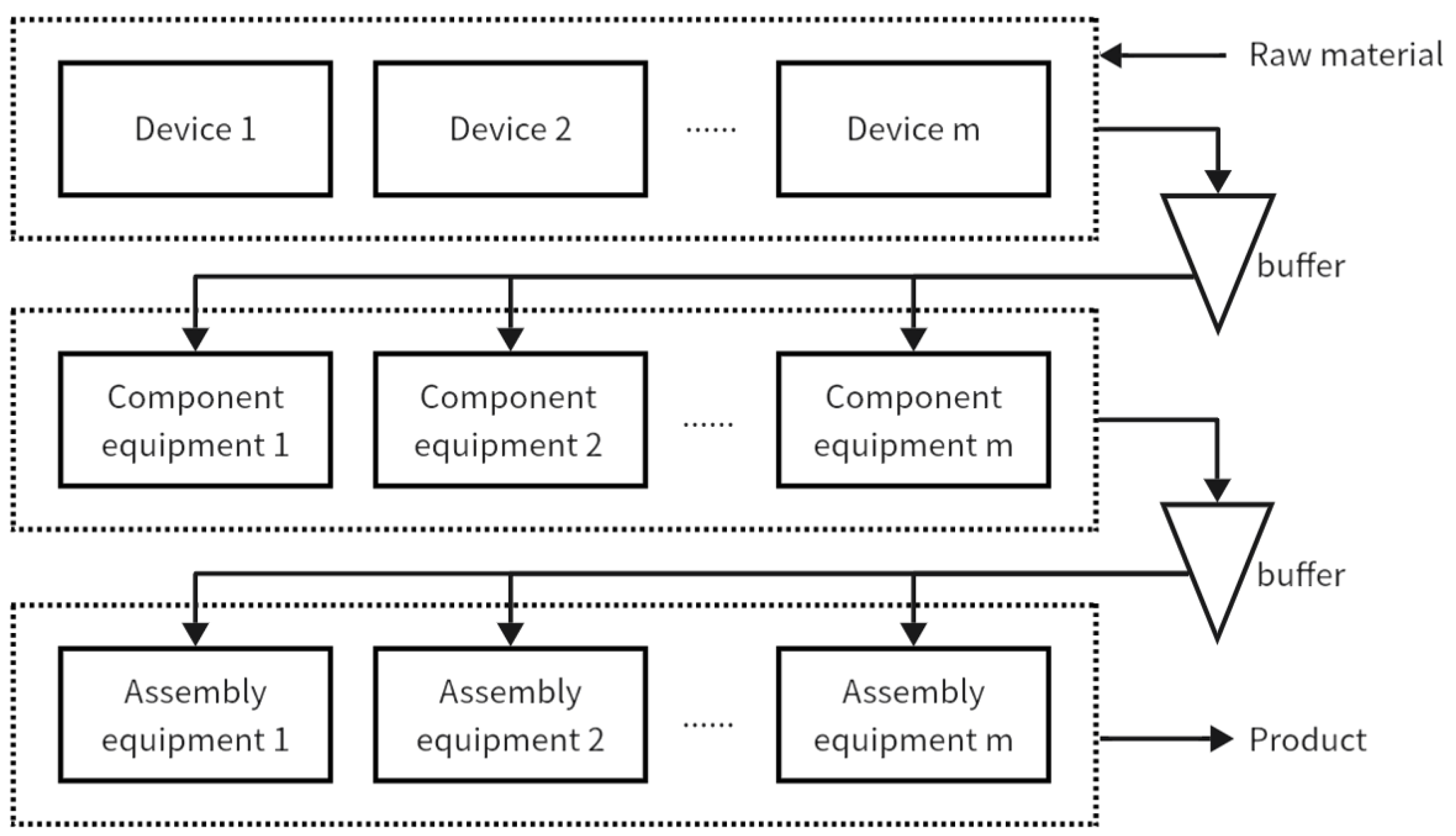

As the diversity and complexity of product types escalate, the need for the coordinated production of parts, components, and products becomes more critical. This complexity often results in extensive buffer areas within workshops for parts turnover and storage, substantially lengthening waiting times throughout the production cycle. Reducing these inefficiencies is vital for boosting productivity per unit area and easing land scarcity in manufacturing settings.

Products such as vacuums, air conditioners, and refrigerators, which exhibit a wide variety in type, generally undergo a three-stage processing and assembly sequence: starting with plastic injection molding, followed by component assembly, and concluding with final product assembly once all parts are ready. These stages are subject to interdependent assembly requirements [2], which can cause delays between stages and lead to the accumulation of work-in-progress in buffer zones, thereby extending production cycles. Therefore, developing efficient scheduling methods is essential for optimizing the production flow of parts and products.

The variability in processing times across different operations and the involvement of multiple machines per process necessitate effective batch division and the strategic allocation of processing equipment to each sub-batch [3]. This study originates from the production processes of multi-variety, small-batch household appliances such as vacuums and aims to explore the three-stage batch flow mixed assembly flow shop scheduling problem, considering sequence-independent setup times and multiple tooling constraints, with the goal of minimizing the maximum completion time. Setup times for switching between injection molding molds and assembly jigs primarily occur during setup and debugging phases, independent of production sequence, thus diminishing the sequence’s impact on setup times. Batch flow scheduling [4] is advantageous, as it reduces waiting times, minimizes buffer area sizes, boosts production efficiency, shortens delivery times, and increases output per unit area, rendering it highly relevant and beneficial in practical settings. Existing research on batch flow scheduling often neglects the consideration of production resources beyond equipment, such as raw materials, manpower, and tooling [5]. Different parts frequently require varied molds, and distinct products need specific assembly jigs. The widespread use of molds across diverse industries, such as machinery and electronics, under the multi-variety, small-batch production mode, underscores the practical significance of scheduling mixed batches under multiple tooling constraints. In terms of workshop scheduling, the maximum completion time metric effectively captures other conventional scheduling metrics [6], such as waiting times for parts processing, making it the focal optimization objective.

Past research has tackled batch flow mixed assembly flow shop scheduling problems [7,8,9,10,11,12], yet most studies have presumed that sub-batches are either unmixable or of equal size, which does not reflect the complexities of actual production settings. This study addresses more intricate scenarios involving varying sub-batch sizes and multi-stage batch flow systems, aiming to minimize the maximum completion time through an effective migrating birds optimization (EMBO) algorithm.

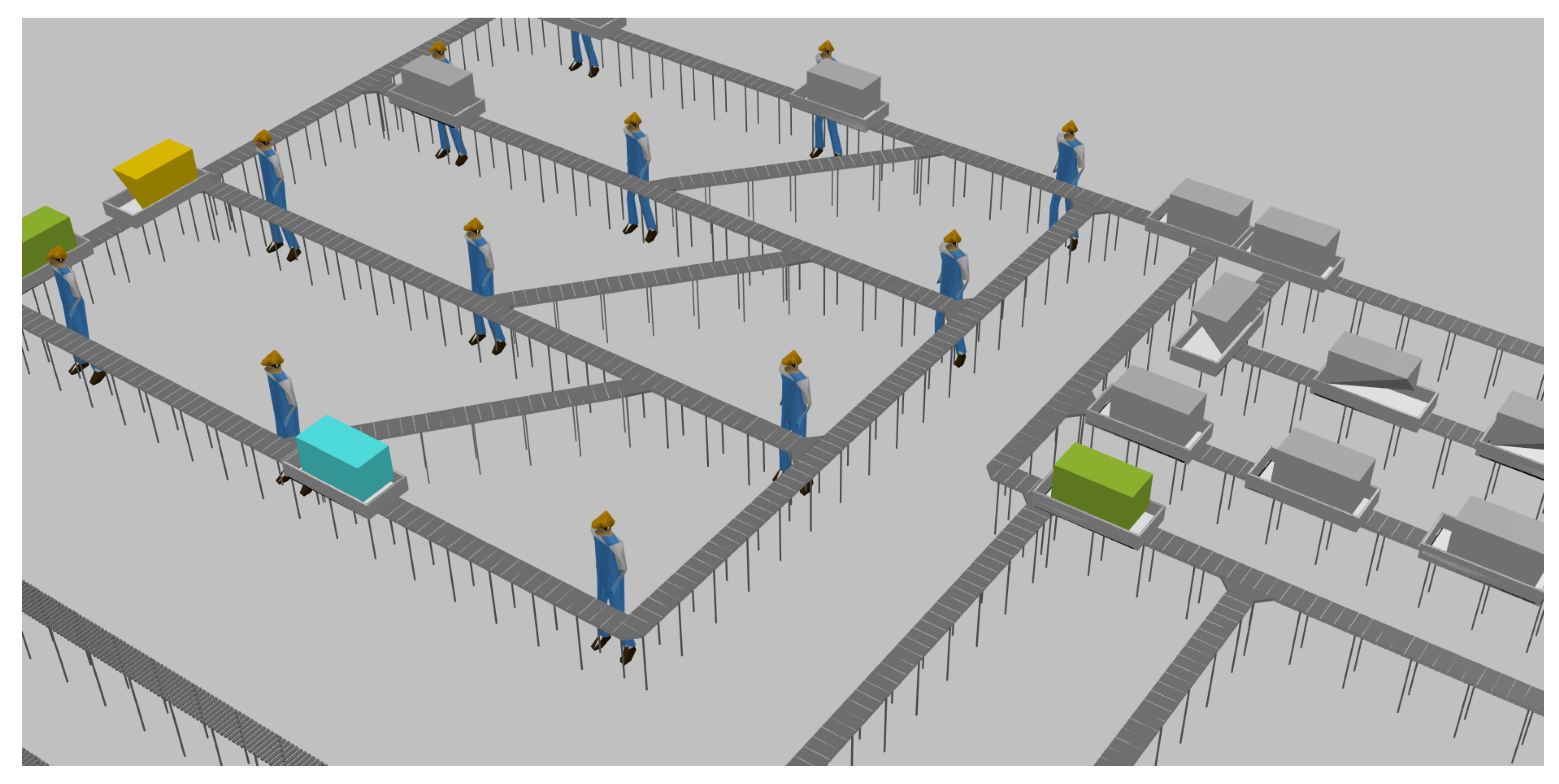

Moreover, virtual simulation offers substantial potential for production control by facilitating the precise management of complex mixed flows via real-time data interaction. This technological framework ensures the seamless integration of the algorithmic solutions proposed in this study with real-world applications [13,14,15].

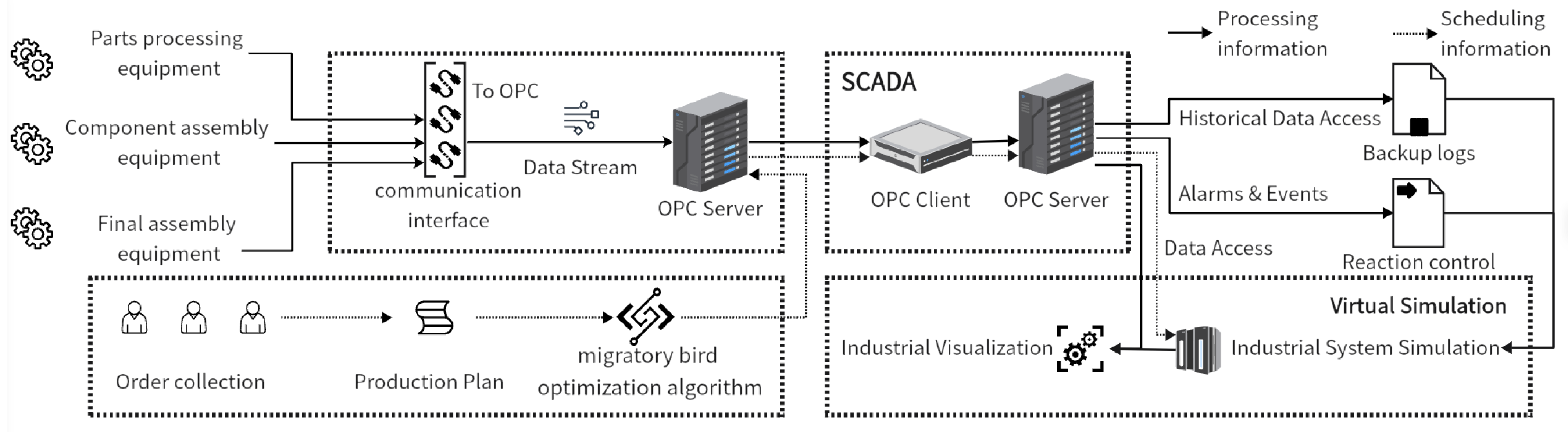

To achieve this goal, the encoding mechanism is structured in two parts. Its features are used to design multiple neighborhood structures, and an adaptive adjustment strategy improves the search efficiency of these structures. Furthermore, to facilitate the verification of the algorithm’s feasibility and to make its application more visual, the algorithm is embedded into a simulation system, for which a data interaction method and the relevant interfaces are designed.

The paper is structured as follows. Section 2 introduces the research status in four related directions, with each sub-section focusing on a specific aspect. Section 3 discusses the system development process, with Section 3.1 describing the overall system design and Section 3.2 elaborating on the problem and its mathematical modeling. Section 4 covers the algorithm design, building on previous content: Section 4.1 discusses the batch strategy, Section 4.2 outlines the encoding and decoding methods, and Section 4.3 explains the improvement strategies and overall algorithm flow. Section 5 addresses the encapsulation of the algorithm and problem, detailing the design process of the visual simulation experiment; its sub-sections cover real-world case studies, experimental parameter settings, algorithm testing, and model comparisons. Finally, Section 6 offers the conclusion.

2. Research Background

2.1. Virtual Simulation

As orders become increasingly diversified and complex, the demand for refined control within factories has become more apparent. Virtual simulation technology has emerged as a critical solution to meet this demand. Technologies such as digital twins and Cyber–Physical Systems (CPSs) are integral components of this approach [16].

Virtual simulation, as a type of virtual technology, fundamentally represents the actual operation of factories through digital means. The demand for digital twins in industrial production is escalating, driven by their ability to enhance data analysis, regression, and prediction techniques, as well as control processing equipment via information feedback [17]. With advancements in protocols and standards such as OPC UA (Open Platform Communications Unified Architecture), coupled with a growing range of communication technologies, factory digitalization is becoming increasingly achievable [18,19]. Since being introduced in 2012, digital twins have captured significant academic and industrial interest [14]. A robust body of literature has evolved, focusing on developing more reliable and secure digital twin systems and presenting a variety of mature methodologies [17,20,21]. Furthermore, the application of sophisticated algorithms within digital twins and the Industrial Internet of Things (IIoT) has shown considerable promise. Notably, an innovative alternating optimization algorithm has been designed to address mixed-integer non-convex optimization problems in digital twins, aiming to minimize the total task completion delay for all IIoT devices [13]. The adoption of advanced computational techniques such as deep learning and federated optimization learning, which facilitate rapid problem-solving and decision-making, is now extending into areas like energy optimization [14,15].

Additionally, virtual simulation is frequently employed in training programs across various industries, offering a more cost-effective approach. This includes applications in the precast concrete industry [22], training for medical and nursing students [16,23], and psychological education systems [24], among others. Its wide-ranging utility spans multiple sectors.

2.2. Batch Flow Mixed Flow Workshop Scheduling Problem

The batch flow mixed assembly flow shop scheduling problem has been extensively studied. Zhang et al. [7] analyzed a two-stage mixed assembly flow shop with non-interchangeable sub-batches, proposing heuristic algorithms for scheduling sequences and sub-batch divisions with a single device in the second stage. Defersha et al. [8] employed a parallel genetic algorithm for multi-stage mixed assembly flow shop scheduling with interchangeable sub-batches, maintaining uniformity across all processing batches. Zhang et al. [9] enhanced the migrating birds optimization algorithm to tackle equal-sized sub-batch scheduling issues. Qin et al. [10] introduced a two-stage ant colony algorithm focused on multiple constraints for equal-sized sub-batches, while Zhang Biao [11] developed a multi-objective optimization model for multi-variety, small-batch production in both static and dynamic environments. Aqil et al. [12] applied a discrete wavelet optimization algorithm to address the complexities of non-interchangeable sub-batch scheduling.

Despite the extensive research, most studies have been predicated on assumptions of non-interchangeable or uniformly sized sub-batches. However, real-world production environments often feature scenarios such as multiple devices processing identical workpieces, diverse batch sizes, and varied batching strategies across different stages. These factors introduce greater complexity and uncertainty into batch division, necessitating more sophisticated scheduling solutions. Although exact algorithms can theoretically provide optimal solutions, their practical application is limited by escalating computational demands as the problem scale increases. In response to these challenges, this study tackles a three-stage batch flow mixed assembly flow shop scheduling problem, considering sequence-independent setup times and multiple tooling constraints. It models the problem with the goal of minimizing the maximum completion time and introduces an effective migrating birds optimization (EMBO) algorithm specifically adapted to these complex requirements.

2.3. Bionic Optimization Algorithm

The application of bionic optimization algorithms in industrial production has reached a high level of maturity. However, it is crucial to select the appropriate framework for different problems to achieve faster and more effective solutions. Numerous algorithmic frameworks exist in this field, which can be utilized to address various industrial production challenges.

For instance, in their study, M. Rojas-Santiago et al. employed the Ant Colony Optimization (ACO) algorithm to find initial solutions, which were then optimized using other local heuristic algorithms [25]. G. Rivera et al. used the particle swarm optimization (PSO) algorithm to solve scheduling problems involving parallel machines [26]. In the research conducted by O. Abdolazimi et al., an improved Artificial Bee Colony (ABC) algorithm was applied to tackle a newly proposed problem, solving a mathematical model related to Benders decomposition and Lagrangian relaxation algorithms [27]. G. Deng et al. optimized factory production lines using the migrating birds optimization (MBO) algorithm, which incorporates a diversification mechanism, demonstrating a significant advantage over other heuristic algorithms in addressing this issue [28]. Additionally, C. Liu et al. introduced a hybrid algorithm that combines dynamic principal component analysis with a genetic algorithm (GA) for fault diagnosis in industrial processes [29].

As a result, a wide array of bionic optimization algorithms has been applied in the industrial domain, with most achieving commendable success.

Besides biomimetic optimization algorithms, other computational frameworks have proven to offer distinct advantages. Specifically, deep learning and reinforcement learning are emerging as key approaches in this domain.

Shu Luo et al. proposed a multi-layer Deep Q-Network (DQN) agent, which implies that the model can adapt to more complex and urgent rules. This includes a set of six requirements that conform to the rules [30]. In deep reinforcement learning, Deep Q-Networks (DQN) are widely used. Similar studies include Hua Gong’s research on the Flexible Flow Shop Production Scheduling Problem [31] and Yuandou Wang’s work addressing multi-workflow completion time and user costs [32]. In addition to the classic and universal DQN, Gelegen Che and colleagues proposed the use of deep reinforcement learning to address multi-objective optimization problems encountered in production [33]. This approach also facilitates real-time analysis and flexible balancing between cost and performance.

Though reinforcement learning and deep learning deliver strong performance, they typically demand extensive data and computational resources. This makes them less suited for the present study, as gathering such datasets would involve considerable extra costs. Biomimetic optimization algorithms, in contrast to non-biomimetic ones, can yield satisfactory results with fewer resources, making them advantageous for enterprises.

However, biomimetic optimization algorithms still come with high computational costs. Moreover, the solutions from these heuristic algorithms can be unstable, as they mimic biological behaviors, which may not suit all problems. These methods also often demand significant parameter tuning. Despite these challenges, given the need for high performance in multi-modal, non-linear, and complex spaces, these algorithms are better suited for this study. To overcome these limitations, this study introduces several enhancements, essential for ensuring stable and high-quality outcomes.

2.4. Modeling and Simulation

Utilizing a physical simulation model is essential for verifying the outcomes of algorithms, ensuring their feasibility by considering a broad spectrum of practical factors. The frameworks and systems dedicated to simulation and modeling are robust, incorporating a variety of emerging simulation technologies [34] and adhering to established simulation standards [35,36].

In the industrial sector, these applications are prevalent. With advancements in real-time communication, simulations have become more aligned with the progress of digital twins, where the interoperability of standards plays a critical role [37].

2.5. Research Content

This study focuses on addressing the scheduling challenges in mixed batch flow assembly shops. To tackle this, we first introduce a visual simulation framework for the assembly shop in Section 3, where the problem is modeled and mathematically defined. In Section 4, building on the mathematical model of the previous section, we design an enhanced MBO algorithm. The algorithm specifically tackles the batch division issue, with tailored strategies designed to address this challenge. The algorithm is then detailed, including its encoding, decoding, evolutionary strategies, and operational processes.

This algorithm is integrated into the visual simulation, with experimental parameters defined accordingly. Comparative simulation experiments with different parameter settings demonstrate the algorithm’s effectiveness in solving the problem, along with the robustness of the simulation framework.

4. Algorithm Design

Given the strongly NP-hard nature of batch flow mixed assembly line scheduling problems, exact algorithms often struggle to solve them within a reasonable timeframe. Intelligent optimization algorithms, tailored to the specific characteristics of these problems, offer a practical approach for rapid solution generation. The migrating birds optimization (MBO) algorithm, a novel metaheuristic with unique sharing and benefiting mechanisms [38], facilitates detailed neighborhood searches and promotes rapid evolution toward optimal solutions. It is known for its efficient local search capabilities and excellent convergence performance.

The MBO algorithm has demonstrated superior solution quality in bipartite matching problems [39] and has achieved notable success in addressing scheduling optimization challenges [38,40,41]. In the context of batch scheduling, which requires the careful consideration of batch partitioning and sequencing of sub-batches, the MBO algorithm employs mutation-based neighborhood searches [41]. Its effective exploration of neighborhoods ensures the optimization of batch vectors without violating constraints, rendering it particularly suitable for addressing these complex scheduling issues.

Building on this foundation, we propose an enhanced migrating birds optimization (EMBO) algorithm to tackle the three-stage batch flow mixed assembly line scheduling problem. First, the approach begins with the determination of batch partitioning strategies, which are influenced by mold quantities and collaborative production across the three stages. Second, coding and decoding mechanisms are developed, reflecting the specific characteristics of the problem and the initial partitioning strategies. Next, neighborhood structures specifically tailored for the dual challenges of batch partitioning and sub-batch sequencing are crafted. Finally, strategies for the adaptive adjustment of neighborhood structures and enhancements through competitive mechanisms are introduced to boost the algorithm’s performance. The detailed workflow of the EMBO algorithm is outlined, providing a comprehensive overview of its implementation.

4.1. Batch Partitioning Strategy

Common batch partitioning strategies [42] include equal-sized batches, where tasks are divided into equally sized production sub-batches, and unequal-sized batches, where sub-batches may vary in size. These strategies can be further categorized into uniform sub-batches, where the batch partitioning scheme remains consistent across all operations of a task, and variable sub-batches, where the partitioning scheme may differ across different operations of a task. Given the collaborative production across three stages, each with varying processing capacities, adopting different batch partitioning strategies for each stage can effectively minimize the maximum completion time. Consequently, an unequal and variable strategy is adopted: the maximum batch size for each stage operation is determined as

.

4.2. Encoding and Decoding Mechanism

A complete batch flow scheme encompasses the number of batches for each sub-batch, the quantity of each sub-batch, the processing sequence of sub-batches, and the processing equipment for each sub-batch. Addressing the dual sub-problems of batch partitioning and sub-batch scheduling, a two-stage encoding mechanism is devised: batch partitioning encoding and arrangement encoding [

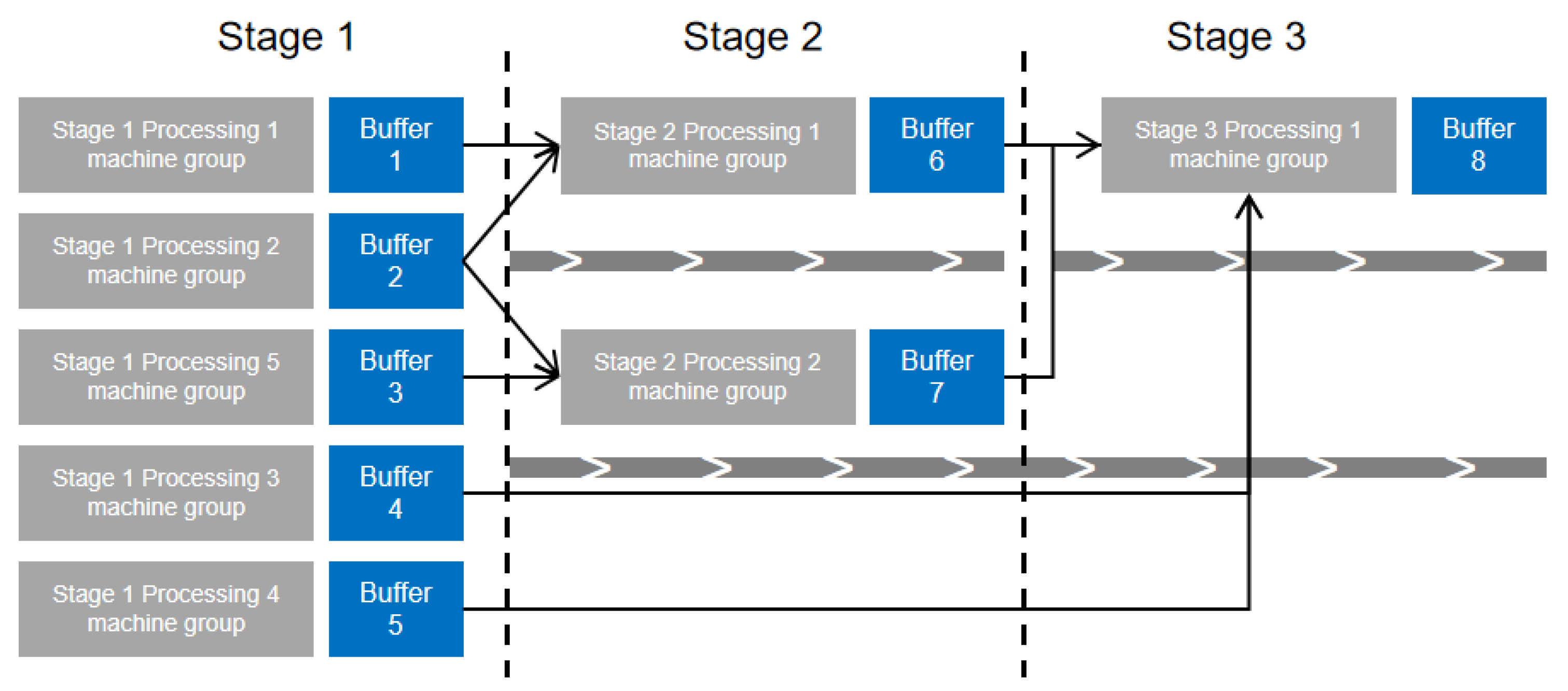

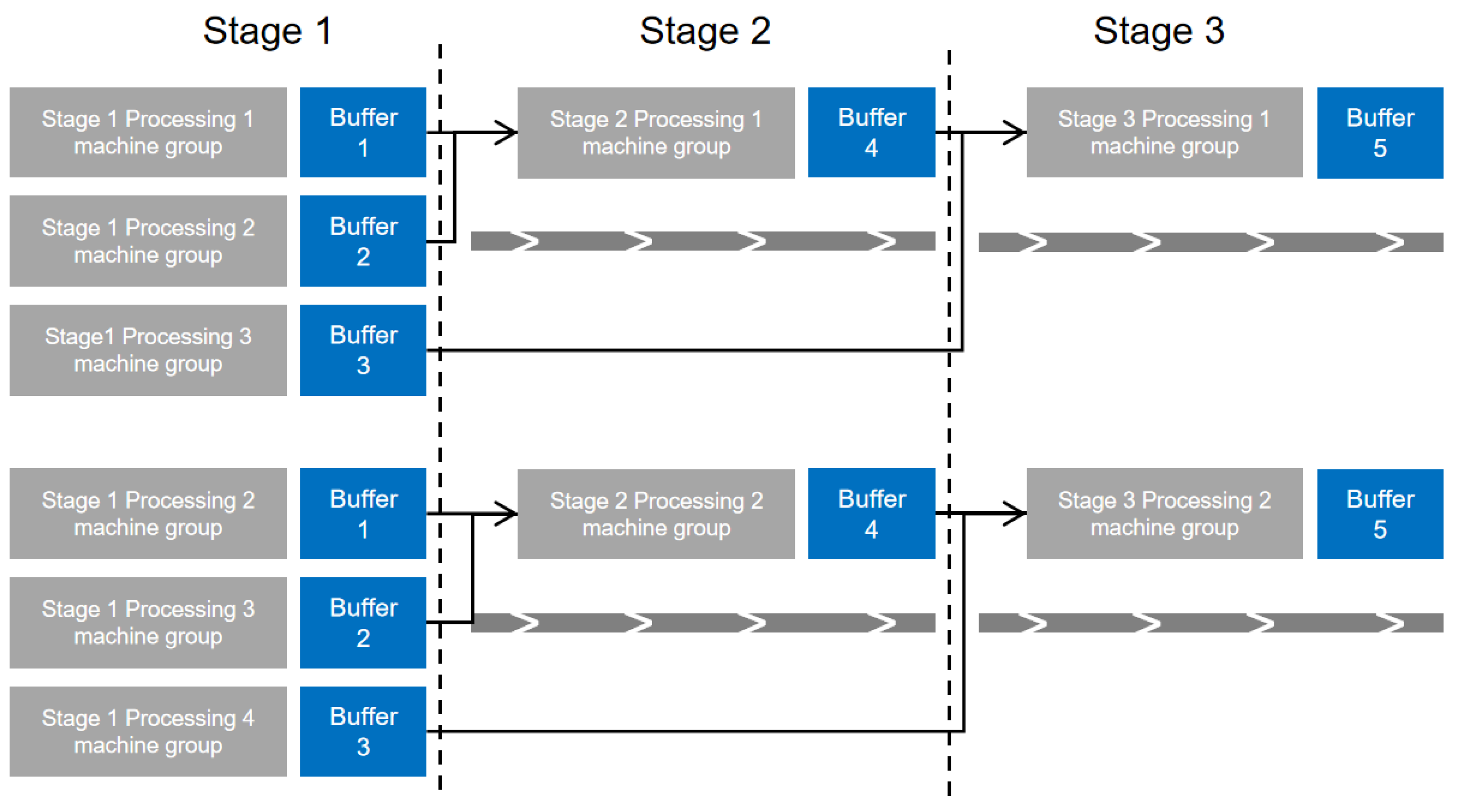

43]. To illustrate the encoding mechanism, we use simple Products 1 and 2 as examples. Different operations within the same stage are represented numerically, with specific operations depicted in

Figure 4. The maximum number of batches for each stage operation is set at two.

4.2.1. Algorithm Encoding

Batch partitioning encoding tackles the number of batches per sub-batch and the quantity of each sub-batch. Utilizing an unequal and variable strategy for batch partitioning necessitates defining the workpiece partitioning schemes for all products across various stages and operations, with varying quantities of workpiece sub-batches, thus escalating the problem’s complexity. To streamline this, identical processing operations for different products are segmented into workpiece sub-batches, facilitating batch partitioning and reducing the computational overhead. For this purpose, we utilize

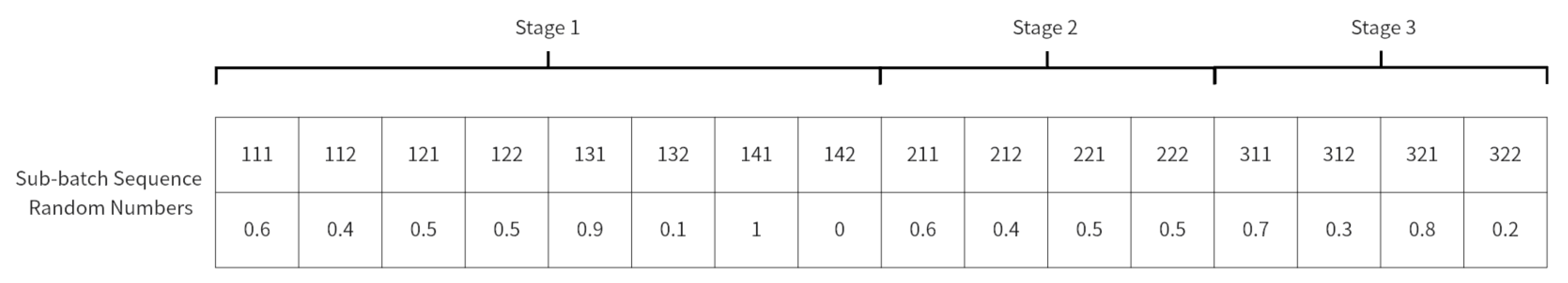

random numbers

with a precision of 0.1 within the range [0, 1.0] to determine the number of batches and the quantity of each sub-batch for operations in all three stages as illustrated in

Figure 5.

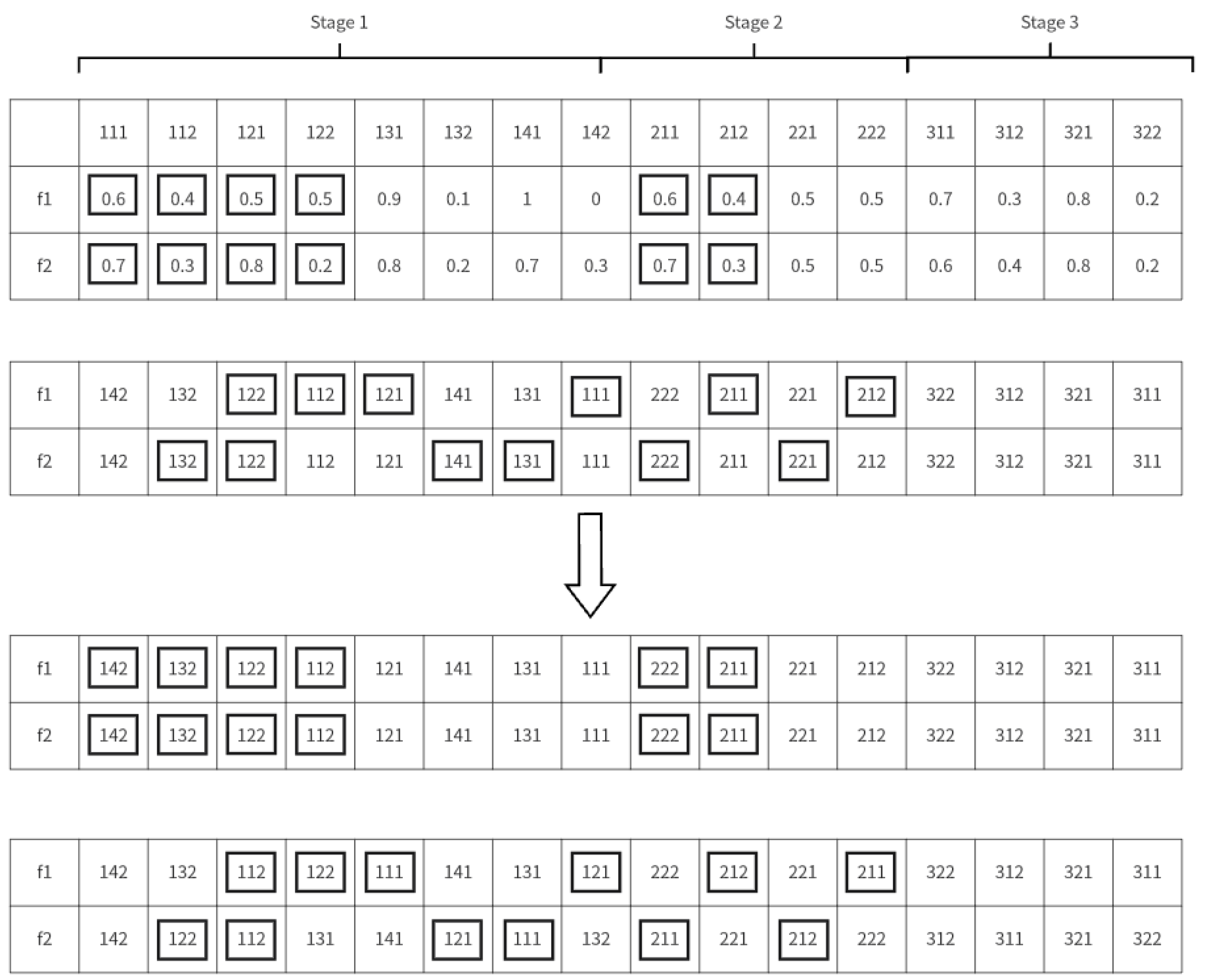

Arrangement encoding focuses on the sub-batch processing sequence. In batch partitioning encoding (Figure 5), sub-batch sequences across different stages are randomly arranged to create the arrangement encoding, addressing the sub-problem of sub-batch scheduling as depicted in Figure 6.

A minimum limit constraint is imposed on sub-batches, requiring that the quantity of each sub-batch either exceeds the minimum production batch size for the corresponding operation or is zero. Equations (10) and (11) are used to determine the quantity of each sub-batch while adhering to this constraint. These equations use the actual total number of batches for stage g, operation i, which is derived from the count of non-zero random numbers; each random number lies in [0, 1.0] and is associated with the k-th sub-batch of stage g, operation i. The result is the quantity of the k-th sub-batch for stage g, operation i.
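As an illustration of how random numbers of this kind can be mapped to sub-batch quantities, the following minimal Python sketch may help. It is not Equations (10) and (11): it assumes a proportional split of the total quantity among the non-zero random numbers, and it assumes that a sub-batch falling below the minimum size is merged away by setting it to zero and re-splitting. The function name `decode_sub_batches`, the treatment of "exceeds" as "at least", and the all-zero fallback are hypothetical choices made only for this example.

```python
def decode_sub_batches(keys, total_qty, min_batch):
    """Map random keys in [0, 1] (precision 0.1) to integer sub-batch sizes.

    Assumed rule, not the paper's equations: split total_qty in proportion
    to the non-zero keys, then zero out sub-batches below min_batch and
    re-split until every sub-batch is either 0 or at least min_batch.
    """
    tenths = [round(k * 10) for k in keys]        # integer arithmetic avoids float drift
    active = [k for k, t in enumerate(tenths) if t > 0]
    if not active:                                # assumed fallback: one undivided batch
        return [total_qty] + [0] * (len(keys) - 1)
    while True:
        weight = sum(tenths[k] for k in active)
        sizes = [0] * len(keys)
        for k in active:
            sizes[k] = total_qty * tenths[k] // weight
        # hand the rounding remainder to the sub-batch with the largest key
        largest = max(active, key=lambda k: tenths[k])
        sizes[largest] += total_qty - sum(sizes)
        too_small = [k for k in active if sizes[k] < min_batch]
        if not too_small or len(active) == 1:
            return sizes
        # drop the smallest offending sub-batch and split again
        active.remove(min(too_small, key=lambda k: sizes[k]))


print(decode_sub_batches([0.9, 0.1, 0.0], total_qty=100, min_batch=20))
```

In this sketch a random number of zero removes a sub-batch outright, which mirrors the statement that the actual number of batches is the count of non-zero random numbers.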

4.2.2. Algorithm Decoding

The decoding mechanism is designed to address the assignment of processing equipment to sub-batches, taking into account the differing capabilities of each piece of equipment. Sub-batches are scheduled based on a “first come, first served” principle. Initially, the “earliest available” rule is applied to determine the most immediate availability of equipment. This is followed by the “capability priority” rule, which prioritizes equipment based on shorter processing times, thereby selecting the most suitable processing equipment from the available set.

By adhering to these rules, alongside considering assembly completion constraints, the start and end times for processing each stage’s sub-batches are established. The ultimate goal of this process is to minimize the maximum completion time, ensuring the efficient and effective use of resources.
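The two dispatching rules can be sketched in Python as follows. This is a simplified single-stage illustration under stated assumptions: setup times, tooling constraints, and assembly completion constraints are ignored, and the names `assign_machine` and `decode_stage` as well as the data layout are hypothetical.

```python
def assign_machine(ready_time, machine_free, proc_time):
    """Choose a machine for one sub-batch.

    ready_time:   time at which the sub-batch becomes available
    machine_free: time at which each machine becomes free
    proc_time:    processing time of this sub-batch on each machine
    """
    # "Earliest available": machines on which the sub-batch can start soonest.
    starts = [max(ready_time, free) for free in machine_free]
    earliest = min(starts)
    candidates = [m for m, s in enumerate(starts) if s == earliest]
    # "Capability priority": among those, prefer the shortest processing time.
    best = min(candidates, key=lambda m: proc_time[m])
    return best, earliest, earliest + proc_time[best]


def decode_stage(sub_batches, n_machines, proc_times):
    """Schedule sub-batches in the given order (first come, first served)."""
    machine_free = [0] * n_machines
    schedule = []
    for name, ready in sub_batches:
        m, start, end = assign_machine(ready, machine_free, proc_times[name])
        machine_free[m] = end
        schedule.append((name, m, start, end))
    makespan = max(end for _, _, _, end in schedule)
    return schedule, makespan


schedule, makespan = decode_stage(
    [("A", 0), ("B", 0), ("C", 0)],
    n_machines=2,
    proc_times={"A": [4, 6], "B": [5, 3], "C": [2, 2]},
)
print(schedule, makespan)
```

In a multi-stage setting, the ready time of a sub-batch would be derived from the completion times of its predecessor sub-batches, which is where the assembly completion constraints would enter.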

4.3. Improvement Strategy

4.3.1. Neighborhood Structure

The MBO algorithm evolves by iterating over a neighborhood solution set, where the design of the neighborhood structure significantly impacts the solution quality and convergence speed. Therefore, developing efficient neighborhood structures is essential. Because the two parts of the encoding address different sub-problems, neighborhood structures are designed for each part separately, together with a structure that addresses both sub-problems concurrently.

- (1) Neighborhood Structure for Batch Division Encoding: This involves the mutation of process batch blocks by randomly selecting a sub-batch of a process type at any stage within the constraint range and varying it randomly, which impacts other sub-batches. The number of sub-batches can mutate to zero, effectively reducing the batch count. The exchange of process batch blocks entails randomly selecting two process batch numbers with maximum batch counts for exchange.

- (2) Neighborhood Structure for Permutation Encoding: In algorithms for scheduling processing sequences, operations commonly used to construct the neighborhood structure include random exchange, forward insertion, backward insertion, pair exchange, optimal insertion, and optimal exchange.

- (3) Neighborhood Structure for Two-Stage Encoding: In the early stages of evolution in the MBO algorithm, birds in the flock are prone to being replaced by shared neighborhood solutions, which can discard superior encoding segments. To address this, new neighborhood solutions are generated by crossing the current birds with neighborhood solutions. Considering assembly constraints, a neighborhood structure based on the uniform crossing of subordinate process nodes is proposed. This design involves randomly selecting a node process, forming a set with its subordinate processes, and exchanging the random numbers of all process sub-batches in this set. Subsequently, the permutation encoding of all process sub-batches in different stages is exchanged in sequence. For instance, taking Stage 2, Process 1 as an example, new encodings z1 and z2 are generated from the f1 and f2 encodings as detailed in Figure 7.
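For the permutation encoding, several of the operators named in item (2) can be sketched in Python as below. This is only an illustration: the exact definitions of forward and backward insertion are assumed here (moving an element toward the front or toward the back of the sequence), and the function names and the `cost` callback are hypothetical.

```python
import random


def swap(seq, i, j):
    """Exchange: swap the sub-batches at positions i and j."""
    out = list(seq)
    out[i], out[j] = out[j], out[i]
    return out


def insert(seq, src, dst):
    """Insertion: remove the element at src and reinsert it at dst.

    Assumed reading: dst < src is a forward insertion, dst > src a backward one.
    """
    out = list(seq)
    out.insert(dst, out.pop(src))
    return out


def best_insertion(seq, src, cost):
    """Optimal insertion: try every position for element src, keep the cheapest."""
    return min((insert(seq, src, d) for d in range(len(seq))), key=cost)


def random_neighbor(seq, rng):
    """Random exchange or random insertion, chosen with equal probability."""
    i, j = rng.sample(range(len(seq)), 2)
    return swap(seq, i, j) if rng.random() < 0.5 else insert(seq, i, j)


print(swap([1, 2, 3, 4], 0, 3), insert([1, 2, 3, 4], 3, 0))
```

The optimal variants evaluate every candidate position with the objective function, so they cost more per move than the random variants, which is consistent with reserving them for the later stages of the search.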

4.3.2. Adaptive Adjustment of Neighborhood Structure

The algorithm incorporates a total of nine neighborhood structures, each exhibiting varying levels of search effectiveness at different stages of the optimization process. For example, in the later stages, the optimal insertion and optimal exchange structures in the permutation encoding tend to outperform the other four structures, indicating a need to increase their application frequency. To address this, an adaptive adjustment strategy is introduced [44], which updates the corresponding weights based on the performance of neighborhood structures in previous iterations. This adjustment controls the usage probability of each structure through a roulette wheel selection method, optimizing the efficiency of the algorithm across different iterations.

Given the unequal number of neighborhood structures corresponding to the two parts of the encoding, an initial weight of one is assigned to each structure in permutation encoding, and a weight of two to the other structures. The roulette wheel method is used to randomly select a neighborhood structure according to its weight to generate neighborhood solutions, and the weights are updated after each iteration. The weight update for structure i depends on the following quantities: the iteration count of the algorithm; the usage frequency of structure i; the accumulated score of structure i, incremented by 1 whenever structure i produces a solution superior to the original; and a coefficient that controls how quickly the weight responds to the effectiveness of structure i.
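The selection and update steps can be sketched in Python as follows. The update rule used here is an assumption: it is the weight update commonly used in adaptive large neighborhood search, in which the new weight blends the old weight with the success ratio (score divided by usage count) through a reaction coefficient. It is offered as a plausible stand-in, not as the formula used in this study, and the names `roulette_select`, `update_weights`, and `reaction` are hypothetical.

```python
import random


def roulette_select(weights, rng):
    """Return an index with probability proportional to its weight."""
    pick = rng.random() * sum(weights)
    running = 0.0
    for i, w in enumerate(weights):
        running += w
        if pick < running:
            return i
    return len(weights) - 1          # guard against floating-point rounding


def update_weights(weights, scores, uses, reaction):
    """Assumed update: w_i <- (1 - reaction) * w_i + reaction * score_i / uses_i.

    Structures that were not used in the last iteration keep their weight.
    """
    updated = []
    for w, s, u in zip(weights, scores, uses):
        updated.append(w if u == 0 else (1 - reaction) * w + reaction * s / u)
    return updated


rng = random.Random(42)
weights = [1.0, 1.0, 2.0]            # e.g. two permutation structures, one other
chosen = roulette_select(weights, rng)
weights = update_weights(weights, scores=[3, 0, 1], uses=[4, 0, 2], reaction=0.5)
print(chosen, weights)
```

A larger reaction coefficient makes the weights follow recent performance more closely, while a smaller one keeps the selection probabilities closer to their initial values. In practice a small lower bound on each weight would keep every structure selectable.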

4.3.3. Competition Mechanism

In the MBO algorithm, after a predetermined number of rounds, the following birds replace the leading birds. If superior solutions are positioned further back in the queue, they may take longer to exert their influence, potentially impeding the optimization efficiency. Furthermore, a lack of interaction between the adjacent queues can decrease the population diversity. To mitigate these issues, intra-population competition is introduced [41] after bird flocking concludes. This strategy helps maintain population diversity and enhances the algorithm optimization efficiency. The specific steps are as follows:

- (1) Randomly select a pair of individuals from the following birds and compare their fitness.

- (2) If the fitness of the bird positioned ahead in the pair is lower, swap their positions; otherwise, leave them unchanged.
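The two steps above can be sketched in Python as follows. The sketch assumes that higher fitness is better (for a makespan objective, the negative makespan can serve as fitness) and that "leading" refers to the bird of the pair that is nearer the front of the queue. The function name `compete` and its arguments are hypothetical.

```python
import random


def compete(followers, fitness, rounds, rng):
    """Pairwise competition among following birds, ordered front to back.

    Assumption: higher fitness is better, so a better bird further back
    is moved ahead of a worse bird nearer the front.
    """
    birds = list(followers)
    for _ in range(rounds):
        # Step (1): pick a random pair; i is the position nearer the front.
        i, j = sorted(rng.sample(range(len(birds)), 2))
        # Step (2): swap if the bird ahead has the lower fitness.
        if fitness(birds[i]) < fitness(birds[j]):
            birds[i], birds[j] = birds[j], birds[i]
    return birds


# Each "bird" is represented here only by its makespan; lower is better.
queue = compete([9, 5, 7, 3], fitness=lambda m: -m, rounds=3, rng=random.Random(1))
print(queue)
```

Because only randomly chosen pairs are compared, the queue is not fully sorted, so good solutions move forward faster while some diversity in the ordering is retained.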

4.4. EMBO Process

To facilitate the description of the Enhanced Migrating Birds Optimization (EMBO) algorithm, the following parameters are defined: N is the number of individuals in the bird flock, a is the number of neighborhood solutions generated by each individual, b is the number of neighborhood solutions transferred to the next individual, and Temp is the queue from which the leading birds replace following birds. Two further parameters set the number of rounds of migration and the number of competitions. The detailed algorithmic flow of EMBO is illustrated in Figure 8. The diagram uses two types of lines: dashed lines indicate operations on data or solutions, while solid lines illustrate the algorithm’s flow.
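To make the roles of N, a, and b concrete, the following Python sketch shows a basic MBO loop on a toy problem. It is a deliberately simplified, single-queue version of generic MBO and not the EMBO flow of Figure 8: the V-shaped two-sided formation, the adaptive neighborhood selection, and the competition mechanism are omitted, and the names `mbo`, `swap_neighbor`, `tours`, and `max_iter` are hypothetical.

```python
import random


def swap_neighbor(seq, rng):
    """Hypothetical neighborhood move: exchange two random positions."""
    i, j = rng.sample(range(len(seq)), 2)
    out = list(seq)
    out[i], out[j] = out[j], out[i]
    return out


def mbo(initial, cost, neighbor, a, b, tours, max_iter, rng):
    """Simplified single-queue MBO; requires a > b >= 0.

    initial: list of N starting solutions; a: neighbors generated per bird;
    b: neighbors passed to the next bird; tours: rounds before the leader changes.
    """
    flock = sorted(initial, key=cost)          # flock[0] acts as the leader
    best = flock[0]
    for _ in range(max_iter):
        for _ in range(tours):
            shared = []                        # solutions handed down the queue
            for idx, bird in enumerate(flock):
                own = a if idx == 0 else a - b
                pool = [neighbor(bird, rng) for _ in range(own)] + shared
                pool.sort(key=cost)
                if cost(pool[0]) < cost(bird):
                    flock[idx] = pool[0]       # accept only improving neighbors
                shared = pool[1:1 + b]         # pass the next-best ones backward
            best = min(flock + [best], key=cost)
        flock.append(flock.pop(0))             # leader retires to the tail
    return best


rng = random.Random(0)
start = [rng.sample(range(6), 6) for _ in range(5)]            # N = 5 birds
misplaced = lambda s: sum(1 for i, v in enumerate(s) if i != v)
print(mbo(start, misplaced, swap_neighbor, a=3, b=1, tours=2, max_iter=20, rng=rng))
```

In an EMBO-style implementation, `neighbor` would be replaced by a neighborhood structure drawn through the adaptive roulette wheel, and a competition step would run on the followers before the leader is replaced.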

6. Conclusions

For the three-stage mixed-model assembly flow shop scheduling problem, this study takes the perspective of bionic optimization algorithms, simulates the behavior of migratory birds, and proposes an EMBO algorithm tailored to this problem. The algorithm is integrated within a virtual simulation framework to enable the real-time monitoring and control of production processes, and a comprehensive OPC-based communication system has been developed to support the virtual simulation model.

The research involves a detailed investigation into the three-stage mixed-model assembly flow shop scheduling problem, leading to the establishment of a mathematical model that explores batch partitioning strategies within this context. The EMBO algorithm features a two-phase encoding approach based on the batch partitioning and permutation sequence sub-problems. Additionally, a neighborhood structure that simultaneously optimizes these two sub-problems and an adaptive adjustment strategy for multiple neighborhood structures are proposed.

Comparative analyses between non-equal-sized variable batching strategies and equal-sized variable batching strategies demonstrate the superior effectiveness of the former in the context of mixed-model assembly flow shop scheduling. Extensive random repeated experiments on cases of various scales and comparisons with other intelligent algorithms for batch scheduling problems confirm the effectiveness and robustness of the EMBO algorithm in addressing the mixed-model assembly flow shop scheduling problem with non-equal-sized variable batching.

The primary optimization objective of this study is to minimize the maximum completion time; multi-objective production scheduling, including meeting delivery deadlines, minimizing production costs, and reducing total flow time, is not addressed. In practice, these objectives are crucial and warrant further exploration in batch flow scheduling. Future research will expand the scope to include them, to better align with practical production scenarios and to further the investigation into batch scheduling strategies.