3.1. Neck Mechanism

The human neck connects the head to the torso and allows a wide range of flexible movements. Its skeletal structure is the cervical spine, with seven vertebrae (C1 to C7), of which C1 (atlas) and C2 (axis) have a special structure that enables head rotation [23]. The atlanto-occipital joint connects the skull to C1 and allows head nodding, while the atlantoaxial joint connects C1 and C2 and allows head rotation. In short, the neck has three degrees of freedom, corresponding to three angular movements: nodding (flexion/extension), head tilt (lateral bending), and head rotation. To design a humanoid robot neck, these degrees of freedom and motions need to be replicated. This is done with mechanical joints that simulate the cervical vertebrae, driven by motors, with sensors installed to monitor joint position and impact force. Light and durable materials such as aluminum or other light alloys are used so that the robot neck is strong enough to support the head yet light enough to move easily. In this way, the robot neck can accurately reproduce the functions and movements of the human neck, giving the robot natural and flexible motion. Accordingly, to reproduce these movements easily and accurately, the working-space parameters of the human neck were surveyed and used to select the motors that reproduce the movements, as shown in Table 4.

Lateral bending does not contribute directly to the expression of core emotions such as happiness, sadness, fear, and anger. These fundamental emotions are expressed primarily through facial muscle movements involving the brows, eyes, mouth, and cheeks, and lateral bending is typically not required to express them clearly. This degree of freedom is therefore excluded from this study to simplify the neck’s load-bearing system. A robot neck with two degrees of freedom, head nodding (flexion/extension) and head rotation, is proposed to balance simplicity against basic expressive capability. Limiting the design to these two degrees of freedom reduces the complexity of the mechanical and control systems, and thereby cost and development time. Fewer joints and moving parts also reduce the likelihood of failures, increase durability, and lower the overall weight of the neck, making it easier to integrate into the complete robot. Nodding expresses agreement, concentration, or sadness, while head rotation conveys looking around, paying attention, or showing interest in a specific direction; together they are enough to reproduce many basic expressions and help the robot interact effectively in many situations.

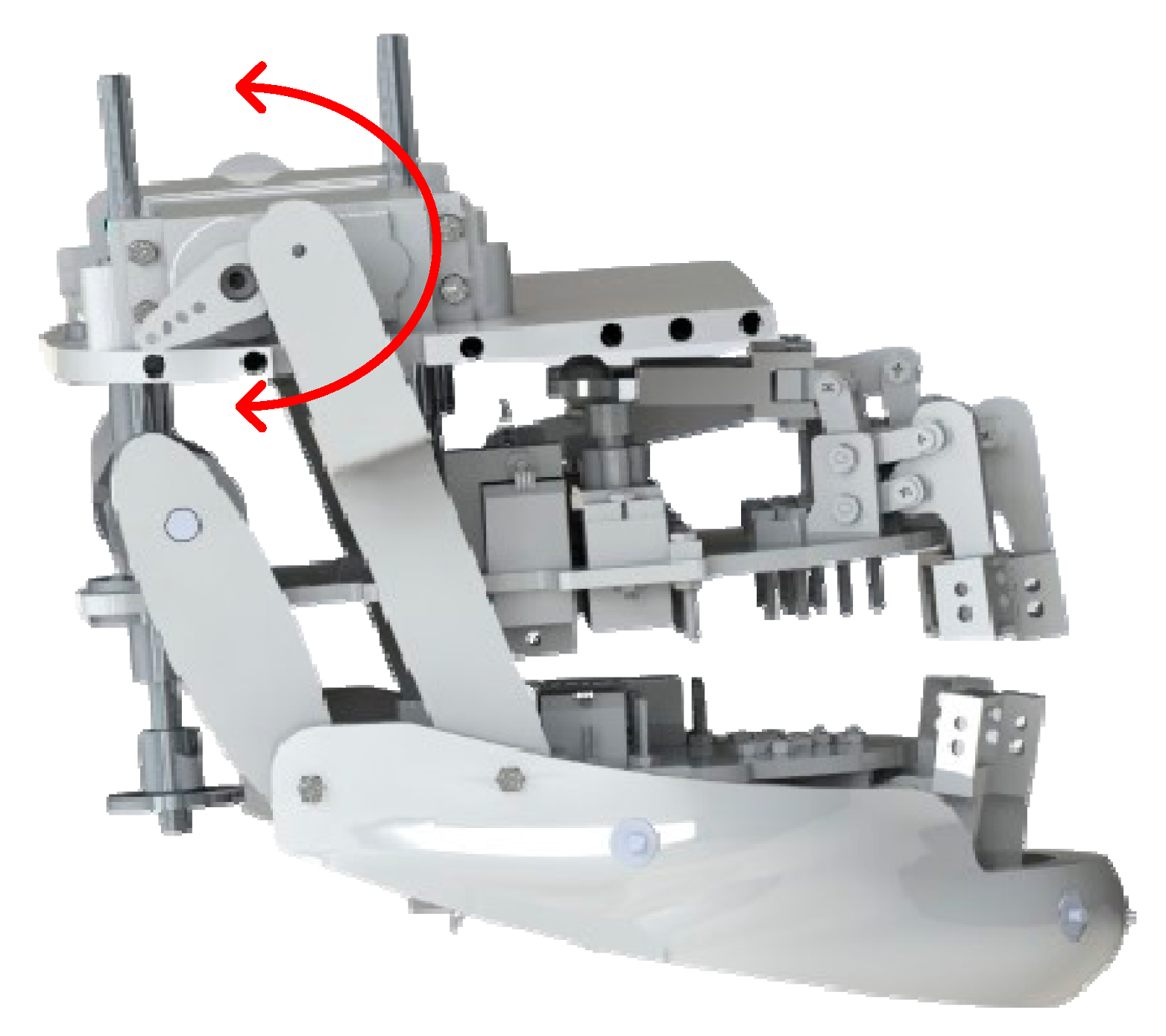

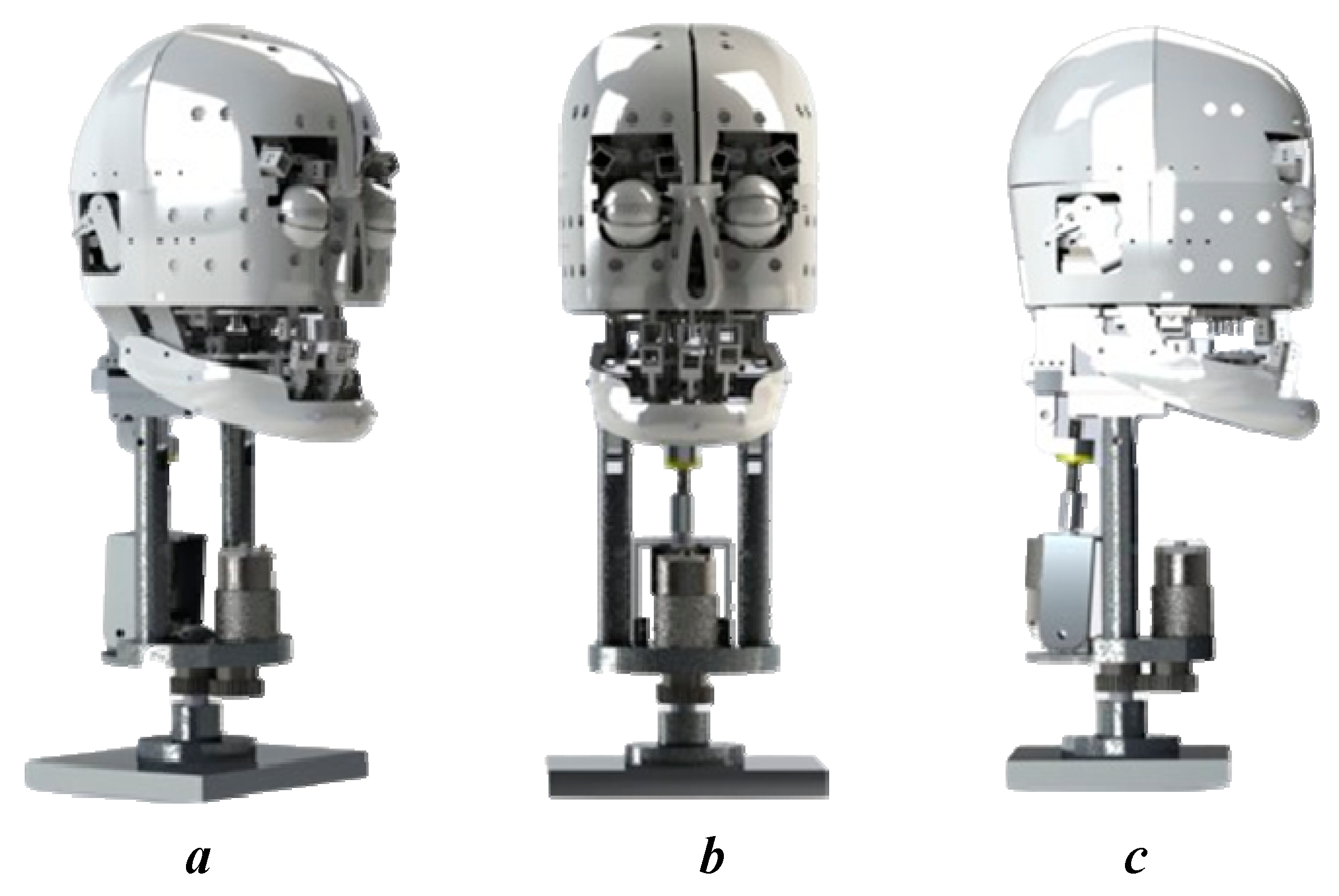

The robot neck has two main degrees of freedom, yaw and pitch, driven by a gear mechanism and a lead screw, respectively. The gear mechanism produces head rotation (yaw). Gear 1 is mounted directly on the motor shaft and turns with it. Gear 2 is locked to the central shaft and meshes with gear 1. Because gear 2 cannot rotate, the torque that the motor applies through gear 1 makes gear 1, and with it the robot’s head, travel around gear 2, so the head rotates left and right about the central axis, mimicking a human head looking around. The pitch motion is produced by the lead screw mechanism: motor 2 is connected to the lead screw shaft through a shaft coupling, and the lead screw converts the rotation of the motor into translation. The screw is mounted on a flat platform through a rocking mechanism, which pushes the platform up or down as the screw advances. Because the platform is designed like a seesaw, the head tilts forward or backward, so the robot’s head can nod up and down like a human head.
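The lead-screw stage can be made concrete with a short worked example. The Python sketch below assumes a single-start screw whose nut advances one lead per motor revolution, and a seesaw platform pushed at a lever arm L from its pivot so that sin θ2 = s/L, where s is the nut travel; the lead, the lever arm, and the function names are hypothetical illustration values, not parameters of the actual design.

```python
import math

def nut_travel(phi2: float, lead_mm: float) -> float:
    """Axial travel (mm) of the lead-screw nut for a motor-2 rotation phi2 (rad).

    One full motor turn (2*pi rad) advances a single-start screw's nut by one lead.
    """
    return lead_mm * phi2 / (2.0 * math.pi)

def platform_pitch(travel_mm: float, arm_mm: float) -> float:
    """Pitch angle (rad) of a seesaw platform pushed at arm_mm from its pivot.

    Assumption: the push point follows the nut vertically, so sin(theta2) = travel / arm.
    """
    ratio = travel_mm / arm_mm
    if abs(ratio) > 1.0:
        raise ValueError("nut travel exceeds the lever arm; pose is unreachable")
    return math.asin(ratio)

# Hypothetical values: 2 mm lead, 40 mm lever arm, motor turned five revolutions.
s = nut_travel(5 * 2.0 * math.pi, lead_mm=2.0)
theta2 = platform_pitch(s, arm_mm=40.0)
print(f"nut travel = {s:.1f} mm, head pitch = {math.degrees(theta2):.1f} deg")
# prints: nut travel = 10.0 mm, head pitch = 14.5 deg
```

For small angles, asin(s/L) is close to s/L, which is why nut travel proportional to motor rotation gives an approximately linear pitch response.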

The robot neck integrates these two mechanisms to create a flexible and realistic movement system. The rotation and nodding mechanisms operate independently: the former turns the robot’s head left or right, and the latter moves it up or down. Operated together, they allow the head to perform more complex actions; for example, the robot can turn its head to the left while nodding, similar to a person tilting the head in curiosity, or bowing and then turning to look around. This combination not only enhances the robot’s expressive capability but also makes its communication and interaction with the surrounding environment more effective. The mechanism has two degrees of freedom, with the center of gravity placed at the middle of the shaft to reduce the load on the motors, as shown in Figure 2a. The movements of the neck are shown in Figure 2b, which depicts the kinematic scheme of the robot neck with the two angles controlled by the two motors. In this diagram, the z-axis is the vertical axis, and the yaw angle θ1 is the rotation about the z-axis. Changing θ1 rotates the head about the vertical axis, turning it from left to right and vice versa so that the robot can change its horizontal viewing direction during interaction. Similarly, the x-axis is the horizontal axis, and the pitch angle θ2 is the rotation about the x-axis. Changing θ2 rotates the head about the horizontal axis, adjusting the robot’s viewing direction vertically. The yaw angle is calculated using Equation (1):

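$$\theta_1 = \frac{k_1}{k_2}\,\varphi_1 \tag{1}$$

where $k_1$ and $k_2$ are the radii of gears $Z_1$ and $Z_2$, as indicated in Figure 2b, and $\varphi_1$ is the rotation angle of motor 1. The pitch angle $\theta_2$ is controlled by motor 2 and is given by Equation (2):

$$\theta_2 = K\,\varphi_2 \tag{2}$$

where $K$ is the transfer factor and $\varphi_2$ is the rotation angle of motor 2.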
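Equations (1) and (2) are linear, so they are easy to invert when a controller must turn a desired head pose into motor commands. The Python sketch below does that and also interpolates yaw and pitch together so that both joints start and finish at the same time, which is one simple way to realize the coordinated turn-while-nodding motion described for Figure 2. All numeric values, the joint limits, and the names `NeckParams`, `forward`, `inverse`, and `coordinated_move` are hypothetical. As an illustration only: if the pitch stage is modeled as a lead screw of lead p pushing a seesaw at lever arm L, the small-angle approximation θ2 ≈ s/L gives K ≈ p/(2πL).

```python
import math
from dataclasses import dataclass

@dataclass
class NeckParams:
    k1: float           # radius of gear Z1 on the motor-1 shaft (hypothetical value)
    k2: float           # radius of the fixed gear Z2 (hypothetical value)
    K: float            # pitch transfer factor of Eq. (2) (hypothetical value)
    yaw_limit: float    # allowed |theta1| in rad (hypothetical)
    pitch_limit: float  # allowed |theta2| in rad (hypothetical)

def forward(p: NeckParams, phi1: float, phi2: float) -> tuple[float, float]:
    """Head angles from motor angles: Eq. (1) theta1 = (k1/k2)*phi1, Eq. (2) theta2 = K*phi2."""
    return (p.k1 / p.k2) * phi1, p.K * phi2

def inverse(p: NeckParams, theta1: float, theta2: float) -> tuple[float, float]:
    """Motor angles for a desired head pose, after clamping the pose to the joint limits."""
    theta1 = max(-p.yaw_limit, min(p.yaw_limit, theta1))
    theta2 = max(-p.pitch_limit, min(p.pitch_limit, theta2))
    return theta1 * p.k2 / p.k1, theta2 / p.K

def coordinated_move(p: NeckParams, start: tuple[float, float],
                     goal: tuple[float, float], steps: int) -> list[tuple[float, float]]:
    """Motor-angle waypoints for a straight-line move in (yaw, pitch) space.

    Both angles share the interpolation parameter s, so the two joints
    begin and end their motion together.
    """
    waypoints = []
    for i in range(steps + 1):
        s = i / steps
        theta1 = start[0] + s * (goal[0] - start[0])
        theta2 = start[1] + s * (goal[1] - start[1])
        waypoints.append(inverse(p, theta1, theta2))
    return waypoints

# Hypothetical parameters: 1:4 gear ratio, K = 0.05 rad of pitch per rad of motor rotation.
params = NeckParams(k1=10.0, k2=40.0, K=0.05,
                    yaw_limit=math.radians(60), pitch_limit=math.radians(30))
for phi1, phi2 in coordinated_move(params, (0.0, 0.0), (math.radians(30), math.radians(10)), 4):
    print(f"motor 1: {math.degrees(phi1):7.1f} deg   motor 2: {math.degrees(phi2):7.1f} deg")
```

A real controller would also limit speed and acceleration; the linear interpolation here only guarantees that the two joints move in step.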

3.2. Mouth Mechanism

The human mouth consists of the jaw and the lips. The jaw is the main part of the mouth and plays an important role in chewing [24]. The lips are the outermost part of the mouth and have many functions, including protecting the mouth, keeping it moist, participating in speech, and expressing emotions. The jaw and lips have the greatest influence on emotional expression and social interaction: beyond their anatomical roles, they are powerful tools for expressing emotions, opinions, and mental states. The jaw is moved by muscles such as the masseter and temporalis, which not only enable chewing but also contribute to rich emotional expression. When a person smiles, the jaw widens and lifts, producing a bright, happy smile; conversely, in stressful or angry situations, the jaw tenses and the teeth clench or grind, signaling tension and stress. Despite their small size, the lips play a large role in expressing emotions. When smiling, the lips widen and lift, creating a cheerful expression; when expressing sadness or worry, the lips may narrow and purse, conveying seriousness and insecurity. Accordingly, the working-space parameters of the human jaw and lips were surveyed and used to select the motors that reproduce these movements, as shown in Table 5.

The degrees of freedom of the mouth comprise those of the jaw and those of the lips, which together produce the movements used to express emotions. The human jaw has three degrees of freedom: opening and closing (1 DoF), which lets the lower jaw open and close for talking and chewing; anterior-posterior sliding (1 DoF), which moves the lower jaw forward and backward to aid biting and chewing; and lateral sliding (1 DoF), which moves the lower jaw left and right, as needed for chewing evenly on both sides. The lips have several degrees of freedom, each corresponding to one movement: opening and closing (1 DoF), in which the upper and lower lips separate and close; contraction and expansion (1 DoF), in which the lips contract or expand, changing shape to express different emotions; elevation and depression (2 DoF), in which each lip is raised or lowered independently, producing expressions such as smiling or frowning; and angular motion (2 DoF), in which each corner of the lips moves at its own angle, helping produce complex expressions.

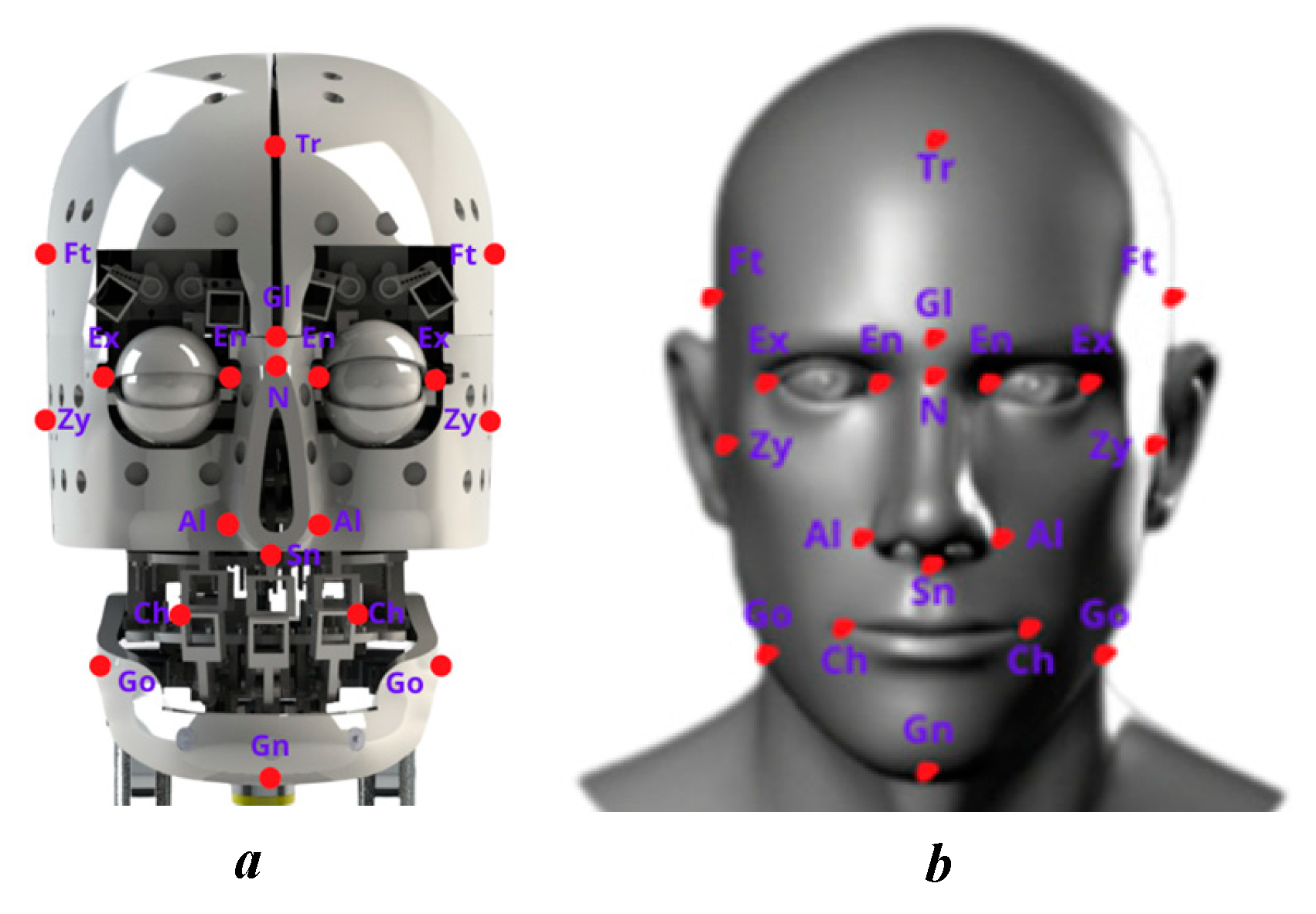

In designing a humanoid robot head that creates realistic emotions, using six lip control points instead of eight is a reasonable and effective choice. Fewer control points simplify the design and reduce cost, weight, and size, while increasing reliability, since a less complex system is less likely to fail, and making maintenance easier. Six control points are still enough to create the most basic human expressions, such as smiling, grimacing, pursed lips, and an open mouth; although eight points allow subtler expressions, six points can produce realistic and natural expressions if controlled properly. Fewer points also allow simpler, more efficient control algorithms, so development effort can focus on optimizing the software for smooth, precise, and natural lip movements. The trade-off is that six points limit the ability to create complex and delicate expressions. Secondary expressions, such as a slight smile or wrinkling of the lips, may not be reproduced as accurately as with eight points, and the two missing points can reduce the fluidity of lip movements, making expressions look stiffer. Some specific expressions, such as a sideways lip curl or subtle movements of the lip corners, may be difficult or impossible to achieve. Nonetheless, the primary expressions can be reproduced completely, and omitting the unnecessary points makes the control and reproduction of movements smoother.

The six landmarks proposed for the lip mechanism, on the upper and lower lips, are shown in Figure 3. The kinematic diagram in Figure 3c outlines the connections between the motors, lever arms, and lip points, illustrating how the movements are coordinated to achieve realistic lip expressions. The upper lip has three marked points, which are the connection nodes between the motor-driven mechanisms and the robot’s lips and allow up-and-down movement; the lower lip is represented by three points driven by a similar mechanism. The lever arms are mounted on joints, drawn as concentric circles in the diagram, that let each arm rotate in the direction driven by its motor. When a servo operates, it rotates and pulls or pushes its lever arm, moving the corresponding control point on the robot’s lips up or down. The highlighted point at the end of each lever arm is attached to the robot’s facial skin, so the lever arm’s movement is transmitted directly to the lips. This mimics the natural movements of the lips and allows the robot to create various facial expressions. The movement parameters and workspaces of the lips are shown in Table 6.
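The lever-arm drive in Figure 3c can be sketched as a small kinematic mapping. The Python below assumes each lever arm rotates about its pivot by the servo angle, starting horizontal, with the skin attachment at the tip, so the control point rises by r·sin φ; the arm length, the point names, and the example "smile" targets are hypothetical and are not taken from Table 6.

```python
import math

# Hypothetical names for the six lip control points (three per lip, as in Figure 3).
LIP_POINTS = ("upper_left", "upper_mid", "upper_right",
              "lower_left", "lower_mid", "lower_right")

def lip_displacement(phi: float, arm_mm: float) -> float:
    """Vertical travel (mm) of a lever-arm tip rotated phi rad from its horizontal neutral pose."""
    return arm_mm * math.sin(phi)

def servo_angle(dy_mm: float, arm_mm: float) -> float:
    """Servo angle (rad) that moves the tip by dy_mm; targets beyond the arm length are clamped."""
    ratio = max(-1.0, min(1.0, dy_mm / arm_mm))
    return math.asin(ratio)

def expression_to_servos(targets_mm: dict[str, float], arm_mm: float) -> dict[str, float]:
    """Convert per-point vertical targets (mm, up positive) into servo angles in degrees.

    Points missing from targets_mm stay at the neutral position (0 mm).
    """
    return {name: math.degrees(servo_angle(targets_mm.get(name, 0.0), arm_mm))
            for name in LIP_POINTS}

# Hypothetical "smile": lip corners raised 4 mm, centre points unchanged, 15 mm lever arms.
smile = {"upper_left": 4.0, "upper_right": 4.0, "lower_left": 4.0, "lower_right": 4.0}
for name, angle in expression_to_servos(smile, arm_mm=15.0).items():
    print(f"{name:12s} {angle:6.1f} deg")
```

Clamping in `servo_angle` keeps an out-of-range target from raising a math domain error; a real design would instead enforce the workspace limits listed in Table 6.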

In the robot’s jaw design, the jaw is driven by two large motors combined with four connecting rods, two main rods and two rear auxiliary rods, as shown in Figure 4. This mechanism allows the jaw to open the mouth at a wide angle, so that talking, laughing, or simulated eating look natural and realistic. The two large motors provide strong, stable force for smooth and precise opening and closing. When the jaw opens, the connecting rods pull on the lower lip so that it moves in sync with the jaw, creating a more natural expression. The two rear auxiliary rods reduce the working space required and support the primary drive, distributing force evenly and maintaining structural stability as the jaw moves; as a result, jaw movements are smoother and more precise, with less vibration, which makes the robot’s expressions more realistic and human-like. The movements of the mouth and jaw landmarks, produced by a crank-slider mechanism integrated with a four-bar linkage, are described by the general formula, Formula (3):

where $l_1$ is the ground (fixed) link, $l_2$ is the crank link, $l_3$ is the coupler link, and $l_4$ is the rocker link.
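To show how a four-bar linkage with these link names turns motor (crank) rotation into rocker motion, the Python sketch below applies the standard circle-intersection position analysis together with the Grashof condition. It is a generic textbook method offered as an illustration, not a reproduction of Formula (3); it ignores the crank-slider stage, and the link lengths are hypothetical.

```python
import math

def is_crank_rocker(l1: float, l2: float, l3: float, l4: float) -> bool:
    """Grashof check: the crank l2 can turn fully if it is the shortest link and s + l <= p + q."""
    links = [l1, l2, l3, l4]
    shortest, longest = min(links), max(links)
    return l2 == shortest and shortest + longest <= sum(links) - shortest - longest

def solve_four_bar(l1: float, l2: float, l3: float, l4: float,
                   theta2: float, branch: int = 1):
    """Position analysis for crank angle theta2 (rad).

    Ground pivots: crank at A = (0, 0), rocker at D = (l1, 0).
    Crank tip B = l2*(cos theta2, sin theta2). The coupler-rocker joint C lies on
    the circle of radius l3 about B and the circle of radius l4 about D.
    branch = +1 or -1 selects one of the two assembly modes.
    Returns (theta4, C), or None if the loop cannot close at this crank angle.
    """
    bx, by = l2 * math.cos(theta2), l2 * math.sin(theta2)
    dx, dy = l1 - bx, -by                     # vector from B to D
    d = math.hypot(dx, dy)
    if d == 0.0 or d > l3 + l4 or d < abs(l3 - l4):
        return None
    a = (l3**2 - l4**2 + d**2) / (2.0 * d)    # distance from B to the chord midpoint
    h = math.sqrt(max(l3**2 - a**2, 0.0))     # half-chord length
    mx, my = bx + a * dx / d, by + a * dy / d
    cx, cy = mx - branch * h * dy / d, my + branch * h * dx / d
    theta4 = math.atan2(cy, cx - l1)          # rocker angle measured at D
    return theta4, (cx, cy)

# Hypothetical link lengths (mm): ground 40, crank 10, coupler 30, rocker 30.
L1, L2, L3, L4 = 40.0, 10.0, 30.0, 30.0
print("crank-rocker:", is_crank_rocker(L1, L2, L3, L4))
swing = [solve_four_bar(L1, L2, L3, L4, math.radians(t))[0] for t in range(0, 360, 10)]
print(f"rocker swing: {math.degrees(min(swing)):.1f} to {math.degrees(max(swing)):.1f} deg")
```

Sweeping the crank angle, as in the last lines, gives the rocker’s range of motion, which is the kind of quantity one would compare against the jaw workspace in Table 5.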

When a motor operates, its rod moves and transmits force to the jaw, opening, closing, and moving it. The motors and connecting rods give the jaw flexible and precise movement, which lets the robot reproduce emotional expressions realistically, naturally, and consistently, making them believable and easy for the user to understand. In summary, the robot expresses emotions through two important mechanisms, the lips and the jaw. The lip mechanism, driven by motors and connecting rods, reproduces expressions such as smiling, frowning, and opening the mouth, while the jaw mechanism, also driven by motors and connecting rods, performs actions such as talking and eating. Together they provide flexibility and precision in the robot’s emotional expression, creating a close and lively communication experience with humans.

3.3. Eye Mechanism

The human eye is one of the most complex and important organs, responsible for vision. It captures light and converts it into nerve signals that the brain can interpret. Eye movement is controlled by six extraocular muscles, which move the eye in a variety of directions. The superior rectus lifts the eye and turns it inward, and the inferior rectus lowers the eye and turns it inward [25]. The medial rectus turns the eye inward, and the lateral rectus turns it outward. The superior oblique turns the eye down and out, and the inferior oblique turns it up and out. These muscles work in harmony to perform complex eye movements such as looking up, looking down, looking to the sides, and adjusting vision. This control system is driven by nerve signals from the brain, allowing the eyes to move quickly and accurately so that we can observe and react flexibly to the surrounding environment.

The human eye has three degrees of freedom (DoF), two of which are the main ones. Horizontal rotation moves the eyes left or right. When the eyes turn to the right, the lateral rectus of the right eye pulls the right eye outward, and the medial rectus of the left eye pulls the left eye inward; when the eyes turn to the left, the lateral rectus of the left eye pulls the left eye outward, and the medial rectus of the right eye pulls the right eye inward. In general, the lateral rectus pulls the eye outward and the medial rectus pulls it inward, and these muscle pairs work in sync, under precise control of nerve signals from the brain, to produce smooth, fast, and accurate horizontal movements. Vertical rotation moves the eyes up and down. The eye is lifted by the superior rectus, which also rotates the eye inward (intorsion) and moves it inward (adduction), together with the inferior oblique, which also rotates the eye outward (extorsion). The eye is lowered by the inferior rectus, which also rotates the eye outward (extorsion) and moves it inward (adduction), together with the superior oblique, which also rotates the eye inward (intorsion). These muscles work in synchrony to produce smooth vertical eye movements.

The third degree of freedom of the human eye is axial rotation (torsion), in which the eye rotates about the visual axis. This movement helps keep the image on the retina stable when the head is tilted or when the eyes adjust to complex viewing angles. The superior oblique rotates the eye inward and lowers it, while the inferior oblique rotates the eye outward and lifts it. Although axial rotation is small and less apparent, it helps keep the retinal image from rotating or tilting, ensuring stable and accurate vision.

The human eyelids have two main degrees of freedom, elevation and depression: the upper eyelid lifts when the eyes open and lowers when they close, while the lower eyelid moves slightly up and down. These movements are controlled by three main muscles. The levator palpebrae superioris lifts the upper eyelid to open the eye and is innervated by cranial nerve III (oculomotor nerve). Müller’s muscle also contributes to upper-eyelid elevation, especially in keeping the eye fully open, and is controlled by the autonomic nervous system. In contrast, the orbicularis oculi, which surrounds the eye, lowers the upper eyelid and closes the eye, controlling blinking and full eye closure; it is innervated by cranial nerve VII (facial nerve). The movements of the eyeballs and eyelids are described in Table 7. The designs were selected so that the workspaces, the structure of the movements, and the external appearance are all human-like.

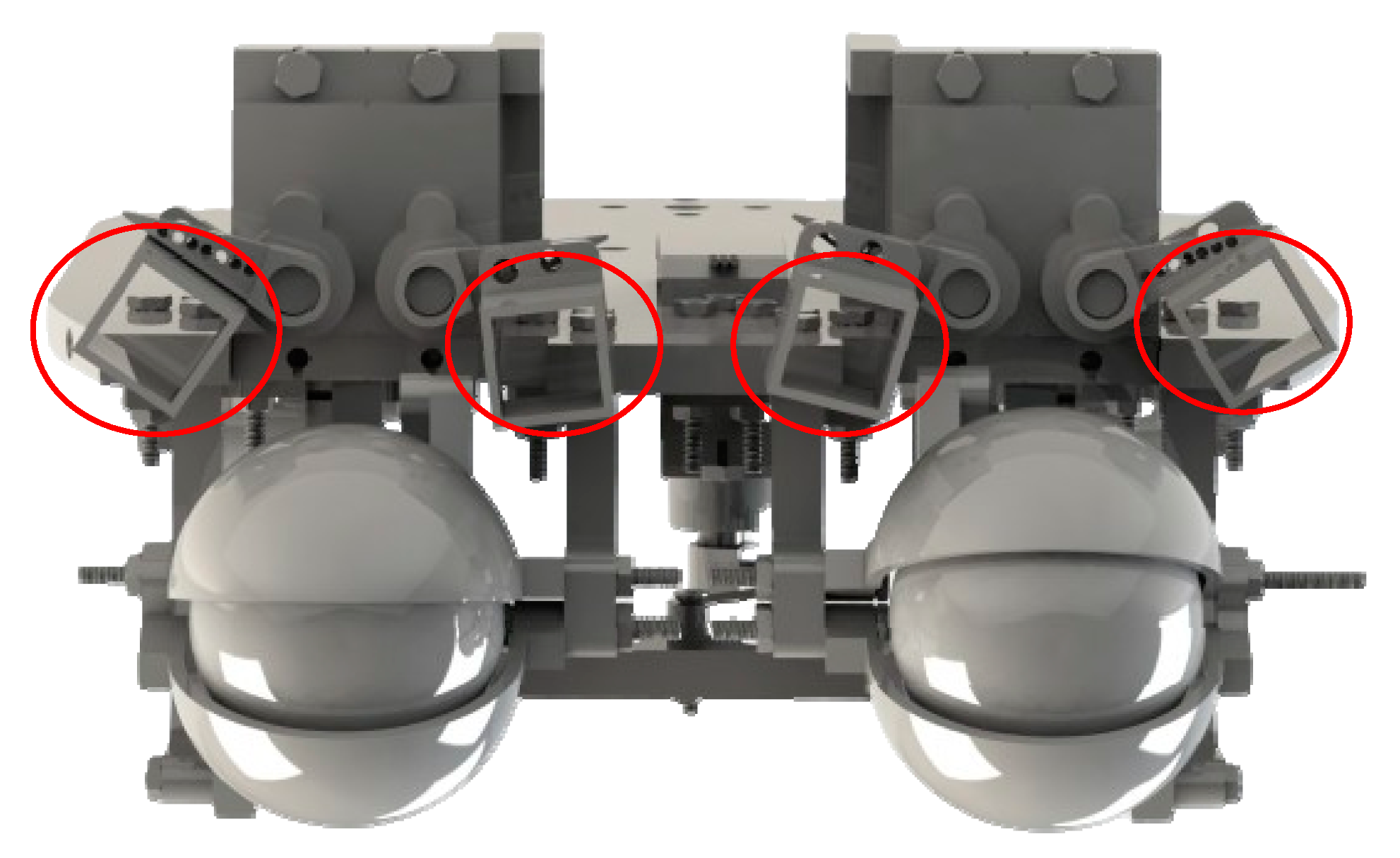

The eye assembly in the robot head is built on a flat, transparent mica plate at the front of the head. This plate accommodates components such as the motors and eye frames; it can be made sturdy and precisely machined for installation while giving the robot’s eyes a soft, natural appearance. Bars arranged vertically and horizontally secure the key components: the eyeballs and eyelids are attached to these rods, creating a stable and precise eye structure, and the motors mounted on them drive horizontal rotation, vertical rotation, and the opening and closing of the eyelids. Motors and connecting rods produce synchronized movements of the eyeballs and eyelids, so the robot can move its eyeballs left, right, up, and down naturally, like human eyes. Because the connecting rods run from the motors to both eyeballs and eyelids, the movements of the left and right eyes are synchronized with each other; this helps the robot adapt to its surroundings and makes its emotional expression consistent and reliable, so that it can interact with humans effectively and engagingly. The mechanism of the eye cluster is shown in Figure 5, with the degrees of freedom annotated by arrows. This structure meets the requirements on shape and workspace limits needed to reproduce human-like emotions. Motor rotation is converted into translational motion for the eyelids and into up-and-down motion of the eyeballs. These movements are therefore defined by Equation (4):

$$d_{xy} = K_e\,\varphi_i \tag{4}$$

where $\varphi_i$ is the angle of the $i$-th motor; $K_e$ is the transfer factor, with a separate coefficient for each movement; and $d_{xy}$ is the displacement along the $x$ or $y$ axis for translational movements, or the rotation angle for the rotational movements of the eyeballs.
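Because Equation (4) is a per-movement linear gain, a controller needs only one coefficient per movement and its inverse. The Python sketch below illustrates this, together with a gaze command that drives both eyes from one motor per axis and a simple blink profile; the coefficient values, the dictionary keys, the one-motor-per-axis layout, and the assumption that the eyelid is fully closed at zero displacement are all hypothetical.

```python
# Hypothetical transfer factors K_e for Eq. (4), d_xy = K_e * phi_i, one per movement.
# Eyelid entries give mm of travel per rad of motor rotation; eyeball entries give
# rad of eye rotation per rad of motor rotation. None of these values come from the design.
KE = {
    "eyeball_yaw": 0.8,
    "eyeball_pitch": 0.6,
    "upper_lid": 6.0,
    "lower_lid": 2.0,
}

def movement(name: str, phi: float) -> float:
    """Eq. (4): displacement (mm) or eyeball angle (rad) produced by motor angle phi (rad)."""
    return KE[name] * phi

def motor_angle(name: str, target: float) -> float:
    """Inverse of Eq. (4): motor angle (rad) needed to reach a target displacement or angle."""
    return target / KE[name]

def gaze_command(yaw: float, pitch: float) -> dict[str, float]:
    """Motor angles for a gaze direction (rad).

    Assumes one motor per axis drives both eyes through the connecting rods,
    so a single command keeps the left and right eyes synchronized.
    """
    return {"eyeball_yaw": motor_angle("eyeball_yaw", yaw),
            "eyeball_pitch": motor_angle("eyeball_pitch", pitch)}

def blink(open_mm: float, steps: int) -> list[float]:
    """Upper-lid motor angles for one blink: open -> closed (0 mm) -> open, linear ramps."""
    closing = [motor_angle("upper_lid", open_mm * (1 - i / steps)) for i in range(steps + 1)]
    return closing + closing[-2::-1]   # reopen by retracing the closing ramp

print(gaze_command(0.2, -0.12))
print([round(a, 3) for a in blink(6.0, 3)])
```

The blink reuses the closing ramp in reverse, so the lid reopens along the same path; a smoother profile could replace the linear ramp without changing Eq. (4).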

Driving horizontal and vertical eye rotation with motors and connecting rods keeps these movements synchronized with the motors, giving precise and flexible control over eye movement. The connecting rods transmit the motion of the motors to the mechanical elements of the eye, so the eye moves precisely and smoothly. In addition, the upper eyelid widens the range of emotional expression: from widening the eyes for emphasis to closing them or blinking, it lets the robot respond flexibly to a wide range of situations and emotions and gives it a friendly, approachable appearance. Overall, the eye and eyelid mechanism produces natural and consistent eye movements, broadening the robot’s emotional expression and making it more engaging, because it can “see” and “react” naturally and realistically.

3.4. Eyebrow Mechanism

Human eyebrows are an important part of the face: they not only protect the eyes but also serve emotional expression and nonverbal communication. They can be raised, lowered, or drawn together to express emotional states such as surprise, anger, sadness, and joy, and eyebrow movements can change the whole look of the face and make an expression clearer. Eyebrows also contribute to the shape and structure of the face; small changes, such as shaping or plucking, can dramatically change its appearance. In nonverbal communication, eyebrows supplement or replace speech, helping others understand the speaker’s emotions and intentions. For example, raised eyebrows can indicate surprise or curiosity, while drawn-together eyebrows can indicate doubt or disagreement. The human eyebrow has one main degree of freedom, the up-and-down movement. The frontalis muscle pulls the eyebrow up, creating an expression of surprise or interest. The corrugator supercilii pulls the eyebrow down and toward the center, creating a frowning or concentrated expression. Similarly, the procerus pulls the middle part of the eyebrow down, often together with the corrugator supercilii, to create an angry or upset expression. Through the coordination of these muscles, the eyebrows can perform a range of complex and diverse movements that contribute substantially to emotional expression and nonverbal communication. Understanding these degrees of freedom and the related muscles is an important basis for designing and programming expressions for humanoid robots. The workspace limits are presented in Table 8.

In the eyebrow design, four motors control four points on the eyebrows, the start and end points of each eyebrow, forming a mechanism that moves the eyebrow area. Each motor rotates its shaft through a set angle, so the attached point moves along a circular arc in the direction determined by the linkage design. Depending on the desired eyebrow movement, the motor turns clockwise or counterclockwise, raising or lowering the eyebrow much like the natural movement of the human eyebrow. To create natural expressions, the motors at the start and end points must be controlled synchronously, so that the whole eyebrow moves up or down consistently. Using the motor’s circular motion to trace the eyebrow’s arc is a reasonable and effective method because the eyebrow’s range of motion is very limited; the approach saves space and keeps the mechanism simple. Moving these landmarks allows the robot to express basic human-like emotions. The proposed structure is shown in Figure 6.
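The arc motion of the eyebrow points can be sketched geometrically. The Python below models each of the four eyebrow points as a point at a fixed radius from its motor shaft, so that turning the shaft moves the point along a circular arc, and drives all four motors with one shared interpolation parameter so the start and end points move synchronously. The pivot positions, radii, neutral angles, motor names, and the "surprise" pose are hypothetical; the mirrored left-side arms show why one side may need to turn clockwise and the other counterclockwise for the same eyebrow movement.

```python
import math
from dataclasses import dataclass

@dataclass
class BrowPoint:
    """One motor-driven eyebrow point moving on a circular arc (hypothetical geometry)."""
    pivot: tuple[float, float]  # motor shaft position (mm)
    radius: float               # distance from the shaft to the eyebrow point (mm)
    neutral: float              # arm angle at the neutral eyebrow pose (rad)

    def position(self, phi: float) -> tuple[float, float]:
        """Point position after the motor turns phi rad (counterclockwise positive) from neutral."""
        angle = self.neutral + phi
        return (self.pivot[0] + self.radius * math.cos(angle),
                self.pivot[1] + self.radius * math.sin(angle))

def synchronized_steps(start: dict[str, float], goal: dict[str, float],
                       steps: int) -> list[dict[str, float]]:
    """Interpolate all motors with one shared parameter so they start and stop together."""
    return [{name: start[name] + (goal[name] - start[name]) * i / steps for name in start}
            for i in range(steps + 1)]

# Hypothetical layout: right-eyebrow arms point right (neutral = 0), so a counterclockwise
# turn raises them; left-eyebrow arms point left (neutral = pi), so the same rise needs a
# clockwise (negative) turn.
points = {
    "right_start": BrowPoint((10.0, 0.0), 8.0, 0.0),
    "right_end": BrowPoint((30.0, 0.0), 8.0, 0.0),
    "left_start": BrowPoint((-10.0, 0.0), 8.0, math.pi),
    "left_end": BrowPoint((-30.0, 0.0), 8.0, math.pi),
}
neutral = {name: 0.0 for name in points}
surprise = {"right_start": 0.3, "right_end": 0.3, "left_start": -0.3, "left_end": -0.3}
for pose in synchronized_steps(neutral, surprise, 3):
    heights = {n: round(points[n].position(phi)[1], 2) for n, phi in pose.items()}
    print(heights)
```

Each printed row shows the four point heights rising together, which is the synchronous start/end motion the design requires.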