Abstract

This research paper develops a novel hybrid approach, called hybrid particle swarm optimization–teaching–learning-based optimization (hPSO-TLBO), which combines two metaheuristic algorithms to solve optimization problems. The main idea behind hPSO-TLBO is to integrate the exploitation ability of PSO with the exploration ability of TLBO. Here, the “exploitation ability of PSO” refers to its ability to manage the local search, that is, to find better solutions near the solutions already obtained and in promising areas of the problem-solving space. The “exploration ability of TLBO” refers to its ability to manage the global search, that is, to prevent the algorithm from getting stuck in inappropriate local optima. The hPSO-TLBO design proceeds in two steps. First, the teacher phase of TLBO is combined with the velocity equation of PSO. Second, the learner phase of TLBO is improved so that each student learns from a selected student whose objective function value is better than its own. The algorithm is presented in detail, together with a comprehensive mathematical model. Its effectiveness is evaluated on a set of benchmark functions of the unimodal, high-dimensional multimodal, and fixed-dimensional multimodal types, as well as on the CEC 2017 benchmark problems. The optimization results show that hPSO-TLBO performs remarkably well on the benchmark functions: it explores and exploits the search space effectively while maintaining a balance between the two throughout the optimization process. Furthermore, the performance of hPSO-TLBO is compared with that of twelve widely recognized metaheuristic algorithms.
The experimental findings show that hPSO-TLBO consistently outperforms the competing algorithms across the various benchmark functions. The successful application of hPSO-TLBO to four engineering design problems highlights its effectiveness in real-world applications.

1. Introduction

Optimization is the process of finding the best solution among all feasible solutions of an optimization problem [1]. From a mathematical point of view, every optimization problem consists of three main parts: decision variables, constraints, and an objective function. The goal of optimization is therefore to determine values of the decision variables that optimize the objective function while satisfying the constraints of the problem [2]. Countless optimization problems in science, engineering, industry, and real-world applications must be solved using appropriate techniques [3].
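For concreteness, the three parts named above can be written in the usual form of a constrained minimization problem. This is a generic textbook formulation; the symbols ($F$, $g_k$, $h_l$, and the bounds $lb_d$, $ub_d$) are chosen here for illustration, and a maximization problem can be handled by minimizing $-F$.

```latex
\begin{aligned}
\min_{X = (x_1, \dots, x_m)} \quad & F(X) \\
\text{subject to} \quad & g_k(X) \le 0, \quad k = 1, \dots, p, \\
& h_l(X) = 0, \quad l = 1, \dots, q, \\
& lb_d \le x_d \le ub_d, \quad d = 1, \dots, m.
\end{aligned}
```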

Metaheuristic algorithms are among the most effective approaches for handling optimization tasks. They can provide suitable solutions to optimization problems without gradient information, relying only on random search in the problem-solving space through random operators and trial-and-error processes [4]. Advantages such as simple concepts, easy implementation, effectiveness on nonlinear, nonconvex, discontinuous, non-differentiable, and NP-hard optimization problems, and effectiveness in discrete and unknown search spaces have made metaheuristic algorithms popular among researchers [5]. The optimization process of a metaheuristic algorithm starts with the random generation of a number of feasible solutions to the problem. These initial solutions are then improved during an iterative process based on the algorithm’s update steps. At the end, the best solution found is presented as the solution to the problem [6]. Because of the random nature of the search, metaheuristic algorithms do not guarantee that the global optimum will be reached. However, since the solutions they provide are usually close to the global optimum, these solutions are acceptable as quasi-optimal solutions [7].
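The generic workflow described above (random initialization, iterative improvement, and returning the best solution found) can be sketched in Python as follows. This is a minimal illustration, not any specific published algorithm: the Gaussian perturbation used as the update step, the function name `generic_metaheuristic`, and all parameter values are hypothetical choices made only to show the structure of the loop.

```python
import numpy as np


def generic_metaheuristic(objective, lb, ub, pop_size=20, max_iter=100, step=0.1, seed=0):
    """Minimal population-based loop: random init, iterative improvement, return best.

    The Gaussian perturbation below is a placeholder update step, not any specific
    published algorithm.
    """
    rng = np.random.default_rng(seed)
    lb = np.asarray(lb, dtype=float)
    ub = np.asarray(ub, dtype=float)
    # Random generation of initial candidate solutions inside the bounds.
    pop = lb + rng.random((pop_size, lb.size)) * (ub - lb)
    fit = np.array([objective(x) for x in pop])
    for _ in range(max_iter):
        # Propose new positions with random operators (placeholder update rule).
        trial = pop + step * (ub - lb) * rng.standard_normal(pop.shape)
        trial = np.clip(trial, lb, ub)
        trial_fit = np.array([objective(x) for x in trial])
        # Trial and error: keep a proposal only if it improves the objective.
        better = trial_fit < fit
        pop[better] = trial[better]
        fit[better] = trial_fit[better]
    # The best solution found is quasi-optimal; global optimality is not guaranteed.
    best = int(np.argmin(fit))
    return pop[best], float(fit[best])
```

Replacing the perturbation line with a specific algorithm’s update rule turns this skeleton into that algorithm; the random initialization and the final "return the best" step stay the same.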

To perform an effective search, metaheuristic algorithms must be able to scan the problem-solving space at both the global and local levels. Global search, or exploration, is the ability of the algorithm to search the whole search space so as to avoid getting stuck in locally optimal areas and to accurately identify the main optimal area. Local search, or exploitation, is the ability of the algorithm to search accurately and meticulously around the discovered solutions and promising areas in order to reach solutions close to the global optimum. Besides its exploration and exploitation abilities, the success of a metaheuristic algorithm depends on its ability to balance exploration and exploitation during the search process [8]. The desire of researchers to obtain better solutions to optimization problems has led to the design of numerous metaheuristic algorithms.

The main question of this research is whether, given the many metaheuristic algorithms introduced so far, there is a need to design newer algorithms or to develop hybrid approaches that combine several metaheuristic algorithms. In response to this question, the no free lunch (NFL) theorem [9] implies that no single metaheuristic algorithm is the best optimizer for all optimization applications. According to the NFL theorem, good performance of a metaheuristic algorithm on one set of optimization problems does not guarantee the same performance on other optimization applications. The NFL theorem therefore keeps the research field active and motivates researchers to provide more effective solutions to optimization problems by introducing new algorithms and by developing hybrid versions that combine several algorithms.

Numerous metaheuristic algorithms have been designed by researchers. Among these, particle swarm optimization (PSO) [10] and teaching–learning-based optimization (TLBO) [11] are successful and popular algorithms that have been widely employed to deal with optimization problems in various sciences.

The design of PSO is inspired by the movement of flocks of birds and schools of fish searching for food. In PSO, the position of the best member is used to update the positions of the population members. This dependence of the update process on the best member prevents the algorithm from scanning the entire problem-solving space and can cause it to converge rapidly to inappropriate local optima. Therefore, improving the exploration ability of PSO to manage the global search plays a significant role in making this algorithm more successful.

TLBO is inspired by the exchange of knowledge between the teacher and the students, and among the students themselves, in a classroom. The design of its teacher phase gives TLBO a high capability for exploration and global search.

The innovation and novelty of this article lie in developing a new hybrid metaheuristic algorithm, called hybrid particle swarm optimization–teaching–learning-based optimization (hPSO-TLBO), for handling optimization tasks. The main motivation for designing hybrid algorithms is to benefit from the advantages of two or more algorithms at the same time by combining them. PSO is strong in exploitation but weak in exploration, whereas TLBO is strong in exploration. Therefore, the main goal of hPSO-TLBO is to build a powerful hybrid metaheuristic approach that combines the exploitation power of PSO with the exploration power of TLBO.

The main contributions of this paper are as follows:

- hPSO-TLBO is developed based on the combination of particle swarm optimization and teaching–learning-based optimization.

- The performance of hPSO-TLBO is tested on fifty-two standard benchmark functions from unimodal, high-dimensional multimodal, fixed-dimensional multimodal types, and the CEC 2017 test suite.

- The performance of hPSO-TLBO in handling real-world applications is evaluated on four engineering design problems.

- The results of hPSO-TLBO are compared with the performance of twelve well-known metaheuristic algorithms.

This paper is organized as follows: the literature review is presented in Section 2. The proposed hPSO-TLBO approach is introduced and modeled in Section 3. Simulation studies and results are presented in Section 4. The effectiveness of hPSO-TLBO in handling real-world applications is evaluated in Section 5. Finally, conclusions and suggestions for future research are provided in Section 6.

2. Literature Review

Metaheuristic algorithms have been inspired by various natural phenomena, including the behavior of living organisms in nature, genetics and biology, the laws and concepts of physics, the rules of games, human behavior, and other evolutionary phenomena. Based on the source of inspiration, metaheuristic algorithms are divided into five groups: swarm-based, evolutionary-based, physics-based, game-based, and human-based.

Swarm-based metaheuristic algorithms have been proposed based on modeling the swarm behaviors of birds, animals, insects, aquatic animals, plants, and other living organisms in nature. The most famous algorithms of this group are particle swarm optimization (PSO) [10], artificial bee colony (ABC) [12], ant colony optimization (ACO) [13], and the firefly algorithm (FA) [14]. PSO was inspired by the movement of flocks of birds and schools of fish searching for food. ABC was proposed based on the activities of honey bees in a colony seeking access to food resources. ACO was introduced based on modeling the ability of ants to discover the shortest path between the colony and a food source. FA was developed with inspiration from the optical communication between fireflies. Foraging, hunting, migration, and digging are among the most common natural behaviors of living organisms, and they have inspired the design of swarm-based metaheuristic algorithms such as the coati optimization algorithm (COA) [15], whale optimization algorithm (WOA) [16], white shark optimizer (WSO) [17], reptile search algorithm (RSA) [18], pelican optimization algorithm (POA) [19], kookaburra optimization algorithm (KOA) [20], grey wolf optimizer (GWO) [21], walrus optimization algorithm (WaOA) [22], golden jackal optimization (GJO) [23], honey badger algorithm (HBA) [24], lyrebird optimization algorithm (LOA) [25], marine predators algorithm (MPA) [26], African vultures optimization algorithm (AVOA) [27], and tunicate swarm algorithm (TSA) [28].

Evolutionary-based metaheuristic algorithms have been proposed based on modeling concepts from biology and genetics, such as survival of the fittest and natural selection. The genetic algorithm (GA) [29] and differential evolution (DE) [30] are among the most well-known and widely used metaheuristic algorithms; they model the generational process and Darwin’s theory of evolution using the random evolutionary operators of selection, crossover, and mutation. Artificial immune system (AIS) [31] algorithms are inspired by the human body’s defense mechanism against diseases and microbes.

Physics-based metaheuristic algorithms have been proposed based on modeling concepts, transformations, forces, and laws of physics. Simulated annealing (SA) [32] is one of the most famous algorithms of this group; it models the annealing process of metals, in which a metal is melted by heating and then slowly cooled so that it forms an ideal crystal structure. Physical forces have inspired the design of several algorithms, including the gravitational search algorithm (GSA) [33], based on simulating gravitational force; the spring search algorithm (SSA) [34], based on simulating spring potential force; and the momentum search algorithm (MSA) [35], based on simulating impulse force. Other popular physics-based methods include the water cycle algorithm (WCA) [36], electromagnetism optimization (EMO) [37], the Archimedes optimization algorithm (AOA) [38], the Lichtenberg algorithm (LA) [39], the equilibrium optimizer (EO) [40], the black hole algorithm (BHA) [41], the multi-verse optimizer (MVO) [42], and thermal exchange optimization (TEO) [43].

Game-based metaheuristic algorithms have been inspired by the rules of games and by the strategies of players, referees, coaches, and other influential factors in individual and team games. Modeling league matches inspired algorithms such as football game-based optimization (FGBO) [44], based on a football game, and the volleyball premier league (VPL) algorithm [45], based on a volleyball league. The effort of players in a tug-of-war competition was the main idea behind tug of war optimization (TWO) [46]. Other game-based algorithms include the golf optimization algorithm (GOA) [47], hide object game optimizer (HOGO) [48], darts game optimizer (DGO) [49], archery algorithm (AA) [5], and puzzle optimization algorithm (POA) [50].

Human-based metaheuristic algorithms have been inspired by strategies, choices, decisions, thoughts, and other human behaviors in individual and social life. Teaching–learning-based optimization (TLBO) [11] is one of the most famous human-based algorithms; it models the classroom learning environment and the interactions between students and teachers. The interactions between doctors and patients during treatment are the main idea behind doctor and patient optimization (DPO) [51]. Cooperation among the members of a team to achieve the team’s goals is employed in the design of the teamwork optimization algorithm (TOA) [52]. The efforts of both the poor and the rich sections of society to improve their economic situation inspired poor and rich optimization (PRO) [53]. Other human-based metaheuristic algorithms include the mother optimization algorithm (MOA) [54], coronavirus herd immunity optimizer (CHIO) [55], driving training-based optimization (DTBO) [56], Ali Baba and the Forty Thieves (AFT) [57], election-based optimization algorithm (EBOA) [58], chef-based optimization algorithm (ChBOA) [59], sewing training-based optimization (STBO) [60], language education optimization (LEO) [61], gaining–sharing knowledge-based algorithm (GSK) [62], and war strategy optimization (WSO) [63].

In addition to the groups described above, researchers have developed hybrid metaheuristic algorithms by combining two or more metaheuristic algorithms. The main motivation for constructing hybrid metaheuristic algorithms is to exploit the strengths of several algorithms at the same time so that the optimization process performs better than each of the combined algorithms alone. The combination of TLBO and harmony search (HS) was used to design the hTLBO-HS hybrid approach [64]. hPSO-YUKI, which combines PSO and the YUKI algorithm, was proposed to address double crack identification in CFRP cantilever beams [65]. The hGWO-PSO hybrid approach was designed by integrating GWO and PSO for static and dynamic crack identification [66].

PSO and TLBO are successful metaheuristic approaches that have attracted the attention of researchers and have been employed to solve many optimization applications. In addition to using the original versions of PSO and TLBO, researchers have developed hybrid approaches that integrate the two algorithms to benefit from the advantages of both at the same time. One hybrid PSO-TLBO version merges the better half of the PSO population with the better half of the population obtained from the TLBO teacher phase; the merged population then enters the TLBO learner phase. In this hybrid approach, the update equations themselves are neither changed nor integrated [67]. A hybrid PSO-TLBO version based on population merging was proposed for trajectory optimization [68]. The idea of dividing and merging the population has also been used to solve optimization problems [69]. A hybrid of PSO and TLBO was proposed for distribution network reconfiguration [70]. A hybrid of TLBO and SA, together with a support vector machine, was developed for gene expression data [71]. The sine–cosine algorithm and TLBO were combined into the hSCA-TLBO hybrid approach for visual tracking [72]. Sunflower optimization and TLBO were combined to develop hSFO-TLBO for biodegradable classification [73]. A hybrid called hTLBO-SSA, combining the salp swarm algorithm and TLBO, was proposed for reliability redundancy allocation problems [74]. A hybrid of PSO and SA, called hPSO-SA, was developed for mobile robot path planning in warehouses [75]. Harris hawks optimization and PSO were integrated to design hPSO-HHO for renewable energy applications [76]. A hybrid called hPSO-GSA, combining PSO and GSA, was proposed for feature selection [77].
A hybrid of PSO and GWO, called hPSO-GWO, was developed for reliability optimization and redundancy allocation for fire extinguisher drones [78]. A hybrid PSO-GA approach was proposed for flexible flow shop scheduling with transportation [79].

In addition to the development of hybrid metaheuristic algorithms, researchers have tried to improve existing versions of algorithms by making modifications. Therefore, numerous improved versions of metaheuristic algorithms have been proposed by scientists to improve the performance of the original versions of existing algorithms. An improved version of PSO was proposed for efficient maximum power point tracking under partial shading conditions [80]. An improved version of PSO was developed based on hummingbird flight patterns to enhance search quality and population diversity [81]. In order to deal with the planar graph coloring problem, an improved version of PSO was designed [82]. The application of an improved version of PSO was evaluated for the optimization of reactive power [83]. An improved version of TLBO for optimal placement and sizing of electric vehicle charging infrastructure in a grid-tied DC microgrid was proposed [84]. An improved version of TLBO was developed for solving time–cost optimization in generalized construction projects [85]. Two improved TLBO approaches were developed for the solution of inverse boundary design problems [86]. In order to address the challenge of selective harmonic elimination in multilevel inverters, an improved version of TLBO was designed [87].

To the best of our knowledge, although several attempts have been made to improve the performance of PSO and TLBO and to design hybrid versions of these two algorithms, there is still room for an effective hybrid approach that integrates the equations of the two algorithms and modifies their design. To address this research gap, this paper develops a new hybrid metaheuristic approach combining PSO and TLBO, which is discussed in detail in the next section.

3. Hybrid Particle Swarm Optimization–Teaching–Learning-Based Optimization

In this section, PSO and TLBO are discussed first, and their mathematical equations are presented. Then, the proposed hybrid particle swarm optimization–teaching–learning-based optimization (hPSO-TLBO) approach is presented based on the combination of PSO and TLBO.

3.1. Particle Swarm Optimization (PSO)

PSO is a prominent swarm-based metaheuristic algorithm, widely known for emulating the foraging behavior of fish schools and bird flocks to search effectively for optimal solutions. Each PSO member is a candidate solution whose position in the search space represents values of the decision variables. PSO uses both the personal best experience and the collective best experience to update the population. The personal best $P_i$ is the best candidate solution that the $i$th PSO member has achieved up to the current iteration, and the global best $G$ is the best candidate solution discovered by the entire population up to the current iteration. The population update equations in PSO are as follows:

$$X_i(t+1) = X_i(t) + V_i(t+1), \tag{1}$$

$$V_i(t+1) = \omega(t)\,V_i(t) + c_1 r_1 \big(P_i - X_i(t)\big) + c_2 r_2 \big(G - X_i(t)\big), \tag{2}$$

$$\omega(t) = 0.9 - 0.8\,\frac{t}{t_{\max}}, \tag{3}$$

where $X_i$ is the $i$th PSO member, $V_i$ is its velocity, $P_i$ is the best solution obtained so far by the $i$th PSO member, $G$ is the best solution obtained so far by the whole PSO population, $\omega$ is the inertia weight, which decreases linearly from 0.9 to 0.1 over the iterations, $t_{\max}$ is the maximum number of iterations, $t$ is the iteration counter, $r_1$ and $r_2$ are real numbers drawn from a uniform distribution on $[0, 1]$ (i.e., $r_1, r_2 \sim U(0, 1)$), and $c_1$ and $c_2$ (fulfilling the condition …) are acceleration constants, where $c_1$ represents the confidence of a PSO member in itself and $c_2$ represents its confidence in the population.
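A compact Python sketch of Equations (1)–(3) is given below. The acceleration constants $c_1 = c_2 = 1.5$, zero initial velocities, and clipping positions to the search bounds are illustrative assumptions not specified in the text above; the name `pso` is hypothetical.

```python
import numpy as np


def pso(objective, lb, ub, n=30, t_max=200, c1=1.5, c2=1.5, seed=0):
    """Sketch of PSO with Eqs. (1)-(3); c1, c2 and bound clipping are illustrative choices."""
    rng = np.random.default_rng(seed)
    lb = np.asarray(lb, dtype=float)
    ub = np.asarray(ub, dtype=float)
    m = lb.size
    x = lb + rng.random((n, m)) * (ub - lb)   # random initial positions
    v = np.zeros((n, m))                       # initial velocities (assumption)
    f = np.array([objective(xi) for xi in x])
    p, pf = x.copy(), f.copy()                 # personal bests P_i and their values
    g = p[np.argmin(pf)].copy()                # global best G
    for t in range(1, t_max + 1):
        w = 0.9 - 0.8 * t / t_max              # Eq. (3): inertia weight 0.9 -> 0.1
        r1 = rng.random((n, m))
        r2 = rng.random((n, m))
        v = w * v + c1 * r1 * (p - x) + c2 * r2 * (g - x)   # Eq. (2)
        x = np.clip(x + v, lb, ub)                          # Eq. (1), kept inside bounds
        f = np.array([objective(xi) for xi in x])
        improved = f < pf                      # update personal bests
        p[improved] = x[improved]
        pf[improved] = f[improved]
        g = p[np.argmin(pf)].copy()            # update global best
    return g, float(pf.min())
```

The vectorized form updates all members at once; the social term `c2 * r2 * (g - x)` is the one that hPSO-TLBO later replaces.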

3.2. Teaching–Learning-Based Optimization (TLBO)

TLBO has established itself as a leading and extensively employed human-based metaheuristic algorithm, effectively simulating the dynamics of educational interactions within a classroom setting. Like PSO, each TLBO member is also a candidate solution to the problem based on its position in the search space. In the design of TLBO, the best member of the population with the most knowledge is considered a teacher, and the other population members are considered class students. In TLBO, the position of population members is updated under two phases (the teacher and learner phases).

In the teacher phase, the best member of the population, who has the highest level of knowledge and acts as the teacher, tries to raise the academic level of the class by teaching and transferring knowledge to the students. The population update equations in TLBO based on the teacher phase are as follows:

$$X_i^{\text{new},T} = X_i + r\,(T - I \cdot M), \tag{4}$$

$$M = \frac{1}{N}\sum_{j=1}^{N} X_j, \tag{5}$$

where $X_i$ is the $i$th TLBO member, $T$ is the teacher, $M$ is the mean value of the class, $r$ is a random number uniformly distributed on $[0, 1]$, $I$ is a random integer drawn from a uniform distribution on the set $\{1, 2\}$, and $N$ is the number of population members.
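The teacher phase of Equations (4) and (5) can be sketched as follows. Drawing $r$ independently per dimension and keeping a new position only when it improves the objective (the greedy acceptance used in standard TLBO) are assumptions of this sketch; `teacher_phase` is a hypothetical helper name.

```python
import numpy as np


def teacher_phase(x, f, objective, rng):
    """One TLBO teacher phase: Eqs. (4)-(5) plus greedy acceptance (assumed)."""
    n, m = x.shape
    teacher = x[np.argmin(f)].copy()   # best member acts as teacher T
    mean = x.mean(axis=0)              # Eq. (5): class mean M
    for i in range(n):
        r = rng.random(m)              # r ~ U(0, 1), drawn per dimension (assumption)
        tf = rng.integers(1, 3)        # I drawn uniformly from {1, 2}
        x_new = x[i] + r * (teacher - tf * mean)   # Eq. (4)
        f_new = objective(x_new)
        if f_new < f[i]:               # accept only improvements
            x[i] = x_new
            f[i] = f_new
    return x, f
```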

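In the learner phase, the students try to improve their own knowledge level, and thus that of the class, by helping each other. In TLBO, each student randomly chooses another student and exchanges knowledge with them. The population update equation in TLBO based on the learner phase is as follows:

$$X_i^{\text{new},P} = \begin{cases} X_i + r\,(X_k - X_i), & F_k < F_i, \\ X_i + r\,(X_i - X_k), & \text{otherwise}, \end{cases} \tag{6}$$

where $X_k$ is the $k$th student ($k \in \{1, 2, \dots, N\}$, $k \neq i$), $F_k$ is its objective function value, $F_i$ is the objective function value of $X_i$, and $r$ is a random number uniformly distributed on $[0, 1]$.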
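A matching sketch of the standard learner phase in Equation (6) follows, again with per-dimension random numbers and greedy acceptance as assumptions; `learner_phase` is a hypothetical name.

```python
import numpy as np


def learner_phase(x, f, objective, rng):
    """One standard TLBO learner phase (Eq. (6)) with greedy acceptance (assumed)."""
    n, m = x.shape
    for i in range(n):
        k = rng.choice([j for j in range(n) if j != i])   # random partner, k != i
        r = rng.random(m)
        if f[k] < f[i]:
            x_new = x[i] + r * (x[k] - x[i])   # move toward a better student
        else:
            x_new = x[i] + r * (x[i] - x[k])   # move away from a worse student
        f_new = objective(x_new)
        if f_new < f[i]:
            x[i] = x_new
            f[i] = f_new
    return x, f
```

Note that the partner may be worse than the student; this is exactly the point that hPSO-TLBO modifies below.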

3.3. Proposed Hybrid Particle Swarm Optimization–Teaching–Learning-Based Optimization (hPSO-TLBO)

This subsection presents the introduction and modeling of the proposed hPSO-TLBO approach, which combines the features of PSO and TLBO. In this design, an attempt was made to use the advantages of each of the mentioned algorithms so as to develop a hybrid metaheuristic algorithm that performs better than PSO or TLBO.

PSO has a high exploitation ability owing to the term $c_1 r_1 \big(P_i - X_i(t)\big)$ in its update equations; however, because the update process depends on the best population member $G$, PSO is weak in global search and exploration. In fact, the term $c_2 r_2 \big(G - X_i(t)\big)$ can stall the algorithm by pulling it toward a local optimum and into a stationary state, i.e., the premature gathering of all population members around a single solution.

The teacher phase of TLBO introduces large and sudden changes in the positions of the population members through the term $r\,(T - I \cdot M)$, which gives TLBO its global search and exploration capabilities. Enhancing exploration in a metaheuristic algorithm improves the search process by preventing it from getting trapped in local optima and by helping it to accurately identify the main optimal area. Hence, the primary concept behind the proposed hPSO-TLBO approach is to strengthen exploration in PSO by leveraging the strong global search and exploration capabilities of TLBO. Accordingly, hPSO-TLBO is designed as a new hybrid metaheuristic algorithm that integrates the exploration ability of TLBO with the exploitation ability of PSO.

To combine PSO and TLBO effectively, the term $c_2 r_2 \big(G - X_i(t)\big)$ was removed from Equation (2) (the velocity equation) and, to improve the exploration ability, the term $r\,(T - I \cdot M)$ from the teacher phase of TLBO was added in its place. Therefore, the velocity equation of hPSO-TLBO takes the following form:

$$V_i(t+1) = \omega(t)\,V_i(t) + c_1 r_1 \big(P_i - X_i(t)\big) + r\,(T - I \cdot M). \tag{7}$$

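Then, using the velocity calculated from Equation (7) in Equation (1), a new position for each hPSO-TLBO member is calculated by Equation (8). If the new position improves the value of the objective function, it replaces the previous position of the corresponding member according to Equation (9).

$$X_i^{\text{new}} = X_i(t) + V_i(t+1), \tag{8}$$

$$X_i(t+1) = \begin{cases} X_i^{\text{new}}, & F_i^{\text{new}} < F_i, \\ X_i(t), & \text{otherwise}, \end{cases} \tag{9}$$

where $X_i^{\text{new}}$ is the new proposed position of the $i$th population member in the search space and $F_i^{\text{new}}$ is the objective function value of $X_i^{\text{new}}$.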
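For a single member, Equations (7)–(9) can be sketched as follows. The helper `hybrid_velocity_step`, the value $c_1 = 1.5$, drawing the random numbers per dimension, and keeping the new velocity even when the move is rejected are assumptions made for illustration, not details stated in the text.

```python
import numpy as np


def hybrid_velocity_step(x_i, f_i, v_i, p_i, teacher, mean, w, objective, rng, c1=1.5):
    """Eqs. (7)-(9) for one member; returns (position, value, velocity)."""
    m = x_i.size
    r1 = rng.random(m)
    r = rng.random(m)
    tf = rng.integers(1, 3)                      # I in {1, 2}
    # Eq. (7): inertia + cognitive term; the social term is replaced by r(T - I*M).
    v_new = w * v_i + c1 * r1 * (p_i - x_i) + r * (teacher - tf * mean)
    x_new = x_i + v_new                          # Eq. (8)
    f_new = objective(x_new)
    if f_new < f_i:                              # Eq. (9): greedy replacement
        return x_new, f_new, v_new
    # Keeping the new velocity when the move is rejected is an assumption.
    return x_i, f_i, v_new
```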

In the learner phase of TLBO, every student randomly chooses another student with whom to exchange knowledge. The randomly selected student may have a better or worse knowledge level than the student making the selection. hPSO-TLBO enhances the learner phase by assuming that each student selects a better student in order to raise their knowledge level and improve overall performance. If the objective function value of a member represents its knowledge level, the set of better students for each hPSO-TLBO member is determined using Equation (10):

$$CS_i = \{X_k : F_k < F_i,\ k \in \{1, 2, \dots, N\}\}, \tag{10}$$

where $CS_i$ is the set of suitable students for guiding the $i$th member $X_i$, and $X_k$ is a population member with a better objective function value than member $X_i$.

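In the implementation of hPSO-TLBO, each student chooses one of the better students in its set $CS_i$ uniformly at random and exchanges knowledge with that student. Based on this exchange of knowledge in the learner phase, a new position for each member is calculated by Equation (11). If the new position improves the objective function value, it replaces the previous position of the corresponding member according to Equation (12).

$$X_i^{\text{new},P} = X_i + r\,(SS_i - X_i), \tag{11}$$

$$X_i = \begin{cases} X_i^{\text{new},P}, & F_i^{\text{new},P} < F_i, \\ X_i, & \text{otherwise}, \end{cases} \tag{12}$$

where $SS_i$ is the student selected from $CS_i$ to guide the $i$th population member, $r$ is a random number uniformly distributed on $[0, 1]$, and $F_i^{\text{new},P}$ is the objective function value of $X_i^{\text{new},P}$.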
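Equations (10)–(12) for one member can be sketched as follows. How the current best member, whose candidate set is empty, should be treated is not stated above; this sketch simply leaves it unchanged, which is an assumption, as is the name `improved_learner_step`.

```python
import numpy as np


def improved_learner_step(i, x, f, objective, rng):
    """Eqs. (10)-(12) for member i: learn from a uniformly chosen better student."""
    cs = np.flatnonzero(f < f[i])       # Eq. (10): indices of the candidate set CS_i
    if cs.size == 0:
        # The best member has no better student; leaving it unchanged is an assumption.
        return x[i].copy(), float(f[i])
    k = rng.choice(cs)                  # SS_i drawn uniformly from CS_i
    r = rng.random(x.shape[1])
    x_new = x[i] + r * (x[k] - x[i])    # Eq. (11), in the form given above
    f_new = objective(x_new)
    if f_new < f[i]:                    # Eq. (12): greedy replacement
        return x_new, float(f_new)
    return x[i].copy(), float(f[i])
```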

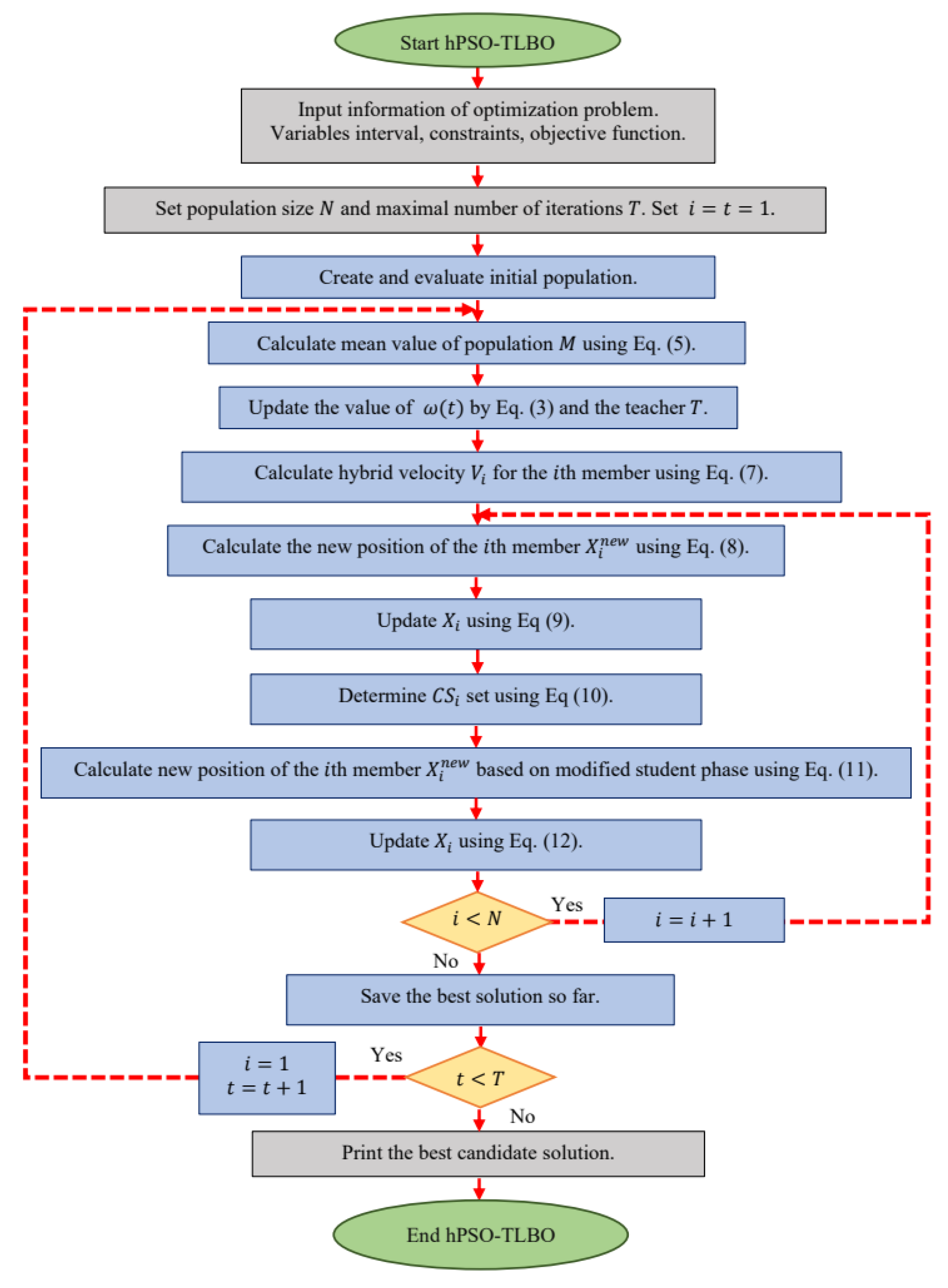

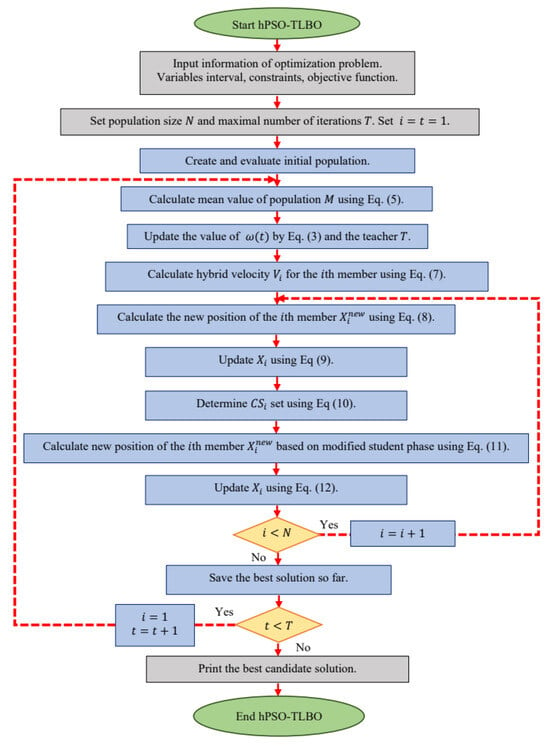

Figure 1 presents a flowchart illustrating the implementation steps of the hPSO-TLBO approach, while Algorithm 1 provides the corresponding pseudocode.

| Algorithm 1. Pseudocode of hPSO-TLBO | |
|---|---|
| | Start hPSO-TLBO. |
| 1. | Input problem information: variables, objective function, and constraints. |
| 2. | Set the population size $N$ and the maximum number of iterations $t_{\max}$. |
| 3. | Generate the initial population matrix at random. |
| 4. | Evaluate the objective function. |
| 5. | For $t = 1$ to $t_{\max}$ |
| 6. | Update the value of $\omega$ by Equation (3) and the value of the teacher $T$. |
| 7. | Calculate $M$ using Equation (5). |
| 8. | For $i = 1$ to $N$ |
| 9. | Update $P_i$ based on comparison with $X_i$. |
| 10. | Set the best population member as teacher $T$. |
| 11. | Calculate the hybrid velocity of the $i$th member using Equation (7). |
| 12. | Calculate the new position of the $i$th population member using Equation (8). |
| 13. | Update the $i$th member using Equation (9). |
| 14. | Determine the candidate students set $CS_i$ for the $i$th member using Equation (10). |
| 15. | Calculate the new position of the $i$th population member based on the modified learner phase using Equation (11). |
| 16. | Update the $i$th member using Equation (12). |
| 17. | end |
| 18. | Save the best candidate solution so far. |
| 19. | end |
| 20. | Output the best quasi-optimal solution obtained with hPSO-TLBO. |
| | End hPSO-TLBO. |

Figure 1.

Flowchart of hPSO-TLBO.
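Putting the steps of Algorithm 1 together gives the following self-contained Python sketch. It follows the pseudocode step by step, but the velocity form, the value $c_1 = 1.5$, zero initial velocities, and clipping to the bounds are assumptions of this illustration rather than confirmed details of the authors’ implementation (which, per Section 4.1, was run in MATLAB); `hpso_tlbo` is a hypothetical name.

```python
import numpy as np


def hpso_tlbo(objective, lb, ub, n=30, t_max=200, c1=1.5, seed=0):
    """Sketch of Algorithm 1 using Eqs. (3), (5) and (7)-(12) as given above.

    c1, the zero initial velocities and the clipping to [lb, ub] are assumptions.
    """
    rng = np.random.default_rng(seed)
    lb = np.asarray(lb, dtype=float)
    ub = np.asarray(ub, dtype=float)
    m = lb.size
    x = lb + rng.random((n, m)) * (ub - lb)      # step 3: random initial population
    f = np.array([objective(xi) for xi in x])     # step 4: evaluate
    v = np.zeros((n, m))
    p, pf = x.copy(), f.copy()                    # personal bests P_i
    for t in range(1, t_max + 1):                 # step 5
        w = 0.9 - 0.8 * t / t_max                 # step 6: Eq. (3)
        mean = x.mean(axis=0)                     # step 7: Eq. (5)
        for i in range(n):                        # step 8
            if f[i] < pf[i]:                      # step 9: update P_i
                p[i], pf[i] = x[i], f[i]
            teacher = x[np.argmin(f)].copy()      # step 10: current best is the teacher
            # Steps 11-13: hybrid velocity (7), new position (8), replacement (9).
            r1, r = rng.random(m), rng.random(m)
            tf = rng.integers(1, 3)
            v[i] = w * v[i] + c1 * r1 * (p[i] - x[i]) + r * (teacher - tf * mean)
            x_new = np.clip(x[i] + v[i], lb, ub)
            f_new = objective(x_new)
            if f_new < f[i]:
                x[i], f[i] = x_new, f_new
            # Steps 14-16: modified learner phase, Eqs. (10)-(12).
            cs = np.flatnonzero(f < f[i])
            if cs.size > 0:
                k = rng.choice(cs)
                x_new = np.clip(x[i] + rng.random(m) * (x[k] - x[i]), lb, ub)
                f_new = objective(x_new)
                if f_new < f[i]:
                    x[i], f[i] = x_new, f_new
    best = int(np.argmin(f))                      # steps 18-20: best quasi-optimal solution
    return x[best].copy(), float(f[best])
```

For example, `hpso_tlbo(lambda z: float(np.sum(z ** 2)), [-10.0] * 5, [10.0] * 5)` returns the best position found and its objective value. Each inner iteration calls `objective` twice, which matches the $2N$ evaluations per iteration discussed in the complexity analysis.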

3.4. Computational Complexity of hPSO-TLBO

This subsection evaluates the computational complexity of the hPSO-TLBO algorithm. For an optimization problem with $m$ decision variables, the initialization of hPSO-TLBO has a computational complexity of $O(Nm)$, where $N$ is the number of population members. In each iteration, the positions of the population members are updated in two steps, so the objective function is evaluated twice for each population member per iteration. Hence, the computational complexity of the population update process in hPSO-TLBO is $O(2Nm\,t_{\max})$, where $t_{\max}$ is the total number of iterations. Overall, the computational complexity of the proposed hPSO-TLBO approach is $O\big(Nm(1 + 2t_{\max})\big)$.

Similarly, the computational complexity of PSO is $O\big(Nm(1 + t_{\max})\big)$ and that of TLBO is $O\big(Nm(1 + 2t_{\max})\big)$. Therefore, in terms of computational complexity, the proposed hPSO-TLBO approach is equivalent to TLBO and roughly twice as costly as PSO: hPSO-TLBO and TLBO perform $2N$ function evaluations per iteration, whereas PSO performs $N$.
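As a worked illustration of these expressions, take the hypothetical settings $N = 30$ and $t_{\max} = 1000$ (not values reported in this paper). Counting function evaluations, including the initial evaluation of the population:

```latex
\begin{aligned}
\text{hPSO-TLBO, TLBO:}\quad & N(1 + 2t_{\max}) = 30 \times 2001 = 60{,}030, \\
\text{PSO:}\quad & N(1 + t_{\max}) = 30 \times 1001 = 30{,}030, \\
\text{ratio:}\quad & 60{,}030 / 30{,}030 \approx 1.999.
\end{aligned}
```

So for the same number of iterations, hPSO-TLBO needs almost exactly twice as many objective function evaluations as PSO, and the same number as TLBO.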

4. Simulation Studies and Results

In this section, the performance of the proposed hPSO-TLBO approach in solving optimization problems is evaluated. For this purpose, fifty-two benchmark functions were employed: twenty-three standard functions of the unimodal, high-dimensional multimodal, and fixed-dimensional multimodal types [88] and twenty-nine functions from the CEC 2017 test suite [89].

4.1. Performance Comparison and Experimental Settings

To assess the quality of hPSO-TLBO, its results were compared with those of twelve well-known metaheuristic algorithms: PSO, TLBO, improved PSO (IPSO) [81], improved TLBO (ITLBO) [87], the hybrid PSO-TLBO developed in [67] (hPT1), the hybrid PSO-TLBO developed in [90] (hPT2), GWO, MPA, TSA, RSA, AVOA, and WSO. The experiments were carried out on a Windows 10 computer with a 2.2 GHz Core i7 processor and 16 GB of RAM, using MATLAB 2018a as the software environment. The optimization results are reported using six indicators: mean, best, worst, standard deviation (std), median, and rank. The mean index was used to rank the metaheuristic algorithms on each benchmark function.
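The reporting protocol above (six indicators, with algorithms ranked by the mean index) can be sketched as follows for a single benchmark function. The input format, the population standard deviation (ddof = 0), and giving tied means the same rank are assumptions of this sketch; `summarize_and_rank` is a hypothetical helper.

```python
import numpy as np


def summarize_and_rank(results):
    """results maps algorithm name -> final objective values from independent runs."""
    stats = {}
    for name, vals in results.items():
        vals = np.asarray(vals, dtype=float)
        stats[name] = {
            "mean": float(vals.mean()),
            "best": float(vals.min()),     # minimization: smallest value is best
            "worst": float(vals.max()),
            "std": float(vals.std()),      # population std (ddof=0), an assumption
            "median": float(np.median(vals)),
        }
    # Rank by the mean index (1 = best); equal means share a rank (an assumption).
    distinct_means = sorted({s["mean"] for s in stats.values()})
    for s in stats.values():
        s["rank"] = distinct_means.index(s["mean"]) + 1
    return stats
```

With dense ranking, two algorithms with identical means receive the same rank; the text notes that in such cases a lower std is used to tell them apart when discussing results.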

4.2. Evaluation of Unimodal Test Functions F1 to F7

Unimodal functions have a single optimum and no other local optima, which makes them valuable for evaluating the exploitation and local search capabilities of metaheuristic algorithms. Table 1 presents the optimization results of unimodal functions F1 to F7 obtained using hPSO-TLBO and the competing algorithms. The results show that hPSO-TLBO excels in local search and exploitation, consistently reaching the global optimum of functions F1 to F6. hPSO-TLBO was also the best-performing optimizer on function F7. Overall, the simulation results confirm that, thanks to its strong exploitation capability, hPSO-TLBO outperforms the competing algorithms on the unimodal functions F1 to F7.

Table 1.

Optimization results of unimodal functions.
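For reference, two unimodal test functions are sketched below, assuming [88] uses the standard definitions from the widely used 23-function suite (sphere as F1 and Schwefel’s problem 2.22 as F2); the exact definitions and search ranges should be checked against [88].

```python
import numpy as np


def sphere(x):
    """Assumed F1: sum of squares, global minimum 0 at the origin."""
    x = np.asarray(x, dtype=float)
    return float(np.sum(x ** 2))


def schwefel_2_22(x):
    """Assumed F2: sum(|x|) + prod(|x|), global minimum 0 at the origin."""
    x = np.asarray(x, dtype=float)
    return float(np.sum(np.abs(x)) + np.prod(np.abs(x)))
```

Both have a single basin, so the only difficulty is converging precisely, which is why they probe exploitation.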

4.3. Evaluation of High-Dimensional Multimodal Test Functions F8 to F13

Because they have many local optima, high-dimensional multimodal functions are suitable for evaluating the global search and exploration ability of metaheuristic algorithms. Table 2 presents the results of hPSO-TLBO and the competing algorithms on the high-dimensional multimodal benchmarks F8 to F13. With its strong exploration ability, hPSO-TLBO identified the main optimal area and converged to the global optimum on functions F9 and F11. It was also the best-performing optimizer on benchmarks F8, F10, F12, and F13. These results indicate that, thanks to its exploration capability, hPSO-TLBO outperforms the competing algorithms on the high-dimensional multimodal benchmarks F8 to F13.

Table 2.

Optimization results of high-dimensional multimodal functions.

4.4. Evaluation of Fixed-Dimensional Multimodal Test Functions F14 to F23

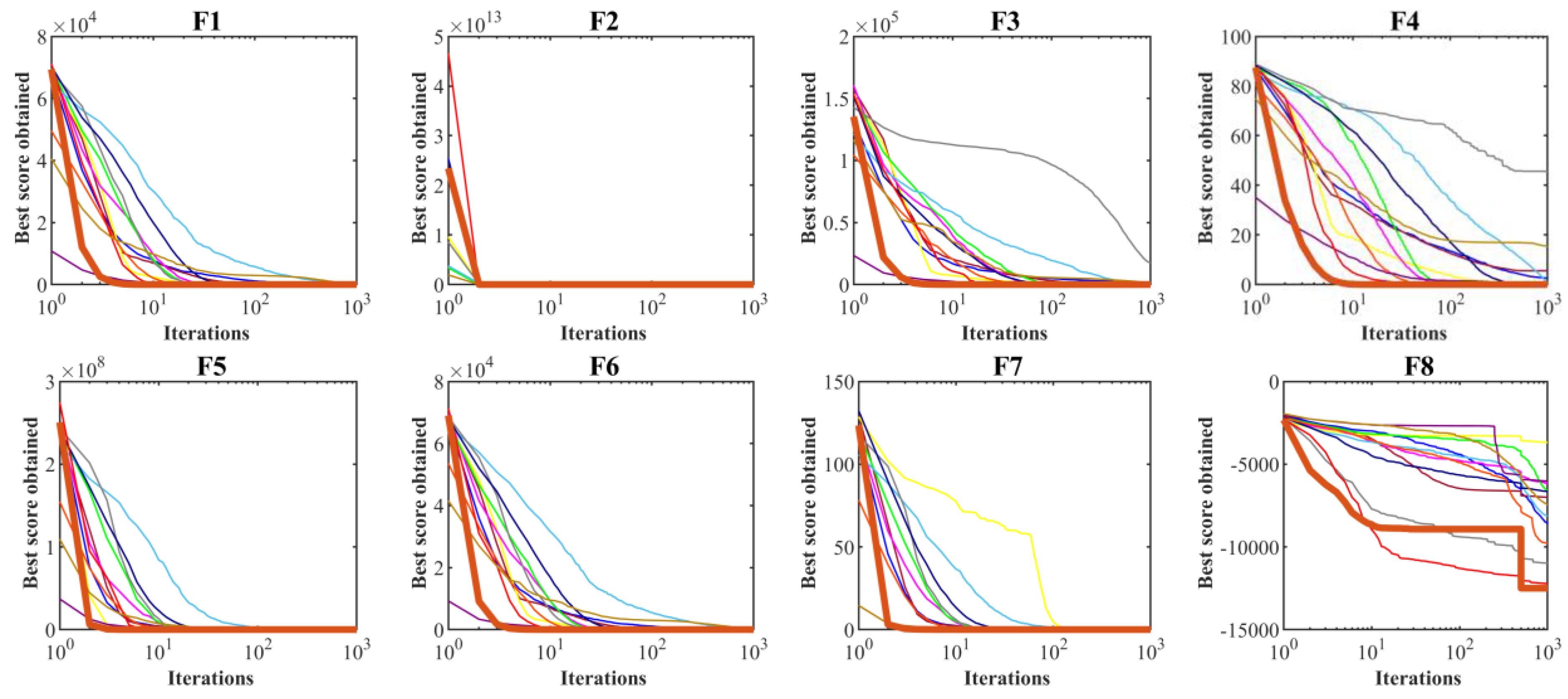

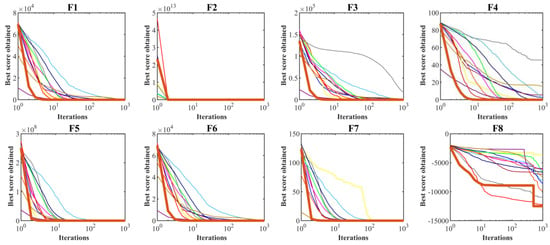

Multimodal functions with a fixed number of dimensions are suitable for measuring exploration and exploitation simultaneously in metaheuristic algorithms. Table 3 presents the results of hPSO-TLBO and the competing optimizers on the fixed-dimensional multimodal benchmarks F14 to F23. hPSO-TLBO was the best-performing optimizer on all functions from F14 to F23. Where hPSO-TLBO has the same mean value as some competing algorithms, it ranks higher owing to its smaller std value. These results indicate that hPSO-TLBO balances exploration and exploitation well and outperforms the competing algorithms on the fixed-dimensional multimodal functions F14 to F23. Figure 2 compares the convergence curves of hPSO-TLBO and the competing algorithms on functions F1 to F23.
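The tie-breaking rule described above (equal means, then the smaller std wins) can be expressed as a lexicographic sort. This is a minimal sketch: the function name `rank_mean_then_std` is hypothetical, the sample standard deviation is assumed, and exact ties in both mean and std keep their input order because Python's sort is stable.

```python
import statistics
from typing import Dict, List


def rank_mean_then_std(results: Dict[str, List[float]]) -> Dict[str, int]:
    """Rank algorithms by mean final value; equal means are ordered by the smaller std."""
    key = {
        name: (statistics.mean(runs), statistics.stdev(runs))
        for name, runs in results.items()
    }
    # Tuples compare element-wise, so std is only consulted when the means are equal.
    order = sorted(key, key=lambda name: key[name])
    return {name: position + 1 for position, name in enumerate(order)}


# Hypothetical runs: A and B share the same mean, but A is more consistent.
runs = {"A": [1.0, 1.0, 1.0], "B": [0.5, 1.0, 1.5], "C": [2.0, 2.0, 2.0]}
print(rank_mean_then_std(runs))  # {'A': 1, 'B': 2, 'C': 3}
```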

Table 3.

Optimization results of fixed-dimensional multimodal functions.

Figure 2.

Convergence curves of hPSO-TLBO and the twelve competitor optimizers on functions F1 to F23.

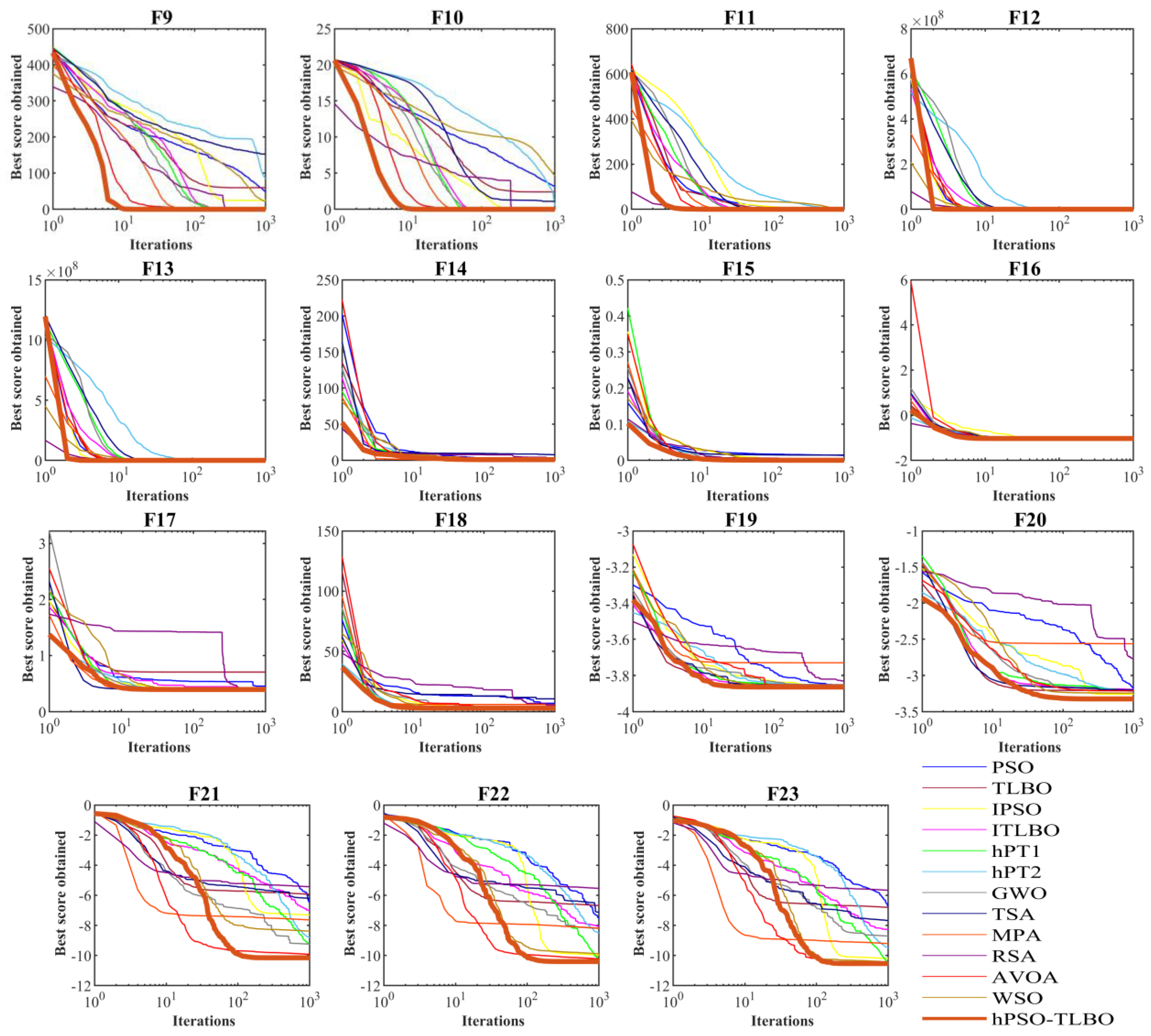

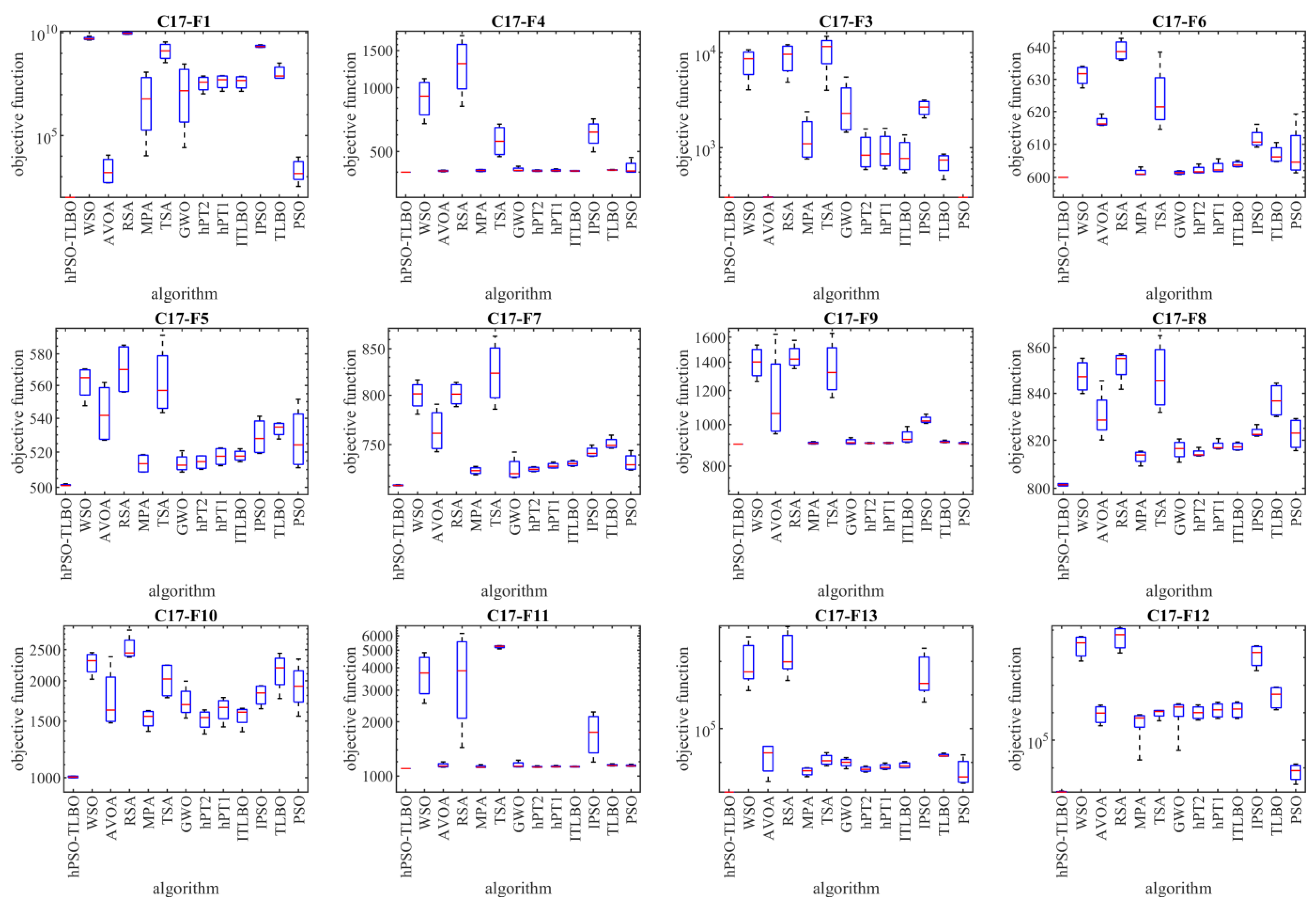

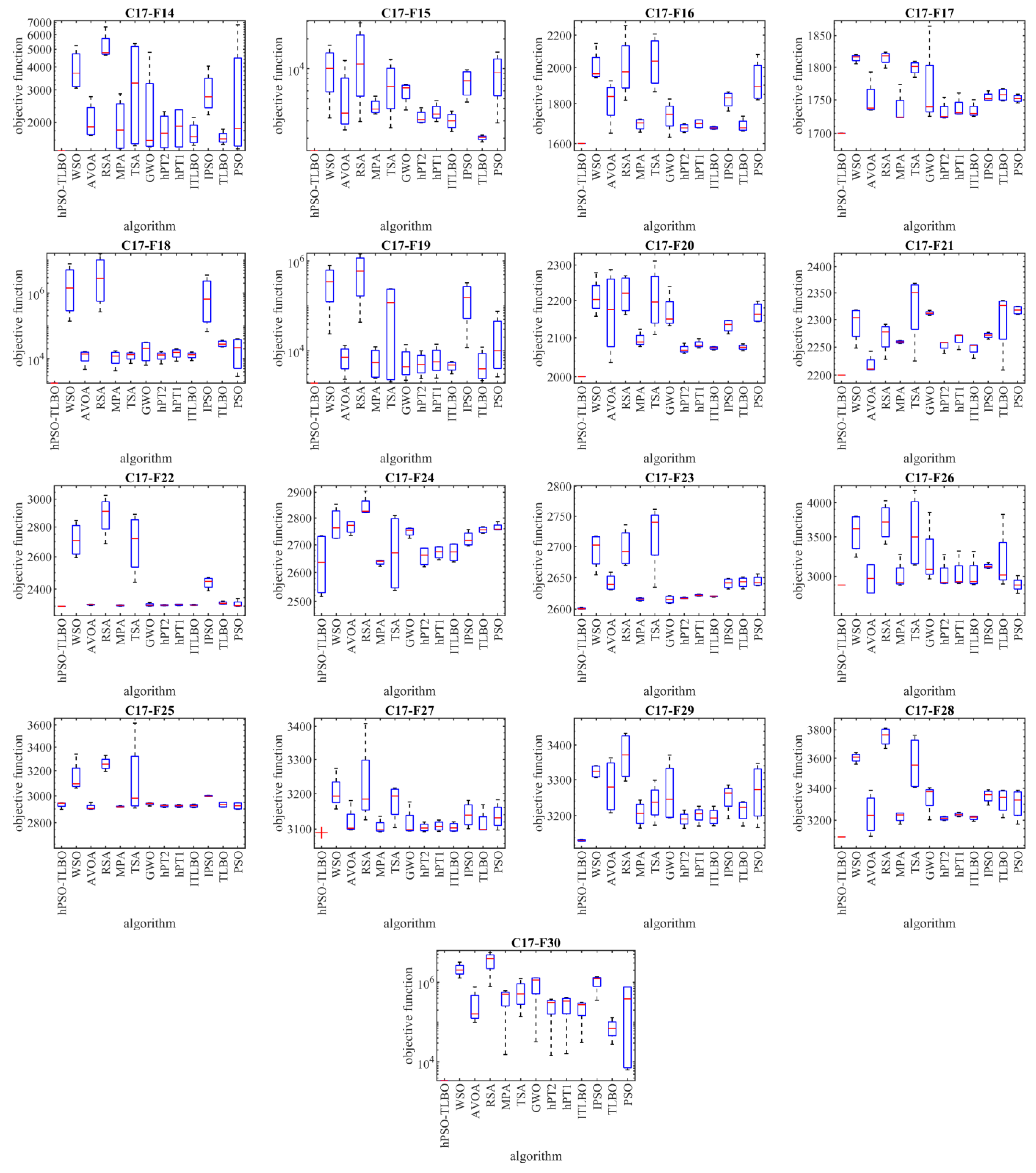

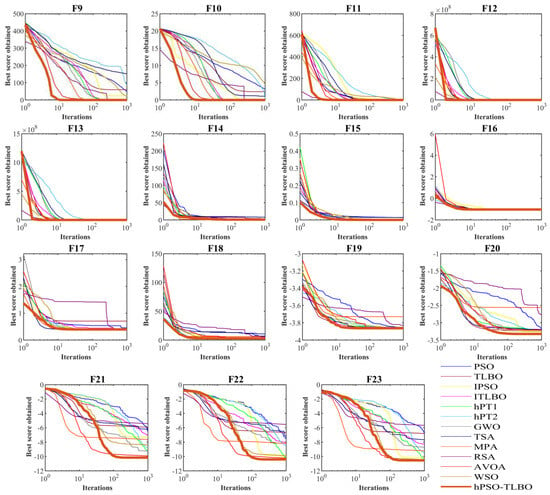

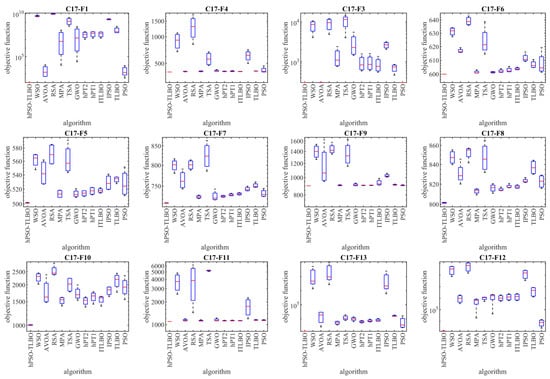

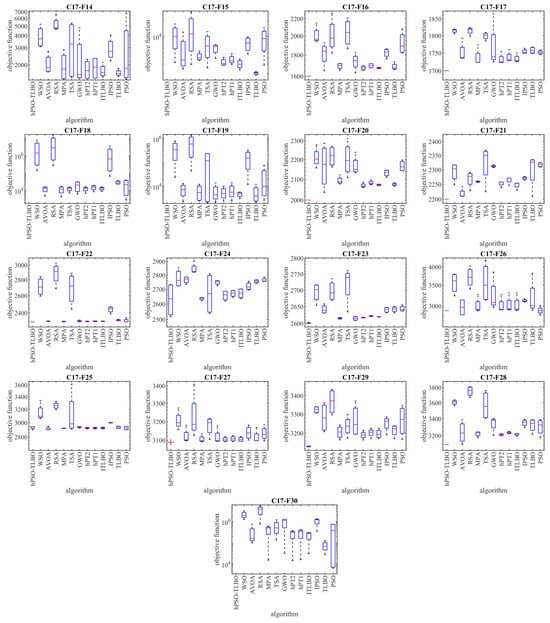

4.5. Evaluation of CEC 2017 Test Suite

This subsection evaluates the performance of hPSO-TLBO on the CEC 2017 test suite. The suite comprises thirty standard benchmarks: three unimodal functions (C17-F1 to C17-F3), seven multimodal functions (C17-F4 to C17-F10), ten hybrid functions (C17-F11 to C17-F20), and ten composition functions (C17-F21 to C17-F30). The C17-F2 function was excluded from the simulations because of its unstable behavior, leaving twenty-nine functions. Details of the CEC 2017 test suite can be found in [89]. The results of hPSO-TLBO and the competing algorithms on the CEC 2017 test suite are presented in Table 4, and boxplots of their performance are shown in Figure 3. hPSO-TLBO was the best-performing optimizer on functions C17-F1, C17-F3 to C17-F24, and C17-F26 to C17-F30, that is, on 28 of the 29 functions evaluated. Overall, the results on the CEC 2017 test suite show that hPSO-TLBO outperforms the competing algorithms.
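CEC 2017 functions are built from a base function by shifting, rotating, and adding a bias, F_i(x) = f(M(x − o)) + F_i*, with F_i* = 100·i. Results are usually reported as the error F_i(x) − F_i*. The sketch below builds a shifted and rotated Bent Cigar function, which is the base of C17-F1. It is illustrative only. The official suite loads the shift vector o and rotation matrix M from data files distributed with [89]. Here both are stand-ins: a random shift, and a random orthogonal matrix from Gram–Schmidt. The names `random_rotation` and `shift_rotate_bias` are hypothetical.

```python
import math
import random
from typing import Callable, List, Sequence

Vector = List[float]


def random_rotation(dim: int, rng: random.Random) -> List[Vector]:
    """Random orthonormal rows via Gram-Schmidt on Gaussian vectors (stand-in for CEC data files)."""
    rows: List[Vector] = []
    while len(rows) < dim:
        v = [rng.gauss(0.0, 1.0) for _ in range(dim)]
        for r in rows:  # remove the components along the rows found so far
            proj = sum(vi * ri for vi, ri in zip(v, r))
            v = [vi - proj * ri for vi, ri in zip(v, r)]
        norm = math.sqrt(sum(vi * vi for vi in v))
        if norm > 1e-10:  # skip (extremely unlikely) nearly dependent draws
            rows.append([vi / norm for vi in v])
    return rows


def bent_cigar(z: Sequence[float]) -> float:
    return z[0] ** 2 + 1e6 * sum(zi * zi for zi in z[1:])


def shift_rotate_bias(base: Callable[[Sequence[float]], float], shift: Sequence[float],
                      rotation: List[Vector], bias: float) -> Callable[[Sequence[float]], float]:
    """Build F(x) = base(M (x - o)) + bias."""
    def f(x: Sequence[float]) -> float:
        d = [xi - oi for xi, oi in zip(x, shift)]
        z = [sum(mij * dj for mij, dj in zip(row, d)) for row in rotation]
        return base(z) + bias
    return f


rng = random.Random(2017)
dim = 10
shift = [rng.uniform(-80.0, 80.0) for _ in range(dim)]  # hypothetical shift vector
F1 = shift_rotate_bias(bent_cigar, shift, random_rotation(dim, rng), bias=100.0)  # F1* = 100 * 1
print("error value at the origin:", F1([0.0] * dim) - 100.0)
```

Because M is orthogonal, the rotation does not move the optimum: F1 reaches its bias value exactly at x = o, and the error there is zero.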

Table 4.

Optimization results of CEC 2017 test suite.

Figure 3.

Boxplots of the performance of hPSO-TLBO and the competitor optimizers on the CEC 2017 test suite.

4.6. Statistical Analysis

To assess whether the superiority of hPSO-TLBO over the competing algorithms is statistically significant, a nonparametric test, the Wilcoxon signed-rank test [91], was applied. The test determines whether two paired data samples differ significantly, based on the signs and ranks of their pairwise differences. The resulting p-values were used to evaluate the significance of the differences between hPSO-TLBO and each competing algorithm, and they are reported in Table 5. A p-value below 0.05 indicates that hPSO-TLBO has a statistically significant advantage over the corresponding algorithm. According to the Wilcoxon signed-rank test, hPSO-TLBO has a statistically significant advantage over all twelve competing metaheuristic algorithms.
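The mechanics of the signed-rank test can be sketched in a few lines. This is a minimal sketch using the large-sample normal approximation, without tie or continuity correction, so the p-value is approximate for small samples. For actual analyses, a library implementation such as SciPy's `scipy.stats.wilcoxon` is preferable. The section does not state how samples were paired (for example, per run or per benchmark function), so the pairing is left to the caller. The function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values receive the average of the positions they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def wilcoxon_signed_rank(a: Sequence[float], b: Sequence[float]) -> Tuple[float, float]:
    """Two-sided Wilcoxon signed-rank test via the normal approximation.

    Returns (T, p), where T = min(W+, W-). Zero differences are discarded and no
    tie or continuity correction is applied.
    """
    diffs = [x - y for x, y in zip(a, b) if x != y]
    n = len(diffs)
    if n == 0:
        return 0.0, 1.0  # identical samples: no evidence of a difference
    ranks = average_ranks([abs(d) for d in diffs])
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    t = min(w_plus, w_minus)
    mean = n * (n + 1) / 4
    sd = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    z = (t - mean) / sd
    return t, math.erfc(abs(z) / math.sqrt(2))  # two-sided p-value
```

If one algorithm is better on every one of 20 paired samples, T = 0 and p is far below 0.05. If the differences are symmetric around zero, p is close to 1.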

Table 5.

Wilcoxon rank sum test results.

5. hPSO-TLBO for Real-World Applications

This section evaluates the effectiveness of hPSO-TLBO on four engineering design problems. Solving such real-world optimization problems is one of the key applications of metaheuristic algorithms.

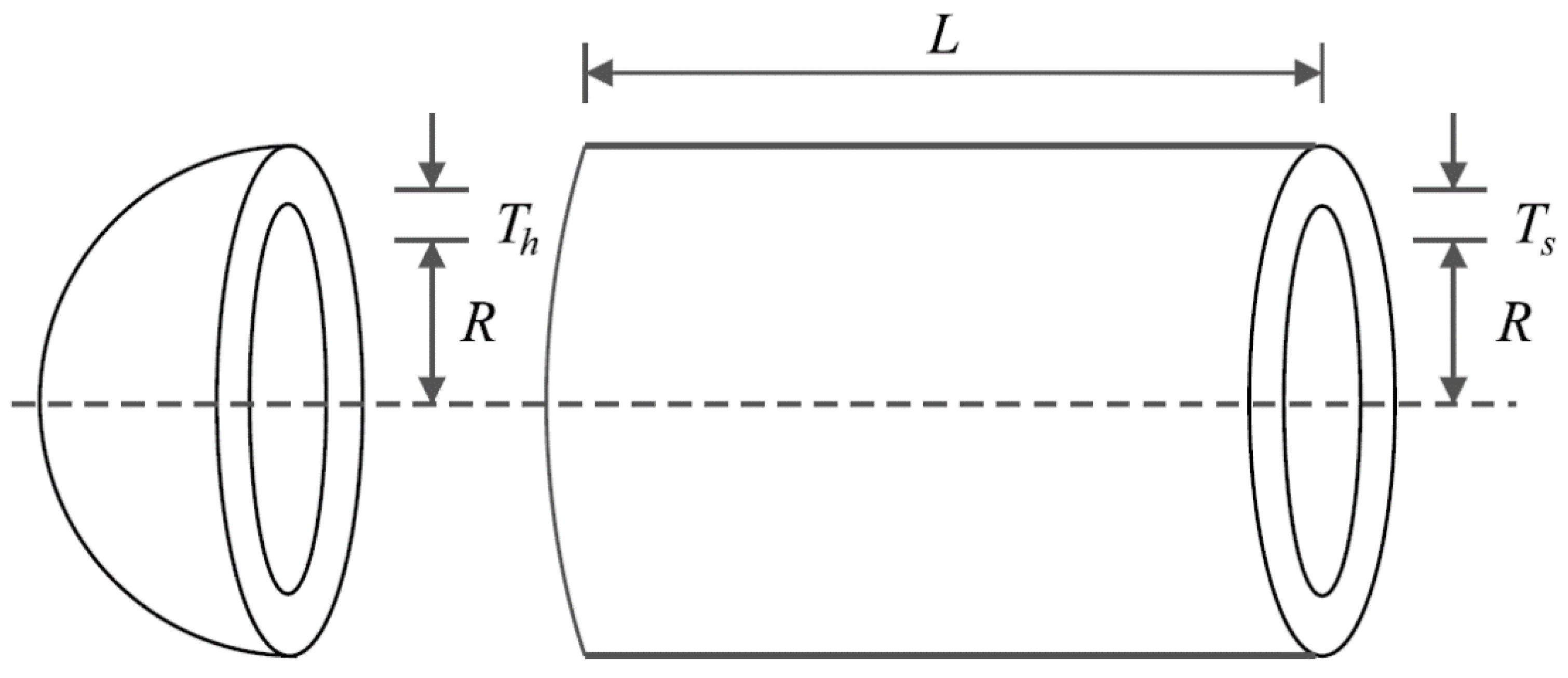

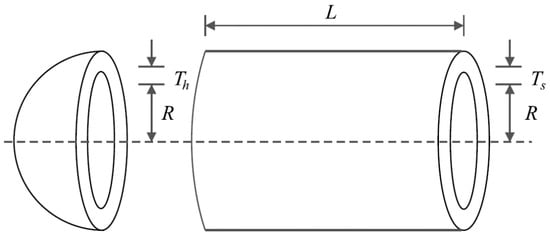

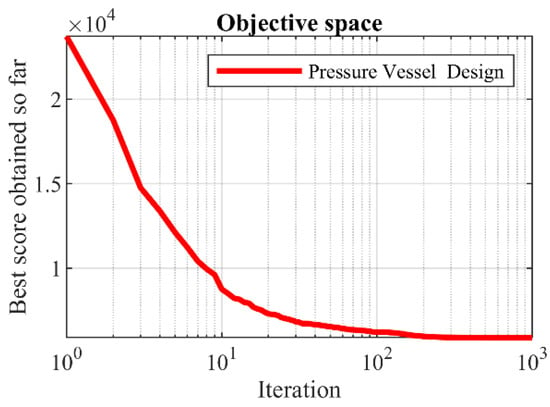

5.1. Pressure Vessel Design Problem

Pressure vessel design is an engineering optimization problem whose objective is to minimize the construction cost of the vessel while satisfying all design specifications and requirements. Figure 4 shows the schematic of the pressure vessel design and its structural elements. The mathematical model of the pressure vessel design problem is as follows [92]:

Figure 4.

Schematic of pressure vessel design.

Consider:

Minimize:

Subject to:

with

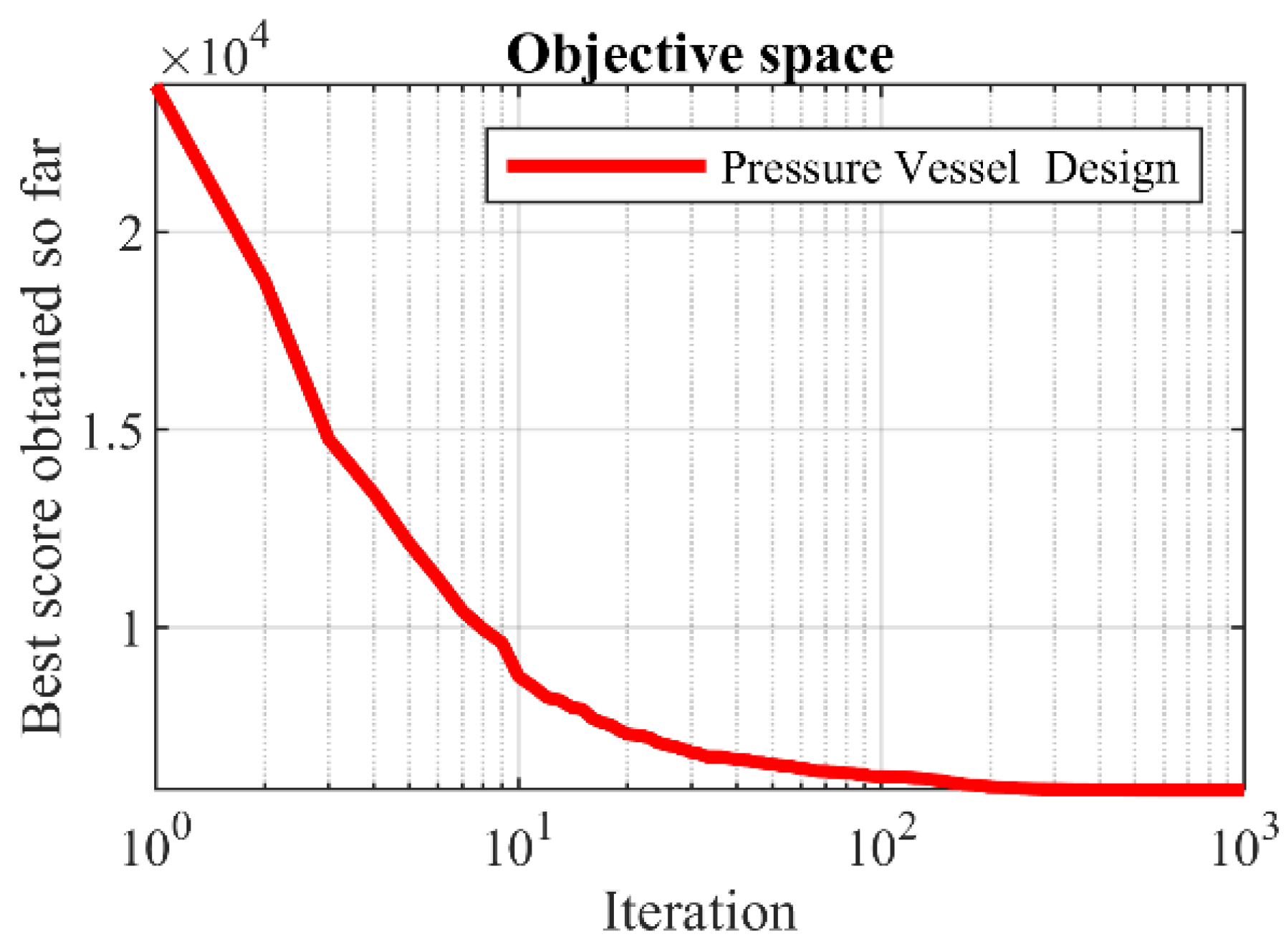

The results of hPSO-TLBO and the competing algorithms on the pressure vessel design problem are presented in Table 6 and Table 7. hPSO-TLBO achieved the optimal design for the pressure vessel. The design variables were determined as , with the objective function value of . The simulation results also show that hPSO-TLBO outperforms the competing algorithms in terms of the statistical indicators for this problem. Figure 5 shows the convergence curve of hPSO-TLBO while it achieves the optimal design of the pressure vessel.
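For readers who want to evaluate candidate designs, the sketch below encodes the pressure vessel formulation that is widely used in the literature and attributed to [92]. The model lines above are not legible in this copy, so the coefficients and constraints shown are the common textbook version and may differ in detail from the paper's (for example, some sources round 1.7781 to 1.778). The static quadratic penalty is a common way to let an unconstrained metaheuristic handle constraints. It is an assumption here, not necessarily the authors' constraint-handling method. All function names are hypothetical.

```python
import math
from typing import List, Sequence


def pressure_vessel_cost(x: Sequence[float]) -> float:
    ts, th, r, length = x  # shell thickness, head thickness, inner radius, cylinder length
    return (0.6224 * ts * r * length + 1.7781 * th * r**2
            + 3.1661 * ts**2 * length + 19.84 * ts**2 * r)


def pressure_vessel_constraints(x: Sequence[float]) -> List[float]:
    """Four constraints in g(x) <= 0 form."""
    ts, th, r, length = x
    return [
        -ts + 0.0193 * r,                  # minimum shell thickness
        -th + 0.00954 * r,                 # minimum head thickness
        -math.pi * r**2 * length - (4.0 / 3.0) * math.pi * r**3 + 1_296_000.0,  # minimum volume
        length - 240.0,                    # maximum length
    ]


def penalized_cost(x: Sequence[float], weight: float = 1e6) -> float:
    """Static quadratic penalty: cost plus weight * sum of squared constraint violations."""
    violation = sum(max(0.0, g) ** 2 for g in pressure_vessel_constraints(x))
    return pressure_vessel_cost(x) + weight * violation


x_ref = [0.7781686, 0.3846491, 40.3196187, 200.0]  # reference point used only as a check
print(pressure_vessel_cost(x_ref))  # approximately 5885.33
```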

Table 6.

Performance of optimization algorithms on pressure vessel design problem.

Table 7.

Statistical results of optimization algorithms on pressure vessel design problem.

Figure 5.

Convergence curve of hPSO-TLBO on the pressure vessel design problem.

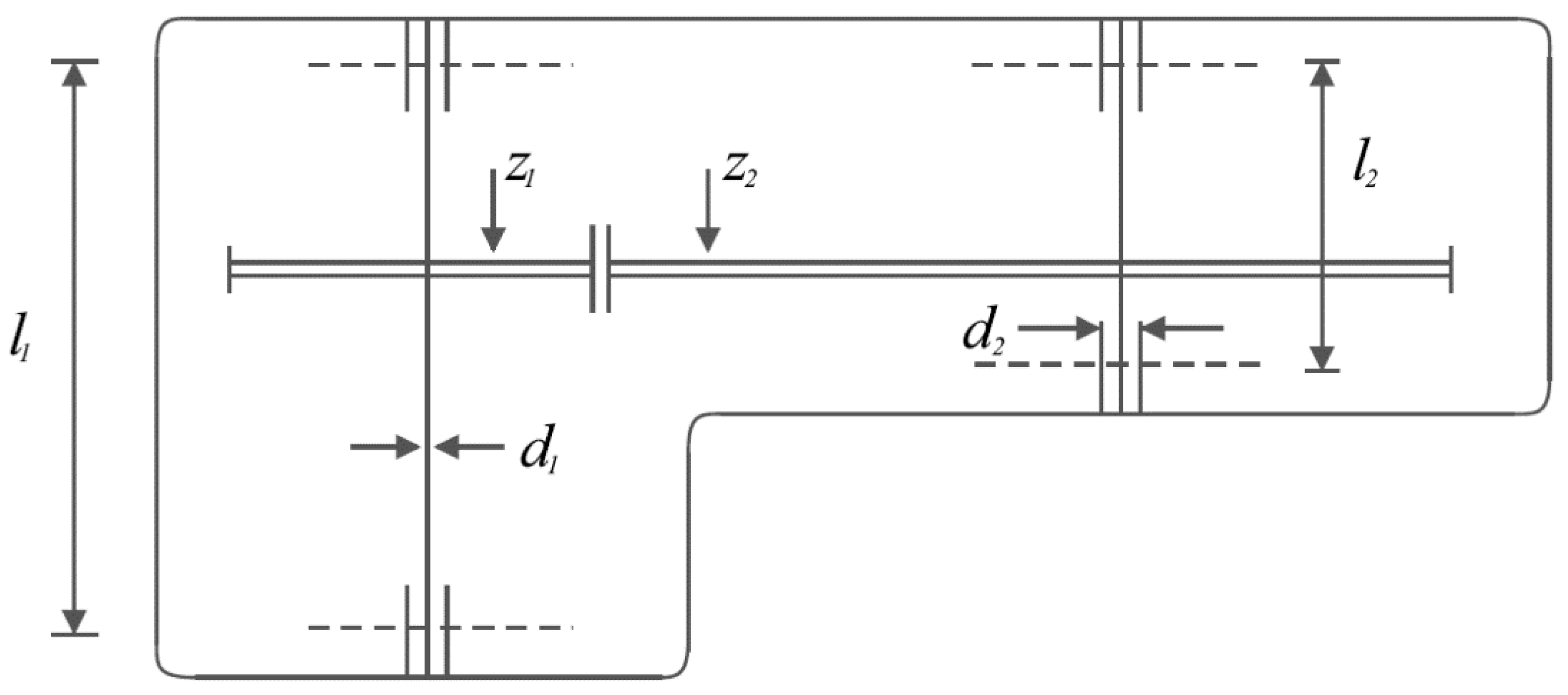

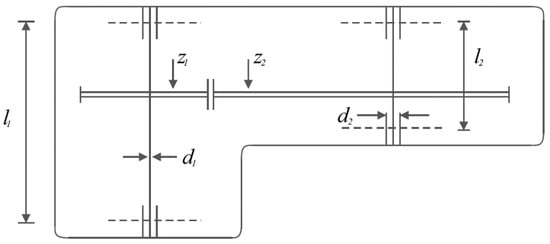

5.2. Speed Reducer Design Problem

Speed reducer design is a real-world engineering problem whose objective is to minimize the weight of the speed reducer. Its schematic is shown in Figure 6. The mathematical model of the speed reducer design, as given in [93,94], is as follows:

Figure 6.

Schematic of speed reducer design.

Consider:

Minimize:

Subject to:

with

and

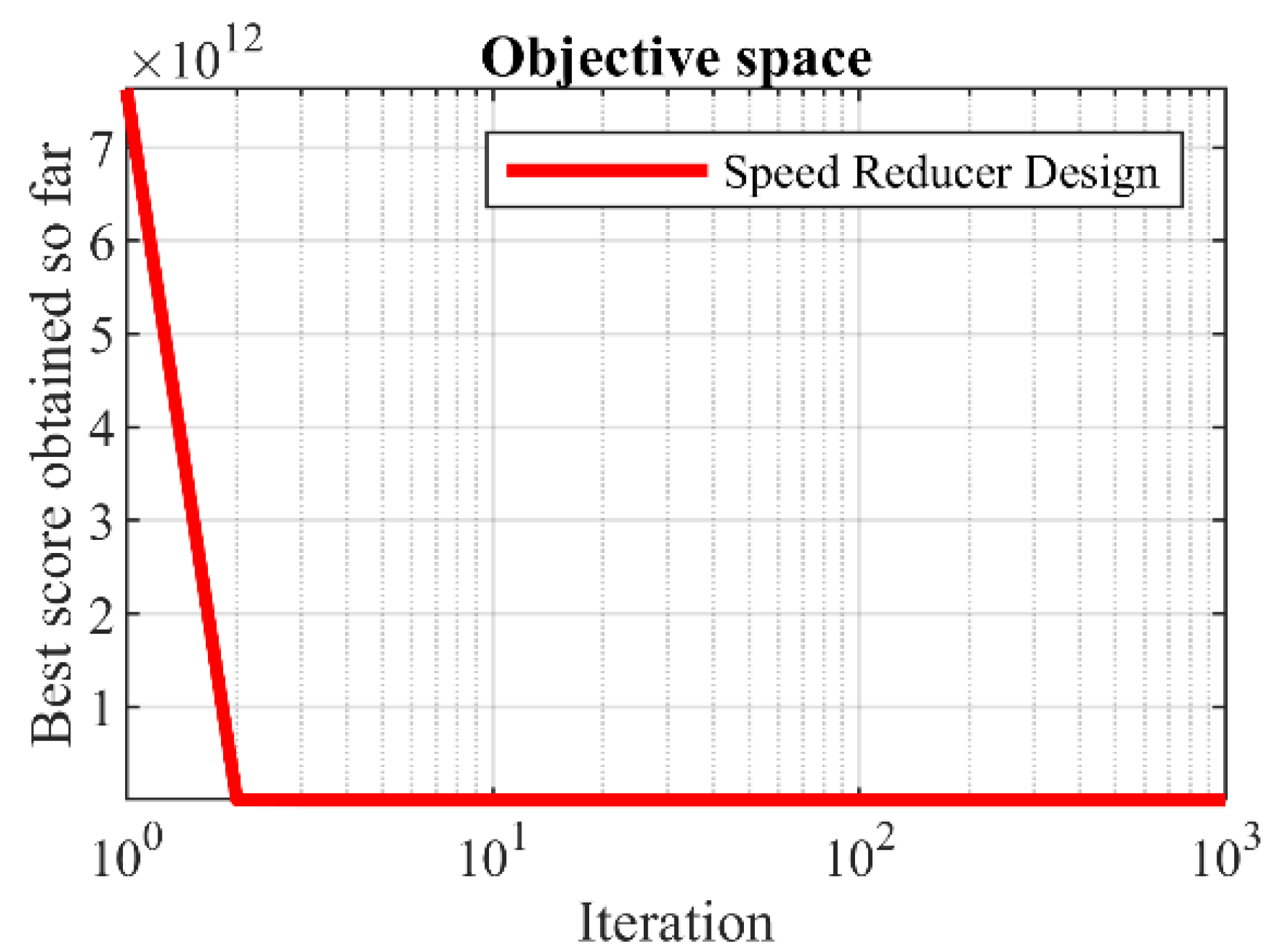

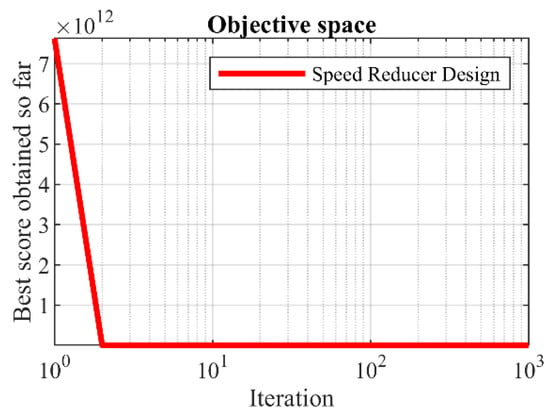

Table 8 and Table 9 present the results of hPSO-TLBO and the competing algorithms on the speed reducer design problem. hPSO-TLBO achieved the optimal design for the speed reducer. The model variables were determined as , resulting in an objective function value of . The simulation results show that hPSO-TLBO outperforms the competing methods on this problem and produces better statistical results. Figure 7 shows the convergence curve of hPSO-TLBO while it achieves the optimal design of the speed reducer.
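The sketch below encodes the speed reducer formulation commonly used in the literature (the Golinski speed reducer, as presented in sources such as [93,94]). The model lines above are not legible in this copy, so the coefficients, the eleven constraints, and the bounds in `BOUNDS` are the common version and are assumptions with respect to this paper. The reference point is used only as a numerical check. The function names are hypothetical.

```python
import math
from typing import List, Sequence


def speed_reducer_weight(x: Sequence[float]) -> float:
    # face width, tooth module, pinion teeth, shaft lengths l1 and l2, shaft diameters d1 and d2
    x1, x2, x3, x4, x5, x6, x7 = x
    return (0.7854 * x1 * x2**2 * (3.3333 * x3**2 + 14.9334 * x3 - 43.0934)
            - 1.508 * x1 * (x6**2 + x7**2)
            + 7.4777 * (x6**3 + x7**3)
            + 0.7854 * (x4 * x6**2 + x5 * x7**2))


def speed_reducer_constraints(x: Sequence[float]) -> List[float]:
    """Eleven constraints in g(x) <= 0 form."""
    x1, x2, x3, x4, x5, x6, x7 = x
    return [
        27.0 / (x1 * x2**2 * x3) - 1.0,                     # bending stress of gear teeth
        397.5 / (x1 * x2**2 * x3**2) - 1.0,                 # surface stress
        1.93 * x4**3 / (x2 * x3 * x6**4) - 1.0,             # deflection of shaft 1
        1.93 * x5**3 / (x2 * x3 * x7**4) - 1.0,             # deflection of shaft 2
        math.sqrt((745.0 * x4 / (x2 * x3)) ** 2 + 16.9e6) / (110.0 * x6**3) - 1.0,   # stress in shaft 1
        math.sqrt((745.0 * x5 / (x2 * x3)) ** 2 + 157.5e6) / (85.0 * x7**3) - 1.0,   # stress in shaft 2
        x2 * x3 / 40.0 - 1.0,
        5.0 * x2 / x1 - 1.0,
        x1 / (12.0 * x2) - 1.0,
        (1.5 * x6 + 1.9) / x4 - 1.0,
        (1.1 * x7 + 1.9) / x5 - 1.0,
    ]


BOUNDS = [(2.6, 3.6), (0.7, 0.8), (17.0, 28.0), (7.3, 8.3), (7.8, 8.3), (2.9, 3.9), (5.0, 5.5)]

x_ref = [3.5, 0.7, 17.0, 7.3, 7.8, 3.3502147, 5.2866832]  # reference point used only as a check
print(speed_reducer_weight(x_ref))  # approximately 2996.35
```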

Table 8.

Performance of optimization algorithms on speed reducer design problem.

Table 9.

Statistical results of optimization algorithms on speed reducer design problem.

Figure 7.

Convergence curve of hPSO-TLBO on the speed reducer design problem.

5.3. Welded Beam Design

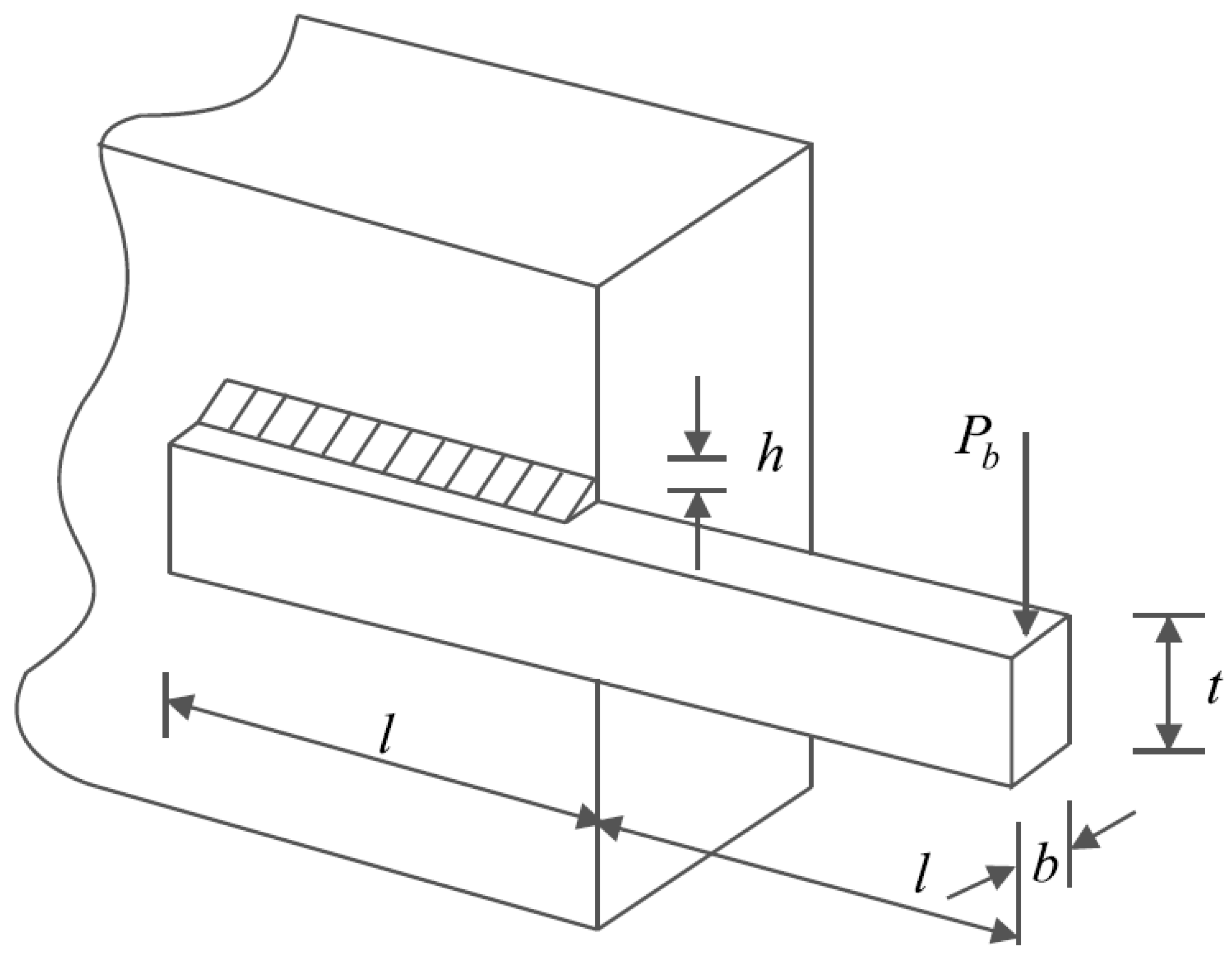

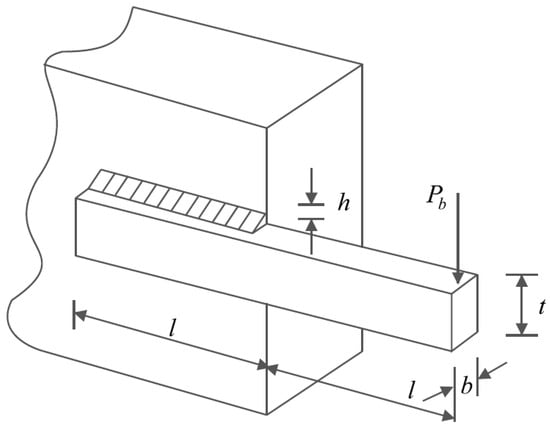

Welded beam design is an important real-world engineering problem whose objective is to minimize the fabrication cost of the welded beam. Figure 8 shows the schematic of the welded beam and its structural configuration. The mathematical model of the welded beam design is as follows [16]:

Figure 8.

Schematic of welded beam design.

Consider:

Minimize:

Subject to:

where

with

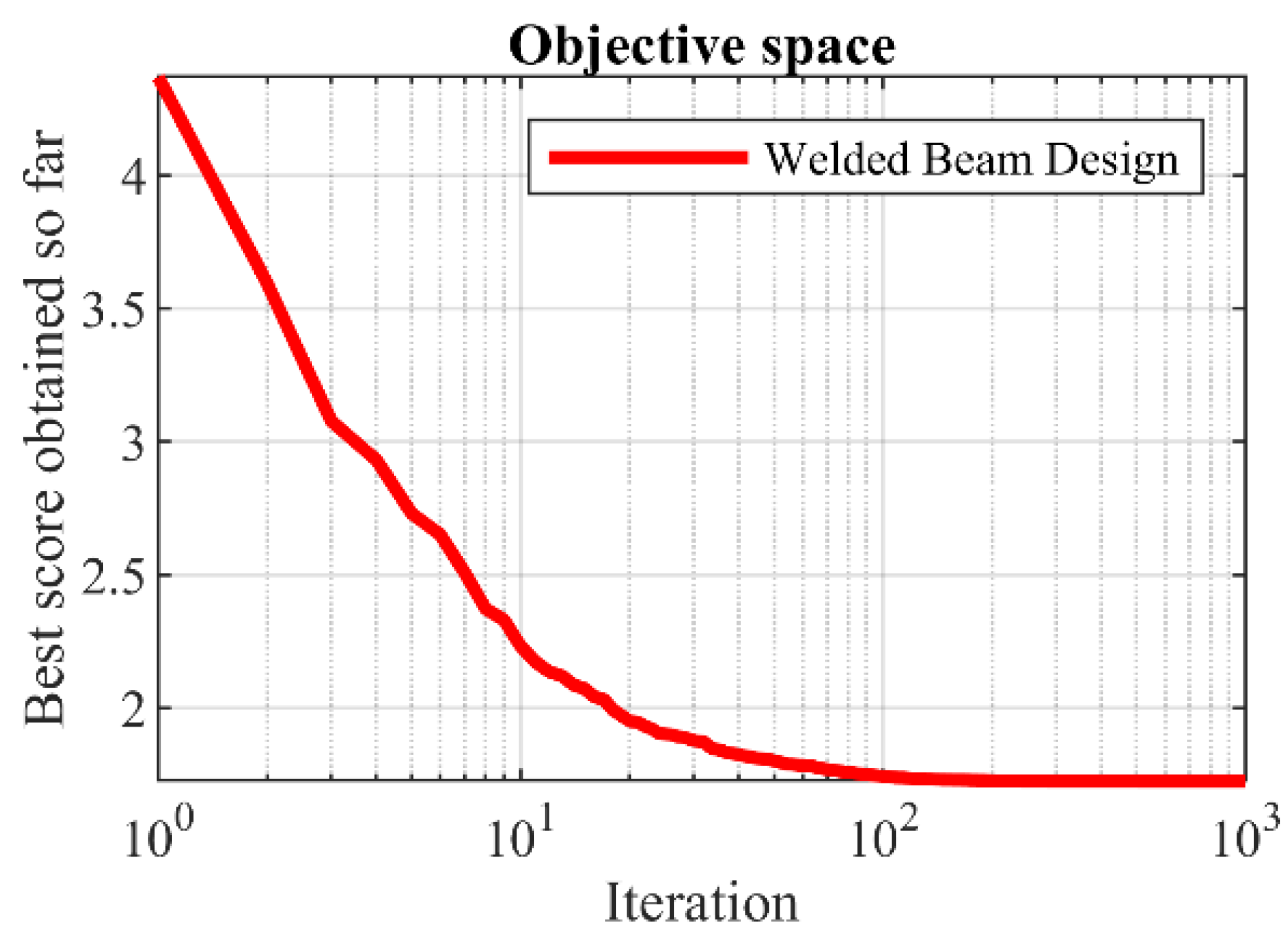

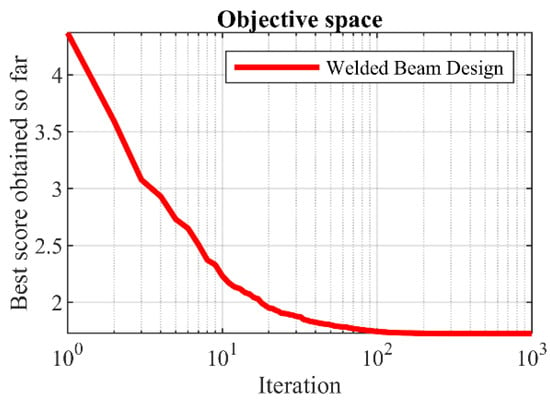

The results of hPSO-TLBO and the competing optimizers on the welded beam design problem are presented in Table 10 and Table 11. hPSO-TLBO achieved the optimal design for the welded beam. The design variables were determined to have values of , and the corresponding objective function value was found to be . The simulation results show that hPSO-TLBO outperforms the competing algorithms in terms of the statistical indicators for this problem. Figure 9 shows the convergence curve of hPSO-TLBO while it achieves the optimal design of the welded beam.
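The sketch below encodes the welded beam formulation commonly used in the literature (as presented, for example, in [16]). The model lines above are not legible in this copy, so the load, material constants, and seven constraints are the usual version and are assumptions with respect to this paper. The reference point is used only as a numerical check. The function and constant names are hypothetical.

```python
import math
from typing import List, Sequence

# Commonly used problem constants (assumed)
LOAD = 6000.0          # P, lb
BEAM_LENGTH = 14.0     # L, in
E_MOD = 30e6           # Young's modulus, psi
G_MOD = 12e6           # shear modulus, psi
TAU_MAX, SIGMA_MAX, DELTA_MAX = 13600.0, 30000.0, 0.25


def welded_beam_cost(x: Sequence[float]) -> float:
    h, length, t, b = x  # weld thickness, weld length, bar height, bar thickness
    return 1.10471 * h**2 * length + 0.04811 * t * b * (14.0 + length)


def welded_beam_constraints(x: Sequence[float]) -> List[float]:
    """Seven constraints in g(x) <= 0 form."""
    h, length, t, b = x
    tau_p = LOAD / (math.sqrt(2.0) * h * length)                 # primary shear stress
    moment = LOAD * (BEAM_LENGTH + length / 2.0)
    r = math.sqrt(length**2 / 4.0 + ((h + t) / 2.0) ** 2)
    j = 2.0 * (math.sqrt(2.0) * h * length * (length**2 / 12.0 + ((h + t) / 2.0) ** 2))
    tau_pp = moment * r / j                                      # secondary (torsional) shear stress
    tau = math.sqrt(tau_p**2 + 2.0 * tau_p * tau_pp * length / (2.0 * r) + tau_pp**2)
    sigma = 6.0 * LOAD * BEAM_LENGTH / (b * t**2)                # bending stress
    delta = 4.0 * LOAD * BEAM_LENGTH**3 / (E_MOD * t**3 * b)     # end deflection
    p_c = (4.013 * E_MOD * math.sqrt(t**2 * b**6 / 36.0) / BEAM_LENGTH**2) * (
        1.0 - t / (2.0 * BEAM_LENGTH) * math.sqrt(E_MOD / (4.0 * G_MOD)))  # buckling load
    return [
        tau - TAU_MAX,
        sigma - SIGMA_MAX,
        h - b,
        0.10471 * h**2 + 0.04811 * t * b * (14.0 + length) - 5.0,
        0.125 - h,
        delta - DELTA_MAX,
        LOAD - p_c,
    ]


x_ref = [0.205730, 3.470489, 9.036624, 0.205730]  # reference point used only as a check
print(welded_beam_cost(x_ref))  # approximately 1.7249
```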

Table 10.

Performance of optimization algorithms on welded beam design problem.

Table 11.

Statistical results of optimization algorithms on welded beam design problem.

Figure 9.

Convergence curve of hPSO-TLBO on the welded beam design problem.

5.4. Tension/Compression Spring Design

Tension/compression spring design is a real-world optimization problem whose objective is to minimize the weight of the spring. Its schematic is shown in Figure 10. The mathematical model of the tension/compression spring design is as follows [16]:

Figure 10.

Schematic of tension/compression spring design.

Consider:

Minimize:

Subject to:

with

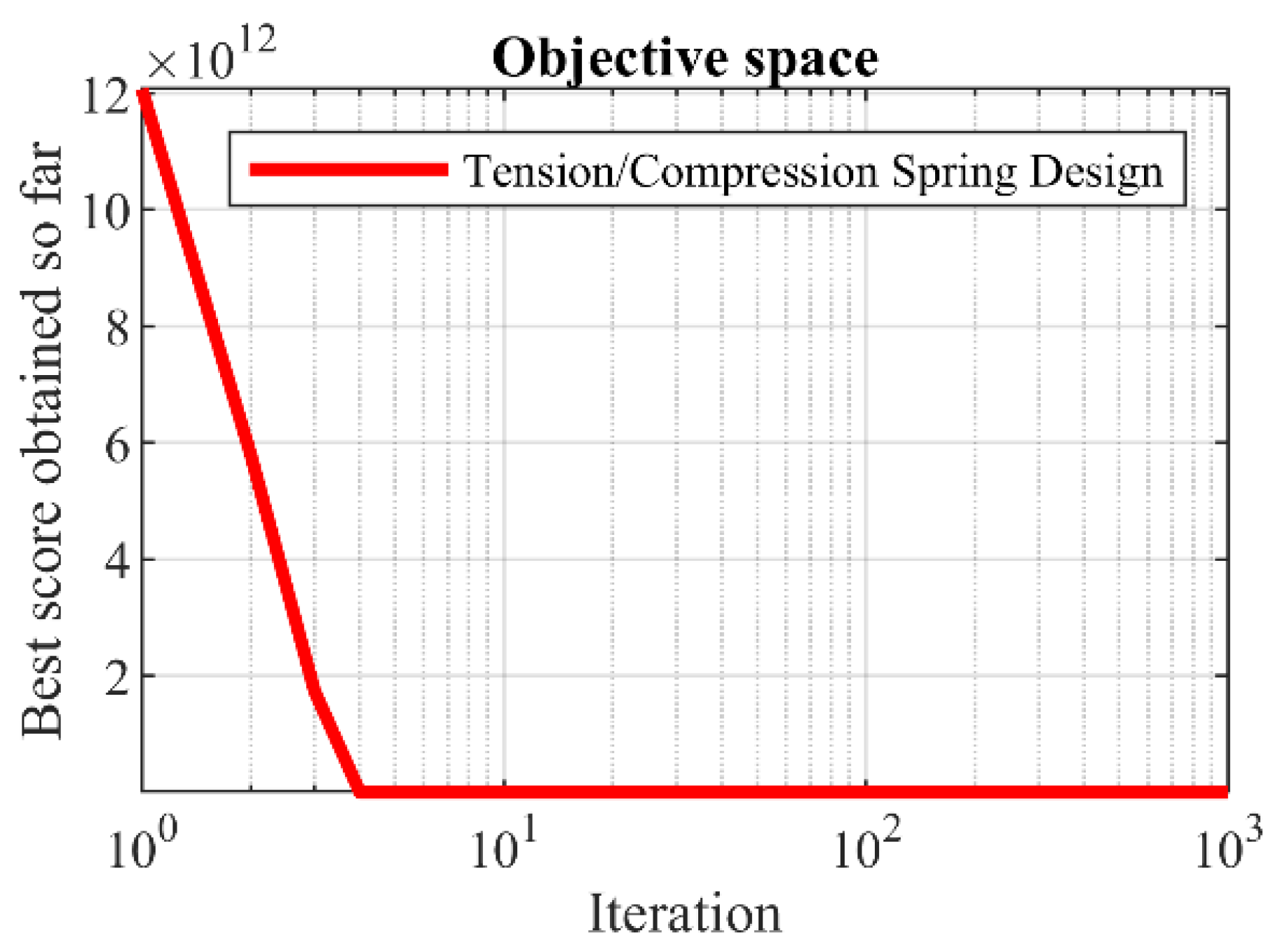

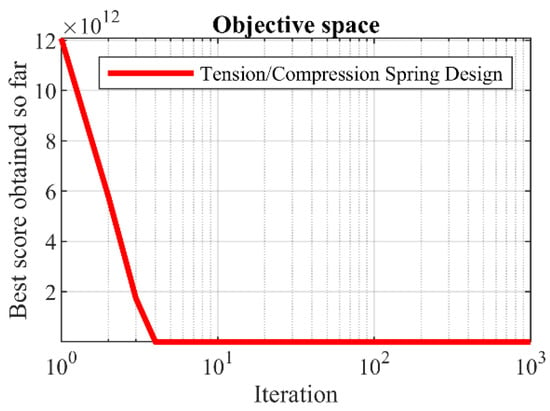

Table 12 and Table 13 present the results of hPSO-TLBO and the competing algorithms on the tension/compression spring design problem. hPSO-TLBO achieved the optimal design for the tension/compression spring. The design variables were determined to have values of , and the corresponding value of the objective function was found to be . The simulation results show that hPSO-TLBO outperforms the competing algorithms on this problem. Figure 11 shows the convergence curve of hPSO-TLBO while it achieves the optimal design of the tension/compression spring.
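The sketch below encodes the tension/compression spring formulation commonly used in the literature (as presented, for example, in [16]). The model lines above are not legible in this copy, so the constraints and the bounds in `BOUNDS` are the usual version and are assumptions with respect to this paper. The reference point is used only as a numerical check. The function names are hypothetical.

```python
from typing import List, Sequence


def spring_weight(x: Sequence[float]) -> float:
    d, coil_d, n_coils = x  # wire diameter, mean coil diameter, number of active coils
    return (n_coils + 2.0) * coil_d * d**2


def spring_constraints(x: Sequence[float]) -> List[float]:
    """Four constraints in g(x) <= 0 form."""
    d, coil_d, n_coils = x
    return [
        1.0 - coil_d**3 * n_coils / (71785.0 * d**4),                     # minimum deflection
        (4.0 * coil_d**2 - d * coil_d) / (12566.0 * (coil_d * d**3 - d**4))
        + 1.0 / (5108.0 * d**2) - 1.0,                                     # shear stress
        1.0 - 140.45 * d / (coil_d**2 * n_coils),                          # surge frequency
        (d + coil_d) / 1.5 - 1.0,                                          # outside diameter
    ]


BOUNDS = [(0.05, 2.0), (0.25, 1.3), (2.0, 15.0)]

x_ref = [0.051689, 0.356718, 11.288966]  # reference point used only as a check
print(spring_weight(x_ref))  # approximately 0.012665
```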

Table 12.

Performance of optimization algorithms on tension/compression spring design problem.

Table 13.

Statistical results of optimization algorithms on tension/compression spring design problem.

Figure 11.

Convergence curve of hPSO-TLBO on the tension/compression spring design problem.

6. Conclusions and Future Works

This paper presented a novel hybrid metaheuristic algorithm, hPSO-TLBO, which combines the strengths of particle swarm optimization (PSO) and teaching–learning-based optimization (TLBO). hPSO-TLBO is built on integrating the exploitation capability of PSO with the exploration ability of TLBO. Its performance was evaluated on fifty-two benchmark functions: twenty-three classical unimodal, high-dimensional multimodal, and fixed-dimensional multimodal functions, and twenty-nine functions from the CEC 2017 test suite. The results show that hPSO-TLBO performs well across these benchmarks and balances exploration and exploitation effectively. A comparison with twelve established metaheuristic algorithms confirms the superior performance of hPSO-TLBO, which is statistically significant according to the Wilcoxon test. In addition, hPSO-TLBO successfully solved four engineering design problems, demonstrating its efficacy in real-world applications.
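For reference, the two parent update rules that hPSO-TLBO draws on are sketched below in their canonical forms. The first is the PSO velocity and position update in its common inertia-weight form, a later refinement of the original PSO [10]. The second is the TLBO teacher-phase move with greedy acceptance [11]. This is not hPSO-TLBO itself, whose combination of the two is defined earlier in the paper. The parameter values (w = 0.7, c1 = c2 = 1.5) and the function names are illustrative assumptions.

```python
import random
from typing import Callable, List, Sequence, Tuple

Vector = List[float]


def pso_step(x: Sequence[float], v: Sequence[float], pbest: Sequence[float],
             gbest: Sequence[float], rng: random.Random,
             w: float = 0.7, c1: float = 1.5, c2: float = 1.5) -> Tuple[Vector, Vector]:
    """Inertia-weight PSO: update the velocity from the personal and global bests, then move."""
    new_v = [
        w * vi + c1 * rng.random() * (pi - xi) + c2 * rng.random() * (gi - xi)
        for xi, vi, pi, gi in zip(x, v, pbest, gbest)
    ]
    new_x = [xi + vi for xi, vi in zip(x, new_v)]
    return new_x, new_v


def tlbo_teacher_step(x: Sequence[float], teacher: Sequence[float], class_mean: Sequence[float],
                      objective: Callable[[Sequence[float]], float],
                      rng: random.Random) -> Vector:
    """TLBO teacher phase for one learner; the move is kept only if it improves the objective."""
    tf = rng.choice((1, 2))  # teaching factor, 1 or 2 with equal probability
    candidate = [xi + rng.random() * (ti - tf * mi) for xi, ti, mi in zip(x, teacher, class_mean)]
    return candidate if objective(candidate) < objective(x) else list(x)
```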

The introduction of hPSO-TLBO opens several avenues for future research. One promising direction is the development of discrete or multi-objective versions of hPSO-TLBO. Another is the application of hPSO-TLBO to a wider range of real-world problem domains.

Author Contributions

Conceptualization, I.M. and M.H.; methodology, M.H.; software, Š.H.; validation, M.H.; formal analysis, Š.H.; investigation, M.H.; resources, M.H.; data curation, Š.H.; writing—original draft preparation, M.H. and I.M.; writing—review and editing, Š.H.; visualization, M.H.; supervision, Š.H.; project administration, I.M.; funding acquisition, Š.H. and I.M. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Project of Specific Research, Faculty of Science, University of Hradec Králové, 2024.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data are contained within the article.

Acknowledgments

The authors thank the University of Hradec Králové for support.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Zhao, S.; Zhang, T.; Ma, S.; Chen, M. Dandelion Optimizer: A nature-inspired metaheuristic algorithm for engineering applications. Eng. Appl. Artif. Intell. 2022, 114, 105075.

- Sergeyev, Y.D.; Kvasov, D.; Mukhametzhanov, M. On the efficiency of nature-inspired metaheuristics in expensive global optimization with limited budget. Sci. Rep. 2018, 8, 453.

- Jahani, E.; Chizari, M. Tackling global optimization problems with a novel algorithm—Mouth Brooding Fish algorithm. Appl. Soft Comput. 2018, 62, 987–1002.

- Liberti, L.; Kucherenko, S. Comparison of deterministic and stochastic approaches to global optimization. Int. Trans. Oper. Res. 2005, 12, 263–285.

- Zeidabadi, F.-A.; Dehghani, M.; Trojovský, P.; Hubálovský, Š.; Leiva, V.; Dhiman, G. Archery Algorithm: A Novel Stochastic Optimization Algorithm for Solving Optimization Problems. Comput. Mater. Contin. 2022, 72, 399–416.

- De Armas, J.; Lalla-Ruiz, E.; Tilahun, S.L.; Voß, S. Similarity in metaheuristics: A gentle step towards a comparison methodology. Nat. Comput. 2022, 21, 265–287.

- Dehghani, M.; Montazeri, Z.; Dehghani, A.; Malik, O.P.; Morales-Menendez, R.; Dhiman, G.; Nouri, N.; Ehsanifar, A.; Guerrero, J.M.; Ramirez-Mendoza, R.A. Binary spring search algorithm for solving various optimization problems. Appl. Sci. 2021, 11, 1286.

- Trojovská, E.; Dehghani, M.; Trojovský, P. Zebra Optimization Algorithm: A New Bio-Inspired Optimization Algorithm for Solving Optimization Algorithm. IEEE Access 2022, 10, 49445–49473.

- Wolpert, D.H.; Macready, W.G. No free lunch theorems for optimization. IEEE Trans. Evol. Comput. 1997, 1, 67–82.

- Kennedy, J.; Eberhart, R. Particle Swarm Optimization. In Proceedings of the ICNN’95—International Conference on Neural Networks, Perth, WA, Australia, 27 November–1 December 1995; Volume 4, pp. 1942–1948.

- Rao, R.V.; Savsani, V.J.; Vakharia, D. Teaching–learning-based optimization: A novel method for constrained mechanical design optimization problems. Comput.-Aided Des. 2011, 43, 303–315.

- Karaboga, D.; Basturk, B. Artificial Bee Colony (ABC) Optimization Algorithm for Solving Constrained Optimization Problems. In International Fuzzy Systems Association World Congress; Springer: Berlin/Heidelberg, Germany, 2007; pp. 789–798.

- Dorigo, M.; Maniezzo, V.; Colorni, A. Ant system: Optimization by a colony of cooperating agents. IEEE Trans. Syst. Man Cybern. Part B 1996, 26, 29–41.

- Yang, X.-S. Firefly Algorithms for Multimodal Optimization. In Proceedings of the International Symposium on Stochastic Algorithms, Sapporo, Japan, 26–28 October 2009; Springer: Berlin/Heidelberg, Germany, 2009; pp. 169–178.

- Dehghani, M.; Montazeri, Z.; Trojovská, E.; Trojovský, P. Coati Optimization Algorithm: A new bio-inspired metaheuristic algorithm for solving optimization problems. Knowl.-Based Syst. 2023, 259, 110011.

- Mirjalili, S.; Lewis, A. The whale optimization algorithm. Adv. Eng. Softw. 2016, 95, 51–67.

- Braik, M.; Hammouri, A.; Atwan, J.; Al-Betar, M.A.; Awadallah, M.A. White Shark Optimizer: A novel bio-inspired meta-heuristic algorithm for global optimization problems. Knowl.-Based Syst. 2022, 243, 108457.

- Abualigah, L.; Abd Elaziz, M.; Sumari, P.; Geem, Z.W.; Gandomi, A.H. Reptile Search Algorithm (RSA): A nature-inspired meta-heuristic optimizer. Expert Syst. Appl. 2022, 191, 116158.

- Trojovský, P.; Dehghani, M. Pelican Optimization Algorithm: A Novel Nature-Inspired Algorithm for Engineering Applications. Sensors 2022, 22, 855.

- Dehghani, M.; Montazeri, Z.; Bektemyssova, G.; Malik, O.P.; Dhiman, G.; Ahmed, A.E. Kookaburra Optimization Algorithm: A New Bio-Inspired Metaheuristic Algorithm for Solving Optimization Problems. Biomimetics 2023, 8, 470.

- Mirjalili, S.; Mirjalili, S.M.; Lewis, A. Grey Wolf Optimizer. Adv. Eng. Softw. 2014, 69, 46–61.

- Trojovský, P.; Dehghani, M. A new bio-inspired metaheuristic algorithm for solving optimization problems based on walruses behavior. Sci. Rep. 2023, 13, 8775.

- Chopra, N.; Ansari, M.M. Golden Jackal Optimization: A Novel Nature-Inspired Optimizer for Engineering Applications. Expert Syst. Appl. 2022, 198, 116924.

- Hashim, F.A.; Houssein, E.H.; Hussain, K.; Mabrouk, M.S.; Al-Atabany, W. Honey Badger Algorithm: New metaheuristic algorithm for solving optimization problems. Math. Comput. Simul. 2022, 192, 84–110.

- Dehghani, M.; Bektemyssova, G.; Montazeri, Z.; Shaikemelev, G.; Malik, O.P.; Dhiman, G. Lyrebird Optimization Algorithm: A New Bio-Inspired Metaheuristic Algorithm for Solving Optimization Problems. Biomimetics 2023, 8, 507.

- Faramarzi, A.; Heidarinejad, M.; Mirjalili, S.; Gandomi, A.H. Marine Predators Algorithm: A nature-inspired metaheuristic. Expert Syst. Appl. 2020, 152, 113377.

- Abdollahzadeh, B.; Gharehchopogh, F.S.; Mirjalili, S. African vultures optimization algorithm: A new nature-inspired metaheuristic algorithm for global optimization problems. Comput. Ind. Eng. 2021, 158, 107408.

- Kaur, S.; Awasthi, L.K.; Sangal, A.L.; Dhiman, G. Tunicate Swarm Algorithm: A new bio-inspired based metaheuristic paradigm for global optimization. Eng. Appl. Artif. Intell. 2020, 90, 103541.

- Goldberg, D.E.; Holland, J.H. Genetic Algorithms and Machine Learning. Mach. Learn. 1988, 3, 95–99.

- Storn, R.; Price, K. Differential evolution–a simple and efficient heuristic for global optimization over continuous spaces. J. Glob. Optim. 1997, 11, 341–359.

- De Castro, L.N.; Timmis, J.I. Artificial immune systems as a novel soft computing paradigm. Soft Comput. 2003, 7, 526–544.

- Kirkpatrick, S.; Gelatt, C.D.; Vecchi, M.P. Optimization by simulated annealing. Science 1983, 220, 671–680.

- Rashedi, E.; Nezamabadi-Pour, H.; Saryazdi, S. GSA: A gravitational search algorithm. Inf. Sci. 2009, 179, 2232–2248.

- Dehghani, M.; Montazeri, Z.; Dhiman, G.; Malik, O.; Morales-Menendez, R.; Ramirez-Mendoza, R.A.; Dehghani, A.; Guerrero, J.M.; Parra-Arroyo, L. A spring search algorithm applied to engineering optimization problems. Appl. Sci. 2020, 10, 6173.

- Dehghani, M.; Samet, H. Momentum search algorithm: A new meta-heuristic optimization algorithm inspired by momentum conservation law. SN Appl. Sci. 2020, 2, 1720.

- Eskandar, H.; Sadollah, A.; Bahreininejad, A.; Hamdi, M. Water cycle algorithm–A novel metaheuristic optimization method for solving constrained engineering optimization problems. Comput. Struct. 2012, 110, 151–166.

- Cuevas, E.; Oliva, D.; Zaldivar, D.; Pérez-Cisneros, M.; Sossa, H. Circle detection using electro-magnetism optimization. Inf. Sci. 2012, 182, 40–55.

- Hashim, F.A.; Hussain, K.; Houssein, E.H.; Mabrouk, M.S.; Al-Atabany, W. Archimedes optimization algorithm: A new metaheuristic algorithm for solving optimization problems. Appl. Intell. 2021, 51, 1531–1551.

- Pereira, J.L.J.; Francisco, M.B.; Diniz, C.A.; Oliver, G.A.; Cunha, S.S., Jr; Gomes, G.F. Lichtenberg algorithm: A novel hybrid physics-based meta-heuristic for global optimization. Expert Syst. Appl. 2021, 170, 114522.

- Faramarzi, A.; Heidarinejad, M.; Stephens, B.; Mirjalili, S. Equilibrium optimizer: A novel optimization algorithm. Knowl.-Based Syst. 2020, 191, 105190.

- Hatamlou, A. Black hole: A new heuristic optimization approach for data clustering. Inf. Sci. 2013, 222, 175–184.

- Mirjalili, S.; Mirjalili, S.M.; Hatamlou, A. Multi-verse optimizer: A nature-inspired algorithm for global optimization. Neural Comput. Appl. 2016, 27, 495–513.

- Kaveh, A.; Dadras, A. A novel meta-heuristic optimization algorithm: Thermal exchange optimization. Adv. Eng. Softw. 2017, 110, 69–84.

- Dehghani, M.; Mardaneh, M.; Guerrero, J.M.; Malik, O.; Kumar, V. Football game based optimization: An application to solve energy commitment problem. Int. J. Intell. Eng. Syst. 2020, 13, 514–523.

- Moghdani, R.; Salimifard, K. Volleyball premier league algorithm. Appl. Soft Comput. 2018, 64, 161–185.

- Kaveh, A.; Zolghadr, A. A Novel Meta-Heuristic Algorithm: Tug of War Optimization. Int. J. Optim. Civ. Eng. 2016, 6, 469–492.

- Montazeri, Z.; Niknam, T.; Aghaei, J.; Malik, O.P.; Dehghani, M.; Dhiman, G. Golf Optimization Algorithm: A New Game-Based Metaheuristic Algorithm and Its Application to Energy Commitment Problem Considering Resilience. Biomimetics 2023, 8, 386.

- Dehghani, M.; Montazeri, Z.; Saremi, S.; Dehghani, A.; Malik, O.P.; Al-Haddad, K.; Guerrero, J.M. HOGO: Hide objects game optimization. Int. J. Intell. Eng. Syst. 2020, 13, 216–225.

- Dehghani, M.; Montazeri, Z.; Givi, H.; Guerrero, J.M.; Dhiman, G. Darts game optimizer: A new optimization technique based on darts game. Int. J. Intell. Eng. Syst. 2020, 13, 286–294.

- Zeidabadi, F.A.; Dehghani, M. POA: Puzzle Optimization Algorithm. Int. J. Intell. Eng. Syst. 2022, 15, 273–281.

- Dehghani, M.; Mardaneh, M.; Guerrero, J.M.; Malik, O.P.; Ramirez-Mendoza, R.A.; Matas, J.; Vasquez, J.C.; Parra-Arroyo, L. A new “Doctor and Patient” optimization algorithm: An application to energy commitment problem. Appl. Sci. 2020, 10, 5791.

- Dehghani, M.; Trojovský, P. Teamwork Optimization Algorithm: A New Optimization Approach for Function Minimization/Maximization. Sensors 2021, 21, 4567.

- Moosavi, S.H.S.; Bardsiri, V.K. Poor and rich optimization algorithm: A new human-based and multi populations algorithm. Eng. Appl. Artif. Intell. 2019, 86, 165–181.

- Matoušová, I.; Trojovský, P.; Dehghani, M.; Trojovská, E.; Kostra, J. Mother optimization algorithm: A new human-based metaheuristic approach for solving engineering optimization. Sci. Rep. 2023, 13, 10312.

- Al-Betar, M.A.; Alyasseri, Z.A.A.; Awadallah, M.A.; Abu Doush, I. Coronavirus herd immunity optimizer (CHIO). Neural Comput. Appl. 2021, 33, 5011–5042.

- Dehghani, M.; Trojovská, E.; Trojovský, P. A new human-based metaheuristic algorithm for solving optimization problems on the base of simulation of driving training process. Sci. Rep. 2022, 12, 9924.

- Braik, M.; Ryalat, M.H.; Al-Zoubi, H. A novel meta-heuristic algorithm for solving numerical optimization problems: Ali Baba and the forty thieves. Neural Comput. Appl. 2022, 34, 409–455.

- Trojovský, P.; Dehghani, M. A new optimization algorithm based on mimicking the voting process for leader selection. PeerJ Comput. Sci. 2022, 8, e976.

- Trojovská, E.; Dehghani, M. A new human-based metahurestic optimization method based on mimicking cooking training. Sci. Rep. 2022, 12, 14861.

- Dehghani, M.; Trojovská, E.; Zuščák, T. A new human-inspired metaheuristic algorithm for solving optimization problems based on mimicking sewing training. Sci. Rep. 2022, 12, 17387.

- Trojovský, P.; Dehghani, M.; Trojovská, E.; Milkova, E. The Language Education Optimization: A New Human-Based Metaheuristic Algorithm for Solving Optimization Problems: Language Education Optimization. Comput. Model. Eng. Sci. 2022, 136, 1527–1573.

- Mohamed, A.W.; Hadi, A.A.; Mohamed, A.K. Gaining-sharing knowledge based algorithm for solving optimization problems: A novel nature-inspired algorithm. Int. J. Mach. Learn. Cybern. 2020, 11, 1501–1529.

- Ayyarao, T.L.; RamaKrishna, N.; Elavarasam, R.M.; Polumahanthi, N.; Rambabu, M.; Saini, G.; Khan, B.; Alatas, B. War Strategy Optimization Algorithm: A New Effective Metaheuristic Algorithm for Global Optimization. IEEE Access 2022, 10, 25073–25105.

- Talatahari, S.; Goodarzimehr, V.; Taghizadieh, N. Hybrid teaching-learning-based optimization and harmony search for optimum design of space trusses. J. Optim. Ind. Eng. 2020, 13, 177–194.

- Khatir, A.; Capozucca, R.; Khatir, S.; Magagnini, E.; Benaissa, B.; Le Thanh, C.; Wahab, M.A. A new hybrid PSO-YUKI for double cracks identification in CFRP cantilever beam. Compos. Struct. 2023, 311, 116803.

- Al Thobiani, F.; Khatir, S.; Benaissa, B.; Ghandourah, E.; Mirjalili, S.; Wahab, M.A. A hybrid PSO and Grey Wolf Optimization algorithm for static and dynamic crack identification. Theor. Appl. Fract. Mech. 2022, 118, 103213.

- Singh, R.; Chaudhary, H.; Singh, A.K. A new hybrid teaching–learning particle swarm optimization algorithm for synthesis of linkages to generate path. Sādhanā 2017, 42, 1851–1870.

- Wang, H.; Li, Y. Hybrid teaching-learning-based PSO for trajectory optimisation. Electron. Lett. 2017, 53, 777–779.

- Yun, Y.; Gen, M.; Erdene, T.N. Applying GA-PSO-TLBO approach to engineering optimization problems. Math. Biosci. Eng. 2023, 20, 552–571.

- Azad-Farsani, E.; Zare, M.; Azizipanah-Abarghooee, R.; Askarian-Abyaneh, H. A new hybrid CPSO-TLBO optimization algorithm for distribution network reconfiguration. J. Intell. Fuzzy Syst. 2014, 26, 2175–2184.

- Shukla, A.K.; Singh, P.; Vardhan, M. A new hybrid wrapper TLBO and SA with SVM approach for gene expression data. Inf. Sci. 2019, 503, 238–254.

- Nenavath, H.; Jatoth, R.K. Hybrid SCA–TLBO: A novel optimization algorithm for global optimization and visual tracking. Neural Comput. Appl. 2019, 31, 5497–5526.

- Sharma, S.R.; Singh, B.; Kaur, M. Hybrid SFO and TLBO optimization for biodegradable classification. Soft Comput. 2021, 25, 15417–15443.

- Kundu, T.; Deepmala; Jain, P. A hybrid salp swarm algorithm based on TLBO for reliability redundancy allocation problems. Appl. Intell. 2022, 52, 12630–12667.

- Lin, S.; Liu, A.; Wang, J.; Kong, X. An intelligence-based hybrid PSO-SA for mobile robot path planning in warehouse. J. Comput. Sci. 2023, 67, 101938.

- Murugesan, S.; Suganyadevi, M.V. Performance Analysis of Simplified Seven-Level Inverter using Hybrid HHO-PSO Algorithm for Renewable Energy Applications. Iran. J. Sci. Technol. Trans. Electr. Eng. 2023.

- Hosseini, M.; Navabi, M.S. Hybrid PSO-GSA based approach for feature selection. J. Ind. Eng. Manag. Stud. 2023, 10, 1–15.

- Bhandari, A.S.; Kumar, A.; Ram, M. Reliability optimization and redundancy allocation for fire extinguisher drone using hybrid PSO–GWO. Soft Comput. 2023, 27, 14819–14833.

- Amirteimoori, A.; Mahdavi, I.; Solimanpur, M.; Ali, S.S.; Tirkolaee, E.B. A parallel hybrid PSO-GA algorithm for the flexible flow-shop scheduling with transportation. Comput. Ind. Eng. 2022, 173, 108672.

- Koh, J.S.; Tan, R.H.; Lim, W.H.; Tan, N.M. A Modified Particle Swarm Optimization for Efficient Maximum Power Point Tracking under Partial Shading Condition. IEEE Trans. Sustain. Energy 2023, 14, 1822–1834.

- Zare, M.; Akbari, M.-A.; Azizipanah-Abarghooee, R.; Malekpour, M.; Mirjalili, S.; Abualigah, L. A modified Particle Swarm Optimization algorithm with enhanced search quality and population using Hummingbird Flight patterns. Decis. Anal. J. 2023, 7, 100251.

- Cui, G.; Qin, L.; Liu, S.; Wang, Y.; Zhang, X.; Cao, X. Modified PSO algorithm for solving planar graph coloring problem. Prog. Nat. Sci. 2008, 18, 353–357.

- Lihong, H.; Nan, Y.; Jianhua, W.; Ying, S.; Jingjing, D.; Ying, X. Application of Modified PSO in the Optimization of Reactive Power. In Proceedings of the 2009 Chinese Control and Decision Conference, Guilin, China, 17–19 June 2009; pp. 3493–3496.

- Krishnamurthy, N.K.; Sabhahit, J.N.; Jadoun, V.K.; Gaonkar, D.N.; Shrivastava, A.; Rao, V.S.; Kudva, G. Optimal Placement and Sizing of Electric Vehicle Charging Infrastructure in a Grid-Tied DC Microgrid Using Modified TLBO Method. Energies 2023, 16, 1781.

- Eirgash, M.A.; Toğan, V.; Dede, T.; Başağa, H.B. Modified Dynamic Opposite Learning Assisted TLBO for Solving Time-Cost Optimization in Generalized Construction Projects. In Structures; Elsevier: Amsterdam, The Netherlands, 2023; pp. 806–821.

- Amiri, H.; Radfar, N.; Arab Solghar, A.; Mashayekhi, M. Two improved teaching–learning-based optimization algorithms for the solution of inverse boundary design problems. Soft Comput. 2023, 1–22.

- Yaqoob, M.T.; Rahmat, M.K.; Maharum, S.M.M. Modified teaching learning based optimization for selective harmonic elimination in multilevel inverters. Ain Shams Eng. J. 2022, 13, 101714.

- Yao, X.; Liu, Y.; Lin, G. Evolutionary programming made faster. IEEE Trans. Evol. Comput. 1999, 3, 82–102.

- Awad, N.; Ali, M.; Liang, J.; Qu, B.; Suganthan, P. Problem Definitions and Evaluation Criteria for the CEC 2017 Special Session and Competition on Single Objective Real-Parameter Numerical Optimization. Technol. Rep. 2016.

- Bashir, M.U.; Paul, W.U.H.; Ahmad, M.; Ali, D.; Ali, M.S. An Efficient Hybrid TLBO-PSO Approach for Congestion Management Employing Real Power Generation Rescheduling. Smart Grid Renew. Energy 2021, 12, 113–135.

- Wilcoxon, F. Individual comparisons by ranking methods. In Breakthroughs in Statistics; Springer: Berlin/Heidelberg, Germany, 1992; pp. 196–202.

- Kannan, B.; Kramer, S.N. An augmented Lagrange multiplier based method for mixed integer discrete continuous optimization and its applications to mechanical design. J. Mech. Des. 1994, 116, 405–411.

- Gandomi, A.H.; Yang, X.-S. Benchmark problems in structural optimization. In Computational Optimization, Methods and Algorithms; Springer: Berlin/Heidelberg, Germany, 2011; pp. 259–281.

- Mezura-Montes, E.; Coello, C.A.C. Useful infeasible solutions in engineering optimization with evolutionary algorithms. In Proceedings of the Mexican International Conference on Artificial Intelligence, Monterrey, Mexico, 14–18 November 2005; Springer: Berlin/Heidelberg, Germany, 2005; pp. 652–662.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).