A Semi-Autonomous Hierarchical Control Framework for Prosthetic Hands Inspired by Dual Streams of Human

Abstract

1. Introduction

2. Related Work

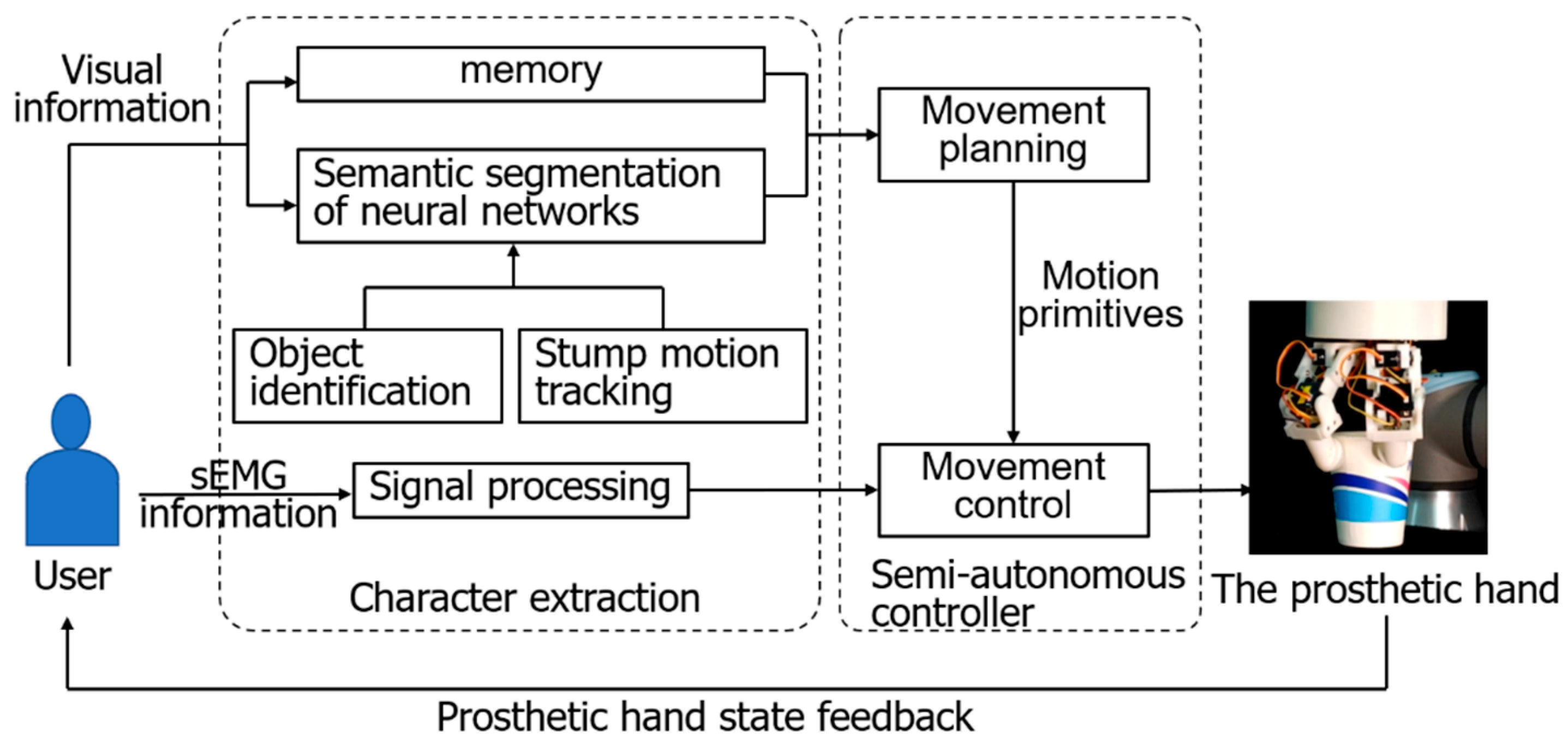

- (1)

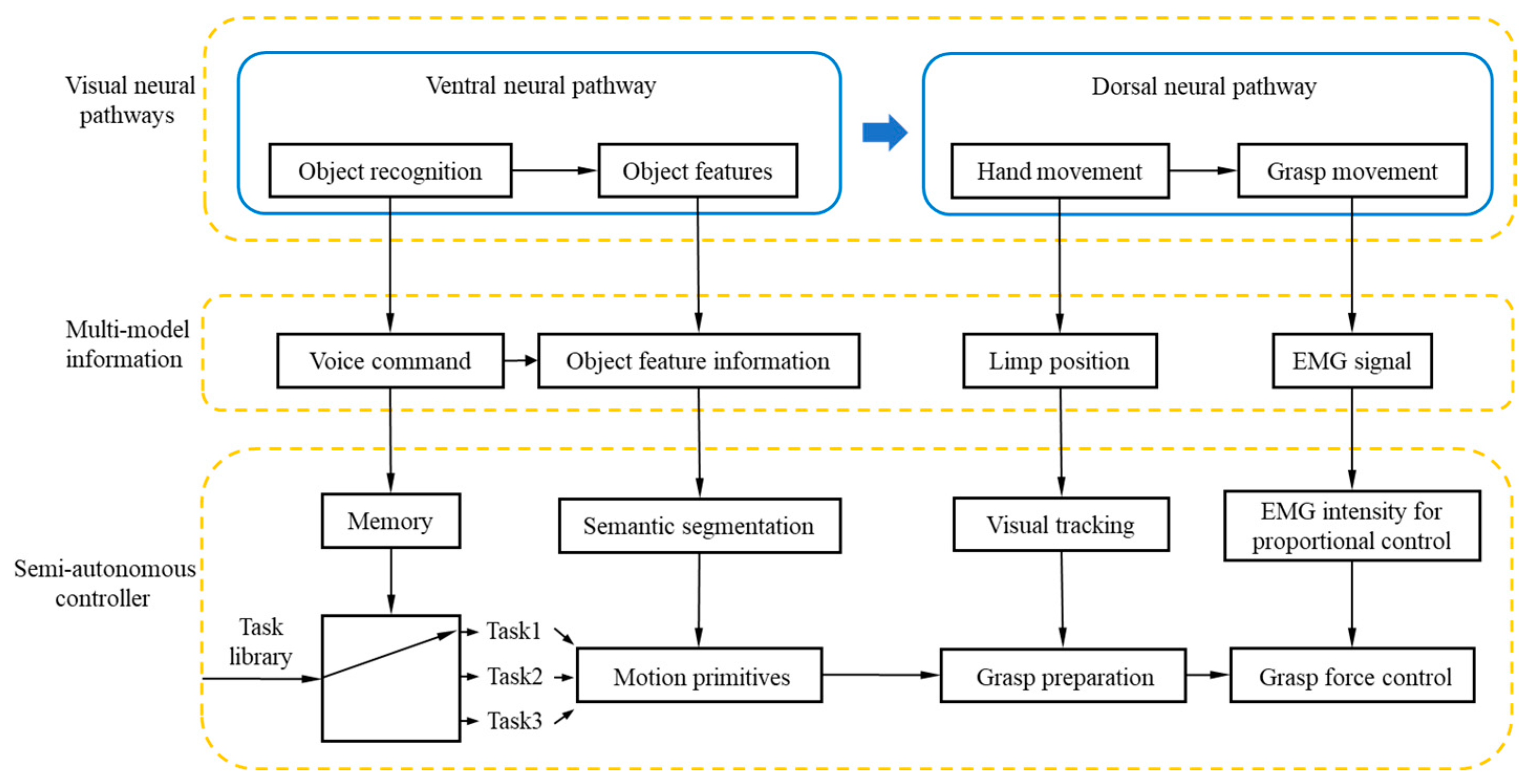

- A controller is constructed based on the pathway of the human ventral–dorsal nerves. Object semantic segmentation and convolutional neural network (CNN) recognition are categorized as the ventral stream, while the motion tracking of the limb is introduced as the control of the dorsal stream. Moreover, the dorsal stream and ventral stream are integrated to ensure accurate motion primitives.

- (2)

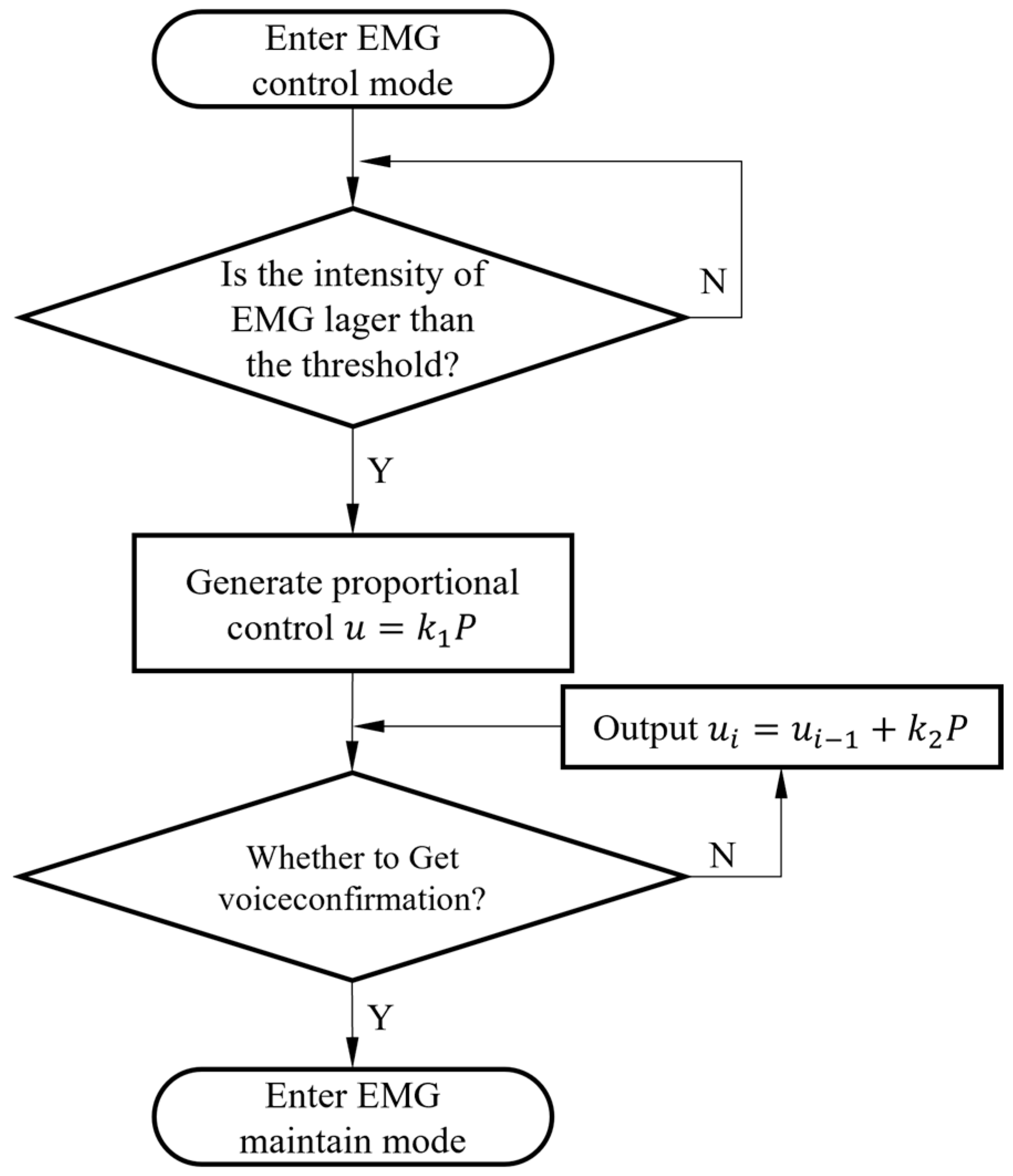

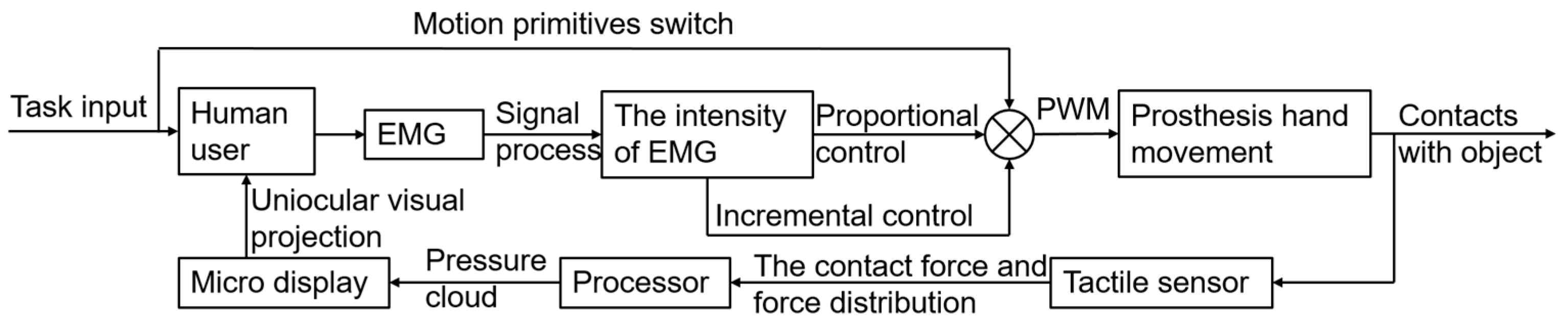

- In order to reduce the cognitive burden, a semi-autonomous controller is proposed. Feedback of the prosthetic hand is integrated to enhance the perceived experience. EMG signals of the user are obtained to realize the human in the loop control.

3. Methodology

3.1. Task-Centric Planning

- (1)

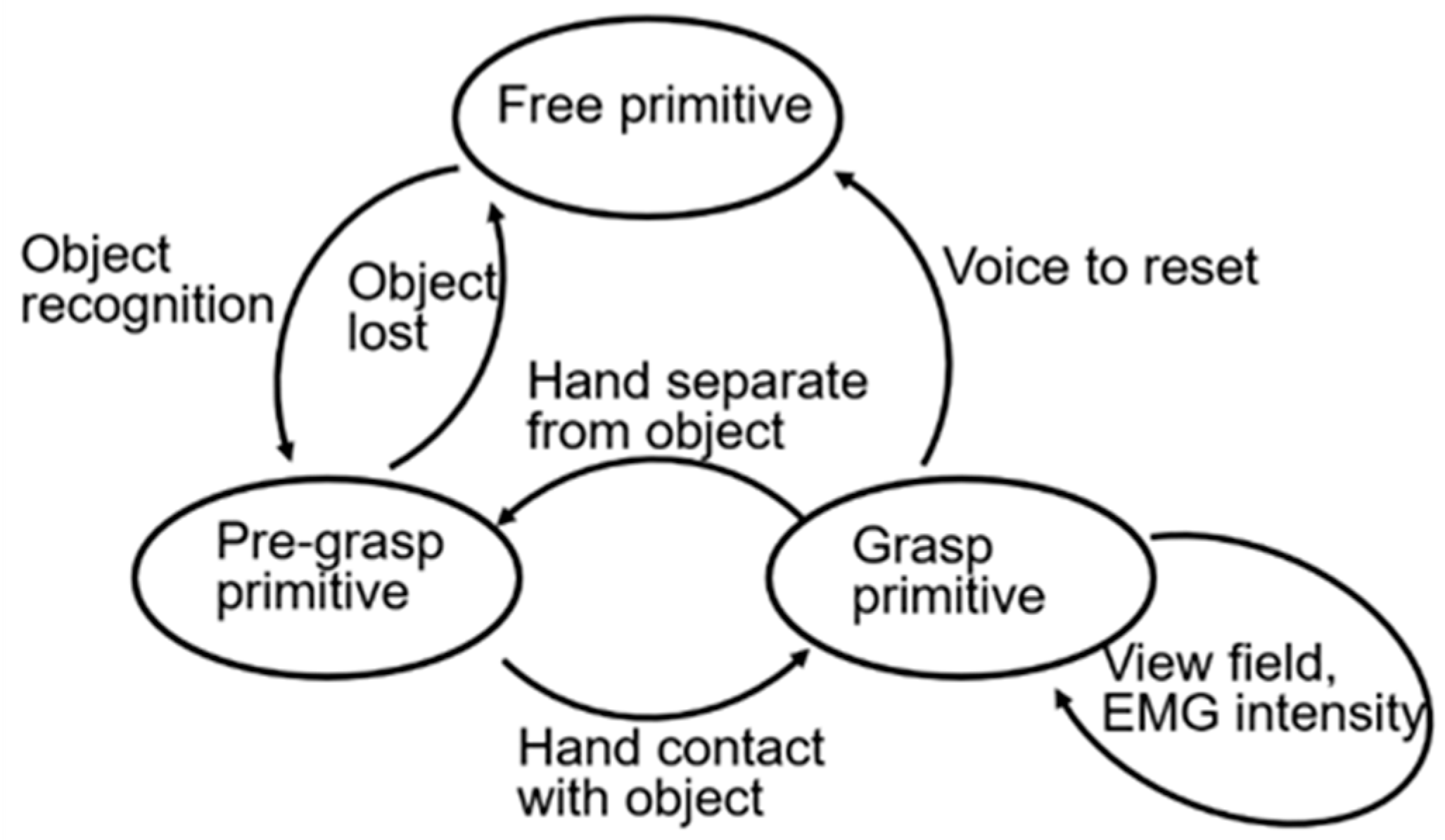

- The beginning of the task. The initial motion primitive is in the free state, which is gesture 1.

- (2)

- Object recognition stage. When the target recognition is completed, the prosthetic hand forms the pre-grasp posture according to the feature information of the object. If the target image is lost, it is estimated that the user gives up grasping, and the prosthetic hand restores to the free primitive state.

- (3)

- Pre-grasp stage. The head-mounted CMOS image sensor obtains the spatial position relationship between the prosthetic hand and the object in real time. When the space distance between the hand and object is less than the threshold value, it is judged that the hand and object are in contact and enter the grasping primitive stage. When the space distance between the hand and object is greater than the threshold value, the hand and object are considered to be separated and return to the pre-hand type stage. The user can change his view field to restore the original free primitive at this stage.

- (4)

- Grasp stage. Control is performed on the prosthetic hand after fusing EMG signal and object vision information feedback.

- (5)

- After grasping, the prosthetic hand ends the grasping task and returns to the initial stage. After completing the grasping task, the prosthetic hand is controlled to separate from the object and restored to the initial free primitive state, changing the view field of the CMOS sensor. In case of unsuccessful separation of the hand and object or an emergency, voice command can perform an emergency reset. We restrict the prosthetic hand from switching directly to the free primitive state when performing grasp primitives for the user’s safety. The aforementioned manipulate sequence planning for the controller is shown in Figure 2.
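The stage transitions above can be read as a small finite-state machine. The sketch below is hypothetical. The class and state names, the 5 cm contact threshold, and the boolean/distance inputs are assumptions for illustration, because the text gives neither the threshold value nor the controller's interface. It does encode the stated safety rule: the grasp primitive never jumps straight to the free primitive. Leaving the grasp requires either moving the hand away, which returns it to pre-grasp, or a voice reset.

```python
from enum import Enum, auto
from typing import Optional


class Primitive(Enum):
    FREE = auto()       # stage (1): free state, gesture 1
    PRE_GRASP = auto()  # stages (2)-(3): pre-grasp posture after recognition
    GRASP = auto()      # stage (4): grasp controlled by EMG + vision


class TaskPlanner:
    """Hypothetical sketch of the stage transitions described in Section 3.1."""

    def __init__(self, contact_threshold_m: float = 0.05) -> None:
        # Assumed threshold; the paper does not state its value.
        self.contact_threshold_m = contact_threshold_m
        self.state = Primitive.FREE

    def step(self, target_visible: bool, distance_m: Optional[float],
             voice_reset: bool = False) -> Primitive:
        if voice_reset:
            # A voice command resets to the free primitive from any stage.
            self.state = Primitive.FREE
        elif self.state is Primitive.FREE:
            if target_visible:
                # Target recognized: form the pre-grasp posture.
                self.state = Primitive.PRE_GRASP
        elif self.state is Primitive.PRE_GRASP:
            if not target_visible:
                # Target lost: assume the user gave up the grasp.
                self.state = Primitive.FREE
            elif distance_m is not None and distance_m < self.contact_threshold_m:
                # Hand close enough to the object: enter the grasp primitive.
                self.state = Primitive.GRASP
        else:  # Primitive.GRASP
            # Safety rule: no direct GRASP -> FREE; separation returns to PRE_GRASP.
            if distance_m is not None and distance_m > self.contact_threshold_m:
                self.state = Primitive.PRE_GRASP
        return self.state


planner = TaskPlanner()
for visible, dist in [(True, 0.30), (True, 0.02), (False, None),
                      (True, 0.20), (False, None)]:
    print(planner.step(visible, dist).name)
```

Run as written, this prints PRE_GRASP, GRASP, GRASP, PRE_GRASP, FREE. Losing the target while grasping (third step) does not release the hand. Only moving away and then changing the view returns it to the free state.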

3.2. Precise Force Control Strategy

4. Experiment

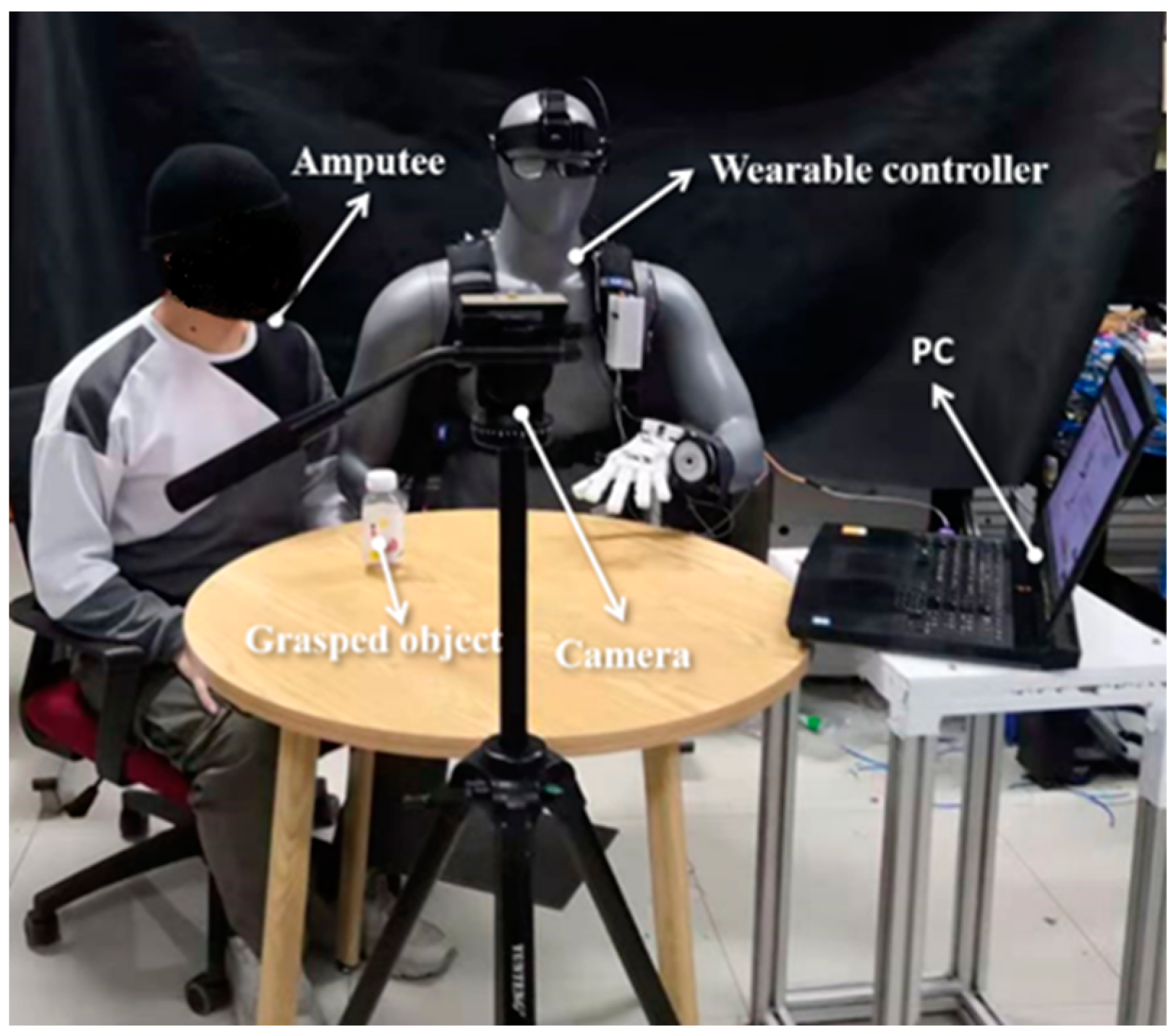

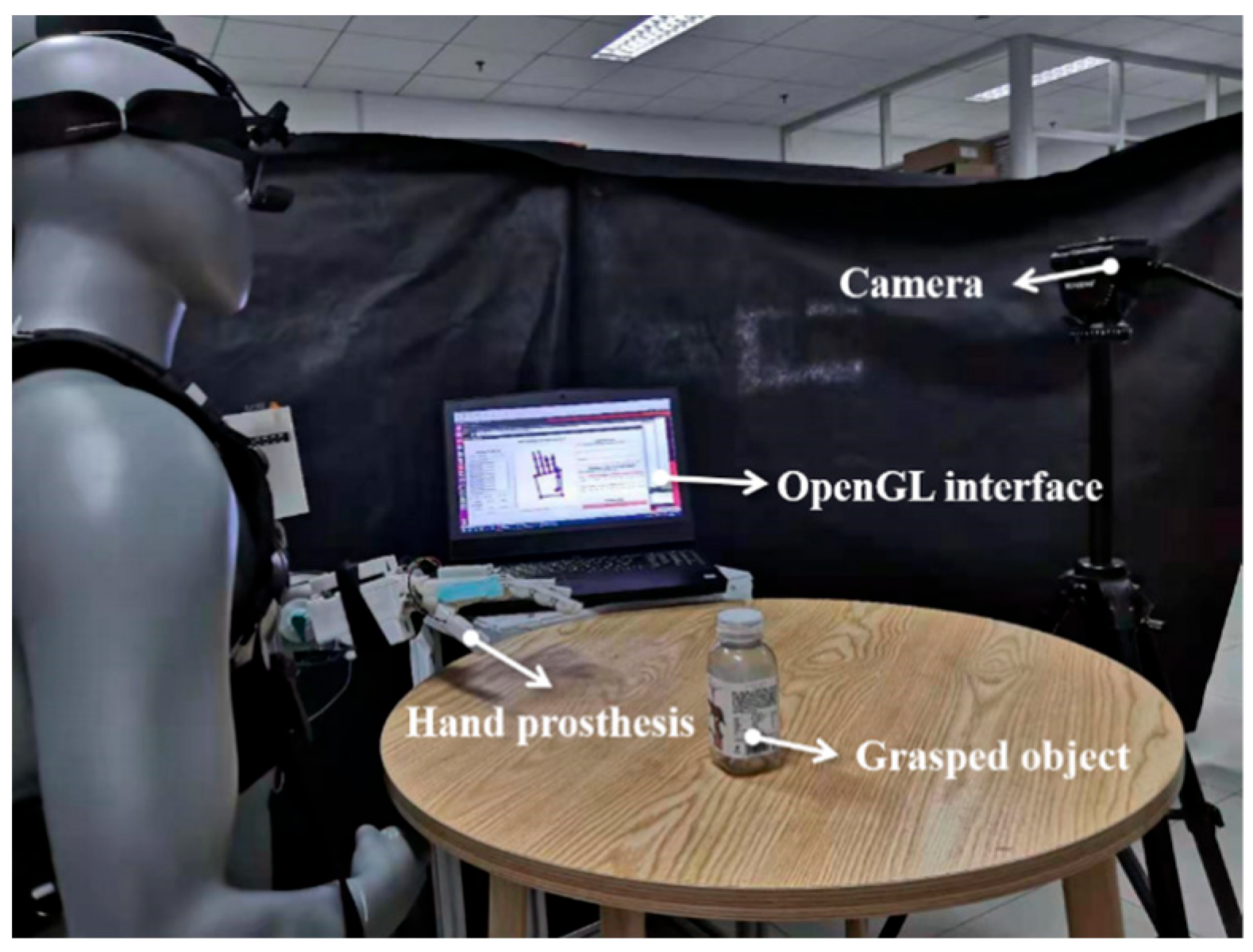

- Jetson Nano (Nvidia, Santa Clara, CA, USA), which has a 128-core NVIDIA Maxwell™ architecture GPU and a quad-core ARM® Cortex®-A57 MPCore processor; the semi-autonomous controller is integrated into the Jetson Nano.

- WX151HD CMOS image sensor (S-YUE, Shenzhen, China), which has a 150° wide-angle field of view.

- ZJUT prosthetic hand (developed by Zhejiang University of Technology, Hangzhou, China), which is equipped with five actuators.

- DYHW110 micro-scale pressure sensor (Dayshensor, Bengbu, China), which is integrated into the prosthetic hand to measure contact force. It has a range of 5 kg and a combined error of 0.3% of full scale (F.S.).

- Vufine+ wearable display (Vufine, Sunnyvale, CA, USA), a high-definition wearable display that is integrated with the proposed control framework.
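Because the pressure sensor's error is specified relative to full scale, it is a fixed absolute bound over the whole range. As a worked check, 0.3% of 5 kg is 15 g, or about 0.147 N. This assumes standard gravity (9.80665 m/s²) for the mass-to-force conversion. The helper name below is illustrative only.

```python
STANDARD_GRAVITY = 9.80665  # m/s^2, assumed for converting mass to force


def combined_error(range_kg: float, error_fraction_fs: float) -> tuple[float, float]:
    """Worst-case combined error as (grams, newtons) for a full-scale spec."""
    error_kg = range_kg * error_fraction_fs
    return error_kg * 1000.0, error_kg * STANDARD_GRAVITY


grams, newtons = combined_error(5.0, 0.003)  # DYHW110: 5 kg range, 0.3% F.S.
print(f"{grams:.1f} g, {newtons:.3f} N")  # 15.0 g, 0.147 N
```

Since the bound is absolute, it weighs most heavily at light grasp forces.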

4.1. Prosthetic Hand Grasping Experiment

- (1) The semi-autonomous controller was worn by a dummy model. A prosthetic hand, EMG electrodes, and a head-mounted device were attached to the human subject to acquire the subject's EMG signal and project feedback into the subject's view (Figure 8.1).

- (2) The subject attached the prosthetic hand to his left hand and said “grab the bottle” while looking at the bottle with his arm close to it. During this process, the head-mounted device performed visual semantic segmentation and CNN recognition of the “bottle”. Based on the information returned by the CMOS image sensor and the speech task library, the prosthetic hand switched between combinations of motion primitives (Figure 8.2).

- (3) As the subject's arm approached the “bottle”, the subject's muscles generated an EMG signal. Within the current motion primitive, the semi-autonomous controller applied proportional and incremental control according to the EMG strength until the user confirmed the prosthetic hand motion with the command “determine”. During this period, the user could observe changes in the pressure cloud map of the prosthetic hand in real time (Figure 8.3–6).

- (4) The subject grasped the “bottle” and placed it at another position on the table. After placement, the prosthetic hand was released and reset by voice command (Figure 8.7–8).
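Step (3) names two EMG-driven control modes but does not give their equations. The sketch below shows one common way to realize them, and every detail is an assumption:

- a mean-absolute-value envelope;
- normalization between a resting level and a maximum voluntary contraction (MVC);
- the gain and deadband values.

The function names are hypothetical. In the proportional mode the closure setpoint tracks activation directly. In the incremental mode, activation above a deadband advances the closure by a small step each control tick, so the hand holds its posture when the user relaxes.

```python
def emg_envelope(samples: list[float], window: int = 50) -> float:
    """Mean absolute value (MAV) of the most recent `window` raw EMG samples."""
    recent = samples[-window:]
    return sum(abs(s) for s in recent) / len(recent)


def normalize(envelope: float, rest: float, mvc: float) -> float:
    """Scale the envelope to [0, 1] between the resting level and MVC."""
    activation = (envelope - rest) / (mvc - rest)
    return min(max(activation, 0.0), 1.0)


def proportional_command(activation: float, max_closure: float = 1.0) -> float:
    """Proportional mode: the closure setpoint follows activation directly."""
    return activation * max_closure


def incremental_command(closure: float, activation: float,
                        deadband: float = 0.2, gain: float = 0.05) -> float:
    """Incremental mode: activation above the deadband closes the hand by a
    small step per control tick; below it, the current closure is held."""
    if activation > deadband:
        closure += gain * (activation - deadband)
    return min(closure, 1.0)


raw = [0.12, -0.40, 0.35, -0.28, 0.51, -0.47]  # made-up samples for illustration
act = normalize(emg_envelope(raw), rest=0.05, mvc=0.80)
closure = 0.0
for _ in range(10):  # ten control ticks at constant effort
    closure = incremental_command(closure, act)
print(round(act, 3), round(proportional_command(act), 3), round(closure, 3))
```

Under these assumptions, the incremental mode trades speed for steadiness: a sustained moderate contraction closes the hand gradually, which suits fine force adjustment. The proportional mode reacts immediately.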

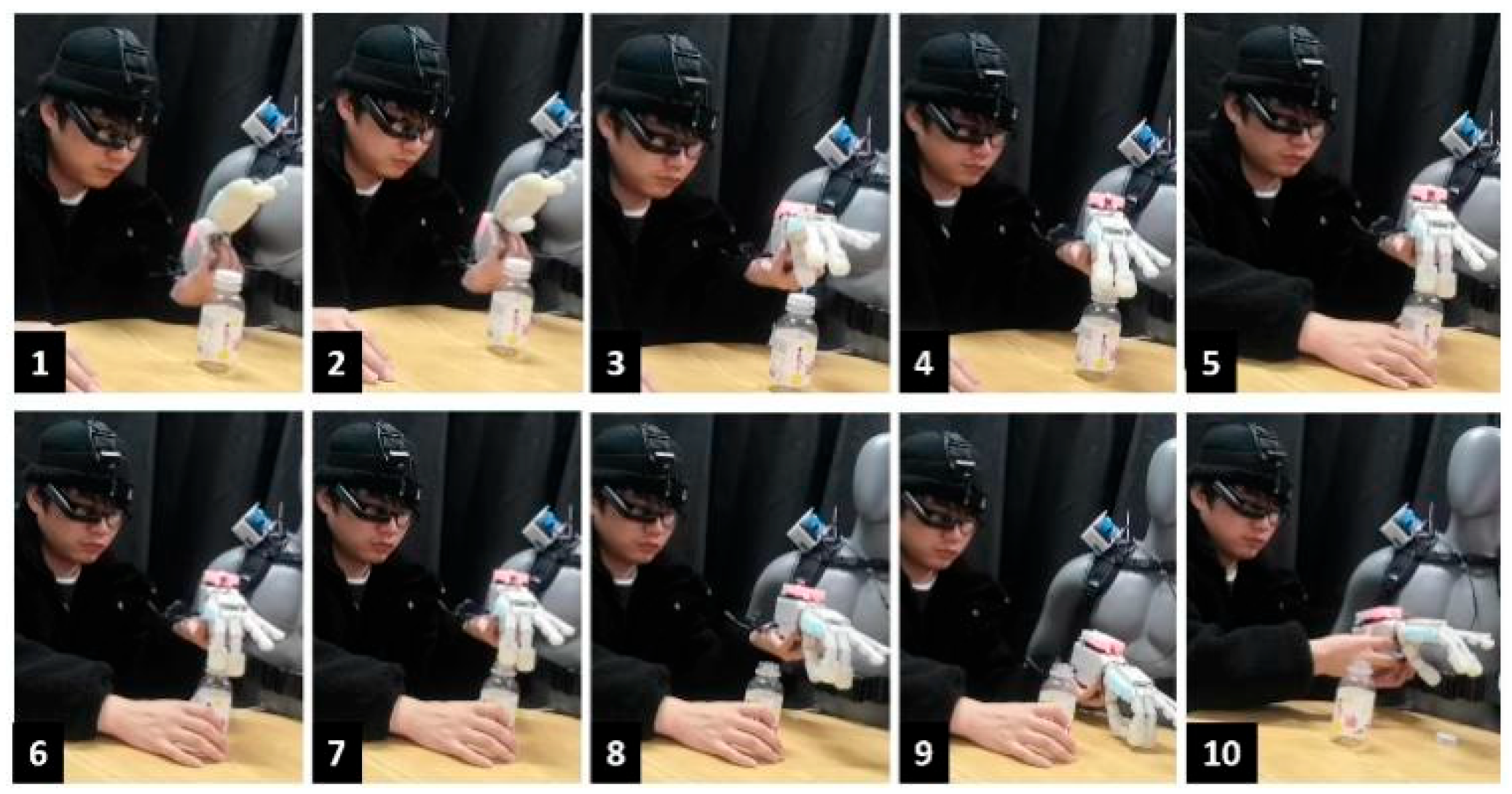

4.2. Prosthetic Hand and Human Hand Coordinative Manipulation Experiments

- (1) The semi-autonomous controller was worn by the dummy. A prosthetic hand, EMG electrodes, and a head-mounted device were attached to the human subject (Figure 9.1).

- (2) The subject fixed the prosthetic hand to his left hand and issued the voice command “screw the bottle cap”. While looking at the “bottle”, the subject grasped it with his right hand and moved the prosthetic hand on his left arm toward the “bottle cap”. During this process, the head-mounted device performed visual semantic segmentation and CNN recognition of the “bottle”. Based on the information returned by the CMOS image sensor and the speech task library, the prosthetic hand switched between combinations of motion primitives (Figure 9.2–3).

- (3) When the distance between the prosthetic hand and the “bottle cap” fell below the threshold, the prosthetic hand grasped the cap, and the user rotated it to the set angle by turning his left arm (Figure 9.4–7).

- (4) If the cap was not yet unscrewed and the distance between the prosthetic hand and the cap exceeded the threshold, the prosthetic hand returned to the pre-shape gesture.

- (5) Steps (3) and (4) were repeated until the cap was unscrewed.

- (6) The unscrewed cap was placed on the table, and the prosthetic hand was reset by voice command (Figure 9.8–10).
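Steps (3) to (6) form a loop. Each grasp turns the cap by at most the set angle, the hand drops back to the pre-shape gesture to re-grip, and a voice reset ends the task once the cap is free. The sketch below is a hypothetical event planner. The total unscrewing angle and the per-cycle rotation are assumed inputs, since the text states neither, and the event names are illustrative.

```python
def unscrew_sequence(total_deg: float, per_cycle_deg: float) -> list[tuple[str, float]]:
    """Return the (event, rotation in degrees) sequence for steps (3)-(6)."""
    if per_cycle_deg <= 0:
        raise ValueError("per_cycle_deg must be positive")
    events: list[tuple[str, float]] = []
    remaining = total_deg
    while remaining > 0:
        turn = min(per_cycle_deg, remaining)
        events.append(("GRASP_AND_ROTATE", turn))  # step (3)
        remaining -= turn
        if remaining > 0:
            # Step (4): cap not yet free, so the hand moves away and re-forms the pre-shape.
            events.append(("RETURN_TO_PRE_SHAPE", 0.0))
    events.append(("VOICE_RESET", 0.0))  # step (6): place the cap, then reset by voice
    return events


for event in unscrew_sequence(300.0, 90.0):
    print(event)
```

With a 300° total and 90° per cycle, the loop needs four grasps: three full 90° turns and a final 30° turn, followed by the voice reset.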

4.3. Results

5. Conclusions and Future Work

5.1. Conclusions

- (1) In the motion primitive planning layer, inspired by the human brain, conventional object semantic segmentation and CNN recognition are treated as the ventral stream, and residual-arm motion tracking is introduced as the dorsal stream. Based on the multimodal information available at different stages of the ventral and dorsal streams, we optimized the information collection method of this layer and the state transition strategy between motion primitives.

- (2) In the force control layer, because existing semi-autonomous control strategies already reduce the user's “cognitive burden”, this paper treats the user as a high-dimensional controller. It uses the EMG strength of the flexor digitorum profundus as the control quantity and provides feedback on the state of the prosthetic hand, achieving precise human-in-the-loop force control.

5.2. Future Work

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest


|  | The Ventral Stream | The Dorsal Stream |
|---|---|---|
| Function | Identification | Visually guided movement |
| Sensitive features | High spatial sensitivity | High temporal sensitivity |
| Memory features | Long-term memory | Short-term memory |
| Reaction speed | Slow | Fast |
| Comprehension | Very fast | Very slow |
| Reference frame | Object-centric | Human-centric |
| Visual input | Fovea or parafovea | Entire retina |

|  | Toggle Switch | Screw Cap | Grasp Cap |
|---|---|---|---|
| Rest gesture | gesture 1 | gesture 1 | gesture 1 |
| Pre-shape gesture | gesture 12 | Prepare and pre-envelope | Prepare and pre-envelope |
| Manipulate gesture | gesture 12 | gesture 2 | gesture 2 |
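The primitive table above can serve directly as a lookup structure for the speech task library. In this minimal sketch, the entries are transcribed from the table. The dictionary keys, the lower-case matching, and the `lookup_task` helper are assumptions, because the paper does not describe how spoken commands are matched to tasks.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class PrimitiveSequence:
    rest: str
    pre_shape: str
    manipulate: str


# Entries transcribed from the table; keys are hypothetical spoken task names.
TASK_LIBRARY = {
    "toggle switch": PrimitiveSequence("gesture 1", "gesture 12", "gesture 12"),
    "screw cap": PrimitiveSequence("gesture 1", "prepare and pre-envelope", "gesture 2"),
    "grasp cap": PrimitiveSequence("gesture 1", "prepare and pre-envelope", "gesture 2"),
}


def lookup_task(command: str) -> Optional[PrimitiveSequence]:
    """Return the primitive sequence for a recognized task name, else None."""
    return TASK_LIBRARY.get(command.strip().lower())


task = lookup_task("Screw Cap")
if task is not None:
    print(task.pre_shape, "->", task.manipulate)
```

Returning `None` for an unrecognized command lets a controller built this way simply stay in the rest gesture. That is a design choice of this sketch, not a behavior reported in the paper.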

| Task Type | Task | Task Characteristics |
|---|---|---|
| Coordinated movement of hand and arm | Grasp | Force control |
| Two-hand coordination | Manipulation | Movement switching |


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhou, X.; Zhang, J.; Yang, B.; Ma, X.; Fu, H.; Cai, S.; Bao, G. A Semi-Autonomous Hierarchical Control Framework for Prosthetic Hands Inspired by Dual Streams of Human. Biomimetics 2024, 9, 62. https://doi.org/10.3390/biomimetics9010062

Zhou X, Zhang J, Yang B, Ma X, Fu H, Cai S, Bao G. A Semi-Autonomous Hierarchical Control Framework for Prosthetic Hands Inspired by Dual Streams of Human. Biomimetics. 2024; 9(1):62. https://doi.org/10.3390/biomimetics9010062

Chicago/Turabian Style: Zhou, Xuanyi, Jianhua Zhang, Bangchu Yang, Xiaolong Ma, Hao Fu, Shibo Cai, and Guanjun Bao. 2024. "A Semi-Autonomous Hierarchical Control Framework for Prosthetic Hands Inspired by Dual Streams of Human" Biomimetics 9, no. 1: 62. https://doi.org/10.3390/biomimetics9010062

APA Style: Zhou, X., Zhang, J., Yang, B., Ma, X., Fu, H., Cai, S., & Bao, G. (2024). A Semi-Autonomous Hierarchical Control Framework for Prosthetic Hands Inspired by Dual Streams of Human. Biomimetics, 9(1), 62. https://doi.org/10.3390/biomimetics9010062