1. Introduction

In recent years, anthropomorphic robots have spread across many sectors, driven by the trend toward robot humanization and the integration of robots into human society [1], including medicine [2], education [3], industry, and human–robot interaction [4]. The humanoid robotic arm, a pivotal component of these robots, has significantly propelled advances in robotic technology. Incorporating anthropomorphic motion control into teleoperation [5] makes these robots more intuitive and convenient to control, further advancing the field.

Progress toward better teleoperation control of humanoid robotic arms has been driven by several key developments, one of the most important being sensor fusion. In recent years, sensors have undergone transformative developments that have substantially improved the precision and efficiency of teleoperation control. Visual sensors, such as high-resolution cameras and depth sensors [6], have become markedly more accurate and faster [7], enabling robots to capture and process visual information with unprecedented fidelity, and new methods allow robots to perceive objects effectively [8,9]. Force and tactile sensors have become more sensitive and reliable, allowing robots to interact with their environments in a more nuanced manner [10,11]. Inertial measurement units (IMUs) have improved in precision and latency, significantly enhancing robot state estimation [12]. Collectively, these advances in sensor technology have paved the way for more effective teleoperation by giving robots a richer and more accurate understanding of their surroundings.

Another transformative development has been the fusion of machine learning techniques with sensor data, which has considerably enriched the perceptual and decision-making abilities of robots. Models such as convolutional neural networks (CNNs) and recurrent neural networks (RNNs) excel at feature extraction and real-time data processing, while reinforcement learning algorithms allow robots to adapt dynamically to changing and uncertain environments, increasing their autonomy and their ability to interpret complex surroundings. By harnessing machine learning and adaptive learning [13,14] in sensor fusion, robots have become more adept at interpreting sensory information, making informed decisions, and executing tasks precisely and efficiently.

In tandem with these advances, real-time feedback mechanisms and sophisticated haptic interfaces have greatly improved teleoperators’ ability to control robots. They provide immediate sensory feedback from the robot’s sensors, enhancing the operator’s situational awareness and enabling fine-grained control over robotic actions. Operators can now perceive and respond to the robot’s interactions with the environment in real time, making teleoperation more intuitive and responsive.

Another avenue of exploration has been the integration of various sensor modalities [15,16] into comprehensive perception systems that can handle a wide spectrum of tasks and environments. Researchers have successfully combined visual sensors with force/tactile sensors [9,10], IMUs, and proprioceptive sensors. This multi-modal approach [17] gives robots a holistic understanding of their surroundings and makes teleoperation more versatile than ever before. Such integrated sensing systems are not limited to upper-limb motion perception; they are equally effective for lower-limb applications and have been widely used in lower-limb rehabilitation, sports assistance [18], and lower-limb prosthetics [19].

Complementing these technological advances, a substantial body of related work has further enriched the teleoperation landscape [20]. This work includes sensor fusion strategies that enhance operator control and situational awareness; machine learning-based approaches that adaptively fuse sensor data for more efficient teleoperation [21]; haptic feedback systems that give operators tangible sensations of touch and force; and studies of human–robot interaction during teleoperation [22] that aim to create intuitive interfaces bridging the gap between human intent and robotic action. In addition, researchers have prioritized safety in dual-arm robot control by developing redundancy mechanisms [23] and safety protocols to handle sensor failures and unexpected events, particularly where human lives or valuable assets are at stake.

Collectively, these remarkable strides in sensor technology, machine learning, real-time feedback, and multi-modal sensor integration, alongside the wealth of related works, have fueled the ongoing development of whole-body teleoperation control for dual-arm robots using sensor fusion.

However, achieving remote real-time motion control of humanoid robotic arms remains a challenging and critical research problem [24]. The goal is to overcome obstacles such as geographical distance and time delays so that operators can control robot arms in real time from remote locations. This requires highly sensitive sensor technology [25,26], reliable communication networks, and intelligent control systems. Accurate perception of the posture and position of the human arm is also crucial, and one of the main difficulties is the precise perception of hand motion [27], which requires a robust and reliable sensing system. Many wearable devices have been extensively validated for human–computer interfaces, robot control, and exoskeleton intent control [28,29,30,31], and wearable data gloves have been shown to enable continuous control of bionic hands, with numerous studies confirming the stability and reliability of this control method [32,33]. In this research, however, we chose non-contact sensors, namely the Leap Motion controller and the Microsoft Kinect [34,35,36,37,38,39], mainly because they are convenient, cost-effective, and offer high resolution [40,41]. Note that in this work these sensors are used only indoors. Although both sensors can track hand movements, Kim et al. showed that the Leap Motion sensor is more accurate than the Kinect [42]. The Leap Motion controller provides high-precision tracking of hand and finger movements, offers a rich software interface for posture and gesture recognition, and is robust, portable, and precise. The Kinect depth camera, on the other hand, is well suited to capturing arm motion.

Although the Leap Motion controller has limitations, particularly in accuracy and under occlusion, multi-sensor fusion has emerged as a way to ensure a consistent data stream, and researchers have combined various sensors for precise perception of hand movement. For example, Marin et al. made the first attempt to detect gestures by fusing data from the Leap Motion and the Kinect [43]. Eider C. P. Silva et al. fused data from the Leap Motion and the Myo Armband to create a real-time 3D virtual simulation of arm movement, including the forearm, hand, and fingers [44].

Compared with other sensor fusion strategies, fusing multiple Leap Motion controllers offers significant advantages in terms of data and algorithms. Although each sensor has inherent errors and limitations, fusing data from several sensors of the same type can substantially reduce errors and improve system reliability. In addition, a reasonable spatial layout can compensate for the occlusion problems that arise with a single Leap Motion controller [45]. For complex motion tracking, multiple sensors also provide more viewpoints and can therefore capture and identify various poses and movements more accurately. In this paper, three Leap Motion controllers are used, and the position and orientation of each can be adjusted freely. With a reasonable spatial layout, the sensors cover each other’s blind spots and provide continuous tracking as the hand rotates, achieving all-round perception of hand motion. For perceiving arm motion, however, the Kinect depth camera is the better solution.
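A standard estimation result illustrates why fusing several sensors of the same type can reduce error. Assuming, as an idealization, that K sensors report unbiased readings $\theta_k$ of the same angle with independent errors of known variance $\sigma_k^2$ (occluded or biased Leap Motion readings will not strictly satisfy this), the inverse-variance weighted combination and its variance are:

$$\hat{\theta} = \frac{\sum_{k=1}^{K} \theta_k / \sigma_k^{2}}{\sum_{k=1}^{K} 1 / \sigma_k^{2}}, \qquad \operatorname{Var}\left(\hat{\theta}\right) = \left(\sum_{k=1}^{K} \frac{1}{\sigma_k^{2}}\right)^{-1} \le \min_{k} \sigma_k^{2}$$

For three sensors with equal variance $\sigma^2$, the fused variance is $\sigma^2/3$; a sensor whose variance grows (for example, because the hand is partly occluded from its viewpoint) automatically receives a smaller weight.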

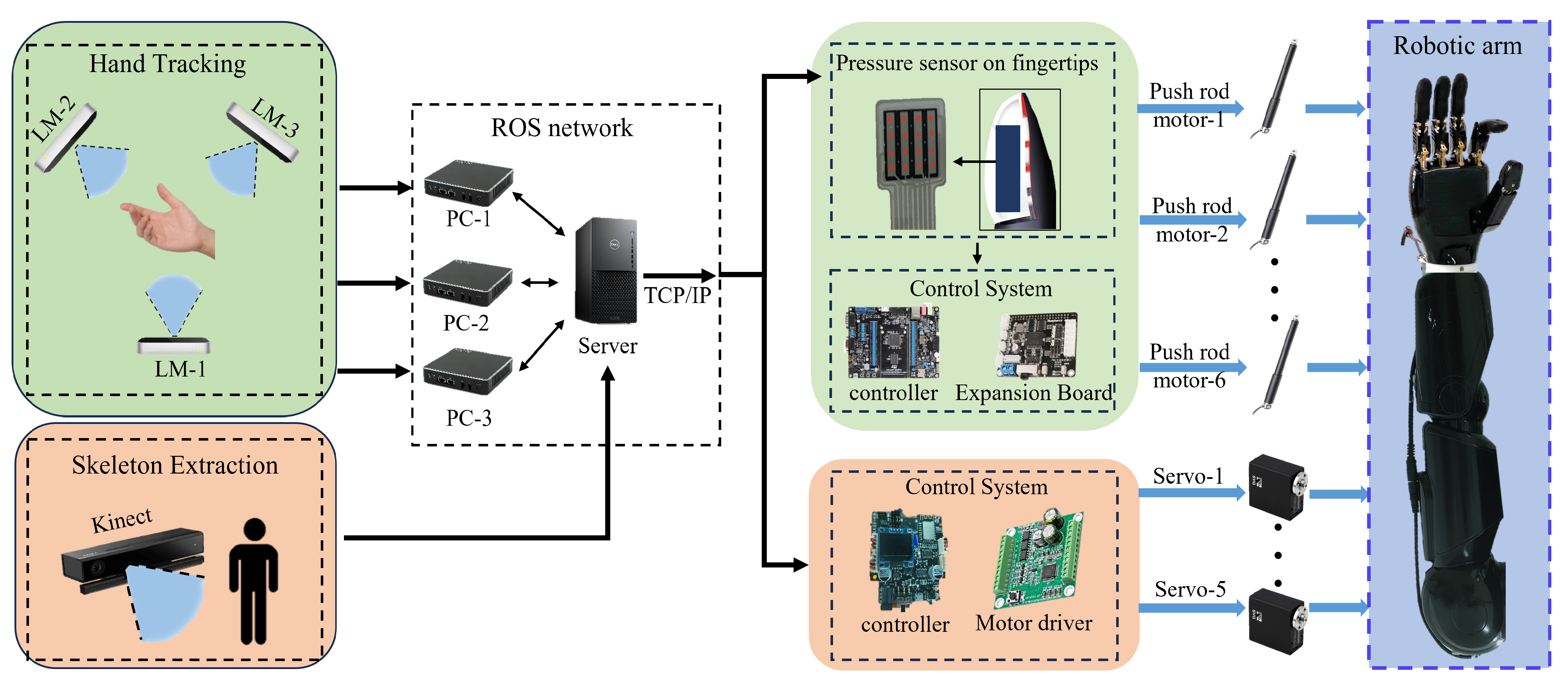

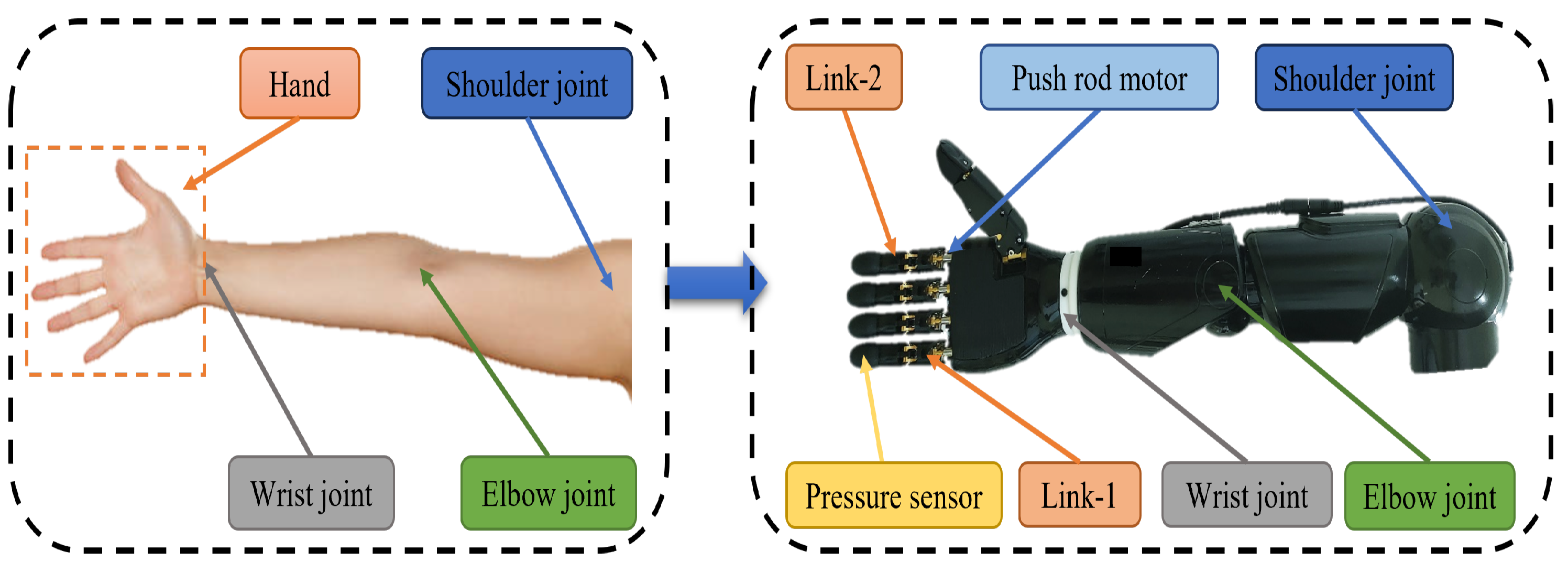

This paper introduces an innovative control strategy for anthropomorphic arms based on sensor fusion. Our research primarily focuses on the following key aspects:

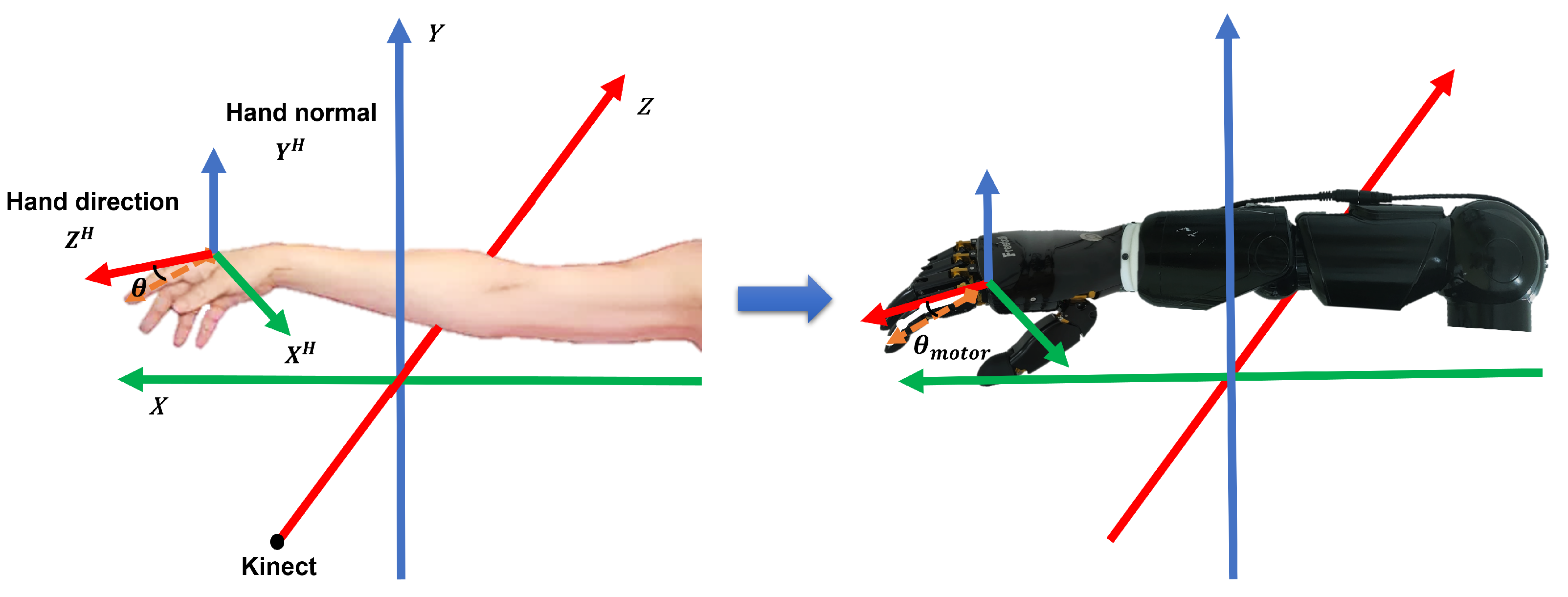

Mapping human finger motion to a 6DOF bionic hand: We present a novel approach that maps human finger motion models onto a 6-degree-of-freedom (6DOF) bionic hand. This mapping allows for direct control of the bionic hand by defining finger-bending angles.

Accurate hand motion perception: To achieve precise perception of hand motion, we leverage multiple Leap Motion sensors. Our method ensures precise control of the bionic hand even when a sensor is obstructed or the hand moves out of its field of view.

Pressure sensor-based force control: Safety is of utmost importance in our control strategy. The fingertips of the bionic hand are equipped with pressure sensors, and we propose an innovative pressure sensor-based force control strategy to ensure the safety of control and operation.

Arm control using Kinect depth camera: We extend our control strategy to encompass the motion and pose of the human arm. By utilizing a Kinect depth camera, we can perceive arm motion, enabling control of the anthropomorphic arm’s movements within a specific spatial range.

Data fusion with Kalman filtering: To overcome the limitations of individual sensors, we employ the Kalman filtering algorithm for data fusion. This enhances the stability and reliability of our system.

3. Experiment and Demonstration

To validate the effectiveness and robustness of our proposed sensor fusion-based control strategy for a humanoid robotic arm and the arm motion tracking system we have developed, we conducted a series of experiments. The purpose of these experiments was to assess the performance and reliability of our control strategy and system in various scenarios. Firstly, we conducted real-time tracking experiments on a human arm using a depth camera. To enhance the quality of data collection, we employed a Kalman filtering algorithm to obtain smoother and more stable data. In the subsequent phases, we performed real-time hand motion tracking using multiple Leap Motion controllers, intentionally skipping the calibration step. By algorithmically fusing hand skeletal framework data, we validated the effectiveness of our proposed approach. To assess the robustness of our multi-sensor fusion system, we deliberately obstructed one of the sensors. This experiment was crucial in evaluating how well our system could adapt to unexpected environmental conditions and sensor failures. Finally, we verified the effectiveness of our safety control strategy based on fingertip pressure sensors for securely gripping fragile objects with the humanoid robotic arm.

Through these experiments, our objective was to comprehensively validate the performance of our proposed control strategy and system, ensuring their stability and reliability across diverse scenarios. This will provide strong support for the control and operation of humanoid robotic arms in practical applications, further advancing the development and application of robotics technology.

3.1. Arm Motion Tracking Experiment

3.1.1. Experimental Content

We use the Kinect depth camera to extract the skeletal information of the human arm, compute the joint angles from the joint positions, and then apply a kinematic mapping to control each joint of the anthropomorphic arm. In this process, we select two representative angles and apply Kalman filtering to them to improve the smoothness and reliability of the data. The resulting angle curves are shown in Figure 8.

We selected two angles recorded while the subject performed a reciprocating arm motion and filtered them. The first is the angle between the arm and the front of the body, which partly reflects the arm’s position and orientation relative to the body. It can be used to assess arm posture and position, which is crucial in robot control for avoiding collisions and ensuring that the robot performs the required tasks. The second is the angle between the upper arm and the forearm, which reflects how far the elbow is bent. It can be used to evaluate joint movement and flexion, which is essential for controlling the robot arm’s motion and executing specific tasks. The raw angle data exhibited noticeable fluctuations, whereas the Kalman-filtered data were significantly smoother. Smoother angle data give operators more precise and controllable arm movements with fewer unnecessary oscillations, allowing the humanoid robotic arm to be controlled more accurately in a variety of tasks. This is potentially valuable in fields such as medical surgery, remote operation in hazardous environments, and assembly tasks.
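Both angles can be obtained from three-dimensional joint positions with elementary vector geometry. The sketch below is a minimal illustration, not the implementation used in this study: it assumes Kinect-style joint coordinates in metres, defines the elbow angle as the interior angle at the elbow (180° for a fully extended arm), and stands in for "the front of the body" with a hypothetical body-forward direction `forward`, here the camera's -z axis for an operator facing the sensor. The function names are illustrative.

```python
import math

Vec3 = tuple[float, float, float]


def sub(a: Vec3, b: Vec3) -> Vec3:
    """Vector pointing from point b to point a."""
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def angle_between(u: Vec3, v: Vec3) -> float:
    """Angle between two 3D vectors, in degrees."""
    c = dot(u, v) / math.sqrt(dot(u, u) * dot(v, v))
    c = max(-1.0, min(1.0, c))  # guard against rounding just outside [-1, 1]
    return math.degrees(math.acos(c))


def elbow_angle(shoulder: Vec3, elbow: Vec3, wrist: Vec3) -> float:
    """Interior angle between upper arm and forearm (180 = straight arm)."""
    return angle_between(sub(shoulder, elbow), sub(wrist, elbow))


def arm_forward_angle(shoulder: Vec3, elbow: Vec3,
                      forward: Vec3 = (0.0, 0.0, -1.0)) -> float:
    """Angle between the upper arm and an assumed body-forward direction."""
    return angle_between(sub(elbow, shoulder), forward)


if __name__ == "__main__":
    # Hypothetical joints (metres): upper arm hanging down, forearm pointing forward.
    shoulder, elbow, wrist = (0.0, 0.0, 2.0), (0.0, -0.3, 2.0), (0.0, -0.3, 1.7)
    print(round(elbow_angle(shoulder, elbow, wrist), 3))   # 90.0
    print(round(arm_forward_angle(shoulder, elbow), 3))    # 90.0
```

In a real pipeline these per-frame angles would be the raw signals passed to the filtering step, and the sign conventions of `forward` would have to match the depth camera's coordinate frame.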

3.1.2. Algorithm Comparison

To validate the superior performance of Kalman filtering, we applied four common filtering methods, namely mean filtering, median filtering, low-pass filtering, and Kalman filtering, to the data from the two angles. We then computed the root mean square error (RMSE) of each filtering method, as defined in Equation (16) [54]:

$$\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^{2}} \tag{16}$$

where RMSE measures the error between the estimated values and the actual observed values, n is the total number of samples, $y_i$ is the ith observed value, and $\hat{y}_i$ is the ith estimate.
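The comparison can be reproduced in outline with the following sketch. It is an illustration under stated assumptions, not the implementation used in this study: the window length, the smoothing factor, and the Kalman noise variances `q` and `r` are hypothetical; the "low-pass" filter is taken to be a first-order exponential smoother; and the Kalman filter uses a simple random-walk model for a single angle. Because the test signal here is synthetic, each filter's RMSE from Equation (16) is computed against the known noise-free signal.

```python
import math
import random
import statistics


def rmse(observed, estimated):
    """Root mean square error between two equal-length sequences (Equation (16))."""
    n = len(observed)
    return math.sqrt(sum((y - y_hat) ** 2 for y, y_hat in zip(observed, estimated)) / n)


def mean_filter(x, window=5):
    """Causal moving average over the last `window` samples."""
    return [statistics.fmean(x[max(0, i - window + 1):i + 1]) for i in range(len(x))]


def median_filter(x, window=5):
    """Causal moving median over the last `window` samples."""
    return [statistics.median(x[max(0, i - window + 1):i + 1]) for i in range(len(x))]


def low_pass_filter(x, alpha=0.3):
    """First-order IIR low-pass: y[i] = alpha * x[i] + (1 - alpha) * y[i - 1]."""
    y = [x[0]]
    for v in x[1:]:
        y.append(alpha * v + (1 - alpha) * y[-1])
    return y


def kalman_filter(x, q=1.0, r=9.0):
    """Scalar Kalman filter with a random-walk state model.

    q: process-noise variance per step; r: measurement-noise variance (deg^2).
    """
    est, p = x[0], r
    out = [est]
    for z in x[1:]:
        p += q                  # predict: angle assumed unchanged, uncertainty grows
        k = p / (p + r)         # Kalman gain
        est += k * (z - est)    # correct with the new measurement
        p *= 1 - k
        out.append(est)
    return out


def synthetic_angle(n=300, fs=30.0, noise_std=3.0, seed=0):
    """Hypothetical reciprocating arm angle (deg) sampled at fs Hz, plus Gaussian noise."""
    rng = random.Random(seed)
    truth = [45.0 + 30.0 * math.sin(2 * math.pi * 0.1 * i / fs) for i in range(n)]
    noisy = [t + rng.gauss(0.0, noise_std) for t in truth]
    return truth, noisy


if __name__ == "__main__":
    truth, noisy = synthetic_angle()
    print(f"raw     RMSE: {rmse(truth, noisy):.2f}")
    for name, f in [("mean", mean_filter), ("median", median_filter),
                    ("low-pass", low_pass_filter), ("kalman", kalman_filter)]:
        print(f"{name:8s}RMSE: {rmse(truth, f(noisy)):.2f}")
```

The relative ranking of the filters in such a toy setup depends strongly on the chosen parameters (a longer window or smaller gain removes more noise but adds more lag), so it should not be read as a substitute for the measured results in Table 1.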

For a clearer performance comparison, we plotted the RMSE change curves of the different filtering methods for both angles, as shown in Figure 9. These plots give an intuitive view of how the RMSE varies across filtering methods.

We also summarized the mean RMSE of each filtering method in the RMSE comparison table (Table 1). A smaller RMSE indicates a smaller error between the estimate and the actual data, that is, a better fit.

Through comparison, it is evident that the Kalman filter exhibits superior performance, and the successful application of the Kalman filtering algorithm not only enhances the system’s stability but also offers greater precision and control for remote humanoid robotic arm operation.

3.1.3. Comparison of Trajectory Smoothness Metrics

To verify the effectiveness of the algorithm for angle filtering, we introduce trajectory smoothness as a quantitative index for comparing the smoothness of the raw and filtered data. It is computed as the mean of the squared second derivative of the data and thus reflects how much the data change over the entire motion trajectory:

$$S = \frac{1}{N}\sum_{i=1}^{N}\left(\frac{d^{2}\theta_i}{dt^{2}}\right)^{2}$$

where N is the number of samples and $d^{2}\theta_i/dt^{2}$ is the second derivative of the angle $\theta$ with respect to time t at sample i. Because the mean of the squares represents the average magnitude of the angular acceleration, a smaller smoothness value means that the data change more smoothly.
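A discrete version of the smoothness index is straightforward to compute. In the sketch below (an illustration under assumptions, not the authors' code), the second derivative is approximated by the central difference (theta[i+1] - 2*theta[i] + theta[i-1]) / dt**2, so the mean runs over the N - 2 interior samples; the sliding-window helper `windowed_smoothness` is a hypothetical way of producing a smoothness-versus-time curve.

```python
def trajectory_smoothness(theta, dt):
    """Mean squared second derivative of a sampled angle trajectory.

    Smaller values mean smoother motion. The second derivative is estimated
    with a central difference, so only the N - 2 interior samples contribute.
    """
    if len(theta) < 3:
        raise ValueError("need at least three samples")
    accel = [(theta[i + 1] - 2.0 * theta[i] + theta[i - 1]) / dt ** 2
             for i in range(1, len(theta) - 1)]
    return sum(a * a for a in accel) / len(accel)


def windowed_smoothness(theta, dt, window=30):
    """Smoothness of each sliding window of `window` samples (window >= 3)."""
    if window < 3:
        raise ValueError("window must be at least 3")
    return [trajectory_smoothness(theta[i:i + window], dt)
            for i in range(len(theta) - window + 1)]


if __name__ == "__main__":
    dt = 1 / 30                                  # assumed 30 Hz sampling
    zigzag = [0.0, 1.0] * 15                     # jittery signal
    ramp = [0.5 * i for i in range(30)]          # constant-velocity signal
    print(trajectory_smoothness(zigzag, dt) > trajectory_smoothness(ramp, dt))  # True
```

A constant-velocity trajectory scores exactly zero, while sample-to-sample jitter is amplified by the 1/dt² factor, which is why this index separates raw and filtered data so clearly.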

To present the comparison more clearly, we plotted the smoothness index over time, as shown in Figure 10.

Figure 10 clearly shows that the smoothness values of the raw data are significantly higher than those of the filtered data, indicating that the filtered trajectories are considerably smoother.

3.2. Hand Tracking Experiment under Multi-Sensor Framework

We mounted the Leap Motion controllers on a sliding track so that their spatial positions could be changed, and did not calibrate them. Because the skeletal joint data are transformed into and evaluated in the hand coordinate system, the computed angles are independent of each controller’s spatial position. As shown in Figure 11, the system calculates the bending angle of each finger and fuses the data from multiple sensors algorithmically, making the calculated angles more stable and reliable. This fusion yields more accurate finger bending angles and improves the performance and accuracy of the system.
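Why calibration is unnecessary can be illustrated with a short sketch: angles between bone vectors depend only on the relative positions of the joints, so they do not change when the whole hand is rotated and translated relative to a sensor. The code below is a minimal illustration under assumptions, not the authors' pipeline: each finger is a hypothetical list of joint positions ordered from the base of the finger to the fingertip, the bend at each joint is the angle between consecutive bone directions (0° for a straight finger), and the total finger bending is assumed to be the sum of these joint angles.

```python
import math


def sub(a, b):
    """Vector pointing from point b to point a."""
    return tuple(x - y for x, y in zip(a, b))


def angle_deg(u, v):
    """Angle between two 3D vectors in degrees."""
    d = sum(x * y for x, y in zip(u, v))
    n = math.sqrt(sum(x * x for x in u) * sum(y * y for y in v))
    return math.degrees(math.acos(max(-1.0, min(1.0, d / n))))


def joint_bend_angles(joints):
    """Bend angle at each interior joint: angle between consecutive bone vectors."""
    bones = [sub(joints[i + 1], joints[i]) for i in range(len(joints) - 1)]
    return [angle_deg(bones[i], bones[i + 1]) for i in range(len(bones) - 1)]


def finger_bend(joints):
    """Total finger bending, assumed here to be the sum of the joint angles."""
    return sum(joint_bend_angles(joints))


def rigid_transform(p, yaw_deg, offset):
    """Rotate point p about the z-axis by yaw_deg, then translate by offset."""
    c, s = math.cos(math.radians(yaw_deg)), math.sin(math.radians(yaw_deg))
    x, y, z = p
    return (c * x - s * y + offset[0], s * x + c * y + offset[1], z + offset[2])


if __name__ == "__main__":
    # Hypothetical finger joints (mm) in one sensor's frame.
    finger = [(0, 0, 0), (0, 0, 45), (0, 10, 80), (0, 25, 100), (0, 40, 110)]
    # The same finger as seen by a second, uncalibrated sensor.
    seen_elsewhere = [rigid_transform(p, 37.0, (120.0, -40.0, 15.0)) for p in finger]
    print(abs(finger_bend(finger) - finger_bend(seen_elsewhere)) < 1e-9)  # True
```

Because the bending angle is invariant to the sensor's pose, each controller can compute it in its own frame, and only the scalar angles need to be fused.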

Because the Leap Motion controllers did not need calibration, their positions could be adjusted freely for optimal hand tracking. Figure 11 shows six angles recorded over 15 s; the bending angle of the thumb and its abduction/adduction angle were calculated separately. Because the three Leap Motion controllers are at different spatial positions, the angles they capture differ somewhat during different hand movements. These differences arise mainly when a finger or the palm is occluded within a sensor’s field of view during hand movement, so the angle data from any single sensor are not entirely reliable.

To solve this problem, we compute a confidence value for each sensor and use it as that sensor’s weight. By analyzing the spatial relationship between the hand and each sensor, we assess how reliably that sensor captures the hand’s movements. The sensors’ data are then weighted according to their confidence and fused with a Kalman filtering algorithm.
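Confidence-weighted fusion of this kind can be sketched as a scalar Kalman filter that performs one measurement update per sensor, inflating each sensor's measurement noise as its confidence drops. Every specific choice here is an assumption for illustration rather than the authors' exact formulation: the confidence heuristic (the cosine between the palm normal and the direction from the palm to the sensor, floored at a small value), the noise values `r0` and `q`, and the rule `r = r0 / confidence`.

```python
import math
from dataclasses import dataclass


def sensor_confidence(palm_pos, palm_normal, sensor_pos, floor=0.05):
    """Hypothetical confidence in [floor, 1]: how directly the palm faces the sensor."""
    to_sensor = [s - p for s, p in zip(sensor_pos, palm_pos)]
    dot = sum(a * b for a, b in zip(to_sensor, palm_normal))
    norms = math.sqrt(sum(a * a for a in to_sensor) * sum(b * b for b in palm_normal))
    return max(floor, dot / norms)


@dataclass
class ScalarKalman:
    x: float          # fused angle estimate (deg)
    p: float = 10.0   # variance of the estimate
    q: float = 1.0    # process noise per step (random-walk model, assumed)

    def predict(self):
        self.p += self.q

    def update(self, z, r):
        k = self.p / (self.p + r)
        self.x += k * (z - self.x)
        self.p *= 1.0 - k


def fuse_step(kf, readings, confidences, r0=4.0):
    """Predict once, then apply one update per sensor that has data.

    readings[i] is None when sensor i reports no hand (e.g. it is occluded).
    A low confidence inflates that sensor's measurement noise to r0 / confidence.
    """
    kf.predict()
    for z, c in zip(readings, confidences):
        if z is not None:
            kf.update(z, r0 / c)
    return kf.x


if __name__ == "__main__":
    kf = ScalarKalman(x=30.0)
    for _ in range(100):
        # Two well-placed sensors roughly agree; the third sees the hand edge-on.
        fused = fuse_step(kf, [40.0, 41.0, 70.0], [0.9, 0.8, 0.05])
    print(round(fused, 1))  # 41.3
```

With constant readings, the estimate settles at the confidence-weighted mean (0.9·40 + 0.8·41 + 0.05·70) / 1.75 ≈ 41.3, so the poorly placed sensor barely shifts the result; a sensor that returns `None` is simply skipped.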

Data fusion yields a more reliable and stable angle value for controlling the bionic hand, so that the Leap Motion controllers provide accurate and reliable hand posture data across different hand positions and movements, enabling more accurate hand control.

To verify the effectiveness and superiority of the fusion algorithm, we again used the trajectory smoothness index to compare the data trajectory from each single sensor with the fused trajectory. To show the comparison more clearly, we plotted the smoothness index of each sensor and of the fused data over time (Figure 12); a smaller smoothness value indicates smoother data.

The figure shows that the trajectory smoothness value of the fused data is markedly lower than that of any single sensor, indicating that the fusion algorithm substantially improves the smoothness and stability of the data.

3.3. Occlusion Experiment

Building on the previous experiment, we further verified the robustness of the system in tracking the hand’s skeletal framework. When any one of the sensors is blocked (Figure 13), the system still outputs stable angle data, which supports the reliability and robustness of the proposed fusion strategy.

In this experiment, we occluded different Leap Motion controllers during different time periods while simultaneously collecting data from every controller for angle calculation. As Figure 13 shows, a blocked sensor outputs no angle information while it is blocked, but this does not affect the hand-tracking capability of the other two sensors.

We use the Kalman filter fusion algorithm to handle occlusion. Fusing data from multiple sensors reduces noise and error and improves the accuracy and stability of the data, so a stable and reliable angle output is obtained even while a sensor is occluded. The Kalman filter estimates and predicts the missing data from the available measurements and the system model, compensating for the data lost from the occluded sensor. As a result, reliable hand posture data remain available during occlusion, allowing accurate control of the bionic hand. In practical applications, this design lets the user continue hand operations unaffected even if a sensor fails.
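When every sensor is occluded at once, a Kalman filter can still bridge a short gap by running only its prediction step. The sketch below illustrates this with a two-state (angle, angular rate) constant-velocity model; the model, the noise parameters `q` and `r`, the 30 Hz rate, and the ramp used in the demonstration are assumptions made for the example, not details reported in this study. During the gap, the estimate is extrapolated from the last rate estimate while its variance `P[0][0]` grows, so the filter's confidence falls until measurements return.

```python
class ConstantVelocityKF:
    """Kalman filter for one joint angle with state [angle (deg), rate (deg/s)]."""

    def __init__(self, angle0, dt, q=50.0, r=4.0):
        self.x = [angle0, 0.0]                  # state estimate
        self.P = [[10.0, 0.0], [0.0, 100.0]]    # state covariance
        self.dt, self.q, self.r = dt, q, r      # q: white-acceleration intensity (assumed)

    def predict(self):
        dt, q = self.dt, self.q
        self.x = [self.x[0] + dt * self.x[1], self.x[1]]
        (p00, p01), (p10, p11) = self.P
        # P <- F P F^T + Q, with F = [[1, dt], [0, 1]]
        self.P = [
            [p00 + dt * (p01 + p10) + dt * dt * p11 + q * dt ** 3 / 3,
             p01 + dt * p11 + q * dt ** 2 / 2],
            [p10 + dt * p11 + q * dt ** 2 / 2,
             p11 + q * dt],
        ]

    def update(self, z):
        # Measurement model H = [1, 0]: sensors observe the angle only.
        (p00, p01), (p10, p11) = self.P
        s = p00 + self.r
        k0, k1 = p00 / s, p10 / s
        innovation = z - self.x[0]
        self.x = [self.x[0] + k0 * innovation, self.x[1] + k1 * innovation]
        self.P = [[(1 - k0) * p00, (1 - k0) * p01],
                  [p10 - k1 * p00, p11 - k1 * p01]]

    def step(self, readings):
        """Predict, then update with every reading that is not None (occluded)."""
        self.predict()
        for z in readings:
            if z is not None:
                self.update(z)
        return self.x[0]


def true_angle(k, dt):
    """Hypothetical finger angle closing at 5 deg/s."""
    return 10.0 + 5.0 * k * dt


if __name__ == "__main__":
    dt = 1 / 30
    kf = ConstantVelocityKF(angle0=10.0, dt=dt)
    for k in range(1, 301):
        z = true_angle(k, dt)
        kf.step([z, z, z])                # three sensors, noise-free for clarity
    var_before = kf.P[0][0]
    for k in range(301, 311):
        kf.step([None, None, None])       # all three sensors occluded
    print(abs(kf.x[0] - true_angle(310, dt)) < 0.5, kf.P[0][0] > var_before)  # True True
```

If only one sensor is blocked, the same `step` call simply performs fewer updates, which matches the behavior seen in the occlusion experiment where the other two sensors keep the estimate anchored.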

The redundancy design and the application of the data fusion algorithm effectively improve the reliability and stability of the system, and provide a better user experience. With or without occlusion, our system provides accurate hand tracking and angle calculations, ensuring that users can freely control the bionic hand for a variety of complex movements.

3.4. Safety Control Experiment Based on Pressure Sensor

In this experiment, the bionic hand grasped a paper cup twice, once with force control enabled and once with it disabled, as shown in Figure 14. With force control enabled, the bionic hand grasped the paper cup stably without large deformation of the cup. With force control disabled, the paper cup deformed considerably as the bionic hand grasped it.

Figure 14 also compares the summed pressure values of the middle finger in the force-controlled and non-force-controlled scenarios. In scenario 1, force control mode was enabled. When the total pressure exceeded a preset threshold (35 mN in this experiment, chosen on the basis of experiments and prior knowledge), the bionic hand stopped moving and the total pressure on the middle-finger sensor stabilized. In force control mode, the movement of the bionic hand is governed by the preset threshold: once it is reached, the bionic hand applies no further force even if the human hand continues to close, which prevents the object from being over-squeezed. In scenario 2, force control was disabled, so the total pressure kept increasing until the paper cup was severely deformed; the contact area between the pressure sensor and the cup then decreased, and the measured pressure gradually fell. In this mode, whenever the human hand grips further, the bionic hand applies additional force to the cup, causing excessive deformation.
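The threshold rule can be expressed as a small guard on the finger command. The sketch below is a hypothetical illustration rather than the controller used on the bionic hand: it assumes that a larger command closes the finger further and that pressures are in the same units as the threshold (35 mN in the experiment), and it uses a toy contact model (`simulate_grasp`, with made-up `contact_at` and `stiffness`) only to show the summed pressure plateauing when force control is on. Opening commands always pass through, so the operator can still release the object.

```python
def limit_finger_command(target, current, pressures, threshold=35.0):
    """Force-limited finger command.

    target: flexion command mapped from the operator's finger angle.
    current: command most recently sent to the finger servo.
    pressures: fingertip pressure readings for this finger.
    Larger commands are assumed to close the finger further.
    """
    if sum(pressures) >= threshold and target > current:
        return current   # threshold reached: refuse to close any further
    return target        # below threshold, or the operator is opening the hand


def simulate_grasp(targets, force_control=True, threshold=35.0,
                   contact_at=40.0, stiffness=2.0):
    """Toy model: pressure grows linearly once the command passes contact_at."""
    def pressure(cmd):
        return stiffness * max(0.0, cmd - contact_at)

    cmd, log = 0.0, []
    for target in targets:
        if force_control:
            cmd = limit_finger_command(target, cmd, [pressure(cmd)], threshold)
        else:
            cmd = target
        log.append(pressure(cmd))
    return log


if __name__ == "__main__":
    closing = [float(i) for i in range(101)]   # operator steadily makes a fist
    print(max(simulate_grasp(closing, force_control=True)))    # 36.0
    print(max(simulate_grasp(closing, force_control=False)))   # 120.0
```

The limited run stops at 36.0, one command step above the threshold, because the guard checks the pressure measured before each step; a smaller step size or a slightly lower threshold would tighten that margin.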

Comparing the two scenarios shows that, under identical teleoperation conditions, enabling force control mode improves the grasping stability of the bionic hand. With an appropriate threshold, the bionic hand does not apply excessive force even when the operator makes a fist, which avoids damaging or breaking the object. This is especially important for grasping fragile and deformable objects, because it provides a safer and more reliable control method that preserves the integrity of the object. Force control mode therefore provides an important safeguard for the practical use of bionic hands: by setting the threshold appropriately and monitoring the fingertip pressure, we can control the bionic hand accurately, improve grip stability, and broaden its applicability and reliability across application scenarios.

4. Discussion

4.1. Overcoming Workspace Limitations for Humanoid Dual-Arm Robots

Although integrating anthropomorphic robotic hands into dual-arm robots has undoubtedly improved their ability to achieve human-like control, a fundamental challenge remains largely unaddressed: a confined workspace. Unlike a human, this robotic system is anchored to a fixed base, which greatly limits its coverage and operational flexibility. This inherent limitation is a significant barrier to realizing the full potential of humanoid dual-arm robots in many applications.

The fixed base of humanoid dual-arm robots limits their ability to reach certain areas, especially hard-to-reach or confined spaces. In fields where mobility and accessibility are critical, such as construction, search and rescue, and manufacturing, current humanoid robot designs do not meet the needs of real-world scenarios. Tasks that involve reaching high or low objects, navigating cluttered environments, or accessing remote locations are also severely hampered by this limitation.

To address this challenge, our future work will extend the capabilities of humanoid dual-arm robots by introducing mobile bases and telescopic mechanisms. A mobile chassis will allow the robot to move through its surroundings, expanding its coverage and adaptability. Such mobility is critical in scenarios where the robot must reposition itself or explore new areas, such as disaster response, logistics, and outdoor construction. In addition, lifting or telescopic mechanisms, such as lifting actuators or telescopic arms, will allow the robot to overcome height limitations. By extending their vertical reach, humanoid dual-arm robots can reach higher objects, making them useful for a wide range of applications, including warehouse automation, maintenance tasks, and infrastructure inspection.

In conclusion, while the integration of anthropomorphic hands into two-armed robots represents a major leap towards human-like control, the fundamental challenge of workspace constraints remains a huge obstacle. Combining mobile bases and telescopic mechanisms in future research work is expected to remove these limitations and open up new horizons for the deployment of humanoid two-arm robots in various fields. These advances will not only enhance the adaptability and versatility of these robotic systems, but will also expand their potential to handle complex real-world tasks with greater efficiency and effectiveness.

4.2. Overcoming the Limitations of Depth Visual Tracking

However, despite the remarkable progress we have made in the control of two-armed robots, current depth vision tracking technology still imposes certain limitations on the free movement of humans in some situations. Depth vision tracking typically requires the operator to remain within the camera’s field of view and is susceptible to interference from environmental factors, such as bright light, obstacles, and changes in perspective. These limitations can affect the operator’s degree of freedom and flexibility when controlling a two-armed robot, especially in situations that require movement or access to complex environments.

To address this challenge, our future work will focus on developing anthropomorphic motion control based on wearable devices. This approach will give operators a more natural, free, and intuitive way to control humanoid dual-arm robots without the constraints of depth vision tracking. Wearable devices can carry a variety of sensors that capture the operator’s movements in real time, so that the operator can maintain control of the robot wherever they are.

This wearable device-based anthropomorphic motion control will not only increase the operator’s freedom but will also give them greater mobility, allowing them to comfortably control two-armed robots in a variety of environments. This is especially important for tasks that require the operation of robots in complex, unrestricted environments, such as emergency rescue, construction, and other fields. Wearables will become an extension tool for operators, allowing them to move away from the traditional console and closer to the actual operation scenario, thus improving the efficiency and safety of the task.

In summary, although we have made significant progress in depth vision tracking and dual-arm robot control, the limitations of depth vision tracking remain a challenge for expanding the practical application of dual-arm robots. Future work will therefore focus on developing wearable device-based anthropomorphic motion control to increase the operator’s freedom and the flexibility of robot control. This will lay the foundation for broader and more natural human–machine collaboration in a variety of applications, helping robotics better serve human needs.

4.3. Expansion of Future Work

In this study, we employed a remote control approach to operate the robot. In comparison to traditional control methods, this remote control method offers several notable advantages.

Firstly, remote control overcomes the limitations of geographic distance. Traditional control methods typically require the operator to be physically present in the same location as the robot, whereas remote control allows operators to control the robot from a distance at any time. This has significant implications in various application scenarios, such as emergency rescue operations, tasks in hazardous environments, and remote sensing control. Furthermore, remote control enhances the level of safety. Traditional control methods may necessitate the on-site presence of the operator, exposing them to potential risks or hazards. Remote control helps mitigate these risks, enabling operators to maintain a safe distance.

However, it is important to note that remote control methods come with certain challenges and limitations. Network latency and instability can potentially affect real-time performance and control accuracy. Additionally, the reliability of sensors, communication devices, and algorithms is of utmost importance in remote control applications. In our future work, apart from exploring the potential applications of other sensors, such as wearable devices, we plan to investigate various communication frameworks and fusion algorithms. These communication frameworks may include DDS (data distribution service) and ZeroMQ, among others. Furthermore, we will delve into fusion algorithms better suited for nonlinear systems, such as extended Kalman filtering and unscented Kalman filtering, to further enhance the effectiveness and applicability of remote control.

In our force control safety experiment, we have implemented a threshold mechanism to ensure the safety of the bionic hand. If the force exerted by the bionic hand exceeds a predefined threshold, the system automatically halts the movement of the bionic hand. However, it is important to emphasize that the setting of this threshold is based on practical considerations and is not directly compared to human electromyography (EMG) signals or actual human output.

In future work, we plan to conduct a more comprehensive analysis by comparing the force output of the bionic hand with EMG signals from the human body or the forces exerted by the human body. This comparison will help us gain a better understanding of the relationship between the mechanical system’s force output and the natural capabilities of the human body, ensuring that the mechanical system’s force levels remain within a reasonable and safe range. Furthermore, in addition to capturing the actual output of the human body, we also plan to generate the actual motion trajectories of the robotic arm. This will allow us to compare the motion of the robotic arm with the actual human motion, aiding in a more comprehensive evaluation of our system’s performance.

All experimental results in this study were obtained from a single participant, whose data we carefully monitored, recorded, analyzed, and discussed. In future work, we plan to include more participants and conduct statistical analyses to better understand individual differences and to compare or compensate for them appropriately. This will help further verify the reliability and generalizability of the experimental results.

5. Conclusions

This paper proposes a sensor fusion-based control strategy for humanoid arms that achieves accurate control and human-like expression of anthropomorphic arm movement. The strategy combines a depth camera and Leap Motion controllers to sense the motion of the human arm and capture hand gestures, forming a complete human arm motion tracking system. The depth camera senses the movement of the operator’s arm: by extracting the skeletal information of the arm, we obtain the spatial coordinates of its joint points, compute the joint angles, and map them to the joint servos of the humanoid arm to control it. The Leap Motion controllers capture the movements of the operator’s hand: by calculating the flexion angle of each finger, we accurately reproduce the finger gestures, achieving anthropomorphic control of arm and hand movement. To improve the robustness and stability of the tracking system, the perceived data are processed with a Kalman filter, which effectively reduces noise and uncertainty in the data and thereby improves tracking accuracy and stability.

To verify the effectiveness of the proposed control strategy, we conducted a series of experiments. First, arm and hand motion tracking experiments verified the accuracy and reliability of the system. Second, occlusion experiments simulated tracking in complex environments; the results show that the proposed control strategy is effective and robust. Finally, we compared force-controlled and non-force-controlled grasping. The results show that force control mode improves the grip stability of the humanoid arm under anthropomorphic control, making it more suitable for grasping fragile and deformable objects and providing a safer and more reliable control method for practical applications.