1. Introduction

Depth estimation is a complex process involving the continuous activation of every level of the visual cortex and even of higher-level regions. Disparity-sensitive cells of different kinds can be found early in the visual cortex [1,2], and the resulting signals seem to travel through the dorsal and ventral pathways for different purposes: parietal regions (in particular, the anterior and lateral intraparietal areas) make major contributions to depth estimation for visually guided hand and eye movements [3,4], while the inferotemporal cortex supports the construction of 3D shapes based on the relative disparity between objects [2,5]. The brain can rely on several cues to estimate the depth of objects, the most important being (i) binocular disparity, which gives the visual cortex access to two different perspectives of the same environment; (ii) motion parallax, whereby, during self-motion, objects at a greater distance appear to move more slowly than nearby objects; and (iii) the angular difference between the eyes when fixating on the same object (vergence). However, the exact contributions of these mechanisms to the overall process of depth estimation, and critically where and how these signals are processed, remain unclear.

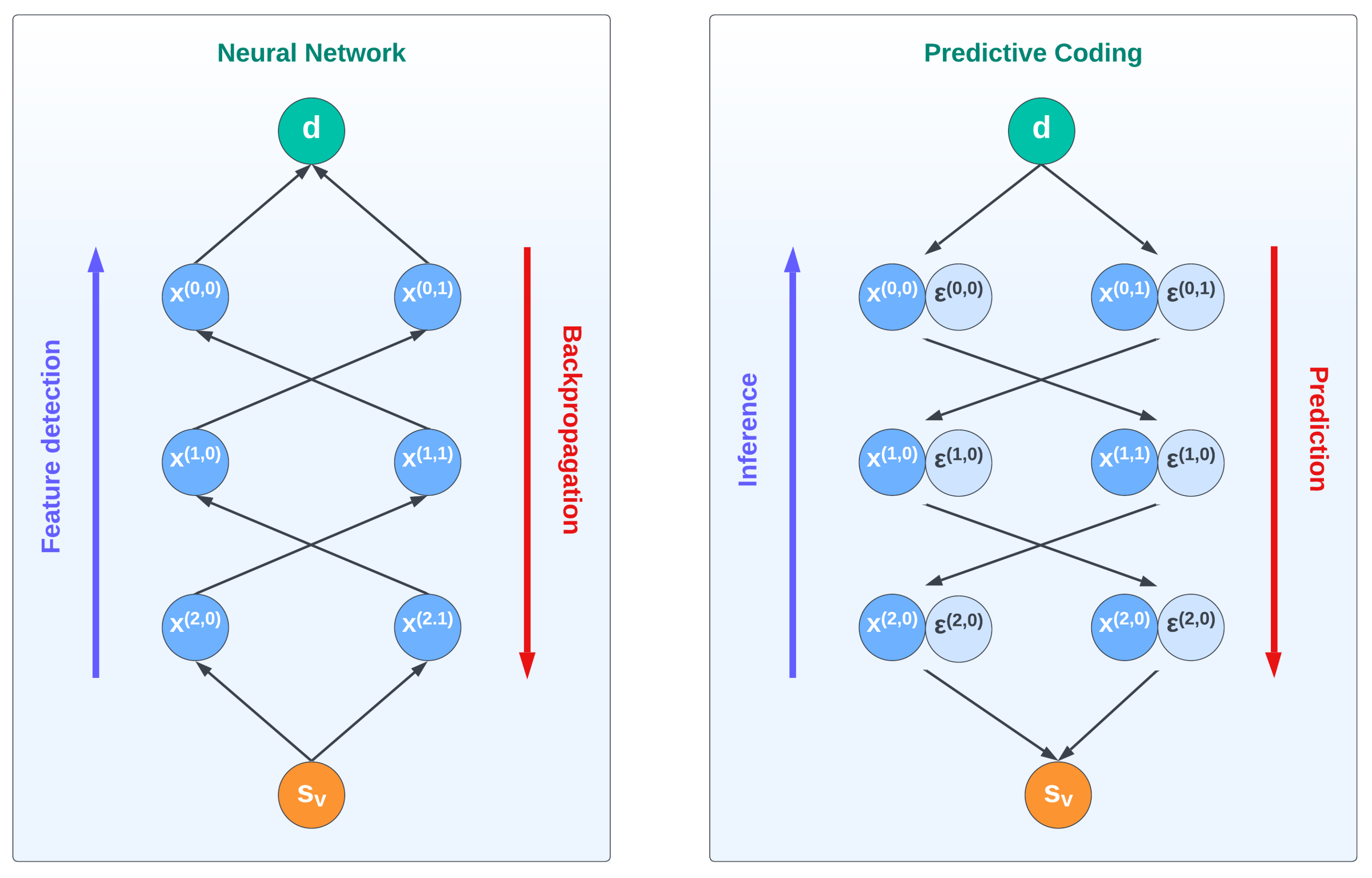

Traditionally, the visual cortex has been likened to a feature detector: as sensory signals climb the hierarchy, increasingly complex features are detected at higher cortical levels, such that high-level representations of objects are constructed from lines and contours. This view has inspired the development of Convolutional Neural Networks, which have achieved remarkable results in object recognition tasks [6]. Despite its success, this bottom-up approach cannot capture several top-down mechanisms that affect our everyday perception of the external world, as in the case of visual illusions [7]. In recent years, a different perspective based on Predictive Coding theories has emerged, which views these illusions not as unexpected phenomena but as expressions of the main mechanism through which our brain efficiently predicts and acts on the environment [8,9]. Under this view, the biases we perceive are actually signatures of the brain's effort to minimize the errors between our sensations and our predictions [10]. Furthermore, vision is increasingly considered to be an active process that constantly tries to reduce uncertainty about what will happen next.

In this article, we apply this predictive and inferential view of perception to depth estimation. Specifically, we advance an Active Inference model that estimates the depth of an object from two projected images through prediction error minimization and active oculomotor behavior. In our model, depth estimation is not a bottom-up process that detects disparities between the images of the two eyes, but the inversion of top-down projective predictions generated from a high-level representation of the object. In other words, the estimate of the object's depth naturally arises by inverting a visual generative model, with the resulting prediction errors flowing up the cortical hierarchy; this contrasts with the direct, feedforward processing of neural networks.

2. Materials and Methods

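The theory of Active Inference assumes that an agent is endowed with a generative model that makes predictions about sensory observations [10,11,12,13], as shown in Figure 1. The discrepancy between predictions and observations generates a prediction error that is minimized in order to deal with a dynamic environment and to anticipate what will happen next. This generative model hinges on three components encoded in generalized coordinates of increasing temporal order (e.g., position, velocity, acceleration): hidden states $\tilde{\mathbf{x}}$, hidden causes $\tilde{\mathbf{v}}$, and sensory signals $\tilde{\mathbf{y}}$. These components are related through a nonlinear system that defines the prediction of sensory signals and the evolution of hidden states across time:

$$\tilde{\mathbf{y}} = \tilde{\mathbf{g}}(\tilde{\mathbf{x}}, \tilde{\mathbf{v}}) + \tilde{\mathbf{w}}_y$$

$$D\tilde{\mathbf{x}} = \tilde{\mathbf{f}}(\tilde{\mathbf{x}}, \tilde{\mathbf{v}}) + \tilde{\mathbf{w}}_x$$

In this context, $D$ denotes a differential operator that shifts all temporal orders by one, such that $D\tilde{\mathbf{x}} = [\mathbf{x}', \mathbf{x}'', \mathbf{x}''', \dots]$. Furthermore, $\tilde{\mathbf{w}}_y$ and $\tilde{\mathbf{w}}_x$ are noise terms drawn from Gaussian distributions. The joint probability factorizes into distinct distributions:

$$p(\tilde{\mathbf{y}}, \tilde{\mathbf{x}}, \tilde{\mathbf{v}}) = p(\tilde{\mathbf{y}} \mid \tilde{\mathbf{x}}, \tilde{\mathbf{v}})\, p(\tilde{\mathbf{x}} \mid \tilde{\mathbf{v}})\, p(\tilde{\mathbf{v}})$$

Typically, each distribution is approximated with a Gaussian:

$$p(\tilde{\mathbf{y}} \mid \tilde{\mathbf{x}}, \tilde{\mathbf{v}}) = \mathcal{N}\big(\tilde{\mathbf{y}};\, \tilde{\mathbf{g}}(\tilde{\mathbf{x}}, \tilde{\mathbf{v}}),\, \tilde{\boldsymbol{\Pi}}_y^{-1}\big)$$

$$p(\tilde{\mathbf{x}} \mid \tilde{\mathbf{v}}) = \mathcal{N}\big(D\tilde{\mathbf{x}};\, \tilde{\mathbf{f}}(\tilde{\mathbf{x}}, \tilde{\mathbf{v}}),\, \tilde{\boldsymbol{\Pi}}_x^{-1}\big)$$

$$p(\tilde{\mathbf{v}}) = \mathcal{N}\big(\tilde{\mathbf{v}};\, \tilde{\boldsymbol{\eta}},\, \tilde{\boldsymbol{\Pi}}_v^{-1}\big)$$

where $\tilde{\boldsymbol{\eta}}$ is a prior, while the distributions are expressed in terms of precisions (or inverse variances) $\tilde{\boldsymbol{\Pi}}_y$, $\tilde{\boldsymbol{\Pi}}_x$, and $\tilde{\boldsymbol{\Pi}}_v$.

Following a variational inference method [14], these distributions are inferred via approximate posteriors $q(\tilde{\mathbf{x}})$ and $q(\tilde{\mathbf{v}})$. Under appropriate assumptions (e.g., the Laplace approximation), minimizing the Variational Free Energy (VFE) $F$, defined as the difference between the KL divergence of the approximate and true posteriors and the log evidence,

$$F = D_{KL}\big[q(\tilde{\mathbf{x}}, \tilde{\mathbf{v}}) \,\|\, p(\tilde{\mathbf{x}}, \tilde{\mathbf{v}} \mid \tilde{\mathbf{y}})\big] - \ln p(\tilde{\mathbf{y}})$$

leads to the minimization of prediction errors. The belief updates $\dot{\tilde{\boldsymbol{\mu}}}$ and $\dot{\tilde{\boldsymbol{\nu}}}$ concerning hidden states and hidden causes, respectively, where $\tilde{\boldsymbol{\mu}}$ and $\tilde{\boldsymbol{\nu}}$ are the means of the approximate posteriors, expand as follows:

$$\dot{\tilde{\boldsymbol{\mu}}} = D\tilde{\boldsymbol{\mu}} - \partial_{\tilde{\boldsymbol{\mu}}} F = D\tilde{\boldsymbol{\mu}} + \partial_{\tilde{\boldsymbol{\mu}}}\tilde{\mathbf{g}}^\top \tilde{\boldsymbol{\Pi}}_y \tilde{\boldsymbol{\varepsilon}}_y + \partial_{\tilde{\boldsymbol{\mu}}}\tilde{\mathbf{f}}^\top \tilde{\boldsymbol{\Pi}}_x \tilde{\boldsymbol{\varepsilon}}_x - D^\top \tilde{\boldsymbol{\Pi}}_x \tilde{\boldsymbol{\varepsilon}}_x$$

$$\dot{\tilde{\boldsymbol{\nu}}} = D\tilde{\boldsymbol{\nu}} - \partial_{\tilde{\boldsymbol{\nu}}} F = D\tilde{\boldsymbol{\nu}} + \partial_{\tilde{\boldsymbol{\nu}}}\tilde{\mathbf{g}}^\top \tilde{\boldsymbol{\Pi}}_y \tilde{\boldsymbol{\varepsilon}}_y + \partial_{\tilde{\boldsymbol{\nu}}}\tilde{\mathbf{f}}^\top \tilde{\boldsymbol{\Pi}}_x \tilde{\boldsymbol{\varepsilon}}_x - \tilde{\boldsymbol{\Pi}}_v \tilde{\boldsymbol{\varepsilon}}_v$$

where $\tilde{\boldsymbol{\varepsilon}}_y = \tilde{\mathbf{y}} - \tilde{\mathbf{g}}(\tilde{\boldsymbol{\mu}}, \tilde{\boldsymbol{\nu}})$, $\tilde{\boldsymbol{\varepsilon}}_x = D\tilde{\boldsymbol{\mu}} - \tilde{\mathbf{f}}(\tilde{\boldsymbol{\mu}}, \tilde{\boldsymbol{\nu}})$, and $\tilde{\boldsymbol{\varepsilon}}_v = \tilde{\boldsymbol{\nu}} - \tilde{\boldsymbol{\eta}}$ denote the prediction errors of the sensory signals, dynamics, and priors.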
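As a minimal sketch of these update rules (our own simplification, not the full model), consider a single static hidden cause $\nu$ with an identity likelihood $g(\nu) = \nu$, a Gaussian prior with mean $\eta$, and no dynamics, so that only the sensory and prior terms of the hidden-cause update survive. Gradient descent on the free energy then converges to the precision-weighted average of observation and prior; the function name `infer_cause` and its parameters are illustrative.

```python
def infer_cause(y, eta, pi_y, pi_v, lr=0.01, steps=2000):
    """Minimize F = 0.5*pi_y*(y - nu)**2 + 0.5*pi_v*(nu - eta)**2 by gradient descent.

    Static, one-dimensional special case of the hidden-cause update:
    the likelihood is g(nu) = nu (so dg/dnu = 1) and there are no dynamics.
    """
    nu = eta  # start the belief at the prior mean
    for _ in range(steps):
        eps_y = y - nu    # sensory prediction error
        eps_v = nu - eta  # prior prediction error
        # nu_dot = dg/dnu * pi_y * eps_y - pi_v * eps_v
        nu += lr * (pi_y * eps_y - pi_v * eps_v)
    return nu


# A precise observation (pi_y = 4) dominates a vague prior (pi_v = 1).
estimate = infer_cause(y=2.0, eta=0.0, pi_y=4.0, pi_v=1.0)
print(round(estimate, 6))  # 1.6 = (4*2 + 1*0) / (4 + 1)
```

The fixed point is $(\pi_y y + \pi_v \eta)/(\pi_y + \pi_v)$, which makes explicit how the precisions arbitrate between the bottom-up and top-down prediction errors.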

A simple Active Inference scheme can handle various tasks, yet the effectiveness of the theory stems from a hierarchical structure that enables the brain to grasp the hierarchical associations between sensory observations and their causes [15]. Specifically, the model delineated above can be expanded by linking each hidden cause with another generative model; as a result, the prior becomes the prediction from the layer above, while the observation becomes the likelihood of the layer below.

Action, in turn, is executed by minimizing the proprioceptive component of the VFE with respect to the motor control signals $\mathbf{a}$:

$$\dot{\mathbf{a}} = -\partial_{\mathbf{a}} F = -\partial_{\mathbf{a}}\tilde{\mathbf{y}}_p^\top \tilde{\boldsymbol{\Pi}}_p \tilde{\boldsymbol{\varepsilon}}_p$$

where $\partial_{\mathbf{a}}\tilde{\mathbf{y}}_p$ is the partial derivative of the proprioceptive observations with respect to the motor control signals, $\tilde{\boldsymbol{\Pi}}_p$ are the precisions of the proprioceptive generative model, and $\tilde{\boldsymbol{\varepsilon}}_p$ are the generalized proprioceptive prediction errors:

$$\tilde{\boldsymbol{\varepsilon}}_p = \tilde{\mathbf{y}}_p - \tilde{\mathbf{g}}_p(\tilde{\boldsymbol{\mu}})$$

In summary, in Active Inference, goal-directed behavior is generally achieved by first biasing the belief over the hidden states through a specific cause. This cause acts as a prior that encodes the agent's belief about the state of affairs of the world. Action then follows because the hidden states generate a proprioceptive prediction error that is suppressed through a reflex arc [16]. For instance, if the agent has to rotate its arm by a few degrees, the belief over the arm angle is subject to two opposing forces: one from above (pulling it toward the expected angle) and one from below (pulling it toward the currently perceived angle). The tradeoff between the two forces is expressed in terms of the precisions of the corresponding prediction errors, which encode the agent's confidence in each of them. By appropriately tuning these precisions, it is possible to smoothly push the belief towards a desired state, eventually driving the real arm through Equation (8).
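The reflex arc can be illustrated with a one-dimensional toy arm, under assumptions of our own: the belief is first order, the proprioceptive likelihood is the identity, $\partial_a y_p = 1$, and, instead of integrating $\dot{a}$, the action is applied directly as a joint velocity proportional to the negative precision-weighted proprioceptive prediction error. The belief is pulled from above by an attractor toward a target angle and from below by proprioception; the names `reach`, `pi_x`, `gain`, and `k` are hypothetical.

```python
def reach(target, pi_p=1.0, pi_x=1.0, gain=1.0, k=1.0, dt=0.01, steps=5000):
    """Toy 1-D reflex arc: a belief biased toward a target drives the real angle.

    Illustrative assumptions: first-order belief mu, identity proprioceptive
    likelihood (y_p = x), dy_p/da = 1, and action applied as a joint velocity.
    """
    x = 0.0   # real arm angle (generative process)
    mu = 0.0  # belief over the arm angle
    for _ in range(steps):
        eps_p = x - mu  # proprioceptive prediction error: y_p - g_p(mu)
        # Belief: pulled from below by the observation and from above by an
        # attractor toward the target (the "intention").
        mu += dt * (pi_p * eps_p + pi_x * gain * (target - mu))
        # Action: suppress the proprioceptive prediction error (reflex arc).
        a = -k * pi_p * eps_p
        x += dt * a
    return x, mu


x, mu = reach(target=0.5)
print(round(x, 4), round(mu, 4))  # 0.5 0.5
```

Raising `pi_x` relative to `pi_p` lets the top-down attractor dominate the belief update, whereas raising `pi_p` ties the belief more tightly to the currently perceived angle.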

3. Results

3.1. Homogeneous Transformations as Hierarchical Active Inference

Classical Predictive Coding models are passive in the sense that the model cannot select its visual stimuli [8]. On the other hand, our Active Inference model can actively control “eyes” in order to sample those preferred stimuli that reduce prediction errors.

State-of-the-art implementations of oculomotor behavior in Active Inference rely on a latent state (or belief) over the eye angle, and attractors are usually defined directly in the polar domain [17,18]. While having interesting implications for simulating saccadic and smooth pursuit eye movements, such models do not consider the fact that the two eyes fixate on the target from two different perspectives. A similar limitation can be found in models of reaching, in which the 3D position of the object to be reached is directly provided as a visual observation [19,20]. Furthermore, because there is only a single level specified in polar coordinates, fixating or reaching a target defined in Cartesian coordinates requires a relatively complex dynamics function at that level.

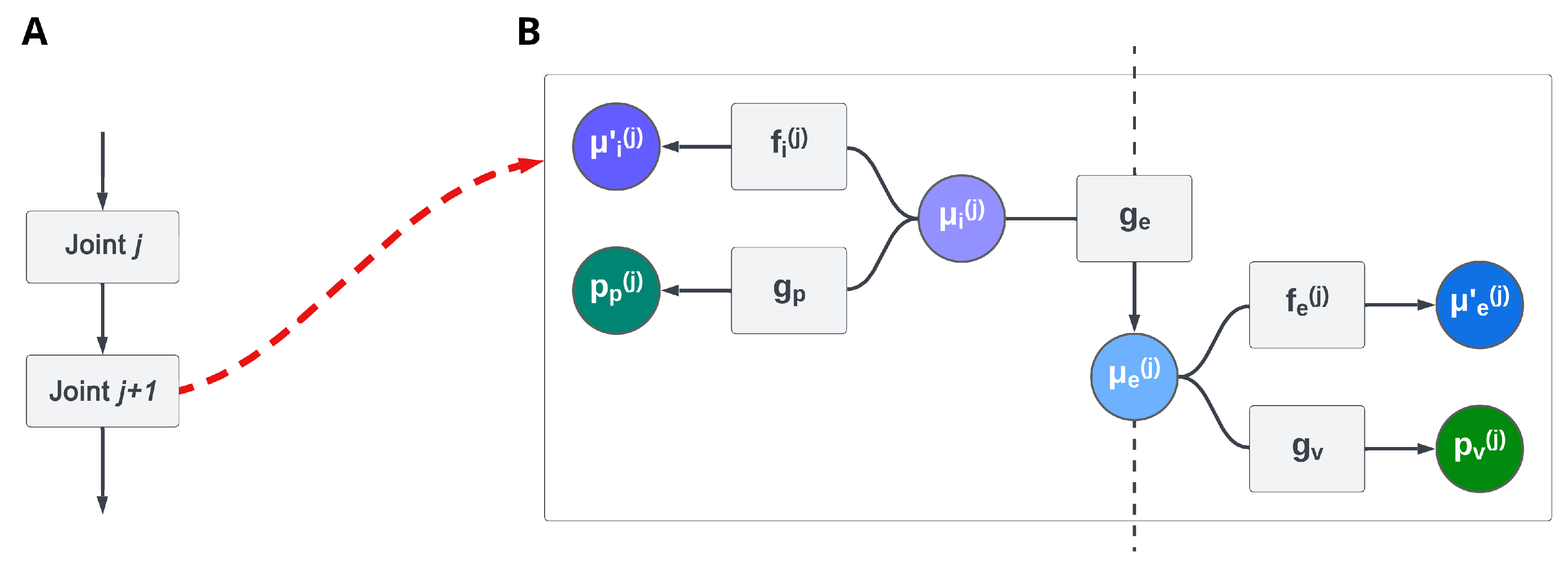

Using a hierarchical kinematic model based on Active Inference, which includes both intrinsic (e.g., joint angles and limb lengths) and extrinsic (e.g., Cartesian positions) coordinates, affords efficient control of a simulated body [21]. The extrinsic configuration of the motor plant is computed hierarchically, as shown in Figure 2. For the relatively simple kinematic control tasks targeted in [21], these computations required only two simple transformations between reference frames, namely translations and rotations. However, a hierarchical kinematic model can easily be extended to more complex tasks that require different transformations.

In robotics, transformations between reference frames are usually realized by multiplication with a linear transformation matrix. These operations can be decomposed into simpler steps in which homogeneous coordinates are multiplied by one transformation at a time along a chain, allowing for more efficient computations. Specifically, if the x and y axes define a Cartesian plane, a homogeneous representation augments each point with an additional coordinate w, so that points live in a projective space. In this new system, multiplying all coordinates of a point by the same nonzero factor leaves the represented point unaltered, i.e., $(x, y, w) \sim (\alpha x, \alpha y, \alpha w)$ for any $\alpha \neq 0$; the Cartesian point is recovered as $(x/w, y/w)$.
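The scale invariance of homogeneous coordinates can be checked with a few lines of plain Python; the helper names `to_homogeneous` and `to_cartesian` are illustrative.

```python
def to_homogeneous(point, w=1.0):
    """Embed a Cartesian point (x, y) as (w*x, w*y, w) for any nonzero w."""
    x, y = point
    return (w * x, w * y, w)


def to_cartesian(h):
    """Recover (x/w, y/w); w = 0 encodes a point at infinity."""
    x, y, w = h
    if w == 0:
        raise ValueError("w = 0: point at infinity has no Cartesian counterpart")
    return (x / w, y / w)


# Different scalings of the same homogeneous vector represent the same point.
print(to_cartesian(to_homogeneous((2.0, 3.0))))       # (2.0, 3.0)
print(to_cartesian(to_homogeneous((2.0, 3.0), w=5)))  # (2.0, 3.0)
print(to_cartesian((4.0, 6.0, 2.0)))                  # (2.0, 3.0)
```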

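Affine transformations preserve parallel lines and take the following form:

$$\begin{bmatrix} x' \\ y' \\ 1 \end{bmatrix} = \begin{bmatrix} a_{11} & a_{12} & a_{13} \\ a_{21} & a_{22} & a_{23} \\ 0 & 0 & 1 \end{bmatrix} \begin{bmatrix} x \\ y \\ 1 \end{bmatrix}$$

where the last row ensures that every point always maps to the same plane ($w = 1$). By chaining transformations, a point in the plane $\mathbf{p} = [x, y, 1]^\top$ can be rotated and translated by multiplying the corresponding matrices:

$$\mathbf{p}' = \begin{bmatrix} 1 & 0 & t_x \\ 0 & 1 & t_y \\ 0 & 0 & 1 \end{bmatrix} \begin{bmatrix} c_\theta & -s_\theta & 0 \\ s_\theta & c_\theta & 0 \\ 0 & 0 & 1 \end{bmatrix} \mathbf{p} = \begin{bmatrix} c_\theta & -s_\theta & t_x \\ s_\theta & c_\theta & t_y \\ 0 & 0 & 1 \end{bmatrix} \mathbf{p}$$

where $s_\theta$ and $c_\theta$ are the sine and cosine of the rotation angle $\theta$, and $t_x$ and $t_y$ are the coordinates of the translation.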
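The roto-translation can be sketched with plain nested lists (no external libraries); `rotation`, `translation`, and `transform` are hypothetical helper names, and the product translation·rotation means that the point is rotated first and then translated.

```python
import math


def matmul(a, b):
    """Plain-Python matrix product for small nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def rotation(theta):
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def translation(tx, ty):
    return [[1.0, 0.0, tx], [0.0, 1.0, ty], [0.0, 0.0, 1.0]]


def transform(m, point):
    """Apply a 3x3 homogeneous matrix to a Cartesian point."""
    x, y, w = (row[0] for row in matmul(m, [[point[0]], [point[1]], [1.0]]))
    return (x / w, y / w)


# Rotate by 90 degrees about the origin, then translate by (2, 0).
roto_translation = matmul(translation(2.0, 0.0), rotation(math.pi / 2))
print(roto_translation[2])  # [0.0, 0.0, 1.0]: the last row keeps w = 1
px, py = transform(roto_translation, (1.0, 0.0))
print(round(px, 9), round(py, 9))  # 2.0 1.0
```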

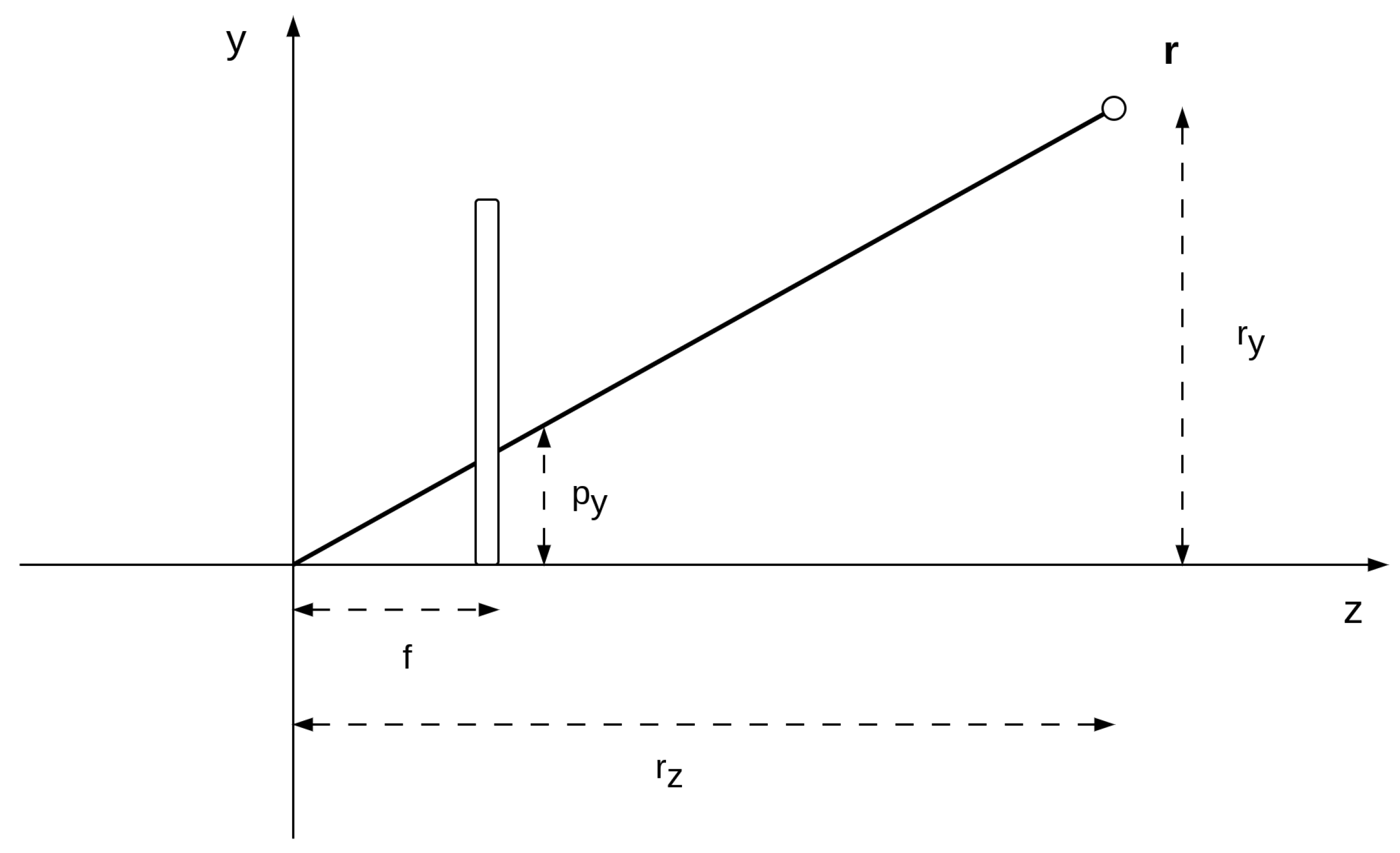

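By appropriately changing the values of the matrix, additional affine transformations such as shearing or scaling can be obtained. Critically, if the last row is modified, it is possible to realize perspective projections:

$$\begin{bmatrix} x' \\ y' \\ w' \end{bmatrix} = \begin{bmatrix} a_{11} & a_{12} & a_{13} \\ a_{21} & a_{22} & a_{23} \\ a_{31} & a_{32} & a_{33} \end{bmatrix} \begin{bmatrix} x \\ y \\ 1 \end{bmatrix}$$

such that the new point is no longer mapped on the same plane ($w' \neq 1$ in general). Thus, to map it back to the Cartesian plane, we can divide the x and y coordinates by the last element:

$$\left(\frac{x'}{w'}, \frac{y'}{w'}\right)$$

This special transformation is critical for computer vision, as it allows points to be projected onto an image plane or the depth of an object to be estimated. Given a 3D point $\mathbf{P} = [x, y, z, 1]^\top$ expressed in homogeneous coordinates, we can obtain the corresponding 2D point $\mathbf{p}$ projected onto the camera plane by first performing a roto-translation similar to Equation (12) through a matrix that encodes the location and orientation of the camera (i.e., the extrinsic parameters):

$$\mathbf{P}_c = \begin{bmatrix} x_c \\ y_c \\ z_c \\ 1 \end{bmatrix} = \begin{bmatrix} \mathbf{R} & \mathbf{t} \\ \mathbf{0}^\top & 1 \end{bmatrix} \mathbf{P}$$

and then scaling and converting the point to 2D through the so-called camera matrix:

$$\hat{\mathbf{p}} = \begin{bmatrix} f & 0 & 0 & 0 \\ 0 & f & 0 & 0 \\ 0 & 0 & 1 & 0 \end{bmatrix} \mathbf{P}_c = \begin{bmatrix} f x_c \\ f y_c \\ z_c \end{bmatrix}$$

where the focal length $f$ represents the distance of the image plane from the origin. As before, because the homogeneous representation is defined up to a scale factor, to transform the point $\hat{\mathbf{p}}$ into Cartesian space we divide the camera coordinates by the depth coordinate $z_c$, as shown in Figure 3:

$$\mathbf{p} = \left(\frac{f x_c}{z_c}, \frac{f y_c}{z_c}\right)$$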
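The pinhole pipeline (extrinsic roto-translation, camera matrix, division by depth) can be sketched as follows, assuming a camera matrix that scales $x_c$ and $y_c$ by $f$ and keeps $z_c$ as the homogeneous coordinate; `project` and `identity_pose` are illustrative names.

```python
def matmul(a, b):
    """Plain-Python matrix product for small nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def project(point_3d, extrinsic, f):
    """Project a 3D point with a 4x4 extrinsic matrix and a 3x4 camera matrix.

    Returns the Cartesian image coordinates (f*x_c/z_c, f*y_c/z_c).
    """
    P = [[point_3d[0]], [point_3d[1]], [point_3d[2]], [1.0]]  # homogeneous 3D point
    P_c = matmul(extrinsic, P)                                 # camera coordinates
    K = [[f, 0.0, 0.0, 0.0],
         [0.0, f, 0.0, 0.0],
         [0.0, 0.0, 1.0, 0.0]]                                 # camera matrix
    u, v, w = (row[0] for row in matmul(K, P_c))
    if w <= 0:
        raise ValueError("point is not in front of the camera")
    return (u / w, v / w)  # divide by the depth coordinate z_c


identity_pose = [[1.0, 0.0, 0.0, 0.0],
                 [0.0, 1.0, 0.0, 0.0],
                 [0.0, 0.0, 1.0, 0.0],
                 [0.0, 0.0, 0.0, 1.0]]
print(project((1.0, 2.0, 4.0), identity_pose, f=2.0))  # (0.5, 1.0)
print(project((1.0, 2.0, 8.0), identity_pose, f=2.0))  # (0.25, 0.5): twice as far, half as large
```

The second call shows why depth is recoverable in principle: the projected size shrinks in inverse proportion to $z_c$.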

Keeping the above in mind, we can generalize the deep kinematic model of [21] by assuming that each level sequentially applies a series of homogeneous transformations. Specifically, the first belief at each level contains information about a particular transformation (e.g., by which angle to rotate or by which length to translate a point). This belief then generates a homogeneous transformation relative to that Degree of Freedom (DoF), which is multiplied by a second belief expressed in a particular reference frame, as exemplified in Figure 4.

This multiplicative structure leads to simple gradient computations through the generated prediction error, which is used to iteratively update the two beliefs; these updates involve the element-wise product ⊙.
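One level of this scheme can be sketched for a 2D rotation, under assumptions of our own rather than the model's exact update equations: the first belief is a rotation angle $\theta$, the second is a point $\mathbf{p}$, the next-level belief is a fixed target $\mathbf{q}$, the prediction error is $\boldsymbol{\varepsilon} = \mathbf{q} - \mathbf{R}(\theta)\mathbf{p}$ with a diagonal precision vector (so precision weighting is an element-wise product), and $\mathbf{p}$ carries a Gaussian prior that keeps the joint problem well posed. Both beliefs follow the negative free-energy gradient: $\theta$ receives $(\partial_\theta \mathbf{R}\,\mathbf{p})^\top(\boldsymbol{\pi} \odot \boldsymbol{\varepsilon})$ and $\mathbf{p}$ receives $\mathbf{R}(\theta)^\top(\boldsymbol{\pi} \odot \boldsymbol{\varepsilon})$. All function names are hypothetical.

```python
import math


def rot(theta):
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s], [s, c]]


def d_rot(theta):
    """Derivative of rot(theta) with respect to theta."""
    c, s = math.cos(theta), math.sin(theta)
    return [[-s, -c], [c, -s]]


def mat_vec(m, v):
    return [m[0][0] * v[0] + m[0][1] * v[1], m[1][0] * v[0] + m[1][1] * v[1]]


def mat_t_vec(m, v):
    """Transpose(m) @ v."""
    return [m[0][0] * v[0] + m[1][0] * v[1], m[0][1] * v[0] + m[1][1] * v[1]]


def infer_level(q, p_prior, prec, prec_prior, lr=0.1, steps=2000):
    """Jointly update a transformation belief theta and a point belief p so that
    rot(theta) @ p predicts the next-level belief q (held fixed here)."""
    theta, p = 0.0, list(p_prior)
    for _ in range(steps):
        pred = mat_vec(rot(theta), p)
        eps = [q[i] - pred[i] for i in range(2)]       # prediction error
        w_eps = [prec[i] * eps[i] for i in range(2)]  # prec ⊙ eps (diagonal precision)
        # Transformation belief: (dR/dtheta @ p)^T (prec ⊙ eps)
        g_theta = sum(a * b for a, b in zip(mat_vec(d_rot(theta), p), w_eps))
        # Point belief: R^T (prec ⊙ eps), plus its own prior prediction error
        g_p = mat_t_vec(rot(theta), w_eps)
        theta += lr * g_theta
        p = [p[i] + lr * (g_p[i] - prec_prior * (p[i] - p_prior[i])) for i in range(2)]
    return theta, p


q = mat_vec(rot(0.5), [1.0, 0.0])  # next-level belief generated with theta = 0.5
theta, p = infer_level(q, p_prior=[1.0, 0.0], prec=[1.0, 1.0], prec_prior=1.0)
print(round(theta, 4))  # 0.5
```

Swapping `rot`/`d_rot` for a translation or projection matrix and its derivative would give the corresponding level for another DoF.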

3.2. A Hierarchical Generative Model for Binocular Depth Estimation

In this section, we explain how depth estimation arises by inverting the projective predictions of the two eyes using a hierarchical generative model. For simplicity, we consider an agent interacting with a 2D world, where the depth is the x coordinate. Nonetheless, the same approach could be used to estimate the depth of a 3D object. We construct the generative model hierarchically, starting from a belief about the absolute 2D position of an object encoded in homogeneous coordinates, whose x component is the depth belief. Then, two parallel pathways receive the eye angles, encoded in a common vergence-accommodation belief, and transform the absolute coordinates of the object into the two reference frames relative to the eyes, generating specular predictions for the two eyes. Each pathway applies the homogeneous transformation corresponding to the extrinsic parameters of its camera, which depends on the distance between the eye and the origin (i.e., the midpoint between the eyes) and on the absolute angle of that eye.

Each of these beliefs generates a prediction over a point projected to the corresponding camera plane.
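The eye-relative transformation can be sketched for the 2D world, assuming (our convention, not necessarily the model's) that the eyes sit at $(0, \pm d)$, that each absolute eye angle is measured from the x (depth) axis, and that the extrinsic matrix is the inverse of the eye pose. The sketch checks two properties: fixating eyes project the target onto the center of both camera planes, while parallel eyes see a disparity of $2df/x$. The names `extrinsic`, `fixation_angle`, and `HALF_BASELINE` are hypothetical.

```python
import math


def extrinsic(theta, eye_y):
    """World-to-eye homogeneous transform (inverse of the eye pose [R c; 0 1]).

    The eye sits at (0, eye_y) and its optical axis makes the absolute angle
    theta with the x (depth) axis.
    """
    c, s = math.cos(theta), math.sin(theta)
    return [[c, s, -s * eye_y],
            [-s, c, -c * eye_y],
            [0.0, 0.0, 1.0]]


def project(point, theta, eye_y, f=1.0):
    """Project an absolute 2D point onto the 1D camera plane of one eye."""
    t = extrinsic(theta, eye_y)
    h = (point[0], point[1], 1.0)
    x_e, y_e, _ = (sum(t[i][j] * h[j] for j in range(3)) for i in range(3))
    return f * y_e / x_e  # x_e: depth along the optical axis, y_e: lateral offset


def fixation_angle(point, eye_y):
    """Absolute angle that points the eye at (0, eye_y) toward `point`."""
    return math.atan2(point[1] - eye_y, point[0])


HALF_BASELINE = 0.5  # hypothetical eye-to-origin distance d
EYES = {"left": HALF_BASELINE, "right": -HALF_BASELINE}
target = (4.0, 1.0)

# Fixating eyes: the target projects onto the center of both camera planes.
angles = {name: fixation_angle(target, y) for name, y in EYES.items()}
fixated = {name: project(target, angles[name], y) for name, y in EYES.items()}
vergence = angles["right"] - angles["left"]  # sign convention assumed for this sketch

# Parallel eyes (theta = 0): the projections differ by a disparity of 2*d*f/depth.
parallel = {name: project(target, 0.0, y) for name, y in EYES.items()}
disparity = parallel["right"] - parallel["left"]
print(fixated, math.degrees(vergence), disparity)
```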

Figure 5 provides a neural-level illustration of the model, with the two branches originating from the two beliefs at the top. Note that while the eye angles belief generates separate predictions for the two eyes, proprioceptive predictions directly encode angles in the vergence-accommodation system, which is used for action execution [10].

The absolute point belief (encoded in generalized coordinates up to the second order) is updated by combining the precision-weighted prediction errors of the beliefs below with the precision-weighted prediction error of its own dynamics, defined through a dynamics function.

Thus, this belief is subject to different prediction errors coming from the two eyes, and the depth of the point is estimated by averaging these two pathways. Furthermore, an attractor can be defined in the dynamics function if one wishes to control the object encoded in absolute coordinates, e.g., for reaching or grasping tasks.
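The averaging of the two pathways can be sketched with parallel eyes (as in the infer parallel condition), under our own simplifying assumptions: eyes at $(0, \pm 1)$, unit focal length, noiseless observations, and plain gradient descent on a belief over (depth, lateral position) driven by the summed precision-weighted prediction errors of the two eyes. A single eye cannot determine depth (one projection, two unknowns); only the combination of both pathways can. Names and parameters are illustrative.

```python
def predict(mu, eye_y, f=1.0):
    """Projection of the belief mu = (depth, lateral) onto an eye at (0, eye_y)
    whose optical axis is parallel to the depth axis."""
    depth, lateral = mu
    return f * (lateral - eye_y) / depth


def grad_predict(mu, eye_y, f=1.0):
    """Partial derivatives of `predict` with respect to (depth, lateral)."""
    depth, lateral = mu
    return (-f * (lateral - eye_y) / depth ** 2, f / depth)


def estimate_depth(observations, eyes, mu0, precision=1.0, lr=0.5, steps=80000):
    mu = list(mu0)
    for _ in range(steps):
        update = [0.0, 0.0]
        for eye_y, u_obs in zip(eyes, observations):
            eps = u_obs - predict(mu, eye_y)  # visual prediction error of this eye
            g = grad_predict(mu, eye_y)
            for i in range(2):
                update[i] += g[i] * precision * eps  # each eye contributes one pathway
        mu = [mu[i] + lr * update[i] for i in range(2)]
    return mu


eyes = (1.0, -1.0)  # hypothetical eye positions (left, right)
true_target = (5.0, 0.5)
observations = [predict(true_target, e) for e in eyes]
depth, lateral = estimate_depth(observations, eyes, mu0=(2.0, 0.0))
print(round(depth, 3), round(lateral, 3))
```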

Similarly, the belief over the eye angles is updated by combining the proprioceptive prediction error, i.e., the discrepancy between the proprioceptive observation and the prediction of the proprioceptive likelihood function (which in the following simulations is an identity mapping), weighted by the proprioceptive precision, with the precision-weighted prediction error of the belief dynamics, defined through a dynamics function.

In addition to the proprioceptive contribution, this belief is subject to the same prediction errors, in the same way, as the absolute belief. In this way, the overall free energy can be minimized through two different pathways: (i) by changing the belief about the absolute location of the object (including its depth), or (ii) by modifying the fixation angle of the eyes. As shown in the next section, the availability of both pathways may create stability issues during goal-directed movements.

In such a case, an attractor can be specified in the dynamics function in order to explicitly control the dynamics of the eyes, e.g., not by fixating on a point on the camera plane but by rotating the eyes along a particular direction or by a particular angle.

Finally, the belief over the projected point is updated by combining three contributions: the precision-weighted prediction errors of the beliefs below; the visual prediction error, i.e., the discrepancy between the visual observation and the prediction of the visual likelihood function, weighted by the visual precision; and the precision-weighted prediction errors of the belief dynamics, defined through a dynamics function. Note that in the following simulations we approximate the visual likelihood function by a simple identity mapping, meaning that the visual observation conveys a Cartesian position.

Unlike the belief over the eye angles, which is only biased by the likelihoods of the levels below, this belief is subject to both a prior encoded in the level above and a visual likelihood from the sensory observation.

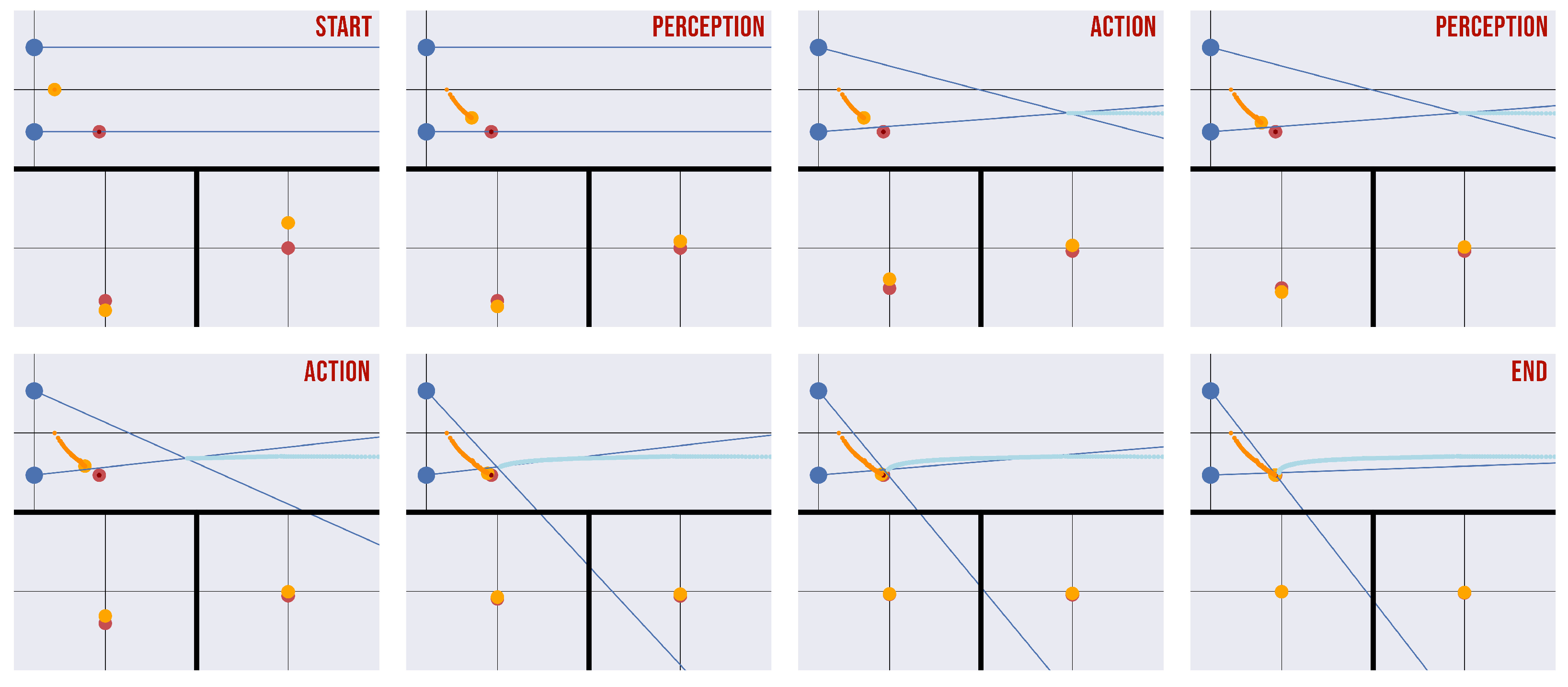

3.3. Active Vision and Target Fixation with Action–Perception Cycles

The model advanced here can not only infer the depth of a point but also fixate on it using active vision. This is possible by specifying an appropriate attractor in the dynamics function of the last belief (the projected point), or in other words, by setting an “intention” in both eyes [22] such that the projected position is at the center of the camera planes.

In short, the dynamics function returns a velocity encoding the difference between the center of the camera plane and the current belief (both expressed in homogeneous coordinates), multiplied by an attractor gain. Thus, the agent expects the projected point to be pulled toward the center with a velocity proportional to this gain. The generated prediction errors then travel back through the hierarchy, affecting both the absolute and eye angle beliefs. Because what we want in this case is to modify the latter pathway (which directly generates proprioceptive predictions for movement), the former pathway can be problematic. In fact, if the absolute belief already encodes the correct depth of the object, fixation occurs very rapidly; if this is not the case, however, the prediction errors of the projections are free to flow through all the open pathways, driving the beliefs in different directions and causing the free energy minimization process to become stuck with an incorrect depth coordinate and eye angles [23].
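The attractor can be sketched for one eye, assuming a 1D camera plane whose points are written in homogeneous coordinates $(u, 1)$ with center $(0, 1)$; `attractor_velocity` and `follow_attractor` are hypothetical names. In the model this velocity is only an expectation, whose mismatch with the belief's actual motion generates the prediction errors discussed above; here we simply integrate the expected trajectory.

```python
def attractor_velocity(mu_c, gain, center=(0.0, 1.0)):
    """Hypothetical dynamics function f(mu_c) = gain * (center - mu_c).

    mu_c is a projected point on a 1D camera plane in homogeneous coordinates
    (u, 1); because the center also has w = 1, the w component never moves.
    """
    return tuple(gain * (o - m) for o, m in zip(center, mu_c))


def follow_attractor(mu_c, gain, dt=0.01, steps=500):
    """Euler-integrate the expected trajectory of the projected point."""
    for _ in range(steps):
        v = attractor_velocity(mu_c, gain)
        mu_c = tuple(m + dt * dm for m, dm in zip(mu_c, v))
    return mu_c


print(attractor_velocity((0.4, 1.0), gain=2.0))  # (-0.8, 0.0): velocity scales with the gain
final = follow_attractor((0.4, 1.0), gain=2.0)
print(final)
```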

This abnormal behavior can be avoided by decomposing the task into cyclical phases of action and perception [24]. During an action phase, the absolute belief is kept fixed, meaning that the prediction errors of the projections can only flow towards the belief over the eye angles, so that the eyes move according to the depth belief. During a perception phase, action is blocked (by setting either the attractor gain or the proprioceptive precision to zero), while the prediction errors are free to flow in any direction, pushing the depth belief toward the correct value signaled by the sensory observations. In this way, depth estimation is achieved through multiple action and perception cycles until the overall free energy is minimized.
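The phase alternation can be sketched as a schedule that gates which pathway the prediction errors of the projections may use. The 100-step period matches the value used in the simulations; starting with a perception phase, blocking action through the attractor gain (rather than the proprioceptive precision), and the names `Gates` and `gates_at` are assumptions of this sketch.

```python
from dataclasses import dataclass


@dataclass
class Gates:
    phase: str
    update_absolute: bool  # may the absolute (depth) belief change?
    attractor_gain: float  # gain of the fixation attractor (0 blocks action)


def gates_at(t, period=100, gain=1.0):
    """Alternate perception and action phases every `period` time steps."""
    if (t // period) % 2 == 0:
        # Perception: action blocked, prediction errors flow to all beliefs.
        return Gates("perception", update_absolute=True, attractor_gain=0.0)
    # Action: absolute belief frozen, errors only reach the eye-angle belief.
    return Gates("action", update_absolute=False, attractor_gain=gain)


phases = [gates_at(t).phase for t in (0, 99, 100, 199, 200)]
print(phases)  # ['perception', 'perception', 'action', 'action', 'perception']
```

Inside a simulation loop, the absolute-belief update would simply be skipped when `update_absolute` is false, and the fixation attractor would be scaled by `attractor_gain`.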

Figure 6 shows a sequence of time frames of a depth estimation task in which the (perceptual) process of depth estimation and the (active) process of target fixation cyclically alternate in different phases every 100 time steps. As can be seen from the visualization of the point projections, the distance between the real and estimated target positions slowly decreases while both positions approach the center of the camera planes, affording efficient depth estimation.

3.4. Model Comparison

We tested the model introduced in Section 3.2 and Section 3.3 in a depth estimation task that consists of inferring the 2D position of the object shown in Figure 6. We compared three versions of the model. In the first version, the eyes are kept fixed and parallel to one another (infer parallel). In the second and third versions, the model can actively control the eye angles, which are initialized either to fixate on the correct target position (infer vergence) or at a random location (active vision, as displayed in Figure 6).

Furthermore, the fovea of the simulated “eye” can have either a uniform or a nonuniform resolution; in the latter condition, the object is represented with greater accuracy when it is near the point of fixation. This reflects the fact that the biological fovea has far more receptors at the center than in peripheral vision, which has previously been modeled with an exponential link [25]. Specifically, in our implementation (i.e., in the generative process), the variability of the Gaussian error in the visual observations increases exponentially with the distance d between the point of fixation and the real target position:

where

k is a scaling factor which was equal to

in our simulations. In the uniform condition, the visual noise was set to zero.

Figure 7 shows the results of the simulations, including the accuracy (the number of trials in which the agent successfully predicts the 2D position of the target; left panel), the mean error (the distance between the real and estimated target positions at the end of every trial; middle panel), and the estimation time (the number of steps needed to correctly estimate the target; right panel). The number of time steps for each phase was set to 100, as before. The figure shows that depth estimation with parallel eyes (infer parallel) in the nonuniform condition results in very low accuracy, especially when the target position is far from the fixation point. This is to be expected, as in this condition the fovea has low resolution at the periphery. If the eyes are instead set to fixate on the target (infer vergence), the accuracy is much higher and few fluctuations occur. Finally, the active vision model, which simultaneously implements depth estimation and target fixation, achieves a level of performance almost on par with the model in which fixation is initialized at the correct position. Indeed, the only appreciable difference between the last two conditions is the slightly greater number of time steps in the active vision condition.

This pattern of results shows three main things. First, the hierarchical Active Inference model is able to solve the depth estimation problem, as evident from its perfect accuracy in the task. Second, the model is able to infer depth while simultaneously selecting the best way to sample its preferred stimuli, i.e., by fixating on the target. This is possible because during a trial (as exemplified graphically in Figure 6) the active vision model obtains increasingly accurate estimates of the depth as the point of fixation approaches the target. Third, this pattern of results emerges because of the nonuniform resolution of the fovea. In fact, if the foveal resolution is assumed to be uniform (as in camera models of artificial agents), the best accuracy is achieved by keeping the eyes parallel (Figure 7, infer parallel condition). In this case, fixating on the target does not help depth estimation and indeed hinders and slows it down, probably because of the increased effort that the agent needs to make in order to infer the reference frames of the eyes when they are rotated in different directions. This further increases the time needed for the active vision model to estimate the depth.

Intuitively, the better performance in the uniform condition is due to the lack of noise in the visual input. Although a more realistic scenario would include noise in this case as well, it is reasonable to assume that its amplitude would be much smaller given the uniform resolution. Considering only the nonuniform condition, the better performance of infer vergence relative to active vision could be due to the fact that in the former case the agent starts the inference process from a state of fixation on the correct position. Thus, in the infer vergence condition the agent only needs to estimate the object's depth. Comparing these two scenarios, it can be noted that active vision performs almost optimally, similar to the infer vergence condition in which the eye angles are set to the correct values for object fixation. However, the latter condition rarely occurs in a realistic setting, and a more meaningful comparison would be between active vision and the more general case in which the agent is fixating on another object or not fixating on anything in particular, which we approximate with the infer parallel simulation.

4. Discussion

We have advanced a hierarchical Active Inference model for depth estimation and target fixation operating in the state space of the projected camera planes. Our results show that depth estimation can be solved by inference, that is, by inverting a hierarchical generative model that predicts the projections on the two eyes from a 2D belief over an object. Furthermore, our results show that active vision dynamics makes the inference particularly effective and that, with a nonuniform foveal resolution, fixating the target drastically improves task accuracy (see Figure 7). Crucially, the proposed model can be implemented in biologically plausible neural circuits for Predictive Coding [8,9,10], which only require local (top-down and bottom-up) message passing. From a technical perspective, our model shows that inference can be realized iteratively for any homogeneous transformation by combining generative models at different levels, each of which computes a specific transformation, for instance, a roto-translation for kinematic inference [21] or a projection for computer vision.

This proposal has several elements of novelty compared to previous approaches to depth estimation. First, by focusing on inference and local message passing, our proposal departs from the trend of viewing cortical processing from a purely bottom-up perspective. The latter is common in neural network approaches, which start from the image of an object and gradually detect increasingly complex features, eventually estimating its depth. Moreover, our proposal is distinct from a direct approach that generates the depth of an object from a top-down perspective, e.g., using vergence cues. Vergence has long been considered key to facilitating binocular fusion [5] and maximizing coding efficiency in a single environmental representation [26]; however, recent studies have dramatically reduced the importance attributed to this mechanism in depth estimation. Binocular fusion might not be strictly necessary for this task [27], as 90% of depth estimation performance has been attributed to diplopia [28], and vergence has never actually been tested as an absolute distance cue with all possible confounders eliminated [28]. Moreover, when fixating a target, there is always a vertical fixation disparity between the monocular images, so that the lines of sight never precisely intersect to form a vergence angle [29]; indeed, it has been demonstrated that vergence does not correspond to the exact distance of the object being gazed at [30]. In keeping with this body of evidence, the vergence belief does not play a critical role in depth estimation in our model; it only operates along with a high-level belief over the 2D position of the object in order to predict the projections for the two eyes. These projections are compared with the visual observations, and the resulting prediction errors flow back through the hierarchy and drive the update of both beliefs (i.e., about the eye angles and about the 2D object position). This change occurs in two possible ways: (i) to the estimated depth of the object or (ii) to the vergence-accommodation angles of the eyes, ultimately realizing a specific movement. In sum, depth estimation is not a purely top-down process; rather, it is realized through the inversion of a generative projective model and by averaging the information obtained through the two parallel pathways of the eyes. Overall, our model supports the direct (from disparity to both vergence and depth) rather than the indirect (from disparity to vergence and then to depth) hypothesis of depth estimation [27]. This account is in line with the findings that small changes in vergence (Δθ) are a consequence of, and not a direct cue to, depth estimation [28], and that reflex-like vergence mechanisms serve only to eliminate small vergence errors rather than to actively transfer the gaze to new depth planes [31].

An interesting consequence of this architecture is that, in contrast to standard neural networks, it permits the imposition of priors over the depth belief in order to drive and speed up the inferential process. Such priors may come from different sensory modalities or other visual cues, e.g., motion parallax or perspective, which we have not considered here. This is in line with the finding that vergence alone is unable to predict depth with ambiguous cues [5], suggesting that the depth belief is constantly influenced by top-down mechanisms and higher-level cues and does not simply arise directly from perception. In addition to depth priors, an Active Inference model has the advantage that, if one wishes to fixate on a target, simple attractors can be defined at either the eye angle beliefs or the last projection level, each in its own domain. For example, requiring the agent to perceive the projection of an object at the center of the camera plane generates a prediction error that ultimately moves the eyes towards that object, emphasizing the importance of active sensing strategies for enhancing inference [32,33].

However, the fact that there are two open pathways through which the prediction errors of the projections can flow, i.e., the eye angles and the absolute beliefs, may be problematic in certain cases, e.g., during simultaneous depth estimation and target fixation. It is natural to think that depth estimation follows target fixation. In fact, top-down processing to verge on a target is generally not necessary: when an image is presented to a camera, the camera might be controlled directly in the projected space, resulting in simpler control [34,35]. Depth could then be computed directly from vergence cues. However, our model assumes that the eye angles generate the projections by first performing a roto-translation in 2D space using the estimated depth, which allows further mechanisms for more efficient inference (e.g., depth priors). Under this assumption, target fixation in the projected space is possible through a top-down process that is constantly biased by the high-level belief. Nonetheless, direct vergence control (not considered here) could be implemented through additional connections between the belief over the 2D or projected points and the angle beliefs.

With these considerations, it would appear plausible that the two processes of depth estimation and target fixation could run in parallel. However, when they do, the prediction errors of the projections drive the two high-level beliefs independently in directions that minimize the free energy, and the agent becomes stuck in an intermediate configuration with incorrect object depth and eye angles. One way to solve this issue, which we have pursued here, is to decompose the task into cyclical phases of action and perception [23,24]. During an action phase, the 2D belief is fixed and the agent can fixate on the predicted projections, while during a perception phase the agent can infer the 2D position of an object but is not allowed to move its gaze. Consequently, the prediction errors of the projections flow alternately in different directions (toward the 2D position and toward the eye angles) one step at a time, which results in (i) the object being pulled toward the center of the camera planes and (ii) the estimated 2D position converging toward the correct one, as shown in Figure 6. Action–perception cycles have been studied in discrete-time models of Active Inference, in which cycles of saccades and visual sampling allow an agent to reduce its uncertainty about the environment, e.g., by rapidly oscillating between different points when recognizing an object [36,37]. Here, we show that action–perception cycles are also useful in continuous-time models such as the one used here, where they ensure effective minimization of the free energy, just as they are when an agent is required to reach an object with its end-effector while inferring the lengths of its limbs [23]. In summary, recognizing a face and estimating an object's depth can both be viewed as processes of actively accumulating sensory evidence, albeit at different timescales. From a brain-centric perspective, action–perception cycles have often been associated with hippocampal theta rhythms and cortical oscillations, which might indicate a segmentation of continuous experience into discrete elements [24,38]. From a more technical perspective, the cyclical scheme that we propose, which keeps one part of the optimization problem fixed while updating the other, is commonly used in optimization algorithms such as expectation maximization [39]; a similar approach is used for learning and inference in Predictive Coding Networks [40,41].
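The alternating scheme described above can be illustrated with block-coordinate descent on a toy objective. The sketch below is not the free energy of the model: it assumes a hypothetical quadratic `F(mu, a) = 0.5*(x - mu)**2 + 0.5*(mu - a)**2`, where `x` stands in for the true object position, `mu` for the belief updated during perception, and `a` for an action variable (e.g., an eye angle) updated during action. The function names, step size, phase length, and number of cycles are arbitrary illustrative choices.

```python
def alternating_minimization(x, mu=0.0, a=0.0, lr=0.1, steps_per_phase=20, cycles=50):
    """Block-coordinate descent on the hypothetical toy objective
    F(mu, a) = 0.5*(x - mu)**2 + 0.5*(mu - a)**2."""
    for _ in range(cycles):
        # Perception phase: the action variable a is frozen; only the belief mu moves.
        for _ in range(steps_per_phase):
            grad_mu = (mu - x) + (mu - a)  # dF/dmu
            mu -= lr * grad_mu
        # Action phase: the belief mu is frozen; only the action variable a moves.
        for _ in range(steps_per_phase):
            grad_a = a - mu  # dF/da
            a -= lr * grad_a
    return mu, a


def toy_free_energy(x, mu, a):
    """Value of the toy objective (a stand-in, not the model's free energy)."""
    return 0.5 * (x - mu) ** 2 + 0.5 * (mu - a) ** 2


mu, a = alternating_minimization(x=3.0)
print(f"mu={mu:.6f}, a={a:.6f}, F={toy_free_energy(3.0, mu, a):.2e}")
```

Each phase moves only one block of variables, mirroring the idea of keeping one part of the objective fixed while updating the other. On this convex toy problem both variables converge to `x`; the sketch is not meant to reproduce the intermediate-configuration failure that simultaneous updates produce in the full model.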

Our results show that active vision improves depth estimation. However, if vergence does not provide a useful cue for depth, how is this possible? The answer lies in the nonuniform resolution of the fovea, which has far more receptors at the center of fixation than in peripheral vision. This nonuniform resolution is thought to allow sensory processing resources to be concentrated around the most relevant sources of information [42]. Under this assumption, the best performance is achieved when both eyes are fixated on the object, as shown in Figure 7. As noted in [43], when stereo cameras have a nonsymmetrical vergence angle, the error is at a minimum when the projections of a point fall at the center of the camera planes. Hence, vergence can play a key role in depth estimation while providing a unified representation of the environment. This can be appreciated by considering that the *infer vergence* and *active vision* models are more accurate than the *infer parallel* model in the nonuniform resolution condition. With a uniform resolution, the error is larger when the eyes converge on the target, because the pixels at the center subtend a larger visual angle than those in the periphery [43]. In addition to the increased error, the estimation seems to be further slowed down by the need to infer the different reference frames introduced by vergence. Taken together, in the uniform resolution scenario the best estimate is achieved with fixed parallel eyes (see Figure 7), while active vision brings no advantage to the task. Because maintaining parallel eyes in the uniform condition results in slightly higher accuracy, such simulations may be useful in understanding the cases in which verging on a target would improve model performance. This might be helpful for future studies in bio-inspired robotics, especially when extending the proposed model to implement high-level mechanisms, such as higher-level priors that result from the integration of cues from different sensory modalities or attentional mechanisms that unify visual sensations into a single experience.
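The statement that, under uniform resolution, central pixels cover a larger visual angle follows from pinhole geometry: on a flat sensor with focal length f, a pixel spanning [u0, u1] subtends atan(u1/f) - atan(u0/f), and because atan is concave for u ≥ 0, equally spaced pixels cover progressively smaller angles toward the periphery. The sketch below assumes an idealized 1D sensor with hypothetical values for f and the pixel pitch; the function name is illustrative, and the sketch does not model the nonuniform transformation of Equation (28).

```python
import math


def pixel_angular_widths(f, pitch, n_pixels):
    """Visual angle (rad) subtended by each pixel of an idealized 1D flat sensor.

    Pixel i spans [i*pitch, (i+1)*pitch] measured from the optical center outward,
    so index 0 is the central pixel and higher indices are more peripheral.
    """
    widths = []
    for i in range(n_pixels):
        u0, u1 = i * pitch, (i + 1) * pitch
        widths.append(math.atan(u1 / f) - math.atan(u0 / f))
    return widths


# Hypothetical sensor: focal length 1.0, pitch 0.1 (same units), 20 pixels per half-plane.
widths = pixel_angular_widths(f=1.0, pitch=0.1, n_pixels=20)
print(f"central pixel: {math.degrees(widths[0]):.3f} deg, "
      f"outermost pixel: {math.degrees(widths[-1]):.3f} deg")
```

Coarser angular sampling at the center means that a point imaged near the optical axis is localized less precisely, which is consistent with the explanation given above for the larger error when the eyes converge on the target under uniform resolution.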

The model presented in this study has a number of limitations that could be addressed in future studies. Notably, we used a fixed focal length *f* during all the simulations. In a more realistic setting, the focal length might be considered as another DoF of the agent and might be changed through the suppression of proprioceptive prediction errors in order to speed up the inferential process for objects at different distances. Furthermore, although the presented simulations only estimate the depth of a 2D point, the model could potentially be extended to deal with 3D objects and account for vertical binocular disparity [44]. This would involve augmenting all the latent states with the new dimension and performing a sequence of two rotations as intermediate levels before the eye projections are predicted. Then, the vergence-accommodation belief would be extended with a new DoF, allowing the agent to fixate on 3D objects. In addition, future studies might investigate how the scaling factor of the nonuniform resolution in Equation (28), along with more realistic nonuniform transformations, could affect performance and help to model human data (e.g., [25]). It could also be useful to adopt an off-center fovea on one of the two retinas and analyze the agent's behavior as it brings the two foveas onto the target. Finally, another interesting direction for future research would be to combine the architecture proposed here with a more sophisticated Active Inference kinematics model [21], for example, by embodying a humanoid robot with multiple DoFs [45,46,47,48,49]. In contrast to state-of-the-art models that provide the agent either with the 3D environment directly as a visual observation [20] or with a latent space reconstructed from a Variational Autoencoder [50], this would allow the 3D position of an object to be inferred through the projections of the eyes and then used for subsequent tasks such as reaching and grasping.
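The 3D extension outlined above (a sequence of two rotations before the eye projections are predicted) can be sketched as a forward model. This is a hypothetical illustration, not the implementation of the model: it assumes a head frame with x to the right, y up, and z forward, eyes placed at (±b/2, 0, 0), each eye oriented by a yaw (horizontal) angle followed by a pitch (vertical) angle, and an ideal pinhole projection with focal length f. All function names and parameter values are illustrative.

```python
import math


def rotate_y(v, angle):
    """Rotate vector v about the vertical (y) axis by `angle` radians."""
    x, y, z = v
    c, s = math.cos(angle), math.sin(angle)
    return (c * x + s * z, y, -s * x + c * z)


def rotate_x(v, angle):
    """Rotate vector v about the horizontal (x) axis by `angle` radians."""
    x, y, z = v
    c, s = math.cos(angle), math.sin(angle)
    return (x, c * y - s * z, s * y + c * z)


def project_to_eye(point, eye_x, yaw, pitch, f):
    """Predict the 2D projection of a 3D point on one eye's image plane.

    The eye-relative vector is expressed in the eye's frame through two successive
    rotations (yaw about y, then pitch about x) and then projected with a pinhole model.
    """
    rel = (point[0] - eye_x, point[1], point[2])
    rel = rotate_y(rel, -yaw)   # first rotation: horizontal eye angle
    rel = rotate_x(rel, pitch)  # second rotation: vertical eye angle
    x, y, z = rel
    if z <= 0:
        raise ValueError("point is behind the eye")
    return (f * x / z, f * y / z)


def fixation_angles(point, eye_x):
    """Yaw and pitch that bring `point` onto the optical axis of an eye at eye_x."""
    dx, dy, dz = point[0] - eye_x, point[1], point[2]
    yaw = math.atan2(dx, dz)
    pitch = math.atan2(dy, math.hypot(dx, dz))
    return yaw, pitch


# Hypothetical setup: baseline 0.06, focal length 0.02, target slightly right of and above center.
baseline, f = 0.06, 0.02
target = (0.1, 0.05, 1.0)
for eye_x in (-baseline / 2, baseline / 2):
    yaw, pitch = fixation_angles(target, eye_x)
    u, v = project_to_eye(target, eye_x, yaw, pitch, f)
    print(f"eye at x={eye_x:+.2f}: yaw={yaw:+.4f}, pitch={pitch:+.4f}, projection=({u:.1e}, {v:.1e})")
```

When both eyes adopt their fixation angles, the target projects onto the center of each image plane, which is the configuration that a 3D vergence-accommodation belief would aim for; the two yaw angles differ because each eye must converge on the target from its own position.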