Abstract

To address the problems of the Harris Hawks Optimization (HHO) algorithm, including a high out-of-bounds rate for position updates on non-origin-symmetric intervals, low search efficiency, slow convergence speed, and low precision, an Improved Harris Hawks Optimization (IHHO) algorithm is proposed. In this algorithm, a circle map replaces the pseudo-random initial population, which reduces the number of individuals initialized on the boundary and improves the efficiency of the position update. A random guidance strategy increases the information exchange between populations and reduces out-of-bounds position updates. At the same time, an improved sine-trend search strategy is introduced to improve the search performance and further reduce the out-of-bounds rate. Then, a nonlinear jump strength that combines the escape energy with the jump strength is proposed to improve the convergence accuracy of the algorithm. Finally, simulation experiments are carried out on benchmark test functions and on a path planning application for a 2D grid map. The results show that IHHO is more competitive in solution accuracy, convergence speed, and search efficiency on non-origin-symmetric intervals, and they verify the feasibility and effectiveness of IHHO for grid map path planning.

1. Introduction

Intelligent agent path planning is currently one of the hottest research topics. In fact, path planning has been widely used in multiple fields, such as mobile robots [1,2,3], UAVs [4], and urban planning [5]. Especially in smart factories, efficient path planning can significantly save time and reduce costs. Path planning can be considered a combinatorial optimization problem. The solution to this type of problem is usually based on traditional algorithms and intelligent algorithms. Traditional algorithms include the A* (A-star) algorithm [6,7], Dijkstra algorithm [8], artificial potential field [9], Rapidly-exploring Random Trees (RRT) [10,11], etc., whereas intelligent algorithms include the Particle Swarm Optimization (PSO) [12], Genetic Algorithm (GA) [13], Grey Wolf Optimizer algorithm (GWO) [14], Artificial Fish Swarm Algorithm (AFSA) [15], Whale Optimization Algorithm (WOA) [16], etc. Due to the simple structure, fast convergence speed, high search precision, and simple operation of the swarm intelligence algorithm, it is currently used in many practical projects [17,18]. Wang et al. proposed an adaptive and balanced Grey Wolf Optimization algorithm, which uses a novel level-based strategy to select random wolves to improve the exploration energy of the algorithm and introduces an adaptive coefficient to dynamically adjust the exploration and exploitation capabilities of different optimization stages. It is used to find the optimal feature subset for high-dimensional classification [19]. Liu et al. proposed a differential evolutionary chaotic Whale Optimization Algorithm, which increases the diversity of the population by introducing the initial population of sine chaos theory. A new adaptive inertia weight is introduced to improve the optimization performance of the algorithm, and the differential variance algorithm is integrated to improve the search speed and accuracy of the algorithm. 
The improved algorithm is applied to distribution network fault location on the IEEE 33-node system [20]. Tajziehchi et al. proposed a new approach for selecting and scaling optimal accelerographs for dynamic time history analysis, using a binary genetic algorithm and natural numbers to obtain a mean response spectrum that closely matches the target spectrum representing the expected earthquake at the site [21]. In recent years, there has been a lot of research on 2D path planning and mobile robots [1,2,3], especially on 2D mobile robot path planning [22,23,24,25,26]. Mainstream mobile robot path planning is based on the grid map. 2D path planning methods are generally divided into classical algorithms and nature-inspired heuristic algorithms. Classical algorithms suffer from high computational cost and low computational efficiency, whereas heuristic algorithms respond quickly and are more suitable for optimizing local path planning [27]. Therefore, nature-inspired heuristic algorithms are the more suitable approach for two-dimensional grid map path planning.

The Harris Hawks Optimization algorithm is a swarm intelligence algorithm proposed by Heidari et al. in 2019 [28]. This algorithm simulates the special hunting behavior of the Harris hawk population. The HHO algorithm has a total of six phases: two exploration phases and four exploitation phases. In order to find the optimal solution, HHO randomly executes one of the six stages. Due to its powerful structure, its original performance is far superior to many recognized methods, such as the PSO, GWO, AFSA, and WOA algorithms [29]. Compared with these popular methods, the results of HHO are of relatively high quality. This feature also supports why it is widely used [30]. At the same time, the HHO algorithm also has the characteristics of good convergence speed, powerful neighborhood search, good balance between exploration and exploitation, suitability for many problems, easy implementation, and so on [31]. Therefore, HHO has been applied to convolutional neural network structures [32], image segmentation [33,34], UAV path planning [35], and PM prediction [36], among other fields. However, similar to other swarm intelligence algorithms, the Harris hawks algorithm also has its own drawbacks, such as being prone to falling into local optima, jumping out of the interval in non-origin symmetric intervals, and having low convergence accuracy. With the emergence of more and more highly complex problems, many scholars have tried to improve HHO to enhance its performance. Zhang et al. explored six escaping energy and proposed MHHO, which proves the superiority and effectiveness of the exponential decreasing strategy [37]. Liu et al. introduced square neighborhood topology and random arrays to solve the imbalance problem in the HHO exploration phase and exploitation phase and improve the algorithm’s convergence accuracy and optimization ability [38]. Zhu et al. 
proposed a method based on chemotaxis correction to improve the HHO algorithm, addressing its slow convergence speed and its tendency to fall into local optima [39]. Sihwail et al. proposed a new search mechanism with an elite opposition-based learning strategy to avoid falling into local optima and enhance search ability [40]. Because the HHO algorithm has low population diversity and a single search method during the exploration phase, Zhang et al. proposed the ADHHO algorithm, based on adaptive cooperative foraging and dispersed foraging, to improve the stability of the algorithm [41]. Although these improvements to the HHO algorithm have achieved good results, the No Free Lunch (NFL) theorem implies that no single algorithm can handle all optimization problems [42]. Moreover, these improvements are not suited to path planning problems on non-origin-symmetric intervals.

As engineering problems become more complex and automation becomes more widespread, the environment that path planning technology faces will become more complex too, requiring it to adapt to the environment. However, using traditional strategies, such as the A* (A-star) algorithm and the Dijkstra algorithm, to implement path planning is far from sufficient. Currently, many scholars have conducted research on path planning by applying swarm intelligence algorithms [43] and other strategies. For example, Liu X et al. designed a hybrid path planning algorithm based on optimized reinforcement learning and improved particle swarm optimization. This algorithm optimizes the hyper-parameters of reinforcement learning to make it converge quickly and learn more efficiently. A preset operation was designed for the particle swarm algorithm to reduce the calculation of invalid particles, and the algorithm proposes a correction variable obtained from the cumulative reward of reinforcement learning; this corrects the adaptation of the individual optimal particle and the global optimal position of the particle swarm optimization degree to obtain effective path planning results. Also designed was a selection parameter system to help choose the optimal path [22]. Lab X et al. proposed a method based on the improved A-star ant colony algorithm, using the A-star algorithm to optimize the initial pheromone of the ant colony to improve the convergence speed of the algorithm and, at the same time, increase the enhancement coefficient of the ant colony pheromone to avoid the late stage of the ant colony algorithm’s excess accumulation of pheromones, leading to local optimization problems [23]. Szczepanski R et al. 
used the artificial bee colony algorithm to optimize the path globally and then used the Dijkstra algorithm to select the shortest path, making it possible to obtain at least a satisfactory path in the workspace with a small computational workload in real-time path planning [24]. Xiang D et al. improved the evaluation function of the A* algorithm to increase its convergence speed, removed unnecessary nodes to reduce the path length, and used a greedy mechanism to insert unselected nodes one by one into the optimal path, so that multiple target points are planned in a single pass [25]. Zhang Z et al. proposed an improved sparrow search algorithm that adds a new neighborhood search strategy and a new position update function to improve the fitness value and the convergence speed of the global individual, respectively, yielding high-quality paths and fast convergence. They also proposed a linear path strategy so that the robot can reach the goal faster [26]. Although the aforementioned research has achieved good results in the path planning of mobile robots, it also has some shortcomings. For example, path planning is implemented by rounding the coordinates to follow the grid map, which may increase the length of the path. In contrast, using random points for path planning makes it possible to search for paths over a wider range, improves the flexibility of path planning, and helps to avoid local optimal solutions. Picking points randomly can therefore improve globality, flexibility, and robustness.

The search interval in path planning is determined by the map data, and maps usually use coordinate intervals that are not symmetric about the origin. The optimal path is therefore sought between a starting point and an endpoint that both lie in a non-origin-symmetric interval, and this interval affects the behavior of the path planning algorithm: path lengths differ between regions of the map, so the algorithm must take the interval into account to ensure that the final path is the shortest one and passes through a suitable area. In the standard HHO algorithm, when facing non-origin-symmetric intervals, the position update formula of the exploration phase may move individuals outside the defined interval, thereby reducing the search efficiency. Because MHHO is biased toward the exploration phase and modifies the exploration position formula, it is even more likely to jump out of the set range. ADHHO introduces a phase based on adaptive cooperative foraging and dispersed foraging and is biased toward the exploitation phase, which makes jumps out of the set interval much rarer. However, its improvement of the position step size is incomplete, so the probability of jumping out of the set interval remains high. To address these problems, this paper improves the HHO algorithm. Using the circle map during population initialization reduces the probability of initializing points on the boundary and helps to prevent out-of-bounds moves during position updating, which makes the initial population more purposeful and improves search efficiency. The nonlinear jump strength improves the convergence accuracy of the local search stage. The concept of the A* algorithm is integrated into the fitness function of the Harris Hawks Optimization algorithm to plan paths on the 2D grid map. Path nodes are no longer limited to rounded grid-center coordinates but are selected as random points, so that the path is shorter and more stable.

In the experimental section, the out-of-bounds rate of the IHHO algorithm is compared with that of HHO and its variants on asymmetric intervals. A total of 11 benchmark functions are selected for function optimization tasks, in which each strategy of IHHO is evaluated separately and IHHO is compared with other algorithms. The statistics and analysis of the experimental data show that the Improved Harris Hawks Optimization algorithm performs better in solution accuracy, convergence speed, and search efficiency on non-origin-symmetric intervals.

The rest of this paper is organized as follows: Section 2 gives a mathematical optimization model for path planning in the grid map. Section 3 introduces the basic principle of HHO. Section 4 introduces the IHHO algorithm in detail. Section 5 presents simulation experiments on the IHHO algorithm and verifies its performance. Section 6 applies the IHHO algorithm to the path planning of the grid map and verifies its superiority and effectiveness. Section 7 presents the conclusions and some prospects for future work.

2. Description of the Mathematical Problem

To study the path planning problem of the grid map, it is necessary to establish a grid map model that is similar to the environment. The obstacles are classified as black blocks, while white blocks represent safe areas. When determining the optimization objective function (fitness function), the path length is the major consideration of the optimization objective.

2.1. Path Length

The path length is the primary consideration in path planning problems, as it affects the robot’s running time and energy consumption. The premise of seeking the shortest path is to avoid obstacles. The path length is calculated using Formula (1), where $x_i$ is the horizontal coordinate of node $i$ and $y_i$ is the vertical coordinate of node $i$.
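As an illustration, and assuming that Formula (1) is the usual sum of Euclidean distances between consecutive path nodes, the path length can be sketched as follows. The function name `path_length` and the example path are hypothetical.

```python
import math


def path_length(nodes):
    """Sum of Euclidean distances between consecutive (x, y) nodes.

    Assumption: Formula (1) is the standard polyline length; the paper's
    exact expression is not reproduced in this text.
    """
    return sum(
        math.hypot(x2 - x1, y2 - y1)
        for (x1, y1), (x2, y2) in zip(nodes, nodes[1:])
    )


# Hypothetical three-node path: one segment of length 5, one of length 6.
path = [(0.0, 0.0), (3.0, 4.0), (3.0, 10.0)]
print(path_length(path))
```

Because the nodes are real-valued points rather than rounded cell centers, the same function applies to the randomly selected path points used later in the paper.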

2.2. Mathematical Model

The A* algorithm is a commonly used algorithm for path planning. The A* algorithm mainly calculates the goodness of the traversed nodes using the evaluation function [44]. The core expression of the evaluation function is shown in Formula (2).
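In the usual A* notation, and assuming the uppercase symbols used in the surrounding text, Formula (2) is the sum of the cost accumulated so far and the estimated remaining cost:

```latex
\[
F(n) = G(n) + H(n) \tag{2}
\]
```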

The evaluation function is denoted $F$. The value of $F(n)$ estimates the minimum path cost from the initial node to any node $n$ plus the minimum path cost from node $n$ to the destination node. $G(n)$ is the known actual cost from the start node to node $n$, and $H(n)$ is the estimated cost of the optimal path from node $n$ to the target node [45].

The optimal path cost from the initial node to the target node is divided into two parts, $G$ and $H$: $G$ is the moving cost from the initial node vector to the target node vector, that is, the path length, and $H$ is the path cost of the initial node vector and the target node vector. The mathematical optimization model of the path length cost function can then be defined as follows:

Combining the path length and the cost function into one optimization objective function means that the preferred path in grid map path planning is the one that is both the shortest and crosses the fewest obstacles.

2.3. Grid Modeling

The principle of the grid method is to divide the entire two-dimensional space into grids of the same size and simulate the obstacles and safety areas as a collection of small squares of different colors, which form a grid map. Figure 1 is the simulated 2D modeling of the path planning environment. The position of the obstacle is marked as the black part, the white area is the feasible area, “o” represents the starting point, and “x” represents the endpoint.

Figure 1. Mathematics model.
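The grid model above can be sketched in code. The following is a minimal illustration, not the paper's implementation: it assumes that the map is stored as a 0/1 array (1 for a black obstacle block), that cell `(row, col)` covers `x` in `[col, col + 1)` and `y` in `[row, row + 1)`, and that a path segment between two real-valued points is checked by sampling. The name `segment_hits_obstacle` and the sampling step are hypothetical.

```python
import numpy as np


def segment_hits_obstacle(grid, p, q, step=0.05):
    """Return True if the straight segment p -> q touches an obstacle cell.

    grid[row, col] == 1 marks an obstacle. The segment is sampled every
    `step` map units, so this is an approximate check (an assumption made
    for illustration). Points outside the map count as collisions.
    """
    p = np.asarray(p, dtype=float)
    q = np.asarray(q, dtype=float)
    n = max(int(np.ceil(np.linalg.norm(q - p) / step)), 1)
    for t in np.linspace(0.0, 1.0, n + 1):
        x, y = p + t * (q - p)
        col, row = int(np.floor(x)), int(np.floor(y))
        inside = 0 <= row < grid.shape[0] and 0 <= col < grid.shape[1]
        if not inside or grid[row, col] == 1:
            return True
    return False


grid = np.zeros((5, 5), dtype=int)
grid[2, 2] = 1  # one black block in the middle of the map

print(segment_hits_obstacle(grid, (0.5, 0.5), (4.5, 4.5)))  # diagonal through the block
print(segment_hits_obstacle(grid, (0.5, 0.5), (4.5, 0.5)))  # along the free bottom row
```

A check of this kind is one way to count how many obstacles a candidate path crosses when the path nodes are arbitrary points rather than cell centers.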

3. Standard Harris Hawks Optimization (HHO) Algorithm

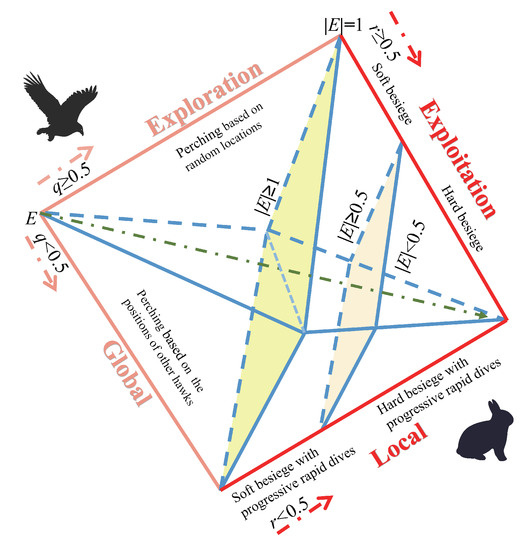

The HHO algorithm simulates the behavior of Harris hawks while hunting rabbits. The algorithm attacks prey through strategies such as assaulting, encirclement, and disturbance. HHO mainly includes two phases, exploration and exploitation, and the selection between the two phases is based on the absolute value of the prey’s escape energy E. Figure 2 shows the different phases.

Figure 2. Different phases of HHO.

3.1. Exploration Phase

In the Harris Hawks Optimization algorithm, individual Harris hawks are considered candidate solutions, and the best candidate solution at each iteration is treated as the target prey or a near-optimal solution. During the exploration phase, Harris hawks search for prey in two different ways, which are defined as follows:
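In the notation of the original HHO paper [28], the exploration update referred to later as Formula (4) can be written as follows. The symbol names are taken from [28].

```latex
\[
X(t+1) =
\begin{cases}
X_{rand}(t) - r_1 \left| X_{rand}(t) - 2 r_2 X(t) \right|, & q \ge 0.5 \\[4pt]
\left( X_{rabbit}(t) - X_m(t) \right) - r_3 \left( LB + r_4 (UB - LB) \right), & q < 0.5
\end{cases}
\tag{4}
\]
```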

where $X_{rand}(t)$ denotes the position of a randomly selected Harris hawk and $X_{rabbit}(t)$ is the prey location, that is, the optimal location found by the Harris hawk population. $r_1$, $r_2$, $r_3$, $r_4$, and $q$ are all random numbers within the interval (0,1), where $r_1$, $r_2$, $r_3$, and $r_4$ are scaling coefficients used to provide diverse trends and $q$ is used to simulate the random selection of prey. $UB$ and $LB$ represent the upper and lower bounds of the search space, respectively. $X_m(t)$ denotes the average position of the Harris hawk population, which is calculated using the following formula:
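Following [28], with $N$ the population size and $X_i(t)$ the position of the $i$-th hawk (both names taken from [28]), the average position can be written as:

```latex
\[
X_m(t) = \frac{1}{N} \sum_{i=1}^{N} X_i(t) \tag{5}
\]
```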

3.2. Exploitation Phase

During the exploitation phase, the Harris hawk employs four different strategies to trap the prey, chosen according to the prey’s behavior. A random number $r$ within the range (0,1) models whether the prey escapes: $r < 0.5$ means that the prey successfully escapes the encirclement, and $r \ge 0.5$ means that it fails to escape. When $|E| < 0.5$, the hawk uses the hard besiege method, while, when $|E| \ge 0.5$, it uses the soft besiege method. When $|E| \ge 0.5$ and $r \ge 0.5$, the prey still has enough energy but fails to escape, and the Harris hawk updates its position using the soft besiege strategy. The specific calculation formula is as follows:
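In the notation of [28], the soft besiege update and its auxiliary quantities read as follows. The equation numbers of this paper are not assumed here.

```latex
\[
\begin{aligned}
X(t+1) &= \Delta X(t) - E \left| J X_{rabbit}(t) - X(t) \right| \\
\Delta X(t) &= X_{rabbit}(t) - X(t) \\
J &= 2 (1 - r_5)
\end{aligned}
\]
```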

where $\Delta X(t)$ represents the difference between the position vector of the prey and the current position vector, $J$ is the random jump strength during the prey’s escape process, and $r_5$ is a random number within the range (0,1).

When $|E| < 0.5$ and $r \ge 0.5$, the prey has insufficient energy and fails to escape, and the Harris hawk uses the hard besiege method for the position update. The specific calculation formula is as follows:
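In the notation of [28], with $\Delta X(t) = X_{rabbit}(t) - X(t)$, the hard besiege update reads:

```latex
\[
X(t+1) = X_{rabbit}(t) - E \left| \Delta X(t) \right|
\]
```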

When $|E| \ge 0.5$ and $r < 0.5$, the prey has sufficient energy to escape the encirclement, and the Harris hawk uses a soft besiege with the progressive rapid dives method for the position update. The specific calculation formula is as follows:
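In the notation of [28], the hawk first evaluates a direct move $Y$, then a Lévy-flight dive $Z$, and keeps whichever improves on its current fitness:

```latex
\[
\begin{aligned}
Y &= X_{rabbit}(t) - E \left| J X_{rabbit}(t) - X(t) \right| \\
Z &= Y + S \times LF(D) \\
X(t+1) &=
\begin{cases}
Y, & \text{if } F(Y) < F(X(t)) \\
Z, & \text{if } F(Z) < F(X(t))
\end{cases}
\end{aligned}
\]
```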

where $D$ is the problem dimension, $S$ is a random vector of size $1 \times D$, $F$ is the fitness function, and $LF$ is the Lévy flight function. The specific calculation formula is as follows:
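In the notation of [28], the Lévy flight function is:

```latex
\[
LF(x) = 0.01 \times \frac{u \times \sigma}{|v|^{1/\beta}}, \qquad
\sigma = \left(
\frac{\Gamma(1+\beta) \times \sin\left(\frac{\pi \beta}{2}\right)}
     {\Gamma\left(\frac{1+\beta}{2}\right) \times \beta \times 2^{\frac{\beta-1}{2}}}
\right)^{1/\beta}
\]
```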

where $u$ and $v$ are random numbers within the range (0,1) and $\beta$ is a default constant set to 1.5.
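A minimal sketch of this Lévy flight step is given below, assuming the expression from [28] and, as the text states, uniform draws for $u$ and $v$. Implementations based on Mantegna's method draw $u$ and $v$ from normal distributions instead, so the choice of distribution here is an assumption. The function name `levy_flight` is hypothetical.

```python
import math

import numpy as np


def levy_flight(dim, beta=1.5, rng=None):
    """Return a Levy-flight step vector of length `dim`.

    Assumption: u and v are uniform random numbers, following the text;
    v is drawn from (0, 1] so the division is always defined.
    """
    rng = np.random.default_rng() if rng is None else rng
    sigma = (
        math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
        / (math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2))
    ) ** (1 / beta)
    u = rng.random(dim)
    v = 1.0 - rng.random(dim)
    return 0.01 * u * sigma / np.abs(v) ** (1 / beta)


step = levy_flight(30, rng=np.random.default_rng(0))
print(step.shape)
```

Most steps are small, with occasional large ones when $v$ is close to zero, which is what gives the dive its heavy-tailed character.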

When $|E| < 0.5$ and $r < 0.5$, the prey has low escape energy and the hawks continually approach the prey to reduce the distance. Because the prey does not have enough energy to escape, the hawks try to reduce the distance between their average position and the fleeing prey. In this case, the Harris hawk uses a hard besiege with the progressive rapid dives method for the position update. The specific calculation formula is as follows:
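In the notation of [28], this update has the same form as the soft besiege with progressive rapid dives, except that $Y$ is computed from the average position $X_m(t)$ instead of the current position:

```latex
\[
\begin{aligned}
Y &= X_{rabbit}(t) - E \left| J X_{rabbit}(t) - X_m(t) \right| \\
Z &= Y + S \times LF(D) \\
X(t+1) &=
\begin{cases}
Y, & \text{if } F(Y) < F(X(t)) \\
Z, & \text{if } F(Z) < F(X(t))
\end{cases}
\end{aligned}
\]
```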

where $X_m(t)$ is calculated using Formula (5) and the Lévy flight function is computed using Formula (11).
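The way the escape energy $E$ and the random number $r$ select among the phases can be summarized in a small dispatch function. This is a sketch of the standard HHO rules from [28]; the function name `select_strategy` and the returned labels are hypothetical.

```python
def select_strategy(E, r):
    """Map escape energy E and random number r to an HHO strategy name.

    Thresholds follow the standard HHO description in [28]:
    |E| >= 1 selects exploration; otherwise |E| and r select one of the
    four exploitation strategies.
    """
    if abs(E) >= 1:
        return "exploration"
    if r >= 0.5:
        return "soft besiege" if abs(E) >= 0.5 else "hard besiege"
    if abs(E) >= 0.5:
        return "soft besiege with progressive rapid dives"
    return "hard besiege with progressive rapid dives"


for E, r in [(1.4, 0.2), (0.8, 0.7), (0.3, 0.7), (-0.8, 0.2), (0.1, 0.2)]:
    print(E, r, select_strategy(E, r))
```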

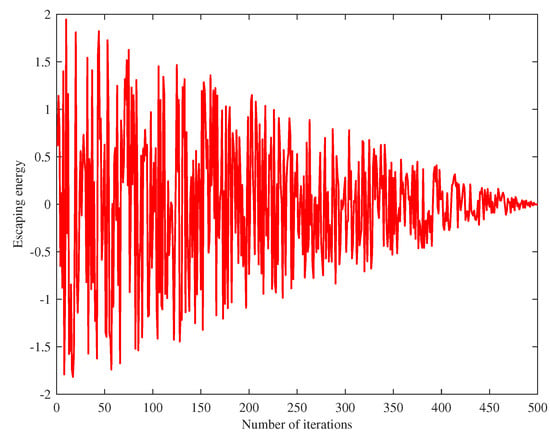

3.3. Escaping Energy

The transition between the exploration and exploitation stages is governed by the escaping energy $E$ of the prey, whose absolute value decreases continuously while the prey evades the pursuit of the Harris hawks. The escaping energy $E$ is calculated as follows:
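In the notation of [28], the escaping energy is:

```latex
\[
E = 2 E_0 \left( 1 - \frac{t}{T} \right)
\]
```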

where $E_0$ represents the initial state of the prey’s energy, which is a random number in (−1,1); $t$ is the current iteration number; and $T$ is the maximum number of iterations. The variation of the escaping energy with the number of iterations is shown in Figure 3.

Figure 3. Escaping energy.

4. Improved Harris Hawks Optimization (IHHO) Algorithm

The standard HHO is a stable and high-performance optimization algorithm that can be used to solve many practical engineering problems, but it has several shortcomings. Firstly, in the global search phase, only random individuals are used for position updates, and there is a lack of information exchange between populations, resulting in a susceptibility to premature convergence and an inability to escape local optima. Secondly, HHO relies on the average position of the population, which reduces diversity in the early phase and may cause the optimal solution to be overlooked. Thirdly, the position update formula for the exploration stage has a large step size, which makes it easy to go out of bounds during the search phase, especially in non-origin-symmetric intervals. Fourthly, the jump strength of the prey mainly affects the exploitation stage, and the random jump strength has a certain probability of producing local search steps that are too large, which increases the instability of the algorithm. Using a nonlinear convergence curve combined with the escape energy to control the step size allows the randomness of the algorithm to be better controlled. In addition, the jump strength of the prey should be proportional to its escape energy: the lower the energy, the lower the height or distance the prey can jump. Therefore, this paper adopts the following strategies:

1. Using the circle map to initialize the population to reduce the number of initialization points on the boundary.

2. Introducing the random guidance strategy and the improved sine-trend search strategy to replace Formula (4), which increases the information exchange between populations, improves diversity, and reduces the step size and premature convergence to some extent, thus increasing the efficiency of the global search of the hawks. The improved sine-trend search strategy reduces the dependence of the population on the average position and guides the individual hawks to approach the prey, thus improving the convergence in the exploration stage.

3. Proposing the nonlinear jump strength convergence strategy, which combines the random jump strength with the prey’s escape energy to increase the convergence accuracy during a local search.

4.1. Circle Map

Both the chaotic map and pseudo-random strategy possess randomness and unpredictability, but the chaotic map has a certain regularity and is often used instead of the pseudo-random strategy for better results. Many chaotic maps are available, such as the circle map, Chebyshev map, intermittency map, iterative map, logistic map, sine map, sinusoidal map, tent map, and singer map. In order to place the initial position of the Harris hawk as close to the center as possible, we use the circle map to initialize the population. Its mathematical model is as follows:

where $\operatorname{mod}$ is the modulo function, the initial value is obtained from a random function on the interval (0,1), and $K$ is an integer.
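A minimal sketch of circle-map initialization follows. It assumes the form of the circle map commonly used in the chaotic-map literature, $x_{k+1} = \operatorname{mod}\left(x_k + b - \frac{a}{2\pi}\sin(2\pi x_k),\, 1\right)$, with the frequently used defaults $a = 0.5$ and $b = 0.2$. These parameter values, the function name `circle_map_population`, and the linear scaling to the search interval are assumptions and are not taken from this paper.

```python
import numpy as np


def circle_map_population(n, dim, lb, ub, a=0.5, b=0.2, rng=None):
    """Generate n positions in [lb, ub] from a circle-map sequence.

    Assumptions: a = 0.5 and b = 0.2 are common literature defaults, and
    each dimension runs its own chaotic sequence from a random seed value.
    """
    rng = np.random.default_rng() if rng is None else rng
    x = rng.random(dim)  # random seed values in [0, 1)
    chaos = np.empty((n, dim))
    for i in range(n):
        x = np.mod(x + b - (a / (2 * np.pi)) * np.sin(2 * np.pi * x), 1.0)
        chaos[i] = x
    # Scale the chaotic values from [0, 1) to the search interval.
    return lb + chaos * (ub - lb)


pop = circle_map_population(30, 30, 0.0, 100.0, rng=np.random.default_rng(1))
print(pop.shape, pop.min() >= 0.0, pop.max() <= 100.0)
```

The only change relative to a pseudo-random initializer is the source of the values in [0, 1), so the cost of initialization stays of the same order.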

4.2. Random Guidance Strategy

In Formula (4) of the exploration phase, using the same random position for updating the current position leads to a lack of communication between the populations and greatly increases the out-of-bounds rate during a search. By calculating the vector differences between multiple random positions and the current position, on the one hand, the out-of-bounds rate can be reduced and, on the other hand, the information exchange between the populations can be increased. This avoids a single global optimal position and prevents the early convergence of HHO to some extent. At the same time, it helps the algorithm to further explore and improves the efficiency of a global search. The formula for calculating the random guidance factor is as follows:

where is the vector position of the random Harris hawk. The vector difference is used as the step size to guide the Harris hawk population to update their positions. The specific formula for the position update is as follows:

where is the vector weight and n is the number of random Harris hawks.

4.3. Improved Sine-Trend Search

In the exploration phase of the standard HHO, the position updates are based on the average position of the population and the updating of the positions in the exploitation phase also depends on the average position of the population. Therefore, when the population is trapped in a local optimum, it hardly produces a new position. When the HHO faces non-origin-symmetric intervals, the position update is easily out of the defined interval, making the position update invalid and reducing the search efficiency.
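The effect of a non-origin-symmetric interval can be illustrated numerically. The sketch below assumes the standard HHO exploration update for $q < 0.5$ from [28], $(X_{rabbit} - X_m) - r_3(LB + r_4(UB - LB))$, and the extreme case in which the population has gathered around the prey so that $X_{rabbit} = X_m$. The function names and the sample sizes are hypothetical.

```python
import numpy as np


def mean_branch_update(x_rabbit, x_mean, lb, ub, rng):
    """Standard HHO exploration update for q < 0.5 (one candidate per row)."""
    r3 = rng.random(x_mean.shape)
    r4 = rng.random(x_mean.shape)
    return (x_rabbit - x_mean) - r3 * (lb + r4 * (ub - lb))


def out_of_bounds_rate(lb, ub, n=1000, dim=30, seed=0):
    """Fraction of candidates with at least one coordinate outside [lb, ub]."""
    rng = np.random.default_rng(seed)
    # Assumption: the flock has converged on the prey, so X_rabbit - X_m = 0.
    x_rabbit = rng.uniform(lb, ub, size=(n, dim))
    x_mean = x_rabbit.copy()
    x_new = mean_branch_update(x_rabbit, x_mean, lb, ub, rng)
    outside = (x_new < lb) | (x_new > ub)
    return outside.any(axis=1).mean()


print(out_of_bounds_rate(0.0, 100.0))    # interval not symmetric about the origin
print(out_of_bounds_rate(-50.0, 50.0))   # interval symmetric about the origin
```

In this case the update reduces to $-r_3(LB + r_4(UB - LB))$, which is never positive on [0, 100] but always stays inside [−50, 50], so the difference in out-of-bounds behavior comes from the interval alone.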

The Sine Cosine Algorithm (SCA) [46] uses adaptive amplitude changes in sine and cosine functions for global and local search. Borrowing this mechanism allows individual Harris hawks to search around themselves, reducing the out-of-bounds rate and improving optimization performance.
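For reference, the position update of the standard SCA [46] is given below in that paper's notation, where $P_i^t$ is the destination point, $a$ is a constant, and $r_2$, $r_3$, and $r_4$ are random numbers. This is the unmodified SCA rule, not the improved strategy of this paper.

```latex
\[
X_i^{t+1} =
\begin{cases}
X_i^{t} + r_1 \sin(r_2) \left| r_3 P_i^{t} - X_i^{t} \right|, & r_4 < 0.5 \\[4pt]
X_i^{t} + r_1 \cos(r_2) \left| r_3 P_i^{t} - X_i^{t} \right|, & r_4 \ge 0.5
\end{cases}
\qquad
r_1 = a - t \, \frac{a}{T}
\]
```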

Because this strategy is applied in the exploration phase of the Harris hawks algorithm, only the exploration part of the sine cosine strategy is retained and its exploitation part is not used. The formula for the improved sine search is shown as follows:

where is a nonlinear convergence coefficient, is a phase factor, and is a random number between 0 and 1. The formulas for and are as follows:

where is a random number between 0 and 1 and P is an integer of 0 or 1. In order to increase the convergence of the algorithm and guide individuals closer to the population’s optimal value, the golden ratio coefficient [47] is introduced into the formula to search around the vicinity of the optimal value. The formula for the improved sine-trend search strategy is shown as follows:

where c and d are coefficients obtained by incorporating the golden ratio, , , and the golden ratio numbers .

4.4. Nonlinear Jump Strength

In the HHO algorithm, the escape energy E determines the transition between global search and local search and affects the exploitation phase. The escape energy E of the prey shows a linear downward trend while the prey is being chased, whereas the jump strength J is a random number in (0,2), which makes it difficult to describe the actual trend of biological energy. Some scholars have found that the law of energy consumption can be introduced into the jump strength of the prey by combining the escape energy and the random jump strength [48]. The overall trend of the prey’s physical energy during the pursuit is declining. As the prey’s physical strength decreases, the jump strength also gradually decreases, which is more in line with biological laws. Because the jump strength determines the step size in the exploitation phase, letting it decrease nonlinearly improves the convergence accuracy of the local search stage. The specific calculation formula for the nonlinear jump strength is as follows:

4.5. Computational Complexity

4.5.1. Time Complexity Analysis

The time complexity is an important index to evaluate the operation efficiency of an algorithm. The original paper on HHO [28] has already analyzed the time complexity of HHO and will not discuss these details again. The computation time complexity of HHO mainly lies in three aspects: population initialization, fitness evaluation, and position updates. During population initialization, the HHO generates numbers through pseudo-random generation, while IHHO generates numbers through the circle map. The time complexity of both methods for initializing the population is . When updating the position vectors, HHO has a minimum time complexity of and a maximum time complexity of . On the other hand, IHHO does not add a position update formula, so the time complexity for both methods for updating positions is the same.

4.5.2. Performing Step Time Complexity Analysis

The steps to analyze complexity are listed as the following:

- Step 1: During the initial population calculation, the numbers need to be computed, with a computation complexity of .

- Step 2: The fitness value of the individuals in the population needs to be evaluated once, with a computation complexity of .

- Step 3: The escape energy needs to be calculated once, with a computation complexity of .

- Step 4: If the progressive encirclement approach is used, the position needs to be updated twice; otherwise, it only needs to be updated once, resulting in a computation complexity of . If the progressive encirclement approach is used then ; otherwise, .

- Step 5: The fitness value and the overall optimal value need to be updated twice, with a computation complexity of .

- The overall execution time complexity is .

Following the improvements described above, the flow chart of the IHHO algorithm is shown in Figure 4, and its pseudo-code is given in Algorithm 1:

| Algorithm 1. Pseudo-code of the IHHO algorithm. |
| --- |
| Input: The population size N and the maximum number of iterations T |
| Output: The location of the rabbit and its fitness value |

Figure 4. Flow chart of IHHO.

5. Algorithm Simulation Experiment and Analysis

All experiments were implemented in Matlab 2018b. All computations were run with the following hardware: Intel Core i7-8550u, 1.80 GHz, 8 GB RAM, and Windows 10 (64-bit) operating system. The algorithm parameter settings used in the simulation experiment are shown in Table 1.

Table 1. Parameter settings of algorithm.

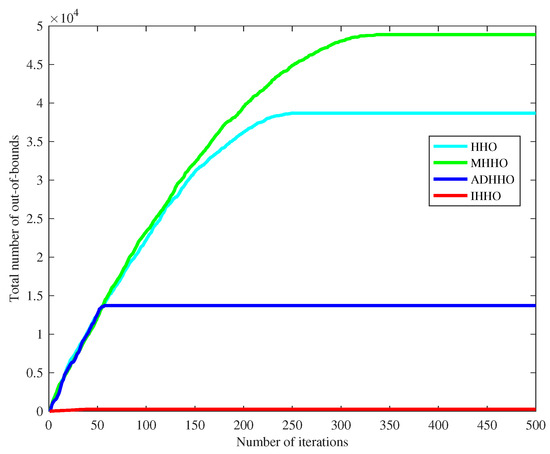

5.1. Out-of-Bounds Comparison

The grid map is a typical non-origin-symmetric path planning map. In non-origin-symmetric intervals, the search step of the standard HHO algorithm during the exploration phase is too large, so position updates easily leave the set interval, which results in ineffective position updates and reduced algorithm efficiency. In contrast, IHHO balances the global search and controls the step size during the exploration phase, reducing the probability of leaving the set interval and improving algorithm efficiency. In this section, the maximum number of iterations T is set to 500, the dimensionality D to 30, the population size N to 30, and the interval to [0, 100]. Each test is run 20 times, and the mean is taken as the result. The out-of-bounds counts are plotted in Figure 5 and listed in Table 2.

Figure 5. Comparison diagram of out-of-bound numbers.

Table 2. Comparison table of total numbers out-of-bounds.

Figure 5 shows that, in the early iterations, the number of out-of-bounds occurrences in HHO continues to increase and, after the transition from the exploration phase to the exploitation phase and the start of local search, it no longer increases. IHHO, however, consistently maintains a low number of out-of-bounds occurrences throughout the exploration phase. Table 2 makes the comparison clear: the improved algorithm significantly reduces the number of Harris hawk positions leaving the set interval and improves the efficiency of the position updates.

It can also be seen from Figure 5 that the inflection point of each curve marks the transition between the exploration phase and the exploitation phase. IHHO, HHO, and their variants are therefore analyzed in terms of their exploration and exploitation behaviors, which are two important aspects of any such algorithm. When the number of iterations is fixed, an algorithm biased toward exploration finds the extreme points of the function faster, but it loses accuracy and leaves the set range more easily. An algorithm biased toward exploitation goes out of bounds less often, but it is prone to falling into local optima. Therefore, the balance between exploration and exploitation needs to be considered. In general, the results are better when the numbers of iterations spent in the two phases are close [49]. As discussed in the introduction, IHHO is designed for problems on non-origin-symmetric intervals. Favoring the exploitation phase is a good way to reduce out-of-bounds updates, but the HHO algorithm itself is easily trapped in local optima. Based on these considerations and on the analysis of the experimental data, IHHO performs best when the ratio between exploration and exploitation is close to unity.

5.2. Test Function

In this experiment, 11 benchmark functions with different optimization characteristics were randomly selected from the commonly used CEC test functions: five unimodal benchmark functions, three multimodal benchmark functions, and three fixed-dimensional multimodal benchmark functions. The IHHO algorithm was tested and compared using these benchmark functions, where F1–F5 are unimodal and F6–F11 are multimodal; together they test the optimization performance and convergence accuracy of the algorithm. The test functions are listed in Table 3.

Table 3.

Benchmark function.
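For readers unfamiliar with the two categories, the following sketch defines one widely used unimodal function (Sphere) and one widely used multimodal function (Rastrigin). They are given only as typical examples of each category; whether they correspond to any of F1–F11 in Table 3 is not claimed here.

```python
import numpy as np

def sphere(x):
    """Unimodal example: a single minimum of 0 at the origin."""
    x = np.asarray(x, dtype=float)
    return float(np.sum(x ** 2))

def rastrigin(x):
    """Multimodal example: many local minima, global minimum of 0 at the origin."""
    x = np.asarray(x, dtype=float)
    return float(10.0 * x.size + np.sum(x ** 2 - 10.0 * np.cos(2.0 * np.pi * x)))

origin = np.zeros(30)
print(sphere(origin), rastrigin(origin))
```

A unimodal function mainly probes convergence speed and accuracy, whereas a multimodal function probes the ability to escape local optima.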

To better verify the impact of each strategy on the IHHO algorithm, this section uses the 11 benchmark functions to compare the individual strategies (circle map, improved sine-trend search, random guidance strategy, and nonlinear jump strength) with the full IHHO. For a fair comparison, the population size N is set to 30 and the maximum number of iterations T is set to 500. Each group was tested independently for 30 rounds, and its average value, optimal value, worst value, and standard deviation were recorded. The test results are shown in Table 4.
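The evaluation protocol described above (30 independent rounds, N = 30, T = 500, then mean, best, worst, and standard deviation) can be sketched as follows. The optimizer here is a plain random search used purely as a stand-in, not IHHO; the Sphere objective, the dimension of 30, the bounds [−100, 100], and all function names are assumptions for illustration.

```python
import numpy as np

def sphere_batch(points):
    """Sphere function evaluated row-wise on an (n, dim) array."""
    return np.sum(points ** 2, axis=1)

def random_search(objective, dim, lb, ub, pop_size, max_iter, rng):
    """Placeholder optimizer (NOT IHHO): best value among uniform random samples."""
    best = np.inf
    for _ in range(max_iter):
        candidates = rng.uniform(lb, ub, size=(pop_size, dim))
        best = min(best, float(objective(candidates).min()))
    return best

def summarize_runs(objective, runs=30, pop_size=30, max_iter=500,
                   dim=30, lb=-100.0, ub=100.0, seed=0):
    """Repeat independent runs and report mean, best, worst, and standard deviation."""
    finals = np.array([
        random_search(objective, dim, lb, ub, pop_size, max_iter,
                      np.random.default_rng(seed + run))
        for run in range(runs)
    ])
    return {
        "mean": float(finals.mean()),
        "best": float(finals.min()),
        "worst": float(finals.max()),
        "std": float(finals.std()),  # population standard deviation (ddof=0)
    }

stats = summarize_runs(sphere_batch)
print(stats)
```

Using a different seed per round keeps the rounds independent while leaving the whole experiment reproducible.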

Table 4.

The value of each test function under different strategies.

Table 4 shows that, for the unimodal functions F1–F4, the improved sine-trend search has the greatest impact on IHHO. According to the test results of function F5, the circle map, improved sine-trend search strategy, and nonlinear jump strength have a greater impact on IHHO than the random guidance strategy. The test results of function F6 show that the nonlinear jump strength has the largest impact on IHHO. The impact on functions F7–F8 is similar. According to the test results of functions F9 and F11, the random guidance strategy and the improved sine-trend search strategy have the largest impact on IHHO, while for function F10 the random guidance strategy and the nonlinear jump strength have the largest impact.

From the overall analysis of Table 4, the IHHO algorithm that fuses all strategies performs best, followed by the improved sine-trend search strategy alone. However, the improved sine-trend search strategy falls into a local optimum more easily because of its tendency toward the current optimum, whereas the random guidance strategy increases communication within the population and can compensate for this shortcoming. The test results also show that the circle map and nonlinear jump strength support IHHO in terms of optimization accuracy.

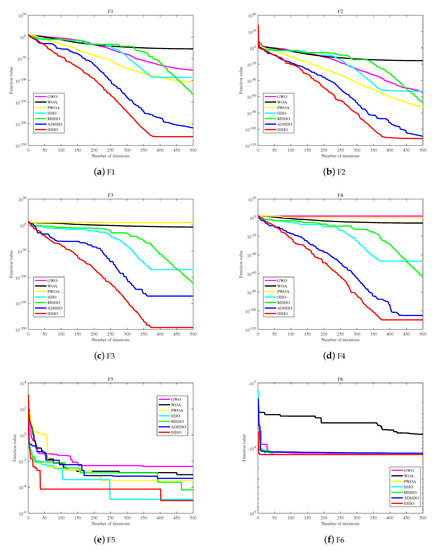

In this section, HHO, GWO [14], WOA [16], PWOA [50], MHHO [37], ADHHO [41], and IHHO were compared using the 11 benchmark functions. To ensure a fair comparison, the population size N was set to 30, and the maximum number of iterations T was set to 500 for each function test. Each function was tested separately for 30 rounds, recording its mean, best, worst, and standard deviation results; the test results are shown in Table 5.

Table 5.

The calculated optimization value of each test function with different algorithms.

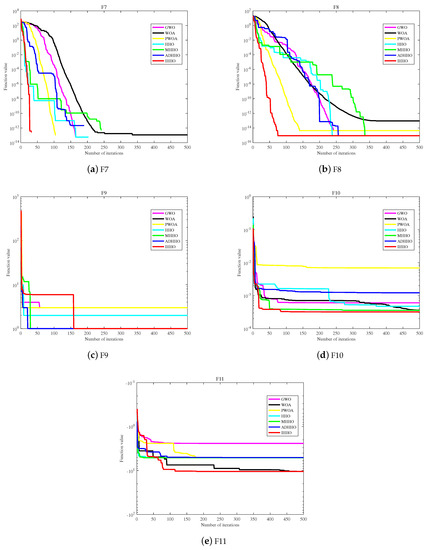

From Table 5, it can be seen that the IHHO algorithm in this paper achieved the best result on all 11 benchmark test functions. The results demonstrate that the improved HHO algorithm is superior to the other compared algorithms. On the unimodal test functions, IHHO converges fastest among the compared algorithms, further verifying the effectiveness of the proposed improvements in increasing the convergence speed of the HHO algorithm. On the multimodal test functions, the standard deviation for test function F6 shows that IHHO is the most stable among the compared algorithms, including HHO, MHHO, and ADHHO. For test functions F7 and F8, IHHO performs as well as HHO, MHHO, and ADHHO. For the fixed-dimensional multimodal benchmark functions F9 and F10, the numerical differences between the algorithms are not significant, but it can still be clearly observed that IHHO has higher stability than the other algorithms. On test function F11, IHHO improves significantly on the original HHO and the other improved HHO variants, while GWO has higher stability on this test function. Overall, the IHHO algorithm maintains a fast search speed, has certain advantages in stability on multimodal functions, and shows superior convergence performance and stability compared to the other algorithms. The fitness convergence curves on some test functions are shown in Figure 6 and Figure 7.

Figure 6.

The convergence curves of fitness values of 11 test functions.

Figure 7.

The convergence curves of fitness values of 11 test functions.

In Figure 6, the convergence curves for functions F1 to F4 show that IHHO has higher accuracy and converges to the global optimal solution more quickly than the other compared algorithms. On function F5, IHHO converges the fastest in the early stage, falls into a local optimum in the middle stage, and jumps out of it in the later stage, obtaining better values than the other compared algorithms. On function F6, IHHO converges to the optimal value first and maintains a better value than the other compared algorithms throughout.

In Figure 7, functions F7 and F8 show that IHHO has excellent search performance and converges to the optimal value faster than the other compared algorithms. On function F9, IHHO has the highest initial function value due to the effect of the chaotic map and falls into a local optimum in the middle stage, but it eventually jumps out of it and reaches the best optimal value among the compared algorithms. Functions F10 and F11 show that IHHO performs better than the other compared algorithms in terms of optimization performance.
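Convergence curves of the kind discussed above are usually plotted as the best fitness found so far at each iteration. The following minimal sketch, with a hypothetical per-iteration history and a hypothetical helper name `convergence_curve`, shows one way to derive such a curve for a minimization problem.

```python
import numpy as np

def convergence_curve(best_per_iteration):
    """Best-so-far fitness per iteration (non-increasing for minimization)."""
    values = np.asarray(best_per_iteration, dtype=float)
    return np.minimum.accumulate(values)

# Hypothetical best fitness of the population at each iteration.
history = [9.0, 4.0, 6.0, 1.5, 2.0, 0.2]
curve = convergence_curve(history)
print(curve.tolist())
```

In such a curve, a flat stretch corresponds to stagnation (for example, in a local optimum) and a later drop corresponds to the algorithm escaping it.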

Based on the comprehensive analysis of Table 5, Figure 6, and Figure 7, IHHO has good optimization performance, the ability to jump out of local optima, and higher convergence accuracy. Based on the number of optimal values, IHHO ranks first among the compared algorithms with 11 optimal values. In terms of overall convergence accuracy and optimization performance, IHHO also ranks first.

6. Grid Map Path Planning

The interval of path planning is a typical non-origin symmetric interval, and the standard HHO algorithm has insufficient search efficiency on non-origin symmetric intervals. IHHO, however, includes improvements aimed at non-origin symmetric intervals. Combined with HHO’s own position updating mechanism, IHHO therefore has clear advantages and higher stability in path planning on a grid map.

6.1. Fusion of A* and IHHO Algorithm

The A* algorithm is a commonly used algorithm for path planning. A* mainly calculates the quality of the traversed nodes through an evaluation function, which is shown in Equation (2), where one term represents the path length as a movement cost and the other represents the cost function, whose formula is as follows:

where N represents the population size, c represents the number of obstacles crossed, and k is a constant value, which we set as the area of the path planning map. The path length and cost function are combined as the evaluation function (fitness function). In the process of path planning on a grid map, a large constant value is added to the path length for each obstacle crossed as the cost function, and the fitness function is the sum of the path length and cost function, as shown in Formula (24).
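One possible reading of this fitness function is sketched below: the fitness is the Euclidean path length plus a penalty of k for each obstacle crossed, with k set to the map area. The function names, the waypoint representation, and the assumption that the number of crossed obstacles c is supplied by a separate collision check are all hypothetical; the exact form of Formula (24) is not reproduced here.

```python
import math

def path_length(path):
    """Sum of Euclidean distances between consecutive waypoints."""
    return sum(math.dist(a, b) for a, b in zip(path, path[1:]))

def fitness(path, obstacles_crossed, map_area):
    """Path length plus a penalty of map_area (k) per crossed obstacle (c)."""
    return path_length(path) + map_area * obstacles_crossed

# Hypothetical path on a 20 x 20 map (k = 400).
path = [(0.5, 0.5), (3.5, 4.5)]
feasible = fitness(path, obstacles_crossed=0, map_area=400)
infeasible = fitness(path, obstacles_crossed=2, map_area=400)
print(feasible, infeasible)
```

Because k is at least as large as any plausible path length on the map, a path that crosses even one obstacle scores worse than any collision-free path, so minimizing the fitness drives the search toward feasible paths first.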

6.2. Parameter Setting of Grid Map Path Planning

To better highlight the superiority of the improved algorithm, this paper applies the EGWO [51], HIWOA [52], HHO, MHHO, ADHHO, and IHHO algorithms to path planning. The introduced EGWO and HIWOA have partially similar test results on the benchmark functions. For fairness, the other algorithms adopt the same strategies as IHHO path planning, such as A-star and elite retention. This paper uses two different grid maps, 20 × 20 and 60 × 60. For the 20 × 20 grid map, the population size (number of nodes) is set to 20, according to the horizontal (vertical) extent of the grid; the maximum number of path iterations is set to 50; and the maximum number of iterations within the population is set to 500. For the 60 × 60 grid map, the population size is set to 60, again according to the horizontal (vertical) extent of the grid, and, because more information must be processed, the maximum number of iterations within the population is increased to 1000. The parameter settings of the introduced EGWO and HIWOA algorithms are based on Table 1. The specific parameter settings are shown in Table 6.
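The settings described above could be captured in a small configuration object such as the following sketch. The class and field names are hypothetical, and the value of 50 path iterations for the larger map is an assumption, since the text only states it for the smaller map.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class PlanningConfig:
    grid_size: int                  # side length of the square grid map
    population: int                 # number of nodes, tied to the grid side length
    max_path_iterations: int        # maximum iterations of the path
    max_population_iterations: int  # maximum iterations within the population

SMALL_MAP = PlanningConfig(
    grid_size=20, population=20,
    max_path_iterations=50, max_population_iterations=500,
)
LARGE_MAP = PlanningConfig(
    grid_size=60, population=60,
    max_path_iterations=50,  # assumed unchanged; not restated in the text
    max_population_iterations=1000,
)
print(SMALL_MAP, LARGE_MAP)
```

Keeping the population equal to the grid side length reflects the stated choice of one node per horizontal (vertical) coordinate.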

Table 6.

Set path planning parameters.

6.3. Experimental Results and Analysis of Path Planning

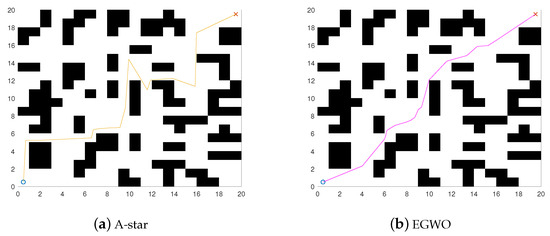

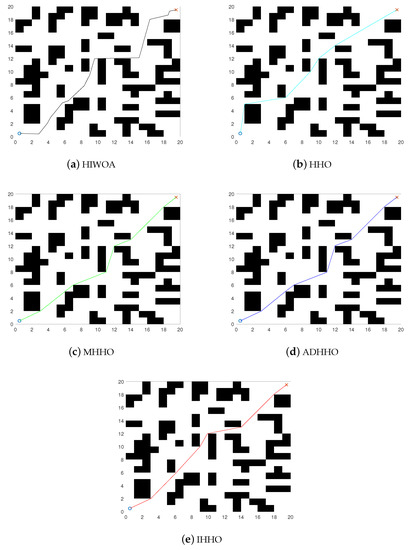

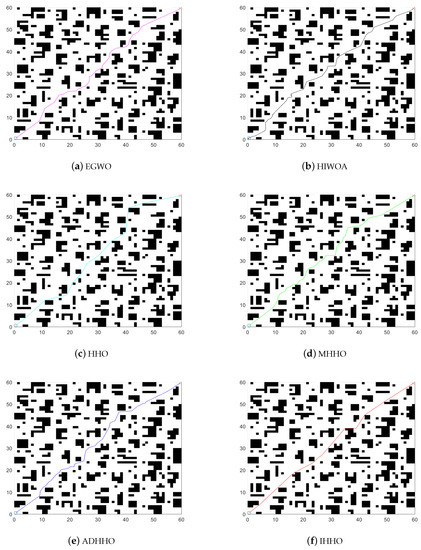

Figure 1 shows the models of the environments. The starting points are both set at (0.5, 0.5), and the endpoints are set at (19.5, 19.5) and (59.5, 59.5), respectively. The black grids represent the obstacles and the rest are safe zones. The results of the improved algorithm and the six introduced algorithms on the 20 × 20 grid map are shown in Figure 8 and Figure 9, and the results on the 60 × 60 grid map are shown in Figure 10. The path comparison convergence graph for the 20 × 20 grid map is shown in Figure 11a, and that for the 60 × 60 grid map is shown in Figure 11b. Each experiment was conducted 10 times independently, and the average value, optimal value, and worst value were recorded. The results are shown in Table 7.
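The coordinates above are consistent with a grid model in which each unit cell is addressed by its center, so that the first cell maps to (0.5, 0.5) and the last cell of a 20 × 20 map maps to (19.5, 19.5). A minimal sketch of such a model follows; the helper names, the row/column convention, and the example obstacle cell are assumptions for illustration only.

```python
import numpy as np

def cell_center(row, col):
    """Center (x, y) of the unit cell at the given row and column."""
    return (col + 0.5, row + 0.5)

def build_grid(size, obstacle_cells):
    """Square occupancy grid: 1 marks an obstacle (black), 0 a safe zone."""
    grid = np.zeros((size, size), dtype=int)
    for row, col in obstacle_cells:
        grid[row, col] = 1
    return grid

def is_free(grid, point):
    """True if the cell containing the point (x, y) is a safe zone."""
    x, y = point
    return bool(grid[int(y), int(x)] == 0)

grid = build_grid(20, obstacle_cells=[(3, 4)])  # hypothetical obstacle
start = cell_center(0, 0)
goal = cell_center(19, 19)
print(start, goal, is_free(grid, start), is_free(grid, cell_center(3, 4)))
```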

Figure 8.

20 × 20 path comparison diagram.

Figure 9.

20 × 20 path comparison diagram.

Figure 10.

60 × 60 path comparison diagram.

Figure 11.

Path comparison convergence graph. (a) Convergence comparison of seven algorithms. (b) Convergence comparison of six algorithms.

Table 7.

Path length comparison.

From Table 7, it can be seen that A-star has deficiencies in path planning for random point selection and complex scenarios, but adding swarm intelligence algorithms to A-star can effectively solve this problem.

In Figure 8 and Figure 9, the IHHO algorithm has the shortest path length among all the algorithms on the 20 × 20 grid. Compared to the other algorithms, IHHO achieves good results not only in path selection but also in path length optimization. In Figure 10, the IHHO algorithm has the best path optimization on the 60 × 60 grid, while the other algorithms perform poorly in path length optimization, and the path length increases with an increasing number of grids and obstacles. Based on the comparison in Figure 8, Figure 9, and Figure 10, as well as Table 7, it can be concluded that the IHHO algorithm has the shortest path length and the most stable path. IHHO has the best optimization effect, followed by HHO, EGWO, and ADHHO, while HIWOA and MHHO have the worst optimization effect.

In Figure 11a, the IHHO and EGWO algorithms show excellent optimization performance in the early stages of searching for paths, followed by the ADHHO and HHO algorithms. Throughout the later stages, the IHHO algorithm maintains the shortest path length, while the A-star algorithm only finds a better path later. In Figure 11b, the IHHO and HHO algorithms demonstrate good path optimization performance, followed by the EGWO and MHHO algorithms. In the later stages, the IHHO algorithm continues to optimize the path length.

The EGWO algorithm borrows a modified position update function from Particle Swarm Optimization (PSO) and guides the GWO position updates with the tent map, which gives it an advantage in the diversity of the initial population and thus makes it better than HIWOA in the early stage of path planning. HIWOA introduces an inertia weight coefficient, which makes the position updates smaller, and a nonlinear convergence factor, which increases its convergence accuracy. HIWOA also introduces a feedback mechanism that makes the algorithm more capable of escaping local optima, which makes it better than EGWO in the later stage of path planning.

The MHHO and ADHHO algorithms adjust the escape energy, which biases them toward searching: the path search range is wide, but the performance of local convergence is reduced. The ADHHO algorithm additionally introduces adaptive collaboration and dispersion foraging strategies, which broaden its search for paths, and corrects the escape energy to enhance local convergence in the exploitation phase. Overall, however, the IHHO algorithm still has better convergence and search effectiveness than the ADHHO algorithm.

7. Conclusions

This article analyzes the effectiveness of the basic HHO algorithm and finds shortcomings in its search effectiveness on non-origin symmetric intervals. It also finds that the basic HHO algorithm has problems with convergence accuracy and convergence speed. This paper therefore proposes an improved HHO algorithm. First, the circle map, a chaotic map, is used to initialize the population, which makes the initialization more purposeful and centers the distribution of the population, thus reducing the probability of position updates exceeding the bounds and improving the diversity and effectiveness of the algorithm. Second, a random-oriented strategy and an improved sine-trend search strategy are used to improve the exploration stage; they completely replace the global search formula of the original algorithm with a new composite search formula, effectively improving the effectiveness and efficiency of the algorithm when searching non-origin symmetric intervals. Finally, the combination of escape energy and jump strength is used to improve the convergence accuracy of the algorithm.

To verify the superiority and feasibility of the new algorithm, the four strategies were first compared on the benchmark test functions to assess the impact of each strategy on the IHHO algorithm. The experimental results show that all four strategies contribute to IHHO, especially the improved sine-trend search. Then, we compared and tested the GWO, PWOA, HHO, MHHO, ADHHO, and IHHO algorithms. The experimental results show that the IHHO algorithm is generally superior to the compared algorithms, such as GWO, WOA, MHHO, ADHHO, and the basic HHO algorithm, in terms of convergence accuracy, convergence speed, and stability. Finally, we applied IHHO to the path planning of the grid map. In the experimental results, the optimal path length of the IHHO algorithm is 1.61–21.06% lower than that of the EGWO, HIWOA, HHO, MHHO, and ADHHO algorithms in Scenario 1, and the average path length in Scenario 2 is 5.98–13.03% lower than that of the other algorithms. The length is 4.37–9.75% lower than that of the other algorithms, indicating that the planning ability of IHHO is significantly better than that of the EGWO, HIWOA, MHHO, and ADHHO algorithms.

The algorithm still has room for optimization, and the path planning is based on a known and fixed grid map. Many challenges remain for future work, such as the continued optimization of the algorithm, dynamic obstacles, and unknown interference in path planning. Therefore, the improvement of the HHO algorithm and the problem of grid path planning will continue to receive attention. In addition, the experiments show that as the map grows, the optimization becomes more difficult, and the effect of randomly picking points is not obvious compared with center rounding. It can be concluded that this method is suitable for smaller grid maps. With the continuous improvement of equipment and facilities, we will continue to optimize the method and strive to apply the research results to actual environments.
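Percentage figures of this kind are commonly computed as a relative reduction with respect to the competing algorithm's path length. The sketch below shows that calculation under this assumption; the function name and the example lengths are hypothetical and are not values from Table 7.

```python
def percent_reduction(baseline_length, improved_length):
    """Relative reduction of improved_length with respect to baseline_length, in percent."""
    return (baseline_length - improved_length) / baseline_length * 100.0

# Hypothetical lengths: a competitor's path of 40.0 versus an improved path of 36.0.
example = percent_reduction(40.0, 36.0)
print(example)
```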

Author Contributions

Conceptualization, L.H.; methodology, L.H. and Q.F.; software, L.H.; validation, L.H.; formal analysis, Q.F. and N.T.; investigation, L.H.; resources, Q.F.; data curation, L.H.; writing—original draft preparation, L.H.; writing—review and editing, L.H. and Q.F.; visualization, L.H.; supervision, Q.F.; project administration, Q.F. and N.T.; funding acquisition, Q.F. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Ningbo Natural Science Foundation, grant number 2021J135.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| HHO | Harris Hawks Optimization |
| IHHO | Improved Harris Hawks Optimization |
| UAV | Unmanned Aerial Vehicle |
| PM | Particulate Matter |
| MHHO | Modified Harris Hawks Optimization |
| ADHHO | Adaptive Cooperative Foraging and Dispersed Foraging Harris Hawks Optimization |
| NFL | No Free Lunch |
| GWO | Grey Wolf Optimization |
| WOA | Whale Optimization Algorithm |
| EGWO | Efficient Grey Wolf Optimization |
| PSO | Particle Swarm Optimization |

References

- Li, F.F.; Du, Y.; Jia, K.J. Path planning and smoothing of mobile robot based on improved artificial fish swarm algorithm. Sci. Rep. 2022, 12, 659.

- Hou, Y.; Gao, H.; Wang, Z.; Du, C. Improved grey wolf optimization algorithm and application. Sensors 2022, 22, 3810.

- Ou, Y.; Yin, P.; Mo, L. An Improved Grey Wolf Optimizer and Its Application in Robot Path Planning. Biomimetics 2023, 8, 84.

- Yu, Z.; Si, Z.; Li, X.; Wang, D.; Song, H. A novel hybrid particle swarm optimization algorithm for path planning of UAVs. IEEE Internet Things J. 2022, 9, 22547–22558.

- Rajamoorthy, R.; Arunachalam, G.; Kasinathan, P.; Devendiran, R.; Ahmadi, P.; Pandiyan, S.; Muthusamy, S.; Panchal, H.; Kazem, H.A.; Sharma, P. A novel intelligent transport system charging scheduling for electric vehicles using Grey Wolf Optimizer and Sail Fish Optimization algorithms. Energy Sources Part A Recover. Util. Environ. Eff. 2022, 44, 3555–3575.

- Zhang, L.; Li, Y. Mobile robot path planning algorithm based on improved A star. Proc. J. Phys. Conf. Ser. 2021, 1848, 012013.

- Gao, H.; Yan, L.; Shen, R.; Ma, W. Design and Implementation of Mobile Robot Path Planning Based on A-Star Algorithm. In Proceedings of the 2022 14th International Conference on Measuring Technology and Mechatronics Automation (ICMTMA), Changsha, China, 15–16 January 2022; pp. 467–470.

- Liu, L.S.; Lin, J.F.; Yao, J.X.; He, D.W.; Zheng, J.S.; Huang, J.; Shi, P. Path planning for smart car based on Dijkstra algorithm and dynamic window approach. Wirel. Commun. Mob. Comput. 2021, 2021, 8881684.

- Lee, K.; Choi, D.; Kim, D. Incorporation of potential fields and motion primitives for the collision avoidance of unmanned aircraft. Appl. Sci. 2021, 11, 3103.

- Yi, J.; Yuan, Q.; Sun, R.; Bai, H. Path planning of a manipulator based on an improved P_RRT* algorithm. Complex Intell. Syst. 2022, 8, 2227–2245.

- Kang, J.G.; Lim, D.W.; Choi, Y.S.; Jang, W.J.; Jung, J.W. Improved RRT-connect algorithm based on triangular inequality for robot path planning. Sensors 2021, 21, 333.

- Kennedy, J.; Eberhart, R. Particle swarm optimization. In Proceedings of the ICNN’95-International Conference on Neural Networks, Perth, WA, Australia, 27 November–1 December 1995; Volume 4, pp. 1942–1948.

- Mirjalili, S.; Mirjalili, S. Genetic algorithm. Evol. Algorithms Neural Netw. Theory Appl. 2019, 780, 43–55.

- Mirjalili, S.; Mirjalili, S.M.; Lewis, A. Grey wolf optimizer. Adv. Eng. Softw. 2014, 69, 46–61.

- Neshat, M.; Sepidnam, G.; Sargolzaei, M.; Toosi, A.N. Artificial fish swarm algorithm: A survey of the state-of-the-art, hybridization, combinatorial and indicative applications. Artif. Intell. Rev. 2014, 42, 965–997.

- Mirjalili, S.; Lewis, A. The whale optimization algorithm. Adv. Eng. Softw. 2016, 95, 51–67.

- Tang, J.; Duan, H.; Lao, S. Swarm intelligence algorithms for multiple unmanned aerial vehicles collaboration: A comprehensive review. Artif. Intell. Rev. 2023, 56, 4295–4327.

- Alizadehsani, R.; Roshanzamir, M.; Izadi, N.H.; Gravina, R.; Kabir, H.D.; Nahavandi, D.; Alinejad-Rokny, H.; Khosravi, A.; Acharya, U.R.; Nahavandi, S.; et al. Swarm intelligence in internet of medical things: A review. Sensors 2023, 23, 1466.

- Wang, J.; Lin, D.; Zhang, Y.; Huang, S. An adaptively balanced grey wolf optimization algorithm for feature selection on high-dimensional classification. Eng. Appl. Artif. Intell. 2022, 114, 105088.

- Liu, L.; Zhang, R. Multistrategy improved whale optimization algorithm and its application. Comput. Intell. Neurosci. 2022, 2022, 3418269.

- Tajziehchi, K.; Ghabussi, A.; Alizadeh, H. Control and optimization against earthquake by using genetic algorithm. J. Appl. Eng. Sci. 2018, 8, 73–78.

- Liu, X.; Zhang, D.; Zhang, T.; Zhang, J.; Wang, J. A new path plan method based on hybrid algorithm of reinforcement learning and particle swarm optimization. Eng. Comput. 2022, 39, 993–1019.

- Lan, X.; Lv, X.; Liu, W.; He, Y.; Zhang, X. Research on robot global path planning based on improved A-star ant colony algorithm. In Proceedings of the 2021 IEEE 5th Advanced Information Technology, Electronic and Automation Control Conference (IAEAC), Chongqing, China, 12–14 March 2021; Volume 5, pp. 613–617.

- Szczepanski, R.; Tarczewski, T. Global path planning for mobile robot based on Artificial Bee Colony and Dijkstra’s algorithms. In Proceedings of the 2021 IEEE 19th International Power Electronics and Motion Control Conference (PEMC), Gliwice, Poland, 25–29 April 2021; pp. 724–730.

- Xiang, D.; Lin, H.; Ouyang, J.; Huang, D. Combined improved A* and greedy algorithm for path planning of multi-objective mobile robot. Sci. Rep. 2022, 12, 13273.

- Zhang, Z.; He, R.; Yang, K. A bioinspired path planning approach for mobile robots based on improved sparrow search algorithm. Adv. Manuf. 2022, 10, 114–130.

- Liu, L.; Wang, X.; Yang, X.; Liu, H.; Li, J.; Wang, P. Path planning techniques for mobile robots: Review and prospect. Expert Syst. Appl. 2023, 227, 120254.

- Heidari, A.A.; Mirjalili, S.; Faris, H.; Aljarah, I.; Mafarja, M.; Chen, H. Harris hawks optimization: Algorithm and applications. Future Gener. Comput. Syst. 2019, 97, 849–872.

- Gezici, H.; Livatyalı, H. Chaotic Harris hawks optimization algorithm. J. Comput. Des. Eng. 2022, 9, 216–245.

- Shehab, M.; Mashal, I.; Momani, Z.; Shambour, M.K.Y.; AL-Badareen, A.; Al-Dabet, S.; Bataina, N.; Alsoud, A.R.; Abualigah, L. Harris hawks optimization algorithm: Variants and applications. Arch. Comput. Methods Eng. 2022, 29, 5579–5603.

- Hussien, A.G.; Abualigah, L.; Abu Zitar, R.; Hashim, F.A.; Amin, M.; Saber, A.; Almotairi, K.H.; Gandomi, A.H. Recent advances in Harris hawks optimization: A comparative study and applications. Electronics 2022, 11, 1919.

- Basha, J.; Bacanin, N.; Vukobrat, N.; Zivkovic, M.; Venkatachalam, K.; Hubálovskỳ, S.; Trojovskỳ, P. Chaotic Harris hawks optimization with quasi-reflection-based learning: An application to enhance CNN design. Sensors 2021, 21, 6654.

- Jia, H.; Lang, C.; Oliva, D.; Song, W.; Peng, X. Dynamic Harris hawks optimization with mutation mechanism for satellite image segmentation. Remote Sens. 2019, 11, 1421.

- Bao, X.; Jia, H.; Lang, C. A novel hybrid Harris hawks optimization for color image multilevel thresholding segmentation. IEEE Access 2019, 7, 76529–76546.

- Zhang, R.; Li, S.; Ding, Y.; Qin, X.; Xia, Q. UAV Path Planning Algorithm Based on Improved Harris Hawks Optimization. Sensors 2022, 22, 5232.

- Du, P.; Wang, J.; Hao, Y.; Niu, T.; Yang, W. A novel hybrid model based on multi-objective Harris hawks optimization algorithm for daily PM2.5 and PM10 forecasting. Appl. Soft Comput. 2020, 96, 106620.

- Zhang, Y.; Zhou, X.; Shih, P.C. Modified Harris Hawks optimization algorithm for global optimization problems. Arab. J. Sci. Eng. 2020, 45, 10949–10974.

- Liu, X.; Liang, T. Harris hawk optimization algorithm based on square neighborhood and random array. Control Decis. 2021, 37, 2467–2476.

- Zhu, C.; Pan, X.; Zhang, Y. Harris hawks optimization algorithm based on chemotaxis correction. J. Comput. Appl. 2022, 42, 1186.

- Sihwail, R.; Omar, K.; Ariffin, K.A.Z.; Tubishat, M. Improved Harris hawks optimization using elite opposition-based learning and novel search mechanism for feature selection. IEEE Access 2020, 8, 121127–121145.

- Zhang, X.; Zhao, K.; Niu, Y. Improved Harris hawks optimization based on adaptive cooperative foraging and dispersed foraging strategies. IEEE Access 2020, 8, 160297–160314.

- Wolpert, D.H.; Macready, W.G. No free lunch theorems for optimization. IEEE Trans. Evol. Comput. 1997, 1, 67–82.

- Kennedy, J. Swarm Intelligence; Springer: Berlin/Heidelberg, Germany, 2006.

- Li, C.; Huang, X.; Ding, J.; Song, K.; Lu, S. Global path planning based on a bidirectional alternating search A* algorithm for mobile robots. Comput. Ind. Eng. 2022, 168, 108123.

- Yao, J.; Lin, C.; Xie, X.; Wang, A.J.; Hung, C.C. Path planning for virtual human motion using improved A* star algorithm. In Proceedings of the 2010 Seventh International Conference on Information Technology: New Generations, Las Vegas, NV, USA, 12–14 April 2010; pp. 1154–1158.

- Mirjalili, S. SCA: A sine cosine algorithm for solving optimization problems. Knowl.-Based Syst. 2016, 96, 120–133.

- Tanyildizi, E.; Demir, G. Golden sine algorithm: A novel math-inspired algorithm. Adv. Electr. Comput. Eng. 2017, 17, 71–78.

- Zhu, C.; Zhang, Y.; Pan, X.; Chen, Q.; Fu, Q. Improved Harris hawks optimization algorithm based on quantum correction and Nelder-Mead simplex method. Math. Biosci. Eng. 2022, 19, 7606–7648.

- Kiani, F.; Nematzadeh, S.; Anka, F.A.; Findikli, M.A. Chaotic Sand Cat Swarm Optimization. Mathematics 2023, 11, 2340.

- Li, Y.; Wang, H.; Fan, J.; Geng, Y. A novel Q-learning algorithm based on improved whale optimization algorithm for path planning. PLoS ONE 2022, 17, e0279438.

- Long, W.; Cai, S.H.; Jiao, J.J.; Wu, T.B. An improved grey wolf optimization algorithm. Acta Electronica Sin. 2019, 47, 169.

- Tang, C.; Sun, W.; Wu, W.; Xue, M. A hybrid improved whale optimization algorithm. In Proceedings of the 2019 IEEE 15th International Conference on Control and Automation (ICCA), Edinburgh, UK, 16–19 July 2019; pp. 362–367.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).