Abstract

According to the American Cancer Society, breast cancer is the second leading cause of cancer death among women, after lung cancer. Death rates can be reduced if breast cancer is diagnosed and treated early. Because manual breast cancer diagnosis is time-consuming, an automated approach is necessary for early identification. This research proposes a novel framework that integrates metaheuristic optimization with deep learning and feature selection to robustly classify breast cancer from ultrasound images. The structure of the proposed methodology consists of five stages, namely, data augmentation to improve the learning of convolutional neural network (CNN) models, transfer learning using the GoogleNet deep network for feature extraction, selection of the best set of features using a novel optimization algorithm based on a hybrid of the dipper throated and particle swarm optimization algorithms, and classification of the selected features using a CNN optimized by the proposed optimization algorithm. To demonstrate the effectiveness of the proposed approach, a set of experiments was conducted on a breast cancer dataset, freely available on Kaggle, to evaluate the performance of the proposed feature selection method and of the optimized CNN. In addition, statistical tests were performed to study the stability of the proposed approach and its statistical difference from state-of-the-art approaches. The results confirmed the superiority of the proposed approach, with a classification accuracy of 98.1%, which is better than that of the other approaches considered in the experiments.

1. Introduction

Breast cancer, one of the most frequent types of cancer in women, develops in the breast and can then spread to other regions of the body [1]. It is the second most frequent malignancy worldwide (after lung cancer) [2,3]. Breast tumors can sometimes be detected using X-ray imaging. Approximately 1.8 million new cases of cancer were expected to be identified in 2020 [4], of which breast cancer accounts for almost 30%. Breast tumors are grouped into distinct categories according to the properties of their cells: malignant breast cancer is one kind, and benign breast cancer is another. Early detection is therefore essential if the death rate associated with breast cancer is to be lowered [5].

Several imaging methods exist for diagnosing breast cancer at an early stage. In clinical practice, breast ultrasonography is one of the most frequently used diagnostic tools [6,7]. Breast cancer originates in the epithelial cells that line the terminal duct lobular unit. Cancer cells that do not invade other tissues are called noninvasive [8]. In in situ cancer, the cells remain inside the basement membranes of the draining duct and the terminal duct lobular unit. The presence or absence of metastases to the axillary lymph nodes is a major factor in determining the best course of therapy for breast cancer [9]. Ultrasound imaging is among the most popular tools for diagnosing and classifying breast abnormalities [10], and it is widely used alongside mammography in radiological diagnosis. However, the difficulties it faces in real-world use are rarely discussed. In particular, the presence of speckle must be considered carefully, and preprocessing techniques such as wavelet-based denoising [11], in both its first and second generations [12], should be considered.

Ultrasound is a powerful diagnostic technique in dense breast tissue, often detecting breast cancers that mammography misses [13]. Ultrasound is widely utilized in diagnosing breast cancers [9] because it is non-invasive, generally well tolerated by women, and does not expose patients to radiation. Ultrasound imaging is more portable and less expensive than other medical imaging modalities such as MRI and mammography [14]. To aid radiologists in evaluating breast ultrasound testing, computer-aided diagnosis (CAD) systems were created [15,16]. It is challenging to generalize the visual information used by older CAD systems [17,18,19,20,21,22] to ultrasound images captured using various techniques. AI methods for automated breast cancer detection utilizing ultrasound images have made great strides forward in recent years [23,24,25]. Key components of an automated procedure include ultrasound image preprocessing, cancer segmentation, feature extraction from segmented cancer, and classification [26].

Deep learning has recently demonstrated significant improvement in several areas, including cell segmentation [27], skin melanoma identification [28], hemorrhage detection [29], and others [30,31]. Clinical applications of deep learning in medical imaging have been fruitful, particularly in the diagnosis of breast cancer [32], COVID-19 [33], Alzheimer’s disease [34], and brain cancer [35], among others [36,37,38]. The convolutional neural network (CNN) is a multi-layered deep learning architecture that converts image data into usable features, which are then used for detection and classification. However, the feature extraction process also generates irrelevant features from the raw images, which may reduce classification accuracy. Choosing the most significant features is therefore crucial for achieving a higher classification accuracy [39]. How to pick the most useful features from a set of extracted features remains an active research topic. Selection techniques such as the Genetic Algorithm (GA) and Particle Swarm Optimization (PSO), among others, have been introduced in the literature and applied in medical imaging. These strategies work with an optimal subset of features rather than the full feature space, which has the dual benefit of increasing system accuracy and reducing processing time [40]. However, a few crucial features are sometimes overlooked during feature selection, which harms system accuracy. Researchers in computer vision have therefore developed methods for fusing different types of information [41]. Fusion improves the system’s efficacy by increasing the number of predictors [42]. Serial and parallel fusion are two common feature fusion methods [43].

This paper examines the following issues: (i) The available ultrasound data are insufficient for building a good deep model, because a model trained on a small number of images produces inaccurate predictions. (ii) Misclassification often occurs because of the high degree of resemblance between benign and malignant breast tumors. (iii) Incorrect predictions are made because the features extracted from images include redundant and irrelevant information. To address these issues, a novel approach based on deep learning and metaheuristic optimization is proposed for breast cancer classification from ultrasound images.

The remainder of this article is organized as follows. Section 2 reviews the related work that inspired this article. Section 3 presents the proposed methodology, including deep learning, feature selection, and fusion. Section 4 analyzes and discusses the results. Section 5 concludes the paper.

2. Literature Review

Researchers have presented several automated, computer-vision-based approaches for breast cancer classification using ultrasound images [44,45]. Some of these researchers extracted features from raw images, whereas others began with a segmentation phase [46]. In a few instances, a preprocessing step was employed to increase the contrast of the input images and to emphasize the affected region for more accurate feature extraction [47]. One such CAD approach for breast cancer screening was described in [48]. The Hilbert Transform (HT) was used to convert the raw data into a brightness-mode image. The tumor was then segmented using a marker-controlled watershed transformation. After that, shape and texture features were extracted and classified using an ensemble decision tree model and a K-nearest neighbor (KNN) classifier. The authors in [3] used semantic segmentation, fuzzy logic, and deep learning to segment and classify breast tumors from ultrasound images. The images were preprocessed using fuzzy logic, and the tumor was then segmented using a semantic segmentation strategy. Tumors were finally classified using one of eight pre-trained models.

A radiomics classification pipeline based on machine learning (ML) was presented by the authors in [49]. The region of interest (ROI) was isolated, the features of interest were extracted, and machine learning classifiers were used to assign categories to the extracted features. Experiments on the breast ultrasound images (BUSI) dataset indicated an improvement in precision. The authors in [14] presented a deep learning-based system to classify breast masses from ultrasound images. Transfer learning (TL) was combined with deep representation scaling (DRS) layers inserted between the blocks of a pre-trained CNN to improve the flow of information. Only the parameters of the DRS layers were updated during network training, which adapted the pre-trained CNN to breast mass classification from the input images. The findings showed that the DRS approach performed exceptionally well in comparison to state-of-the-art methods. The authors in [5] presented a Dilated Semantic Segmentation Network (Di-CNN) to detect and classify breast cancer. For feature extraction, they fine-tuned a pre-trained DenseNet201 deep model through transfer learning. The nodules were then classified using the pre-trained model and a 24-layer CNN whose feature information was fused in parallel. The findings demonstrated that the fusion procedure enhanced the recognition accuracy.

A contextual level set technique for breast cancer segmentation was described in [50]. A network based on a UNet-like encoder-decoder architecture was developed to acquire broad semantic context. The breast cancer classification network proposed by the authors in [51] is a deep, doubly supervised transfer learning model. Specifically, they implemented the Maximum Mean Discrepancy (MMD) criterion within the Learning Using Privileged Information (LUPI) paradigm. They then integrated the two methods into a new doubly supervised TL network (DDSTN), which outperformed each method individually. In [52], Woo et al. presented an automated technique for analyzing ultrasound images to classify breast cancer, coupling several convolutional neural network (CNN) models with a new image fusion approach. This approach was evaluated on both public and private datasets, with impressive results. The authors in [53] introduced a deep learning model to detect breast masses in ultrasound images. To account for the different sizes, shapes, and phenotypes of breast masses, the team used a selective kernel U-Net CNN. Using this method, they experimented on a combined set of 882 breast images. They also considered three additional datasets and reported improved accuracy.

A computerized method for locating breast tumor sections (BTS) in breast MRI slices was developed by the authors in [54]. The BTS is enhanced and extracted from 2D MRI slices using a combined thresholding and segmentation strategy. To enhance the BTS, the authors apply tri-level thresholding based on the Slime Mould Algorithm and Shannon’s entropy. Watershed segmentation is then used to extract the BTS. The extracted BTS were compared to the ground truth to obtain the necessary image performance values. The authors in [55] detected breast cancer using an extreme learning machine (ELM). Irrelevant features were filtered out using the gain ratio feature selection strategy. Finally, the authors demonstrated and validated a cloud-based approach to remote breast cancer diagnosis on the Wisconsin Diagnostic Breast Cancer dataset.

The authors in [56] proposed a method for diagnosing brain tumors using edge detection and the U-NET model. The proposed tumor segmentation system is built on image enhancement, edge detection, and fuzzy logic-based classification: the input images are preprocessed with a contrast enhancement strategy, a fuzzy logic-based edge detection method locates the edges in the original images, and a dual tree-complex wavelet transform is applied at various scale levels. To identify meningioma in brain scans, the authors computed features from the sub-band images, which were subsequently classified using the U-NET CNN. The authors in [57] developed an automated breast cancer diagnostic system based on breast thermal images. In the first step, they captured images of the breasts in a variety of positions. Extensive connections and coarse segmentation were used in this research, and in subsequent steps the nodule was refined using a dilated filter. Additionally, a class imbalance loss function was recommended to enhance the accuracy of the proposed architecture.

The methods discussed above show that researchers often overlook the preprocessing stage, typically beginning with segmentation and then moving on to feature extraction. Several of them applied feature fusion to enhance classification accuracy, but little effort was devoted to selecting the best features, and computational time, which is a significant consideration, was not addressed. In this study, we put forth an approach for classifying breast masses using a combination of several deep learning features. Table 1 summarizes some of the most recent methods.

Table 1.

A review of classification methods for breast cancer.

3. The Proposed Methodology

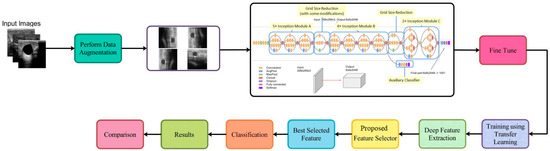

This section describes the proposed framework for breast cancer classification from ultrasound images. Figure 1 shows the structure of the proposed framework. The original ultrasound images are first augmented and then fed into GoogleNet, a fine-tuned deep network, for training. Transfer learning is used for training, and features are extracted from the global average pooling layer. The extracted features are then refined using improved optimization methods based on the dipper throated and particle swarm algorithms. By applying a probabilistic method, the most useful features are combined. A machine learning classifier is then used to classify the fused features.

Figure 1.

Architecture of the proposed methodology.

3.1. Dataset Augmentation

Data augmentation has received significant research attention in deep learning in recent years. Medical datasets are typically small, which is a problem because deep learning requires a large number of training samples. A data augmentation process is therefore required to increase the variety of the original dataset. The BUSI dataset is used in this work. The dataset contains 780 images (500 × 500), with 133 “normal” images, 210 “malignant” images, and 487 “benign” images [58], as shown in Figure 2. The data were split in half for training and testing, so the training set comprised 56 normal, 105 malignant, and 243 benign images. The deep learning model cannot be trained on so few images without an additional data augmentation phase. To expand the variety of the original dataset, the original ultrasound images are processed by three operations: horizontal flip, vertical flip, and 90-degree rotation. These operations are repeated until there are 4000 images in each class, so the augmented dataset contains 12,000 images.

Figure 2.

The three classes of the samples in the adopted dataset.

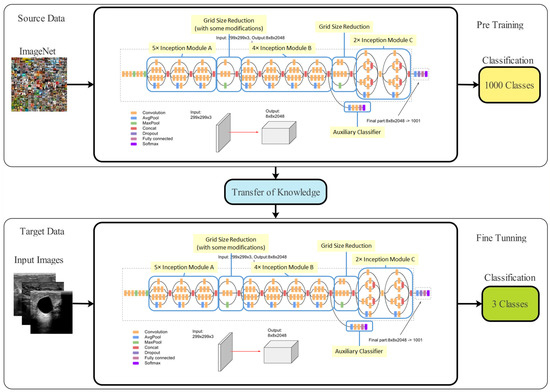
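The three augmentation operations described in Section 3.1 can be illustrated with a minimal NumPy sketch. The function names `augment` and `expand_class` are hypothetical and are not taken from the original implementation; the loop that cycles through the pool until a target count is reached is only one possible reading of "repeated until there are 4000 images in each class".

```python
import numpy as np


def augment(image: np.ndarray) -> list[np.ndarray]:
    """Return the three augmented variants named in the text."""
    return [
        np.fliplr(image),  # horizontal flip (mirror left-right)
        np.flipud(image),  # vertical flip (mirror top-bottom)
        np.rot90(image),   # 90-degree rotation (counter-clockwise)
    ]


def expand_class(images: list[np.ndarray], target: int) -> list[np.ndarray]:
    """Apply the operations repeatedly until the class holds `target` images.

    Assumption: variants are appended to the pool and may themselves be
    augmented later, so exact duplicates can appear for large targets.
    """
    if not images:
        raise ValueError("at least one source image is required")
    pool = list(images)
    i = 0
    while len(pool) < target:
        for variant in augment(pool[i]):
            if len(pool) < target:
                pool.append(variant)
        i += 1
    return pool
```

Calling `expand_class(train_images, 4000)` once per class would yield the 12,000-image training set mentioned above, under the stated assumption about how the repetition is carried out.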

3.2. Transfer Learning

Transfer learning (TL) is a machine learning technique that applies a previously trained model to a new problem. From a practical standpoint, the sample efficiency of a supervised learning agent can be vastly improved by reusing or transferring knowledge from previously learned tasks to newly learned tasks [59]. In this work, TL is used for deep feature extraction. A pre-trained model is first modified, and the modified model is then trained using TL; that is, knowledge from the pre-trained model (source domain) is transferred to the modified deep model (target domain). The new model is trained with the following hyperparameters: learning rate = 0.001, mini-batch size = 16, epochs = 200, and stochastic gradient descent as the learning algorithm. Features are extracted from the Global Average Pooling (GAP) layer of the modified deep model. Then, two reformed optimization techniques are used to refine the extracted features. Figure 3 depicts the transfer learning process.

Figure 3.

Architecture of the transfer learning process.
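To make the feature extraction step concrete, the following sketch shows what a global average pooling layer computes, together with the hyperparameters quoted in the text. It is a NumPy illustration only, not the authors' training code; the feature-map shape (1024 channels of size 7 × 7) and the names `global_average_pool` and `TRAINING_CONFIG` are assumptions made for the example.

```python
import numpy as np

# Hyperparameters quoted in Section 3.2, collected in one place.
TRAINING_CONFIG = {
    "learning_rate": 0.001,
    "mini_batch_size": 16,
    "epochs": 200,
    "optimizer": "sgd",  # stochastic gradient descent
}


def global_average_pool(feature_maps: np.ndarray) -> np.ndarray:
    """Collapse (batch, channels, height, width) maps to (batch, channels).

    Each channel is replaced by the mean of its spatial activations, which
    gives one feature per channel for the later selection stage.
    """
    if feature_maps.ndim != 4:
        raise ValueError("expected shape (batch, channels, height, width)")
    return feature_maps.mean(axis=(2, 3))


# Assumed shapes: one mini-batch of 16 images, 1024 channels of 7 x 7.
rng = np.random.default_rng(0)
maps = rng.random((TRAINING_CONFIG["mini_batch_size"], 1024, 7, 7))
features = global_average_pool(maps)
print(features.shape)  # (16, 1024)
```

Each row of `features` is the vector that would be passed to the feature selection stage for one image.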

3.3. Classification of Selected Features

The proposed feature selection method selects the most significant features for robustly classifying breast cancer cases. The selected features are classified using a convolutional neural network (CNN), whose parameters are optimized by the proposed optimization algorithm to boost its performance. The output of the optimized CNN is evaluated using several criteria and is finally compared with recent classification models to demonstrate its effectiveness and superiority.

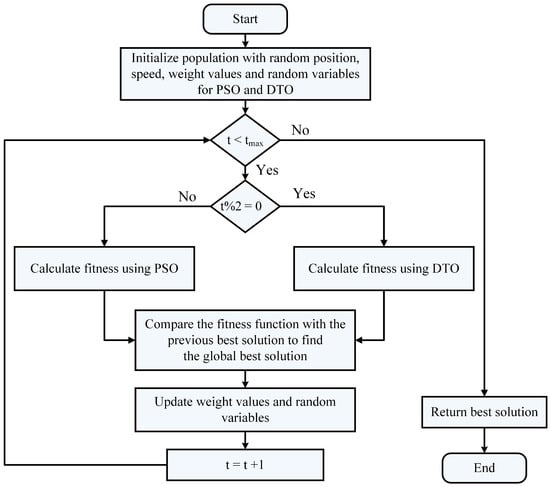

3.4. The Proposed Optimization Algorithm

The proposed optimization algorithm is based on two optimization algorithms, namely, dipper throated optimization (DTO) and particle swarm optimization (PSO). It swaps between the two algorithms dynamically and is denoted by dynamic DTPSO (DDTPSO). The proposed algorithm exploits the advantages of both algorithms to improve the exploration and exploitation of the optimization process and thus to find the best solution. The steps of the proposed algorithm are shown in the flowchart depicted in Figure 4.

Figure 4.

Flowchart of the proposed DDTPSO algorithm.
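The text does not give the update equations of the hybrid or the rule used to swap between DTO and PSO, so they are not reproduced here. As a point of reference only, the sketch below implements the standard PSO component with a linearly decreasing inertia weight. The function name `pso_minimize`, the search bounds, the acceleration constants `c1 = c2 = 2.0`, and the reading of the inertia range as 0.9 down to 0.6 are all assumptions; the defaults of 10 particles and 80 iterations follow the configuration reported in Section 4.1.

```python
import numpy as np


def pso_minimize(objective, dim, n_particles=10, n_iters=80,
                 bounds=(-1.0, 1.0), w_max=0.9, w_min=0.6,
                 c1=2.0, c2=2.0, seed=0):
    """Standard PSO (not the DDTPSO hybrid) minimizing `objective`."""
    rng = np.random.default_rng(seed)
    lo, hi = bounds
    pos = rng.uniform(lo, hi, size=(n_particles, dim))
    vel = np.zeros_like(pos)
    pbest = pos.copy()                       # personal best positions
    pbest_cost = np.array([objective(p) for p in pos])
    g = int(np.argmin(pbest_cost))
    gbest, gbest_cost = pbest[g].copy(), float(pbest_cost[g])
    history = [gbest_cost]                   # best-so-far cost per iteration

    for t in range(n_iters):
        # Inertia decreases linearly from w_max to w_min.
        w = w_max - (w_max - w_min) * t / max(n_iters - 1, 1)
        r1 = rng.random(pos.shape)
        r2 = rng.random(pos.shape)
        vel = w * vel + c1 * r1 * (pbest - pos) + c2 * r2 * (gbest - pos)
        pos = np.clip(pos + vel, lo, hi)     # keep particles inside bounds

        cost = np.array([objective(p) for p in pos])
        improved = cost < pbest_cost
        pbest[improved] = pos[improved]
        pbest_cost[improved] = cost[improved]

        g = int(np.argmin(pbest_cost))
        if pbest_cost[g] < gbest_cost:
            gbest, gbest_cost = pbest[g].copy(), float(pbest_cost[g])
        history.append(gbest_cost)

    return gbest, gbest_cost, history
```

A hybrid such as the one proposed here would replace or alternate the velocity update with the DTO update at selected iterations; the `history` list is the kind of data a convergence curve is drawn from.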

3.5. Feature Selection Algorithm

The feature selection process proposed in this work is based on the proposed optimization algorithm, with the resulting solution converted to binary. The proposed feature selection method is therefore denoted by binary DDTPSO (bDDTPSO). The conversion relies on the sigmoid function, which maps the continuous output of DDTPSO to binary values. The proposed feature selection algorithm is presented in Algorithm 1. It is used to select the significant features produced by the feature extraction process performed with the GoogleNet deep network. The selected features are then used to classify the input breast ultrasound image.

| Algorithm 1: The proposed binary DDTPSO algorithm. |
|---|
| 1: Initialize the parameters of the DDTPSO algorithm |
| 2: Convert the resulting best solution to binary [0, 1] |
| 3: Evaluate the fitness of the resulting solutions |
| 4: Train KNN to assess the resulting solutions |
| 5: Set t = 1 |
| 6: while t does not exceed the maximum number of iterations do |
| 7: Run the DDTPSO algorithm to obtain the best solutions |
| 8: Convert the best solutions to binary using the sigmoid function |
| 9: Calculate the fitness value |
| 10: Update the parameters of the DDTPSO algorithm |
| 11: Update t = t + 1 |
| 12: end while |
| 13: Return the best set of features |
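The binary conversion step of Algorithm 1 can be sketched as follows. The exact form of the sigmoid used by the authors is not given in the text, so the plain logistic function and the 0.5 threshold below are assumptions, as are the function names `binarize` and `select_features`.

```python
import numpy as np


def binarize(solution: np.ndarray, threshold: float = 0.5) -> np.ndarray:
    """Map a continuous solution vector to a 0/1 feature mask.

    Assumption: a plain logistic sigmoid followed by a fixed threshold.
    """
    probs = 1.0 / (1.0 + np.exp(-solution))
    return (probs >= threshold).astype(int)


def select_features(features: np.ndarray, mask: np.ndarray) -> np.ndarray:
    """Keep only the feature columns whose mask entry is 1."""
    return features[:, mask.astype(bool)]


# Toy example: three candidate features, two samples.
solution = np.array([-2.0, 0.0, 3.0])
mask = binarize(solution)
X = np.arange(6).reshape(2, 3)
print(mask)                      # [0 1 1]
print(select_features(X, mask))  # columns 1 and 2 of X
```

In the full algorithm, the reduced matrix returned by `select_features` would be passed to the KNN classifier that scores each candidate solution.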

4. Experimental Results

The experiments were run on a Windows 11 PC with a Core(TM) i5-2430M CPU @ 2.40 GHz, 16 GB of RAM, and an NVidia GPU with 8 GB of memory. The proposed methodology was implemented in Python 3.10. A freely available dataset on Kaggle is used to train the proposed methodology for classifying breast cancer cases. The dataset is randomly divided into training, validation, and testing subsets of equal size. The testing set is used to evaluate the effectiveness of the model, whereas the validation set is used when calculating the fitness function for a given solution.

4.1. Configuration Parameters

Table 2 shows the experimental setup of the optimization methods employed in this work. Ten search agents are used for each optimization algorithm, with 80 iterations and 20 runs. Ten-fold cross-validation is applied while training the classification models.
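For readers unfamiliar with k-fold cross-validation, the sketch below generates the train/validation index splits for k = 10 using only the standard library. It illustrates the general technique, not the authors' exact splitting code; the name `k_fold_indices` and the fixed seed are assumptions.

```python
import random


def k_fold_indices(n_samples: int, k: int = 10, seed: int = 0):
    """Yield (train_indices, validation_indices) for each of the k folds."""
    if k < 2 or k > n_samples:
        raise ValueError("k must be between 2 and n_samples")
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    # Deal the shuffled indices into k folds whose sizes differ by at most 1.
    folds = [indices[i::k] for i in range(k)]
    for i in range(k):
        validation = folds[i]
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, validation


for train, validation in k_fold_indices(25, k=10):
    pass  # train a classifier on `train`, score it on `validation`
```

Every sample appears in exactly one validation fold, so the averaged score uses each image once for validation and k − 1 times for training.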

4.2. Evaluation Metrics

The achieved results are assessed using the criteria presented in Table 3. These criteria are used to evaluate the performance of both the proposed feature selection method [60,61,62,63,64,65] and the proposed optimized classification model. In this table, M denotes the number of runs and N denotes the size of the test set; the remaining symbols denote the best solution at run j, the length of the best solution vector, and the predicted and actual values. The true positive, true negative, false positive, and false negative counts are denoted by TP, TN, FP, and FN, respectively.

Table 3.

Evaluation metrics used in assessing the proposed methods.
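Because the contents of Table 3 are not reproduced in this text, the sketch below uses the standard confusion-matrix definitions of common classification metrics in terms of TP, TN, FP, and FN. Whether these match every row of Table 3 is an assumption, and the function name `classification_metrics` is hypothetical.

```python
def _ratio(numerator: float, denominator: float) -> float:
    """Safe division that returns 0.0 when the denominator is zero."""
    return numerator / denominator if denominator else 0.0


def classification_metrics(tp: int, tn: int, fp: int, fn: int) -> dict:
    """Standard metrics computed from confusion-matrix counts."""
    return {
        "accuracy": _ratio(tp + tn, tp + tn + fp + fn),
        "sensitivity": _ratio(tp, tp + fn),   # true positive rate (recall)
        "specificity": _ratio(tn, tn + fp),   # true negative rate
        "ppv": _ratio(tp, tp + fp),           # positive predictive value
        "npv": _ratio(tn, tn + fn),           # negative predictive value
        "f1": _ratio(2 * tp, 2 * tp + fp + fn),
    }


metrics = classification_metrics(tp=90, tn=80, fp=20, fn=10)
print(metrics["accuracy"])  # 0.85
```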

Table 2.

Configuration parameters of the optimization algorithms.

| Algorithm | Parameter | Value |
|---|---|---|
| DTO [66] | Iterations | 500 |
| | Number of runs | 30 |
| | Exploration percentage | 70 |
| PSO [67] | Acceleration constants | [2, 12] |
| | Inertia | [0.6, 0.9] |
| | Number of particles | 10 |
| | Number of iterations | 80 |
| WOA [68] | r | [0, 1] |
| | Number of iterations | 80 |
| | Number of whales | 10 |
| | a | 2 to 0 |
| GWO [69] | a | 2 to 0 |
| | Number of iterations | 80 |
| | Number of wolves | 10 |
| SBO [70] | Step size | 0.94 |
| | Mutation probability | 0.05 |
| | Lower and upper limit difference | 0.02 |
| GA [71] | Crossover | 0.9 |
| | Mutation ratio | 0.1 |
| | Selection mechanism | Roulette wheel |
| | Number of iterations | 80 |
| | Number of agents | 10 |
| MVO [72] | Wormhole existence probability | [0.2, 1] |
| FA [73] | Number of fireflies | 10 |
| BA [74] | Inertia factor | [0, 1] |

4.3. Feature Extraction Evaluation

This section presents and discusses the evaluation of the feature extraction process, with the results listed in Table 4. Feature extraction is performed using four deep learning models, and the classification results are recorded in the table. The results achieved by the GoogleNet deep model are superior to those of the other deep learning models. Therefore, the GoogleNet deep model is adopted for the subsequent steps of the proposed methodology.

Table 4.

Evaluation of the results achieved by various deep learning models.

4.4. Feature Selection Evaluation

Once the features are extracted, the best set of features that can be used to classify the input image are selected. The feature selection is performed using the proposed algorithm presented in the previous section. Eleven other feature selection algorithms are employed for comparison to prove the effectiveness and superiority of the proposed feature selection method. The evaluation of the results achieved by the proposed and other feature selection methods is presented in Table 5. This table shows that the results achieved by the proposed feature selection method are much better than those of the other methods.

Table 5.

Evaluation of the results achieved by the feature selection methods.

In addition, a statistical analysis is applied to study the stability of the proposed feature selection method, and the results are listed in Table 6. In this table, the proposed feature selection method achieved the minimum standard deviation and the best mean, median, minimum, and maximum measurements compared to the other feature selection methods.

Table 6.

Statistical analysis of the results achieved by the feature selection methods.
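The descriptive statistics named in this analysis (mean, median, standard deviation, minimum, and maximum over repeated runs) can be computed as in the short sketch below. The input values are made up for illustration and are not results from the paper; the use of the sample standard deviation and the name `summarize_runs` are assumptions.

```python
import statistics


def summarize_runs(errors: list[float]) -> dict:
    """Descriptive statistics of a metric collected over repeated runs."""
    if len(errors) < 2:
        raise ValueError("at least two runs are needed for a standard deviation")
    return {
        "mean": statistics.mean(errors),
        "median": statistics.median(errors),
        "std": statistics.stdev(errors),  # sample standard deviation (assumed)
        "min": min(errors),
        "max": max(errors),
    }


# Illustrative values only, not taken from Table 6.
summary = summarize_runs([0.02, 0.03, 0.04])
```

A smaller `std` across runs is what the text refers to as greater stability of a method.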

On the other hand, two statistical tests were conducted to study the statistical difference between the proposed feature selection method and the other feature selection methods. The first test is the one-way analysis of variance (ANOVA) test, and the other test is the Wilcoxon signed-rank test. The results of these tests are presented in Table 7 and Table 8. As presented in these tables, the most significant parameter is p, which indicates a statistical difference when its value is less than 0.005. The results presented in these tables confirm the statistical difference between the proposed feature selection method and the other feature selection methods.

Table 7.

One-way analysis of variance (ANOVA) test of the feature selection methods compared to the proposed feature selection method.

Table 8.

Wilcoxon signed-rank test of the feature selection methods compared to the proposed feature selection method.
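As a worked illustration of the one-way ANOVA test, the sketch below computes the F statistic and its degrees of freedom from first principles. It does not compute the p value, which requires the F distribution (for example via `scipy.stats.f_oneway`; `scipy.stats.wilcoxon` covers the signed-rank test). The function name `one_way_anova_f` and the sample data are assumptions for the example, not values from Tables 7 and 8.

```python
def one_way_anova_f(groups: list[list[float]]) -> tuple[float, int, int]:
    """Return (F, df_between, df_within) for a one-way ANOVA."""
    k = len(groups)
    n = sum(len(g) for g in groups)
    if k < 2 or n <= k:
        raise ValueError("need at least two groups and more samples than groups")

    means = [sum(g) / len(g) for g in groups]
    grand_mean = sum(sum(g) for g in groups) / n

    # Variation of the group means around the grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2
                     for g, m in zip(groups, means))
    # Variation of the samples around their own group mean.
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    if ss_within == 0:
        raise ValueError("within-group variance is zero; F is undefined")

    df_between, df_within = k - 1, n - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Illustrative data: three methods, three runs each.
f_stat, df_b, df_w = one_way_anova_f([[1, 2, 3], [2, 3, 4], [5, 6, 7]])
print(f_stat, df_b, df_w)  # 13.0 2 6
```

A large F relative to the F distribution with (df_between, df_within) degrees of freedom yields a small p value, which is the quantity compared against the threshold in the text.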

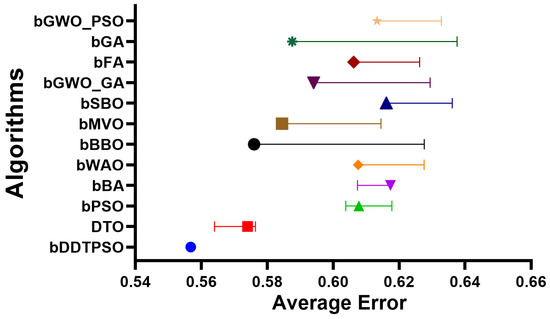

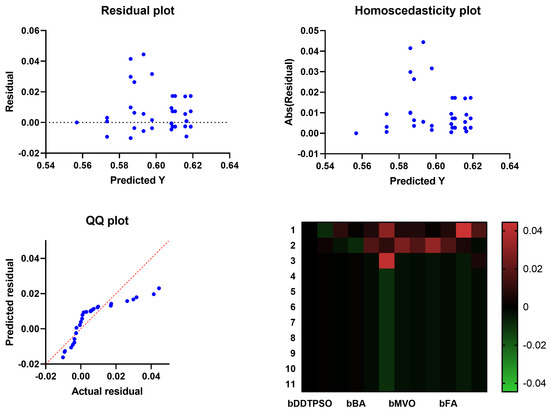

A more in-depth analysis of the results achieved by the proposed feature selection method is presented in the plots shown in Figure 5 and Figure 6. Figure 5 shows the average error achieved by the proposed feature selection method compared to the other feature selection methods. As shown in this plot, the proposed method is superior and more robust in selecting the best set of features for classifying breast cancer cases. The robustness of the proposed approach is analyzed further in the residual, homoscedasticity, quantile–quantile (QQ), and heatmap plots of Figure 6. In the residual and homoscedasticity plots, the error values range from 0.01 to 0.04, indicating the accuracy of the proposed approach. In addition, the points of the QQ plot lie close to the red diagonal line, confirming the accuracy of the proposed method. Moreover, a set of 11 runs was performed to study the average error of the proposed method in comparison with the other methods. In the heatmap (bottom-right) plot, the results achieved by the proposed method are superior to those of the other methods. These plots emphasize the effectiveness and superiority of the proposed method.

Figure 5.

The average error achieved by the proposed feature selection method compared to other methods.

Figure 6.

Analysis plots of the results achieved by the proposed feature selection algorithm bDDTPSO.

4.5. Evaluation of Classification Models

The best set of features selected by the proposed feature selection algorithm is then used to train the classification models. In this work, four classifiers are evaluated to choose the best one: convolutional neural networks (CNN), neural networks (NN), support vector machines (SVM), and K-nearest neighbors (KNN). The results of these classifiers are presented in Table 9. In this table, the CNN model performs much better than the other models and is thus adopted for optimization to further improve its performance.

Table 9.

Evaluation of the results achieved by various classification models.

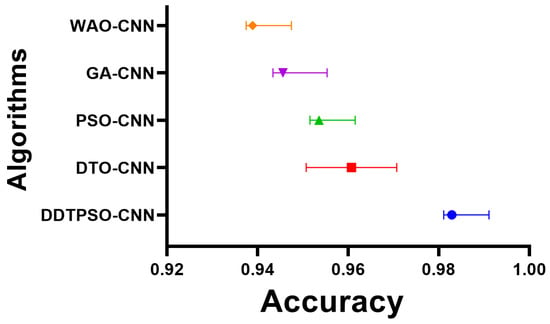

4.6. Evaluation of Optimized CNN Classification

As the CNN model is adopted for the classification of the input images, this model is subjected to optimization using the proposed DDTPSO algorithm to improve its performance. The optimization of this model is performed using four optimization algorithms in addition to the proposed optimization algorithm. The results of the optimized CNN using these optimization algorithms are presented in Table 10. This table shows that the performance of the optimized CNN is much better than the CNN without optimization, especially using the proposed DDTPSO optimization algorithm. These results confirm the effectiveness of the proposed optimization algorithm.

Table 10.

Evaluation results of the optimized CNN for classifying breast cancer cases.

To further analyze the proposed optimization algorithm’s performance, the optimized CNN’s results using different optimization methods are analyzed statistically to show the proposed algorithm’s superiority and statistical difference. Table 11 presents the results of the statistical analysis, which confirm the stability of the optimization of CNN using the proposed algorithm with the minimum value of standard deviation and the best values achieved for the other criteria in the statistical analysis.

Table 11.

Statistical analysis of the results achieved by the optimized CNN.

The statistical difference of the proposed optimization algorithm is studied in terms of the ANOVA and Wilcoxon tests, similar to the study performed on the proposed feature selection method discussed in the previous section. The results of these tests are presented in Table 12 and Table 13. The p values in these tables are less than 0.005, proving the statistical difference between the proposed optimization algorithm and the other algorithms.

Table 12.

Wilcoxon signed-rank test of the optimization models compared to the proposed optimization model.

Table 13.

One-way analysis of variance (ANOVA) test of the optimization models compared to the proposed optimization model.

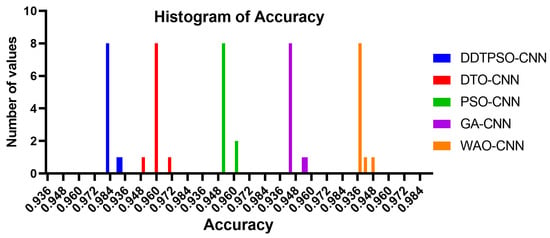

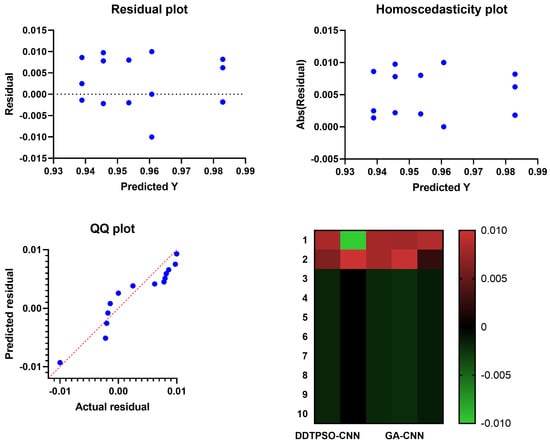

Another in-depth analysis of the results achieved by the proposed optimization method for CNN is presented in terms of the plots shown in Figure 7, Figure 8 and Figure 9. These plots represent the accuracy, histogram of accuracy, and analysis plots achieved by the proposed optimization of CNN compared to the other optimization methods. These plots show that the proposed method is superior and more robust in classifying breast cancer cases.

Figure 7.

Accuracy of the results achieved by CNN optimization using the proposed DDTPSO method compared to other methods.

Figure 8.

Histogram of the accuracy achieved by the proposed method compared to other methods.

Figure 9.

Analysis plots of the results achieved by the proposed DDTPSO optimization algorithm when applied to CNN.

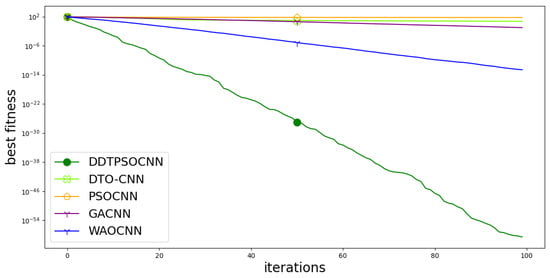

The convergence curve is depicted in Figure 10. This curve shows the cost function values versus the computation time. The figure shows that the proposed approach converges faster than the competing methods, which further emphasizes the superiority of the proposed methodology.

Figure 10.

Convergence curve of the proposed method with comparison to other methods.

In addition, a linear regression test is performed to study the relationship between the proposed and other approaches. The regression test results are presented in Table 14. In this table, the calculated p value is below the significance threshold, indicating a significant relationship between the proposed methodology and the competing methodologies.

Table 14.

Linear regression test results.

5. Conclusions

This paper proposes a fully automated technique to analyze ultrasound images for signs of breast cancer. The proposed approach relies on a series of consecutive procedures. First, the breast ultrasound data are augmented, and a GoogleNet deep learning model is retrained on them. Then, a novel optimization technique derived from the dipper throated optimization algorithm and the particle swarm optimization algorithm is used to select the best set of features. This set of features is used in classifying breast cancer cases. Experimental results showed that the proposed approach achieved the highest accuracy, at 98.1% (using the selected features and the CNN classifier). Compared to recent methods, the outcomes are better when the proposed framework is used. The strengths of this work lie in the augmented dataset, which improves training, and in the elimination of irrelevant features through a novel feature selection algorithm. In the future, two main steps can be considered: (i) utilizing a larger dataset and (ii) developing a new CNN model specifically for breast cancer classification.

Author Contributions

Conceptualization, M.M.E.; methodology, A.A.A. and S.K.T.; software, S.K.T. and M.M.E.; validation, D.S.K.; formal analysis, A.A.A. and D.S.K.; investigation, S.K.T. and M.M.E.; writing—original draft, M.M.E., A.A.A. and D.S.K.; writing—review & editing, A.A.A. and S.K.T.; visualization, A.A.A. and D.S.K.; project administration, S.K.T. All authors have read and agreed to the published version of the manuscript.

Funding

This research project was funded by the Deanship of Scientific Research, Princess Nourah bint Abdulrahman University, through the Program of Research Project Funding After Publication, grant no. (43-PRFA-P-79).

Institutional Review Board Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare that they have no conflict of interest to report regarding the present study.

References

- Yu, K.; Chen, S.; Chen, Y. Tumor Segmentation in Breast Ultrasound Image by Means of Res Path Combined with Dense Connection Neural Network. Diagnostics 2021, 11, 1565.

- Feng, Y.; Spezia, M.; Huang, S.; Yuan, C.; Zeng, Z.; Zhang, L.; Ji, X.; Liu, W.; Huang, B.; Luo, W.; et al. Breast cancer development and progression: Risk factors, cancer stem cells, signaling pathways, genomics, and molecular pathogenesis. Genes Dis. 2018, 5, 77–106.

- Badawy, S.M.; Mohamed, A.E.N.A.; Hefnawy, A.A.; Zidan, H.E.; GadAllah, M.T.; El-Banby, G.M. Automatic semantic segmentation of breast tumors in ultrasound images based on combining fuzzy logic and deep learning—A feasibility study. PLoS ONE 2021, 16, e0251899.

- Zhang, S.C.; Hu, Z.Q.; Long, J.H.; Zhu, G.M.; Wang, Y.; Jia, Y.; Zhou, J.; Ouyang, Y.; Zeng, Z. Clinical Implications of Tumor-Infiltrating Immune Cells in Breast Cancer. J. Cancer 2019, 10, 6175–6184.

- Irfan, R.; Almazroi, A.A.; Rauf, H.T.; Damaševičius, R.; Nasr, E.A.; Abdelgawad, A.E. Dilated Semantic Segmentation for Breast Ultrasonic Lesion Detection Using Parallel Feature Fusion. Diagnostics 2021, 11, 1212.

- Faust, O.; Acharya, U.R.; Meiburger, K.M.; Molinari, F.; Koh, J.E.W.; Yeong, C.H.; Kongmebhol, P.; Ng, K.H. Comparative assessment of texture features for the identification of cancer in ultrasound images: A review. Biocybern. Biomed. Eng. 2018, 38, 275–296.

- Abdelaziz, A.; Abdelhamid, S.R.A. Optimized Two-Level Ensemble Model for Predicting the Parameters of Metamaterial Antenna. Comput. Mater. Contin. 2022, 73, 917–933.

- Sainsbury, J.R.C.; Anderson, T.J.; Morgan, D.A.L. Breast cancer. BMJ 2000, 321, 745–750.

- Sun, Q.; Lin, X.; Zhao, Y.; Li, L.; Yan, K.; Liang, D.; Sun, D.; Li, Z.C. Deep Learning vs. Radiomics for Predicting Axillary Lymph Node Metastasis of Breast Cancer Using Ultrasound Images: Don’t Forget the Peritumoral Region. Front. Oncol. 2020, 10, 53.

- Almajalid, R.; Shan, J.; Du, Y.; Zhang, M. Development of a Deep-Learning-Based Method for Breast Ultrasound Image Segmentation. In Proceedings of the 2018 17th IEEE International Conference on Machine Learning and Applications (ICMLA), Orlando, FL, USA, 17–20 December 2018; pp. 1103–1108.

- Ouahabi, A. (Ed.) Signal and Image Multiresolution Analysis; John Wiley & Sons, Inc.: Hoboken, NJ, USA, 2012.

- Sami Khafaga, D.; Ali Alhussan, A.; El-kenawy, E.S.M.; Takieldeen, A.E.; Hassan, T.M.; Hegazy, E.A.; Eid, E.A.F.; Ibrahim, A.; Abdelhamid, A.A. Meta-heuristics for Feature Selection and Classification in Diagnostic Breast-Cancer. Comput. Mater. Contin. 2022, 73, 749–765.

- Sood, R.; Rositch, A.F.; Shakoor, D.; Ambinder, E.; Pool, K.L.; Pollack, E.; Mollura, D.J.; Mullen, L.A.; Harvey, S.C. Ultrasound for Breast Cancer Detection Globally: A Systematic Review and Meta-Analysis. J. Glob. Oncol. 2019, 5, 1–17.

- Byra, M. Breast mass classification with transfer learning based on scaling of deep representations. Biomed. Signal Process. Control 2021, 69, 102828.

- Chen, D.R.; Hsiao, Y.H. Computer-aided Diagnosis in Breast Ultrasound. J. Med. Ultrasound 2008, 16, 46–56.

- Moustafa, A.F.; Cary, T.W.; Sultan, L.R.; Schultz, S.M.; Conant, E.F.; Venkatesh, S.S.; Sehgal, C.M. Color Doppler Ultrasound Improves Machine Learning Diagnosis of Breast Cancer. Diagnostics 2020, 10, 631.

- Shen, W.C.; Chang, R.F.; Moon, W.K.; Chou, Y.H.; Huang, C.S. Breast Ultrasound Computer-Aided Diagnosis Using BI-RADS Features. Acad. Radiol. 2007, 14, 928–939.

- Lee, J.H.; Seong, Y.K.; Chang, C.H.; Park, J.; Park, M.; Woo, K.G.; Ko, E.Y. Fourier-based shape feature extraction technique for computer-aided B-Mode ultrasound diagnosis of breast tumor. In Proceedings of the 2012 Annual International Conference of the IEEE Engineering in Medicine and Biology Society, San Diego, CA, USA, 28 August–1 September 2012; pp. 6551–6554.

- Ding, J.; Cheng, H.D.; Huang, J.; Liu, J.; Zhang, Y. Breast Ultrasound Image Classification Based on Multiple-Instance Learning. J. Digit. Imaging 2012, 25, 620–627.

- Bing, L.; Wang, W. Sparse Representation Based Multi-Instance Learning for Breast Ultrasound Image Classification. Comput. Math. Methods Med. 2017, 2017, e7894705.

- Prabhakar, T.; Poonguzhali, S. Automatic detection and classification of benign and malignant lesions in breast ultrasound images using texture morphological and fractal features. In Proceedings of the 2017 10th Biomedical Engineering International Conference (BMEiCON), Hokkaido, Japan, 31 August–2 September 2017; pp. 1–5.

- Zhang, Q.; Suo, J.; Chang, W.; Shi, J.; Chen, M. Dual-modal computer-assisted evaluation of axillary lymph node metastasis in breast cancer patients on both real-time elastography and B-mode ultrasound. Eur. J. Radiol. 2017, 95, 66–74.

- Gao, Y.; Geras, K.J.; Lewin, A.A.; Moy, L. New Frontiers: An Update on Computer-Aided Diagnosis for Breast Imaging in the Age of Artificial Intelligence. Am. J. Roentgenol. 2019, 212, 300–307.

- Geras, K.J.; Mann, R.M.; Moy, L. Artificial Intelligence for Mammography and Digital Breast Tomosynthesis: Current Concepts and Future Perspectives. Radiology 2019, 293, 246–259.

- Fujioka, T.; Mori, M.; Kubota, K.; Oyama, J.; Yamaga, E.; Yashima, Y.; Katsuta, L.; Nomura, K.; Nara, M.; Oda, G.; et al. The Utility of Deep Learning in Breast Ultrasonic Imaging: A Review. Diagnostics 2020, 10, 1055.

- Khafaga, D.S.; Alhussan, A.A.; El-kenawy, E.S.M.; Ibrahim, A.; Abd Elkhalik, S.H.; El-Mashad, S.Y.; Abdelhamid, A.A. Improved Prediction of Metamaterial Antenna Bandwidth Using Adaptive Optimization of LSTM. Comput. Mater. Contin. 2022, 73, 865–881.

- Kadry, S.; Rajinikanth, V.; Taniar, D.; Damaševičius, R.; Valencia, X.P.B. Automated segmentation of leukocyte from hematological images—a study using various CNN schemes. J. Supercomput. 2022, 78, 6974–6994.

- Abayomi-Alli, O.; Damasevicius, R.; Misra, S.; Maskeliunas, R.; Abayomi-Alli, A. Malignant skin melanoma detection using image augmentation by oversampling in nonlinear lower-dimensional embedding manifold. Turk. J. Electr. Eng. Comput. Sci. 2021, 29, 2600–2614.

- Maqsood, S.; Damaševičius, R.; Maskeliūnas, R. Hemorrhage Detection Based on 3D CNN Deep Learning Framework and Feature Fusion for Evaluating Retinal Abnormality in Diabetic Patients. Sensors 2021, 21, 3865.

- Ma, G.; Yue, X. An improved whale optimization algorithm based on multilevel threshold image segmentation using the Otsu method. Eng. Appl. Artif. Intell. 2022, 113, 104960.

- Khan, M.A.; Kadry, S.; Parwekar, P.; Damaševičius, R.; Mehmood, A.; Khan, J.A.; Naqvi, S.R. Human gait analysis for osteoarthritis prediction: A framework of deep learning and kernel extreme learning machine. Complex Intell. Syst. 2021.

- Dhungel, N.; Carneiro, G.; Bradley, A.P. The Automated Learning of Deep Features for Breast Mass Classification from Mammograms. In Medical Image Computing and Computer-Assisted Intervention—MICCAI 2016, Proceedings of the 19th International Conference, Athens, Greece, 17–21 October 2016; Lecture Notes in Computer Science; Ourselin, S., Joskowicz, L., Sabuncu, M.R., Unal, G., Wells, W., Eds.; Springer International Publishing: Cham, Switzerland, 2016; pp. 106–114.

- Khan, M.A.; Alhaisoni, M.; Tariq, U.; Hussain, N.; Majid, A.; Damaševičius, R.; Maskeliūnas, R. COVID-19 Case Recognition from Chest CT Images by Deep Learning, Entropy-Controlled Firefly Optimization, and Parallel Feature Fusion. Sensors 2021, 21, 7286.

- Odusami, M.; Maskeliūnas, R.; Damaševičius, R.; Krilavičius, T. Analysis of Features of Alzheimer’s Disease: Detection of Early Stage from Functional Brain Changes in Magnetic Resonance Images Using a Finetuned ResNet18 Network. Diagnostics 2021, 11, 1071.

- Nawaz, M.; Nazir, T.; Masood, M.; Mehmood, A.; Mahum, R.; Khan, M.A.; Kadry, S.; Thinnukool, O. Analysis of Brain MRI Images Using Improved CornerNet Approach. Diagnostics 2021, 11, 1856.

- Metwally, M.; El-Kenawy, E.S.M.; Khodadadi, N.; Mirjalili, S.; Khodadadi, E.; Abotaleb, M.; Alharbi, A.H.; Abdelhamid, A.A.; Ibrahim, A.; Amer, G.M.; et al. Meta-Heuristic Optimization of LSTM-Based Deep Network for Boosting the Prediction of Monkeypox Cases. Mathematics 2022, 10, 3845.

- AlEisa, H.N.; El-kenawy, E.M.; Alhussan, A.A.; Saber, M.; Abdelhamid, A.A.; Khafaga, D.S. Transfer Learning for Chest X-rays Diagnosis Using Dipper Throated Algorithm. Comput. Mater. Contin. 2022, 73, 2371–2387.

- Abdallah, Y.; Abdelhamid, A.; Elarif, T.; Salem, A.B.M. Intelligent Techniques in Medical Volume Visualization. Procedia Comput. Sci. 2015, 65, 546–555.

- Majid, A.; Khan, M.A.; Nam, Y.; Tariq, U.; Roy, S.; Mostafa, R.R.; Sakr, R.H. COVID19 Classification Using CT Images via Ensembles of Deep Learning Models. Comput. Mater. Contin. 2021, 69, 319–337.

- Sharif, M.I.; Khan, M.A.; Alhussein, M.; Aurangzeb, K.; Raza, M. A decision support system for multimodal brain tumor classification using deep learning. Complex Intell. Syst. 2022, 8, 3007–3020.

- Liu, D.; Liu, Y.; Li, S.; Li, W.; Wang, L. Fusion of Handcrafted and Deep Features for Medical Image Classification. J. Phys. Conf. Ser. 2019, 1345, 022052.

- Alinsaif, S.; Lang, J. 3D shearlet-based descriptors combined with deep features for the classification of Alzheimer’s disease based on MRI data. Comput. Biol. Med. 2021, 138, 104879.

- Khan, M.A.; Muhammad, K.; Sharif, M.; Akram, T.; Albuquerque, V.H.C.D. Multi-Class Skin Lesion Detection and Classification via Teledermatology. IEEE J. Biomed. Health Inform. 2021, 25, 4267–4275.

- Masud, M.; Eldin Rashed, A.E.; Hossain, M.S. Convolutional neural network-based models for diagnosis of breast cancer. Neural Comput. Appl. 2022, 34, 11383–11394.

- Jiménez-Gaona, Y.; Rodríguez-Álvarez, M.J.; Lakshminarayanan, V. Deep-Learning-Based Computer-Aided Systems for Breast Cancer Imaging: A Critical Review. Appl. Sci. 2020, 10, 8298.

- Muhammad, M.; Zeebaree, D.; Brifcani, A.M.A.; Saeed, J.; Zebari, D.A. Region of Interest Segmentation Based on Clustering Techniques for Breast Cancer Ultrasound Images: A Review. J. Appl. Sci. Technol. Trends 2020, 1, 78–91.

- Huang, K.; Zhang, Y.; Cheng, H.D.; Xing, P. Shape-Adaptive Convolutional Operator for Breast Ultrasound Image Segmentation. In Proceedings of the 2021 IEEE International Conference on Multimedia and Expo (ICME), Shenzhen, China, 5–9 July 2021; pp. 1–6.

- Sadad, T.; Hussain, A.; Munir, A.; Habib, M.; Ali Khan, S.; Hussain, S.; Yang, S.; Alawairdhi, M. Identification of Breast Malignancy by Marker-Controlled Watershed Transformation and Hybrid Feature Set for Healthcare. Appl. Sci. 2020, 10, 1900.

- Mishra, A.K.; Roy, P.; Bandyopadhyay, S.; Das, S.K. Breast ultrasound tumour classification: A Machine Learning—Radiomics based approach. Expert Syst. 2021, 38, e12713.

- Hussain, S.; Xi, X.; Ullah, I.; Wu, Y.; Ren, C.; Lianzheng, Z.; Tian, C.; Yin, Y. Contextual Level-Set Method for Breast Tumor Segmentation. IEEE Access 2020, 8, 189343–189353.

- Han, X.; Wang, J.; Zhou, W.; Chang, C.; Ying, S.; Shi, J. Deep Doubly Supervised Transfer Network for Diagnosis of Breast Cancer with Imbalanced Ultrasound Imaging Modalities. In Medical Image Computing and Computer Assisted Intervention—MICCAI 2020, Proceedings of the 23rd International Conference, Lima, Peru, 4–8 October 2020; Lecture Notes in Computer Science; Martel, A.L., Abolmaesumi, P., Stoyanov, D., Mateus, D., Zuluaga, M.A., Zhou, S.K., Racoceanu, D., Joskowicz, L., Eds.; Springer International Publishing: Cham, Switzerland, 2020; pp. 141–149.

- Moon, W.K.; Lee, Y.W.; Ke, H.H.; Lee, S.H.; Huang, C.S.; Chang, R.F. Computer-aided diagnosis of breast ultrasound images using ensemble learning from convolutional neural networks. Comput. Methods Programs Biomed. 2020, 190, 105361.

- Byra, M.; Jarosik, P.; Szubert, A.; Galperin, M.; Ojeda-Fournier, H.; Olson, L.; O’Boyle, M.; Comstock, C.; Andre, M. Breast mass segmentation in ultrasound with selective kernel U-Net convolutional neural network. Biomed. Signal Process. Control 2020, 61, 102027.

- Kadry, S.; Damaševičius, R.; Taniar, D.; Rajinikanth, V.; Lawal, I.A. Extraction of Tumour in Breast MRI using Joint Thresholding and Segmentation—A Study. In Proceedings of the 2021 Seventh International conference on Bio Signals, Images, and Instrumentation (ICBSII), Chennai, India, 25–27 March 2021; pp. 1–5.

- Lahoura, V.; Singh, H.; Aggarwal, A.; Sharma, B.; Mohammed, M.A.; Damaševičius, R.; Kadry, S.; Cengiz, K. Cloud Computing-Based Framework for Breast Cancer Diagnosis Using Extreme Learning Machine. Diagnostics 2021, 11, 241.

- Maqsood, S.; Damasevicius, R.; Shah, F.M. An Efficient Approach for the Detection of Brain Tumor Using Fuzzy Logic and U-NET CNN Classification. In Computational Science and Its Applications—ICCSA 2021, Proceedings of the 21st International Conference, Cagliari, Italy, 13–16 September 2021; Lecture Notes in Computer Science; Gervasi, O., Murgante, B., Misra, S., Garau, C., Blečić, I., Taniar, D., Apduhan, B.O., Rocha, A.M.A.C., Tarantino, E., Torre, C.M., Eds.; Springer International Publishing: Cham, Switzerland, 2021; pp. 105–118.

- Rajinikanth, V.; Kadry, S.; Taniar, D.; Damaševičius, R.; Rauf, H.T. Breast-Cancer Detection using Thermal Images with Marine-Predators-Algorithm Selected Features. In Proceedings of the 2021 Seventh International conference on Bio Signals, Images, and Instrumentation (ICBSII), Chennai, India, 25–27 March 2021; pp. 1–6.

- Al-Dhabyani, W.; Gomaa, M.; Khaled, H.; Fahmy, A. Dataset of breast ultrasound images. Data Brief 2020, 28, 104863.

- Khan, M.A.; Sharif, M.I.; Raza, M.; Anjum, A.; Saba, T.; Shad, S.A. Skin lesion segmentation and classification: A unified framework of deep neural network features fusion and selection. Expert Syst. 2022, 39, e12497.

- El-Kenawy, E.S.M.; Mirjalili, S.; Alassery, F.; Zhang, Y.D.; Eid, M.M.; El-Mashad, S.Y.; Aloyaydi, B.A.; Ibrahim, A.; Abdelhamid, A.A. Novel Meta-Heuristic Algorithm for Feature Selection, Unconstrained Functions and Engineering Problems. IEEE Access 2022, 10, 40536–40555.

- Samee, N.A.; El-Kenawy, E.S.M.; Atteia, G.; Jamjoom, M.M.; Ibrahim, A.; Abdelhamid, A.A.; El-Attar, N.E.; Gaber, T.; Slowik, A.; Shams, M.Y. Metaheuristic Optimization Through Deep Learning Classification of COVID-19 in Chest X-Ray Images. Comput. Mater. Contin. 2022, 73, 4193–4210.

- Khafaga, D.S.; Alhussan, A.A.; El-Kenawy, E.S.M.; Ibrahim, A.; Metwally, M.; Abdelhamid, A.A. Solving Optimization Problems of Metamaterial and Double T-Shape Antennas Using Advanced Meta-Heuristics Algorithms. IEEE Access 2022, 10, 74449–74471.

- Abdelaziz, A.; Abdelhamid, S.R.A. Robust Prediction of the Bandwidth of Metamaterial Antenna Using Deep Learning. Comput. Mater. Contin. 2022, 72, 2305–2321.

- El-Kenawy, E.S.M.; Mirjalili, S.; Abdelhamid, A.A.; Ibrahim, A.; Khodadadi, N.; Metwally, M. Meta-Heuristic Optimization and Keystroke Dynamics for Authentication of Smartphone Users. Mathematics 2022, 10, 2912.

- El-kenawy, E.S.M.; Albalawi, F.; Ward, S.A.; Ghoneim, S.S.M.; Metwally, M.; Abdelhamid, A.A.; Bailek, N.; Ibrahim, A. Feature Selection and Classification of Transformer Faults Based on Novel Meta-Heuristic Algorithm. Mathematics 2022, 10, 3144.

- Takieldeen, A.E.; El-kenawy, E.S.M.; Hadwan, M.; Zaki, R.M. Dipper Throated Optimization Algorithm for Unconstrained Function and Feature Selection. Comput. Mater. Contin. 2022, 72, 1465–1481.

- Awange, J.L.; Paláncz, B.; Lewis, R.H.; Völgyesi, L. Particle Swarm Optimization. In Mathematical Geosciences: Hybrid Symbolic-Numeric Methods; Awange, J.L., Paláncz, B., Lewis, R.H., Völgyesi, L., Eds.; Springer International Publishing: Cham, Switzerland, 2018; pp. 167–184.

- Mirjalili, S.; Lewis, A. The Whale Optimization Algorithm. Adv. Eng. Softw. 2016, 95, 51–67.

- Mirjalili, S.; Mirjalili, S.M.; Lewis, A. Grey Wolf Optimizer. Adv. Eng. Softw. 2014, 69, 46–61.

- Samareh Moosavi, S.H.; Khatibi Bardsiri, V. Satin bowerbird optimizer: A new optimization algorithm to optimize ANFIS for software development effort estimation. Eng. Appl. Artif. Intell. 2017, 60, 1–15.

- Immanuel, S.D.; Chakraborty, U.K. Genetic Algorithm: An Approach on Optimization. In Proceedings of the 2019 International Conference on Communication and Electronics Systems (ICCES), Coimbatore, India, 17–19 July 2019; pp. 701–708.

- Mirjalili, S.; Mirjalili, S.M.; Hatamlou, A. Multi-Verse Optimizer: A nature-inspired algorithm for global optimization. Neural Comput. Appl. 2016, 27, 495–513.

- Ariyaratne, M.; Fernando, T. A Comprehensive Review of the Firefly Algorithms for Data Clustering. In Advances in Swarm Intelligence: Variations and Adaptations for Optimization Problems; Biswas, A., Kalayci, C.B., Mirjalili, S., Eds.; Springer International Publishing: Cham, Switzerland, 2023; pp. 217–239.

- Mirjalili, S. SCA: A Sine Cosine Algorithm for solving optimization problems. Knowl.-Based Syst. 2016, 96, 120–133.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).