1. Introduction

Over the past decade, wireless sensor networks (WSNs) have been used in many fields, such as urban management, environmental monitoring, disaster prevention, and military applications [1,2,3,4]. A WSN is a self-organizing distributed network system made up of a large number of tiny sensors, and the sensors are typically heterogeneous. In WSN applications, coverage and connectivity are important indicators for determining whether real-time data can be provided to users through the collaboration of sensors. However, the traditional WSN coverage approach deploys sensors at random, which results in insufficient coverage and causes communication conflicts [5,6]. Existing research on coverage optimization for heterogeneous wireless sensor networks (HWSNs) usually considers coverage, but connectivity is frequently overlooked. Therefore, this paper studies how to improve both the coverage and the connectivity of HWSNs.

The swarm intelligence (SI) optimization algorithm is a biologically inspired method and one of the most successful strategies for solving optimization problems [7,8]. It is characterized by a fast search speed and strong search capability, and it avoids complex theoretical derivation. Examples include particle swarm optimization (PSO) [9], the bald eagle search optimization algorithm (BES) [10], cuckoo search (CS) [11], the sparrow search algorithm (SSA) [12], northern goshawk optimization (NGO) [13], the mayfly optimization algorithm (MA) [14], the gray wolf optimization algorithm (GWO) [15], Harris hawks optimization (HHO) [16], the coot optimization algorithm (COOT) [17], and the wild horse optimizer (WHO) [18].

The WHO was proposed by Naruei et al. in 2021 as a method for solving algebraic optimization problems. Its optimization performance has significant advantages over the majority of classical algorithms, and it has been widely used to solve various engineering problems. In 2022, Milovanović et al. applied the WHO to multi-objective energy management in microgrids [19]. Ali et al. applied the WHO to the frequency regulation of a hybrid multi-area power system with a new type of combined fuzzy fractional-order PI and TID controllers [20]. Furthermore, many researchers have modified the WHO to enhance its optimization capability. In 2022, Li et al. proposed a hybrid multi-strategy improved wild horse optimizer, which improves the algorithm’s convergence speed, accuracy, and stability [21]. Ali et al. proposed an improved wild horse optimization algorithm for reliability-based optimal DG planning of radial distribution networks; it performs well in terms of exploration–exploitation balance and convergence speed [22].

In 2017, Tanyildizi et al. proposed the Gold-SA algorithm [23], which is based on the sine trigonometric function. This algorithm uses a golden sine operator to condense the solution space, which helps it avoid local optima and approach the global optimum quickly. Additionally, the algorithm contains few parameters and algorithm-dependent operators, so it can be integrated well with other algorithms. In 2022, Wang et al. proposed an improved crystal structure algorithm for engineering optimization problems. This algorithm uses the relationship between the golden sine operator and the unit circle to make the exploration of the search space more comprehensive, which effectively speeds up convergence [24]. Yuan et al. proposed a hybrid golden jackal optimization and golden sine algorithm with dynamic lens imaging learning for global optimization problems; the golden sine algorithm is integrated to improve the search ability and efficiency of golden jackal optimization [25]. In 2023, Jia et al. proposed a fusion swarm-intelligence-based decision optimization method for energy-efficient train-stopping schemes, which incorporates the golden sine strategy to improve the performance of the algorithm [26].

In recent years, many scholars have applied SI optimization algorithms to WSN coverage optimization, and fruitful results have been achieved with the continuous development of SI. In 2013, Huang et al. proposed a coverage optimization method for WSNs based on the artificial fish swarm algorithm (AFSA). Simulation results show that the AFSA increases the sensors’ coverage in a WSN [27]. In 2015, Zhang proposed a hybrid particle swarm and firefly algorithm, with particle swarm as the main body and firefly for local search, thus improving the sensor coverage [28]. In 2016, Wu et al. proposed a coverage optimization method based on an improved adaptive PSO. This approach first introduces an evolution factor and an aggregation factor to improve the inertia weights, and then introduces a collision resilience strategy during each iteration to keep the particle population diverse [29]. In 2018, Lu et al. proposed a WSN coverage optimization technique based on the firefly algorithm (FA) that swaps the positions of two sensors at a time to increase network coverage [30]. In 2019, Nguyen et al. proposed a genetic algorithm for coverage optimization that addresses several drawbacks of existing metaheuristic algorithms [31].

Although these SI optimization algorithms have produced many positive results, there is still room for further research into algorithm performance and the optimization of WSN coverage. This paper proposes an improved wild horse optimizer (IWHO) to optimize sensor coverage and connectivity. The main contributions are the following:

We improve the WHO algorithm in order to achieve better optimization. The SPM chaotic map is used to improve the population’s quality. The WHO and Golden-SA are hybridized to improve the WHO’s accuracy and arrive at faster convergence. The Cauchy variation and opposition-based learning strategies are also used to avoid falling into a local optimum and broaden the search space.

We evaluate the IWHO on 23 benchmark test functions and compare its performance with that of seven other algorithms. The findings reveal that the IWHO has a stronger optimization performance than the others. We then use the IWHO to optimize the coverage of a homogeneous WSN and compare its performance with five other algorithms and four improved algorithms proposed in the literature. The experimental data demonstrate that the IWHO can optimize WSN coverage more effectively.

The HWSN coverage problem is optimized using the IWHO, which significantly increases the coverage and connectivity ratios. When obstacles are present, the same high level of sensor coverage and connectivity is attained.

2. WSN Coverage Model

Suppose that the monitoring area is a two-dimensional region with an area of $M \times N$. The $n$ sensors are randomly arranged in this area and can be expressed as $U = \{u_1, u_2, \ldots, u_n\}$. Assume that the sensors are heterogeneous, with different sensing radii $R_s$ and communication radii $R_c$, where $R_c \geq 2R_s$. Every sensor can move, and its position can be updated instantly. Each sensor covers a circular area centered on itself with the sensing radius $R_s$ as its radius. If the coordinates of an arbitrary sensor $U_i$ are $(x_i, y_i)$ and the coordinates of the target $O_j$ to be detected are $(x_j, y_j)$, the Euclidean distance from $U_i$ to $O_j$ is expressed as:

$$d(U_i, O_j) = \sqrt{(x_i - x_j)^2 + (y_i - y_j)^2} \tag{1}$$

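The probability that the target $O_j$ is perceived by sensor $U_i$ is denoted by $p(U_i, O_j)$. The target is covered, and the probability is 1, when the Euclidean distance $d(U_i, O_j)$ between them does not exceed $R_s$. Otherwise, the target is not covered and the probability is 0. The expression is as follows:

$$p(U_i, O_j) = \begin{cases} 1, & d(U_i, O_j) \le R_s \\ 0, & \text{otherwise} \end{cases} \tag{2}$$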

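The joint perception probability of all sensors for the target $O_j$ is defined as follows:

$$P(U, O_j) = 1 - \prod_{i=1}^{n} \left(1 - p(U_i, O_j)\right) \tag{3}$$

The coverage ratio is an important indicator of the HWSN deployment problem. With the monitoring area discretized into $M \times N$ target points, the coverage ratio is calculated as follows:

$$Cov = \frac{\sum_{j=1}^{M \times N} P(U, O_j)}{M \times N} \tag{4}$$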

The utilization of sensor coverage is evaluated using coverage efficiency. Higher coverage efficiency means achieving the same coverage area with fewer sensors. It is determined by dividing the region’s effective coverage range by the sum of the coverage ranges of all sensors. The coverage efficiency $CE$ is calculated as shown in the following equation, where $A_i$ denotes the area covered by the $i$-th sensor:

$$CE = \frac{\bigcup_{i=1}^{n} A_i}{\sum_{i=1}^{n} A_i} \tag{5}$$
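As a rough illustration of the coverage ratio and coverage efficiency described above, the following sketch evaluates both on a regular grid of target points under the binary perception model. The function names, the `(x, y, rs)` sensor tuples, and the grid spacing `step` are assumptions made for this example and are not taken from the paper.

```python
import math

def coverage_ratio(sensors, width, height, step=1.0):
    """Fraction of grid points covered by at least one sensor (binary model).

    sensors: list of (x, y, rs) tuples; `step` is an assumed grid spacing.
    """
    nx = int(round(width / step)) + 1
    ny = int(round(height / step)) + 1
    covered = 0
    for ix in range(nx):
        for iy in range(ny):
            px, py = ix * step, iy * step
            # A point is covered if any sensor lies within its sensing radius.
            if any(math.hypot(px - x, py - y) <= rs for x, y, rs in sensors):
                covered += 1
    return covered / (nx * ny)

def coverage_efficiency(sensors, width, height, step=1.0):
    """Effective covered area divided by the summed disc areas of all sensors."""
    effective = coverage_ratio(sensors, width, height, step) * width * height
    total = sum(math.pi * rs ** 2 for _, _, rs in sensors)
    return effective / total
```

Two sensors placed at the same position cover no more area than one, so the coverage ratio stays the same while the coverage efficiency halves, which is the redundancy that the efficiency measure is meant to expose.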

In the coverage problem, the connectivity ratio is equally as important as the coverage among sensors. To ensure the reliability of network connectivity, each sensor should be able to connect with at least two or more sensors. If the separation between two sensors is within the

Rc, it is known that the two sensors can be connected to each other. The connectivity ratio between sensor

Oi and target detection sensor

Oj (

i ≠ j) can be defined as:

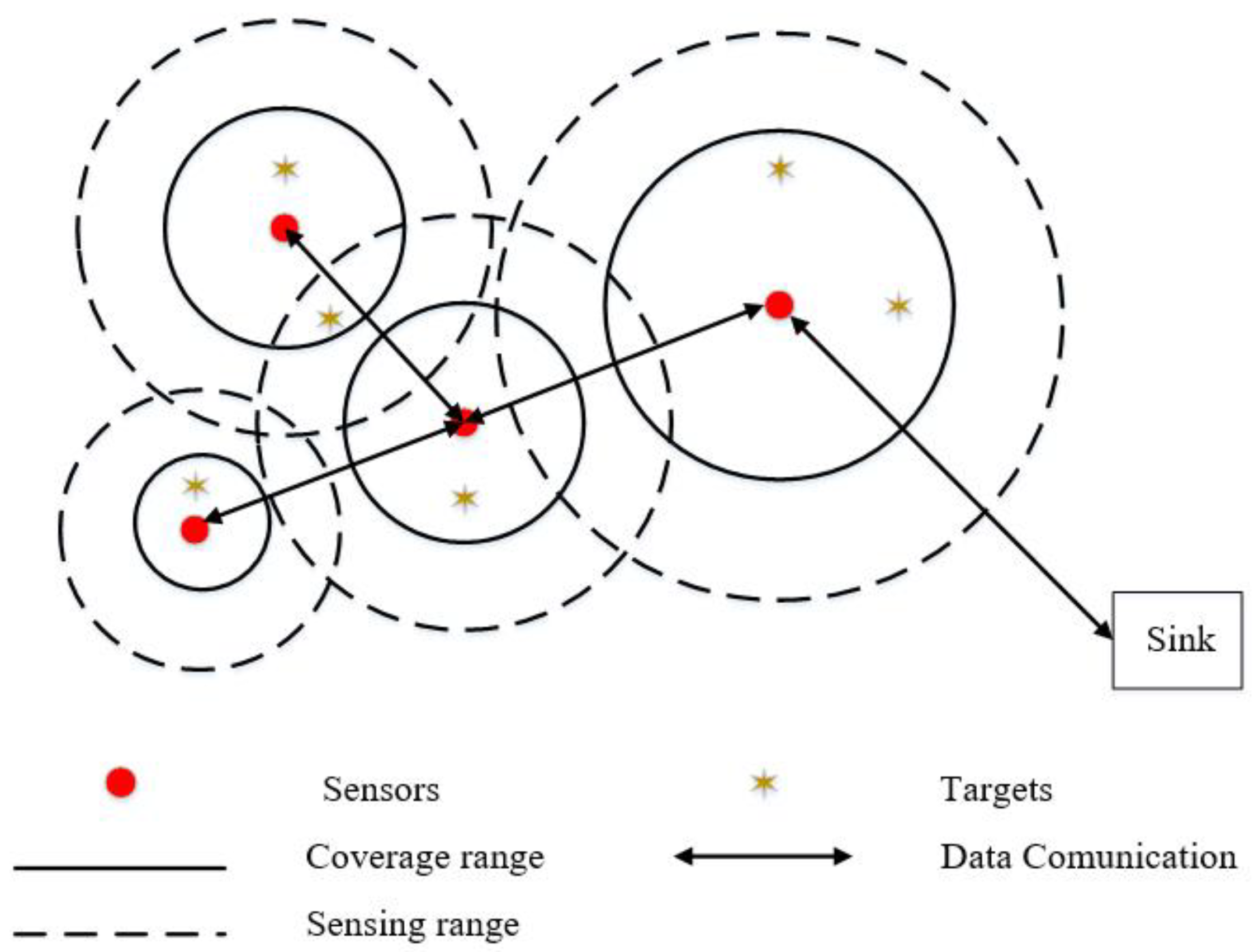

As shown in

Figure 1, a network is connected if there is a path between any two sensors. The connectivity ratio is the proportion of connected paths to the maximum connected paths between sensors. The expression is as follows:

The amount of paths between any two nodes is

n(

n − 1)/2. Therefore, according to sensor coverage and connection, the objective function is:

After several experiments, the values of w1 and w2, respectively were 0.9 and 0.1.
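The connectivity ratio and the weighted objective can be sketched as follows. This is a minimal example under two assumptions: all sensors share a single communication radius `rc`, and the objective is a plain weighted sum of the coverage and connectivity ratios with the weights 0.9 and 0.1 quoted above. The function names are hypothetical.

```python
import math
from itertools import combinations

def connectivity_ratio(positions, rc):
    """Share of sensor pairs whose distance is within the communication radius.

    positions: list of (x, y) tuples; a single shared rc is an assumption.
    """
    n = len(positions)
    if n < 2:
        return 0.0
    linked = sum(
        1
        for (x1, y1), (x2, y2) in combinations(positions, 2)
        if math.hypot(x1 - x2, y1 - y2) <= rc
    )
    # n(n - 1)/2 is the number of distinct sensor pairs.
    return linked / (n * (n - 1) / 2)

def objective(cov, con, w1=0.9, w2=0.1):
    """Assumed weighted-sum objective combining coverage and connectivity."""
    return w1 * cov + w2 * con
```

For three sensors on a line at x = 0, 1, and 10 with `rc = 2`, only one of the three pairs is linked, so the ratio is 1/3.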

3. Wild Horse Optimizer

The social behavior of wild horses served as the model for the WHO. A wild horse population consists of stallions and the rest of the herd. The WHO is designed to solve various optimization problems based on the group behavior, grazing, mating, dominance, and leadership of the stallions and herds in the wild horse population.

The population members are first distributed at random throughout the search range, and the population is then divided into groups. If there are N members overall, the number of stallions is G = N × PS, where PS is the percentage of stallions in the herd and serves as a control parameter of the algorithm. The group leaders are initially chosen at random; as the algorithm progresses, the leader of each group is the member with the best fitness.

The stallion is regarded as the center of the grazing area, and the group members move around this center while grazing. Equation (9) models the grazing behavior, in which members of the group move and search with varying radii around the leader:

where Stallion is the leader’s position and $R$ is a random number within [−2, 2]; $Z$ is calculated as:

where $P$ is a vector consisting of 0 and 1. The random numbers $R_1$, $R_2$, and $R_3$ are uniformly distributed in [0, 1], and $IDX$ returns the indices of the random vector $R_1$ that satisfy the condition ($P == 0$). During algorithm execution, $TDR$ declines, starting at 1 and eventually reaching 0. The expression is as follows:

$$TDR = 1 - \frac{iter}{maxiter} \tag{11}$$

where $iter$ is the current iteration number and $maxiter$ is the maximum number of iterations.
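The adaptive quantities in this section can be sketched in a few lines. The linear decay of TDR follows the description above. The forms used for Z (the shared scalar R2 where P == 0, the vector R3 elsewhere) and for the grazing move, 2Z cos(2πRZ)(Stallion − X) + Stallion, are assumptions based on the form commonly cited from the original WHO paper; the function names are hypothetical.

```python
import math
import random

def tdr(iteration, max_iter):
    """Linearly decreasing adaptive parameter: 1 at the start, 0 at the end."""
    return 1.0 - iteration / max_iter

def adaptive_z(dim, tdr_value, rng=random):
    """Per-dimension Z (assumed form): r2 where P == 0, r3 where P == 1."""
    r2 = rng.random()                    # one scalar shared by all dimensions
    z = []
    for _ in range(dim):
        r1, r3 = rng.random(), rng.random()
        p = r1 < tdr_value               # P is 1 where r1 < TDR
        z.append(r3 if p else r2)        # IDX marks the entries with P == 0
    return z

def graze(foal, stallion, z, rng=random):
    """Assumed grazing move of a foal around its stallion."""
    r = rng.uniform(-2.0, 2.0)           # R is a random number in [-2, 2]
    return [
        2 * zj * math.cos(2 * math.pi * r * zj) * (s - x) + s
        for x, s, zj in zip(foal, stallion, z)
    ]
```

A foal that already sits at the stallion's position stays there, because the step is proportional to the distance between the two.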

To simulate the behavior of horses leaving the group and mating, Equation (12) uses a Crossover operator of the mean type:

In nature, leaders mostly guide groups to appropriate living environments. If another population dominates the habitat, then that population must leave it. Equation (13) allows calculation of the location of the next habitat searched by the leader in each population:

where $WH$ is the current location of the most suitable habitat, and $R$, $RZ$, and $Z$ are defined as before.

As mentioned in the population initialization phase, the two positions are switched if a group member has a greater fitness value than the leader:

4. Improved Wild Horse Optimizer

To address the issues of the original algorithm, including the difficulty of escaping local optima, the lack of accuracy during convergence, and the slow convergence speed, three strategies are introduced in this study to enhance the WHO algorithm. They improve the initial population, boost optimization speed and accuracy, strengthen the perturbation of the optimal solution, and help the algorithm escape local optima.

4.1. SPM Chaotic Mapping Initialization Population

A diversified initial population benefits the optimization ability. In the original WHO algorithm, the rand function is used to initialize the population at random, which results in an uneven distribution and overlapping individuals, and the population diversity decreases rapidly in later iterations. Chaos is a unique and widespread form of aperiodic motion in nonlinear systems. Because of its ergodic and stochastic nature, it is widely used in swarm intelligence algorithms to improve population diversity. In this paper, we introduce the SPM chaotic mapping model, which has superior chaotic and ergodic properties [32]. The expression is shown as follows:

Scholars choose different chaotic mapping models to initialize the population of swarm intelligence algorithms. This paper selects the widely used Logistic and Sine mappings and compares them with the SPM mapping, using the same initial value and 2000 iterations.

Figure 2 shows the histograms of the three chaotic mappings, where the horizontal coordinate is the chaotic value and the vertical coordinate is the frequency of that chaotic value. The results show that the SPM mapping has better chaotic behavior and ergodicity. The SPM mapping was therefore chosen to enhance population diversity and make the population distribution more uniform.

Figure 3 shows the population distribution of different chaotic mappings when the population size is 2000. The Logistic and Sine mappings show many individuals overlapping at the boundary, whereas the SPM mapping has a more uniform distribution.
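The clustering of the Logistic and Sine mappings near the boundaries can be reproduced with a short experiment. The sketch below iterates the two maps in their standard textbook forms (the control parameters `mu = 4` and `a = 1` and the initial value 0.3 are assumptions) and bins the values into a histogram. The SPM mapping is not implemented here because its expression is not reproduced in this text.

```python
import math

def logistic_map(x0, steps, mu=4.0):
    """Iterate x <- mu * x * (1 - x) and return the visited values."""
    xs, x = [], x0
    for _ in range(steps):
        x = mu * x * (1.0 - x)
        xs.append(x)
    return xs

def sine_map(x0, steps, a=1.0):
    """Iterate x <- a * sin(pi * x) and return the visited values."""
    xs, x = [], x0
    for _ in range(steps):
        x = a * math.sin(math.pi * x)
        xs.append(x)
    return xs

def histogram(values, bins=10):
    """Count values in [0, 1] per equal-width bin."""
    counts = [0] * bins
    for v in values:
        counts[min(int(v * bins), bins - 1)] += 1
    return counts

logistic_counts = histogram(logistic_map(0.3, 2000))
sine_counts = histogram(sine_map(0.3, 2000))
```

With these settings, the outermost bins of `logistic_counts` hold noticeably more values than the middle bins, which matches the boundary overlap described for the Logistic mapping.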

4.2. Golden Sine Algorithm

To overcome the disadvantages of the stallion position update method in the WHO algorithm, the Golden-SA algorithm is used in this research. The stallion position is updated using the golden sine operator to condense the algorithm’s solution space and enhance its search capability. The expression is shown as follows:

where $r_1$ and $r_2$ are random numbers in [0, 2π] and [0, π], respectively. In the following iteration, $r_1$ determines the distance that individual $i$ travels and $r_2$ determines the direction in which individual $i$ travels. $x_1$ and $x_2$ are the golden partition coefficients. During the iteration, they are used to condense the search space and direct the solution toward the global optimum. They are computed as shown below:

where $a$ and $b$ are the initial values of the golden section search, and $\tau$ is the golden ratio.
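A golden sine step can be sketched as follows. Several details here are assumptions rather than the paper's exact expressions: τ is taken as (√5 − 1)/2, the coefficients are the standard golden-section interior points of the interval between a and b, and the update rule is the commonly cited Gold-SA form X·|sin r1| − r2·sin r1·|x1·Best − x2·X|. The defaults a = π and b = −π are the values listed in the parameter line of the pseudo code in Section 4.4.

```python
import math
import random

TAU = (math.sqrt(5) - 1) / 2             # assumed golden ratio value, about 0.618

def golden_coefficients(a=math.pi, b=-math.pi):
    """Standard golden-section interior points between a and b (assumed form)."""
    x1 = a + (1 - TAU) * (b - a)
    x2 = a + TAU * (b - a)
    return x1, x2

def golden_sine_step(position, best, x1, x2, rng=random):
    """One golden sine update of `position` toward `best` (assumed form)."""
    r1 = rng.uniform(0.0, 2.0 * math.pi)  # controls the travel distance
    r2 = rng.uniform(0.0, math.pi)        # controls the travel direction
    return [
        x * abs(math.sin(r1)) - r2 * math.sin(r1) * abs(x1 * bj - x2 * x)
        for x, bj in zip(position, best)
    ]
```

In a hybrid such as the one described here, `best` would be the position of the best habitat found so far and `position` the stallion being updated.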

4.3. Cauchy Variation and Opposition-Based Learning

The WHO algorithm does not perturb the optimal solution after each iteration, which can leave the solution trapped in a local optimum. To address this issue, we employ Cauchy variation and opposition-based learning strategies to perturb the solution. This was inspired by Mao et al., who proposed a chaotic squirrel search algorithm with a mixture of stochastic opposition-based learning and Cauchy variation in 2021 [33].

4.3.1. Cauchy Variation

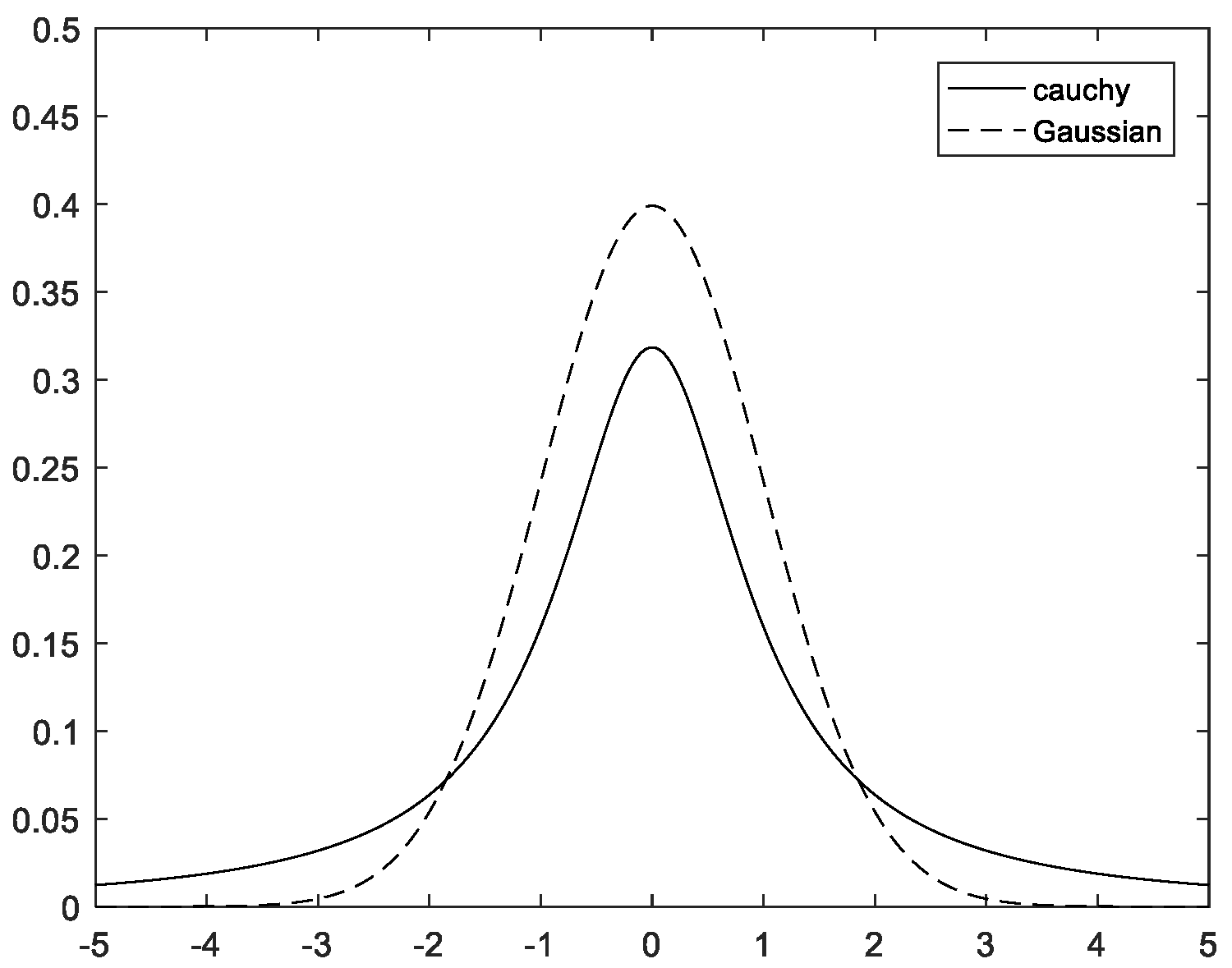

The Gaussian and Cauchy distributions are two similar classical probability distributions, and Gaussian variation has also been used by scholars to improve algorithms. Figure 4 displays the probability density function curves of both.

As seen in Figure 4, the Cauchy distribution is longer and flatter at both ends than the Gaussian distribution. Its tails approach 0 more slowly, and its peak near the origin is lower. As a result, Cauchy variation has better perturbation properties than Gaussian variation, and introducing the Cauchy variation strategy can expand the search space and improve the perturbation ability. The expression is as follows:
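The heavier tails of the Cauchy distribution can be checked numerically. The sketch below draws standard Cauchy samples through the inverse cumulative distribution function and compares how often samples fall more than three units from the origin with the same fraction for standard Gaussian samples. The mutation form `best + best * Cauchy(0, 1)` is an assumption about how such a variation is commonly applied, and the function names are hypothetical.

```python
import math
import random

def cauchy_sample(rng=random):
    """Standard Cauchy draw via the inverse CDF: tan(pi * (u - 0.5))."""
    return math.tan(math.pi * (rng.random() - 0.5))

def tail_fraction(samples, threshold=3.0):
    """Fraction of samples whose absolute value exceeds the threshold."""
    return sum(1 for s in samples if abs(s) > threshold) / len(samples)

def cauchy_mutation(best, rng=random):
    """Assumed mutation form: best + best * Cauchy(0, 1), per dimension."""
    return [b + b * cauchy_sample(rng) for b in best]

rng = random.Random(42)
cauchy_tail = tail_fraction([cauchy_sample(rng) for _ in range(20000)])
gauss_tail = tail_fraction([rng.gauss(0.0, 1.0) for _ in range(20000)])
```

For the standard Cauchy distribution the theoretical tail probability beyond 3 is about 0.20, against roughly 0.003 for the standard Gaussian, so a Cauchy perturbation produces large jumps far more often.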

4.3.2. Opposition-Based Learning

By building the reverse solution from the existing solution, the opposition-based learning strategy can broaden the solution space of algorithms. To determine which solution is preferable, the existing solution is compared with the reverse solution. The expression for incorporating the opposition-based learning strategy into the WHO algorithm is as follows:

where $WH_{back}$ is the reverse solution to the stallion’s optimal position in the $l$-th generation, rand is a random matrix whose entries follow the standard uniform distribution on (0, 1), the upper and lower boundaries are denoted by $ub$ and $lb$, respectively, and $b_1$ denotes the information exchange control parameter.
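A reverse solution of this kind can be sketched as follows. The random opposition form `ub + rand * (lb - WH)` is an assumption chosen to be consistent with the symbols defined above; the information exchange step controlled by b1 is left out because its expression is not reproduced in this text. The function names and the sphere cost function are hypothetical.

```python
import random

def reverse_solution(position, lb, ub, rng=random):
    """Assumed random opposition: ub + rand * (lb - x), per dimension."""
    return [u + rng.random() * (l - x) for x, l, u in zip(position, lb, ub)]

def keep_better(current, candidate, cost):
    """Greedy selection: keep whichever solution has the lower cost."""
    return candidate if cost(candidate) < cost(current) else current

def sphere(x):
    """Simple illustrative cost function (sum of squares)."""
    return sum(v * v for v in x)

current = [2.0, -3.0]
candidate = reverse_solution(current, [-5.0, -5.0], [5.0, 5.0], random.Random(7))
selected = keep_better(current, candidate, sphere)
```

With this form, the reverse solution of a point inside the bounds also lies inside the bounds, so no extra boundary handling is needed before the comparison.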

4.3.3. A Dynamic Selection Probability

This paper sets a dynamic selection probability $P_z$ to choose the more appropriate strategy for updating the stallion position. $P_z$ is given as follows:

$P_z$ is compared with a random number rand in (0, 1). If $P_z$ > rand, the opposition-based learning strategy is applied. If $P_z$ < rand, the Cauchy variation strategy is used to perturb the current stallion position.

4.4. The Pseudo Code of IWHO

    Initialize the first population of horses using the SPM chaotic sequence
    Input IWHO parameters: PC = 0.13, PS = 0.1, a = π, b = −π
    Calculate the fitness
    Create foal groups and select stallions
    Find the best horse
    While the end criterion is not satisfied
        Calculate TDR using Equation (11)
        For number of stallions
            Calculate Z using Equation (10)
            For number of foals of any group
                If rand > PC
                    Update the position of the foal using Equation (9)
                Else
                    Update the position of the foal using Equation (12)
                End
            End
            Update the position of the StallionGi using Equation (16)
            If cost (StallionGi) < cost (Stallion)
                Stallion = StallionGi
            End
            Sort foals of group by cost
            Select foal with minimum cost
            If cost (foal) < cost (Stallion)
                Exchange foal and Stallion position using Equation (14)
            End
            Calculate Pz using Equation (20)
            If Pz < rand
                Update the position of Stallion using Equation (19)
            Else
                Update the position of Stallion using Equation (18)
            End
        End
        Update optimum
    End

4.5. Time Complexity Analysis

Time complexity is a significant factor in judging an algorithm’s quality and shows how efficiently it operates. The time complexity of the WHO algorithm can be represented as O(N × D × L), where N is the population size, D is the dimension of the search space, and L is the maximum number of iterations. The time complexity of the IWHO algorithm is analyzed as follows:

In conclusion, the IWHO has the same time complexity as the WHO, and the three improvement techniques do not increase the algorithm’s time complexity.

5. IWHO Algorithm-Based Coverage Optimization Design

The process of finding a suitable habitat for a horse herd is analogous to the process of obtaining the optimal coverage of sensors, and the position of the stallion represents the coordinates covered by the sensors. Using the same number of sensors to cover a bigger area while maintaining effective communication is the aim of WSN optimization coverage based on the IWHO. These are the steps:

Step 1: Enter the size of the area to be detected by the WSN, the number of sensors, sensing radius, communication radius, and the IWHO algorithm’s settings;

Step 2: The population is initialized according to Equation (15), where each individual represents a coverage scheme. At this step, the sensors are dispersed randomly around the monitoring region, and Equation (8) is used to determine the initial coverage and connectivity;

Step 3: Update the positions of the stallions and foals, and calculate the corresponding fitness. Update the coverage ratio and connectivity ratio according to Equation (8), and find the optimal sensor locations;

Step 4: Create a new solution by perturbing the optimal solution through dynamic probabilistic selection of either the Cauchy variation or the opposition-based learning strategy;

Step 5: If the termination condition is met, exit the loop and output the best sensor coverage scheme.

6. Simulation Experiments and Analysis

6.1. IWHO Algorithm Performance Test Analysis

6.1.1. Simulation Test Environment

The environment for this simulation test was Windows 10 Professional (64-bit), an Intel(R) Core(TM) i5-4210H CPU @ 2.90 GHz, and 8 GB of RAM. The simulation software was MATLAB 2016a.

6.1.2. Comparison Objects and Parameter Settings

In this paper, the WHO, SSA, NGO, MA, PSO, COOT, GWO, and IWHO algorithms were selected for comparison. To make the comparison fair, every algorithm was given the same budget of 30,000 fitness evaluations. The parameters were set as shown in Table 1.

In GWO, α represents the control parameter of a grey wolf when hunting prey. In SSA, ST represents the alarm value, PD represents the number of producers, and SD represents the number of sparrows that perceive danger. In MA, g represents the inertia weight, a1 represents the personal learning coefficient, and a2 and a3 represent the global learning coefficients. In PSO, c1 and c2 represent the learning coefficients, and wmin and wmax represent the lower and upper limits of the inertia weight. In COOT, R, R1 and R2 are random vectors along the dimensions of the problem.

6.1.3. Benchmark Functions Test

To verify the IWHO algorithm’s capacity for optimization, 23 benchmark functions were chosen for simulation in this study. There are three categories of test functions: F1–F7, listed in Table 2, are single-peak test functions; F8–F13, listed in Table 3, are multi-peak test functions; and F14–F23, listed in Table 4, are fixed-dimension test functions. The single-peak test functions are characterized by a single extreme value and are used to test the convergence speed and convergence accuracy of the IWHO. The multi-peak test functions are characterized by multiple local extremes and are used to evaluate the IWHO algorithm’s local and global search capabilities.

6.1.4. Simulation Test Results

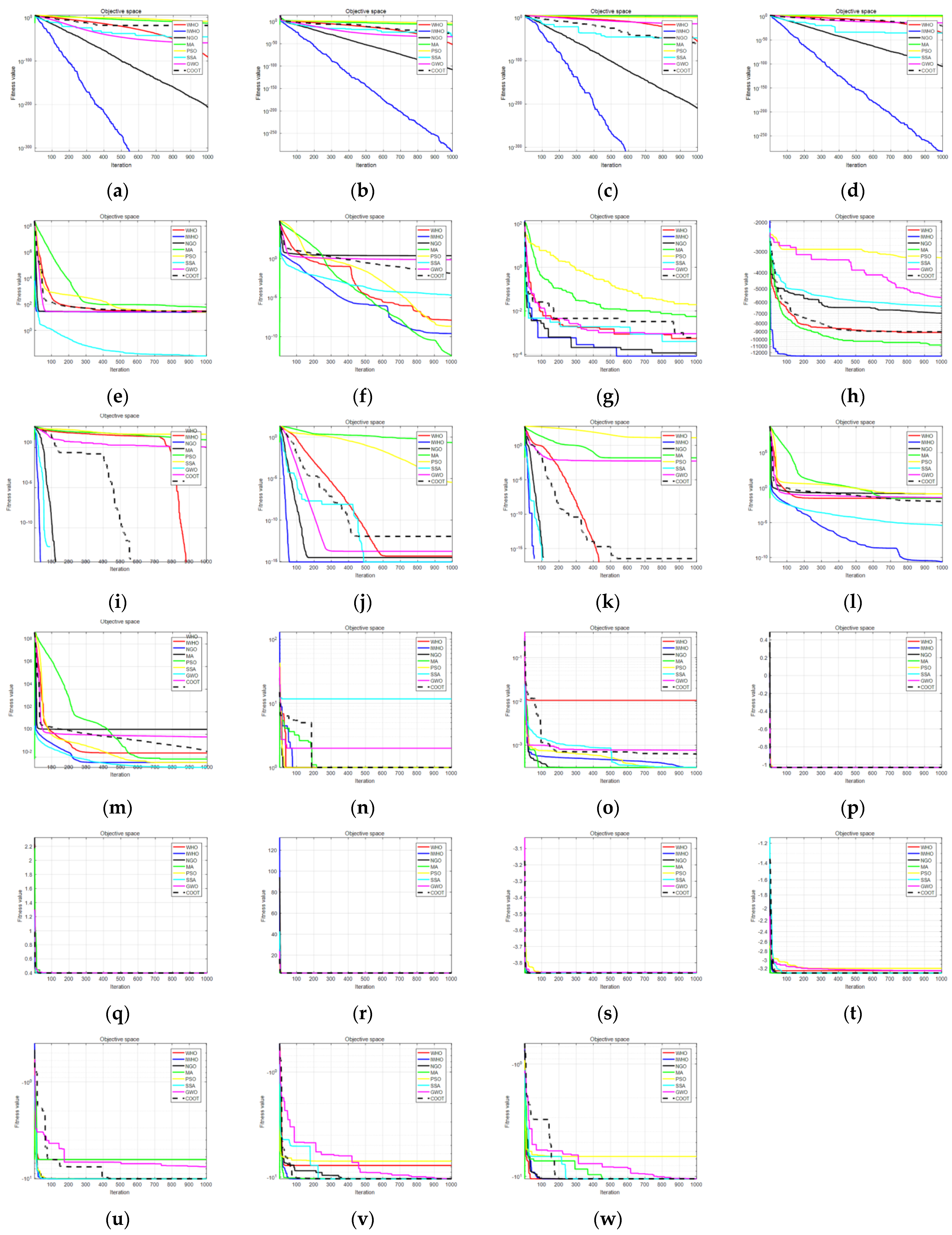

After 30 independent runs, Table 5 displays the average value and standard deviation for the eight algorithms. Overall, the IWHO outperforms the others. For the single-peak test functions F1–F4 and F7, the IWHO has the best performance and the fastest convergence speed, with a standard deviation of 0, demonstrating that it is robust and stable. The IWHO has the second-best performance for F5 and F6. For F9 and F11, although most of the algorithms converge to the ideal optimum, the IWHO converges faster, as illustrated in Figure 5i,k. Both the IWHO and SSA algorithms achieve the best results for F10; however, the IWHO needs fewer iterations, as can be seen in Figure 5j. For F13, both the PSO algorithm and the SSA algorithm outperform the IWHO, which is ranked third among the eight optimization algorithms. The results in Table 5 and Figure 5 for the fixed-dimension test functions show that the IWHO converges to the theoretical optimum with a standard deviation of 0.

In conclusion, the results of 30 independent experiments with eight different algorithms and 23 benchmark test functions show that the IWHO converges to the ideal optimal values for 14 test functions: F1, F3, F9, F11, and F14–F23. The convergence accuracy of the IWHO has therefore improved considerably. However, for some test functions the optimal value is obtained but the convergence speed can still be improved, and for some test functions the algorithm still falls into local optima. Among the 23 test results, the IWHO ranked first 20 times, second twice, and third once. It can be concluded that the IWHO performs better than the WHO algorithm and effectively remedies some of its flaws. The IWHO also has significant advantages over the other algorithms.

6.1.5. Wilcoxon Rank Sum Test

Comparing only the mean and standard deviation of the different algorithms is not sufficient for a rigorous analysis. We therefore chose a non-parametric statistical test, the Wilcoxon rank sum test, to further validate the algorithm’s performance. We ran each algorithm independently 30 times on each of the 23 test functions. For the Wilcoxon rank sum test and the calculation of p, we compared the experimental results of the other algorithms with those of the IWHO. When p < 5%, the result was marked as “+”, indicating that the IWHO was significantly better than the comparison algorithm. When p > 5%, it was marked as “−”, indicating that the IWHO showed no significant advantage over the comparison algorithm. When p was equal to 1, the result was not suitable for judgment.

The comparison results are shown in Table 6. Most of the rank sum test p values are less than 0.05, which indicates that the results of the IWHO differ significantly from those of the other algorithms; that is, the IWHO algorithm has better optimization performance.
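The rank sum comparison can be illustrated with a small self-contained implementation. This sketch uses the normal approximation of the two-sided Wilcoxon rank sum test without a tie correction, which is a simplification; the paper's experiments were run in MATLAB, and this code is not a reproduction of them. The function name is hypothetical.

```python
from statistics import NormalDist

def rank_sum_test(a, b):
    """Two-sided Wilcoxon rank sum test (normal approximation, no tie correction).

    Returns the z statistic of sample `a` and the two-sided p value.
    """
    pooled = sorted((v, g) for g, grp in enumerate((a, b)) for v in grp)
    ranks_a = 0.0
    i = 0
    while i < len(pooled):
        j = i
        while j < len(pooled) and pooled[j][0] == pooled[i][0]:
            j += 1
        avg_rank = (i + 1 + j) / 2.0      # average of ranks i+1 .. j for ties
        ranks_a += avg_rank * sum(1 for k in range(i, j) if pooled[k][1] == 0)
        i = j
    n1, n2 = len(a), len(b)
    mean = n1 * (n1 + n2 + 1) / 2.0
    sd = (n1 * n2 * (n1 + n2 + 1) / 12.0) ** 0.5
    z = (ranks_a - mean) / sd
    p = 2.0 * (1.0 - NormalDist().cdf(abs(z)))
    return z, p
```

Two identical result sets give p = 1, which corresponds to the case described above as unsuitable for judgment, while two clearly separated sets of 30 runs give a p value far below 0.05.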

6.2. Coverage Performance Simulation Test Analysis

To verify the performance of IWHO in optimizing coverage in HWSNs, three experiments were set up by simulating different scenarios and setting different parameters.

Experiment 1 used homogeneous sensors, and the IWHO was used to improve the coverage ratio of the WSN. To demonstrate the IWHO algorithm’s efficacy, five algorithms and four improved algorithms were chosen for comparison.

The results of Experiment 1 show how effective the IWHO is in solving the sensor coverage optimization issue. In Experiment 2, the IWHO was used in an HWSN to increase sensor coverage and ensure sensor connectivity.

In Experiment 3, an obstacle was added to the monitoring area to simulate a more realistic scenario. The sensors must avoid the obstacle for coverage, and the IWHO was used to improve the coverage and connectivity ratio of the sensors in the monitoring area.

6.2.1. Simulation Experiment 1 Comparison Results Analysis

In Experiment 1, we only considered the improvement in coverage ratio, using Equation (4) as the objective function. To reduce the influence of randomness, the algorithm was run thirty times independently. Table 7 displays the settings for the sensor parameters.

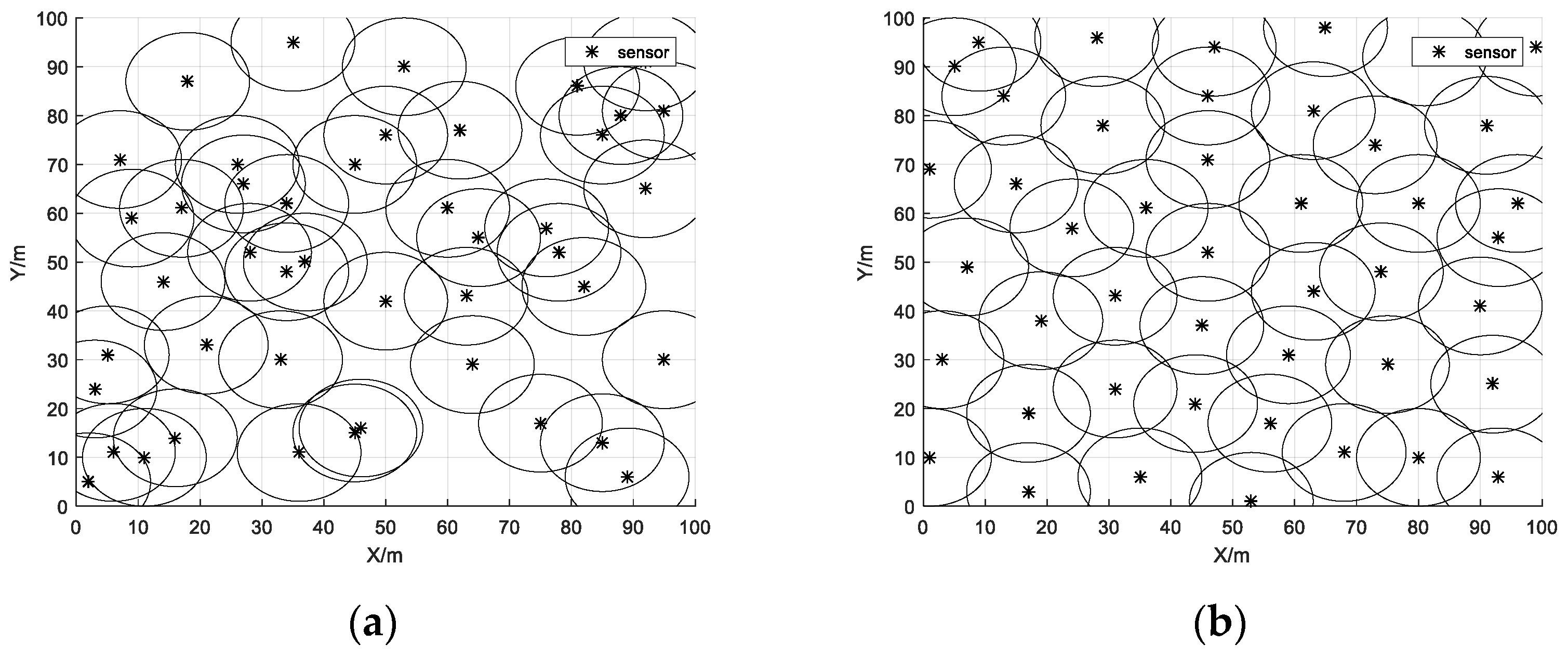

Figure 6a shows the initial random deployment of the sensors in the monitoring area. With the optimization of the IWHO, the overlap between sensors decreased. Finally, as shown in Figure 6b, the sensors were evenly distributed in the monitoring area. Table 8 shows that the initial coverage was 79.13%, while after the optimization of the IWHO the coverage reached 97.58%, an improvement of 18.45 percentage points. At the beginning, there were many redundant sensors in the region, and the region had obvious voids and appeared cluttered. After the optimization, the sensor distribution became clearly more uniform and the coverage ratio improved significantly. As a result, the IWHO is effective for WSN coverage optimization.

The WHO, PSO, WOA, BES, and HHO algorithms were selected for further comparative experiments. All algorithms were run independently thirty times. The settings for the sensor parameters are those displayed in Table 7.

Table 9 shows that the IWHO, with a coverage ratio of 97.58%, has the best optimization result. The experiments show that the IWHO performs better than the WHO algorithm in solving the WSN coverage optimization problem, with an average coverage improvement of 5.52% and a coverage efficiency improvement of 3.93% after thirty independent runs. It also outperforms the other four algorithms. The coverage ratio after IWHO optimization is 14.56% higher than that of the PSO algorithm, which performed worst, and 1.1% higher than that of the HHO algorithm, which performed best among the other algorithms. Figure 7 shows that the IWHO maintains a better coverage ratio for almost all iterations. Additionally, the IWHO algorithm’s coverage efficiency is the highest at 69.08%, surpassing the PSO algorithm’s coverage efficiency by 10.3%. This demonstrates that the IWHO improves coverage by reducing redundancy in sensor coverage.

To further verify the superiority of the IWHO in optimizing sensor coverage, the IWHO was compared with the four improved algorithms. To ensure fairness, the parameter settings were kept consistent with those in the corresponding references.

6.2.2. Simulation Experiment 2 Comparison Results Analysis

In a complex sensor coverage environment, it is difficult to use a single type of sensor. Therefore, HWSNs are more common in real environments. In Experiment 2, two different types of sensors were set up, and the sensors were dispersed at random throughout the monitoring area. The IWHO was used to optimize the HWSN coverage. Table 14 displays the settings for the sensor parameters, where N1,2 indicates the numbers of the two types of sensors.

As shown in Figure 8a,b and Table 15, the IWHO improved coverage equally well when two different kinds of sensors were deployed. The optimized coverage reached 98.51%, a 17.08% improvement over the initial state. In contrast to Experiment 1, the IWHO improved connectivity while improving coverage. As illustrated in Figure 8c,d and Table 16, some of the sensors were not connected in the initial state, and the connectivity ratio was only 16.03%. After optimization, the connectivity ratio rose to 20.04%, and the overall network connectivity improved.

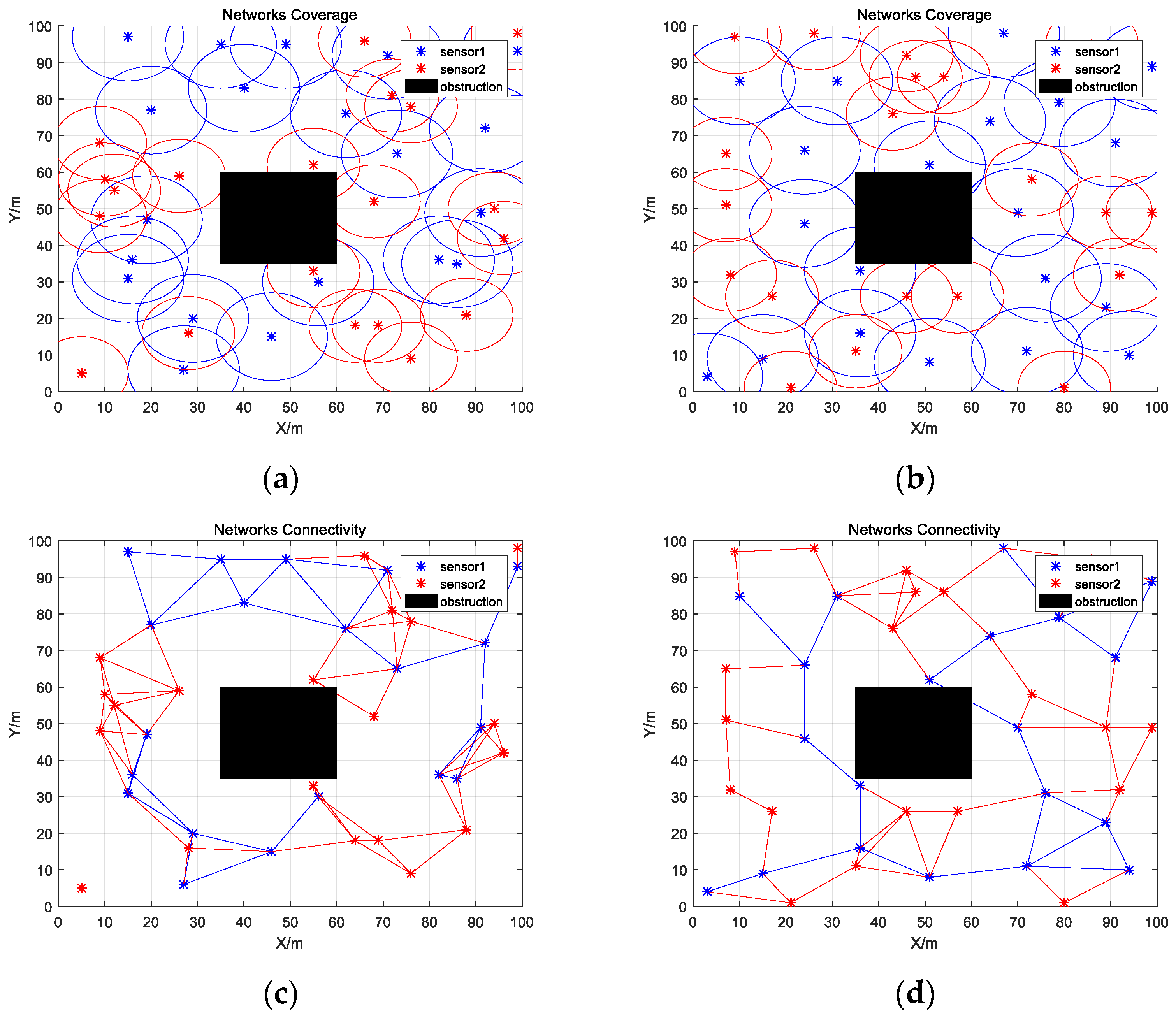

6.2.3. Simulation Experiment 3 Comparison Results Analysis

In an actual coverage area, there are some regions where sensors cannot be deployed. To simulate a more realistic scenario, a 25 m × 25 m obstacle was added to the monitoring area. Experiment 3 evaluated the IWHO algorithm’s performance in optimizing HWSN coverage in the presence of obstacles.

Table 13 displays the settings for the sensor parameters.

Similarly, as shown in Figure 9a,b and Table 17, the IWHO can also effectively improve the coverage ratio after an obstacle is added to the monitoring area, reaching 99.25%, which is 16.96% higher than the initial state. As shown in Figure 9c,d and Table 18, some sensors were not connected at the start. Following optimization, the network connectivity ratio increased to 17.44%.

7. Conclusions

This paper proposes an IWHO to increase the coverage and connectivity of HWSNs. The WHO algorithm’s flaws include a slow rate of convergence, poor convergence accuracy, and a propensity for falling into a local optimum. To address these flaws, the population is initialized using the SPM chaotic mapping model, the Golden-SA is integrated to strengthen the algorithm’s search capability, and the best solution is perturbed using the opposition-based learning and Cauchy variation strategies to avoid being trapped in a local optimum. To confirm the performance of the IWHO, this study evaluates the IWHO, the WHO algorithm, and six other algorithms on 23 benchmark test functions. According to the simulation results, the IWHO outperforms the other algorithms in terms of convergence accuracy and speed, as well as the ability to escape local optima. Although the IWHO finds the ideal optimal value for the majority of test functions, its convergence rate can still be improved, and for individual test functions it still falls into local optima. To further verify the IWHO’s superiority in optimizing sensor coverage, it was compared with five other algorithms and four improved algorithms. The outcomes demonstrate that the IWHO achieves better sensor coverage than the other algorithms. Finally, the IWHO was applied to the HWSN coverage problem, where it optimizes sensor coverage and enhances sensor connectivity. After an obstacle is added, it still obtains good results.

However, the current research is still insufficient. Future work will continue to enhance the IWHO’s performance and extend it to multi-objective optimization so that it can handle more complex HWSN coverage and engineering problems.

Author Contributions

Conceptualization, C.Z.; methodology, C.Z.; software, C.Z.; validation, C.Z.; investigation, C.Z. and J.Y.; resources, C.Z. and J.Y.; data curation, C.Z.; writing—original draft preparation, C.Z.; writing—review and editing, J.Y. and C.Z.; visualization, C.Z.; supervision, C.L. and S.Y.; project administration, T.Q. and W.T.; funding acquisition, Z.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by NNSF of China (No. 61640014, No. 61963009), Guizhou provincial Science and Technology Projects (No. Qiankehe Zhicheng[2022]Yiban017, No. Qiankehe Zhicheng[2019]2152), Innovation group of Guizhou Education Department (No. Qianjiaohe KY[2021]012), Engineering Research Center of Guizhou Education Department (No. Qianjiaoji[2022]043, No. Qianjiaoji[2022]040), Science and Technology Fund of Guizhou Province (No. Qiankehejichu[2020]1Y266, No. Qiankehejichu [ZK [2022]Yiban103]), Science and Technology Project of Power Construction Corporation of China, Ltd. (No. DJ-ZDXM-2020-19), CASE Library of IOT (KCALK201708).

Institutional Review Board Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Kavitha, M.; Geetha, B.G. An efficient city energy management system with secure routing communication using WSN. Clust. Comput. 2019, 22, 13131–13142.

2. Laiqa, B.I.; Rana, M.A.L.; Muhammad, F.; Hamza, A. Smart city based autonomous water quality monitoring system using WSN. Wirel. Pers. Commun. 2020, 115, 1805–1820.

3. Ditipriya, S.; Rina, K.; Sudhakar, T. Semisupervised classification based clustering approach in WSN for forest fire detection. Wirel. Pers. Commun. 2019, 109, 2561–2605.

4. Sunny, K.; Jan, I.; Bhawani, S.C. WSN-based monitoring and fault detection over a medium-voltage power line using two-end synchronized method. Electr. Eng. 2018, 100, 83–90.

5. Zhang, Q.; Fok, M.P. A two-phase coverage-enhancing algorithm for hybrid wireless sensor networks. Sensors 2017, 17, 117.

6. Adulyasas, A.; Sun, Z.; Wang, N. Connected coverage optimization for sensor scheduling in wireless sensor networks. IEEE Sens. J. 2015, 15, 3877–3892.

7. Al-Betar, M.A.; Awadallah, M.A.; Faris, H. Natural selection methods for grey wolf optimizer. Expert Syst. Appl. 2018, 113, 481–498.

8. Gao, W.; Guirao, J.G.; Basavanagoud, B.; Wu, J.Z. Partial multi-dividing ontology learning algorithm. Inform. Sci. 2018, 467, 35–58.

9. Kennedy, J.; Eberhart, R. Particle swarm optimization. In Proceedings of the ICNN’95 - International Conference on Neural Networks, Perth, Australia, 27 November–1 December 1995; pp. 1942–1948.

10. Alsattar, H.A.; Zaidan, A.A.; Zaidan, B.B. Novel meta-heuristic bald eagle search optimization algorithm. Artif. Intell. Rev. 2020, 53, 2237–2264.

11. Yang, X.S.; Deb, S. Cuckoo search: Recent advances and applications. Neural Comput. Appl. 2014, 24, 169–174.

12. Xue, J.K.; Shen, B. A novel swarm intelligence optimization approach: Sparrow search algorithm. Syst. Sci. Control Eng. Open Access J. 2020, 8, 22–34.

13. Dehghani, M.; Hubálovský, Š.; Trojovský, P. Northern goshawk optimization: A new swarm-based algorithm for solving optimization problems. IEEE Access 2021, 9, 162059–162080.

14. Konstantinos, Z.; Stelios, T. A mayfly optimization algorithm. Comput. Ind. Eng. 2020, 145, 106559.

15. Mirjalili, S.; Mirjalili, S.M.; Lewis, A. Grey wolf optimizer. Adv. Eng. Softw. 2014, 69, 46–61.

16. Heidari, A.A.; Mirjalili, S.; Faris, H.; Ibrahim, A.; Majdi, M.; Huiling, C. Harris hawks optimization: Algorithm and applications. Future Gener. Comput. Syst. 2019, 97, 849–872.

17. Naruei, I.; Keynia, F. A new optimization method based on COOT bird natural life model. Expert Syst. Appl. 2021, 183, 115352.

18. Naruei, I.; Keynia, F. Wild horse optimizer: A new meta-heuristic algorithm for solving engineering optimization problems. Eng. Comput.-Ger. 2021, 17, 3025–3056.

19. Milovanović, M.; Klimenta, D.; Panić, M.; Klimenta, J.; Perović, B. An application of Wild Horse Optimizer to multi-objective energy management in a micro-grid. Electr. Eng. 2022, 104, 4521–4541.

20. Ali, M.; Kotb, H.; AboRas, M.K.; Abbasy, H.N. Frequency regulation of hybrid multi-area power system using wild horse optimizer based new combined Fuzzy Fractional-Order PI and TID controllers. Alex. Eng. J. 2022, 61, 12187–12210.

21. Li, Y.; Yuan, Q.; Han, M.; Cui, R.; Cui, R. Hybrid Multi-Strategy Improved Wild Horse Optimizer. Adv. Intell. Syst. 2022, 4, 2200097.

22. Ali, M.H.; Kamel, S.; Hassan, H.M.; Véliz, M.T.; Zawbaa, H.M. An improved wild horse optimization algorithm for reliability based optimal DG planning of radial distribution networks. Energy Rep. 2022, 8, 582–604.

23. Tanyildizi, E.; Demir, G. Golden sine algorithm: A novel math-inspired algorithm. Adv. Electr. Comput. Eng. 2017, 17, 71–78.

24. Wang, W.T.; Tian, J.; Wu, D. An Improved Crystal Structure Algorithm for Engineering Optimization Problems. Electronics 2022, 11, 4109.

25. Yuan, P.L.; Zhang, T.H.; Yao, L.G.; Lu, Y.; Zhuang, W.B. A Hybrid Golden Jackal Optimization and Golden Sine Algorithm with Dynamic Lens-Imaging Learning for Global Optimization Problems. Appl. Sci. 2022, 12, 9709.

26. Jia, X.G.; Zhou, X.B.; Bao, J.; Zhai, J.Y.; Yan, R. Fusion Swarm-Intelligence-Based Decision Optimization for Energy-Efficient Train-Stopping Schemes. Appl. Sci. 2023, 13, 1497.

27. Huang, Y.Y.; Li, K.Q. Coverage optimization of wireless sensor networks based on artificial fish swarm algorithm. Appl. Res. Comput. 2013, 30, 554–556.

28. Zhang, Q. Research on Coverage Optimization of Wireless Sensor Networks Based on Swarms Intelligence Algorithm. Master’s thesis, Hunan University, Changsha, China, 2015.

29. Wu, Y.; He, Q.; Xu, T. Application of improved adaptive particle swarm optimization algorithm in WSN coverage optimization. Chin. J. Sens. Actuators 2016, 2016, 559–565.

30. Lu, X.L.; Cheng, W.; He, Q.; Yang, J.H.; Xie, X.L. Coverage optimization based on improved firefly algorithm for mobile wireless sensor networks. In Proceedings of the 2018 IEEE 4th International Conference on Computer and Communications, Chengdu, China, 7–10 December 2018; pp. 899–903.

31. Nguyen, T.; Hanh, T.; Thanh, B.; Marimuthu, S.P. An efficient genetic algorithm for maximizing area coverage in wireless sensor networks. Inform. Sci. 2019, 488, 58–75.

32. Ban, D.H.; Lv, X.; Wang, X. Efficient image encryption algorithm based on 1D chaotic map. Coll. Comput. Inf. 2020, 47, 278–284.

33. Mao, Q.; Zhang, Q. Improved sparrow algorithm combining Cauchy mutation and opposition-based learning. J. Front. Comput. Sci. Technol. 2021, 15, 1155–1164.

34. Lu, C.; Li, X.B.; Yu, W.J.; Zeng, Z.; Yan, M.M.; Li, X. Sensor network sensing coverage optimization with improved artificial bee colony algorithm using teaching strategy. Computing 2021, 103, 1439–1460.

35. Huang, Y.H.; Zhang, J.; Wei, W.; Qin, T.; Fan, Y.C.; Luo, X.M.; Yang, J. Research on coverage optimization in a WSN based on an improved coot bird algorithm. Sensors 2022, 22, 3383.

36. Miao, Z.M.; Yuan, X.F.; Zhou, F.Y.; Qiu, X.J.; Song, Y.; Chen, K. Grey wolf optimizer with an enhanced hierarchy and its application to the wireless sensor network coverage optimization problem. Appl. Soft Comput. 2020, 96, 106602.

37. Wang, S.P.; Yang, X.P.; Wang, X.Q.; Qian, Z.H. A virtual force algorithm-lévy-embedded grey wolf optimization algorithm for wireless sensor network coverage optimization. Sensors 2019, 19, 2735.

Figure 1.

HWSN coverage model.

Figure 2.

Chaotic Mapping Histogram. (a) Logistic mapping; (b) Sine mapping; (c) SPM mapping.

Figure 3.

Chaotic Mapping Scatter Diagram. (a) Logistic mapping; (b) Sine mapping; (c) SPM mapping.

Figure 4.

Curves of the probability density functions.

Figure 5.

Comparison of convergence curves. (a) F1; (b) F2; (c) F3; (d) F4; (e) F5; (f) F6; (g) F7; (h) F8; (i) F9; (j) F10; (k) F11; (l) F12; (m) F13; (n) F14; (o) F15; (p) F16; (q) F17; (r) F18; (s) F19; (t) F20; (u) F21; (v) F22; (w) F23.

Figure 6.

Coverage maps of sensors. (a) Initial coverage map of sensors; (b) Optimized coverage map of sensors.

Figure 7.

Comparison of coverage convergence curves.

Figure 8.

Coverage and connectivity map of sensors. (a) Initial coverage map of sensors; (b) Optimized coverage map of sensors; (c) Initial connectivity map of sensors; (d) Optimized connectivity map of sensors.

Figure 9.

Obstacle coverage and connectivity map of sensors. (a) Initial obstacle coverage map of sensors; (b) Optimized obstacle coverage map of sensors; (c) Initial obstacle connectivity map of sensors; (d) Optimized obstacle connectivity map of sensors.

Table 1.

Parameter settings of the algorithm.

| Algorithm | Parameters |
|---|---|
| GWO | α = [0, 2] |
| SSA | ST = 0.6, PD = 0.7, SD = 0.2 |
| MA | g = 0.8, a1 = 1, a2 = a3 = 1.5 |
| PSO | c1 = c2 = 2, wmin = 0.2, wmax = 0.9 |
| COOT | R = [−1, 1], R1 = R2 = [0, 1] |
| WHO | PS = 0.1, PC = 0.13 |
| IWHO | PS = 0.1, PC = 0.13, a = π, b = −π |
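The IWHO row lists a = π and b = −π, which are presumably the interval endpoints used by the Golden-SA. As a rough sketch of how such endpoints enter a golden sine update, the code below follows the form commonly given for the golden sine algorithm: golden-section coefficients x1 and x2 are derived from a and b, and a position is moved relative to the best position P. The function names, and passing the random numbers r1 and r2 as arguments, are choices made for this illustration only; the exact update used inside the IWHO may differ.

```python
import math

# Golden ratio conjugate, approximately 0.618.
TAU = (math.sqrt(5) - 1) / 2


def golden_coefficients(a=math.pi, b=-math.pi):
    """Golden-section points of the interval with endpoints a and b."""
    x1 = a + (1 - TAU) * (b - a)
    x2 = a + TAU * (b - a)
    return x1, x2


def golden_sine_step(x, best, r1, r2, a=math.pi, b=-math.pi):
    """One golden sine position update (commonly stated form, assumed here).

    x    : current position (list of floats)
    best : best position found so far (list of floats)
    r1   : random number, usually drawn from [0, 2*pi]
    r2   : random number, usually drawn from [0, pi]
    """
    x1, x2 = golden_coefficients(a, b)
    s = math.sin(r1)
    return [
        xi * abs(s) - r2 * s * abs(x1 * bi - x2 * xi)
        for xi, bi in zip(x, best)
    ]


# Example call with fixed r1 and r2 so the result is reproducible.
new_position = golden_sine_step([2.0, -1.0], [1.0, 0.5], r1=math.pi / 2, r2=1.0)
```

With a = −b the two coefficients are equal in magnitude and opposite in sign, so swapping a and b only swaps x1 and x2.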

Table 2.

Single-peak test functions.

| F | Function | Range | Dim | fmin |
|---|---|---|---|---|
| F1 | | [−100, 100] | 30 | 0 |
| F2 | | [−10, 10] | 30 | 0 |
| F3 | | [−100, 100] | 30 | 0 |
| F4 | | [−100, 100] | 30 | 0 |
| F5 | | [−30, 30] | 30 | 0 |
| F6 | | [−100, 100] | 30 | 0 |
| F7 | | [−1.28, 1.28] | 30 | 0 |

Table 3.

Multi-peak test functions.

| F | Function | Range | Dim | fmin |
|---|---|---|---|---|
| F8 | | [−500, 500] | 30 | −418.9829 × n |
| F9 | | [−5.12, 5.12] | 30 | 0 |
| F10 | | [−32, 32] | 30 | 0 |
| F11 | | [−600, 600] | 30 | 0 |
| F12 | | [−50, 50] | 30 | 0 |
| F13 | | [−50, 50] | 30 | 0 |

Table 4.

Fixed-dimension test functions.

| F | Function | Range | Dim | fmin |
|---|---|---|---|---|
| F14 | | [−65, 65] | 2 | 1 |
| F15 | | [−5, 5] | 4 | 0.00030 |
| F16 | | [−5, 5] | 2 | −1.0316 |
| F17 | | [−5, 5] | 2 | 0.398 |
| F18 | | [−2, 2] | 2 | 3 |
| F19 | | [1, 3] | 3 | −3.86 |
| F20 | | [0, 1] | 6 | −3.32 |
| F21 | | [0, 10] | 4 | −10.1532 |
| F22 | | [0, 10] | 4 | −10.4028 |
| F23 | | [0, 10] | 4 | −10.5363 |

Table 5.

Results of single-peak and multi-peak benchmark functions.

| Function | Criteria | IWHO | WHO | MA | PSO | NGO | SSA | GWO | COOT |
|---|---|---|---|---|---|---|---|---|---|
| F1 | avg | 0 | 6.629 × 10^−91 | 4.714 × 10^−12 | 2.737 × 10^−10 | 3.979 × 10^−211 | 1.122 × 10^−43 | 4.529 × 10^−59 | 1.321 × 10^−39 |
| | std | 0 | 3.567 × 10^−90 | 2.421 × 10^−11 | 1.086 × 10^−9 | 0 | 4.747 × 10^−43 | 9.959 × 10^−12 | 7.114 × 10^−39 |
| F2 | avg | 1.025 × 10^−291 | 2.506 × 10^−50 | 9.438 × 10^−8 | 4.021 × 10^−4 | 4.77 × 10^−106 | 1.461 × 10^−26 | 1.109 × 10^−34 | 3.414 × 10^−26 |
| | std | 0 | 1.344 × 10^−49 | 1.563 × 10^−7 | 3.329 × 10^−3 | 2.553 × 10^−105 | 6.542 × 10^−26 | 1.475 × 10^−34 | 1.834 × 10^−25 |
| F3 | avg | 0 | 1.572 × 10^−56 | 1.859 × 10^3 | 1.085 | 7.325 × 10^−207 | 7.957 × 10^−38 | 3.953 × 10^−15 | 5.870 × 10^−44 |
| | std | 0 | 7.437 × 10^−56 | 9.828 × 10^2 | 3.62 × 10^−1 | 0 | 4.285 × 10^−37 | 1.486 × 10^−14 | 3.109 × 10^−43 |
| F4 | avg | 2.494 × 10^−82 | 6.154 × 10^−37 | 3.457 × 10^1 | 1.102 × 10^1 | 4.512 × 10^−103 | 1.243 × 10^−37 | 1.642 × 10^−14 | 3.053 × 10^−7 |
| | std | 0 | 1.232 × 10^−36 | 8.807 | 3.057 × 10^2 | 2.311 × 10^−102 | 6.698 × 10^−37 | 2.238 × 10^−14 | 1.644 × 10^−6 |
| F5 | avg | 2.485 × 10^−1 | 5.292 × 10^1 | 5.338 × 10^1 | 2.987 × 10^1 | 2.810 × 10^1 | 8.877 × 10^−3 | 2.686 × 10^1 | 2.971 × 10^1 |
| | std | 2.283 × 10^−1 | 10.059 × 10^2 | 4.787 × 10^1 | 1.551 × 10^1 | 9.521 × 10^−1 | 7.998 × 10^−3 | 9.602 × 10^−1 | 7.874 |
| F6 | avg | 4.562 × 10^−10 | 1.595 × 10^−8 | 6.424 × 10^−14 | 8.325 × 10^−10 | 2.553 | 2.641 × 10^−5 | 6.713 × 10^−1 | 8.996 × 10^−3 |
| | std | 1.356 × 10^−9 | 4.095 × 10^−8 | 1.867 × 10^−13 | 1.826 × 10^−9 | 5.301 × 10^−1 | 1.352 × 10^−5 | 3.432 × 10^−1 | 5.114 × 10^−3 |
| F7 | avg | 3.703 × 10^−5 | 3.498 × 10^−4 | 1.234 × 10^−2 | 1.982 × 10^−2 | 1.079 × 10^−4 | 1.077 × 10^−3 | 9.155 × 10^−4 | 1.098 × 10^−3 |
| | std | 3.150 × 10^−5 | 1.879 × 10^−4 | 2.861 × 10^−3 | 3.175 × 10^−3 | 2.009 × 10^−5 | 1.331 × 10^−4 | 1.368 × 10^−4 | 9.571 × 10^−4 |
| F8 | avg | −1.232 × 10^4 | −9.025 × 10^3 | −1.053 × 10^4 | −2.752 × 10^3 | −7.968 × 10^3 | −8.195 × 10^3 | −6.279 × 10^3 | −7.543 × 10^3 |
| | std | 4.721 × 10^2 | 7.426 × 10^2 | 3.316 × 10^2 | 3.766 × 10^2 | 5.581 × 10^2 | 2.483 × 10^3 | 7.877 × 10^2 | 6.622 × 10^2 |
| F9 | avg | 0 | 0 | 7.617 | 4.905 × 10^1 | 0 | 0 | 1.518 × 10^−1 | 4.661 × 10^−13 |
| | std | 0 | 0 | 4.252 | 1.301 × 10^1 | 0 | 0 | 8.176 × 10^−1 | 2.334 × 10^−12 |
| F10 | avg | 8.881 × 10^−16 | 3.611 × 10^−15 | 3.965 × 10^−1 | 5.111 × 10^−6 | 3.73 × 10^−15 | 8.881 × 10^−16 | 1.581 × 10^−14 | 2.836 × 10^−11 |
| | std | 0 | 1.760 × 10^−15 | 5.369 × 10^−1 | 7.288 × 10^−6 | 1.421 × 10^−15 | 0 | 2.495 × 10^−15 | 1.525 × 10^−10 |
| F11 | avg | 0 | 0 | 1.709 × 10^−2 | 1.397 × 10^1 | 0 | 0 | 3.712 × 10^−3 | 3.33 × 10^−17 |
| | std | 0 | 0 | 1.993 × 10^−2 | 3.651 | 0 | 0 | 8.541 × 10^−3 | 9.132 × 10^−17 |
| F12 | avg | 2.310 × 10^−11 | 1.727 × 10^−2 | 2.839 × 10^−2 | 2.901 × 10^−1 | 1.524 × 10^−1 | 3.643 × 10^−6 | 3.721 × 10^−2 | 2.676 × 10^−2 |
| | std | 6.685 × 10^−11 | 3.863 × 10^−2 | 5.284 × 10^−2 | 3.890 × 10^−1 | 6.048 × 10^−2 | 1.858 × 10^−6 | 1.853 × 10^−2 | 7.688 × 10^−2 |
| F13 | avg | 2.080 × 10^−2 | 6.233 × 10^−2 | 1.054 × 10^−2 | 1.831 × 10^−3 | 2.579 | 5.259 × 10^−3 | 6.249 × 10^−1 | 5.709 × 10^−2 |
| | std | 3.187 × 10^−2 | 3.187 × 10^−2 | 2.092 × 10^−2 | 4.094 × 10^−3 | 4.391 × 10^−1 | 6.224 × 10^−3 | 2.481 × 10^−1 | 4.912 × 10^−2 |
| F14 | avg | 9.98 × 10^−1 | 9.98 × 10^−1 | 9.98 × 10^−1 | 9.98 × 10^−1 | 9.98 × 10^−1 | 1.171 × 10^1 | 1.99 | 9.98 × 10^−1 |
| | std | 0 | 0 | 0 | 3.047 × 10^−12 | 0 | 9.536 × 10^−1 | 9.920 × 10^−1 | 0 |
| F15 | avg | 3.075 × 10^−4 | 7.653 × 10^−4 | 3.075 × 10^−4 | 6.441 × 10^−4 | 3.075 × 10^−4 | 3.163 × 10^−4 | 2.036 × 10^−2 | 7.271 × 10^−4 |
| | std | 1.038 × 10^−8 | 2.375 × 10^−4 | 3.833 × 10^−4 | 3.369 × 10^−4 | 4.578 × 10^−4 | 6.587 × 10^−4 | 2.491 × 10^−8 | 5.714 × 10^−5 |
| F16 | avg | −1.031 | −1.031 | −1.031 | −1.031 | −1.031 | −1.031 | −1.031 | −1.031 |
| | std | 0 | 1.570 × 10^−16 | 0 | 0 | 1.57 × 10^−16 | 1.087 × 10^−9 | 4.158 × 10^−10 | 1.776 × 10^−15 |
| F17 | avg | 3.978 × 10^−1 | 3.978 × 10^−1 | 3.978 × 10^−1 | 3.978 × 10^−1 | 3.978 × 10^−1 | 3.978 × 10^−1 | 3.978 × 10^−1 | 3.978 × 10^−1 |
| | std | 0 | 0 | 1.153 × 10^−7 | 0 | 0 | 1.180 × 10^−9 | 1.498 × 10^−7 | 1.667 × 10^−10 |
| F18 | avg | 3 | 3 | 3 | 3 | 3 | 3 | 3 | 3 |
| | std | 0 | 0 | 3.140 × 10^−16 | 7.021 × 10^−16 | 0 | 5.518 × 10^−8 | 1.017 × 10^−5 | 8.038 × 10^−14 |
| F19 | avg | −3.862 | −3.862 | −3.862 | −3.862 | −3.862 | −3.862 | −3.862 | −3.862 |
| | std | 0 | 0 | 0 | 0 | 4.440 × 10^−16 | 3.859 × 10^−7 | 3.810 × 10^−3 | 4.440 × 10^−16 |
| F20 | avg | −3.322 | −3.626 | −3.322 | −3.203 | −3.322 | −3.322 | −3.261 | −3.322 |
| | std | 0 | 5.944 × 10^−2 | 0 | 0 | 5.916 × 10^−7 | 1.097 × 10^−6 | 6.038 × 10^−2 | 1.416 × 10^−10 |
| F21 | avg | −1.015 × 10^1 | 6.418 | −6.391 | −1.015 × 10^1 | −1.015 × 10^1 | −1.015 × 10^1 | −7.604 | −1.015 × 10^1 |
| | std | 0 | 3.735 | 3.761 | 0 | 6.21 × 10^−5 | 6.568 × 10^−6 | 2.548 | 1.433 × 10^−10 |
| F22 | avg | −1.040 × 10^1 | −7.765 | −1.040 × 10^1 | −7.063 | −1.040 × 10^1 | −1.040 × 10^1 | −1.040 × 10^1 | −1.040 × 10^1 |
| | std | 0 | 2.637 | 0 | 3.339 | 2.660 × 10^−5 | 1.666 × 10^−5 | 3.949 × 10^−4 | 1.810 × 10^−11 |
| F23 | avg | −1.053 × 10^1 | −1.053 × 10^1 | −1.053 × 10^1 | −6.671 | −1.053 × 10^1 | −1.053 × 10^1 | −1.053 × 10^1 | −1.053 × 10^1 |
| | std | 0 | 0 | 0 | 3.864 | 2.882 × 10^−8 | 6.094 × 10^−6 | 1.722 × 10^−5 | 6.436 × 10^−12 |

Table 6.

The result of Wilcoxon rank sum test.

| Function | WHO | MA | PSO | NGO | SSA | GWO | COOT |
|---|---|---|---|---|---|---|---|
| F1 | 1.734 × 10^−6 | 1.734 × 10^−6 | 1.821 × 10^−6 | 1.734 × 10^−6 | 1.734 × 10^−6 | 1.734 × 10^−6 | 1.734 × 10^−6 |
| F2 | 1.734 × 10^−6 | 1.734 × 10^−6 | 7.691 × 10^−6 | 1.734 × 10^−6 | 1.734 × 10^−6 | 1.734 × 10^−6 | 1.734 × 10^−6 |
| F3 | 1.734 × 10^−6 | 1.734 × 10^−6 | 4.01 × 10^−5 | 1.734 × 10^−6 | 1.734 × 10^−6 | 1.734 × 10^−6 | 1.734 × 10^−6 |
| F4 | 1.734 × 10^−6 | 1.734 × 10^−6 | 1.360 × 10^−5 | 1.734 × 10^−6 | 1.734 × 10^−6 | 1.734 × 10^−6 | 1.734 × 10^−6 |
| F5 | 3.882 × 10^−6 | 6.564 × 10^−6 | 2.224 × 10^−6 | 1.734 × 10^−6 | 1.734 × 10^−6 | 1.734 × 10^−6 | 5.171 × 10^−1 |
| F6 | 1.639 × 10^−5 | 1.356 × 10^−1 | 1.734 × 10^−6 | 1.734 × 10^−6 | 1.734 × 10^−6 | 1.734 × 10^−6 | 1.734 × 10^−6 |
| F7 | 1.734 × 10^−6 | 1.734 × 10^−6 | 1.734 × 10^−6 | 1.734 × 10^−6 | 1.734 × 10^−6 | 1.734 × 10^−6 | 1.734 × 10^−6 |
| F8 | 1.734 × 10^−6 | 2.353 × 10^−6 | 1.734 × 10^−6 | 1.734 × 10^−6 | 4.729 × 10^−6 | 1.734 × 10^−6 | 1.734 × 10^−6 |
| F9 | 1 | 1.734 × 10^−6 | 1.734 × 10^−6 | 1 | 1 | 2.441 × 10^−4 | 1.25 × 10^−2 |
| F10 | 7.744 × 10^−6 | 1.734 × 10^−6 | 1.734 × 10^−6 | 2.727 × 10^−6 | 1 | 6.932 × 10^−7 | 1.789 × 10^−5 |
| F11 | 1 | 1.734 × 10^−6 | 1.734 × 10^−6 | 1 | 1 | 2.5 × 10^−1 | 2.5 × 10^−1 |
| F12 | 8.466 × 10^−6 | 2.182 × 10^−2 | 4.729 × 10^−6 | 1.734 × 10^−6 | 1.734 × 10^−6 | 1.734 × 10^−6 | 1.734 × 10^−6 |
| F13 | 7.035 × 10^−1 | 2.623 × 10^−1 | 1.025 × 10^−5 | 1.734 × 10^−6 | 2.585 × 10^−3 | 1.734 × 10^−6 | 1.020 × 10^−2 |
| F14 | 8.134 × 10^−1 | 1.562 × 10^−2 | 7.744 × 10^−1 | 1.562 × 10^−2 | 7.723 × 10^−6 | 1.592 × 10^−3 | 5.712 × 10^−3 |
| F15 | 1.036 × 10^−3 | 4.405 × 10^−1 | 5.446 × 10^−2 | 8.188 × 10^−5 | 2.623 × 10^−1 | 1.915 × 10^−1 | 2.411 × 10^−4 |
| F16 | 1 | 1 | 1 | 1 | 1.734 × 10^−6 | 1.734 × 10^−6 | 6.25 × 10^−2 |
| F17 | 1.734 × 10^−6 | 1.734 × 10^−6 | 1.734 × 10^−6 | 2.437 × 10^−4 | 7.821 × 10^−6 | 1.734 × 10^−6 | 1.734 × 10^−6 |
| F18 | 7.626 × 10^−1 | 2.186 × 10^−1 | 1 | 3.75 × 10^−1 | 1.734 × 10^−6 | 1.734 × 10^−6 | 1.47 × 10^−6 |
| F19 | 1 | 1 | 1 | 1 | 1.734 × 10^−6 | 1.734 × 10^−6 | 5 × 10^−1 |
| F20 | 2.492 × 10^−2 | 6.875 × 10^−1 | 2.148 × 10^−2 | 9.271 × 10^−3 | 1.494 × 10^−5 | 1.891 × 10^−4 | 6.088 × 10^−3 |
| F21 | 1.856 × 10^−2 | 1.530 × 10^−4 | 1.058 × 10^−3 | 4.508 × 10^−2 | 5.791 × 10^−2 | 4.193 × 10^−2 | 5.689 × 10^−2 |
| F22 | 1.114 × 10^−2 | 8.596 × 10^−2 | 1.397 × 10^−2 | 8.365 × 10^−2 | 1.975 × 10^−2 | 6.732 × 10^−2 | 1.556 × 10^−2 |
| F23 | 7.275 × 10^−3 | 1.879 × 10^−2 | 2.456 × 10^−3 | 1.504 × 10^−1 | 1.359 × 10^−2 | 4.4913 × 10^−2 | 7.139 × 10^−1 |
| +/=/− | 16/4/3 | 15/2/6 | 18/3/2 | 16/4/3 | 17/3/3 | 19/0/4 | 18/0/5 |

Table 7.

Experiment 1 parameter configurations.

| Parameters | Values |
|---|---|
| Area of monitoring (S) | 100 m × 100 m |
| Sensing radius (Rs) | 10 m |
| Number of sensors (N) | 45 |
| Number of iterations (iteration) | 150 |
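To make the role of these parameters concrete, the sketch below estimates a coverage ratio for a set of sensor positions. It assumes a Boolean disk sensing model (a point is covered if it lies within Rs of at least one sensor) and a 1 m grid discretization of the monitoring area; both are common choices in WSN coverage studies but are assumptions here, as are the function name and the random deployment used as input.

```python
import random


def coverage_ratio(sensors, radius, width=100.0, height=100.0, step=1.0):
    """Fraction of grid points lying within `radius` of at least one sensor.

    sensors : list of (x, y) positions in metres
    radius  : sensing radius Rs in metres (Boolean disk model, assumed)
    step    : grid spacing in metres (assumed discretization)
    """
    r2 = radius * radius
    nx = int(width / step) + 1
    ny = int(height / step) + 1
    covered = 0
    for i in range(nx):
        px = i * step
        for j in range(ny):
            py = j * step
            # A grid point counts once, however many sensors reach it.
            if any((px - sx) ** 2 + (py - sy) ** 2 <= r2 for sx, sy in sensors):
                covered += 1
    return covered / (nx * ny)


# Random deployment with the Table 7 settings: N = 45, Rs = 10 m, 100 m x 100 m.
rng = random.Random(0)
deployment = [(rng.uniform(0, 100), rng.uniform(0, 100)) for _ in range(45)]
initial_ratio = coverage_ratio(deployment, radius=10.0)
```

An optimizer such as the IWHO would treat the 2N sensor coordinates as the decision vector and maximize a function of this kind.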

Table 8.

Comparison of initial and optimized coverage experimental results.

| Sensors | Initial Coverage Ratio | Optimized Coverage Ratio |
|---|---|---|
| N = 45 | 79.13% | 97.58% |

Table 9.

Comparison of experimental results from various algorithms.

| Algorithm | Coverage Ratio | Coverage Efficiency |
|---|---|---|
| PSO | 83.02% | 58.75% |
| BES | 91.32% | 64.63% |
| WOA | 94.62% | 66.96% |
| HHO | 96.48% | 68.28% |
| WHO | 92.06% | 65.15% |
| IWHO | 97.58% | 69.08% |

Table 10.

Comparison of TABC and IWHO experimental results.

| Method | Coverage Ratio |
|---|---|
| TABC [34] | 96.07% |
| IWHO | 97.58% |

Table 11.

Comparison of COOTCLCO and IWHO experimental results.

| Method | Coverage Ratio |
|---|---|
| COOTCLCO [35] | 96.99% |
| IWHO | 99.17% |

Table 12.

Comparison of GWO-EH and IWHO experimental results.

| Method | Coverage Ratio |
|---|---|
| GWO-EH [36] | 83.64% |
| IWHO | 97.78% |

Table 13.

Comparison of VFLGWO and IWHO experimental results.

| Method | Coverage Ratio |
|---|---|
| VFLGWO [37] | 94.52% |
| IWHO | 99.48% |

Table 14.

Experiment 2 parameter configurations.

| Parameters | Values |
|---|---|
| Area of monitoring (S) | 100 m × 100 m |
| Sensing radius (Rs1) | 12 m |
| Sensing radius (Rs2) | 10 m |
| Communication radius (Rs1) | 24 m |
| Communication radius (Rs2) | 20 m |
| Number of sensors (N1,2) | 20 |
| Number of iterations (iteration) | 150 |
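With two sensor types that have different communication radii, whether two sensors can talk to each other depends on both radii. The sketch below shows one way to check which sensors are linked and how many disconnected groups a deployment contains. It assumes that a link requires the distance to be within the smaller of the two communication radii (so both ends can reach each other); this rule, the function names, and the sample positions are illustrative assumptions and not the paper's definition of the connectivity ratio.

```python
import math


def links(sensors):
    """Return index pairs (i, j) of sensors that can reach each other.

    sensors : list of (x, y, comm_radius) tuples in metres
    A link is assumed to need distance <= min of the two radii.
    """
    pairs = []
    for i in range(len(sensors)):
        xi, yi, ri = sensors[i]
        for j in range(i + 1, len(sensors)):
            xj, yj, rj = sensors[j]
            if math.hypot(xi - xj, yi - yj) <= min(ri, rj):
                pairs.append((i, j))
    return pairs


def count_components(sensors):
    """Number of connected groups in the communication graph (iterative DFS)."""
    adjacency = {i: [] for i in range(len(sensors))}
    for i, j in links(sensors):
        adjacency[i].append(j)
        adjacency[j].append(i)
    seen = set()
    groups = 0
    for start in adjacency:
        if start in seen:
            continue
        groups += 1
        seen.add(start)
        stack = [start]
        while stack:
            node = stack.pop()
            for neighbour in adjacency[node]:
                if neighbour not in seen:
                    seen.add(neighbour)
                    stack.append(neighbour)
    return groups


# Hypothetical positions using the two communication radii from Table 14.
network = [(0.0, 0.0, 24.0), (20.0, 0.0, 20.0), (45.0, 0.0, 24.0), (90.0, 90.0, 20.0)]
network_links = links(network)
network_groups = count_components(network)
```

A deployment in which `count_components` returns 1 has no isolated sensors; larger values indicate that some sensors cannot relay data to the rest of the network.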

Table 15.

Comparison of HWSN coverage optimization experimental results.

| Sensors | Initial Coverage Ratio | Optimized Coverage Ratio |
|---|---|---|
| N1,2 = 20 | 81.43% | 98.51% |

Table 16.

Comparison of HWSN connectivity optimization experimental results.

| Sensors | Initial Connectivity Ratio | Optimized Connectivity Ratio |
|---|---|---|
| N1,2 = 20 | 16.03% | 20.04% |

Table 17.

Comparison of HWSN obstacle coverage optimization experimental results.

| Sensors | Initial Coverage Ratio | Optimized Coverage Ratio |
|---|---|---|
| N1,2 = 20 | 86.61% | 97.79% |

Table 18.

Comparison of HWSN obstacle connectivity optimization experimental results.

| Sensors | Initial Connectivity Ratio | Optimized Connectivity Ratio |
|---|---|---|
| N1,2 = 20 | 15.77% | 17.44% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).