3.2. Preprocessing Process

The whole preprocessing process is shown in the red dashed box in Figure 2. We divide the preprocessing into two cases: the non-initial frame and the initial frame.

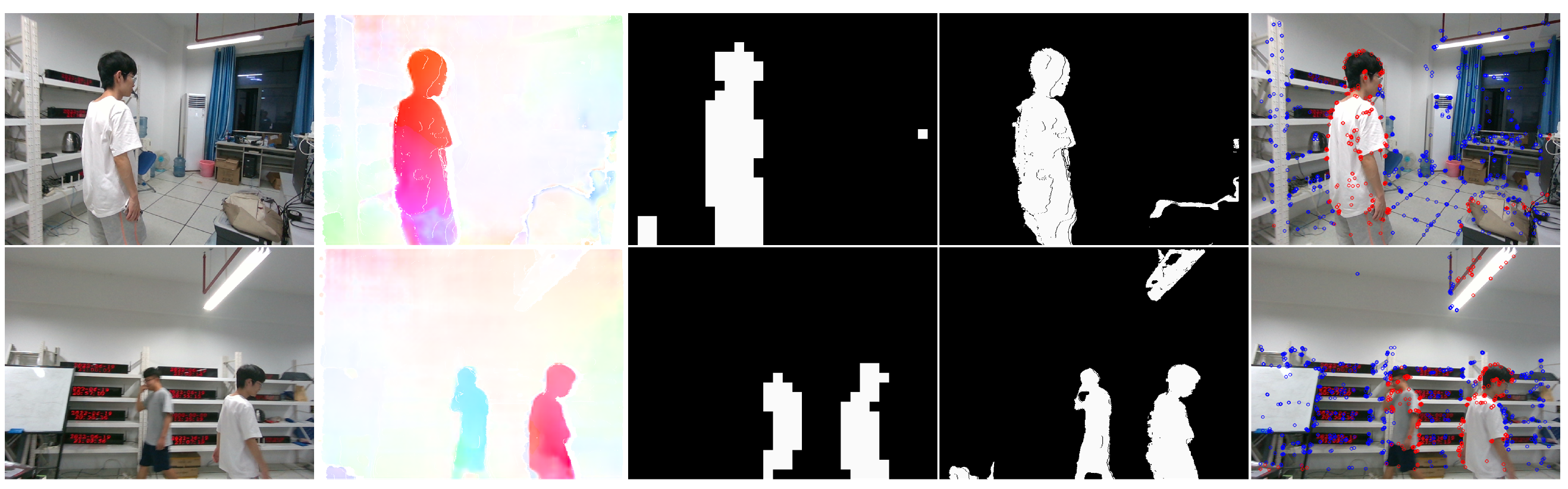

Figure 2 shows the detailed flow for removing dynamic key points in the non-initial-frame case. The process is divided into three modules: Segmenting Dynamic–Static Areas, Moving Object Removal, and Low-Cost Tracking. The Segmenting Dynamic–Static Areas module is executed first and outputs two dynamic–static segmentation mask images: the grid segmentation mask image and the clustering segmentation mask image. The Moving Object Removal module then uses both mask images to remove the key points in the dynamic area, and the Low-Cost Tracking module calculates a rough camera pose from the remaining static key points. Finally, the Segmenting Dynamic–Static Areas module and the Moving Object Removal module are executed again to obtain the precise static key points, and the final result is sent to the Tracking process.

In detail, we first compute the rough scene flow of the t-th frame by warping the (t−1)-th frame using the precise transformation matrix between the (t−2)-th frame and the (t−1)-th frame. We then partition the grid mask image into dynamic and static regions by computing statistics on the scene flow, and use the segmented grid mask image to determine the dynamic–static attribute of each clustering block. Next, both the dynamic–static grid mask image and the deep k-means clustering dynamic–static mask image are used to remove the key points of the dynamic objects. A more accurate transformation matrix is calculated in the Low-Cost Tracking process using the remaining static key points, and a more accurate scene flow is generated from it. This precise scene flow is then segmented into foreground and background. Finally, accurate camera positioning is obtained using the static matching points in the background.

Unlike the non-initial-frame case, in the initial-frame case no transformation matrix from a previous frame pair is available, so a rough transformation matrix between the initial frame and the next frame is first obtained by the Low-Cost Tracking algorithm. The rest of the process is the same as in the non-initial-frame case.

In Figure 2, which depicts the preprocessing process, the Segmenting Dynamic–Static Areas module and the Moving Object Removal module jointly complete the Motion Removal process in Figure 1. The red arrow path denotes the first Motion Removal pass, and the blue arrow path denotes the Low-Cost Tracking and the second Motion Removal pass. The blue arrow path is executed once the red arrow path is completed. Note that the result of the first Motion Removal pass is used as the initial input of the blue arrow path in the Moving Object Removal module. The green arrows indicate the final output of the preprocessing process.

The following sections give the details of our approach.
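The two-pass control flow described above can be sketched as follows. This is only an illustrative outline, not the authors' implementation: the function name `preprocess_frame` and the three callables `segment`, `remove_dynamic`, and `low_cost_track` are hypothetical placeholders for the three modules, and the mask is treated as an opaque object passed between them.

```python
from typing import Callable, Tuple

import numpy as np


def preprocess_frame(
    keypoints: np.ndarray,
    initial_pose: np.ndarray,
    segment: Callable,         # hypothetical: pose -> dynamic-static mask
    remove_dynamic: Callable,  # hypothetical: (keypoints, mask) -> static keypoints
    low_cost_track: Callable,  # hypothetical: static keypoints -> refined pose
) -> Tuple[np.ndarray, np.ndarray]:
    """Two-pass motion removal with a low-cost tracking step in between."""
    # First pass (red path): segment with the initial pose and drop dynamic key points.
    mask = segment(initial_pose)
    rough_static = remove_dynamic(keypoints, mask)

    # Low-cost tracking on the rough static points gives a refined pose.
    refined_pose = low_cost_track(rough_static)

    # Second pass (blue path): segment again with the refined pose, starting
    # from the result of the first pass.
    mask = segment(refined_pose)
    precise_static = remove_dynamic(rough_static, mask)
    return precise_static, refined_pose
```

Passing the modules in as callables keeps the sketch independent of how segmentation and tracking are actually implemented.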

3.3. Calculation of Scene Flow

Theoretically, the optical flow of an image contains scene flow and camera self-motion flow, which are caused by the dynamic objects and the camera motion, respectively. In principle, moving objects can be segmented by thresholding the optical flow. However, the camera self-motion flow interferes with the optical flow of moving objects, which makes it difficult to choose an appropriate threshold. Separating the scene flow from the optical flow therefore helps the segmentation of moving objects.

FlowFusion [38] proposes a 2D scene flow solution method. Optical flow is the coordinate difference of the same point in the camera coordinate system at different moments. The optical flow $\delta\mathbf{x}^{of}_{t-1\to t}$ from time $t-1$ to time $t$ is estimated as follows [38]:

$$\delta\mathbf{x}^{of}_{t-1\to t} = \pi\big(\mathbf{T}_{t}(\mathbf{x}_{t-1} + \delta\mathbf{x}_{t-1\to t})\big) - \pi\big(\mathbf{T}_{t-1}\,\mathbf{x}_{t-1}\big) \tag{1}$$

where $\mathbf{x}_{t-1}$ represents the coordinate vector of the 3D point in the world coordinate system at time $t-1$, $\delta\mathbf{x}_{t-1\to t}$ represents the difference of the 3D coordinate vector of the point between time $t-1$ and $t$, $\pi(\cdot)$ represents the mapping from 3D points to 2D points, and $\mathbf{T}_{t}$ represents the camera pose at time $t$. The optical flow consists of the scene flow and the camera self-motion flow. The relationship between the 2D optical flow and the scene flow can be described as follows [38]:

$$\delta\mathbf{x}^{of}_{t-1\to t} = \delta\mathbf{x}^{sf}_{t-1\to t} + \delta\mathbf{x}^{ef}_{t-1\to t} \tag{2}$$

where $\delta\mathbf{x}^{sf}_{t-1\to t}$ and $\delta\mathbf{x}^{ef}_{t-1\to t}$ represent the 2D scene flow and the camera self-motion flow, respectively. The camera self-motion flow is the optical flow caused by the camera's own motion. The camera transformation matrix is used to warp the original pixel coordinates into the other camera coordinate system, and the difference between the warped and the original coordinates gives the camera self-motion flow [38]:

$$\delta\mathbf{x}^{ef}_{t-1\to t} = W(\mathbf{x}_p, \boldsymbol{\xi}) - \mathbf{x}_p \tag{3}$$

where $W$ is a warp calculation, given by Equation (4), which warps the coordinates of a pixel in one image coordinate system to another image coordinate system to obtain its new coordinates:

$$W(\mathbf{x}_p, \boldsymbol{\xi}) = \pi\big(\mathbf{T}(\boldsymbol{\xi})\,\pi^{-1}(\mathbf{x}_p, D(\mathbf{x}_p))\big) \tag{4}$$

Here $\mathbf{x}_p$ are the pixel coordinates on the image, $D(\mathbf{x}_p)$ represents the depth of point $\mathbf{x}_p$, $\pi^{-1}$ back-projects a pixel with its depth to a 3D point, $\mathbf{T}(\boldsymbol{\xi})$ represents the transformation matrix between two different frames, and $\boldsymbol{\xi}$ represents the Lie algebra transformation, which contains rotation and translation.
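As an illustration of the warp in Equation (4) and of how the scene flow is separated from the optical flow, the following NumPy sketch may help. It assumes a pinhole camera with intrinsics `fx`, `fy`, `cx`, `cy` and a 4 × 4 rigid transformation matrix `T`; these parameter names, the function names, and the convention that a depth of zero marks a missing measurement are assumptions made for this example only.

```python
import numpy as np


def ego_flow(depth, T, fx, fy, cx, cy):
    """Camera self-motion flow: warped pixel coordinates minus the originals."""
    h, w = depth.shape
    u, v = np.meshgrid(np.arange(w, dtype=float), np.arange(h, dtype=float))

    # Back-project every pixel to a homogeneous 3D point in the source camera frame.
    pts = np.stack(
        [(u - cx) / fx * depth, (v - cy) / fy * depth, depth, np.ones_like(depth)],
        axis=-1,
    )

    # Apply the rigid transformation between the two frames.
    warped = pts @ T.T

    # Project back to pixel coordinates; unusable pixels become NaN.
    valid = (depth > 0) & (warped[..., 2] > 0)
    z = np.where(valid, warped[..., 2], np.nan)
    u2 = fx * warped[..., 0] / z + cx
    v2 = fy * warped[..., 1] / z + cy
    return np.stack([u2 - u, v2 - v], axis=-1)


def scene_flow(optical_flow, depth, T, fx, fy, cx, cy):
    """2D scene flow obtained as optical flow minus camera self-motion flow."""
    return optical_flow - ego_flow(depth, T, fx, fy, cx, cy)
```

For a static pixel the optical flow equals the self-motion flow, so the resulting scene flow is close to zero, which matches the near-white background in the scene flow image.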

Computing dense optical flow is very time-consuming, so this paper uses the PWC-Net neural network [39] to estimate the optical flow. To limit the error introduced by the depth camera, only pixels with a depth of less than 7 m are used. The depth error is less than 2 cm within 4 m, but it rises rapidly beyond 4 m and reaches almost 4 cm at 7 m. Such large errors are harmful to our method, so the depth threshold is set to 7 m. As can be seen from the scene flow image in Figure 2, the static background pixels are close to white because the camera's self-motion flow largely cancels the static part of the optical flow. In contrast, the foreground pixels are brightly colored because the camera's self-motion flow has little effect on the dynamic part.

In the process of calculating the scene flow, we use the precise transformation matrix of the previous frame pair in place of the rough transformation matrix between the (t−1)-th frame and the t-th frame that the low-cost tracking algorithm would otherwise compute. This approach has two advantages: (1) iteration is faster because one pose-solving step is saved; (2) the information of consecutive frames is effectively correlated.

3.4. Algorithm Design for Dynamic Grid Segmentation

Executing scene flow statistics directly for each pixel is very time-consuming, and isolated erroneous points also affect the dynamic–static segmentation of the scene flow. To solve this problem, the scene flow image is divided into N grid regions of 20 × 20 pixels each. The grid size is chosen as follows: a large grid contains too many unnecessary pixels and easily leads to wrong dynamic–static decisions, whereas a small grid consumes too much calculation time. In our experiments, we found that a size of 20 × 20 is the most suitable, as it ensures the accuracy of the dynamic–static segmentation without consuming too much calculation time. A 2-norm sum is then obtained for each grid by summing the 2-norms of the scene flow of all pixels within the grid, and the statistics are computed on the 2-norm sums of all grids. This greatly speeds up the operation and reduces the influence of outliers. Because moving objects cause a larger depth difference than the static area, we also calculate the depth difference between the pixels in the central 2 × 2 area of each grid and their matching points and incorporate it into the dynamic–static segmentation.

First of all, since we only perform statistical calculations for the scene within a range of 7 m, all grids that contain only scene points beyond 7 m are removed. Grids that contain scene points both within and beyond 7 m are judged valid or invalid according to Equation (5). Let the $j$-th grid be $G_j$; its validity is determined as follows:

$$G_j \text{ is } \begin{cases} \text{valid}, & n_j^{v} / n_j \ge \tau_v \\ \text{invalid}, & n_j^{v} / n_j < \tau_v \end{cases} \tag{5}$$

where $n_j^{v}$ represents the number of pixels with a depth of less than 7 m in the grid $G_j$, and $n_j$ represents the total number of pixels in the grid $G_j$. Equation (5) means that when the ratio of the number of valid pixels to the total number of pixels is less than $\tau_v$, the grid $G_j$ is regarded as an invalid grid. Because some grids are edge grids that must be retained to keep enough information, we set the threshold $\tau_v$ to 0.8. If the threshold is too small, however, grids containing little information are also retained, which is not conducive to the segmentation of dynamic regions.
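The validity test of Equation (5) can be sketched with NumPy as below. The function name `valid_grid_mask`, its parameter names, the treatment of a zero depth as a missing measurement, and the cropping of border pixels that do not fill a whole grid are assumptions made for this example.

```python
import numpy as np


def valid_grid_mask(depth, cell=20, max_depth=7.0, min_ratio=0.8):
    """Boolean (rows, cols) array that is True for grids with enough in-range pixels."""
    h, w = depth.shape
    rows, cols = h // cell, w // cell

    # A pixel is usable when it has a measurement closer than max_depth.
    near = (depth > 0) & (depth < max_depth)

    # View the image as (rows, cell, cols, cell) blocks; leftover borders are cropped.
    blocks = near[: rows * cell, : cols * cell].reshape(rows, cell, cols, cell)

    # Fraction of usable pixels per grid.
    ratio = blocks.mean(axis=(1, 3))
    return ratio >= min_ratio
```

The block reshape avoids an explicit loop over grids, which keeps this step cheap compared with the optical flow estimation.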

In our method, the grid-based process of dynamic–static segmentation is as follows:

- Step 1: Remove the invalid grids and keep the valid grids.
- Step 2: Calculate the 2-norm sum of each grid by summing the 2-norms of the scene flow of all pixels within the grid, and find the grid with the smallest 2-norm sum among the valid grids.
- Step 3: Segment the dynamic and static grids through the segment model, which is built from a given threshold value and the grid with the smallest 2-norm sum. The detailed implementation process is given below.
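Step 2 of the list above can be sketched as follows. The function names and the array layout (a scene flow image of shape height × width × 2 and a boolean validity array with one entry per grid) are assumptions chosen for this example.

```python
import numpy as np


def grid_flow_sums(scene_flow, cell=20):
    """Sum of the per-pixel scene-flow 2-norms inside each cell x cell grid."""
    h, w, _ = scene_flow.shape
    rows, cols = h // cell, w // cell

    # Per-pixel 2-norm of the (x, y) scene-flow vector.
    norms = np.linalg.norm(scene_flow, axis=-1)

    blocks = norms[: rows * cell, : cols * cell].reshape(rows, cell, cols, cell)
    return blocks.sum(axis=(1, 3))


def static_background_grid(sums, valid):
    """(row, col) of the valid grid with the smallest 2-norm sum, or None."""
    if not valid.any():
        return None
    # Invalid grids are excluded from the search by giving them an infinite sum.
    masked = np.where(valid, sums, np.inf)
    return np.unravel_index(np.argmin(masked), masked.shape)
```

Summing over a whole grid averages out isolated erroneous pixels, which is the motivation for working on grids rather than on single pixels.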

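Now, we can calculate the 2-norm of the scene flow for each pixel as follows:

$$\left\| \mathbf{sf}_{i,j} \right\|_2 = \sqrt{ \left( sf^{x}_{i,j} \right)^2 + \left( sf^{y}_{i,j} \right)^2 } \tag{6}$$

where $sf^{x}_{i,j}$ and $sf^{y}_{i,j}$ are the elements of the scene flow vector, and $i$ and $j$ denote the $i$-th pixel and the $j$-th grid, respectively. The scene flow of the $j$-th grid is the sum of the scene flow of the pixels in the $j$-th grid, namely $SF_j = \sum_i \left\| \mathbf{sf}_{i,j} \right\|_2$. We select the grid $G_{\min}$ with the smallest scene flow among the valid grids as the static background grid. Finally, we add the depth difference to the grid statistics.

We derive our depth difference equation according to the principle in [13]. Let $(u_j, v_j)$ be the coordinate of the upper left corner of the central 2 × 2 area of grid $G_j$; the 2 × 2 depth difference at the center of the grid can then be calculated as:

$$DD_j = \sum_{m=0}^{1} \sum_{n=0}^{1} \left| D(\mathbf{p}'_{m,n}) - D(\mathbf{p}_{m,n}) \right|, \quad \mathbf{p}_{m,n} = (u_j + m,\ v_j + n) \tag{7}$$

where $D(\cdot)$ extracts the depth value of the map point, and $\mathbf{p}_{m,n}$ and $\mathbf{p}'_{m,n}$ are matched by optical flow [40]:

$$\mathbf{p}'_{m,n} = \mathbf{p}_{m,n} + (of^{x},\ of^{y}) \tag{8}$$

where $of^{x}$ and $of^{y}$ are the elements of the optical flow vector. We use the scene flow $SF_j$ and the grid center depth difference $DD_j$ together to find the dynamic grids. The sum of the scene flow and the center depth difference for grid $G_j$ is computed by the following equation:

$$F_j = SF_j + \lambda\, DD_j \tag{9}$$

where $\lambda$ is the weight of the depth difference.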
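A possible implementation of the central 2 × 2 depth difference and of the combined grid statistic is sketched below. Several details are assumptions made for this example and are not fixed by the text: the optical flow is taken to point from the previous frame to the current frame, matches are rounded to the nearest pixel, matches that leave the image are skipped, the absolute difference is used, and the default `weight` of 10 is simply the lower end of the stated 10∼20 range.

```python
import numpy as np


def center_depth_difference(depth_prev, depth_cur, flow, cell=20):
    """Sum of absolute depth differences over the central 2x2 pixels of each grid."""
    h, w = depth_prev.shape
    rows, cols = h // cell, w // cell
    out = np.zeros((rows, cols))
    for r in range(rows):
        for c in range(cols):
            # Upper-left corner of the central 2x2 block of this grid.
            v0 = r * cell + cell // 2 - 1
            u0 = c * cell + cell // 2 - 1
            for v in (v0, v0 + 1):
                for u in (u0, u0 + 1):
                    # Matching pixel found by following the optical flow.
                    u2 = int(round(u + flow[v, u, 0]))
                    v2 = int(round(v + flow[v, u, 1]))
                    if 0 <= u2 < w and 0 <= v2 < h:
                        out[r, c] += abs(depth_cur[v2, u2] - depth_prev[v, u])
    return out


def combined_score(flow_sums, depth_diff, weight=10.0):
    """Grid statistic: scene-flow 2-norm sum plus weighted depth difference."""
    return flow_sums + weight * depth_diff
```

Only four pixels per grid are touched, so the depth term adds very little cost on top of the scene flow sums. The depth unit must match the scale assumed by the thresholds used later.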

The grid with the smallest scene flow value among the valid grids is used as the static background grid $G_{\min}$, and its value is denoted $F_{\min}$. Based on this static background grid, all grids are divided into a dynamic part and a static part by the following equation:

$$G_j \text{ is } \begin{cases} \text{dynamic}, & F_j > \alpha F_{\min} \\ \text{static}, & \text{otherwise} \end{cases} \tag{10}$$

where $F_j$ is the sum of the scene flow and the weighted center depth difference of grid $G_j$, $\alpha$ is 3.3∼3.9, and the depth-difference weight $\lambda$ is 10∼20. These values are chosen because using the transformation matrix of the previous moment as the initial transformation matrix to segment the current frame may introduce a large error and affect the gap between the scene flow values of dynamic and static grids, which makes the dynamic–static regions difficult to distinguish. In this case, when the threshold is set too small, the static background is wrongly segmented into dynamic regions. Furthermore, if there is no valid grid, a fixed threshold must be set to distinguish the dynamic–static grids:

$$G_j \text{ is } \begin{cases} \text{dynamic}, & F_j > \beta \\ \text{static}, & \text{otherwise} \end{cases} \tag{11}$$

where $\beta$ is set to 1500∼2000. Considering the special case in which most of the pixels in the picture are moving, this range retains some moving points and ensures that there are enough feature points for SLAM to run stably. The range is derived as follows. The scene flow of one pixel of a vigorously moving object is at least 3, so the scene flow of one grid is at least $3 \times 20 \times 20 = 1200$. Moreover, the inaccurate transformation matrix causes a scene flow error of about 0.4∼0.8 per pixel, so the minimum error of the scene flow of one grid is about $0.4 \times 400 = 160$ and the maximum is about $0.8 \times 400 = 320$. Furthermore, the influence of the depth difference needs to be considered; the depth difference between two consecutive frames is about 4∼6 cm. With $\lambda$ in the range 10∼20 and four pixels in the central 2 × 2 area, the minimum depth difference threshold is $10 \times 4 \times 4 = 160$ and the maximum is $20 \times 6 \times 4 = 480$. The threshold range is therefore $[1200 + 160 + 160,\ 1200 + 320 + 480] = [1520, 2000]$, that is, roughly 1500∼2000. The method to judge whether a grid is dynamic or static is shown in Algorithm 1.

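| Algorithm 1 Dynamic–static grid discrimination. |
| --- |
| Input: scene flow image and depth image |
| Output: dynamic grid set and static grid set |
| 1: initialize the valid, dynamic, and static grid sets as empty and the minimum grid value as infinity |
| 2: divide the scene flow image into N grids of 20 × 20 pixels |
| 3: for each grid do |
| 4: compute the ratio of pixels within 7 m to all pixels of the grid |
| 5: if the ratio is not less than 0.8 then |
| 6: compute the grid value and add the grid to the valid set |
| 7: if the grid value is smaller than the minimum grid value then |
| 8: update the minimum grid value and the static background grid |
| 9: end if |
| 10: else |
| 11: discard the grid as invalid |
| 12: end if |
| 13: end for |
| 14: if there is no valid grid then |
| 15: set the threshold to the fixed value (1500∼2000) |
| 16: else |
| 17: set the threshold to the minimum grid value multiplied by the factor 3.3∼3.9 |
| 18: end if |
| 19: for each grid do |
| 20: compute the sum of the scene flow and the weighted center depth difference of the grid |
| 21: if the sum is greater than the threshold then |
| 22: add the grid to the dynamic set |
| 23: else |
| 24: add the grid to the static set |
| 25: end if |
| 26: end for |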
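The threshold selection and the final dynamic–static decision can be sketched in a few lines. This is one reading of the procedure, not the authors' code: the function name `classify_grids`, the default values (taken from inside the stated ranges), and the choice to return a decision for every grid and leave the masking of invalid grids to the caller are assumptions made for this example.

```python
import numpy as np


def classify_grids(scores, valid, alpha=3.6, fixed_threshold=1500.0):
    """Boolean array that is True for grids judged dynamic.

    scores: per-grid statistic (scene-flow sum plus weighted depth difference)
    valid:  per-grid validity mask
    """
    if valid.any():
        # Relative threshold built from the valid grid with the smallest score,
        # which plays the role of the static background grid.
        threshold = alpha * scores[valid].min()
    else:
        # No valid grid is available: fall back to the fixed threshold.
        threshold = fixed_threshold
    return scores > threshold
```

Using a threshold relative to the smallest valid grid makes the decision adapt to the residual error left by an inaccurate transformation matrix, while the fixed threshold covers the case in which no reference grid exists.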

3.5. Dynamic Segmentation Algorithm Based on Deep K-Means Clustering

The simple grid segmentation method cannot segment all key points of the dynamic target region, and some potential dynamic points also need to be removed. For example, people may move the chair they are sitting on when they get up, so the moving chair is a potential dynamic object [9,10,13]. Following the suggestion of Joint VO–SF [21], we use 24 classes to segment the image. The number of k-means clusters is set according to the image size. This setting is given empirically by the Joint VO–SF authors, mainly to provide medium-sized clustering blocks.

Next, the segmented grid mask image is used to determine the dynamic–static attribute of each cluster block. Let the dynamic overlapping area between a cluster block and the segmented grid mask image be $S$; the entire cluster block is considered dynamic if the ratio of $S$ to the cluster block is greater than $\tau_c$:

$$C_i \text{ is } \begin{cases} \text{dynamic}, & |S| / |C_i| > \tau_c \\ \text{static}, & \text{otherwise} \end{cases} \tag{12}$$

where $|Z|$ represents the number of elements in the set $Z$ and $C_i$ represents the pixel point set of the $i$-th k-means cluster. We found that similar regions share the same motion, so we set $\tau_c$ to 0.3∼0.5. Finally, we eliminate the potential key points in the dynamic clustering regions. The above process is given as Algorithm 2. Note that 24 clustering blocks are not used for all experiments, but only for the image size to which this number is suited; for other image sizes, the number of k-means clusters is adjusted accordingly. The value 24 in Algorithm 2 is therefore not a constant: larger images use more clustering blocks and smaller images use fewer.

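| Algorithm 2 Dynamic–static K-means clusters discrimination. |
| --- |
| Input: grid mask image and depth image |
| Output: dynamic cluster block set and static cluster block set |
| 1: perform k-means clustering on the depth image to obtain 24 cluster blocks |
| 2: for each cluster block do |
| 3: compute the ratio of the dynamic overlapping area S to the area of the cluster block |
| 4: if the ratio is greater than the threshold (0.3∼0.5) then |
| 5: add the cluster block to the dynamic set |
| 6: else |
| 7: add the cluster block to the static set |
| 8: end if |
| 9: end for |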
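The overlap test of Algorithm 2 and the subsequent key point removal can be sketched as below. The sketch assumes that the k-means result is available as an integer label image and that the grid mask has been expanded to a per-pixel boolean image; both representations, the function names, and the default threshold of 0.4 (a value inside the stated 0.3∼0.5 range) are choices made for this example.

```python
import numpy as np


def dynamic_clusters(labels, dynamic_mask, ratio_threshold=0.4):
    """Ids of the clusters whose overlap with the dynamic mask exceeds the threshold."""
    dynamic = []
    for k in np.unique(labels):
        block = labels == k
        overlap = np.count_nonzero(block & dynamic_mask)
        # Ratio of the dynamic overlapping area to the area of the cluster block.
        if overlap / np.count_nonzero(block) > ratio_threshold:
            dynamic.append(int(k))
    return dynamic


def remove_dynamic_keypoints(keypoints, labels, dynamic_ids):
    """Keep the integer (u, v) key points that lie outside dynamic clusters."""
    u, v = keypoints[:, 0], keypoints[:, 1]
    keep = ~np.isin(labels[v, u], dynamic_ids)
    return keypoints[keep]
```

Because a whole cluster is marked dynamic once enough of it overlaps the dynamic grids, key points on parts of an object that the grid mask missed are removed as well.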

3.6. Acceleration Strategy

As shown in Figure 3, we use a two-layer pyramid to accelerate k-means clustering. First, we use a 4 × 4 Gaussian kernel to reduce the image to 1/4 of the original image. Then, we perform k-means clustering on the upper-layer image with no more than 10 iterations. The clustering of the upper-layer image yields 24 clustering blocks, and we map the centroids of these blocks back into the original image. As shown in Figure 3, a brown centroid of one cluster on the upper-layer image is mapped to a blue pixel block of size 4 × 4 in the original image. Within the blue pixel block, the coordinate whose depth is closest to the average depth is taken as the center of the cluster block. We perform the following calculation for each pixel point $p$ in the pixel block:

$$\mathrm{CenterError}(p) = \left| D(p) - \bar{D} \right| \tag{13}$$

where $D(p)$ denotes the depth of pixel point $p$ and $\bar{D}$ denotes the average depth. The pixel point $p$ with the smallest CenterError is used as the k-means clustering center in the original image, and the original pixel points are then assigned to the clustering centers.
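The mapping of an upper-layer centroid back to the original image can be sketched as follows. The function name `refine_center` and the parameter `target_depth`, which stands for the average depth that the CenterError is measured against, are assumptions made for this example; the text does not fix how that average is obtained.

```python
import numpy as np


def refine_center(depth, cu, cv, target_depth, scale=4):
    """Full-resolution (u, v) of the pixel, inside the scale x scale block of the
    upper-layer centroid (cu, cv), whose depth is closest to target_depth."""
    block = depth[cv * scale:(cv + 1) * scale, cu * scale:(cu + 1) * scale]

    # CenterError: absolute deviation of each pixel depth from the target depth.
    center_error = np.abs(block - target_depth)

    dv, du = np.unravel_index(np.argmin(center_error), center_error.shape)
    return cu * scale + int(du), cv * scale + int(dv)
```

Only the 16 pixels of one block are inspected per centroid, so the refinement is negligible compared with a clustering iteration.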

The running time of our method is mainly consumed in the k-means clustering iterations. The time complexity of k-means clustering is $O(NKt)$, where N is the number of data objects, K is the number of clustering blocks, and t is the number of iterations. In layer 1, N is the number of pixels of the reduced image, K is 24, and t is 10. In layer 0, N is the number of pixels of the original image, K is 24, and t is 1.
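To give a feeling for the saving, the operation counts implied by the N·K·t complexity can be compared for an example image. The image size of 640 × 480 and the reading of the reduction as a factor of 4 along each axis are assumptions made only for this illustration; with a different size or reduction factor the numbers change accordingly.

```python
def kmeans_cost(n_points, k, iterations):
    """Operation count proportional to N * K * t."""
    return n_points * k * iterations


def pyramid_cost(width, height, k=24, upper_iters=10, scale=4):
    """Compare the two-layer scheme with running all iterations at full resolution."""
    # Layer 1: all iterations on the reduced image.
    upper = kmeans_cost((width // scale) * (height // scale), k, upper_iters)
    # Layer 0: a single assignment pass on the original image.
    lower = kmeans_cost(width * height, k, 1)
    # Baseline: the same number of iterations directly on the original image.
    direct = kmeans_cost(width * height, k, upper_iters)
    return {
        "upper": upper,
        "lower": lower,
        "direct": direct,
        "speedup": direct / (upper + lower),
    }
```

Under these assumptions, `pyramid_cost(640, 480)` indicates roughly a sixfold reduction in the operation count compared with clustering directly on the original image.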