Graph-Driven Micro-Expression Rendering with Emotionally Diverse Expressions for Lifelike Digital Humans

Abstract

1. Introduction

2. Related Work

2.1. Trends in Micro-Expression Recognition

2.2. AU Detection

2.3. Micro-Expression Modeling in Virtual Humans

2.4. Comparative Analysis and Methodological Innovations

2.4.1. Methodological Innovations

- Graph-Based Modeling of Action Units: Each AU is represented as a node in a graph whose edges are stored in a symmetric adjacency matrix, capturing the inherent dependencies among facial muscles. A graph convolutional network (GCN) built on AU co-occurrence statistics improves structural sensitivity and generalization, yielding precise AU values that drive animation curve generation directly. Realistic digital human expressions are therefore produced without an intermediate emotion classification step.

- Joint Spatiotemporal Feature Extraction: A 3D-ResNet-18 backbone captures spatial configurations and temporal dynamics simultaneously. To better model subtle temporal variations, the backbone is combined with an Enriched Long-term Recurrent Convolutional Network (ELRCN), increasing sensitivity to the transient, low-intensity motion cues that are critical for micro-expression analysis.

- Emotion-Driven Animation Mapping Mechanism: The extracted AU activation patterns are mapped into parameterized facial muscle trajectories via continuous motion curves, which in turn drive the expression synthesis module of the virtual human. This mapping strategy enables the generation of contextually appropriate and emotionally expressive facial animations, exceeding the expressive capacity of traditional template-based methods.
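The graph-based AU modeling described above can be illustrated with a single graph-convolution layer using the symmetric normalization D^{-1/2}(A + I)D^{-1/2} of Kipf and Welling. The following is a minimal pure-Python sketch, not the authors' implementation: the three-node adjacency matrix (AU1, AU2, AU4), the node features, and the identity weight matrix are all hypothetical values chosen for readability.

```python
import math

def normalize_adjacency(adj):
    """Return D^{-1/2} (A + I) D^{-1/2} for a symmetric adjacency matrix A."""
    n = len(adj)
    # Add self-loops so each AU node also keeps its own features.
    a_hat = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)] for i in range(n)]
    deg = [sum(row) for row in a_hat]  # weighted node degrees of A + I
    return [[a_hat[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)] for i in range(n)]

def matmul(x, y):
    """Plain matrix product of nested lists (x: n x k, y: k x m)."""
    return [[sum(x[i][k] * y[k][j] for k in range(len(y))) for j in range(len(y[0]))]
            for i in range(len(x))]

def gcn_layer(adj_norm, features, weights):
    """One GCN layer: ReLU(A_norm @ H @ W); each AU mixes in its neighbors' features."""
    out = matmul(matmul(adj_norm, features), weights)
    return [[max(0.0, v) for v in row] for row in out]

# Hypothetical graph over AU1, AU2, AU4 (symmetric co-occurrence weights)
A = [[0.0, 0.8, 0.1],
     [0.8, 0.0, 0.0],
     [0.1, 0.0, 0.0]]
H = [[1.0, 0.0], [0.5, 0.5], [0.0, 1.0]]  # per-AU input features
W = [[1.0, 0.0], [0.0, 1.0]]              # identity weights, so only propagation is visible
H_next = gcn_layer(normalize_adjacency(A), H, W)
```

Because AU1 and AU2 share a strong edge, AU2's updated features borrow heavily from AU1's; in a trained model W is learned and several such layers are stacked.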

2.4.2. Architectural Innovations

- Spatiotemporal Feature Extraction: Because micro-expressions (MEs) are brief (<0.5 s) and low in amplitude, we use a lightweight yet effective backbone, 3D-ResNet-18, for end-to-end modeling of video segments. The kernels of a 3D convolutional neural network (3D-CNN) slide jointly along the spatial (x, y) and temporal (t) dimensions, enabling the network to perceive fine-grained motion between consecutive frames. This makes 3D-CNNs particularly suitable for temporally sensitive, low-amplitude signals such as MEs [16]. In addition, the Enriched Long-term Recurrent Convolutional Network (ELRCN) [40] incorporates two learning modules to strengthen both spatial and temporal representations.
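To illustrate why a kernel that spans the temporal axis is sensitive to small frame-to-frame changes, the sketch below implements a naive "valid" 3D convolution (cross-correlation, as used in CNN layers) in plain Python and applies a hand-set temporal-difference kernel to a toy clip. In 3D-ResNet-18 the kernels are learned and many channels are stacked (deep-learning frameworks provide this operation, e.g. `torch.nn.Conv3d`); the kernel and clip here are hypothetical.

```python
def conv3d_valid(video, kernel):
    """Naive 'valid' 3D cross-correlation.

    video is indexed [t][y][x], kernel is indexed [kt][ky][kx]; stride 1, no padding.
    """
    T, H, W = len(video), len(video[0]), len(video[0][0])
    kt, kh, kw = len(kernel), len(kernel[0]), len(kernel[0][0])
    out = []
    for t in range(T - kt + 1):
        plane = []
        for y in range(H - kh + 1):
            row = []
            for x in range(W - kw + 1):
                acc = 0.0
                # The kernel window covers kt consecutive frames as well as a kh x kw patch.
                for dt in range(kt):
                    for dy in range(kh):
                        for dx in range(kw):
                            acc += video[t + dt][y + dy][x + dx] * kernel[dt][dy][dx]
                row.append(acc)
            plane.append(row)
        out.append(plane)
    return out

# Three 2x2 frames; one pixel brightens slightly in the last frame (a subtle "motion")
frames = [
    [[0.0, 0.0], [0.0, 0.0]],
    [[0.0, 0.0], [0.0, 0.0]],
    [[0.0, 0.0], [0.0, 0.1]],
]
# Temporal-difference kernel: spans 2 frames, 1x1 spatially
diff_kernel = [[[-1.0]], [[1.0]]]
response = conv3d_valid(frames, diff_kernel)
```

The first output frame is all zeros (frames 0 and 1 are identical), while the second output frame responds only at the pixel that changed, which is the kind of low-amplitude temporal cue a 2D kernel applied to single frames cannot isolate.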

- AU Relationship Modeling: AUs, as defined in the Facial Action Coding System (FACS), are physiologically interpretable units that encode facial muscle movements and exhibit cross-subject consistency. Therefore, they are widely used in micro-expression analysis and synthesis. Liu et al. [28] manually defined 13 facial regions and used 3D filters to perform convolution over feature maps for AU localization. Inspired by this approach, we introduce a GCN to model co-occurrence relationships between AUs. Each AU is represented as a node in a graph, and the edge weights are defined based on empirical co-occurrence probabilities.
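Edge weights "based on empirical co-occurrence probabilities" can be computed in several ways. One minimal sketch, assuming binary per-clip AU labels, uses the joint probability that AU i and AU j are active in the same clip; this quantity is symmetric and therefore consistent with the symmetric adjacency matrix used for the AU graph. The labels below are hypothetical, and the exact estimator used in this work is not specified here. Conditional probabilities P(AU_j | AU_i), another common choice, would instead give an asymmetric matrix.

```python
from itertools import combinations

def cooccurrence_matrix(labels, num_aus):
    """Symmetric empirical co-occurrence probabilities.

    labels: list of binary AU vectors, one per clip (1 = AU active).
    Entry (i, j) is the fraction of clips in which AU i and AU j are both active.
    The diagonal stays zero; self-loops are typically added later in the GCN.
    """
    n = len(labels)
    if n == 0:
        raise ValueError("need at least one labeled clip")
    counts = [[0] * num_aus for _ in range(num_aus)]
    for vec in labels:
        active = [i for i, v in enumerate(vec) if v]
        for i, j in combinations(active, 2):
            counts[i][j] += 1
            counts[j][i] += 1  # keep the matrix symmetric
    return [[c / n for c in row] for row in counts]

# Hypothetical labels over AU1, AU2, AU4 for four clips
labels = [[1, 1, 0], [1, 1, 0], [0, 0, 1], [1, 0, 1]]
A = cooccurrence_matrix(labels, 3)
```

Here AU1 and AU2 co-occur in two of the four clips, so their edge weight is 0.5, while AU2 and AU4 never co-occur and remain unconnected.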

3. Framework and Methods

3.1. Overall System Architecture

3.2. Temporal Segmentation

3.3. Graph Structure Modeling Based on AU Co-Occurrence Relationships

3.4. Animation Synthesis with Diverse Emotional Profiles

- Invoke the FindRow method to look up the input expression curve in the AU dictionary and retrieve the corresponding RowValue (a time–displacement key-value pair).

- Using RowValue->Time and RowValue->Disp, create animation keyframes, each referenced by an FKeyHandle, and append them to the animation curve.

- Call SetKeyTangentMode to set each key's tangent mode to automatic (RCTM_Auto) and SetKeyInterpMode to set its interpolation mode to cubic (RCIM_Cubic), which produces smooth transitions between keyframes.
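The three steps above rely on Unreal Engine's data-table lookup and `FRichCurve` key functions, which require the engine to run. The following Python sketch only mirrors the same sequence under stated assumptions: `AU_DICTIONARY`, `find_row`, and `CubicCurve` are hypothetical stand-ins, and automatic tangents are approximated by a Catmull-Rom-style slope with flat end tangents, which is not guaranteed to match Unreal's `RCTM_Auto` rule exactly.

```python
from bisect import bisect_right
from dataclasses import dataclass, field

@dataclass
class Key:
    time: float
    value: float
    tangent: float = 0.0

@dataclass
class CubicCurve:
    """Hypothetical keyed curve: cubic Hermite interpolation with automatic tangents."""
    keys: list = field(default_factory=list)

    def add_key(self, time, value):
        if any(k.time == time for k in self.keys):
            raise ValueError(f"duplicate key time {time}")
        self.keys.append(Key(time, value))
        self.keys.sort(key=lambda k: k.time)
        self._auto_tangents()  # analogue of switching every key to an automatic tangent mode

    def _auto_tangents(self):
        ks = self.keys
        for i, k in enumerate(ks):
            if 0 < i < len(ks) - 1:
                # Catmull-Rom-style slope through the two neighboring keys
                k.tangent = (ks[i + 1].value - ks[i - 1].value) / (ks[i + 1].time - ks[i - 1].time)
            else:
                k.tangent = 0.0  # assumption: flat end tangents so the expression eases in and out

    def eval(self, t):
        ks = self.keys
        if not ks:
            return 0.0
        if t <= ks[0].time:
            return ks[0].value
        if t >= ks[-1].time:
            return ks[-1].value
        i = bisect_right([k.time for k in ks], t) - 1
        k0, k1 = ks[i], ks[i + 1]
        dt = k1.time - k0.time
        s = (t - k0.time) / dt
        # Cubic Hermite basis functions
        h00 = 2 * s**3 - 3 * s**2 + 1
        h10 = s**3 - 2 * s**2 + s
        h01 = -2 * s**3 + 3 * s**2
        h11 = s**3 - s**2
        return h00 * k0.value + h10 * dt * k0.tangent + h01 * k1.value + h11 * dt * k1.tangent

# Hypothetical AU dictionary: AU name -> list of (time in s, displacement) keys
AU_DICTIONARY = {
    "AU12": [(0.0, 0.0), (0.1, 0.6), (0.25, 0.2), (0.4, 0.0)],
}

def find_row(name):
    """Stand-in for the FindRow lookup; raises KeyError if the AU is missing."""
    return AU_DICTIONARY[name]

curve = CubicCurve()
for time, disp in find_row("AU12"):
    curve.add_key(time, disp)
```

Sampling `curve.eval(t)` once per rendered frame then yields a smooth displacement trajectory that passes exactly through every dictionary key, which is the property the cubic interpolation step is meant to provide.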

4. Experiments

4.1. System Performance Evaluation

4.1.1. Dataset and Experimental Settings

4.1.2. Implementation Details

4.2. User Subjective Perception Study

4.2.1. Participant Demographics

4.2.2. Experimental Hypothesis and Questionnaire Design

4.3. Data Analysis

4.4. Questionnaire Analysis Results

- In terms of overall perceptual ratings, Video B was rated significantly higher than Video A (mean 3.70 vs. 3.45, p = 0.005), indicating that the inclusion of micro-expressions had a positive effect on the overall user experience.

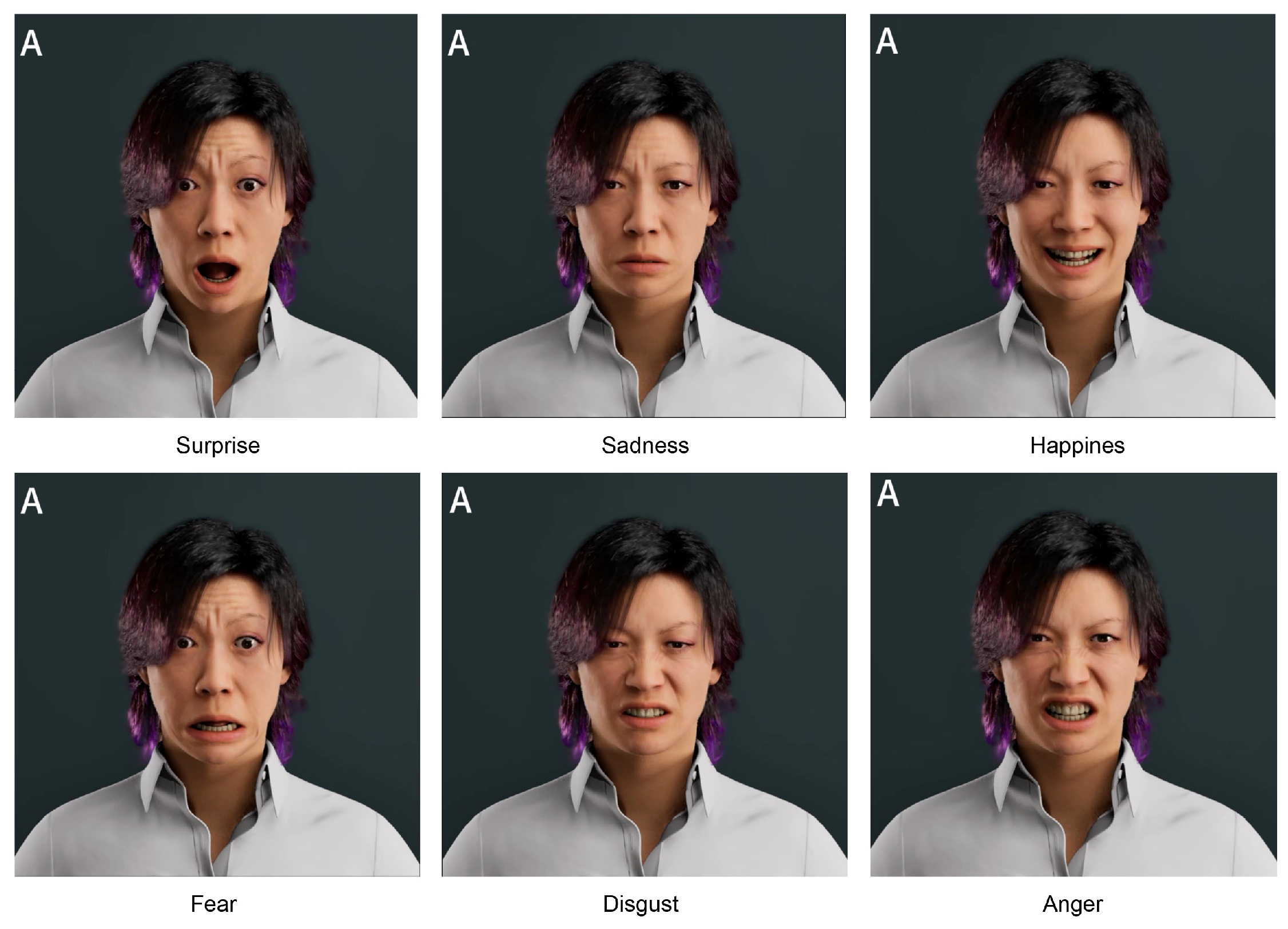

- In the analysis of the six basic emotions, Video B received significantly higher ratings for fear (p < 0.001, Cohen's d = 0.723) and disgust (p = 0.008, d = 0.739), both of which met the Bonferroni-corrected significance threshold (0.05/6 ≈ 0.0083). This suggests that micro-expressions notably enhanced the expressiveness and perceptual salience of specific negative emotions in virtual humans.

- In paired-samples t-tests, Video B also received significantly higher ratings than Video A for clarity (p < 0.001, d = 0.678) and authenticity (p = 0.004, d = 0.686), whereas the difference in naturalness was not significant (p = 0.460). This indicates that micro-expressions improved both the detail and the credibility of facial expressions.

- Regression analysis further showed that participants’ recognition scores of the virtual human significantly predicted their ratings of emotional expressiveness (B = 0.336, p < 0.001; R² = 0.277). The model satisfied the assumptions of residual normality (Shapiro-Wilk W = 0.9926, p = 0.9235) and independence, indicating a positive relationship between recognition clarity and emotion perception, with recognition scores explaining about 28% of the variance in expressiveness ratings.
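The Bonferroni criterion mentioned above divides the family-wise significance level by the number of comparisons. Assuming α = 0.05 and six comparisons (one per basic emotion), the threshold is 0.05/6 ≈ 0.0083. The check below applies it to the per-emotion p-values from the table that reports Disgust at p = 0.008 and Fear at p < 0.001; "<0.001" is entered as its upper bound 0.001, which is conservative for this comparison.

```python
ALPHA = 0.05  # assumed family-wise significance level

# Per-emotion p-values from the results table ("<0.001" entered as 0.001)
p_values = {
    "Anger": 0.068,
    "Disgust": 0.008,
    "Fear": 0.001,
    "Happiness": 0.325,
    "Sadness": 0.044,
    "Surprise": 0.425,
}

threshold = ALPHA / len(p_values)  # Bonferroni: 0.05 / 6, about 0.00833
significant = sorted(e for e, p in p_values.items() if p < threshold)
```

Only fear and disgust pass the corrected threshold; sadness (p = 0.044) would be significant at the uncorrected 0.05 level but not after correction.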

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Queiroz, R.; Musse, S.; Badler, N. Investigating Macroexpressions and Microexpressions in Computer Graphics Animated Faces. PRESENCE Teleoperators Virtual Environ. 2014, 23, 191–208.

- Hou, T.; Adamo, N.; Villani, N.J. Micro-expressions in Animated Agents. In Intelligent Human Systems Integration (IHSI 2022): Integrating People and Intelligent Systems; AHFE Open Access: Orlando, FL, USA, 2022; Volume 22.

- Li, X.; Hong, X.; Moilanen, A.; Huang, X.; Pfister, T.; Zhao, G.; Pietikäinen, M. Reading Hidden Emotions: Spontaneous Micro-expression Spotting and Recognition. arXiv 2015, arXiv:1511.00423.

- Ren, H.; Zheng, Z.; Zhang, J.; Wang, Q.; Wang, Y. Electroencephalography (EEG)-Based Comfort Evaluation of Free-Form and Regular-Form Landscapes in Virtual Reality. Appl. Sci. 2024, 14, 933.

- Shi, M.; Wang, R.; Zhang, L. Novel Insights into Rural Spatial Design: A Bio-Behavioral Study Employing Eye-Tracking and Electrocardiography Measures. PLoS ONE 2025, 20, e0322301.

- Tian, L.; Wang, Q.; Zhang, B.; Bo, L. EMO: Emote Portrait Alive–Generating Expressive Portrait Videos with Audio2Video Diffusion Model under Weak Conditions. In Proceedings of the European Conference on Computer Vision (ECCV), Milan, Italy, 29 September–4 October 2024.

- Gerogiannis, D.; Paraperas Papantoniou, F.; Potamias, R.A.; Lattas, A.; Moschoglou, S.; Ploumpis, S.; Zafeiriou, S. AnimateMe: 4D Facial Expressions via Diffusion Models. In Proceedings of the European Conference on Computer Vision (ECCV), Milan, Italy, 29 September–4 October 2024.

- Fan, Y.; Ji, S.; Xu, X.; Lin, C.; Liu, Z.; Dai, B.; Liu, Y.J.; Shum, H.Y.; Wang, B. FaceFormer: Speech-Driven 3D Facial Animation With Transformers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022.

- Dong, Z.; Wang, G.; Li, S.; Yap, M.H.; Wang, S.J.; Yan, W.J. Spontaneous Facial Expressions and Micro-expressions Coding: From Brain to Face. Front. Psychol. 2022, 12, 8763852.

- Yan, W.J.; Wu, Q.; Liang, J.; Chen, Y.H.; Fu, X. How Fast Are the Leaked Facial Expressions: The Duration of Micro-Expressions. J. Nonverbal Behav. 2013, 37, 217–230.

- Hong, X.; Xu, Y.; Zhao, G. LBP-TOP: A Tensor Unfolding Revisit. In Asian Conference on Computer Vision; Chen, C.S., Lu, J., Ma, K.K., Eds.; Springer International Publishing: Cham, Switzerland, 2017; pp. 513–527.

- Chaudhry, R.; Ravichandran, A.; Hager, G.; Vidal, R. Histograms of Oriented Optical Flow and Binet-Cauchy Kernels on Nonlinear Dynamical Systems for the Recognition of Human Actions. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 1932–1939.

- Horn, B.K.P.; Schunck, B.G. Determining Optical Flow. Artif. Intell. 1980, 17, 185–203.

- Koenderink, J.J. Optic Flow. Vis. Res. 1986, 26, 161–179.

- Yan, W.J.; Li, X.; Wang, S.J.; Zhao, G.; Liu, Y.J.; Chen, Y.H.; Fu, X. CASME II: An Improved Spontaneous Micro-Expression Database and the Baseline Evaluation. PLoS ONE 2014, 9, e86041.

- Tran, D.; Bourdev, L.; Fergus, R.; Torresani, L.; Paluri, M. Learning Spatiotemporal Features with 3D Convolutional Networks. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 4489–4497.

- Yap, C.H.; Yap, M.H.; Davison, A.; Kendrick, C.; Li, J.; Wang, S.J.; Cunningham, R. 3D-CNN for Facial Micro- and Macro-Expression Spotting on Long Video Sequences Using Temporal Oriented Reference Frame. In Proceedings of the 30th ACM International Conference on Multimedia, Lisboa, Portugal, 10–14 October 2022; pp. 7016–7020.

- Zhang, L.W.; Li, J.; Wang, S.J.; Duan, X.H.; Yan, W.J.; Xie, H.Y.; Huang, S.C. Spatio-Temporal Fusion for Macro- and Micro-Expression Spotting in Long Video Sequences. In Proceedings of the 2020 15th IEEE International Conference on Automatic Face and Gesture Recognition (FG 2020), Buenos Aires, Argentina, 16–20 November 2020; pp. 734–741.

- Khor, H.Q.; See, J.; Phan, R.C.W.; Lin, W. Enriched Long-Term Recurrent Convolutional Network for Facial Micro-Expression Recognition. In Proceedings of the 2018 13th IEEE International Conference on Automatic Face & Gesture Recognition (FG 2018), Xi’an, China, 15–19 May 2018; pp. 667–674.

- Qingqing, W. Micro-Expression Recognition Method Based on CNN-LSTM Hybrid Network. Int. J. Wirel. Mob. Comput. 2022, 23, 67–77.

- Kipf, T.N.; Welling, M. Semi-Supervised Classification with Graph Convolutional Networks. In Proceedings of the International Conference on Learning Representations (ICLR), Toulon, France, 24–26 April 2017.

- Zhao, X.; Ma, H.; Wang, R. STA-GCN: Spatio-Temporal AU Graph Convolution Network for Facial Micro-Expression Recognition. In Proceedings of the Pattern Recognition and Computer Vision, Beijing, China, 29 October–1 November 2021; Ma, H., Wang, L., Zhang, C., Wu, F., Tan, T., Wang, Y., Lai, J., Zhao, Y., Eds.; Springer International Publishing: Cham, Switzerland, 2021; pp. 80–91.

- Lo, L.; Xie, H.X.; Shuai, H.H.; Cheng, W.H. MER-GCN: Micro-Expression Recognition Based on Relation Modeling with Graph Convolutional Networks. In Proceedings of the 2020 IEEE Conference on Multimedia Information Processing and Retrieval (MIPR), Shenzhen, China, 6–8 August 2020; pp. 79–84.

- A New Neuro-Optimal Nonlinear Tracking Control Method via Integral Reinforcement Learning with Applications to Nuclear Systems. Neurocomputing 2022, 483, 361–369.

- Lin, T.; Zhao, X.; Su, H.; Wang, C.; Yang, M. BSN: Boundary Sensitive Network for Temporal Action Proposal Generation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

- Zhang, Y.; Lin, W.; Zhang, Y.; Xu, J.; Xu, Y. Leveraging Vision Transformers and Entropy-Based Attention for Accurate Micro-Expression Recognition; Nature Publishing Group: London, UK, 2025; Volume 15, p. 13711.

- Ekman, P.; Rosenberg, E.L. What the Face Reveals: Basic and Applied Studies of Spontaneous Expression Using the Facial Action Coding System (FACS); University Press: New York, NY, USA, 1997.

- Liu, Z.; Dong, J.; Zhang, C.; Wang, L.; Dang, J. Relation Modeling with Graph Convolutional Networks for Facial Action Unit Detection. In Multimedia Modeling (MMM 2020); Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2020; Volume 11962, pp. 489–501.

- Matsuyama, Y.; Bhardwaj, A.; Zhao, R.; Romeo, O.; Akoju, S.; Cassell, J. Socially-Aware Animated Intelligent Personal Assistant Agent. In Proceedings of the 17th Annual Meeting of the Special Interest Group on Discourse and Dialogue, Los Angeles, CA, USA, 13–15 September 2016; pp. 224–227.

- Marsella, S.; Gratch, J.; Petta, P. Computational models of emotion; Oxford Univ. Press: New York, NY, USA, 2010; Volume 11, Number 1, pp. 21–46.

- Coface: Global Credit Insurance Solutions To Protect Your Business. 2025. Available online: https://www.coface.com/ (accessed on 2 September 2025).

- Fan, Y.; Lu, X.; Li, D.; Liu, Y. Video-Based Emotion Recognition Using CNN-RNN and C3D Hybrid Networks. In Proceedings of the 18th ACM International Conference on Multimodal Interaction, Tokyo, Japan, 12–16 November 2016; pp. 445–450.

- Mei, L.; Lai, J.; Feng, Z.; Xie, X. Open-World Group Retrieval with Ambiguity Removal: A Benchmark. In Proceedings of the 2020 25th International Conference on Pattern Recognition (ICPR), Milan, Italy, 10–15 January 2021; pp. 584–591.

- Quang, N.V.; Chun, J.; Tokuyama, T. CapsuleNet for Micro-Expression Recognition. In Proceedings of the 2019 14th IEEE International Conference on Automatic Face & Gesture Recognition (FG 2019), Lille, France, 14–18 May 2019; pp. 1–7.

- Wang, S.J.; Li, B.J.; Liu, Y.J.; Yan, W.J.; Ou, X.; Huang, X.; Xu, F.; Fu, X. Micro-Expression Recognition with Small Sample Size by Transferring Long-Term Convolutional Neural Network. Neurocomputing 2018, 312, 251–262.

- Zhu, Z.; Xu, M.; Bai, S.; Huang, T.; Bai, X. Asymmetric Non-Local Neural Networks for Semantic Segmentation. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 593–602.

- Li, H.; Lin, Z.; Shen, X.; Brandt, J.; Hua, G. A Convolutional Neural Network Cascade for Face Detection. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 5325–5334.

- Carreira, J.; Zisserman, A. Quo Vadis, Action Recognition? A New Model and the Kinetics Dataset. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 4724–4733.

- Ochs, P.; Brox, T. Object Segmentation in Video: A Hierarchical Variational Approach for Turning Point Trajectories into Dense Regions. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 1583–1590.

- Ding, S.; Qu, S.; Xi, Y.; Sangaiah, A.K.; Wan, S. Image Caption Generation with High-Level Image Features. Pattern Recognit. Lett. 2019, 123, 89–95.

- Liu, W.; Luo, W.; Lian, D.; Gao, S. Future Frame Prediction for Anomaly Detection - A New Baseline. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 6536–6545.

- Wilson, J.; Song, J.; Fu, Y.; Zhang, A.; Capodieci, A.; Jayakumar, P.; Barton, K.; Ghaffari, M. MotionSC: Data Set and Network for Real-Time Semantic Mapping in Dynamic Environments. arXiv 2022, arXiv:2203.07060.

- Epic Games. FRichCurve API Reference—Unreal Engine 5.0 Documentation. Available online: https://dev.epicgames.com/documentation/en-us/unreal-engine/API/Runtime/Engine/Curves/FRichCurve?application_version=5.0 (accessed on 2 September 2025).

- Wang, F.; Ainouz, S.; Lian, C.; Bensrhair, A. Multimodality Semantic Segmentation Based on Polarization and Color Images. Neurocomputing 2017, 253, 193–200.

- Yuhong, H. Research on Micro-Expression Spotting Method Based on Optical Flow Features. In Proceedings of the 29th ACM International Conference on Multimedia, Virtual Event, China, 20–24 October 2021; pp. 4803–4807.

- Cohn, J.; Zlochower, A.; Lien, J.; Kanade, T. Feature-Point Tracking by Optical Flow Discriminates Subtle Differences in Facial Expression. In Proceedings of the Third IEEE International Conference on Automatic Face and Gesture Recognition, Nara, Japan, 14–16 April 1998; pp. 396–401.

- Li, M.; Zha, Q.; Wu, H. Soften the Mask: Adaptive Temporal Soft Mask for Efficient Dynamic Facial Expression Recognition. arXiv 2025, arXiv:2502.21004.

- Yang, B.; Wu, J.; Ikeda, K.; Hattori, G.; Sugano, M.; Iwasawa, Y.; Matsuo, Y. Deep Learning Pipeline for Spotting Macro- and Micro-Expressions in Long Video Sequences Based on Action Units and Optical Flow. Pattern Recognit. Lett. 2023, 165, 63–74.

- Yu, W.W.; Jiang, J.; Li, Y.J. LSSNet: A Two-Stream Convolutional Neural Network for Spotting Macro- and Micro-Expression in Long Videos. In Proceedings of the 29th ACM International Conference on Multimedia, Virtual Event, China, 20–24 October 2021; pp. 4745–4749.

- Cohen, J. Statistical Power Analysis for the Behavioral Sciences, 2nd ed.; Routledge: New York, NY, USA, 2013.

| Method | Category | Key Features | Strengths | Limitations |
|---|---|---|---|---|
| LBP-TOP [11] | Handcrafted | Local binary patterns on three orthogonal planes | Robust to illumination; low computational cost | Limited to static features; poor temporal dynamics |
| HOOF [12] | Handcrafted | Histogram of oriented optical flow | Captures motion cues effectively | Sensitive to noise; no AU interdependencies |
| CNN + LSTM [32] | Deep Learning | Spatial CNN with temporal LSTM | Models sequential dependencies | Ignores AU structural relationships; high complexity |
| MER-GCN [33] | Deep Learning | Graph convolutional network for MER | Captures AU co-occurrences | Symmetric graphs only; no emotion modulation |
| CapsuleNet [34] | Deep Learning | Capsule networks for feature routing | Handles part-whole relationships | Limited temporal integration; no animation mapping |
| SARA [29] | Animation System | Behavior Markup Language (BML) | Supports basic emotion synthesis | Rigid transitions; no micro-expression support |
| ARIA [30] | Animation System | Modular input–processing–output | Flexible architecture | Relies on predefined templates; low granularity |
| AU_GCN_CUR | Deep Learning + Graph | 3D-ResNet + Symmetric GCN | Joint spatiotemporal-AU modeling; end-to-end animation | Higher computational load for graphs |

| Layer | Kernel Size | Output Size |
|---|---|---|
| Conv1 | , stride | |
| ResBlock1 | | |
| ResBlock2 | | |
| ResBlock3 | | |
| ResBlock4 | | |
| Global Avg. Pooling | Spatial-temporal pooling | |

| AU | FACS Description | Rig Control Channels (L/R as Named in the Rig) |
|---|---|---|
| AU1 | Inner brow raiser | CTRL_L_brow_raiseIn; CTRL_R_brow_raiseIn |
| AU2 | Outer brow raiser | CTRL_L_brow_raiseOut; CTRL_R_brow_raiseOut |
| AU4 | Brow lowerer | CTRL_L_brow_down; CTRL_R_brow_down; CTRL_L_brow_lateral; CTRL_R_brow_lateral |
| AU5 | Upper lid raiser | CTRL_L_eye_eyelidU; CTRL_R_eye_eyelidU |
| AU6 | Cheek raiser | CTRL_L_eye_cheekRaise; CTRL_R_eye_cheekRaise |
| AU7 | Lid tightener | CTRL_L_eye_squintInner; CTRL_R_eye_squintInner |
| AU9 | Nose wrinkler | CTRL_L_nose; CTRL_R_nose; CTRL_L_nose_wrinkleUpper; CTRL_R_nose_wrinkleUpper |
| AU10 | Upper lip raiser | CTRL_L_mouth_upperLipRaise; CTRL_R_mouth_upperLipRaise |
| AU12 | Lip corner puller | CTRL_L_mouth_cornerPull; CTRL_R_mouth_cornerPull |
| AU15 | Lip corner depressor | CTRL_L_mouth_cornerDepress; CTRL_R_mouth_cornerDepress |
| AU20 | Lip stretcher | CTRL_L_mouth_stretch; CTRL_R_mouth_stretch |
| AU23 | Lip tightener | CTRL_L_mouth_tightenU; CTRL_R_mouth_tightenU; CTRL_L_mouth_tightenD; CTRL_R_mouth_tightenD |
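The AU-to-rig mapping table can be read as a lookup from each predicted AU to the rig controls it drives. A minimal sketch, assuming AU intensities normalized to [0, 1] and that each AU writes the same value to all of its listed controls, follows. The text does not specify per-channel weights, so `drive_rig` and its `gain` parameter are hypothetical; only the control names are taken from the table.

```python
# Rig control channels per AU, copied from the mapping table
AU_TO_CONTROLS = {
    "AU1": ["CTRL_L_brow_raiseIn", "CTRL_R_brow_raiseIn"],
    "AU2": ["CTRL_L_brow_raiseOut", "CTRL_R_brow_raiseOut"],
    "AU4": ["CTRL_L_brow_down", "CTRL_R_brow_down", "CTRL_L_brow_lateral", "CTRL_R_brow_lateral"],
    "AU5": ["CTRL_L_eye_eyelidU", "CTRL_R_eye_eyelidU"],
    "AU6": ["CTRL_L_eye_cheekRaise", "CTRL_R_eye_cheekRaise"],
    "AU7": ["CTRL_L_eye_squintInner", "CTRL_R_eye_squintInner"],
    "AU9": ["CTRL_L_nose", "CTRL_R_nose", "CTRL_L_nose_wrinkleUpper", "CTRL_R_nose_wrinkleUpper"],
    "AU10": ["CTRL_L_mouth_upperLipRaise", "CTRL_R_mouth_upperLipRaise"],
    "AU12": ["CTRL_L_mouth_cornerPull", "CTRL_R_mouth_cornerPull"],
    "AU15": ["CTRL_L_mouth_cornerDepress", "CTRL_R_mouth_cornerDepress"],
    "AU20": ["CTRL_L_mouth_stretch", "CTRL_R_mouth_stretch"],
    "AU23": ["CTRL_L_mouth_tightenU", "CTRL_R_mouth_tightenU",
             "CTRL_L_mouth_tightenD", "CTRL_R_mouth_tightenD"],
}

def drive_rig(au_values, gain=1.0):
    """Hypothetical: write each AU intensity (scaled, clamped to [0, 1]) to its rig controls.

    AUs missing from the table are ignored. If two AUs ever shared a control,
    the stronger activation would be kept.
    """
    controls = {}
    for au, value in au_values.items():
        v = min(max(value * gain, 0.0), 1.0)
        for ctrl in AU_TO_CONTROLS.get(au, []):
            controls[ctrl] = max(controls.get(ctrl, 0.0), v)
    return controls

# One animation frame with a slight brow lowering and a stronger smile
frame = drive_rig({"AU4": 0.3, "AU12": 0.7})
```

Driving the left and right channels with the same value produces symmetric expressions; asymmetric micro-expressions would require separate per-side intensities.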

| Model | k-fold Acc (%) | LOSO Acc (%) | Code Rerun |
|---|---|---|---|
| 3D-ResNet (pre-trained) | 61.92 (±6.53) | 56.20 (±28.31) | Yes |
| 3D-ResNet | 84.87 (±3.80) | 80.51 (±6.58) | Yes |
| AU_GCN_CUR | 84.80 (±3.89) | 80.40 (±6.13) | Yes |
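The accuracy tables report both k-fold and leave-one-subject-out (LOSO) results. Under LOSO, each fold holds out every clip of one subject, so the model is always tested on a person it has never seen; ordinary k-fold splits, by contrast, may place clips of the same subject in both the training and test sets. The sketch below shows how LOSO folds can be formed, assuming each clip carries a subject identifier; the function and the subject IDs are hypothetical.

```python
from collections import defaultdict

def loso_folds(subject_ids):
    """Yield (held_out_subject, train_indices, test_indices) for LOSO evaluation.

    Every clip of one subject is held out per fold, so no subject appears
    in both the training and the test set of the same fold.
    """
    by_subject = defaultdict(list)
    for idx, sid in enumerate(subject_ids):
        by_subject[sid].append(idx)
    for held_out in sorted(by_subject):
        test = by_subject[held_out]
        train = [i for i, sid in enumerate(subject_ids) if sid != held_out]
        yield held_out, train, test

# Hypothetical subject IDs for six clips from three subjects
subjects = ["s01", "s01", "s02", "s03", "s03", "s03"]
folds = list(loso_folds(subjects))
```

The number of folds equals the number of subjects, and fold sizes vary with how many clips each subject contributed, which is one reason per-fold LOSO accuracies can vary widely across folds.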

| Method | k-fold Accuracy (%) | LOSO Accuracy (%) |
|---|---|---|
| ThreeDFlow | 63.48 | 42.58 |
| CNN + LSTM | 63.78 | 48.35 |
| CapsuleNet | 44.83 | 31.16 |
| AU_GCN_CUR | 84.80 | 80.40 |

| Category | Method | Accuracy (%) | F1-Score (%) |
|---|---|---|---|
| Hand-crafted | MDMD [44] | 57.07 | 23.50 |
| | SP-FD [45] | 21.31 | 12.43 |
| | OF-FD [46] | 37.82 | 35.34 |
| | LBP-TOP [11] | 56.98 | 42.40 |
| | LOCP-TOP [16] | 45.53 | 42.25 |
| Deep-learning | MER-GCN [33] | 54.40 | 30.30 |
| | SOFTNe [47] | 24.10 | 20.22 |
| | Concat-CNN [48] | 25.05 | 20.19 |
| | LSSNet [49] | 37.70 | 32.50 |
| | AU_GCN_CUR * | 84.87 | 77.93 |
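The F1-scores in the comparison above combine precision and recall. A minimal sketch of the computation follows, assuming each prediction has already been matched to ground truth and summarized as true-positive, false-positive, and false-negative counts (the matching criterion is not specified in this section); the counts in the example are hypothetical.

```python
def precision_recall_f1(tp, fp, fn):
    """Return (precision, recall, F1) from matched detection counts.

    F1 is the harmonic mean of precision and recall, which simplifies to
    2*TP / (2*TP + FP + FN). Zero denominators yield 0.0 instead of raising.
    """
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1

# Hypothetical counts: 8 correct detections, 2 false alarms, 4 misses
p, r, f1 = precision_recall_f1(tp=8, fp=2, fn=4)
```

Because F1 does not count true negatives, it is less influenced than accuracy by many easy negative samples, which is why the two columns can rank methods differently.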

| Emotion | Mean A | Mean B | Diff (A-B) | p-Value | Cohen’s d |
|---|---|---|---|---|---|
| Anger | 3.6931 | 3.8232 | −0.1301 | 0.207 | 0.658 |
| Disgust | 3.6362 | 3.8293 | −0.1931 | 0.096 | 0.739 |
| Fear | 3.5305 | 3.8150 | −0.2846 | 0.013 | 0.723 |
| Happiness | 3.4939 | 3.5874 | −0.0935 | 0.471 | 0.829 |
| Sadness | 3.5528 | 3.7378 | −0.1850 | 0.146 | 0.811 |
| Surprise | 3.6667 | 3.5854 | 0.0813 | 0.523 | 0.813 |
| Overall | 3.4463 | 3.7027 | −0.2564 | 0.005 | 0.048 |

| Emotion | Mean A | Mean B | Diff (A-B) | p-Value | Cohen’s d |
|---|---|---|---|---|---|
| Anger | 3.6931 | 3.8232 | −0.1301 | 0.068 | 0.658 |
| Disgust | 3.6362 | 3.8293 | −0.1931 | 0.008 | 0.739 |
| Fear | 3.5305 | 3.8150 | −0.2846 | <0.001 | 0.723 |
| Happiness | 3.4939 | 3.5874 | −0.0935 | 0.325 | 0.829 |
| Sadness | 3.5528 | 3.7378 | −0.1850 | 0.044 | 0.811 |
| Surprise | 3.6667 | 3.5854 | 0.0813 | 0.425 | 0.813 |

| Dimension | Mean A | Mean B | Diff (A-B) | p-Value | Cohen’s d |
|---|---|---|---|---|---|
| Clarity | 3.6545 | 3.8130 | −0.1585 | <0.001 | 0.678 |
| Naturalness | 3.5730 | 3.6220 | −0.0488 | 0.460 | 0.595 |
| Authenticity | 3.5600 | 3.7500 | −0.1950 | 0.004 | 0.686 |

| Variable | Coefficient | Std. Error | t-Value | p-Value |
|---|---|---|---|---|
| Intercept (Constant) | 2.446 | 0.232 | 10.558 | <0.001 |
| Recognition_Mean | 0.336 | 0.061 | 5.542 | <0.001 |
| Model Summary | R² = 0.277 | Adj. R² = 0.268 | F = 30.72 | p < 0.001 |
| Shapiro-Wilk Test | W = 0.9926 | p = 0.9235 | (Residuals are normally distributed) | |
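For a regression with a single predictor, the quantities in the regression table are linked by t = B/SE, F = t², and |r| = √R². The short computation below uses only the tabulated values to illustrate these relations; small discrepancies reflect rounding of the reported coefficient and standard error.

```python
import math

# Values copied from the regression table
coef, se = 0.336, 0.061
t_reported, f_reported = 5.542, 30.72
r_squared = 0.277

t_from_table = coef / se     # about 5.51; differs from 5.542 only through rounding
f_from_t = t_reported ** 2   # about 30.71; with one predictor, F equals t squared
r = math.sqrt(r_squared)     # about 0.53, correlation between recognition and expressiveness
```

A correlation of about 0.53 corresponds to recognition scores accounting for roughly 28% of the variance in expressiveness ratings.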

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Fang, L.; Yang, F.; Lin, Y.; Zhang, J.; Whang, M. Graph-Driven Micro-Expression Rendering with Emotionally Diverse Expressions for Lifelike Digital Humans. Biomimetics 2025, 10, 587. https://doi.org/10.3390/biomimetics10090587
