Improved Automatic Deep Model for Automatic Detection of Movement Intention from EEG Signals

Abstract

1. Introduction

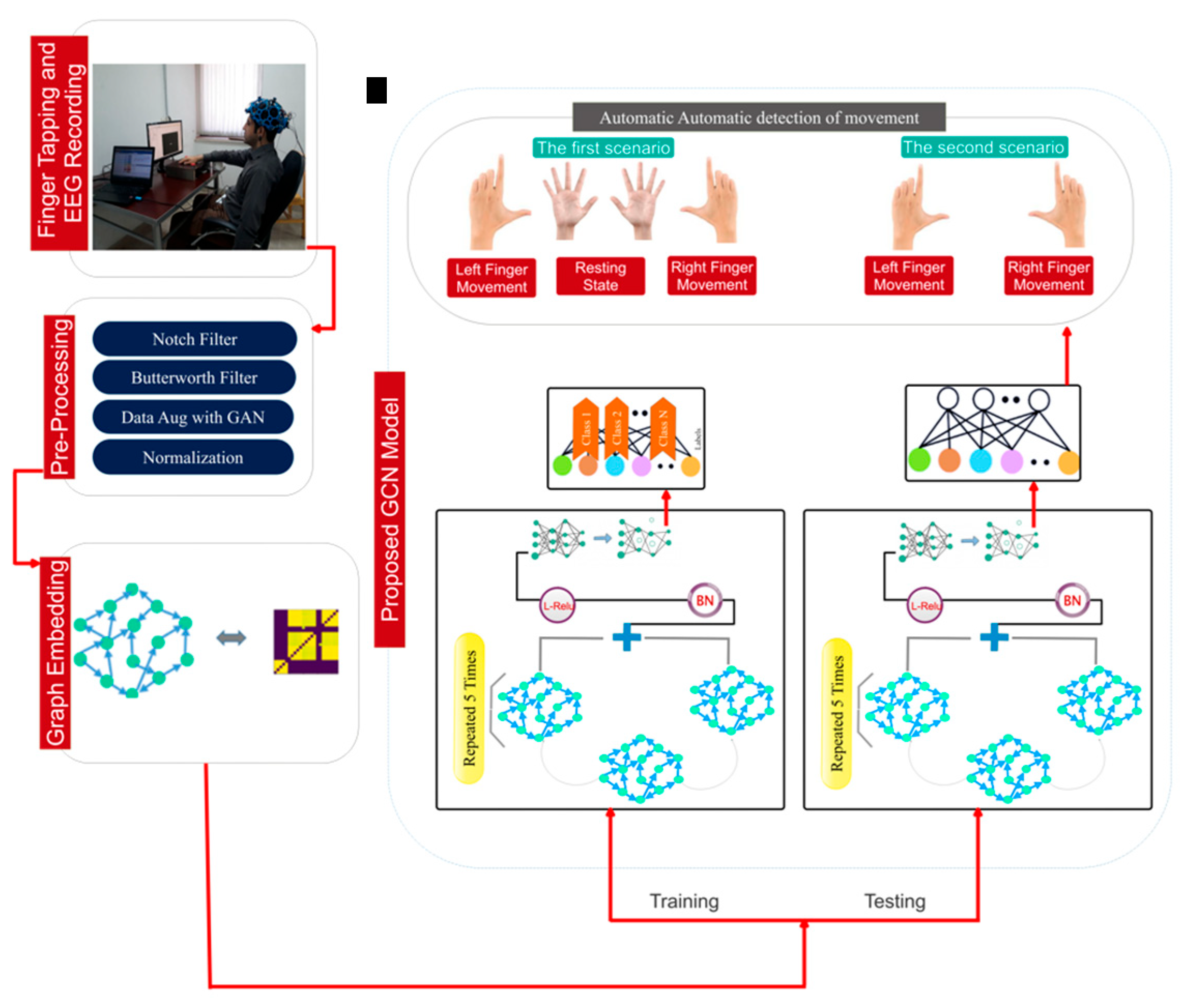

- A. Preparation of a database of EEG signals recorded during movement-intention tests in two separate scenarios

- B. Presentation of an intelligent system for the automatic classification of movement intention, based on a combination of graph theory and deep convolutional networks

- C. Presentation of a new model with high speed and accuracy for classifying left finger stroke, right finger stroke, and the resting state

- D. Achievement of the highest classification accuracy in the two-class mode compared with recent research

- E. Applicability of the algorithm in noisy environments, so that the proposed model can be used in online applications

2. Materials and Procedures

2.1. Generative Adversarial Networks (GANs)

2.2. Graph Convolutional Network

3. Proposed Model

3.1. Data Acquisition

3.2. Pre-Processing of EEG Data

- I. To eliminate the 50 Hz interference from the mains power supply, a notch filter was applied to the EEG data collected from the F3-C3, Fz-Cz, F4-C4, C3-P3, Cz-Pz, and C4-P4 channel pairs.

- II. The recorded data were then passed through a second-order Butterworth filter with a passband of 0.05 to 60 Hz, applied to each of the recorded channels.

- III. The recorded data are augmented with a GAN to mitigate overfitting. As noted earlier, augmentation is carried out by the GAN's two networks, the generator and the discriminator. The generator transforms a 100-dimensional vector drawn from a uniform distribution into a one-dimensional signal of size 1 × 204,800. It consists of six convolutional layers with dimensions of 512, 1024, 2048, 4096, 8192, and 204,800, each using batch normalization and ReLU activation. The number of repetitions and the learning rate are set to 150 and 0.01, respectively. The discriminator, which consists of six dense layers, receives a one-dimensional vector as input and assesses its authenticity. With the GAN, the data are increased from 204,800 dimensions to 250,000 dimensions.

- IV. In the final step, the data are normalized to the range 0 to 1 to facilitate training.
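The filtering and normalization steps (I, II, and IV) can be sketched as follows. This is a minimal, dependency-free illustration rather than the authors' implementation: the sampling rate `FS`, the notch quality factor `NOTCH_Q`, and the helper names `biquad`, `apply_biquad`, and `preprocess` are assumptions that do not appear in the text. The band-pass stage is approximated here by cascading a second-order Butterworth high-pass at 0.05 Hz with a second-order Butterworth low-pass at 60 Hz, both built from the standard bilinear-transform biquad formulas. Step III (GAN augmentation) is omitted.

```python
import math

FS = 500.0                       # assumed sampling rate (Hz); not stated in this section
NOTCH_Q = 30.0                   # assumed quality factor of the 50 Hz notch
BUTTER_Q = 1.0 / math.sqrt(2.0)  # Q for which a biquad is a 2nd-order Butterworth


def biquad(kind, f0, fs, q):
    """Return (b, a) coefficients of a biquad section, normalized so a[0] == 1."""
    w0 = 2.0 * math.pi * f0 / fs
    alpha = math.sin(w0) / (2.0 * q)
    c = math.cos(w0)
    if kind == "notch":
        b = [1.0, -2.0 * c, 1.0]
    elif kind == "lowpass":
        b = [(1.0 - c) / 2.0, 1.0 - c, (1.0 - c) / 2.0]
    elif kind == "highpass":
        b = [(1.0 + c) / 2.0, -(1.0 + c), (1.0 + c) / 2.0]
    else:
        raise ValueError(f"unknown filter kind: {kind}")
    a0 = 1.0 + alpha
    return [v / a0 for v in b], [1.0, -2.0 * c / a0, (1.0 - alpha) / a0]


def apply_biquad(coeffs, x):
    """Filter the sample list x with one biquad (direct form I, zero initial state)."""
    b, a = coeffs
    x1 = x2 = y1 = y2 = 0.0
    y = []
    for xn in x:
        yn = b[0] * xn + b[1] * x1 + b[2] * x2 - a[1] * y1 - a[2] * y2
        x2, x1 = x1, xn
        y2, y1 = y1, yn
        y.append(yn)
    return y


def preprocess(x, fs=FS):
    """Apply steps I, II, and IV to one channel given as a list of samples."""
    x = apply_biquad(biquad("notch", 50.0, fs, NOTCH_Q), x)       # step I: 50 Hz notch
    x = apply_biquad(biquad("highpass", 0.05, fs, BUTTER_Q), x)   # step II: lower band edge
    x = apply_biquad(biquad("lowpass", 60.0, fs, BUTTER_Q), x)    # step II: upper band edge
    lo, hi = min(x), max(x)
    span = (hi - lo) or 1.0                                       # guard against a flat signal
    return [(v - lo) / span for v in x]                           # step IV: min-max to [0, 1]


# Usage: a synthetic channel with a 10 Hz component and 50 Hz interference.
channel = [math.sin(2 * math.pi * 10 * i / FS) + math.sin(2 * math.pi * 50 * i / FS)
           for i in range(int(10 * FS))]
clean = preprocess(channel)
```

In practice, a signal-processing library would normally supply these filters, for example `scipy.signal.iirnotch` and `scipy.signal.butter`.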

3.3. Graph Design

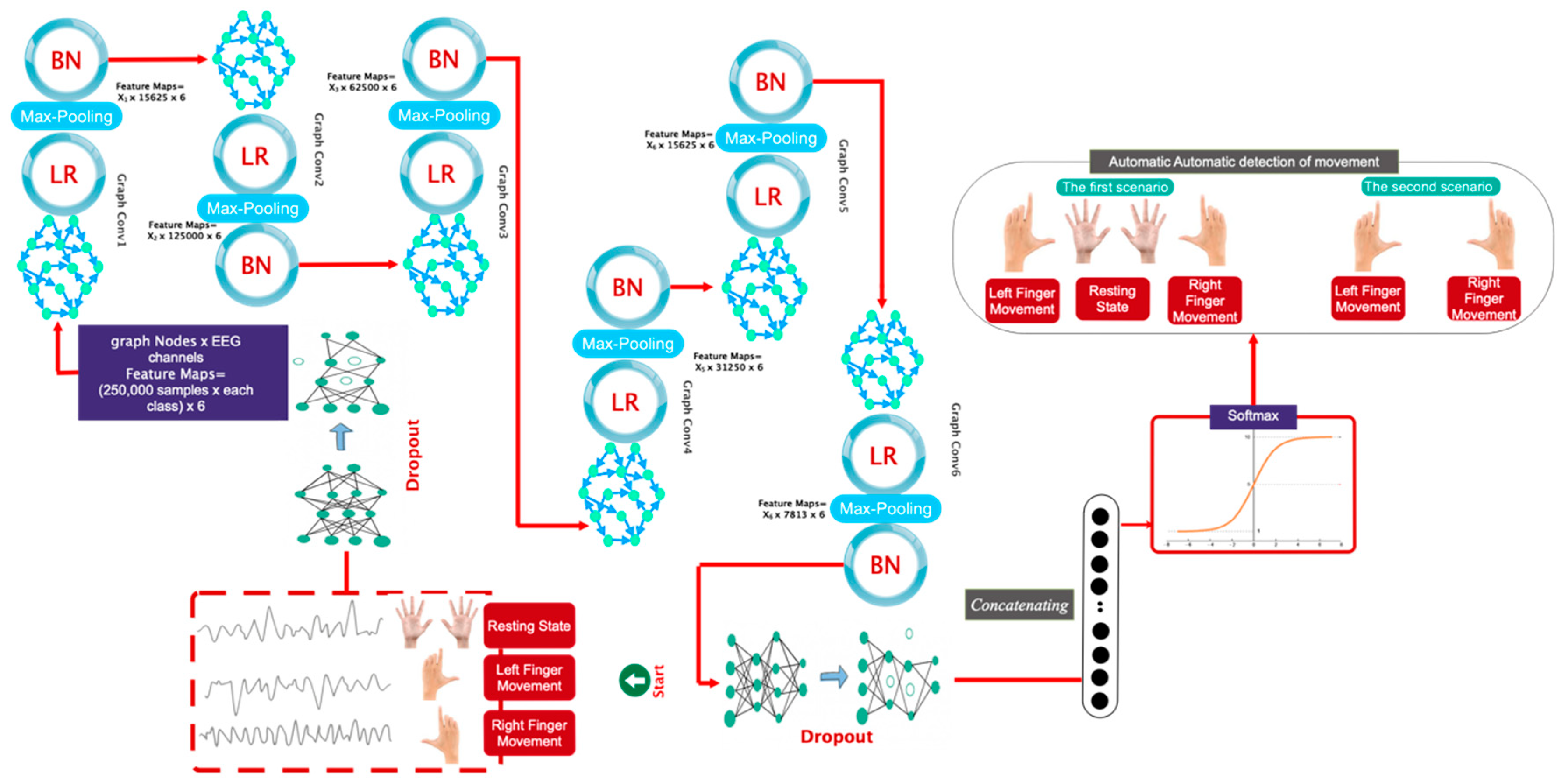

3.4. Customized Architecture

3.5. Series of Tests, Validation, and Training

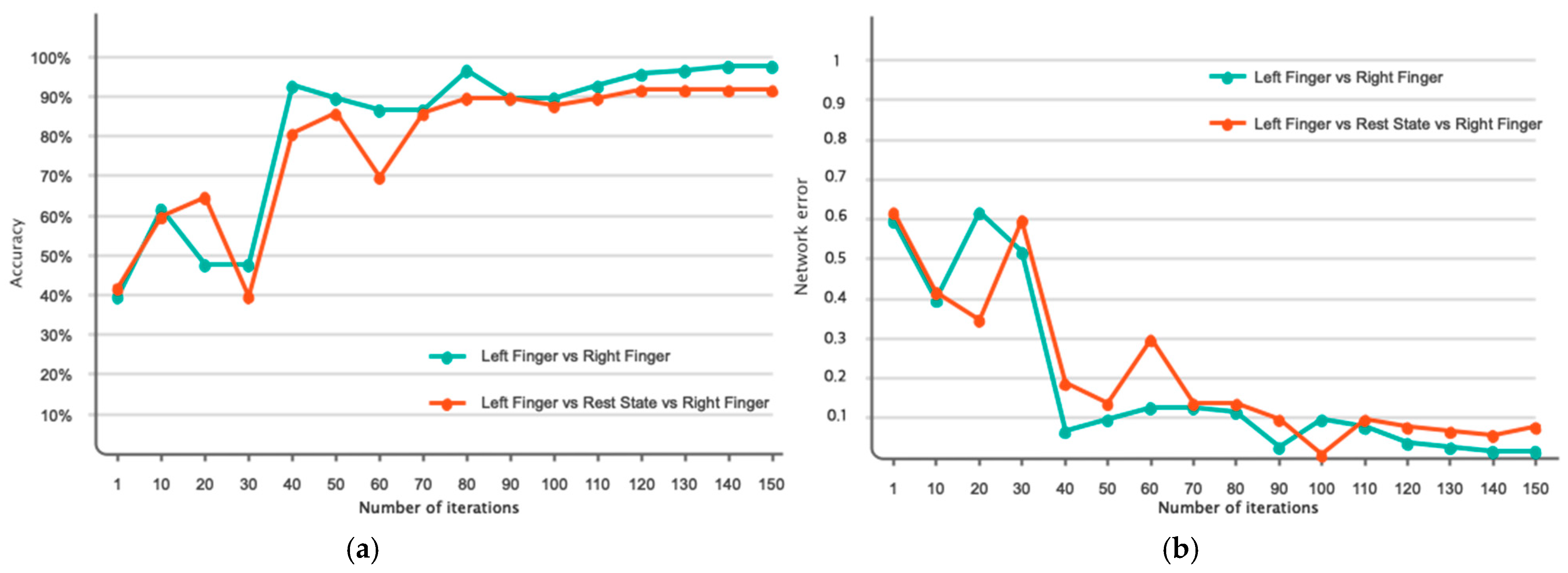

4. Experimental Results

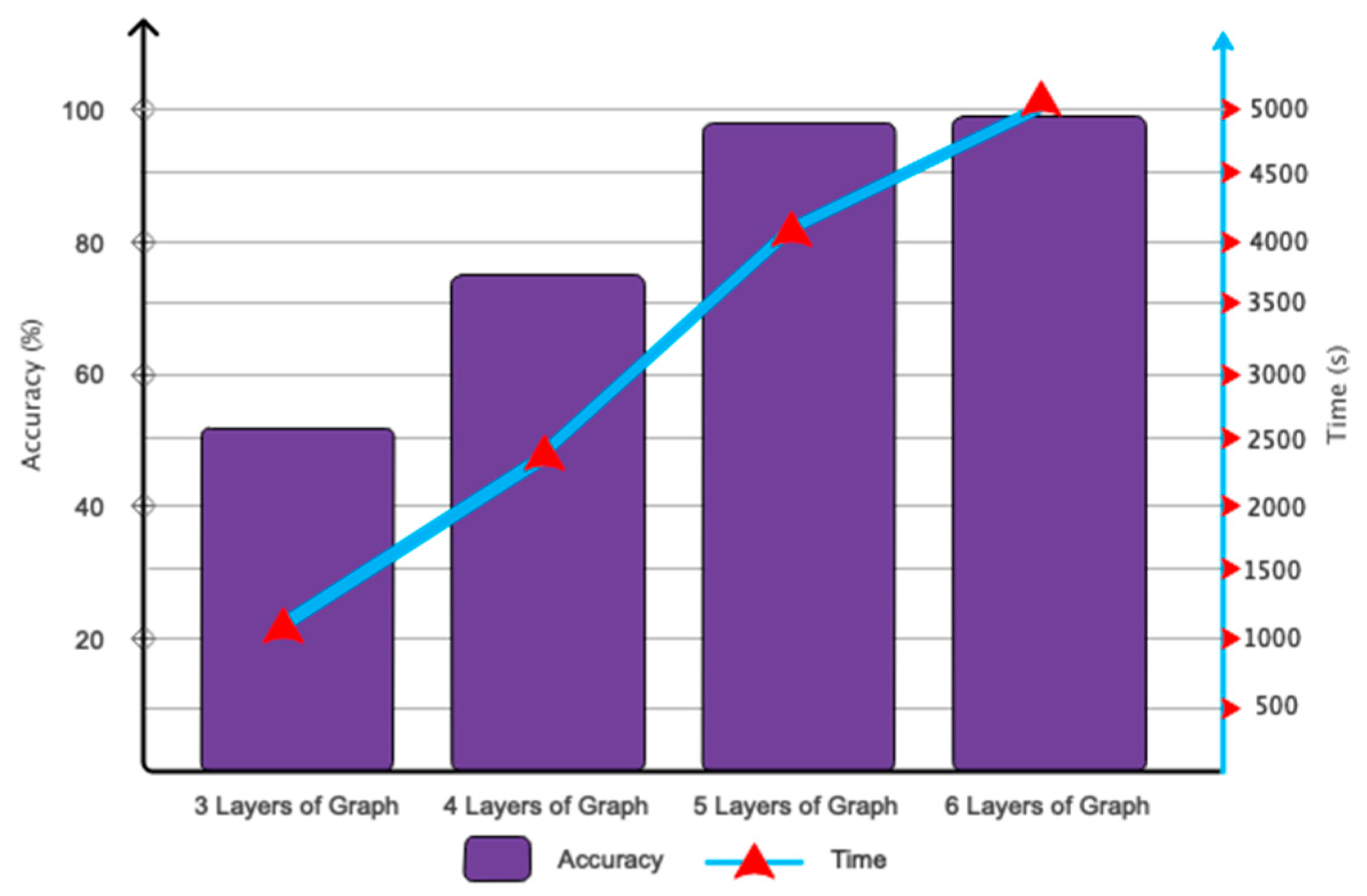

4.1. Enhancing Outcomes

4.2. Results of the Simulation

4.3. Comparison with Current Methodologies and Research

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Nawaser, K.; Jafarkhani, F.; Khamoushi, S.; Yazdi, A.; Mohsenifard, H.; Gharleghi, B. The Dark Side of Digitalization: A Visual Journey of Research through Digital Game Addiction and Mental Health. IEEE Eng. Manag. Rev. 2024, 1, 1–27.

- Li, C.; Zhang, Z.; Zhang, X.; Huang, G.; Liu, Y.; Chen, X. EEG-Based Emotion Recognition via Transformer Neural Architecture Search. IEEE Trans. Ind. Inform. 2022, 19, 6016–6025.

- Khalil, M.A.; Can, J.; George, K. Deep Learning Applications in Brain Computer Interface Based Lie Detection. In Proceedings of the 2023 IEEE 13th Annual Computing and Communication Workshop and Conference (CCWC), Las Vegas, NV, USA, 8–11 March 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 189–192.

- Kang, Q.; Li, F.; Gao, J. Exploring the Functional Brain Network of Deception in Source-Level EEG via Partial Mutual Information. Electronics 2023, 12, 1633.

- Li, F.; Zhu, H.; Xu, J.; Gao, Q.; Guo, H.; Wu, S.; Li, X.; He, S. Lie detection using fNIRS monitoring of inhibition-related brain regions discriminates infrequent but not frequent liars. Front. Hum. Neurosci. 2018, 12, 71.

- Sheykhivand, S.; Yousefi Rezaii, T.; Mousavi, Z.; Meshini, S. Automatic Stage Scoring of Single-Channel Sleep EEG Using CEEMD of Genetic Algorithm and Neural Network. Comput. Intell. Electr. Eng. 2018, 9, 15–28.

- Delmas, H.; Denault, V.; Burgoon, J.K.; Dunbar, N.E. A review of automatic lie detection from facial features. J. Nonverbal Behav. 2024, 48, 93–136.

- Kanna, R.K.; Kripa, N.; Vasuki, R. Systematic Design Of Lie Detector System Utilizing EEG Signals Acquisition. Int. J. Sci. Technol. Res. 2021, 9, 610–612.

- Abootalebi, V.; Moradi, M.H.; Khalilzadeh, M.A. A new approach for EEG feature extraction in P300-based lie detection. Comput. Methods Programs Biomed. 2009, 94, 48–57.

- Amir, S.; Ahmed, N.; Chowdhry, B.S. Lie detection in interrogations using digital signal processing of brain waves. In Proceedings of the 2013 3rd International Conference on Instrumentation, Communications, Information Technology and Biomedical Engineering (ICICI-BME), Bandung, Indonesia, 7–8 November 2013; IEEE: Piscataway, NJ, USA, 2013; pp. 209–214.

- Mohammed, I.J.; George, L.E. A Survey for Lie Detection Methodology Using EEG Signal Processing. J. Al-Qadisiyah Comput. Sci. Math. 2022, 14, 42–54.

- Gao, J.; Tian, H.; Yang, Y.; Yu, X.; Li, C.; Rao, N. A novel algorithm to enhance P300 in single trials: Application to lie detection using F-score and SVM. PLoS ONE 2014, 9, e109700.

- Simbolon, A.I.; Turnip, A.; Hutahaean, J.; Siagian, Y.; Irawati, N. An experiment of lie detection based EEG-P300 classified by SVM algorithm. In Proceedings of the 2015 International Conference on Automation, Cognitive Science, Optics, Micro Electro-Mechanical System, and Information Technology (ICACOMIT), Bandung, Indonesia, 29–30 October 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 68–71.

- Saini, N.; Bhardwaj, S.; Agarwal, R. Classification of EEG signals using hybrid combination of features for lie detection. Neural Comput. Appl. 2020, 32, 3777–3787.

- Dodia, S.; Edla, D.R.; Bablani, A.; Cheruku, R. Lie detection using extreme learning machine: A concealed information test based on short-time Fourier transform and binary bat optimization using a novel fitness function. Comput. Intell. 2020, 36, 637–658.

- Yohan, K. Using EEG and Machine Learning to Perform Lie Detection. Bachelor’s Thesis, University of Moratuwa, Moratuwa, Sri Lanka, 2019.

- Baghel, N.; Singh, D.; Dutta, M.K.; Burget, R.; Myska, V. Truth identification from EEG signal by using convolution neural network: Lie detection. In Proceedings of the 2020 43rd International Conference on Telecommunications and Signal Processing (TSP), Milan, Italy, 7–9 July 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 550–553.

- Zhang, S.; Tong, H.; Xu, J.; Maciejewski, R. Graph convolutional networks: A comprehensive review. Comput. Soc. Netw. 2019, 6, 11.

- Iqbal, T.; Ali, H. Generative adversarial network for medical images (MI-GAN). J. Med. Syst. 2018, 42, 231.

- Chen, M.; Wei, Z.; Huang, Z.; Ding, B.; Li, Y. Simple and deep graph convolutional networks. In Proceedings of the International Conference on Machine Learning, Virtual, 13–18 July 2020; PMLR: New York, NY, USA, 2020; pp. 1725–1735.

- Murugeswari, P.; Vijayalakshmi, S. A New Method of Interval Type-2 Fuzzy-Based CNN for Image Classification. In Computational Vision and Bio-Inspired Computing: ICCVBIC 2020; Springer: Singapore, 2021; pp. 733–746.

- Somers, L.P.; Bosten, J.M. Predicted effectiveness of EnChroma multi-notch filters for enhancing color perception in anomalous trichromats. Vis. Res. 2024, 218, 108381.

- Suescún-Díaz, D.; Ule-Duque, G.; Cardoso-Páez, L.E. Butterworth filter to reduce reactivity fluctuations. Karbala Int. J. Mod. Sci. 2024, 10, 8.

- Henderi, H.; Wahyuningsih, T.; Rahwanto, E. Comparison of Min-Max normalization and Z-Score Normalization in the K-nearest neighbor (kNN) Algorithm to Test the Accuracy of Types of Breast Cancer. Int. J. Inform. Inf. Syst. 2021, 4, 13–20.

- Alom, M.Z.; Taha, T.M.; Yakopcic, C.; Westberg, S.; Sidike, P.; Nasrin, M.S.; Van Esesn, B.C.; Awwal, A.A.S.; Asari, V.K. The history began from alexnet: A comprehensive survey on deep learning approaches. arXiv 2018, arXiv:1803.01164.

- Nouleho, S.; Barth, D.; Quessette, F.; Weisser, M.-A.; Watel, D.; David, O. A new graph modelisation for molecule similarity. arXiv 2018, arXiv:1807.04528.

- Targ, S.; Almeida, D.; Lyman, K. Resnet in resnet: Generalizing residual architectures. arXiv 2016, arXiv:1603.08029.

- Shahini, N.; Bahrami, Z.; Sheykhivand, S.; Marandi, S.; Danishvar, M.; Danishvar, S.; Roosta, Y. Automatically identified EEG signals of movement intention based on CNN network (End-To-End). Electronics 2022, 11, 3297.

- Jochumsen, M.; Poulsen, K.B.; Sørensen, S.L.; Sulkjær, C.S.; Corydon, F.K.; Strauss, L.S.; Roos, J.B. Single-trial movement intention detection estimation in patients with Parkinson’s disease: A movement-related cortical potential study. J. Neural Eng. 2024, 21, 046036.

- Lutes, N.; Nadendla, V.S.S.; Krishnamurthy, K. Convolutional spiking neural networks for intent detection based on anticipatory brain potentials using electroencephalogram. Sci. Rep. 2024, 14, 8850.

- Choi, H.J.; Das, S.; Peng, S.; Bajcsy, R.; Figueroa, N. On the Feasibility of EEG-based Motor Intention Detection for Real-Time Robot Assistive Control. arXiv 2024, arXiv:2403.08149.

- Dong, R.; Zhang, X.; Li, H.; Masengo, G.; Zhu, A.; Shi, X.; He, C. EEG generation mechanism of lower limb active movement intention and its virtual reality induction enhancement: A preliminary study. Front. Neurosci. 2024, 17, 1305850.

- You, A.; Kim, J.K.; Ryu, I.H.; Yoo, T.K. Application of generative adversarial networks (GAN) for ophthalmology image domains: A survey. Eye Vis. 2022, 9, 6.

- Wang, Y.; Sun, Y.; Liu, Z.; Sarma, S.E.; Bronstein, M.M.; Solomon, J.M. Dynamic graph cnn for learning on point clouds. ACM Trans. Graph. (Tog) 2019, 38, 1–12.

- Bogaerts, T.; Masegosa, A.D.; Angarita-Zapata, J.S.; Onieva, E.; Hellinckx, P. A graph CNN-LSTM neural network for short and long-term traffic forecasting based on trajectory data. Transp. Res. Part C Emerg. Technol. 2020, 112, 62–77.

- Si, C.; Yu, W.; Zhou, P.; Zhou, Y.; Wang, X.; Yan, S. Inception transformer. Adv. Neural Inf. Process. Syst. 2022, 35, 23495–23509.

- Wen, L.; Li, X.; Li, X.; Gao, L. A new transfer learning based on VGG-19 network for fault diagnosis. In Proceedings of the 2019 IEEE 23rd international conference on computer supported cooperative work in design (CSCWD), Porto, Portugal, 6–8 May 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 205–209.

- Du, G.; Cao, X.; Liang, J.; Chen, X.; Zhan, Y. Medical Image Segmentation based on U-Net: A Review. J. Imaging Sci. Technol. 2020, 64, 020508–1–020508-12.

- Guo, G.; Wang, H.; Bell, D.; Bi, Y.; Greer, K. KNN model-based approach in classification. In Proceedings of the On The Move to Meaningful Internet Systems 2003: CoopIS, DOA, and ODBASE: OTM Confederated International Conferences, CoopIS, DOA, and ODBASE 2003, Catania, Italy, 3–7 November 2003; Springer: Berlin/Heidelberg, Germany, 2003; pp. 986–996.

- Huang, S.; Cai, N.; Pacheco, P.P.; Narrandes, S.; Wang, Y.; Xu, W. Applications of support vector machine (SVM) learning in cancer genomics. Cancer Genom. Proteom. 2018, 15, 41–51.

- Popescu, M.-C.; Balas, V.E.; Perescu-Popescu, L.; Mastorakis, N. Multilayer perceptron and neural networks. WSEAS Trans. Circuits Syst. 2009, 8, 579–588.

- Sajjadi Mohammadabadi, S.M. From Generative AI to Innovative AI: An Evolutionary Roadmap. arXiv 2025, arXiv:2503.11419.

- Sadeghi, S.; Niu, C. Augmenting Human Decision-Making in K-12 Education: The Role of Artificial Intelligence in Assisting the Recruitment and Retention of Teachers of Color for Enhanced Diversity and Inclusivity. Leadersh. Policy Sch. 2024, 1–21.

- Golkarfard, A.; Sadeghmalakabadi, S.; Talebian, S.; Basirat, S.; Golchin, N. Ethical Challenges of AI Integration in Architecture and Built Environment. Curr. Opin. 2025, 5, 1136–1147.

- Ahmadirad, Z. Evaluating the Influence of AI on Market Values in Finance: Distinguishing Between Authentic Growth and Speculative Hype. Int. J. Adv. Res. Humanit. Law 2024, 1, 50–57.

- Dehghanpour Abyaneh, M.; Narimani, P.; Javadi, M.S.; Golabchi, M.; Attarsharghi, S.; Hadad, M. Predicting Surface Roughness and Grinding Forces in UNS S34700 Steel Grinding: A Machine Learning and Genetic Algorithm Approach to Coolant Effects. Physchem 2024, 4, 495–523.

- Narimani, P.; Abyaneh, M.D.; Golabchi, M.; Golchin, B.; Haque, R.; Jamshidi, A. Digitalization of Analysis of a Concrete Block Layer Using Machine Learning as a Sustainable Approach. Sustainability 2024, 16, 7591.

- Mahdavimanshadi, M.; Anaraki, M.G.; Mowlai, M.; Ahmadirad, Z. A Multistage Stochastic Optimization Model for Resilient Pharmaceutical Supply Chain in COVID-19 Pandemic Based on Patient Group Priority. In Proceedings of the 2024 Systems and Information Engineering Design Symposium (SIEDS), Charlottesville, VA, USA, 3 May 2024; pp. 382–387.

- Afsharfard, A.; Jafari, A.; Rad, Y.A.; Tehrani, H.; Kim, K.C. Modifying Vibratory Behavior of the Car Seat to Decrease the Neck Injury. J. Vib. Eng. Technol. 2023, 11, 1115–1126.

- Sadeghi, S.; Marjani, T.; Hassani, A.; Moreno, J. Development of Optimal Stock Portfolio Selection Model in the Tehran Stock Exchange by Employing Markowitz Mean-Semivariance Model. J. Financ. Issues 2022, 20, 47–71.

- Mohammedi, M.; Naseri, M.; Mehrabi Jorshary, K.; Golchin, N.; Akhmedov, S.; Uglu, V.S.O. Economic, Environmental, and Technical Optimal Energy Scheduling of Smart Hybrid Energy System Considering Demand Response Participation. Oper. Res. Forum 2025, 6, 83.

- Naseri, S.; Eshraghi, S.; Talebian, S. Innovative Sustainable Architecture: A Lesson Learned from Amphibious House in the UK. Curr. Opin. 2024, 4, 766–777.

- Du, T.X.; Jorshary, K.M.; Seyedrezaei, M.; Uglu, V.S.O. Optimal Energy Scheduling of Load Demand with Two-Level Multi-Objective Functions in Smart Electrical Grid. Oper. Res. Forum 2025, 6, 66.

- Amiri, N.; Honarmand, M.; Dizani, M.; Moosavi, A.; Kazemzadeh Hannani, S. Shear-Thinning Droplet Formation inside a Microfluidic T-Junction under an Electric Field. Acta Mech. 2021, 232, 2535–2554.

- Mohammadabadi, S.M.S.; Zawad, S.; Yan, F.; Yang, L. Speed Up Federated Learning in Heterogeneous Environments: A Dynamic Tiering Approach. IEEE Internet Things J. 2024, 12, 5026–5035.

- Basirat, S.; Raoufi, S.; Bazmandeh, D.; Khamoushi, S.; Entezami, M. Ranking of AI-Based Criteria in Health Tourism Using Fuzzy SWARA Method. Comput. Decis. Mak. 2025, 2, 530–545.

- Khatami, S.S.; Shoeibi, M.; Salehi, R.; Kaveh, M. Energy-Efficient and Secure Double RIS-Aided Wireless Sensor Networks: A QoS-Aware Fuzzy Deep Reinforcement Learning Approach. J. Sens. Actuator Netw. 2025, 14, 18.

- Nezhad, K.K.; Ahmadirad, Z.; Mohammadi, A.T. The Dynamics of Modern Business: Integrating Research Findings into Practical Management; Nobel Sciences: Stockholm, Sweden, 2024.

- Ahmadirad, Z. The Beneficial Role of Silicon Valley’s Technological Innovations and Venture Capital in Strengthening Global Financial Markets. Int. J. Mod. Achiev. Sci. Eng. Technol. 2024, 1, 9–17.

- Saremi, S.Y.; Taghizadeh, M. RFID Adoption by Supply Chain Organizations in Malaysia. Int. Proc. Econ. Dev. Res. 2013, 59, 178.

- Pazouki, S.; Jamshidi, M.B.; Jalali, M.; Tafreshi, A. The Integration of Big Data in FinTech: Review of Enhancing Financial Services through Advanced Technologies. World J. Adv. Res. Rev. 2025, 25, 546–556.

- Mansouri, S.; Mohammed, H.; Korchiev, N.; Anyanwu, K. Taming Smart Contracts with Blockchain Transaction Primitives: A Possibility? In Proceedings of the 2024 IEEE International Conference on Blockchain (Blockchain), Trinity College Dublin, Dublin, Ireland, 19–22 August 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 575–582.

- Mansouri, S.; Samatova, V.; Korchiev, N.; Anyanwu, K. DeMaTO: An Ontology for Modeling Transactional Behavior in Decentralized Marketplaces. In Proceedings of the 2023 IEEE/WIC International Conference on Web Intelligence and Intelligent Agent Technology (WI-IAT), Venice, Italy, 26–29 October 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 171–180.

- Khorsandi, H.; Mohsenibeigzadeh, M.; Tashakkori, A.; Kazemi, B.; Khorashadi Moghaddam, P.; Ahmadirad, Z. Driving Innovation in Education: The Role of Transformational Leadership and Knowledge Sharing Strategies. Curr. Opin. 2024, 4, 505–515.

- Mohammadabadi, S.M.S.; Yang, L.; Yan, F.; Zhang, J. Communication-Efficient Training Workload Balancing for Decentralized Multi-Agent Learning. In Proceedings of the 2024 IEEE 44th International Conference on Distributed Computing Systems (ICDCS), Jersey City, NJ, USA, 23–26 July 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 680–691.

- Asadi, M.; Taheri, R. Enhancing Peer Assessment and Engagement in Online IELTS Writing Course through a Teacher’s Multifaceted Approach and AI Integration. Technol. Assist. Lang. Educ. 2024, 2, 94–117.

- Abbasi, E.; Dwyer, E. The Efficacy of Commercial Computer Games as Vocabulary Learning Tools for EFL Students: An Empirical Investigation. Sunshine State TESOL J. 2024, 16, 24–35.

- Mohaghegh, S.; Kondo, S.; Yemiscioglu, G.; Muhtaroglu, A. A Novel Multiplier Hardware Organization for Finite Fields Defined by All-One Polynomials. IEEE Trans. Circuits Syst. II Express Briefs 2022, 69, 5084–5088.

- Mohaghegh, A.; Huang, C. Feature-Guided Sampling Strategy for Adaptive Model Order Reduction of Convection-Dominated Problems. arXiv 2025.

- Talebian, S.; Golkarieh, A.; Eshraghi, S.; Naseri, M.; Naseri, S. Artificial Intelligence Impacts on Architecture and Smart Built Environments: A Comprehensive Review. Adv. Civ. Eng. Environ. Sci. 2025, 2, 45–56.

- Karkehabadi, A.; Sadeghmalakabadi, S. Evaluating Deep Learning Models for Architectural Image Classification: A Case Study on the UC Davis Campus. In Proceedings of the 2024 IEEE 8th International Conference on Information and Communication Technology (CICT), Prayagraj, UP, India, 6–8 December 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 1–6.

- Naseri, S. AI in Architecture and Urban Design and Planning: Case Studies on Three AI Applications. GSC Adv. Res. Rev. 2024, 21, 565–577.

- Hanif, E.; Hashemnejad, H.; Ghafourian, M. Factors Affecting the Augmentation of Spatial Dynamics in Social Sustainability with an Emphasis on Human-Orientation of Space. J. Hist. Cult. Art Res. 2017, 6, 419–435.

- Barati Nia, A.; Moug, D.M.; Huffman, A.P.; DeJong, J.T. Numerical Investigation of Piezocone Dissipation Tests in Clay: Sensitivity of Interpreted Coefficient of Consolidation to Rigidity Index Selection. In Cone Penetration Testing 2022; CRC Press: Boca Raton, FL, USA, 2022; pp. 282–287.

- Barati-Nia, A.; Parrott, A.E.; Sorenson, K.; Moug, D.M.; Khosravifar, A. Comparing Cyclic Direct Simple Shear Behavior of Fine-Grained Soil Prepared with SHANSEP or Recompression Approaches. In Proceedings of the Geotechnical Frontiers 2025, Louisville, KY, USA, 2–5 March 2025; ASCE: Reston, VA, USA, 2025; pp. 419–429.

- Splechtna, R.; Behravan, M.; Jelovic, M.; Gracanin, D.; Hauser, H.; Matkovic, K. Interactive Design-of-Experiments: Optimizing a Cooling System. IEEE Trans. Vis. Comput. Graph. 2024, 31, 44–53.

- Entezami, M.; Basirat, S.; Moghaddami, B.; Bazmandeh, D.; Charkhian, D. Examining the Importance of AI-Based Criteria in the Development of the Digital Economy: A Multi-Criteria Decision-Making Approach. J. Soft Comput. Decis. Anal. 2025, 3, 72–95.

- Roshdieh, N.; Farzad, G. The Effect of Fiscal Decentralization on Foreign Direct Investment in Developing Countries: Panel Smooth Transition Regression. Int. Res. J. Econ. Manag. Stud. (IRJEMS) 2024, 3, 133–140.

- Pazouki, S.; Jamshidi, M.B.; Jalali, M.; Tafreshi, A. Artificial Intelligence and Digital Technologies in Finance: A Comprehensive Review. J. Econ. Financ. Account. Stud. 2025, 7, 54–69.

- Ahmadirad, Z. The Role of AI and Machine Learning in Supply Chain Optimization. Int. J. Mod. Achiev. Sci. Eng. Technol. 2025, 2, 1–8.

- Motta de Castro, E.; Bozorgmehrian, F.; Carrola, M.; Koerner, H.; Samouei, H.; Asadi, A. Sulfur-Driven Reactive Processing of Multiscale Graphene/Carbon Fiber-Polyether Ether Ketone (PEEK) Composites with Tailored Crystallinity and Enhanced Mechanical Performance. Compos. Part B Eng. 2025, 295, 112180.

- Pazouki, S.; Jamshidi, M.B.; Jalali, M.; Tafreshi, A. Transformative Impact of AI and Digital Technologies on the FinTech Industry: A Comprehensive Review. Int. J. Adv. Res. Humanit. Law 2025, 2, 1–27.

- Azadmanesh, M.; Roshanian, J.; Georgiev, K.; Todrov, M.; Hassanalian, M. Synchronization of Angular Velocities of Chaotic Leader-Follower Satellites Using a Novel Integral Terminal Sliding Mode Controller. Aerosp. Sci. Technol. 2024, 150, 109211.

- Kavianpour, S.; Haghighi, F.; Sheykhfard, A.; Das, S.; Fountas, G.; Oshanreh, M.M. Assessing the Risk of Pedestrian Crossing Behavior on Suburban Roads Using Structural Equation Model. J. Traffic Transp. Eng. (Engl. Ed.) 2024, 11, 853–866.

- Pourasghar, A.; Mehdizadeh, E.; Wong, T.C.; Hoskoppal, A.K.; Brigham, J.C. A Computationally Efficient Approach for Estimation of Tissue Material Parameters from Clinical Imaging Data Using a Level Set Method. J. Eng. Mech. 2024, 150, 04024075.

- Espahbod, S. Intelligent Freight Transportation and Supply Chain Drivers: A Literature Survey. In Proceedings of the Seventh International Forum on Decision Sciences; Springer: Singapore, 2020; pp. 49–56.

- Mirbakhsh, A.; Lee, J.; Besenski, D. Spring–Mass–Damper-Based Platooning Logic for Automated Vehicles. Transp. Res. Rec. 2023, 2677, 1264–1274.

- Sajjadi Mohammadabadi, S.M.; Entezami, M.; Karimi Moghaddam, A.; Orangian, M.; Nejadshamsi, S. Generative Artificial Intelligence for Distributed Learning to Enhance Smart Grid Communication. Int. J. Intell. Netw. 2024, 5, 267–274.

- Mirbakhsh, A.; Lee, J.; Besenski, D. Development of a Signal-Free Intersection Control System for CAVs and Corridor Level Impact Assessment. Future Transp. 2023, 3, 552–567.

- Dokhanian, S.; Sodagartojgi, A.; Tehranian, K.; Ahmadirad, Z.; Moghaddam, P.K.; Mohsenibeigzadeh, M. Exploring the Impact of Supply Chain Integration and Agility on Commodity Supply Chain Performance. World J. Adv. Res. Rev. 2024, 22, 441–450.

- Gudarzi Farahani, Y.; Mirarab Baygi, S.A.; Abbasi Nahoji, M.; Roshdieh, N. Presenting the Early Warning Model of Financial Systemic Risk in Iran’s Financial Market Using the LSTM Model. Int. J. Finance Manag. Account. 2026, 11, 29–38.

- Ghoreishi, E.; Abolhassani, B.; Huang, Y.; Acharya, S.; Lou, W.; Hou, Y.T. Cyrus: A DRL-Based Puncturing Solution to URLLC/eMBB Multiplexing in O-RAN. In Proceedings of the 2024 33rd International Conference on Computer Communications and Networks (ICCCN), Kailua-Kona, HI, USA, 29–31 July 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 1–9.

| Layer | Shape of Weight Tensor | Shape of Bias | Number of Parameters |
|---|---|---|---|
| Graph 1 | (x1, 250,000, 250,000) | 250,000 | 62,500,000,000 × x1 + 250,000 |
| Graph 2 | (x2, 250,000, 125,000) | 125,000 | 31,250,000,000 × x2 + 125,000 |
| Graph 3 | (x3, 125,000, 62,500) | 62,500 | 7,812,500,000 × x3 + 62,500 |
| Graph 4 | (x4, 31,250, 15,625) | 15,625 | 488,281,250 × x4 + 31,250 |
| Graph 5 | (x5, 15,625, 7813) | 7813 | 122,078,125 × x5 + 15,625 |
| Graph 6 | (x6, 7813, 3907) | 3907 | 30,525,391 × x6 + 7813 |
| Flattening Layer | - | 2 | 3907 |
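The parameter counts in the first three rows follow directly from the listed shapes: a weight tensor of shape (x, n_in, n_out) contributes x · n_in · n_out weights, and the bias adds one value per output feature. A short sketch of that arithmetic is given below. The function name `graph_conv_params` is hypothetical, and x = 1 is used only for illustration because the value of x is not given here.

```python
def graph_conv_params(x, n_in, n_out):
    """Count the weights of a (x, n_in, n_out) tensor plus one bias per output feature."""
    return x * n_in * n_out + n_out


# Shapes of Graph 1 to Graph 3 as listed in the table; x = 1 is illustrative only.
layers = {
    "Graph 1": (250_000, 250_000),
    "Graph 2": (250_000, 125_000),
    "Graph 3": (125_000, 62_500),
}
for name, (n_in, n_out) in layers.items():
    print(name, f"{graph_conv_params(1, n_in, n_out):,}")
```

Counts of this size explain why the number of nodes is halved from layer to layer: each halving of both dimensions cuts the weight count by a factor of four.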

| Model | Parameters | Values | Optimal Value |
|---|---|---|---|
| GAN | Batch Size | 4, 6, 8, 10, 12 | 10 |
| | Optimizer | Adam, SGD, Adamax | Adam |
| | Conv layers | 3, 4, 5, 6 | 6 |
| | Learning Rate | 0.1, 0.01, 0.001, 0.0001 | 0.01 |
| | Number of GConv | 2, 3, 4, 5, 6, 7 | 6 |
| ConvGraph | Batch Size in DFCGN | 8, 16, 32 | 32 |
| | Batch Normalization | ReLU, Leaky-ReLU | Leaky-ReLU |
| | Learning Rate in DFCGN | 0.1, 0.01, 0.001, 0.0001, 0.00001 | 0.0001 |
| | Dropout Rate | 0.1, 0.2, 0.3 | 0.1 |
| | Weight of Optimizer | 10 × 10⁻³, 10 × 10⁻⁴, 10 × 10⁻⁵, 10 × 10⁻⁶ | |

| Measurement Index | Accuracy (%) | Sensitivity (%) | Precision (%) | Specificity (%) | Kappa Coefficient |
|---|---|---|---|---|---|
| 2-class | 98.1 | 97.4 | 97.4 | 97.8 | 0.88 |
| 3-class | 92.2 | 91.7 | 89.4 | 91.4 | 0.81 |
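The indices reported in this table can all be derived from a confusion matrix. The sketch below covers the two-class case only and uses the standard textbook definitions. The function name `binary_metrics` and the counts in the usage line are illustrative assumptions, not values from this study.

```python
def binary_metrics(tp, fn, fp, tn):
    """Compute accuracy, sensitivity, precision, specificity, and Cohen's kappa."""
    total = tp + fn + fp + tn
    accuracy = (tp + tn) / total
    sensitivity = tp / (tp + fn)   # true-positive rate (recall)
    precision = tp / (tp + fp)     # positive predictive value
    specificity = tn / (tn + fp)   # true-negative rate
    # Cohen's kappa: observed agreement corrected for chance agreement.
    p_chance = ((tp + fn) * (tp + fp) + (tn + fp) * (tn + fn)) / total ** 2
    kappa = (accuracy - p_chance) / (1.0 - p_chance)
    return {
        "accuracy": accuracy,
        "sensitivity": sensitivity,
        "precision": precision,
        "specificity": specificity,
        "kappa": kappa,
    }


# Usage with made-up counts (not taken from the paper).
metrics = binary_metrics(tp=45, fn=5, fp=10, tn=40)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

Because kappa discounts agreement expected by chance, it is typically lower than the raw accuracy on the same predictions.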

| Research | The Method Used | ACC (%) |
|---|---|---|
| Jochumsen et al. [24] | CSP + SVM | 80 |
| Xu et al. [25] | MRCP Component + KNN | 75 |
| Jiang et al. [26] | MRCP Component | 76 |
| Wairagkar et al. [27] | ERD Component + KNN | 78 |
| Shahini et al. [28] | CNN | 89 |
| Jochumsen et al. [29] | Handcrafted Features + KNN | 89 |
| Lutes et al. [30] | CNN | 98.50 (two class) |
| Choi et al. [31] | Handcrafted Features + SVM | 86 |
| Dong et al. [32] | Transfer Learning | 85 |
| Our Model | GAN + Graph Theory + CNN | 98.2 (two class), 92 (three class) |

| Method | Feature Learning (ACC) | Handcrafted Features (ACC) |
|---|---|---|
| KNN | 76% | 82% |
| SVM | 80% | 85% |
| CNN | 84% | 60% |
| MLP | 75% | 79% |
| P-M | 92% | 69% |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zare Lahijan, L.; Meshgini, S.; Afrouzian, R.; Danishvar, S. Improved Automatic Deep Model for Automatic Detection of Movement Intention from EEG Signals. Biomimetics 2025, 10, 506. https://doi.org/10.3390/biomimetics10080506
