Abstract

The Parrot Optimizer (PO) is a new optimization algorithm based on the behaviors of trained Pyrrhura Molinae parrots. In this paper, an improved PO (IPO) is proposed for solving global optimization problems and training the multilayer perceptron. The basic PO is enhanced by using three improvements, which are aerial search strategy, modified staying behavior, and improved communicating behavior. The aerial search strategy is derived from Arctic Puffin Optimization and is employed to enhance the exploration ability of PO. The staying behavior and communicating behavior of PO are modified using random movement and roulette fitness–distance balance selection methods to achieve a better balance between exploration and exploitation. To evaluate the optimization performance of the proposed IPO, twelve CEC2022 test functions and five standard classification datasets are selected for the experimental tests. The results between IPO and the other six well-known optimization algorithms show that IPO has superior performance for solving complex global optimization problems. The results between IPO and the other six well-known optimization algorithms show that IPO has superior performance for solving complex global optimization problems. In addition, IPO has been applied to optimize a multilayer perceptron model for classifying the oral English teaching quality evaluation dataset. An MLP model with a 10-21-3 structure is constructed for the classification of evaluation outcomes. The results show that IPO-MLP outperforms other algorithms with the highest classification accuracy of 88.33%, which proves the effectiveness of the developed method.

1. Introduction

Metaheuristic algorithms are one type of stochastic searching method used to find an optimal solution within a given search space. In recent decades, metaheuristic algorithms have become very popular for solving different types of optimization problems due to their flexibility, no-derivation mechanism, and simplicity [1]. The exploration and exploitation phases are two important processes for the metaheuristic algorithms. The former is used to find the optimal solution in the global scope and avoid the local optimum, while the latter is used to improve the accuracy of the optimal solution. Up to now, researchers have proposed various metaheuristic algorithms according to the natural biological habits, physical and chemical laws, human behaviors and so on, such as Particle Swarm Optimization (PSO) [2], Grey Wolf Optimizer (GWO) [3], Snake Optimizer (SO) [4], Arithmetic Optimization Algorithm (AOA) [5], Reptile Search Algorithm (RSA) [6], Slime Mould Algorithm (SMA) [7], Remora Optimization Algorithm (ROA) [8], and recently proposed algorithms Pied Kingfisher Optimizer (PKO) [9], and Secretary Bird Optimization Algorithm (SBOA) [10].

One of the most important applications of metaheuristic algorithms is the training of artificial neural networks (ANNs). Among different types of artificial neural networks, the multilayer perceptron (MLP) has a simple structure and efficient performance and can be used to solve various classification problems in reality. The parameters, such as weights and biases of the MLP, can be optimized by the metaheuristic algorithms. Although there are many metaheuristic algorithms developed to train the MLP, according to the NFL theorem [11], new algorithms are always required when solving emerging complex optimization problems.

In the field of college oral English teaching, the goal is to improve students’ spoken language skills and develop their abilities to express their views clearly, accurately, and fluently, which are essential for their academic success, career development, and lifelong learning [12]. Strengthening supervision and feedback in the teaching process is a powerful means to ensure the teaching effect. Generally speaking, an integrated teaching quality evaluation system includes information from a supervisor, colleagues, students, and teachers. Therefore, the teaching quality evaluation model needs to consider a variety of factors, which can be a challenging nonlinear optimization problem. The traditional English teaching quality evaluation methods, such as the grey relational analysis method [13], the analytic hierarchy process [14], and fuzzy comprehensive evaluation method [15], are subjective and contain random defects, resulting in inaccurate evaluation results.

In the literature, there are some studies that have discussed teaching quality evaluations. For example, Zhang et al. [13] adopted the principal component analysis and support vector machine to improve the evaluation precision of English teaching quality. Lu et al. [16] constructed the English interpretation teaching quality evaluation model using the RBF neural network, which is optimized by a genetic algorithm. Wei et al. [17] investigated the evaluation performance of the college English teaching effect using an improved quantum particle swarm algorithm and a support vector machine. Zhang [18] also studied the problem of college English teaching effect evaluation, and the least squares support vector machine method was applied for the evaluation. Tan et al. [19] introduced the oral English teaching quality evaluation method based on a BP neural network, which is optimized by an improved crow search algorithm. Miao et al. [20] applied the decision tree algorithm to evaluate the teaching effect of oral English teaching with high accuracy and short time-consuming. Up to now, it still can be a challenge to develop an effective and reliable model for the accurate evaluation in the field of English teaching quality. The related works of English teaching quality evaluation are reported in Table 1.

Table 1.

Overview of recent literature studies on English teaching quality evaluation.

Inspired by the four different behavioral characteristics of Pyrrhura Molinae parrots, Lian et al. proposed a new metaheuristic algorithm called Parrot Optimizer (PO) in 2024 [21]. The PO simulates parrots’ behaviors of foraging, staying, communicating, and fear of strangers, aiming to achieve a balance between exploration and exploitation. Although the PO algorithm produces satisfactory results for a variety of real-world engineering optimization problems, it does not perform well in solving high-dimensional optimization problems. In this paper, the PO algorithm is improved by using multiple improvement mechanisms and applied to solve the MLP classification problems.

The main contributions of this article are articulated as follows:

- An improved PO (IPO) is proposed in this paper, which adopts three improvements, namely the aerial search strategy, modified staying behavior, and communicating behavior;

- The proposed IPO is tested using twelve CEC2022 test functions;

- The numerical results, Wilcoxon signed-rank test, Friedman ranking test, convergence curves, and boxplots demonstrate the superiority of IPO compared to PO and the other five methods;

- The effectiveness of IPO-MLP is verified in training the multilayer perceptron for solving the classification problems, including five classification datasets and an oral English teaching quality evaluation problem.

The framework of the rest paper is outlined as follows: In Section 2, the methodology employed in this research is provided in detail. Section 3 gives the improvement methods of PO. The results of the proposed IPO on CEC2022, standard classification datasets, and the oral English teaching quality evaluation problem are presented in Section 4. Finally, Section 5 concludes this paper.

2. Preliminaries

2.1. Multilayer Perceptron

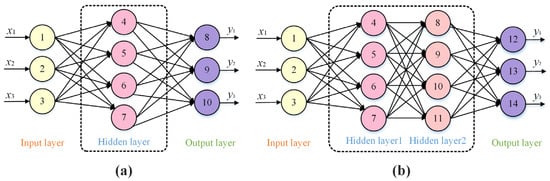

Multilayer perceptron (MLP) is one type of feedforward neural network (FNN), which has shown the powerful ability to solve nonlinear problems [22]. As shown in Figure 1, two MLP models are presented, which have structures of 3-4-3 and 3-4-4-3, respectively. The input information starts at the input layer and passes layer by layer until it reaches the output layer. In general, there is one input layer, one output layer, and one or more hidden layers in an MLP model, and each layer has multiple nodes. These nodes in the hidden or output layers are used to perform the neural network computation. Like other neural networks, the weights between nodes and the biases of nodes are the key parameters that need to be optimized.

Figure 1.

Structure of MLP model: (a) MLP with 3-4-3 structure. (b) MLP with 3-4-4-3 structure.

The MLP calculation process can be represented by the following equations.

The first step is to calculate the weighted sum Hj of j-th node by using Equation (1):

where ωij, xi, and θj are the weight, input, and bias of the j-th node, respectively.

Then the output of nodes is calculated by using Equation (2):

where f(Hj) denotes the active function calculation, and the applied function in this paper is the sigmoid function. In the next layer or output layer, the outputs are calculated in a manner similar to Equations (1) and (2). And finally, the output results are obtained.

For the training of MLP, the mean square error (MSE) is treated as the objective function, which is defined as follows:

where S is the number of all samples. M is the number of nodes in the output layer. and indicate the output value and real value of the k-th output node for the n-th sample. According to Equation (3), the smaller the mean square error is, the closer the model output value is to the actual value. Therefore, the training process of the MLP model can be regarded as a minimum value problem, and the goal is to find the optimal weights and biases.

2.2. The Parrot Optimizer

The PO is a novel stochastic optimization algorithm inspired by the special behaviors of Pyrrhura Molinae parrots [21]. Four types of behavioral traits are modeled in PO: foraging, staying, communicating, and fear of strangers. The process of PO is described as follows.

2.2.1. Population Initialization

In PO, the first step is to initialize the population. The position of the i-th agent is calculated as follows:

where lb and ub are the lower and upper boundaries, respectively; rand is a random value evenly distributed between 0 and 1. N is the size of the population. By using Equation (1), the initial positions of population individuals are randomly generated within the upper and lower boundary ranges.

2.2.2. Foraging Behavior

In the foraging behavior, Pyrrhura Molinae parrots will consider the location of food or the owner and then fly to the estimated location. The mathematical model is described as follows:

where and indicate the positions of the i-th agent at the current and next iteration; Xbest denotes the location of food or the owner; levy(D) denotes the Levy distribution operator and D is the dimension of the objective function; is the average location of the population.

2.2.3. Staying Behavior

In the staying behavior, Pyrrhura Molinae parrots will fly to the owner and stay on the owner’s body for a while. This behavior is formulated as follows:

where ones(1, D) represents an all-1 vector of D dimension. Xbest × levy(D) represents the behavior of flying to the owner, and rand × ones(1, D) represents the behavior of staying on the owner’s body for a while.

2.2.4. Communicating Behavior

The communicating behavior of Pyrrhura Molinae parrots can be divided into two types: flying to the flock and without flying to the flock. Considering that both cases have the same probability of happening, these two types of communication behaviors can be represented as follows:

where P1 is a random number in the range of [0, 1].

2.2.5. Fear of Strangers’ Behavior

Pyrrhura Molinae parrots also have the behavior trait of being afraid of strangers. They will fly towards the owner and move away from the strangers. This behavior is formulated as follows:

where O denotes the behavior of flying towards the owner, and L denotes the behavior of moving away from the strangers.

2.3. Aerial Search Strategy

The aerial search strategy is developed in Arctic Puffin Optimization (APO) [23], which displays a strong ability for global exploration. The mathematical model of it can be shown in the following equations.

where randn denotes a random value following the standard normal distribution; r is a random integer in [1, N – 1]. The round denotes a function that is used to round values to the nearest whole number.

2.4. Fitness–Distance Balance (FDB) Selection

Fitness–distance balance (FDB) selection is a well-known and effective improvement method applied in metaheuristic algorithms, which is proposed by Kahraman et al. in 2020 [24]. FDB selection can improve the search process of the optimization method by balancing the fitness and the distance between the current agent and the best agent. The first step of FDB is to calculate the distance between the candidate solution and the best solution, as shown in Equation (13).

where DPi denotes the Euclidean distance between the i-th candidate solution and the best solution. In other cases, Manhattan distance and Minkowski distance can also be adopted as the distance metrics. Then the distance vector DP for the candidate solution can be obtained, which is shown in Equation (14).

In the second step, two factors of fitness and distance are comprehensively considered to obtain the score of each individual, as shown in Equation (15).

where w is a weight coefficient with the value range [0, 1]; norm denotes the normalized operator; f is the fitness function vector of the population. The score vector SP is shown in Equation (16).

In this paper, a variant of the FDB called RFDB is selected to use the roulette wheel selection [25], which selects an individual according to the score vector SP. In the RFDB, the scores of all individuals are summed, which is Ssum = S1 + S2 + …+ SN. Then the probability of each individual being selected is the ratio of the individual’s score to the sum of all scores, which is Si/Ssum. Therefore, the higher the score, the greater the chance of being selected.

3. Improved Parrot Optimizer

3.1. Motivation

The motivation to improve the PO is to improve the performance and adaptability of the algorithm to meet the increasingly complex requirements of optimization problems. Although the basic PO displays good performance in some optimization problems, it still has limitations when dealing with complicated nonlinear problems, such as the oral English teaching quality evaluation problem. Therefore, this paper introduces efficient strategies and improving techniques to the PO, so that the enhanced PO can flexibly adjust the search strategies during the searching process to improve its search ability and convergence speed, and reduce the possibility of falling into the local optima.

3.2. Proposal for IPO

To enhance the global and local search ability of basic PO, several modifications are applied to it, including aerial search strategy, new staying behavior, and roulette fitness–distance balance selection. The details are shown below.

3.2.1. New Exploration Equations Using Aerial Search Strategy

The original PO has a weak exploration phase, so we try to enhance it by using the aerial search strategy that existed in Arctic Puffin Optimization, as shown in Equations (11) and (12).

3.2.2. New Staying Behavior

The staying behavior of PO includes flying to the host and randomly stopping at the host’s body. To increase the local search of the PO, it is assumed that the parrot is already on its owner’s body and might move randomly. Thus, according to Equation (6), the modified staying behavior is described as follows:

where P2 is a random value between 0 and 1, indicating the same possibility of flying and moving for a parrot.

3.2.3. New Communicating Behavior

In the proposed IPO, the RFDB selection is applied to the process of communicating behavior to balance the global and local exploration of PO. The improved communicating behavior can be represented as follows:

where P3 is a random value between 0 and 1. is the selected agent using the RFDB selection method.

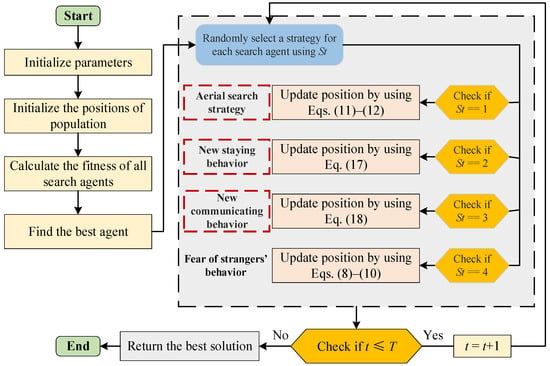

3.2.4. Architecture of the Proposed IPO

The Pseudocode of IPO is given in Algorithm 1, and the flowchart of IPO is shown in Figure 2. When the IPO begins the optimizing process, the positions of the population are first initialized and the optimal individual is determined. Then, the algorithm enters a cyclic iterative process, and each individual performs a certain behavior according to the parameter St, including aerial search strategy, modified staying behavior, improved communicating behavior, and fear of strangers’ behavior. When the number of algorithm iterations reaches the terminating condition, the loop exits and outputs the found optimal solution.

| Algorithm 1: Pseudocode of the Proposed IPO |

| 1. Initialize the IPO parameters: population size N, maximum iterations T. 2. Initialize the population’s positions randomly and identify the best agent. 3. For t = 1:T 4. Calculate the fitness function. 5. Find the best agent. 6. For i = 1:N 7. St = randi([1, 4]) 8. If St == 1 9. Behavior 1: aerial search strategy 10. Update position by Equations (11) and (12). 11. Elseif St == 2 12. Behavior 2: new staying behavior 13. Update position by Equation (17). 14. Elseif St == 3 15. Behavior 3: new communicating behavior 16. Update position by Equation (18). 17. Elseif St == 4 18. Behavior 4: fear of strangers’ behavior 19. Update position by Equations (8)–(10). 20. End if 21. i = i + 1 22. End for 23. t = t + 1 24. End For 25. Return the best solution |

Figure 2.

The flowchart of IPO.

3.2.5. The Computational Complexity Analysis of IPO

The computational complexity is an important indicator for the performance evaluation of optimization methods [5]. Set the population size to N, the maximum number of iterations to T, and the dimension of the objective function to D. For the original PO, the computational complexity of initialization is O(N × D). The computational complexity during the iterations is O(N × D × T). Thus, the total computational complexity of PO is O(N × D) + O(N × D × T) = O(N × D × (1 + T)). For the IPO, all the applied modifications will not increase the computational complexity. Therefore, the computational complexity of the proposed IPO is the same as the PO, which is O(N × D × (1 + T)).

4. Experimental Results

In this section, the optimization performance of IPO is evaluated using two types of test experiments, which are CEC2022 benchmark functions [26] and MLP model training. The results of IPO are compared with six other advanced algorithms, including PO [21], Harris Hawks Optimization (HHO) [27], Sine Cosine Algorithm (SCA) [28], Osprey optimization algorithm (OOA) [29], AOA [5], and Aquila Optimizer (AO) [30]. The detailed control parameters of these algorithms are provided in Table 2. To ensure a fair comparison of experimental results, all experiments were independently run 30 times, and for each optimization problem, the maximum number of iterations of all optimization algorithms is set to 500, and the population size is set to 30. The experiments are performed by MATLAB R2021b on a PC with Windows 11 operating system and Intel(R) Core(TM) i7-10700 CPU @ 2.90 GHz and 32.00 GB RAM.

Table 2.

The parameter settings of the proposed algorithm and the compared algorithms.

4.1. Case 1: CEC2022 Test Sets

The proposed IPO is first tested by using twelve CEC2022 benchmark functions [10,31], where F1 is a unimodal function, F2–F5 are multimodal functions, F6–F8 are hybrid functions, and F9–F12 are composition functions. The details of test functions are presented in Table 3. The range indicates the search range of search space. Fmin indicates the theoretical optimal value. The dimensions of all problems are set to 20.

Table 3.

The detailed information on the CEC2022 test functions.

4.1.1. Ablation Test

This section analyzes the influence of different strategies on the optimization performance of the PO algorithm, including aerial search strategy, new staying behavior, and new communicating behavior. Table 4 shows different versions of the improved PO algorithm, where one denotes that a strategy is adopted, and zero means a strategy is not adopted.

Table 4.

PO and improved PO with different strategies.

Table 5 provides the results of the sensitivity analysis for the original PO and all other variants from IPO1 to IPO7 algorithms on the CEC2022 test functions. It can be observed that IPO7 has better optimization results than other algorithms. It has ranked the 1st in F1−F3, F5, and F7−F11 whereas it ranked 2nd only in F4 (after IPO4) and F6 (after IPO5). Therefore, the three improvement strategies are helpful to improve the optimization performance of the PO algorithm. From this point forward, we will refer to IPO7 simply as IPO.

Table 5.

Sensitivity analysis of PO and its improved versions.

4.1.2. Comparison and Analysis with Other Methods

Table 6 shows the test results of mean, best, worst, and standard deviation for all algorithms. The best results of mean values for these test functions are marked in bold. It can be seen from Table 6 that the IPO algorithm outperforms the comparison algorithms in test functions F1, F2, F5, F6, F9, F1, and F12, which shows a strong competitive performance. It is also noted that AO obtains the best mean values on F3, F4, F7, and F8, and PO wins on F10. Overall, the proposed IPO displays obviously better performance than other algorithms.

Table 6.

Numerical results of IPO and other compared algorithms on CEC2022 test functions.

Moreover, Table 7 gives the results of the Friedman ranking test. It is clearly shown that IPO ranks first compared to the other six algorithms, while AO ranks second and basic PO ranks third. It is further proved that the proposed IPO is superior to other algorithms on CEC2022 test functions.

Table 7.

Friedman ranking results of all algorithms on CEC2022 test functions.

Table 8 shows the p-values results of the Wilcoxon signed-rank test. In this test, The optimization results of IPO are compared respectively with those of each algorithm. The number of the comparison results is 15. p-values smaller than 0.05 indicate that the function results of IPO and comparative algorithms have significant differences. Otherwise, there is no significant difference between them. Moreover, the signs ”+/=/–” denote the results of IPO are better, equal, and worse than those of compare algorithms. From Table 8, it is obviously found that IPO has a significant difference compared to the other six methods and shows better optimization performance.

Table 8.

Wilcoxon signed-rank test p-values results of IPO compared to other algorithms on CEC2022 test functions.

Table 9 reports the results of the average computational time on each test function. It can be seen that due to the addition of several improvement strategies, the calculation time of the IPO is slightly longer than that of the PO. The AOA algorithm has the shortest calculation time because of its simple structure, while the AO algorithm is more complex and has the longest calculation time.

Table 9.

The average computational time for each algorithm on CEC2022 test functions.

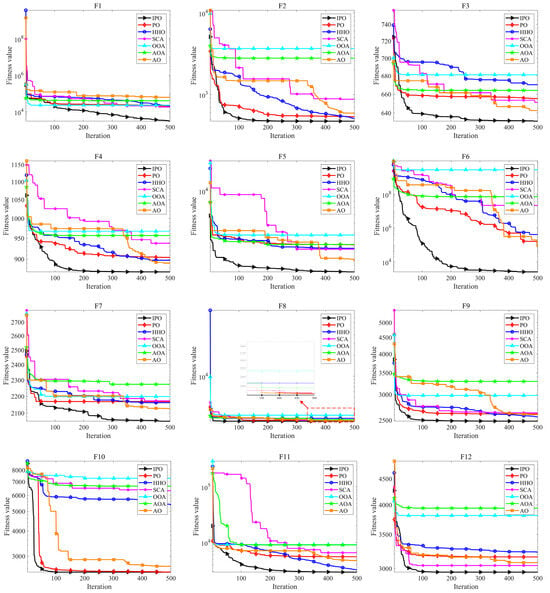

Figure 3 displays the convergence curves of all algorithms on each of the CEC2022 test functions. It can be seen that the IPO converges faster than other algorithms in most cases. In the early stage of the iteration process, IPO can quickly identify better solutions to avoid local optima, such as F2-F6, and F11. Meanwhile, in the subsequent iteration process, the accuracy of the solution can be continuously improved, such as F1 and F6, which proves the effectiveness of the applied improvement strategies. Therefore, IPO shows excellent exploration and exploitation abilities for solving the CEC2022 problems.

Figure 3.

Convergence curves of all algorithms on CEC2022 test functions.

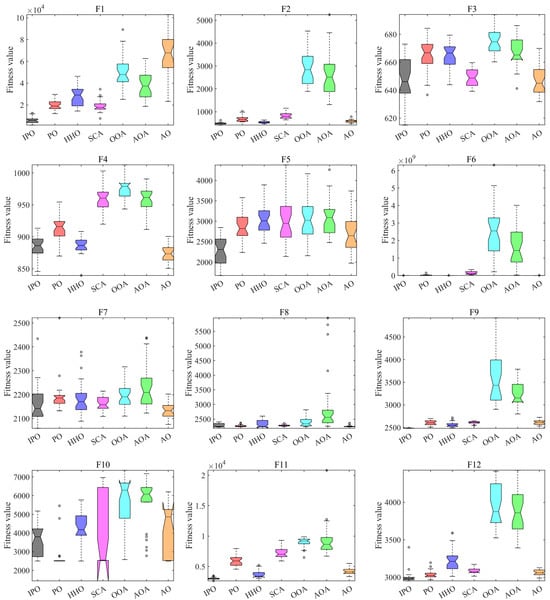

Figure 4 presents the boxplots of all algorithms on each CEC2022 test function. It is shown that the boxplots of IPO on F1, F6, F9, F11, and F12 are noticeably narrower than other algorithms, indicating its good stability when solving these problems. In addition, the medians of boxplots for IPO on F1, F5, F9, F11, and F12 are also significantly lower than other methods, showing better accuracy. In other cases, IPO also presents competitive results. Thus, IPO has the merits for solving these optimization problems.

Figure 4.

Boxplots of all algorithms on CEC2022 test functions.

4.2. Case 2: Standard Classification Datasets

The second test contains five standard classification datasets, which are XOR, Ballon, Iris, Breast cancer, and Heart [32]. The details of these datasets are provided in Table 10. The MLP models are applied to solve these classification problems. It can be seen that each classification dataset has a corresponding MLP with different structures and parameters.

Table 10.

The detailed information of classification datasets.

The training results of IPO-MLP and other compared methods are shown in Table 11. The best mean results are marked in bold. It is found that IPO-MLP has obtained the best mean results among these methods and ranks first at the final rank. In particular, IPO-MLP has achieved significantly lower MSE results in the Balloon dataset. Therefore, IPO-MLP exhibits superior performance in the training of MLP models.

Table 11.

MSE results on training data.

Table 12 gives the results of classification accuracy results. The bold values mean the best mean results. It is observed that although the training results of IPO-MLP are the best, its classification accuracy is not the highest on Breast cancer and Heart datasets. Overall, IPO-MLP has obtained competitive results on these five classification datasets. The Friedman ranking results show that the IPO-MLP is the best.

Table 12.

Classification accuracy results on test data (%).

4.3. Case 3: Oral English Education Evaluation Problem

In the third case, the proposed IPO is used to solve the oral English education evaluation problem. The oral English education evaluation problem can be regarded as a classification problem with multiple features. In this paper, the evaluation model is constructed using a multilayer perceptron model with a 10-21-3 structure. Then, the weights and biases of this MLP model are optimized by using the proposed IPO. The results are shown below.

4.3.1. Indexes of Oral English Teaching Quality Evaluation Model

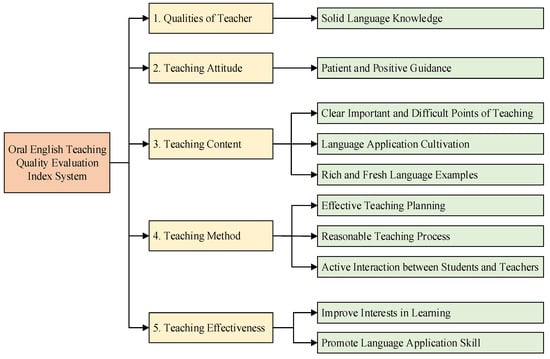

For better evaluating the oral English teaching quality, the indexes of oral English teaching quality evaluation problem are selected according to the works in [19]. The index system is constructed as shown in Figure 5, which has five first-grade indexes: qualities of teacher, teaching attitude, teaching content, teaching method, and teaching effectiveness. In each first-grade index, there are one or more second-grade indexes. These elements play a leading role in the oral English teaching evaluation and ensure the scientific and reasonable teaching quality evaluation system.

Figure 5.

Oral English teaching quality evaluation index system.

As can be seen in Figure 5, ten features in the oral English education evaluation problem are selected to determine the evaluation outcomes of teachers. The evaluation results are divided into three cases, which are excellent, good, and qualified. Therefore, an MLP model with a 10-21-3 structure is constructed to find the relationship between the indexes and evaluation outcomes. The node number of the hidden layer is determined by an empirical formula 2 × n + 1 [33], where n is the number of input parameters.

4.3.2. Analysis of Testing Results

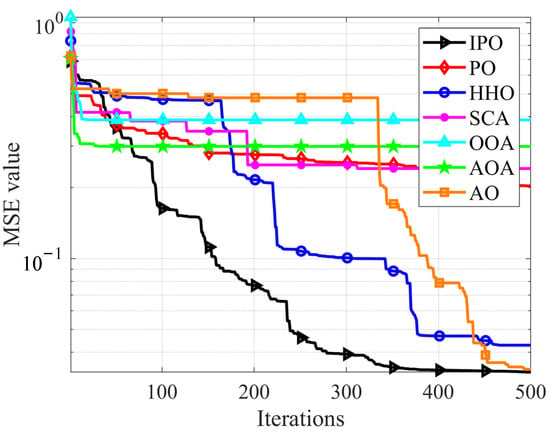

For the experiments, a total of 60 groups of oral English quality evaluation data were collected, of which 20 are excellent, 20 are good, and 20 are qualified. The proposed IPO and other six compared methods are employed to train the constructed MLP models. The experiments are conducted for 30 times. The results are shown in Figure 6, Table 13, and Table 14.

Figure 6.

The convergence curves of average MSE for all algorithms.

Table 13.

Comparison of training results.

Table 14.

Comparison of accuracy results (%).

Figure 6 shows the convergence curves of average MSE during the training process. It is observed that the IPO presents the ability to consistently find smaller MSE values throughout the whole process and obtain the smallest value at last, while the original PO shows poor search ability for this problem. It is also noted that HHO and AO also show good optimization results compared to IPO. Table 13 provides the training results of all algorithms. It can be found that IPO has the best performance for training the evaluation model. In terms of the mean value index, IPO-MLP obtains the lowest value with 4.111E-02 and ranks first among all algorithms.

Meanwhile, the accuracy results are given in Table 14. It can be seen that IPO-MLP also ranks first with a mean accuracy of 7.022 × 101. The IPO-MLP also obtains the highest accuracy of 8.833 × 101. Therefore, the proposed IPO has the merits of solving the oral English teaching quality evaluation problem. By using the proposed IPO-MLP, the decision-makers can better allocate teaching resources based on the accurate assessment of teachers’ teaching levels.

5. Conclusions and Future Work

In this paper, the aerial search strategy, modified staying behavior, and roulette fitness–distance balance selection are used to improve the basic PO for better optimization performance. The proposed IPO was tested on twelve CEC2022 test functions and applied to optimize the parameters of MLP models for classification problems. The results of the IPO significantly outperformed the other six advanced algorithms, including PO, HHO, SCA, OOA, AOA, and AO. An evaluation model of oral English teaching quality is also constructed using an MLP model with a 10-21-3 structure. The IPO is used to optimize the weight and bias parameters of the evaluation model. The results show that the IPO-MLP model can more accurately evaluate the outcomes of oral English teaching quality and has obtained the highest accuracy of 88.33%.

In future work, the suggested optimizer can be extended to tackle a broader range of complex optimization problems, such as robotic path planning, feature selection in high-dimensional datasets, and dynamic scheduling tasks. Additionally, the IPO-MLP framework can be integrated with other metaheuristic algorithms to enhance convergence speed and solution quality. Another promising direction is the automatic optimization of MLP architecture, including activation function selection, hidden layer configuration, and neuron count, potentially using self-adaptive or co-evolutionary strategies. These enhancements can further improve the robustness and generalization ability of the proposed approach across diverse application domains.

Author Contributions

Conceptualization, F.L. and C.D.; methodology, F.L. and R.Z.; software, F.L. and R.Z.; formal analysis, C.D.; investigation, F.L.; writing—original draft preparation, F.L., A.G.H., and R.Z.; writing—review and editing, F.L., A.G.H., and R.Z.; visualization, F.L.; supervision, C.D.; project administration, F.L.; funding acquisition, F.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Educational Scientific Research Project for Young and Middle-aged Teachers in Fujian Province (JSZW22045).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data are available as asked.

Acknowledgments

We appreciate the editors and reviewers who spent time on the manuscript.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Mirjalili, S.; Gandomi, A.H.; Mirjalili, S.Z.; Saremi, S.; Faris, H.; Mirjalili, S.M. Salp Swarm Algorithm: A bio-inspired optimizer for engineering design problems. Adv. Eng. Softw. 2017, 114, 163–191. [Google Scholar] [CrossRef]

- Kennedy, J.; Eberhart, R. Particle swarm optimization. In Proceedings of the IEEE International Conference on Neural Networks, Perth, WA, Australia, 27 November–1 December 1995; pp. 1942–1948. [Google Scholar]

- Mirjalili, S.; Mirjalili, S.M.; Lewis, A. Grey Wolf Optimizer. Adv. Eng. Softw. 2014, 69, 46–61. [Google Scholar] [CrossRef]

- Hashim, F.A.; Hussien, A.G. Snake Optimizer: A novel meta-heuristic optimization algorithm. Knowl.-Based Syst. 2022, 242, 108320. [Google Scholar] [CrossRef]

- Abualigah, L.; Diabat, A.; Mirjalili, S.; Abd Elaziz, M.; Gandomi, A.H. The Arithmetic Optimization Algorithm. Comput. Methods Appl. Mech. Eng. 2021, 376, 113609. [Google Scholar] [CrossRef]

- Abualigah, L.; Elaziz, M.A.; Sumari, P.; Geem, Z.W.; Gandomi, A.H. Reptile Search Algorithm (RSA): A nature-inspired meta-heuristic optimizer. Expert Syst. Appl. 2022, 191, 116158. [Google Scholar] [CrossRef]

- Li, S.; Chen, H.; Wang, M.; Heidari, A.A.; Mirjalili, S. Slime Mould Algorithm: A new method for stochastic optimization. Future Gener. Comput. Syst. 2020, 111, 300–323. [Google Scholar] [CrossRef]

- Jia, H.; Peng, X.; Lang, C. Remora optimization algorithm. Expert Syst. Appl. 2021, 185, 115665. [Google Scholar] [CrossRef]

- Bouaouda, A.; Hashim, F.A.; Sayouti, Y.; Hussien, A.G. Pied kingfisher optimizer: A new bio-inspired algorithm for solving numerical optimization and industrial engineering problems. Neural Comput. Appl. 2024, 36, 15455–15513. [Google Scholar] [CrossRef]

- Fu, Y.; Liu, D.; Chen, J.; He, L. Secretary bird optimization algorithm: A new metaheuristic for solving global optimization problems. Artif. Intell. Rev. 2024, 57, 123. [Google Scholar] [CrossRef]

- Wolpert, D.H.; Macready, W.G. No free lunch theorems for optimization. IEEE Trans. Evol. Comput. 1997, 1, 67–82. [Google Scholar] [CrossRef]

- Hui, Y. Evaluation of Blended Oral English Teaching Based on the Mixed Model of SPOC and Deep Learning. Sci. Program. 2021, 2021, 7044779. [Google Scholar] [CrossRef]

- Zhang, M.Y. English teaching quality evaluation based on principal component analysis and support vector machine. Mod. Electron. Technol. 2018, 41, 178–182. [Google Scholar]

- Zhou, S.R.; Tan, B. Electrocardiogram soft computing using hybrid deep learning CNN-ELM. Appl. Soft Comput. 2019, 86, 105778. [Google Scholar] [CrossRef]

- Liu, Y.G.; Xiong, G. Research on the evaluation of higher vocational computer course teaching quality based on FAHP. Inf. Technol. 2016, 5, 147–149, 153. [Google Scholar]

- Lu, C.; He, B.; Zhang, R. Evaluation of English interpretation teaching quality based on GA optimized RBF neural network. J. Intell. Fuzzy Syst. 2021, 40, 3185–3192. [Google Scholar] [CrossRef]

- Wei, C.Y.; Tsai, S.B. Evaluation Model of College English Teaching Effect Based on Particle Swarm Algorithm and Support Vector Machine. Math. Probl. Eng. 2022, 2022, 7132900. [Google Scholar] [CrossRef]

- Zhang, B.F. Evaluation and Optimization of College English Teaching Effect Based on Improved Support Vector Machine Algorithm. Sci. Program. 2022, 2022, 3124135.1–3124135.9. [Google Scholar]

- Tan, M.D.; Qu, L.D. Evaluation of oral English teaching quality based on BP neural network optimized by improved crow search algorithm. J. Intell. Fuzzy Syst. 2023, 45, 11909–11924. [Google Scholar] [CrossRef]

- Miao, L.; Zhou, Q. Evaluation Method of Oral English Digital Teaching Quality Based on Decision Tree Algorithm. In Proceedings of the e-Learning, e-Education, and Online Training, Harbin, China, 9–10 July 2022; Fu, W., Sun, G., Eds.; Springer: Cham, Switzerland, 2022; Volume 454, pp. 388–400. [Google Scholar]

- Lian, J.B.; Hui, G.H.; Ma, L.; Zhu, T.; Wu, X.C.; Heidari, A.A.; Chen, Y.; Chen, H.L. Parrot optimizer: Algorithm and applications to medical problems. Comput. Biol. Med. 2024, 172, 108064. [Google Scholar] [CrossRef]

- Meng, X.Q.; Jiang, J.H.; Wang, H. AGWO: Advanced GWO in multi-layer perception optimization. Expert Syst. Appl. 2021, 173, 114676. [Google Scholar] [CrossRef]

- Wang, W.C.; Tian, W.C.; Xu, D.M.; Zang, H.F. Arctic puffin optimization: A bio-inspired metaheuristic algorithm for solving engineering design optimization. Adv. Eng. Softw. 2024, 195, 103694. [Google Scholar] [CrossRef]

- Kahraman, H.T.; Aras, S.; Gedikli, E. Fitness-distance balance (FDB): A new selection method for meta-heuristic search algorithms. Knowl.-Based Syst. 2020, 190, 105169. [Google Scholar] [CrossRef]

- Bakır, H. Dynamic fitness-distance balance-based artificial rabbits optimization algorithm to solve optimal power flow problem. Expert Syst. Appl. 2024, 240, 122460. [Google Scholar] [CrossRef]

- Kumar, A.; Price, K.V.; Mohamed, A.W.; Hadi, A.A.; Suganthan, P.N. Problem Definitions and Evaluation Criteria for the CEC 2022 Special Session and Competition on Single Objective Bound Constrained Numerical Optimization; Technical Report; Indian Institute of Technology: Varanasi, India, 2021. [Google Scholar]

- Heidari, A.A.; Mirjalili, S.; Faris, H.; Aljarah, I.; Mafarja, M.; Chen, H.L. Harris hawks optimization: Algorithm and applications. Future Gener. Comput. Syst. 2019, 97, 849–872. [Google Scholar] [CrossRef]

- Mirjalili, S. SCA: A Sine Cosine Algorithm for solving optimization problems. Knowl.-Based Syst. 2016, 96, 120–133. [Google Scholar] [CrossRef]

- Dehghani, M.; Trojovský, P. Osprey optimization algorithm: A new bio-inspired metaheuristic algorithm for solving engineering optimization problems. Front. Mech. Eng. 2023, 8, 1126450. [Google Scholar] [CrossRef]

- Abualigah, L.; Yousri, D.; Elaziz, M.A.; Ewees, A.A.; Al-qaness, M.A.A.; Gandomi, A.H. Aquila Optimizer: A novel meta-heuristic optimization algorithm. Comput. Ind. Eng. 2021, 157, 107250. [Google Scholar] [CrossRef]

- Abdel-Basset, M.; Mohamed, R.; Abouhawwash, M. Crested Porcupine Optimizer: A new nature-inspired metaheuristic. Knowl.-Based Syst. 2024, 284, 111257. [Google Scholar] [CrossRef]

- Büşra, I.; Murat, K.; Şaban, G. An improved butterfly optimization algorithm for training the feedforward artificial neural networks. Soft Comput. 2023, 27, 3887–3905. [Google Scholar]

- Bansal, P.; Gupta, S.; Kumar, S.; Sharma, S.; Sharma, S. MLP-LOA: A metaheuristic approach to design an optimal multilayer perceptron. Soft Comput. 2019, 23, 12331–12345. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).