Abstract

The maximum k-coverage problem (MKCP) asks for k columns of an m × n matrix of 0s and 1s such that the number of covered rows is maximized. The problem is NP-hard, so exact algorithms are impractical for large instances in realistic time. In this study, genetic algorithms (GAs), an evolutionary computation technique, were used to solve the MKCP. GAs are meta-heuristic algorithms that create an initial population of solutions, select two parent solutions from the population, apply crossover and repair operations, and replace previous solutions with the generated offspring to move to the next generation. Here, GAs with binary and integer encodings were designed with various crossover and repair operators, and the proposed algorithms were evaluated on benchmark data from the OR-library. The performances of the GAs with various crossover and repair operators were also compared for each encoding type through experiments. In binary encoding, the combination of uniform crossover and random repair improved the average objective value by up to 3.24% compared to one-point crossover and random repair across the tested instances. Conservative repair performed worse than random repair in binary encoding. In contrast, in integer encoding, the combination of uniform crossover and conservative repair achieved up to 4.47% better average performance than one-point crossover and conservative repair. In integer encoding, conservative repair performed worse than random repair with one-point crossover but better with uniform crossover.

1. Introduction

The maximum k-coverage problem (MKCP) is a type of set-covering problem. Given an $m \times n$ binary matrix $A = (a_{ij})$, the purpose of the MKCP is to select k columns such that the number of rows covered by the selected columns is at its maximum [1,2]. The MKCP has applications in many engineering fields, such as the maximum covering location problem [3], cloud computing [4], blog-watch [5], influence maximization problems for target marketing [6], and optimizing recommendation systems in e-commerce [7].

The MKCP is known to be NP-hard [8]. GAs do not guarantee finding an optimal solution for NP-hard problems in polynomial time. However, they are well known for providing reasonably good solutions in practical time, often better than those of simple heuristic and deterministic algorithms for optimization [9].

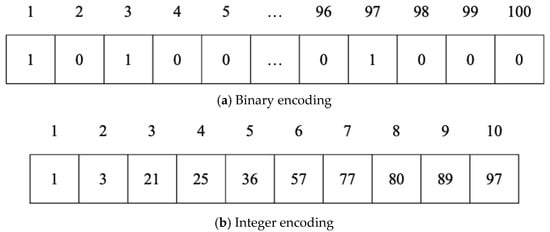

A solution for the MKCP in GAs can be expressed as a one-dimensional array whose elements describe which columns are selected. In integer encoding, each element of the array is the index of a selected column. In binary encoding, each element has a value of only 1 or 0. A value of 1 means the corresponding column is selected, and a value of 0 means it is not selected.

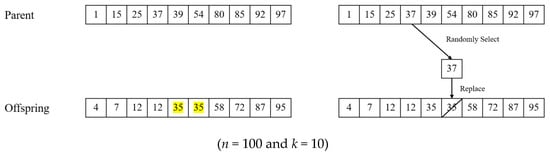

Figure 1 shows an example of a binary encoding and an integer encoding, where A is a matrix with 100 columns and the value of k is 10. In binary encoding, the array for a solution contains only 0s and 1s. The size of the array is 100 because the number of columns of the matrix is 100. A value of 1 means that the column corresponding to the index of the element is selected for the solution. In integer encoding, the size of the array for a solution is 10 because the value of k is 10. The value of each element in the array is the index of a selected column. The two encodings therefore differ in both the length of the array and the meaning of its elements.

Figure 1.

Examples of binary and integer encodings for the MKCP.

The performances of GAs for the MKCP with the two encodings and several genetic operators were examined in this study. Through the experiments, the best combinations of encodings and operators could be derived. Genetic algorithms were designed with two different encoding methods, binary and integer encodings. In the proposed GAs, two solutions composed of k columns are randomly selected from a solution group, which is called a population. An offspring solution is generated by sequentially applying crossover, repair, and replacement operations. After a predetermined number of generations, the solution with the maximum objective value is selected as the final solution. The performances of the proposed methods, which are based on binary and integer encodings, with various crossover and repair operators, are compared and verified [10,11].

In this study, GAs for solving the MKCP are presented in detail, and various encodings and genetic operators are investigated. The effectiveness of GAs for solving the MKCP could be shown through the experiments. This study also showed which combination of encodings and genetic operators is more effective in solving the MKCP.

The organization of the paper is as follows: Section 2 reviews the related works in the field. Section 3 defines the problem being addressed. Section 4 details the genetic algorithm (GA) used for the MKCP in this study. Section 5 presents and analyzes the experimental results. Finally, Section 6 concludes the paper and offers suggestions for future directions.

2. Related Works

The MKCP is a combinatorial problem, and there have been several theoretical studies on its generalizations and on algorithms for solving it [12]. The purpose of the maximum coverage problem is to select k sets so that the total weight of the covered elements is maximized. Hochbaum and Pathria [13] solved the MKCP with a standard greedy algorithm that repeatedly adds the set yielding the largest increase in covered weight. In [13], it was proved that this greedy algorithm has an approximation ratio of $1 - 1/e$. Nemhauser et al. [14] tried to solve a more generalized version of the MKCP. They proposed an approximation algorithm for the problem and obtained the same approximation ratio. Although it is already well known that the MKCP is NP-hard, Feige [15] proved the inapproximability of the problem. In other words, unless P = NP, no polynomial-time algorithm for the MKCP can have an approximation ratio better than $(1 - 1/e)$. Resende suggested another way to solve the MKCP. A greedy randomized adaptive search procedure (GRASP) was used as a heuristic algorithm [16]. The upper bound for optimal values was obtained by considering a linear programming relaxation. GRASP performed better than the greedy algorithm, but no theoretical guarantee was given for GRASP. Later, the constrained MKCP was studied by Khuller et al. [17]. Instead of limiting the size of the solution, each set had a cost, and a collection of sets was feasible only if its total cost did not exceed a predetermined budget. Khuller et al. [17] devised two algorithms: the first achieved an approximation ratio of $\frac{1}{2}(1 - 1/e)$ and the second achieved an approximation ratio of $1 - 1/e$. In addition, they demonstrated that, under conditions similar to those of Feige [15], the highest possible approximation ratio for the constrained MKCP is $1 - 1/e$.
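The greedy strategy described above can be illustrated with a minimal sketch. It assumes unit row weights and 0-based column indices; the function name `greedy_max_k_coverage` and the small matrix `A` are hypothetical and are not taken from [13].

```python
from typing import List, Sequence, Set


def greedy_max_k_coverage(matrix: Sequence[Sequence[int]], k: int) -> List[int]:
    """Pick k columns greedily: each step takes the column that covers
    the most rows not covered so far (unit row weights assumed)."""
    n_rows, n_cols = len(matrix), len(matrix[0])
    # Precompute the set of rows covered by each column.
    rows_of = [{i for i in range(n_rows) if matrix[i][j] == 1}
               for j in range(n_cols)]
    covered: Set[int] = set()
    chosen: List[int] = []
    for _ in range(k):
        remaining = [j for j in range(n_cols) if j not in chosen]
        # Marginal gain of a column = number of newly covered rows.
        best = max(remaining, key=lambda j: len(rows_of[j] - covered))
        chosen.append(best)
        covered |= rows_of[best]
    return chosen


# Hypothetical 5 x 4 instance used only for illustration.
A = [
    [1, 0, 0, 1],
    [1, 1, 0, 0],
    [0, 1, 0, 0],
    [0, 0, 1, 0],
    [0, 0, 1, 1],
]
picked = greedy_max_k_coverage(A, k=2)
print(picked)
```

Ties are broken here by the lowest column index, which is an arbitrary choice of this sketch.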

The MKCP is an NP-hard problem [8]. It is strongly suspected that there are no polynomial-time algorithms for NP-hard problems, even though that has not been proven thus far. Hence, it is nearly impossible to find the optimal solution in practical time. To solve this kind of problem, heuristic algorithms are usually applied. Heuristic algorithms may not find the optimal solution, but they try to find reasonably good solutions in practical time.

The genetic algorithm is also a meta-heuristic algorithm. It can be applied to many NP-hard problems as well as the MKCP. The encoding scheme is a very important factor in GAs. The given information must be encoded into a specific bit string and there have been various encoding schemes according to characteristics of problems [18,19]. Binary encoding is one of the most common encoding schemes. Payne and Glen [20] developed a GA based on binary encoding to identify similarities between molecules. In their research, binary encoding is used for the position and shape of molecules. Longyan et al. [21] studied three different methods of using a binary-encoded GA for wind farm design. Although binary encoding is the most common encoding scheme, in some cases, the gene or chromosome is represented using a string of some values [22]. These values can be real, integer numbers, or characters.

Among various genetic operators, the crossover is considered the most critical genetic operator in GAs. The crossover operator is used to create offspring by mixing information from two or more parents. Some well-known crossover operators are one-point, two-point, k-point, and uniform crossovers [23]. In one-point crossover, one point is randomly selected. From that point, the genetic information of the two parents is exchanged. In two-point and k-point crossover, two or more random points are selected, and the parents’ genetic information is exchanged from the selected point as in the one-point crossover. In uniform crossover, specific points are not selected for the exchange of genetic information. Instead, for each gene it is randomly determined whether it will be exchanged or not.

The MKCP is closely related to the minimum set covering problem (MSCP), which is a frequently researched NP-hard combinatorial optimization problem. Meta-heuristics, such as tabu search [24], ant colony optimization [25], and particle swarm optimization [26], have been applied to the MSCP, but the MKCP has been less studied [8]. The techniques used for the MSCP are difficult to apply directly to the MKCP because the two problems have slightly different structures. For solving the MKCP, there is previous research using adaptive binary particle swarm optimization, which is a representative meta-heuristic algorithm [27]. In other research, a method combining the ant system with effective local search has been proposed [28]. Both studies focused on effective applications of meta-heuristic algorithms to solve the MKCP. In this paper, GAs were used to solve the MKCP. The performances of GAs with various genetic operators are investigated and analyzed. This study can be used as a guide for designing GAs to solve the MKCP efficiently.

Recent studies have further expanded the design of genetic operators to enhance the scalability and robustness of GAs, especially in large-scale optimization settings. For instance, Akopov [29] proposed a matrix-based hybrid genetic algorithm (MBHGA) for solving agent-based models, integrating real and integer encodings with hybrid crossover mechanisms to improve convergence speed and accuracy in multi-agent systems. Deb and Beyer [30] introduced a self-adaptive GA using simulated binary crossover (SBX), which dynamically adjusts the crossover distribution index to balance exploration and exploitation in real-coded optimization problems.

These works demonstrate the flexibility and adaptability of genetic algorithms when combined with carefully designed operators and encoding strategies. Although our current study focuses on classical crossover and repair operators under binary and integer encodings, incorporating more advanced operator schemes like SBX or hybrid frameworks is a promising direction for future research, especially for scaling the MKCP to even larger problem instances.

3. Problem Statement

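Let $A = (a_{ij})$ be an $m \times n$ 0–1 matrix and let $w_i$ be the weight of row $i$ of matrix $A$. The challenge of this problem is to select k columns so that the total weight of the covered rows of matrix $A$ is maximized, where row $i$ is covered if at least one selected column $j$ has $a_{ij} = 1$. With $x_j = 1$ if column $j$ is selected and $x_j = 0$ otherwise, this can be formulated as follows:

$$\max \sum_{i=1}^{m} w_i \cdot I\left(\sum_{j=1}^{n} a_{ij} x_j \ge 1\right) \quad \text{subject to} \quad \sum_{j=1}^{n} x_j = k, \quad x_j \in \{0, 1\},\ j = 1, \dots, n.$$

$I(\cdot)$ is an indicator function that returns 1 if its argument is true and 0 otherwise [8]. In this study, we assume that all weights are equal to 1, so the fitness of a solution is the number of rows of the problem matrix that it covers.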
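As an illustration of the objective, the following minimal sketch evaluates a set of selected columns. The function name `coverage`, the 0-based column indices, and the small matrix `A` are assumptions made for this example only.

```python
from typing import Optional, Sequence


def coverage(matrix: Sequence[Sequence[int]],
             selected: Sequence[int],
             weights: Optional[Sequence[float]] = None) -> float:
    """Total weight of the rows covered by the selected columns.

    Column indices are 0-based here. With weights=None every row has
    weight 1, which matches the unit-weight setting of this study.
    """
    total = 0.0
    for i, row in enumerate(matrix):
        # Indicator: a row counts once if any selected column has a 1 in it.
        if any(row[j] == 1 for j in selected):
            total += 1.0 if weights is None else weights[i]
    return total


# Hypothetical 5 x 4 instance used only for illustration.
A = [
    [1, 0, 0, 1],
    [1, 1, 0, 0],
    [0, 1, 0, 0],
    [0, 0, 1, 0],
    [0, 0, 1, 1],
]
print(coverage(A, [0, 2]))  # columns 0 and 2 cover rows 0, 1, 3, 4
print(coverage(A, [0, 1]))  # columns 0 and 1 cover rows 0, 1, 2
```

A row covered by several selected columns is still counted once, which is what distinguishes the coverage objective from a plain sum of column sizes.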

In this study, 65 instances of 11 set cover problems from the OR-library [31] were used in our experiments. The details of each dataset are shown in Table 1, where m and n denote the numbers of rows and columns, respectively. In previous research on the MKCP [10], the values of k were fixed at 10 and 20. Here, however, the values of k were determined from the tightness ratio in the same way as in [8]; a higher tightness ratio corresponds to a larger k and therefore a larger optimal value [9]. The k value corresponding to each tightness ratio is listed in Table 2. To apply the genetic algorithms to each data instance, an initial population of 400 individuals was generated, each by randomly selecting k columns.

Table 1.

Dataset used for experiments.

Table 2.

k Values according to tightness ratios.

4. Encoding and Genetic Operators for the MKCP

Genetic Algorithms (GAs) are a class of meta-heuristic algorithms inspired by the process of natural selection. They are widely used for solving combinatorial and NP-hard problems due to their ability to efficiently explore large search spaces.

A typical GA starts by generating an initial population of candidate solutions. In each generation, individuals from the current population are selected based on their fitness, and new solutions (offspring) are generated by applying crossover (recombination) and mutation operations. The offspring then replace some or all individuals in the current population, depending on the replacement strategy. This process continues for a fixed number of generations or until a convergence criterion is met.

The performance of a GA heavily depends on the encoding of solutions, the choice of genetic operators (crossover, mutation, repair), and parameter settings such as population size and number of generations. In this study, we focus on encoding and operator choices tailored to the maximum k-coverage problem (MKCP), and the mutation operation is partially integrated into the repair process.

4.1. GA Encodings for the MKCP

The choice of binary and integer encodings is closely aligned with the structure of the MKCP. In binary encoding, the representation naturally supports fixed-length chromosomes with element-wise selection flags, making it compatible with standard crossover and mutation techniques in genetic algorithms. Integer encoding, on the other hand, directly represents a solution by listing the k selected column indices, which reduces redundancy and allows for more compact representation, especially in problems where k << n.

These two encoding methods have been commonly employed in GA-based studies of combinatorial optimization problems, including feature selection, facility location, and routing [18,20,22]. Their simplicity and effectiveness make them suitable candidates for benchmarking GA performance on the MKCP. In this study, we systematically evaluate both encodings under controlled experimental settings.

In integer encoding, a solution is represented with integers, which correspond to the indices of the selected columns, while in binary encoding, a solution is represented by 1s and 0s, with 1 indicating that the corresponding column is selected, and 0 implying it is not [8].

In integer encoding, the length of a solution array is determined by the k value of the MKCP. For example, if the k value of the MKCP is 40, the length of the solution array will also be 40. The range of the integers that comprise the solution array is determined by the number of columns of the matrix to be solved. If the number of columns of the matrix is 2000, a feasible solution consists of distinct integers from 1 to 2000.

In binary encoding, the length of an array of a solution is determined according to the number of columns of the matrix to be solved. The value of k determines the number of 1s in a solution; for example, a k value of 40 implies 40 1s in a solution. A value of 1 in a solution implies selecting the column corresponding to the index.
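The relationship between the two encodings can be made concrete with a small sketch. The helper names below are hypothetical; column indices are 1-based to follow the description above, and the feasibility checks assume that a solution must select exactly k distinct columns.

```python
from typing import List


def integer_to_binary(columns: List[int], n: int) -> List[int]:
    """Turn a list of selected 1-based column indices into an n-bit array."""
    bits = [0] * n
    for c in columns:
        bits[c - 1] = 1
    return bits


def binary_to_integer(bits: List[int]) -> List[int]:
    """List the 1-based indices of the columns whose bit is 1."""
    return [j + 1 for j, b in enumerate(bits) if b == 1]


def is_feasible_binary(bits: List[int], k: int) -> bool:
    """A binary solution is feasible when it contains exactly k ones."""
    return sum(bits) == k


def is_feasible_integer(columns: List[int], k: int, n: int) -> bool:
    """An integer solution is feasible when it holds k distinct valid indices."""
    return (len(columns) == k
            and len(set(columns)) == k
            and all(1 <= c <= n for c in columns))


solution = [2, 5, 7]                      # integer encoding, k = 3
bits = integer_to_binary(solution, n=8)   # binary encoding, length n = 8
print(bits)
print(binary_to_integer(bits))
```

The binary array always has length n, whereas the integer array has length k, which is why the integer encoding is more compact when k is much smaller than n.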

4.2. Crossover Operators for the MKCP

The genetic algorithm is an evolutionary computation technique that searches for an optimal solution by imitating the evolutionary process of nature. A typical GA seeks the optimum value of an objective function through a repetitive process in which two parent solutions are recombined to create an offspring solution [8].

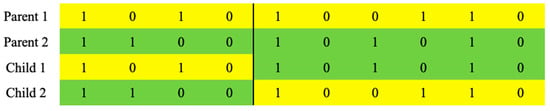

In this study, the population size of the GAs was set to 400. To create child solutions, the 400 solutions in the population were randomly paired, and one-point and uniform crossovers, which are representative traditional crossovers of genetic algorithms, were used as crossover operators. The performances of binary and integer encodings were analyzed with these crossover operators. A one-point crossover operator generates a child solution by copying complementary parts of the two parent solutions, split at a point p that is selected randomly along the one-dimensional chromosome array of length n. An example of this process is presented in Figure 2.

Figure 2.

An example of one-point crossover.

In Figure 2, yellow cells represent genes inherited from Parent 1, and green cells represent genes inherited from Parent 2. A value of 1 indicates that the corresponding column is selected, and a value of 0 indicates that it is not selected. In the figure, point p between the 4th and 5th genes is selected as the crossover point. In our study, only Child 1 was adopted, and Child 2 was not considered. The total number of possible crossover points in one-point crossover is n−1 [32].
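A minimal sketch of one-point crossover that keeps only the first child is given below. The function name, the optional `point` argument, and the two parent arrays are hypothetical; the example uses binary encoding with n = 8 and k = 3 and a cut between the 4th and 5th genes.

```python
import random
from typing import List, Optional


def one_point_crossover(p1: List[int], p2: List[int],
                        rng: random.Random,
                        point: Optional[int] = None) -> List[int]:
    """Return Child 1: the head of p1 followed by the tail of p2."""
    n = len(p1)
    if point is None:
        # There are n - 1 possible cut points: 1, 2, ..., n - 1.
        point = rng.randint(1, n - 1)
    return p1[:point] + p2[point:]


parent1 = [1, 1, 1, 0, 0, 0, 0, 0]   # selects columns 1, 2, 3
parent2 = [0, 0, 0, 0, 0, 1, 1, 1]   # selects columns 6, 7, 8
child = one_point_crossover(parent1, parent2, random.Random(0), point=4)
print(child, sum(child))
```

In this example the child contains six 1s although k = 3, which shows why a repair step is needed after crossover.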

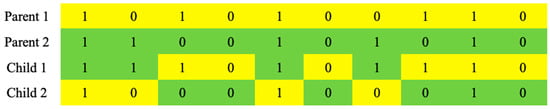

A uniform crossover operator generates an offspring solution by selecting each gene from either parent with equal probability [2]. That is, at each index, the gene is taken from Parent 1 or from Parent 2 with a probability of 50 percent each. In the uniform crossover, chromosomes are not divided into segments as they are in one-point crossover [33]. Each gene is dealt with separately. Again, in our GAs, only Child 1 was adopted, and Child 2 was not considered. An example of the process is presented in Figure 3. In the figure, yellow cells represent genes inherited from Parent 1, and green cells represent genes inherited from Parent 2. A value of 1 indicates that the corresponding column is selected, and a value of 0 indicates that it is not selected.
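Uniform crossover can be sketched in a few lines. The function name and the integer-encoded parents below are hypothetical, and the operator is applied position by position regardless of the encoding.

```python
import random
from typing import List


def uniform_crossover(p1: List[int], p2: List[int],
                      rng: random.Random) -> List[int]:
    """Return Child 1: each gene is copied from p1 or p2 with probability 0.5."""
    return [a if rng.random() < 0.5 else b for a, b in zip(p1, p2)]


parent1 = [3, 12, 35, 41, 58]   # integer encoding, k = 5
parent2 = [7, 35, 37, 60, 77]
child = uniform_crossover(parent1, parent2, random.Random(1))
print(child)
```

With integer encoding the child may contain the same column index twice (for example 35 from both parents at different positions), so it has to be checked for feasibility afterwards.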

Figure 3.

An example of uniform crossover.

In [34], Bolotbekova et al. employed a crossover ensemble method to explore the most suitable combination. Similarly, we conducted experiments with various crossover combinations to observe performance tendencies, with the results presented in Appendix A.

4.3. Repair Operators for the MKCP

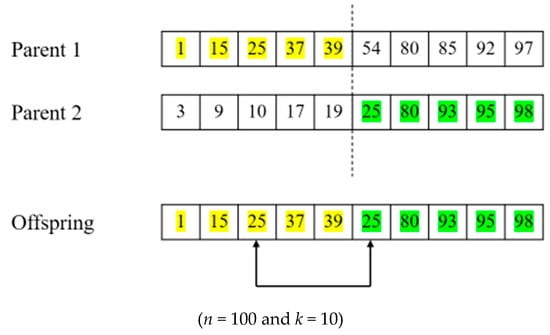

After either a one-point or a uniform crossover operation, the generated offspring may be infeasible; that is, it may not satisfy the constraint of the MKCP that exactly k columns are selected [35]. In this case (Figure 4), a repair phase restores feasibility, and in doing so it also performs part of the role of mutation.

Figure 4.

How infeasible solutions can be generated after one-point crossover. Yellow cells represent genes inherited from Parent 1, and green cells represent genes inherited from Parent 2.

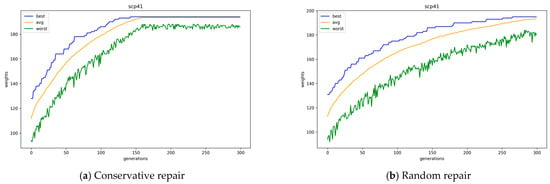

Figure 5 presents an example of the process of a conservative repair operation in integer encoding. In Figure 5, the offspring has a duplicate value of 35, which makes the solution infeasible. A conservative repair operator takes a gene from parent solutions and replaces one of the duplicated genes of the offspring with the gene from a parent. In this process, the gene is randomly selected from a parent solution. Figure 5 shows that a value of 37 is selected from a parent solution, and one of the duplicated genes with a value of 35 is replaced with 37. The repair operator repeats this process until the constraint on the MKCP is satisfied.
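The conservative repair described above can be sketched for integer encoding as follows. This is an illustration under stated assumptions, not the authors' implementation: the function name is hypothetical, the later occurrence of a duplicated gene is the one replaced, replacements are drawn from the genes of both parents that are not yet in the offspring, and the offspring is assumed to come from a crossover of these two feasible parents (so a replacement always exists).

```python
import random
from typing import List


def conservative_repair(child: List[int], p1: List[int], p2: List[int],
                        rng: random.Random) -> List[int]:
    """Replace duplicated genes with genes drawn at random from the parents."""
    repaired = list(child)
    seen = set()
    for pos, gene in enumerate(repaired):
        if gene in seen:
            # Candidate genes: present in a parent, absent from the offspring.
            candidates = sorted((set(p1) | set(p2)) - set(repaired))
            replacement = rng.choice(candidates)
            repaired[pos] = replacement
            seen.add(replacement)
        else:
            seen.add(gene)
    return repaired


parent1 = [35, 12, 3, 41, 58]
parent2 = [7, 37, 60, 35, 77]
offspring = parent1[:3] + parent2[3:]      # [35, 12, 3, 35, 77], 35 is duplicated
fixed = conservative_repair(offspring, parent1, parent2, random.Random(0))
print(fixed)
```

Because every replacement already appears in one of the parents, the repaired offspring introduces no column that is new to the pair.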

Figure 5.

An example of a conservative repair operation.

A random repair operator works in the same way as a conservative repair operator [36], except that the replacements for duplicated values are not taken from the parent solutions but are selected randomly from among all possible values. Both repair operators can therefore act as a form of mutation.
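For comparison, a random repair for integer encoding might look like the sketch below. The function name and the parameter `n` (the number of columns, with 1-based indices) are assumptions of this example, as is the choice to replace the later occurrence of a duplicated gene.

```python
import random
from typing import List


def random_repair(child: List[int], n: int, rng: random.Random) -> List[int]:
    """Replace duplicated genes with column indices drawn from 1..n."""
    repaired = list(child)
    seen = set()
    for pos, gene in enumerate(repaired):
        if gene in seen:
            present = set(repaired)
            # Redraw until the index is not already used by the offspring.
            replacement = rng.randint(1, n)
            while replacement in present:
                replacement = rng.randint(1, n)
            repaired[pos] = replacement
            seen.add(replacement)
        else:
            seen.add(gene)
    return repaired


offspring = [35, 12, 3, 35, 77]            # 35 is duplicated
fixed = random_repair(offspring, n=100, rng=random.Random(0))
print(fixed)
```

Unlike the conservative variant, the replacement may be a column that neither parent selected, which is the source of the stronger mutation-like effect.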

In this study, a separate mutation operator was not applied. Instead, mutation-like behavior was embedded within the repair process. Specifically, the random repair operator introduces diversity by randomly selecting replacement values when a solution becomes infeasible after crossover. This mechanism mimics the role of mutation in maintaining genetic variation without explicitly invoking a dedicated mutation step.

Although the mutation probability was not separately defined, this effect is triggered every time an infeasible solution is repaired—effectively operating at a frequency determined by the rate of infeasible offspring generation. This design choice simplifies the algorithm while ensuring that exploration is preserved. Future work may further investigate the explicit integration of advanced mutation operators alongside repair-based correction.

4.4. Genetic Framework for the MKCP

Before each offspring generation, the population of 400 parent solutions is randomly paired to generate 200 offspring solutions. A population size of 400 is commonly used when solving the MKCP using GAs, in order to cover the large search space of this problem [2]. The generated offspring replace the 200 parent solutions with the lowest objective function values. The best solution and its objective value obtained over 300 generations are recorded as the result of the GA run. Algorithm 1 gives the pseudocode of the GA used in our experiments.

Figure 6.

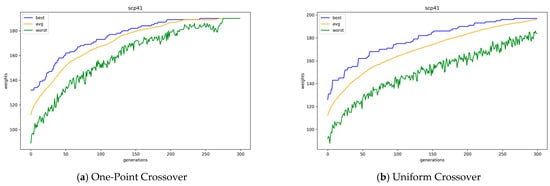

Convergence of GAs with one-point and uniform crossover operators in binary encoding.

Initially, a population of solutions is randomly generated, and after a predetermined number of generations (300), the GA is terminated. In a generation, solutions are randomly paired, and for each pair, an offspring is produced by crossover and repair operators with 100% probability [2]. Repair operators are applied only when the produced offspring is not feasible, that is, it does not satisfy the constraint on the MKCP. Next, the newly generated offspring are evaluated, and solutions in the previous population are sorted according to their objective values. Half the previous population with low fitness is replaced by new offspring. Based on the genetic framework in Algorithm 1, different crossover and repair operators are applied and compared in our experiments [8].

Algorithm 1: Pseudocode of the genetic algorithm used in this study

    Input: population size N, number of generations maximum_generation
    Output: best individual
    Initialize a population P of N individuals
    // Ensure the initial population satisfies the k-column constraint (exactly k columns selected)
    for i = 1 to maximum_generation do
        Randomly form N/2 pairs from population P
        offspring_list ← ∅
        for each pair (p1, p2) do
            offspring = crossover(p1, p2)
            if (constraint is not satisfied) then
                while (constraint is not satisfied) do
                    repair(offspring)
                end while
            end if
            // No separate mutation step; repair includes random replacement to induce diversity
            offspring_list ← offspring_list ∪ {offspring}
        end for
        Evaluate fitness values of offspring_list
        Sort P by fitness values in descending order
        Replace the bottom half of P with offspring_list
    end for
    Return the best individual in P
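The framework of Algorithm 1 can be turned into a small runnable sketch. The code below is an illustration only: it assumes integer encoding with 0-based column indices, uniform crossover, and a simplified random repair; the function names, the reduced default parameters (the experiments use a population of 400 and 300 generations), and the toy matrix are hypothetical.

```python
import random
from typing import List, Sequence


def fitness(matrix: Sequence[Sequence[int]], cols: Sequence[int]) -> int:
    """Number of rows covered by the selected (0-based) columns."""
    return sum(1 for row in matrix if any(row[c] == 1 for c in cols))


def crossover(p1: List[int], p2: List[int], rng: random.Random) -> List[int]:
    """Uniform crossover, keeping only the first child."""
    return [a if rng.random() < 0.5 else b for a, b in zip(p1, p2)]


def repair(child: List[int], n: int, rng: random.Random) -> List[int]:
    """Simplified random repair: drop duplicates, refill with unused columns."""
    unique = list(dict.fromkeys(child))          # keeps first occurrences
    unused = [c for c in range(n) if c not in unique]
    rng.shuffle(unused)
    return unique + unused[: len(child) - len(unique)]


def run_ga(matrix: Sequence[Sequence[int]], k: int, pop_size: int = 40,
           generations: int = 50, seed: int = 0) -> List[int]:
    assert pop_size % 2 == 0, "population is split into pairs"
    rng = random.Random(seed)
    n = len(matrix[0])
    # Every initial individual selects exactly k distinct columns.
    population = [rng.sample(range(n), k) for _ in range(pop_size)]
    for _ in range(generations):
        rng.shuffle(population)                  # random pairing
        offspring = []
        for i in range(0, pop_size, 2):
            child = crossover(population[i], population[i + 1], rng)
            if len(set(child)) < k:              # constraint violated
                child = repair(child, n, rng)
            offspring.append(child)
        # Keep the better half of the parents, replace the rest.
        population.sort(key=lambda s: fitness(matrix, s), reverse=True)
        population[pop_size // 2:] = offspring
    return max(population, key=lambda s: fitness(matrix, s))


# Hypothetical 5 x 4 instance used only for illustration.
A = [
    [1, 0, 0, 1],
    [1, 1, 0, 0],
    [0, 1, 0, 0],
    [0, 0, 1, 0],
    [0, 0, 1, 1],
]
best = run_ga(A, k=2)
print(sorted(best), fitness(A, best))
```

Since the better half of the parents always survives, the best solution found so far is never lost between generations.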

5. Experimental Results

5.1. Experimental Settings

Tables 1 and 2 show the sizes of the data and the k values used in the experiments; the k value was set separately for each instance set, according to the numbers of rows and columns of the corresponding matrix, and for each tightness ratio.

A total of eight experiments were conducted with two types of encoding methods (binary encoding, integer encoding), two types of crossover operators (one-point crossover, uniform crossover), and two types of repair operators (conservative repair, random repair). In all experiments, the population size was fixed at 400 and the number of generations was fixed at 300. In each generation, the half of the population with the lowest fitness values was replaced by child solutions that had been generated from 200 pairs of parent solutions. Parent solutions were randomly paired before crossover operations. For each experiment, 30 runs of GAs were performed, and the average objective value and the average running time over the 30 runs were recorded. Each experiment was conducted on all tightness ratios. The experiments were run on a computer equipped with a 3.2 GHz AMD Ryzen 7 5800H processor (Advanced Micro Devices, Santa Clara, CA, USA) and 16 GB of RAM. The proposed genetic algorithms were implemented using the Java programming language.

5.2. Results with Binary Encoding

Table 3 presents the averages of the best objective values and running times over 30 runs of GAs with binary encoding. As shown in Table 3, uniform crossover shows better results than one-point crossover. This holds regardless of the type of repair operation. In particular, the larger the instance size and k value, the larger the performance difference between the two crossover methods.

Table 3.

Results with binary encoding.

In Figure 6, the average, best, and worst objective values of the GA population are plotted by generation for the two types of crossover operators on problem instance scp41, which is an instance of the problem set scp4x. Random repair was applied in Figure 6, and the figure shows that one-point crossover converges slightly faster than uniform crossover. With one-point crossover, the average and best values already became similar from approximately the 150th generation, whereas, with uniform crossover, they did not converge until approximately the 300th generation. In addition, by examining the worst-performing individuals within the population, it can be observed that the overall quality of individuals is higher when using one-point crossover than when using uniform crossover.

Differences in performance according to the repair method can also be seen in Table 3. In binary encoding, conservative repair performed worse than random repair. The running times of GAs with conservative repair were slightly shorter than those of GAs with random repair; however, the solution quality of conservative repair, measured by objective values, was significantly lower than that of random repair. The average performance of the combination of uniform crossover and random repair improved by up to 3.24% compared to the combination of one-point crossover and random repair. Furthermore, we conducted Welch's t-test to compare the performance of the two crossover methods under the same repair strategy. The results statistically validate that uniform crossover yields significantly better performance than one-point crossover, regardless of the repair method applied.
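For readers unfamiliar with Welch's t-test, the statistic and its degrees of freedom can be computed as in the sketch below, which uses only the Python standard library. The two samples are made-up numbers chosen for easy arithmetic; they are not results from this study, and the function name `welch_t` is hypothetical. The p-value would additionally require the Student t distribution and is not computed here.

```python
import math
from statistics import mean, variance
from typing import Sequence, Tuple


def welch_t(sample_a: Sequence[float],
            sample_b: Sequence[float]) -> Tuple[float, float]:
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom.

    Unlike Student's t-test, the two samples may have unequal variances.
    """
    na, nb = len(sample_a), len(sample_b)
    va = variance(sample_a) / na      # squared standard error of mean a
    vb = variance(sample_b) / nb      # squared standard error of mean b
    t = (mean(sample_a) - mean(sample_b)) / math.sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (na - 1) + vb ** 2 / (nb - 1))
    return t, df


# Made-up samples, NOT measurements from the paper.
runs_a = [10.0, 12.0, 14.0]
runs_b = [1.0, 2.0, 3.0, 4.0, 5.0]
t_stat, dof = welch_t(runs_a, runs_b)
print(round(t_stat, 3), round(dof, 3))
```

In the experimental setting described here, each sample would hold the 30 best objective values obtained with one crossover operator on one instance set.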

The random repair method has a slightly larger mutation effect than the conservative repair method. Hence, the convergence of the solution population is slow when using the random repair method. That is, the random repair method can maintain more diversity in solutions than the conservative repair method. Similar to the comparative results of crossover operations, maintaining diversity without rapid convergence is advantageous for solving the MKCP.

5.3. Results with Integer Encoding

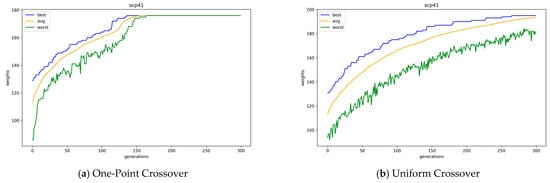

Table 4 presents the averages of the best objective values and running times over 30 runs of GAs with integer encoding. In the table, the combination of uniform crossover and conservative repair has the best results overall. However, with random repair, one-point crossover shows better results when the k value is large, while uniform crossover shows better results when the k value is small. Figure 7 presents the average, best, and worst objective values of the GA population by generation with one-point and uniform crossover for problem instance scp41, where random repair is used. Here, as in binary encoding, GAs with one-point crossover converge slightly faster than GAs with uniform crossover. In the example in Figure 7, the GA with one-point crossover converges completely at the 150th generation, whereas the GA with uniform crossover has not converged even at the 300th generation. Moreover, with uniform crossover, the best value keeps increasing until just before the end of the run. In addition, the worst objective value of the GA with one-point crossover reaches the average objective value at the 164th generation, while that of the GA with uniform crossover never does. In Table 4, the results of one-point crossover are better when the k value is large simply because the GAs with uniform crossover have not yet converged within 300 generations. The average performance of the combination of uniform crossover and conservative repair improved by up to 4.47% compared to that of one-point crossover and conservative repair. We also conducted Welch's t-test to evaluate the performance of the two crossover methods under the same repair strategy. The results statistically confirm that uniform crossover yields better performance than one-point crossover, regardless of the repair method applied, except for the case of scpnrfx with tightness ratios of 0.4 and 0.6.

Table 4.

Results with integer encoding.

Figure 7.

Convergence of GAs with one-point and uniform crossover operations in integer encoding.

According to Table 4, conservative repair was worse than random repair when one-point crossover was used. However, conservative repair showed better results than random repair when uniform crossover was used. The higher the k value, the larger the difference in results between the two repair methods. For example, when the k value is 4 or 8 (instance set scpnrex or scpnrfx with tightness ratio 0.8), the difference is very small; however, when the k value is 40 (instance sets scp4x, scp5x, scpcx, and scpnrgx with tightness ratio 0.8), the difference is large. In integer encoding, the combination of conservative repair and uniform crossover showed the best performance.

Figure 8 presents the average, best, and worst objective values of the GA population by generation with conservative and random repair for problem instance scp41, where uniform crossover is used. Here, the GA with conservative repair converges a little faster than the GA with random repair. When uniform crossover is used, conservative repair not only converges faster but also finds a better solution than random repair. Conservative repair also shows more stable improvement in the worst objective values than random repair.

Figure 8.

Convergence of GAs with conservative and random repair methods.

5.4. Comparison of the Performance of Binary and Integer Encodings for the MKCP

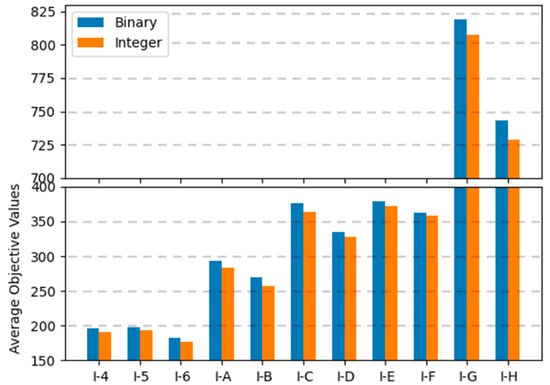

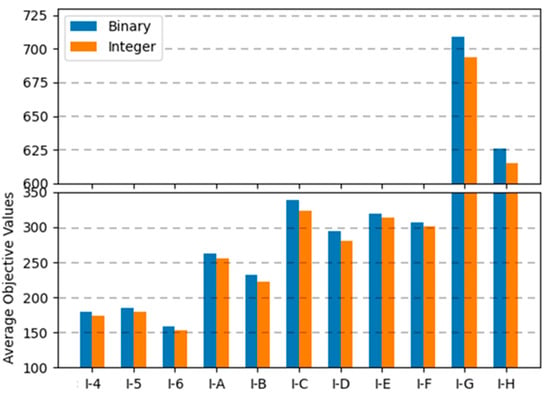

In binary encoding, the combination of uniform crossover operations and the random repair method was the best among the range of combinations; the conservative repair method did not show significant performance variations, and one-point crossover operations were not appropriate for finding optimal solutions for the MKCP. In integer encoding, the combination of uniform crossover operations and the conservative repair method was the best. The two methods that showed the best results for each encoding are compared in graphs in Figure 9, Figure 10 and Figure 11. Comparing all three graphs, they show a consistent trend regardless of the tightness ratio. Although there are differences in objective values according to the tightness ratio, binary encoding showed better performance.

Figure 9.

Average objective values of two encodings for instance sets with a tightness ratio of 0.8.

Figure 10.

Average objective values of two encodings for instance sets with a tightness ratio of 0.6.

Figure 11.

Average objective values of two encodings for instance sets with a tightness ratio of 0.4.

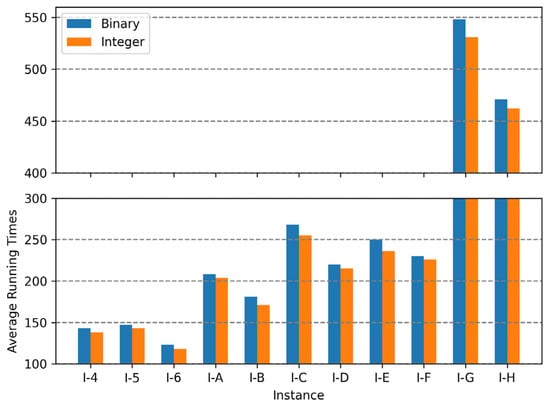

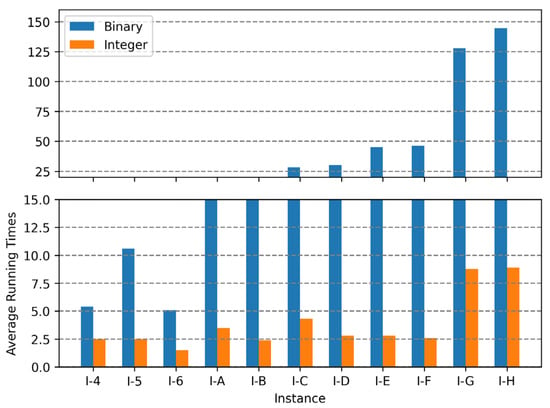

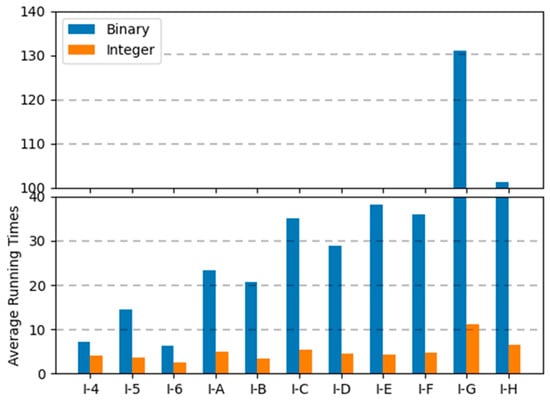

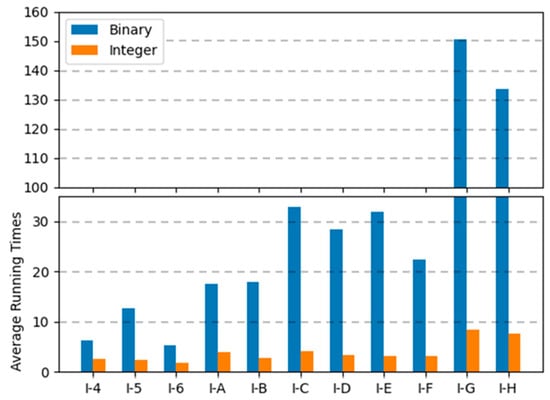

Figure 12, Figure 13 and Figure 14 show the average running times for each encoding. These figures show more dramatic differences than the graphs of objective values. With integer encoding, the GAs terminated in less than 10 s regardless of the tightness ratio and the size of the instance. In contrast, with binary encoding, the run time of the GAs increased with the size of the problem instance set. The tightness ratio did not have a significant impact on the run times of the GAs with binary encoding because, in binary encoding, the length of a solution does not change with the tightness ratio. With integer encoding, however, the smaller the tightness ratio, the shorter the solution; therefore, GAs for problem sets with a tightness ratio of 0.4 demonstrated shorter run times than those for problem sets with a tightness ratio of 0.8.
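The length argument can be made concrete with a minimal sketch that contrasts the two chromosome layouts on a toy instance. The matrix, the helper names (`covered_rows`, `coverage_binary`, `coverage_integer`), and the use of Python are illustrative assumptions and are not taken from the authors' implementation. The sketch only shows that a binary chromosome always has n genes while an integer chromosome has k genes; it does not attempt to reproduce the measured running times.

```python
from typing import List, Sequence

# Toy 0/1 matrix (assumed for illustration): rows are elements to cover,
# columns are candidate subsets. Here m = 5 rows and n = 6 columns.
A: List[List[int]] = [
    [1, 0, 0, 1, 0, 0],
    [1, 1, 0, 0, 0, 0],
    [0, 1, 0, 0, 1, 0],
    [0, 0, 1, 0, 0, 0],
    [0, 0, 1, 0, 0, 1],
]


def covered_rows(matrix: Sequence[Sequence[int]], columns: Sequence[int]) -> int:
    """Count the rows that have a 1 in at least one selected column."""
    return sum(1 for row in matrix if any(row[j] for j in columns))


def coverage_binary(matrix: Sequence[Sequence[int]], bits: Sequence[int]) -> int:
    # Binary encoding: one gene per column, so all n genes are scanned
    # to find the k selected columns.
    selected = [j for j, bit in enumerate(bits) if bit == 1]
    return covered_rows(matrix, selected)


def coverage_integer(matrix: Sequence[Sequence[int]], genes: Sequence[int]) -> int:
    # Integer encoding: the chromosome already is the list of k column indices.
    return covered_rows(matrix, genes)


# The same solution (columns 0 and 2, so k = 2) in both encodings.
binary_solution = [1, 0, 1, 0, 0, 0]  # length n = 6
integer_solution = [0, 2]             # length k = 2

print(len(binary_solution), coverage_binary(A, binary_solution))     # 6 4
print(len(integer_solution), coverage_integer(A, integer_solution))  # 2 4
```

Both encodings describe the same solution and cover the same four rows; only the number of genes that crossover and repair must process differs.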

Figure 12.

Average running times of two encodings for instance sets with a tightness ratio of 0.8.

Figure 13.

Average running times of two encodings for instance sets with a tightness ratio of 0.6.

Figure 14.

Average running times of two encodings for instance sets with a tightness ratio of 0.4.

6. Discussion

In our experiments, we evaluated the performance of genetic algorithms using two types of encoding (binary encoding and integer encoding), two crossover operators (one-point crossover and uniform crossover), and two repair strategies (conservative repair and random repair) across various problem sizes. For binary encoding, uniform crossover consistently outperformed one-point crossover, regardless of the repair strategy. Notably, the combination of uniform crossover and random repair always yielded better objective values than conservative repair. In one instance with a tightness ratio of 0.8, this combination achieved the best objective value of 819.97 for the f instance. This improvement was statistically significant compared to one-point crossover, with a p-value of 3.29 × 10⁻²⁰. Similar trends were observed for instances with a tightness ratio of 0.6, where uniform crossover combined with random repair consistently delivered superior performance. For instances with a tightness ratio of 0.4, the performance of uniform crossover with either conservative or random repair was comparable, and both combinations outperformed one-point crossover. Although uniform crossover generally resulted in statistically significant improvements over one-point crossover, an exception was observed for the scpnrfx instance set, where the p-value was 2.63 × 10⁻¹, indicating a lack of statistical significance at the 0.05 level.
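The text does not state which statistical test produced the reported p-values. As a hedged illustration of how two sets of GA runs could be compared at the 0.05 level, the sketch below uses a two-sided permutation test on the difference of means, which is a stand-in technique and not necessarily the authors' procedure. The function name `permutation_p_value` and all run values are hypothetical, synthetic numbers chosen only to exercise the code.

```python
import random
from statistics import mean
from typing import Sequence


def permutation_p_value(a: Sequence[float], b: Sequence[float],
                        n_resamples: int = 5000, seed: int = 0) -> float:
    """Two-sided permutation test on the difference of sample means."""
    rng = random.Random(seed)
    observed = abs(mean(a) - mean(b))
    pooled = list(a) + list(b)
    hits = 0
    for _ in range(n_resamples):
        # Randomly reassign the pooled values to the two groups.
        rng.shuffle(pooled)
        diff = abs(mean(pooled[:len(a)]) - mean(pooled[len(a):]))
        if diff >= observed:
            hits += 1
    # Add-one correction keeps the estimate strictly positive.
    return (hits + 1) / (n_resamples + 1)


# Synthetic objective values (hypothetical, not from the paper).
runs_a = [101.2, 100.8, 102.1, 101.5, 100.9, 101.7, 102.3, 101.1]
runs_b = [98.4, 99.1, 97.8, 98.9, 99.3, 98.2, 98.7, 99.0]
runs_c = [100.1, 99.8, 100.4, 99.9, 100.2, 100.0, 99.7, 100.3]
runs_d = [100.0, 100.2, 99.9, 100.1, 99.8, 100.3, 100.4, 99.6]

p_separated = permutation_p_value(runs_a, runs_b)  # clearly different means
p_similar = permutation_p_value(runs_c, runs_d)    # nearly identical means
print(p_separated < 0.05, p_similar < 0.05)
```

A p-value below 0.05 is read as a significant difference between the two operator combinations, while a value above 0.05, as in the scpnrfx case described above, is read as no significant difference.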

For integer encoding, the use of conservative repair in conjunction with uniform crossover consistently led to the best performance. In fact, this combination achieved the best results in 30 out of 33 instances, outperforming all other crossover and repair combinations. These findings suggest that, while uniform crossover is generally advantageous, the choice of repair strategy may depend on the encoding type and the specific characteristics of the problem instance. In the case of a tightness ratio of 0.8, the combination of uniform crossover and conservative repair consistently outperformed the combination of one-point crossover and conservative repair across all instances. For a tightness ratio of 0.6, results for the scpnrfx instance set showed that uniform crossover with conservative repair achieved an objective value of 301.95, which was slightly better than the 301.25 obtained with one-point crossover. However, this difference was not statistically significant, as indicated by a p-value of 5.35 × 10⁻¹. When the tightness ratio was reduced to 0.4, the performance gap between repair strategies became negligible. For example, in the scpbx instance set, uniform crossover with conservative repair resulted in an objective value of 171.34, while random repair yielded a very similar value of 171.36. Both approaches outperformed one-point crossover. Similarly, in the scpnrex instance set, random repair achieved a marginally better result of 236.29, compared to 236.19 obtained with conservative repair. Although these differences are minimal, they suggest a slight edge for random repair in a few instances with a low tightness ratio. Overall, these results show that conservative repair generally outperformed random repair and achieved the best performance when combined with uniform crossover, although the magnitude and statistical significance of this advantage varied depending on the tightness ratio and the specific problem instance.

7. Conclusions

In binary encoding, uniform crossover operations showed better results than those of one-point crossover. This is because one-point crossover operations cannot maintain a sufficient diversity of solutions, as the offspring from one-point crossover operations are biased toward a parent solution. Additionally, the conservative repair method was not suitable for finding optimal solutions for the MKCP compared to the random repair method.
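One way to read the claim that one-point offspring are biased toward a parent is to measure how large a share of an offspring's genes comes from whichever parent contributed more. The sketch below does this for textbook versions of the two operators; the function names, the complementary parents, and the measurement itself are illustrative assumptions and are not the authors' code. Offspring produced this way may violate the k-column constraint, which is why a repair step follows crossover in the GAs discussed here.

```python
import random
from typing import Callable, List


def one_point_crossover(p1: List[int], p2: List[int], rng: random.Random) -> List[int]:
    # Copy p1 up to a random cut point, then p2 from the cut onward.
    cut = rng.randint(1, len(p1) - 1)
    return p1[:cut] + p2[cut:]


def uniform_crossover(p1: List[int], p2: List[int], rng: random.Random) -> List[int]:
    # Choose each gene independently from either parent with probability 0.5.
    return [a if rng.random() < 0.5 else b for a, b in zip(p1, p2)]


def majority_parent_share(crossover: Callable[[List[int], List[int], random.Random], List[int]],
                          n_genes: int = 100, trials: int = 2000, seed: int = 1) -> float:
    """Average fraction of genes inherited from the parent that contributed more."""
    rng = random.Random(seed)
    # Complementary parents make the origin of every gene visible.
    p1, p2 = [0] * n_genes, [1] * n_genes
    total = 0.0
    for _ in range(trials):
        child = crossover(p1, p2, rng)
        from_p2 = sum(child)
        total += max(from_p2, n_genes - from_p2) / n_genes
    return total / trials


print(majority_parent_share(one_point_crossover))
print(majority_parent_share(uniform_crossover))
```

With 100 genes, the one-point share is expected to be about 0.75, whereas the uniform share stays close to 0.5, so a one-point offspring typically resembles one parent much more closely than the other.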

In integer encoding, the combination of the uniform crossover operation and the conservative repair method showed the best results overall. However, with the random repair method, the better-performing crossover operation depended on the value of k: when k was small, uniform crossover operations found better solutions than one-point crossover operations, and when k was large, one-point crossover operations found better solutions. This is because, with uniform crossover operations, the GAs did not converge sufficiently for large-k instances. Therefore, experiments with more offspring generations may produce different results. Nevertheless, except for the instances with a k value of 40, uniform crossover operations were better than one-point crossover operations overall. The conservative repair method was worse than the random repair method when one-point crossover operators were used. However, with uniform crossover operations, the conservative repair method was better than the random repair method.

Throughout the experiments, one-point crossover operations converged more quickly than uniform crossover operations, whereas uniform crossover operations maintained the diversity of solutions in the population better than one-point crossover operations. Despite the faster convergence of one-point crossover, its lack of diversity often resulted in premature convergence to suboptimal solutions. On the other hand, uniform crossover's ability to preserve diversity in the population allowed for a more thorough exploration of the search space. Since the search space in binary encoding is discrete, conservative repair, which inherits genes from parent solutions, failed to maintain the diversity of offspring. In contrast, random repair allowed for greater exploration of the search space, resulting in better performance. In integer encoding, random repair satisfies the cardinality constraint k by adding new subsets randomly, which can introduce low-quality solutions into the offspring. In contrast, conservative repair tends to preserve subsets inherited from the parent solution, thereby maintaining well-performing components that contribute to better overall solution quality.
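The difference between the two repair strategies in integer encoding can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' operators: it assumes that an infeasible offspring is one with duplicate column indices, that repair first removes the duplicates, and that the missing genes are then filled either with random unused columns (random repair) or with genes taken from the parents where possible (conservative repair). All function names are hypothetical.

```python
import random
from typing import List, Sequence


def dedupe(genes: Sequence[int]) -> List[int]:
    # Keep the first occurrence of each column index, preserving order.
    seen, unique = set(), []
    for g in genes:
        if g not in seen:
            seen.add(g)
            unique.append(g)
    return unique


def random_repair(child: Sequence[int], k: int, n_columns: int,
                  rng: random.Random) -> List[int]:
    """Fill missing slots with columns drawn uniformly from those not yet selected."""
    repaired = dedupe(child)[:k]
    chosen = set(repaired)
    unused = [j for j in range(n_columns) if j not in chosen]
    return repaired + rng.sample(unused, k - len(repaired))


def conservative_repair(child: Sequence[int], k: int,
                        parents: Sequence[Sequence[int]], n_columns: int,
                        rng: random.Random) -> List[int]:
    """Fill missing slots from the parents' genes first, then fall back to random columns."""
    repaired = dedupe(child)[:k]
    chosen = set(repaired)
    # Parent genes that the child does not already contain.
    candidates = dedupe([g for parent in parents for g in parent if g not in chosen])
    rng.shuffle(candidates)
    for g in candidates:
        if len(repaired) == k:
            break
        repaired.append(g)
    if len(repaired) < k:
        # Assumed fallback when the parents cannot supply enough distinct genes.
        return random_repair(repaired, k, n_columns, rng)
    return repaired


rng = random.Random(0)
parent_1, parent_2 = [3, 7, 1, 9], [7, 2, 9, 5]
child = [3, 7, 9, 9]  # duplicate column 9, so only three distinct columns remain
print(conservative_repair(child, 4, [parent_1, parent_2], 12, rng))
print(random_repair(child, 4, 12, rng))
```

In this example, conservative repair can only add column 1, 2, or 5, all of which already appear in a parent, whereas random repair may add any of the nine unused columns.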

In binary encoding, the combination of uniform crossover operations and the random repair method was the best, while in integer encoding, the combination of uniform crossover operations and the conservative repair method was the best. Between the two encoding methods, GA runs with binary encoding achieved better objective values than GA runs with integer encoding. Conversely, in terms of running time, integer encoding was much better because a solution encoded with integer encoding is much shorter than one encoded with binary encoding.

However, this study has several limitations. Although we conducted experiments on various instances, there is a need to investigate a broader range of crossover strategies. In addition, further comparative studies are required to evaluate the effectiveness of the operator combinations used in this work on other types of optimization problems beyond the MKCP, and to assess their applicability to real-world problems.

In this study, the effective combination of encodings and genetic operators for the MKCP was shown; however, the relationship between encodings and genetic operators was not analyzed in detail.

Author Contributions

Conceptualization, Y.C. and Y.Y.; methodology, Y.C. and Y.Y.; software, Y.C. and J.K.; validation, Y.Y.; formal analysis, Y.C. and J.K.; investigation, Y.C.; resources, Y.C.; data curation, Y.C.; writing—original draft preparation, Y.C.; writing—review and editing, Y.C., J.K., and Y.Y.; visualization, Y.C. and J.K.; supervision, Y.Y.; project administration, Y.Y.; funding acquisition, Y.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Ministry of Education of the Republic of Korea and the National Research Foundation of Korea (NRF-2022S1A5C2A07090938) and by the National Research Foundation of Korea (NRF) grant funded by the Korea government (MSIT) (No. 2022R1F1A1066017). This work was also supported by the Korea Institute of Energy Technology Evaluation and Planning (KETEP) and the Ministry of Trade, Industry & Energy, Republic of Korea (RS-2024-00442817).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available in the OR-Library at https://people.brunel.ac.uk/~mastjjb/jeb/orlib/scpinfo.html (accessed on 15 October 2022), reference number [33].

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A. Experimental Analysis of Crossover Operators in Binary-Encoding with Random Repair for scp4x

We conducted an additional experiment on the scp4x instance set with a tightness ratio of 0.8, using binary encoding and random repair. This experiment aimed to investigate the performance of various crossover ensembles. To observe the effect of combining different crossover methods, we employed one-point crossover (OX1), two-point crossover (OX2), and uniform crossover (Uniform). The seeds used for these ensemble crossovers were different from those in the main experiments.

Table A1 presents the average and standard deviation of the objective value and computation time for scp4x, evaluated using various crossover combinations. In the crossover ensembles, a random number between 0 and 1 was generated each time an offspring was created, and a crossover operator was selected probabilistically based on this value. As shown in Table A1, the OX2 + Uniform ensemble achieved the best performance, while using only OX1 resulted in the worst performance. Notably, while OX1 and OX2 fail to ensure population diversity in binary encoding, leading to poorer performance compared to Uniform, their performance improves markedly when they are used in conjunction with Uniform. These results suggest that an appropriate combination of crossover operators can enhance the performance of a genetic algorithm compared to using a single crossover operator.
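The operator-selection step of a crossover ensemble can be sketched as follows. The text states only that a random number between 0 and 1 determines the operator; the equal selection probabilities, the function names, and the textbook operator definitions below are assumptions made for illustration.

```python
import random
from typing import Callable, List, Sequence

Crossover = Callable[[List[int], List[int], random.Random], List[int]]


def one_point(p1: List[int], p2: List[int], rng: random.Random) -> List[int]:
    # OX1 in the notation of this appendix: a single random cut point.
    cut = rng.randint(1, len(p1) - 1)
    return p1[:cut] + p2[cut:]


def two_point(p1: List[int], p2: List[int], rng: random.Random) -> List[int]:
    # OX2: the segment between two distinct cut points comes from p2.
    lo, hi = sorted(rng.sample(range(1, len(p1)), 2))
    return p1[:lo] + p2[lo:hi] + p1[hi:]


def uniform(p1: List[int], p2: List[int], rng: random.Random) -> List[int]:
    # Each gene is taken from either parent with probability 0.5.
    return [a if rng.random() < 0.5 else b for a, b in zip(p1, p2)]


def ensemble_crossover(p1: List[int], p2: List[int],
                       operators: Sequence[Crossover],
                       rng: random.Random) -> List[int]:
    """Draw r in [0, 1) and map it to one operator (equal probabilities assumed)."""
    r = rng.random()
    index = min(int(r * len(operators)), len(operators) - 1)
    return operators[index](p1, p2, rng)


rng = random.Random(42)
parent_1, parent_2 = [0] * 10, [1] * 10
# Corresponds to the "OX2 + Uniform" row of Table A1.
offspring = ensemble_crossover(parent_1, parent_2, [two_point, uniform], rng)
print(offspring)
```

Passing a single-element list such as `[one_point]` reduces the ensemble to a plain single-operator GA, so the same function covers every row of Table A1.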

Table A1.

Performance of different crossover ensembles using binary encoding and random repair on scp4x with a tightness ratio of 0.8.

| Method | Average objective value | Std | Time * | Std |
|---|---|---|---|---|
| OX1 + OX2 + Uniform | 193.85 | 1.67 | 35.20 | 19.68 |
| OX1 + OX2 | 190.31 | 2.60 | 25.87 | 1.44 |
| OX1 + Uniform | 194.04 | 2.09 | 41.40 | 2.31 |
| OX2 + Uniform | 194.70 | 1.87 | 40.35 | 2.25 |
| OX1 | 188.98 | 2.77 | 24.43 | 1.37 |
| OX2 | 191.24 | 2.25 | 26.87 | 1.57 |
| Uniform | 192.97 | 2.96 | 40.27 | 2.73 |

* CPU Time in seconds.

References

- Xu, H.; Li, X.-Y.; Huang, L.; Deng, H.; Huang, H.; Wang, H. Incremental deployment and throughput maximization routing for a hybrid SDN. IEEE/ACM Trans. Netw. 2017, 25, 1861–1875.

- Yoon, Y.; Kim, Y.-H.; Moon, B.-R. Feasibility-preserving crossover for maximum k-coverage problem. In Proceedings of the 10th Annual Conference on Genetic and Evolutionary Computation, Atlanta, GA, USA, 12–16 July 2008; pp. 593–598.

- Máximo, V.R.; Nascimento, M.C.; Carvalho, A.C. Intelligent-guided adaptive search for the maximum covering location problem. Comput. Oper. Res. 2017, 78, 129–137.

- Liu, Q.; Cai, W.; Shen, J.; Fu, Z.; Liu, X.; Linge, N. A speculative approach to spatial-temporal efficiency with multi-objective optimization in a heterogeneous cloud environment. Secur. Commun. Netw. 2016, 9, 4002–4012.

- Saha, B.; Getoor, L. On maximum coverage in the streaming model & application to multi-topic blog-watch. In Proceedings of the 2009 SIAM International Conference on Data Mining, Sparks, NV, USA, 30 April–2 May 2009; Society for Industrial and Applied Mathematics: University City, MO, USA, 2009; pp. 697–708.

- Li, F.-H.; Li, C.-T.; Shan, M.-K. Labeled influence maximization in social networks for target marketing. In Proceedings of the 2011 IEEE Third International Conference on Privacy, Security, Risk and Trust and 2011 IEEE Third International Conference on Social Computing, Boston, MA, USA, 9–11 October 2011; pp. 560–563.

- Hammar, M.; Karlsson, R.; Nilsson, B.J. Using maximum coverage to optimize recommendation systems in e-commerce. In Proceedings of the 7th ACM Conference on Recommender Systems, Hong Kong, China, 12–16 October 2013; pp. 265–272.

- Yoon, Y.; Kim, Y.-H. Gene-similarity normalization in a genetic algorithm for the maximum k-coverage problem. Mathematics 2020, 8, 513.

- Diveev, A.; Bobr, O. Variational genetic algorithm for NP-hard scheduling problem solution. Procedia Comput. Sci. 2017, 103, 52–58.

- Cheng, X.; An, L.; Zhang, Z. Integer encoding genetic algorithm for optimizing redundancy allocation of series-parallel systems. J. Eng. Sci. Technol. Rev. 2019, 12, 126–136.

- Maniscalco, V.; Polito, S.G.; Intagliata, A. Tree-based genetic algorithm with binary encoding for QoS routing. In Proceedings of the 2013 Seventh International Conference on Innovative Mobile and Internet Services in Ubiquitous Computing, Taichung, Taiwan, 3–5 July 2013; pp. 101–107.

- Wang, Y.; Ouyang, D.; Yin, M.; Zhang, L.; Zhang, Y. A restart local search algorithm for solving maximum set k-covering problem. Neural Comput. Appl. 2018, 29, 755–765.

- Hochbaum, D.S.; Pathria, A. Analysis of the greedy approach in problems of maximum k-coverage. Nav. Res. Logist. (NRL) 1998, 45, 615–627.

- Nemhauser, G.L.; Wolsey, L.A.; Fisher, M.L. An analysis of approximations for maximizing submodular set functions—I. Math. Program. 1978, 14, 265–294.

- Feige, U. A threshold of ln n for approximating set cover. J. ACM (JACM) 1998, 45, 634–652.

- Resende, M.G. Computing approximate solutions of the maximum covering problem with GRASP. J. Heuristics 1998, 4, 161–177.

- Khuller, S.; Moss, A.; Naor, J.S. The budgeted maximum coverage problem. Inf. Process. Lett. 1999, 70, 39–45.

- Lee, J.-Y.; Kim, M.-S.; Kim, C.-T.; Lee, J.-J. Study on encoding schemes in compact genetic algorithm for the continuous numerical problems. In Proceedings of the SICE Annual Conference 2007, Takamatsu, Japan, 17–20 September 2007; pp. 2694–2699.

- Shi, G.; Iima, H.; Sannomiya, N. A new encoding scheme for solving job shop problems by genetic algorithm. In Proceedings of the 35th IEEE Conference on Decision and Control, Kobe, Japan, 13 December 1996; pp. 4395–4400.

- Payne, A.; Glen, R.C. Molecular recognition using a binary genetic search algorithm. J. Mol. Graph. 1993, 11, 74–91.

- Wang, L.; Kan, M.S.; Shahriar, M.R.; Tan, A.C. Different approaches of applying single-objective binary genetic algorithm on the wind farm design. In Proceedings of the World Congress on Engineering Asset Management 2014, Pretoria, South Africa, 28–31 October 2014.

- Sivanandam, S.; Deepa, S. Genetic algorithm optimization problems. In Introduction to Genetic Algorithms; Springer: Berlin/Heidelberg, Germany, 2008; pp. 165–209.

- Soon, G.K.; Guan, T.T.; On, C.K.; Alfred, R.; Anthony, P. A comparison on the performance of crossover techniques in video game. In Proceedings of the 2013 IEEE International Conference on Control System, Computing and Engineering, Penang, Malaysia, 29 November–1 December 2013; pp. 493–498.

- Caserta, M. Tabu search-based metaheuristic algorithm for large-scale set covering problems. In Metaheuristics: Progress in Complex Systems Optimization; Springer: Berlin/Heidelberg, Germany, 2007; pp. 43–63.

- Al-Shihabi, S.; Arafeh, M.; Barghash, M. An improved hybrid algorithm for the set covering problem. Comput. Ind. Eng. 2015, 85, 328–334.

- Balaji, S.; Revathi, N. A new approach for solving set covering problem using jumping particle swarm optimization method. Nat. Comput. 2016, 15, 503–517.

- Lin, G.; Guan, J. Solving maximum set k-covering problem by an adaptive binary particle swarm optimization method. Knowl.-Based Syst. 2018, 142, 95–107.

- Zhou, Y.; Liu, X.; Hu, S.; Wang, Y.; Yin, M. Combining max–min ant system with effective local search for solving the maximum set k-covering problem. Knowl.-Based Syst. 2022, 239, 108000.

- Akopov, A.S. MBHGA: A Matrix-Based Hybrid Genetic Algorithm for Solving an Agent-Based Model of Controlled Trade Interactions. IEEE Access 2025, 13, 26843–26863.

- Deb, K.; Beyer, H.-G. Self-adaptive genetic algorithms with simulated binary crossover. Evol. Comput. 2001, 9, 197–221.

- Beasley, J.E. OR-Library: Distributing test problems by electronic mail. J. Oper. Res. Soc. 1990, 41, 1069–1072.

- Zainuddin, F.A.; Abd Samad, M.F.; Tunggal, D. A review of crossover methods and problem representation of genetic algorithm in recent engineering applications. Int. J. Adv. Sci. Technol. 2020, 29, 759–769.

- Huang, S.; Cohen, M.B.; Memon, A.M. Repairing GUI test suites using a genetic algorithm. In Proceedings of the 2010 Third International Conference on Software Testing, Verification and Validation, Paris, France, 6–10 April 2010; pp. 245–254.

- Bolotbekova, A.; Hakli, H.; Beskirli, A. Trip route optimization based on bus transit using genetic algorithm with different crossover techniques: A case study in Konya/Türkiye. Sci. Rep. 2025, 15, 2491.

- Abd Rahman, R.; Ramli, R. Average concept of crossover operator in real coded genetic algorithm. Int. Proc. Econ. Dev. Res. 2013, 63, 73–77.

- Laboudi, Z.; Chikhi, S. Comparison of genetic algorithm and quantum genetic algorithm. Int. Arab J. Inf. Technol. 2012, 9, 243–249.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).