Vocabulary at the Living–Machine Interface: A Narrative Review of Shared Lexicon for Hybrid AI

Abstract

1. Introduction

2. Literature Review

Biomimetics: A Test-Bed for Terminological Collision

3. Methods

4. Results

4.1. Concept Characteristics Before Clustering

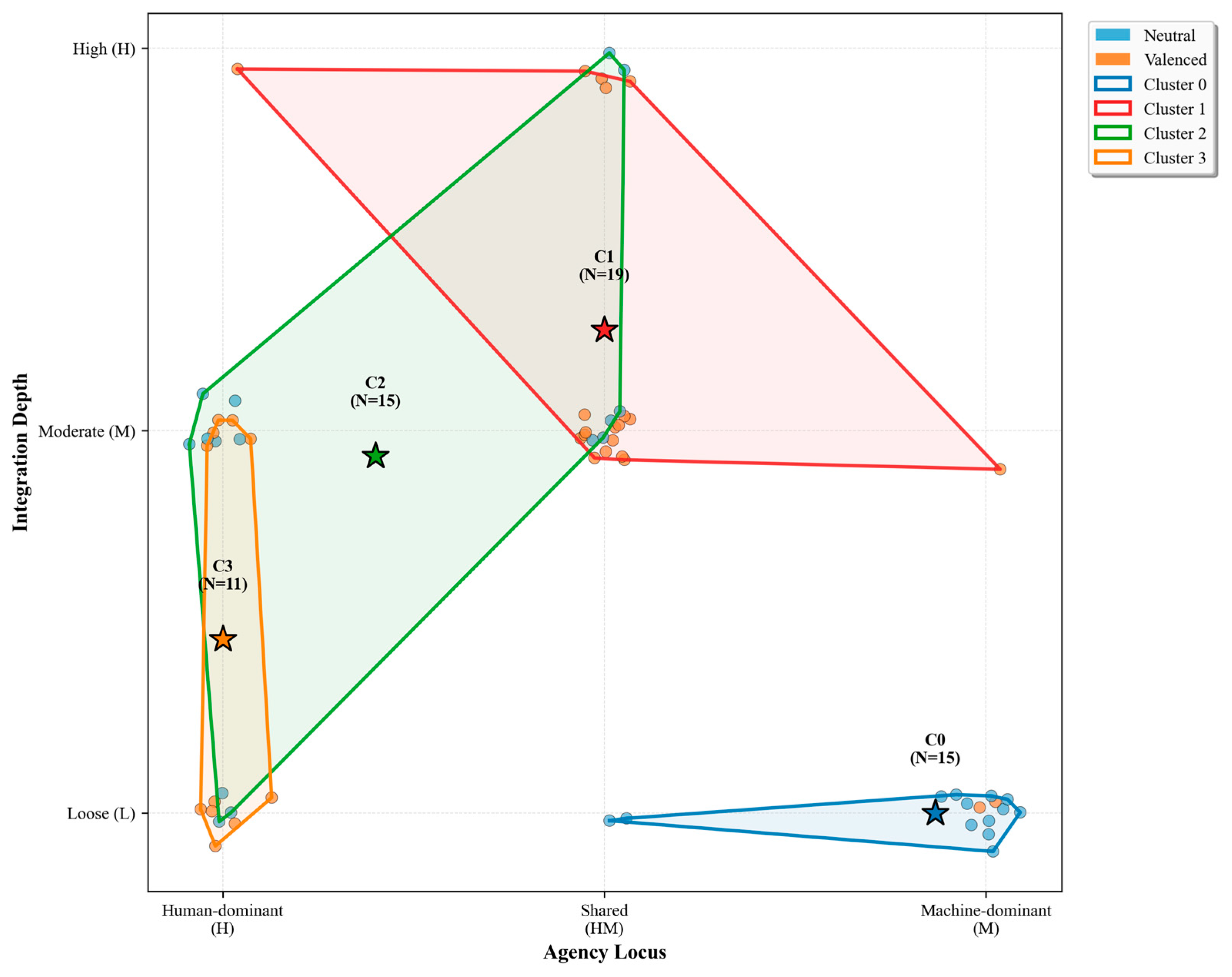

4.2. Cluster Landscape

4.3. Robustness Check

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| AI | Artificial Intelligence |

| HMS | Hybrid Machine Systems |

| HCI | Human–computer Interaction |

| BCI | Brain–Computer Interface |

| HITL | Human-in-the-Loop |

| AITL | AI-in-the-Loop |

| JCS | Joint Cognitive Systems |

Appendix A

Appendix A.1

| Term | Standard Definition | Reference Source | Agency Locus | Integration Depth | Normativity |

|---|---|---|---|---|---|

| Hybrid AI | Combines heterogeneous AI paradigms (e.g., symbolic reasoning and neural learning) so their complementary strengths yield more robust, generalizable systems. | “Hybrid AI is defined as the combination of reasoning on symbolic knowledge with machine learning from data (objects) embodied in an intelligent agent. Hybrid AI is part of systems of Hybrid Intelligence (HI), where intelligent agents learn and reason and interact with humans and other agents in relation to the environment that they are situated in. Intelligent agents need to acquire awareness about themselves, their context and users (both human and other agents)” [31]. | M | L | 0 |

| Hybrid Intelligence | The combined intelligence of humans and AI systems, leveraging complementary strengths. | “A hybrid AI approach that integrates data-driven machine learning with domain knowledge, optimization, and reasoning” [32]. | HM | Ms | 1 |

| Neuro-Symbolic AI | Integrates neural representation learning with symbolic knowledge and reasoning to improve interpretability, data efficiency, and generalization. | “The ability to achieve complex goals by combining human and artificial intelligence, thereby reaching superior results to those each of them could have accomplished separately, and continuously improve by learning from each other” [4]. | M | L | 0 |

| Multi-Agent System | A setting where multiple autonomous agents interact, cooperatively or competitively, to solve tasks that exceed the capability of any single agent. | “The AI-human hybrid outperforms its human-only or LLM-only counterpart” [33]. | M | L | 0 |

| Agentic AI (LLM Agents & Orchestration) | Large language model-based agents plan, act, and coordinate tools or sub-agents to pursue goals, often under an orchestration framework for reliability and control. | “Neuro-Symbolic AI is defined as the subfield of artificial intelligence (AI) that combines neural and symbolic approaches” [34]. | M | L | 0 |

| Mixture-of-Experts (MoE) LLMs | Sparse architectures route tokens to specialized expert sub-networks, increasing capacity without proportional compute at inference. | “A Multi-Agent System is characterized by properties including flexible autonomy, reactivity, pro-activeness, social ability, the distributable nature of agents, the possibility of emergent behavior, and the fault tolerance” [35]. | M | L | 0 |

| Human-in-the-Loop (HITL) | A system where AI performs inference and decision-making, and humans intervene when necessary to provide corrections, feedback, supervision, or domain knowledge. | “A Multi-Agent System (MAS) is an extension of the agent technology where a group of loosely connected autonomous agents act in an environment to achieve a common goal” [36]. | M | L | 0 |

| AI-in-the-Loop (AITL) | A system where humans make the final decisions, and AI assists by offering perception, inference, suggestions, or action support. | “Multi-agent systems (MAS) are a fast developing information technology, where a number of intelligent agents, representing the real world parties, co-operate or compete to reach the desired objectives designed by their owners” [37]. | H | M | 0 |

| Human on the loop | Humans monitor autonomous operations and intervene or override when needed, maintaining ultimate authority without micromanaging each action. | “Agentic AI systems represent an emergent class of intelligent architectures in which multiple specialized agents collaborate to achieve complex, high-level objectives utilizing collaborative reasoning and multi-step planning” [38]. | H | L | 0 |

| Adjustable Autonomy | The locus of control shifts dynamically between human and agent based on context, competence, and risk to balance safety and efficiency. | “The MoE framework is based on a simple yet powerful idea: different parts of a model, known as experts, specialize in different tasks or aspects of the data” [39]. | HM | M | 1 |

| Shared Autonomy | Human intent and autonomous assistance are blended in real time so the system helps without seizing full control. | Human-in-the-Loop is a configuration where humans perform “supervisory control in computational systems’ operations” requiring “human feedback and responsibility for performance management, exception handling and improvement” [40]. | HM | M | 1 |

| Mixed-Initiative Systems | Both human and AI proactively propose actions or plans, negotiating initiative and control throughout the task. | “AI systems drive the inference and decision-making process, but humans intervene to provide corrections and supervision” [41]. | HM | M | 1 |

| Coactive Design | Design emphasizes interdependence: agents are built to anticipate, expose, and adapt to human needs so joint activity remains fluent. | “Humans make the ultimate decisions, while AI systems assist with perception, inference, and action” [41]. | HM | M | 1 |

| Human–AI Collaboration | Humans and AI work together toward shared outcomes, where AI typically serves as a tool or assistant. | Human on the loop positions humans as supervisors of automated systems. Instead of being directly involved in every decision (as in HITL), humans monitor processes and intervene only when necessary, typically when anomalies or edge cases arise [42]. | HM | M | 1 |

| Human–AI Teaming | Humans and AI function as interdependent teammates, sharing situation awareness, coordinating roles, and adapting to each other’s strengths. | “Provides an autonomous system with a variable autonomy in which its operators have the options to work in different autonomy states and change its level of autonomy while the system operates” [43]. | HM | M | 1 |

| Human–AI Co-Creation | Creative workflows weave human intent with generative AI capabilities to explore options, iterate, and refine outcomes together. | “Systems that share control with the human user” [44]. | HM | M | 1 |

| Human–AI Complementarity | Integrating human strengths (context understanding, ethical reasoning, sensemaking) with AI strengths (scale, speed, pattern recognition) to achieve joint performance gains. | “In shared autonomy, a user and autonomous system work together to achieve shared goals” [45]. | HM | M | 1 |

| Participatory AI | Stakeholders help specify objectives, constraints, and impacts of AI systems, embedding participation across design and governance. | “Mixed-initiative refers to a flexible interaction strategy, where each agent can contribute to the task what it does best” [46]. | H | L | 1 |

| Shared Mental Models | Team members maintain compatible representations of goals, roles, and system state, enabling coordination with minimal overhead. | This definition explicitly contrasts with adaptive systems (agent responds without intervention) and adaptable systems (agent suggests pre-programmed actions), highlighting its core principle of collaborative human-agent decision-making. The article further describes this model as capturing “the advantage of adaptive and adaptable processes without sacrificing the human’s decision authority” [47]. | HM | M | 1 |

| Co-Adaptive Systems | Humans and AI adapt to each other over time, updating strategies, interfaces, or models to improve joint performance. | “Mixed-initiative human–robot teams” [48]. | HM | M | 0 |

| Interactive Machine Learning (IML) | Humans iteratively provide labels, features, or constraints while observing model updates, closing the loop between learning and use. | “The term ‘coactive design’ was coined as a way of characterizing an approach to the design of HRI that takes interdependence as the central organizing principle among people and robots working together in joint activity” [49]. | H | M | 1 |

| Reinforcement Learning from Human Feedback (RLHF) | Policies are optimized against human preference signals so model behavior aligns with human values and instructions. | A collaborative system where humans and AI work together on a task. This involves integration, interaction, or collaboration to leverage complementary capabilities [50]. | H | M | 1 |

| Preference-Based Learning | Agents infer a preference ordering over trajectories or outcomes from comparisons or feedback rather than explicit rewards. | “Adaptable automation together with effective human-factored interface designs are key to enabling human-automation teaming” [51]. | H | M | 1 |

| Decision Support Systems (DSS) | Interactive information systems that synthesize data, models, and user judgment to aid, not replace, managerial decision-making. | “Human–AI teaming focuses on interactions between human and AI teammates where a team must jointly accomplish collaborative tasks with shared goals” [52]. | H | L | 0 |

| Adaptive Automation | Automation level is adjusted in response to operator state or task demands, mitigating overload while preserving engagement. | Humans and generative AI systems jointly shape creative outputs, with both actively manipulating and refining the artifacts under development [53]. | HM | M | 1 |

| Augmented Decision Making | AI and analytics enhance human judgment by revealing patterns, forecasts, and counterfactuals, enabling better and more informed decision-making while humans retain final authority. | Combining the distinct, non-overlapping capabilities of humans and artificial intelligence to “achieve superior results in comparison to the isolated entities operating independently” [54]. | H | L | 1 |

| Human-Augmented AI | AI systems where human knowledge, feedback, or demonstrations are integrated into training and deployment, enabling models to perform more reliably and effectively in real-world contexts. | Participatory AI can be defined as an approach that empowers end users to directly interact with and guide AI systems to create solutions for their specific needs [55]. | H | L | 1 |

| Human Factors (in AI) | A research area focusing on how humans perceive, interact with, and are influenced by AI systems. | “Shared mental models are shared knowledge structures allowing team members to draw on their own well-structured knowledge as a basis for selecting actions that are consistent and coordinated with those of their teammates” [56]. | H | L | 0 |

| Explainable AI (XAI) | Techniques and methods that make AI systems’ decisions, reasoning processes, and outputs understandable to humans, improving transparency, trust, and accountability without necessarily reducing performance. | “Both a human user and a machine should be able to adapt to the other through experiencing the interactions occurring between them” [57]. | M | L | 1 |

| Human-Centered AI (HCAI) | An approach that keeps human values, control, and usability at the forefront of AI design, deployment, and governance. | Sub-systems or agents undergo “reciprocal selective pressures and adaptations” driven by “reciprocal adaptations between and within socio-economic and natural systems” [58]. | H | M | 1 |

| Augmented Intelligence | Positions AI as an amplifier of human abilities rather than a replacement, emphasizing complementary roles and oversight. | Interactive Machine Learning (IML) is defined as a process in which model updates are rapid (the “model gets updated immediately in response to user input”), focused (“only a particular aspect of the model is updated”), and incremental (“the magnitude of the update is small”). It also “allows users to interactively examine the impact of their actions and adapt subsequent inputs to obtain desired behaviors” [55]. | H | L | 1 |

| Augmented Cognition | Uses AI and adaptive systems to support or enhance cognitive processes (e.g., attention, memory, decision-making) via real-time feedback and adaptive interfaces. | Reinforcement Learning from Human Feedback (RLHF) is a training procedure whose “only essential steps are human feedback data collection, preference modeling, and RLHF training” [59]. | H | M | 1 |

| Human–robot Interaction (HRI) | Studies and designs two-way human–robot engagement, from physical collaboration to social communication, for safe, efficient, and intuitive teamwork. | Preference-Based Learning is a method that learns desired reward functions by asking humans for their relative preferences between two sample trajectories instead of requesting demonstrations or numerical reward assignments [60,61]. | HM | M | 0 |

| Collaborative Robots (Cobots) | Robots designed to operate in close proximity with people, using safety-rated control and interaction modes to share workspaces. | “A decision support system (DSS) is defined as an interactive computer-based information system that is designed to support solutions on decision problems” [62]. | HM | M | 1, −1 |

| Humanoid/Social Robot | Robots with humanlike form or social behaviors that support communication, instruction, or companionship. | Adaptive automation is defined as “the dynamic allocation of control of system functions to a human operator and/or computer over time with the purpose of optimizing overall system performance” [63]. | M | M | 1, −1 |

| Bionic Human | A human enhanced or restored by integrating artificial components, such as prosthetics, implants, or wearable robotics, that augment, restore, or replace physiological and sensory functions, enabling improved mobility, strength, or perception. | The use of AI insights to “enhance managerial decisions” by analyzing large datasets, detecting patterns, and providing recommendations, while humans retain responsibility over decision and action selection [64]. | HM | H | 1, −1 |

| Wearable Robotics | A broad class of robotic devices worn on the body that assist, augment, or restore human movement, strength, or sensory functions. | “Human-augmented AI refers to AI systems that are trained by humans and continuously improve their performance based on human input” [65]. | HM | H | 1, −1 |

| Powered exoskeleton | A wearable robotic framework with motorized actuators that assist, augment, or restore human movement and strength through real-time sensorimotor synchronization, used in rehabilitation, industry, military, and mobility enhancement. | Human Factors is described as “a very broad discipline that encompasses human interaction in all its task-oriented forms” and addresses “traditional human-factors problems such as trust in automation” for the AI-specific angle [66]. | HM | H | 1, −1 |

| Embodied AI | Intelligence emerges through an agent’s body interacting with the world, coupling perception, action, and learning. | “Experiences, behavioral preferences, decision-making styles,” along with their susceptibility to biases of end users constitute human factors impacting AI usage [67]. | M | L | 0 |

| Brain–Computer Interface (BCI) | A technology that enables direct communication between the human brain and external devices, allowing AI systems to read, interpret, or influence neural activity for purposes such as control, augmentation, or rehabilitation. | An AI system capable of explaining how it obtained a specific solution (e.g., a classification or detection outcome) and answering “wh” questions (such as “why”, “when”, “where”, etc.). This capability is absent in traditional AI models [68]. | H | H | 1, −1 |

| Immersive Technology (XR) | An umbrella for virtual, augmented, and mixed reality that blends real and synthetic stimuli to expand perception and action. | HCAI is an approach that seeks to position humans at the center of the AI lifecycle, improving human performance reliably, safely, and trustworthily by augmenting human capabilities rather than replacing them, with a focus on human well-being, values, and agency [69,70]. | H | M | 0 |

| Virtual Reality (VR) | Computer-generated environments create a sense of presence that supports training, simulation, and embodied experimentation. | A human-centric AI mechanism is an approach that centers human needs and values by ensuring human control, usability, explainability, ethical safeguards, and user involvement throughout AI development [70]. | H | M | 0 |

| Augmented Reality (AR) | Digital content is registered onto the physical world in real time to guide perception, action, or collaboration. | Augmented Intelligence is defined as “a situation where the Human–AI combination/system performs better than the human working alone” [50]. | H | M | 0 |

| Cyber-Physical Systems (CPS) | Systems that tightly integrate computation, networking, and physical processes through sensing, communication, and control loops, enabling real-time monitoring, coordination, and automation across diverse domains. | “AI systems augment human intelligence... AI systems tend to extend or amplify human capabilities by providing support systems such as predictive analytics rather than replacing them, resulting in augmented (human) intelligence” [65]. | M | L | 0 |

| AI Use Case | A specific scenario where AI is applied to solve a problem or address a particular need, such as fraud detection in finance, digital twin cities, medical diagnosis, or autonomous driving. | “Augmented cognition is a form of human-systems interaction in which a tight coupling between user and computer is achieved via physiological and neurophysiological sensing of a user’s cognitive state” [71]. | M | L | 0 |

| Distributed Cognition | Cognitive processes are spread across people, artifacts, and environments rather than contained solely in individual minds. | HRI is a field reviewing “the current status” and challenges of interactions between humans and robots [72]. | HM | H | 0 |

| Joint Cognitive Systems | Human and machine form a single cognitive unit whose resilience depends on coordination, feedback, and graceful extensibility. | “Collaborative robots (cobots) are industrial robots designed to work alongside humans with the ability to physically interact with a human within a collaborative workspace without needing additional safety structures such as fences” [73]. | HM | H | 0 |

| Sociotechnical Systems (STS) | Sociotechnical System views humans, technologies, and organizations as interdependent components, emphasizing the joint optimization of social and technical factors for effective outcomes. | “Collaborative robots are such robots that are designed to work along their human counterparts and share the same working space as co-workers” [74]. | HM | L | 0 |

| AI-Mediated Communication (HMC) | AI transforms how people create, filter, and interpret messages, introducing new norms and risks in mediated interaction. | Humanoid/Social Robots are designed to “help through advanced interaction driven by user needs (e.g., tutoring, physical therapy, daily life assistance, emotional expression) via multimodal interfaces (speech, gestures, and input devices)” [75]. | HM | M | 0 |

| Cyborg | A human-technology assemblage that maintains homeostasis through integrated biological and artificial components. | A humanoid/social robot is designed to “look and behave like a human” and can “more meaningfully engage consumers on a social level” than traditional self-service technologies [76]. | HM | H | 1, −1 |

| Post-human Assemblage | A post-humanist concept describing networks of humans, AI, technologies, and environments where agency, identity, and meaning are co-constructed and distributed beyond the human. | “You may know someone with an artificial leg or arm. You may know someone with a hearing aid. Even if you don’t know anyone like that, it is likely that you or someone you know wears glasses. These people are all bionic humans. Any artificial – that is, man-made – part or device that is used on a person is called a prosthesis” [77]. | HM | M | 0 |

| Human–AI Coevolution | Humans and AI systems evolve together over time, with mutual influence on capabilities, behaviors, and decision-making frameworks, leading to adaptive changes on both sides. | “Sense, and responsively act, in concert with their wearer”; “encompassing the human body and its surroundings as their environment” [78]. | HM | M | 1, −1 |

| Human–computer Interaction (HCI) | A discipline that studies and designs interactive computing to fit human capabilities, needs, and values. | “The exoskeleton is an external structural mechanism with joints and links corresponding to those of the human body” [79]. | H | M | 0 |

| Algorithm Aversion | People discount algorithms after observing small mistakes, preferring human judgment even when the algorithm is statistically superior. | Embodied AI integrates “traditional intelligence concepts from vision, language, and reasoning into an artificial embodiment” to solve tasks in virtual environments [80]. | H | L | −1 |

| Algorithm Appreciation | People sometimes weigh algorithmic advice more than human advice, particularly for objective tasks and aggregated predictions. | “Embodied AI is designed to determine whether agents can display intelligence that is not just limited to solving abstract problems in a virtual environment (cyber space), but that is also capable of navigating the complexity and unpredictability of the physical world” [81]. | H | L | 1 |

| Autonomous System | A physical or digital system capable of performing tasks or making decisions independently using its perception, reasoning, and control capabilities, with minimal or no human intervention. | Brain–computer interfaces (BCI) are systems that “activate electronic or mechanical devices with brain activity alone” [82]. | M | L | 0 |

| Transformative AI (TAI) | AI systems defined by their consequences, capable of driving societal transitions comparable to or greater than the agricultural or industrial revolution. | Immersive technology is defined as technologies like augmented reality (AR) and virtual reality (VR) that provide customers with “interactivity, visual behavior, and immersive experience” [83]. | M | L | 1 |

| General-purpose AI (GPAI) | General-purpose AI refers to AI systems that have a wide range of possible uses, both intended and unintended by the developers. They can be applied to many different tasks in various fields, often without substantial modification and fine-tuning. | VR is defined as “the use of computer-generated 3D environment, that the user can navigate and interact with, resulting in real-time simulation of one or more of the user’s five senses,” characterized by visualization, immersion, and interactivity [84]. | M | L | 0 |

| Conversational AI | Conversational AI is an AI system designed to interact with humans through natural language processing and generation. | VR specifically offers “a realistic computer-generated immersive environment” that allows user perception of senses like vision, hearing, and touch [83]. | H | M | 0 |

| Generative AI | AI systems that create new content, such as text, images, audio, video, or code, by learning patterns from data and generating original, context-relevant outputs. | AR is defined as “the enhancement of a real-world environment using layers of computer-generated images through a device” [84]. | HM | L | 0 |

Categorical Variable Encoding:

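As an illustration of how the coded columns of Appendix A.1 (Agency Locus, Integration Depth, Normativity) could be turned into numeric features before any grouping step, the following minimal sketch one-hot encodes four rows copied from the table and compares them with a simple Hamming distance. The level lists, the helper names `one_hot`, `encode`, and `hamming`, and the choice of distance are assumptions made for illustration only; each code is treated as an opaque label, and this is not the encoding or clustering procedure used in this review.

```python
from itertools import combinations

# Four rows copied from Appendix A.1:
# (term, agency locus, integration depth, normativity)
ROWS = [
    ("Multi-Agent System", "M", "L", "0"),
    ("Shared Autonomy", "HM", "M", "1"),
    ("Participatory AI", "H", "L", "1"),
    ("Cyborg", "HM", "H", "1, −1"),
]

# Assumed level orderings; the codes are treated as opaque labels.
AGENCY = ["H", "M", "HM"]
DEPTH = ["L", "M", "H"]
NORM = ["−1", "0", "1"]


def one_hot(value, levels):
    """Return one indicator per level; cells such as '1, −1' set several."""
    parts = {p.strip() for p in value.split(",")}
    return [1 if level in parts else 0 for level in levels]


def encode(row):
    """Concatenate the indicators of the three coded columns."""
    _, agency, depth, norm = row
    return one_hot(agency, AGENCY) + one_hot(depth, DEPTH) + one_hot(norm, NORM)


def hamming(a, b):
    """Count the positions at which two indicator vectors differ."""
    return sum(x != y for x, y in zip(a, b))


vectors = {row[0]: encode(row) for row in ROWS}
for (t1, v1), (t2, v2) in combinations(vectors.items(), 2):
    print(f"{t1} vs {t2}: {hamming(v1, v2)}")
```

Under these assumptions, terms that share codes on all three columns receive a distance of zero, while a multi-valued normativity cell such as "1, −1" differs from a plain "1" by a single indicator.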

Appendix A.2

| Machine-Led Low Integration Dominance | Shared Collaborative Normative | Human-Led Medium Integration | Human-Centric Low Integration |

|---|---|---|---|

| AI Use Case | Adaptive Automation | AI-Mediated Communication (HMC) | Algorithm Appreciation |

| Agentic AI (LLM Agents & Orchestration) | Adjustable Autonomy | AI-in-the-Loop (AITL) | Algorithm Aversion |

| Autonomous System | Bionic Human | Augmented Reality (AR) | Augmented Cognition |

| Cyber-Physical Systems (CPS) | Brain–Computer Interface (BCI) | Co-Adaptive Systems | Augmented Decision Making |

| Embodied AI | Coactive Design | Conversational AI | Augmented Intelligence |

| Explainable AI (XAI) | Collaborative Robots (Cobots) | Decision Support Systems (DSS) | Human-Augmented AI |

| General-purpose AI (GPAI) | Cyborg | Distributed Cognition | Human-Centered Artificial Intelligence (HCAI) |

| Generative AI | Human–AI Co-Creation | Human Factors (in AI) | Interactive Machine Learning (IML) |

| Human-in-the-Loop (HITL) | Human–AI Coevolution | Human on the loop | Participatory AI |

| Hybrid AI | Human–AI Collaboration | Human–computer Interaction (HCI) | Preference-Based Learning |

| Mixture-of-Experts (MoE) LLMs | Human–AI Complementarity | Human–robot Interaction (HRI) | Reinforcement Learning from Human Feedback (RLHF) |

| Multi-Agent System | Human–AI Teaming | Immersive Technology (XR) | |

| Neuro-Symbolic AI | Humanoid/Social Robot | Joint Cognitive Systems | |

| Sociotechnical Systems (STS) | Hybrid Intelligence | Post-human Assemblage | |

| Transformative AI (TAI) | Mixed-Initiative Systems | Virtual Reality (VR) | |

| | Powered exoskeleton | | |

| | Shared Autonomy | | |

| | Shared Mental Models | | |

| | Wearable Robotics | | |

References

- Clynes, M.E.; Kline, N.S. Cyborgs and Space. Astronautics 1960, 5, 74–76. [Google Scholar]

- Haraway, D.J. A Cyborg Manifesto: Science, Technology, and Socialist-Feminism in the Late Twentieth Century. In Simians, Cyborgs, and Women: The Reinvention of Nature; Routledge: New York, NY, USA, 1991; pp. 149–181. [Google Scholar]

- Dijk, J.; Schutte, K.; Oggero, S. A Vision on Hybrid AI for Military Applications. In Artificial Intelligence and Machine Learning in Defense Applications; SPIE: Bellingham, DC, USA, 2019; Volume 11169, pp. 119–126. [Google Scholar] [CrossRef]

- Dellermann, D.; Ebel, P.; Söllner, M.; Leimeister, J.M. Hybrid Intelligence. Bus. Inf. Syst. Eng. 2019, 61, 637–643. [Google Scholar] [CrossRef]

- Hollnagel, E.; Woods, D.D. Joint Cognitive Systems: Foundations of Cognitive Systems Engineering; CRC Press: Boca Raton, FL, USA, 2005. [Google Scholar] [CrossRef]

- Sauer, C.R.; Burggräf, P.; Steinberg, F. A Systematic Review of Machine Learning for Hybrid Intelligence in Production Management. Decis. Anal. J. 2025, 15, 100574. [Google Scholar] [CrossRef]

- Jain, V.; Wadhwani, K.; Eastman, J.K. Artificial Intelligence Consumer Behavior: A Hybrid Review and Research Agenda. J. Consum. Behav. 2024, 23, 676–697. [Google Scholar] [CrossRef]

- Mariani, M.M.; Perez-Vega, R.; Wirtz, J. AI in Marketing, Consumer Research and Psychology: A Systematic Literature Review and Research Agenda. Psychol. Mark. 2022, 39, 755–776. [Google Scholar] [CrossRef]

- Calvo Rubio, L.M.; Ufarte Ruiz, M.J. Artificial Intelligence and Journalism: Systematic Review of Scientific Production in Web of Science and Scopus (2008–2019). Commun. Soc. 2021, 34, 159–176. [Google Scholar] [CrossRef]

- Almatrafi, O.; Johri, A.; Lee, H. A Systematic Review of AI Literacy Conceptualization, Constructs, and Implementation and Assessment Efforts (2019–2023). Comput. Educ. Open 2024, 6, 100173. [Google Scholar] [CrossRef]

- van Dinter, R.; Tekinerdogan, B.; Catal, C. Automation of Systematic Literature Reviews: A Systematic Literature Review. Inf. Softw. Technol. 2021, 136, 106589. [Google Scholar] [CrossRef]

- Yau, K.-L.A.; Saleem, Y.; Chong, Y.-W.; Fan, X.; Eyu, J.M.; Chieng, D. The Augmented Intelligence Perspective on Human-in-The-Loop Reinforcement Learning: Review, Concept Designs, and Future Directions. IEEE Trans. Hum.-Mach. Syst. 2024, 54, 762–777. [Google Scholar] [CrossRef]

- Amazu, C.W.; Demichela, M.; Fissore, D. Human-in-the-Loop Configurations in Process and Energy Industries: A Systematic Review. In Proceedings of the 32nd European Safety and Reliability Conference, ESREL 2022—Understanding and Managing Risk and Reliability for a Sustainable Future, Dublin, Ireland, 28 August–1 September 2022; pp. 3234–3241. [Google Scholar] [CrossRef]

- Licklider, J.C.R. Man-Computer Symbiosis. IRE Trans. Hum. Factors Electron. 1960, HFE-1, 4–11. [Google Scholar] [CrossRef]

- Clark, A. Natural-Born Cyborgs: Minds, Technologies, and the Future of Human Intelligence; Oxford University Press: Oxford, NY, USA, 2004. [Google Scholar]

- Bradley, D. Prescribing Sensitive Cyborg Tissues: Biomaterials. Mater. Today 2012, 15, 424. [Google Scholar] [CrossRef]

- Reuell, P. Merging the Biological, Electronic. Harvard Gazette. Available online: https://news.harvard.edu/gazette/story/2012/08/merging-the-biological-electronic/ (accessed on 12 September 2025).

- Feiner, R.; Wertheim, L.; Gazit, D.; Kalish, O.; Mishal, G.; Shapira, A.; Dvir, T. A Stretchable and Flexible Cardiac Tissue–Electronics Hybrid Enabling Multiple Drug Release, Sensing, and Stimulation. Small 2019, 15, 1805526. [Google Scholar] [CrossRef]

- Zhang, T.-H.; Huang, Z.-Z.; Jiang, L.; Lv, S.-Z.; Zhu, W.-T.; Zhang, C.-F.; Shi, Y.-S.; Ge, S.-Q. Light-Guided Cyborg Beetles: An Analysis of the Phototactic Behavior and Steering Control of Endebius Florensis (Coleoptera: Scarabaeidae). Biomimetics 2025, 10, 513. [Google Scholar] [CrossRef]

- Hutchins, E. Cognition in the Wild; The MIT Press: Cambridge, MA, USA, 1995. [Google Scholar] [CrossRef]

- O’Neill, T.; McNeese, N.; Barron, A.; Schelble, B. Human-Autonomy Teaming: A Review and Analysis of the Empirical Literature. Hum. Factors 2022, 64, 904–938. [Google Scholar] [CrossRef] [PubMed]

- Kamar, E. Directions in Hybrid Intelligence: Complementing AI Systems with Human Intelligence. In Proceedings of the Twenty-Fifth International Joint Conference on Artificial Intelligence (IJCAI-16); IJCAI Press: New York, NY, USA, 2016; pp. 4070–4073. [Google Scholar]

- IBM. IBM Annual Report 2019; Annual Report; International Business Machines Corporation (IBM): Armonk, NY, USA, 2020. [Google Scholar]

- Justice, B. In Digital Age, Doctors Must Excel in the Clinic and the Cloud. American Medical Association. Available online: https://www.ama-assn.org/practice-management/digital-health/digital-age-doctors-must-excel-clinic-and-cloud (accessed on 12 September 2025).

- Gunkel, D.J. Robot Rights; MIT Press: Cambridge, MA, USA, 2024. [Google Scholar]

- Xu, Y.; Sang, B.; Zhang, Y. Application of Improved Sparrow Search Algorithm to Path Planning of Mobile Robots. Biomimetics 2024, 9, 351. [Google Scholar] [CrossRef]

- Xu, N.W.; Townsend, J.P.; Costello, J.H.; Colin, S.P.; Gemmell, B.J.; Dabiri, J.O. Field Testing of Biohybrid Robotic Jellyfish to Demonstrate Enhanced Swimming Speeds. Biomimetics 2020, 5, 64. [Google Scholar] [CrossRef] [PubMed]

- Anuszczyk, S.R.; Dabiri, J.O. Electromechanical Enhancement of Live Jellyfish for Ocean Exploration. Bioinspiration Biomim. 2024, 19, 026018. [Google Scholar] [CrossRef]

- Schelble, B.G.; Lopez, J.; Textor, C.; Zhang, R.; McNeese, N.J.; Pak, R.; Freeman, G. Towards Ethical AI: Empirically Investigating Dimensions of AI Ethics, Trust Repair, and Performance in Human-AI Teaming. Hum. Factors 2024, 66, 1037–1055. [Google Scholar] [CrossRef] [PubMed]

- Cai, Y.; Chu, Y.; Xu, Z.; Liu, P. To Apologize or Not to Apologize? Trust Repair After Automated Vehicles’ Mistakes. Transp. Res. Rec. J. Transp. Res. Board 2025, 2679, 03611981251355535. [Google Scholar] [CrossRef]

- Meyer-Vitali, A.; Bakker, R.; van Bekkum, M.; de Boer, M.; Burghouts, G.; van Diggelen, J.; Dijk, J.; Grappiolo, C.; de Greeff, J.; Huizing, A.; et al. Hybrid AI White Paper; TNO 2019 R11941; TNO: Hague, The Netherlands, 2019; p. 27. [Google Scholar]

- Huizing, A.; Veenman, C.; Neerincx, M.; Dijk, J. Hybrid AI: The Way Forward in AI by Developing Four Dimensions. In Trustworthy AI—Integrating Learning, Optimization and Reasoning; Springer: Cham, Switzerland, 2021; pp. 71–76.

- Arora, N.; Chakraborty, I.; Nishimura, Y. AI–Human Hybrids for Marketing Research: Leveraging Large Language Models (LLMs) as Collaborators. J. Mark. 2025, 89, 43–70.

- Hitzler, P.; Eberhart, A.; Ebrahimi, M.; Sarker, M.K.; Zhou, L. Neuro-Symbolic Approaches in Artificial Intelligence. Natl. Sci. Rev. 2022, 9, nwac035.

- McArthur, S.D.J.; Davidson, E.M.; Catterson, V.M.; Dimeas, A.L.; Hatziargyriou, N.D.; Ponci, F.; Funabashi, T. Multi-Agent Systems for Power Engineering Applications—Part II: Technologies, Standards, and Tools for Building Multi-Agent Systems. IEEE Trans. Power Syst. 2007, 22, 1753–1759.

- Balaji, P.G.; Srinivasan, D. An Introduction to Multi-Agent Systems. In Innovations in Multi-Agent Systems and Applications—1; Springer: Berlin/Heidelberg, Germany, 2010; pp. 1–27.

- Ren, Z.; Anumba, C.J. Multi-Agent Systems in Construction–State of the Art and Prospects. Autom. Constr. 2004, 13, 421–434.

- Sapkota, R.; Roumeliotis, K.I.; Karkee, M. AI Agents vs. Agentic AI: A Conceptual Taxonomy, Applications and Challenges. arXiv 2025.

- Cai, W.; Jiang, J.; Wang, F.; Tang, J.; Kim, S.; Huang, J. A Survey on Mixture of Experts in Large Language Models. IEEE Trans. Knowl. Data Eng. 2025, 37, 3896–3915.

- Grønsund, T.; Aanestad, M. Augmenting the Algorithm: Emerging Human-in-the-Loop Work Configurations. J. Strateg. Inf. Syst. 2020, 29, 101614.

- Natarajan, S.; Mathur, S.; Sidheekh, S.; Stammer, W.; Kersting, K. Human-in-the-Loop or AI-in-the-Loop? Automate or Collaborate? Proc. AAAI Conf. Artif. Intell. 2025, 39, 28594–28600.

- Shah, S. Humans on the Loop vs. in the Loop: Striking the Balance in Decision-Making. Trackmind Solutions. Available online: https://www.trackmind.com/humans-in-the-loop-vs-on-the-loop/ (accessed on 21 August 2025).

- Mostafa, S.A.; Ahmad, M.S.; Mustapha, A. Adjustable Autonomy: A Systematic Literature Review. Artif. Intell. Rev. 2019, 51, 149–186.

- Gopinath, D.; Jain, S.; Argall, B.D. Human-in-the-Loop Optimization of Shared Autonomy in Assistive Robotics. IEEE Robot. Autom. Lett. 2017, 2, 247–254.

- Javdani, S.; Admoni, H.; Pellegrinelli, S.; Srinivasa, S.S.; Bagnell, J.A. Shared Autonomy via Hindsight Optimization for Teleoperation and Teaming. Int. J. Robot. Res. 2018, 37, 717–742.

- Allen, J.E.; Guinn, C.I.; Horvitz, E. Mixed-Initiative Interaction. IEEE Intell. Syst. Their Appl. 1999, 14, 14–23.

- Barnes, M.J.; Chen, J.Y.C.; Jentsch, F. Designing for Mixed-Initiative Interactions between Human and Autonomous Systems in Complex Environments. In Proceedings of the 2015 IEEE International Conference on Systems, Man, and Cybernetics, Hong Kong, China, 9–12 October 2015; pp. 1386–1390.

- Jiang, S.; Arkin, R.C. Mixed-Initiative Human-Robot Interaction: Definition, Taxonomy, and Survey. In Proceedings of the 2015 IEEE International Conference on Systems, Man, and Cybernetics, Hong Kong, China, 9–12 October 2015; pp. 954–961.

- Johnson, M.; Bradshaw, J.M.; Feltovich, P.J.; Jonker, C.M.; Van Riemsdijk, M.B.; Sierhuis, M. Coactive Design: Designing Support for Interdependence in Joint Activity. J. Hum.-Robot Interact. 2014, 3, 43–69.

- Vaccaro, M.; Almaatouq, A.; Malone, T. When Combinations of Humans and AI Are Useful: A Systematic Review and Meta-Analysis. Nat. Hum. Behav. 2024, 8, 2293–2303.

- Calhoun, G. Adaptable (Not Adaptive) Automation: Forefront of Human–Automation Teaming. Hum. Factors J. Hum. Factors Ergon. Soc. 2022, 64, 269–277.

- Zhao, M.; Simmons, R.; Admoni, H. The Role of Adaptation in Collective Human–AI Teaming. Top. Cogn. Sci. 2025, 17, 291–323.

- He, J.; Houde, S.; Weisz, J.D. Which Contributions Deserve Credit? Perceptions of Attribution in Human-AI Co-Creation. In Proceedings of the 2025 CHI Conference on Human Factors in Computing Systems, CHI ’25, Yokohama, Japan, 26 April–1 May 2025; pp. 1–18.

- Hemmer, P.; Schemmer, M.; Vössing, M.; Kühl, N. Human-AI Complementarity in Hybrid Intelligence Systems: A Structured Literature Review. PACIS 2021, 78, 118.

- Amershi, S.; Cakmak, M.; Knox, W.B.; Kulesza, T. Power to the People: The Role of Humans in Interactive Machine Learning. AI Mag. 2014, 35, 105–120.

- Mathieu, J.E.; Goodwin, G.F. The Influence of Shared Mental Models on Team Process and Performance. J. Appl. Psychol. 2000, 85, 273.

- Rammel, C.; Stagl, S.; Wilfing, H. Managing Complex Adaptive Systems—A Co-Evolutionary Perspective on Natural Resource Management. Ecol. Econ. 2007, 63, 9–21.

- Sawaragi, T. Dynamical and Complex Behaviors in Human-Machine Co-Adaptive Systems. IFAC Proc. Vol. 2005, 38, 94–99.

- Bai, Y.; Jones, A.; Ndousse, K.; Askell, A.; Chen, A.; DasSarma, N.; Drain, D.; Fort, S.; Ganguli, D.; Henighan, T.; et al. Training a Helpful and Harmless Assistant with Reinforcement Learning from Human Feedback. arXiv 2022.

- Wirth, C.; Akrour, R.; Neumann, G.; Fürnkranz, J. A Survey of Preference-Based Reinforcement Learning Methods. J. Mach. Learn. Res. 2017, 18, 1–46.

- Sadigh, D.; Dragan, A.; Sastry, S.; Seshia, S. Active Preference-Based Learning of Reward Functions. In Proceedings of Robotics: Science and Systems (RSS 2017); Robotics Science and Systems Foundation: Cambridge, MA, USA, 2017.

- Liu, S.; Duffy, A.H.B.; Whitfield, R.I.; Boyle, I.M. Integration of Decision Support Systems to Improve Decision Support Performance. Knowl. Inf. Syst. 2010, 22, 261–286.

- Kaber, D.B.; Wright, M.C.; Prinzel, L.J., III; Clamann, M.P. Adaptive Automation of Human-Machine System Information-Processing Functions. Hum. Factors 2005, 47, 730–741.

- Herath Pathirannehelage, S.; Shrestha, Y.R.; Von Krogh, G. Design Principles for Artificial Intelligence-Augmented Decision Making: An Action Design Research Study. Eur. J. Inf. Syst. 2025, 34, 207–229.

- Jarrahi, M.H.; Lutz, C.; Newlands, G. Artificial Intelligence, Human Intelligence and Hybrid Intelligence Based on Mutual Augmentation. Big Data Soc. 2022, 9, 20539517221142824.

- Chignell, M.; Wang, L.; Zare, A.; Li, J. The Evolution of HCI and Human Factors: Integrating Human and Artificial Intelligence. ACM Trans. Comput.-Hum. Interact. 2023, 30, 1–30.

- Felmingham, C.M.; Adler, N.R.; Ge, Z.; Morton, R.L.; Janda, M.; Mar, V.J. The Importance of Incorporating Human Factors in the Design and Implementation of Artificial Intelligence for Skin Cancer Diagnosis in the Real World. Am. J. Clin. Dermatol. 2021, 22, 233–242.

- Gohel, P.; Singh, P.; Mohanty, M. Explainable AI: Current Status and Future Directions. arXiv 2021.

- Xu, W.; Dainoff, M.J.; Ge, L.; Gao, Z. Transitioning to Human Interaction with AI Systems: New Challenges and Opportunities for HCI Professionals to Enable Human-Centered AI. Int. J. Hum.-Comput. Interact. 2023, 39, 494–518.

- Shneiderman, B. Human-Centered Artificial Intelligence: Reliable, Safe & Trustworthy. Int. J. Hum.-Comput. Interact. 2020, 36, 495–504.

- Stanney, K.M.; Schmorrow, D.D.; Johnston, M.; Fuchs, S.; Jones, D.; Hale, K.S.; Ahmad, A.; Young, P. Augmented Cognition: An Overview. Rev. Hum. Factors Ergon. 2009, 5, 195–224.

- Sheridan, T. Human–Robot Interaction. Hum. Factors J. Hum. Factors Ergon. Soc. 2016, 58, 525–532.

- Liu, L.; Guo, F.; Zou, Z.; Duffy, V.G. Application, Development and Future Opportunities of Collaborative Robots (Cobots) in Manufacturing: A Literature Review. Int. J. Hum.-Comput. Interact. 2024, 40, 915–932.

- Sherwani, F.; Asad, M.M.; Ibrahim, B.S.K.K. Collaborative Robots and Industrial Revolution 4.0 (IR 4.0). In Proceedings of the 2020 International Conference on Emerging Trends in Smart Technologies (ICETST), Karachi, Pakistan, 26–27 March 2020; pp. 1–5.

- Conti, D.; Di Nuovo, S.; Buono, S.; Di Nuovo, A. Robots in Education and Care of Children with Developmental Disabilities: A Study on Acceptance by Experienced and Future Professionals. Int. J. Soc. Robot. 2017, 9, 51–62.

- Mende, M.; Scott, M.L.; van Doorn, J.; Grewal, D.; Shanks, I. Service Robots Rising: How Humanoid Robots Influence Service Experiences and Elicit Compensatory Consumer Responses. J. Mark. Res. 2019, 56, 535–556.

- Cobb, A.B. The Bionic Human; The Rosen Publishing Group, Inc.: New York, NY, USA, 2002.

- Zhu, M.; Biswas, S.; Dinulescu, S.I.; Kastor, N.; Hawkes, E.W.; Visell, Y. Soft, Wearable Robotics and Haptics: Technologies, Trends, and Emerging Applications. Proc. IEEE 2022, 110, 246–272.

- Perry, J.C.; Rosen, J.; Burns, S. Upper-Limb Powered Exoskeleton Design. IEEE/ASME Trans. Mechatron. 2007, 12, 408–417.

- Duan, J.; Yu, S.; Tan, H.L.; Zhu, H.; Tan, C. A Survey of Embodied AI: From Simulators to Research Tasks. IEEE Trans. Emerg. Top. Comput. Intell. 2022, 6, 230–244.

- Liu, Y.; Chen, W.; Bai, Y.; Liang, X.; Li, G.; Gao, W.; Lin, L. Aligning Cyber Space With Physical World: A Comprehensive Survey on Embodied AI. IEEE/ASME Trans. Mechatron. 2025, 30, 1–22.

- Birbaumer, N. Breaking the Silence: Brain–Computer Interfaces (BCI) for Communication and Motor Control. Psychophysiology 2006, 43, 517–532.

- Fan, X.; Jiang, X.; Deng, N. Immersive Technology: A Meta-Analysis of Augmented/Virtual Reality Applications and Their Impact on Tourism Experience. Tour. Manag. 2022, 91, 104534.

- Yung, R.; Khoo-Lattimore, C. New Realities: A Systematic Literature Review on Virtual Reality and Augmented Reality in Tourism Research. Curr. Issues Tour. 2019, 22, 2056–2081.

| Dimension | Code | Definition | Decision Criteria |
|---|---|---|---|
| Agency Locus | (H) Human-dominant | Human retains primary decision-making and control | Final approval, veto rights, or manual takeover is explicit. |
| Agency Locus | (HM) Shared/Dynamic | Control dynamically allocated or jointly shared between human and machine | Requires all three conditions: (a) Human execution channel explicit; (b) Machine execution explicit; (c) Shared control/handoff mechanism present. |
| Agency Locus | (M) Machine-dominant | Machine retains primary control; human mainly sets goals or monitors | Machine autonomy or automatic policy execution stated; no human override mentioned. |
| Integration Depth | (L) Loose coupling | Functional complementarity, low interdependence; systems run in parallel | No human actor mentioned in the definition or only for advisory/approval/offline interaction; human & machine loosely connected or separate. |
| Integration Depth | (M) Moderate coupling | Substantial information exchange and feedback loops without physiological embedding | Human actor is explicit, and coordination/feedback/interaction cues are present, but no physiological/biomechanical integration. |
| Integration Depth | (H) High coupling | Physiological, cognitive, or mechanical embedding and sustained, continuous interaction; human and machine form a unified operational system | Human and machine operate as a unified operational system; explicit reference to body/device-level integration & closed-loop interaction. |
| Normative Orientation | (1) Positive stance | Highlights benefits, efficiency, capability enhancement, and empowerment | Assumes trustworthiness, improved autonomy, or better outcomes. |
| Normative Orientation | (−1) Negative stance | Highlights risks, harms, threats, or ethical concerns | Assumes loss of control, liability concerns, or erosion of trust. |
| Normative Orientation | (0) Neutral stance | Purely descriptive or definitional, no explicit value judgment | Focuses on what it is, not what it does. |
| Normative Orientation | (1,−1) Mixed stance | Contains both positive and negative normative signals | Reflects uncertain trade-offs, dual-use nature, or socio-ethical complexity. |
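As an illustration of how the Agency Locus criteria in the table above might be applied, the following Python sketch codes a definition from a handful of boolean cues. The cue names (`AgencyCues` and its fields) and the order in which the rules are tried are assumptions made for this example; the table does not state a precedence among the three codes.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class AgencyCues:
    """Hypothetical cues a coder might extract from one definition."""
    human_execution: bool    # (a) human execution channel explicit
    machine_execution: bool  # (b) machine execution explicit
    handoff_mechanism: bool  # (c) shared control/handoff mechanism present
    human_final_say: bool    # final approval, veto, or manual takeover explicit
    machine_autonomy: bool   # machine autonomy or automatic policy execution stated


def code_agency_locus(cues: AgencyCues) -> Optional[str]:
    """Return 'HM', 'H', 'M', or None when no criterion is met."""
    # HM requires all three conditions (a), (b), and (c).
    if cues.human_execution and cues.machine_execution and cues.handoff_mechanism:
        return "HM"
    # Assumed precedence: an explicit human final say is checked before autonomy.
    if cues.human_final_say:
        return "H"
    # M: autonomy stated and, having reached this branch, no human override cue.
    if cues.machine_autonomy:
        return "M"
    return None


if __name__ == "__main__":
    example = AgencyCues(True, True, True, False, False)
    print(code_agency_locus(example))
```

A definition that meets only two of the three shared-control conditions falls through to the H or M checks, which mirrors the "requires all three" wording of the criterion.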

| Cluster | Label | Size (n) | Percentage |
|---|---|---|---|
| C0 | Machine-Led Low Integration | 15 | 25.0% |
| C1 | Shared Collaborative Normative | 19 | 31.7% |
| C2 | Human-Led Medium Integration | 15 | 25.0% |
| C3 | Human-Centric Low Integration | 11 | 18.3% |
| Total | | 60 | 100.0% |

| Dimension | Level/Value | n | % of Total |
|---|---|---|---|
| Agency | Human-led | 21 | 35.0% |
| Agency | Shared | 25 | 41.7% |
| Agency | Machine-led | 14 | 23.3% |
| Integration | Low | 24 | 40.0% |
| Integration | Medium | 29 | 48.3% |
| Integration | High | 7 | 11.7% |
| Normativity | Negative | 1 | 1.7% |
| Normativity | Neutral | 36 | 60.0% |
| Normativity | Positive | 23 | 38.3% |
| Means | Agency = 0.88; Integration = 0.72; Normativity = 0.53 | | |
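The Agency and Integration means in the last row can be recovered from the level counts if the levels are scored 0, 1, 2 in the order listed. That scoring is an assumption of this sketch, not something stated in the table. Normativity is left out because this section does not state how its categories are scored.

```python
# Counts per level, copied from the table above.
counts = {
    "Agency": {"Human-led": 21, "Shared": 25, "Machine-led": 14},
    "Integration": {"Low": 24, "Medium": 29, "High": 7},
}


def ordinal_mean(level_counts):
    """Mean score with levels coded 0, 1, 2 in listed order (assumed coding)."""
    total = sum(level_counts.values())
    weighted = sum(score * n for score, n in enumerate(level_counts.values()))
    return weighted / total


means = {dim: round(ordinal_mean(levels), 2) for dim, levels in counts.items()}
print(means)  # {'Agency': 0.88, 'Integration': 0.72}
```

Under this coding, a higher Agency mean indicates more machine-led concepts and a higher Integration mean indicates tighter coupling.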

| Cluster | Label | Agency Centroid | Integration Centroid | Normativity Centroid | Top Concepts |
|---|---|---|---|---|---|
| Cluster 0 | Machine-Led Low Integration | 1.87 | 0.00 | 0.13 | Hybrid-AI, Neuro-Symbolic AI, Multi-Agent System |
| Cluster 1 | Shared Collaborative Normative | 1.00 | 1.26 | 1.00 | Hybrid Intelligence, Adjustable Autonomy, Shared Autonomy |
| Cluster 2 | Human-Led Medium Integration | 0.40 | 0.93 | 0.00 | AI-in-the-Loop (AITL), Immersive Technology (XR), Virtual Reality (VR) |
| Cluster 3 | Human-Centric Low Integration | 0.00 | 0.45 | 1.00 | Participatory AI, Augmented Decision Making |
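One way to sanity-check the centroid table is to pool the centroids, weighted by cluster size, and compare the result with the overall means reported with the pre-clustering distribution. The sketch below assumes each centroid is the arithmetic mean of its cluster's coded values; the cluster sizes (15, 19, 15, 11) and reported means (0.88, 0.72, 0.53) are taken from the earlier tables, and the function name `pooled_mean` is illustrative.

```python
# Cluster sizes for Clusters 0 to 3, from the cluster-size table.
sizes = [15, 19, 15, 11]

# Centroids per dimension, in cluster order, from the table above.
centroids = {
    "Agency": [1.87, 1.00, 0.40, 0.00],
    "Integration": [0.00, 1.26, 0.93, 0.45],
    "Normativity": [0.13, 1.00, 0.00, 1.00],
}

# Overall means as reported in the distribution table.
reported = {"Agency": 0.88, "Integration": 0.72, "Normativity": 0.53}


def pooled_mean(values, weights):
    """Size-weighted average of per-cluster means."""
    return sum(v * w for v, w in zip(values, weights)) / sum(weights)


for dim, vals in centroids.items():
    pooled = pooled_mean(vals, sizes)
    print(f"{dim}: pooled={pooled:.3f}, reported={reported[dim]:.2f}")
```

Because the centroids are rounded to two decimals, the pooled values can differ from the reported means by up to about 0.01.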

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Prahl, A.; Li, Y. Vocabulary at the Living–Machine Interface: A Narrative Review of Shared Lexicon for Hybrid AI. Biomimetics 2025, 10, 723. https://doi.org/10.3390/biomimetics10110723