DE-YOLOv13-S: Research on a Biomimetic Vision-Based Model for Yield Detection of Yunnan Large-Leaf Tea Trees

Abstract

1. Introduction

2. Materials and Methods

2.1. Dataset Construction

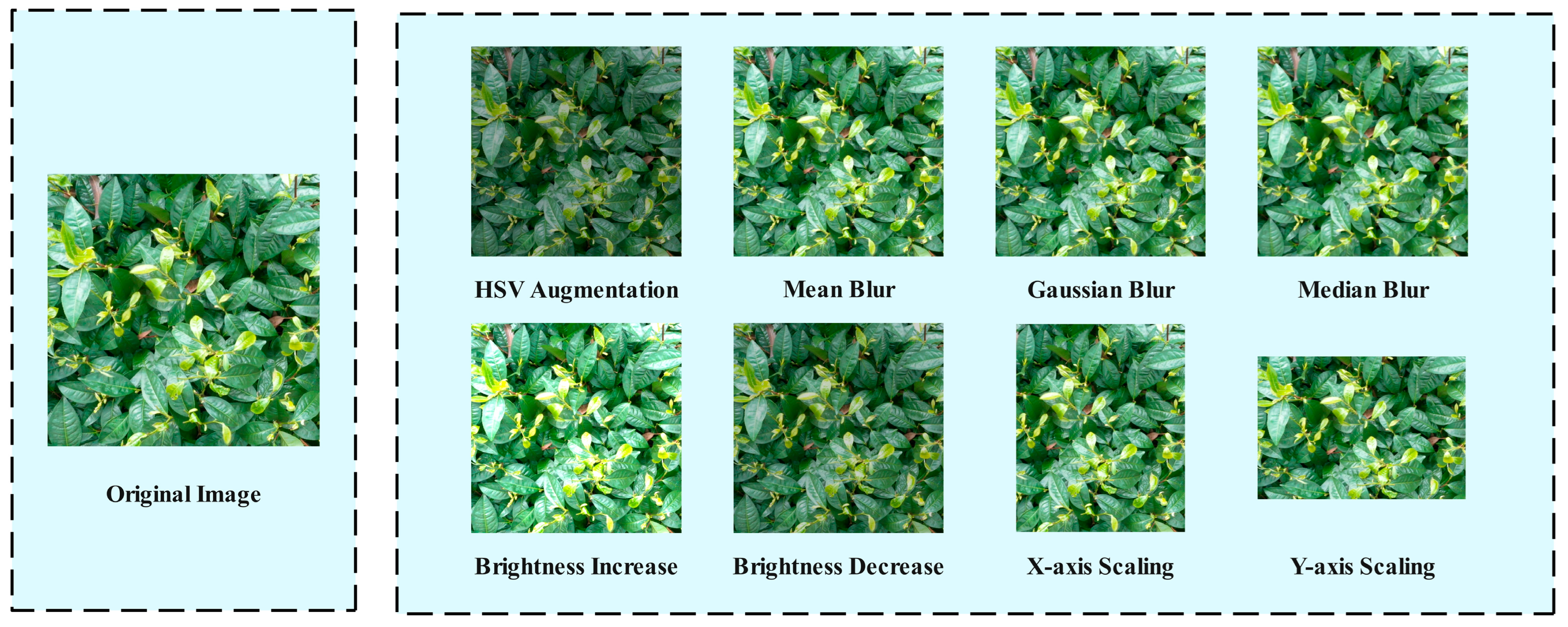

2.2. Data Augmentation

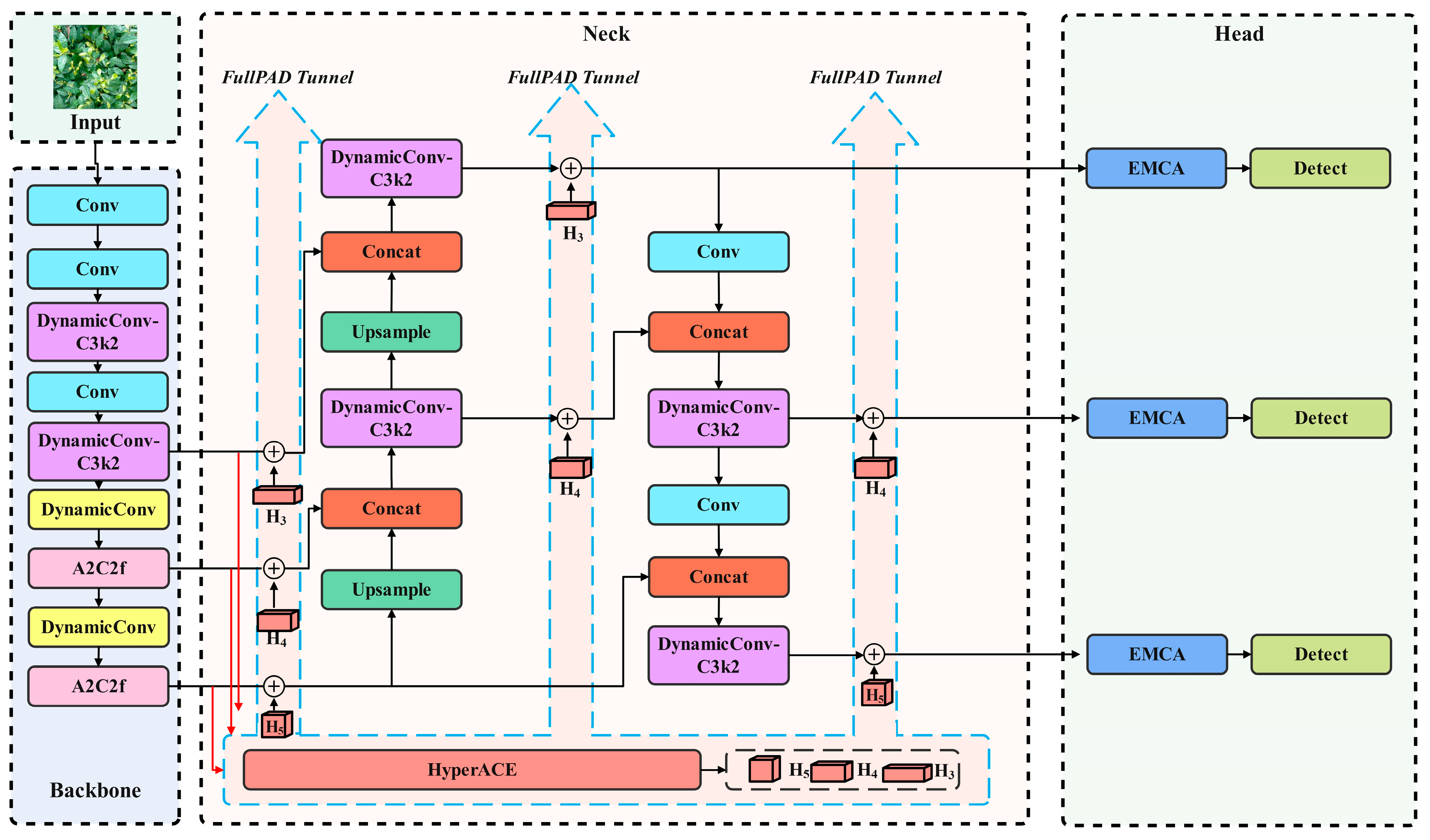

2.3. Improvements to the YOLOv13 Network

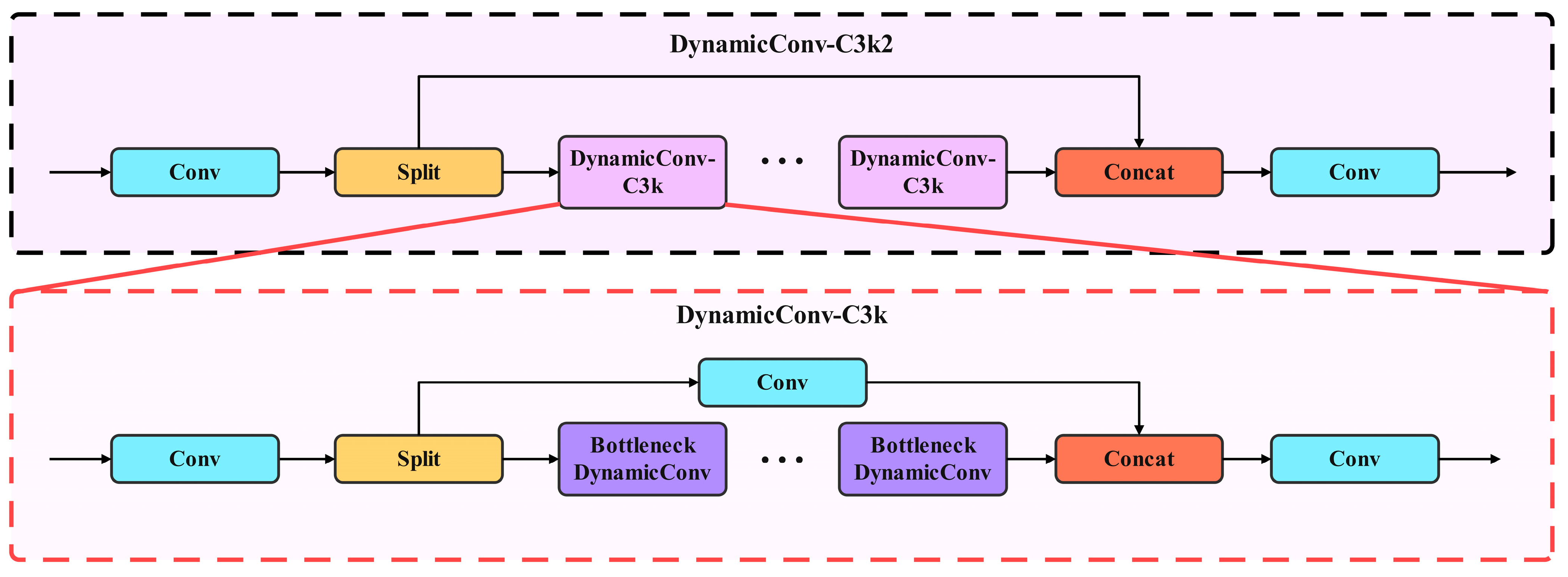

2.3.1. DynamicConv Optimization

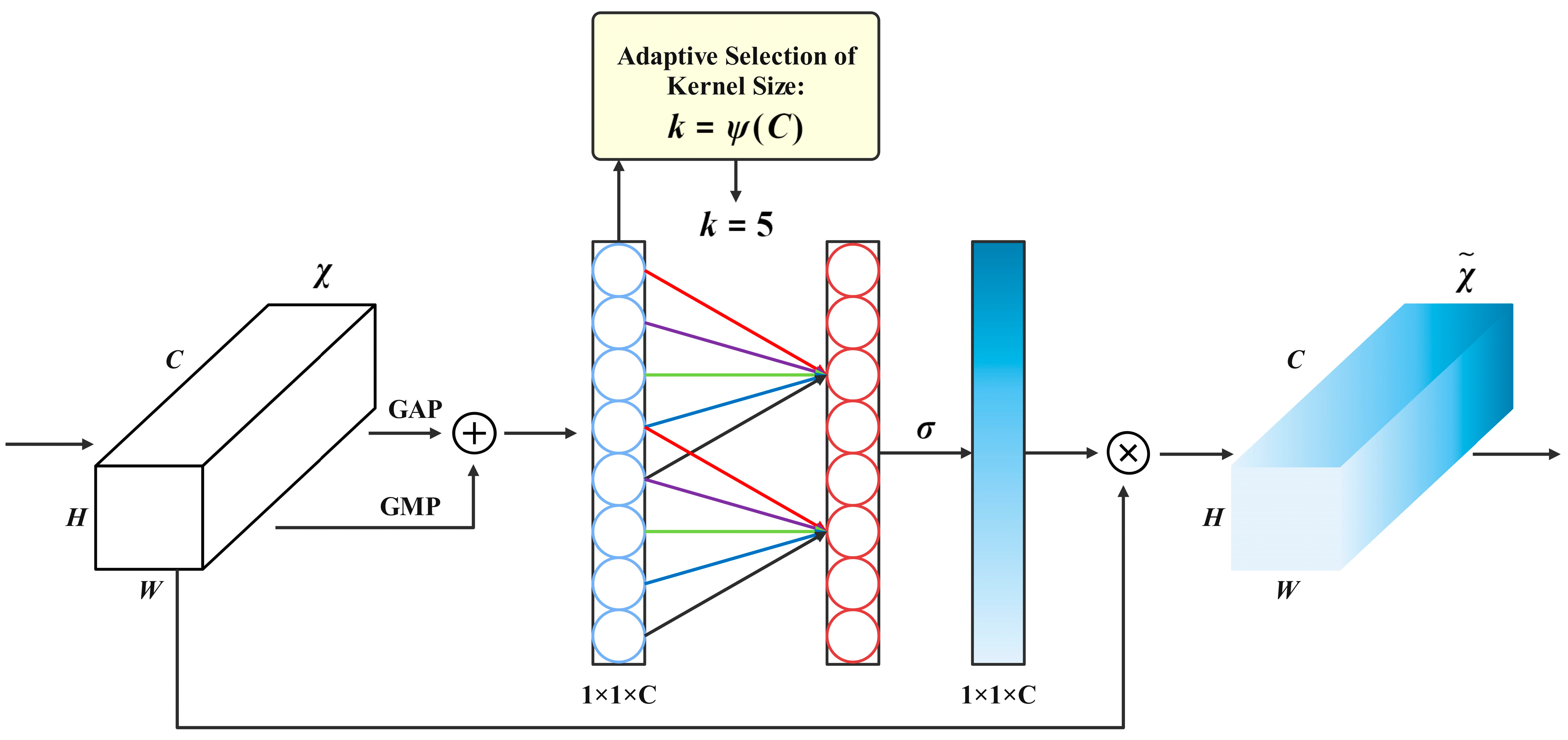

2.3.2. Efficient Mixed Pooling Channel Attention Optimization

2.3.3. Scale-Based Dynamic Loss Optimization

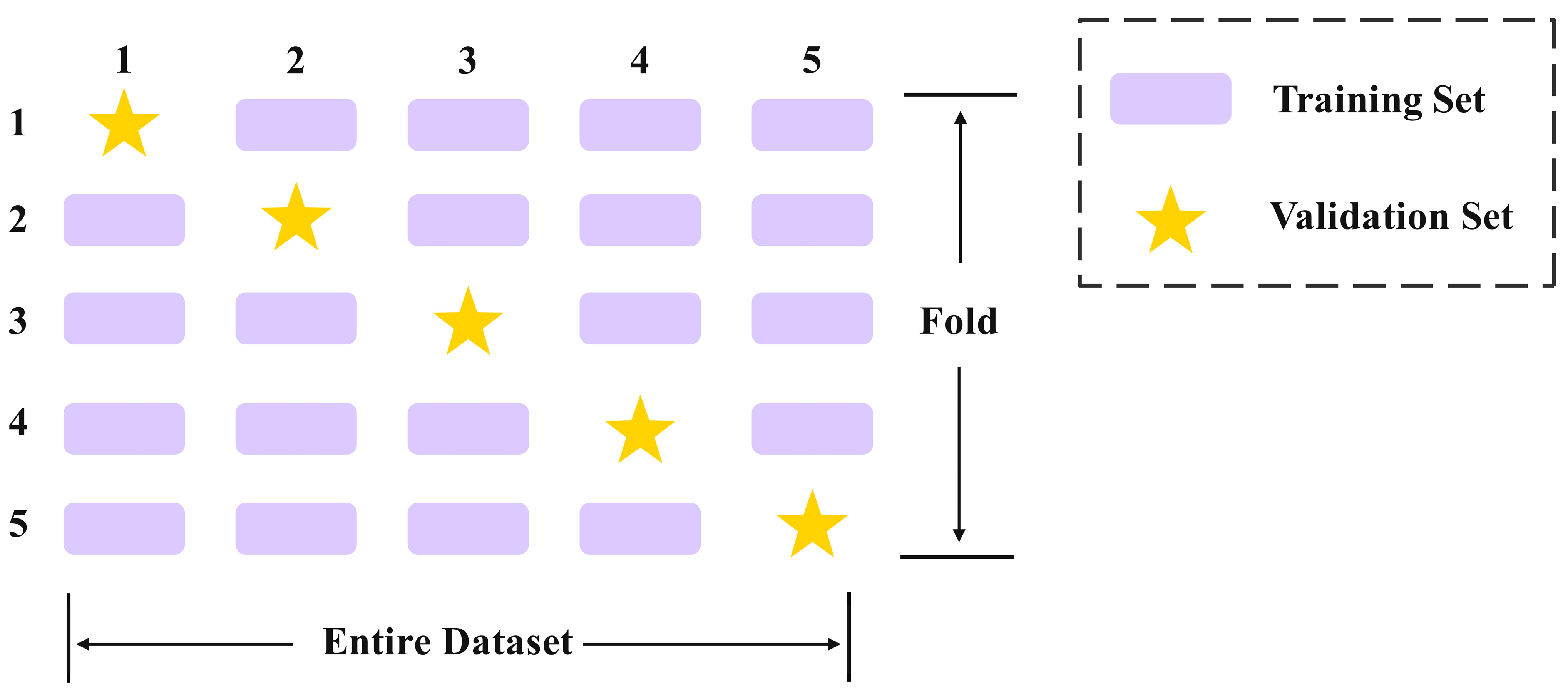

2.4. Five-Fold Cross Validation

2.5. Evaluation Metrics

3. Results and Analysis

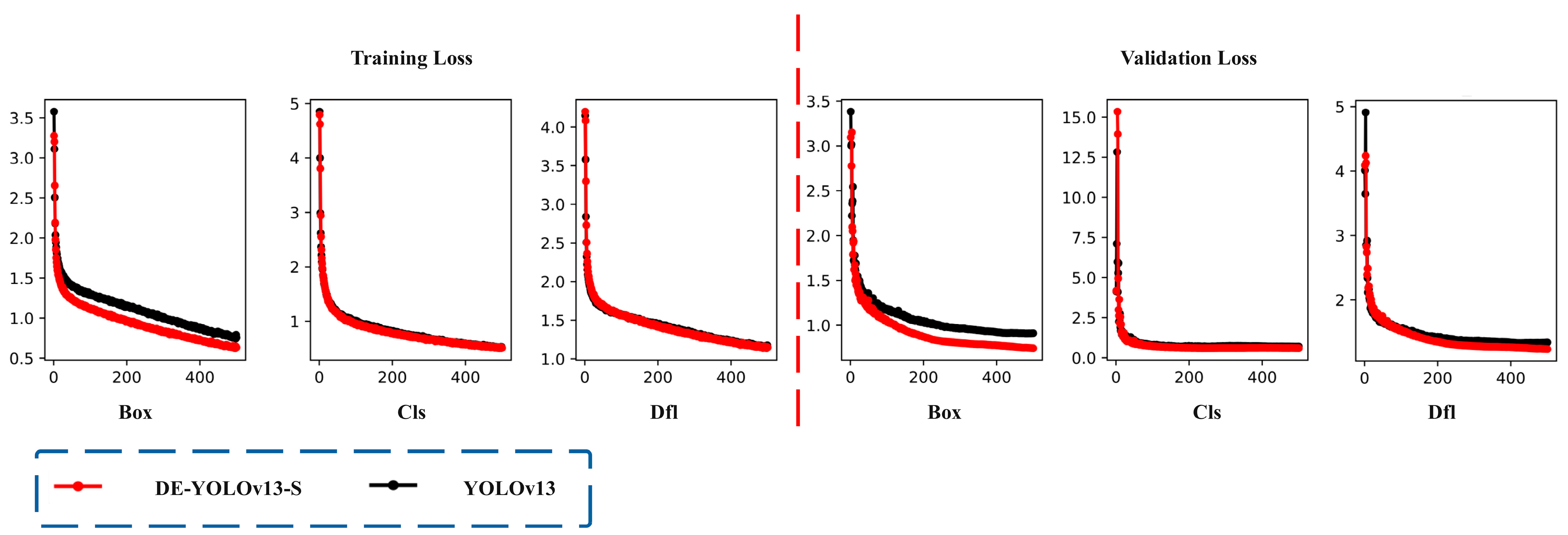

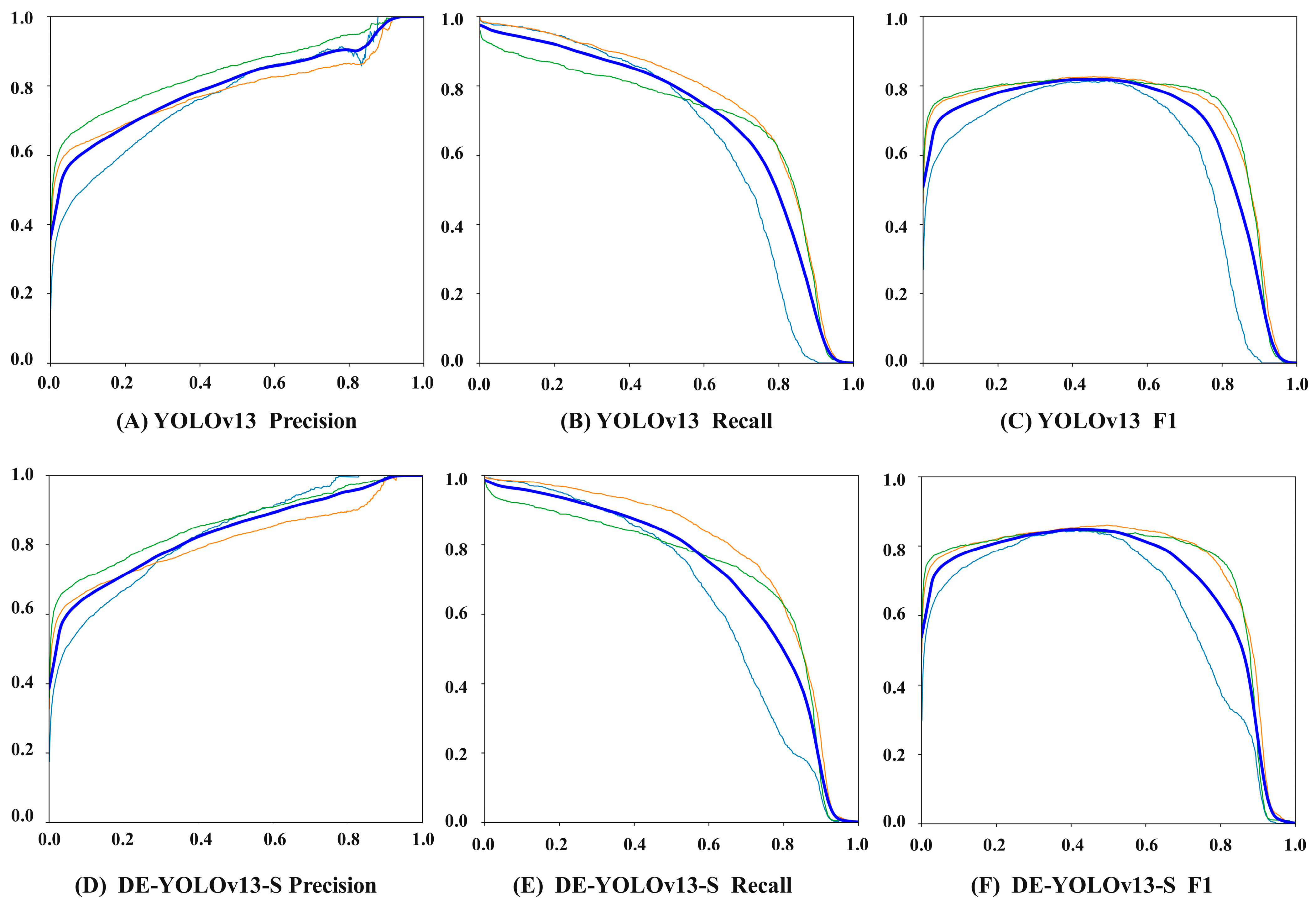

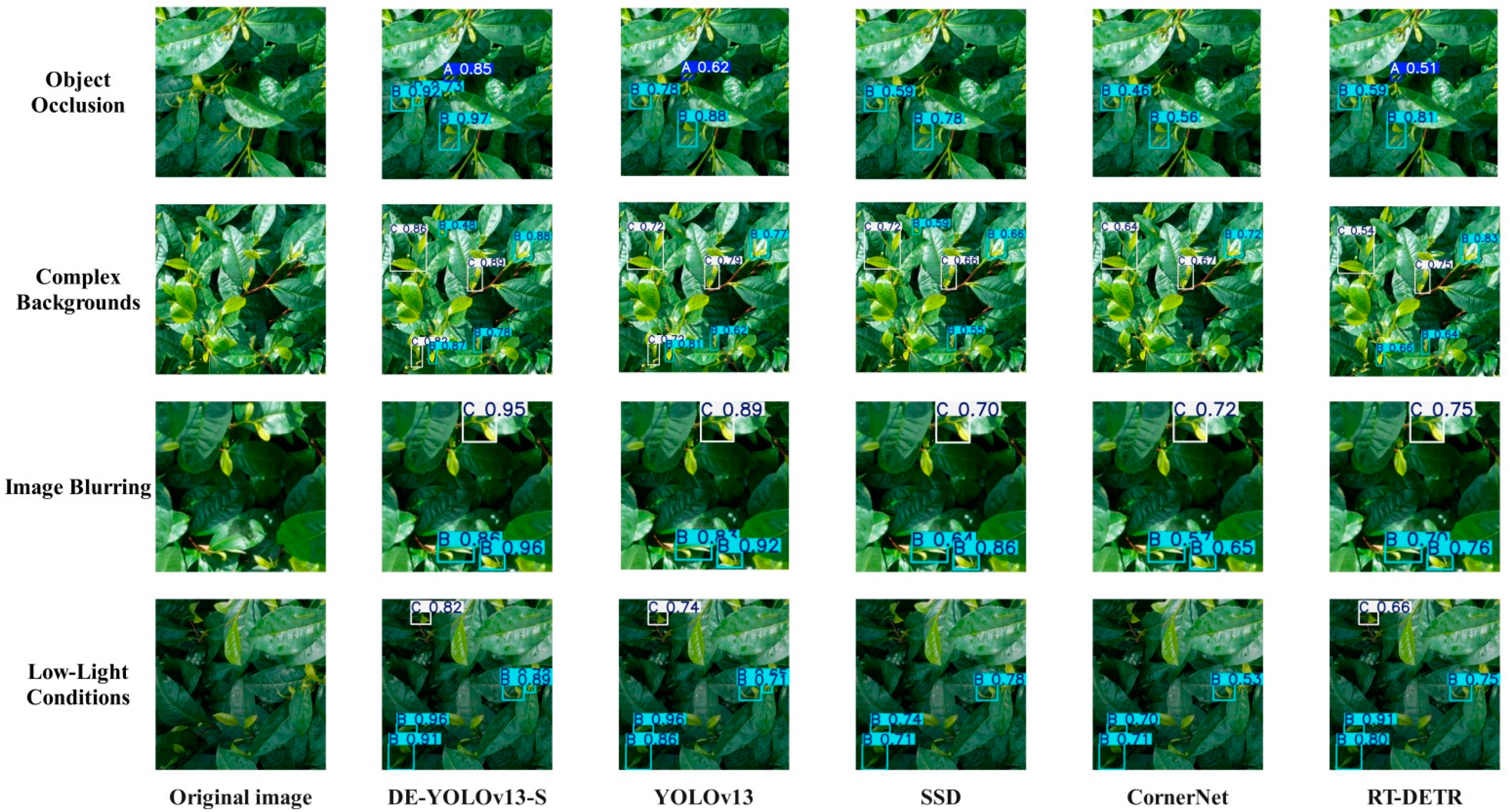

3.1. Analysis of Model Results

3.2. Ablation Experiments

3.3. Model Comparison Experiments

4. Conclusions and Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Tea Category | Original Images: Total Number of Labels | Original Images: Training Set (Five-Fold Cross-Validation) | Original Images: Test Set | External Validation Set |
|---|---|---|---|---|
| Tender Buds | 1986 | 1612 | 374 | 545 |
| One Bud and One Leaf | 2723 | 2286 | 437 | 645 |
| One Bud and Two Leaves | 2694 | 2246 | 448 | 674 |
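The label counts in the table above can be checked for internal consistency. The following is a minimal sketch, not the authors' code: the dictionary keys and function name are hypothetical, and the numbers are copied from the table. It verifies that the training and test counts add up to the total for each class and reports the share of labels assigned to training.

```python
# Label counts copied from the dataset table:
# (total labels, training set, test set, external validation set).
# The keys are hypothetical identifiers chosen for this sketch.
LABELS = {
    "tender_buds": (1986, 1612, 374, 545),
    "one_bud_one_leaf": (2723, 2286, 437, 645),
    "one_bud_two_leaves": (2694, 2246, 448, 674),
}


def split_summary(labels):
    """Check train + test == total per class and return the training share."""
    summary = {}
    for name, (total, train, test, _external) in labels.items():
        if train + test != total:
            raise ValueError(f"{name}: {train} + {test} != {total}")
        summary[name] = round(train / total, 4)
    return summary


TRAIN_SHARE = split_summary(LABELS)
TOTAL_LABELS = sum(row[0] for row in LABELS.values())
EXTERNAL_LABELS = sum(row[3] for row in LABELS.values())

if __name__ == "__main__":
    for name, share in TRAIN_SHARE.items():
        print(f"{name}: {share:.2%} of labels in the training set")
    print("labels in original images:", TOTAL_LABELS)
    print("labels in external validation set:", EXTERNAL_LABELS)
```

Under this reading of the table, each class places roughly 81 to 84 percent of its labels in the training portion, and the external validation set is counted separately from the original images.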

| ID | From | Params | Module | Arguments |
|---|---|---|---|---|
| 0 | −1 | 464 | Conv | [3, 16, 3, 2] |
| 1 | −1 | 2368 | Conv | [16, 32, 3, 2, 1, 2] |
| 2 | −1 | 15,940 | DynamicConv-C3k2 | [32, 64, 1, False, 0.25] |
| 3 | −1 | 9344 | Conv | [64, 64, 3, 2, 1, 4] |
| 4 | −1 | 63,108 | DynamicConv-C3k2 | [64, 128, 1, False, 0.25] |
| 5 | −1 | 590,596 | DynamicConv | [128, 128, 3, 2] |
| 6 | −1 | 174,720 | A2C2f | [128, 128, 2, True, 4] |
| 7 | −1 | 1,180,676 | DynamicConv | [128, 256, 3, 2] |
| 8 | −1 | 677,120 | A2C2f | [256, 256, 2, True, 1] |
| 9 | [4, 6, 8] | 273,536 | HyperACE | [128, 128, 1, 4, True, True, 0.5, 1, 'both'] |
| 10 | −1 | 0 | Upsample | [None, 2, 'nearest'] |
| 11 | 9 | 33,280 | DownsampleConv | [128] |
| 12 | [6, 9] | 1 | FullPAD_Tunnel | [] |
| 13 | [4, 10] | 1 | FullPAD_Tunnel | [] |
| 14 | [8, 11] | 1 | FullPAD_Tunnel | [] |
| 15 | −1 | 0 | Upsample | [None, 2, 'nearest'] |
| 16 | [−1, 12] | 0 | Concat | [1] |
| 17 | −1 | 175,368 | DynamicConv-C3k2 | [384, 128, 1, True] |
| 18 | [−1, 9] | 1 | FullPAD_Tunnel | [] |
| 19 | 17 | 0 | Upsample | [None, 2, 'nearest'] |
| 20 | [−1, 13] | 0 | Concat | [1] |
| 21 | −1 | 48,264 | DynamicConv-C3k2 | [256, 64, 1, True] |
| 22 | 10 | 8320 | Conv | [128, 64, 1, 1] |
| 23 | [21, 22] | 1 | FullPAD_Tunnel | [] |
| 24 | −1 | 36,992 | Conv | [64, 64, 3, 2] |
| 25 | [−1, 18] | 0 | Concat | [1] |
| 26 | −1 | 150,792 | DynamicConv-C3k2 | [192, 128, 1, True] |
| 27 | [−1, 9] | 1 | FullPAD_Tunnel | [] |
| 28 | 26 | 147,712 | Conv | [128, 128, 3, 2] |
| 29 | [−1, 14] | 0 | Concat | [1] |
| 30 | −1 | 600,584 | DynamicConv-C3k2 | [384, 256, 1, True] |
| 31 | [−1, 11] | 1 | FullPAD_Tunnel | [] |
| 32 | 23 | 4 | EMCA | [64] |
| 33 | 27 | 4 | EMCA | [128] |
| 34 | 31 | 4 | EMCA | [256] |
| 35 | [32, 33, 34] | 431,257 | Detect | [3, [64, 128, 256]] |
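The parameter counts in the layer table can be reproduced with simple arithmetic. The sketch below is an illustration under stated assumptions, not the authors' implementation. It assumes that each `Conv` row is a bias-free convolution followed by batch normalization, with arguments ordered as input channels, output channels, kernel size, stride, padding, and groups. It further assumes that each standalone `DynamicConv` row holds four expert kernels, a linear router with bias, and batch normalization; this layout is a guess chosen because it matches the listed values. The function names are hypothetical.

```python
def conv_bn_params(c_in, c_out, k, groups=1):
    """Bias-free k x k convolution weights plus BatchNorm scale and shift."""
    return (c_in // groups) * c_out * k * k + 2 * c_out


def dynamic_conv_params(c_in, c_out, k, experts=4):
    """Assumed layout: `experts` full kernels, a linear router with bias, BatchNorm."""
    kernels = experts * c_in * c_out * k * k
    router = c_in * experts + experts
    return kernels + router + 2 * c_out


# Per-layer parameter counts copied from the table, in order of ID 0 to 35.
LAYER_PARAMS = [
    464, 2368, 15940, 9344, 63108, 590596, 174720, 1180676, 677120, 273536,
    0, 33280, 1, 1, 1, 0, 0, 175368, 1, 0,
    0, 48264, 8320, 1, 36992, 0, 150792, 1, 147712, 0,
    600584, 1, 4, 4, 4, 431257,
]
TOTAL_PARAMS = sum(LAYER_PARAMS)

if __name__ == "__main__":
    print(conv_bn_params(3, 16, 3))              # layer 0
    print(conv_bn_params(16, 32, 3, groups=2))   # layer 1
    print(conv_bn_params(64, 64, 3, groups=4))   # layer 3
    print(dynamic_conv_params(128, 128, 3))      # layer 5
    print(dynamic_conv_params(128, 256, 3))      # layer 7
    print(TOTAL_PARAMS)
```

Under these assumptions the formulas return 464, 2368, 9344, 590,596, and 1,180,676 for layers 0, 1, 3, 5, and 7, and the per-layer counts sum to 4,620,460, which equals the parameter total reported for DE-YOLOv13-S in the ablation table.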

| Model | Precision (%) | Recall (%) | mAP (%) | FLOPs (G) | Parameters | Gradients | FPS |
|---|---|---|---|---|---|---|---|
| YOLOv13 | 78.54 | 85.22 | 88.71 | 6.4 | 2,460,496 | 2,460,480 | 62.11 |
| D-YOLOv13 | 80.25 | 86.32 | 90.07 | 6.2 | 4,620,448 | 4,620,432 | 64.51 |
| E-YOLOv13 | 81.28 | 85.31 | 89.34 | 6.4 | 2,460,508 | 2,460,492 | 61.73 |
| YOLOv13-S | 79.46 | 86.94 | 89.56 | 6.4 | 2,460,496 | 2,460,480 | 63.69 |
| DE-YOLOv13 | 82.12 | 85.58 | 90.97 | 6.2 | 4,620,460 | 4,620,444 | 63.29 |
| D-YOLOv13-S | 82.03 | 87.01 | 91.18 | 6.2 | 4,620,448 | 4,620,432 | 64.94 |
| E-YOLOv13-S | 81.31 | 87.12 | 90.92 | 6.4 | 2,460,508 | 2,460,492 | 62.50 |
| DE-YOLOv13-S | 82.32 | 87.26 | 92.06 | 6.2 | 4,620,460 | 4,620,444 | 64.10 |
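To read the ablation table as gains over the baseline, each row can be subtracted from the YOLOv13 row. The sketch below is illustrative only: the values are copied from the table, and the function name is hypothetical. The prefixes D, E, and S presumably correspond to the DynamicConv, EMCA, and scale-based dynamic loss modifications named in Section 2.3, although the table itself does not say so.

```python
# (precision %, recall %, mAP %, FPS) copied from the ablation table.
ABLATION = {
    "YOLOv13": (78.54, 85.22, 88.71, 62.11),
    "D-YOLOv13": (80.25, 86.32, 90.07, 64.51),
    "E-YOLOv13": (81.28, 85.31, 89.34, 61.73),
    "YOLOv13-S": (79.46, 86.94, 89.56, 63.69),
    "DE-YOLOv13": (82.12, 85.58, 90.97, 63.29),
    "D-YOLOv13-S": (82.03, 87.01, 91.18, 64.94),
    "E-YOLOv13-S": (81.31, 87.12, 90.92, 62.50),
    "DE-YOLOv13-S": (82.32, 87.26, 92.06, 64.10),
}


def gains_over(baseline, table):
    """Return per-metric differences of every other row against the baseline row."""
    base = table[baseline]
    return {
        name: tuple(round(value - ref, 2) for value, ref in zip(row, base))
        for name, row in table.items()
        if name != baseline
    }


GAINS = gains_over("YOLOv13", ABLATION)

if __name__ == "__main__":
    for name, (d_p, d_r, d_map, d_fps) in GAINS.items():
        print(f"{name}: precision {d_p:+.2f}, recall {d_r:+.2f}, "
              f"mAP {d_map:+.2f}, FPS {d_fps:+.2f}")
```

For the full model, the differences are +3.78 points of precision, +2.04 points of recall, +3.35 points of mAP, and +1.99 FPS relative to YOLOv13.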

| Model | Precision (%) | Recall (%) | F1 (%) | A_AP (%) | B_AP (%) | C_AP (%) | mAP (%) |
|---|---|---|---|---|---|---|---|
| DE-YOLOv13-S | 82.32 | 87.26 | 84.72 | 93.11 | 94.62 | 88.45 | 92.06 |
| YOLOv13 | 78.54 | 85.22 | 81.74 | 90.52 | 91.54 | 84.07 | 88.71 |
| SSD | 74.19 | 77.49 | 75.80 | 79.55 | 79.83 | 77.56 | 78.98 |
| CornerNet | 70.29 | 77.36 | 73.66 | 76.67 | 78.33 | 74.95 | 76.65 |
| RT-DETR | 78.18 | 83.66 | 80.83 | 87.76 | 89.23 | 83.67 | 86.89 |
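The F1 and mAP columns of the comparison table follow from the other columns. The sketch below recomputes them using the standard definitions, F1 as the harmonic mean of precision and recall and mAP as the unweighted mean of the three per-class AP values. It is a consistency check on the reported numbers rather than the authors' evaluation code, and the function names are hypothetical.

```python
# (precision, recall, F1, A_AP, B_AP, C_AP, mAP) in percent,
# copied from the model comparison table.
ROWS = {
    "DE-YOLOv13-S": (82.32, 87.26, 84.72, 93.11, 94.62, 88.45, 92.06),
    "YOLOv13": (78.54, 85.22, 81.74, 90.52, 91.54, 84.07, 88.71),
    "SSD": (74.19, 77.49, 75.80, 79.55, 79.83, 77.56, 78.98),
    "CornerNet": (70.29, 77.36, 73.66, 76.67, 78.33, 74.95, 76.65),
    "RT-DETR": (78.18, 83.66, 80.83, 87.76, 89.23, 83.67, 86.89),
}


def f1_score(precision, recall):
    """Harmonic mean of precision and recall (same units as the inputs)."""
    return 2 * precision * recall / (precision + recall)


def mean_ap(*class_aps):
    """Unweighted mean of the per-class average precision values."""
    return sum(class_aps) / len(class_aps)


def find_mismatches(rows, tol=0.005 + 1e-9):
    """Return models whose reported F1 or mAP differs from the recomputed value.

    The tolerance is half a unit in the second decimal place, since the
    table reports two decimals.
    """
    bad = []
    for name, (p, r, f1, a_ap, b_ap, c_ap, m_ap) in rows.items():
        if abs(f1_score(p, r) - f1) > tol or abs(mean_ap(a_ap, b_ap, c_ap) - m_ap) > tol:
            bad.append(name)
    return bad


MISMATCHES = find_mismatches(ROWS)

if __name__ == "__main__":
    for name, (p, r, *_rest) in ROWS.items():
        print(f"{name}: recomputed F1 = {f1_score(p, r):.2f}")
    print("rows that disagree with the table:", MISMATCHES)
```

All five rows agree with the recomputed values to two decimal places; for example, the DE-YOLOv13-S precision of 82.32 and recall of 87.26 give an F1 of 84.72.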

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhang, S.; Guo, X.; Tan, M.; Yang, C.; Wang, Z.; Li, G.; Wang, B. DE-YOLOv13-S: Research on a Biomimetic Vision-Based Model for Yield Detection of Yunnan Large-Leaf Tea Trees. Biomimetics 2025, 10, 724. https://doi.org/10.3390/biomimetics10110724
