Extended Kalman Filter-Based Visual Odometry in Dynamic Environments Using Modified 1-Point RANSAC

Abstract

1. Introduction

- (1) A new EKF-based visual odometry algorithm for stereo cameras is proposed that uses both static and dynamic landmarks for ego-pose estimation. The method uses instance segmentation to detect and track objects in the image, which lets it exploit image characteristics. In multiple object tracking on roads, it is also robust to truncation at the edge of the field of view, which often shifts the centroid of the observed point cloud as an object moves near the image boundary.

- (2) Outlier rejection in dynamic environments is performed by combining 1-point RANSAC with RANSAC-based transformation estimation from point clouds. A procedure is proposed for rejecting outliers and for deciding whether each object is static or dynamic.

- (3) Tests are conducted on a real-world image dataset to evaluate ego-pose estimation performance in noisy dynamic environments.

2. Modified 1-Point RANSAC for Dynamic Environments

2.1. Models for EKF

2.2. Classical 1-Point RANSAC Assuming Static Environments

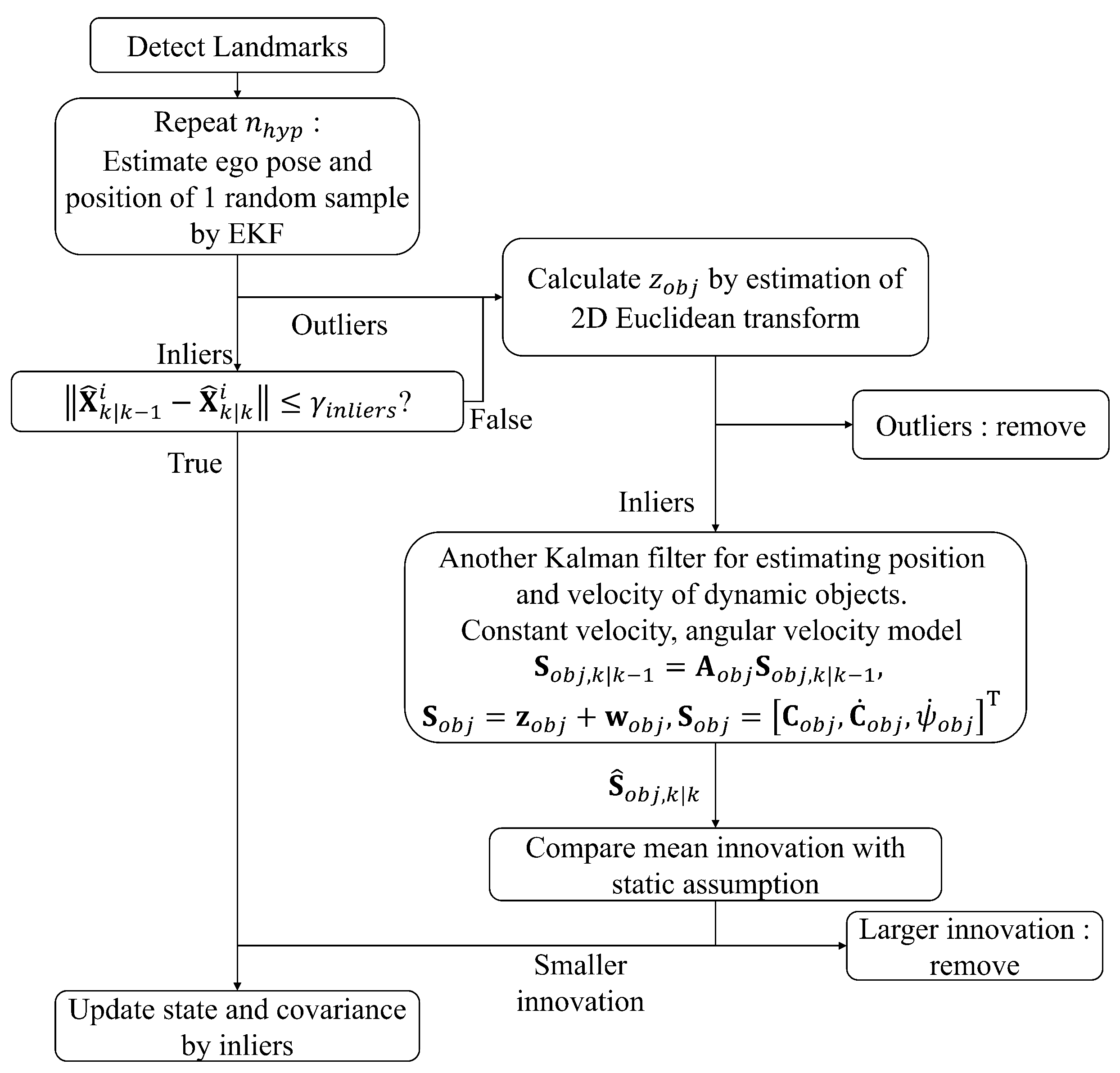

2.3. Modified 1-Point RANSAC Assuming Dynamic Environments

- (1) Measurement (Section 2.3.1): Landmark positions are reconstructed in the camera coordinate system from stereo image pairs. Instance segmentation is then applied to classify features into object and background regions.

- (2) Classical 1-point RANSAC (Section 2.3.2): Matched landmarks between consecutive frames are processed using the standard 1-point RANSAC to classify inliers and outliers. Outliers, as well as inliers with large innovation, are treated as potentially dynamic features. The estimated ego-pose at this stage serves as a temporary estimate for the following steps.

- (3) Object motion estimation (Section 2.3.3): The rigid-body motion of each detected object is estimated from its point cloud using a RANSAC-based Euclidean transform. A secondary Kalman filter is then applied to refine the state of each object using the estimated transformation.

- (4) Augmented state estimation (Section 2.3.4): The ego-pose and landmark states are jointly updated using inliers from both static and dynamic landmarks within the EKF framework, enabling consistent estimation under dynamic scenes.

- (5) Mapping of newly detected landmarks (Section 2.3.5): Newly observed or unmatched landmarks are initialized and incorporated into the map using the filtered ego-pose.
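The measurement step of this pipeline can be illustrated with a minimal sketch. It assumes a rectified stereo pair with a pinhole model, so depth follows from disparity, and an instance mask in which the value 0 marks background. The function names, parameter names, and the mask convention are hypothetical and are not taken from the paper.

```python
import numpy as np

def triangulate_stereo(u_left, v_left, u_right, fx, fy, cx, cy, baseline):
    """Back-project rectified stereo matches into the left-camera frame.

    Assumes a pinhole model and rectified images (illustrative only).
    Returns an (N, 3) array of points and a mask of valid disparities.
    """
    u_left = np.asarray(u_left, dtype=float)
    v_left = np.asarray(v_left, dtype=float)
    disparity = u_left - np.asarray(u_right, dtype=float)
    valid = disparity > 0.0                      # non-positive disparity has no finite depth
    z = np.full_like(u_left, np.nan)
    z[valid] = fx * baseline / disparity[valid]  # depth from disparity
    x = (u_left - cx) * z / fx
    y = (v_left - cy) * z / fy
    return np.stack([x, y, z], axis=1), valid

def label_features(u, v, instance_mask):
    """Look up the instance id under each feature (0 = background, assumed)."""
    rows = np.clip(np.round(v).astype(int), 0, instance_mask.shape[0] - 1)
    cols = np.clip(np.round(u).astype(int), 0, instance_mask.shape[1] - 1)
    return instance_mask[rows, cols]
```

Features labeled 0 would be treated as background candidates, while features sharing a nonzero id form the point cloud of one tracked object.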

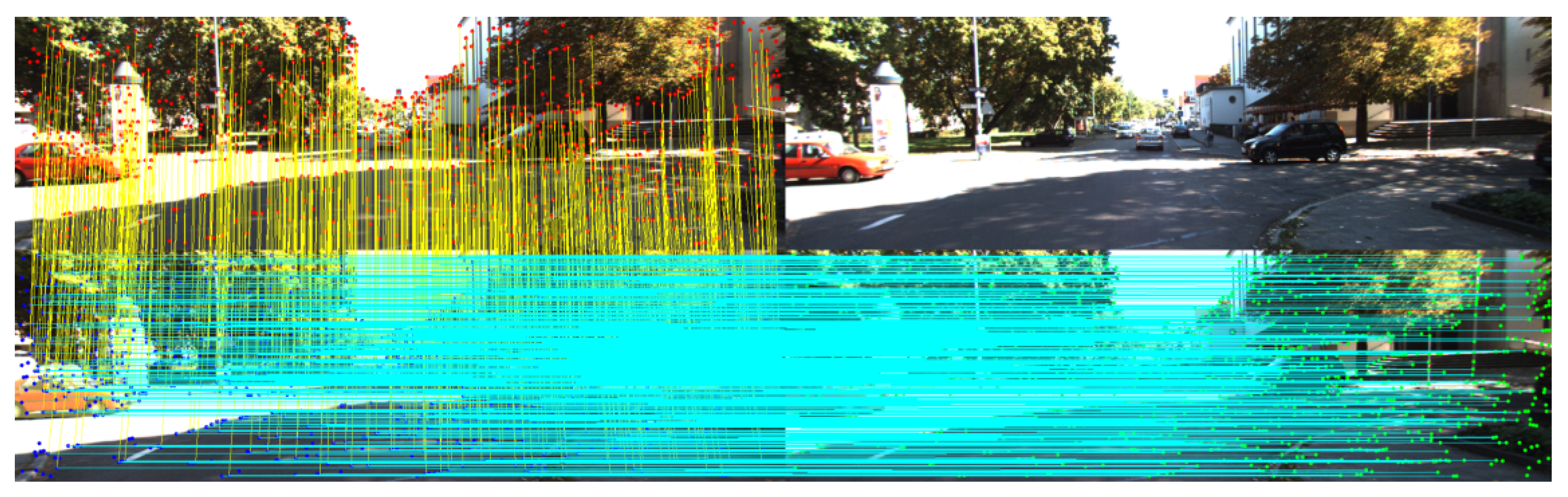

2.3.1. Feature Detection, Matching and Multiple Object Tracking

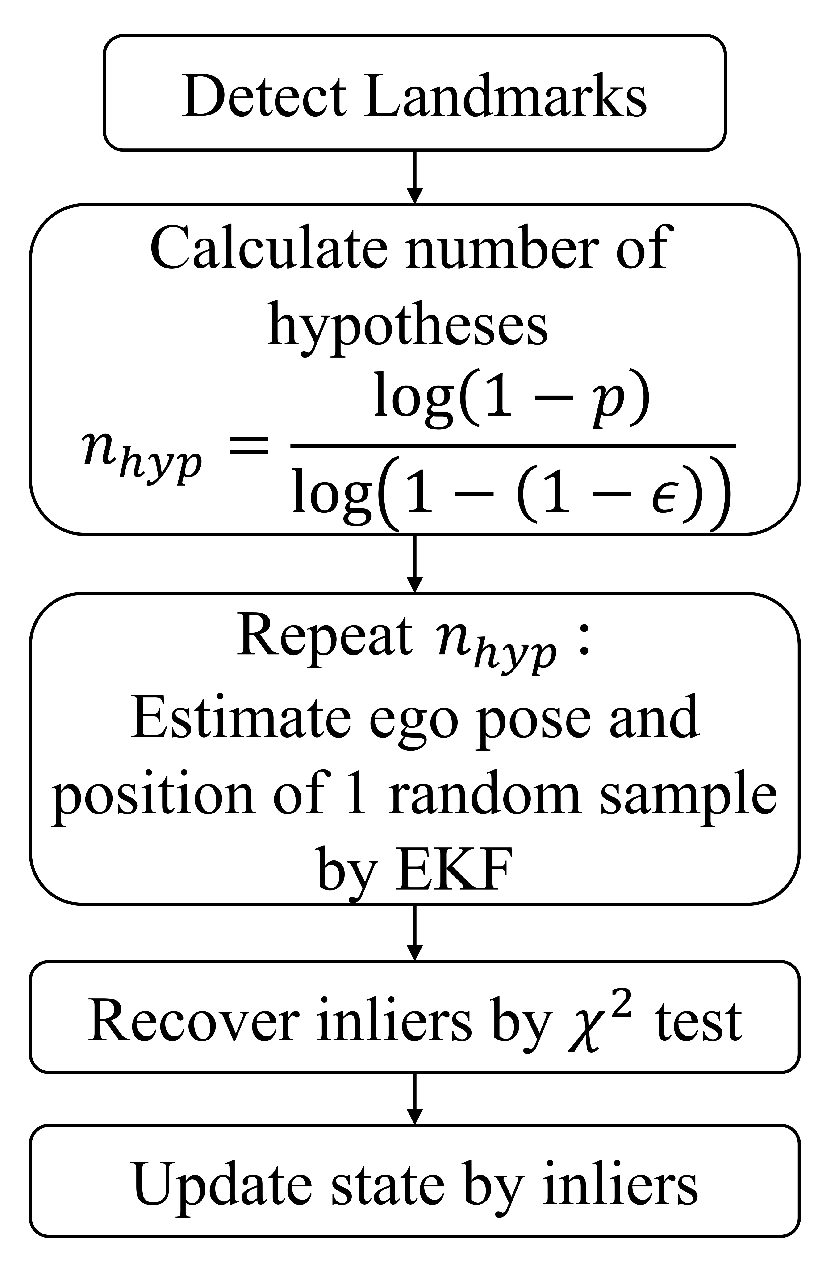

2.3.2. 1-Point RANSAC Assuming Static Environment

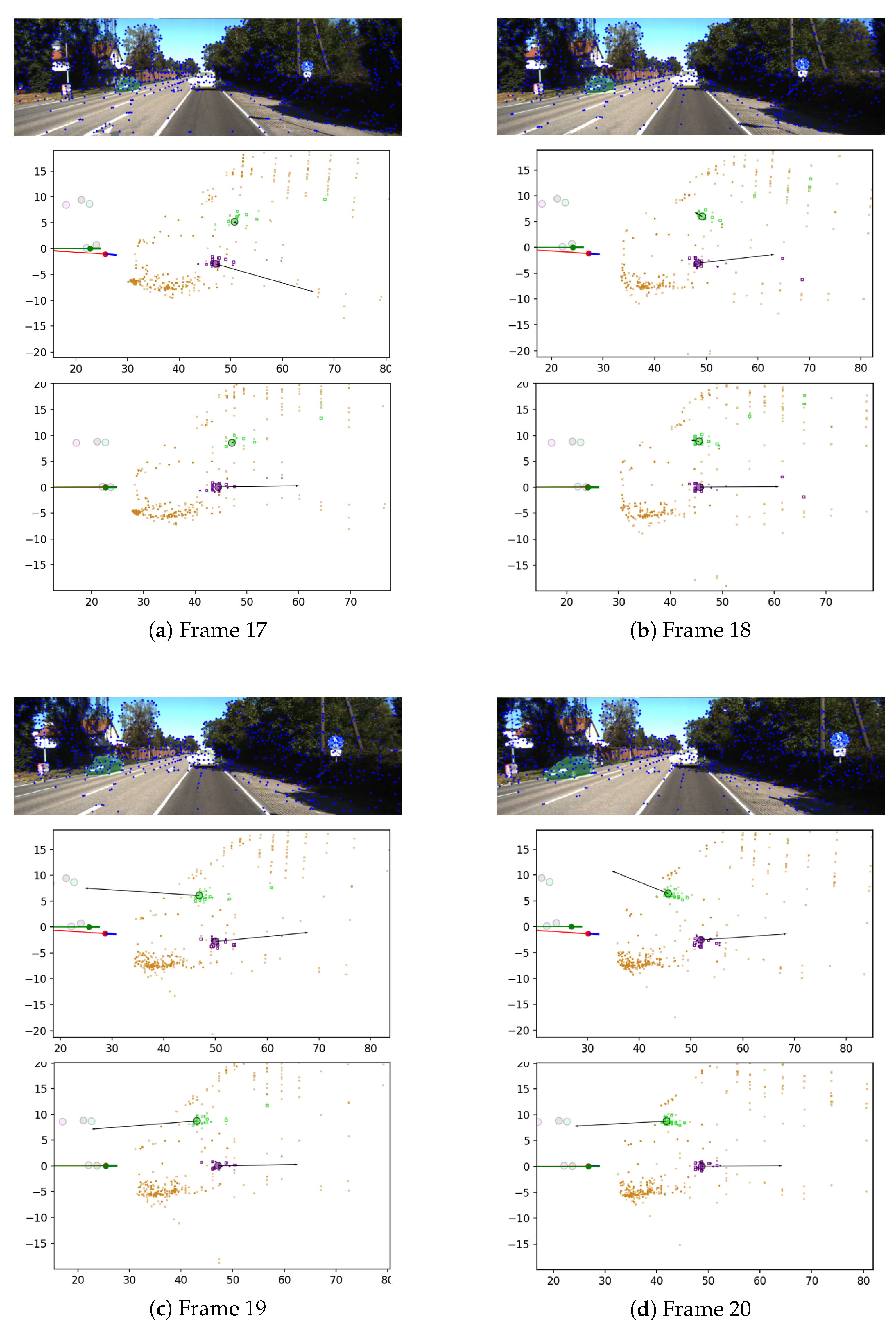

2.3.3. Estimation of Planar Motion of Dynamic Objects with Kalman Filter

2.3.4. Augmented Estimation with Both Static and Dynamic Landmarks

- (1) State Prediction

- (2) Covariance Prediction

- (3) Residual

- (4) Innovation Covariance

- (5) Kalman Gain

- (6) State Update

- (7) Covariance Update
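The seven steps above follow the standard EKF recursion, which can be sketched generically as below. This is a textbook form under assumed interfaces, not the paper's augmented-state formulation: `f` and `h` are hypothetical process and measurement functions, and `F_jac` and `H_jac` are hypothetical callables returning their Jacobians.

```python
import numpy as np

def ekf_step(x, P, f, F_jac, Q, z, h, H_jac, R):
    """One generic EKF predict/update cycle (illustrative sketch)."""
    # (1) State prediction
    x_pred = f(x)
    # (2) Covariance prediction, Jacobian evaluated at the prior state
    F = F_jac(x)
    P_pred = F @ P @ F.T + Q
    # (3) Residual (innovation)
    y = z - h(x_pred)
    # (4) Innovation covariance, Jacobian evaluated at the predicted state
    H = H_jac(x_pred)
    S = H @ P_pred @ H.T + R
    # (5) Kalman gain K = P H^T S^{-1}, computed without forming an explicit inverse
    K = np.linalg.solve(S, H @ P_pred).T
    # (6) State update
    x_new = x_pred + K @ y
    # (7) Covariance update
    P_new = (np.eye(len(x)) - K @ H) @ P_pred
    return x_new, P_new, y, S
```

Returning the residual and innovation covariance alongside the state is a convenience chosen here so that a caller can gate measurements on innovation size.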

2.3.5. Mapping of Newly Detected Landmarks

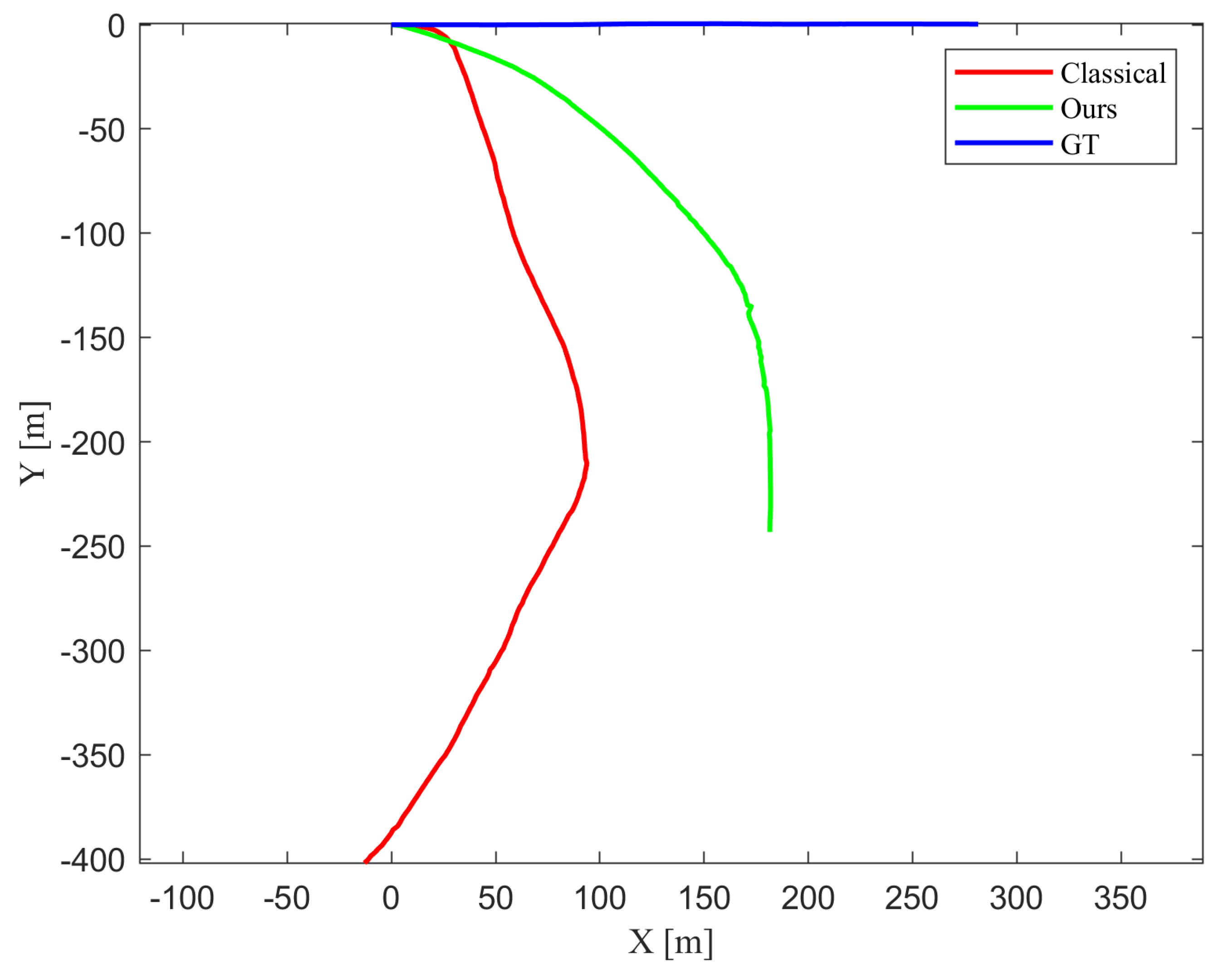

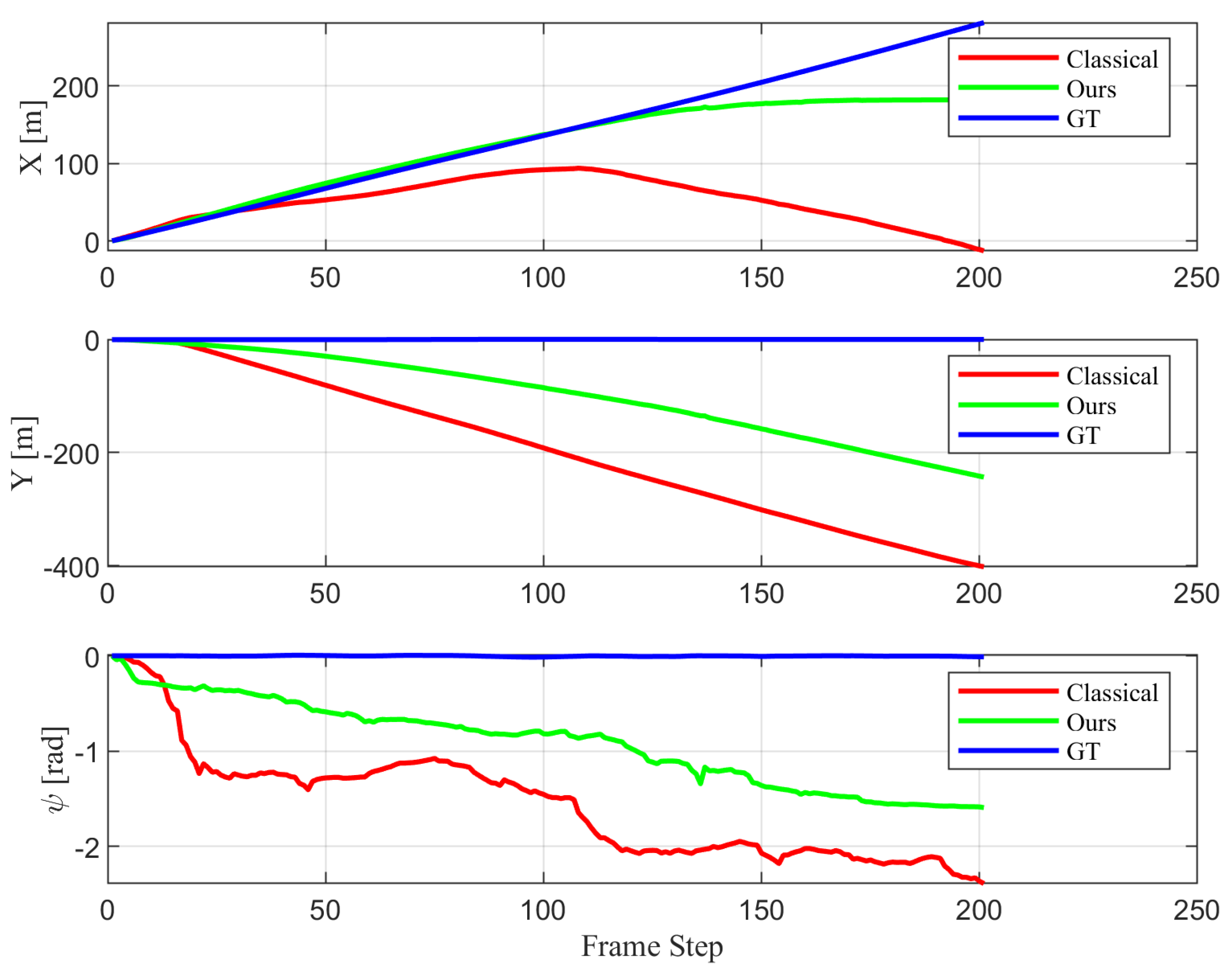

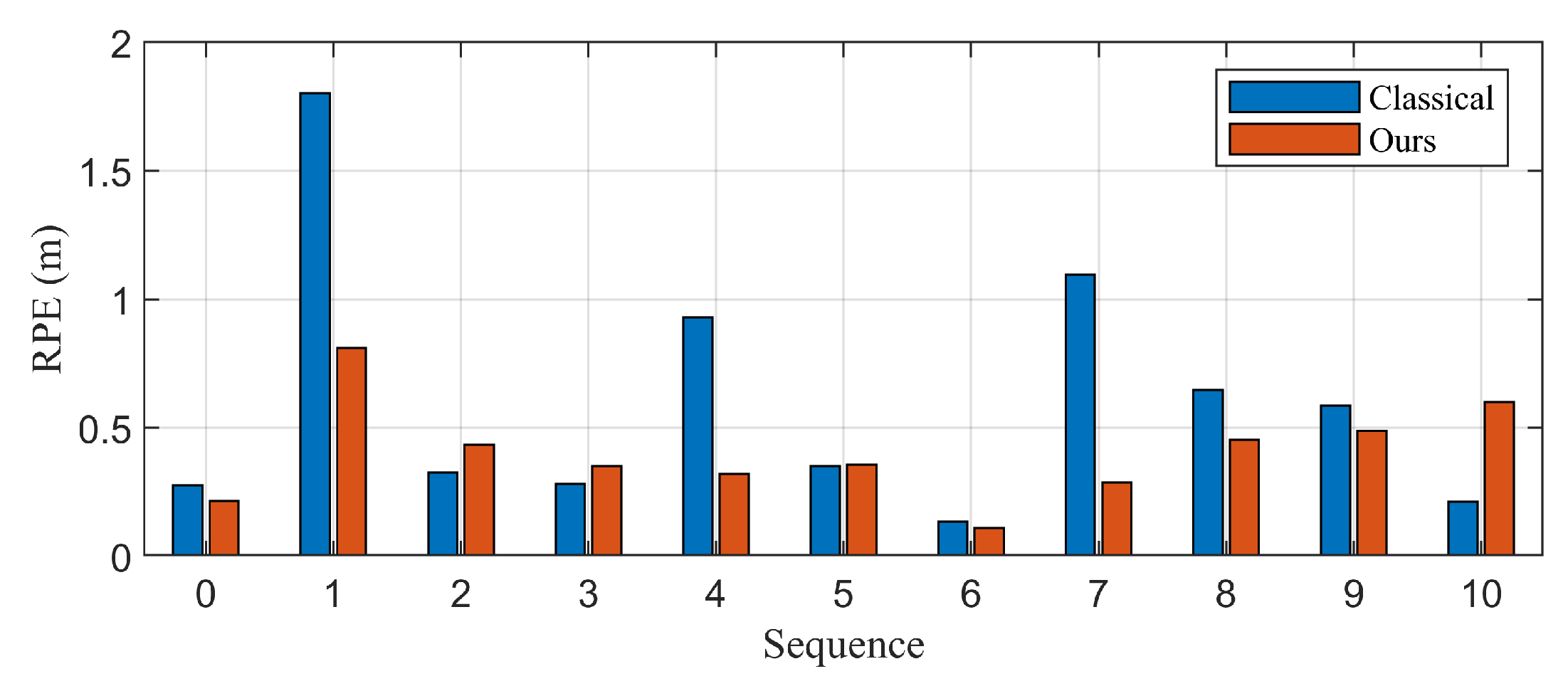

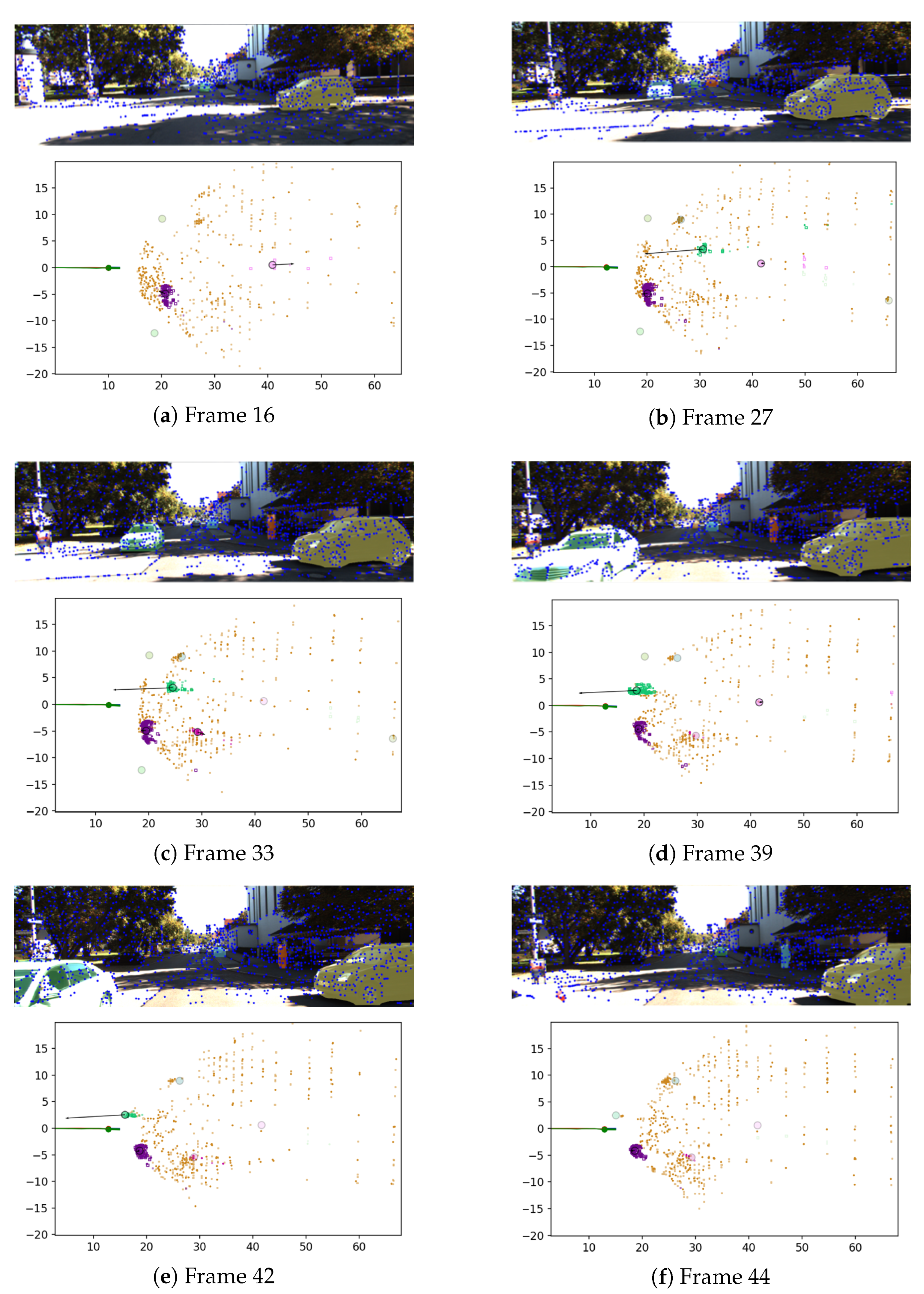

3. Evaluation

3.1. Environment Settings

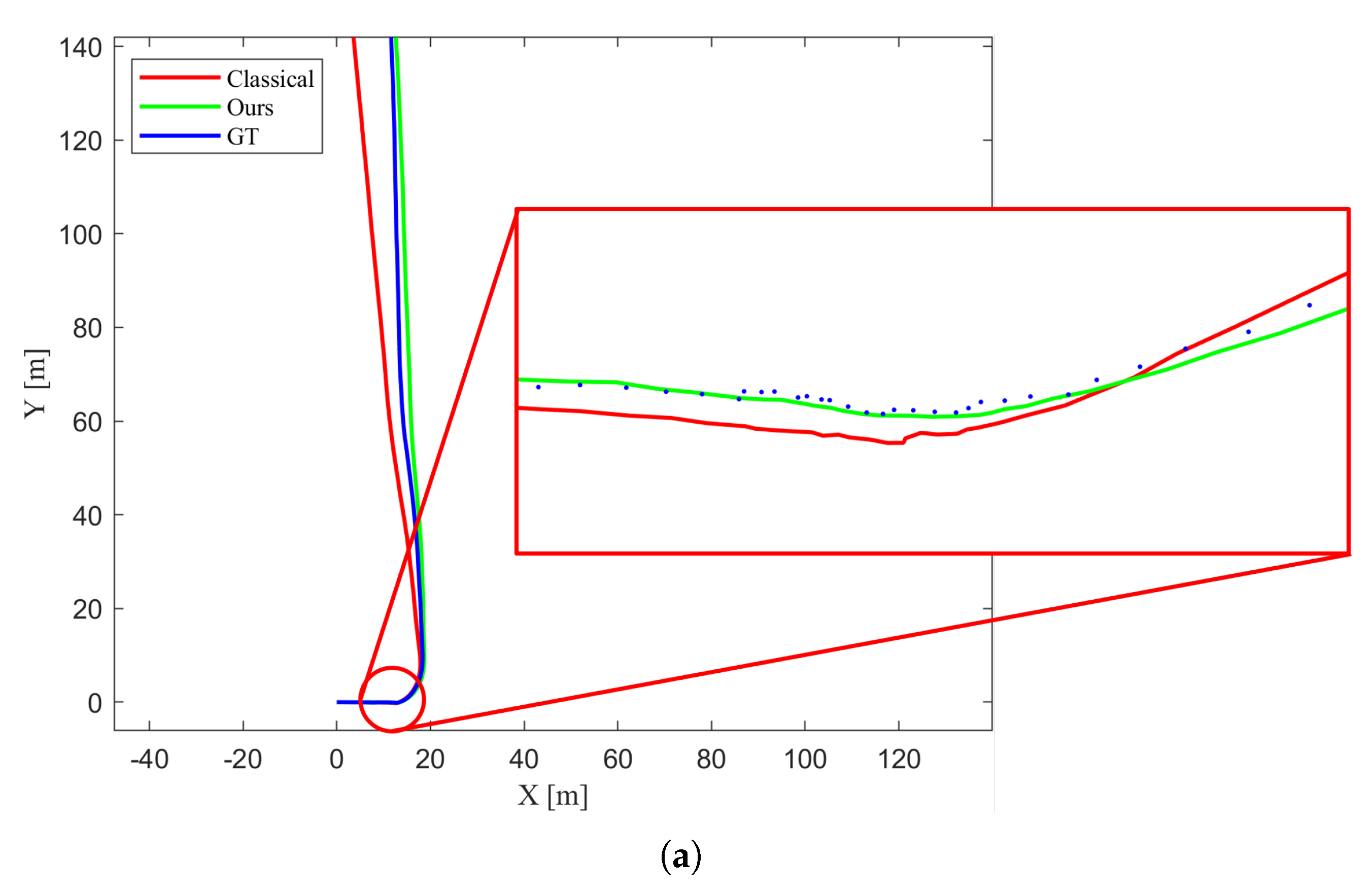

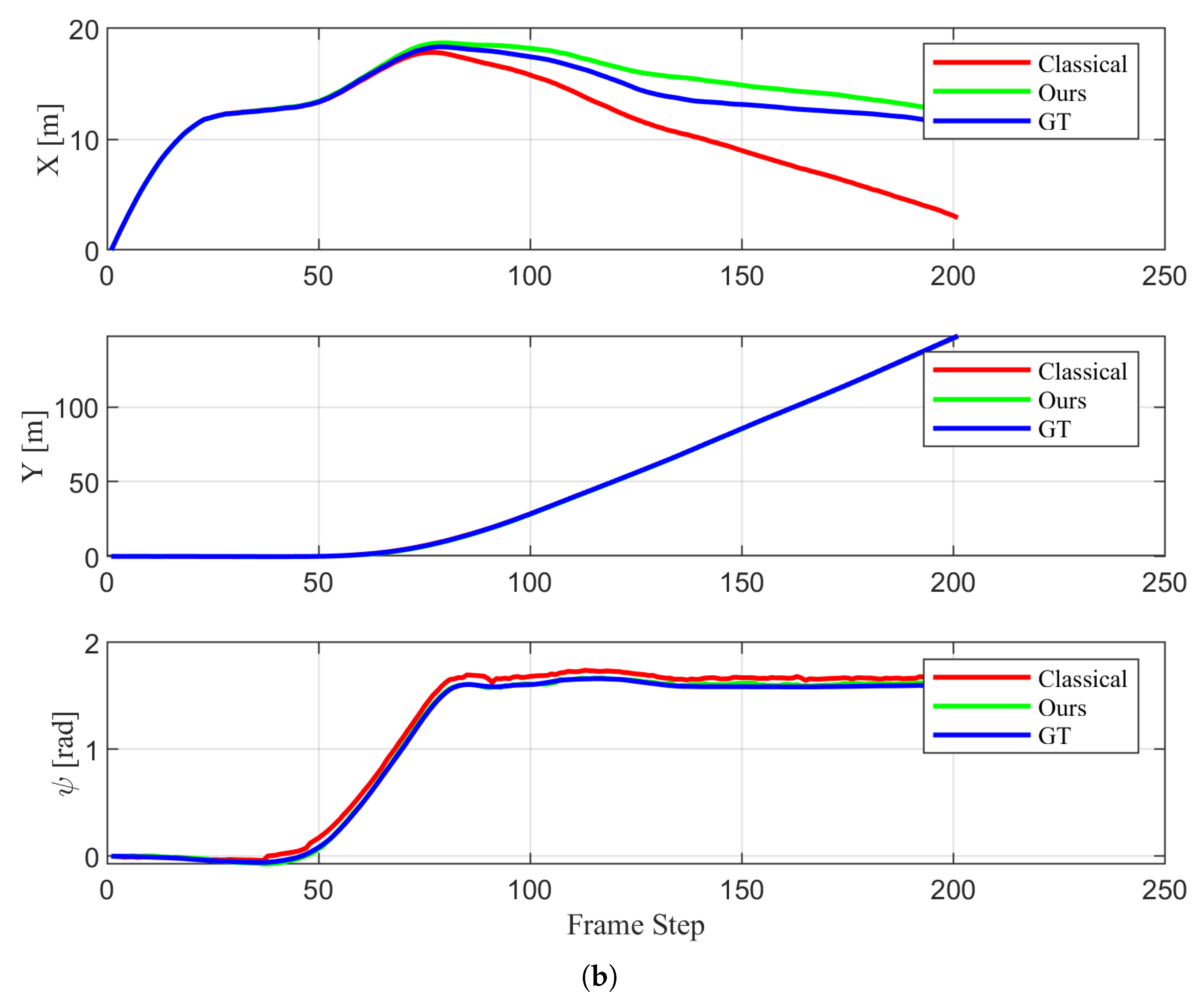

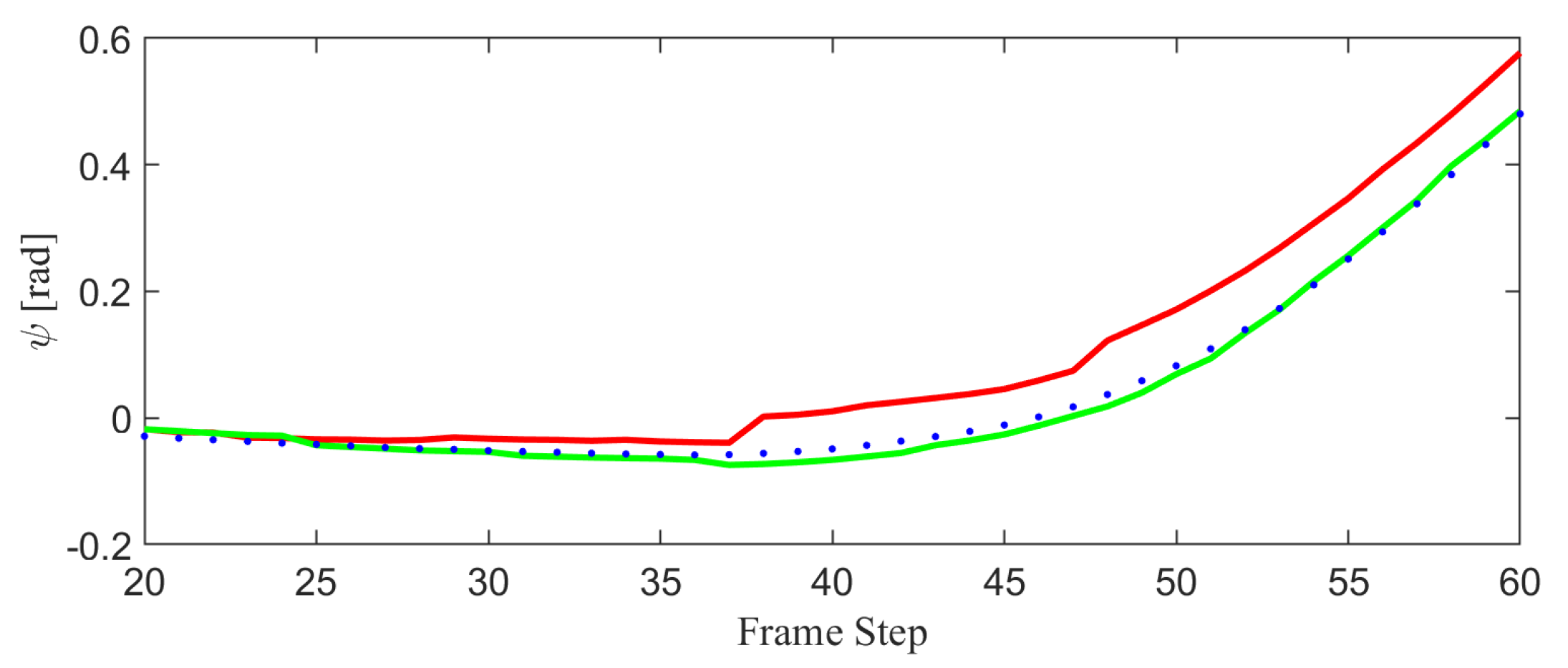

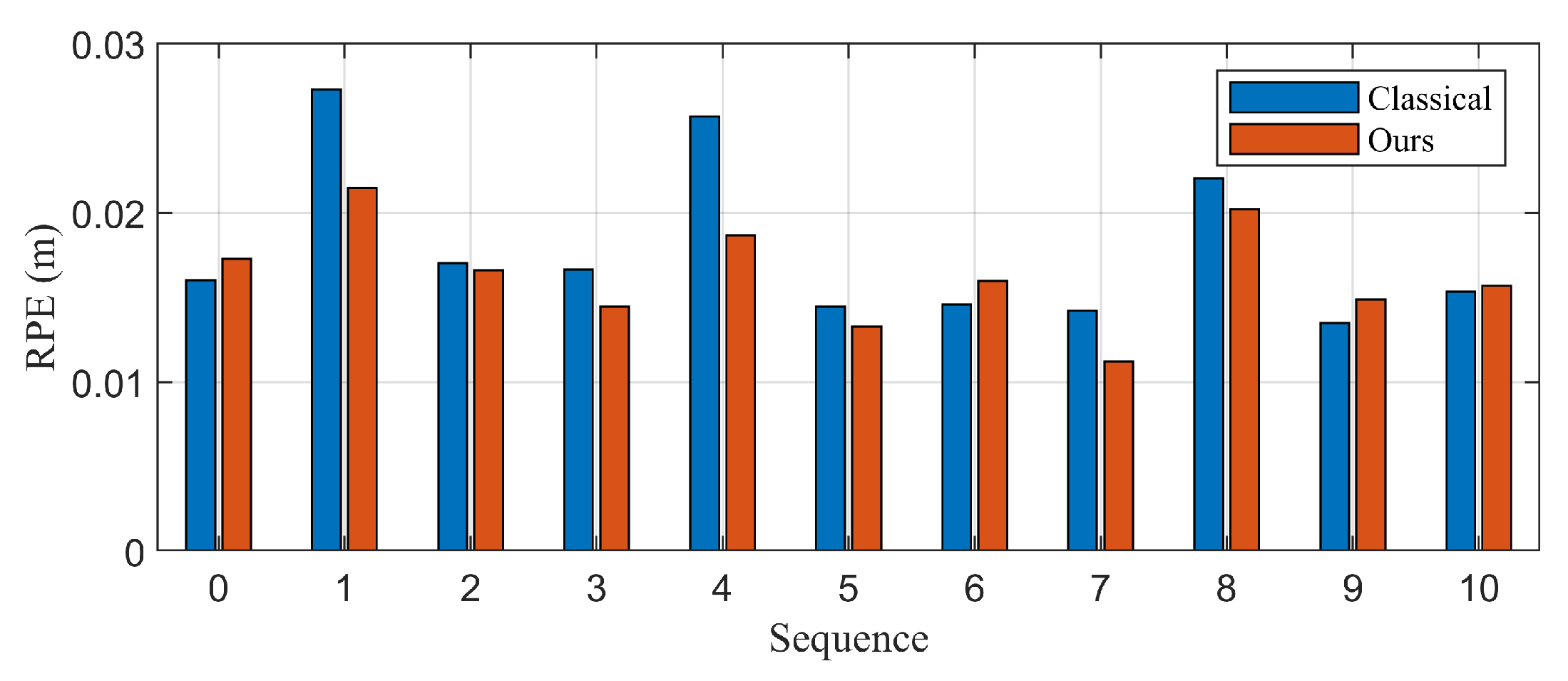

3.2. Evaluation Using an Augmented State Vector Including Control Inputs

3.3. Evaluation Using Control Inputs as Measurements

4. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A. Classical 1-Point RANSAC

Algorithm A1. 1-Point RANSAC-Based EKF Pose Estimation
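The hypothesis loop of a 1-point RANSAC scheme can be sketched as follows. This is a simplified illustration rather than the listing of Algorithm A1: it assumes a linear measurement model z = H x, a noise covariance `R` shared by all matches, and a Euclidean residual threshold. The function name `one_point_ransac` and all of its parameters are hypothetical. The number of hypotheses is adapted with the usual RANSAC termination rule for a sample size of one.

```python
import numpy as np

def one_point_ransac(x_pred, P_pred, zs, Hs, R, threshold,
                     p_success=0.99, max_hyp=1000, seed=0):
    """Return a boolean inlier mask over the matches (illustrative sketch)."""
    rng = np.random.default_rng(seed)
    n = len(zs)
    best = np.zeros(n, dtype=bool)
    n_hyp, tried = max_hyp, 0
    while tried < n_hyp:
        tried += 1
        k = rng.integers(n)                        # a single match defines the hypothesis
        H = Hs[k]
        S = H @ P_pred @ H.T + R
        K = np.linalg.solve(S, H @ P_pred).T       # gain for this one measurement
        x_hyp = x_pred + K @ (zs[k] - H @ x_pred)  # state-only update, covariance untouched
        residuals = np.array([np.linalg.norm(z - Hj @ x_hyp)
                              for z, Hj in zip(zs, Hs)])
        inliers = residuals < threshold
        if inliers.sum() > best.sum():
            best = inliers
            outlier_ratio = 1.0 - inliers.sum() / n
            if outlier_ratio == 0.0:
                break                              # every match agrees with the hypothesis
            # Hypotheses needed to draw one inlier with probability p_success
            needed = np.log(1.0 - p_success) / np.log(outlier_ratio)
            n_hyp = min(max_hyp, int(np.ceil(needed)))
    return best
```

In a full filter, the matches flagged by the mask would then drive a complete EKF update, while the rejected matches are candidates for dynamic features.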

Appendix B. RANSAC-Based Euclidean Transform Estimation

Algorithm A2. compute_rigid_transform: Least-Squares Rigid Transformation Estimation

Algorithm A3. estimate_rigid_transform_ransac: Deterministic RANSAC-based 2D Rigid Transformation Estimation
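A least-squares 2D rigid fit wrapped in a RANSAC loop can be sketched as below. The function names are reused from the algorithm titles, but the bodies are an illustrative reconstruction and not the authors' listings. The fit uses the common SVD-based (Kabsch) solution. Determinism is obtained here only by fixing the random seed, which is an assumption about what "deterministic" means in the title. The `threshold`, `n_iter`, and `seed` parameters are hypothetical.

```python
import numpy as np

def compute_rigid_transform(src, dst):
    """Least-squares rotation R and translation t with dst ~ src @ R.T + t."""
    src_c, dst_c = src.mean(axis=0), dst.mean(axis=0)
    cross_cov = (src - src_c).T @ (dst - dst_c)
    U, _, Vt = np.linalg.svd(cross_cov)
    # Flip the last axis if needed so that R is a proper rotation (det = +1)
    d = 1.0 if np.linalg.det(Vt.T @ U.T) >= 0 else -1.0
    R = Vt.T @ np.diag([1.0, d]) @ U.T
    t = dst_c - R @ src_c
    return R, t

def estimate_rigid_transform_ransac(src, dst, threshold=0.1, n_iter=100, seed=0):
    """RANSAC over 2-point minimal samples, then a refit on the best inlier set."""
    rng = np.random.default_rng(seed)              # fixed seed gives repeatable results
    n = len(src)
    best = np.zeros(n, dtype=bool)
    for _ in range(n_iter):
        idx = rng.choice(n, size=2, replace=False)  # two points determine a 2D rigid motion
        R, t = compute_rigid_transform(src[idx], dst[idx])
        err = np.linalg.norm(src @ R.T + t - dst, axis=1)
        inliers = err < threshold
        if inliers.sum() > best.sum():
            best = inliers
    if best.sum() < 2:
        raise ValueError("not enough inliers to estimate a rigid transform")
    R, t = compute_rigid_transform(src[best], dst[best])
    return R, t, best
```

The final refit on all inliers averages out noise that a single two-point sample would pass straight into the estimate.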

Appendix C. Calculation of Object State

Algorithm A4. Adaptive RANSAC-based Velocity and Rotation Estimation

Appendix D. Measurement Noise Covariance in Polar Coordinates

| Sequence | Classical | Proposed |
|---|---|---|
| 00 | 0.2754 | 0.2130 |
| 01 | 1.8018 | 0.8112 |
| 02 | 0.3248 | 0.4334 |
| 03 | 0.2791 | 0.3508 |
| 04 | 0.9288 | 0.3193 |
| 05 | 0.3511 | 0.3559 |
| 06 | 0.1342 | 0.1094 |
| 07 | 1.0964 | 0.2852 |
| 08 | 0.6455 | 0.4526 |
| 09 | 0.5847 | 0.4866 |
| 10 | 0.2120 | 0.5997 |
| Mean | 0.6031 | 0.4016 |
| Dynamic cases | 1.1254 | 0.5277 |

| Sequence | Classical | Proposed |
|---|---|---|
| 00 | 0.0160 | 0.0173 |
| 01 | 0.0273 | 0.0214 |
| 02 | 0.0170 | 0.0166 |
| 03 | 0.0167 | 0.0144 |
| 04 | 0.0257 | 0.0187 |
| 05 | 0.0145 | 0.0133 |
| 06 | 0.0146 | 0.0160 |
| 07 | 0.0142 | 0.0112 |
| 08 | 0.0220 | 0.0202 |
| 09 | 0.0135 | 0.0149 |
| 10 | 0.0153 | 0.0157 |
| Mean | 0.0179 | 0.0163 |
| Dynamic cases | 0.0250 | 0.0201 |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Lee, J.; Kang, J. Extended Kalman Filter-Based Visual Odometry in Dynamic Environments Using Modified 1-Point RANSAC. Biomimetics 2025, 10, 710. https://doi.org/10.3390/biomimetics10100710